Abstract

When judging the lightness of objects, the visual system has to take into account many factors such as shading, scene geometry, occlusions or transparency. The problem then is to estimate global lightness based on a number of local samples that differ in luminance. Here, we show that eye fixations play a prominent role in this selection process. We explored a special case of transparency for which the visual system separates surface reflectance from interfering conditions to generate a layered image representation. Eye movements were recorded while the observers matched the lightness of the layered stimulus. We found that observers focused their fixations on the target layer, and this sampling strategy affected their lightness perception. The effect of image segmentation on perceived lightness was highly correlated with the fixation strategy and was strongly affected when we manipulated it using a gaze-contingent display. Finally, we disrupted the segmentation process, showing that it causally drives the selection strategy. Selection through eye fixations can thus serve as a simple heuristic to estimate the target reflectance.

Keywords: lightness, eye movements, illusion

1. Introduction

The human eye is specialized to achieve vision in fine detail in the fovea. Visual acuity [1], luminance sensitivity [2], contrast sensitivity [3] and colour sensitivity [4,5] all decrease with retinal eccentricity. Therefore, our visual system has to construct its representation of the world from many small samples. The amount of light coming to the eye from an object is the product of the light striking the surface and the proportion of light that is reflected. Considering a single point in a scene, it is impossible to distinguish between illumination and reflectance. However, only the proportion of reflected light is an invariant property of the object, and thus of great importance for vision. To resolve the ambiguity between illuminance and reflectance, the visual system uses different strategies to estimate an object's reflectance [6]. Several factors have been shown to play a role in lightness constancy. Lateral inhibition between retinal neurons filters out shallow intensity gradients, which are mostly owing to illumination effects [7,8].

More complex factors also have an effect on lightness perception, such as object shape [9,10] or the interpretation of transparent surfaces [11–15]. Therefore, lightness, defined as the perceived reflectance of a surface [6,16], is highly dependent on the contextual properties of the whole scene [6,9,11,14,17–21], and the visual system has to use these contextual cues to infer an object's physical properties. Each scene is perceived by integrating the small high-resolution samples collected by moving the eyes around. The role of eye movements is not confined to maximizing resolution, as their importance has been shown for other visual tasks. For example, Einhauser et al. [22] have found a bidirectional coupling between eye position and perceptual switching of the Necker cube. Georgiades & Harris [23] found that the position of the fixation point influences which percept dominates when viewing ambiguous figures. Furthermore, even the appearance of basic visual features, such as spatial frequency, luminance or chromatic saturation, is distorted in the periphery of the visual field [2,4,5,24–29].

Therefore, it is sensible to assume that fixations serve as a selection mechanism for the perception of stimuli both above and below threshold. Surprisingly, eye movements have been almost completely neglected in lightness perception studies, even though general effects of viewing behaviour have been shown for some colour constancy tasks [30–32]. There is some evidence that observers do not use a physics-based approach, where they first estimate illuminance intensity and then extract relative lightness by discounting the illuminance. Rather, they might use simple cues such as brightness and contrast [33]. This agrees well with the observation of consistent failures of lightness constancy, for example with respect to geometrical variations [34,35]. In these cases, performance lay between luminance matching and lightness constancy.

In objects made of a single material, lightness (perceived reflectance) is by definition a single value, whereas brightness (perceived luminance) typically varies across the surface. Therefore, a selection process is needed. We hypothesize that eye movements play a major role in this selection. We propose that a foveally weighted reflectance is estimated from local luminance samples. Although traditionally lightness constancy has been explained by low-level factors, for example lateral inhibition between retinal neurons [7,8,20,36], more recently a dominant influence of factors related to scene interpretation has been shown [6,9,10,13,19,37]. A simple principle proposed in the lightness perception context is the segregation of the image into transparent and opaque layers in order to discard the effect of the prevailing illumination and atmospheric conditions on the perceived surface. Differences in contrast magnitude along a border with a uniform contrast polarity (figure 1) are thought to be used to segregate the image. According to this heuristic, the regions whose contrast relative to the background is higher are perceived as belonging to the object surface in plain view. The regions whose border contrast is smaller are perceived as belonging to a transparent layer [12]. Here, we investigate whether observers preferentially fixate the regions of the stimuli that are perceived to be more informative about the actual reflectance of the target (i.e. the ones less occluded by the superimposed transparent layer) and whether such a fixation strategy influences the overall perceived lightness.

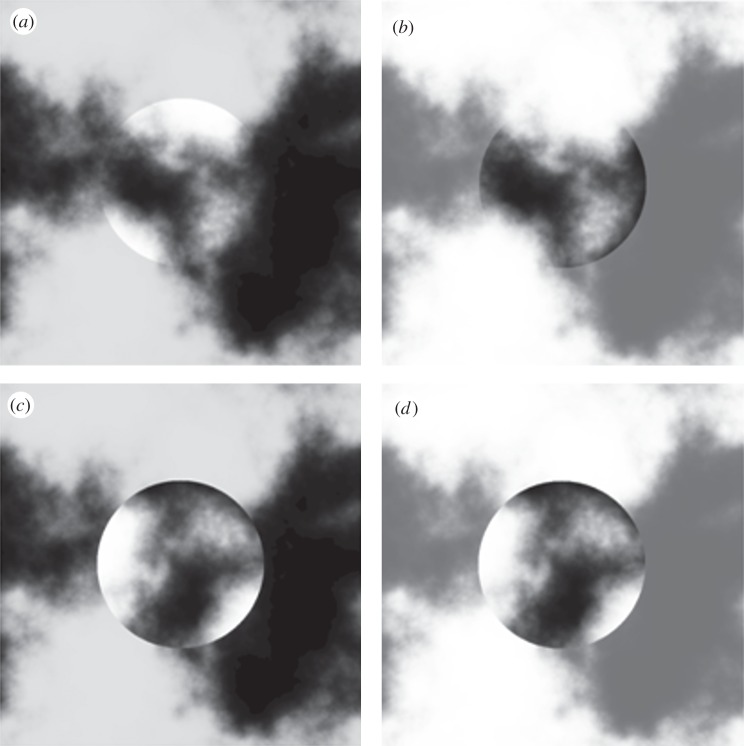

Figure 1.

Stimuli. The central circular regions in (a) and (b) are the same image. The surrounding noise has a different luminance range in the two panels, so that the contrast polarity is uniform along the disc's border but has opposite sign in (a) and (b).

2. Eye movements in a lightness estimation task

We recorded eye movements while observers matched the lightness of a visual stimulus known to induce a layered representation [13]. The stimulus consists of a disc defined by a uniform contrast polarity border with variable contrast magnitude owing to the noisy context in which it is embedded (figure 1). In this stimulus, the lowest contrast region along the border is perceived as being occluded by the foreground layer, and all regions within the object sharing the same brightness are interpreted in the same way. The areas of the object with the highest contrast magnitude are interpreted as not occluded and representing the actual reflectance of the target. In the dark surround condition (figure 1a), the smallest border contrast is associated with low luminance, and the brightest parts of the object are interpreted as the least occluded regions. On the contrary, in the light surround condition (figure 1b), where the highest contrast area along the border corresponds to darker luminance values, the darkest regions of the target are perceived to belong to the object layer. This layered representation leads to the perception of a light disc (figure 1a) embedded in dark noise and a dark disc (figure 1b) embedded in light noise, even though the luminance distributions within the two discs are identical.

Observers were asked to match the lightness of the central disc with a uniform reference disc displayed on the right side of the same CRT monitor. We represented the size of the illusion as the difference in matched luminance between the two (dark and bright surround) contextual conditions. Eye movements were measured during the matching task. Observers took 12.45 ± 5.53 s to perform these matches, 27% of which was spent on the target disc. Observers on average made five large amplitude saccades (about 14°) from the target to the matching disc or back and seven small amplitude saccades (average 2.54°) within the target. Overall, there were on average 14 fixations on the target disc with a mean duration of each fixation of 360 ms.

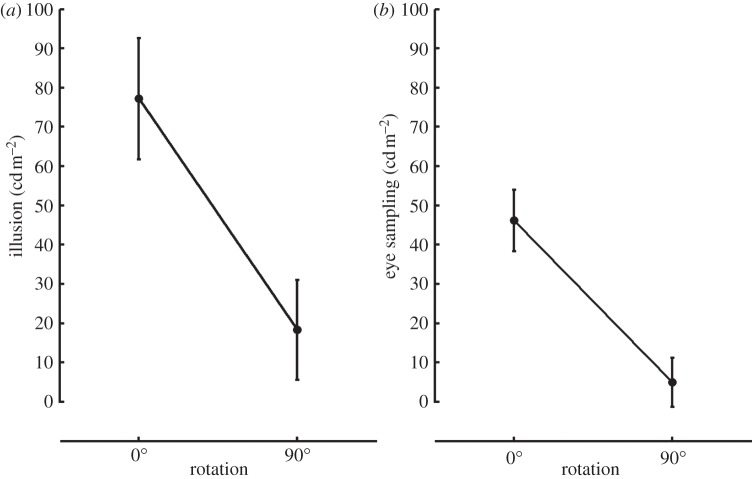

If foveal sampling plays a role in this illusion, then it is reasonable to expect that the fixation pattern should change depending on the context. In particular, the fixations should be focused on the regions of the target assumed to be in plain view, avoiding the occluding layers. Therefore, the average luminance around the fixated points should be higher in the dark surround condition than in the bright surround condition. We represented the effect of the background on the eye movement strategy as the difference between the average luminance in the regions around each fixated point in the dark surround condition and in the bright surround condition. The illusion effect was significant (t(17) = 3.18, p < 0.05; figure 2), and the background condition also affected the eye sampling strategy (t(17) = 2.56, p < 0.05; figure 2). As predicted, observers tended to focus on lighter parts of the disc in the dark background condition than in the light background condition. The illusion size was highly correlated with the contextual effect on eye sampling (Pearson's r = 0.904, p < 0.0001; figure 3). The viewing behaviour explained 82% of the between-observer variability in the illusion size. Observers with a large perceptual effect also had large differences in eye sampling between the dark and light context conditions.
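As a minimal sketch of how the two surround effects and their correlation could be computed, the following Python snippet forms per-observer difference scores (dark surround minus light surround), a one-sample t statistic on those scores, and Pearson's r between the two effects. The function names and all example numbers are hypothetical and are not the data or the analysis code of this study; only the value r = 0.904 is taken from the text.

```python
import math
from statistics import mean, stdev


def paired_effect(dark, light):
    """Per-observer effect: value in the dark surround minus value in the light surround."""
    return [d - l for d, l in zip(dark, light)]


def one_sample_t(effects):
    """t statistic (df = n - 1) for the null hypothesis that the mean effect is zero."""
    n = len(effects)
    return mean(effects) / (stdev(effects) / math.sqrt(n))


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-observer values (arbitrary luminance units), for illustration only.
match_dark = [42.0, 38.5, 45.1, 40.2, 36.9]   # matches, dark surround
match_light = [30.1, 33.0, 28.7, 35.5, 34.8]  # matches, light surround
fix_dark = [33.0, 30.5, 35.2, 29.8, 28.9]     # mean fixated luminance, dark surround
fix_light = [27.1, 27.9, 26.8, 27.5, 28.0]    # mean fixated luminance, light surround

illusion = paired_effect(match_dark, match_light)  # illusion effect per observer
sampling = paired_effect(fix_dark, fix_light)      # eye sampling effect per observer

t_illusion = one_sample_t(illusion)
r = pearson_r(illusion, sampling)

# Variance explained follows from the correlation reported in the text.
reported_r = 0.904
reported_r2 = reported_r ** 2  # about 0.817, i.e. roughly 82%
```

The last two lines show where the 82% figure comes from: the proportion of between-observer variance explained is the square of the correlation coefficient.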

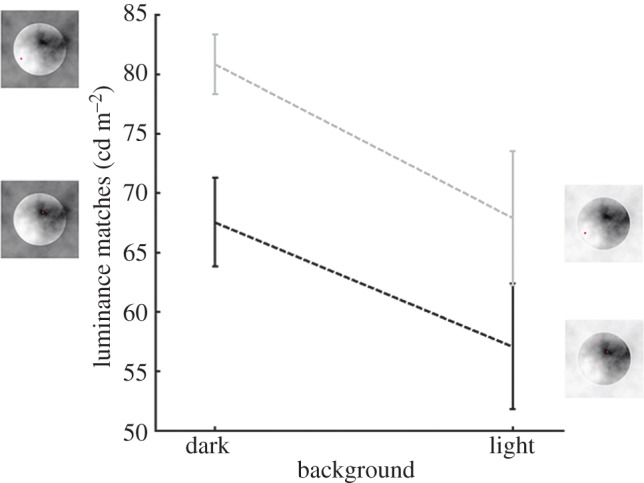

Figure 2.

Matching and eye movement sampling surround effects (means and standard errors). Illusion effect is the difference between each observer's average match in the dark and in the light surround condition. The eye movement sampling effect is the difference between the average luminance in the 1° regions around each fixated point for each observer in the dark and bright surround condition. Error bars indicate ±1 s.e.m.

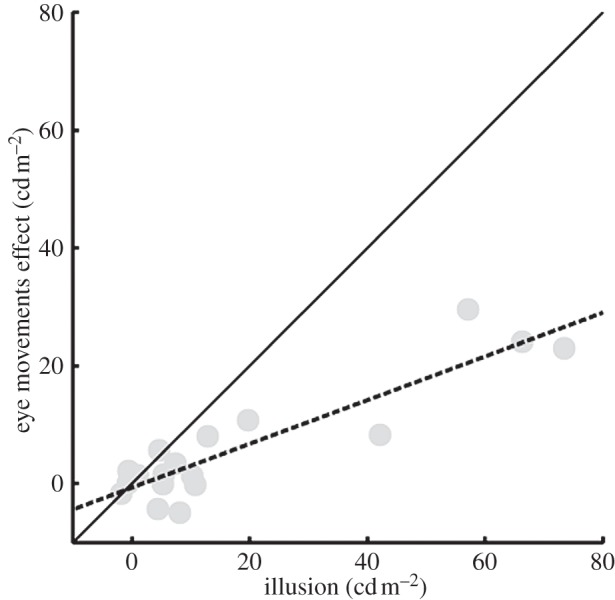

Figure 3.

Correlation between effects of scission on eye movement sampling and lightness matches. The difference in sampling the scene between the two surround conditions is highly correlated with the perceptual difference in lightness matches. The eye movements sampling strategy explains 82% of the variability in the perceptual effect.

3. Viewing strategies

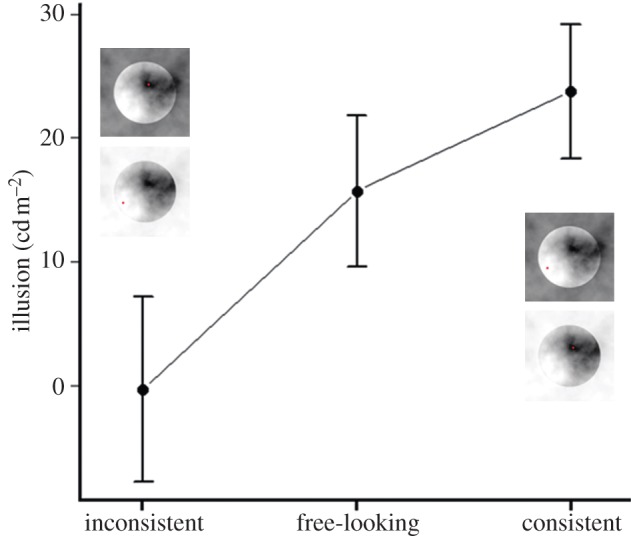

We actively manipulated the observers' fixation strategy using a gaze-contingent display in order to investigate the nature of the relationship between perception and eye sampling. We used the same stimuli as in the first experiment, but they were displayed only when the observers fixated a particular region within the target disc. We chose both bright and dark fixation regions. The luminance of the forced-fixation region strongly influenced lightness perception: fixating brighter parts of the stimulus led to brighter matches, whereas fixating darker parts led to darker matches (figure 4). A repeated-measures ANOVA revealed a highly significant main effect of fixation condition (F(1,14) = 12.81, p < 0.005). The interaction between fixation condition and surround condition was not significant (F(1,14) = 23.3915, p > 0.25). The main effect of surround condition was just on the edge of significance (F(1,14) = 4.3589, p = 0.05). In order to quantitatively compare the illusion effect in the free-looking condition and the forced-fixation condition, we computed the illusion effect from the forced-looking experiment as the difference between the dark and the light surround condition. We did this for the case when the forced-fixation pattern was either consistent or inconsistent with the spontaneous fixation strategy observed earlier (figure 5). In the consistent condition, observers fixated the bright region in the contextual condition that led to the perception of a light target disc (dark surround condition, figure 1a) and fixated the dark region in the contextual condition that led to the perception of a dark target disc (light surround condition, figure 1b). In the inconsistent condition, the observers fixated the darkest region in the contextual condition that led to the perception of a bright target disc and fixated the brightest region in the contextual condition that led to the perception of a dim target.
Between the consistent and the inconsistent condition, only the fixation area differed and the overall stimulus was the same; therefore, a difference between them could only be explained by an effect of the forced fixation. The eye sampling effect was significantly higher in the consistent condition than in the inconsistent condition (t(14) = 3.5793, p < 0.005), and in the inconsistent condition, the effect disappeared completely (t(14) = −0.0436, p > 0.95). The illusion effect measured in the free-looking condition was significantly higher than in the inconsistent condition (t(14) = 3.3545, p < 0.005), and lower than in the consistent condition (t(14) = 2.6178, p < 0.05). When we forced observers into a fixation strategy that emphasized the strategy they applied naturally, we found a stronger illusion effect. Forcing the observers into a fixation strategy at odds with their natural behaviour destroyed the illusion.
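The consistent and inconsistent illusion effects described above are two different pairings of the same four cells of the surround by fixation design. The sketch below makes that pairing explicit; the dictionary layout, the function names and the example matches are hypothetical and serve only to illustrate the arithmetic, not to reproduce the data of the study.

```python
def illusion_effects(matches):
    """Illusion effect (dark surround minus light surround) for the two pairings.

    `matches` maps (surround, fixated_region) to the mean luminance match,
    with surround in {"dark", "light"} and fixated_region in {"bright", "dark"}.
    """
    # Consistent: bright region fixated in the dark surround,
    # dark region fixated in the light surround.
    consistent = matches[("dark", "bright")] - matches[("light", "dark")]
    # Inconsistent: dark region fixated in the dark surround,
    # bright region fixated in the light surround.
    inconsistent = matches[("dark", "dark")] - matches[("light", "bright")]
    return consistent, inconsistent


def main_effects(matches):
    """Main effects of surround (dark minus light) and fixation (bright minus dark)."""
    surround = ((matches[("dark", "bright")] + matches[("dark", "dark")])
                - (matches[("light", "bright")] + matches[("light", "dark")])) / 2
    fixation = ((matches[("dark", "bright")] + matches[("light", "bright")])
                - (matches[("dark", "dark")] + matches[("light", "dark")])) / 2
    return surround, fixation


# Hypothetical mean matches (arbitrary luminance units), for illustration only.
example = {
    ("dark", "bright"): 38.0,
    ("dark", "dark"): 30.0,
    ("light", "bright"): 30.0,
    ("light", "dark"): 22.0,
}

consistent, inconsistent = illusion_effects(example)
surround_effect, fixation_effect = main_effects(example)
```

By construction, the consistent effect equals the surround main effect plus the fixation main effect, and the inconsistent effect equals the surround main effect minus the fixation main effect. A vanishing inconsistent effect therefore corresponds to a fixation effect that is as large as the surround effect.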

Figure 4.

Luminance matches in the forced-fixation conditions. The grey line represents the average matches in the light fixation condition, and the black line represents the average matches in the dark fixation condition. Pictures of the stimuli indicate the fixation conditions with the fixation points indicated by small spots. Error bars indicate ±1 s.e.m. (Online version in colour.)

Figure 5.

Perceptual effects during forced-fixation and free-looking conditions. Pictures of the stimuli represent the condition indicated by the label on the x-axis. The illusion effect is represented as the difference between the matches for the pictures above and below. Error bars indicate ±1 s.e.m. (Online version in colour.)

4. Causal role of the segmentation process on the fixation strategy

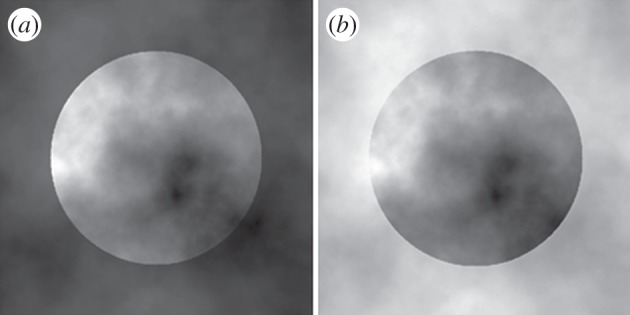

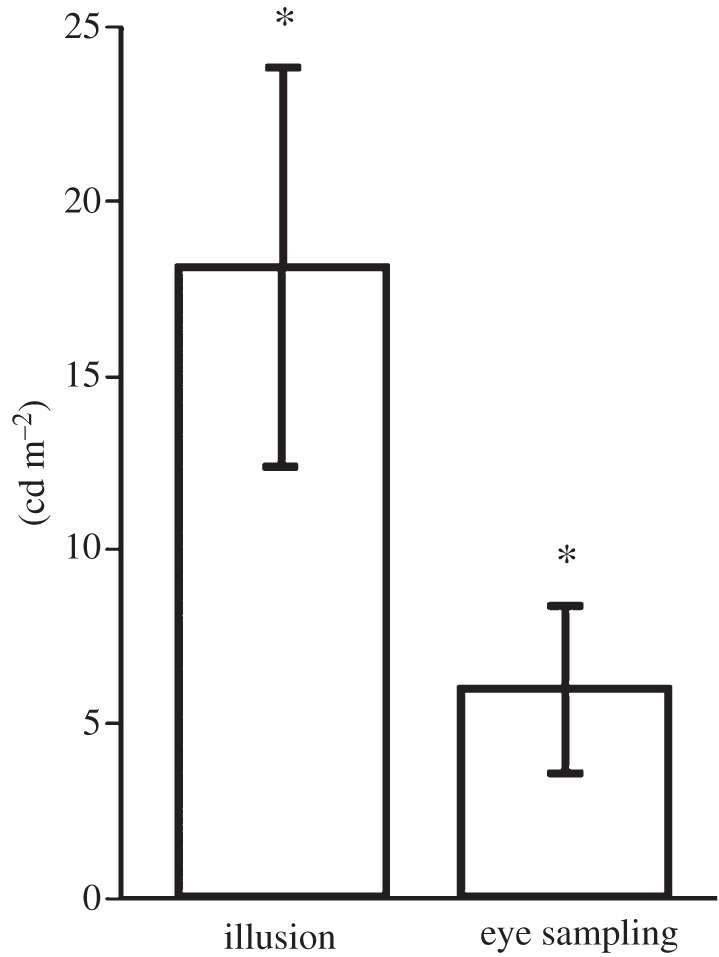

These experiments have clearly shown that the perceptual effect of the segmentation process is amplified by eye movements, indicating that the foveal input was weighted more than the parafoveal input. The next question is whether eye movements are actually driven by the segmentation process or by some other property of the image, for example the test-background contrast. Indeed, luminance contrast is one of the most important cues for fixation prediction [38–41]. To address this issue (Experiment 3), we repeated the first experiment, rotating the test discs by 90° (figure 6). This simple manipulation disrupts the segmentation process because the contrast polarity along the border is no longer uniform [15]. In line with earlier results, the illusion effect was highly reduced in the rotated version of the stimulus (t(5) = 3.27, p < 0.05). The residual surround effect could be explained by simultaneous contrast, which was found to contribute 11% of the illusion [13]. The difference in the eye sampling strategy almost disappeared and was also reduced to a level similar to that for perception (t(5) = 4.19, p < 0.05). At the same time, observers did not fixate more on the sharp borders of the rotated target. This finding indicates that saliency-driven eye sampling does not generate the illusion. Rather, the scission process is the cause of the illusion, and the eye sampling acts as an amplifier of the perception (figure 7).

Figure 6.

Example of 90° rotated stimuli. (a,b) The squares on the top are the non-rotated stimuli in the dark and the light surround condition, and (c,d) the stimuli below are their rotated versions. After rotation, the contrast polarity along the border is not uniform anymore and the perception of transparency does not occur. The illusion looks vastly diminished in the squares (c,d).

Figure 7.

Illusion and eye sampling effects for standard and rotated version of the stimulus. The illusion effect on the ordinate in the plot (a) is the difference between the average subjective matches in the dark surround and the light surround conditions. (b) Eye sampling effect is the difference between the average 1° sampled area in the dark and in the light surround condition. Error bars indicate ±1 s.e.m.

5. Summary

Our experiments have shown that eye sampling is closely coupled with the perception of lightness. Fixations are concentrated on the layers of the image that represent the reflectance of the stimulus rather than on the occluding layers. In the dark background condition, the lighter areas of the stimulus are preferentially fixated compared with the light background condition, where the contrast relationships are reversed. Furthermore, we found an effect of the fixated position on lightness perception. The whole test stimulus was perceived as lighter when observers fixated a bright area than when they fixated a dark area. Selection strategy had a massive effect on perception. Consistent forced fixation increased the size of the lightness illusion, whereas inconsistent selection destroyed the illusion. This means that the fixation strategy is an integral component of the illusory effect, as the two cannot be dissociated. In line with this, we have shown that a disruption of the segmentation process influences both perception and eye sampling equally. It is the contrast along the border that causes the segmentation process and this process drives both perception and eye sampling.

6. Discussion

There are several known strategies that the visual system uses to disentangle illumination and reflectance. For example, when observers are asked to match a flat surface with another one in terms of their paint, they partially compensate for the reduction in reflected light associated with surface slant [34]. In the Mach card effect [19], the two sides of a folded paper are perceived as being made of the same paper if the veridical shape of the card is perceived. However, if the apparent shape of the card is inverted, the two sides of the card are perceived as being made of different paper. Thus, when a change in reflected light is not consistent with a change in illumination, it can be perceived as a change in surface reflectance. Observers take into account the scene geometry in order to estimate the surface reflectance, and a change in reflected light can be interpreted as a difference in albedo or in illumination according to the interpretation of the scene.

Even the Cornsweet illusion [8], which has traditionally been interpreted as a low-level effect, can be modulated by scene geometry [9]. Furthermore, simply changing the stereo cues can promote a different interpretation of the scene, and thus a different perception of lightness [17]. Sometimes binocular disparity is not sufficient to assign a depth order to the single objects. Therefore, the visual system also relies on contextual cues to infer the relative positions of the scene components. It has been proposed that the visual system uses common layout features to infer the spatial relationships between the objects. For example, a uniform contrast polarity along an edge is likely to be caused by an occluding object being either darker or lighter [12–14]; similarly, a shadow is always darker than the material on which it is projected [11]. In particular, geometrical two-dimensional configurations have been proposed to provide cues for the three-dimensional structure of the scenes. Specifically, the geometrical and luminance relationships within contour junctions induce illusory transparency and lightness perception, causing a perceptual scission of the scene into multiple layers [11].

These processes allow the observer to disambiguate the scene and distinguish between the surface reflectance and other factors when interpreting changes in reflected light. For example, they might suggest which parts of the scene are more informative about the albedo of a specific part of the scene. Here, we propose that eye movements are one of the mechanisms that the visual system uses to select relevant information for lightness perception once the scene has been interpreted. It is indeed known that there is a close link between where we look and the information we need for the immediate task goals [42,43]. The fixation pattern that we observed in this study was driven by a precise strategy and could not be explained by bottom-up saliency. When we rotated the stimuli, the global figure-background contrast was the same as in the original version, but the fixation pattern changed together with the interpretation of the scene.

Our results show that selection processes for perception and action—lightness perception and saccadic eye movements—are closely coupled. Eye movements play a major role in perception not only when high visual acuity is required, but also in very simple lightness perception tasks. They serve as a powerful amplifier of the perceptual decisions made by the visual system.

(a). Material and methods

(i). Stimuli

The noisy texture consisted of low-pass filtered, normally distributed random noise. The power spectrum of the noise varied as 1/f². The disc had the full contrast allowed by the monitor, whereas the background contrast was reduced by scaling the noise by a factor c ∈ [0, 1]. In order to have a dark and a light surround with the same contrast, the scaled noise was shifted either to the highest or to the lowest luminance value of the screen. In Experiments 1 and 2, the spatial structure of the noise was not altered by the scaling applied to the surround; therefore, the contrast polarity along the border was always uniform. The 90° rotation of the disc disrupted this uniformity, because the scaling and the noise were no longer spatially correlated. In Experiments 1 and 2, the test disc had a radius of 6° of visual angle, whereas in Experiment 3, it was 4°. The radius of the uniform comparison disc was always 4°. The initial luminance of the matching disc in the matching experiments (Experiments 1, 2 and 3) was sampled from a uniform distribution covering the whole range of the screen. In Experiments 1, 2 and 3, the test was displayed in the middle of the left half of the screen and the matching disc in the middle of the right half. The size of the texture was 860 × 860 pixels. In Experiment 3, in order to have a stronger illusion effect, the local contrasts were enhanced with a logistic transformation of the filtered noise before scaling. The transformation of the filtered noise to the higher local contrast noise is obtained by the following equation:

|
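The construction of the dark and light surround stimuli can be sketched as follows. This is a minimal illustration under stated assumptions and not the stimulus code used in the experiments: luminance is expressed in normalised units from 0 (lowest screen luminance) to 1 (highest), the disc radius is given in pixels, the function names are hypothetical, and the logistic contrast enhancement of Experiment 3 is omitted.

```python
import numpy as np


def one_over_f2_noise(size, rng):
    """Gaussian white noise filtered so that its power spectrum falls as 1/f^2."""
    white = rng.standard_normal((size, size))
    fy = np.fft.fftfreq(size)[:, None]
    fx = np.fft.fftfreq(size)[None, :]
    f = np.hypot(fx, fy)
    f[0, 0] = 1.0                      # avoid division by zero at the DC term
    spectrum = np.fft.fft2(white) / f  # amplitude ~ 1/f, hence power ~ 1/f^2
    spectrum[0, 0] = 0.0               # drop the mean; it is reset below anyway
    noise = np.real(np.fft.ifft2(spectrum))
    noise -= noise.min()
    return noise / noise.max()         # normalised to the range [0, 1]


def make_stimulus(noise, c, surround, radius_px, rotate=False):
    """Full-contrast disc embedded in noise whose contrast is scaled by c.

    surround="dark" keeps the scaled noise at the low end of the range,
    surround="light" shifts it to the high end. rotate=True turns the disc
    content by 90 degrees, decoupling it from the surround noise.
    """
    if surround not in ("dark", "light"):
        raise ValueError("surround must be 'dark' or 'light'")
    size = noise.shape[0]
    yy, xx = np.mgrid[:size, :size]
    centre = (size - 1) / 2
    disc = np.hypot(xx - centre, yy - centre) <= radius_px

    scaled = c * noise                 # surround range [0, c]
    if surround == "light":
        scaled = scaled + (1.0 - c)    # surround range [1 - c, 1]

    disc_content = np.rot90(noise) if rotate else noise
    return np.where(disc, disc_content, scaled)


# Small example (the experiments used an 860 x 860 pixel texture).
rng = np.random.default_rng(0)
noise = one_over_f2_noise(128, rng)
dark_stim = make_stimulus(noise, c=0.3, surround="dark", radius_px=40)
light_stim = make_stimulus(noise, c=0.3, surround="light", radius_px=40)
```

In the unrotated case the surround is a scaled copy of the same noise that fills the disc, so in normalised units the disc is never darker than a dark surround (n ≥ c·n) and never lighter than a light surround (n ≤ c·n + 1 − c). This is what keeps the contrast polarity along the border uniform, and it no longer holds once the disc content is rotated.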

(ii). Monitor

The stimuli were presented on a calibrated Samsung SyncMaster 1100 mb monitor (40 × 30 cm; 1280 × 960 pixels; 85 Hz; 8 bits per gun). The monitor chromaticities were measured with a PR650 spectroradiometer; the CIE xyY coordinates for the upper right corner and for the screen centre were R = (0.610, 0.329, 22.2), G = (0.282, 0.585, 76.3) and B = (0.156, 0.066, 11.9).

(iii). Eye movement recording

Gaze position signals were recorded with a head-mounted, video-based eye tracker (EyeLink II; SR Research Ltd., Osgoode, Ontario, Canada) and were sampled at 500 Hz. Observers viewed the display binocularly, but only the right eye was sampled. Stimulus display and data collection were controlled by a PC. The eye tracker was calibrated at the beginning of each session. At the beginning of each trial, the calibration was checked: if the error was more than 1.5° of visual angle, a new calibration was performed; otherwise, a simple drift correction was applied. A calibration was accepted only if the validation procedure revealed a mean error smaller than 0.4° of visual angle. We classified the eye movement events using the standard EyeLink saccade detection algorithm with an acceleration threshold of 8000° s⁻² and a velocity threshold of 30° s⁻¹. Consecutive samples without intervening saccades were averaged into a single fixation. Each fixation was weighted equally in the results shown here, but weighting fixations by their duration did not affect any of our results. To calculate the eye sampling measure, the luminance of a 1° diameter area surrounding each fixation on the target disc was averaged. In the forced-looking experiment, eye position was monitored sample by sample and the image disappeared when the distance between the actual and desired fixated position was larger than 1.5°.
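The eye sampling measure and the gaze-contingent display rule described above can be sketched as follows. This is an illustration under stated assumptions rather than the code used in the experiments: fixation positions are taken to be in image pixel coordinates (x, y) and to fall on the image, the conversion factor `PX_PER_DEG` is a hypothetical value that depends on the viewing distance, and all function names are made up for this example.

```python
import numpy as np

PX_PER_DEG = 30.0  # hypothetical pixels per degree of visual angle


def fixation_luminance(image, fix_xy, diameter_px):
    """Mean luminance inside a circular aperture centred on one fixation."""
    yy, xx = np.mgrid[:image.shape[0], :image.shape[1]]
    aperture = np.hypot(xx - fix_xy[0], yy - fix_xy[1]) <= diameter_px / 2
    return float(image[aperture].mean())


def eye_sampling_measure(image, fixations, diameter_deg=1.0):
    """Average aperture luminance over fixations, each fixation weighted equally."""
    diameter_px = diameter_deg * PX_PER_DEG
    samples = [fixation_luminance(image, f, diameter_px) for f in fixations]
    return float(np.mean(samples))


def stimulus_visible(gaze_xy, target_xy, max_dist_deg=1.5):
    """Gaze-contingent rule: the image is shown only while the gaze sample
    lies within max_dist_deg of the desired fixation position."""
    dist_px = np.hypot(gaze_xy[0] - target_xy[0], gaze_xy[1] - target_xy[1])
    return bool(dist_px <= max_dist_deg * PX_PER_DEG)


# Toy image whose luminance equals the x coordinate, for illustration only.
image = np.tile(np.arange(200.0), (200, 1))
fixations = [(60, 100), (140, 100)]
sampled = eye_sampling_measure(image, fixations)
```

Computing this measure separately for the dark and the light surround condition and taking the difference gives the eye sampling effect for one observer. Weighting each fixation by its duration would only require replacing the plain mean with a weighted average.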

(iv). Behavioural tasks

In Experiments 1, 2 and 3, observers were asked to adjust the luminance of the matching disc to be as close as possible to that of the target disc embedded in the texture. They could change the luminance of the disc by pressing the mouse buttons. Each press corresponded to a change of 0.552 cd m⁻².

(v). Observers

Eighteen naive observers took part in Experiment 1, 15 in Experiment 2 and six in Experiment 3; all of them were undergraduate students with normal or corrected-to-normal vision.

Funding statement

This work was supported by the German Research Foundation Reinhardt–Koselleck Project grant no. GE 879/9 ‘Perception of material properties’. The authors declare that they have no competing financial interests.

References

- 1. Weymouth FW. 1958. Visual sensory units and the minimal angle of resolution. Am. J. Ophthalmol. 46, 102–113

- 2. Greenstein VC, Hood DC. 1981. Variations in brightness at two retinal locations. Vis. Res. 21, 885–891 (doi:10.1016/0042-6989(81)90189-9)

- 3. Rovamo J, Franssila R, Näsänen R. 1992. Contrast sensitivity as a function of spatial frequency, viewing distance and eccentricity with and without spatial noise. Vis. Res. 32, 631–67 (doi:10.1016/0042-6989(92)90179-M)

- 4. Weale RA. 1953. Spectral sensitivity and wave-length discrimination of the peripheral retina. J. Physiol. 119, 170–190

- 5. Hansen T, Pracejus L, Gegenfurtner KR. 2009. Color perception in the intermediate periphery of the visual field. J. Vis. 9(4), 26.1–12 (doi:10.1167/9.4.26)

- 6. Adelson EH. 2000. Lightness perception and lightness illusion. In The new cognitive neurosciences (ed. Gazzaniga M), pp. 339–351. Cambridge, MA: MIT Press

- 7. Mach E. 1868. Über die physiologische Wirkung räumlich verteilter Lichtreize [On the physiological effect of spatially distributed light stimuli]. Wiener Sitzungsber Math-Naturwiss Cl Kaiserlichen Akad Wiss 57, 1–11

- 8. Cornsweet T. 1970. Visual perception. New York, NY: Academic Press

- 9. Knill DC, Kersten D. 1991. Apparent surface curvature affects lightness perception. Nature 351, 228–230 (doi:10.1038/351228a0)

- 10. Bloj MG, Kersten D, Hurlbert AC. 1999. Perception of three-dimensional shape influences colour perception through mutual illumination. Nature 402, 877–879 (doi:10.1038/47245)

- 11. Anderson BL. 1997. A theory of illusory lightness and transparency in monocular and binocular images: the role of contour junctions. Perception 26, 419–453 (doi:10.1068/p260419)

- 12. Anderson BL. 2003. The role of occlusion in the perception of depth, lightness, and opacity. Psychol. Rev. 110, 785–801 (doi:10.1037/0033-295X.110.4.785)

- 13. Anderson BL, Winawer J. 2005. Image segmentation and lightness perception. Nature 434, 79–83 (doi:10.1038/nature03271)

- 14. Anderson BL, Winawer J. 2008. Layered image representations and the computation of surface lightness. J. Vis. 8(7), 18.1–22 (doi:10.1167/8.7.18)

- 15. Singh M, Anderson BL. 2002. Toward a perceptual theory of transparency. Psychol. Rev. 109, 492–519 (doi:10.1037/0033-295X.109.3.492)

- 16. Arend LE, Spehar B. 1993. Lightness, brightness, and brightness contrast: 1. Illuminance variation. Percept. Psychophys. 54, 446–456 (doi:10.3758/BF03211767)

- 17. Gilchrist AL. 1980. When does perceived lightness depend on perceived spatial arrangement? Percept. Psychophys. 28, 527–538 (doi:10.3758/BF03198821)

- 18. Purves D, Lotto RB, Williams SM, Nundy S, Yang Z. 2001. Why we see things the way we do: evidence for a wholly empirical strategy of vision. Phil. Trans. R. Soc. B 356, 285–297 (doi:10.1098/rstb.2000.0772)

- 19. Mach E. 1959. The analysis of sensations, and the relation of the physical to the psychical. New York, NY: Dover Publications Inc

- 20. Craik K. 1966. The nature of psychology: a selection of papers, essays, and writings (ed. Sherwood SL). Cambridge, UK: Cambridge University Press

- 21. Gilchrist AL, Radonjic A. 2009. Anchoring of lightness values by relative luminance and relative area. J. Vis. 9(9), 13.1–10 (doi:10.1167/9.9.13)

- 22. Einhauser W, Martin KA, Konig P. 2004. Are switches in perception of the Necker cube related to eye position? Eur. J. Neurosci. 20, 2811–2818 (doi:10.1111/j.1460-9568.2004.03722.x)

- 23. Georgiades MS, Harris JP. 1997. Biasing effects in ambiguous figures: removal or fixation of critical features can affect perception. Vis. Cogn. 4, 383–408 (doi:10.1080/713756770)

- 24. Davis ET. 1990. Modeling shifts in perceived spatial frequency between the fovea and the periphery. J. Opt. Soc. Am. A 7, 286–296 (doi:10.1364/JOSAA.7.000286)

- 25. Abramov I, Gordon J. 1977. Color vision in the peripheral retina. I. Spectral sensitivity. J. Opt. Soc. Am. 67, 195–202 (doi:10.1364/JOSA.67.000195)

- 26. Abramov I, Gordon J, Chan H. 1991. Color appearance in the peripheral retina: effects of stimulus size. J. Opt. Soc. Am. A 8, 404–414 (doi:10.1364/JOSAA.8.000404)

- 27. Gordon J, Abramov I. 1977. Color vision in the peripheral retina. II. Hue and saturation. J. Opt. Soc. Am. 67, 202–207 (doi:10.1364/JOSA.67.000202)

- 28. Johnson MA. 1986. Color vision in the peripheral retina. Am. J. Optom. Physiol. Opt. 63, 97–103 (doi:10.1097/00006324-198602000-00003)

- 29. Stabell U, Stabell B. 1982. Color vision in the peripheral retina under photopic conditions. Vis. Res. 22, 839–844 (doi:10.1016/0042-6989(82)90017-7)

- 30. Cornelissen FW, Brenner E. 1995. Simultaneous colour constancy revisited: an analysis of viewing strategies. Vis. Res. 35, 2431–2448 (doi:10.1016/0042-6989(94)00318-G)

- 31. Golz J. 2010. Colour constancy: influence of viewing behaviour on grey settings. Perception 39, 606–619 (doi:10.1068/p6052)

- 32. Granzier J, Toscani M, Gegenfurtner KR. 2011. Do individual differences in eye movements explain differences in chromatic induction between subjects? Perception 40, 114

- 33. Robilotto R, Zaidi Q. 2006. Lightness identification of patterned three-dimensional, real objects. J. Vis. 6, 18–36 (doi:10.1167/6.1.3)

- 34. Ripamonti C, Bloj M, Hauck R, Kiran M, Greenwald S, Maloney SI, Brainard DH. 2004. Measurements of the effect of surface slant on perceived lightness. J. Vis. 4, 747–763 (doi:10.1167/4.9.7)

- 35. Robilotto R, Zaidi Q. 2004. Limits of lightness identification for real objects under natural viewing conditions. J. Vis. 4, 779–797 (doi:10.1167/4.8.779)

- 36. O'Brien V. 1959. Contrast by contour-enhancement. Am. J. Psychol. 72, 299–300 (doi:10.2307/1419385)

- 37. Koffka K. 1935. Principles of gestalt psychology. London, UK: Lund Humphries

- 38. Parkhurst D, Law K, Niebur E. 2002. Modeling the role of salience in the allocation of overt visual attention. Vis. Res. 42, 107–123 (doi:10.1016/S0042-6989(01)00250-4)

- 39. Reinagel P, Zador AM. 1999. Natural scene statistics at the centre of gaze. Network 10, 341–350 (doi:10.1088/0954-898X/10/4/304)

- 40. Yanulevskaya V, Marsman JB, Cornelissen F, Geusebroek JM. 2011. An image statistics-based model for fixation prediction. Cogn. Comput. 3, 94–104 (doi:10.1007/s12559-010-9087-7)

- 41. Koch C, Ullman S. 1985. Shifts in selective visual attention: towards the underlying neural circuitry. Hum. Neurobiol. 4, 219–227

- 42. Jovancevic-Misic J, Hayhoe M. 2009. Adaptive gaze control in natural environments. J. Neurosci. 29, 6234–6238 (doi:10.1523/JNEUROSCI.5570-08.2009)

- 43. Tatler BW, Hayhoe MM, Land MF, Ballard DH. 2011. Eye guidance in natural vision: reinterpreting salience. J. Vis. 11(5), 5.1–23 (doi:10.1167/11.5.5)

- 44. Derrington AM, Krauskopf J, Lennie P. 1984. Chromatic mechanisms in lateral geniculate-nucleus of macaque. J. Physiol. (Lond.) 357, 241–265