Abstract

Purpose

The original Clinical Research Appraisal Inventory (CRAI), which assesses the self-confidence of trainees in performing different aspects of clinical research, comprises 92 items. Completing the lengthy CRAI is time-consuming and represents a considerable burden to respondents, yet the CRAI provides useful data for evaluating research-training programs. The purpose of this study is to develop a shortened version of the CRAI and to test its validity and reliability.

Method

Trainees in clinical-research degree and career development programs at the University of Pittsburgh’s Institute for Clinical Research Education completed the 92-item CRAI between 2007 and 2012, inclusive. The authors conducted, first, exploratory factor analysis on a training dataset (2007-2010) to reduce the number of items and, then, confirmatory factor analyses on a testing dataset (2011-2012) to test the psychometric properties of the shortened version.

Results

Of 546 trainees, 394 (72%) provided study data. Exploratory factor analysis revealed six distinct factors, and confirmatory factor analysis identified the two items with the highest loadings per factor, for a total of 12 items. Cronbach’s alpha for the six new factors ranged from 0.80 to 0.94. Factors in the 12-item CRAI were strongly and significantly associated with factors in the 92-item CRAI; correlations ranged from 0.82 to 0.96 (P < .001 for each).

Conclusions

The 12-item CRAI is faster and less burdensome to complete but retains the strong psychometric properties of the original CRAI.

In response to the increasing demands for basic research findings to be translated into improvements in public health,1 many universities have introduced new clinical research training and degree programs. A large proportion of resources for these programs comes from Clinical and Translational Science Awards provided by the National Institutes of Health (NIH). Although the NIH mandates the assessment of the outcomes of such programs, many outcomes are difficult to track because usually a long lag occurs between the time that participants complete their training and the time that their success (typically measured by receiving funding and/or publishing research results) becomes evident. For this reason, there is a need for an instrument (or instruments) to assess immediate program outcomes.

Mullikin and colleagues developed the Clinical Research Appraisal Inventory (CRAI)2 to meet this need. Based on self-efficacy theory3 and social cognitive career theory,4 this instrument assesses the confidence of trainees in performing common clinical research tasks. The theory underpinning the CRAI is well described in the original article2; we present only a brief summary here. Self-efficacy theory posits that if individuals believe they can complete a task successfully, they are more likely to do so. Social cognitive career theory links the expectation of positive outcomes and success in a given set of behaviors (e.g., those related to conducting clinical research) to the likelihood of pursuing a career that requires such behaviors. The theory underscoring the CRAI, therefore, is that trainees who have high confidence in their clinical research skills are more likely to pursue a career in clinical research. Although confidence in performing a given task may not equate directly with the actual ability to perform the task, increased confidence and interest in a clinical research career are both evidence that research training and degree programs have favorable effects on students.2

In addition, being confident in one’s ability to perform research (i.e., having high research self-efficacy) can predict students’ subsequent involvement in research.5 The authors of the CRAI intended for the instrument to help evaluate individuals pursuing careers in academic medicine.2 The instrument originally comprised 10 hypothesized factors (hereafter, “domains”), derived from necessary skills for conducting clinical research, dispersed over 92 items. Subsequently, the instrument was refined to 8 factors (domains) and 88 items. The originally hypothesized 10 domains were as follows: (1) conceptualizing a study, (2) designing a study, (3) collaborating with others, (4) funding a study, (5) planning and managing a research study, (6) protecting research subjects and the responsible conduct of research, (7) collecting, recording, and analyzing data, (8) interpreting data, (9) reporting a study, and (10) presenting a study.2

The original instrument showed strong internal consistency (Cronbach’s alpha of 0.96) and test-retest reliability (median of 0.88 over an average of 4 weeks).2 The authors found some evidence for criterion-related validity in consistent increases (mostly statistically significant) through the career progression from fellowship through the pre-tenure period and on to post-tenure positions.

However, at 88 items, the instrument is time-consuming to complete for trainees who also have clinical duties and coursework. The burden of completing it is compounded by the fact that it is often administered with other surveys designed to gather sociodemographic data and/or to measure outcomes such as career progress and motivation. An additional problem posed by the length of the instrument is that respondents are more likely not to answer all of the questions, or to provide a more uniform set of answers.6 In addition, Lipira and colleagues’ analysis of a 76-item CRAI shows that some factors could be combined and some items removed (leaving 7 factors and 69 items) without compromising validity and reliability.7 Their analysis also suggests that the instrument can be shortened even further.

We designed our study to perform factor analyses of the 92-item CRAI, thereby identifying items that measure similar constructs. By removing redundant items, we hoped to arrive at an instrument that is substantially shorter but retains the validity and reliability of the longer instrument.

Method

Our study sample consisted of trainees who were enrolled from 2007 to 2012 in 12 degree or career development programs (e.g., a master of science program in clinical research) offered by the Institute for Clinical Research Education (ICRE) at the University of Pittsburgh. Participants in these programs (hereafter “trainees,” no matter their position) spanned the entire clinical research career spectrum, from medical school students to faculty members.

The ICRE asked all trainees in all its programs to provide informed consent and to complete a series of instruments covering a variety of topics. The University of Pittsburgh Institutional Review Board approved the ICRE survey administration under expedited review (IRB0608202). The instruments that trainees completed included questionnaires designed to obtain data on sociodemographic characteristics, career goals, mentoring, networking, resources, burnout, motivation, and important life events. While providing informed consent is not mandatory, annual completion of a subset of the instruments is required for program purposes (the ICRE does not use the data of those who do not provide consent in research or publications). The CRAI is administered at the start and end of each program.

For the study reported here, we used only baseline data (data from the start of each program). The data were derived from the original 92-item CRAI, which asks trainees to rate their confidence in performing a range of clinical research tasks related to 10 domains (see above). Trainees rated their confidence on an 11-point scale (0 indicating no confidence to perform the task successfully today and 10 indicating total confidence).

We used Cronbach’s alpha to assess the internal consistency of the instrument, and we used inter-item correlations to assess redundancy among items. To test our instrument, we conducted exploratory factor analyses (EFAs) on the training dataset (2007-2010) using Stata version 12 (Stata Corporation, College Station, TX). We used the principal-factor method with a promax rotation to identify the number of factors and to generate factor loadings. To confirm our instrument, we used confirmatory factor analysis (CFA). Using Mplus version 6.11 (Muthén & Muthén, Los Angeles, CA), we extracted the two items with the highest loadings for each factor and conducted a higher-order CFA on the testing dataset (2011-2012) to model the relationships between clinical research self-efficacy and the factors and items. For the CFA, we used maximum likelihood with pairwise deletion, which allowed us to retain as many observations as possible.
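As an illustration of the internal-consistency statistic named above, the following is a minimal sketch of Cronbach's alpha for complete responses. It is not the Stata routine used in the study; the function name `cronbach_alpha` and the ratings in `demo` are hypothetical and serve only to show the calculation.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for complete responses.

    `responses` is a list of rows, one per respondent; each row holds that
    respondent's scores on the k items of a scale (no missing values).
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 0-10 confidence ratings from five respondents on a two-item factor.
demo = [[2, 3], [5, 5], [7, 6], [8, 9], [4, 4]]
alpha_demo = cronbach_alpha(demo)
```

Alpha approaches 1 as the items move together across respondents, which is why it is read here as a measure of internal consistency.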

In additional tests to validate both the original and shortened CRAI scales, we used a convergent factor, which we hypothesized would show strong correlation with confidence in performing research, and a divergent factor, which we hypothesized would be unrelated to confidence in performing research. We selected the percentage of time spent on research as the convergent factor, and we selected a measure of extrinsic motivation from the Work Preference Inventory8 as the divergent factor. Both items are components of the surveys that trainees completed when they completed the CRAI. Since trainees in 2012 completed the CRAI-12, we used the training data from this year for validation.

For all analyses, we considered a two-tailed P value of < .05 to be significant.

Results

Number of participants

Of the 402 trainees who enrolled in clinical research degree and training programs from 2007 to 2010, 382 (95%) consented to participating in the research study, 255 (63%) responded to every item on the CRAI, and 333 (83%) responded to at least one item in the CRAI. Of the 144 trainees in the testing dataset (2011-2012), 139 (97%) consented, and all 139 responded to at least one item in the CRAI-12.

The EFA routine in Stata uses listwise deletion, leading to a final training data sample size for the EFA of 255. CFA in Mplus uses maximum likelihood and pairwise deletion, leading to a final testing sample size for the confirmatory factor analysis of 139.
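The difference between the two missing-data rules can be sketched as follows. This is only an illustration of how many observations each rule retains, with hypothetical responses and hypothetical function names; it does not reproduce the estimation performed by Stata or Mplus.

```python
def listwise_n(rows):
    """Number of respondents with no missing item (None marks a skipped item)."""
    return sum(1 for row in rows if all(v is not None for v in row))


def pairwise_n(rows, i, j):
    """Number of respondents who answered both item i and item j."""
    return sum(1 for row in rows if row[i] is not None and row[j] is not None)


# Hypothetical responses from four trainees to three items.
rows = [
    [7, 8, 6],
    [5, None, 4],
    [None, 3, 2],
    [9, 9, None],
]

# Listwise deletion keeps only the fully complete respondent.
complete = listwise_n(rows)

# Pairwise deletion keeps, for each pair of items, everyone who answered both.
pair_counts = {
    (i, j): pairwise_n(rows, i, j)
    for i in range(3)
    for j in range(i + 1, 3)
}
```

In this toy example, listwise deletion retains one respondent, whereas every pair of items is still informed by two respondents under pairwise deletion.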

Characteristics of the study sample

The sample of 255 trainees in the training data included slightly more men than women. The mean age was 31 years, and the majority of trainees (65%, n = 166) were white. Trainees spanned the pipeline of career development, but most (59%, n = 151) had a doctoral degree (Table 1). Of the 52 respondents who were faculty members (20%), all but one were early in their career (i.e., at the instructor or assistant professor level). The participants in the testing sample had similar characteristics, with only slight differences in gender and degree type (see Table 1).

Table 1.

Sociodemographic Characteristics of Study Participants, 2007-2012*

| Characteristic | Number (% of 255) participants in the training sample | Number (% of 139) participants in the testing sample | P value |
|---|---|---|---|
| Gender | | | 0.005 |
| Female | 112 (44) | 82 (59) | |
| Male | 132 (52) | 56 (40) | |
| Missing | 11 (4) | 1 (1) | |
| Race/ethnicity | | | 0.086 |
| White | 166 (65) | 82 (59) | |
| African American | 11 (4) | 15 (11) | |
| Asian | 57 (22) | 27 (19) | |
| Other | 1 (< 1) | 2 (1) | |
| Missing | 20 (8) | 13 (9) | |
| Position | | | 0.015 |
| Fellow or postdoctoral scholar | 74 (29) | 58 (42) | |
| Faculty member | 52 (20) | 18 (13) | |
| Medical student | 45 (18) | 18 (13) | |
| Clinical doctorate student (other than medical student) | 29 (11) | 7 (5) | |
| Resident | 28 (11) | 20 (14) | |
| PhD student | 21 (8) | 15 (11) | |
| MD/PhD student | 5 (2) | 1 (1) | |
| Master’s degree student | 0 | 2 (1) | |
| Missing | 1 (< 1) | 0 | |
| Degree type | | | 0.001 |
| MD | 123 (48) | 71 (51) | |
| BS or BA | 72 (28) | 27 (19) | |
| PhD | 13 (5) | 15 (11) | |
| Other doctoral degree | 12 (5) | 8 (6) | |
| MS | 13 (5) | 14 (10) | |
| MD plus PhD | 3 (1) | 4 (3) | |
| No undergraduate degree | 4 (2) | 0 | |
| Missing | 15 (6) | 0 | |

*Because of rounding, percentages may not total 100. The mean (standard deviation) age in years of the participants in the training sample was 31 (6.18) and the mean (standard deviation) age in years of the participants in the testing sample was 32 (5.9) (P = .354).

Assessment of the instrument

Based on our training data, Cronbach’s alpha for the entire 92-item CRAI was 0.99, and for each of the 10 domains it ranged from 0.93 to 0.98 (Table 2).

Table 2.

Cronbach’s alpha of the 92-Item Clinical Research Appraisal Inventory (CRAI-92) and the 12-Item CRAI (CRAI-12), Along with the Mean Score for Each from a Survey of 255 Trainees, 2007-2010

| Domain/Factor* (No. of items) | Cronbach’s alpha | Mean (standard deviation) |
|---|---|---|
| CRAI-92 | | |
| Designing a study (12) | 0.97 | 5.3 (2.1) |
| Collecting, recording, and analyzing data (11) | 0.97 | 5.0 (2.4) |
| Reporting a study (12) | 0.97 | 5.9 (2.2) |
| Interpreting data (4) | 0.97 | 6.1 (2.3) |
| Presenting a study (3) | 0.93 | 6.5 (2.2) |
| Conceptualizing a study (10) | 0.97 | 6.2 (1.8) |
| Collaborating with others (8) | 0.95 | 6.3 (1.9) |
| Planning and managing a research study (11) | 0.97 | 5.1 (2.2) |
| Funding a study (10) | 0.98 | 4.4 (2.5) |
| Protecting research subjects and responsible conduct of research (11) | 0.97 | 5.4 (2.2) |
| CRAI-12 | | |
| Factor 1 (2) | 0.89 | 4.9 (2.4) |
| Factor 2 (2) | 0.94 | 6.3 (2.4) |
| Factor 3 (2) | 0.80 | 6.6 (1.9) |
| Factor 4 (2) | 0.87 | 4.5 (2.4) |
| Factor 5 (2) | 0.92 | 4.6 (2.7) |
| Factor 6 (2) | 0.88 | 5.7 (2.4) |

*The authors refer to the 10 factors in the original CRAI-92 as “domains” and the 6 factors in their CRAI-12 as “factors.”

To determine whether this high level of internal consistency was due to the large number of items or the high correlation among the items, we examined relationships among domains and items. We found that correlations among domains ranged from 0.72 to 0.89 and that correlations of items within the domains ranged from 0.60 to 0.86 (data not shown). Across the 92 individual items, correlations ranged from 0.33 to 0.94, and the mean correlation was 0.60. The majority of the correlations were greater than 0.60, indicating a high degree of redundancy among items.
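The question of whether a high alpha reflects scale length or inter-item correlation can be illustrated with the standardized form of Cronbach's alpha, which depends only on the number of items and the mean inter-item correlation. The sketch below is an approximation for illustration, not the calculation reported in the study; the function name `standardized_alpha` is hypothetical, and the two-item case is a hypothetical comparison.

```python
def standardized_alpha(k, mean_r):
    """Standardized Cronbach's alpha from item count k and mean inter-item correlation."""
    return k * mean_r / (1 + (k - 1) * mean_r)


# Item count and mean inter-item correlation as reported in the text.
alpha_92 = standardized_alpha(92, 0.60)

# Hypothetical two-item scale with the same mean correlation, for comparison.
alpha_2 = standardized_alpha(2, 0.60)
```

With 92 items and a mean correlation of 0.60, the formula gives roughly 0.99, in line with the reported value. The same mean correlation on a two-item scale gives 0.75, which shows how much of a very high alpha can come from length alone.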

To ensure that the high level of correlation was not due to a response set pattern, we examined the raw training data and found that some degree of variability occurred among responses across the items, as evident in the standard deviations shown in Table 2.

To reduce the number of items and eliminate the redundancy, we conducted several EFAs. Given the high degree of correlation among domains, we selected a promax rotation to allow the factors to correlate. We retained factors that had an eigenvalue greater than 1 and reduced the model by a single factor at a time until we obtained a solution that had multiple items per factor, with each item loading strongly on a single factor. With the 255 complete survey responses, our first model resulted in an 8-factor solution in which the eighth factor consisted of a single item. We conducted another EFA, this time specifying a 7-factor solution, and found similar results. For the 7-factor model, no items sufficiently loaded onto the seventh factor. Finally, when we ran an EFA that specified a 6-factor solution, we found that the 6 factors retained 3 of the original factors, had minimal cross-loading of items, and explained 85% of the variance.

In the 6-factor model (Table 3), we retained the same factor names as the original CRAI,2 combining them where appropriate, to be consistent with the literature and constructs of the original authors. Factor 1 combined items from 2 original domains (designing a study and collecting data); factor 2 combined items from 3 original domains (reporting a study, interpreting data, and presenting a study); factor 3 combined items from 2 original domains (conceptualizing a study and collaborating with others); and factors 4, 5, and 6 each consisted of items from 1 original domain (respectively, planning and managing a research study; funding a study; and protecting research subjects and the responsible conduct of research). We retained the 6-factor solution and, to shorten the measure as much as possible, selected only the two items from each factor that had the highest loading for our CFA.
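The item-selection step described above (keeping the two highest-loading items per factor) can be sketched as follows. The loadings, the item names `budget` and `irb`, and the function name `top_items_per_factor` are hypothetical and for illustration only; they are not the study's EFA estimates.

```python
def top_items_per_factor(loadings, n=2):
    """For each factor, keep the n items with the largest absolute loading.

    `loadings` maps factor name -> {item name: loading}. Each item is assumed
    to be already assigned to the single factor on which it loads most strongly.
    """
    selected = {}
    for factor, items in loadings.items():
        ranked = sorted(items, key=lambda item: abs(items[item]), reverse=True)
        selected[factor] = ranked[:n]
    return selected


# Hypothetical loadings, for illustration only (not the study's estimates).
example_loadings = {
    "funding": {"grant": 0.81, "funding": 0.88, "budget": 0.64},
    "protecting": {"ethics": 0.79, "informed consent": 0.85, "irb": 0.70},
}
short_form = top_items_per_factor(example_loadings)
```

Applied to six factors with `n=2`, a rule of this kind yields a 12-item instrument.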

Table 3.

Factors and Items in the 12-Item Clinical Research Appraisal Inventory (CRAI-12)

| Factor (Previous domains*) | Item (abbreviated name) |
|---|---|
| Factor 1 (Designing and collecting) | Design the best data analysis strategy for your study (analysis) |
| | Determine an adequate number of subjects for your research project (number of subjects) |
| Factor 2 (Reporting, interpreting, and presenting) | Write the results section of a research paper that clearly summarizes and describes the results, free of interpretative comments (results) |
| | Write a discussion section for a research paper that articulates the importance of your findings relative to other studies in the field (discussion) |
| Factor 3 (Conceptualizing and collaborating) | Select a suitable topic area for study (topic) |
| | Identify faculty collaborators from within and outside the discipline who can offer guidance to the project (collaborators) |
| Factor 4 (Planning) | Set expectations and communicate them to project staff (expectations) |
| | Ask staff to leave the project team when necessary (staff) |
| Factor 5 (Funding) | Describe the proposal review and award process for a major funding agency, such as the National Institutes of Health, National Science Foundation, or other foundation (funding) |
| | Locate appropriate forms for a grant application (grant) |
| Factor 6 (Protecting) | Describe ethical concerns with the use of placebos in clinical research (ethics) |
| | Apply the appropriate process for obtaining informed consent from research subjects (informed consent) |

*Domain names have been shortened slightly.

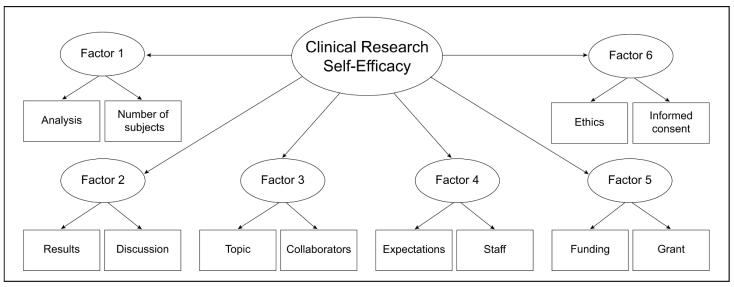

We used Mplus to analyze the hypothesized model using the testing dataset, with clinical research self-efficacy as the higher-order factor and with the 6 lower-order factors shown in Figure 1. Results indicated that the model fit the data well: root mean square error of approximation (RMSEA) = 0.08; comparative fit index (CFI) = 0.97; and Tucker-Lewis index (TLI) = 0.96. All component factors were significantly associated with the higher-order factor (i.e., clinical research self-efficacy), and all items were significantly associated with their related factors (P < .001). Standardized estimates for the relationship between component factors and the higher-order factor ranged from 0.77 to 0.86, with R² values ranging from 0.59 to 0.74 (Table 4). Standardized estimates for the relationship between items and their related factors ranged from 0.75 to 0.97, with R² values ranging from 0.57 to 0.94 (Table 5).

Figure 1.

Higher-order factor model for the 12-item Clinical Research Appraisal Inventory (CRAI-12). Factors and items are described in full in Table 3.

Table 4.

Results of Confirmatory Factor Analysis of the 12-Item Clinical Research Appraisal Inventory (CRAI-12): Association of Component Factors with Research Self-Efficacy

| Factor no.: Name | Estimate (standard error) | R² |
|---|---|---|
| 1: Designing and collecting | 0.84 (0.03) | 0.71 |
| 2: Reporting, interpreting and presenting | 0.84 (0.03) | 0.71 |
| 3: Conceptualizing and collaborating | 0.77 (0.05) | 0.59 |
| 4: Planning | 0.86 (0.04) | 0.74 |
| 5: Funding | 0.85 (0.03) | 0.72 |
| 6: Protecting | 0.81 (0.04) | 0.65 |
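In a standardized solution in which each component factor depends on a single higher-order factor, the R² for that factor is the square of its standardized estimate. The short check below applies this to the values reported in Table 4. It is a consistency sketch, not part of the original analysis, and small gaps are expected because the table reports rounded estimates.

```python
# Standardized estimates and R^2 values as reported in Table 4.
table4 = {
    "Designing and collecting": (0.84, 0.71),
    "Reporting, interpreting and presenting": (0.84, 0.71),
    "Conceptualizing and collaborating": (0.77, 0.59),
    "Planning": (0.86, 0.74),
    "Funding": (0.85, 0.72),
    "Protecting": (0.81, 0.65),
}

# Gap between the squared estimate and the reported R^2 for each factor.
gaps = {name: abs(est ** 2 - r2) for name, (est, r2) in table4.items()}
max_gap = max(gaps.values())
```

Every squared estimate falls within 0.01 of the reported R², which is what rounding to two decimals would produce.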

Table 5.

Results of Confirmatory Factor Analysis of the 12-Item Clinical Research Appraisal Inventory (CRAI-12): Association of Individual Items to Their Factors

| Abbreviated name of item | Factor no.: Name | Estimate (standard error) | R² |
|---|---|---|---|
| Analysis | 1: Designing and collecting | 0.96 (0.02) | 0.93 |
| Number of subjects | 1: Designing and collecting | 0.93 (0.02) | 0.87 |
| Results | 2: Reporting | 0.97 (0.01) | 0.94 |
| Discussion | 2: Reporting | 0.97 (0.01) | 0.93 |
| Topic | 3: Conceptualizing and collaborating | 0.75 (0.05) | 0.57 |
| Collaborators | 3: Conceptualizing and collaborating | 0.90 (0.04) | 0.82 |
| Expectations | 4: Planning | 0.91 (0.03) | 0.84 |
| Staff | 4: Planning | 0.82 (0.04) | 0.67 |
| Funding | 5: Funding | 0.96 (0.02) | 0.92 |
| Grant | 5: Funding | 0.93 (0.02) | 0.87 |
| Ethics | 6: Protecting | 0.86 (0.03) | 0.74 |
| Informed consent | 6: Protecting | 0.95 (0.03) | 0.90 |

Cronbach’s alphas for factors in the revised 12-item scale (CRAI-12) ranged from 0.80 to 0.94 (Table 2). Factors in the CRAI-12 were strongly and significantly associated with factors in the CRAI-92 and had correlations ranging from 0.82 to 0.96 (P < .001 for each; Table 6).

Table 6.

The Correlations Between the 92-Item Clinical Research Appraisal Inventory (CRAI-92) and the 12-Item CRAI (CRAI-12)

| Domain from CRAI-92 | Factor from CRAI-12 | Correlation |
|---|---|---|
| Designing a study | 1 | 0.94 |
| Collecting, recording, and analyzing data | 1 | 0.87 |
| Reporting a study | 2 | 0.96 |
| Interpreting data | 2 | 0.84 |
| Presenting a study | 2 | 0.82 |
| Conceptualizing a study | 3 | 0.83 |
| Collaborating with others | 3 | 0.90 |
| Planning and managing a research study | 4 | 0.93 |
| Funding a study | 5 | 0.96 |
| Protecting research subjects and responsible conduct of research | 6 | 0.95 |

To compare the two instruments on the convergent and divergent factors, we used Spearman correlations (ρ) because the distributions were skewed. Both the CRAI-92 and the CRAI-12 correlated identically with the percentage of time spent on research, the convergent factor: ρ = 0.27 (P < .01) for each instrument. Although this correlation is low, we were more concerned with how it compared with the correlation with the divergent factor, which was much lower. Neither instrument correlated significantly with extrinsic motivation, the divergent factor: ρ = 0.08 (P = .32) for the CRAI-92 and ρ = 0.10 (P = .23) for the CRAI-12. These findings provide evidence for the construct validity of the CRAI-12, showing that it has convergent and discriminant validity and, importantly, performs similarly to the CRAI-92.
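For readers unfamiliar with the rank-based correlation used here, the following is a minimal sketch of Spearman's ρ, computed as the Pearson correlation of average ranks. The data and function names are hypothetical and are not drawn from the study; the sketch omits the significance test.

```python
def average_ranks(values):
    """Ranks starting at 1, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1
        for idx in order[pos:end + 1]:
            ranks[idx] = shared
        pos = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: percent time on research and a mean confidence score.
pct_research = [10, 20, 20, 50, 80, 90]
confidence = [3.0, 4.5, 4.0, 6.0, 9.5, 7.0]
rho = spearman_rho(pct_research, confidence)
```

Because the statistic depends only on ranks, it is unaffected by the skew in the raw distributions, which is the reason given for using it here.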

Discussion

The purpose of our study was to create an instrument that is substantially shorter than the original CRAI but retains the original instrument’s strong psychometric properties. Our results indicate that the CRAI-12 maintains the original instrument’s level of reliability, as measured by its high internal consistency (Cronbach’s alpha 0.80 to 0.94; Table 2); is highly correlated with the original instrument (0.82 to 0.96; Table 6); and shows strong factorial validity, as demonstrated by the results of the higher-order factor analysis (R² values from 0.57 to 0.94; Table 5). Overall, our findings suggest that the CRAI-12 can serve as an alternative, shorter assessment of clinical research self-efficacy.

The condensed factor structure, decreased from the originally hypothesized 10 domains to our 6 factors, involved combining some factors, and we see theoretical relationships in the factors that we combined. For example, we combined designing a study with collecting, recording, and analyzing data. This makes sense given that we view data collection, management, and analysis as inherent to the study design. Similarly, reporting a study, interpreting data, and presenting a study all involve communicating study results to others in a transparent fashion. Mullikin and colleagues also combined reporting and presenting into a single factor upon refining their 10 factors into 8.2 Finally, we combined conceptualizing and collaborating. In training physician-scientists, we emphasize the multi-disciplinary nature of clinical research and encourage trainees to seek collaborators from other disciplines during the conceptualization stage; therefore, it seems natural to us that these constructs be combined.

We believe that trainees who complete the shorter version of the CRAI will experience less burden, will be more likely to complete the survey, will be less likely to fall into a response set pattern, and will, therefore, provide more accurate responses. The convenience of using the shortened version should increase the likelihood that institutions will administer the CRAI to their trainees. A more widespread use of the instrument will provide both practical and theoretical benefits. One practical benefit will arise from the likely administration of the CRAI to individuals both before and after they participate in clinical research training programs, allowing improved evaluation of the programs. Another practical benefit emanates from the likely number of institutions that will administer the instrument, which will give potential trainees and program directors alike the opportunity to compare programs across institutions. On the theoretical level, when data from the CRAI are combined with data on additional factors influencing career success,9 the clinical research and academic medicine communities may be able to increase both their knowledge of the components that lead to improved self-confidence in conducting clinical research and their understanding of the association between a clinical researcher’s self-confidence and his or her career success. The improved program evaluation data and the increased understanding of research efficacy could, in turn, lead to programmatic improvement and enhanced training.

Wider administration of the CRAI will also allow future research into whether high clinical research self-efficacy leads to enhanced productivity, higher-impact publications, and/or other quantifiable types of academic career success.

Our study had several limitations that deserve mention. First, we gathered our data from a single institution and the participants were relatively homogeneous in terms of race/ethnicity and degree level. This may limit the generalizability of the findings. Second, like the original CRAI, the CRAI-12 assesses only student confidence in performing clinical research. It does not assess actual ability or competence. Further studies should be conducted to determine whether a verifiable relationship between confidence and ability exists.

To date, the original CRAI has been used in a small number of studies. Lev, Kolassa, and Bakken10 used it to study whether faculty members’ perceptions of a student’s research abilities matched the perceptions of the student; Bakken and colleagues11 used it to assess a short-term research training program and an efficacy-enhancing intervention for novice female biomedical scientists of diverse racial backgrounds; and Lipira and colleagues7 used it to measure how clinical research self-efficacy changed after one year of training in three different clinical research programs. Use of the CRAI-12 in studies involving diverse populations and programs will add to the depth of knowledge regarding the optimal environment and structure of clinical research training.

Conclusions

As clinical research training programs, including those in CTSAs, increase in number and size to meet the demand for more trained physician-scientists, the ability to evaluate programs for purposes of improvement will become even more important. The CRAI-12 will provide a streamlined method of evaluation that retains the validity and reliability of the original instrument.

Acknowledgments

Funding/Support: The National Institutes of Health supported this study through Grant Numbers UL1 RR024153 and UL1TR000005.

Footnotes

Other disclosures: None.

Ethical approval: The University of Pittsburgh institutional review boards reviewed and approved the use of annual trainee data for research purposes.

Disclaimer: The ideas expressed in this article are solely the views of the authors and do not necessarily represent the official views of the National Institutes of Health.


Contributor Information

Dr. Georgeanna F.W.B. Robinson, Institute for Clinical Research Education, School of Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

Dr. Galen E. Switzer, Center for Health Equity Research and Promotion, VA Pittsburgh Healthcare System, Pittsburgh, Pennsylvania, and professor of medicine, psychiatry, and clinical and translational science, School of Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

Mr. Elan D. Cohen, Center for Research on Health Care Data Center, School of Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

Dr. Brian A. Primack, School of Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

Dr. Wishwa N. Kapoor, Department of Medicine, director, Center for Research on Health Care and Institute for Clinical Research Education, and codirector, Clinical and Translational Science Institute, School of Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

Ms. Deborah L. Seltzer, Department of Medicine, and director of research development, Center for Research on Health Care and Institute for Clinical Research Education, School of Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

Dr. Lori L. Bakken, Department of Interdisciplinary Studies, School of Human Ecology, University of Wisconsin, Madison, Wisconsin.

Dr. Doris McGartland Rubio, Center for Research on Health Care Data Center, and codirector, Institute for Clinical Research Education, School of Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

References

1. Drolet BC, Lorenzi NM. Translational research: Understanding the continuum from bench to bedside. Transl Res. 2011;157:1–5. doi: 10.1016/j.trsl.2010.10.002.
2. Mullikin EA, Bakken LL, Betz NE. Assessing research self-efficacy in physician-scientists: The Clinical Research APPraisal Inventory. J Career Assess. 2007;15:367–387.
3. Bandura A. Self-efficacy: Toward a unifying theory of behavioral change. Psychol Rev. 1977;84:191–215. doi: 10.1037//0033-295x.84.2.191.
4. Lent RW, Brown SD, Hackett G. Toward a unifying social cognitive theory of career and academic interest, choice, and performance. J Vocat Behav. 1994;45:79–122.
5. Kahn JH. Predicting the scholarly activity of counseling psychology students: A refinement and extension. J Couns Psychol. 2001;48:344–354.
6. Galesic M, Bosnjak M. Effects of questionnaire length on participation and indicators of response quality in a web survey. Public Opin Quart. 2009;73:349–360.
7. Lipira L, Jeffe DB, Krauss M, et al. Evaluation of clinical research training programs using the Clinical Research Appraisal Inventory. Clin Transl Sci. 2010;3:243–248. doi: 10.1111/j.1752-8062.2010.00229.x.
8. Amabile TM, Hill KG, Hennessey BA, Tighe EM. The Work Preference Inventory: Assessing intrinsic and extrinsic motivational orientations. J Pers Soc Psychol. 1994;66:950–967. doi: 10.1037//0022-3514.66.5.950.
9. Rubio DM, Primack BA, Switzer GE, Bryce CL, Seltzer DL, Kapoor WN. A comprehensive career-success model for physician-scientists. Acad Med. 2011;86:1571–1576. doi: 10.1097/ACM.0b013e31823592fd.
10. Lev EL, Kolassa J, Bakken LL. Faculty mentors’ and students’ perceptions of students’ research self-efficacy. Nurse Educ Today. 2010;30:169–174. doi: 10.1016/j.nedt.2009.07.007.
11. Bakken LL, Byars-Winston A, Gundermann DM, et al. Effects of an educational intervention on female biomedical scientists’ research self-efficacy. Adv Health Sci Educ Theory Pract. 2010;15:167–183. doi: 10.1007/s10459-009-9190-2.