Abstract

Expressions of emotion are often brief, providing only fleeting images on which to base important social judgments. We sought to characterize the sensitivity and mechanisms of emotion detection and expression categorization when exposure to faces is very brief, and to determine whether these processes dissociate. Observers viewed 2 backward-masked facial expressions in quick succession, 1 neutral and the other emotional (happy, fearful, or angry), in a 2-interval forced-choice task. On each trial, observers attempted to detect the emotional expression (emotion detection) and to classify the expression (expression categorization). Above-chance emotion detection was possible with extremely brief exposures of 10 ms and was most accurate for happy expressions. We compared categorization among expressions using a d′ analysis, and found that categorization was usually above chance for angry versus happy and fearful versus happy, but consistently poor for fearful versus angry expressions. Fearful versus angry categorization was poor even when only negative emotions (fearful, angry, or disgusted) were used, suggesting that this categorization is poor independent of decision context. Inverting faces impaired angry versus happy categorization, but not emotion detection, suggesting that information from facial features is used differently for emotion detection and expression categorization. Emotion detection often occurred without expression categorization, and expression categorization sometimes occurred without emotion detection. These results are consistent with the notion that emotion detection and expression categorization involve separate mechanisms.

Keywords: emotion detection, expression categorization, face-inversion effect, awareness, face processing

Facial expressions of emotion convey important social information and are thus essential to detect and discriminate. Emotional expressions are identified consistently across cultures when viewing time is relatively long (e.g., Ekman & Friesen, 1971), and they can even be processed involuntarily (Dimberg, Thunberg, & Elmehed, 2000) or without conscious awareness of seeing the face (Li, Zinbarg, Boehm, & Paller, 2008; Murphy & Zajonc, 1993; Sweeny, Suzuki, Grabowecky, & Paller, 2009; Whalen et al., 1998). Rapidly detecting and categorizing facial expressions is helpful because real emotional encounters can be exceedingly brief. However, our knowledge of this ability is incomplete in many ways. What information is extracted during brief viewing? To what extent do different types of processing contribute to rapidly detecting an emotional expression versus determining which expression it was? Understanding these processes could elucidate perceptual mechanisms that shape our everyday emotional behavior.

Many studies of brief emotional expressions have investigated unconscious or subliminal perception. These studies typically use backward masking, a technique in which awareness of an emotional face can be blocked by a subsequent stimulus (e.g., Sweeny et al., 2009; Whalen et al., 1998). Although some observers in these investigations report no awareness of the expressions, it is unclear what information might still be available to them for conscious report. Some research suggests that the presentation durations used in many of these studies (usually around 30 ms) may be too long to completely block conscious access to information relevant to categorizing emotional expressions (Szczepanowski & Pessoa, 2007) and that some expressions (particularly happy expressions) are often less effectively masked than others (Maxwell & Davidson, 2004; Milders, Sahraie, & Logan, 2008). This investigation takes a closer look at what emotional information is accessible from briefly presented (and backward-masked) faces.

Emotion detection and expression categorization may unfold separately, and they may rely on different types of information in a face. For example, coarse information (e.g., teeth) available from an exceedingly brief presentation may only enable the ability to detect that an emotional expression was present, whereas subtle categorization between expressions may require a longer presentation duration and more complex information, such as emergent and holistic combinations of features within a face. This prediction has precedents in (a) findings suggesting that face detection and identification are separate processes that occur at separate levels of visual processing (e.g., Liu, Harris, & Kanwisher, 2002; Sugase, Yamane, Ueno, & Kawano, 1999; Tsao, Freiwald, Tootell, & Livingstone, 2006), and (b) studies of object recognition, which show that detecting whether an object is present dissociates from categorizing the object (Barlasov-Ioffe & Hochstein, 2008; Mack, Gauthier, Sadr, & Palmeri, 2008). Behavioral evidence has hinted that such a two-stage system of detection and categorization may apply to the processing of emotional expressions, because a fearful expression is relatively easy to discriminate from a neutral expression (i.e., it can be easy to “detect” emotion) compared with discriminating between fear and another emotional expression (Goren & Wilson, 2006).

The goal of this study was to characterize how affective information in a face rapidly becomes available. Specifically, we were interested in determining (a) whether or not emotion detection and expression categorization unfold differently over time, (b) whether or not these abilities occur uniquely for different emotional expressions, (c) what information is typically used to detect or categorize emotional expressions, and (d) whether emotion detection and expression categorization dissociate. To answer these questions, we determined how emotion detection and expression categorization performance varied (a) across variations in presentation time, (b) with different emotional expressions, (c) with upright versus inverted faces (primarily engaging configural vs. featural processing; see below), (d) with faces that did or did not show teeth, (e) across observers, and (f) when one or the other failed (i.e., Could emotion detection occur without expression categorization, or could expression categorization occur without emotion detection?).

Observers viewed fearful, angry, happy, and neutral expressions briefly presented for 10, 20, 30, 40, or 50 ms in a two-interval forced-choice design. One interval contained a face with an emotional expression masked by a face with a surprise expression. The other interval contained a face with a neutral expression masked by the same surprise face. Masking with surprise faces holds several advantages (see Method section). On each trial, the first task was to indicate which interval contained the emotional expression (emotion detection), and the second task was to classify the expression of the emotional face as fearful, angry, or happy (expression categorization).

Experiment 1

Method

Observers

Thirty undergraduate students at Northwestern University gave informed consent to participate for course credit. All had normal or corrected-to-normal visual acuity and were tested individually in a dimly lit room. Fifteen observers viewed upright faces, and 15 viewed inverted faces.

Stimuli

We selected five categories of faces from the Karolinska Directed Emotional Faces set (Lundqvist, Flykt, & Öhman, 1998) according to their emotional expressions: 24 with neutral expressions, eight with surprise expressions, eight with fearful expressions, eight with angry expressions, and eight with happy expressions (see Supplemental Figure 1). Half of the faces depicted men and half depicted women. We used eight faces from each expression category to minimize the possibility of identity-specific habituation. We also used 24 faces for the neutral expression category to minimize potential effects due to differences between faces other than their expressions. Color photographs of 53 different individuals were used, and 18 individuals appeared in more than one emotional expression category. We validated these expression categories prior to the current investigation in a separate pilot experiment in which 11 observers (who did not participate in this experiment) rated the expressions (each presented alone for 800 ms) on a 6-point scale ranging from 1 (most negative) to 6 (most positive). The pilot included 70 unique faces from each expression category, and we selected the best exemplars for each category on the basis of the valence ratings. The mean valence ratings of the faces selected for this experiment were 3.43 (SD = 0.37) for surprise expressions, 3.48 (SD = 0.095) for neutral expressions, 1.59 (SD = 0.228) for fearful expressions, 1.53 (SD = 0.25) for angry expressions, and 5.60 (SD = 0.18) for happy expressions. Each face was cropped with an elliptical stencil to exclude hair, which we deemed distracting from the emotionally relevant internal facial features (Tyler & Chen, 2006). Faces were then scaled to be approximately the same size with respect to the distance from the hairline to the chin and the distance between the left and right cheeks. Each face subtended 2.75° by 3.95° of visual angle.
The faces were embedded in a rectangular mask of Gaussian noise subtending 2.98° by 4.24° of visual angle. Faces in the inverted condition were identical except that they were inverted. Four of the eight fearful expressions and two of the eight angry expressions clearly showed teeth (see Supplemental Figure 1). All of the happy expressions showed teeth.
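As a rough check on the stimulus geometry, the visual angles above can be converted into on-screen sizes with size = 2D·tan(θ/2), using the 100-cm viewing distance reported in the Method. This is our own sketch (the helper names are hypothetical, not part of the original procedure); at 100 cm the 2.75° by 3.95° face works out to roughly 4.8 cm by 6.9 cm.

```python
import math

VIEWING_DISTANCE_CM = 100.0  # viewing distance reported in the Method


def angle_to_size_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Physical extent (cm) that subtends angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def size_cm_to_angle(size_cm: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Visual angle (deg) subtended by an object of size_cm at distance_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


if __name__ == "__main__":
    # Angular sizes taken from the Stimuli section (width x height).
    for label, (w, h) in {"face": (2.75, 3.95), "noise mask": (2.98, 4.24)}.items():
        print(f"{label}: {angle_to_size_cm(w):.2f} cm x {angle_to_size_cm(h):.2f} cm")
```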

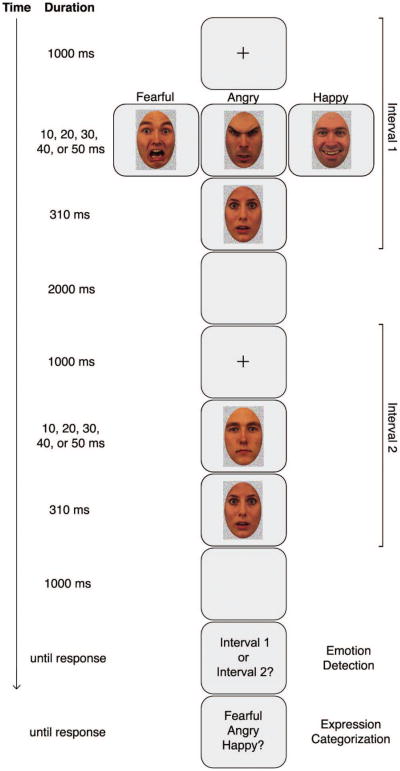

Figure 1.

Two-interval forced-choice task. A central fixation cross was shown, followed by the first interval, which contained either an emotional expression (fearful, angry, or happy) or a neutral expression backward masked by a neutral-valence surprise expression. This was followed by a blank screen, a central fixation cross, and the second interval, which contained a neutral or an emotional expression (depending on the content of the first interval) backward masked by the same surprise expression. Shortly afterward, observers were prompted to indicate which interval contained the emotional expression (emotion detection) and then to classify that expression as fearful, angry, or happy (expression categorization). Note that the faces are not to scale. Photographs taken from the Karolinska Directed Emotional Faces. Copyright 1998 by Karolinska Institutet, Department of Clinical Neuroscience, Section of Psychology, Stockholm, Sweden. Reprinted with permission.

Based on a wavelet transform algorithm that models V1 activity (Willmore, Prenger, Wu, & Gallant, 2008), there were no differences in the degree to which the expression categories (fearful, angry, and happy) should have activated low-level visual areas (ps > .42). Thus, any differences between expression categories in emotion detection or expression categorization in this investigation are unlikely to be due to differences in low-level neural representations of the faces, but rather due to differences in high-level representations of facial features and feature configurations.

The experiment consisted of 240 two-interval forced-choice trials. Each trial contained two intervals, one with an emotional target face immediately backward masked by a surprise face and the other with a neutral face masked by the same surprise face (see Figure 1). Each of the 24 emotional faces was always paired with a specific neutral face (of the same gender) and a specific surprise-face mask (of the same gender as the emotional and neutral faces half of the time). The same set of eight surprise faces was paired with each emotional category. For most pairings, the emotional face, the neutral face, and the surprise-face mask were of different individuals, except for two pairings in which the emotional face and the neutral face were of the same individual. We note that mismatched identity pairings, overall, were likely to be beneficial for our purposes; introducing task-irrelevant identity changes on most trials should have reduced the effectiveness of using a simple physical change-detection strategy to detect the interval with the emotional expression (in Experiment 3, we confirmed that observers most likely did not use such a change-detection strategy). Each of the 24 face pairings was presented twice at each of the five durations, once with the emotional face first and once with the emotional face second (see Procedure section).
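The counterbalancing arithmetic in this paragraph (24 fixed pairings × 5 durations × 2 interval orders = 240 trials, or 80 per expression and 16 per expression at each duration) can be made concrete with a short sketch. The pairing indices, dictionary keys, and the fully shuffled trial order are hypothetical; the text does not say how trial order was randomized.

```python
import random
from collections import Counter
from itertools import product

EXPRESSIONS = ("fearful", "angry", "happy")
FACES_PER_EXPRESSION = 8                  # eight emotional faces per category
DURATIONS_MS = (10, 20, 30, 40, 50)
ORDERS = ("emotional_first", "emotional_second")


def build_trial_list(seed=None):
    """One trial per pairing x duration x order (pairing indices are hypothetical)."""
    n_pairings = len(EXPRESSIONS) * FACES_PER_EXPRESSION  # 24 fixed emotional/neutral/mask pairings
    trials = [
        {
            "pairing": p,
            "expression": EXPRESSIONS[p // FACES_PER_EXPRESSION],
            "duration_ms": d,
            "order": o,
        }
        for p, d, o in product(range(n_pairings), DURATIONS_MS, ORDERS)
    ]
    random.Random(seed).shuffle(trials)  # assumption: fully randomized order
    return trials


if __name__ == "__main__":
    trials = build_trial_list(seed=1)
    print(len(trials))                                # 240
    print(Counter(t["expression"] for t in trials))   # 80 trials per expression
```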

Surprise faces were well suited as backward masks in this experiment because they are high in arousal yet neutral in valence (Kim et al., 2004), which allows them to effectively mask the features of the previously presented face without systematically imparting a positive or negative valence. Face pairings were the same for each observer. It is unlikely that the perceived valence of the surprise-face masks systematically interfered with the expression categorization task because the masks were selected to be neutral in valence on average (see above). The mean valence ratings of the neutral faces paired with the different expression categories were also closely matched. When presented alone in a pilot experiment, mean valence ratings were 3.47 (SD = 0.102) for the neutral faces paired with angry faces, 3.49 (SD = 0.083) for the neutral faces paired with fearful faces, and 3.49 (SD = 0.108) for the neutral faces paired with happy faces.

It would have been optimal to have an equal number of faces strongly showing teeth and faces not showing teeth (for determining how our results may depend on visible teeth). Our top priority, however, was to select the highest quality emotional expressions. Based on the results of our affective norming procedure, there were not enough high-quality exemplars of each emotional category available to create a sufficiently large set of stimuli with balanced sets of faces with teeth and without teeth. We note, however, that our emotional categories are reasonably well balanced in terms of teeth (see Supplemental Figure 1).

Stimuli were presented on a 19-in. color CRT monitor driven at 100 Hz vertical refresh rate using Presentation software (Version 13.0; http://www.neurobs.com). The viewing distance was 100 cm.

Procedure

Each trial began with the first interval consisting of a fixation cross presented for 1,000 ms followed by an emotional (or neutral) face presented for 10, 20, 30, 40, or 50 ms, backward masked by a surprise face for 310 ms. Surprise masks have been used in previous investigations with similar (Sweeny et al., 2009) or longer durations (Li et al., 2008). This was followed by a 2,000-ms blank screen and the second interval consisting of a 1,000-ms fixation followed by a neutral (or emotional) face backward masked by the same surprise face for 310 ms. We selected the durations of the emotional expressions on the basis of evidence that the thresholds for objective and subjective awareness lie within this range (Szczepanowski & Pessoa, 2007; Sweeny et al., 2009). The durations were verified using a photosensitive diode and an oscilloscope. Emotional and neutral faces were always presented for equivalent durations within each trial. A delay of 1,000 ms transpired at the end of the second interval, and then a response screen prompted each observer to indicate whether the emotional face appeared in the first or second interval by pressing one of two buttons on a keypad. This allowed us to assess emotion detection. The next response screen prompted each observer to indicate whether the emotional face was fearful, angry, or happy by pressing one of three buttons on the same keypad. This allowed us to assess expression categorization. The assignment of detection and categorization choices to specific keys was counterbalanced across observers. The use of a two-interval forced-choice design allowed us (a) to obtain a measure of emotion detection uncontaminated by response bias and (b) to obtain measures of both emotion detection and expression categorization on every trial. The intertrial interval was jittered between 1,600 and 2,400 ms. There was no time limit for either response, but observers were encouraged to respond quickly, using their “gut feeling” if necessary. 
Observers completed a practice session of approximately 15 trials randomly selected from the entire set before starting the experiment. Observers had no previous experience with the faces and were not advised to use any specific strategy for detecting emotion or categorizing the expressions. Half of the observers saw only upright faces and the remaining observers saw only inverted faces. Three 1-min breaks were given in the course of the 240 experimental trials.
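Because the monitor refreshed at 100 Hz, each video frame lasted 10 ms, so the five target durations correspond to one to five frames and the 310-ms mask to 31 frames. The sketch below encodes this and the within-trial event sequence described above. The event labels are ours, and the response periods and the jittered intertrial interval (1,600 to 2,400 ms) are deliberately left out.

```python
REFRESH_HZ = 100               # CRT vertical refresh rate from the Method
FRAME_MS = 1000 / REFRESH_HZ   # 10.0 ms per video frame


def frames_for(duration_ms):
    """Number of whole video frames needed to show a stimulus for duration_ms."""
    frames = duration_ms / FRAME_MS
    if abs(frames - round(frames)) > 1e-9:
        raise ValueError(f"{duration_ms} ms is not a whole number of {FRAME_MS:g}-ms frames")
    return round(frames)


def trial_timeline(target_ms):
    """(event, duration_ms) pairs for one 2IFC trial, up to the first response prompt."""
    interval = [("fixation", 1000), ("face", target_ms), ("surprise mask", 310)]
    return interval + [("blank", 2000)] + interval + [("delay before prompt", 1000)]


if __name__ == "__main__":
    for d in (10, 20, 30, 40, 50):
        total = sum(ms for _, ms in trial_timeline(d))
        print(f"{d}-ms target = {frames_for(d)} frame(s); {total} ms before the prompt")
```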

Rationale for the use of upright and inverted faces

One tactic we used to examine whether emotion detection and expression categorization depend on different facial information was comparing judgments of upright and inverted faces. Inverting a face substantially impairs identity discrimination by disrupting the processing of configural information (i.e., the spatial relationship and distance between facial features), while leaving discriminations that can be made using individual features relatively unimpaired (e.g., Leder & Bruce, 2000; see Rossion & Gauthier, 2002, for a review, but see Richler, Gauthier, Wenger, & Palmeri, 2008, and Wenger & Ingvalson, 2002, for evidence that some results attributed to holistic processing can be explained in terms of shifts in decision criterion). Inversion has also been shown to impair configural processing of emotional expressions viewed for relatively long durations (Calder, Young, Keane, & Dean, 2000) and to impair recognition of briefly flashed emotional expressions (Goren & Wilson, 2006; Prkachin, 2003), suggesting that the mechanisms underlying expression categorization rely on configural processing. We determined whether face inversion similarly or differentially affected emotion detection and expression categorization. The results would indicate the degree to which these processes depend on configural versus feature-based processes. For example, the presence of emotion might be detected primarily by the presence of features such as wrinkles and exposed teeth that are common across many expressions (relative to neutral), whereas discriminating expressions might rely on more elaborate configural processing.

Results

Emotion detection with upright faces

Detection of emotional expressions was possible with extremely brief presentations and was better with happy expressions than with fearful or angry expressions (see Figure 2a). Emotion detection was reliably better than chance (50%) even at the 10-ms duration: happy expressions, t(14) = 5.53, p < .001, d = 1.43; fearful expressions, t(14) = 2.24, p < .05, d = 0.527; angry expressions, t(14) = 2.86, p < .05, d = 0.737. A repeated measures analysis of variance (ANOVA) with duration (10, 20, 30, 40, 50 ms) and expression (happy, fearful, angry) as the two factors and proportion correct as the dependent variable revealed a significant main effect of duration, F(4, 56) = 30.1, p < .001, ηp² = .682, and expression, F(2, 28) = 13.3, p < .001, ηp² = .487, with no interaction between duration and expression, F(8, 112) = 1.14, ns, ηp² = .076. Detection ability increased linearly with increases in duration: happy, t(14) = 6.15, p < .01, d = 1.59; fearful, t(14) = 6.77, p < .01, d = 1.75; angry, t(14) = 5.07, p < .01, d = 1.31, for linear contrasts. Averaged across all five durations, emotion detection was better with happy expressions (81.8%) than with either fearful expressions (71.2%), t(14) = 6.12, p < .001, d = 1.58, or angry expressions (68.9%), t(14) = 4.48, p < .001, d = 1.16, with no significant difference between fearful and angry expressions, t(14) = 0.732, ns, d = 0.189.
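The tests against chance and the linear-trend contrasts above can both be expressed as one-sample t tests on per-observer scores. A minimal sketch, assuming orthogonal weights (−2, −1, 0, 1, 2) for the five equally spaced durations (the text does not list the weights) and Cohen's d computed as the mean divided by the SD of the scores, which equals t/√n. p values would need a t-distribution CDF (e.g., from SciPy), omitted here to keep the sketch dependency-free.

```python
import math
from statistics import mean, stdev

# Orthogonal linear-trend weights for five equally spaced durations (10-50 ms).
# Assumption: the text reports only that linear contrasts were used.
LINEAR_WEIGHTS = (-2, -1, 0, 1, 2)


def linear_contrast_scores(acc_by_observer):
    """One contrast score per observer from five proportion-correct values (10 to 50 ms)."""
    return [sum(w * a for w, a in zip(LINEAR_WEIGHTS, accs)) for accs in acc_by_observer]


def one_sample_t(values, mu=0.0):
    """One-sample t test of values against mu; returns (t, df, Cohen's d = t / sqrt(n))."""
    n = len(values)
    m, s = mean(values), stdev(values)
    t = (m - mu) / (s / math.sqrt(n))
    return t, n - 1, (m - mu) / s


# Usage with hypothetical data (one row per observer, columns = durations):
# t, df, d = one_sample_t(linear_contrast_scores(rows))       # linear trend vs. 0
# t, df, d = one_sample_t([row[0] for row in rows], mu=0.5)   # 10-ms accuracy vs. chance
```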

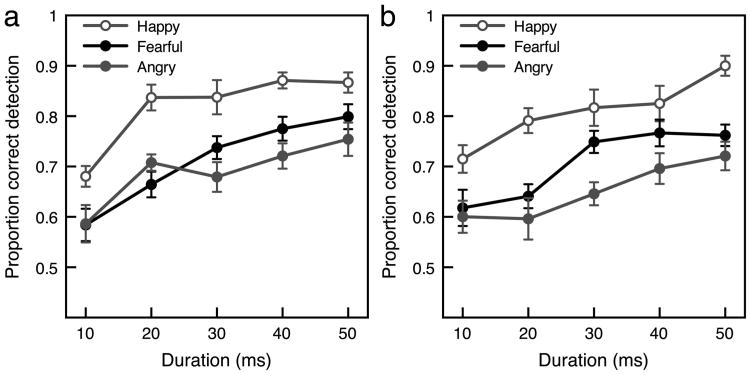

Figure 2.

Emotion detection. (a) Mean proportion correct for emotion detection for upright faces with three expressions as a function of stimulus duration. Observers detected emotion better with happy expressions than with fearful or angry expressions. (b) Mean proportion correct for emotion detection for inverted faces with three expressions as a function of stimulus duration. Inversion did not impair emotion detection compared with when faces were upright. Error bars for both panels indicate ±1 SEM (adjusted for within-observer comparisons).

Emotion detection with inverted faces

As with upright faces, emotion detection with inverted faces was remarkably accurate and was best with happy expressions (see Figure 2b). Emotion detection was reliably better than chance (50%) at the 10-ms duration: happy, t(14) = 4.21, p < .001, d = 1.09; fearful, t(14) = 3.98, p < .01, d = 1.03; angry, t(14) = 2.95, p < .05, d = 0.761. A repeated measures ANOVA with duration (10, 20, 30, 40, 50 ms) and expression (happy, fearful, angry) as the two factors revealed significant main effects of duration, F(4, 56) = 11.4, p < .001, ηp² = .448, and expression, F(2, 28) = 14.6, p < .001, ηp² = .510, with no interaction between duration and expression, F(8, 112) = 1.15, ns, ηp² = .076. Detection ability increased linearly with increases in duration: happy, t(14) = 5.18, p < .01, d = 1.34; fearful, t(14) = 4.09, p < .01, d = 1.06; angry, t(14) = 3.09, p < .01, d = 0.798, for linear contrasts. Averaged across all five durations, emotion detection was better with happy expressions (80.9%) than with either fearful expressions (70.7%), t(14) = 3.47, p < .01, d = 0.895, or angry expressions (65.1%), t(14) = 4.59, p < .001, d = 1.19. Unlike with upright faces, emotion detection with inverted faces was significantly better with fearful expressions than with angry expressions, t(14) = 3.31, p < .01, d = 0.855. Overall, inverted faces yielded a pattern of results similar to that found with upright faces for emotion detection as there was no significant difference between upright and inverted faces for any expression: t(28) = 0.127, ns, d = 0.048, for happy; t(28) = 0.092, ns, d = 0.034, for fearful; or t(28) = 0.925, ns, d = 0.338, for angry.
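The effect sizes reported alongside each F ratio are partial eta squared values, which can be recovered from F and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). The check below is our own sketch; it reproduces the reported detection values to within the rounding of the published F ratios.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# (label, F, df_effect, df_error, reported value) from the detection ANOVAs above.
REPORTED = [
    ("upright: duration", 30.1, 4, 56, 0.682),
    ("upright: expression", 13.3, 2, 28, 0.487),
    ("upright: interaction", 1.14, 8, 112, 0.076),
    ("inverted: duration", 11.4, 4, 56, 0.448),
    ("inverted: expression", 14.6, 2, 28, 0.510),
    ("inverted: interaction", 1.15, 8, 112, 0.076),
]

if __name__ == "__main__":
    for label, f, df1, df2, reported in REPORTED:
        print(f"{label}: computed {partial_eta_squared(f, df1, df2):.3f}, reported {reported:.3f}")
```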

Because emotion detection performance was nearly identical for upright and inverted faces, observers likely used information from one or more salient facial features to detect emotion (e.g., Leder & Bruce, 2000; see Rossion & Gauthier, 2002, for a review). Teeth might be one such feature: they have been shown to influence emotional judgments, although they do not necessarily improve categorization, at least in infants (Caron, Caron, & Myers, 1985).

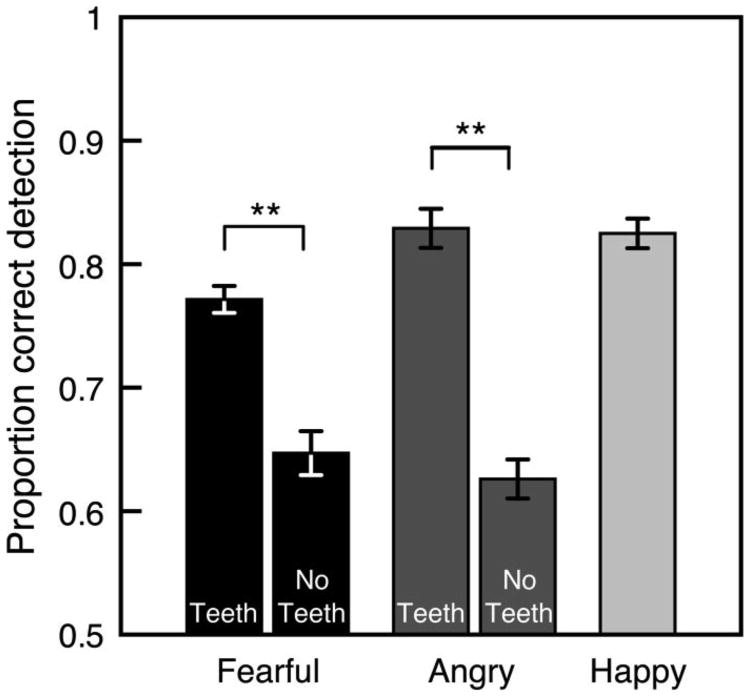

Do teeth facilitate emotion detection?

The visibility of teeth in the masked face improved detection of emotional expressions (see Figure 3). We compared emotion detection when emotional expressions clearly showed teeth with detection when they did not, but only for fearful and angry expressions (all happy expressions clearly showed teeth). Note that the emotion detection task did not require observers to categorize the emotional expression; we explore whether teeth influenced expression categorization in a separate section below. To increase power, we combined detection data from observers who viewed upright and inverted faces. This decision was supported by the lack of a significant interaction in a mixed-design ANOVA with expression (happy, fearful, angry) as the repeated measures factor and orientation (upright, inverted) as the between-observers factor, F(2, 56) < 1, ns, ηp² = .012. Emotion detection was better with fearful expressions that clearly showed teeth than with fearful expressions that did not, t(29) = 4.98, p < .001, d = 0.909, and with angry expressions that clearly showed teeth than with angry expressions that did not, t(29) = 7.20, p < .001, d = 1.31.
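The teeth comparison reduces to per-observer detection accuracies for faces with and without visible teeth, followed by a paired t test across the 30 pooled observers (df = 29); the reported d values are consistent with d_z = t/√n. A minimal sketch; the trial-record keys are hypothetical, and it assumes every observer saw both kinds of face for the expression in question.

```python
import math
from collections import defaultdict
from statistics import mean, stdev


def teeth_accuracy(trials, expression):
    """Per-observer detection accuracy for one expression, split by teeth visibility.

    trials: iterable of dicts with hypothetical keys 'observer', 'expression',
    'shows_teeth' (bool), and 'correct' (bool).
    Returns two lists aligned by observer: (with_teeth, without_teeth).
    """
    counts = defaultdict(lambda: {True: [0, 0], False: [0, 0]})  # [n_correct, n_trials]
    for tr in trials:
        if tr["expression"] == expression:
            cell = counts[tr["observer"]][bool(tr["shows_teeth"])]
            cell[0] += int(tr["correct"])
            cell[1] += 1
    observers = sorted(counts)
    with_teeth = [counts[o][True][0] / counts[o][True][1] for o in observers]
    without_teeth = [counts[o][False][0] / counts[o][False][1] for o in observers]
    return with_teeth, without_teeth


def paired_t(x, y):
    """Paired t test; returns (t, df, d_z), where d_z = mean difference / SD of differences."""
    if len(x) != len(y):
        raise ValueError("x and y need one score per observer")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    m, s = mean(diffs), stdev(diffs)
    return m / (s / math.sqrt(n)), n - 1, m / s
```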

Figure 3.

Mean proportion correct for emotion detection with fearful, angry, and happy expressions as a function of whether or not the expressions displayed teeth (averaged across upright and inverted face presentations). Observers detected emotion better when fearful and angry expressions displayed teeth compared with when they did not. Error bars indicate ±1 SEM (adjusted for within-observer comparisons).

Expression categorization with upright faces

A major goal of this study was to assess the ability to categorize between specific pairs of expressions using an analysis of categorization sensitivity (d′). When observers have more than two response options, the way in which they err can be informative. For example, if observers miscategorized an angry expression, whether they erred by responding “fearful” or “happy” can be meaningful. Taking the type of error into account requires separate calculations of angry–fearful and angry–happy categorization sensitivity. Traditional calculation of d′ classifies both of these erroneous responses as a “miss” (i.e., “not angry”) with no regard to the specific way in which the observer missed the target expression. To obtain measures of categorization sensitivity between specific expressions, we calculated d′ for each pair of expressions using hits, misses, false alarms, and correct rejections specific to each expression pair. For example, we calculated d′ to measure categorization sensitivity between angry and happy expressions as follows: hit = responding “angry” on an angry trial, miss = responding “happy” on an angry trial, false alarm = responding “angry” on a happy trial, and correct rejection = responding “happy” on a happy trial, with

$$H = \frac{\text{hits}}{\text{hits} + \text{misses}}, \qquad F = \frac{\text{false alarms}}{\text{false alarms} + \text{correct rejections}},$$

and

$$d' = z(H) - z(F),$$

where $z(\cdot)$ is the inverse of the standard normal cumulative distribution function.

Theoretically similar calculations of d′ have been used successfully in other investigations (e.g., Galvin, Podd, Drga, & Whitmore, 2003; Szczepanowski & Pessoa, 2007).
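The pairwise d′ described above uses only the two responses that name the pair, so responses naming the third expression drop out of that pair's calculation. A minimal sketch using Python's standard library; the `counts` layout is hypothetical, and the optional log-linear correction (adding 0.5 to each of the four cells so that rates of 0 or 1 do not produce infinite z scores) is our assumption, since the text does not say how extreme rates were handled.

```python
from statistics import NormalDist


def pairwise_d_prime(counts, target, other, correction=0.5):
    """d' for telling `target` from `other` expressions, ignoring third-category responses.

    counts[presented][response] -> number of trials (hypothetical layout).
    correction: constant added to each of the four cells (log-linear rule);
    an assumption, not a detail reported in the text. Use 0.0 for raw rates.
    """
    hits = counts[target][target] + correction          # "target" on target trials
    misses = counts[target][other] + correction         # "other" on target trials
    false_alarms = counts[other][target] + correction   # "target" on other trials
    correct_rej = counts[other][other] + correction     # "other" on other trials
    hit_rate = hits / (hits + misses)
    fa_rate = false_alarms / (false_alarms + correct_rej)
    z = NormalDist().inv_cdf                             # inverse standard normal CDF
    return z(hit_rate) - z(fa_rate)


if __name__ == "__main__":
    # Hypothetical counts for one observer at one duration.
    counts = {
        "angry": {"fearful": 5, "angry": 30, "happy": 10},
        "happy": {"fearful": 3, "angry": 10, "happy": 30},
    }
    print(round(pairwise_d_prime(counts, "angry", "happy", correction=0.0), 3))  # 1.349
```

Swapping `target` and `other` returns the same value, because z(1 − F) − z(1 − H) = z(H) − z(F), so this pairwise d′ is symmetric in the two expressions.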

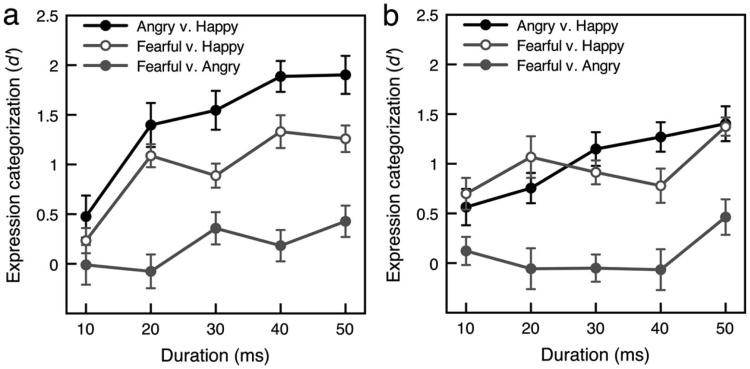

Categorization was best between negative and positive expressions, particularly good between angry and happy expressions, and sometimes above chance with very brief durations (see Figure 4a; also see Table 1 for a confusion matrix). A repeated measures ANOVA with duration (10, 20, 30, 40, 50 ms) and expression pair (angry vs. happy, fearful vs. happy, fearful vs. angry) as the two factors and d′ as the dependent variable revealed significant main effects of duration, F(4, 56) = 9.64, p < .001, ηp² = .408, and expression pair, F(2, 28) = 37.4, p < .001, ηp² = .728, and an interaction between duration and expression pair, F(8, 112) = 4.30, p < .001, ηp² = .235. This interaction reflected greater improvements with increases in duration for categorization between positive and negative expressions (angry vs. happy and fearful vs. happy) than for categorization between negative expressions (fearful vs. angry). Performance increased linearly with increases in duration for angry versus happy and fearful versus happy categorizations, t(14) = 4.17, p < .01, d = 1.08, and t(14) = 4.78, p < .01, d = 1.24, respectively, for linear contrasts, but only marginally for fearful versus angry categorization, t(14) = 1.93, p = .075, d = 0.497, for linear contrast. Both angry versus happy and fearful versus happy categorizations became greater than chance (d′ = 0) starting at the 20-ms duration, t(14) = 5.21, p < .001, d = 1.35, and t(14) = 7.84, p < .001, d = 2.02, respectively, whereas fearful versus angry categorization remained relatively poor across durations (averaged across all durations, t(14) = 1.87, ns, d = 0.482). When data were averaged across all durations, the following results obtained: Categorization between angry and happy expressions was better than categorization between fearful and happy expressions, t(14) = 4.94, p < .001, d = 1.27.
Categorization between both angry and happy expressions, t(14) = 7.17, p < .001, d = 1.85, and fearful and happy expressions, t(14) = 4.99, p < .001, d = 1.29, were better than categorization between fearful and angry expressions.

Figure 4.

Expression categorization. (a) Categorization sensitivity of upright emotional expressions as a function of stimulus duration. (b) Categorization sensitivity of inverted emotional expressions as a function of stimulus duration. Error bars for both panels indicate ±1 SEM (adjusted for within-observer comparisons).

Table 1. Proportion of Fearful, Angry, or Happy Responses for Upright Expressions from Experiment 1.

| Presented expression | Fearful response | Angry response | Happy response |
|---|---|---|---|
| Fearful | 0.396 | 0.294 | 0.308 |
| Angry | 0.413 | 0.420 | 0.166 |
| Happy | 0.197 | 0.162 | 0.640 |

Expression categorization with inverted faces

Inversion selectively impaired categorization between angry and happy expressions (see Figure 4b; see Table 2 for a confusion matrix). A repeated measures ANOVA with duration (10, 20, 30, 40, 50 ms) and expression pair (angry vs. happy, fearful vs. happy, fearful vs. angry) as the two factors and d′ as the dependent variable revealed significant main effects of duration, F(4, 56) = 3.83, p < .01, ηp² = .215, and expression pair, F(2, 28) = 25.1, p < .001, ηp² = .642, and an interaction between duration and expression pair, F(8, 112) = 2.58, p < .05, ηp² = .156. As with upright faces, this interaction reflected greater improvements with increases in duration for categorizations between positive and negative expressions (angry vs. happy and fearful vs. happy) than for categorization between negative expressions (fearful vs. angry). Performance increased linearly with increases in duration for angry versus happy and fearful versus happy categorizations, t(14) = 3.86, p < .01, d = 1.0, and t(14) = 2.31, p < .05, d = 0.60, respectively, for linear contrasts, but not for fearful versus angry categorization, t(14) = 1.41, ns, d = 0.363, for linear contrast. Both angry versus happy and fearful versus happy categorizations became greater than chance (d′ = 0) starting at the 20-ms duration, t(14) = 3.79, p < .01, d = 0.979, and t(14) = 5.36, p < .001, d = 1.38, respectively, whereas fearful versus angry categorization remained relatively poor across durations (averaged across all durations, t(14) = 0.939, ns, d = 0.242). When data were averaged across all durations, unlike with upright faces, categorization between angry and happy expressions did not differ from categorization between fearful and happy expressions when faces were inverted, t(14) = 0.179, ns, d = 0.103.
This change from the pattern with upright faces was statistically reliable; the advantage of the angry versus happy categorization over the fearful versus happy categorization was significantly larger with upright faces than with inverted faces, t(28) = 2.31, p < .05, d = 0.863. All other results mirrored performance with upright faces: averaged across all durations, categorization between both angry and happy expressions, t(14) = 5.60, p < .001, d = 1.46, and fearful and happy expressions, t(14) = 7.31, p < .001, d = 1.89, were better than categorization between fearful and angry expressions.
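The upright versus inverted comparison above pits two independent groups of 15 observers against each other on a per-observer difference score, giving t(28). A minimal sketch, assuming each observer's score is the angry-versus-happy d′ minus the fearful-versus-happy d′ averaged over durations, and assuming Student's pooled-variance t test. The text does not state which test variant or which d convention was used, so the d returned here (mean difference over pooled SD) is one common choice, not a reconstruction of the reported value.

```python
import math
from statistics import mean, variance


def advantage_scores(dprimes_by_observer):
    """Per-observer angry-vs-happy d' minus fearful-vs-happy d', averaged over durations.

    Each item is a dict with hypothetical keys 'AH' and 'FH', each a list of
    d' values (one per duration).
    """
    return [mean(obs["AH"]) - mean(obs["FH"]) for obs in dprimes_by_observer]


def independent_t(group1, group2):
    """Student's two-sample t test (pooled variance); returns (t, df, d).

    d is the mean difference divided by the pooled SD, one common convention.
    """
    n1, n2 = len(group1), len(group2)
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / df)
    diff = mean(group1) - mean(group2)
    t = diff / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))
    return t, df, diff / pooled_sd


# Usage (hypothetical data):
# t, df, d = independent_t(advantage_scores(upright_obs), advantage_scores(inverted_obs))
```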

Table 2. Proportion of Fearful, Angry, or Happy Responses for Inverted Expressions from Experiment 1.

| Presented expression | Fearful response | Angry response | Happy response |
|---|---|---|---|
| Fearful | 0.392 | 0.312 | 0.294 |
| Angry | 0.418 | 0.401 | 0.180 |
| Happy | 0.177 | 0.245 | 0.578 |

Do teeth influence categorization of an emotional expression?

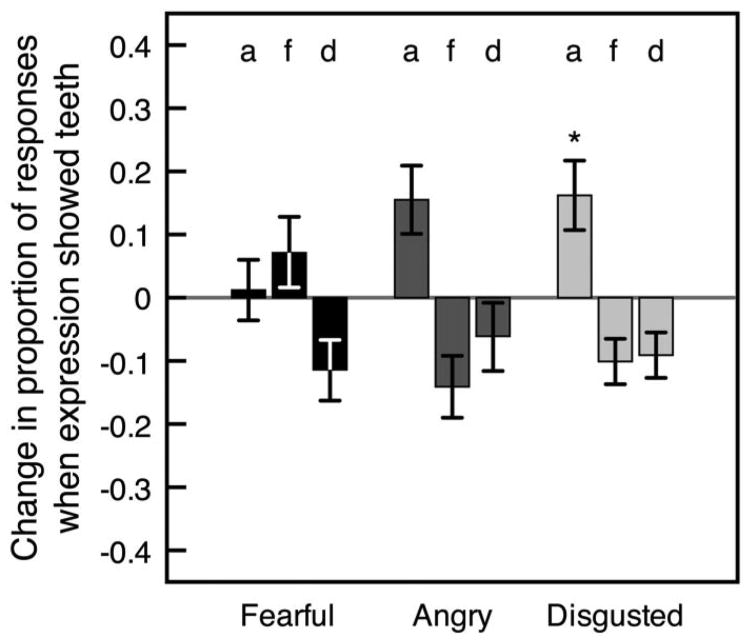

Expressions that showed teeth were perceived differently from those that did not. Because all happy faces displayed teeth, we examined categorizations of angry and fearful faces on trials in which observers correctly detected the interval containing the emotional face. Specifically, we calculated the difference in the proportion of categorizations of these faces as angry, fearful, or happy when fearful and angry faces clearly showed teeth compared with when they did not. We applied an arcsine adjustment to each value to offset compression at high proportion values and then compared each difference score against zero, which would indicate no change in categorization due to teeth. We collapsed the data across upright and inverted presentations, which yielded the same pattern as when the two were analyzed separately. Clear displays of teeth made a fearful expression less likely to appear fearful, t(29) = 3.41, p < .05, d = 0.622, and more likely to appear happy, t(29) = 4.73, p < .001, d = 0.862, but did not affect the likelihood of its categorization as angry, t(29) = 1.37, ns, d = 0.251 (all p values are corrected for multiple comparisons; see Figure 5). Likewise, clear displays of teeth made an angry expression less likely to appear fearful, t(29) = 4.89, p < .001, d = 0.893, and more likely to appear happy, t(29) = 2.92, p < .05, d = 0.862, but did not affect the likelihood of its categorization as angry, t(29) = 1.35, ns, d = 0.247. Taken together, teeth clearly helped observers detect that an emotional face was present but also caused systematic miscategorizations, particularly by making fearful and angry expressions appear happy. These results are consistent with previous findings with infants (Caron et al., 1985).
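The text does not specify the exact form of the arcsine adjustment. A common choice, assumed here, is the angular transform asin(√p) applied to each proportion before taking the teeth-minus-no-teeth difference; it stretches the scale near 0 and 1, so an equal raw change counts for more there than near .5. The helper names and the input layout are hypothetical.

```python
import math


def arcsine(p):
    """Arcsine (angular) transform of a proportion: asin(sqrt(p)), in radians."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a proportion between 0 and 1")
    return math.asin(math.sqrt(p))


def teeth_shift(props_with_teeth, props_without_teeth):
    """Transformed difference (teeth minus no teeth) for each response category.

    Both arguments map a response label ('angry', 'fearful', 'happy') to the
    proportion of correctly detected trials that received that categorization.
    """
    return {
        resp: arcsine(props_with_teeth[resp]) - arcsine(props_without_teeth[resp])
        for resp in props_with_teeth
    }


if __name__ == "__main__":
    # The same raw change of .05 is larger on the transformed scale near the ceiling.
    print(arcsine(0.95) - arcsine(0.90), arcsine(0.55) - arcsine(0.50))
```

Each observer's transformed differences would then be tested against zero, for example with a one-sample t test.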

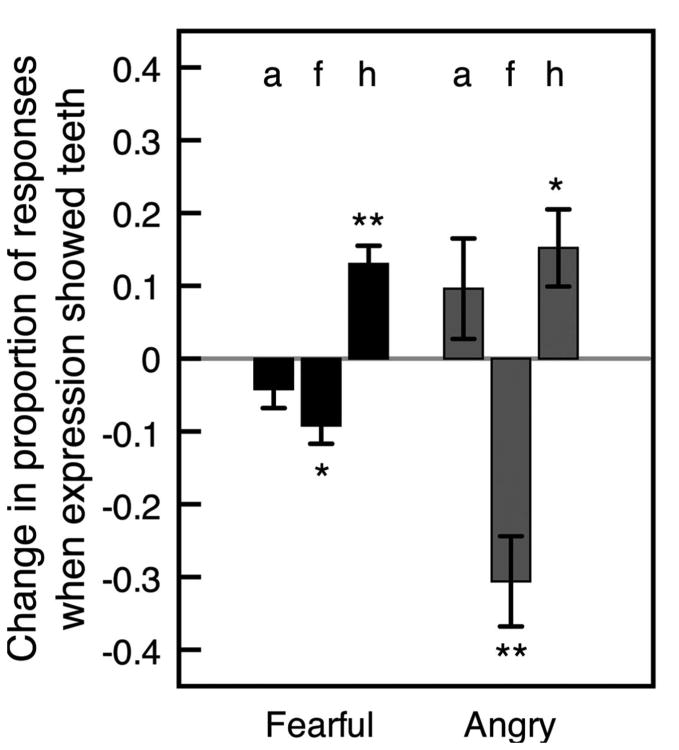

Figure 5.

Mean difference in proportion of each type of categorization between trials on which fearful and angry expressions clearly showed teeth and trials on which they did not show teeth (on trials with correct emotion detection). Positive values indicate that a categorization (a = angry, f = fearful, or h = happy) was given more often when the expression (fearful or angry) clearly showed teeth. Differences are arcsine adjusted to offset compression at high proportion values. Error bars indicate ±1 SEM (adjusted for within-observer comparisons).

How do emotion detection and expression categorization vary across observers?

Observers varied substantially in their abilities to detect emotion and categorize expressions (see Supplemental Figure 2). Although it is unclear whether above-chance detection and categorization occurred with or without conscious awareness of the emotional faces, these data are consistent with suggestions that investigations of “unconscious” processing should take care to examine objective awareness on an observer-by-observer basis (Szczepanowski & Pessoa, 2007).

How do emotion detection and expression categorization vary within observers?

Observers were consistent in their ability to detect emotion: an observer who was good (or bad) at detection with one expression was generally good (or bad) with another expression. Interobserver correlations between proportions of correct detection were positive and significant for all pairs of expressions, both with upright faces (angry vs. happy: r = .634, t(14) = 2.95, p < .05; fearful vs. happy: r = .881, t(14) = 6.74, p < .001; angry vs. fearful: r = .516, t(14) = 2.17, p < .05) and with inverted faces (angry vs. happy: r = .91, t(14) = 7.92, p < .001; fearful vs. happy: r = .915, t(14) = 8.18, p < .001; angry vs. fearful: r = .900, t(14) = 7.46, p < .001).

In contrast, categorization sensitivity for one pair of expressions did not always predict categorization sensitivity for another pair, at least with upright faces. Categorization for upright angry versus happy was positively correlated with categorization for fearful versus happy, r = .827, t(14) = 5.31, p < .001, but categorization for fearful versus angry was correlated neither with categorization for angry versus happy, r = .21, t(14) = 0.773, ns, nor with categorization for fearful versus happy, r = −.05, t(14) = 0.182, ns. This suggests that categorizing within negative expressions (fearful vs. angry) requires different information than categorizing between positive and negative expressions (angry vs. happy and fearful vs. happy). The critical information appears to be orientation-specific, as interobserver categorization correlations with inverted faces were positive and significant for all pairs of expressions: angry versus happy and fearful versus happy, r = .678, t(14) = 3.32, p < .001; angry versus happy and fearful versus angry, r = .620, t(14) = 2.85, p < .05; and fearful versus happy and fearful versus angry, r = .447, t(14) = 1.80, p < .01.

Does emotion detection predict expression categorization on a trial-by-trial basis?

Detecting that a face is emotional seems to be a simpler task than categorizing its expression. One might therefore predict that expressions would be more likely to be correctly categorized when emotion detection was successful. To test this, we compared expression categorization on trials with correct versus incorrect emotion detection. Categorization scores (assessed with a conventional calculation of d′ for each expression separately) were subjected to a repeated measures ANOVA with expression (happy, fearful, angry), duration (10, 20, 30, 40, 50 ms), and emotion detection (correct, incorrect) as within-subject factors. With upright faces, expression categorization was better when observers detected the interval containing the emotional face (d′ = 0.833) than when they did not (d′ = 0.137), F(1, 14) = 34.5, p < .01, ηp² = .711. This difference increased with longer durations, as revealed by an interaction between duration and emotion detection, F(4, 56) = 2.69, p < .05, ηp² = .161, and did so similarly for each expression, as revealed by the absence of an interaction among expression, duration, and emotion detection, F(8, 112) = 0.983, ns, ηp² = .066.
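The conventional d′ calculation mentioned above can be sketched as d′ = z(H) − z(FA), where z is the inverse of the standard normal cumulative distribution function. As an assumption about what "for each expression separately" means, H would be the proportion of faces with a given expression that were categorized as that expression, and FA the proportion of faces with other expressions that were nevertheless categorized as it. The 1/(2N) adjustment for rates of exactly 0 or 1 is one common convention, not necessarily the one used here, and the function name is hypothetical.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # inverse standard normal CDF (the z transform)


def d_prime(hit_rate, fa_rate, n_signal=None, n_noise=None):
    """Sensitivity d' = z(hit rate) - z(false-alarm rate).

    If trial counts are supplied, rates are clamped to [1/(2N), 1 - 1/(2N)]
    so that perfect scores do not produce infinite z values (an assumed
    convention). Without counts, rates must lie strictly between 0 and 1.
    """
    def clamp(rate, n):
        if n is None:
            return rate
        floor = 1.0 / (2 * n)
        return min(max(rate, floor), 1.0 - floor)

    return _z(clamp(hit_rate, n_signal)) - _z(clamp(fa_rate, n_noise))
```

In the analysis described above, such a d′ would be computed per observer, expression, and duration, separately for trials with correct and incorrect emotion detection, before the scores enter the ANOVA.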

With inverted faces, expression categorization was also better when observers detected the interval containing the emotional face (d′ = 0.569) than when they did not (d′ = 0.139), F(1, 14) = 15.9, p < .01, ηp² = .532. This difference was relatively stable across durations and expressions, as neither the interaction between duration and emotion detection, F(4, 51) = 2.01, ns, ηp² = .136, nor the interaction among expression, duration, and emotion detection, F(8, 89) = 1.93, ns, ηp² = .147, was significant.
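The effect sizes reported alongside the F statistics in this section appear to be partial eta squared, which can be recovered from an F ratio and its degrees of freedom as ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below (function name hypothetical) reproduces, for example, the values reported for the main effect of emotion detection with upright and inverted faces.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio: SS_effect / (SS_effect + SS_error)."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Main effect of emotion detection, upright faces: F(1, 14) = 34.5
print(round(partial_eta_squared(34.5, 1, 14), 3))  # prints 0.711
# Main effect of emotion detection, inverted faces: F(1, 14) = 15.9
print(round(partial_eta_squared(15.9, 1, 14), 3))  # prints 0.532
```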

Are emotion detection and expression categorization independent?

The preceding analyses show that emotion detection and expression categorization tend to co-occur on a trial-by-trial basis. However, this does not necessarily mean that these abilities are based on a single mechanism. Poor performance on emotion detection and expression categorization could co-occur on the same trials because other factors, such as lapses of attention or blinks, cause an observer to miss the relevant information. Accordingly, we examined whether emotion detection performance predicts individual differences in expression categorization performance.

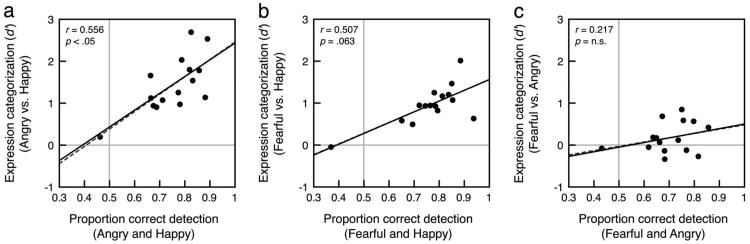

We computed interobserver correlations between emotion detection and expression categorization performance for upright faces, separately for angry and happy, fearful and happy, and fearful and angry expressions, averaged across duration. For ease of analysis and presentation (see Figure 6), we compared each categorization score (obtained for a pair of expressions, e.g., angry vs. happy) with a single emotion detection score (the average emotion detection with the corresponding expressions, e.g., the mean proportion correct for emotion detection with angry and happy expressions). Emotion detection was positively correlated with categorization for angry versus happy, r = .556, t(14) = 2.32, p < .05, and there was a trend toward a positive correlation with categorization for fearful versus happy, r = .507, t(14) = 2.04, p = .063. In contrast, emotion detection was not correlated with categorization for fearful versus angry, r = .217, t(14) = 0.801, ns. As Figure 6c shows, some observers performed well at emotion detection but poorly at expression categorization. Indeed, despite above-chance emotion detection with fearful, t(14) = 6.29, p < .01, and angry expressions, t(14) = 6.99, p < .01, categorization between fearful and angry expressions was not better than chance (see above for statistics; see Figures 2 and 4 for mean detection and categorization performance, respectively; see Supplemental Figure 2 for data from individual observers). This dissociation could reflect distinct mechanisms for emotion detection and expression categorization.
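The correlational analysis above can be sketched as follows. Each observer contributes one categorization d′ for a pair of expressions and one detection score equal to the mean proportion correct for those two expressions; Pearson's r is computed across observers, optionally excluding an observer (as with the outlier in Figure 6), and converted to t with the standard formula t = r√df / √(1 − r²), taking df = n − 2 for n included observers. The function names are hypothetical, and p values are omitted.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, df):
    """t statistic for testing r against zero with df degrees of freedom."""
    return r * math.sqrt(df) / math.sqrt(1 - r * r)


def detection_vs_categorization(det_a, det_b, categorization, exclude=()):
    """Correlate pairwise categorization d' with mean detection for the pair.

    det_a, det_b: per-observer proportion correct detection for each expression.
    categorization: per-observer d' for categorizing the two expressions.
    exclude: indices of observers to drop (e.g., an outlier).
    """
    skip = set(exclude)
    keep = [i for i in range(len(categorization)) if i not in skip]
    detection = [(det_a[i] + det_b[i]) / 2 for i in keep]
    cat = [categorization[i] for i in keep]
    r = pearson_r(detection, cat)
    return r, r_to_t(r, len(keep) - 2)
```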

Figure 6.

The interobserver correlation between emotion detection and expression categorization with upright (a) angry and happy expressions, (b) fearful and happy expressions, and (c) fearful and angry expressions, averaged across duration. We found equivalent results with inverted faces (not shown). For simplicity of analysis, we used a single value for emotion detection performance for each observer; each value on the x-axis represents the average emotion detection performance for the two expressions used in the corresponding categorization analysis. The vertical gray line indicates chance performance for emotion detection, and the horizontal gray line indicates chance performance for expression categorization. The solid black trend lines, r, and p values were computed for each panel with the obvious outlier removed (the same observer was below chance for emotion detection in all three comparisons). The gray dashed trend lines were computed with the outlier included.

Correlations between detection and categorization followed the same pattern with inverted faces. Emotion detection was positively correlated with categorization for angry versus happy, r = .629, t(13) = 2.92, p < .05, and for fearful versus happy, r = .681, t(14) = 3.23, p < .01, but was not significantly correlated with categorization for fearful versus angry, r = .404, t(14) = 1.53, ns. As with upright faces, despite above-chance emotion detection with inverted fearful, t(14) = 5.49, p < .01, and angry expressions, t(14) = 4.95, p < .01, categorization between fearful and angry expressions was not better than chance, t(14) = 0.939, ns, d = 0.242. The consistency of this dissociation across upright and inverted presentations of faces suggests that emotion detection and expression categorization rely on separate mechanisms even when configural processing is disrupted and a “part-based” strategy based on individual facial features is likely to be employed.

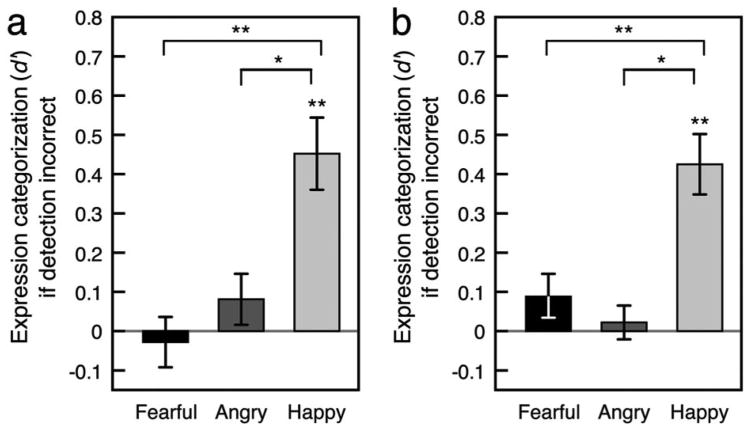

To further evaluate whether these two perceptual abilities are separable, we tested whether expression categorization was possible when emotion detection was incorrect (see Figure 7). For upright faces, a repeated measures ANOVA with expression and duration as factors and d′ as the dependent variable revealed a main effect of expression, F(2, 28) = 9.93, p < .01, ηp² = .415, no main effect of duration, F(4, 56) = 0.878, ns, ηp² = .059, and an interaction between expression and duration, F(8, 112) = 2.42, p < .05, ηp² = .147. The interaction reflected an inverted-U-shaped dependence on duration of categorization performance with happy faces when emotion detection was incorrect, t(14) = 3.37, p < .01, d = 0.871. Averaged across durations, categorization performance when detection was incorrect was above chance with happy expressions, t(14) = 3.43, p < .01, d = 0.884, but not significantly different from chance with angry expressions, t(14) = 0.965, ns, d = 0.249, or fearful expressions, t(14) = 0.273, ns, d = 0.071 (see Figure 7a). Pairwise comparisons revealed that categorization was better with happy expressions than with angry expressions, t(14) = 2.52, p < .05, d = 0.651, or with fearful expressions, t(14) = 3.30, p < .01, d = 0.852, but did not differ between angry and fearful expressions, t(14) = 1.22, ns, d = 0.314.

Figure 7.

Categorization sensitivity of (a) upright and (b) inverted emotional expressions as a function of expression when emotion detection was incorrect, averaged across durations. The gray horizontal line indicates chance performance. Error bars indicate ±1 SEM (adjusted for within-observer comparisons).

We obtained a similar pattern of results with inverted faces. A repeated measures ANOVA with expression and duration as the two factors and d′ as the dependent variable revealed a main effect of expression, F(2, 28) = 11.9, p < .01, ηp² = .457, but not of duration, F(4, 56) = 0.924, ns, ηp² = .062. Unlike with upright faces, we found no significant interaction between expression and duration, F(8, 112) = 1.65, ns, ηp² = .105. Categorization performance when detection was incorrect was above chance with happy expressions, t(14) = 3.24, p < .01, d = 0.837, but it was not significantly different from chance with angry expressions, t(14) = 0.96, ns, d = 0.247, or fearful expressions, t(14) = 0.301, ns, d = 0.078 (see Figure 7b). Pairwise comparisons revealed that categorization was better with happy expressions than with angry expressions, t(14) = 2.60, p < .05, d = 0.670, or with fearful expressions, t(14) = 3.61, p < .01, d = 0.933, but did not differ between angry and fearful expressions, t(14) = 0.986, ns, d = 0.255.

Because information used to categorize expressions is likely to be subtler than information used to detect the presence of an emotion, one might assume that categorization could not occur without emotion detection. Surprisingly, reliable categorization occurred without emotion detection when the face had a happy expression. This suggests that some characteristic features of a happy face provide sufficient information to allow explicit categorization of a happy expression even when they do not provide sufficient information to allow explicit detection of the presence of an emotional expression. In other words, even when one incorrectly judges that a briefly presented happy face did not have an emotional expression, one may still correctly categorize its expression as happy in a forced-choice categorization task. This is similar to the phenomenon of affective blindsight, in which a cortically blind patient correctly categorizes emotional expressions while reporting that he or she does not see the face (e.g., de Gelder, Vroomen, Pourtois, & Weiskrantz, 1999). It is unclear which features of a happy expression allow its categorization without detection. Teeth might seem to be such a feature, but this possibility is unlikely because teeth facilitated emotion detection overall but did not improve expression categorization (see above).

In summary, the fact that emotion detection occurred without expression categorization (for angry and fearful expressions) and expression categorization occurred without emotion detection (for happy expressions) suggests that emotion detection and expression categorization are supported by distinct mechanisms.

Discussion

We demonstrated that it is possible to detect that a face is emotional even when it is presented for only 10 ms and backward masked, and that emotion detection is best when a face has a happy expression. Emotion detection is also better when a face has a fearful expression than when it has an angry expression, consistent with findings that fearful expressions are relatively easy to discriminate from neutral expressions (Goren & Wilson, 2006). Emotion detection in our task appears to be based on detection of salient features rather than the configural relationship between facial features, given that inverting faces did not impair detection ability. Teeth appear to be a strong signal that a face is emotional, as emotion was detected better for angry and fearful faces that showed teeth than for those that did not. Teeth are not particularly useful for categorizing an expression, however, as they caused angry and fearful faces displaying teeth to be misclassified as “happy,” consistent with previous developmental findings (Caron et al., 1985). With a 20-ms exposure, observers were able to make categorizations between positive and negative expressions (happy and angry, happy and fearful) reasonably well, but categorizations between negative expressions (angry and fearful) tended to be poor at all durations, even at 50 ms.

Our categorization results testify to remarkably accurate emotion perception with minimal information, consistent with previous results in which observers were able to categorize expressions as angry, fearful, or happy in comparison with a neutral expression at durations of about 17 ms (Maxwell & Davidson, 2004; Szczepanowski & Pessoa, 2007). Another investigation found above-chance categorization among neutral, angry, fearful, and happy expressions at durations of 20 ms and even, for all except fearful expressions, 10 ms (Milders et al., 2008). The current investigation extends these findings to a range of presentation durations and to categorization between specific pairs of emotional expressions.

Categorization between positive and negative expressions may be more efficient and behaviorally more important than subtle categorization between negative expressions when viewing time is limited. Indeed, categorization between fearful and angry expressions was much worse than categorization between angry and happy expressions and between fearful and happy expressions, and it was only slightly better than chance even at the longest duration (50 ms). This is consistent with previous demonstrations that happy expressions are easy to categorize (Rapcsak et al., 2000; Susskind, Littlewort, Bartlett, Movellan, & Anderson, 2007), easy to discriminate from other expressions (Maxwell & Davidson, 2004; Milders et al., 2008), and recognized across cultures more consistently than other expressions (Russell, 1994). It is intriguing that categorization between angry and happy expressions was better than categorization between fearful and happy expressions. This may have been because fearful expressions are more difficult to classify than angry and happy expressions (Adolphs et al., 1999; Rapcsak et al., 2000; Susskind et al., 2007).

Inverting faces impaired only categorization between angry and happy expressions. Because inverting a face disrupts the processing of configural relationships among features, these data suggest that information from configural relationships adds to information from individual features to support categorization between upright angry and happy expressions, whereas the presence of a single feature or multiple features can support categorization between fearful and happy expressions. Previous research has shown that inversion impairs categorization of happy and fearful faces, but not angry faces (Goren & Wilson, 2006). However, comparisons with the current investigation should be made with caution because faces in the Goren and Wilson (2006) study were presented for 100 ms, were computer-generated faces that did not include teeth or texture information (e.g., wrinkles), and were masked by a geometric pattern instead of a surprise face. Another study found inversion effects for detecting a happy, angry, or fearful expression among other expressions (Prkachin, 2003). Comparisons with the current investigation are, again, not straightforward because Prkachin (2003) used 33-ms presentations without backward masks (allowing visible persistence). Furthermore, she required observers to indicate when a specific target expression had been presented, a task with very different demands from detecting an emotional face and then categorizing its expression, as in the current study. Inversion might generally produce poor performance when observers are looking for a specific expression if they do so by using a “template” of the target expression, which is likely to be represented in the upright orientation. Our results suggest that in a more general situation, in which observers need to categorize each expression, happy and angry expressions are especially discriminable in the upright orientation.
The current investigation adds to these prior studies by assessing inversion effects both for detecting emotion (any emotion against a neutral expression rather than detection of a specific target expression among other expressions) and for making categorizations between specific pairs of expressions, as a function of stimulus duration (controlled with backward masking), using photographed faces.

It is interesting that our observers varied substantially in their emotion detection and expression categorization abilities. This degree of person-to-person variability suggests that above-chance performance on these two measures is not tied to a single, absolute presentation duration.

It is surprising that both emotion detection and expression categorization were poorer with negative-valence expressions (fearful and angry) than with a positive-valence expression (happy), given the extensive research supporting the existence of subcortical units specialized for threat detection (Anderson, Christoff, Panitz, De Rosa, & Gabrieli, 2003; Breiter et al., 1996; LeDoux, 1996; Vuilleumier, Armony, Driver, & Dolan, 2003; Whalen et al., 1998; Williams et al., 2006). One possible explanation for why threatening expressions were not advantaged in our experiment is that observers may have adopted a strategy for emotion detection based on a salient feature of happy expressions (e.g., teeth). Furthermore, categorization between fearful and angry expressions in Experiment 1 may have been poor because observers were, in general, focused on broad distinctions between positive and negative valence (e.g., happy vs. nonhappy), leading to superior categorization between expressions that crossed the positive–negative boundary (happy vs. angry, happy vs. fearful) at the expense of more subtle distinctions within negative valence (fearful vs. angry). Alternatively, emotion detection and categorization between fearful and angry expressions may be difficult for briefly presented faces irrespective of the valence of the third expression in the set.

Experiment 2

The purpose of Experiment 2 was to determine whether emotion detection with fearful and angry expressions and categorization between fearful and angry expressions depend on the emotional valence of the third expression in the set. Accordingly, we replaced happy expressions with disgusted expressions so that all expressions were negative in valence. If the inclusion of happy faces in the categorization set in Experiment 1 caused observers to adopt a strategy in which they used teeth to detect emotional expressions or a strategy in which they focused on the negative versus positive distinction, then observers would likely not use those strategies in Experiment 2 in which “happy” was not a response option. If so, emotion detection might no longer be superior for faces displaying teeth, and the categorization between angry and fearful expressions should improve.

Method

Observers

Twenty-one undergraduate students at Northwestern University gave informed consent to participate for course credit. Eighteen participated in Experiment 2 and the other three were added to the upright portion of Experiment 1 to enable comparison between the experiments with an equal number of observers. All had normal or corrected-to-normal visual acuity.

Stimuli and procedure

We selected eight faces with disgusted expressions from the Karolinska Directed Emotional Faces (KDEF; Lundqvist et al., 1998). All faces were color photographs of different individuals (half women and half men). We validated this emotional category in a separate pilot experiment similar to the one used to validate the expressions in Experiment 1. In this pilot experiment, the disgusted faces were randomly intermixed with the faces from the first pilot experiment to control for the possibility that categorization of an expression might depend on the expressions with which it is compared. We selected the eight most negatively rated disgusted faces. The mean valence rating of the disgusted faces was 1.51 (SD = 0.181). Three of the eight disgusted expressions clearly showed teeth (see Supplemental Figure 1). The design and procedure for Experiment 2 were identical to those in Experiment 1, except that we used disgusted expressions instead of happy expressions and did not use inverted faces, because Experiment 2 was intended only to provide a comparison with upright faces in Experiment 1.

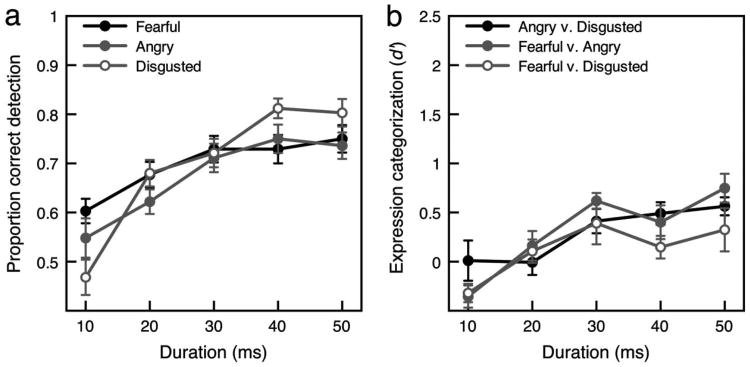

Results

Emotion detection with angry, fearful, and disgusted expressions

Experiment 2 yielded a pattern of results similar to that found in Experiment 1 (see Figure 8a). There was no difference in emotion detection between Experiment 1 and Experiment 2 when emotional faces had fearful expressions (Experiment 1: M = 0.724, SD = 0.122; Experiment 2: M = 0.703, SD = 0.133), t(34) = 0.484, ns, d = 0.161, or angry expressions (Experiment 1: M = 0.692, SD = 0.097; Experiment 2: M = 0.674, SD = 0.124), t(34) = 0.494, ns, d = 0.166.
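The between-experiment comparisons above are independent-samples t tests with 18 observers per experiment. A minimal sketch computing t, its degrees of freedom, and Cohen's d from group means, standard deviations, and sizes is shown below; it uses the pooled-variance form, which is an assumption about how the reported values were obtained, and the function name is hypothetical. With this form, d = t × √(1/n₁ + 1/n₂). Because the means in the text are rounded, recomputing from them will give values close to, but not necessarily identical with, the reported statistics.

```python
import math


def pooled_t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t (pooled variance), its df, and Cohen's d."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    pooled_sd = math.sqrt(pooled_var)
    diff = m1 - m2
    t = diff / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))
    d = diff / pooled_sd  # standardized mean difference
    return t, df, d


# Fearful-face detection, Experiment 1 vs. Experiment 2 (summary values from the text)
t, df, d = pooled_t_and_d(0.724, 0.122, 18, 0.703, 0.133, 18)
```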

Figure 8.

Emotion detection and expression categorization with fearful, angry, and disgusted expressions from Experiment 2. (a) Mean proportion correct for emotion detection as a function of stimulus duration. (b) Categorization sensitivity as a function of stimulus duration. Error bars for both panels indicate ±1 SEM (adjusted for within-observer comparisons).

A repeated measures ANOVA on data from Experiment 2 with duration (10, 20, 30, 40, 50 ms) and expression (fearful, angry, disgusted) as factors and proportion correct as the dependent variable revealed a main effect of duration, F(4, 68) = 18.35, p < .001, ηp² = .519, no main effect of expression, F(2, 34) = 1.63, ns, ηp² = .088, and an interaction between expression and duration, F(8, 136) = 3.17, p < .01, ηp² = .157. With disgusted faces, emotion detection was worse than with fearful and angry faces at the briefest (10-ms) duration, t(17) = 6.26, p < .01, d = 1.48, and t(17) = 2.36, p < .05, d = 0.556, respectively, but better than with fearful and angry faces at the longest durations (40 and 50 ms), t(17) = 2.81, p < .05, d = 0.662, and t(17) = 2.144, p < .05, d = 0.505, respectively.

Do teeth facilitate emotion detection when happy expressions are not a response option?

To determine whether the presence of teeth facilitated emotion detection, we compared emotion detection for fearful, angry, and disgusted expressions that clearly showed teeth with that for expressions that did not. Experiment 2 yielded a pattern of results similar to that found in Experiment 1. Emotion detection was better with fearful expressions that clearly showed teeth (M = 0.754, SD = 0.134) than with fearful expressions that did not (M = 0.433, SD = 0.101), t(17) = 16.1, p < .001, d = 3.79, and with angry expressions that clearly showed teeth (M = 0.769, SD = 0.151) than with angry expressions that did not (M = 0.639, SD = 0.123), t(17) = 5.39, p < .001, d = 1.27. There was a trend toward better emotion detection with disgusted expressions that clearly showed teeth (M = 0.726, SD = 0.126) than with those that did not (M = 0.679, SD = 0.111), t(17) = 2.09, ns, d = 0.492.

Expression categorization with fearful, angry, and disgusted faces

Collapsed across durations, categorization between fearful and angry expressions was better than chance (d′: M = 0.315, SD = 0.348), t(17) = 3.84, p < .01, d = 0.906, as was categorization between angry and disgusted expressions (M = 0.293, SD = 0.374), t(17) = 3.33, p < .01, d = 2.02 (see Figure 8b; also see Table 3). Categorization between fearful and disgusted expressions was not better than chance (M = 0.129, SD = 0.618), t(17) = 0.888, ns, d = 0.209.

Table 3. Proportion of Fearful, Angry, or Disgusted Responses for Expressions from Experiment 2.

| Expression | Fearful | Angry | Disgusted |
|---|---|---|---|
| Fearful | 0.383 | 0.320 | 0.297 |
| Angry | 0.323 | 0.419 | 0.256 |
| Disgusted | 0.356 | 0.318 | 0.324 |

More important, Experiment 2 yielded a pattern of expression categorization performance for angry and fearful faces similar to that found in Experiment 1. A repeated measures ANOVA on data from Experiment 2 with duration (10, 20, 30, 40, 50 ms) and expression categorization (fearful vs. angry, fearful vs. disgusted, angry vs. disgusted) as the two factors and d′ as the dependent variable revealed a significant main effect of duration, F(4, 68) = 8.84, p < .01, ηp² = .342, but no main effect of expression categorization, F(2, 34) = 1.31, ns, ηp² = .071, and no interaction between duration and expression categorization, F(8, 136) = 1.46, ns, ηp² = .079. There was no significant difference in categorization of fearful and angry expressions between Experiment 1 (M = 0.261, SD = 0.453) and Experiment 2 (M = 0.318, SD = 0.346) when d′ was collapsed across duration, t(34) = 0.426, ns, d = 0.516. Even with a 50-ms duration, there was no significant difference in categorization of fearful and angry expressions between Experiment 1 (M = 0.611, SD = 0.857) and Experiment 2 (M = 0.748, SD = .06), t(34) = 0.560, ns, d = 0.19.

Do teeth influence categorization of an emotional expression when “happy” is not a response option?

In Experiment 1, clear displays of teeth caused fearful and angry expressions to appear happy. This may indicate that teeth are an important feature of happy expressions, but it may also reflect the fact that happy faces happened to show the most teeth of the three expressions. Experiment 2 allowed us to determine the effect of teeth on expression categorization when “happy” was not a response option and none of the expressions (fearful, angry, or disgusted) clearly displayed teeth more than the others. We calculated the difference in the proportion of each type of categorization (angry, fearful, and disgusted) between fearful, angry, and disgusted expressions that clearly showed teeth and those that did not. As in Experiment 1, we arcsine-adjusted each value to account for compression at high proportion values and then compared each difference score against zero, which would indicate no change in categorization. Clear displays of teeth marginally increased the likelihood of correctly classifying angry expressions as angry, t(17) = 2.96, p = .054, d = 0.698, and made disgusted expressions more likely to appear angry, t(17) = 3.05, p = .044, d = 0.718 (all p values are corrected for multiple comparisons; see Figure 9). Thus, when “happy” was not a response option, teeth tended to make an expression more likely to appear angry.

Figure 9.

Mean difference in proportion of each type of categorization between trials on which fearful, angry, and disgusted expressions clearly showed teeth and trials on which they did not show teeth (on trials with correct emotion detection). Positive values indicate that a categorization (a = angry, f = fearful, or d = disgusted) was given more often when the expression (fearful, angry, or disgusted) clearly showed teeth. Differences are arcsine adjusted to offset compression at high proportion values. Error bars indicate ±1 SEM (adjusted for within-observer comparisons).

Discussion

The purpose of Experiment 2 was to determine whether teeth facilitated emotion detection even in the absence of happy faces, and whether the poor categorization between angry and fearful expressions in Experiment 1 was due to the inclusion of happy expressions, which might have encouraged observers to focus on the negative–positive distinction. Comparisons between Experiments 1 and 2 generally suggest that emotion detection was better with angry and fearful expressions that clearly showed teeth than with those that did not. Furthermore, categorization between angry and fearful expressions was still poor even when all expression categories were negative, suggesting that expression categorization is not strongly affected by categorization strategies reflecting the range of valence included in the response options. This is in agreement with the constant ratio rule (Clarke, 1957), according to which categorization between any two alternatives is independent of the number of alternatives in the decision space, as long as each alternative is processed independently.
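The constant ratio rule can be made concrete with a small sketch. Under the rule, the ratio of any two response probabilities for a given expression is the same whether the response set has two alternatives or more, so a two-alternative confusion matrix can be predicted from a larger one by keeping only the two categories and renormalizing each row. The example applies this to the proportions in Table 3 to predict fearful-versus-angry responding without the disgusted option. It is an illustration under the rule's assumptions, not an analysis reported in this article, and the function name is hypothetical.

```python
def restrict_confusions(matrix, labels, keep):
    """Predict a smaller confusion matrix under the constant ratio rule.

    matrix[i][j] is P(response labels[j] | stimulus labels[i]). The rule holds
    that ratios between response probabilities within a row do not depend on
    which other alternatives are available, so dropping alternatives amounts to
    restricting each kept row to the kept columns and renormalizing it.
    """
    idx = [labels.index(k) for k in keep]
    reduced = {}
    for i in idx:
        row = [matrix[i][j] for j in idx]
        total = sum(row)
        reduced[labels[i]] = {labels[j]: p / total for j, p in zip(idx, row)}
    return reduced


labels = ["fearful", "angry", "disgusted"]
# Rows: presented expression; columns: proportion of each response (Table 3).
table3 = [
    [0.383, 0.320, 0.297],
    [0.323, 0.419, 0.256],
    [0.356, 0.318, 0.324],
]
fear_vs_anger = restrict_confusions(table3, labels, ["fearful", "angry"])
```

Applying the same function to the Experiment 1 proportions (with happy as the third alternative) gives the corresponding prediction; if the rule holds, the two predictions for fearful versus angry should be similar.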

This independence in decision space does not necessarily suggest that emotional categories are discrete or independent. According to a discrete category account of affect, emotions are distinct (Ekman & Friesen, 1971; Izard, 1972; Tomkins, 1962, 1963) and associated with unique and independent neurophysiological patterns of activity (e.g., Adolphs et al., 1999; Harmer, Thilo, Rothwell, & Goodwin, 2001; Kawasaki et al., 2001; Panksepp, 1998; Phillips et al., 2004). In contrast, a dimensional account characterizes emotions as continuous and determined by the combined neural activity of separate arousal and valence encoding systems (e.g., Posner, Russell, & Peterson, 2005; Russell, 1980). A recent computer simulation of expression recognition supports the discrete category account. A support vector machine (SVM) with discrete expression classifiers produced judgments of expression similarity nearly identical to those from humans when presentation durations were long (Susskind et al., 2007). It is important to note that angry and fearful expressions were equally distinct from each other and from happy expressions in both the human and SVM similarity spaces. In contrast, our results are more consistent with a dimensional account, in which angry and fearful expressions are “near” each other (i.e., similar) in the arousal–valence activation space and both are “far” (i.e., distinct) from happy expressions. These differing results may be complementary. Whereas Susskind and colleagues' (2007) findings support the operation of discrete expression encoding when faces are seen for long durations, our results suggest that a dimensional account based on valence and arousal may be more appropriate for describing perception when faces are seen for very brief durations.

Our results are, perhaps, most consistent with the psychological construction approach to emotion (Barrett, 2006a, 2006b, 2009). In this account, emotions are not discrete entities (i.e., “natural kinds”). Rather, emotions are continuous and variable (e.g., James, 1884; Ortony & Turner, 1990) and only appear categorically organized because humans tend to impose discrete boundaries onto sensory information. Similar to top-down mechanisms of object recognition (e.g., Bar, 2003), categorization occurs when conceptual knowledge about prior emotional experience is brought to bear, while evaluating one's own emotional state as well as evaluating emotion expressed on the face of someone else. In this framework, our results suggest that emotional expressions viewed with just a fleeting glance activate only coarse-grained conceptual knowledge sensitive enough to differentiate positive from negative valence, but insufficient to differentiate between negative valence expressions. This interpretation is consistent with facial electromyography measurements, which showed that facial movements differentiate negative versus positive valence but not discrete emotional categories (e.g., Cacioppo, Berntson, Larsen, Poehlmann, & Ito, 2000). This interpretation is also consistent with measures of affective experience in which negative emotions are highly correlated while lacking unique signatures (Feldman, 1993; Watson & Clark, 1984; Watson & Tellegen, 1985). Whether or not discrete and basic emotions exist has been hotly debated for more than a century and is beyond the scope of this investigation. There is no doubt, however, that humans categorize emotional expressions, and our results clearly characterize this important perceptual ability when visibility is brief.

Experiment 3

The final experiment provides a control for the emotion detection aspect of the results in Experiments 1 and 2. Instead of detecting emotion, observers might have detected the physical change from the target face to the surprise-face mask. This strategy would work if the emotional faces were more physically distinct from the surprise faces than the neutral faces were. This possibility is unlikely because observers relying on this strategy would have performed poorly in the expression categorization task. Nevertheless, we conducted a control experiment in which observers rated the physical similarity between the emotional or neutral expressions and the surprise expressions that masked them in Experiments 1 and 2. Experiments 1 and 2 revealed reliable differences in detection performance across expressions. If observers were indeed using a change detection strategy, then the pattern of physical distinctiveness relative to the surprise faces in this control experiment should mirror the pattern of detection differences across the expression categories. If it does not, we can reasonably conclude that our results reflect detection of emotion.

Method

Observers

Ten graduate and undergraduate students at the University of California–Berkeley gave informed consent to participate. All had normal or corrected-to-normal visual acuity.

Stimuli and procedure

The stimuli and pairings between emotional (or neutral) and surprise faces were identical to those from Experiments 1 and 2. Each trial began with the presentation of a white screen for 500, 700, or 900 ms followed by a face with a happy, fearful, angry, disgusted, or neutral expression for 50 ms at the center of the screen. A surprise face then appeared at the same location for 310 ms. When the surprise-face mask disappeared, observers rated the physical similarity between the target face and the surprise-face mask on a scale of 1 to 5 (1 = very similar, 5 = very distinct). We used these brief durations to enable direct comparisons with the results of Experiments 1 and 2. Each face pair was presented once for a total of 56 trials.
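The trial sequence can be sketched as a schedule generator. This is a hypothetical illustration, not the software used in the experiment. Several details are assumptions: the 100-Hz refresh rate, the uniform random choice among the three blank durations, the shuffled trial order, and the integer `pair_id` labels. Converting durations to whole video frames makes explicit that the 50-ms target and 310-ms mask can be shown exactly only at refresh rates whose frame duration divides them evenly.

```python
import random
from dataclasses import dataclass
from typing import Optional

# Assumptions for illustration: the refresh rate and randomization scheme are
# not specified in the text.
REFRESH_HZ = 100
BLANK_MS = (500, 700, 900)  # jittered white-screen durations
TARGET_MS = 50              # target face (emotional or neutral)
MASK_MS = 310               # surprise-face mask


def ms_to_frames(ms: int, hz: int = REFRESH_HZ) -> int:
    """Convert a duration to whole video frames, refusing inexact durations."""
    frames = ms * hz / 1000
    if abs(frames - round(frames)) > 1e-9:
        raise ValueError(f"{ms} ms is not a whole number of frames at {hz} Hz")
    return round(frames)


@dataclass
class Trial:
    pair_id: int        # hypothetical index of a target/surprise face pair
    blank_frames: int
    target_frames: int
    mask_frames: int    # the 1-5 similarity rating is collected after the mask


def build_schedule(n_pairs: int = 56, seed: Optional[int] = None) -> list[Trial]:
    """One trial per face pair, blank duration drawn at random, order shuffled."""
    rng = random.Random(seed)
    trials = [
        Trial(
            pair_id=i,
            blank_frames=ms_to_frames(rng.choice(BLANK_MS)),
            target_frames=ms_to_frames(TARGET_MS),
            mask_frames=ms_to_frames(MASK_MS),
        )
        for i in range(n_pairs)
    ]
    rng.shuffle(trials)
    return trials


schedule = build_schedule(seed=1)
print(len(schedule), schedule[0])
```

At 60 Hz, for example, the 50-ms target is exactly 3 frames, but the 310-ms mask is not a whole number of frames, so `ms_to_frames` raises an error rather than silently rounding.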

Results

The emotional faces were perceived as more physically distinct from the surprise faces than the neutral faces were (emotional: M = 3.34, SD = 0.448; neutral: M = 2.61, SD = 0.419), t(9) = 6.64, p < .001. This is not surprising, as both the neutral and surprise faces are neutral in valence, whereas the emotional faces had strongly positive or negative valence. The important question is whether the physical distinctiveness of each expression explains the pattern of emotion detection results. To compare physical distinctiveness across expressions, we subtracted the average distinctiveness rating of the neutral faces from the average distinctiveness rating of the expressions with which they were paired in the main experiments. In this way, we took the distinctiveness of the neutral faces in the paired intervals into account when comparing distinctiveness across expressions. Unlike the detection results, no clear pattern of distinctiveness emerged across the expressions (happy: M = 1.0, SD = 0.5; angry: M = 0.8, SD = 0.5; fearful: M = 0.5, SD = 0.5; disgusted: M = 0.7, SD = 0.6), F(3, 27) = 1.699, ns.
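The difference-score procedure and the omnibus test can be sketched as follows. This is a minimal illustration that assumes a one-way repeated-measures ANOVA with expression as the within-observer factor. That assumption is consistent with the reported degrees of freedom: k − 1 = 3 and (k − 1)(n − 1) = 27 for k = 4 expressions and n = 10 observers. The ratings in the example are invented, and the function names are hypothetical.

```python
from statistics import mean


def difference_scores(expr_ratings, paired_neutral_ratings):
    """For one observer: mean rating of each expression minus the mean rating
    of the neutral faces it was paired with in the main experiments."""
    return {
        expr: mean(expr_ratings[expr]) - mean(paired_neutral_ratings[expr])
        for expr in expr_ratings
    }


def rm_anova(rows):
    """One-way repeated-measures ANOVA.

    rows: one list per observer, one score per condition (same order).
    Returns (F, df_conditions, df_error).
    """
    n, k = len(rows), len(rows[0])
    grand = mean(v for row in rows for v in row)
    ss_total = sum((v - grand) ** 2 for row in rows for v in row)
    ss_subjects = k * sum((mean(row) - grand) ** 2 for row in rows)
    cond_means = [mean(row[j] for row in rows) for j in range(k)]
    ss_conditions = n * sum((m - grand) ** 2 for m in cond_means)
    ss_error = ss_total - ss_subjects - ss_conditions
    df_cond, df_err = k - 1, (k - 1) * (n - 1)
    f = (ss_conditions / df_cond) / (ss_error / df_err)
    return f, df_cond, df_err


# Invented ratings for one observer (1 = very similar, 5 = very distinct).
observer = difference_scores(
    {"happy": [4, 5], "angry": [4, 3]},
    {"happy": [3, 3], "angry": [3, 2]},
)
print(observer)
```

With only two conditions, this F equals the square of the paired t statistic, which provides a convenient sanity check.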

In addition to this lack of significance, the pattern of physical distinctiveness across expressions differed from the pattern of detection across expressions in Experiments 1 and 2. Observers rated the angry faces as more physically distinct from their surprise masks than the fearful faces, t(9) = 2.44, p < .05, d = 0.771, whereas in Experiment 1, observers were worse at detecting the emotional interval when it contained an angry face than when it contained a fearful face. Moreover, whereas observers rated the angry faces as slightly more physically distinct than the disgusted faces, in Experiment 2 observers tended to be worse at detecting the emotional interval when it contained an angry face than when it contained a disgusted face, at least with a 50-ms duration, t(17) = 2.02, p = .06, d = 0.477. Although a strategy of detecting structural change could have provided some utility in selecting the emotional interval, it is inconsistent with the differences in detection between emotional expressions. This inconsistency, combined with the fact that observers were instructed to detect an emotional face and classify its expression on each trial in Experiments 1 and 2, suggests that they likely used affective information to detect the emotional interval rather than focusing on the physical change between the briefly presented target face and the surprise face.
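The reported effect sizes appear to correspond to the paired-samples standardized mean difference, d_z = mean(difference) / SD(difference). This measure relates to the paired t statistic by d_z = t / √n = t / √(df + 1). That d is d_z is an assumption made here, not something stated in the text. The check below applies the relation to the two reported tests, and the helper `paired_t` is a hypothetical name.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic, degrees of freedom, and Cohen's d_z for x - y."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    d_z = mean(diffs) / stdev(diffs)  # mean difference in SD-of-difference units
    t = d_z * math.sqrt(n)            # same as mean / (stdev / sqrt(n))
    return t, n - 1, d_z


# If d is d_z, each reported pair should satisfy d = t / sqrt(df + 1).
reported = [(2.44, 9, 0.771), (2.02, 17, 0.477)]
for t, df, d in reported:
    print(f"t({df}) = {t}: implied d = {t / math.sqrt(df + 1):.3f}, reported d = {d}")
```

The implied and reported values agree to within the rounding of t to two decimal places.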

General Discussion

In this investigation, we characterized the sensitivity of emotion detection and expression categorization when faces were briefly viewed, a common occurrence in emotional encounters. By comparing the information that contributed to these perceptual abilities, we showed that detecting that a face is emotional dissociates from the ability to classify the expression on the face. We summarize three major results of this investigation to illustrate how the processes underlying emotion detection and expression categorization dissociate. We also discuss the importance of our findings with regard to unconscious and conscious processing of briefly presented affective information.

First, emotion detection depended on the presence of one or more specific facial features and not their spatial relationship because emotion detection performance was not impaired when faces were inverted. In contrast, categorization between some emotional expressions relied, in part, on the spatial relationship between features (in addition to the presence of specific features) because the ability to discriminate between happy and angry expressions was impaired by face inversion, which ostensibly disrupts configural relationships between features (e.g., Leder & Bruce, 2000; Rossion & Gauthier, 2002). In other words, categorization between some emotional expressions is supported by information from the spatial relationship between features in addition to the mere presence of specific features, whereas emotion detection is supported simply by the presence of specific features. This may explain cases in which emotion detection is possible but expression categorization is not.

Second, emotion detection was better when a face clearly showed teeth. The presence of teeth, however, did not facilitate expression categorization overall. Emotion detection was better when a face was happy compared with when it was fearful or angry, probably because all of the happy faces in this investigation clearly showed teeth. Furthermore, emotion detection was better when fearful and angry expressions showed teeth compared with when they did not show teeth. This appears to be a general strategy because observers used teeth to detect that a face was emotional even when happy faces were not included in Experiment 2. It is possible that the expressions that clearly showed teeth displayed stronger emotion in other aspects besides teeth, such that part of the advantage for emotion detection with expressions that clearly showed teeth may have been from other features. Nevertheless, these results demonstrate that teeth are a salient cue for emotion detection. This is consistent with a study showing that chimpanzees discriminate emotional expressions from neutral expressions of unfamiliar conspecifics better when they are open-mouthed compared with when they are closed-mouthed (Parr, 2003), suggesting that teeth may be a common cue across species for detecting emotion. Clear displays of teeth also caused substantial changes in expression categorization. On trials in which a fearful or angry expression was presented and observers correctly detected the target face that bore that expression, the presence of teeth made observers more likely to perceive the expression as happy. On trials in which an angry or disgusted expression was presented and “happy” was not a response option, the presence of teeth made observers more likely to perceive the expression as angry. This demonstrates that although teeth clearly helped observers detect that an emotion was present, teeth did not, in general, help observers discriminate between expressions.

Third, and most important, emotion detection often occurred without expression categorization, and expression categorization sometimes occurred without emotion detection. There was no relationship between emotion detection with fearful and angry expressions and categorization between fearful and angry expressions. Clearly, emotion could be detected above chance level when faces had fearful or angry expressions at all presentation durations, whereas categorization between fearful and angry expressions was near chance level for all durations. In other words, when a fearful or angry face was presented, observers could often detect that the face was emotional but were unable to indicate whether it was a fearful or angry expression. This agrees with, and builds on, a previous finding with computer-generated faces in which fearful expressions were relatively easy to discriminate from neutral expressions but difficult to discriminate from sad expressions (Goren & Wilson, 2006). Conversely, expression categorization was possible even when observers did not correctly detect the target face that bore an emotional expression, but only for happy expressions. This occurred even when a happy face was inverted, suggesting that this ability is likely to rely on the encoding of a characteristic feature of happy expressions.
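Near-chance fearful-versus-angry categorization corresponds to a d′ near zero. The following is a minimal sketch of a pairwise d′. It assumes that "fearful" responses on fearful-face trials count as hits and that "fearful" responses on angry-face trials count as false alarms. It also applies a log-linear correction (adding 0.5 to each count and 1 to each trial total) to keep rates away from 0 and 1. The correction and the counts in the example are assumptions and are not necessarily those used in our analysis.

```python
from statistics import NormalDist


def d_prime(hits: int, n_signal: int, false_alarms: int, n_noise: int) -> float:
    """d' = z(H) - z(F) with a log-linear correction (add 0.5 to each count
    and 1 to each trial total) so that rates never reach exactly 0 or 1."""
    z = NormalDist().inv_cdf
    hit_rate = (hits + 0.5) / (n_signal + 1)
    fa_rate = (false_alarms + 0.5) / (n_noise + 1)
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts: "fearful" responses to fearful faces (hits) and to
# angry faces (false alarms), out of 40 trials each.
print(round(d_prime(hits=22, n_signal=40, false_alarms=20, n_noise=40), 2))
print(round(d_prime(hits=34, n_signal=40, false_alarms=8, n_noise=40), 2))
```

The first set of counts yields a d′ close to zero, which is the pattern described above for fearful versus angry expressions. The second yields clearly above-chance categorization.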

These results extend previous findings in which detection that an object was present dissociated from the ability to categorize the object (Barlasov-Ioffe & Hochstein, 2008; Del Cul, Baillet, & Dehaene, 2007; Mack et al., 2008) by showing that the same principle applies to affective information in a face. Furthermore, these results fit with studies of face recognition suggesting that detection of a face and identification of a face are supported by separate levels of visual processing (e.g., Liu et al., 2002; Sugase et al., 1999; Tsao et al., 2006). With regard to face recognition, face detection has been proposed to act as a gating mechanism that ensures subsequent face identity processing is activated only when a face has been detected (e.g., Tsao & Livingstone, 2008). The same gating mechanism could apply to affective information in a face. An initial detection process could detect emotion by measuring the degree to which the features of a face differ from those of a neutral face, and it would engage a subsequent expression categorization process only if the amount of deviation passes a threshold. This account implies that detection necessarily precedes categorization, which at first seems to contradict the finding of categorization without detection in the current experiment. However, a face detection system could hypothetically engage a subsequent expression categorization stage without giving rise to the explicit experience of detecting an emotional face if activation of the face detection system did not surpass an internal threshold necessary for awareness. This speculation has precedent in neuroimaging (Bar et al., 2001) and electrophysiological (Del Cul et al., 2007) results. These results suggest that substantial neural activity persists even when a person is unaware of an object's identity, and that awareness of an object's identity is likely to occur when this activity surpasses an internal threshold (although these authors differ on whether crossing the awareness threshold is marked by a nonlinear change in neural activation). Further research dissociating the neural mechanisms underlying emotion detection and expression categorization is warranted.
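The gating account can be expressed as a toy two-threshold model. In this hypothetical sketch, a detection stage computes the Euclidean distance between a face's feature vector and that of a neutral face. Categorization is engaged once that deviation passes a gating threshold, but an explicit report of detecting emotion requires passing a higher awareness threshold. The feature vectors, the distance measure, and both threshold values are invented for illustration. Under these assumptions, a faint expression engages categorization without a reported detection, which is the pattern the gating account needs in order to accommodate categorization without detection.

```python
import math
from dataclasses import dataclass


@dataclass
class GateOutcome:
    deviation: float
    categorization_engaged: bool
    reported_detection: bool


def gate(face, neutral, gate_threshold, awareness_threshold):
    """Hypothetical two-threshold gate.

    The detection stage measures how far a face's feature vector lies from a
    neutral face (Euclidean distance). Categorization is engaged once the
    deviation exceeds gate_threshold; an explicit report of detecting emotion
    additionally requires exceeding the higher awareness_threshold.
    """
    if awareness_threshold <= gate_threshold:
        raise ValueError("this sketch assumes awareness_threshold > gate_threshold")
    deviation = math.dist(face, neutral)
    return GateOutcome(
        deviation=deviation,
        categorization_engaged=deviation > gate_threshold,
        reported_detection=deviation > awareness_threshold,
    )


# Invented three-feature vectors; the features and values are illustrative only.
neutral = (0.0, 0.0, 0.0)
faint_expression = (0.3, 0.1, 0.0)
strong_expression = (0.9, 0.8, 0.1)

for name, face in (("faint", faint_expression), ("strong", strong_expression)):
    print(name, gate(face, neutral, gate_threshold=0.2, awareness_threshold=0.5))
```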