Abstract

Aim:

To investigate whether a hospital-specific opportunity-based composite score (OBCS) was associated with mortality in 136,392 patients with acute myocardial infarction (AMI) using data from the Myocardial Ischaemia National Audit Project (MINAP) 2008–2009.

Methods and results:

For 199 hospitals, a multidimensional hospital OBCS was calculated as the number of times that aspirin, thienopyridine, angiotensin-converting enzyme inhibitor (ACEi), statin, β-blocker, and referral for cardiac rehabilitation were given to individual patients, divided by the overall number of opportunities that hospitals had to give that care. OBCS and its six components were compared using funnel plots. Associations between OBCS performance and 30-day and 6-month all-cause mortality were quantified using mixed-effects regression analysis. Median hospital OBCS was 95.3% (range 75.8–100%). By OBCS, 24.1% of hospitals were below the funnel plot 99.8% credible limit, compared with aspirin (11.1%), thienopyridine (15.1%), β-blockers (14.1%), ACEi (19.1%), statins (12.1%), and cardiac rehabilitation (17.6%) on discharge. Mortality (95% CI) decreased with increasing hospital OBCS quartile at 30 days [Q1, 2.25% (2.07–2.43%) vs. Q4, 1.40% (1.25–1.56%)] and 6 months [Q1, 7.93% (7.61–8.25%) vs. Q4, 5.53% (5.22–5.83%)]. Hospital OBCS quartile was inversely associated with adjusted 30-day and 6-month mortality [OR (95% CI), 0.87 (0.80–0.94) and 0.92 (0.88–0.96), respectively], and this association persisted after adjustment for coronary artery catheterization [0.89 (0.82–0.96) and 0.95 (0.91–0.98), respectively].

Conclusions:

Multidimensional hospital OBCS in AMI survivors are high, discriminate hospital performance more readily than single performance indicators, and significantly inversely predict early and longer-term mortality.

Keywords: Acute myocardial infarction, composite performance indicators, mortality, performance, quality of care

Introduction

There is increasing demand across a number of developed countries for better indicators of hospital quality of care for patients with acute myocardial infarction (AMI). This is driven, in part, by evidence of substantial inter- and intra-regional variation in hospital care and outcomes, and by healthcare policy makers wishing to benchmark standards of care and to implement quality improvements.1–4 In addition, there are concerns that some measures of performance quality may not represent complete pathways of care and that some show only tenuous associations with subsequent mortality.5,6 Importantly, measures of hospital performance quality should be strongly associated with healthcare outcomes.7

International guidelines for the management of AMI highlight multiple optimal standards of care,8–12 yet in Europe only indicators of single processes of care are used. An example of this is the measurement of call-to-balloon time for primary percutaneous coronary intervention (PCI) in patients with ST-elevation myocardial infarction (STEMI).13,14 Single process measures are sometimes erroneously used as surrogates for hospital performance quality, on the assumption that they reflect the entire ‘patient journey’ for the complete range of AMI subtypes.12,13,15,16 An alternative approach would be to use a series of single indicators representing each aspect of AMI care, but an impractically large number of single indicators would be necessary. Moreover, a single performance indicator carries meaning only for that indicator and lends no weight to attainment of other relevant treatments. Furthermore, hospitals can be compared only on the same indicator, not on the overall performance of the clinical pathway of care. In contrast, composite performance indicators are constructed from several components of a clinical pathway to give a single score summarizing multiple aspects of care, which thereby allows a more comprehensive comparison of provider performance.17,18 In this study, we aimed to investigate whether a specific hospital opportunity-based composite score (OBCS) of six care processes reported at the time of discharge was associated with mortality in 136,392 hospital survivors of AMI using data from the Myocardial Ischaemia National Audit Project (MINAP) 2008–2009.19

Methods

Study design

The analyses were based on data from MINAP, a multicentre prospective registry of patients hospitalized in England and Wales with an acute coronary syndrome.12,19–21 MINAP data collection and management have previously been described.22–24 Each patient case entry offers details of the ‘patient journey’, including the method and timing of admission, in-patient investigations, treatment, and where relevant, date of death (from linkage to the National Health Service Central Register (NHSCR) using a unique National Health Service (NHS) number).

Ethics

The National Institute for Cardiovascular Outcomes Research (NICOR), which manages MINAP (ref. NIGB: ECC 1–06 (d)/2011), has support under section 251 of the NHS Act 2006. Formal ethical approval was not required under NHS research governance arrangements for this study.

Cohort

We had access to MINAP data only after patient identity had been protected. We studied 136,392 patients admitted between 1 January 2008 and 31 December 2009 who had survived to discharge from 199 hospitals in England and Wales and who had a final diagnosis of AMI. The final diagnosis was determined by local clinicians using the patients’ presenting history, clinical examination and the results of inpatient investigations. We used 2 complete years of data to mitigate any biases due to seasonality.

For each hospital, we calculated a multidimensional OBCS based on the number of times particular care processes were performed (numerator) divided by the number of chances a provider (hospital) had to give that care (denominator).17 We constructed a pragmatic score incorporating evidence-based therapies applicable both to patients with STEMI and to those with non-ST-elevation myocardial infarction (NSTEMI).25,26 We included provision of aspirin, clopidogrel (or other thienopyridine), β-blocker, angiotensin-converting enzyme inhibitor (ACEi), HMG-CoA reductase inhibitor (statin), and enrolment in cardiac rehabilitation at the time of discharge from hospital as the care processes. In line with guidance from the National Institute for Health and Clinical Excellence (NICE), the prescription of a β-blocker and/or ACEi was considered for all patients with an AMI, regardless of the left ventricular ejection fraction.27 Furthermore, all patients, including the elderly, were considered for enrolment in cardiac rehabilitation.

Although the therapies used for the OBCS are instigated in hospital, it is usually only at discharge from hospital that all opportunities are realized; we therefore only considered survivors at the time of discharge to help mitigate biases associated with in-hospital deaths. Eligible opportunities to provide care (the denominator) excluded those patients for whom particular interventions were recorded in MINAP as contraindicated, not applicable, or not indicated, or who refused treatment for specific (but not all) components of the composite.
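The opportunity-based calculation described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the MINAP or NICOR implementation: the record layout, the `Status` categories, and the component names are hypothetical. Patients with any missing component are dropped, mirroring the complete-case construction of the OBCS, and each component recorded as contraindicated, not applicable, not indicated, or refused is removed from both the numerator and the denominator.

```python
from collections import defaultdict
from enum import Enum

class Status(Enum):
    GIVEN = "given"          # delivered: counts in numerator and denominator
    NOT_GIVEN = "not_given"  # eligible but not delivered: denominator only
    EXCLUDED = "excluded"    # contraindicated, not applicable, not indicated, or refused
    MISSING = "missing"      # not recorded

# Hypothetical component names for the six care processes.
COMPONENTS = ("aspirin", "thienopyridine", "beta_blocker",
              "acei", "statin", "cardiac_rehab")

def hospital_obcs(patients):
    """Return {hospital_id: OBCS} from an iterable of (hospital_id, record) pairs,
    where record maps each name in COMPONENTS to a Status."""
    numerator = defaultdict(int)
    denominator = defaultdict(int)
    for hospital_id, record in patients:
        statuses = [record[c] for c in COMPONENTS]
        if Status.MISSING in statuses:
            continue  # complete cases only
        for status in statuses:
            if status is Status.EXCLUDED:
                continue  # not an opportunity to give care
            denominator[hospital_id] += 1
            if status is Status.GIVEN:
                numerator[hospital_id] += 1
    return {h: numerator[h] / d for h, d in denominator.items() if d > 0}

G, N, X = Status.GIVEN, Status.NOT_GIVEN, Status.EXCLUDED
patients = [
    ("H1", dict(zip(COMPONENTS, [G, G, G, G, G, G]))),
    ("H1", dict(zip(COMPONENTS, [G, G, X, N, G, G]))),  # beta-blocker contraindicated
    ("H1", dict(zip(COMPONENTS, [G, Status.MISSING, G, G, G, G]))),  # dropped
]
print(hospital_obcs(patients))  # H1: 10 given out of 11 eligible opportunities
```

In this toy example the contraindicated β-blocker does not count against hospital H1, whereas the ACEi that was not given does.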

Hospital performance was categorized according to quartile of hospital OBCS score (Q1–Q4). To facilitate comparison, we also quantified the relationship between unidimensional (single) hospital opportunity-based process measures of the same interventions (aspirin, thienopyridine, β-blocker, ACEi, statin, and cardiac rehabilitation) and subsequent 30-day and 6-month mortality.
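A minimal sketch of the quartile assignment follows. The paper does not state how quartile cut points or ties were handled (the unequal hospital counts per quartile in Table 1 suggest a rule other than equal-sized hospital groups). The cut-point rule used here, hospital-level quartiles from Python's `statistics.quantiles` with hospitals on a cut point assigned to the lower quartile, and the example scores are therefore assumptions for illustration only.

```python
import bisect
import statistics

def assign_quartiles(obcs_by_hospital):
    """Map each hospital to 'Q1' (lowest OBCS) to 'Q4' (highest).

    Assumed rule: cut points are hospital-level quartiles from
    statistics.quantiles (default 'exclusive' method), and a hospital lying
    exactly on a cut point is placed in the lower quartile."""
    cuts = statistics.quantiles(obcs_by_hospital.values(), n=4)
    return {h: f"Q{bisect.bisect_left(cuts, s) + 1}"
            for h, s in obcs_by_hospital.items()}

example_obcs = {"A": 0.900, "B": 0.930, "C": 0.950, "D": 0.960,
                "E": 0.970, "F": 0.990, "G": 0.995, "H": 1.000}  # hypothetical
print(assign_quartiles(example_obcs))
```

Weighting the quartiles by patients rather than by hospitals, or using a different tie rule, would give different group sizes.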

Statistics

The population was described using crude numerical data (without adjustment for any additional factors) and percentages for categorical variables, and by medians with interquartile range (IQR) or means with standard deviation (SD), depending on whether normality was plausible, for continuous variables. Hospitals’ unidimensional opportunity-based indicators and multidimensional OBCS were plotted against the number of opportunities to provide care using funnel plots based on a binomial distribution with exact 99.8% credible limits (corresponding approximately to ±3 SD around the overall median hospital OBCS).28,29
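The exact binomial limits behind the funnel plots can be sketched as below. This is a simplified version under stated assumptions: the limits are the 0.1% and 99.9% quantiles of a binomial distribution with the overall median as the target rate, expressed as proportions of the number of opportunities, without the interpolation between integer counts that published funnel-plot methods often add. The function names are hypothetical, and the target must lie strictly between 0 and 1.

```python
import math

def binom_quantile(q, n, p):
    """Smallest integer k with P(X <= k) >= q, for X ~ Binomial(n, p) and 0 < p < 1.

    Probabilities are computed in log space (via lgamma) to avoid overflow in
    the binomial coefficient and underflow in p**k for large n."""
    log_p, log_1mp = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    cumulative = 0.0
    for k in range(n + 1):
        log_pmf = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                   + k * log_p + (n - k) * log_1mp)
        cumulative += math.exp(log_pmf)
        if cumulative >= q:
            return k
    return n  # guards against rounding leaving the cumulative sum just below q

def funnel_limits(n_opportunities, target, coverage=0.998):
    """Lower and upper limits, as proportions, for a hospital with
    n_opportunities when its true rate equals `target` (e.g. the median OBCS)."""
    tail = (1 - coverage) / 2
    lower = binom_quantile(tail, n_opportunities, target)
    upper = binom_quantile(1 - tail, n_opportunities, target)
    return lower / n_opportunities, upper / n_opportunities

for n in (100, 1000, 10000):
    lo, hi = funnel_limits(n, 0.953)
    print(f"n={n}: {lo:.3f} to {hi:.3f}")
```

A hospital whose observed score falls below the lower limit for its number of opportunities would be flagged as an inferior outlier; because the limits narrow as the number of opportunities grows, a given shortfall is more likely to be flagged at a larger hospital.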

Using a generalized linear mixed-effects regression model (with random intercepts for each hospital) with a binomial distribution and a logit link, we adjusted 30-day and 6-month mortality for age, systolic blood pressure and heart rate at admission, elevated troponin concentration, cardiac arrest, and presence of ST-deviation on the presenting electrocardiogram, all factors previously known to affect mortality risk following discharge from hospital.30 The random-effects logistic model adjusts for an unobserved hospital-level component, capturing any omitted hospital factors that influenced mortality for all patients in that hospital. The model was used to quantify the relationship between hospital OBCS quartile (Q1–Q4) and mortality, and between unidimensional performance indicators and mortality. Finally, because the proportion of patients who underwent coronary artery catheterization (coronary angiography, acute/elective PCI) differed between patients with and without missing data for the components of the OBCS, the model was compared with one that also adjusted for the occurrence of coronary artery catheterization. Model outputs were represented by odds ratios (OR) and 95% confidence intervals (CI). All analyses were performed using Stata IC version 11.0 (StataCorp, College Station, Texas, USA).
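The random-intercept logistic model described above can be written as follows. This form is a sketch under two assumptions: a logit link (implied by the reporting of odds ratios), and OBCS quartile entering as a single ordinal term (suggested by the reporting of one OR per quartile, but not stated in the text).

$$
\operatorname{logit}\,\Pr(Y_{ij} = 1) = \beta_0 + \boldsymbol{\beta}^{\top}\mathbf{x}_{ij} + \gamma\, Q_j + u_j, \qquad u_j \sim \mathcal{N}(0, \sigma_u^2)
$$

Here $Y_{ij}$ indicates death of patient $i$ discharged from hospital $j$ within 30 days (or 6 months), $\mathbf{x}_{ij}$ holds the case-mix covariates listed above, $Q_j \in \{1, 2, 3, 4\}$ is the hospital OBCS quartile, and $u_j$ is the hospital random intercept. The reported OR per quartile corresponds to $e^{\gamma}$, and the catheterization-adjusted model adds an indicator for coronary artery catheterization to $\mathbf{x}_{ij}$.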

Results

For this cohort, the mean±SD age was 68.9±13.8 years, and 33.4% were women (Table 1). The proportions of patients with a previous history of AMI, hypertension, chronic heart failure, diabetes mellitus, and chronic renal failure were 26.4, 50.4, 5.6, 19.7, and 5.1%, respectively. Rates of use of aspirin, thienopyridine, β-blocker, ACEi, statin, and referral for cardiac rehabilitation on discharge from hospital were 98.4, 96.4, 94.3, 92.6, 97.0, and 89.8%, respectively. For the cohort, the unadjusted 30-day and 6-month mortality rates (95% CI) after discharge from hospital were 2.25% (2.17–2.33%) and 7.20% (7.06–7.34%), respectively. There were 34,620 (25.4%) patients with missing data for one or more of the six components of the hospital OBCS. Table 1 shows that there were no substantial differences in patient characteristics between those with and without complete hospital OBCS data, other than in rates of coronary artery catheterization and mortality.
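The cohort-level 95% CIs for mortality in the Total column of Table 1 can be checked with a normal-approximation (Wald) interval for a proportion. The paper does not say which interval method was used. The sketch below shows only that a Wald interval with n = 136,392 reproduces the reported cohort intervals to two decimal places, which is consistent with, but does not confirm, that choice.

```python
import math

def wald_ci(proportion, n, z=1.959964):
    """Normal-approximation (Wald) 95% CI for a proportion, returned in percent."""
    se = math.sqrt(proportion * (1 - proportion) / n)
    return 100 * (proportion - z * se), 100 * (proportion + z * se)

N_COHORT = 136_392  # hospital survivors of AMI, 2008-2009
for label, rate in (("30-day", 0.0225), ("6-month", 0.0720)):
    lo, hi = wald_ci(rate, N_COHORT)
    print(f"{label}: {100 * rate:.2f}% ({lo:.2f}-{hi:.2f}%)")
# prints:
# 30-day: 2.25% (2.17-2.33%)
# 6-month: 7.20% (7.06-7.34%)
```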

Table 1.

Patient characteristics by multidimensional hospital opportunity-based composite score (OBCS) quartile and missing data

| Characteristic | 1st quartile (n=28,206) | 2nd quartile (n=24,261) | 3rd quartile (n=27,213) | 4th quartile (n=22,092) | Missing OBCS data (n=34,620) | Complete cases (n=101,772) | Total (n=136,392) |
|---|---|---|---|---|---|---|---|
| Hospitals | 56 (28.1) | 57 (28.6) | 41 (20.6) | 45 (22.6) | – | – | 199 (100) |
| OBCS (%, IQR) | 91.5, 3.6 | 94.5, 0.8 | 96.3, 0.7 | 98.3, 1.2 | – | – | 95.3, 3.4 |
| Age (years) | 69.7±13.8 | 69.0±13.7 | 68.6±13.8 | 68.1±13.7 | 68.9±13.9 | 68.9±13.8 | 68.9±13.8 |
| Systolic blood pressure (mmHg) | 141.0±27.8 | 141.3±28.3 | 140.8±28.3 | 139.7±27.7 | 139.5±28.9 | 140.7±28.1 | 140.4±28.3 |
| Heart rate (bpm) | 81.9±22.4 | 81.4±22.7 | 81.2±22.0 | 80.1±21.2 | 82.0±23.1 | 81.2±22.1 | 81.4±22.4 |
| IMD score | 21.8±16.0 | 20.5±16.2 | 20.5±15.7 | 24.5±17.9 | 22.7±141.6 | 21.7±16.5 | 22.0±72.0 |
| Female | 9838 (35.0) | 7997 (33.0) | 8778 (32.3) | 7235 (32.8) | 11,512 (33.5) | 33,848 (33.3) | 45,360 (33.4) |
| Coronary artery catheterization* | 16,707 (61.8) | 16,189 (70.0) | 18,367 (70.1) | 15,547 (72.7) | 22,483 (70.7) | 66,810 (68.4) | 89,293 (68.9) |
| Previous AMI | 7078 (27.1) | 6007 (26.4) | 6812 (25.7) | 5579 (26.0) | 8065 (26.8) | 25,476 (26.3) | 33,541 (26.4) |
| Diabetes mellitus | 5614 (20.3) | 4667 (19.7) | 5208 (19.5) | 4087 (19.0) | 6379 (20.1) | 19,576 (19.6) | 25,955 (19.7) |
| Hypertension | 13,121 (50.1) | 11,320 (50.5) | 13,482 (51.1) | 10,646 (49.6) | 15,200 (50.5) | 48,569 (50.4) | 63,769 (50.4) |
| Chronic renal failure | 1249 (5.0) | 1113 (5.2) | 1409 (5.4) | 920 (4.3) | 1634 (5.6) | 4691 (5.0) | 6325 (5.1) |
| Chronic heart failure | 1512 (6.0) | 1249 (5.8) | 1412 (5.4) | 1052 (4.9) | 1633 (5.6) | 5225 (5.5) | 6858 (5.6) |
| Aspirin on discharge | 23,371 (96.6) | 20,375 (98.4) | 23,630 (99.1) | 19,535 (99.6) | 17,657 (95.0) | 86,911 (98.4) | 104,568 (97.8) |
| Thienopyridine on discharge | 22,232 (93.3) | 19,320 (96.7) | 22,399 (97.4) | 18,658 (98.8) | 9176 (94.7) | 82,609 (96.4) | 91,785 (96.2) |
| Beta-blocker on discharge | 19,933 (90.0) | 17,329 (93.9) | 20,291 (95.8) | 17,088 (98.4) | 14,175 (88.1) | 74,641 (94.3) | 88,816 (93.2) |
| ACEi on discharge | 20,345 (87.3) | 18,131 (91.8) | 21,451 (94.9) | 18,067 (97.5) | 14,263 (85.6) | 77,994 (92.6) | 92,257 (91.5) |
| Statin on discharge | 23,546 (94.7) | 20,477 (97.1) | 23,774 (97.9) | 19,585 (98.9) | 17,427 (93.0) | 87,382 (97.0) | 104,809 (96.4) |
| Cardiac rehabilitation on discharge | 21,560 (82.6) | 20,387 (89.2) | 23,675 (92.4) | 19,811 (96.6) | 14,288 (85.2) | 85,433 (89.8) | 99,721 (89.1) |
| 30-day mortality rate (%, 95% CI) | 2.25 (2.07–2.43) | 2.43 (2.23–2.62) | 1.66 (1.51–1.81) | 1.40 (1.25–1.56) | 3.17 (2.98–3.36) | 1.95 (1.86–2.03) | 2.25 (2.17–2.33) |
| 6-month mortality rate (%, 95% CI) | 7.93 (7.61–8.25) | 6.81 (6.48–7.13) | 6.59 (6.29–6.89) | 5.53 (5.22–5.83) | 8.50 (8.19–8.80) | 6.78 (6.62–6.93) | 7.20 (7.06–7.34) |

Values are n (%) or mean±SD unless otherwise stated. Missing OBCS data: one or more components of the OBCS were missing.

*Coronary artery catheterization refers to any patient referred for any form of coronary angiography, including primary PCI where appropriate.

ACEi, angiotensin-converting enzyme inhibitor; AMI, acute myocardial infarction; IMD, index of multiple deprivation; PCI, percutaneous coronary intervention.

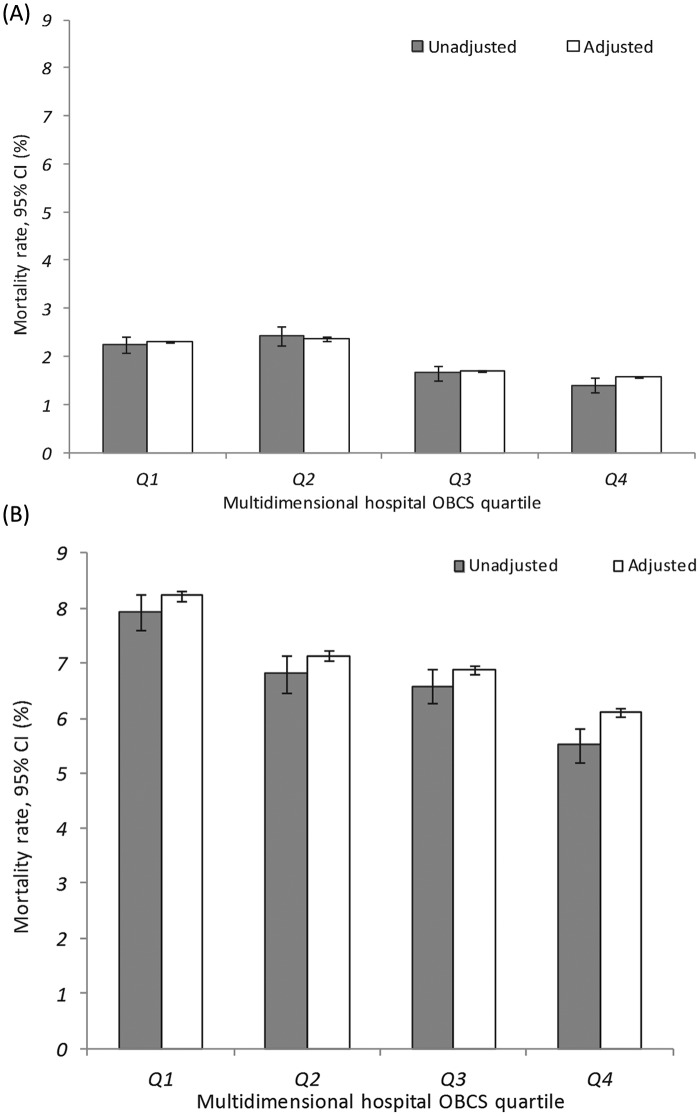

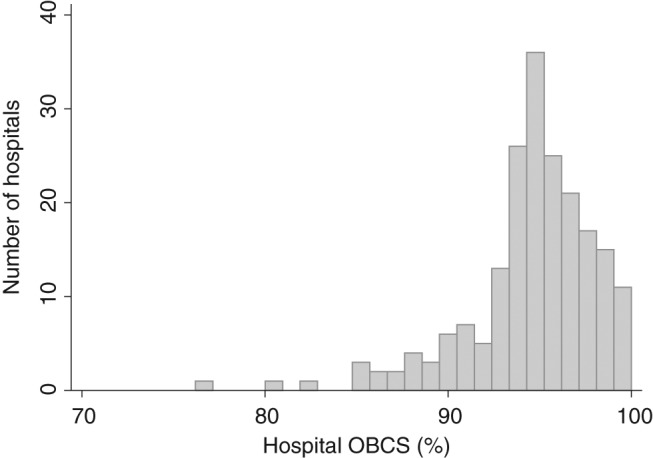

Opportunity-based composite scores

The median hospital OBCS was 95.3% (range 75.8–100%) (Figure 1). The median (IQR) hospital OBCS within each hospital quartile was: Q1, 91.5% (89.2–93.3%); Q2, 94.5% (94.1–94.9%); Q3, 96.3% (95.6–96.7%); Q4, 98.3% (97.7–98.9%). Figure 2 reveals a wide distribution of hospital OBCS. There were 48 (24.1%) hospitals below the funnel plot 99.8% credible limit (inferior outliers), compared with 22 (11.1%) for aspirin, 30 (15.1%) for thienopyridine, 28 (14.1%) for β-blockers, 38 (19.1%) for ACEi, 24 (12.1%) for statins, and 35 (17.6%) for cardiac rehabilitation on discharge (Figure 3). The number of hospitals that were inferior outliers by both the OBCS and each unidimensional hospital opportunity-based score (as a percentage of that score’s outliers) was: aspirin 19 (86.4%), thienopyridine 18 (60.0%), β-blocker 23 (82.1%), ACEi 30 (78.9%), statin 21 (87.5%), and cardiac rehabilitation 26 (74.3%). Of the hospitals below the 99.8% credible limit for 6, 5, 4, 3, 2, and 1 of the unidimensional hospital opportunity-based scores, the proportion also below the OBCS 99.8% credible limit was 100, 100, 71.4, 91.7, 44.4, and 42.9%, respectively.
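The overlap percentages reported above (e.g. aspirin 19, 86.4%) are consistent with denominators equal to each unidimensional score’s own outlier count (19 of the 22 aspirin outliers). The sketch below shows the set arithmetic under that reading; the hospital identifiers in the toy example are hypothetical, and the `REPORTED_COUNTS` check uses only the counts given in the text.

```python
def overlap_summary(obcs_outliers, component_outliers):
    """For each component score, count hospitals that are inferior outliers by both
    that score and the OBCS, and express the count as a percentage of the
    component's own outliers."""
    summary = {}
    for name, outliers in component_outliers.items():
        both = len(outliers & obcs_outliers)
        summary[name] = (both, round(100 * both / len(outliers), 1))
    return summary

# Toy example with hypothetical hospital IDs.
print(overlap_summary({1, 2, 3, 4}, {"aspirin": {1, 2, 5}, "statin": {3}}))
# prints: {'aspirin': (2, 66.7), 'statin': (1, 100.0)}

# (hospitals flagged by both, hospitals flagged by the component), from the text.
REPORTED_COUNTS = {
    "aspirin": (19, 22), "thienopyridine": (18, 30), "beta_blocker": (23, 28),
    "acei": (30, 38), "statin": (21, 24), "cardiac_rehab": (26, 35),
}
reported_pct = {name: round(100 * both / flagged, 1)
                for name, (both, flagged) in REPORTED_COUNTS.items()}
print(reported_pct)
```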

Figure 1.

Histogram of multidimensional hospital opportunity-based composite score (OBCS).

Figure 2.

Funnel plot of multidimensional hospital discharge opportunity-based composite score (OBCS). Solid line represents cohort median score; dashed line represents the 99.8% credible limits.

Figure 3.

Funnel plots of unidimensional hospital opportunity-based score (OBS) for: (A) aspirin at discharge, (B) thienopyridine at discharge, (C) beta-blockers at discharge, (D) ACEi at discharge, (E) statin at discharge, and (F) cardiac rehabilitation at discharge. Solid line represents cohort median hospital OBS; dashed line represents the 99.8% credible limits. Light grey circles represent inferior outlying hospitals according to the multidimensional hospital OBCS funnel plot.

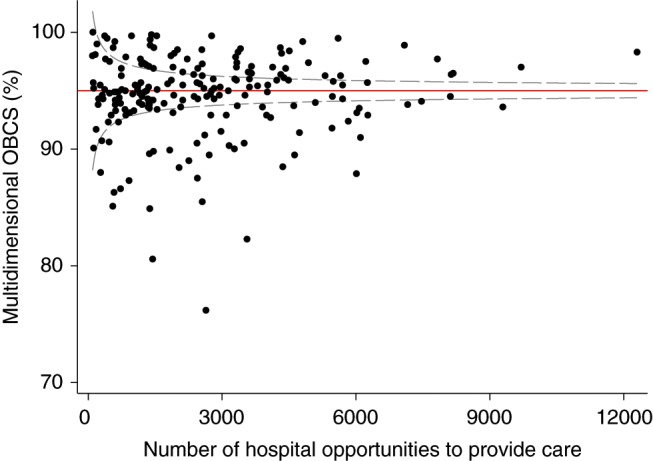

Hospital performance and mortality

The median (IQR; range) 30-day and 6-month mortality rates across hospitals were 2.02% (1.42–2.72%; 0.0–20.0%) and 7.03% (5.55–8.29%; 0.0–21.9%), respectively. There was a significant inverse association between hospital OBCS quartile and unadjusted 30-day mortality (95% CI) [Q1, 2.25% (2.07–2.43%) vs. Q4, 1.40% (1.25–1.56%)] and unadjusted 6-month mortality [Q1, 7.93% (7.61–8.25%) vs. Q4, 5.53% (5.22–5.83%)]. These associations persisted after adjustment for differences in baseline patient characteristics (case-mix): adjusted all-cause mortality at 30 days was Q1, 2.30% (2.28–2.33%) vs. Q4, 1.57% (1.56–1.59%), and at 6 months Q1, 8.23% (8.13–8.33%) vs. Q4, 6.11% (6.03–6.20%) (Figure 4).

Figure 4.

Bar charts of all-cause mortality rates for hospital survivors of acute myocardial infarction by multidimensional hospital OBCS quartile: (A) 30-day mortality and (B) 6-month mortality. White bars indicate rates adjusted for age, systolic blood pressure and heart rate at admission, elevated troponin, cardiac arrest, and presence of ST-deviation on the presenting electrocardiogram, with random intercepts for each hospital. Grey bars indicate unadjusted rates. Whiskers indicate 95% CI.

Hospital OBCS quartile significantly predicted 30-day mortality (OR, 95% CI: 0.87, 0.80–0.94) and 6-month mortality (0.92, 0.88–0.96), with an intuitive inverse relationship (better care associated with lower mortality). When the model also included coronary artery catheterization, this inverse relationship persisted and remained statistically significant (30 days: 0.89, 0.82–0.96; 6 months: 0.95, 0.91–0.98). Overall, each 1% increase in hospital OBCS was associated with, on average, a 3% reduction in the odds of 30-day mortality (0.97, 0.95–1.00) and a 2% reduction in the odds of 6-month mortality (0.98, 0.97–0.99).
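The per-unit odds ratios above can be translated into larger contrasts by compounding, assuming a constant OR per unit (as a linear term in a logistic model implies). The sketch uses the rounded ORs from the text, so its results are approximate. Treating OBCS quartile as a single linear term for the Q4 versus Q1 contrast is an assumption, because the text reports one OR per quartile without stating how quartile was coded. Changes are in odds rather than risk, although with mortality of roughly 2–8% the two are similar.

```python
def compounded_odds_change(or_per_unit, units):
    """Percentage change in the odds of death over `units` increments,
    assuming a constant odds ratio per unit (log-linear effect)."""
    return 100 * (or_per_unit ** units - 1)

# 10-percentage-point higher hospital OBCS, using the rounded per-1% ORs.
print(f"30-day:  {compounded_odds_change(0.97, 10):.1f}%")  # -26.3%
print(f"6-month: {compounded_odds_change(0.98, 10):.1f}%")  # -18.3%

# Q4 vs. Q1 (three quartile steps), if quartile entered as one linear term.
print(f"Q4 vs Q1, 30-day OR: {0.87 ** 3:.2f}")  # 0.66
```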

After the same adjustments (i.e. including coronary artery catheterization), each unidimensional hospital opportunity-based score except thienopyridine significantly predicted 30-day mortality (OR, 95% CI: aspirin, 0.55, 0.43–0.71; β-blocker, 0.60, 0.50–0.72; ACEi, 0.62, 0.53–0.73; statins, 0.52, 0.43–0.63; cardiac rehabilitation, 0.46, 0.40–0.53; thienopyridine, 0.85, 0.67–1.08, not statistically significant). Each unidimensional hospital opportunity-based score was also significantly associated with 6-month mortality (aspirin, 0.58, 0.50–0.67; β-blocker, 0.61, 0.56–0.68; ACEi, 0.69, 0.63–0.75; statins, 0.54, 0.49–0.60; cardiac rehabilitation, 0.63, 0.58–0.68; thienopyridine, 0.67, 0.59–0.75).

Discussion

Our data indicate that the quality of hospital performance measured by a composite indicator is significantly associated with reductions in early and late all-cause mortality. The multidimensional hospital OBCS was also significantly associated with substantial differences in case-mix-adjusted mortality rates. These associations were evident despite generally very high hospital scores (even the most poorly performing hospital took 75% of the opportunities to provide evidence-based care), after adjustment for patient characteristics, and after modelling the impact of coronary artery catheterization (and associated beneficial revascularization procedures). Compared with unidimensional hospital opportunity-based performance measures, such as use of aspirin, thienopyridine, β-blocker, ACEi, statin, and cardiac rehabilitation on discharge from hospital, the multidimensional hospital OBCS visually identified additional hospitals with inferior performance.

In addition to providing better representation of the quality of care for the AMI hospital pathway, this multidimensional approach shows that a composite hospital score, derived from a ‘bundle’ of recommended evidence-based interventions, is a valid and useful indicator of care that is associated with short- and longer-term outcomes. It is also more sophisticated than simple averaging of the individual elements, because it introduces an implicit weighting that reflects the number of opportunities present. For example, a hospital with many complex elderly patients might be disadvantaged if the performance score did not take into account treatment contraindications, lack of a treatment indication, or patient refusal of treatment. In this way, it also makes allowance for case-mix even without additional adjustment. The all-or-nothing composite score is another aggregation method. Because it scores only when all stipulated opportunities of care are realized, it has greater potential to promote excellence.17,31,32 One drawback of this method is that it decreases the number of available cases for analysis. The OBCS rewards near-excellent hospital performance, and historically has been used in preference to other aggregation methods.33–35
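The difference between the opportunity-based score, a simple average of component rates, and the all-or-nothing score can be illustrated on a toy example. The sketch below uses hypothetical patients and only two components for brevity; a component absent from a patient’s record stands for no opportunity (contraindicated, not indicated, or refused). The opportunity-based score equals a weighted average of the component rates, each weighted by its number of opportunities.

```python
def aggregate(records):
    """Return (opportunity-based, simple average, all-or-nothing) composite scores.

    records: one dict per patient mapping component -> True (given) or
    False (eligible but not given); ineligible components are omitted."""
    given = sum(sum(r.values()) for r in records)
    opportunities = sum(len(r) for r in records)
    opportunity_based = given / opportunities

    per_component = []
    for comp in sorted({k for r in records for k in r}):
        eligible = [r[comp] for r in records if comp in r]
        per_component.append(sum(eligible) / len(eligible))
    simple_average = sum(per_component) / len(per_component)

    # Note: all() of an empty dict is True, so a patient with no eligible
    # components would count as a success here.
    all_or_nothing = sum(all(r.values()) for r in records) / len(records)
    return opportunity_based, simple_average, all_or_nothing

example = [
    {"aspirin": True, "cardiac_rehab": True},
    {"aspirin": True, "cardiac_rehab": False},
    {"aspirin": True},   # cardiac rehabilitation not applicable
    {"aspirin": False},
]
print([round(x, 3) for x in aggregate(example)])  # [0.667, 0.625, 0.5]
```

Here aspirin (four opportunities) carries twice the weight of cardiac rehabilitation (two opportunities) in the opportunity-based score, whereas the simple average weights them equally and the all-or-nothing score credits only patients who received every eligible component.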

Whilst other studies also describe an association between composite performance indicators and mortality,33–35 some do not.6,31,36 Our study adds to the literature because it demonstrates a significant association between a more widely applicable discharge hospital composite indicator and mortality at 30 days and 6 months in survivors of hospitalization for both STEMI and NSTEMI. Much of the earlier research from the CRUSADE, GWTG-CAD, and GRACE registries focused on the association between composite scores derived from admission and discharge therapies and in-hospital mortality.33,34 To date, their relationship with post-discharge mortality has been reported as only ‘modest’ at 6 months and ‘not clear’ at 1 year.31,33–36 Furthermore, the all-or-nothing score has not previously been shown to be strongly associated with mortality.31,36

To date, a limited number of studies have described the association between hospital scores for AMI and longer-term mortality in those surviving to discharge following hospitalization with AMI.35 Moreover, previous studies were limited because those examining in-hospital mortality excluded deaths within 24 hours of hospitalization and patients transferred out of the assessing hospital, and used measures of care at discharge from hospital to evaluate mortality while in hospital.6 Likewise, studies of post-discharge mortality have included patients dying in hospital and used composites derived from discharge medications as surrogates for in-hospital treatment. In contrast, our study shows a clear inverse relationship between opportunities to provide evidence-based therapies at discharge and longer-term mortality in a cohort of patients who survived hospitalization. Also of interest within our study is the slightly weaker strength of association between hospital performance and 30-day mortality compared with 6-month mortality (Figure 4), particularly after additional case-mix adjustment. This observation is important given that 30-day mortality is more often assessed as a measure of performance quality, purely for convenience. Consequently, the 6-month mortality data described here, for a very large and inclusive cohort of patients, provide strong validation for the proposed multidimensional hospital OBCS.

The results of this study provide evidence to support the notion of systematic hospital-level evaluation and reporting of acute coronary syndrome care processes and their quality. If implemented, this may help healthcare professionals, commissioners, and policy makers reduce the variation across healthcare providers in the treatment and outcome of AMI.2,37,38 We have shown that funnel plots readily allow the visualization of variations in hospital performance (such as special-cause variation)39 and that there is a clear association between the fulfilment of opportunities of AMI care and lower mortality rates. Extending the multidimensional hospital OBCS to include other processes of care would permit wider representation of the use of evidence-based interventions. However, the need to collect extra information may limit its usefulness by reducing the number of patients with complete data. Our score, a composite of six measures of care, is generalizable, less likely than a longer composite to be biased by missing data, and reflects the overall journey of care for patients surviving hospitalization with AMI. Even after internal (to OBCS) adjustment for the number of actual opportunities, and external (to OBCS) adjustment for other patient factors and the occurrence of coronary artery catheterization, the predictive value of this approach was clear (e.g. compounding the per-1% odds ratios, a 10% increase in hospital OBCS corresponds to roughly 26% and 18% lower odds of 30-day and 6-month mortality, respectively). Moreover, it is in keeping with other influential studies.33

We found that unidimensional hospital opportunity-based performance measures also significantly predicted mortality. However, we believe their use is of more limited value for a number of reasons. First, they discriminate less well with regard to performance outliers: in our cohort, attainment rates were very high and so fewer hospitals demonstrated significant variation in these measures. Second, unidimensional indicators were less consistent, because most outliers were identified by only one of the six unidimensional opportunity-based scores and only four hospitals were identified as outliers by all six. In contrast, the OBCS identified most hospitals flagged as outliers by more than one unidimensional opportunity-based score. This last finding also suggests that a more comprehensive list of indicators (i.e. more than the six used) would add limited additional predictive value.17,18 Importantly, isolated/unidimensional measures of performance do not, by design, represent the wider aspects of AMI care. Despite this limitation, they are often used by healthcare commissioners and policy makers as a reflection of acute hospital care, and once a single care process becomes the dominant (or only) metric by which ‘high-quality care’ is defined, there is a greater incentive to ‘game’ or misrepresent performance.13,36

A number of concerns with the use of composite performance indicators have been raised. These include: (1) the potential for loss of detailed performance information; (2) a lack of actionable data to allow targeting of practice; (3) concerns about transparency of composite methodology; and (4) the reliability of hospital ranking.17,40 In this study we were careful to describe the steps used to derive the multidimensional hospital OBCS so that others may apply this method. Just as single performance measures can lose resolution on overall performance, composite indicators may be seen to lose resolution on very detailed aspects of care. A careful compromise between the evaluation of specific and broader aspects of care is therefore necessary in the design and implementation of hospital performance indicators. This study shows, however, that the visual discriminative ability of some single processes of care (e.g. aspirin and statins at discharge) is inferior to that of composites of care. Furthermore, presenting data in funnel plots overcomes concerns about ranking hospitals into ‘best’ and ‘worst’.28,39 We compared the multidimensional hospital OBCS and the unidimensional opportunity-based scores against the same benchmark, the overall hospital median. It is important to recognize, nonetheless, that setting different benchmarks may alter the identification of inferior performance. To help counter this, we set the funnel plot credible limits around the overall hospital median at 99.8%.

In this study we used complete cases to construct the OBCS and relied on the accuracy of those data. The sensitivity analyses found few differences in patient characteristics between those with and without missing data, and the modelling of coronary artery catheterization did not alter the direction of the results. Even so, compared with the single opportunity-based scores, the multidimensional hospital OBCS excluded more cases due to the presence of missing data, which may have biased the results. We did not include criteria for emergency therapies for AMI (such as timely reperfusion for STEMI) in the multidimensional hospital OBCS. This is because in England and Wales, primary PCI is delivered only at dedicated PCI-capable centres, often within a cardiovascular network of many hospitals, and therefore a hospital-level analysis may not reflect actual care. The aim of this study was to develop and investigate a composite indicator of performance applicable to all hospitals caring for patients with AMI.

Conclusion

Multidimensional hospital OBCS in survivors of AMI are high, discriminate hospital performance more readily than single opportunity-based performance indicators, and inversely predict early and longer-term mortality. The results of this study provide evidence to support systematic hospital-level quality evaluation and objective reporting of AMI care so as to identify examples of best practice to be emulated by other aspiring hospitals.

Acknowledgments

This study includes data collected on behalf of the British Cardiovascular Society under the auspices of the National Institute for Cardiovascular Outcomes Research (NICOR) in which patient identity was protected. The extract from the MINAP database was provided by the MINAP Academic Group, National Institute for Cardiovascular Outcomes Research (NICOR), University College London. We acknowledge all the hospitals in England and Wales for their contribution of data to MINAP.

Footnotes

Funding: This work was supported by the British Heart Foundation (grant PG/07/057/23215) and the National Institute for Health Research (NIHR/CS/009/004).

Conflict of interest: The authors declare that there are no conflicts of interest.

References

- 1. Gale CP, Cattle BA, Woolston A, et al. Resolving inequalities in care? Reduced mortality in the elderly after acute coronary syndromes. The Myocardial Ischaemia National Audit Project 2003–2010. Eur Heart J 2012; 33: 630–639 [DOI] [PubMed] [Google Scholar]

- 2. Fox KA, Goodman SG, Klein W, et al. Management of acute coronary syndromes. Variations in practice and outcome; findings from the Global Registry of Acute Coronary Events (GRACE). Eur Heart J 2002; 23: 1177–1189 [DOI] [PubMed] [Google Scholar]

- 3. Fox KA, Anderson FA, Jr, Dabbous OH, et al. Intervention in acute coronary syndromes: do patients undergo intervention on the basis of their risk characteristics? The Global Registry of Acute Coronary Events (GRACE). Heart 2007; 93: 177–182 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Widimsky P, Wijns W, Fajadet J, et al. Reperfusion therapy for ST elevation acute myocardial infarction in Europe: description of the current situation in 30 countries. Eur Heart J 2010; 31: 943–957 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Fonarow GC, Albert NM, Curtis AB, et al. Associations between outpatient heart failure process-of-care measures and mortality/clinical perspective. Circulation 2011; 123: 1601–1610 [DOI] [PubMed] [Google Scholar]

- 6. Bradley EH, Herrin J, Elbel B, et al. Hospital quality for acute myocardial infarction: correlation among process measures and relationship with short-term mortality. JAMA 2006; 296: 72–78 [DOI] [PubMed] [Google Scholar]

- 7. Staiger DO, Dimick JB, Baser O, et al. Empirically derived composite measures of surgical performance. Med Care 2009; 47: 226–233 [DOI] [PubMed] [Google Scholar]

- 8. Antman EM, Anbe DT, Armstrong PW, et al. ACC/AHA guidelines for the management of patients with ST-elevation myocardial infarction – executive summary. A report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines (Writing Committee to revise the 1999 guidelines for the management of patients with acute myocardial infarction). J Am Coll Cardiol 2004; 44: 671–719 [DOI] [PubMed] [Google Scholar]

- 9. Wright RS, Anderson JL, Adams CD, et al. 2011 ACCF/AHA focused update incorporated into the ACC/AHA 2007 Guidelines for the Management of Patients with Unstable Angina/Non-ST-Elevation Myocardial Infarction: a report of the American College of Cardiology Foundation/American Heart Association Task Force on Practice Guidelines developed in collaboration with the American Academy of Family Physicians, Society for Cardiovascular Angiography and Interventions, and the Society of Thoracic Surgeons. J Am Coll Cardiol 2011; 57: e215–e367.

- 10. Hamm CW, Bassand JP, Agewall S, et al. ESC Guidelines for the management of acute coronary syndromes in patients presenting without persistent ST-segment elevation. Eur Heart J 2011; 32: 2999–3054.

- 11. Van de Werf F, Bax J, Betriu A, et al. ESC guidelines on management of acute myocardial infarction in patients presenting with persistent ST-segment elevation. Rev Esp Cardiol 2009; 62: 293, e1–47.

- 12. Department of Health. The National Service Framework for coronary heart disease: modern standards and service models. London: DOH.

- 13. MINAP Steering Group, National Institute for Cardiovascular Outcomes Research (NICOR). Myocardial Ischaemia National Audit Project (MINAP): how the NHS manages heart attacks. Tenth public report 2011. London: University College London, 2011.

- 14. Ministère de la Santé. Public information system on hospitals. Ministère de la Santé. Available at: http://www.platines.santes.gouv.fr/ (consulted June 2012).

- 15. Krumholz HM, Anderson JL, Bachelder BL, et al. ACC/AHA 2008 performance measures for adults with ST-elevation and non-ST-elevation myocardial infarction: a report of the American College of Cardiology/American Heart Association Task Force on Performance Measures (Writing Committee to Develop Performance Measures for ST-Elevation and Non-ST-Elevation Myocardial Infarction) developed in collaboration with the American Academy of Family Physicians and American College of Emergency Physicians endorsed by the American Association of Cardiovascular and Pulmonary Rehabilitation, Society for Cardiovascular Angiography and Interventions, and Society of Hospital Medicine. J Am Coll Cardiol 2008; 52: 2046–2099.

- 16. Tu JV, Khalid L, Donovan LR, et al. Indicators of quality of care for patients with acute myocardial infarction. CMAJ 2008; 179: 909–915.

- 17. Peterson ED, DeLong ER, Masoudi FA, et al. ACCF/AHA 2010 Position statement on composite measures for healthcare performance assessment: American College of Cardiology Foundation/American Heart Association Task Force on Performance Measures (Writing Committee to Develop a Position Statement on Composite Measures). J Am Coll Cardiol 2010; 55: 1755–1766.

- 18. Weston CFM. Performance indicators in acute myocardial infarction: a proposal for the future assessment of good quality care. Heart 2008; 94: 1397–1401.

- 19. National Institute for Cardiovascular Outcomes Research (NICOR). The Myocardial Ischaemia National Audit Project (MINAP). London: University College London, 2011.

- 20. Birkhead J, Walker L. National audit of myocardial infarction (MINAP): a project in evolution. Hosp Med 2004; 65: 452–453.

- 21. Birkhead JS. Responding to the requirements of the national service framework for coronary disease: a core data set for myocardial infarction. Heart 2000; 84: 116–117.

- 22. Herrett E, Smeeth L, Walker L, et al. The Myocardial Ischaemia National Audit Project (MINAP). Heart 2010; 96: 1264–1267.

- 23. West RM, Cattle BA, Bouyssie M, et al. Impact of hospital proportion and volume on primary percutaneous coronary intervention performance in England and Wales. Eur Heart J 2011; 32: 706–711.

- 24. Rickards A, Cunningham D. From quantity to quality: the central cardiac audit database project. Heart 1999; 82: II18–II22.

- 25. Bonow RO, Bennett S, Casey DE, et al. ACC/AHA clinical performance measures for adults with chronic heart failure: a report of the American College of Cardiology/American Heart Association Task Force on Performance Measures (Writing Committee to Develop Heart Failure Clinical Performance Measures) endorsed by the Heart Failure Society of America. J Am Coll Cardiol 2005; 46: 1144–1178.

- 26. Spertus JA, Eagle KA, Krumholz HM, et al. American College of Cardiology and American Heart Association methodology for the selection and creation of performance measures for quantifying the quality of cardiovascular care. J Am Coll Cardiol 2005; 45: 1147–1156.

- 27. Royal College of Physicians. Unstable angina and NSTEMI: the early management of unstable angina and non-ST-segment-elevation myocardial infarction: clinical guideline 94. London: National Institute for Health and Clinical Excellence, 2010.

- 28. Gale CP, Roberts AP, Batin PD, et al. Funnel plots, performance variation and the Myocardial Infarction National Audit Project 2003–2004. BMC Cardiovasc Disord 2006; 6: 34.

- 29. Gale CP, Cattle BA, Moore J, et al. Impact of missing data on standardised mortality ratios for acute myocardial infarction: evidence from the Myocardial Ischaemia National Audit Project (MINAP) 2004–7. Heart 2011; 97: 1926–1931.

- 30. Fox KAA, Dabbous OH, Goldberg RJ, et al. Prediction of risk of death and myocardial infarction in the six months after presentation with acute coronary syndrome: prospective multinational observational study (GRACE). BMJ 2006; 333: 1091–1094.

- 31. Eapen ZJ, Fonarow GC, Dai D, et al. Comparison of composite measure methodologies for rewarding quality of care. Circ Cardiovasc Qual Outcomes 2011; 4: 610–618.

- 32. Nolan T, Berwick DM. All-or-none measurement raises the bar on performance. JAMA 2006; 295: 1168–1170.

- 33. Peterson ED, Roe MT, Mulgund J, et al. Association between hospital process performance and outcomes among patients with acute coronary syndromes. JAMA 2006; 295: 1912–1920.

- 34. Granger CB, Steg PG, Peterson E, et al. Medication performance measures and mortality following acute coronary syndromes. Am J Med 2005; 118: 858–865.

- 35. Schiele F, Meneveau N, Seronde MF, et al. Compliance with guidelines and 1-year mortality in patients with acute myocardial infarction: a prospective study. Eur Heart J 2005; 26: 873–880.

- 36. Werner RM, Bradlow ET. Relationship between Medicare’s hospital compare performance measures and mortality rates. JAMA 2006; 296: 2694–2702.

- 37. Mandelzweig L, Battler A, Boyko V, et al. The second Euro Heart Survey on acute coronary syndromes: characteristics, treatment, and outcome of patients with ACS in Europe and the Mediterranean Basin in 2004. Eur Heart J 2006; 27: 2285–2293.

- 38. Schiele F, Hochadel M, Tubaro M, et al. Reperfusion strategy in Europe: temporal trends in performance measures for reperfusion therapy in ST-elevation myocardial infarction. Eur Heart J 2010; 31: 2614–2624.

- 39. Spiegelhalter DJ. Funnel plots for comparing institutional performance. Stat Med 2005; 24: 1185–1202.

- 40. Jacobs R, Goddard M, Smith PC. How robust are hospital ranks based on composite performance measures? Med Care 2005; 43: 1177–1184.