Abstract

Cortical sensory representation is highly adaptive to the environment, and prevalent or behaviorally important stimuli are often overrepresented. One class of such stimuli is species-specific vocalizations. Rats vocalize in the ultrasonic range >30 kHz, but cortical representation of this frequency range has not been systematically examined. We recorded in vivo cortical electrophysiological responses to ultrasonic pure-tone pips, natural ultrasonic vocalizations, and pitch-shifted vocalizations to assess how rats represent this ethologically relevant frequency range. We find that nearly 40% of the primary auditory cortex (AI) represents an octave-wide band of ultrasonic vocalization frequencies (UVFs; 32–64 kHz) compared with <20% for other octave bands <32 kHz. These UVF neurons respond preferentially and reliably to ultrasonic vocalizations. The UVF overrepresentation matures in the cortex before it develops in the central nucleus of inferior colliculus, suggesting a cortical origin and corticofugal influences. Furthermore, the development of cortical UVF overrepresentation depends on early acoustic experience. These results indicate that natural sensory experience causes large-scale cortical map reorganization and improves representations of species-specific vocalizations.

Keywords: auditory cortex, development, inferior colliculus, plasticity, vocalizations

Our interactions with the world depend on efficient neural representation of the external environment. Although sensory stimuli span a continuous physical space, the distribution of naturally occurring stimuli is highly sparse and discontinuous: behaviorally relevant stimuli are often repeated frequently, whereas random irrelevant stimuli are not. To represent such an environment efficiently with a limited number of neurons, sensory representation is often nonuniform and biased toward frequently occurring, ethologically relevant inputs (Kim and Bao 2009; Mansfield 1974).

Species-specific vocalizations are an important class of ethologically relevant sounds that play a critical role in guiding appropriate social behaviors and promoting survival (Ehret and Haack 1981; Ehret et al. 1987; Hahn and Lavooy 2005; McIntosh and Barfield 1978). In several species, auditory neurons are more selective for vocalizations or acoustic stimuli that match the spectrotemporal modulations of species-specific vocalizations and other natural stimuli (Garcia-Lazaro et al. 2006; Lewicki 2002; Portfors et al. 2009; Rieke et al. 1995; Wang and Kadia 2001). In rodents, socially relevant ultrasonic vocalizations occur in the ultrasonic vocalization frequency (UVF) range >30 kHz (Brudzynski et al. 1993, 1999; Portfors 2007; Takahashi et al. 2010), and evidence suggests that the UVF range is overrepresented in rat primary auditory cortex (AI; Kim and Bao 2009). However, the mechanisms underlying this inhomogeneous frequency representation have not been explored, as most studies have examined rat auditory cortex with pure tones <32 kHz (Chang and Merzenich 2003; Chang et al. 2005; de Villers-Sidani et al. 2008; Engineer et al. 2008; Han et al. 2007; Polley et al. 2007; Zhang et al. 2001; Zhou and Merzenich 2008).

Nonuniform sensory representation may arise through an innate process as a result of evolution or through an experience-dependent process that is adaptive to the sensory environment. Certain cortical representational features, such as the ocular dominance columns of the visual cortex, are innate, and experience serves to refine further and maintain the cortical structures (Katz and Crowley 2002). In the auditory system, the early acoustic environment plays a crucial role in shaping frequency representation in AI (de Villers-Sidani et al. 2007; Kim and Bao 2009; Zhang et al. 2001). However, these earlier studies have typically used artificial stimuli, and it is unclear how experience-dependent plasticity contributes to cortical representations of species-specific vocalizations in a natural environment.

In the present study, we examine cortical representation of UVFs and its developmental mechanisms in rat AI. We find that the overrepresentation of UVFs requires early acoustic experience and develops first in the auditory cortex before it appears in inferior colliculus (IC). Furthermore, UVF neurons respond more faithfully to repetitive vocalizations than non-UVF neurons. These findings indicate that early experience in natural environments improves the representation of species-specific vocalizations in rat AI.

MATERIALS AND METHODS

Recording and analysis of animal vocalizations.

All procedures were approved by the University of California, Berkeley Animal Care and Use Committee. Animal vocalizations were recorded as previously described (Kim and Bao 2009). Briefly, Sprague-Dawley rats were placed in an anechoic chamber with a ¼-in. Brüel & Kjær (B&K) model 4135 microphone connected to a B&K 2669 preamplifier and B&K 2690 conditioning amplifier. The signal was digitized with a 16-bit analog-to-digital converter (National Instruments) at 200 kHz. Over 2 h of vocalizations were recorded from five postnatal day 11 (P11) pups, six P15 pups, and two pairs of adults. In addition, naturally occurring vocalizations were recorded from nursing P11–13 pups and their dam by placing their home cages into an anechoic chamber for 3-h-long periods daily in both light and dark phases of the daily cycle.

The experimenter visually identified 1,098, 2,113, and 1,798 (P11, P15, and adult, respectively) calls, indicating for each call the approximate start time and center frequency. To increase the signal-to-noise ratio (SNR), a band-pass filter (±10 kHz) was applied around the experimenter-defined frequency for each call. The envelope of the filtered call (Hilbert transform) was used to identify the start and end of each call. To be defined as a call, the signal had to cross the threshold set at 6× the standard deviation of the residual noise (the signal 25–50 ms preceding the experimenter-defined start time). The end of a call was marked when the signal dropped below threshold for ≥40 ms. The frequency profile of an individual call was defined by collapsing the spectrogram of the filtered call across the duration of the call. The center frequency of the call was defined as the peak of the frequency profile of the call. The bandwidth (BW) of the call was the full-width at half-maximum (FWHM) of the frequency profile.
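The call-delimiting steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the analysis code used in the study: the function names (`hilbert_envelope`, `find_call_bounds`, `center_and_fwhm`) are hypothetical, the input is assumed to be already band-pass filtered around the experimenter-defined frequency, and the noise standard deviation is taken from the filtered waveform in the 25–50 ms preceding the experimenter-defined start time.

```python
import numpy as np

def hilbert_envelope(x):
    """Amplitude envelope (magnitude of the analytic signal) via an FFT-based Hilbert transform."""
    n = len(x)
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * h))

def find_call_bounds(x, fs, start_guess_s, n_sd=6.0, min_gap_s=0.040):
    """Return (start, end) sample indices of a call in the filtered signal x, or None."""
    env = hilbert_envelope(x)
    i0 = int(round(start_guess_s * fs))
    # Residual noise: 25-50 ms before the experimenter-defined start time (assumed window).
    lo, hi = i0 - int(0.050 * fs), i0 - int(0.025 * fs)
    thresh = n_sd * np.std(x[lo:hi])
    above = env > thresh
    hits = np.flatnonzero(above[hi:])
    if hits.size == 0:
        return None
    start = hi + hits[0]
    # The call ends once the envelope has stayed below threshold for >= min_gap_s.
    gap = int(min_gap_s * fs)
    last_above = start
    for i in range(start, len(env)):
        if above[i]:
            last_above = i
        elif i - last_above >= gap:
            break
    return start, last_above + 1

def center_and_fwhm(freqs, profile):
    """Center frequency (peak) and full-width at half-maximum of a frequency profile."""
    peak = int(np.argmax(profile))
    half = profile[peak] / 2.0
    left = peak
    while left > 0 and profile[left - 1] >= half:
        left -= 1
    right = peak
    while right < len(profile) - 1 and profile[right + 1] >= half:
        right += 1
    return freqs[peak], freqs[right] - freqs[left]

# Synthetic example: a 50-ms, 40-kHz tone burst in low-level noise, sampled at 200 kHz.
fs = 200_000
t = np.arange(int(0.3 * fs)) / fs
x = 0.01 * np.random.default_rng(0).standard_normal(t.size)
in_call = (t >= 0.10) & (t < 0.15)
x[in_call] += np.sin(2 * np.pi * 40_000 * t[in_call])
start, end = find_call_bounds(x, fs, start_guess_s=0.10)

# Synthetic Gaussian frequency profile centered at 40 kHz.
freqs = np.linspace(20e3, 80e3, 601)
profile = np.exp(-0.5 * ((freqs - 40e3) / 2e3) ** 2)
cf, bw = center_and_fwhm(freqs, profile)
```

The FWHM here is measured on the sampled profile without interpolation, so its resolution is limited by the frequency spacing of the spectrogram.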

Electrophysiological recording procedure.

AI was mapped as previously described (Kim and Bao 2009), and recording of central nucleus of IC was adapted from Popescu and Polley (2010). A total of 30 rats (1,502 multiunits) underwent electrophysiological examinations. Animals were preanesthetized with buprenorphine [0.05 mg/kg subcutaneously (sc)] half an hour before they were anesthetized with sodium pentobarbital (50 mg/kg ip). Atropine sulfate (0.1 mg/kg sc) and dexamethasone (1 mg/kg sc) were administered once every 6 h, and Ringer solution (0.5–1.0 ml sc) was administered once every 4 h. The head was secured in a custom head holder that left the ears unobstructed, and the cisterna magna was drained of cerebrospinal fluid. For cortical recordings, the right auditory cortex was exposed and kept under a layer of silicone oil to prevent desiccation. For IC recordings, a craniotomy was made above the right primary visual cortex (V1) at the interaural line extending ≥2 mm lateral to the midline. Multiunit responses were recorded using tungsten microelectrodes (FHC) or silicon polytrodes (NeuroNexus). References to neurons or units in this text refer to multiunit responses recorded extracellularly. Cortical responses were recorded from 500 to 625 μm below the pial surface. The central nucleus of the IC in adult animals was identified when electrodes/polytrodes had passed through visual cortex and reliable, tonotopically organized responses to tones were seen (starting ∼3 mm below surface of V1). For animals younger than P30, V1 was aspirated so the location of the IC could be visually confirmed. Cortical penetration locations were recorded on a high-resolution image, and care was taken to avoid blood vessels. IC depth was determined from the readings of a hydraulic pump and the depth along the silicon polytrode.

Acoustic stimuli.

A coupler model electrostatic speaker [EC1; Tucker-Davis Technologies (TDT), Alachua, FL] was used to present all acoustic stimuli into the left ear (contralateral to the recorded cortical and IC hemisphere). The speaker was calibrated with the B&K microphone using SigCalRP (TDT) software at 200 kHz for frequencies ≤78 kHz. Filter coefficients were generated based on the calibration curve to implement a frequency-based finite impulse response filter of the order of 500 with DSP processors (RX6; TDT). All sound signals were passed through the filter before being amplified and played to achieve an even output level across the whole frequency range. The final output level of tone pips was also manually confirmed. The speaker had <3% total harmonic distortion across the tested frequency range. Pure-tone pips were used to measure frequency receptive fields (25-ms tones, 1–74 or 2–74 kHz, 0.1-octave increments at 0- to 70-dB sound-pressure level, 10-dB increments). Each frequency × intensity combination was repeated 3 times. In a subset of animals, cortical responses to 4 bouts of pup isolation calls were recorded. These bouts were also pitch-shifted (∼2 octaves lower via Cool Edit Pro) and presented to the animals. The original ultrasonic and pitch-shifted bouts were presented in a pseudorandom order, and each bout was presented 15 times.

Manipulation of developmental environment.

Procedures for reversible conductive hearing loss by ear ligation were adapted from Popescu and Polley (2010). Small incisions were made below each pinna, and the external meatus was ligated using polyester sutures in P10 rat pups under isoflurane anesthesia. Only up to one-half of a litter went through the surgical procedure. Following recovery from surgery, rats were returned to their home cages for a minimum of 2 wk. The weights of the ligated pups and littermate controls were carefully monitored, and no systematic difference was observed. The quality of the ligation was verified around P21 with auditory brain stem responses (ABRs) and visual inspection to ensure adequate closure and bilateral hearing loss of each animal. Before cortical recordings were performed from the ligated animals, the left outer ear was removed, and any buildup was carefully removed until the tympanic membrane could be visualized. The ear removal should not affect the sound level received at the ear because the speaker tube was placed inside the ear canal. In 5 of 6 animals, ABRs were taken both before and after the ear removal to confirm the recovery of hearing. Ear ligation resulted in a ∼35-dB threshold increase across all frequencies tested (2–32 kHz). The ABR threshold was reduced by 27 dB across frequencies after surgical recovery of the ligated ear and was not significantly different from the preligation threshold.

ABRs.

ABRs were recorded to verify successful hearing loss and recovery. Half-hard, stainless steel bare wires (0.005-in. diameter) were inserted behind the pinna of both ears and the vertex of the skull. Pure-tone pips (3-ms duration) were presented at 19 pips per second with an average of 500 repetitions for every frequency-decibel combination. Data acquisition and sound presentation were done using BioSigRP software on a TDT Sys3 recording rig.

Data analysis.

The receptive fields and response properties were isolated using custom-made programs in MATLAB. For each unit, isolation of the receptive field required calculation of the response latency. First, the peristimulus time histogram (PSTH) for all sound stimuli was convolved with a uniform 5-ms window. The peak of the PSTH between 7 and 30 ms after onset of stimuli was the most reliable measure and was defined as the response latency. The baseline firing rate was taken as the mean firing rate in the 47 ms preceding the stimuli. The start of the response window was defined as the point of time at which the PSTH exceeded the baseline firing rate ≥7 ms after the onset of stimuli. The end of the response window was defined as the point of time at which the PSTH was less than the baseline firing rate ≥10 ms after the peak of the PSTH. The spikes that occurred between the start and end of the response window were counted to reconstruct an appropriate receptive field. This penetration-specific rewindowing was important due to an age-dependent shift in response latency.
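A minimal Python sketch of this rewindowing procedure is given here for illustration. It assumes a PSTH with 1-ms bins, takes the first qualifying bin for both the start and the end of the window, and uses a hypothetical function name (`response_window`); the original analysis was done in MATLAB and may differ in these details.

```python
import numpy as np

def response_window(psth, t_ms, smooth_ms=5):
    """Latency, window start, and window end (all in ms) from a PSTH with 1-ms bins.

    psth : firing rate per bin
    t_ms : bin times relative to stimulus onset (negative = prestimulus)
    """
    # Smooth with a uniform 5-ms window.
    sm = np.convolve(psth, np.ones(smooth_ms) / smooth_ms, mode="same")
    # Baseline: mean rate in the 47 ms preceding the stimulus.
    baseline = psth[(t_ms >= -47) & (t_ms < 0)].mean()
    # Latency: peak of the smoothed PSTH between 7 and 30 ms after onset.
    search = np.flatnonzero((t_ms >= 7) & (t_ms <= 30))
    peak = search[np.argmax(sm[search])]
    latency = t_ms[peak]
    # Start: first bin >= 7 ms after onset in which the PSTH exceeds baseline.
    start = t_ms[np.flatnonzero((t_ms >= 7) & (sm > baseline))[0]]
    # End: first bin >= 10 ms after the peak in which the PSTH is below baseline.
    below = np.flatnonzero((t_ms >= latency + 10) & (sm < baseline))
    end = t_ms[below[0]] if below.size else t_ms[-1]
    return latency, start, end

# Synthetic PSTH: baseline 10 Hz, a burst at 10-19 ms, then suppression below baseline.
t_ms = np.arange(-50, 100)
psth = np.where(t_ms < 10, 10.0, np.where(t_ms < 20, 100.0, 5.0))
latency, start, end = response_window(psth, t_ms)
```

Spikes falling between `start` and `end` would then be counted for each frequency × intensity combination to build the receptive field.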

Receptive fields were isolated using an automated filtering and thresholding algorithm as previously described (Insanally et al. 2010; Köver et al. 2013; Yang et al. 2013). Briefly, receptive fields were smoothed with a 3 × 3 median filter and thresholded at 20% of the maximum response. The largest continuous cluster was used as a mask to calculate the isolated receptive field. The characteristic frequency (CF) of a neuron was defined as the center of mass of the isolated receptive field. The threshold of the neuron was the lowest decibel level that elicited responses in the isolated receptive field. The maximum response magnitude was the maximal number of spikes seen for a single frequency-decibel combination. Since each frequency-decibel combination was repeated three times, the average of those three responses was taken. The BW of a neuron was defined as the FWHM of the tuning curve, which was calculated by collapsing the responses to the top two decibel levels of the isolated receptive field.
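The filtering and thresholding steps can be illustrated with the following Python sketch. Several choices are assumptions made for the example rather than details given in the text: the function names are hypothetical, cluster connectivity is taken to be 4-connected, the mask is applied to the unsmoothed receptive field, and the center of mass is computed along a log2-frequency axis.

```python
import numpy as np

def median3x3(rf):
    """3 x 3 median filter with edge replication."""
    p = np.pad(rf, 1, mode="edge")
    n_r, n_c = rf.shape
    shifted = [p[i:i + n_r, j:j + n_c] for i in range(3) for j in range(3)]
    return np.median(np.stack(shifted), axis=0)

def largest_cluster(mask):
    """Boolean mask of the largest 4-connected cluster of True cells (flood fill)."""
    seen = np.zeros(mask.shape, dtype=bool)
    best = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        seen[r0, c0] = True
        stack, cluster = [(r0, c0)], []
        while stack:
            r, c = stack.pop()
            cluster.append((r, c))
            for rr, cc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                inside = 0 <= rr < mask.shape[0] and 0 <= cc < mask.shape[1]
                if inside and mask[rr, cc] and not seen[rr, cc]:
                    seen[rr, cc] = True
                    stack.append((rr, cc))
        if len(cluster) > len(best):
            best = cluster
    out = np.zeros(mask.shape, dtype=bool)
    for r, c in best:
        out[r, c] = True
    return out

def isolate_rf(rf, log2_freqs, levels_db, frac=0.2):
    """rf has shape (n_levels, n_freqs); rows ordered from lowest to highest level."""
    sm = median3x3(rf)
    mask = largest_cluster(sm > frac * sm.max())   # threshold at 20% of the maximum
    iso = np.where(mask, rf, 0.0)                  # isolated receptive field
    # CF: response-weighted center of mass along the log-frequency axis (assumption).
    cf = 2.0 ** ((iso.sum(axis=0) * log2_freqs).sum() / iso.sum())
    # Threshold: lowest level with any response inside the isolated field.
    threshold = levels_db[np.flatnonzero(mask.any(axis=1))[0]]
    return iso, cf, threshold

# Synthetic V-shaped receptive field with one isolated spurious response.
levels_db = np.arange(0, 80, 10)            # 0-70 dB SPL in 10-dB steps
log2_freqs = 1.0 + 0.1 * np.arange(20)      # 0.1-octave steps, in log2(kHz)
rf = np.zeros((8, 20))
for lev in range(3, 8):
    rf[lev, 10 - (lev - 2): 10 + (lev - 2) + 1] = 10.0
rf[1, 2] = 10.0
iso, cf, thr = isolate_rf(rf, log2_freqs, levels_db)
```

In practice, library routines such as `scipy.ndimage.median_filter` and `scipy.ndimage.label` could replace the two helper functions; they are written out here only to keep the example dependent on NumPy alone.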

Responses to vocalizations were quantified by several methods. To measure trial-to-trial variability, the SNR was calculated using a coherence-based metric (Hsu et al. 2004). Briefly, an estimate of the true signal, s(t), was obtained by averaging all PSTHs, R(t). The noise, n(t), for each trial was calculated as the error after subtracting s(t) from R(t). We then calculated the power spectrum of the signal, S(f), and the power spectrum of the noise, N(f), using Welch's method. The coherence γ² between S and R was then calculated:

where R = S + N. The coherence between two spike trains will quantify the SNR as a function of frequency. To give an overall measure for across-trial reliability, we used the coherence function to calculate the normal mutual information, which gives the lower bound on the SNR:

The integration was performed up to Fb = 100 Hz, after which the relationship between the signal and noise power remained flat.
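The following Python sketch illustrates one way such a coherence-based reliability measure can be computed. The two formulas it uses are assumptions, chosen because they are the standard forms for this kind of metric and are consistent with R = S + N: the coherence is taken as γ²(f) = S(f) / [S(f) + N(f)], and the information as I = −∫ log2[1 − γ²(f)] df integrated from 0 to Fb. The function names, the segment length, and the simplified Welch estimator are likewise illustrative, and no correction is applied for the bias introduced by estimating s(t) from a finite number of trials.

```python
import numpy as np

def welch_psd(x, fs, nperseg=256):
    """Minimal Welch estimate: Hann-windowed, 50%-overlapping segments, averaged.

    Absolute scaling is simplified; only ratios of spectra are used below.
    """
    step = nperseg // 2
    win = np.hanning(nperseg)
    segs = [x[i:i + nperseg] for i in range(0, len(x) - nperseg + 1, step)]
    spectra = [np.abs(np.fft.rfft(win * s)) ** 2 for s in segs]
    psd = np.mean(spectra, axis=0) / (fs * np.sum(win ** 2))
    return np.fft.rfftfreq(nperseg, 1.0 / fs), psd

def coherence_info(trials, fs, f_max=100.0, nperseg=256):
    """trials: array (n_trials, n_bins) of single-trial PSTHs R(t)."""
    s = trials.mean(axis=0)                  # estimate of the true signal s(t)
    noise = trials - s                       # per-trial noise n(t) = R(t) - s(t)
    f, S = welch_psd(s, fs, nperseg)
    N = np.mean([welch_psd(n, fs, nperseg)[1] for n in noise], axis=0)
    gamma2 = S / (S + N)                     # assumed form of the coherence
    keep = (f > 0) & (f <= f_max)            # integrate up to Fb = 100 Hz
    df = f[1] - f[0]
    info = -np.sum(np.log2(1.0 - gamma2[keep])) * df
    return f, gamma2, info

# Synthetic example: the same 5-Hz modulated response with low or high trial noise.
fs = 1000.0
t = np.arange(3000) / fs
signal = 1.0 + np.sin(2 * np.pi * 5 * t)
unit = np.random.default_rng(1).standard_normal((15, t.size))
_, g_low, info_reliable = coherence_info(signal + 0.1 * unit, fs)
_, g_high, info_noisy = coherence_info(signal + 2.0 * unit, fs)
```

With these synthetic trials, the less variable responses yield the larger information value, which is the sense in which a higher value indicates better trial-to-trial reliability.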

The correlation between call onsets within a bout and a PSTH of a neuron was also calculated. To assess call onsets automatically, the following methodology was applied. First, the amplitude envelope of the bout was resampled to 200 Hz. Next, to avoid misclassifying strong amplitude modulations within a call as onsets, the ceiling of the amplitude envelope was set at ∼10% of the maximal amplitude, which was significantly higher than the background noise. The first derivative was taken, and the floor was set to zero such that onsets of individual calls are clearly associated with large positive changes. This signal was then uniformly smoothed by 10 bins before being correlated with the PSTH of a neuron. The response reliability index (RRI) was calculated to measure the neural response to the first call compared with the response of all subsequent calls within a bout. The number of spikes in response to each call was calculated (a window of 5–50 ms after onset of call was used). The average number of spikes from the second to last call was divided by the number of spikes to the first call to calculate the RRI. An RRI of <1 indicates neurons that respond more to the first call than subsequent calls within a bout, whereas an RRI equal to 1 indicates neurons that respond equally well to all calls within a bout.
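The onset signal and the RRI described above can be sketched as follows. The function names and the synthetic data are hypothetical, the envelope is assumed to be already resampled to 200 Hz, and the PSTH passed to the correlation is assumed to use the same 5-ms bins as the onset signal.

```python
import numpy as np

def onset_signal(env_200hz, ceil_frac=0.10, smooth_bins=10):
    """Call-onset signal from an amplitude envelope sampled at 200 Hz."""
    # Ceiling at ~10% of the maximum, so within-call modulations are flattened.
    clipped = np.minimum(env_200hz, ceil_frac * env_200hz.max())
    # First derivative, floored at zero: only rising edges (onsets) remain.
    d = np.maximum(np.diff(clipped, prepend=clipped[0]), 0.0)
    # Uniform smoothing over 10 bins.
    return np.convolve(d, np.ones(smooth_bins) / smooth_bins, mode="same")

def onset_correlation(env_200hz, psth_200hz):
    """Pearson correlation between the onset signal and a PSTH with matching bins."""
    return np.corrcoef(onset_signal(env_200hz), psth_200hz)[0, 1]

def rri(spike_times_s, onsets_s, win=(0.005, 0.050)):
    """Mean spike count to calls 2..last divided by the count to the first call."""
    spikes = np.asarray(spike_times_s)
    counts = np.array([np.sum((spikes >= t0 + win[0]) & (spikes < t0 + win[1]))
                       for t0 in onsets_s])
    return counts[1:].mean() / counts[0]

# Synthetic envelope with two calls (bins 20-39 and 100-119).
env = np.zeros(200)
env[20:40] = 1.0
env[100:120] = 1.0
sig = onset_signal(env)

# Synthetic spikes pooled over trials: 4 spikes to the first call, 2 to each later call.
spikes = [0.01, 0.02, 0.03, 0.04, 0.20, 0.31, 0.32, 0.61, 0.62]
r = rri(spikes, onsets_s=[0.0, 0.3, 0.6])
```

In this example the first call evokes twice as many spikes as each later call, giving an RRI of 0.5, that is, a response biased toward the first call of the bout.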

RESULTS

Rat vocalizations are predominantly ultrasonic.

To understand the representation of vocalization frequencies, we quantified the distribution of frequencies used in rat species-specific communications. P11 and P15 pup isolation and adult encounter calls were recorded in a sound-attenuated chamber (Fig. 1A). All calls were >20 kHz, with the pup isolation calls and adult encounter calls encompassing distinct portions of the frequency space (Fig. 1B). The pup isolation call distribution showed a peak ∼40 kHz, whereas adult encounter calls peaked ∼60 kHz.

Fig. 1.

Ultrasonic vocalization in the rat. A: example pup isolation calls [recorded on postnatal day 11 (P11) and P15] and adult encounter calls. B: average frequency profiles show a significant difference in frequencies used by pup and adult calls. C: distribution of call bandwidths (BW) was not different between the 3 groups. D: higher frequency calls have shorter durations (10% of data shown).

The BWs of the P11 and P15 pup isolation calls and adult encounter calls were not significantly different [1-way ANOVA: F(2,5006) = 1.56, P = 0.21]. The durations of individual calls were significantly different between the adult group and the 2 pup groups [1-way ANOVA: F(2,5006) = 352, P < 0.001]. Interestingly, the durations of the individual calls were significantly inversely correlated with the center frequency of a call: calls of higher frequencies had significantly shorter duration (r = −0.65, P < 0.001, collapsing all groups; Fig. 1D). These results are consistent with findings in previous reports that rat vocalization occurs primarily in the frequency range >30 kHz (Hahn and Lavooy 2005; Knutson et al. 2002).

UVFs are preferentially represented in AI.

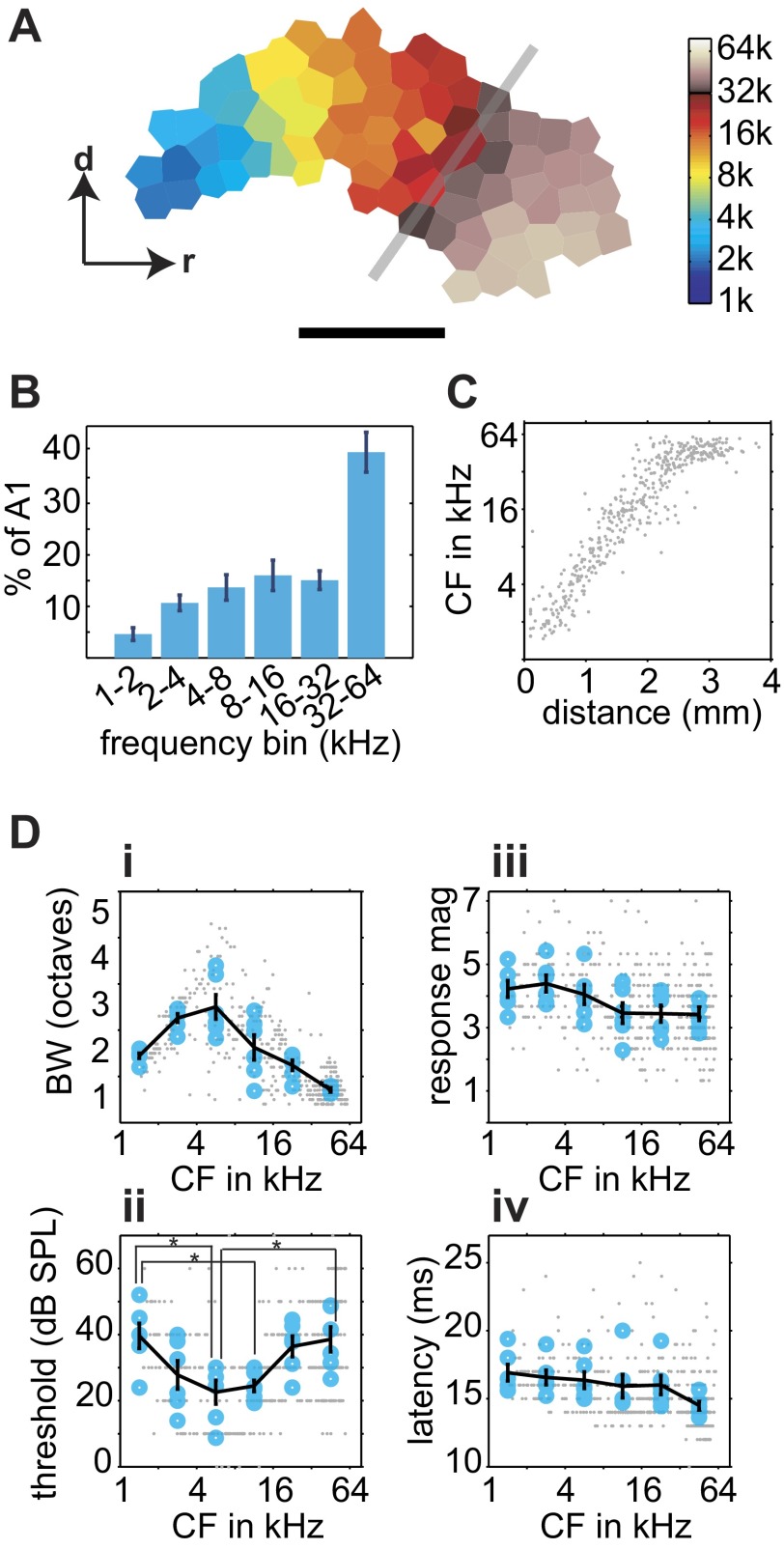

To characterize cortical representations of UVFs, we mapped AI by recording 428 multiunits from 6 adult female rats (all older than P150) using 1- to 74-kHz tones, an increase of the stimulus set of 1.3 octaves (or 26%) compared with most previous mapping studies. This increase led to a near doubling in the size of AI (Fig. 2A). On average, 40% of AI was devoted to the representation of UVFs (Fig. 2B), and the general tonotopic gradient was maintained throughout the areas representing UVFs (Fig. 2C), as previously reported by this laboratory (Kim and Bao 2009). The plateau of spatial distribution of CFs seen in Fig. 2C was not due to limitations of the speaker or the tested frequency range because the speaker had flat output beyond 74 kHz and no cortical neurons selectively responded to tones >70 kHz (Fig. 2C). To assign UVF neurons to AI and anterior auditory field (AAF) correctly, we strictly observed the tonotopic gradient reversal. Neurons encountered after the reversal were not assigned to AI (Fig. 2, A and C).

Fig. 2.

Representation of ultrasonic frequencies and cortical response properties. A: representative cortical characteristic frequency (CF) map showing representation of frequencies ≤74 kHz (k). Gray line indicates where typical maps would end when mapped with tone frequencies ≤32 kHz. Scale bar: 1 mm; r and d indicate rostral and dorsal directions, respectively. B: amount of cortical area representing 1-octave frequency bands. The representation of the 32- to 64-kHz band is significantly larger than that of the other bands. A1, primary auditory cortex (AI). C: distribution of CF across the tonotopic axis. D: BW (i), threshold (ii), maximal response magnitude (mag; iii), and response latency (iv) as a function of CF. Small gray points indicate individual recording sites; larger blue circles represent the octave-band average for each animal; the black lines indicate the average of all animals. *P < 0.05 in post hoc comparisons. SPL, sound-pressure level.

To confirm further that the UVF neurons were an integral part of the AI continuum, we compared response properties across this broader frequency range. We binned neurons by their CF into six octave-sized bands, and one-way ANOVAs were applied (Fig. 2D). Neurons tuned to higher frequencies showed significantly narrower tuning BW, with the exception of the two lowest-frequency bins [F(5,30) = 15.82, P = 1.2 × 10⁻⁷]. This is likely due to the fact that these low-frequency neurons are capable of responding to frequencies <1 kHz, and therefore our measurements often underestimated the tuning BW for the low-CF neurons. Nearly 75 and 45% of neurons with CF in 1–2 and 2–4 kHz, respectively, had a receptive field that extended beyond 1 kHz. In comparison, there were far fewer neurons in the subsequent frequency bins with receptive fields that extended beyond 1 or 74 kHz (0–13%). Linear regression analysis of neurons with receptive fields completely contained within 1 and 74 kHz shows a highly significant negative correlation between CF and BW for all six animals (r = −0.77, P < 0.0001), showing narrower tuning BW for neurons in the UVF range. Interestingly, experience in multifrequency enriched environments can result in narrower frequency tuning (Köver et al. 2013; Yang et al. 2013).

The receptive field threshold was significantly lower for middle-frequency-tuned neurons compared with low- and high-frequency-tuned neurons [F(5,30) = 4.69, P = 0.0028], as previously reported (Sally and Kelly 1988). Maximal response magnitude varied significantly with CF [F(5,30) = 2.6, P = 0.046], but no post hoc pairwise comparisons were significant. However, a significant negative correlation was found between response magnitude and CF (r = −0.57, P < 0.001), suggesting UVF neurons on average fire fewer action potentials in response to a tone. We found no significant difference in response latency between frequency bins [F(5,30) = 1.8, P = 0.14]. The findings that UVF neurons follow the general AI continua on multiple dimensions (BW, threshold, magnitude, and latency) support our conclusion that they were indeed AI neurons.

UVF neurons respond reliably to ultrasonic vocalizations.

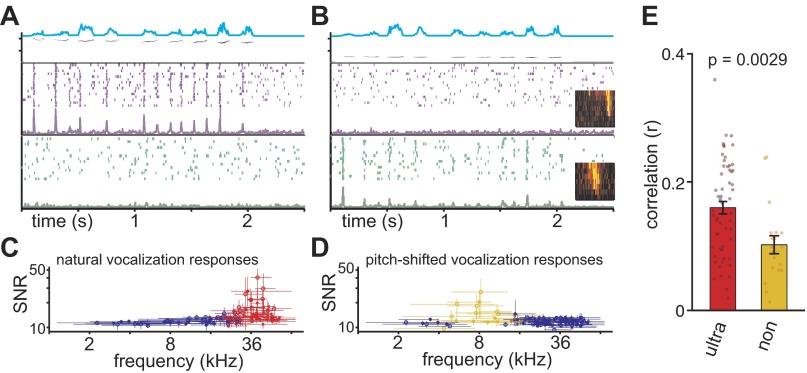

To determine whether the UVF neurons are more specialized to process vocalizations, we recorded cortical responses to four representative bouts of ultrasonic vocalizations. The bouts were between 2 and 3 s in duration with individual calls being uttered at 2–5 calls per second. In addition, these vocalizations were pitch-shifted to be 2 octaves lower than the original stimulus. A total of 120 neurons were sampled across 3 animals (P29, P30, and P31), and responses to pure-tone pips and all 8 vocalizations were recorded. We found that neurons generally responded to the onset of individual calls within a bout in a precisely time-locked manner and only responded if the tonal receptive field of the neuron overlapped with the spectral range of the vocalization (Fig. 3).

Fig. 3.

Ultrasonic vocalization frequency (UVF) neurons respond reliably to ultrasonic vocalizations. Two example cortical neurons in response to natural (A) and pitch-shifted (B) P15 vocalizations are shown. Top shows the spectrogram and amplitude envelope (cyan) of example vocalizations. Middle shows the raster plot and peristimulus time histogram (PSTH) of the example UVF neuron, whereas the bottom shows the example non-UVF neuron. Receptive fields are shown in insets of B. The UVF and non-UVF neurons respond reliably to the natural and pitch-shifted vocalization, respectively. C and D: signal-to-noise ratio (SNR) as a function of CF for natural and pitch-shifted vocalizations. The horizontal error bars indicate the BW of the neuron. The vertical error bars indicate the range of the SNR values measured with the 4 vocalizations. The red and yellow data points represent neurons with BW that overlapped with the frequency range of the 4 ultrasonic vocalizations (n = 57) and the frequency range of the pitch-shifted vocalizations (n = 19), respectively. E: the correlation between cortical response magnitude and the onsets of calls. The correlation was significantly higher for UVF neurons (ultra; red) than non-UVF (non; yellow) neurons. Only neurons that were expected to respond to the natural and pitch-shifted vocalizations (i.e., those in red and yellow in C and D) were included in this analysis.

To quantify trial-to-trial variability, we calculated the SNR using a coherence-based metric, where higher SNR indicates better trial-to-trial reliability (Hsu et al. 2004). The distributions of the average SNR for ultrasonic (Fig. 3C) and pitch-shifted (Fig. 3D) vocalizations clearly indicate a CF dependency. As a population, the neurons for which BW crossed the spectral range of the ultrasonic vocalizations (30–42 kHz, n = 57, hereafter referred to as the UVF neurons) had significantly higher SNR values for ultrasonic vocalizations compared with all other neurons (P = 1.96 × 10⁻⁷). Likewise, the neurons for which BW crossed the spectral range of the pitch-shifted vocalizations (7.5–10.6 kHz, n = 19, hereafter referred to as the non-UVF neurons) had significantly higher SNR values for pitch-shifted vocalizations compared with all other neurons (P = 1.61 × 10⁻⁸). Interestingly, there was no significant difference in SNRs between the UVF and non-UVF neurons (P = 0.39), suggesting both UVF and non-UVF neurons respond equally reliably to normal and pitch-shifted vocalizations, respectively.

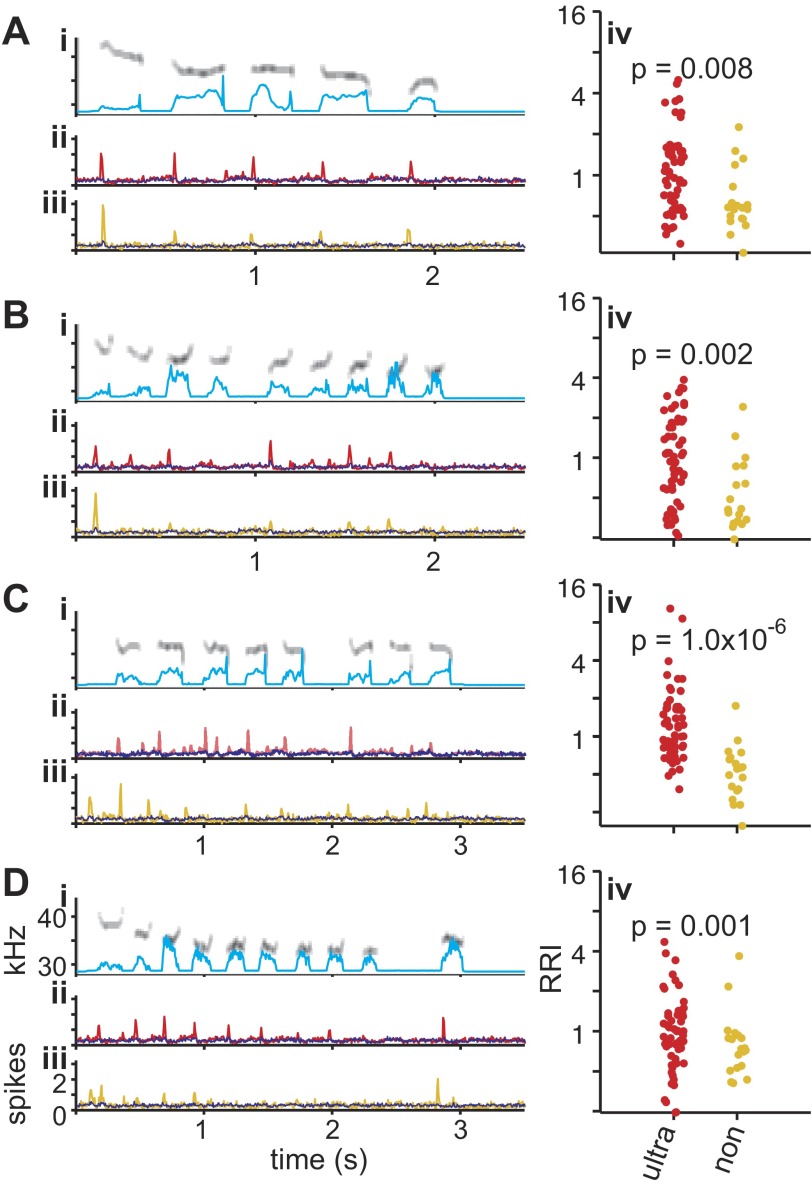

Closer examination of the responses, however, revealed that whereas UVF neurons responded to most calls in a bout of vocalizations, the non-UVF neurons responded mainly to the first call of each bout (Fig. 3, A and B). There is a significantly higher correlation between response firing rate (i.e., PSTHs) and the call onset for the UVF neurons than non-UVF neurons (Fig. 3E), showing that, compared with non-UVF neurons, UVF neurons more faithfully responded to each call within a bout. The bias toward “first call response” in the non-UVF neurons was also measured with the RRI, where the average number of spikes to the second, third, fourth, etc., calls within a bout was divided by the response to the first call. An RRI value of 1 indicates equally strong responses to all calls, whereas an RRI <1 suggests stronger responses to the first than subsequent calls. For all four vocalizations, the average RRI of the non-UVF neurons to pitch-shifted vocalizations was consistently <1 (0.75, 0.64, 0.58, and 0.94), whereas the average RRI of the UVF neurons to normal vocalizations was consistently >1 (1.3, 1.25, 1.56, and 1.1). Direct comparisons show that RRIs are consistently higher for UVF neurons than non-UVF neurons for each vocalization (Fig. 4, iv). Previous studies have shown that AI neurons generally have an RRI of 0.8–1 in response to pure-tone pips or noise bursts presented at ≤10 pips per second, similar to the range observed in non-UVF neurons (Bao et al. 2004; Chang et al. 2005; Kilgard and Merzenich 1998; Kim and Bao 2009; Zhou and Merzenich 2008). These results indicate that although both UVF and non-UVF neurons can respond to normal and pitch-shifted vocalizations, respectively, UVF neurons respond in a more faithful manner to each call utterance in a vocalization bout.

Fig. 4.

Reliable population responses to vocalizations. A–D: population responses to 4 representative pup calls. i: Spectrograms of the vocalizations with amplitude envelope in cyan. Y-axis ranges from 27.5 to 43 kHz. ii: Average PSTH of 57 UVF neurons in response to normal ultrasonic vocalizations (red; same as shown in Fig. 3C). Remaining neurons are shown in blue. iii: Average PSTH of 19 non-UVF neurons in response to pitch-shifted vocalizations (yellow; same as shown in Fig. 3D). Remaining neurons are shown in blue. iv: Response reliability index (RRI) of UVF neurons (in response to ultrasonic vocalizations; red) is significantly higher than RRI of non-UVF neurons (in response to pitch-shifted vocalizations; yellow).

This first call response bias in non-UVF neurons can also be seen on the population level. An average PSTH across all UVF neurons showed responses precisely time-locked to the onset of individual calls (Fig. 4, ii). In contrast, the average PSTH of all non-UVF neurons shows a strong response to the first call of the pitch-shifted vocalizations, with smaller average responses to subsequent calls (Fig. 4, iii).

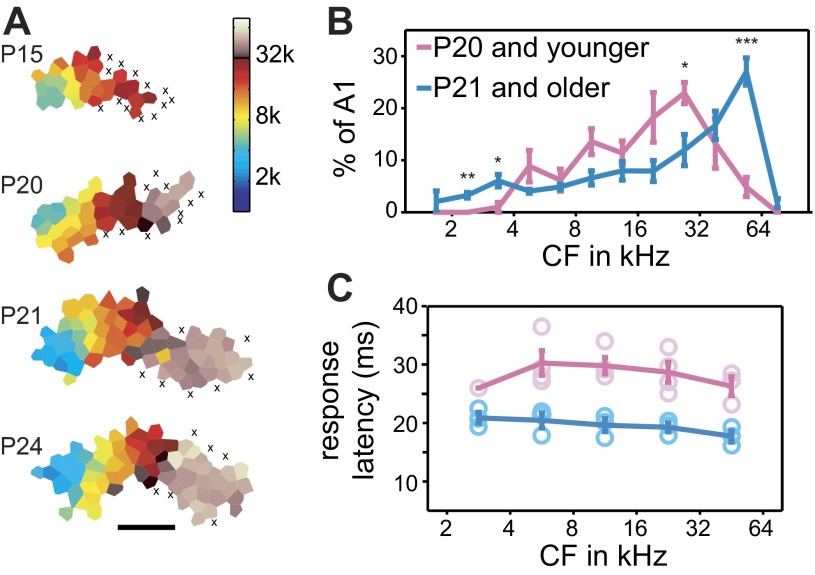

UVF representation develops relatively late.

To investigate the development of UVF representation, we mapped AI of 8 juvenile rats at different developmental ages (Fig. 5). A total of 444 multiunits were recorded for these experiments. Developing animals initially showed responses to midrange frequencies, expanding to the full spectral range with age, as previously reported (de Villers-Sidani et al. 2007; Insanally et al. 2009). Whereas the representation of 1–32 kHz in AI emerges and reaches mature size before P16 (de Villers-Sidani et al. 2007; Insanally et al. 2009), the UVF representation did not reach its full extent until P21, with younger animals showing very few UVF responses. Animals were categorized into one of two groups: P15–20 or P21–26. A two-way ANOVA (age × frequency bin) showed a highly significant interaction [F(11,72) = 7.89, P < 0.0001], likely driven by the P21–26 animals having significantly larger area responding to frequencies >45 kHz, whereas the P15–20 animals had more area responding to frequencies between 23 and 32 kHz (Fig. 5B). The P21–26 animals and the adult animals (Fig. 2) showed no significant group differences [F(1,96) < 0.01] or interactions [F(11,96) = 1.65, P > 0.05], indicating that UVF representations reached the adult level by P21.

Fig. 5.

Development of UVF representations. A: representative cortical maps of developing animals. Each x indicates an unresponsive site; scale bar: 1 mm. B: average percentage of AI area representing different frequencies in 0.5-octave bins. Cortical representations of frequencies >45 kHz were significantly larger in older (n = 4; ages: P21, P22, P24, and P26) than younger animals (n = 4; ages: P15, P17, P19, and P20). t-Tests: *P < 0.05; **P < 0.01; ***P < 0.001. C: response latency is longer in younger animals. A 2-way ANOVA reveals a significant effect for age [F(1,26) = 85.7, P < 0.00001] but not for frequency bin [F(4,26) = 1.29, P = 0.30] and no significant interaction [F(4,26) = 0.55, P = 0.70]. Response latency is significantly different for all testable frequencies (P < 0.01 for the 4 highest bins).

We also compared response properties between the P15–20 and P21–26 animals. The P15–20 animals had significantly longer response latency (P < 0.0001; Fig. 5C). There were no significant differences between the two age groups in terms of threshold or response magnitude (P > 0.10 for both), and the P15–20 group had a marginally narrower BW than the P21–26 group (P = 0.055), as has been reported previously (de Villers-Sidani et al. 2007; Insanally et al. 2009).

Early experience is required for UVF overrepresentation.

We recorded naturally occurring rat vocalizations from litters of nursing rat pups together with their dam in the home cage between P11 and P13 and found that animals vocalized at a mean rate of 38 ± 9 calls per minute. The calls were qualitatively similar to those reported in Fig. 1, with center frequencies of the calls spanning from 30 to 70 kHz, presumably including both pup and adult calls. To test whether sensory experience in a natural acoustic environment is required for the UVF overrepresentation, we induced reversible conductive hearing loss in rat pups with bilateral ear canal ligations at P10, at or before the auditory critical period and the onset of hearing (de Villers-Sidani et al. 2007; Geal-Dor et al. 1993; Popescu and Polley 2010). Adequate hearing loss was confirmed with ABRs. After ≥2 wk, animals were put under anesthesia, and the left ear was removed to recover hearing (confirmed with ABR and visual inspection of the tympanic membrane) before the right auditory cortex was mapped. A total of 6 animals retained satisfactory bilateral ligation and showed successful recovery of hearing before AI mapping, and a total of 269 multiunits were recorded from these animals. Ear-ligated animals showed a smaller representation of frequencies >45 kHz and a larger representation of frequencies between 23 and 32 kHz [2-way ANOVA, group × frequency interactions, F(10,110) = 5.73, P < 0.001; Fig. 6B]. Cortical representations of those frequency bands were not different between the ligated and the P15–20 naïve animals (P > 0.10), suggesting that maturation of the UVF overrepresentation requires normal sensory experience.

Fig. 6.

UVF overrepresentation requires early experience. A: example map from an animal with ears ligated from P10. Scale bar: 1 mm. B: average percentage of AI area representing different frequencies in 0.5-octave bins. t-Test: **P < 0.01; ***P < 0.001.
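The quantity plotted in Fig. 6B, the percentage of AI area per 0.5-octave frequency bin, can be illustrated with a minimal sketch. It is not the analysis code used in this study. The function name, the per-site area weights (for example, from a tessellation of the map), the 1-kHz bin origin, and the number of bins are all assumptions made for illustration.

```python
import math

def percent_area_by_half_octave(cfs_khz, areas, f_min_khz=1.0, n_bins=12):
    """Percent of total mapped area whose CF falls in each 0.5-octave bin.

    cfs_khz   : characteristic frequency of each recording site (kHz)
    areas     : cortical area assigned to each site (hypothetical weights)
    f_min_khz : lower edge of the first bin (assumed, not from the paper)
    n_bins    : number of 0.5-octave bins (assumed, not from the paper)
    """
    if len(cfs_khz) != len(areas):
        raise ValueError("cfs_khz and areas must have the same length")
    totals = [0.0] * n_bins
    for cf, area in zip(cfs_khz, areas):
        if cf <= 0:
            continue  # skip sites without a valid CF
        # Bin index = number of half-octave steps above f_min_khz
        idx = math.floor(math.log2(cf / f_min_khz) / 0.5)
        if 0 <= idx < n_bins:
            totals[idx] += area
    grand_total = sum(totals)
    if grand_total == 0:
        return totals
    return [100.0 * t / grand_total for t in totals]

# Made-up example values, for illustration only (not data from this study)
example_cfs = [2.0, 8.0, 32.0, 48.0]
example_areas = [1.0, 1.0, 1.0, 1.0]
percents = percent_area_by_half_octave(example_cfs, example_areas)
print(percents[10], percents[11])  # bins starting at 32 kHz and ~45 kHz
```

Per-animal percentages of this kind would then be the values entered into a group × frequency comparison such as the 2-way ANOVA reported above.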

Rearing in continuous noise to mask out environmental sounds has been shown to delay the development of the auditory cortex, as evidenced by broad frequency tuning and altered CF maps (Chang and Merzenich 2003; Zhang et al. 2001; Zhou et al. 2011). Our ligated animals showed relatively normal tonotopy and AI maps (Fig. 6A). Previous monaural ligation studies have shown an expansion of low-frequency representations (Popescu and Polley 2010). The discrepancy with the present results is likely due to differences in ligation-induced conductive hearing loss. The ear-ligated animals in the present study had relatively consistent threshold shift across all tested frequencies, whereas animals in the other study had much less hearing loss for low frequencies (Popescu and Polley 2010). Comparing response properties between the ligated animals and controls, we found no significant differences in BW (P = 0.25), threshold (P = 0.87), response magnitude (P = 0.97), or response latency (P = 0.19). Thus bilateral ear ligation did not seem to cause a general developmental delay in AI.

Subcortical overrepresentation of UVFs develops after cortical maturation.

We found that UVFs were also overrepresented in the IC of adult rats (P45–50). A total of 111 multiunits were sampled from IC in this age range. The superficial layers of IC were tuned to low frequencies with progressively higher-frequency tuning for deeper layers of IC (Fig. 7A). Similar to AI, high-CF IC neurons had narrower BW (Fig. 7B). To characterize the distribution of frequencies along the depth of the IC, neurons were separated into those with CF in the UVF range and those with CF below the UVF range. The proportion of IC tonotopic distance devoted to UVFs was 43.3%, similar to the proportion of AI representing UVFs (45.5%).

Fig. 7.

UVF overrepresentation develops later in inferior colliculus (IC) compared with AI. A: CF distribution as a function of distance below primary visual cortex (V1) for 4 independent tracts across 3 adult animals (P45–50). Lines of best fit are shown for sites tuned to below or above 32 kHz. Numbers indicate sites of example receptive fields in B. B: example receptive fields. C: CF distribution as a function of distance for 4 juvenile animals (P21–30). As in A, lines of best fit are shown for sites tuned to below or above 32 kHz. D: proportion of IC representing the UVF range was significantly higher for the older (P45–50) than the younger (P21–30) animals (*P = 0.023, t-test).

We also recorded from 130 multiunits in IC of 4 animals aged between P21 and P30 (Fig. 7C). Although animals in this age range showed mature overrepresentation of UVFs in cortex, the UVF representation in IC was substantially smaller in these animals than in P45–50 adults (26.1 vs. 43.3%; P = 0.023; Fig. 7D). Studies of subcortical auditory responses have also shown a similar time course in spectral development (Romand and Ehret 1990). Thus the full extent of UVF overrepresentation emerges in cortex at P21 before it occurs in IC after P30.

DISCUSSION

We have demonstrated in the present study that representations of UVFs are enlarged in AI during early development through an experience-dependent process. In addition, UVF neurons respond more reliably to ultrasonic vocalization than non-UVF neurons respond to pitch-shifted vocalizations, indicating a specialization in the UVF range. These results are important in many respects. First, they suggest that natural acoustic experience shapes cortical sound representations. Previous studies of developmental sensory plasticity have mostly used artificial stimuli, such as pure tones, broad-band noises, and frequency-modulated sweeps, that are presented repeatedly (Barkat et al. 2011; Chang and Merzenich 2003; de Villers-Sidani et al. 2007, 2008; Han et al. 2007; Insanally et al. 2009; Kim and Bao 2009; Zhang et al. 2001). Questions have been raised as to whether similar processes operate for normal sensory development in natural environments (Sanes and Bao 2009). Here, we show that UVFs are overrepresented through an experience-dependent process in a natural environment.

Second, our results suggest that cortical representation of vocalizations is shaped by early experience. Several studies have shown that auditory neurons preferentially respond to conspecific vocalization over a modified version of the same vocalization or vocalization of other species (Hauber et al. 2007; Suta et al. 2007; Wang and Kadia 2001). The origin of such species specificity, in terms of nature vs. nurture, is unclear. It has been hypothesized that the auditory system and the vocal apparatus of a species coevolve, leading to specialized acoustic representations for the vocal repertoire (Endler 1993). Alternatively, the acoustic representations may become specialized as a consequence of experience-dependent representational plasticity (Cheung et al. 2005; Woolley and Moore 2011). In this study, natural sensory experience resulted in more neurons representing UVFs.

Third, our results suggest that the sensory cortex is the likely locus of developmental plasticity in a natural sensory environment. Some subcortical auditory nuclei exhibit sound experience-induced changes in stimulus selectivity, suggesting that those areas may be the loci of plasticity and that cortical plasticity might be feedforward manifestations of subcortical effects (Oliver et al. 2011; Poon and Chen 1992; Sanes and Constantine-Paton 1985). A recent study failed to observe frequency map reorganization in IC following repetitive exposure to a tone, whereas the same exposure resulted in enlarged cortical representation of the tone (Miyakawa et al. 2013). In the present study, we show that the UVF overrepresentation emerges in the sensory cortex rapidly, transitioning into the adult form at approximately P21 (Fig. 5), whereas it develops in IC during a later and protracted period up to P45 (Fig. 7). These different time courses indicate that the UVF overrepresentation in the cortex is independent of that in IC. The auditory thalamus may also be a source of plasticity and UVF overrepresentation, although a recent study failed to find tonotopic frequency map plasticity in the auditory thalamus of mice under conditions that produced frequency map reorganization in the auditory cortex (Barkat et al. 2011). The later development of UVF overrepresentation in IC may be mediated by intrinsic subcortical processes and/or top-down influences from the cortex or auditory thalamus (Bajo et al. 2010; Gao and Suga 1998, 2000).

Although a majority of studies investigating the representation of frequencies in the rat AI have used pure tones <32 kHz (e.g., Chang et al. 2005; Chang and Merzenich 2003; de Villers-Sidani et al. 2008; Engineer et al. 2008; Han et al. 2007; Polley et al. 2007; Zhang et al. 2001; Zhou and Merzenich 2008), a few have measured responses to frequencies ≤73 kHz (Kim and Bao 2009; Popescu and Polley 2010; Rutkowski et al. 2003; Sally and Kelly 1988; Wu et al. 2006). The earliest of these papers tested frequencies ≤63 kHz and reported ∼80% of AI devoted to the upper 3 octaves tested, leaving 20% for the lower 4 octaves (Sally and Kelly 1988). Interestingly, a more recent paper tested frequencies ≤50 kHz and reported a much more conservative difference in representation: ½ of AI devoted to the upper 3.5 octaves and ½ devoted to the lower 2.5 octaves (Rutkowski et al. 2003). Two additional papers used frequencies ≤64 kHz but did not quantify representation of different frequency bands, although one of them reported that there was “relatively broad cortical representation of high frequencies in the rat auditory cortex” (Popescu and Polley 2010; Wu et al. 2006). Finally, a paper previously published by this laboratory reported quantifications of representation very similar to those reported in this paper (Kim and Bao 2009).
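To put the figures quoted above on a common footing, they can be converted to a percentage of AI per octave. The sketch below is only illustrative arithmetic on the numbers as quoted in the preceding paragraph. It assumes that representation is spread evenly within each quoted range, which the cited studies do not claim, and the helper name is hypothetical.

```python
def percent_per_octave(percent_of_ai, octaves):
    """Average percent of AI per octave, assuming an even spread
    within the quoted range (a simplifying assumption)."""
    return percent_of_ai / octaves

# (percent of AI, number of octaves) as quoted in the text above
reports = {
    "Sally and Kelly 1988": {"upper": (80.0, 3.0), "lower": (20.0, 4.0)},
    "Rutkowski et al. 2003": {"upper": (50.0, 3.5), "lower": (50.0, 2.5)},
}

for study, ranges in reports.items():
    for label, (pct, n_oct) in ranges.items():
        value = percent_per_octave(pct, n_oct)
        print(f"{study}, {label} range: {value:.1f}% of AI per octave")
```

Under this assumption, the quoted figures correspond to roughly 27% vs. 5% of AI per octave for the upper and lower ranges in Sally and Kelly (1988), and roughly 14% vs. 20% in Rutkowski et al. (2003).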

In addition to rats, the auditory cortex has also been quantitatively examined in cats and mice, allowing a comparison across species (Andersen et al. 1980; Carrasco and Lomber 2009; Hackett et al. 2011; Reale and Imig 1980; Rothschild et al. 2010; Stiebler et al. 1997). Although neurons in the cat AI are capable of responding to high ultrasonic frequencies ≤64 kHz, the CFs show a uniform distribution (Carrasco and Lomber 2009). Unlike cats and rats, mouse AI and AAF appear to be devoid of representations of high ultrasonic frequencies. The reversal frequencies between AI and AAF in mice have been reported to be between 32 and 45 kHz (Hackett et al. 2011; Stiebler et al. 1997), substantially lower than the frequencies of their own vocalizations (Grimsley et al. 2011; Hahn and Lavooy 2005; Liu et al. 2003; Portfors 2007). Mice appear to have a separate ultrasonic field representing frequencies between 40 and 80 kHz, which may be a specialized region to process their vocalizations (Stiebler et al. 1997). This mouse ultrasonic field is considered separate from AI because of its lack of tonotopy and sharp frequency transitions between the field and other neighboring auditory fields. By contrast, the UVF area in the rat is highly tonotopic and reverses into AAF (Kim and Bao 2009; Popescu and Polley 2010; Rutkowski et al. 2003; Sally and Kelly 1988; Wu et al. 2006), suggesting the rat UVF region is indeed a part of AI. Even though the neurons in the highest frequency band have significantly narrower BW, higher thresholds, and lower response magnitudes, these differences are part of a gradual continuum that starts well below the UVF range (Fig. 2D). In this study, AI was defined functionally by topography of response properties. The lower portion of the rat UVF region (≤40 kHz) has been shown to receive nearly exclusive thalamic input from the ventral division of the medial geniculate body, the lemniscal auditory thalamus (Winer et al. 1999). However, further studies should determine whether the full UVF region receives projections from lemniscal auditory thalamus, which signifies anatomically defined AI (Roger and Arnault 1989; Romanski and LeDoux 1993).

In addition to more neurons representing UVFs, neurons in this frequency range also respond to natural vocalizations more faithfully. It has been previously reported that mouse IC neurons show robust responses to mouse vocalizations, even when the vocalization frequencies are substantially higher than the preferred frequency range of a neuron (Portfors et al. 2009). Unlike the mouse system, in this study, only UVF neurons in rat AI respond to ultrasonic vocalization (Fig. 3), suggesting that the frequency receptive field is generally a good predictor of responses to vocalizations, as previously shown in cats (Gehr et al. 2000). This appears to be a general phenomenon for all neurons in rat AI, as non-UVF neurons responded with similar reliability to pitch-shifted vocalizations when the frequency range of the vocalizations overlapped with that of the frequency receptive field of the neurons. However, the non-UVF neurons appeared to respond primarily to the first call utterance within a bout, unlike UVF neurons, which responded to most calls within a bout (Fig. 4). Interestingly, recordings from human AI also show high temporal fidelity to conspecific vocalizations, that is, human speech sounds (Pasley et al. 2012). It should be noted that the temporal rate of the calls in a bout was lower than the cutoff rate of the temporal modulation transfer function of non-UVF neurons (Bao et al. 2004; Chang et al. 2005; Kilgard and Merzenich 1998; Kim and Bao 2009; Zhou and Merzenich 2008). Thus the difference between responses in UVF and non-UVF neurons is likely to be specific to vocalizations and not general to all repetitive stimuli. Behavioral assays have demonstrated a left-hemisphere lateralization of ultrasonic processing (Ehret 1987), suggesting that conspecific vocalizations are likely also preferentially represented in left AI. Further electrophysiological studies comparing the right and left hemispheric responses to vocalizations would be of great interest.

Blockade of normal acoustic experience with bilateral ear ligation might have prevented the emergence of UVF overrepresentations by two mechanisms: through either preventing stimulus-driven cortical map reorganization or arresting normal cortical maturation. We assessed the general state of cortical maturation using the neuronal response latency, which shows a developmental reduction that coincides with the emergence of UVF overrepresentations. The latency was not significantly different between the ear-ligated and the age-matched control groups. Furthermore, we did not observe characteristic features of developmentally retarded AI, such as broad frequency tuning (Chang and Merzenich 2003; Zhang et al. 2001; Zhou et al. 2011). These results suggest that the ear ligation prevented UVF overrepresentation without causing a general delay of cortical maturation.

Rat calls are far less frequent in the natural environment than the exposure stimuli used in typical sound exposure studies. Yet, we observed robust experience-dependent overrepresentations of the vocalization frequency range. It is possible that the animals in the sound exposure studies were unnecessarily overstimulated, whereas the cortical plasticity effects had already been saturated. Alternatively, social context, behavioral relevance, and acoustic properties of the conspecific calls might make them more efficient in shaping cortical sound representations (Cohen et al. 2011; Liu et al. 2006; Liu and Schreiner 2007; Schnupp et al. 2006). In addition, the motor production of the calls and corollary feedback might also enhance cortical plasticity and contribute to overrepresentation of UVFs (Cheung et al. 2005). Future studies should elucidate how these factors influence cortical representational plasticity.

GRANTS

This research was supported by National Institute on Deafness and Other Communication Disorders (NIDCD) Grant DC-009259 (S. Bao), the National Science Foundation's Graduate Research Fellowship Program (H. Kim), and NIDCD National Research Service Award 1F31-DC-010319 (H. Kim).

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

H.K. and S.B. conception and design of research; H.K. performed experiments; H.K. analyzed data; H.K. interpreted results of experiments; H.K. prepared figures; H.K. and S.B. drafted manuscript; H.K. and S.B. edited and revised manuscript; H.K. and S.B. approved final version of manuscript.

ACKNOWLEDGMENTS

We thank D. Blake, H. Kover, J. Elstrott, K. Ford, and B. Weiner for comments on previous versions of this manuscript, L. Hamilton for assistance with vocalization analysis, and the members of the Bao laboratory for insightful discussions.

REFERENCES

- Andersen RA, Knight PL, Merzenich MM. The thalamocortical and corticothalamic connections of AI, AII, and the anterior auditory field (AAF) in the cat: evidence of two largely segregated systems of connections. J Comp Neurol 194: 663–701, 1980

- Bajo VM, Nodal FR, Moore DR, King AJ. The descending corticocollicular pathway mediates learning-induced auditory plasticity. Nat Neurosci 13: 253–260, 2010

- Bao S, Chang EF, Woods J, Merzenich MM. Temporal plasticity in the primary auditory cortex induced by operant perceptual learning. Nat Neurosci 7: 974–981, 2004

- Barkat TR, Polley DB, Hensch TK. A critical period for auditory thalamocortical connectivity. Nat Neurosci 14: 1189–1194, 2011

- Brudzynski SM, Bihari F, Ociepa D, Fu XW. Analysis of 22 kHz ultrasonic vocalization in laboratory rats: long and short calls. Physiol Behav 54: 215–221, 1993

- Brudzynski SM, Kehoe P, Callahan M. Sonographic structure of isolation-induced ultrasonic calls of rat pups. Dev Psychobiol 34: 195–204, 1999

- Carrasco A, Lomber SG. Differential modulatory influences between primary auditory cortex and the anterior auditory field. J Neurosci 29: 8350–8362, 2009

- Chang EF, Bao S, Imaizumi K, Schreiner CE, Merzenich MM. Development of spectral and temporal response selectivity in the auditory cortex. Proc Natl Acad Sci USA 102: 16460–16465, 2005

- Chang EF, Merzenich MM. Environmental noise retards auditory cortical development. Science 300: 498–502, 2003

- Cheung SW, Nagarajan SS, Schreiner CE, Bedenbaugh PH, Wong A. Plasticity in primary auditory cortex of monkeys with altered vocal production. J Neurosci 25: 2490–2503, 2005

- Cohen L, Rothschild G, Mizrahi A. Multisensory integration of natural odors and sounds in the auditory cortex. Neuron 72: 357–369, 2011

- de Villers-Sidani E, Chang EF, Bao S, Merzenich MM. Critical period window for spectral tuning defined in the primary auditory cortex (A1) in the rat. J Neurosci 27: 180–189, 2007

- de Villers-Sidani E, Simpson KL, Lu YF, Lin RC, Merzenich MM. Manipulating critical period closure across different sectors of the primary auditory cortex. Nat Neurosci 11: 957–965, 2008

- Ehret G. Left hemisphere advantage in the mouse brain for recognizing ultrasonic communication calls. Nature 325: 249–251, 1987

- Ehret G, Haack B. Categorical perception of mouse pup ultrasound by lactating females. Naturwissenschaften 68: 208–209, 1981

- Ehret G, Koch M, Haack B, Markl H. Sex and parental experience determine the onset of an instinctive behavior in mice. Naturwissenschaften 74: 47, 1987

- Endler J. Some general comments on the evolution and design of animal communication systems. Philos Trans R Soc Lond B Biol Sci 340: 215–225, 1993

- Engineer CT, Perez CA, Chen YH, Carraway RS, Reed AC, Shetake JA, Jakkamsetti V, Chang KQ, Kilgard MP. Cortical activity patterns predict speech discrimination ability. Nat Neurosci 11: 603–608, 2008

- Gao E, Suga N. Experience-dependent corticofugal adjustment of midbrain frequency map in bat auditory system. Proc Natl Acad Sci USA 95: 12663–12670, 1998

- Gao E, Suga N. Experience-dependent plasticity in the auditory cortex and the inferior colliculus of bats: role of the corticofugal system. Proc Natl Acad Sci USA 97: 8081–8086, 2000

- Garcia-Lazaro JA, Ahmed B, Schnupp JW. Tuning to natural stimulus dynamics in primary auditory cortex. Curr Biol 16: 264–271, 2006

- Geal-Dor M, Freeman S, Li G, Sohmer H. Development of hearing in neonatal rats: air and bone conducted ABR thresholds. Hear Res 69: 236–242, 1993

- Gehr DD, Komiya H, Eggermont JJ. Neuronal responses in cat primary auditory cortex to natural and altered species-specific calls. Hear Res 150: 27–42, 2000

- Grimsley JM, Monaghan JJ, Wenstrup JJ. Development of social vocalizations in mice. PLoS One 6: e17460, 2011

- Hackett TA, Barkat TR, O'Brien BM, Hensch TK, Polley DB. Linking topography to tonotopy in the mouse auditory thalamocortical circuit. J Neurosci 31: 2983–2995, 2011

- Hahn ME, Lavooy MJ. A review of the methods of studies on infant ultrasound production and maternal retrieval in small rodents. Behav Genet 35: 31–52, 2005

- Han YK, Köver H, Insanally MN, Semerdjian JH, Bao S. Early experience impairs perceptual discrimination. Nat Neurosci 10: 1191–1197, 2007

- Hauber ME, Cassey P, Woolley SM, Theunissen FE. Neurophysiological response selectivity for conspecific songs over synthetic sounds in the auditory forebrain of non-singing female songbirds. J Comp Physiol A Neuroethol Sens Neural Behav Physiol 193: 765–774, 2007

- Hsu A, Borst A, Theunissen F. Quantifying variability in neural responses and its application for the validation of model predictions. Network 15: 91–109, 2004

- Insanally MN, Albanna BF, Bao S. Pulsed noise experience disrupts complex sound representations. J Neurophysiol 103: 2611–2617, 2010

- Insanally MN, Köver H, Kim H, Bao S. Feature-dependent sensitive periods in the development of complex sound representation. J Neurosci 29: 5456–5462, 2009

- Katz LC, Crowley JC. Development of cortical circuits: lessons from ocular dominance columns. Nat Rev Neurosci 3: 34–42, 2002

- Kilgard MP, Merzenich MM. Plasticity of temporal information processing in the primary auditory cortex. Nat Neurosci 1: 727–731, 1998

- Kim H, Bao S. Selective increase in representations of sounds repeated at an ethological rate. J Neurosci 29: 5163–5169, 2009

- Knutson B, Burgdorf J, Panksepp J. Ultrasonic vocalizations as indices of affective states in rats. Psychol Bull 128: 961–977, 2002

- Köver H, Gill K, Tseng YT, Bao S. Perceptual and neuronal boundary learned from higher-order stimulus probabilities. J Neurosci 33: 3699–3705, 2013

- Lewicki MS. Efficient coding of natural sounds. Nat Neurosci 5: 356–363, 2002

- Liu RC, Linden JF, Schreiner CE. Improved cortical entrainment to infant communication calls in mothers compared with virgin mice. Eur J Neurosci 23: 3087–3097, 2006

- Liu RC, Miller KD, Merzenich MM, Schreiner CE. Acoustic variability and distinguishability among mouse ultrasound vocalizations. J Acoust Soc Am 114: 3412–3422, 2003

- Liu RC, Schreiner CE. Auditory cortical detection and discrimination correlates with communicative significance. PLoS Biol 5: e173, 2007

- Mansfield RJ. Neural basis of orientation perception in primate vision. Science 186: 1133–1135, 1974

- McIntosh TK, Barfield RJ. Ultrasonic vocalisations facilitate sexual behaviour of female rats. Nature 272: 163–164, 1978

- Miyakawa A, Gibboni R, Bao S. Repeated exposure to a tone transiently alters spectral tuning bandwidth of neurons in the central nucleus of inferior colliculus in juvenile rats. Neuroscience 230: 114–120, 2013

- Oliver DL, Izquierdo MA, Malmierca MS. Persistent effects of early augmented acoustic environment on the auditory brainstem. Neuroscience 184: 75–87, 2011

- Pasley BN, David SV, Mesgarani N, Flinker A, Shamma SA, Crone NE, Knight RT, Chang EF. Reconstructing speech from human auditory cortex. PLoS Biol 10: e1001251, 2012

- Polley DB, Read HL, Storace DA, Merzenich MM. Multiparametric auditory receptive field organization across five cortical fields in the albino rat. J Neurophysiol 97: 3621–3638, 2007

- Poon PW, Chen X. Postnatal exposure to tones alters the tuning characteristics of inferior collicular neurons in the rat. Brain Res 585: 391–394, 1992

- Popescu MV, Polley DB. Monaural deprivation disrupts development of binaural selectivity in auditory midbrain and cortex. Neuron 65: 718–731, 2010

- Portfors CV. Types and functions of ultrasonic vocalizations in laboratory rats and mice. J Am Assoc Lab Anim Sci 46: 28–34, 2007

- Portfors CV, Roberts PD, Jonson K. Over-representation of species-specific vocalizations in the awake mouse inferior colliculus. Neuroscience 162: 486–500, 2009

- Reale RA, Imig TJ. Tonotopic organization in auditory cortex of the cat. J Comp Neurol 192: 265–291, 1980

- Rieke F, Bodnar DA, Bialek W. Naturalistic stimuli increase the rate and efficiency of information transmission by primary auditory afferents. Proc Biol Sci 262: 259–265, 1995

- Roger M, Arnault P. Anatomical study of the connections of the primary auditory area in the rat. J Comp Neurol 287: 339–356, 1989

- Romand R, Ehret G. Development of tonotopy in the inferior colliculus. I. Electrophysiological mapping in house mice. Dev Brain Res 54: 221–234, 1990

- Romanski LM, LeDoux JE. Organization of rodent auditory cortex: anterograde transport of PHA-L from MGv to temporal neocortex. Cereb Cortex 3: 499–514, 1993

- Rothschild G, Nelken I, Mizrahi A. Functional organization and population dynamics in the mouse primary auditory cortex. Nat Neurosci 13: 353–360, 2010

- Rutkowski R, Miasnikov AA, Weinberger NM. Characterisation of multiple physiological fields within the anatomical core of rat auditory cortex. Hear Res 181: 116–130, 2003

- Sally SL, Kelly JB. Organization of auditory cortex in the albino rat: sound frequency. J Neurophysiol 59: 1627–1638, 1988

- Sanes DH, Bao S. Tuning up the developing auditory CNS. Curr Opin Neurobiol 19: 188–199, 2009

- Sanes DH, Constantine-Paton M. The sharpening of frequency tuning curves requires patterned activity during development in the mouse, Mus musculus. J Neurosci 5: 1152–1166, 1985

- Schnupp JW, Hall TM, Kokelaar RF, Ahmed B. Plasticity of temporal pattern codes for vocalization stimuli in primary auditory cortex. J Neurosci 26: 4785–4795, 2006

- Stiebler I, Neulist R, Fichtel I, Ehret G. The auditory cortex of the house mouse: left-right differences, tonotopic organization and quantitative analysis of frequency representation. J Comp Physiol A 181: 559–571, 1997

- Suta D, Popelár J, Kvasnák E, Syka J. Representation of species-specific vocalizations in the medial geniculate body of the guinea pig. Exp Brain Res 183: 377–388, 2007

- Takahashi N, Kashino M, Hironaka N. Structure of rat ultrasonic vocalizations and its relevance to behavior. PLoS One 5: e14115, 2010

- Wang X, Kadia SC. Differential representation of species-specific primate vocalizations in the auditory cortices of marmoset and cat. J Neurophysiol 86: 2616–2620, 2001

- Winer JA, Sally SL, Larue DT, Kelly JB. Origins of medial geniculate body projections to physiologically defined zones of rat primary auditory cortex. Hear Res 130: 42–61, 1999

- Woolley SM, Moore JM. Coevolution in communication senders and receivers: vocal behavior and auditory processing in multiple songbird species. Ann NY Acad Sci 1225: 155–165, 2011

- Wu GK, Li P, Tao HW, Zhang LI. Nonmonotonic synaptic excitation and imbalanced inhibition underlying cortical intensity tuning. Neuron 52: 705–715, 2006

- Yang S, Zhang LS, Gibboni R, Weiner B, Bao S. Impaired development and competitive refinement of the cortical frequency map in tumor necrosis factor-α-deficient mice. Cereb Cortex. First published February 28, 2013; 10.1093/cercor/bht053

- Zhang LI, Bao S, Merzenich MM. Persistent and specific influences of early acoustic environments on primary auditory cortex. Nat Neurosci 4: 1123–1130, 2001

- Zhou X, Merzenich MM. Enduring effects of early structured noise exposure on temporal modulation in the primary auditory cortex. Proc Natl Acad Sci USA 105: 4423–4428, 2008

- Zhou X, Panizzutti R, De Villers-Sidani E, Madeira C, Merzenich MM. Natural restoration of critical period plasticity in the juvenile and adult primary auditory cortex. J Neurosci 31: 5625–5634, 2011