Abstract

The regulatory review and approval process is a significant part of the workflow associated with initiating clinical and translational research projects. Ambiguity concerning submission requirements and expected times associated with the review process can create additional work for research teams and ultimately delay important scientific projects. In an effort to provide assistance to investigators, we have developed an online interactive interface that elicits basic study characteristics for a single project and subsequently produces a list of the administrative applications required for approval, along with clear instructions concerning what is expected from the research team. This system, the Vanderbilt Customized Action Plan (V-CAP), was launched in October 2006 and has been used extensively. The informatics systems-based approach is scalable to other academic medical centers, and the authors report details concerning: (1) V-CAP project design; (2) a reference workflow associated with Vanderbilt policies and regulations; (3) V-CAP metrics of use by Vanderbilt research teams; and (4) a list of recommendations for other academic centers considering a similar systems-based approach for helping researchers efficiently navigate processes related to regulatory approval.

Regulatory complexities and new and changing federal and institutional policies in the research environment have increased significantly over the past decade and created the need for a greater number of reviews and approvals required for the initiation and conduct of clinical and translational research. Each review provides a critical function, for example, in protecting rights and welfare of human subjects1, protecting vulnerable populations, ensuring ethical principles are upheld, maintaining financial feasibility and security, maintaining compliance with regulations, ensuring biosafety, and protecting privacy. However, responsibility for the numerous regulatory review processes is typically not centralized within institutions, forcing research teams to interact with multiple departments independently to gain approval to initiate a study. Ambiguity concerning submission requirements and expected times associated with the review process can create additional work for research teams and ultimately delay important scientific projects.

The problem is exacerbated when federal and institutional policies are added or modified, essentially creating a ‘moving target’ for researchers. Regulatory requirements can be particularly burdensome or confusing to new or junior investigators, those embarking on interdisciplinary research, and those conducting investigator-initiated research. These are critical populations within Clinical and Translational Science Award (CTSA) programs, including the CTSA at Vanderbilt University. These challenges could theoretically lead to a scenario where a scientist with a novel research idea is dissuaded from conducting studies due at least in part to the complexity of the regulatory approvals process.2

There is a need to assist researchers with research initiation processes. In response, academic research institutions have recently begun to create offices devoted to providing assistance to researchers with such processes.3, 4 Vanderbilt initiated the Research Support Services office in 2004 to develop enterprise-wide programs to facilitate clinical and translational research, and to work directly with investigators on study initiation and conduct. In addition, many institutions have realized the need for informatics to provide technical solutions for the creation and management of administrative applications.5 Vanderbilt also created an Office of Research Informatics in 2007 focused on providing informatics tools and services for the clinical and translational research domain. The pairing of these complementary departments was designed to generate comprehensive initiatives with the goal of decreasing the researcher burden. Generally, we believe systems that effectively provide researchers with routine and frequently needed administrative support increase staff efficiency.

An assessment of the Vanderbilt regulatory review and approval process identified up to 20 potential applications, authorizations, or agreements required prior to initiating research. Streamlining the regulatory approval process for scientific investigators was therefore identified as a critical target for improvement. We examined all required review and approval procedures within the Vanderbilt research enterprise and found that requisite authorizations were triggered by a limited number of basic study characteristics. Our hypothesis was that an interactive informatics tool collecting only those characteristics that generate the need for a specific approval can efficiently produce a tailored, accurate list of required authorizations. We report here details concerning the informatics tool allowing researchers to receive a Vanderbilt Customized Action Plan (V-CAP) for individual research studies, a reference workflow associated with Vanderbilt regulations and policies, and researcher usage statistics for 24 months of operation. We also suggest a list of recommendations for other academic centers considering a similar systems-based approach for assisting researchers in the regulatory approval process.

Workflow for a proposed informatics solution

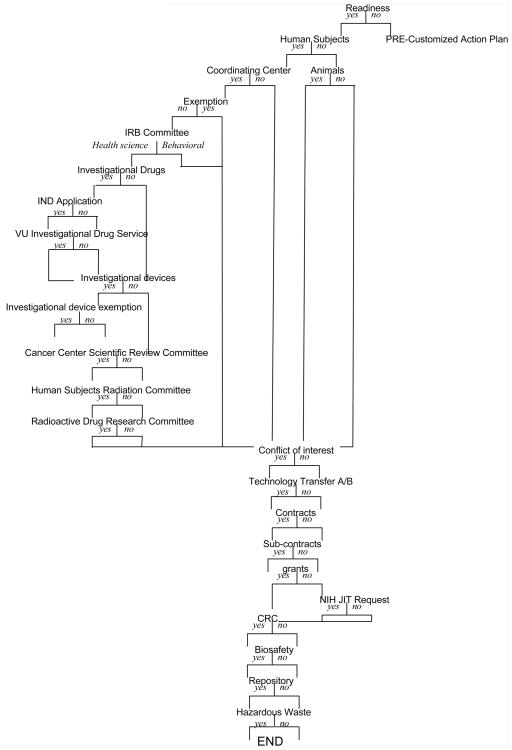

The V-CAP research planning assistant was designed around the concept of allowing investigators to describe characteristics of research they are proposing via a series of dialogue screens. The V-CAP system is accessed through StarBRITE, our centralized online research initiation, planning and support researcher portal. Users register a project name and are then presented with a series of 26 questions concerning a specific research study (Figure 1 illustrates the flow of the interview; Appendix 1 is also provided, which details the specific content of each question). Questions are shown sequentially to users and embedded program branching logic ensures that researchers are only presented questions that are relevant based on answers to previous questions. Also shown in Appendix 1 is the nature of the particular application that generated the question (i.e. whether it relates to a national policy or a local one).

Figure 1.

Flow diagram of the branching logic used in the interactive survey.
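The branching behavior described above can be illustrated with a minimal sketch. It is not the V-CAP implementation (which the paper describes as PHP and JavaScript); the `Question` class, the `show_if` rule, and the three example questions are hypothetical stand-ins for the content detailed in Appendix 1.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Question:
    """One interview screen plus a rule deciding whether it is shown."""
    key: str
    text: str
    # Hypothetical predicate over earlier answers; None means "always ask".
    show_if: Optional[Callable[[dict], bool]] = None


# Illustrative questions only; the real question set is in Appendix 1.
QUESTIONS = [
    Question("human_subjects", "Does the study involve human subjects?"),
    Question("minors", "Will any participants be under 18?",
             show_if=lambda a: a.get("human_subjects") is True),
    Question("core_facility", "Will the study use a shared core facility?"),
]


def run_interview(questions, answer_source):
    """Ask questions in order, skipping any whose show_if rule is not met."""
    answers = {}
    for q in questions:
        if q.show_if is None or q.show_if(answers):
            answers[q.key] = answer_source(q)
    return answers


# Scripted answers stand in for a researcher clicking through the screens.
scripted = {"human_subjects": False, "minors": True, "core_facility": True}
answers = run_interview(QUESTIONS, lambda q: scripted[q.key])
print(answers)
```

Because the scripted study involves no human subjects, the follow-up question about minors is never asked and never appears in the recorded answers.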

During the interview process, researcher end-users may view context-sensitive help for each question. Each help screen provides a brief description of the relevant regulation or policy, ancillary or educational information related to the needed approvals as well as relevant assistance available from the institution.

Once all relevant questions have been answered during the interview process, the user is presented with a review/validation screen and can modify answers as needed. After reviewing, the user chooses to initiate the V-CAP and is presented with a printable list of required approvals, an electronic link to each required application/form, and a link to an associated document (called “What to provide/What to expect”) created in a standardized format that systematically describes the process by which a researcher seeks and receives the particular approval (view screen shots at http://www.mc.vanderbilt.edu/victr/pub/newspub/vcap.html). The generation of a V-CAP also automatically triggers an IRB-approved anonymous follow up survey to assess the user's experience with the software.
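A minimal sketch of how a tailored list of approvals could be derived from the interview answers follows. The rule table, the question keys, and the approval names are hypothetical examples chosen for illustration; the actual mapping used by V-CAP is not reproduced here.

```python
# Hypothetical rule table: (approval name, question key, triggering answer).
RULES = [
    ("IRB application", "human_subjects", True),
    ("Biosafety committee review", "biohazards", True),
    ("Core facility request", "core_facility", True),
]


def build_action_plan(answers, rules=RULES):
    """Return approvals whose triggering answer was given, in rule order."""
    plan = []
    for approval, key, trigger in rules:
        # Unanswered (skipped) questions never trigger an approval.
        if answers.get(key) == trigger and approval not in plan:
            plan.append(approval)
    return plan


plan = build_action_plan(
    {"human_subjects": True, "biohazards": False, "core_facility": True}
)
for item in plan:
    print("Required:", item)
```

Keeping the rules as data rather than as conditional statements means that a new approval can be added by appending a row, without touching the function that builds the plan.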

Informatics system design

The V-CAP system uses the PHP (version 5.2.6) and JavaScript scripting languages to present researcher end-users with project interview questions, customized help screens and project-specific recommendations required for regulatory approval. Programming for the researcher user interface was designed for maximal flexibility: all details required for question dialogue screens (e.g. question label, available question responses, custom help information, branching logic to determine next questions) and the answer-driven regulatory advice are stored in a data table within an institutional Oracle 10g database environment. This metadata-driven approach has proven very useful and allows relatively easy modifications by content experts within our Research Support Services group. V-CAP users enter the system through our StarBRITE researcher portal, where authentication is accomplished using institutional LDAP services. User activity across all individual projects is automatically logged, and selected elements are fed to dashboards for real-time program evaluation. For example, evaluation dashboards available to the Research Support Services and Office of Research Informatics evaluation group show project usage over time and frequency data for user-click events (useful for determining question applicability and clarity). The V-CAP program includes a mechanism to automatically request end-user feedback concerning the helpfulness and accuracy of the system, and these data are also made available to governance groups in the dashboard viewing area. The V-CAP system was quality tested before implementation, and automated output recommendations have been compared against manual regulatory expert review to ensure that they include all of the necessary approvals, and only those deemed necessary.
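The metadata-driven design can be sketched as follows, assuming a single table that holds each question's label, help text, and branching pointers. The sketch uses Python's built-in `sqlite3` module as a stand-in for the Oracle environment, and the table layout, column names, and rows are assumptions made for illustration, not the V-CAP schema.

```python
import sqlite3

# In-memory SQLite database standing in for the institutional Oracle database.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE question (
        question_id INTEGER PRIMARY KEY,
        label       TEXT NOT NULL,
        help_text   TEXT,
        next_if_yes INTEGER,  -- id of the next question after a "yes"
        next_if_no  INTEGER   -- id of the next question after a "no"
    )
""")
conn.executemany(
    "INSERT INTO question VALUES (?, ?, ?, ?, ?)",
    [
        (1, "Does the study involve human subjects?", "About IRB review", 2, 3),
        (2, "Will any participants be under 18?", "About minors", 3, 3),
        (3, "Will the study use a shared core facility?", "About cores", None, None),
    ],
)


def next_question(conn, question_id, answer_yes):
    """Look up the branching pointer for one answer; None ends the interview."""
    # The column name comes from this fixed pair, never from user input.
    column = "next_if_yes" if answer_yes else "next_if_no"
    row = conn.execute(
        f"SELECT {column} FROM question WHERE question_id = ?", (question_id,)
    ).fetchone()
    return row[0] if row else None


def walk(conn, answers, start=1):
    """Follow branching pointers and return the ids of the questions shown."""
    shown, current = [], start
    while current is not None:
        shown.append(current)
        current = next_question(conn, current, answers.get(current, False))
    return shown


path = walk(conn, {1: False, 3: True})
print(path)
```

Under this layout, a content expert could change the wording or the branching of a question by editing a row, with no change to the code that presents the interview.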

Assessment of system implementation and utilization

The V-CAP system was launched in October 2006 and was designed to be continually upgraded through results of evaluation efforts and as new regulations arise or institutional policies are created. A versioning concept was implemented in the database architecture that allows branching logic to change over time without affecting users' preexisting V-CAPs.
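One way such versioning could work is sketched below: each saved plan records the rule-set version in force when it was generated, so later rule changes do not alter it. The rule sets, the `SavedPlan` class, and the approval names are hypothetical; the paper does not describe the database design at this level of detail.

```python
from dataclasses import dataclass

# Hypothetical rule sets keyed by version; each maps a question key to an approval.
RULESETS = {
    1: {"human_subjects": "IRB application"},
    2: {"human_subjects": "IRB application",
        "core_facility": "Core facility request"},
}
CURRENT_VERSION = max(RULESETS)


@dataclass(frozen=True)
class SavedPlan:
    answers: tuple  # (question key, bool) pairs, frozen so the record cannot drift
    version: int    # rule-set version in force when the plan was generated


def create_plan(answers, version=CURRENT_VERSION):
    """Store the answers together with the rule-set version used."""
    return SavedPlan(tuple(sorted(answers.items())), version)


def approvals_for(plan):
    """Evaluate a plan against the rule set it was created under."""
    rules = RULESETS[plan.version]
    return [rules[key] for key, value in plan.answers if value and key in rules]


study = {"human_subjects": True, "core_facility": True}
old_plan = create_plan(study, version=1)  # generated before the rules changed
new_plan = create_plan(study)             # generated under the current rules
print(approvals_for(old_plan))
print(approvals_for(new_plan))
```

The same study answers yield different approval lists under the two versions, while the older plan keeps the list it was originally given.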

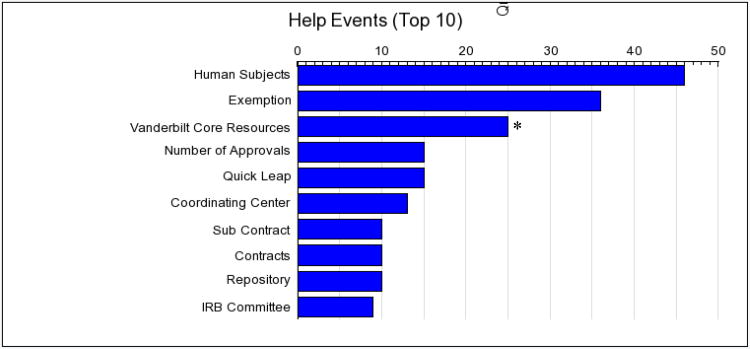

Approximately 550 V-CAP studies have been created (483 completed) by 250 unique users at Vanderbilt University (Figure 2). The average number of authorizations needed based on the output of the V-CAPs is 3.6 (range: 1 to 7). Of those that were started and finished in one sitting (∼414), the average time to answer questions was 3 minutes and 38 seconds. Overall, the help dialogue screens were accessed 244 times. Figure 3 shows how often end-users selected each context-sensitive help topic. The most frequently selected help topics related to research on human subjects, IRB exemption criteria, and the translational technologies and resources available through the institutional shared core facility system. The core facility help accompanies a series of questions that direct people to needed core resources, which also have approval processes, although not all of these are compliance driven.

Figure 3. Frequency of main help categories. N= 244.

*These help topics concern the 25+ shared core facilities and are specific to Vanderbilt.
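Help-topic frequencies of this kind can be tallied directly from logged click events. The sketch below assumes a simple log of (user, event type, help topic) records; the log format, event names, and sample rows are hypothetical and do not reproduce V-CAP data.

```python
from collections import Counter

# Hypothetical click log: (user id, event type, help topic or None).
events = [
    ("u1", "help_opened", "Human subjects research"),
    ("u2", "help_opened", "IRB exemption criteria"),
    ("u1", "answer_changed", None),
    ("u3", "help_opened", "Human subjects research"),
]


def help_topic_frequencies(events):
    """Count how often each help topic was opened, ignoring other events."""
    return Counter(topic for _, kind, topic in events if kind == "help_opened")


freq = help_topic_frequencies(events)
top = freq.most_common(1)
print(top)
```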

After a V-CAP is generated, an electronic feedback survey is triggered automatically; the responses provide descriptive information about usage of the system. This automated survey was implemented recently. Of the 79 people who have used the current version of the V-CAP and received an automated feedback request email, 25 completed the survey, for an estimated response rate of 32%. Additional respondents answered a separate mass solicitation of feedback, giving a combined sample size of 34. Half of the respondents (17; 50%) said they learned something new from the process. Most (27; 79%) would use the tool again for future research projects, and most (30; 88%) found the interactive format easy to use. A majority (27; 79%) responded that the V-CAP was helpful in directing their approval process and that the questions were applicable to their research. A majority also found the standardized ‘What to Provide/What to Expect’ forms that accompany the V-CAP helpful.
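The percentages above follow from the reported counts, as the short check below shows. The counts are taken from the text; the helper name `pct` is ours, and whole-number rounding is assumed.

```python
def pct(count, total):
    """Percentage rounded to the nearest whole number."""
    return round(100 * count / total)


# Automated survey: 25 completed out of 79 requests sent.
response_rate = pct(25, 79)

# Combined sample of 34 respondents (automated survey plus mass solicitation).
sample = 34
results = {
    "learned something new": pct(17, sample),
    "would use again": pct(27, sample),
    "format easy to use": pct(30, sample),
}
print(response_rate, results)
```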

Summary of this work

The V-CAP system is just one solution to the administrative barriers related to conducting clinical and translational research, but one that has been received favorably within our institution. Our data suggest that the V-CAP application is an important tool supporting regulatory compliance, as it provides investigators and study personnel with a checklist of the regulatory requirements for approval to conduct research, theoretically reducing the chance that an approval might be overlooked. It also provides the researcher with information necessary to initiate the various approvals necessary for regulatory compliance simultaneously rather than sequentially.

Although the sample size is small and not representative of the entire clinical and translational research community, the number of respondents who reported they learned something new suggests that the tool serves not only a compliance function but also as an educational resource. To assess this impact, we recently implemented a function that asks users, before they enter study details in the V-CAP interface, how many approvals they believe are necessary. In a very small sample to date (n=11), the average number of approvals/authorizations believed to be needed before going through the V-CAP was 2.0, while the average number actually required based on responses to the interactive survey questions was 3.6. These data are very preliminary but will continue to be assessed.

By referring users to support staff very early in the approval process for questions and support regarding the regulations and requirements for approval, the V-CAP might help to reduce time spent on unnecessary approvals, although we did not measure that variable. Future development will include institutional support modules providing access to ancillary support, in real time, based on user needs.

Limitations

There are limitations to these findings. Because the V-CAP utilization and feedback data were collected using an evolving system rather than at a single point in time, some results may reflect a particular system modification or sequence of events. The generalizability of findings might be limited to comparable academic medical institutions, since some of the questions are associated with local policies and requirements specific to Vanderbilt as an academic medical center. However, most questions are based on national policies and regulations, and are likely relevant at other institutions with similar research portfolios. The fact that the applicability of a large number of complicated regulations across various institutional and governmental bodies can be determined from a small number of questions potentially has significant value for other institutions. Thus, we believe the overall approach and informatics system have broad scalability and applicability for implementation at other institutions in the support of clinical and translational research.

Things to consider if implementing a similar system

We have compiled a short list of recommendations for institutions wishing to develop a similar system. Our own system is continually evolving as we add features and functions, but the following recommendations represent design principles that have been important from the earlier days of development:

Design from the standpoint of making things as easy as possible for the research team.

Create a partnership of regulatory content experts and informatics technical experts.

Separate the content (questions, branching logic, resulting recommendations) from the presentation layer (visual user interface) used by the research team by storing content in a relational database.

Create easy methods for content experts to modify and test questions, branching logic and recommendations independently. This will free informatics staff to develop new features while enabling content experts uninhibited access to methods to continuously improve the project for researchers.

Build in metrics (real-time dashboards) for evaluation by program management experts. Monitoring all aspects of program usage by researcher end-users will enable continuous quality improvement and allow ready assessment of resource utilization during regular prioritization planning efforts.

Create easy methods for researcher end-users to ask questions and suggest improvements, both in the interview process and during feedback surveys.

Supplementary Material

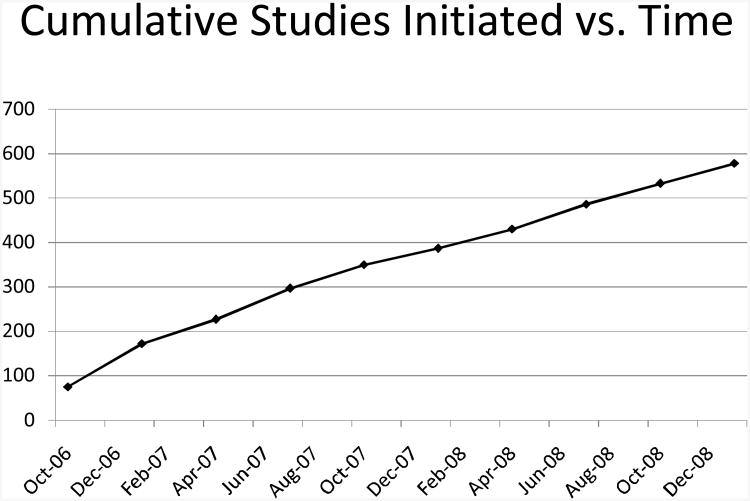

Figure 2.

Cumulative studies to date, which illustrates continued usage.

Acknowledgments

This work was supported in part by Vanderbilt CTSA grant 1 UL1 RR024975 from NCRR/NIH.

References

- 1. Parvizi J, Tarity TD, Conner K, Smith JB. Institutional review board approval: why it matters. J Bone Joint Surg Am. 2007 Feb;89(2):418–26. doi: 10.2106/JBJS.F.00362.

- 2. Zerhouni EA. Translational and clinical science--time for a new vision. N Engl J Med. 2005 Oct 13;353(15):1621–3. doi: 10.1056/NEJMsb053723.

- 3. Arbit HM, Paller MS. A program to provide regulatory support for investigator-initiated clinical research. Acad Med. 2006 Feb;81(2):146. doi: 10.1097/00001888-200602000-00007.

- 4. de Melo-Martín I, Palmer LI, Fins JJ. Viewpoint: developing a research ethics consultation service to foster responsive and responsible clinical research. Acad Med. 2007 Sep;82(9):900–4. doi: 10.1097/ACM.0b013e318132f0ee.

- 5. DiLaura R, Turisco F, McGrew C, Reel S, Glaser J, Crowley WF Jr. Use of informatics and information technologies in the clinical research enterprise within US academic medical centers: progress and challenges from 2005 to 2007. J Investig Med. 2008 Jun;56(5):770–9. doi: 10.2310/JIM.0b013e3175d7b4.
