Abstract

Recent work has given rise to the view that reward-based decision making is governed by two key controllers: a habit system, which stores stimulus-response associations shaped by past reward, and a goal-oriented system that selects actions based on their anticipated outcomes. The current literature provides a rich body of computational theory addressing habit formation, centering on temporal-difference learning mechanisms. Less progress has been made toward formalizing the processes involved in goal-directed decision making. We draw on recent work in cognitive neuroscience, animal conditioning, cognitive and developmental psychology and machine learning, to outline a new theory of goal-directed decision making. Our basic proposal is that the brain, within an identifiable network of cortical and subcortical structures, implements a probabilistic generative model of reward, and that goal-directed decision making is effected through Bayesian inversion of this model. We present a set of simulations implementing the account, which address benchmark behavioral and neuroscientific findings, and which give rise to a set of testable predictions. We also discuss the relationship between the proposed framework and other models of decision making, including recent models of perceptual choice, to which our theory bears a direct connection.

Since the earliest days of both psychology and neuroscience, investigators interested in decision making and the control of behavior have recognized a fundamental distinction between habitual action and goal-directed or purposive action. Although this opposition has obvious roots in commonsense notions from folk psychology, its first rigorous expression emerged in a classic debate in the behaviorist era. On one side of this debate, Hull (1943), Spence (1956) and others characterized action selection as driven primarily by immediate associations from internal and environmental states to responses. On the other, Tolman (1932), McDougall (1923) and others portrayed action as arising from a process of prospective planning, involving the anticipation, evaluation and comparison of action outcomes. Over time, this early view of habit and goal-directedness as mutually exclusive accounts of behavior has given way to a more inclusive multiple-systems account, under which habitual and goal-directed control coexist as complementary mechanisms for action selection (Daw, Niv, & Dayan, 2005; Dayan, 2009; Dickinson, 1985; Dickinson & Balleine, 1993; Doya, 1999; Glascher, Daw, Dayan, & O'Doherty, 2010; Platt, et al., 2008; Rangel, Camerer, & Montague, 2008; Rangel & Hare, 2010; Samejima & Doya, 2007). This more recent perspective licenses the study of each form of action control in its own right, and sizeable literatures have developed concerning both habitual stimulus-response based action selection and planning-based control (see, e.g., Bargh, Green, & Fitzsimons, 2008; Bekkering, Wohlschlager, & Gattis, 2000; Gergely & Csibra, 2003; Wood & Neal, 2007; Yin & Knowlton, 2006).

Despite exciting progress in both arenas, however, a nagging imbalance has gradually arisen: Over the past decade, research on habitual, stimulus-response behavior has crystallized around an increasingly explicit set of computational ideas, originating from the field of reinforcement learning (Sutton & Barto, 1998). These ideas have not only provided a context for interpreting and predicting patterns of behavior (Barto & Sutton, 1981; Sutton & Barto, 1990; Wickens, Kotter, & Houk, 1995); they have also enabled new and detailed insights into the functional contributions of specific brain structures, including the striatum and the midbrain dopaminergic system (Barto, 1995; Houk, Adams, & Barto, 1995; Joel, Niv, & Ruppin, 2002; Montague, Dayan, & Sejnowski, 1996; Ribas-Fernandes, et al., 2011; Schultz, Dayan, & Montague, 1997). In contrast, research on goal-directed behavior, for all its sophistication, has not developed a similarly mature computational core.

In the present work, we contribute toward closing this gap in psychological and neuroscientific theory, by proposing a neuro-computational account of goal-directed decision making.

Goal-Directed Decision Making: Definition and Manifestations

It is important, from the outset, to be precise about what the expression ‘goal-directed decision making’ is intended to denote. As in the animal conditioning literature, we use the term to describe decision making based directly on predictions concerning action outcomes and their attendant incentive values. As implied by this definition, goal-directed decision making requires the agent to have access to two distinct forms of knowledge. First, it requires access to stored information about action-outcome contingencies, a body of knowledge that Tolman (1932, 1948) famously referred to as a “cognitive map.” Second, as Tolman (1932, 1949) also observed, in order for preferences to emerge over prospective outcomes, action-outcome knowledge must be integrated with incentive knowledge, knowledge of the reward values associated with individual world states. Integration of these two forms of knowledge allows the selection of actions judged most likely to bring about preferred outcomes (Balleine & Dickinson, 1998b).

Working from this conception of goal-directed decision making, animal conditioning research has generated a number of experimental paradigms that operationalize the construct, making it possible to diagnose goal-directedness in observed behavior. One particularly important experimental manipulation is known as outcome revaluation (Adams & Dickinson, 1981; Balleine, 2005; Balleine & Dickinson, 1998c; Colwill & Rescorla, 1985b; Klossek, Russell, & Dickinson, 2008). Here, an animal first learns to perform actions that yield specific rewards, for example learning to pull a chain that yields one kind of food and to press a lever that yields another. The appeal or reward value of one of the outcomes is then altered, for example by allowing the animal to eat its fill of a particular food (the specific satiety procedure; Balleine & Dickinson, 1998d; Colwill & Rescorla, 1985a), by pairing that food with an aversive event such as toxin-induced illness (conditioned aversion; Adams, 1982; Adams & Dickinson, 1981; Colwill & Rescorla, 1985a; Colwill & Rescorla, 1988), or by inducing a change in motivational state (Balleine, 1992; Balleine & Dickinson, 1994; Dickinson & Dawson, 1989). Under appropriate circumstances, this intervention results in a rapid shift in behavior either away from or toward the actions associated with the relevant outcome. Such a shift is interpreted as reflecting goal-directed behavior because it implies an integration of action-outcome knowledge with representations of outcome reward value.

Another key experimental manipulation involves breaking the causal contingency between a specific action and outcome. Here, typically, the animal first learns to associate delivery of a certain food with a particular action, but later begins to receive the food independently of the action. The upshot of this ‘contingency degradation’ is that the animal less frequently produces the action in question (Colwill & Rescorla, 1986; Dickinson & Mulatero, 1989; Williams, 1989). Such behavior provides evidence that actions are being selected based on (appropriately updated) internal representations of action-outcome contingencies, thus meeting the criteria for goal-directedness.

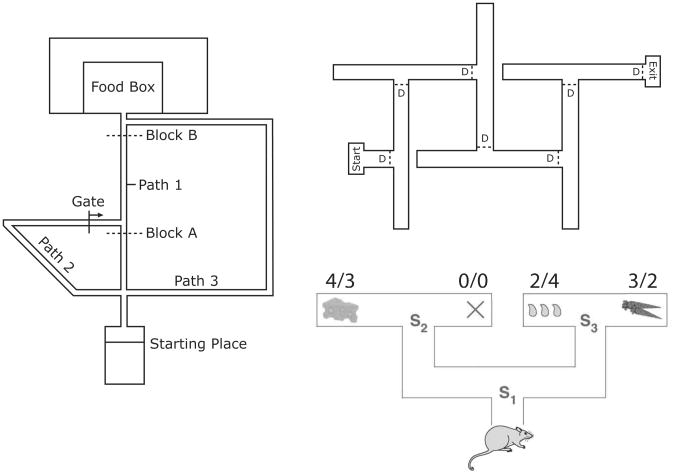

The same definition for goal-directedness extends to decisions involving sequences of action (Daw, Gershman, Seymour, Dayan, & Dolan, 2011; Ostlund, Winterbauer, & Balleine, 2009; Simon & Daw, 2011). An illustrative example, introduced by Niv, Joel and Dayan (2006), involves a rat navigating through a two-step T-maze, as shown in Figure 1 (lower right). The animal in this scenario must make a sequence of two left-right decisions, arriving by these at a terminus containing an item with a particular incentive value. A goal-directed decision at S1 would require retrieval of a sequence of action-outcome associations — linking a left turn at S1 with arrival at S2, and a left turn at S2 with cheese — as well as access to stored information about the incentive value of the available outcomes. Building on this simple example, Niv, Joel and Dayan (2006) provided an illustration of how revaluation plays out in the multi-step decision context. They considered a scenario in which fluid deprivation is used to make the rat thirsty, inducing a change in the reward values associated with the four outcomes (see Figure 1). This change in the animal's internal representations of incentive value, when integrated into the prospective operations involved in goal-directed decision making, results in a different action at S1.

Figure 1.

Left: Maze used to demonstrate detour behavior, redrawn from Tolman and Honzik (1930, page 223). Upper right: Maze used to demonstrate latent learning, redrawn from Blodgett (1929, page 117). D = door. Lower right: T-maze scenario from Niv, Joel and Dayan (2006). Outcome values relate to hungry (left) and thirsty (right) states.

While this T-maze example represents only a thought experiment, some of the issues it addresses were engaged in recent experiments by Ostlund, Winterbauer and Balleine (2009). Here, rats were trained to execute two-step sequences in order to obtain food rewards. The rats had access to two levers. When a rat pressed the right lever and then the left, a bit of sucrose was delivered. When the levers were pressed in the opposite order, the rat received polycose. The sequences left-left and right-right, meanwhile, yielded no reward. Following training, one of the food rewards was devalued through satiety. When presented with the two levers in this setting, rats tended to execute the sequence yielding the non-devalued food more frequently than the opposite sequence. Ostlund and colleagues (2009) also showed analogous changes in sequence production following contingency degradation.

Two further standard operationalizations of goal-directed decision making derive from the classic research championed by Tolman. In the latent learning paradigm (Blodgett, 1929), rats run a compound T-maze as shown in Figure 1 (upper right), until they reach the box labeled ‘exit.’ After several sessions, a food reward is placed in the exit box. After the animals discover this change, there is an immediate reduction in the frequency of entrances into blind alleys. Animals suddenly take a much more direct path to the exit box than they had previously. In detour behavior, as described by Tolman and Honzik (1930), rats run a maze configured as in Figure 1 (left). When the most direct route (Path 1) is blocked by a barrier at location A, the animals tend to opt for the shortest of the remaining paths (Path 2). However, when the block is placed at location B, animals take the third path. In each of these cases, a change in action-outcome contingencies triggers immediate adjustments in behavior, providing a hallmark of goal-directed decision making.

Toward a Computational Account

Our interest in the present work is in understanding the computations and mechanisms that underlie goal-directed decision making, as it manifests in behaviors like the ones just described. Given the recent success of temporal-difference models in research on habit formation, one approach might be to draw from the same well, surveying the wide range of algorithms that have developed in artificial intelligence, machine learning, and operations research for solving multi-step decision problems based on pre-established contingency and incentive knowledge (see Bertsekas & Tsitsiklis, 1996; Puterman, 2005; Russell & Norvig, 2002; Sutton & Barto, 1998). We do believe that it is important to consider such procedures for their potential biological relevance,1 and later we will circle back in order to do so. However, the theory we will present draws its inspiration from a rather different source, looking to previous research in neuroscience, psychology and computer science that has invoked the notion of a probabilistic generative model. In order to set the scene for what follows, it is worth briefly unpacking this construct and highlighting previous work in which it has been applied.

Generative models in psychology and neuroscience

Over recent years, a broad formal perspective has taken root within both cognitive and neural research, in which probabilistic inference plays a central organizing role. A recurring motif, across numerous applications of this perspective, is that of inverse inference within a generative model. The basic idea emerged first in research on visual perception. Early on, Helmholtz (1860/1962) characterized vision as a process of unconscious inference, whose function is to diagnose the environmental conditions responsible for generating the retinal image. In recent years, this perspective has found expression in the idea that the visual system embodies a generative model of retinal images, that is, an internal model of how the ambient scene (objects, textures, lighting, and so forth) gives rise to patterns of retinal stimulation. More specifically, this generative model encodes a conditional probability distribution, p(image | scene). The inference of which Helmholtz spoke is made by inverting this generative model using Bayes' rule, in order to compute the posterior probability p(scene | image) (Dayan, Hinton, & Zemel, 1995; Kersten, Mamassian, & Yuille, 2004; Knill & Richards, 1996; Yuille & Kersten, 2006).

The influence of this generative perspective has gradually spread from perception research to other fields. In particular, it has played an important role in recent work on motor control. Here, the generative (or forward) model maps from motor commands to their postural and environmental results, and this model is inverted in order to establish a mapping from desired effects to motor commands (Carpenter & Williams, 1981; Jordan & Rumelhart, 1992; Kilner, Friston, & Frith, 2007; Kording & Wolpert, 2006; Rao, Shon, & Meltzoff, 2007; Wolpert, Ghahramani, & Jordan, 1995; Wolpert, Doya, & Kawato, 2003). Beyond motor control and perception, theories centering on probabilistic inference over generative models have figured in numerous other realms, including language (Chater & Manning, 2006; Xu & Tenenbaum, 2007), memory (Hemmer & Steyvers, 2009), conceptual knowledge (Chater & Oaksford, 2008; Griffiths, Steyvers, & Tenenbaum, 2007), perceptual categorization (Yu, Dayan, & Cohen, 2009), and — significantly — causal learning and the learning of action-outcome contingencies (Blaisdell, Sawa, Leising, & Waldmann, 2006; Glymour, 2001; Gopnik, et al., 2004; Gopnik & Schulz, 2007; Green, Benson, Kersten, & Schrater, 2010; Sloman, 2005; Tenenbaum, Griffiths, & Niyogi, 2007).

One exciting aspect of the generative approach in psychology is that its terms can be transposed, in very much the same mathematical form, into accounts of the underlying neural computations. The notion of inverse inference within a generative model has played a central role in numerous recent theories of brain function, both in visual neuroscience (Ballard, Hinton, & Sejnowski, 1983; Barlow, 1969; Lee & Mumford, 2003; Rao & Ballard, 1999) and elsewhere (Dayan, et al., 1995; Friston, 2005; Knill & Pouget, 2004; Mumford, 1992, 1994).

Goal-directed decision making as inverse inference

Our central proposal in the present work is that goal-directed decision making, like so many other forms of human and animal information processing, can be fruitfully understood in terms of probabilistic inference. In particular, we will propose that goal-directed decisions arise out of an internal generative model, which captures how situations, plans, actions, and outcomes interact to generate reward. Decision making, as we will characterize it, involves inverse inference within this generative model: The decision process takes the occurrence of reward as a premise, and leverages the generative model to determine which course of action best explains the observation of reward.

Although this specific idea is new to psychology and neuroscience, it has a number of direct and indirect precedents in machine learning, as we shall later detail (Attias, 2003; Botvinick & An, 2009; Cooper, 1988; Dayan & Hinton, 1997; Hoffman, de Freitas, Doucet, & Peters, 2009; Shachter & Peot, 1992; Toussaint & Storkey, 2006; Verma & Rao, 2006b). In what follows, we will draw many of our raw materials from such work, but will also reshape them in order to yield an account that makes maximal contact with existing psychological and neuroscientific theory.

Overview

The ensuing presentation is divided into three main sections, corresponding to the three levels of theoretical analysis famously proposed by David Marr (1982, see also Jones, 2011). We begin in the next section by considering the computational problem underlying goal-directed control. The succeeding section moves on to consider the algorithm or procedure involved in solving that computational problem. Finally, in a third section, we consider the level of neural implementation. Following these three core sections of the paper, we discuss the relationship between the present ideas and earlier work, and consider directions for further development.

Reframing the Computational Problem

In building a formal theory, we take as our point of departure an insight recently expressed by Daw, Niv and Dayan (2005, see also Dayan & Niv, 2008), which is that goal-directed decision making can be viewed as a version of model-based reinforcement learning. The ‘model’ referred to in this term comes in two parts: a state-transition function, which maps from situation-action pairs to outcomes, and a reward function, which attaches a reward value to each world state. Model-based reinforcement learning refers to the project of discovering an optimal (reward-maximizing) policy, or mapping from states to actions, given this two-part model (Sutton & Barto, 1998).

To state this more formally: Model-based reinforcement learning begins with a set of givens, which include a set of states S; a set of actions A; a state-transition function T(s∈S, a∈A, s′∈S), which specifies the probability of arriving in state s′ after having performed action a in state s; and a reward function R(s), which assigns a scalar reward value to each state. The computational problem is then to choose a policy π(s,a,t) = p(a|s,t) that maximizes expected cumulative reward over steps of action t up to some planning horizon T:

$$\pi^{*} = \arg\max_{\pi} \; \mathbb{E}\left[\sum_{t=1}^{T} R(s_t) \,\middle|\, \pi\right] \tag{1}$$
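As a rough illustration of the quantity being maximized, the following Python sketch evaluates the expected cumulative reward of a given policy by forward recursion over a finite horizon. The maze layout, the outcome names other than cheese, the reward numbers, and the helper name `expected_return` are all assumptions made for this example. The policy is indexed by state only, which suffices here because each state in this small maze occurs at a single time step.

```python
from typing import Dict, Tuple

State = str
Action = str
Policy = Dict[State, Dict[Action, float]]  # policy[s][a] = p(a | s)

# Hypothetical two-step T-maze: T[(s, a)] maps each successor to its probability.
T: Dict[Tuple[State, Action], Dict[State, float]] = {
    ("S1", "L"): {"S2": 1.0},
    ("S1", "R"): {"S3": 1.0},
    ("S2", "L"): {"cheese": 1.0},
    ("S2", "R"): {"empty": 1.0},
    ("S3", "L"): {"water": 1.0},
    ("S3", "R"): {"carrots": 1.0},
}

# Illustrative reward function R(s); these numbers are assumptions.
R: Dict[State, float] = {
    "S1": 0.0, "S2": 0.0, "S3": 0.0,
    "cheese": 4.0, "empty": 0.0, "water": 2.0, "carrots": 3.0,
}


def expected_return(s: State, policy: Policy, horizon: int) -> float:
    """Expected sum of R over the next `horizon` successor states."""
    if horizon == 0 or s not in policy:  # out of steps, or terminal state
        return 0.0
    total = 0.0
    for a, p_a in policy[s].items():
        for s_next, p_next in T[(s, a)].items():
            future = expected_return(s_next, policy, horizon - 1)
            total += p_a * p_next * (R[s_next] + future)
    return total


always_left: Policy = {s: {"L": 1.0} for s in ("S1", "S2", "S3")}
print(expected_return("S1", always_left, horizon=2))
```

Solving the problem in this framing would amount to searching over policies for the one with the largest value of `expected_return`.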

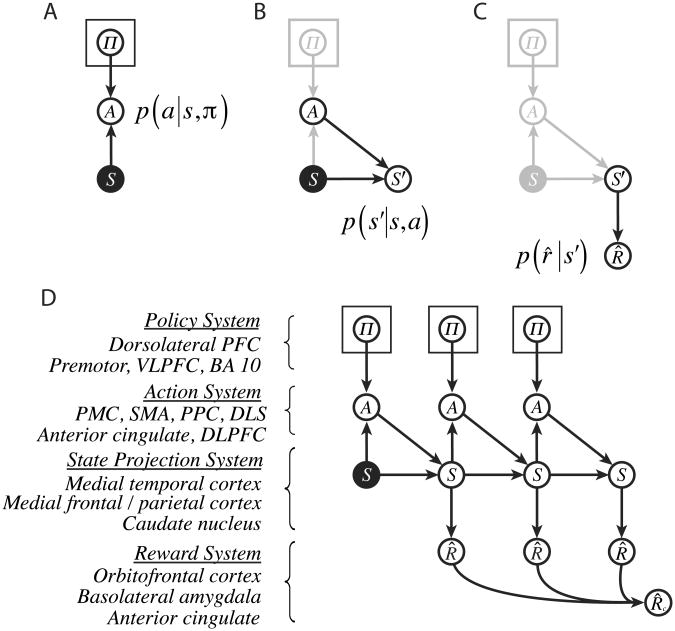

Our objective is to reframe this problem in terms of probabilistic inference. As a first step in that direction, we can represent the problem's ingredients, as well as their interrelations, in the form of a probabilistic graphical model (see Bishop, 2006; Koller & Friedman, 2009; Pearl, 1988). Figure 2A begins construction of this model with an initial set of three nodes. The node S represents a variable indicating the decision maker's current situation or state.2 This node is shaded to indicate that its value is known or observed by the decision-maker; the initial state is a ‘given’ in the action-selection problem. The node A represents a variable whose values correspond to available actions, and Π represents a set of state-specific policy variables, with values corresponding to state-action pairs. The two arrows converging on A indicate that the current action a depends on both the current state s, and the policy π for that state. More specifically, node A is associated with the conditional probability distribution p(A=a|S=s, Π=π), or for brevity p(a|s,π).3

Figure 2.

Elements of the computational account. Rectangular plates surrounding policy nodes indicate the inclusion of one such node per state (see Appendices). PFC, prefrontal cortex; VLPFC, ventrolateral prefrontal cortex; PMC, premotor cortex; SMA, supplementary motor area; PPC, posterior parietal cortex; DLPFC, dorsolateral prefrontal cortex; DLS, dorsolateral striatum, BA, Brodmann area.

Figure 2B expands the model to incorporate a representation of the transition function. As above, the latter is defined as a probability distribution p(s′|s,a), where s′ is the value of a variable representing action outcomes or successor states. This variable is represented by node S′ in the figure, with incoming arrows indicating its joint dependence on S and A.

Figure 2C completes the structure by incorporating a representation of the reward function. Here, we add a node R̂ representing reward value, with an afferent arrow to indicate that the value r̂ depends on the outcome state s′. (The reason for the change in notation from R to R̂ will be disclosed in a moment.)

The architecture developed so far addresses only a single step of action. However, it is readily extended to sequences. As shown in Figure 2D, this extension is accomplished by duplicating part of the existing structure, providing a series of state, action, policy and reward nodes, one for each step of the action sequence. In extending the architecture in this way, we also introduce one final new element: a variable representing the cumulative reward accrued over an action sequence (R̂c).

A probabilistic representation of reward

To this point, our model has been built from materials directly provided by traditional reinforcement learning. At the present juncture, however, we make our first move toward reframing the goal-directed decision making problem by choosing a special form for the representation of reward. In reinforcement learning, as well as in many quarters of economics and psychology, reward magnitude is generally formalized as a scalar value. In view of this, the most intuitive approach in fleshing out our graphical model might be to treat R̂ as a continuous variable, whose value directly corresponds to reward magnitude or utility (see, e.g., Attias, 2003). However, we will find it fruitful to represent reward in a different way. Specifically, we cast R̂ as a binary variable, with discrete values of one and zero. Reward magnitude is then encoded as the probability p(r̂=1), for which we will use the shorthand p(r̂). Under this encoding, a state s′ associated with large positive reward would give p(r̂|s′) close to one. If the state were associated with large negative reward (punishment), p(r̂|s′) would fall near zero. In the sequential setting (see Figure 2D), the cumulative reward variable R̂c will also be treated as binary, with

$$p(\hat{r}_c = 1 \mid \hat{r}_1, \ldots, \hat{r}_T) = \frac{1}{T} \sum_{t=1}^{T} \hat{r}_t \tag{2}$$

where r̂t is the R̂ node associated with step t of the plan (Tatman & Shachter, 1990).
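The binary encoding can be made concrete with a small Python sketch. It assumes, purely for illustration, that scalar rewards lie in a known bounded range and are rescaled affinely onto [0, 1], and that the cumulative reward probability is the average of the per-step probabilities. The function names `reward_to_prob` and `cumulative_reward_prob` and the example range are hypothetical.

```python
from typing import Sequence


def reward_to_prob(r: float, r_min: float, r_max: float) -> float:
    """Affinely rescale a scalar reward in [r_min, r_max] to p(r_hat = 1)."""
    if r_max <= r_min:
        raise ValueError("r_max must exceed r_min")
    if not r_min <= r <= r_max:
        raise ValueError("reward lies outside the assumed range")
    return (r - r_min) / (r_max - r_min)


def cumulative_reward_prob(step_probs: Sequence[float]) -> float:
    """p(r_hat_c = 1), taken here as the mean of per-step probabilities."""
    if not step_probs:
        raise ValueError("need at least one step")
    return sum(step_probs) / len(step_probs)


# Assumed reward range [-4, 4]: punishment maps near 0, large reward near 1.
step_probs = [reward_to_prob(r, -4.0, 4.0) for r in (0.0, 4.0)]
print(step_probs, cumulative_reward_prob(step_probs))
```

Because the rescaling is monotonic, the ordering of outcomes by scalar reward is preserved in the ordering of their probabilities.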

To prevent misapprehension, it is worth emphasizing that what is represented using this approach is reward magnitude, not reward probability. Although the value p(r̂) is a probability, it is being used as the vehicle for representing the size of a deterministic reward. At first blush, this approach to representing reward may seem rather perverse. However, as we shall later discuss in detail, it has precedents in economics, psychology, and neuroscience, as well as in decision theory and machine learning (Shachter & Peot, 1992; Toussaint & Storkey, 2006). For example, in the psychology literature, Stewart, Chater and Brown (2006) have proposed that the utility of a choice item is quantified as the probability that this item would be judged preferable to a randomly selected comparison item (see also Kornienko, 2010). And in neuroscience, data suggest that utility is encoded in part through the firing rates of neurons in orbitofrontal cortex, i.e., the probability that these neurons will fire within a small time window (see, e.g., Padoa-Schioppa & Assad, 2006). In both of these cases, as in our model, utility is encoded through the probability of a binary event.

By adopting this binary format for reward representation, we bring about a subtle but important change in how the goal-directed decision problem is framed. In the conventional case, where reward is represented as an ordinary real number (which we shall continue to denote by r), the problem is to find the policy that maximizes expected reward magnitude (see Eq. 1). In the scenario we are considering, the problem is instead to maximize the probability of a discrete event, p(r̂=1|π). Goal-directed decision making thus assumes the form of a likelihood maximization problem. This seemingly incidental point has far-reaching ramifications, which we shall unpack in what follows.

A Generative Model for Reward

As we have noted, the graphical model in Figure 2 can be seen as simply one way of representing the standard ingredients of a model-based reinforcement learning problem. However, another way of viewing it is as a generative model for reward. That is, the model represents the interrelated factors — initial states, policies, actions and outcomes — that together give rise to reward events.

To illustrate, we can ‘query’ the variable R̂, asking for the marginal probability p(r̂|s). In the one-step model, this probability depends on the remaining variables in the following way:

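$$p(\hat{r} \mid s) = \sum_{s', a, \pi} p(\hat{r} \mid s') \, p(s' \mid s, a) \, p(a \mid s, \pi) \, p(\pi) \tag{3}$$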

Note that the first factor in this sum is simply the reward function. The second term is the transition function, and the third expresses the effect of policies on action selection. The final term represents the decision-maker's prior bias toward specific policies, expressed as a probability distribution. Each of these factors corresponds to the conditional probability distribution (CPD) at a specific node in the graph.
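The four factors just described can be combined numerically. The following Python sketch computes the marginal reward probability for a one-step problem with the initial state held fixed. All table entries, the outcome and policy labels, and the function name `marginal_reward_prob` are assumptions chosen for illustration.

```python
from itertools import product

ACTIONS = ["L", "R"]
OUTCOMES = ["food", "empty"]
POLICIES = ["pi_L", "pi_R"]

# Hypothetical conditional probability tables, one per node in the graph.
P_REWARD = {"food": 0.9, "empty": 0.1}                  # p(r_hat=1 | s')
P_OUTCOME = {("L", "food"): 0.8, ("L", "empty"): 0.2,
             ("R", "food"): 0.3, ("R", "empty"): 0.7}   # p(s' | s, a)
P_ACTION = {("pi_L", "L"): 1.0, ("pi_L", "R"): 0.0,
            ("pi_R", "L"): 0.0, ("pi_R", "R"): 1.0}     # p(a | s, pi)
P_POLICY = {"pi_L": 0.5, "pi_R": 0.5}                   # prior p(pi)


def marginal_reward_prob() -> float:
    """p(r_hat=1 | s): sum the product of the four factors over s', a, pi."""
    return sum(
        P_REWARD[s2] * P_OUTCOME[(a, s2)] * P_ACTION[(pi, a)] * P_POLICY[pi]
        for s2, a, pi in product(OUTCOMES, ACTIONS, POLICIES)
    )


print(marginal_reward_prob())
```

With these assumed numbers, the two policies contribute 0.74 and 0.34 respectively, each weighted by a prior of 0.5.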

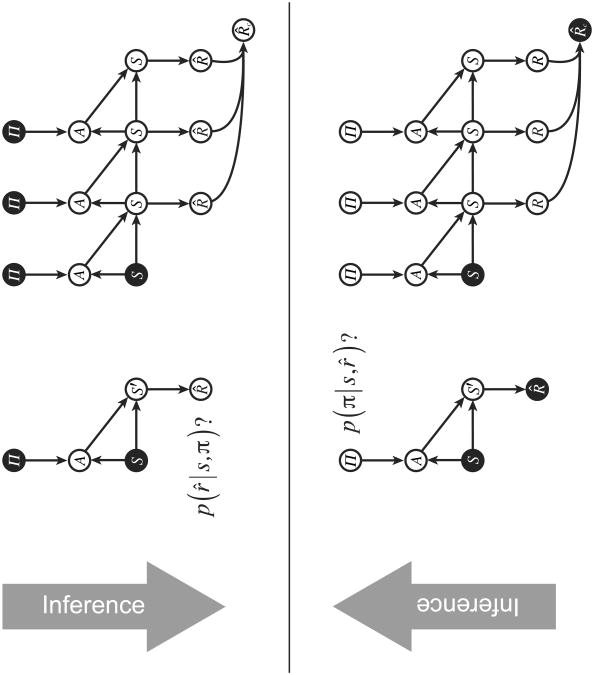

An important aspect of probabilistic graphical models is that they provide a substrate for conditional inference. Given an observed or known value for one or more variables, one can query the conditional distribution for any other set of variables (see Bishop, 2006; Koller & Friedman, 2009). Indeed, Equation 3 already provides an illustration of this, since here the value of the initial state s was an observed quantity. The same approach could be used to obtain the probability p(r̂ = 1) given a commitment to a specific policy. This is obtained by treating Π as an observed variable (Π = π), as illustrated in Figure 3 (top), and computing

Figure 3.

Top: Conditioning on a policy. Bottom: Conditioning on reward. Filled nodes indicate variables with stipulated values.

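$$p(\hat{r} \mid s, \pi) = \sum_{s', a} p(\hat{r} \mid s') \, p(s' \mid s, a) \, p(a \mid s, \pi) \tag{4}$$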

Given the definition of r̂, the conditional probability computed here corresponds to the expected reward under the designated policy π. As indicated in Figure 3 (top), in the multi-step setting, the expected cumulative reward for a specific set of policy choices can be inferred by computing the conditional probability of r̂c.

Note that conditioning on a policy and querying the reward variable in this way offers one potential method for solving the computational problem we have laid out. The decision maker could iterate through all available policies, keeping a record of the expected reward p(r̂|π, s) for each, and then choose the policy that maximizes that quantity. As discussed later, we believe this procedure may be relevant to decision making in the biological case, in some instances. However, there is also another, more interesting route to solving the computational problem.

Abductive inference

As discussed in the Introduction, the notion of a generative model has been applied extensively in work on vision. There, the proposal has been that perception seeks an explanation for retinal inputs, based on a generative model capturing the way that environmental situations give rise to those inputs. Note that the observed data in this case (i.e., the pattern of retinal stimulation) is at the ‘output’ end of the generative model. The model is used to reason not from causes to effects, but is rather inverted to reason abductively, that is, from effects to causes.

The same logic can be applied within our generative model of reward. Rather than conditioning on policies and computing rewards, it is possible to invert the model in order to reason from rewards to policies (Figure 3, bottom). Specifically, leveraging our binary representation of reward, we can condition on r̂=1 and apply Bayes' law to compute:

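$$p(\pi \mid s, \hat{r}) \propto \sum_{s', a} p(\hat{r} \mid s') \, p(s' \mid s, a) \, p(a \mid s, \pi) \, p(\pi) \tag{5}$$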

As illustrated in Figure 3, the same approach can be applied in the multi-step case by conditioning on r̂c=1.

Notice that if there is no initial bias toward any specific policy (the priors p(π) are uniform across all values of π), then the right-hand side of Equation 5 is identical to that of Equation 4, i.e.,

$$p(\pi \mid s, \hat{r}) \propto p(\hat{r} \mid s, \pi) \tag{6}$$

This suggests an alternative way of framing the computational problem involved in goal-directed decision making. According to our earlier formulation, the objective was to find a policy to maximize p(r̂|π). It is now evident that an equally valid objective is to find a policy to maximize p(π|r̂). Conditioning on r̂=1, the task is to identify the policy that best explains that ‘observation.’ In what ensues, we will refer to this procedure as policy abduction, considering that it involves reasoning from effects (reward) to their explanations or causes (policies for action).
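A minimal Python sketch of policy abduction in a one-step setting follows. It conditions on r̂=1 and applies Bayes' rule to obtain a posterior over policies. The probability tables and the names `likelihood` and `policy_posterior` are hypothetical, and the initial state is treated as fixed.

```python
from typing import Dict

# Hypothetical one-step model; the initial state s is held fixed throughout.
P_REWARD = {"food": 0.9, "empty": 0.1}                  # p(r_hat=1 | s')
P_OUTCOME = {"L": {"food": 0.8, "empty": 0.2},
             "R": {"food": 0.3, "empty": 0.7}}          # p(s' | s, a)
P_ACTION = {"pi_L": {"L": 1.0, "R": 0.0},
            "pi_R": {"L": 0.0, "R": 1.0}}               # p(a | s, pi)


def likelihood(pi: str) -> float:
    """p(r_hat=1 | s, pi): marginalize over actions and outcomes."""
    return sum(
        p_a * p_s2 * P_REWARD[s2]
        for a, p_a in P_ACTION[pi].items()
        for s2, p_s2 in P_OUTCOME[a].items()
    )


def policy_posterior(prior: Dict[str, float]) -> Dict[str, float]:
    """p(pi | s, r_hat=1) by Bayes' rule, normalized over the policies."""
    joint = {pi: likelihood(pi) * p for pi, p in prior.items()}
    evidence = sum(joint.values())
    return {pi: value / evidence for pi, value in joint.items()}


uniform_prior = {"pi_L": 0.5, "pi_R": 0.5}
posterior = policy_posterior(uniform_prior)
print(max(posterior, key=posterior.get), max(P_ACTION, key=likelihood))
```

Under the uniform prior the posterior and the likelihood rank the policies identically, so both routes select the same policy. With a non-uniform prior the two rankings need not agree.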

It should be noted that our ability to make this important turn derives specifically from our having adopted a binary representation of reward, choosing to work with p(r̂=1|s′) rather than R(s). To see this, consider what happens if we attempt to condition on a scalar representation of reward. The most obvious approach here would be to replace the R̂ node in Figure 2 with a node R representing p(r|s′), a probability density function over the real numbers. One might then (naively) set up to find argmaxπ p(π|r). However, what specific value of r would one condition on here? If the range of R were bounded, one might be tempted to condition on its maximum: argmaxπ p(π|r=rmax). However, this will not answer. What if the outcome state s′ affording that maximum is not reachable, or not reachable with certainty, given the current situation, as will generally be the case? In the end, there is no tractable way of conditioning on a traditional scalar reward representation. The shift to a binary representation of reward is a critical step in reframing goal-directed decision making as abductive inference.

To recap, in this section we have moved through three interrelated ways of characterizing the computational problem involved in goal-directed decision making: (1) the conventional framing, which centers on the maximization of expected reward, (2) an alternative, maximum-likelihood view, and (3) a final transformation of the problem, which calls for inversion of a generative model of reward. In the next section, we retain a focus on the last of these problem formulations, turning to a consideration of the procedures by which the problem might be solved.

Algorithmic Framework

Given the preceding discussion, the appropriate procedure for goal-directed decision making may appear self-evident: In order to find argmaxπ p(r̂|s, π), condition on r̂=1 and evaluate argmaxπ p(π|s, r̂). It is true that this approach will yield the optimal policy under certain restricted circumstances. However, under others it would backfire. For one thing, the procedure requires that the decision-maker begin with no bias toward any specific policy, since as indicated by Equation 5, such prior biases enter into computing the posterior distribution p(π|s, r̂). Another more daunting problem arises in the multi-step setting. Here, taking argmaxπ p(π|s, r̂c) at each policy variable (see Figure 3, lower right) can lead to incorrect decisions. This is because, in the setting of sequential decision making, the optimal decision at any step depends on what actions are planned for later steps.

To illustrate this important point, consider the decision faced by the rat in the two-step T-maze discussed earlier and shown in Figure 1. The numbers at the top of that figure (ahead of each slash) indicate the reward values associated with items contained at the maze termini. Obviously, the optimal choice at the first decision point is to head left. However, this is only true if the animal's plan at the next juncture, S2, is to head left again. If the animal plans instead to head right if faced with decision point S2, then the best choice at S1 is actually to go right. The same is true if the animal has not yet made any decision about what to do at S2 or S3; if the animal is equally likely to head left or right at these points, then the best plan at S1 is to go right. Given this kind of interdependence, a procedure that makes independent decisions at each stage of the plan would yield unreliable results.
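The interdependence between steps can be checked with a short Python sketch. The terminal reward values used here (4, 0, 2, 3) are assumptions, chosen only to be consistent with the qualitative pattern described in the text rather than read from Figure 1. The names `value_of_first_action` and `best_first_action` are hypothetical.

```python
from typing import Dict

Plan = Dict[str, Dict[str, float]]  # plan[state][action] = p(action | state)

# Assumed terminal rewards, indexed by (second-step state, second action).
TERMINAL_REWARD = {
    ("S2", "L"): 4.0, ("S2", "R"): 0.0,
    ("S3", "L"): 2.0, ("S3", "R"): 3.0,
}
FIRST_STEP = {"L": "S2", "R": "S3"}  # where each first action at S1 leads


def value_of_first_action(a1: str, later_plan: Plan) -> float:
    """Expected reward of action a1 at S1, given the plan for the next step."""
    s = FIRST_STEP[a1]
    return sum(p * TERMINAL_REWARD[(s, a2)] for a2, p in later_plan[s].items())


def best_first_action(later_plan: Plan) -> str:
    """The first action with the highest expected reward under later_plan."""
    return max(FIRST_STEP, key=lambda a1: value_of_first_action(a1, later_plan))


left_at_s2: Plan = {"S2": {"L": 1.0}, "S3": {"R": 1.0}}
right_at_s2: Plan = {"S2": {"R": 1.0}, "S3": {"R": 1.0}}
undecided: Plan = {s: {"L": 0.5, "R": 0.5} for s in ("S2", "S3")}

for plan in (left_at_s2, right_at_s2, undecided):
    print(best_first_action(plan))
```

Under these assumed values, heading left at S1 is best only when the plan at S2 is to head left again. For the other two plans, heading right at S1 has the higher expected reward.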

Before considering how a biological decision-making algorithm might cope with these issues, let us introduce one further circumstance in which simple policy abduction might fail to yield a reward-maximizing response. This is suggested by so-called random utility models of economic decision making. In such models, the value associated with any particular outcome is not a fixed quantity: Each time the decision-maker retrieves a value for an outcome, the result is drawn from a probability distribution (see Gul & Pesendorfer, 2006; Manski, 1977). According to one standard version of this idea, the goal of decision making is to maximize expected reward given such ‘noisy’ readings of outcome value (Busemeyer, 1985; Busemeyer & Townsend, 1993; Glimcher, 2009; Platt, et al., 2008; Rustichini, 2008; Shadlen, 2008).

In order to incorporate random utility into our graphical-model framework, we can simply add a stochastic component to the CPD at R̂. Thus, rather than p(r̂|s′), we have p(r̂|s′, z), where Z is a random variable (see Figure 4). Although this changes the reward model available to the decision-maker, the decision problem — to maximize p(r̂|s, π), now equal to the expectation Ez[p(r̂|s, π, Z)] — remains unchanged. Note that in this setting, as in the others we have enumerated, policy abduction is not assured to deliver the policy with the highest expected return; even a policy that maximizes p(r̂|s, π, z) may not maximize Ez[p(r̂|s, π, Z)].

Figure 4.

Top: Evidence integration in the dot motion task, focusing on the hypothesis that the underlying stimulus motion is in the upward direction. Bottom left: The graph in the top panel can also be diagrammed as a dynamic Bayesian network, with a recurrent connection running from and to the variable X. Bottom right: An architecture for evidence integration, based on the graph from Figure 3.

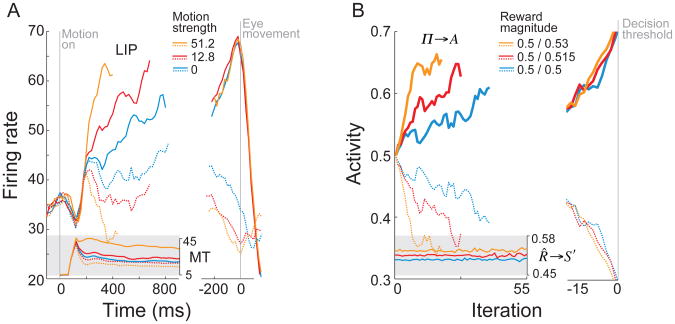

Notice that decision making under random utility, as we have just characterized it, bears a close resemblance to perceptual decision making problems involving ambiguous or noisy stimuli. A highly-studied example is the dot-motion task introduced by Newsome, Britten and Movshon (1989). Here, the subject is required to identify the predominant direction of motion in a dynamic display (Figure 4, top). Formally, the challenge is to decide between competing hypotheses (i.e., true directions of motion), given observations that provide information that is both incomplete and potentially equivocal: incomplete in the sense that p(x|y) < 1.0 for all available hypotheses x and any single observation y, and equivocal in the sense that for two observations y1 and y2 and hypotheses x1 (the true hypothesis) and x2 (false), it might occur that both p(x1 | y1) > p(x2 | y1) and p(x2 | y2) > p(x1 | y2).

In fact, this decision-making situation is isomorphic to our random utility scenario, where the single ‘observation’ r̂ = 1 provides information about candidate policies that is potentially both partial and equivocal. In both scenarios, it is hazardous to commit to an answer based on only a single observation. Given this parallel, in order to make progress in understanding goal-directed decision making, it may be fruitful to consider current models of perceptual decision making. As discussed next, these center on the theme of evidence integration.

Evidence integration

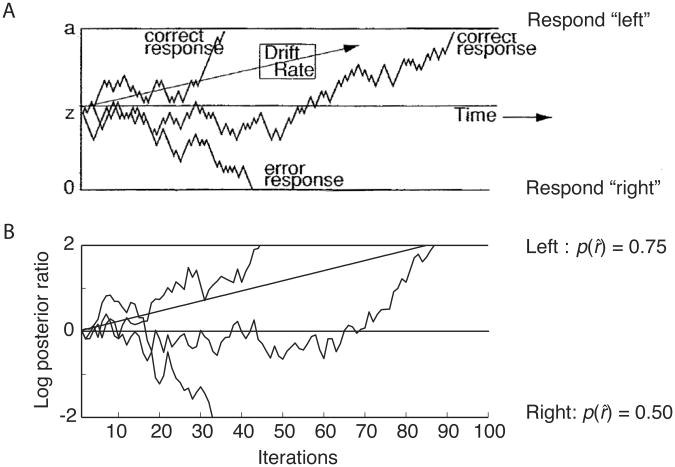

An abundance of research suggests that, in the case of perceptual decision making, human and animal decision-makers mitigate uncertainty by pooling across a series of observations. According to current evidence-integration models (see Bogacz, Brown, Moehlis, Holmes, & Cohen, 2006), in any interval during the decision process, having made the series of observations y and a new observation ynew, the decision-maker updates a representation of the posterior probabilities p(x|y) by combining them with the likelihoods p(ynew|x): p(x|y, ynew) ∝ p(ynew|x)p(x|y). In so-called random walk or drift-diffusion models of two-alternative forced choice decision (Figure 4, top), accumulated evidence is represented in the form of a log posterior ratio, to which is added a log-likelihood ratio representing the evidence from each new observation (see Beck & Pouget, 2007; Bogacz, et al., 2006; Gold & Shadlen, 2007; Rao, 2006; Ratcliff & McKoon, 2008). Given an unlimited number of observations, this procedure is guaranteed to converge to the correct hypothesis. Moreover, when a response threshold is introduced (see Figure 5), the procedure becomes equivalent to the so-called sequential probability ratio test (Wald & Wolfowitz, 1948), which guarantees the minimum attainable reaction time for any given error rate.
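As a minimal sketch of the accumulation scheme just described, the Python function below adds a log-likelihood ratio to a running log posterior ratio for each new observation and stops once a response threshold is crossed. The Gaussian observation model, the function names, and the parameter values are illustrative assumptions, not details taken from the models cited.

```python
def gaussian_llr(y, mu=0.5, sigma=1.0):
    """Log-likelihood ratio log p(y|x1) / p(y|x2) for one observation y.

    Assumes (hypothetically) y ~ N(+mu, sigma) under x1 and
    y ~ N(-mu, sigma) under x2, which gives 2 * mu * y / sigma**2.
    """
    return 2.0 * mu * y / sigma ** 2


def integrate_evidence(observations, threshold, log_prior_ratio=0.0, llr=gaussian_llr):
    """Accumulate evidence until |log posterior ratio| >= threshold.

    Returns (choice, number of observations used). The choice is None
    if the observations run out before either bound is reached.
    """
    log_post_ratio = log_prior_ratio
    for n, y in enumerate(observations, start=1):
        # Bayes' rule in log-odds form: posterior = prior + new evidence.
        log_post_ratio += llr(y)
        if log_post_ratio >= threshold:
            return "x1", n
        if log_post_ratio <= -threshold:
            return "x2", n
    return None, len(observations)
```

With the default parameters each observation of 1.0 contributes a log-likelihood ratio of 1.0, so `integrate_evidence([1.0, 1.0, 1.0, 1.0], threshold=2.5)` commits to x1 on the third observation.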

Figure 5.

A. Evolution of the decision variable in a sequential-sampling model of a left-right visual motion judgment, both in the absence of noise (straight trajectory labeled “Drift Rate”) and with the addition of noise (remaining trajectories). Adapted from Ratcliff & McKoon (2008, p. 876). B. Evolution of the log posterior ratio in the present model, as applied to a forced choice between outcomes with values as shown at right, both with and without noise (random utility).

Evidence-integration models have shown striking success in accounting for behavioral data not only in perceptual tasks, but also in memory retrieval (Ratcliff, 1978), lexical decision (e.g., Wagenmakers, et al., 2004), cognitive control (Liu, Holmes, & Cohen, 2008) and other contexts. Indeed, as reviewed later, efforts have been made to adapt the framework to reward-based decision making (Rangel & Hare, 2010; Rustichini, 2008; Usher, Elhalal, & McClelland, 2008). The apparent ubiquity of evidence-integration procedures in human and animal decision making, along with the particular parallels we have noted, makes it inviting to consider the potential relevance of these procedures to the framework we have developed for goal-directed decision making.

Goal-directed decision via iterative inference

In our framework, under policy abduction, the ‘observation’ r̂=1 is adopted, and the posteriors p(π|s, r̂) are computed.4 As we have seen, this approach is not robust, and can go awry in the presence of non-uniform priors, random utility, or sequential problem structure. However, by analogy to evidence-integration models of perceptual choice, the inference procedure can be repeated. On each iteration n, the observation r̂=1 is reinstated, and the policy posteriors are updated using Bayes' rule:

| $p_n(\pi \mid s, \hat{r}) = \alpha\, p(\hat{r} \mid s, \pi)\, p_{n-1}(\pi \mid s, \hat{r})$ | (7) |

where α is a normalization coefficient that ensures the left-hand side term sums to one across all values of π.

Both mathematically and conceptually, this iterative procedure directly parallels the standard evidence-integration model as applied to the dot-motion task (see Figure 4). Rather than noisy perceptual observations, we have stochastic observations of reward.5 In both cases, observations are translated into likelihoods — respectively, p(y|x) and p(r̂|s, π) — which are used to update an evolving posterior distribution. Indeed, as in other evidence-integration models, the iterative procedure in Equation 7 is guaranteed to converge to the correct decision, that is, to find the optimal policy, as shown formally in Appendix A. Furthermore, in the single-step case, if a response threshold is imposed as in Figure 5, the procedure is guaranteed to yield the lowest error rate for a given expected decision time, just as in the sequential probability ratio test (see Appendix A).

Although it was random utility that led us to consider an evidence-integration approach, it turns out that the iterative procedure we have obtained also overcomes the other hazards enumerated at the outset of this section. Specifically, the procedure is guaranteed to converge to the optimal policy even in the presence of an initial bias toward a non-optimal policy, and as demonstrated in Appendix A it will also find the optimal sequential policy in the multi-step decision making case. Indeed, in the multi-step setting, our procedure shares structure with iterative procedures found in reinforcement learning and dynamic programming, where repeated updates allow a diffusion of information across temporally-distributed events (see Sutton & Barto, 1998; Toussaint & Storkey, 2006).
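To see informally why an initial bias cannot derail the procedure in the single-step, noise-free case, the iterative update can be unrolled. The following is a sketch rather than the proof given in Appendix A; the symbols π* (the policy with the largest likelihood, assumed unique) and p_0 (the initial prior) are labels introduced here for illustration.

```latex
p_n(\pi \mid s, \hat{r}) \;\propto\; p(\hat{r} \mid s, \pi)^{\,n}\, p_0(\pi)
\quad\Longrightarrow\quad
\frac{p_n(\pi^{*} \mid s, \hat{r})}{p_n(\pi \mid s, \hat{r})}
= \left[\frac{p(\hat{r} \mid s, \pi^{*})}{p(\hat{r} \mid s, \pi)}\right]^{n}
\frac{p_0(\pi^{*})}{p_0(\pi)}
```

Because the bracketed ratio exceeds one for every π other than π*, the posterior odds in favor of π* grow without bound as n increases, provided p_0(π*) > 0. Under these assumptions the prior affects only how many iterations are needed, not which policy is reached.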

Simulations

Having arrived at an algorithmic account, we turn now to a set of simulations that show the procedure in action, illustrating its applicability to hallmark patterns of behavior in goal-directed decision making. Technical details, sufficient to replicate these simulations, are presented in Appendix B, and relevant code is available at www.princeton.edu/~matthewb.

Simulation 1: Instrumental Choice

1.1 Simple binary choice

We begin with the simplest possible case: two-alternative forced choice with deterministic outcomes. For concreteness, and to prepare the ground for later simulations, consider a laboratory scenario in which a rat has access to two levers, positioned to its left and right. Pressing the left lever yields one kind of food, and pressing the right another (see, e.g., Balleine & Dickinson, 1998c). Let us assume that, at baseline, the rat prefers the food associated with the left lever, assigning a scalar reward value r = 2 to this food and a reward value r = 1 to the other.

The situation is modeled by defining three states, no-food (the initial state, r = 0), food1 and food2; and two policies, press-left and press-right, matched with corresponding actions. Our framework requires that reward values be represented as probabilities p(r̂|s′). In order to map from traditional, unbounded scalar reward values (r) to probabilities between zero and one, we will employ the following simple linear transformation (with alternatives discussed later):

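| $p(\hat{r} \mid s') = \frac{1}{2}\left(\frac{R(s')}{r_{\max}} + 1\right)$, where $r_{\max} = \max_{s''} \lvert R(s'') \rvert$ | (8) |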

For the present scenario, this yields p(r̂|food1)=1.00 and p(r̂|food2)=0.75.
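The sketch below implements one linear map that reproduces the two values just quoted. The specific form, 0.5 · (r / r_max + 1) with r_max taken as the largest reward magnitude among the modeled states, is an assumption chosen to match those values; the function and variable names are illustrative.

```python
def reward_to_prob(r, r_max):
    """Linear map from a scalar reward r to p(r_hat = 1 | s').

    Assumes r_max > 0 is the largest reward magnitude in the model,
    so that rewards in [-r_max, r_max] land in [0, 1].
    """
    if r_max <= 0:
        raise ValueError("r_max must be positive")
    return 0.5 * (r / r_max + 1.0)


# Reward values from the two-lever scenario of Simulation 1.1.
rewards = {"no-food": 0.0, "food1": 2.0, "food2": 1.0}
r_max = max(abs(r) for r in rewards.values())
probs = {state: reward_to_prob(r, r_max) for state, r in rewards.items()}
```

Under this assumed form, the no-food state maps to 0.5 rather than 0, so a zero reward is treated as the midpoint of the probability scale.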

The question is how the rat decides, based on its knowledge of the causal structure of the environment and its preferences over outcomes, which lever to press. One way of reaching a decision would involve the procedure shown in Figure 3 (top). Here, the policy variable is treated as observed, first set to press-left, then separately to press-right. In both cases, forward inference yields specific posterior probabilities at the reward node. The probability p(r̂|s, π) turns out to be larger under the press-left policy (1.00) than under press-right (0.75), providing a sufficient basis for choice.

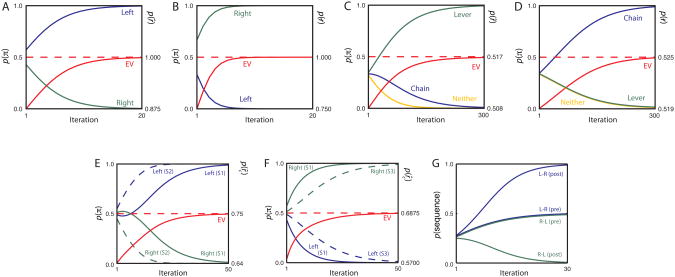

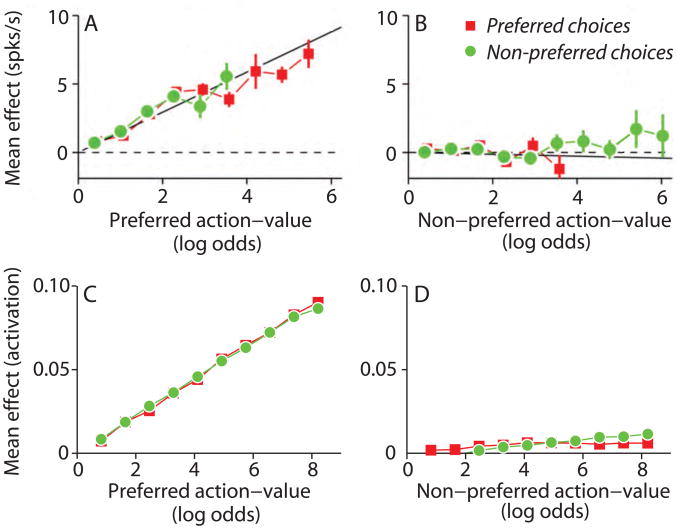

The potential relevance of serial policy evaluation, along the lines just described, has been recognized in recent theoretical work on animal decision making (see, e.g., Daw, et al., 2005; Smith, Li, Becker, & Kapur, 2004), and recent single-unit recording data in rodents provides apparent evidence for serial consideration of future actions and outcomes at behavioral choice-points (Johnson & Redish, 2007; Johnson, van der Meer, & Redish, 2008). However, our theory focuses on a different, more parallelized decision procedure. Here, the reward variable is treated as observed (r̂=1), and inference yields posterior probabilities for the two available policies. Figure 6A shows the evolution of these posteriors, over iterations of inference within a single decision-making ‘trial.’ Also displayed is the expected value of the current mixture of policies (the average of p(r̂|s, π), weighted by the posterior probability of π on the current iteration; i.e., the marginal probability p(r̂|s)). As the figure shows, as time elapses within the decision-making episode, the model converges to the optimal deterministic policy.

Figure 6.

Results of Simulations 1.1 (Panel A), 1.3 (B), 1.4 (C-D), 2.1 (E), 2.2 (F), and 2.3 (G). Blue, green, and yellow traces indicate the posterior probability of indicated actions/policies at each processing iteration. Red traces indicate the probability p(r̂=1) given the mixture of policies at each iteration, proportional to the expected reward for that mixture. Dashed red lines indicate p(r̂=1) for the optimal policy. In panel G, the two most central data series are offset for legibility; the values were in fact precisely equal across the two. pre, pre-devaluation. post, post-devaluation.

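To make clear what is going on ‘under the hood’ in this simulation, let us step through the computations performed during its first three iterations. At the outset, the initial or prior probabilities p(π) for the policies press-left and press-right are both equal to 0.5. Labeling these policies πL and πR, the first iteration uses Eq. 5:

$$p_1(\pi_L \mid s, \hat{r}) \propto p(\hat{r} \mid s, \pi_L)\, p(\pi_L) = 1.00 \times 0.5 = 0.500$$
$$p_1(\pi_R \mid s, \hat{r}) \propto p(\hat{r} \mid s, \pi_R)\, p(\pi_R) = 0.75 \times 0.5 = 0.375$$

Dividing each of these values by their sum, to normalize, yields p1(πL|s, r̂) ≈ 0.57 and p1(πR|s, r̂) ≈ 0.43. On the second iteration, the results of iteration 1 are fed into Eq. 7:

$$p_2(\pi_L \mid s, \hat{r}) \propto p(\hat{r} \mid s, \pi_L)\, p_1(\pi_L \mid s, \hat{r}) \approx 1.00 \times 0.57 = 0.57$$
$$p_2(\pi_R \mid s, \hat{r}) \propto p(\hat{r} \mid s, \pi_R)\, p_1(\pi_R \mid s, \hat{r}) \approx 0.75 \times 0.43 \approx 0.32$$

Normalizing, again by dividing both values by their sum, yields p2(πL|s, r̂) ≈ 0.64 and p2(πR|s, r̂) ≈ 0.36. On the third iteration, the results of iteration 2 are fed back into Eq. 7:

$$p_3(\pi_L \mid s, \hat{r}) \propto p(\hat{r} \mid s, \pi_L)\, p_2(\pi_L \mid s, \hat{r}) \approx 1.00 \times 0.64 = 0.64$$
$$p_3(\pi_R \mid s, \hat{r}) \propto p(\hat{r} \mid s, \pi_R)\, p_2(\pi_R \mid s, \hat{r}) \approx 0.75 \times 0.36 = 0.27$$

and normalization yields p3(πL|s, r̂) ≈ 0.70 and p3(πR|s, r̂) ≈ 0.30. On the fourth iteration, these results are fed back into Eq. 7, and the process continues in that fashion. One way of summarizing the whole procedure in this simple case, where only a single step of action is considered and no noise is involved, is to note that the policy posterior pn(π|s, r̂) on each iteration n is proportional to p(r̂|s, π)^n p(π).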
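The three iterations walked through above can be reproduced with a few lines of Python. This is a minimal sketch of the single-step, noise-free update only; the function names are illustrative and the code is not the simulation code referred to in Appendix B.

```python
def normalize(weights):
    """Scale a dict of non-negative weights so the values sum to one."""
    total = sum(weights.values())
    return {k: v / total for k, v in weights.items()}


def iterate_posterior(likelihood, prior, n_iter):
    """Repeatedly apply posterior_n ∝ likelihood * posterior_(n-1).

    Returns the list of posteriors after iterations 1..n_iter.
    """
    posterior = dict(prior)
    history = []
    for _ in range(n_iter):
        posterior = normalize(
            {pi: likelihood[pi] * posterior[pi] for pi in posterior}
        )
        history.append(posterior)
    return history


likelihood = {"press-left": 1.00, "press-right": 0.75}  # p(r_hat | s, pi)
prior = {"press-left": 0.5, "press-right": 0.5}         # p(pi)

history = iterate_posterior(likelihood, prior, n_iter=3)
rounded = [round(h["press-left"], 2) for h in history]   # 0.57, 0.64, 0.70
```

Running more iterations drives the press-left posterior toward one, and the result after n iterations matches a single normalization of the likelihood raised to the power n times the prior.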

1.2 Stochastic choice

In implementing random utility above, we introduced a random variable Z, which parameterized the reward function p(r̂|s′, z). For simplicity, this aspect of the model was set aside in Simulation 1.1, as it shall be in subsequent simulations. In the present simulation, however, we examine its impact on the decision-making process.

To this end, we assigned the variable Z a multivariate normal distribution with zero covariance (see Appendix B). Under these conditions, the decision dynamics take the form of a drift-diffusion process, isomorphic to those purported to underlie perceptual decision making (see Appendix A). The model's behavior is illustrated in Figure 5B, in the same lever-choice scenario considered in Simulation 1.1. For comparison with Figure 5A, the figure shows the log posterior ratio, log(p(left) / p(right)), rather than the individual posteriors. In the absence of noise, this quantity follows a straight-line course, mirroring the constant ‘drift rate’ of the drift-diffusion model (see Bogacz, et al., 2006; Gold & Shadlen, 2007; Ratcliff & McKoon, 2008). With Z active, the log posterior ratio follows a serpentine course, tending toward the optimal policy, but sometimes deviating in the other direction.

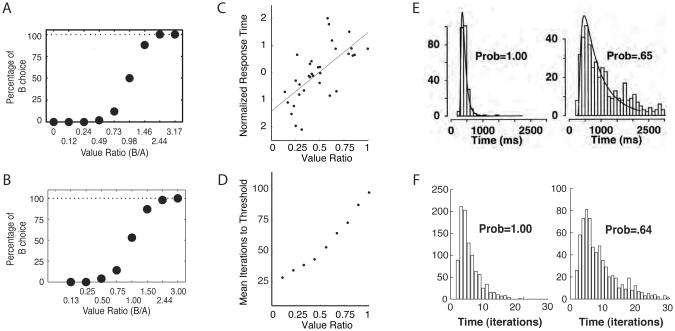

If a response threshold is introduced, as shown in Figure 5B, the match to the drift-diffusion model is complete. This formal link allows the present model to account for some important behavioral data concerning choice proportions and reaction times in reward-based decision making. Figure 7A shows data from an experiment by Padoa-Schioppa and Assad (2006), in which monkeys chose between two juice offers. A central finding in this study concerned choice variability. When one of the alternatives presented was much more valuable than the other, that option was always selected, but as the alternatives came closer together in value, the animals showed a graded increase in choice variability. When our model is faced with decisions between rewards with varying degrees of separation, it shows precisely the same kind of behavior, as illustrated in Figure 7B.
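The qualitative pattern described here (near-deterministic choice when values are far apart, graded variability and slower decisions when they are close) can be illustrated with a small random-walk sketch. It makes a simplifying assumption: noise is added directly to each log-likelihood-ratio increment as a scalar Gaussian, standing in for the multivariate normal variable Z specified in Appendix B. The threshold of 2.0 follows the Figure 7 caption; the noise level, seed, and function names are hypothetical.

```python
import math
import random


def simulate_choice(p_a, p_b, sigma=0.5, threshold=2.0, rng=None, max_steps=10_000):
    """One trial: random walk on the log posterior ratio log p(A)/p(B).

    Each step adds the drift log(p_a / p_b) plus Gaussian noise (sd sigma),
    an assumed stand-in for random utility. Returns (choice, steps taken).
    """
    rng = rng or random.Random()
    drift = math.log(p_a / p_b)
    log_ratio = 0.0
    for step in range(1, max_steps + 1):
        log_ratio += drift + rng.gauss(0.0, sigma)
        if log_ratio >= threshold:
            return "A", step
        if log_ratio <= -threshold:
            return "B", step
    # Safety net: no bound reached, so pick the currently favored option.
    return ("A" if log_ratio >= 0 else "B"), max_steps


def summarize(p_a, p_b, n_trials=1000, seed=0):
    """Proportion of A choices and mean number of steps over n_trials."""
    rng = random.Random(seed)
    trials = [simulate_choice(p_a, p_b, rng=rng) for _ in range(n_trials)]
    prop_a = sum(choice == "A" for choice, _ in trials) / n_trials
    mean_steps = sum(steps for _, steps in trials) / n_trials
    return prop_a, mean_steps


far_apart = summarize(p_a=1.00, p_b=0.50)  # well-separated values
close = summarize(p_a=0.80, p_b=0.78)      # nearly matched values
```

Each call returns a pair (proportion of A choices, mean number of iterations to threshold), so comparing `far_apart` with `close` shows both the choice-variability and the decision-time effects in one place.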

Figure 7.

A. Choice data from Padoa-Schioppa and Assad (2006, Figure 1B, p. 223). Value ratio indicates the subjective value of choice option B relative to option A as inferred from choice behavior. B. Choice data from Simulation 1.2, including random utility and a response threshold on the log posterior ratio of 2.0. Reward values for choice options were selected so as to yield the ratios shown on the x-axis. Each point reflects the choice proportion over a sample of 1000 trials. C. Response time data from Padoa-Schioppa et al. (2006). D. Response times in the simulation associated with panel B. E. Response-time distributions in a two-alternative perceptual judgment, under stimulus conditions yielding uniform judgments (Prob = 1.00) and more variable judgments (Prob = .65). The superimposed curve shows the fit of a drift-diffusion model. From Ratcliff and Rouder (1998, Figure 5, p. 352). F. Response-time distributions from the simulation associated with panels B and D, with outcome value ratios chosen so as to yield choice variabilities close to those in the Ratcliff and Rouder (1998) experiment.

In a related study, Padoa-Schioppa, Jandolo and Visalberghi (2006) showed that incentive disparity can also affect reaction time, with decisions taking longer when options are closely matched in value (Figure 7C; see also Rangel, 2008; Rustichini, Dickhaut, Ghirardato, Smith, & Pardo, 2005). This finding is also captured by our model under random utility, as shown in Figure 7D.

An important realm of data addressed by standard evidence-integration models centers on reaction-time distributions. As shown in Figure 7E, in many decision-making settings such distributions assume a characteristic skewed shape, with the distribution becoming broader under conditions leading to greater choice variability. As shown in Figure 7F, our model generates reaction time distributions showing these same characteristics. The model thus predicts that reaction-time distributions in goal-directed choice should resemble those observed in other settings, including perceptual decision-making (Ratcliff & Rouder, 1998) and memory retrieval (Ratcliff, 1978). To our knowledge, reaction-time distributions in reward-based decision making have not yet been studied experimentally.

1.3 Outcome devaluation

As a further proof of concept, our paradigm can be used to simulate incentive devaluation. For this purpose, we return to the two-lever scenario and the model introduced in Simulation 1.1. In Balleine and Dickinson (1998c), to take a representative study, the incentive value of one of two action outcomes was devalued by specific satiety, leading to an immediate reduction in performance of the associated action. This devaluation effect can be captured in our model by simply changing the reward value associated with one food outcome. Accordingly, we reduced the reward value associated with the formerly preferred food from r = 2 to r = 0. Note that this change directly affects only the CPD of the reward variable; p(r̂|s′) is reduced for the case where s′ corresponds to the devalued food. When inference is performed, however, the impact of this local change propagates to the level of the policy node, yielding a reversal in choice (Figure 6B).

1.4 Contingency degradation

As discussed in the Introduction, changes in goal-directed decisions can be induced not only by revaluation of outcomes, but also by changes in patterns of causal contingency (Dickinson & Mulatero, 1989; Williams, 1989). A representative demonstration is reported by Colwill and Rescorla (1986). Here, rats were given access to a lever and a chain. If the lever was pressed, a preferred food was delivered with probability 0.05. Pulling the chain yielded a less preferred food, again with probability 0.05. Under these conditions, not surprisingly, animals came to favor the lever. However, in the next phase of the experiment, the causal link between the lever and the preferred food was broken by delivering the preferred food with probability 0.05 regardless of the animal's action (or inaction). Following this change, animals shifted their efforts toward the chain response.

To simulate this effect, we adapted the model from Simulation 1.1 to include three policies, corresponding to the actions chain, lever, and neither, and the four states no-food (r = 0), food1 (r = 1), food2 (r = 2), and both-foods (r = 3). When the model was parameterized to reflect the initial contingencies in the experiment, evidence integration led to selection of the lever action (Figure 6C). When the CPD p(s′|s, a) was updated to predict the later contingencies, where food2 could occur without any action, and both-foods could occur following chain (but not following lever), preference shifted to the chain response (Figure 6D).

In addition to illustrating contingency degradation, this simulation demonstrates the ability of the present framework to cope with probabilistic outcomes. Given an accurate representation of outcome contingencies, evidence integration will yield the response with the highest expected utility. Indeed, the likelihood p(r̂|s, π), which marginalizes over outcomes s′, can be viewed as a direct representation of expected utility (see Equation 4).
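The marginalization over outcomes can be made concrete with a short sketch of the lever/chain scenario. The reward values and the 0.05 delivery probabilities come from the text; the linear reward-to-probability map, the assumption that free food2 delivery is independent of the chain's own food1 delivery, and all names are illustrative assumptions rather than the parameterization given in Appendix B.

```python
REWARD = {"no-food": 0.0, "food1": 1.0, "food2": 2.0, "both-foods": 3.0}
R_MAX = max(REWARD.values())


def reward_prob(state):
    # Assumed linear map from scalar reward to p(r_hat = 1 | s').
    return 0.5 * (REWARD[state] / R_MAX + 1.0)


def likelihood(outcome_probs):
    """p(r_hat | s, pi) = sum over s' of p(s' | s, a) * p(r_hat | s')."""
    return sum(p * reward_prob(state) for state, p in outcome_probs.items())


# Outcome distributions p(s' | s, a) before contingency degradation.
before = {
    "lever":   {"food2": 0.05, "no-food": 0.95},
    "chain":   {"food1": 0.05, "no-food": 0.95},
    "neither": {"no-food": 1.0},
}

# After degradation, food2 arrives with probability 0.05 whatever the animal
# does; it is assumed independent of the chain's own food1 delivery.
after = {
    "lever":   {"food2": 0.05, "no-food": 0.95},
    "chain":   {"both-foods": 0.0025, "food1": 0.0475,
                "food2": 0.0475, "no-food": 0.9025},
    "neither": {"food2": 0.05, "no-food": 0.95},
}


def best_action(contingencies):
    """Return the action with the highest likelihood, plus all scores."""
    scores = {a: likelihood(dist) for a, dist in contingencies.items()}
    return max(scores, key=scores.get), scores
```

Under these assumptions `best_action(before)` favors the lever and `best_action(after)` favors the chain, because after degradation the lever adds nothing beyond what doing nothing already yields.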

Simulation 2: Sequential Decision

Here we apply the iterated architecture from Figure 2D to simulate benchmark phenomena in multi-step decision making.

2.1 A two-stage decision problem

As an initial illustration of sequential choice, we focus in this simulation on the two-step T-maze scenario from Niv, Joel and Dayan (2006), described in the Introduction and illustrated in Figure 1. The states in our model of this situation include the terminal reward items (cheese, carrot, water, and null), as well as the three preceding choice points. Following Niv et al. (2006), we assume the baseline reward values R(cheese) = 4, R(carrot) = 3, R(water) = 2, R(null) = 0. Figure 6E shows the decision trajectory produced by evidence integration in this problem setting. The model converges on the sequence left-left, a policy that takes it to the preferred cheese reward.

If we were to ‘look under the hood,’ tracing the computations on successive iterations at each stage of the plan, the story would be identical to that in Simulation 1.1, with the following important caveat: The calculations bearing on the first stage of the plan (i.e., the policy at S1) are impacted by the current policy posteriors at stage two (i.e., at S2 and S3). For example, the first iteration computes the posterior probability of adopting the left and right policies at S1. Calling these $\pi_L^{S1}$ and $\pi_R^{S1}$, Eq. 5 gives:

$$p_1(\pi_L^{S1} \mid s, \hat{r}) \propto p(\hat{r} \mid s, \pi_L^{S1})\, p(\pi_L^{S1})$$
$$p_1(\pi_R^{S1} \mid s, \hat{r}) \propto p(\hat{r} \mid s, \pi_R^{S1})\, p(\pi_R^{S1})$$

The likelihood terms here, $p(\hat{r} \mid s, \pi_L^{S1})$ and $p(\hat{r} \mid s, \pi_R^{S1})$, depend implicitly on what is planned for S2 and S3, i.e., on $p(\pi^{S2})$ and $p(\pi^{S3})$.

This dependence manifests in the time-courses plotted in Figure 6E. Note the trajectory of the solid blue and green traces in the figure, which relate to the decision at S1. Although the decision ultimately tips toward left, early on there is transient movement toward right. This effect stems directly from the fact that the optimal first-step choice depends on what is planned for later steps. As discussed earlier, if the animal is equally likely to go left or right upon reaching either S2 or S3, the expected reward for a left turn at S1 is (4+0)/2, and for a right turn it is (2+3)/2. The (locally) optimal choice at S1 is thus to turn right. Eventually, as better plans emerge for S2 and S3, the expected rewards for left and right turns at S1 move toward 4 and 3, respectively, making it preferable to turn left at S1.
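The early drift toward right followed by convergence on left can be reproduced in a compact sketch of the two-step maze. Several details are assumptions made for illustration and are not taken from Appendix B: the linear reward-to-probability map, the assignment of water and carrot to the left and right arms at S3, the synchronous update of all three policy variables, and the way second-stage likelihoods marginalize over whether the state is reached.

```python
R = {"cheese": 4.0, "null": 0.0, "water": 2.0, "carrot": 3.0}
R_MAX = max(R.values())
P_REWARD = {k: 0.5 * (v / R_MAX + 1.0) for k, v in R.items()}  # assumed map

# Maze layout; which arm at S3 holds water vs. carrot is an assumption.
OUTCOME = {
    "S2": {"left": "cheese", "right": "null"},
    "S3": {"left": "water", "right": "carrot"},
}
NEXT = {"left": "S2", "right": "S3"}  # transitions out of S1


def normalize(weights):
    total = sum(weights.values())
    return {k: v / total for k, v in weights.items()}


def step(policy):
    """One synchronous update of the policy posteriors at S1, S2 and S3."""
    # p(r_hat | reach state) under the current policy mixture at that state.
    value = {
        s: sum(policy[s][a] * P_REWARD[OUTCOME[s][a]] for a in policy[s])
        for s in ("S2", "S3")
    }
    new = {
        "S1": normalize(
            {a: policy["S1"][a] * value[NEXT[a]] for a in policy["S1"]}
        )
    }
    for s in ("S2", "S3"):
        other = "S3" if s == "S2" else "S2"
        # Probability that the current S1 policy actually leads to state s.
        reach = sum(policy["S1"][a] for a in NEXT if NEXT[a] == s)
        new[s] = normalize({
            a: policy[s][a]
            * (reach * P_REWARD[OUTCOME[s][a]] + (1.0 - reach) * value[other])
            for a in policy[s]
        })
    return new


def run(n_iter):
    """Start from uniform policies and return the posterior after each step."""
    policy = {s: {"left": 0.5, "right": 0.5} for s in ("S1", "S2", "S3")}
    history = []
    for _ in range(n_iter):
        policy = step(policy)
        history.append(policy)
    return history


history = run(200)
```

In this sketch `history[0]["S1"]["left"]` falls below 0.5, reflecting the larger mean value on the right, while `history[-1]` places nearly all posterior mass on left at both S1 and S2.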

As discussed below, the kind of dynamics reflected in this simulation, arising from the interdependence of decision-making operations across plan steps, gives rise to testable model predictions.

2.2 Cumulative reward and cost-benefit analysis

A key feature of multi-step decision problems is the need to compute cumulative rewards when rewards are distributed across steps of action. A simple and ubiquitous case arises in effort-based decision making, where a cost-benefit analysis must take into account both distal rewards and the cost of proximal effort. A number of rodent studies have examined this cost-benefit analysis by placing an animal inside a T-maze where both arms contain food, but where one also contains a scalable barrier that the animal must surmount to access the food reward. The common finding is that, unless the reward on the barrier side is larger by a sufficient degree, animals will forgo it, avoiding the effort required (Salamone, Correa, Farrar, & Mingote, 2007; Walton, Kennerley, Bannerman, Phillips, & Rushworth, 2006).

This sort of cost-benefit analysis can be modeled very naturally within the present framework. For simplicity, we do so using the two-step T-maze scenario already established. Here, we re-impose the original reward values on the outcome states, but also imagine that there is now a scalable barrier placed at S2. The cost of traversing this barrier is inserted into the model by reducing R(S2) to -2. Evidence integration under these circumstances yields the decision trajectory in Figure 6F, which reflects the inference that the value of the most preferred reward is not worth the associated cost in effort.
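The arithmetic behind this reversal can be checked by plain enumeration of cumulative rewards. The sketch below is not the evidence-integration procedure itself; it only confirms which two-step sequence has the highest summed reward once the barrier cost is charged at S2. The arm assignment at S3 and the function name are assumptions.

```python
# Terminal rewards for each (first turn, second turn) sequence.
TERMINAL = {
    ("left", "left"): 4.0,    # S2 -> cheese
    ("left", "right"): 0.0,   # S2 -> null
    ("right", "left"): 2.0,   # S3 -> water (arm assignment assumed)
    ("right", "right"): 3.0,  # S3 -> carrot (arm assignment assumed)
}


def best_sequence(cost_s2=0.0):
    """Return the sequence with the highest cumulative reward and its total.

    cost_s2 is subtracted from every sequence that passes through S2,
    i.e. every sequence whose first turn is left.
    """
    totals = {
        seq: r - (cost_s2 if seq[0] == "left" else 0.0)
        for seq, r in TERMINAL.items()
    }
    best = max(totals, key=totals.get)
    return best, totals[best]
```

With no barrier, `best_sequence()` selects left-left for a total of 4; with `cost_s2=2.0`, the cheese is worth only 4 − 2 = 2 net, and right-right (total 3) becomes the best sequence.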

2.3 Outcome revaluation and contingency degradation

As discussed in the Introduction, outcome revaluation can affect decisions in multi-step settings, just as in simpler decision tasks. To recap one relevant study, Ostlund, Winterbauer and Balleine (2009) trained rats to execute two two-step lever-press sequences (left-right, right-left), which yielded sucrose and polycose, respectively. When one of these outcomes was devalued through satiety, the animals tended to favor the sequence yielding the non-devalued food.

Note that, although the Ostlund et al. (2009) experiment involves lever-pressing rather than maze navigation, the form of the decision problem aligns precisely with the two-step T-maze from Niv et al. (2006). State S1 in Figure 1 now corresponds to the rat's initial situation, facing the two levers, with available actions press-left and press-right. State S2 corresponds to the rat's situation after having pressed the left lever once; state S3 the situation after pressing the right lever once.6 The outcomes for press-left and press-right are, respectively, null (r = 0) and polycose (r = 1) at S2; and sucrose (r = 1) and null (r = 0) at S3. Using the same model architecture that we used to simulate the two-step T-maze, these initial conditions lead to selection of the sequences left-right and right-left (with equal probability) over left-left and right-right (Figure 6G). Simulating devaluation by reducing R(sucrose) to 0.5 leads to a preference for left-right over all other sequences, in line with the empirical observation (see Figure 6G).7

Ostlund and colleagues (2009) also showed analogous changes in sequence production following contingency degradation. Simulating contingency degradation in the present model, using the approach established in Simulation 1.4, yields parallel results (data not shown). Using a similar logic, the model can be applied in a straightforward way to account for the classic latent learning and detour effects described in the Introduction (see Botvinick & An, 2009).

Predictions

In addition to demonstrating the ability of our framework to account for benchmark phenomena in goal-directed behavior, the foregoing simulations also give rise to several testable predictions. One of these arises from Simulation 2.1, and pertains to decision time-course. As shown in Figure 6E, the model in this simulation displayed a sort of decision-making reversal, traveling toward one policy early on and then, later, toward another. The origins of this effect, as discussed earlier, lie in the recursive structure of the planning problem: the optimal policy for any stage of the plan depends on what is planned for later stages. In Simulation 2.1, this general principle combined with a specific set of conditions, according to which the outcome with the maximum value lay in one direction, while the outcomes in the other direction had a larger mean value. Our model predicts that this mean-max conflict situation should trigger a similar reversal at the level of neural response representations in human or animal subjects. One way of testing this prediction behaviorally would be to impose response deadlines in order to elicit speeded choice reactions. Under these circumstances, the model predicts that short-latency responses in mean-max conflict conditions should show below-chance accuracy (for an initial test of this prediction, see Solway, Prabhakar, & Botvinick, in preparation).

Another prediction arises from Simulation 1.4. As illustrated there and demonstrated formally in Appendix A, our evidence-integration algorithm yields mathematically sound decisions in the face of probabilistic outcomes. However, an interesting and somewhat surprising effect arises during this process. Recall that with each iteration of the decision-making process, for each planned action, our model computes a posterior probability distribution over outcomes (s′). It turns out that this posterior distribution is optimistic. That is, it is weighted toward high-utility outcomes. For example, in Simulation 1.4, selection of the lever action prior to contingency degradation led to the outcome food2 with probability 0.05. However, at asymptote, the model attaches to this outcome a posterior probability of 0.08.

To see the origins of this optimism effect, recall that decision-making begins with an assumption of reward, i.e., the premise r̂=1. This assumption feeds into the calculation of outcome probabilities, with the natural consequence that they are weighted toward states with higher utility. It is important to emphasize that this aspect of the model does not affect the model's actual decisions; as we have noted, the model's choices of action conform to sound calculations of expected utility. Nevertheless, even as the model chooses rationally, it gives rise to optimistic estimates of outcome probability. This translates into a further testable prediction of the present theoretical account.
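The size of the optimism effect in the lever example can be checked with a direct application of Bayes' rule to the outcome variable. The sketch assumes a linear reward-to-probability map normalized by the largest reward in Simulation 1.4 (r = 3 for both-foods); that assumption and the function names are illustrative, not taken from Appendix B.

```python
REWARD = {"no-food": 0.0, "food1": 1.0, "food2": 2.0, "both-foods": 3.0}
R_MAX = max(REWARD.values())


def reward_prob(state):
    # Assumed linear map from scalar reward to p(r_hat = 1 | s').
    return 0.5 * (REWARD[state] / R_MAX + 1.0)


def outcome_posterior(outcome_probs):
    """p(s' | s, a, r_hat = 1) ∝ p(r_hat = 1 | s') * p(s' | s, a)."""
    weights = {s: p * reward_prob(s) for s, p in outcome_probs.items()}
    total = sum(weights.values())
    return {s: w / total for s, w in weights.items()}


# p(s' | s, lever) before contingency degradation.
lever = {"food2": 0.05, "no-food": 0.95}
posterior = outcome_posterior(lever)
```

Under these assumptions the posterior probability of food2 is about 0.08, up from the objective 0.05, in line with the asymptotic value reported above.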

The predicted optimism effect bears an interesting relationship to what previous work has labeled the ‘illusion of control.’ Here, individuals make more optimistic outcome predictions when their actions are freely chosen than when they are dictated (Presson & Benassi, 1996). For example, Langer (1975) found that experimental participants expressed greater confidence in their chances of winning a drawing when they were permitted to select a ticket from among a set of objectively equivalent tickets than when a random ticket was simply given to them. A standard explanation for this effect has been that choice serves as a cue falsely implying outcome controllability (Langer, 1975; Presson & Benassi, 1996). The present work suggests a different, though perhaps not incompatible, explanation, which is that choice gives rise to optimism as a natural consequence of the computations involved in goal-directed decision making.

How do these predictions compare with those of competing theories? This is not a straightforward question to answer, given the dearth of psychological and neuroscientific theory concerning the processes underlying goal-directed decision making, particularly in sequential domains. However, it is perhaps useful to consider whether different machine-learning algorithms for model-based reinforcement learning might give rise to comparable predictions. In this respect, the above predictions concerning choice dynamics appear not to arise from algorithmic approaches in which depth-first tree search is employed (see e.g., Smith, et al., 2004), or where choice depends on backward induction (starting at the goal and working backward, in the spirit of successive ‘subgoaling’). On the other hand, the same predictions might obtain in more parallel procedures, such as the classical value iteration algorithm (see Sutton & Barto, 1998). In contrast, our model's prediction concerning optimistic state representation appears problematic even for the latter planning procedure, and thus stands as a particularly distinctive prediction of the present framework.

Neural Implementation

To this point, we have considered goal-directed decision making in abstract cognitive or information-processing terms. However, ultimately what is needed is an account that makes direct contact with neuroscientific data, pinpointing the neural structures and processes that give rise to goal-directed decisions. One of the most exciting aspects of recent empirical research on goal-directed decision making is that it has begun to shed some light on the relevant functional anatomy, identifying critical brain regions and, in some cases, characterizing the response properties of the neurons they contain. Despite such progress, we still lack a working model of how these brain structures interface and interact in order to support goal-directed decision making.

In this section we leverage the present theory to sketch out such a functional neural model. More specifically, we translate the theory into neural terms at two distinct levels of description. First, at a structural level, we map the elements of our model to specific gross brain regions, as discussed in the next subsection. Then, at a finer grain, we cash out the proposed information-processing operations within a neural network model, yielding a coarse account of how neurons within the relevant brain regions may collaborate in generating goal-directed behavior.

Four Interlocking Neural Systems

The graphical architecture we have been considering contains variables of four types, which represent, respectively, (1) policies, (2) actions, (3) current and projected situations or states, and (4) reward or utility. As noted previously, these four domains of representation, along with the transition and reward functions that link them, constitute the givens of the model-based reinforcement learning problem. However, each of the four representational domains can also be mapped to distinct sets of neuroanatomic regions. Making this mapping ties the four strata of our model to specific brain systems, opening the door to a consideration of the model's potential neuroscientific implications.
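As a reminder of how these four domains fit together computationally, the following minimal sketch builds a one-step generative model over policy, action, next state and reward, and then inverts it by conditioning on reward. All numerical values are hypothetical, and the sketch assumes a binary reward variable whose probability given the next state stands in for scaled utility; it is meant as an illustration of the inference pattern, not as the implementation used in the simulations.

```python
import numpy as np

# Hypothetical one-step problem with two policies, two actions, two outcome states.
prior_pi = np.array([0.5, 0.5])            # p(policy)
p_a_given_pi = np.array([[1.0, 0.0],       # p(action | policy): each policy
                         [0.0, 1.0]])      # deterministically picks one action
p_s_given_a = np.array([[0.8, 0.2],        # p(next state | action): transition function
                        [0.3, 0.7]])
p_r1_given_s = np.array([0.2, 0.9])        # p(reward = 1 | next state): assumed
                                           # to be utility rescaled to [0, 1]

def policy_posterior(prior_pi, p_a_given_pi, p_s_given_a, p_r1_given_s):
    """p(policy | reward = 1), obtained by summing out action and next state."""
    likelihood = p_a_given_pi @ p_s_given_a @ p_r1_given_s  # p(reward = 1 | policy)
    unnormalized = prior_pi * likelihood
    return unnormalized / unnormalized.sum()

def state_posterior(prior_pi, p_a_given_pi, p_s_given_a, p_r1_given_s):
    """Return p(next state) and p(next state | reward = 1)."""
    prior_s = prior_pi @ p_a_given_pi @ p_s_given_a
    unnormalized = prior_s * p_r1_given_s
    return prior_s, unnormalized / unnormalized.sum()

post_pi = policy_posterior(prior_pi, p_a_given_pi, p_s_given_a, p_r1_given_s)
prior_s, post_s = state_posterior(prior_pi, p_a_given_pi, p_s_given_a, p_r1_given_s)
print("p(policy | reward):", post_pi)
print("p(state) before and after conditioning on reward:", prior_s, post_s)
```

Under these assumed numbers, conditioning on reward shifts probability toward the policy that more often leads to the higher-utility state, and also shifts the projected state distribution toward that state.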

1. The Policy System

Recall that the policy nodes in our model represent mappings from situations to responses. In the brain, representations of this kind have been shown to reside within the dorsolateral prefrontal cortex (DLPFC). Single-unit recording studies in primates, and complementary functional neuroimaging studies in humans, have indicated that one important function of the DLPFC may be to represent task sets or ‘rules’ (Asaad, Rainer, & Miller, 2000; Bunge & Wallis, 2007; Sakai, 2008; Wallis, Anderson, & Miller, 2001; White & Wise, 1999). The content of such rules is typically understood to establish a set of relationships between stimuli and responses (Bunge, 2004). According to the guided activation theory of Miller and Cohen (2001), a critical function of the DLPFC is to bias the flow of neural activation in pathways between stimulus and response representations, supporting transmission along task-relevant pathways. Given this role, it is not surprising that the DLPFC has been heavily implicated in planning and goal-direction (Anderson, Albert, & Fincham, 2005; Duncan, Emslie, Williams, Johnson, & Freer, 1996; Goel & Grafman, 1995; Lengfelder & Gollwitzer, 2001; Miller & Cohen, 2001; Shallice, 1982; Shallice & Burgess, 1991; Tanji & Hoshi, 2008; Tanji, Shima, & Mushiake, 2007; Unterrainer & Owen, 2006). Furthermore, studies of outcome devaluation in rodents (Balleine & Dickinson, 1998a; Corbit & Balleine, 2003; Killcross & Coutureau, 2003; although see Ostlund & Balleine, 2005) suggest that sensitivity to devaluation depends critically on prelimbic cortex, a structure judged by some to represent a homologue to the primate DLPFC (Fuster, 1997; Kesner, 2000; Uylings, Groenewegen, & Kolb, 2003).

While the DLPFC is the area most heavily implicated in policy representation, there are also data suggesting that policy, task set, or rule representations may reside in other portions of the frontal lobe, including premotor cortex (Wallis & Miller, 2003), ventrolateral prefrontal cortex (Bunge, 2004; Bunge, et al., 2005), pre-supplementary motor area (Dosenbach, et al., 2006; Rushworth, Walton, Kennerley, & Bannerman, 2004), and the frontal pole (Sakai & Passingham, 2003). The policy stratum in our model thus summarizes a role that is carried out in the brain by a densely interconnected network of cortical regions, with the DLPFC as an important hub.

2. The Action System

Within our model, policy nodes interface with nodes representing actions. If the pertinent actions are understood as bodily movements, then the set of relevant brain areas is relatively straightforward to identify, and would include premotor and supplementary motor cortices, portions of cingulate and parietal cortex, and associated sectors within the dorsal striatum. However, goal-directed decision making can involve more abstract forms of action, including actions defined in terms of ends rather than motoric means, implicating intraparietal and inferior frontoparietal cortex (Hamilton & Grafton, 2006, 2008), or temporally extended behaviors, currently speculated to be represented in portions of prefrontal cortex (see Badre, 2008; Botvinick, 2008). The action variables in our model thus, once again, summarize the role of a specific network of areas.

3. The State Projection System

Within our model's architecture, action nodes project to, and receive projections from, nodes representing current and projected situations or states.8 On the neuroscientific side, it is clear that the brains of higher animals must contain representations of anticipated states, as well as their dependencies on earlier states and actions (Atance & O'Neill, 2001; Gopnik & Schulz, 2007; Schutz-Bosbach & Prinz, 2007). However, despite considerable research, the neuroanatomical site of such representations is only beginning to emerge. Early studies of spatial navigation in rodents led to the idea that cognitive map representations might reside in the hippocampus (O'Keefe & Nadel, 1978), and recent research suggests that hippocampal place cells may represent projected future locations (Diba & Buzsaki, 2007; Johnson & Redish, 2007; Johnson, et al., 2008). Lesion studies have also provided evidence for the involvement of medial temporal lobe structures (entorhinal cortex, if not hippocampus) in the representation of action-outcome contingencies during instrumental learning (Corbit, Ostlund, & Balleine, 2002). Convergent neuropsychological research in humans indicates that medial temporal lobe structures may play a critical role in allowing visualization of future events, including action outcomes and goals (Buckner & Carroll, 2006; Hassabis, Kumaran, Vann, & Maguire, 2007; Schacter, Addis, & Buckner, 2007), possibly as part of a larger network including regions within medial and lateral parietal cortex (see also Hamilton & Grafton, 2008), lateral temporal cortex and medial frontal cortex (see also Matsumoto, 2004; Matsumoto, Suzuki, & Tanaka, 2003; Tanaka, Balleine, & O'Doherty, 2008). 
Still other work has suggested that the DLPFC may play a role in representing projected action outcomes, including both final ‘goal’ states and intermediate ‘means’ states (Fuster, 1997; Mushiake, Saito, Sakamoto, Itoyama, & Tanji, 2006; Saito, Mushiake, Sakamoto, Itoyama, & Tanji, 2005), and a recent study by Hamilton and Grafton (2008) suggests that the right inferior frontal cortex may also be involved in representing action outcomes.

At the subcortical level, there is strong evidence for the involvement of specific basal ganglia structures in the representation of action-outcome contingencies. Research in rats has shown that damage to or inactivation of the dorsomedial striatum impairs sensitivity to outcome devaluation and changes in instrumental contingency (Balleine, 2005; Yin, Knowlton, & Balleine, 2005; Yin, Ostlund, Knowlton, & Balleine, 2005). This fits well with research implicating the caudate nucleus, the primate homologue of the dorsomedial striatum, in action-outcome contingency detection (Tanaka, et al., 2008) and planning (Monchi, Petrides, Strafella, Worsley, & Doyon, 2006; Unterrainer & Owen, 2006) in humans. A potential role for the striatum in representing action-outcome contingencies is particularly interesting given evidence for overlapping inputs from dorsal and orbital prefrontal areas within anterior striatum (Cavada, Company, Tejedor, Cruz-Rizzolo, & Reinoso-Suarez, 2000; Haber, Kim, Mailly, & Calzavara, 2006), a convergence that fits well with the structure of our graphical model.

4. The Reward System

The final set of elements in our model are nodes representing reward. Here again, the variables in question can be understood as summarizing the representational role of a specific set of brain regions. In this case, the relevant regions include, most prominently, the orbitofrontal cortex and the basolateral amygdala. The orbitofrontal cortex (OFC) has been extensively implicated, across species, in the representation of the incentive value of stimuli, including anticipatory coding for the value of predicted and even imagined outcomes (Arana, et al., 2003; Bray, Shimojo, & O'Doherty, 2010; Kringelbach, 2005; Montague & Berns, 2002; Padoa-Schioppa & Assad, 2006; Plassmann, O'Doherty, & Rangel, 2007; Rolls, 2004, 2006). This function has been linked to a role in goal-directed decision making (Frank & Claus, 2006; Roberts, 2006; Rolls, 1996; Schoenbaum & Setlow, 2001; Schultz, Tremblay, & Hollerman, 2000; Wallis, 2007), based in part on studies demonstrating OFC involvement in revaluation phenomena (De Araujo, Kringelbach, Rolls, & McGlone, 2003; Gottfried, O'Doherty, & Dolan, 2003; Izquierdo, Suda, & Murray, 2004; LaBar, et al., 2001; Pickens, Saddoris, Gallagher, & Holland, 2005; Valentin, Dickinson, & O'Doherty, 2007; however, see Ostlund & Balleine, 2007).

Despite important differences in function, the basolateral amygdala (BLA) has also been extensively implicated in the representation of incentive value of stimuli, including action outcomes, and in the guidance of goal-directed behavior (Arana, et al., 2003; Balleine, 2005; Baxter & Murray, 2002; Holland & Gallagher). Like OFC, BLA has been directly implicated in revaluation phenomena (Balleine, Killcross, & Dickinson, 2003; Corbit & Balleine, 2005; Gottfried, et al., 2003; LaBar, et al., 2001). Indeed, there is evidence that revaluation sensitivity may depend on a functional interaction between BLA and OFC (Baxter, Parker, Lindner, Izquierdo, & Murray, 2000), suggesting that these structures might be most fruitfully regarded as two components within an integrated system for reward representation (Cavada, et al., 2000; Schoenbaum, Setlow, Saddoris, & Gallagher, 2003).9

Figure 2 summarizes the proposed correspondences between elements of our model and functional neural structures. At one level, these parallels simply recapitulate existing ideas concerning the functional roles of the implicated brain areas. However, because we have drawn the parallels in the context of an explicit algorithmic model, what results is a proposal concerning the way that the relevant neural structures interact to support goal-directed decision making. Of course, this account is specified at a very high level of abstraction. What we ultimately need is an account of the computations carried out by the neurons residing in each of these anatomical regions. In the next section, we extend the present account to make contact with this level of description.

Neural Network Model

The pivotal operation in our graphical framework (as in many applications of probabilistic graphical models) involves computing a marginal distribution for each variable the graph contains. What is required, in order to translate our account into neural terms, is an account of how this marginalization operation might be carried out in a neural network. Fortunately, a number of recent theoretical papers have addressed just this problem (Beck & Pouget, 2007; Deneve, 2008; Lee & Mumford, 2003; Litvak & Ullman, 2009; Ma, Beck, Latham, & Pouget, 2006; Pouget, Dayan, & Zemel, 2003; Rao, 2006). One approach that is particularly well suited to the present application was proposed by Rao (2005). Rao focused on a classic algorithm for marginalization in graphical models, known as belief propagation (Pearl, 1988). Belief propagation operates through message passing: Each variable node in the network sends to each of its neighbors a vector-valued message, the components of which encode specific marginal probabilities. The outgoing messages at each node are computed by combining incoming messages with information stored locally at the node. After the information from each node propagates throughout the network, the messages converging at each node can be combined to compute the marginal distribution for the pertinent variable (for full details of the algorithm, see Koller & Friedman, 2009; Pearl, 1988).

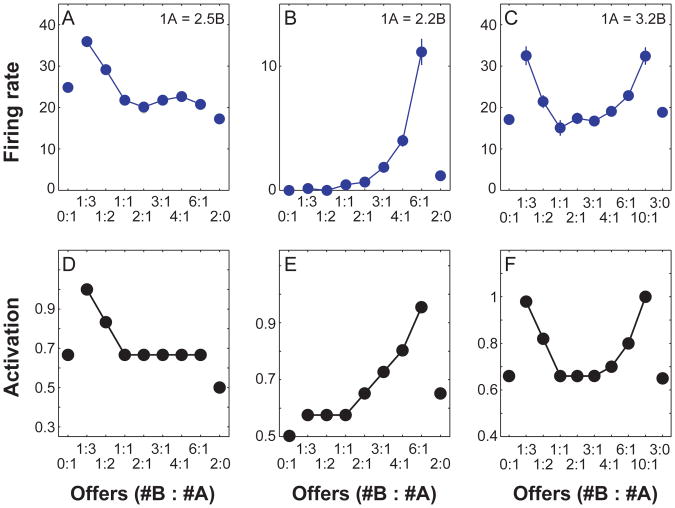

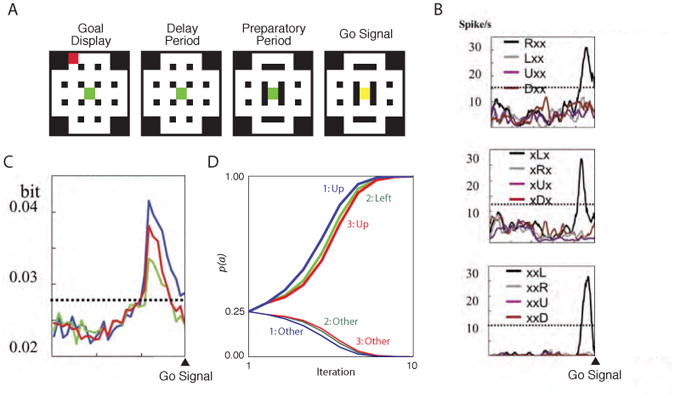
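The message-passing scheme just described can be sketched in a few lines. The code below is a generic sum-product implementation for a tree-structured pairwise model, offered as an illustration under stated assumptions rather than as the implementation used in the simulations: the function names, the dictionary-based graph encoding, and the four-node chain with its probability tables are all hypothetical. Observing reward is encoded as a local potential of `[0, 1]` on the reward node.

```python
import numpy as np
from itertools import product

def belief_propagation(unary, pairwise, n_iters=None):
    """Sum-product message passing on a tree-structured pairwise model.

    unary    : dict node -> 1-D array (information stored locally at the node)
    pairwise : dict (i, j) -> 2-D array indexed [x_i, x_j]
    Returns dict node -> marginal distribution.
    """
    neighbors = {n: [] for n in unary}
    psi = {}
    for (i, j), table in pairwise.items():
        neighbors[i].append(j)
        neighbors[j].append(i)
        psi[(i, j)], psi[(j, i)] = table, table.T
    # One message per directed edge, initialised to uniform.
    msgs = {(i, j): np.ones(len(unary[j])) for i in unary for j in neighbors[i]}
    n_iters = n_iters or len(unary)  # enough sweeps to span any tree on these nodes
    for _ in range(n_iters):
        new = {}
        for (i, j) in msgs:
            # Combine local information at i with messages from all neighbors but j.
            combined = unary[i].copy()
            for k in neighbors[i]:
                if k != j:
                    combined = combined * msgs[(k, i)]
            m = combined @ psi[(i, j)]   # sum over the states of node i
            new[(i, j)] = m / m.sum()    # normalise for numerical stability
        msgs = new
    marginals = {}
    for i in unary:
        belief = unary[i].copy()
        for k in neighbors[i]:
            belief = belief * msgs[(k, i)]
        marginals[i] = belief / belief.sum()
    return marginals

def brute_force_marginals(unary, pairwise):
    """Reference answer: enumerate every joint assignment."""
    nodes = list(unary)
    marg = {n: np.zeros(len(unary[n])) for n in nodes}
    for assignment in product(*[range(len(unary[n])) for n in nodes]):
        x = dict(zip(nodes, assignment))
        p = np.prod([unary[n][x[n]] for n in nodes])
        for (i, j), table in pairwise.items():
            p *= table[x[i], x[j]]
        for n in nodes:
            marg[n][x[n]] += p
    return {n: m / m.sum() for n, m in marg.items()}

# Hypothetical chain: policy - action - state - reward, with reward observed.
unary = {
    "policy": np.array([0.5, 0.5]),   # prior over two policies
    "action": np.ones(2),
    "state": np.ones(2),
    "reward": np.array([0.0, 1.0]),   # evidence: reward = 1
}
pairwise = {
    ("policy", "action"): np.eye(2),                         # p(action | policy)
    ("action", "state"): np.array([[0.8, 0.2], [0.3, 0.7]]), # p(state | action)
    ("state", "reward"): np.array([[0.8, 0.2], [0.1, 0.9]]), # p(reward | state)
}

marginals = belief_propagation(unary, pairwise)
exact = brute_force_marginals(unary, pairwise)
print(marginals["policy"], exact["policy"])
```

Because the example graph is a tree, the marginals returned by message passing coincide with those obtained by exhaustive enumeration; on graphs with loops the same updates yield only an approximation.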

The propagation of messages in belief propagation is manifestly similar to the propagation of activation within a neural network; indeed, the algorithm was originally inspired by neural network research (Weiss & Pearl, 2010). Making good on this similarity, Rao (2005) suggested how networks of biological neurons might directly implement the belief propagation algorithm, applying the resulting approach to several specific problems, including evidence integration in perceptual decision making. Briefly, Rao's (2005) proposal was that each variable in the underlying graph is represented by a group of neurons, each coding for a particular message component in its instantaneous firing rate. The passage of messages between neighboring variables translates to synaptic transmission of firing-rate information, with synaptic weights and dendritic operations10 helping to transform the set of incoming messages into new outgoing messages.