Summary

The dorsal anterior cingulate cortex (dACC) has a near-ubiquitous presence in the neuroscience of cognitive control. It has been implicated in a diversity of functions, from reward processing and performance monitoring to the execution of control and action selection. Here, we propose that this diversity can be understood in terms of a single underlying function: allocation of control based on an evaluation of the expected value of control (EVC). We present a normative model of EVC that integrates three critical factors: the expected payoff from a controlled process, the amount of control that must be invested to achieve that payoff, and the cost in terms of cognitive effort. We propose that dACC integrates this information, using it to determine whether, where and how much control to allocate. We then consider how the EVC model can explain the diverse array of findings concerning dACC function.

The dorsal anterior cingulate cortex (dACC), spanning the cingulate gyrus and sulcus from the plane of the anterior commissure to the genu of the corpus callosum (Figure 1), is one of the most heavily studied regions of the brain and yet remains one of the least clearly understood. Although there has recently been an explosion of research on the role of dACC in cognition and behavior, this has led to a proliferation of diverging theories concerning its function. The dACC has been proposed to play a key role in pain processing, performance monitoring, value encoding, decision making, emotion, learning, and motivation. A precise and coherent account of dACC function seems as elusive now as it did in the earliest days of theory development.

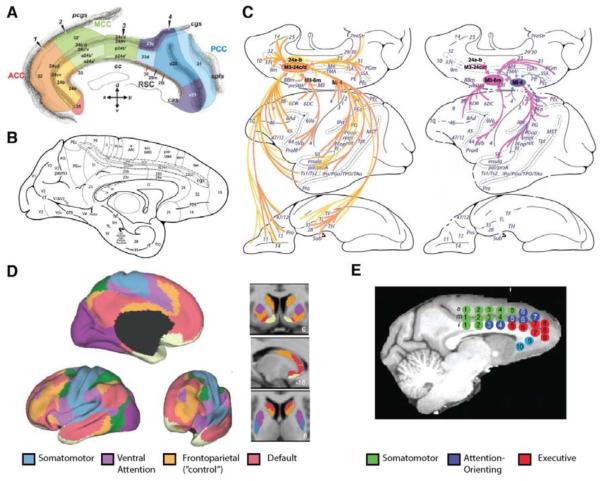

Figure 1. Anatomy and connectivity of the dorsal anterior cingulate cortex (dACC).

A-B) Cytoarchitectonic subdivisions of human (A) and macaque (B) medial prefrontal cortices. The cingulate sulcus (cgs) has been opened up in both. dACC typically refers to areas 24a-d and the dorsal extent of area 32 (32’ in A, 32(s) in B). Panel A focuses specifically on paracingulate regions of the medial surface, and the color-coding reflects Vogt et al.’s (2004) four-region model. The region referred to as human dACC throughout the main text is the anterior portion of mid-cingulate cortex (aMCC), encompassing an area Picard and Strick (1996) referred to as the rostral cingulate zone (RCZ). C) Cortical projections to regions of dACC (left; areas 24a-b in yellow, areas 24c-d in orange) and more posterior regions of dorsomedial PFC (right; supplementary and primary motor cortices in pink and purple, respectively) in the macaque (cf. panel B). Relative to the more posterior regions, projections to dACC are much more widespread and include regions of orbital and rostrolateral PFC, temporal and parietal cortices, and insula. D-E) Patterns of resting-state functional connectivity estimated in human (D) and macaque (E) brains using fMRI. The colors in panel D label seven networks within which activity between the regions (of a given color) is highly correlated at rest. Under this parcellation scheme, regions of dACC span the frontoparietal network (orange; often referred to as the “control” network) and the ventral attention network (violet). Panel E shows a parcellation of resting-state connectivity networks focused on the connectivity of cingulate cortex regions-of-interest with the rest of the brain. Patterns of connectivity for the “executive” network (shown in red) and the “attention-orienting” network (dark blue), particularly within lateral PFC, suggest potential homologues with human frontoparietal and ventral attention networks. However, exact boundaries and homologies between dACC across species remain ambiguous (see, e.g., Cole et al., 2009).
Panel A reprinted from Palomero-Gallagher et al. (2009) by permission of John Wiley and Sons; panels B-C reprinted from Morecraft et al. (2012), © (2012), modified with permission from Elsevier; panel D cortical and striatal connectivity reprinted from Yeo et al. (2011) and Choi et al. (2012), respectively, with permission from The American Physiological Society; panel E reprinted from Hutchison et al. (2011), by permission of Oxford University Press.

Two opposing tendencies appear to have slowed progress toward an integrated understanding of dACC function. One has been to base theoretical analyses on too narrow a subset of empirical findings, while another has been to embrace a wide range of empirical findings, but to reduce them to a single basic computation at the cost of oversimplifying dACC function. Here, we propose an integrative account of dACC function that strives to avoid these pitfalls.

We build on one observation which appears to be widely and consistently agreed upon: that dACC is engaged by tasks that demand cognitive control. Broadly, this can be defined as the set of mechanisms required to pursue a goal, especially when distraction and/or strong (e.g., habitual) competing responses must be overcome. Numerous meta-analyses of the neuroimaging literature have confirmed the dACC’s involvement in control-demanding tasks (Nee et al., 2007; Niendam et al., 2012; Ridderinkhof et al., 2004; Shackman et al., 2011) and these have been supplemented by evidence of a causal relationship between dACC and cognitive control. For instance, using diffusion tensor imaging (DTI), Metzler-Baddeley and colleagues (2012) showed that older adults with lower white matter integrity in the anterior cingulum bundle (the white matter bundle projecting to/from dACC) performed more poorly on control-demanding tasks.

Despite the strong consensus that dACC is involved in cognitive control, there is little agreement about the specific function(s) it subserves. Here, we synthesize a number of existing proposals concerning the role of dACC into a single theoretical construct and show how this can be reconciled with empirical findings concerning dACC function. Specifically, we propose that the dACC integrates information about the rewards and costs that can be expected from a control-demanding task, in order to estimate a quantity we refer to as the expected value of control (EVC). Put simply, EVC represents the net value associated with allocating control to a given task. We propose that dACC estimates this quantity in order to determine whether it is worth investing control in a task, how much should be invested and, when several potential tasks are in contention, which is the most worthwhile. We assume that this information is used to select among competing tasks and allocate the appropriate amount of control to performance of the one selected. This proposal ascribes to dACC a specific decision making function regarding the allocation of control that is distinct from other control-related functions, such as the valuative ones that provide input to the decision and the implementational ones responsible for executing it; these are presumed to be subserved by other neural mechanisms.

We begin by establishing some foundational points concerning cognitive control and its constituent functions that are necessary for framing the EVC theory and our consideration of dACC. We then introduce the basic elements of the EVC theory. Finally, we review key findings and existing theoretical proposals from the dACC literature, relating these to the EVC theory.

1. The computational basis of cognitive control

Processes that demand control are often distinguished from automatic processes, which involve associations that are sufficiently strong as to be resistant to distraction or interference (Botvinick and Cohen, in press; Cohen et al., 1990; Norman and Shallice, 1986; Posner and Snyder, 1975; Shiffrin and Schneider, 1977). A classic illustration of the distinction between controlled and automatic processing is provided by the Stroop task. Participants are shown a color word but asked to name the color of the font in which it is displayed. When the two dimensions disagree (e.g., the word GREEN shown in a non-green font), participants find it harder to name the color than when the two agree (e.g., the word RED shown in a red font). However, this interference effect does not occur when the task is, instead, to simply read the word. This difference between task conditions is explained by assuming that word reading is automatic (allowing the word to be processed even when the task is color naming), whereas color naming is controlled (preventing the color from being processed unless the task is to do so). This explanation is reinforced by the observation that, when presented with a conflict stimulus in the absence of a specific task instruction, people invariably read the word, illustrating the automatic, or “default”, nature of verbal responses to words. Verbally responding to the color requires an instruction and/or intention to do so, at least in the presence of conflicting word information.

1.1 A simple model of cognitive control

A computational model of the mechanisms underlying the Stroop task is shown in Figure 2A (Cohen et al., 1990). The model takes the form of a neural network, with units encoding stimulus features projecting forward to intermediate (associative) units, and then to output units representing verbal responses. The automaticity of the response to words is captured by strong connection weights along the pathway from word identity to verbal response. These also make it the default response (i.e., the response generated in the absence of any instruction). However, without any additional apparatus, the model would not be able to respond to the color of a conflicting stimulus. To address this, the model also includes a set of control units that represent the current task. When the unit representing the color naming task is active, this provides top-down support for units in the pathway from color to verbal response, priming these units and thereby permitting a response to the color even when there is conflicting information arriving along the word pathway. Thus, in this context, color naming can be considered to be a controlled process to the extent that a correct response to the color depends on activation of the color naming task unit.
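The architecture described above can be sketched in a few lines of code. The following is a minimal illustration, not the published implementation: the weights, bias, logistic activation and the ±weight input coding are all assumptions chosen only to reproduce the qualitative behavior (word reading as the default, color naming only with top-down support), and the function names are hypothetical.

```python
import math

# All parameter values are hypothetical, chosen only to reproduce the
# qualitative behavior described in the text (not the published values).
WORD_WEIGHT = 5.0    # strong stimulus-to-associative weight: automatic pathway
COLOR_WEIGHT = 2.5   # weaker weight: the pathway that depends on control
HIDDEN_BIAS = -4.0   # negative bias keeps associative units insensitive at rest
TASK_WEIGHT = 4.0    # top-down support delivered by an active task unit
RESPONSES = ("red", "green")


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def associative_unit(weight, matches, task_activity):
    """Activation of one associative unit.

    The stimulus contributes +weight if its feature matches the unit's
    preferred response and -weight otherwise (an assumed input coding).
    """
    stimulus_input = weight if matches else -weight
    return logistic(stimulus_input + TASK_WEIGHT * task_activity + HIDDEN_BIAS)


def response_activations(ink, word, color_task=0.0, word_task=0.0):
    """Summed input to each verbal response unit from both pathways."""
    return {
        label: associative_unit(COLOR_WEIGHT, ink == label, color_task)
        + associative_unit(WORD_WEIGHT, word == label, word_task)
        for label in RESPONSES
    }


def strongest_response(activations):
    return max(activations, key=activations.get)


# Conflict stimulus: the word GREEN displayed in red.
no_instruction = response_activations(ink="red", word="green")
color_naming = response_activations(ink="red", word="green", color_task=1.0)
print(strongest_response(no_instruction))  # the word pathway wins by default
print(strongest_response(color_naming))    # the color pathway wins with support
```

With these illustrative values, a half-strength color-naming unit (`color_task=0.5`) still loses to the word pathway, which anticipates the point that control signals have an intensity as well as an identity.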

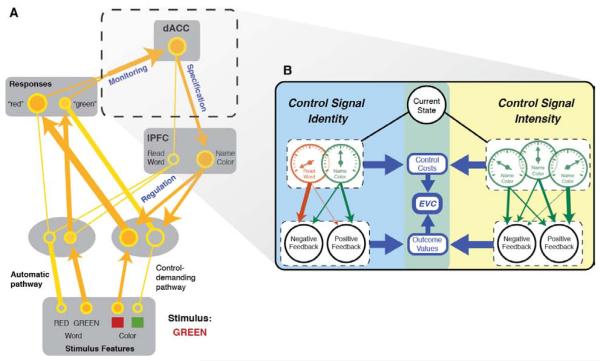

Figure 2. The Expected Value of Control (EVC) model applied to the Stroop task.

A) A model of the Stroop task illustrating the three major components of cognitive control: monitoring, specification and regulation. Thickness of the connections and size of the units denote the amplitude of the signal along each processing pathway. The figure also illustrates how the model can be extended to include conflict monitoring that, in turn, can be used to specify the strength of the control signal needed to support processing in the task-relevant pathway. Note that the model ascribes to dACC roles in monitoring and specification, and to the lPFC a role in regulation. B) The EVC model. Control signal specification involves choosing a control signal that maximizes EVC. For illustration, we diagram here the processes underlying specification for the Stroop color-naming task (illustrated in panel A). The objective is to select a control signal that maximizes the EVC. This, in turn, requires comparison of signals that differ in their identity (here, word-reading vs. color-naming task sets) and intensity (represented in the figure by meters). In both cases, the EVC estimation takes into account both the costs of each candidate signal and its expected payoffs and expected outcomes (arrow weights indicate transition probabilities). Although identity and intensity are segregated here for the purposes of illustration, they are fully integrated in the EVC estimation as specified in Eq. 1, and likely reflect the operation of a common set of mechanisms (see Section 5.3). Moreover, unlike specifying the intensity of a given control signal (right), the process of specifying control signal identities (left) does not require mutual exclusivity (i.e., multiple control signal identity-intensity pairings can be simultaneously specified as a single array).

The model shown in Figure 2A also includes a unit that serves a ‘conflict monitoring’ function, responding to coactivation of the network’s response units (see Botvinick et al., 2001). Such conflict is an indicator of inadequate control. For example, if the color naming unit is insufficiently activated, then activation of the response to the color will be weaker and compete less effectively with activation of the response to a conflicting word, allowing the latter to become more active. This coactivation will have two potentially adverse consequences for behavior. At best it will slow responding, since the correct response unit must overcome inhibitory competition from the incorrect one. At worst it will produce an error. These dangers can be ameliorated by increasing the activity of the color naming task unit. Thus, conflict serves as an indicator of the need for additional allocation of control.
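As a rough sketch of this monitoring loop, conflict can be quantified as the coactivation of response units and fed back to strengthen the task unit. The measure used here (the sum of pairwise products of response activations) and the update rule with its `gain`, `decay` and `ceiling` parameters are illustrative assumptions, not the specific equations of the published model.

```python
def conflict(response_activations):
    """Sum of pairwise products of response activations.

    The value is large only when more than one response is strongly
    active at the same time (an assumed measure of coactivation).
    """
    values = list(response_activations)
    return sum(
        values[i] * values[j]
        for i in range(len(values))
        for j in range(i + 1, len(values))
    )


def adjust_task_unit(activity, conflict_level, gain=0.5, decay=0.9, ceiling=1.0):
    """Hypothetical update: decay the task unit, then boost it with conflict."""
    return min(ceiling, decay * activity + gain * conflict_level)


well_controlled = conflict([0.9, 0.1])   # one response dominates
under_controlled = conflict([0.6, 0.5])  # both responses are active

# More conflict on the current trial yields a stronger task unit afterwards.
print(adjust_task_unit(0.5, well_controlled))
print(adjust_task_unit(0.5, under_controlled))
```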

1.2 Three component functions of cognitive control

This simple model of the Stroop task and conflict monitoring is of course not intended as a comprehensive model of cognitive control. However, the architecture of the model illustrates three core component functions of cognitive control (Figure 2A).

1. Regulation

The sine qua non feature of control is its capacity to govern or influence lower level information processing mechanisms, a function we refer to as regulation. In the language of engineering, activity of a task-demand unit represents a control signal, which determines the parameters for more basic processes (in this case, the sensitivity of the associative units). Note that this signal has two defining characteristics: its identity and its intensity (the strength of the signal, both in literal terms — e.g., level of activation of the task demand unit — and in terms of its impact on information processing). Control signals can determine a wide range of processing parameters, including thresholds and/or biases for responding (governing speed-accuracy tradeoffs; Bogacz et al., 2006; Wiecki and Frank, 2013), templates for attention or memory search (Desimone and Duncan, 1995; Olivers et al., 2011; Polyn et al., 2009), and modulators of emotion (Johns et al., 2008; McClure et al., 2006). In each case, a distinction can be made between signal identity (the parameter targeted) and signal intensity (the degree to which the parameter is displaced from its default value).

2. Specification

In order for regulation to occur, a critical step is for an appropriate control signal to be chosen: Control requires a decision on which, if any, controlled task should be undertaken, and on how intensively it should be pursued. We refer to this decision-making function as control signal specification, which must determine the identity and intensity of the desired control signal. Importantly, control signal specification should be distinguished from regulation, which consists of implementing the specified control signal so as to actually effect the changes in information processing required for the task. This distinction between specification (the decision process) and regulation (the process that mediates its effects) is central to the EVC theory. While both are essential components of the control system, the EVC theory ascribes to dACC a role in specification but not regulation, as we discuss below.

3. Monitoring

In order to specify the appropriate control signal, and deploy regulative functions in an adaptive manner, the system must have access to information about current circumstances and how well it is serving task demands. Detecting and evaluating these requires a monitoring mechanism. The conflict-detection component in the Stroop model provides one example of such a monitoring function and how it can guide specification: the occurrence of response conflict indicates that insufficient control is being allocated to the current task (see Botvinick, 2007; Botvinick et al., 2001; Botvinick et al., 2004). In this instance, conflict indicates the need to re-specify control signal intensity. However, conflict is just one among many signals that can indicate the need to adjust intensity. Others include response delays, errors, negative feedback and the sensation of pain, and these signals all carry information about performance within a task and how to specify control signal intensity. Monitoring must also consider information relevant to the specification of control signal identity; that is, to task choice. Such information can come from external sources (e.g., explicit instructions, cues indicating new opportunities for reward, or the sudden appearance of a threat) or internal ones (e.g., diminishing payoffs from the current task indicating it is no longer worth performing, recollection of another task that needs to be performed, etc.). In all of these cases, monitoring must be responsive to, but should be distinguished from, the sensory and valuative processes that represent the actual information relevant to specification. Thus, just as we distinguish between specification and regulation on the efferent side of control, we distinguish between monitoring and valuation on the afferent side. In each case, the EVC theory ascribes to dACC a role in the former, but not the latter.

1.3 Optimization, motivation, and the cost of cognitive control

Research on control focused initially on regulative and monitoring mechanisms, but growing attention is being paid to the problem of control-signal specification. Work in this area has been driven increasingly by ideas from research on reward-based decision making and reinforcement learning. One emerging trend has involved reframing control-signal specification as an optimization problem, shaped by learning or planning mechanisms that serve to maximize long-term expected reward (Bogacz et al., 2006; Dayan, 2012; Hazy et al., 2007; O’Reilly and Frank, 2006; Yu et al., 2009). Under this view, cognitive control can be defined as the set of mechanisms responsible for configuring behavior in order to maximize the attainment of reward. This definition accords well with the definition of control in other fields, most notably control theory in engineering, and from this perspective, cognitive control can be viewed not only as adaptive, but also as motivated.

An emphasis on motivation also aligns with the ubiquitous observation that the exertion of cognitive control carries an inherent subjective cost. From the earliest definitions, controlled processing was described as effortful, and like physical effort, mental effort is assumed to carry intrinsic disutility. That is, people spontaneously seek to minimize it. Recent empirical work bears out this assumption, linking effort specifically to the exertion of cognitive control (Kool and Botvinick, in press; Kool et al., 2010). Human decision-makers show a bias against tasks demanding top-down control, and within certain bounds they will delay task goals or even forego reward in order to avoid such tasks (Dixon and Christoff, 2012; Kool et al., 2010; Westbrook et al., in press). These effects imply an intrinsic ‘cost of control’, which scales with the intensity of the control required to perform the task (Dixon and Christoff, 2012; Kool et al., 2010). These ideas, combined with the idea that control signals are specified based on the reward potential of the task they support, suggest that the allocation of control is driven by a cost-benefit analysis, weighing potential payoffs against attendant costs, including those inherently associated with the exertion of control itself.

1.4 The neural substrates of cognitive control

Previous work has established links between components of the Stroop model and specific neural structures involved in cognitive control. In particular, lateral prefrontal cortex (lPFC) together with associated structures (e.g., basal ganglia and brainstem dopaminergic nuclei) have been proposed to implement the regulative component of the model (Braver and Cohen, 2000; Cohen and Servan-Schreiber, 1992; Frank et al., 2001; Miller and Cohen, 2001), while dACC has been proposed to implement the monitoring component (Botvinick, 2007; Botvinick et al., 2001; Botvinick et al., 2004). According to this mapping, the key step of control-signal specification arises in the communication from dACC to lPFC (Botvinick et al., 2001; Kerns et al., 2004). That is, the model assigns to the dACC responsibility for monitoring and specification, evaluating current demands for control and using the relevant information to decide how to allocate control. The specified control signals are then implemented by lPFC and associated structures, which are assumed to be responsible for the regulative function of control — that is, actually effecting the changes in processing required to perform the task. The EVC model elaborates this proposal, structuring it in a normative description of how both the identity and the intensity of control signals are determined and placing new emphasis on optimization (i.e., reward maximization) in understanding the relationship, within dACC, between monitoring and specification.

2. Control-signal specification based on the expected value of control

The operation of cognitive control, as we have characterized it, involves deciding what control signal should be selected (i.e., its identity) and how vigorously this control signal should be engaged (i.e., its intensity) (Figure 2B). We propose that the brain makes this two-part decision in a rational or normative manner to maximize expected future reward. To make this idea precise, we will express the choice of what and how much to control in formal terms, borrowing approaches that reinforcement learning and optimal control theory have applied to analogous problems of motor action selection.

We begin by defining a control signal to be an array variable with two components: identity (e.g., ‘respond to color’ or ‘respond to word’) and intensity. Determining the expected value of each control signal requires integration over two sources of value-related information. First, it must consider the overall payoff that can be expected from engaging this control signal, taking into account both positive and negative outcomes that could result from performing the corresponding task. Second, as discussed above, it must take into account the fact that there is an intrinsic cost to engaging control itself, which scales with the intensity of the signal required. Taken together, these two components determine what we will refer to as the expected value of control (EVC), which can be formalized as follows (see also Figures 2B and 4A-B):

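$$\mathrm{EVC}(\mathit{signal}, \mathit{state}) = \left[\sum_i \Pr(\mathit{outcome}_i \mid \mathit{signal}, \mathit{state}) \cdot \mathrm{Value}(\mathit{outcome}_i)\right] - \mathrm{Cost}(\mathit{signal}) \tag{Eq. 1}$$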

As indicated by the arguments on the left hand side, the EVC is a function of two variables, signal and state. Signal refers to a specific control signal (e.g., designating a particular task representation and its intensity). State refers to the current situation, spanning both environmental conditions and internal factors (e.g., motivational state, task difficulty, etc.). Finally, outcomes are subsequent states that result from the application of a particular control signal in the context of the current state, each with a particular probability (Pr); for example the occurrence of a correct response or of an error. Since outcomes are themselves states, the terms ‘state’ and ‘outcome’ in Eq. 1 can also be thought of as ‘current state’ and ‘future state.’ The Value of an outcome is defined recursively as follows:

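$$\mathrm{Value}(\mathit{outcome}) = \mathrm{ImmediateReward}(\mathit{outcome}) + \gamma \max_i \left[\mathrm{EVC}(\mathit{signal}_i, \mathit{outcome})\right] \tag{Eq. 2}$$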

where ImmediateReward can be either positive or negative (for example, in the case of an error, monetary loss or pain; the term ‘reward’ is borrowed from reinforcement learning models but can be understood more colloquially as ‘value’). Note that the maximization of EVC in the final term is over all feasible control signals (indexed by i), with outcome serving in place of the current state. The estimation of outcome value thus folds in the EVC of control signals implemented in future states. The parameter γ is a discount factor, between zero and one, controlling how much the current decision weighs future rewards relative to more immediate ones. The significance of this final term is that it links outcome value (and thus the EVC) not only to immediate rewards, but also to predictable future events and their associated rewards.
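The mutual recursion between EVC and outcome value can be made concrete with a small worked example. The sketch below assumes a hypothetical two-step task in which a correct response opens a second, bonus opportunity; the transition probabilities, rewards, costs and the convention that terminal outcomes contribute no future EVC are all illustrative assumptions rather than values from the model.

```python
# Hypothetical two-step task: state -> signal -> [(probability, outcome)].
TRANSITIONS = {
    "trial": {
        "weak": [(0.6, "correct"), (0.4, "error")],
        "strong": [(0.9, "correct"), (0.1, "error")],
    },
    # A correct response opens a second, bonus opportunity.
    "correct": {
        "weak": [(0.6, "bonus"), (0.4, "no_bonus")],
        "strong": [(0.9, "bonus"), (0.1, "no_bonus")],
    },
}
IMMEDIATE_REWARD = {"correct": 1.0, "error": -1.0, "bonus": 2.0, "no_bonus": 0.0}
COST = {"weak": 0.1, "strong": 0.8}  # assumed cost of each control signal


def evc(signal, state, gamma):
    """Probability-weighted outcome values minus the cost of the signal."""
    payoff = sum(p * value(outcome, gamma) for p, outcome in TRANSITIONS[state][signal])
    return payoff - COST[signal]


def value(outcome, gamma):
    """Immediate reward plus the discounted best EVC available from the outcome."""
    signals = TRANSITIONS.get(outcome, {})
    # Assumption: terminal outcomes offer no further control signals.
    best_future = max((evc(s, outcome, gamma) for s in signals), default=0.0)
    return IMMEDIATE_REWARD[outcome] + gamma * best_future


for gamma in (0.0, 0.9):
    best = max(TRANSITIONS["trial"], key=lambda s: evc(s, "trial", gamma))
    print(gamma, best)
```

With these numbers, a fully myopic agent (γ = 0) prefers the weak signal, whereas an agent that weighs the future bonus (γ = 0.9) prefers the strong one, which is the sense in which the final term links EVC to predictable future events.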

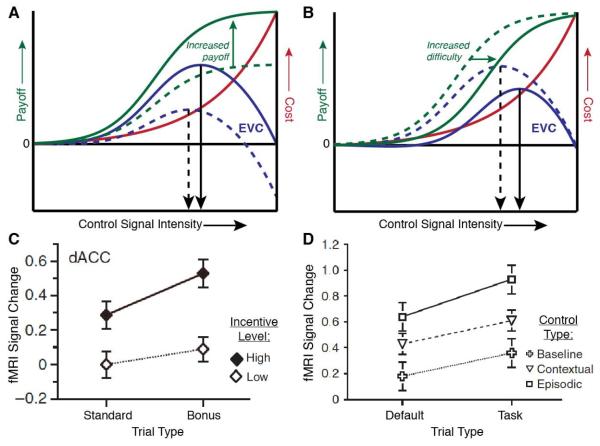

Figure 4. The influence of incentives and task difficulty on control allocation and dACC activity.

The EVC model predicts shifts in control intensity in response to changes in task incentives (panel A) and in task difficulty (B). In each case, control intensity is specified based on a maximization of the EVC (blue curves). As indicated in Eqs. 1 and 2, the EVC depends, in turn, on both the expected payoffs and costs for candidate control signals (see also Figure 2B). Payoffs (green curves) can vary with signal intensity due to resulting changes in task performance. For example, a stronger control signal might yield more accurate performance, and therefore greater payoffs. However, the inherent cost of control (red curves) also rises with control signal intensity. A) An increase in task incentives affects the payoff curve. Here, we consider a laboratory scenario in which monetary reward for each correct response shifts from a lower amount (dashed green curve) to a higher amount (solid). When integrated with cost information (red curve), this results in a shift in the EVC function (dashed blue curve to solid blue curve), and a resulting shift in the signal intensity that maximizes the EVC (dashed to solid black arrow). B) An increase in task difficulty reduces the expected payoff for any given control signal intensity (shift from dashed to solid green curve). In the present scenario this is due to a reduction in the probability, for any given signal intensity, of a correct response. The shift in the payoff curve, when integrated with cost (red curve), again yields a change in the EVC function (dashed to solid blue) and a shift in the EVC-maximizing control signal intensity (dashed to solid black arrow). C-D) Kouneiher and colleagues (2009) found that dACC activity tracked both of these EVC-relevant variables.
They had participants perform a letter discrimination task and showed that dACC activity increased with the overall incentive level for the current block (C), whether or not the higher incentive was available for the current trial (Standard trials did not offer additional incentives for correct performance but Bonus trials did). They also found that dACC was modulated by the difficulty of a given trial (D; Default trials always had the same response mapping, obviating any additional letter discrimination, Task trials required using a letter discrimination rule based on the letter color, and this color-rule mapping was either stable throughout the session [Baseline/Contextual] or varied by block [Episodic]). See Sections 6 and 7 for additional details. Panels C-D reprinted by permission from Macmillan Publishers Ltd: Nature Neuroscience, Kouneiher et al. (2009), © (2009).

The final term in Eq. 1 captures the intrinsic cost of control, which is presumed to be a monotonic function of control-signal intensity (although for a richer model, see Kool and Botvinick, in press). In sum, Eq. 1 says that the EVC of any candidate control signal is the sum of its anticipated payoffs (weighted by their respective probabilities) minus the inherent cost of the signal (a function of its intensity).
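A single-step worked example may help make this summary concrete. The function name and the numbers below are hypothetical; the computation is simply the probability-weighted sum of outcome values minus the cost of the candidate signal.

```python
def expected_value_of_control(outcome_probs, outcome_values, cost):
    """Probability-weighted sum of outcome values minus the signal's cost."""
    if abs(sum(outcome_probs.values()) - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    payoff = sum(p * outcome_values[outcome] for outcome, p in outcome_probs.items())
    return payoff - cost


# Hypothetical candidate signal: 80% chance of a correct response.
probs = {"correct": 0.8, "error": 0.2}
values = {"correct": 1.0, "error": -0.5}
evc = expected_value_of_control(probs, values, cost=0.25)
# 0.8 * 1.0 + 0.2 * (-0.5) - 0.25, i.e. approximately 0.45
print(round(evc, 2))
```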

Control-signal specification involves the identification of a combination of signal identity and intensity that will yield the greatest value. We propose that the control system accomplishes this by comparing the EVC across a set of candidate control signals, and seeking the optimum:

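$$\mathit{signal}^{*} \leftarrow \arg\max_i \left[\mathrm{EVC}(\mathit{signal}_i, \mathit{state})\right] \tag{Eq. 3}$$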

Once it has been specified, the optimal control signal (signal*) is implemented and maintained by mechanisms responsible for the regulative component of control, which guide information processing in the service of task performance. This continues until a change in the current state — detected through monitoring — indicates that the previously specified control signal is no longer optimal (either in terms of its identity or intensity), and a new signal* should be specified.
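The specification step, and its repetition when the state changes, can be sketched as an exhaustive search over candidate identity-intensity pairs. Everything specific here is an assumption made for illustration: the logistic link between intensity and accuracy, the quadratic cost function, the reward and difficulty values, and the grid of 21 intensity levels. An exhaustive grid search is used only for clarity and is not a claim about how the search is carried out neurally.

```python
import math


def p_correct(intensity, difficulty):
    """Assumed link between control intensity and accuracy."""
    return 1.0 / (1.0 + math.exp(-6.0 * (intensity - difficulty)))


def cost(intensity):
    """Assumed cost of control, increasing monotonically on [0, 1]."""
    return 0.8 * intensity ** 2


def evc(identity, intensity, state):
    task = state[identity]
    return task["reward"] * p_correct(intensity, task["difficulty"]) - cost(intensity)


def specify(state, levels=21):
    """Return the (identity, intensity) pair with the highest EVC."""
    candidates = [
        (identity, k / (levels - 1)) for identity in state for k in range(levels)
    ]
    return max(candidates, key=lambda signal: evc(signal[0], signal[1], state))


# Hypothetical state: color naming is instructed (rewarded) but difficult.
state = {
    "color_naming": {"reward": 1.0, "difficulty": 0.5},
    "word_reading": {"reward": 0.2, "difficulty": 0.0},
}
print(specify(state))

# Monitoring detects a change in state (a larger incentive for color naming),
# so the control signal is specified again.
richer_state = dict(state, color_naming={"reward": 2.0, "difficulty": 0.5})
print(specify(richer_state))
```

Under these assumptions the selected identity stays the same when the incentive rises, but the EVC-maximizing intensity increases, in line with the shift illustrated in Figure 4A.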

3. An EVC model of dorsal anterior cingulate function

Drawing upon the theoretical constructs laid out above, we suggest that dACC function can be understood in terms of monitoring and control-signal specification. Specifically, we propose that the dACC monitors control-relevant information, using this to estimate the EVC of candidate control signals, selecting an optimum from among these, and outputting the result to other structures that are directly responsible for the regulative function of control (such as lPFC). Critically, we propose that the dACC’s output serves to specify both the identity and intensity of the optimal control signal. Thus, the dACC influences both the specific content of control (e.g., what task should be performed, or parameter should be adjusted) and also, by way of its intensity, the balance between controlled and automatic processing, taking into account the inherent cost of a control signal of the specified intensity.

The EVC model shares elements both with our own and other theories concerning the mechanisms underlying cognitive control and action selection, as we shall emphasize. The value of the EVC model lies not in the novelty of its individual ingredients, but in its explicit formalization of these ingredients in a way that allows for their integration within a single coherent framework. The theory helps synthesize central concepts in theories of cognitive control (the distinction between controlled and automatic processing, the relationship between the monitoring and specification functions of control, the relevance of both identity and intensity in control signal specification, and the costs of control) into an overarching account that is mechanistically explicit, computationally coherent, and that does justice to the wide array of findings that have been reported concerning dACC function.

4. Monitoring functions of the dACC: Supporting the calculation of EVC

Estimates of EVC require two key pieces of information: the current state (i.e., information concerning current task demands, processing capacity and motivational state) and the value of potential outcomes that may occur given each candidate control signal, taking into account their likelihood of occurrence and anticipated worth.

The EVC model proposes that dACC monitors such present-state and outcome-value information, garnered from other regions (such as orbitofrontal, ventromedial prefrontal and insular cortex), as a basis for computing and maximizing the EVC. A range of empirical evidence is consistent with the idea that dACC is responsive to each of these two types of information.

4.1. dACC and the monitoring of state information

Computing the intensity and the identity of the optimal control signal requires different types of information about present state. For example, the presence of conflict may indicate the need to increase the intensity of the control signal, whereas an unexpected environmental cue may indicate the need to change the identity of the control signal (e.g., to perform a more rewarding task). The evidence strongly suggests that dACC is sensitive to state information that serves both of these needs.

State information relevant to control signal intensity: conflict monitoring

As noted above, conflict can provide important information about the demands of the current task and the intensity of control that should be allocated. Increasing control intensity will generally improve performance. However, specifying the optimal control-signal must also take into account the cost of control, which also increases with intensity (Eq. 1). That is, control signals should be just strong enough to accomplish task objectives but no stronger (Figure 4). Given this, it is critical to determine the control demands of a task. Explicit outcomes provide one source of such information (e.g., feedback concerning performance); however, such information is not always available. Conflicts that arise during processing represent one source of internally available information useful for this purpose. As illustrated by the Stroop model, conflict during processing can provide an indication of the need to allocate additional control, much as an overt error would do. In fact, conflict serves as an earlier, and potentially more sensitive, signal of the need for control than explicit error feedback (Yeung et al., 2004).

Both empirical and computational modeling work strongly support the role of dACC in conflict monitoring. The first imaging study of the Stroop task (Pardo et al., 1990) reported dACC activity in response to conflict stimuli. A number of subsequent studies provided additional evidence that dACC activity is specifically associated with the presence of conflict in processing (Carter et al., 1998; Carter et al., 2000), and that it can be dissociated from the regulative functions of control (Botvinick et al., 1999; Egner and Hirsch, 2005a, b; Kerns, 2006; Kerns et al., 2004; Macdonald et al., 2000; however, see Figure 3 and Section 7.2). These studies focused on tasks that involved conflict among competing responses, using classic paradigms such as the Stroop task, Simon task, and Eriksen flanker task (see meta-analyses in Laird et al., 2005; Nee et al., 2007; Ridderinkhof et al., 2004). However, subsequent studies have extended the association between dACC and conflict processing to a much wider range of tasks, showing that it is also sensitive to conflicts that arise in perceptual discriminations (Ho et al., 2009; Krebs et al., 2012; Woolgar et al., 2011), language processing (Barch et al., 2000; Snyder et al., 2011), value-based decisions (Blair et al., 2006; Marsh et al., 2007; Pochon et al., 2008), moral judgments (Greene et al., 2004), social judgment (Cunningham et al., 2004), memory retrieval (Guerin and Miller, 2011), and strategy selection (Venkatraman et al., 2009).

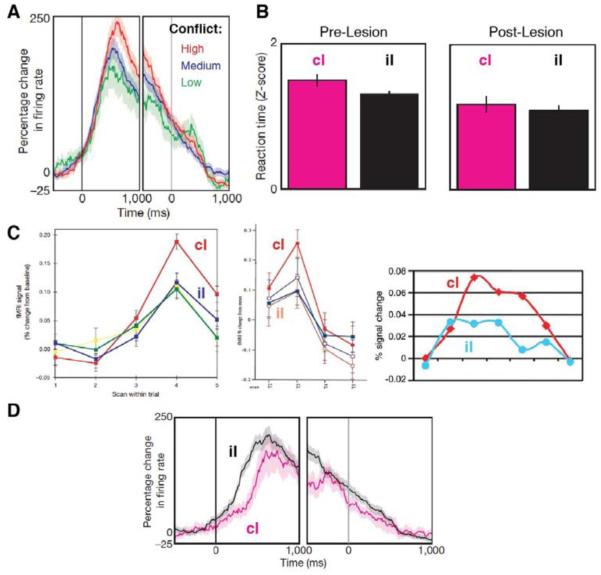

Figure 3. The relationship of conflict adaptation effects in human dACC to control monitoring versus specification.

A) Measuring from single units in human dACC, Sheth et al. (2012) found a parametric effect of current trial conflict (example neuron shown), an effect that has been widely reported in neuroimaging studies (see Section 4.1). Left and right sides of this figure plot firing rate changes aligned to stimulus and response onsets, respectively. Note that this effect alone can be indicative of either monitoring of demands and/or specification of different intensities of control accordingly. B) Left: This group also found evidence of conflict adaptation (Gratton et al., 1992), with high-conflict (incongruent) trials requiring greater control, and therefore exhibiting longer RTs, when following a low-conflict (congruent) trial (cI) than when following another incongruent trial (iI). Right: This behavioral effect was abolished after these individuals underwent cingulotomy. C) Previous fMRI studies have tied sequential adjustment effects to a particular pattern of responses in dACC: greater activity on cI than iI trials. This pattern has been observed in numerous experiments using different tasks and manipulations, including Botvinick et al., 1999 (left), Carter et al., 2000 (center), and Kerns et al., 2004 (right). It has been interpreted as reflecting a monitoring function, since greater dACC activity was observed under conditions of high conflict but low control. D) Strikingly, single unit recording data from Sheth et al. show the opposite pattern, with higher firing rates on iI than cI trials, a pattern consistent with control-signal specification. The presence of both monitoring and specification signals in dACC is consistent with the EVC theory. Determining why one function manifests in fMRI and the other in single-unit recording presents an important challenge for further research, and the EVC model may be of use in guiding such investigations. Panels A, B, and D reprinted by permission from Macmillan Publishers Ltd: Nature, Sheth et al. (2012), © (2012). 
Panel C: [left] reprinted by permission from Macmillan Publishers Ltd: Nature, Botvinick et al. (1999), © (1999); [center] from Carter et al. (2000), © (2000) National Academy of Sciences, USA; [right] from Kerns et al. (2004), with permission from AAAS.

The majority of evidence linking dACC to conflict monitoring has come from human neuroimaging studies. However, two recent studies have used direct neuronal recordings to test this relationship. In one study (Sheth et al., 2012), patients awaiting cingulotomy performed a Stroop-like interference task. fMRI identified conflict-related activity in a dACC region targeted for surgical resection. During the surgery itself, single-unit recording within the same region revealed firing-rate responses to conflict, providing unusually direct evidence for dACC involvement in conflict monitoring (Figure 3).

Another study provided evidence for neuronal responses to conflict in the macaque (Amemori and Graybiel, 2012). In this experiment, monkeys made choices between receiving a small reward or a larger one paired with an aversive stimulus (air-puff to the eye). Neuronal responses in pregenual ACC — a region potentially homologous to conflict-associated regions of human dACC (see Figure 1E; Hutchison et al., 2011) — tracked the subjective similarity of a given set of option values (and thus decision conflict). Variation in decision conflict accounted for variance in the firing rate of neurons in this area independently of reward, air puff magnitude, overall expected utility, or response time.

Computational modeling work has provided convergent support for the idea that dACC activity is responsive to the degree of conflict elicited by the task (Botvinick et al., 2001). Models of conflict monitoring have accounted not only for task conditions that elicit dACC activity, but also the dynamics of that activity (Cockburn and Frank, 2011; Yeung et al., 2004).
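One way such models quantify conflict is as an energy-like measure over simultaneously active, mutually inhibiting response units. The sketch below is a simplified illustration of that idea; the uniform inhibitory weight of -1 and the activation values are assumptions chosen only for demonstration.

```python
def response_conflict(activations, inhibition=-1.0):
    """Energy-style conflict: minus the sum over distinct pairs of a_i * a_j * w_ij."""
    total = 0.0
    n = len(activations)
    for i in range(n):
        for j in range(i + 1, n):
            total -= activations[i] * activations[j] * inhibition
    return total

congruent = response_conflict([0.9, 0.1])    # one response clearly dominates
incongruent = response_conflict([0.6, 0.5])  # two responses compete
```

Conflict is near zero when a single response dominates and grows as incompatible responses become co-active, which is the property that makes it usable as an internal index of control demand.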

The idea that conflict monitoring provides an internal index of task difficulty is also consistent with the ubiquitous observation that dACC activity is closely associated with the cognitive demands of a task (Botvinick, 2007; Duncan, 2010; Nachev et al., 2007; Paus et al., 1998; Venkatraman and Huettel, 2012). This includes demands that are increased by responses that are sequential or depend on complex rule structure versus simple and isolated ones (e.g., Kouneiher et al., 2009; Shima and Tanji, 1998); novel versus familiar or habitual responses (e.g., Procyk et al., 2000); larger vs. smaller option sets (e.g., Barch et al., 2000; Marsh et al., 2007; Snyder et al., 2011); the accumulation of evidence over the course of making a decision (e.g., Gluth et al., 2012; Landmann et al., 2007); or the requirement for internally generated responses versus externally cued/guided ones (e.g., Fleming et al., 2012; Shima and Tanji, 1998; Walton et al., 2004).

Despite the wealth of evidence that dACC is responsive to conflicts in processing, this idea has not been without controversy (Cole et al., 2009; Ito et al., 2003; Mansouri et al., 2009; Nachev, 2011; Nakamura et al., 2005). Early debates focused on whether dACC is responsive to conflict versus explicit failures in performance (i.e., errors) and/or negative feedback. There now seems to be general consensus that, consistent with the EVC model, dACC is responsive to both (e.g., Nee et al., 2011). More recently, it has been suggested that dACC activity reflects “time-on-task” irrespective of conflict, errors, or even error likelihood (Grinband et al., 2011b), and that it is more closely tied to task maintenance or attention that endures over the course of even simple tasks. However, the theoretical analyses that have led to this conclusion have been challenged (Brown, 2011; Yeung et al., 2011; see also Grinband et al., 2011a). Furthermore, we note that this interpretation of dACC function, more closely aligned with the regulative component of control, is difficult to square with much of the literature we will review in the remaining sections. For instance, it fails to account for dACC responses to the value of outcomes or for conditions in which dACC activity is uncorrelated with, or even negatively correlated with, RT (e.g., Cavanagh et al., 2011; Gluth et al., 2012; Guerin and Miller, 2011; Sheth et al., 2012; Van Maanen et al., 2011). In contrast, while the EVC model predicts that dACC responses reflecting its monitoring function may correlate with RT, it also predicts conditions under which this should not necessarily occur, as discussed further below.

State information relevant to control signal identity

So far, our consideration has focused on state information relevant to deciding how much control to allocate; that is, the specification of control signal intensity. However, knowledge concerning the current situation or state is equally important for deciding which task to pursue; that is, the specification of control signal identity. The dACC sits in an ideal location for gathering such state information (Morecraft et al., 2012; Shackman et al., 2011; Weston, 2012; Figure 1C). Inputs from cortical areas associated with high-level perception give it immediate access to information about external task cues, and inputs from structures such as the amygdala and insula give it access to information about motivational states that may favor particular lines of behavior. Although the impact of such inputs on dACC activity has been relatively little studied, the information they carry would be of obvious relevance to selection among control signal identities. Consistent with this, dACC appears to differentiate representations of signal identity, including representations of response rules (Dixon and Christoff, 2012; Durstewitz et al., 2010; Johnston et al., 2007; Matsuzaka et al., 2012; Womelsdorf et al., 2010), task sets (Forstmann et al., 2006; Haynes et al., 2007) and specific actions (Hampton and O’Doherty, 2007; Isomura et al., 2003; for reviews see Morecraft and Tanji, 2009; Rushworth et al., 2004; Sakai, 2008). Taken together, such findings support the idea that the dACC registers state information directly relevant to the specification of control-signal identity.

4.2. dACC monitoring of outcome information

Estimation of the EVC requires not only information about the present state, but also information about potential outcomes and, critically, the positive or negative value associated with those outcomes. In order to be sensitive to such information, the dACC should register both the anticipated value of outcomes ahead of their occurrence and their value when they actually occur.

Negative-valued outcomes

Numerous neuroimaging findings have demonstrated dACC responses to negative outcomes. These range from the most concrete, such as pain (reviewed in Shackman et al., 2011), errors in task performance (e.g., Brown and Braver, 2005; Holroyd and Coles, 2002), monetary loss (Blair et al., 2006; Kahnt et al., 2009; Liu et al., 2011), and the presentation of threatening stimuli (e.g., Mobbs et al., 2010), to more abstract outcomes such as social rejection (Eisenberger and Lieberman, 2004; Kawamoto et al., 2012), a loss by a favored sports team (Cikara et al., 2011), pain experienced by another individual (Botvinick et al., 2005; Lamm et al., 2010), and even the hypothetical death of strangers (Shenhav and Greene, 2010). These findings are paralleled by direct neuronal recordings in non-human species, which have demonstrated responses in dACC to errors (Amiez et al., 2005; Ito et al., 2003; Niki and Watanabe, 1979; Totah et al., 2009), losses or less-than-anticipated gains (Ito et al., 2003; Kennerley et al., 2011), and cues predictive of aversive outcomes (Gabriel and Orona, 1982; Amemori and Graybiel, 2012).

Conflict, in addition to its role in signaling task difficulty, may itself constitute an aversive outcome (Botvinick, 2007; Fritz and Dreisbach, 2013; Hirsh et al., 2012). Amemori and Graybiel’s (2012) study provided evidence in support of this assertion, showing that patterns of activity for ACC neurons that coded positively for conflict functionally clustered with those that coded for magnitude of punishment.

Positive-valued outcomes

There is also a growing body of findings indicating that dACC is responsive to positive outcomes. Direct neuronal recordings have consistently identified responses to rewarding events, often among units interdigitated with those responsive to negative outcomes. This includes neurons responsive to the magnitude and probability of rewards, including to hypothetical rewards (for a recent review see Wallis and Kennerley, 2011). Human neuroimaging studies have also provided evidence for reward-related signals in dACC (Knutson et al., 2005; Kouneiher et al., 2009; meta-analysis in Bartra et al., 2013).

Control-relevance of outcome value

A simple interpretation of the findings above might be that dACC responds to the value of any event. However, the EVC model makes a more specific claim: dACC should be selectively responsive to the value of events that are relevant to the allocation of control. To engage dACC, a valenced event need not necessarily pertain to the current task, but it should pertain to some potential control-demanding task that could currently be executed. Although this prediction has not been well-tested in the literature, there is evidence that dACC is more sensitive to outcomes when they are tied to actions, or stimuli that demand an action, than when they are only tied to non-imperative stimuli (for reviews see Rangel and Hare, 2010; Rushworth et al., 2011; Wallis and Kennerley, 2011). Furthermore, there is evidence that dACC responses to outcomes diminish when there is a decline in demand for control. For example, fMRI studies have shown that dACC engagement falls progressively with extended practice on a cognitive task (Chein and Schneider, 2005; Chein and Schneider, 2012). Similarly, feedback-related dACC activity is observed in tasks that require subjects to search for the correct response from a set of options, but is diminished when they are allowed to repeat the correct response a number of times before outcome contingencies change (reviewed in Khamassi et al., 2010). Landmann and colleagues (2007) found the same pattern of dACC activity in a task for which participants had to progressively discover the correct sequence of button presses, also through trial and error (see also Procyk et al., 2000). They showed that dACC activity was greater during search than after discovery of the correct sequence, and that during search it correlated with the amount of information carried by feedback at each step of the current sequence.

Note that, insofar as the dACC takes account of control-relevant outcome information in estimating EVC, it should therefore predict subsequent shifts in control based on such information. There is robust evidence for such a link, as will be discussed below.

Reward-prediction error signals

As articulated in Eq. 2, the value term in the EVC expression refers not only to the immediate reward associated with an outcome, but also to the expected future rewards. This is important, because it allows control-signal specification to be based on delayed outcomes. Readers familiar with reinforcement learning will recognize this particular formulation of value from that context (Sutton and Barto, 1998). In reinforcement learning models, estimates of state value are typically shaped not directly by raw representations of reward, but instead by reward-prediction errors (PE), signals indicating the extent to which experienced rewards are better or worse than expected.
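For readers less familiar with this formalism, the following is a minimal sketch of a temporal-difference update in the style of Sutton and Barto (1998). The two-step "cue then outcome" episode, the state names, and the learning-rate and discount values are illustrative assumptions.

```python
def td_update(values, state, reward, next_state, alpha=0.1, gamma=0.9):
    """One temporal-difference update; returns the reward-prediction error."""
    delta = reward + gamma * values[next_state] - values[state]
    values[state] += alpha * delta
    return delta

# Hypothetical episode: a cue state leads to an outcome state that pays 1.0.
values = {"cue": 0.0, "outcome": 0.0, "end": 0.0}
errors = []
for _ in range(200):
    td_update(values, "cue", 0.0, "outcome")
    errors.append(td_update(values, "outcome", 1.0, "end"))
```

The prediction error at the outcome is large when the reward is unexpected and shrinks toward zero as it becomes predicted, while the cue acquires a discounted share of the future reward. This is how value estimates come to reflect delayed outcomes.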

A number of findings indicate the occurrence of PE signals in the dACC. The earliest evidence came from EEG recordings demonstrating an event-related potential (ERP) with a frontomedial source that occurs in response to negative outcomes. This was dubbed the feedback-related negativity (FRN; Miltner et al., 1997), referring to its occurrence in response to negative feedback such as the indication of an error in task performance or a monetary loss following a gamble (Gehring and Willoughby, 2002). Critically, the FRN has been found to be sensitive to the expectations established by local context (Holroyd et al., 2004a; Jessup et al., 2010; Nieuwenhuis et al., 2005b). For example, in a gambling task, when the range of outcomes is from negative to neutral, the FRN is observed for losses but not neutral outcomes. However, when outcomes range from neutral to positive, the FRN is now observed for neutral outcomes, but not gains. Thus, expectations established by context dictate whether the FRN is elicited by a neutral outcome (see also Jessup et al., 2010). This provides strong evidence that the FRN reflects a PE, rather than a direct representation of absolute reward. Although the source of the FRN has not been definitively localized to dACC, neuroimaging studies have demonstrated activity in dACC under conditions that mimic those in which the FRN is observed (Holroyd et al., 2004b).
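The context dependence described here follows directly from defining the PE relative to an expectation. The sketch below assumes, purely for illustration, that the expectation is the mean of two equiprobable outcomes in each context; the outcome magnitudes are arbitrary.

```python
def prediction_error(outcome, context_outcomes):
    """PE relative to the mean of the (assumed equiprobable) outcomes in a context."""
    expected = sum(context_outcomes) / len(context_outcomes)
    return outcome - expected

loss_context = [-1.0, 0.0]  # outcomes range from loss to neutral
gain_context = [0.0, 1.0]   # outcomes range from neutral to gain

pe_neutral_in_loss = prediction_error(0.0, loss_context)
pe_neutral_in_gain = prediction_error(0.0, gain_context)
```

The same neutral outcome yields a positive PE in the loss context and a negative PE in the gain context, matching the pattern in which the FRN follows neutral outcomes only when they are the worse of the available alternatives.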

The FRN is closely related to another commonly observed ERP, the error-related negativity (ERN). This occurs following errors in speeded response trials even when explicit feedback is not provided. There is direct evidence that the ERN has its source in the dACC: Simultaneous recording of EEG and fMRI has shown that the magnitude of the ERN correlates with the BOLD signal from dACC on a trial-by-trial basis (Debener et al., 2005). As suggested earlier, direct neuronal recordings from regions of dACC have also revealed error-related responses in humans (Wang et al., 2005), monkeys (Emeric et al., 2008; Ito et al., 2003), and rats (Narayanan and Laubach, 2008) (however, see Cole et al., 2009 for discussion of homology). One influential interpretation of the ERN is that it reflects negative PEs (associated with the commission of an error) that are used to drive learning in the service of improving action selection (Holroyd and Coles, 2002). The EVC model is consistent with this hypothesis. However, it has been proposed that the ERN can also reflect post-response conflict, under conditions in which error information is not immediately available (Yeung et al., 2004). In this context, the ERN may reflect the role of dACC in conflict monitoring and control-signal specification, as discussed above.

In addition to these EEG findings, evidence for positive and negative PEs in dACC has been found with both direct neuronal recordings (Kennerley et al., 2011; Matsumoto et al., 2007; Quilodran et al., 2008) and fMRI (Amiez et al., 2012; Kahnt et al., 2011). Interestingly, this has suggested that dACC can also respond to PEs in an unsigned manner — that is, comparably to both positive and negative PEs. This has been shown through direct neuronal recordings (Bryden et al., 2011; Hayden et al., 2011a), and is consistent with EEG and fMRI studies showing elevated dACC activity in response to surprising outcomes (Cavanagh et al., 2012; Landmann et al., 2007; Nee et al., 2011; Wessel et al., 2012) and, more generally, following unanticipated shifts in task contingencies (Alexander and Brown, 2011; Behrens et al., 2007; Bland and Schaefer, 2011). These observations have inspired a recent model of dACC function by Alexander and Brown (2011), which suggests that dACC stores predicted associations between stimuli and response-outcome (RO) conjunctions, and signals any violations of these predicted S-RO relationships. Although this proposal is potentially compatible with the EVC model, the latter makes more direct contact with data concerning the consequences of surprise signaling, as we discuss further on.

5. Specification function of the dACC: Maximizing the expected value of control

The EVC model proposes that information provided by monitoring is used to determine when and how control signals should be adjusted in order to maximize the opportunity for reward, by specifying the optimal control-signal identity and intensity. While the mechanisms implementing specification along each of these dimensions may be tightly coupled or even the same, different circumstances may rely differentially upon these two dimensions of specification. For example, switching between two control-demanding tasks would rely more heavily on selecting a signal identity, while responding to increasing demands of the current task by augmenting control would rely more heavily on adjustments of intensity (Figure 2B).

The literature provides evidence that dACC is engaged in both types of specification, though most studies have focused on only one or the other. Accordingly, most theories have tended to ascribe to dACC a role in either task selection (identity specification) or modulation of control (intensity specification). The EVC model integrates these accounts, proposing that they refer to different dimensions of the same function. Accordingly, dACC should be responsive to circumstances that engage either or both. In the two sections that follow, we review the literature concerning the association of dACC with each of these two dimensions of control specification.

5.1. Specification of control signal identity

Among the earliest theories of dACC function were ones that proposed a role in action selection (Devinsky et al., 1995; Matsumoto et al., 2003; Rangel and Hare, 2010; Rushworth et al., 2007; Rushworth et al., 2004). More recent theories have elaborated this idea to include task selection (Holroyd and Yeung, 2012; Kouneiher et al., 2009; O’Reilly, 2010). These are commensurate with the role of dACC in the specification of control signal identity proposed by the EVC model. Some evidence for this comes from studies showing dACC selectivity for different control signal identities, including rules and task sets. However, the EVC model also requires that control signals be specified based on their expected value. This predicts that the dACC should exhibit responses that are both selective for a particular line of behavior and sensitive to the value of outcomes associated with that behavior. This prediction is consistent with the findings of several recent studies.

For example, when monkeys were required to choose between targets in a visual saccade task, overlapping populations of dACC neurons were found to encode the value and direction of the saccade chosen on a given trial (Cai and Padoa-Schioppa, 2012; Hayden and Platt, 2010). Kaping and colleagues (2011) demonstrated similar effects in a task involving covert shifts of visual attention, rather than explicit eye movements. In their study, a colored fixation cue at the start of each trial indicated which of two subsequently presented colored visual stimuli should be attended. The monkeys were then rewarded if they correctly reported whether the stimulus with the corresponding color rotated clockwise or counterclockwise. The amount of reward earned by a correct response was signaled by the color of the initial fixation cue. As in previous studies, overlapping neuronal populations in rostral dACC were found to encode the target of the attentional shifts and the value of those targets, independently of any overt saccade used to report movement direction.

These findings are consistent with a role for dACC in specifying control signal identity based on its expected value. However, an alternative interpretation is possible: they could instead reflect the state and/or outcome monitoring functions of dACC without reflecting a role in specification. Weighing against this more restrictive interpretation is the observation that activity of dACC neurons in the studies described above typically anticipated switches between actions and/or tasks (though see Cai and Padoa-Schioppa, 2012). Similarly, Womelsdorf and colleagues (2010) have shown that local field potentials (LFPs) in the theta band observed within macaque dACC could discriminate which of two stimulus-response mapping rules (pro- vs. anti-saccade) would be used prior to appearance of the stimulus. Furthermore, this rule selectivity was absent prior to error trials, consistent with the hypothesis that activity in dACC was required to specify the identity of the task-appropriate control signal. Interestingly, when rule-selective activity reemerged prior to a correct trial following an error, the selectivity was seen earlier than on correct trials that followed a previous correct one (see also Johnston et al., 2007). A subsequent study from this group used a similar task to provide causal support for this control specification role (Phillips et al., 2011). They found that stimulating dACC during the response preparation period significantly facilitated antisaccade performance (accelerating responses without increasing error rate), but had a less consistent influence on prosaccade performance, a complement to the impairments (slowing) previously found in human dACC lesion patients performing an antisaccade task (Gaymard et al., 1998).

Additional evidence consistent with identity specification comes from one of the most comprehensive analyses to date of human patients with focal brain lesions (Gläscher et al., 2012). This study combined data from four different set-shifting tasks into a single “cognitive control factor” and found that the poorest performance along this factor was associated with lesions in rostral dACC. These findings are consistent with a causal role for dACC in specifying control identities. They are also consistent with its role in specifying the intensity of those control signals.

5.2. Specification of control signal intensity

Motivation

A role in specifying control intensity is consistent with the earliest observations regarding dACC function, which ascribed to it a function in ‘motivation,’ driven in part by the observation that medial frontal damage can lead to gross deficits in motivated behavior (e.g., abulia; see Holroyd and Yeung, 2012). More recent proposals have suggested that dACC motivates or ‘energizes’ action or task engagement based on current incentives (Holroyd and Yeung, 2012; Kouneiher et al., 2009; Stuss and Alexander, 2007). In support of this, circumscribed lesions that encompass dACC produce longer overall reaction times (e.g., Alexander et al., 2007; Fellows and Farah, 2005), and higher false alarm rates (e.g., Løvstad et al., 2012; Tsuchida and Fellows, 2009). These are consistent with a role for dACC in specifying control intensity.

Adaptive adjustments in control intensity

Beyond this broad relationship to motivation, the EVC model makes the more specific proposal that the dACC is responsible for adjusting the intensity of control adaptively based on task demands. That is, not only must the control system determine what task is best to perform, but also the amount of control that must be allocated to that task so as to optimize EVC. This follows from the assumption that control is costly, as discussed earlier (see Figure 4). There is longstanding evidence for adaptive adjustments in control in the behavioral literature, for example changes in the speed-accuracy tradeoff observed following errors in simple decision tasks (see Danielmeier and Ullsperger, 2011). Gratton et al. (1992) suggested that such adaptive adjustments extend to the allocation of attention, showing that the response to an incongruent stimulus is faster when it follows another incongruent stimulus than when it follows a congruent one. This was interpreted as evidence of an enhancement of attention to the task-relevant dimension in response to the interference produced by a prior incongruent one.

In computational work, Botvinick et al. (2001) demonstrated that the behavioral effects described above could be explained by a mechanism that monitors conflict elicited by lapses in performance and/or interference and uses this to adjust the intensity of the task-relevant control signals in order to maintain task performance. However, the EVC model makes a stronger claim: that such adjustments serve to optimize the allocation of control. A modest but growing corpus of work has begun to address this stronger claim and its relation to dACC function.
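The logic of such a conflict-driven adjustment can be sketched as follows. This is an illustrative toy, loosely inspired by conflict-monitoring models rather than a reimplementation of any published one: the update rule, the hypothetical RT function, and every parameter value are assumptions.

```python
def update_control(control, conflict, decay=0.5, gain=1.0, baseline=0.2):
    """Blend the previous control level with a conflict-driven target (assumed form)."""
    return decay * control + (1.0 - decay) * (gain * conflict + baseline)

def incongruent_rt(control, base_rt=600.0, interference=200.0):
    """Hypothetical RT in ms: interference shrinks as control intensity grows."""
    return base_rt + interference / (1.0 + control)

start = 0.2
after_congruent = update_control(start, conflict=0.1)    # low conflict on trial n-1
after_incongruent = update_control(start, conflict=0.8)  # high conflict on trial n-1

rt_cI = incongruent_rt(after_congruent)    # incongruent trial after a congruent one
rt_iI = incongruent_rt(after_incongruent)  # incongruent trial after an incongruent one
```

Because high conflict on the preceding trial raises control, the simulated incongruent trial is faster after an incongruent trial (iI) than after a congruent one (cI), which is the sequential effect reported by Gratton et al. (1992).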

Optimization of control intensity

The most extensive analyses of control optimization have focused on simple two-alternative choice tasks, such as those used to demonstrate adaptive changes in the speed-accuracy tradeoff mentioned above. Such tasks have been modeled extensively using simple accumulator models, in which the intensity of the control signal influences two parameters of the decision process: the decision threshold and initial bias. Together, these determine the speed-accuracy tradeoff. Botvinick et al. (2001) showed that monitoring response conflict in such models and using this to adjust the intensity of the control signal accurately accounted for adaptive changes in the speed-accuracy tradeoff observed in behavior. In that model, the intensity of the control signal determined the decision threshold. More recently, formal analyses by Bogacz et al. (2006) have shown that there is an optimal threshold (i.e., speed-accuracy tradeoff) that maximizes reward rate for a given set of task conditions and similarly for initial bias. Furthermore, behavioral studies show that participants adapt their behavior to changes in task conditions in ways that often approximate adoption of the optimal threshold and bias (reviewed in Cohen and Holmes, in press). While most work addressing optimality of control has focused on adjustments of threshold and/or bias in simple two-alternative forced-choice tasks, at least one theoretical effort has addressed the optimal allocation of attention in a conflict task. Yu et al. (2009) determined the optimal strength with which attention should be allocated to the target stimulus in the Eriksen flanker task. They showed that this could be approximated by within-trial adjustments in the strength of attention based on conflict monitoring, and that this in turn accurately reproduced the dynamics of attentional allocation observed in the task.
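The existence of a reward-rate-maximizing threshold can be illustrated numerically. The sketch below uses the standard closed-form expressions for the error rate and mean decision time of an unbiased drift-diffusion process with symmetric thresholds; the drift, noise, and timing parameters are arbitrary assumptions, and a simple grid search stands in for the analytical treatment.

```python
import math

def error_rate(threshold, drift, noise):
    """Error probability of an unbiased drift-diffusion process."""
    return 1.0 / (1.0 + math.exp(2.0 * drift * threshold / noise ** 2))

def decision_time(threshold, drift, noise):
    """Mean decision time of the same process."""
    return (threshold / drift) * math.tanh(drift * threshold / noise ** 2)

def reward_rate(threshold, drift=1.0, noise=1.0, non_decision=0.3, delay=1.0):
    """Correct responses per unit time, with assumed non-decision time and delay."""
    er = error_rate(threshold, drift, noise)
    dt = decision_time(threshold, drift, noise)
    return (1.0 - er) / (dt + non_decision + delay)

thresholds = [0.01 * k for k in range(1, 301)]
best = max(thresholds, key=reward_rate)
```

With these parameters the reward rate peaks at an intermediate threshold: very low thresholds yield fast but near-chance responding, and very high thresholds yield accurate responses that take too long. Changing the assumed delay or drift shifts the optimum, which is the sense in which the optimal threshold depends on task conditions.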

Role of dACC in adaptive adjustments of control intensity

The findings of these theoretical and behavioral studies are consistent with the idea that the intensity of the control signal is adjusted to maximize EVC. The EVC model proposes that dACC mediates these adjustments, by monitoring for the conditions that require them, and specifying the necessary adjustments for other systems responsible for implementing them. This makes two predictions: first, that dACC should be responsive to conditions indicating the need to adjust control intensity; and, second, that it should be associated with the engagement of neural systems responsible for implementing these adjustments (i.e., the regulative function of control).

There is extensive evidence in support of the first prediction, indicating that dACC is responsive to conditions requiring adjustments of threshold and/or response bias, such as increases in time pressure and changes in prior probabilities (Bogacz et al., 2010; Forstmann et al., 2010; Forstmann et al., 2008; Ivanoff et al., 2008; Mulder et al., 2012; Van Maanen et al., 2011); as well as conditions requiring changes in the degree of attention, such as the cases of processing conflict described earlier.

There is also evidence in support of the second prediction. Several studies have shown that dACC interacts directly with structures proposed to implement changes of threshold, such as the subthalamic nucleus (Aron et al., 2007; Aron and Poldrack, 2006; Cavanagh et al., 2011; Jahfari et al., 2011; Wiecki and Frank, 2013), as well as those thought to influence response biases, such as dorsal striatum (Bogacz et al., 2010; Jahfari et al., 2011; Wiecki and Frank, 2013). There is also evidence that dACC is associated with adjustments in the strength of attention in conflict tasks. Several human neuroimaging studies have demonstrated a direct association between dACC responses to conflict on one trial, and subsequent increases in the activity of regions thought to be responsible for regulating attention and corresponding improvements in performance on the next trial (e.g., Cavanagh et al., 2009; Kerns, 2006; Kerns et al., 2004; King et al., 2010; Macdonald et al., 2000). In a recent study, Danielmeier and colleagues (2011) used a variant of the Simon task to study the relationship of dACC responses to conflict, performance, and activity in stimulus-specific regions of visual cortex. As had previously been found, dACC activity associated with errors predicted response slowing on the subsequent trial. Critically, however, it also predicted the degree to which activity increased in task-relevant and decreased in task-irrelevant processing regions, consistent with an increase in the control signal following errors. EEG studies have provided similar evidence, linking indicators of dACC responses (such as the ERN) to sequential adjustments in behavior following conflict and/or errors (Crottaz-Herbette and Menon, 2006; Forster et al., 2011). Such effects can also be found within a given trial. For example, Sohn et al. (2007) found that when participants were explicitly informed about the amount of conflict likely to arise on a given trial of a problem-solving task, anticipatory dACC activity predicted how efficiently conflict was resolved on that trial (see also Aarts et al., 2008). Finally, studies in non-human species have also provided evidence that responses in dACC predict changes in the amount of attention subsequently paid to a given stimulus or task dimension (Bryden et al., 2011; Narayanan and Laubach, 2008; Totah et al., 2009).

These studies provide convergent evidence for a correlation of responses in dACC with subsequent changes in performance and task-specific neural activity indicative of adjustments in the intensity of control. Sheth et al. (2012) provided evidence that dACC contributes causally to these adjustments. Patients about to undergo cingulotomy were studied using both fMRI and intracranial recordings while performing a conflict task. Preoperatively, participants exhibited the standard conflict adaptation effects in both behavior (e.g., faster RTs on high-conflict trials that followed a high-conflict versus a low-conflict trial; Figure 3B, left) and neuronal activity (differential dACC firing rates for this same contrast; though see Figure 3C-D for discussion of a surprising divergence from previous neuroimaging findings). Importantly, following cingulotomy this adaptation effect was no longer apparent, consistent with a causal role for this region in adaptively influencing control intensities (Figure 3B, right).

Attention for learning

Another context in which the dACC appears to play a role in specifying control intensity relates to its responses to surprising events. Research demonstrating unsigned PE signals in dACC has highlighted a potential connection with the Pearce-Hall model of learning, in which surprising outcomes trigger an intensification of attention, which in turn facilitates learning. To test this, Bryden and colleagues (2011) had rats poke their nose into a port to receive an odor instructing them where to obtain a reward, and found that rats were faster to poke their nose into this port on trials that followed a surprising outcome (an increase or decrease from expected reward). Critically, they found that responses in dACC to surprise on the preceding trial predicted the degree to which the animal hastened or slowed this orienting response on the trial that followed. Also consistent with an ‘attention for learning’ account, Behrens and colleagues (2007) showed that dACC responses to changes in task contingencies – specifically, to their volatility, which reflects the expected frequency of surprise – were associated with more robust learning. Such findings, and the attention-for-learning account overall, are consistent with the EVC model of dACC, insofar as the signals driving top-down attention and/or increases in learning rates may be considered as control signals.
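The logic of the Pearce-Hall account can be illustrated with a minimal sketch of a commonly used hybrid update rule, in which the unsigned prediction error sets the attention (associability) applied to learning on later trials. The parameter names and values (`kappa`, `gamma`, the starting associability) are illustrative assumptions, not estimates from the studies discussed above.

```python
def pearce_hall_step(value, associability, reward, kappa=0.5, gamma=0.3):
    """One trial of a Pearce-Hall style update.

    The signed prediction error updates the value estimate, scaled by the
    current associability; the unsigned prediction error then updates the
    associability used on the following trial.
    """
    delta = reward - value
    new_value = value + kappa * associability * delta
    new_associability = gamma * abs(delta) + (1.0 - gamma) * associability
    return new_value, new_associability

def associability_trace(rewards, value=0.0, associability=0.5):
    """Return the associability after each trial in a reward sequence."""
    trace = []
    for reward in rewards:
        value, associability = pearce_hall_step(value, associability, reward)
        trace.append(associability)
    return trace

# Hypothetical sequence: twenty predictable rewards, then an omission.
rewards = [1.0] * 20 + [0.0]
trace = associability_trace(rewards)
```

In this sketch associability declines as outcomes become predictable and rises again after the unexpected omission, which is the sense in which surprise can be said to intensify attention on the trial that follows.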

5.3. Default override

Thus far we have treated the specification of identity and intensity separately. In reality, however, the identity and intensity of the control signal must be jointly specified (see Figure 2). For example, to perform color naming in the Stroop task, the control system must specify both the identity of the control signal (the color naming task) and the intensity needed to overcome any conflict from the word. Such circumstances are representative of a broader class of conditions often described as default override. In general, this refers to situations in which the task to be performed is less automatic than the default behavior in that circumstance; that is, the behavior that would normally occur in the absence of control and is the source of conflict. Under such circumstances, adequate control is needed to override the default response and execute the specified task (see, e.g., Shah and Oppenheimer, 2008).

Some of the earliest neuroimaging studies of cognitive control established a role for dACC in overriding default behavior (e.g., Paus et al., 1993). The dACC’s involvement in overriding defaults has been seen not only when the participant is explicitly instructed to perform the non-default behavior, but also when they voluntarily make a choice that runs counter to current task- or context-defined defaults, including choices to go against gain vs. loss frames (De Martino et al., 2006), against the status quo (Fleming et al., 2010), against Pavlovian response-outcome associations (Cavanagh et al., 2013), or against a group decision (Tomlin et al., 2013). Three additional circumstances that involve default override, and that have begun to attract considerable attention, are exploration, foraging and intertemporal choice.

Exploration

Exploration refers to behavior that favors information gathering with the prospect that this will yield greater future reward, over the pursuit of behavior with known and usually more immediate reward (i.e., exploitation). People generally exhibit a strong bias toward the pursuit of more immediate reward, so exploitation can be considered the default. Choosing to explore therefore requires overriding this default, and thus allocating control. Accordingly, the EVC model predicts that exploration should engage dACC (for related accounts, see Aston-Jones and Cohen, 2005; Khamassi et al., 2010). This prediction was borne out in a study carried out by Daw et al. (2006). Participants chose among four options providing probabilistic rewards that varied gradually over time. Initially they sampled the different options (exploration) to determine which currently provided the greatest mean payoff, after which they repeatedly selected that one (exploitation) until it began to decline in value, or until they suspected that others might have increased in value. At that point, they chose to sample the other options again (exploration). Decisions to explore were associated with increased dACC activity. This association between dACC and exploratory behavior has been replicated in humans (Amiez et al., 2012; Cavanagh et al., 2012), and also demonstrated in monkeys (Procyk et al., 2000; Quilodran et al., 2008) and rodents (Karlsson et al., 2012).
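One common way to formalize the distinction between exploiting and exploring in a multi-option task of this kind is a softmax choice rule over the current value estimates, with any choice of an option other than the currently best-valued one counted as exploratory. The sketch below illustrates that idea only; the value estimates and temperature settings are hypothetical, and it does not reproduce the model fitting of any cited study.

```python
import math

def softmax(values, temperature=1.0):
    """Convert value estimates into choice probabilities."""
    highest = max(values)  # subtracted for numerical stability
    weights = [math.exp((v - highest) / temperature) for v in values]
    total = sum(weights)
    return [w / total for w in weights]

def is_exploratory(choice, estimates):
    """A choice is exploratory if it is not the best-valued option."""
    return estimates[choice] < max(estimates)

def explore_probability(estimates, temperature):
    """Total probability of choosing any option other than the best one."""
    probs = softmax(estimates, temperature)
    return sum(p for i, p in enumerate(probs) if is_exploratory(i, estimates))

# Hypothetical current estimates of the mean payoff of four options.
estimates = [4.0, 6.5, 5.0, 3.0]
p_explore_noisy = explore_probability(estimates, temperature=2.0)
p_explore_greedy = explore_probability(estimates, temperature=0.5)
```

Lowering the temperature concentrates choice on the best-valued option, so exploratory choices become rarer; in this framing, choosing against the default (the greedy option) is what would call for control.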

Foraging

Like exploration, foraging involves searching for an alternative source of reward. However, in this case it typically involves an initial cost and is also usually driven by knowledge of the reward structure of the environment (whereas exploration is directed at acquiring such knowledge). Nevertheless, like exploration, foraging involves overriding current pursuit of more immediate reward to pursue an alternative that promises greater future reward, and thus relies on the allocation of control. Accordingly, the EVC model predicts that foraging should also engage the dACC. This prediction is supported by a number of studies. For instance, Kolling et al. (2012) had participants make a series of choices between pairs of options that yielded probabilistic payoffs with known means. However, before each choice, participants were given the opportunity to switch the pair of options in front of them to a different pair that could yield higher average rewards, but at a cost for the switch. This was designed to be analogous to situations in which an animal’s decision to forage carries a near term cost but a long term benefit. Activity in dACC was found to closely track the extent to which the mean value of the alternative options was greater than that of the current options, and to correlate with the decision to switch option sets in such cases (see also Boorman et al., 2013; Rushworth et al., 2012; Wunderlich et al., 2009). This is consistent with the EVC model, which predicts that dACC should track the value of control-demanding behavior, and its selection over the current default. Animal studies have provided convergent findings. For example, Hayden et al. (2011b) found that macaque dACC neurons also track the value of foraging, and Li et al. (2012) found that dACC-lesioned rats forage for food substantially less than non-lesioned animals, while continuing to engage normally in other habitual or automatic behavior.
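The comparison at the heart of such a foraging decision can be written as a deliberately simplified rule: forage when the mean value of the alternative options, net of the switch cost, exceeds the mean value of the current options. The function names and numbers below are hypothetical and are not the analysis used in the studies cited above.

```python
def foraging_advantage(current_options, alternative_options, switch_cost):
    """Net advantage of switching to the alternative set of options.

    Positive values favor foraging; negative values favor staying with
    the current (default) options.
    """
    mean_current = sum(current_options) / len(current_options)
    mean_alternative = sum(alternative_options) / len(alternative_options)
    return mean_alternative - switch_cost - mean_current

def should_forage(current_options, alternative_options, switch_cost):
    """Return True when the net advantage of switching is positive."""
    return foraging_advantage(current_options, alternative_options, switch_cost) > 0

# Hypothetical mean payoffs for two situations.
poor_patch = should_forage([2.0, 3.0], [5.0, 6.0, 7.0], switch_cost=1.0)
rich_patch = should_forage([5.0, 6.0], [5.0, 6.0, 7.0], switch_cost=2.0)
```

In the first situation the alternatives are worth the cost of switching; in the second the current options are nearly as good and the cost is higher, so staying with the default is favored.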

Intertemporal choice

Finally, it is worth noting that, insofar as both exploration and foraging involve the comparative evaluation of longer term versus immediate payoffs, they both involve intertemporal choice. One universally observed finding in the literature on intertemporal choice is that people (like all other species) exhibit a strong immediacy bias. That is, they favor rewards that are immediate, even when later ones are worth considerably more. In this respect, behavior associated with immediate reward can be considered the default behavior in general, and thus should require control to be overcome. Consistent with this view, neuroimaging studies of intertemporal choice, beyond those focused on exploration or foraging, suggest that patient behavior (i.e., choices for longer term over immediate reward) relies on neural mechanisms associated with cognitive control (Figner et al., 2010; McClure et al., 2007; McClure et al., 2004), including the dACC. In these cases, as in general, the EVC model proposes that the role of dACC is to determine the EVC of the control-demanding behavior, and specify the control signal needed to pursue it. This assumes that it has access to information about the value of the options in contention that is represented in other structures, such as ventral regions of mPFC (Floresco et al., 2008; Haber and Knutson, 2010; Prévost et al., 2010; Rangel and Hare, 2010; Rushworth et al., 2011).
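The immediacy bias is often summarized with a discount function that reduces the subjective value of a reward as its delay grows. The sketch below assumes a hyperbolic form, which is a common choice in this literature but is an assumption here rather than something specified in the text; the amounts, delay, and discount rates are hypothetical.

```python
def hyperbolic_value(amount, delay, k):
    """Subjective value of a delayed reward under hyperbolic discounting."""
    return amount / (1.0 + k * delay)

def prefers_later(sooner_amount, later_amount, later_delay, k):
    """True if the delayed reward is valued above the immediate one."""
    return hyperbolic_value(later_amount, later_delay, k) > sooner_amount

# Hypothetical choice: 20 units now versus 30 units after 30 days.
impatient_choice = prefers_later(20.0, 30.0, later_delay=30.0, k=0.05)
patient_choice = prefers_later(20.0, 30.0, later_delay=30.0, k=0.01)
```

With the steeper discount rate the larger, later reward is worth less than the immediate one, so the default (immediate) option wins; with the shallower rate the patient option is preferred.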

6. dACC and the cost of cognitive control

The expected value of a control-demanding behavior depends not only on the rewards it promises, but also on the expenditures needed to procure those rewards; that is, it depends on the cost of control (Cost(signal) in Eq. 1). As reviewed earlier, behavioral evidence supports the idea that the exertion of control is associated with subjective disutility, manifest as the avoidance of control-demanding tasks (Kool and Botvinick, in press; Kool et al., 2010). The EVC model proposes that the dACC registers the costs of control in a manner that is proportional to the intensity of control and that it specifies control signals in a way that is sensitive to such costs. This proposal generates several predictions concerning dACC function and its relation to behavior.

Costs of control and control intensity

First, and most simply, the dACC should be sensitive to demands for control and/or to the intensity of the current control signal. As reviewed in the preceding sections, there is abundant evidence in support of this point. Second, the dACC should encode the exertion of control as costly. Evidence consistent with this idea has come from several recent studies. For example, Botvinick and colleagues (2009a) found that, during performance of a cognitively demanding task, a greater dACC response predicted decreased subsequent responses in nucleus accumbens to monetary rewards, interpreted as “payment” for the task. This effect is consistent with cognitive effort discounting; that is, a reduction in the subjective value attached to a reward based on cognitive costs borne to attain it. Other studies have shown that dACC responses to such costs predict subsequent decisions about control. In one, Magno and colleagues (2006) presented participants with a series of attentionally-demanding search arrays and, for each array, gave them the choice to identify the presence or absence of a target or to indicate that they would like to forgo the search on that trial. They found that dACC activity was highest on trials the participant actively chose to forgo rather than engage for potential reward (or loss). Similarly, McGuire and Botvinick (2010) found that the degree to which performance of a cognitively demanding task engaged dACC predicted the extent to which that same task would later be avoided. Collectively, these findings are consistent not only with dACC encoding of control costs, but also with a role for dACC in cost-sensitive control signal specification.

Figure 4 illustrates how the optimal control signal intensity predicted by the EVC model is determined by the relationships of control costs and payoffs to control signal intensity. These relationships determine the function relating EVC to intensity, and the optimum occurs at a point where the slope of that function is zero. Under plausible assumptions about the shape of the payoff and cost functions (see Kool and Botvinick, in press), the optimal control signal intensity will rise with the magnitude of task incentives (see Figure 4A-B). This predicts that dACC activity should grow both with task difficulty and with the stakes associated with task performance. This dual effect was reported by Kouneiher and colleagues (2009), who had participants perform a series of trials in which a colored letter cued them to perform a letter discrimination task or to simply press a single key unrelated to letter identity (‘default’ trials). Each trial was also cued with whether or not a correct response would carry a monetary bonus, and the value of these bonuses differed by trial block. The authors found that dACC activity increased with the difficulty of the trial as well as with the average incentive level for the trial block (regardless of whether a bonus was available on a particular trial; see Figure 4C-D).
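The dependence of the optimal intensity on incentives can be illustrated numerically. The sketch below treats EVC as expected payoff minus the cost of the control signal, and finds the intensity that maximizes it by grid search. The logistic payoff function, the quadratic cost function, and all parameter values are assumptions chosen for illustration; they are not the functional forms used in Figure 4 or in the cited work.

```python
import math

def success_probability(intensity, difficulty):
    """Assumed logistic link between control intensity and task success."""
    return 1.0 / (1.0 + math.exp(-(intensity - difficulty)))

def evc(intensity, incentive, difficulty, cost_weight=0.2):
    """Expected payoff minus an assumed quadratic cost of control."""
    payoff = incentive * success_probability(intensity, difficulty)
    cost = cost_weight * intensity ** 2
    return payoff - cost

def optimal_intensity(incentive, difficulty, step=0.01, max_intensity=10.0):
    """Grid search for the intensity with the highest EVC."""
    n_steps = int(round(max_intensity / step))
    grid = [i * step for i in range(n_steps + 1)]
    return max(grid, key=lambda u: evc(u, incentive, difficulty))

# Hypothetical conditions varying the stakes and the task difficulty.
low_stakes = optimal_intensity(incentive=5.0, difficulty=1.0)
high_stakes = optimal_intensity(incentive=10.0, difficulty=1.0)
harder_task = optimal_intensity(incentive=10.0, difficulty=2.0)
```

For these particular parameter choices the optimal intensity rises with the incentive and with difficulty. The effect of difficulty depends on the assumed payoff and cost functions: with a steeper cost function, greater difficulty can instead lower the optimal intensity, so it should not be read as a general property of the sketch.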

Control signal intensity and willingness to pay