Abstract

Purpose

To compare the educational effectiveness of two virtual patient (VP)-based e-learning strategies, versus no training, in improving physicians’ substance abuse management knowledge, attitudes, self-reported behaviors, and decision-making.

Method

The 2011–12 study was a posttest-only, three-arm, randomized controlled trial in 90 resident and 30 faculty physicians from five adult medicine primary care training programs. The intervention was one of two 2-hour VP-based e-learning programs, designed by national experts to teach structured screening, brief interventions, referral, and treatment skills. One program used traditional problem-solving with feedback (unworked example); the other began with an expert demonstration, followed by problem-solving with feedback (worked example). The main outcome measure was performance on the Physician’s Competence in Substance Abuse Test (P-CSAT, maximum score = 315), a self-administered, previously validated measure of physicians’ competence in managing substance abuse. Participants completed the P-CSAT immediately after training (control participants, after randomization) and again approximately three months later.

Results

Overall P-CSAT scores were virtually identical (means 202–211, P > .05) across the two intervention groups and the no-training control group at both times. Average faculty P-CSAT scores (221.9, 224.6) were significantly higher (P < .01) than resident scores (203.7, 202.5) at both times.

Conclusions

This study did not provide evidence that a brief worked example VP-based e-learning program or a traditional unworked VP-based e-learning program was superior to no training in improving physicians’ substance abuse management skills. The study did provide additional evidence that the P-CSAT distinguishes between physicians who should possess different levels of substance abuse management skills.

National recommendations for better substance abuse management training for primary care physicians emphasize structured screening, brief interventions, referral, and treatment (SBIRT).1,2 Efforts to encourage SBIRT training in medical education have, however, been hampered by a lack of resources and qualified faculty.3 A new and evolving strategy for dealing with resource constraints in substance abuse training is the use of online training (or “e-learning”).4 To date, however, evaluations of SBIRT e-learning programs have provided no more than observational data. Cook et al. have suggested supplanting these descriptive evaluations with more rigorous comparative studies that “…deepen our understanding and advance the art and science of medical education.”5

One increasingly used but understudied e-learning approach is the virtual patient (VP). VPs are interactive computer programs, based on real clinical cases, that have the benefit of being engaging, emphasizing decision-making, and allowing for presentation of clinical situations not commonly encountered in real life.6 Given the potential benefits of VP-based medical education and the key features of SBIRT training, there may be a good match between this technology and a pressing educational need for more substance abuse management training. Unfortunately, as Cook has noted, there are no clear specifications for the best approach to VP-based e-learning.7 Ideally, the process for developing a VP-based SBIRT e-learning program should be based on evidence derived from relevant comparative studies.

We investigated an important question about VP-based e-learning in the context of improving physicians’ substance abuse management skills: how much guidance should the experience incorporate? Should VP-based e-learning let learners watch experts manage typical cases before having them manage VPs on their own, or should learners be thrown directly into simulated real-life problem-solving and receive guidance afterwards? This question has been addressed in other educational areas, such as teaching algebra skills,8 and increasing evidence shows that the use of “worked examples” (that is, first watching experts work through examples), followed by problem-solving and feedback, is more effective than problem-solving alone followed by feedback.9 We compared a worked example approach to VP-based substance abuse training with an otherwise identical “unworked” approach in an academic setting. We hypothesized that the worked example approach would be more efficient and that both e-learning approaches would be better than no training at all.

Method

Study design

This was a randomized, controlled trial of two brief e-learning programs designed to improve the decision-making and management skills of primary care physicians and physicians-in-training in dealing with patients with substance abuse issues. The study used a posttest-only design to mitigate potential effects of pretest sensitization and cueing.10–12 The two e-learning programs were identical except that one program included two (out of five) worked example VPs, while the other was entirely unworked.

Participants

In 2011–12, we invited a pool of 815 potential participants, comprising residents in postgraduate years 1 through 3 and faculty physicians, from five geographically diverse adult primary care training programs to which study consultants had referred us (see Acknowledgments). Using internal e-mail and other communications from the residency program directors, we directed potential participants to a secure website where they could enroll and participate in the study. We offered a financial incentive of $100–$150 for completing the study. The study was reviewed and approved by each training program’s institutional review board.

Study hypotheses, sample size, and randomization

The primary study hypothesis was that participants completing either of the two VP-based e-learning programs would perform better on a standardized self-assessment measure of physician competence in substance abuse than participants who received no training. Secondary hypotheses were that participants who completed the two training programs would perform similarly to each other (non-inferiority), and that the worked example group would spend less time using the training program.

Sample size estimates were based on an educational outcome effect size of .80, consistent with previous findings of the effects of VP-based training in the health professions.7 Using a one-tailed independent sample t test, alpha of 0.05 and power of 0.95, the required sample size was 35 participants in each group.
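The figure of 35 per group can be checked by hand. The sketch below is a minimal illustration, assuming the normal approximation to the two-sample t test with Guenther's small-sample correction term; the paper does not state which formula or software produced its estimate, and the function name `n_per_group` is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float, power: float, one_tailed: bool = True) -> int:
    """Approximate per-group sample size for an independent-samples t test.

    Uses the normal approximation plus Guenther's correction term
    z_alpha**2 / 4, which brings the result close to the exact
    noncentral-t calculation for two equal-sized groups.
    """
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha) if one_tailed else z(1 - alpha / 2)
    z_beta = z(power)  # power = 1 - beta
    n = 2 * (z_alpha + z_beta) ** 2 / d**2 + z_alpha**2 / 4
    return math.ceil(n)  # round up to whole participants


print(n_per_group(d=0.80, alpha=0.05, power=0.95))  # 35
```

With these inputs the approximation gives about 34.5 participants, which rounds up to the 35 per group stated in the text.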

Potential study participants enrolled at the secure website, where they provided eligibility and demographic information. We stratified the eligible physicians (those who self-identified as residents in postgraduate years 1 through 3 or as physician faculty associated with study training programs) based on their training program, status (faculty or resident), and amount of prior training in substance abuse (≤ 8 hours or > 8 hours), and then randomly assigned them to either of the two training programs or to the control group (no training).
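The paper specifies the stratification factors but not the randomization mechanism. One common way to keep arms balanced within each stratum is permuted-block randomization; the sketch below assumes blocks of three (one slot per arm) and hypothetical record keys (`id`, `program`, `status`, `prior_hours`). It illustrates the idea and is not the study's actual procedure.

```python
import random
from collections import defaultdict

ARMS = ("worked", "unworked", "control")


def stratified_assign(participants, seed=None):
    """Assign participants to arms with permuted blocks inside each stratum.

    participants: iterable of dicts with hypothetical keys 'id', 'program',
    'status' ('faculty' or 'resident'), and 'prior_hours'.
    Returns {participant_id: arm}.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for p in participants:
        # Stratum = training program x status x prior training (> 8 hours or not).
        key = (p["program"], p["status"], p["prior_hours"] > 8)
        strata[key].append(p["id"])

    assignment = {}
    for ids in strata.values():
        block = []
        for pid in ids:  # enrollment order within the stratum
            if not block:
                block = list(ARMS)
                rng.shuffle(block)  # fresh random ordering of all three arms
            assignment[pid] = block.pop()
    return assignment
```

Because every completed block contains each arm once, arm counts within any stratum never differ by more than one.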

Interventions

The two educational interventions used an interactive VP approach that followed 12 recommended best practices.13 All VPs were text-based, but also included four to six brief (20–60 seconds) video segments using professional actors to present the persona of the VP and illustrate non-verbal cues that may affect substance abuse management, such as patient affect. The “screenplays” on which the VPs were based ranged from 9,300 to 16,000 words.

The learning objectives, developed by a panel of national experts on substance abuse education (see Acknowledgments), were to improve physicians’ ability to: 1) screen for substance abuse disorders, 2) interpret screening results, 3) manage common substance abuse disorders in the outpatient setting, with emphasis on motivational interviewing as a brief intervention, and 4) re-evaluate responses to treatment and refer for additional management when necessary. These objectives are the fundamentals of the SBIRT approach. All VPs addressed at least three of the four learning objectives, and each objective was addressed in at least three of the five VPs. Each VP was developed over 15 months by one of three experts (RS, JPS, KS), reviewed for content and clinical accuracy by another of these three experts, and edited for consistency of presentation by a third reviewer (JMH).

The two educational VP programs differed in their approach: unworked versus worked. Participants in the unworked group were presented with all five VPs and instructed to manage them in any order. Those in the worked example group first encountered two VP scenarios that, as each case progressed, presented written and audio commentaries (by VP authors RS and JPS) instead of interactive problem-solving exercises. After viewing these two worked example VPs, participants were presented with the three remaining unworked VPs and instructed to manage them in any order.

Each of the five unworked VPs included 17–20 interactive problem-solving exercises. An internal scoring system, developed by the VP’s author, assigned points to each decision (+1, 0, −1; where +1 was an essential and correct choice and −1 was a poor or contraindicated choice), such as what interview questions to ask, tests to order, and therapies to institute. A virtual clock, with time assigned to choices, encouraged study participants to minimize unnecessary choices. Once each VP was completed, participants received standardized author-developed feedback on their management, based on their management choices and total point scores. The clock, scoring system, and author feedback were not used in the two worked example VPs.
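The scoring rules above can be summarized in a few lines. This is a minimal sketch, assuming each decision carries a point value (+1, 0, −1) and a virtual-clock charge; the `Choice` type, the `score_vp` function, and the example menu items and minute values are hypothetical and are not taken from the study's software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Choice:
    label: str
    points: int            # +1 essential/correct, 0 neutral, -1 poor or contraindicated
    virtual_minutes: int   # time charged to the virtual clock (hypothetical values)


def score_vp(selected, menu):
    """Summarize one learner's pass through an unworked VP.

    selected: Choice objects the learner picked, in order.
    menu: every Choice the VP offered (used to find missed essentials).
    """
    chosen = {c.label for c in selected}
    return {
        "total_points": sum(c.points for c in selected),
        "virtual_minutes": sum(c.virtual_minutes for c in selected),
        "poor_choices": [c.label for c in selected if c.points < 0],
        "missed_essentials": [c.label for c in menu
                              if c.points == 1 and c.label not in chosen],
    }


menu = [
    Choice("screening questions", +1, 3),
    Choice("routine lab test", 0, 10),
    Choice("contraindicated drug", -1, 5),
    Choice("brief intervention", +1, 8),
]
print(score_vp(menu[:3], menu))
```

A summary of this kind (total points, time used, poor choices, missed essential steps) is the sort of input from which standardized end-of-case feedback could be selected.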

The five VPs depicted 1) high-risk alcohol use in a 31-year-old white woman, 2) chronic alcohol dependence and mental health comorbidity in a 65-year-old black woman, 3) increasingly unhealthy alcohol use and denial in a 29-year-old white man (worked VP), 4) chronic pain and opioid-seeking in a 45-year-old white man (worked VP), and 5) possible attention deficit hyperactivity disorder and cannabis abuse in a 19-year-old white Latina woman.

Outcome measures

The primary educational outcome measure was the participant’s score on the self-administered Physicians’ Competence in Substance Abuse Test (P-CSAT). Results from this survey tool, which was developed independently of these educational programs, have been shown to correlate with other measures of physicians’ substance abuse management expertise.14

Briefly, the P-CSAT is a 63-item survey. Thirty items assess physicians’ knowledge, attitudes, and self-reported behaviors in dealing with substance abuse. A novel feature of the P-CSAT is that, even though these knowledge, attitude, and behavior items have correct or incorrect answers, responses are recorded on a 6-point Likert-type scale that reflects the respondent’s confidence in the answer. This approach widens the scoring range and, to the extent that it captures respondent confidence, may better predict performance.15 The P-CSAT also measures clinical decision-making skills in substance abuse management via 33 items in a script concordance test format, which presents respondents with clinical decision-making challenges answered on a 5-point Likert-type scale. Answers are calibrated against those of an external panel of experts: more than one choice can receive credit, but answers selected by more members of the reference panel receive greater credit. The script concordance test format has been shown in multiple settings to correlate well with higher levels of training, such as fellowship study, but it has not been well evaluated as an outcome measure for short-term training efforts.14 Total P-CSAT scores range from 0 to 315, with knowledge, attitudes, and self-reported behaviors subscale scores ranging from 0 to 150 and script concordance test subscale scores ranging from 0 to 165.
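The subscale ranges are consistent with 5 points per item (30 × 5 = 150 and 33 × 5 = 165). The paper does not give the exact script concordance formula, so the sketch below assumes the widely used aggregate scoring method: each answer earns credit in proportion to how many panelists chose it, scaled so that the modal panel answer earns the item maximum. The function names and the 10-member panel are hypothetical.

```python
from collections import Counter


def sct_item_key(panel_responses, max_points=5.0):
    """Build an aggregate scoring key for one script concordance item.

    Each response option earns credit proportional to how many panelists
    chose it, scaled so the modal (most popular) answer earns max_points.
    Options no panelist chose are absent from the key and earn 0.
    """
    counts = Counter(panel_responses)
    modal_count = max(counts.values())
    return {resp: max_points * n / modal_count for resp, n in counts.items()}


def sct_total(answers, keys):
    """Sum one respondent's credits across items (one key per item)."""
    return sum(key.get(ans, 0.0) for ans, key in zip(answers, keys))


# Hypothetical 10-member panel answering one item on a -2..+2 scale:
# six chose +1, three chose +2, one chose 0.
key = sct_item_key([+1] * 6 + [+2] * 3 + [0])
print(sct_total([1, 2, -1], [key] * 3))  # 7.5
```

Under this scheme the modal answer earns 5 points, a less popular answer earns partial credit, and an answer no expert chose earns nothing.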

We asked the study participants to complete an online version of the P-CSAT following completion of the training program and approximately three months later to assess their knowledge and skill retention. We asked the control participants to take the P-CSAT following randomization and requested that they return to the website three months later and complete it again.

The secondary educational outcome measures were the time spent taking the e-learning program and the number of sessions taken to complete the program. Participants were allowed to pause and re-enter the program, with paused periods not counted as program use. The program incorporated an internal clock (not the same as the virtual clock presented to the participant) that allowed five minutes of inactivity before presenting a warning. If the participant did not respond to the warning and remained inactive, the program was paused, and a new session started when the participant resumed the activity. The minimum number of possible sessions was one per VP, or five total.
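The session and time rules above can be expressed as a small log-processing routine. This is a minimal sketch under stated assumptions: activity is logged as hypothetical `(minute, vp_id)` events; the length of the post-warning grace period is not reported, so `grace` is a placeholder; and moving to a different VP is assumed to open a new session, consistent with the minimum of one session per VP.

```python
def summarize_use(events, warn_after=5.0, grace=1.0):
    """Count sessions and active minutes from a time-ordered activity log.

    events: list of (minute, vp_id) tuples sorted by time.
    warn_after: minutes of inactivity before the warning (5 in the study).
    grace: hypothetical minutes the program waits after the warning
           before pausing; the study does not report this value.
    """
    if not events:
        return 0, 0.0
    limit = warn_after + grace
    sessions, active = 1, 0.0
    for (t_prev, vp_prev), (t, vp) in zip(events, events[1:]):
        gap = t - t_prev
        paused = gap > limit
        if not paused:
            active += gap       # only unpaused time counts as program use
        if paused or vp != vp_prev:
            sessions += 1       # a pause, or moving to another VP, opens a new session
    return sessions, active


log = [(0, 1), (2, 1), (4, 1), (30, 1), (33, 1), (34, 2), (39, 2)]
print(summarize_use(log))  # (3, 13.0)
```

In the example log, the 26-minute gap is treated as a pause and excluded from program time, and the switch to VP 2 opens the third session.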

Statistical analysis

The analysis was performed using SPSS Version 20.0 (IBM Corp., Armonk, NY). Independent-samples t tests were used to test the a priori hypotheses about group differences in P-CSAT scores and time spent on the training programs.
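The t statistics reported in the Results can be approximately reproduced from the published group summaries. The sketch below is a minimal illustration, assuming Student's pooled-variance t when equal variances are assumed and Welch's t with Welch–Satterthwaite degrees of freedom otherwise (this sketch assumes that is what the "equal variances not assumed" adjustment in Table 3 corresponds to). P values are omitted because the standard library has no t distribution, and the function name `t_from_summary` is hypothetical.

```python
import math


def t_from_summary(m1, s1, n1, m2, s2, n2, equal_var=True):
    """Independent-samples t statistic and df from group means, SDs, and sizes."""
    if equal_var:
        # Student's t: pooled variance, df = n1 + n2 - 2
        sp2 = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2)
        se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
        df = n1 + n2 - 2
    else:
        # Welch's t with Welch-Satterthwaite degrees of freedom
        v1, v2 = s1**2 / n1, s2**2 / n2
        se = math.sqrt(v1 + v2)
        df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return (m1 - m2) / se, df


# Faculty (n = 30) vs residents (n = 90), total P-CSAT, from Table 3.
print(t_from_summary(221.9, 21.61, 30, 203.7, 28.35, 90))                   # time 1
print(t_from_summary(224.6, 19.76, 30, 202.5, 32.80, 90, equal_var=False))  # time 2
```

These give roughly t = 3.22 with 118 df at time 1 and t = 4.42 with about 84 df at time 2, close to the reported t(118) = 3.214 and t(84) = 4.423; small differences are expected because the published means and SDs are rounded.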

Results

Participants

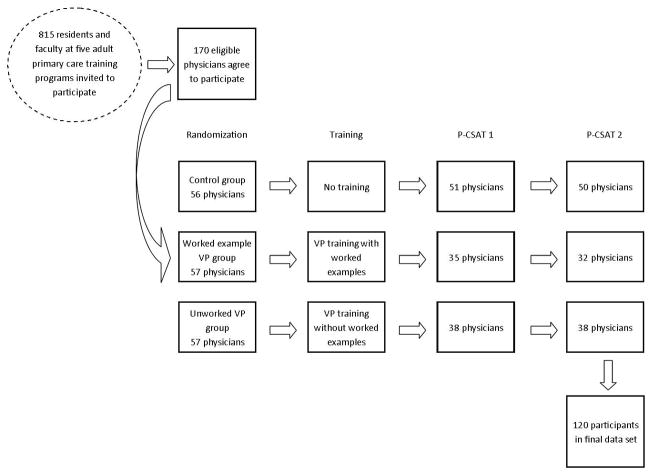

The first enrollee entered the study on October 7, 2011. Of the 815 potential participants recruited, 170 eligible physicians enrolled in the study and were randomized. Of those, 120 participants completed all phases of the study and contributed to the final data, with the last participant completing the study on March 11, 2012. The participation flow is shown in Figure 1.

Figure 1.

Flowchart of study participation. In 2011–12, 170 resident and faculty physicians from a total pool of 815 physicians in five primary care training centers agreed to participate in the evaluation of the online training programs. These physicians were randomly assigned to one of two training programs (worked or unworked virtual patients) or no training (control). All participants were requested to complete the online assessment (P-CSAT) immediately following the training or assignment (control group) and again three months later. A total of 120 physicians completed the second assessment and are the study participants for this report.

Of the 120 study participants, 60% (72) were male and 75% (90) were residents; their mean age was 34.2 years. The demographic characteristics of the participants in each study group are shown in Table 1. There were no significant differences in any measured characteristic between the groups.

Table 1.

Demographic Characteristics of 120 Participants in a Randomized Trial of Two e-Learning Strategies for Teaching Substance Abuse, 2011–12

| Characteristic | Control group (N = 50) | Worked example group (N = 32) | Unworked group (N = 38) |
|---|---|---|---|
| Gender | | | |
| Female, n (%) | 18 (36.0) | 16 (50.0) | 14 (36.8) |
| Male, n (%) | 32 (64.0) | 16 (50.0) | 24 (63.2) |
| Race | | | |
| American Indian/Alaskan, n (%) | 0 | 0 | 0 |
| Asian, n (%) | 9 (18.0) | 7 (21.9) | 4 (10.5) |
| Native Hawaiian/Other Pacific Islander, n (%) | 2 (4.0) | 0 | 0 |
| Black or African American, n (%) | 2 (4.0) | 4 (12.5) | 3 (7.9) |
| White, n (%) | 37 (74.0) | 21 (65.6) | 31 (81.6) |
| Mean age, years (SD) | 35.6 (10.2) | 33.4 (9.0) | 33.2 (6.5) |
| Professional degree | | | |
| DO, n (%) | 4 (8.0) | 1 (3.1) | 4 (10.5) |
| MD, n (%) | 46 (92.0) | 31 (96.9) | 34 (89.5) |
| Primary language | | | |
| English, n (%) | 31 (62.0) | 24 (75.0) | 24 (63.2) |
| Spanish, n (%) | 8 (16.0) | 1 (3.1) | 8 (21.1) |
| Other, n (%) | 11 (22.0) | 7 (21.9) | 6 (15.8) |
| Faculty/resident status | | | |
| Faculty, n (%) | 15 (30.0) | 7 (21.9) | 8 (21.1) |
| Resident, n (%) | 35 (70.0) | 25 (78.1) | 30 (78.9) |
| Training in substance abuse\* | | | |
| Yes, n (%) | 19 (38.0) | 17 (53.1) | 18 (47.4) |
| No, n (%) | 31 (62.0) | 15 (46.9) | 20 (52.6) |

\* “Yes” defined as 8+ hours of formal substance abuse training. None of the group differences is statistically significant.

Educational outcomes

The mean intervals between the two administrations of the P-CSAT were comparable: 89.8 days for the control group, 86.0 days for the worked example group, and 89.2 days for the unworked group. None of the measured outcomes, including time spent and number of sessions, differed significantly between the two intervention groups. The mean time spent was 125.4 minutes in the worked example group and 136.1 minutes in the unworked group, and the mean number of sessions was 7.94 and 7.29, respectively. Thus the unworked group spent, on average, 10.7 minutes longer on the program but used 0.65 fewer sessions than the worked example group.

The key educational outcomes, mean total P-CSAT and subscale scores at times 1 and 2 for the three groups, are shown in Table 2. Overall P-CSAT scores were similar for all groups. There was no evidence that either the worked example group (t(80) = −0.006, P = .995) or the unworked group (t(86) = −1.522, P = .132) scored higher on the P-CSAT than the control group at time 1. The same results held for time 2 (t(80) = 0.435, P = .665 for the worked example vs. the control group; t(86) = −0.443, P = .659 for the unworked group vs. the control group). Calculated effect sizes for the interventions, based on overall P-CSAT score, ranged from −0.32 to 0.10, with no evidence of a trend favoring the interventions. Among the resident physicians, there was no significant relationship between prior substance abuse training (at least 8 hours) and P-CSAT performance (r(90) = −0.02 to 0.04, P > .05).
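The effect sizes can be approximated from the total P-CSAT summaries in Table 2. The sketch below assumes the effect size is Cohen's d with a pooled standard deviation; the paper does not state its exact formula, so this is an illustrative check rather than the authors' computation.

```python
import math


def cohens_d(m_treat, s_treat, n_treat, m_ctrl, s_ctrl, n_ctrl):
    """Standardized mean difference using the pooled SD (Cohen's d)."""
    sp = math.sqrt(((n_treat - 1) * s_treat**2 + (n_ctrl - 1) * s_ctrl**2)
                   / (n_treat + n_ctrl - 2))
    return (m_treat - m_ctrl) / sp


# Total P-CSAT mean, SD, and N from Table 2 (intervention group first, control second).
comparisons = {
    "worked vs control, time 1": (211.1, 28.14, 32, 211.1, 28.37, 50),
    "unworked vs control, time 1": (202.1, 26.70, 38, 211.1, 28.37, 50),
    "worked vs control, time 2": (211.2, 31.87, 32, 208.2, 30.29, 50),
    "unworked vs control, time 2": (205.2, 33.29, 38, 208.2, 30.29, 50),
}
for label, stats in comparisons.items():
    print(f"{label}: d = {cohens_d(*stats):.2f}")
```

The resulting values span roughly −0.33 to 0.10, in line with the reported range; the small difference at the low end is expected because the table means are rounded.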

Table 2.

Comparison of Mean Physician’s Competence in Substance Abuse Test Total Scores, Subscale Scores, and Length of Program Use Between Groups in a Randomized Trial of Two e-Learning Strategies for Teaching Substance Abuse, 2011–12

| Group (N)\* | Time 1 P-CSAT†, mean (SD) | Time 1 KAB subscale‡, mean (SD) | Time 1 SCT subscale§, mean (SD) | Time 2 P-CSAT†, mean (SD) | Time 2 KAB subscale‡, mean (SD) | Time 2 SCT subscale§, mean (SD) | Minutes to complete program, mean (SD) |
|---|---|---|---|---|---|---|---|
| Control (50) | 211.1 (28.37) | 100.2 (13.94) | 110.9 (20.74) | 208.2 (30.29) | 99.3 (12.85) | 108.9 (21.72) | Not applicable |
| Worked (32) | 211.1 (28.14) | 101.8 (14.45) | 109.3 (17.55) | 211.2 (31.87) | 103.2 (13.91) | 108.0 (21.56) | 125.4 (51.08) |
| Unworked (38) | 202.1 (26.70) | 96.9 (13.35) | 105.1 (17.12) | 205.2 (33.29) | 101.2 (14.74) | 104.0 (21.97) | 136.1 (49.59) |

\* No intergroup (column) comparison is significant at P ≤ .05.

† Physician’s Competence in Substance Abuse Test; score range = 0–315.

‡ Knowledge, Attitudes, and self-reported Behaviors subscale; score range = 0–150.

§ Script Concordance Test subscale; score range = 0–165.

Faculty and residents differed significantly in P-CSAT scores at both times. As shown in Table 3, faculty had significantly higher total and subscale scores than residents. This finding is consistent with previous work showing that P-CSAT scores are higher in physicians who are likely to have greater substance abuse management expertise.14

Table 3.

Comparison of Mean P-CSAT Total and Subscale Scores Between Faculty and Residents in a Randomized Trial of Two e-Learning Strategies for Teaching Substance Abuse, 2011–12

| Group | Time 1 P-CSAT\* | Time 1 KAB subscale† | Time 1 SCT subscale‡ | Time 2 P-CSAT\* | Time 2 KAB subscale† | Time 2 SCT subscale‡ |
|---|---|---|---|---|---|---|
| All 30 faculty, mean (SD) | 221.9 (21.61) | 105.4 (10.24) | 116.5 (14.67) | 224.6 (19.76) | 107.1 (11.27) | 117.6 (13.04) |
| All 90 residents§, mean (SD) | 203.7 (28.35) | 97.7 (14.48) | 106.0 (19.39) | 202.5 (32.80) | 98.9 (13.92) | 103.6 (22.89) |
| Mean difference (95% CI) | 18.19 (6.99–29.40) | 7.70 (2.04–13.36) | 10.49 (2.83–18.15) | 22.10 (12.17–32.04) | 8.16 (2.59–13.72) | 13.95 (7.21–20.68) |
| Independent-samples t test | t(118) = 3.214, P = .002 | t(118) = 2.693, P = .008 | t(118) = 2.713, P = .008 | t(84)¶ = 4.423, P < .001 | t(118) = 2.904, P = .004 | t(89)¶ = 4.115, P < .001 |

\* Physician’s Competence in Substance Abuse Test; score range = 0–315.

† Knowledge, Attitudes, and self-reported Behaviors subscale; score range = 0–150.

‡ Script Concordance Test subscale; score range = 0–165.

§ All pairwise column comparisons are significant.

¶ The degrees of freedom were adjusted because equal variances could not be assumed.

Discussion

Our principal, and disturbing, finding was that neither of the two VP-based e-learning programs appeared to improve physicians’ skills in managing substance abuse. One possible explanation is that the programs we developed were not up to the challenge: improving physicians’ substance abuse management skills may require more or better training than a two-hour VP-based e-learning program can provide. The fact that faculty had significantly higher P-CSAT scores than residents (presumably because of greater prior substance abuse management experience) supports this possibility.

Another explanation is that the P-CSAT did not measure relevant educational outcomes and that some other measurement tool would have found positive results. In the absence of an alternative externally validated measure, this argument, though irrefutable, quickly becomes tautological. Currently, we know of no other standardized measure of physicians’ competence to manage substance abuse.

Between those two possible explanations lie several interesting alternative possibilities, and sometimes experimental failures point towards new research directions. For example, we did not use a pretest. All of our previously successful studies of physician e-learning have used a pretest,16–19 as did 97 of 130 internet professional education programs cited by Cook.20 Perhaps pretests have a more powerful role in e-learning than is appreciated,21 and a pre/posttest design would have shown positive results. This important possibility merits further study, but doing so requires a Solomon four-group design, which can assess the pretest effect, the treatment effect, and the interaction of pretest and treatment, but must double the sample size to do so.10
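The Solomon four-group design crosses pretesting (yes or no) with training (yes or no), and one common analysis treats the four posttest groups as a 2 × 2 factorial. The sketch below computes the corresponding contrasts from cell means; the cell means in the example are hypothetical placeholders, not study data, and a real analysis would test these contrasts with a two-way analysis of variance on individual scores.

```python
def solomon_effects(post):
    """Main effects and interaction from Solomon four-group posttest means.

    post maps (pretested, trained) -> mean posttest score.
    """
    treatment = (post[(True, True)] + post[(False, True)]
                 - post[(True, False)] - post[(False, False)]) / 2
    pretest = (post[(True, True)] + post[(True, False)]
               - post[(False, True)] - post[(False, False)]) / 2
    # Positive interaction: training helps more when it follows a pretest.
    interaction = ((post[(True, True)] - post[(True, False)])
                   - (post[(False, True)] - post[(False, False)]))
    return {"treatment": treatment, "pretest": pretest, "interaction": interaction}


# Hypothetical cell means, for illustration only.
example = {(True, True): 220, (True, False): 205, (False, True): 208, (False, False): 206}
print(solomon_effects(example))
```

In this made-up example, training adds 15 points after a pretest but only 2 without one, which is the kind of pretest-by-treatment interaction the design is meant to detect.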

Another important difference between the educational approach we used here versus our previous work is that, in this study, we designed the VPs to simulate a live mentoring experience, in which the learner participates in making decisions, but does not receive feedback until the end of the management process. It is possible that immediate feedback after every decision, which is more feasible with e-learning than live mentoring, and which we have used in our other VP-based programs, is more effective than summary feedback. This, too, merits further study.

Lastly, the data from our study can be used to inform future evaluations of substance abuse education. As noted, P-CSAT scores in this study varied with training level (residents versus faculty) and presumably reflect substance abuse management expertise. It would be helpful to see if any specific P-CSAT areas, such as substance abuse screening or substance abuse treatment, most consistently correlate with overall substance abuse expertise.

Conclusion

This study of VP-based e-learning failed to show a benefit for either a brief worked or unworked e-learning approach to improving physicians’ substance abuse management skills. This finding should caution program directors who seek to incorporate untested e-learning strategies into substance abuse training and, perhaps, into medical education in general. However, the negative findings suggest additional research questions around VP-based e-learning that could be addressed by appropriately designed comparative studies.

Acknowledgments

The following individuals served as expert consultants to the project and were compensated for their contribution: James Berry, DO, West Virginia School of Medicine; Charles Chabal, MD, Evergreen Pain Management Center; Jerry Ciocon, MD, Cleveland Clinic Florida; Robert Hazlett, PhD, Florida BRITE Project; Maria Llorente, MD, University of Miami; Jorge Ruiz, MD, University of Miami Miller School of Medicine; Kenneth Saffier, MD, Contra Costa County Health Services Department; Richard Saitz, MD, MPH, Boston University School of Medicine; J. Paul Seale, MD, Mercer University School of Medicine; Lawrence Schonfeld, PhD, Florida BRITE Project; and Stephen Symes, MD, University of Miami Miller School of Medicine. The academic training programs (and residency coordinators) that participated in the project were: University of Arizona (William Johnson, MD, and Randa Kutob, MD); Cleveland Clinic Florida (Jerry Ciocon, MD); Contra Costa County Regional Medical Center (Kenneth Saffier, MD); University of Miami (Stephen Symes, MD); and Valley Family Medicine (Marlene Cohen, PhD).

Funding/Support: This work was funded by grant R44 DA026218 from the National Institute on Drug Abuse (NIDA) to Medical Directions, Inc.

Footnotes

Ethical approval: This work was reviewed and approved by the institutional review boards of all participating institutions.

Other disclosures: Dr. Harris was an employee of Medical Directions, Inc. during the time this research was conducted. Medical Directions, Inc. is a for-profit small business that markets online education programs to physicians. Dr. Sun has no conflicts to disclose. The funding source played no role in the study design, data collection, data analysis, data interpretation, or manuscript writing.

Contributor Information

Dr. John M. Harris, Jr., Executive director of continuing medical education, University of Arizona College of Medicine, Tucson, Arizona.

Dr. Huaping Sun, Research associate, American Board of Anesthesiology, Raleigh, North Carolina.

References

1. National Institute on Drug Abuse. Principles of Drug Addiction Treatment: A Research-Based Guide. 2nd ed. Bethesda: National Institute on Drug Abuse; 2009. p. 16. http://www.drugabuse.gov/publications/principles-drug-addiction-treatment. Accessed May 15, 2013.

2. Babor TF, McRee BG, Kassebaum PA, Grimaldi PL, Ahmed K, Bray J. Screening, Brief Intervention, and Referral to Treatment (SBIRT): toward a public health approach to the management of substance abuse. Subst Abus. 2007;28(3):7–30. doi:10.1300/J465v28n03_03.

3. O’Connor PG, Nyquist JG, McLellan AT. Integrating addiction medicine into graduate medical education in primary care: the time has come. Ann Intern Med. 2011;154:56–9. doi:10.7326/0003-4819-154-1-201101040-00008.

4. Bray JH, Kowalchuk A, Waters V, Laufman L, Shilling EH. Baylor SBIRT Medical Residency Training Program: model description and initial evaluation. Subst Abus. 2012;33:231–40. doi:10.1080/08897077.2011.640160.

5. Cook DA, Bordage G, Schmidt HG. Description, justification and clarification: a framework for classifying the purposes of research in medical education. Med Educ. 2008;42:128–33. doi:10.1111/j.1365-2923.2007.02974.x.

6. Tan ZS, Mulhausen PL, Smith SR, Ruiz JG. Virtual patients in geriatric education. Gerontol Geriatr Educ. 2010;31:163–73. doi:10.1080/02701961003795813.

7. Cook DA, Erwin PJ, Triola MM. Computerized virtual patients in health professions education: a systematic review and meta-analysis. Acad Med. 2010;85:1589–602. doi:10.1097/ACM.0b013e3181edfe13.

8. Sweller J, Cooper GA. The use of worked examples as a substitute for problem-solving in learning algebra. Cogn Instr. 1985;2:59–89.

9. Clark RC, Mayer RE. E-Learning and the Science of Instruction. 3rd ed. San Francisco: Pfeiffer; 2011. pp. 223–248.

10. Campbell DT, Stanley JC. Experimental and Quasi-Experimental Designs for Research. Chicago: Rand McNally; 1963. pp. 25–26.

11. Willson VL, Putnam RR. A Meta-analysis of Pretest Sensitization Effects in Experimental Design. Am Educ Res J. 1982;19:249–58.

12. Bos FABH, Terlouw C, Pilot A. The effect of a pretest in an interactive, multimodal pretraining system for learning science concepts. Educ Res Eval. 2009;15:571–90.

13. Posel N, Fleiszer D, Shore BM. 12 Tips: Guidelines for authoring virtual patient cases. Med Teach. 2009;31:701–8. doi:10.1080/01421590902793867.

14. Harris JM Jr, Sun H. The Physicians’ Competence in Substance Abuse Test (P-CSAT): a multidimensional educational measurement tool for substance abuse training programs. Drug Alcohol Depend. 2012;122:236–40. doi:10.1016/j.drugalcdep.2011.10.006.

15. Zeleznik C, Hojat M, Goepp CE, Amadio P, Kowlessar OD, Borenstein B. Students’ certainty during course test-taking and performance on clerkships and board exams. J Med Educ. 1988;63:881–91. doi:10.1097/00001888-198812000-00001.

16. Harris JM, Salasche SJ, Harris RB. Can Internet-based continuing medical education improve physicians’ skin cancer knowledge and skills? J Gen Intern Med. 2001;16:50–6. doi:10.1111/j.1525-1497.2001.00615.x.

17. Harris JM Jr, Kutob RM, Surprenant ZJ, Maiuro RD, Delate TA. Can Internet-based education improve physician confidence in dealing with domestic violence? Fam Med. 2002;34:287–92.

18. Harris JM Jr, Elliott TE, Davis BE, Chabal C, Fulginiti JV, Fine PG. Educating generalist physicians about chronic pain: live experts and online education can provide durable benefits. Pain Med. 2008;9:555–63. doi:10.1111/j.1526-4637.2007.00399.x.

19. Kutob RM, Senf JH, Harris JM Jr. Teaching culturally effective diabetes care: results of a randomized controlled trial. Fam Med. 2009;41:167–74.

20. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. JAMA. 2008;300:1181–96. doi:10.1001/jama.300.10.1181.

21. Larsen DP, Butler AC, Roediger HL 3rd. Test-enhanced learning in medical education. Med Educ. 2008;42:959–66. doi:10.1111/j.1365-2923.2008.03124.x.