ABSTRACT

Clinicians and researchers are increasingly using technology-based behavioral health interventions to improve intervention effectiveness and to reach underserved populations. However, these interventions are rarely informed by evidence-based findings on how technology can be optimized to promote acquisition of key skills and information. At the same time, experts in multimedia learning generally do not apply their findings to health education or conduct research in clinical contexts. This paper presents an overview of key aspects of multimedia learning research that may allow those developing health interventions to apply information technology with the same rigor as behavioral science content. We synthesized the empirical multimedia learning literature from 1992 to 2011, identified key findings, and suggested a framework for integrating technology with educational and behavioral science theory. A scientific, evidence-driven approach to developing technology-based interventions can yield greater effectiveness, improved fidelity, stronger outcomes, and better client service.

KEYWORDS: Technology, Behavior, Intervention, Computer, Multimedia, Learning

Interventions that use digital technology to promote positive health behavior change can help improve the effectiveness of behavioral health interventions and reach underserved populations in high-volume clinical environments and other settings without unduly adding to already heavy staff workloads [1, 2]. These technology-based behavioral health interventions can be delivered using devices ranging from high-end tablets to inexpensive mobile phones; may consist of text, audio, video, animations, and/or other forms of multimedia; may be web-based or reside on individual devices as stand-alone applications; and, using information from medical records, physiological data capture devices, or other sources, may be interactively customized, or tailored, to an individual user’s needs.

Technology-based interventions can provide cost-effective ways to reach populations that might otherwise be missed, while increasing fidelity in intervention delivery and creating new opportunities for evaluation [2]. Specifically, because they deliver content consistently, technology-based interventions enable researchers to control precisely which aspects of an intervention each participant receives [3], and consequently create new opportunities to assess the comparative effectiveness of various clinical interventions. As researchers and clinicians have become increasingly aware of these opportunities, there has been significant growth in the development, implementation, and evaluation of technology-based interventions in an array of behavioral healthcare contexts. For example, mobile phone-based interventions have been used to improve attendance at HIV treatment programs and promote adherence to antiretroviral therapy [4–6], promote sunscreen use [7], aid smoking cessation [8–10], and improve outcomes for people in recovery from alcohol dependence [11]. Web-based programs have been created to reduce psychiatric problems such as anxiety and depression [12–14], assist with smoking cessation [15–17], promote weight loss [18], reduce binge eating [19], increase diabetes self-management [20], and prevent HIV risk behavior among youth with substance use disorders [21]. Web-based interventions have also been developed to reduce problematic alcohol and/or drug use [22–24]. In general, technology-based behavioral health interventions have been shown to be well accepted, efficacious, and cost effective, especially when compared to standard care [13, 22].

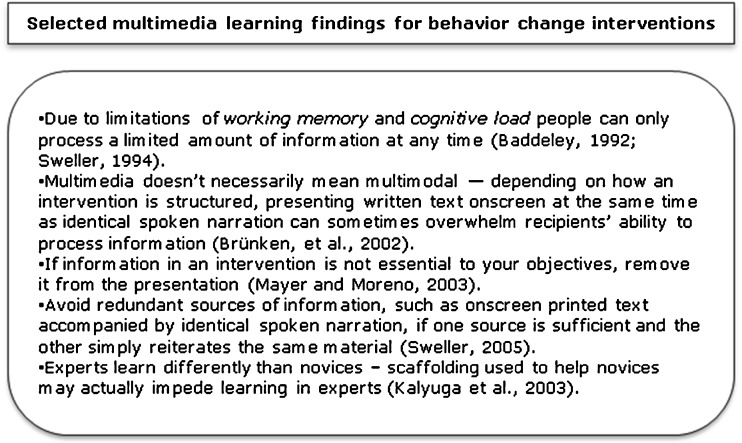

While many of these interventions have been based on empirically supported principles of behavior change, they often fail to draw upon an extensive body of literature from the field of multimedia learning [25–28] to inform the intervention’s development. Researchers who study technology-based health interventions have discussed the importance of grounding interventions in scientific, theoretical frameworks [3, 29, 30], even noting that web-based interventions grounded in theory are more effective [31]. Some argue, however, that current theories appear inadequate to inform applications developed for mobile devices, as behavior change mechanisms may be quite different when interventions are delivered in real time [32]. In either case, these discussions generally reference conceptual frameworks of behavior change while failing to incorporate findings from research in multimedia learning (Fig. 1). As a result, technology-based interventions are frequently not developed in accordance with evidence-based findings on how technology and multimedia learning environments can be optimized for greatest effectiveness.

Fig. 1. Selected multimedia learning findings for behavior change interventions

Researchers in multimedia learning have, in contrast, largely focused on examining how technology can be implemented to best facilitate learning in academic contexts. However, the extent to which this knowledge has been applied in clinical settings, and specifically to behavioral health interventions, has been limited to date. Researchers in multimedia learning frequently confine their work to more traditional academic subject areas, such as foreign language acquisition or science education, and often conduct their studies with college samples [33, 34]. As a result, multimedia learning findings generally are not applied in clinical settings or with underserved or clinical populations who might benefit most from increased access to affordable, high-quality, evidence-based interventions.

The current paper offers an overview of research findings in multimedia learning that may aid creators of technology-based behavioral health interventions. Our goal is to increase knowledge of evidence-based findings of multimedia learning among behavioral scientists, clinicians, developers, and others to promote more scientifically rigorous selection of the technological (e.g., multimedia) aspects of behavioral health interventions. Because many of the findings reported in this paper were derived from experiments with university students in non-clinical environments, they may not be immediately applicable to all other settings, such as interventions aimed at certain clinical or health disparity populations. However, an exploration of existing research can inform conceptions of how behavioral health interventions can employ technology in ways that are increasingly evidence-based and optimally effective for greater numbers of people.

The following sections are organized around key theories and empirical findings relevant to the design and development phases of technology-based interventions. By providing an overview of multimedia learning, along with references for further reading, we aim to offer a broad framework of issues to consider when applying technology and multimedia learning theory to behavioral health interventions. While we recognize there may be relevant literature in other areas, we have chosen to focus on major findings from multimedia learning theory because they apply directly to a wide range of technology-based behavioral health interventions in many different contexts.

FOUNDATIONAL THEORIES AND FINDINGS

Effective uses of educational multimedia often start with a basic understanding of how people learn from multimedia and how developers can shape technology-based interventions to fit human cognition. According to the Cognitive Theory of Multimedia Learning [25], “Designing multimedia messages is always informed by the designer’s conception of how the human mind works,” (p. 42). If the design of a multimedia intervention is built around evidence-based conceptions of how people learn, rather than around the features of a particular technology, the end product may be more effective. In short, well-designed presentation of information can facilitate understanding, whereas poorly executed presentation can actually impair learning. A good place to begin an examination of multimedia, cognition, and learning is how people interpret and process information.

Working memory and cognitive load

People can only process a limited amount of information at any one time [28, 35]. Consequently, decisions about how to structure a technology-based intervention, how to incorporate multimedia, and how to present information can strongly affect an intervention’s success. In other words, if an intervention presents too much to look at or listen to, clients may be distracted from the essential content they need to focus on for the intervention to succeed. Including multimedia in an intervention may require clients to invest substantial additional cognitive effort to process the information presented, and the effectiveness of these multimedia elements depends on a range of design considerations [27]. Developers often add multimedia elements, such as music or animation, to hold a viewer’s attention, but viewers sometimes pay more attention to these additional elements than to important content.

Research in multimedia learning has devoted considerable attention to how much information people can process when presented with multiple visual and auditory stimuli. Two related concepts are central to this question: working memory [35] and cognitive load [28, 36]. Working memory includes three components: a central executive that controls attention, and two subsidiary systems, the visuospatial sketchpad and the phonological loop, that separately process visual images and speech-based information. Understanding the limits of working memory is especially important to developers of technology-based interventions because people have limited capacity to hold and manipulate words and images in working memory [37]. A properly constructed technology-based intervention therefore considers carefully how information can be presented without overwhelming this limited capacity.

A closely related concept is cognitive load [28, 36]: the amount of mental effort required to process the information being presented at a given point in time, whether that information is presented face-to-face, online, or in some other format. Cognitive load theory distinguishes three types. Intrinsic cognitive load reflects the inherent complexity of a subject: understanding complex, interrelated topics entails higher intrinsic load than understanding simpler topics, regardless of presentation. For example, a regression analysis entails greater element interactivity, or inherent complexity, and more requisite prior knowledge than basic arithmetic. Germane cognitive load is the mental effort devoted to understanding the material when instruction is effective. Extraneous cognitive load arises when information is presented ineffectively, in a way that diverts mental effort away from essential content. Sources may include distracting music, flashy transitions, or other superfluous multimedia elements. Extraneous cognitive load can also be induced by seductive details [38]: interesting but non-essential content that draws learners’ attention away from important material. An example might be an image added to an instructional presentation purely as decoration; if people think about the image, they are likely diverting attention from more important content.

REDUCING EXTRANEOUS COGNITIVE LOAD

Mayer and Moreno [33] made extensive recommendations on ways to reduce cognitive load in multimedia learning environments, drawing on experiments conducted with university students. Their recommendations, selectively summarized below, provide a foundational understanding of how multimedia technology can be adapted to facilitate learning.

Learner control

The concept of learner control suggests that allowing clients to control the pace at which content is viewed may improve learning. For example, in one experiment, participants were randomized to see an animation explaining lightning formation either as a continuous presentation or broken into 16 learner-controlled segments (at the end of each segment, participants clicked “continue” to move on to the next). Participants who viewed the segmented version performed better on subsequent knowledge tests. The authors attributed this to learner control: learners who set the pace may have been able to organize and integrate each segment before moving on to additional material [33]. When the intrinsic cognitive load of material is high enough that learners lack the resources to process all the essential content at once, segmenting may enable them to distribute the load more effectively over time [27].
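The segmenting manipulation can be sketched in Python as below. This is a minimal illustration under stated assumptions, not Mayer and Moreno’s materials: content is assumed to be an ordered list of narration or animation units, and `segment_content`, `run_learner_paced`, `show`, and `wait_for_continue` are hypothetical names, with the two callbacks standing in for whatever display and “continue” button a real interface would provide.

```python
from typing import Callable, List, Sequence


def segment_content(units: Sequence[str], n_segments: int) -> List[List[str]]:
    """Split an ordered presentation into n consecutive, near-equal segments.

    Earlier segments receive one extra unit when the units do not divide evenly.
    """
    if n_segments < 1:
        raise ValueError("n_segments must be at least 1")
    # Never create more segments than there are units (but keep at least one).
    n_segments = min(n_segments, len(units)) or 1
    base, extra = divmod(len(units), n_segments)
    segments: List[List[str]] = []
    start = 0
    for i in range(n_segments):
        size = base + (1 if i < extra else 0)
        segments.append(list(units[start:start + size]))
        start += size
    return segments


def run_learner_paced(
    segments: List[List[str]],
    show: Callable[[List[str]], None],
    wait_for_continue: Callable[[], None],
) -> None:
    """Show one segment at a time, pausing for the learner between segments."""
    for i, segment in enumerate(segments):
        show(segment)
        if i < len(segments) - 1:  # no pause needed after the final segment
            wait_for_continue()
```

Splitting 32 content units into 16 segments yields 16 two-unit segments; the presentation then pauses between segments until the learner chooses to continue, which is the learner-controlled condition in miniature.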

Signaling

In an additional experiment, participants were randomized to see different versions of a 4-min narrated animation explaining how airplanes achieve lift. Both versions of the animation “contained many extraneous facts and somewhat confusing graphics” designed to potentially induce extraneous cognitive load and interfere with learners’ ability to focus on the salient content [33] (p. 48). However, one version of the animation contained “signaling” elements to highlight relevant content. These included key words that were stressed in the narration, and red and blue arrows added to the animation. The other version did not contain these signaling elements. Participants who viewed the signaled version of the narrated animation performed better on knowledge tests than participants who viewed the “unsignaled” version.

Dual-coding theory

In addition to streamlining presentations to reduce extraneous cognitive load and segmenting them to enable learner control, intervention developers can structure multimedia elements to extend learners’ cognitive capacity. When words and pictures are paired effectively, people generally learn more from words and pictures together than from words alone [25]. Ineffective pairings of text and multimedia elements, however, can actually impair learning [39, 40].

As described earlier, the success of a multimedia intervention largely depends on presenting important information without overwhelming viewers’ ability to process the material in working memory. Verbal and non-verbal information are processed in different subcomponents of working memory, each with limited capacity [35]. To make fuller use of working memory and thereby facilitate learning, technology-based interventions can communicate essential material using both non-verbal and verbal information, an approach grounded in dual-coding theory. An example would be an animation, which clients would process as non-verbal information, accompanied by voice-over narration, which clients would process as verbal information [33]. Processing information concurrently in both the non-verbal and verbal systems can also enhance recall [27].

However, multimedia does not always mean multimodal. The specifics of how words and pictures are presented can greatly affect learning. Brünken et al. [34] performed two experiments to directly measure cognitive load among university students presented with information in different multimedia formats. One format paired images with written text onscreen; the other displayed images onscreen accompanied by audio narration, with no onscreen text. While participants watched these presentations, a computer measured their cognitive load through their performance on a simultaneous secondary task: at random intervals, a letter “a” on the screen changed color from black to red, and participants were asked to press the spacebar when they noticed the change. The computer automatically recorded the elapsed time between the color change and the spacebar press. This “dual-task methodology” rests on the idea that because available cognitive resources are limited, performance on a secondary task “will be reduced in relation to the amount of cognitive resources required by a primary task,” [34] (p. 110). In both experiments, the simultaneous visual presentation of images and onscreen written text induced significantly higher cognitive load than images with audio narration and no onscreen text, potentially impairing learning.
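The dual-task measure described above reduces to computing response latencies and comparing them across conditions. The sketch below assumes timestamps in milliseconds, pairs each color change with the first spacebar press that follows it (before the next change), and treats unanswered changes as misses; the function names and condition labels are hypothetical, and the procedure is a simplification rather than Brünken et al.’s scoring method.

```python
from statistics import mean
from typing import Dict, List


def response_latencies(change_times: List[float], press_times: List[float]) -> List[float]:
    """Latency (ms) from each color change to the first key press that follows it.

    A press only counts if it occurs before the next color change; changes with
    no qualifying press are treated as misses and contribute no latency.
    """
    changes = sorted(change_times)
    presses = sorted(press_times)
    latencies: List[float] = []
    j = 0
    for i, change in enumerate(changes):
        next_change = changes[i + 1] if i + 1 < len(changes) else float("inf")
        while j < len(presses) and presses[j] < change:
            j += 1  # skip presses made before this change occurred
        if j < len(presses) and presses[j] < next_change:
            latencies.append(presses[j] - change)
            j += 1
    return latencies


def mean_latency_by_condition(latencies: Dict[str, List[float]]) -> Dict[str, float]:
    """Mean secondary-task latency per presentation condition (empty lists skipped)."""
    return {cond: mean(values) for cond, values in latencies.items() if values}


def higher_load_condition(means: Dict[str, float]) -> str:
    """Under dual-task logic, slower secondary responses indicate higher primary-task load."""
    return max(means, key=means.get)
```

Under the dual-task logic, the condition with the longer mean latency is interpreted as imposing the higher cognitive load; a real analysis would, of course, also test whether the difference is statistically reliable.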

This may be because visual stimuli containing language, such as printed text onscreen, are processed both by brain areas that specialize in language processing and by areas of the visual cortex responsible for object recognition [27]. Learners may therefore process onscreen written text both as language and as a visual object, and this dual processing may impose extraneous cognitive load. Accordingly, Sweller [40] recommends against using multiple sources of information (such as onscreen printed text accompanied by identical spoken narration) if “one source is sufficient to allow understanding and learning while the other sources merely reiterate the information of the first source in a different form,” (p. 27).

At first, this recommendation may appear to contradict the earlier statement that people learn more from words and pictures together than from words alone. In fact, it calls for a more nuanced approach. When some essential information can be delivered effectively as verbal information, and other essential information can be delivered effectively as non-verbal information, using both sources can reduce cognitive load and potentially expand the effective capacity of working memory to facilitate learning [27, 37].

It is important to note that the studies referenced in this section were conducted with college students and may not generalize to many client populations. For example, it remains unknown whether people with limited literacy would be similarly distracted by the simultaneous presentation of onscreen text and identical spoken narration. In addition, for clients with limited cognitive capacity, presenting core content multiple times or in differing formats may promote learning more than presenting it once in a single format. Unfortunately, these questions remain largely unexplored empirically, and much work remains to be done.

APPLICABILITY TO TARGET POPULATIONS

Understanding the target population and context for an intervention may prove very important to applying the theories and findings in the current paper. In particular, understanding learners’ general literacy levels, and more specific health literacy levels, may inform what content is covered in an intervention and how that content can best be delivered. Accordingly, if an intervention is aimed at low-health literacy populations with limited reading skills, audio narration or dialogue in a video may prove much more effective than written text onscreen. Further, different learners may also have very different access to health education and may therefore come to the intervention with markedly different levels of relevant prior knowledge about any given topic.

The expertise reversal effect

Determining how much learners already know before they begin a technology-based intervention, and structuring the presentation of information accordingly, can greatly influence effectiveness because experts learn differently than novices [39]. As Kalyuga et al. note, “Instructional techniques that are highly effective with inexperienced learners can lose their effectiveness and even have negative consequences when used with more experienced learners,” [39] (p. 23). Simply put, instructional aids or “scaffolding” techniques that provide additional information to support novice learners can actually impede the learning of more advanced individuals. (When scaffolding is used, learners receive additional support to complete a task, based on their performance and level of ability; as learners gain competence, these supports are gradually removed, or “faded.” For more on scaffolding and fading, see Cennamo and Kalk [41].) This is partly because experts already “possess a large (potentially unlimited) number of domain-specific schemas,” [39] (p. 24), while novices do not. Accordingly, different instructional strategies and intervention designs can be employed depending on the prior knowledge, or expertise, of the learner.

“Physically integrated” presentations [39] (p. 25), in which text is embedded in a diagram close to the related areas of the diagram and presented simultaneously, are more helpful to novice learners because they do not have to “split” their attention between the text and the diagram and then expend additional mental effort to assemble a working mental model. This benefit, however, is largely confined to novices. For example, inexperienced electrical trainees benefited from written explanations integrated into diagrams of electrical circuits that were designed to reduce split attention, and in fact were not able to comprehend a diagram-only format [39]. In contrast, more experienced trainees in the same study performed significantly better with the diagram-only format and also reported less mental effort when studying with it than with the integrated diagrams that had helped the inexperienced trainees [39].

Audio-based explanations paired with visual images may become similarly less helpful, or even become impediments, as learners become more experienced. Kalyuga et al. [39] described a series of experiments in which inexperienced learners benefited most from a visually presented diagram combined with concurrently presented audio explanations. However, “After additional training, the relative advantage of the audio text disappeared whereas the effectiveness of the diagram-only condition increased,” (p. 26). As the same group of learners gained additional expertise through intensive training, the advantage of the diagram-only condition increased to the point of “reversing the results of the first experiment,” (p. 26).

Issues of expertise and prior knowledge may take on particular importance in ongoing technology-based interventions that offer continued therapeutic support for clients over time. For example, if clients in a technology-based drug prevention program receive periodic booster sessions, they may begin the program as novices but progress in their expertise as the intervention continues. Likewise, if an intervention employs text messages that a client receives over an extended period of time, or a series of online modules that clients complete in succession, a strategy that initially proves useful may need to be revisited later on. This topic remains under-studied in the context of behavioral health interventions and deserves further examination.
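For interventions that run across several sessions, one way to revisit a strategy as learners gain expertise is to re-select the presentation format before each session based on the most recent assessment. The sketch below is hypothetical: the accuracy cutoffs and the intermediate “key labels” format are assumptions chosen for illustration and are not drawn from Kalyuga et al. [39].

```python
from typing import List

# Hypothetical formats, ordered from most to least instructional support.
SUPPORT_LEVELS = [
    "diagram_with_integrated_text",  # novices: avoids split attention
    "diagram_with_key_labels",       # hypothetical intermediate step while fading
    "diagram_only",                  # experienced learners: avoids redundant text
]


def select_support_level(quiz_accuracy: float, low: float = 0.6, high: float = 0.85) -> str:
    """Pick a presentation format from the latest quiz accuracy (0.0 to 1.0).

    The low/high cutoffs are illustrative assumptions, not validated values.
    """
    if not 0.0 <= quiz_accuracy <= 1.0:
        raise ValueError("quiz_accuracy must be between 0 and 1")
    if quiz_accuracy < low:
        return SUPPORT_LEVELS[0]
    if quiz_accuracy < high:
        return SUPPORT_LEVELS[1]
    return SUPPORT_LEVELS[2]


def plan_sessions(accuracy_by_session: List[float]) -> List[str]:
    """Re-select the format before each session, so support can fade or return."""
    return [select_support_level(acc) for acc in accuracy_by_session]
```

Because the format is recomputed every session, support fades as scores rise but can also return if a client’s performance drops, for example after a long gap between booster sessions.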

TAILORING AND TARGETING

Questions of tailoring and targeting also remain largely underexplored, so much is still unknown about how multimedia content can best be matched to intervention recipients. In tailored programs, individual learners’ characteristics are assessed at the beginning of an intervention, and algorithms then generate learner-specific content based on the assessment data [17, 42]. In targeted programs, content is optimized for a specific subgroup, or target population, usually based on demographic characteristics [42]. Although computer-based interventions facilitate dynamically tailored education, tailoring does not always improve effectiveness or learner satisfaction; for example, if the assessment phase of an intervention takes too much time, learners might prefer a more simply structured, non-tailored presentation [42].
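The distinction between targeting and tailoring can be made concrete with a small rule-based sketch. All field names, category values, and module names here are hypothetical; the rules simply echo examples discussed in this paper (readiness to change as a tailoring variable, narration for learners with limited reading skills) and are not a validated tailoring algorithm.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Assessment:
    """Hypothetical intake assessment for one learner."""
    age_group: str             # e.g. "youth" or "adult"
    readiness_to_change: str   # e.g. "precontemplation" or "action"
    reads_comfortably: bool    # result of a brief literacy screen


# Targeting: one package per subgroup, chosen from a group characteristic only.
TARGETED_PACKAGES: Dict[str, str] = {"youth": "youth_module", "adult": "adult_module"}


def targeted_content(a: Assessment) -> List[str]:
    """Everyone in the same subgroup receives the same content."""
    return [TARGETED_PACKAGES[a.age_group]]


def tailored_content(a: Assessment) -> List[str]:
    """Start from the subgroup package, then adjust using individual assessment data."""
    modules = targeted_content(a)
    # Tailor the content on readiness to change.
    if a.readiness_to_change == "precontemplation":
        modules.append("motivational_module")
    else:
        modules.append("skills_module")
    # Tailor the delivery format on reading skill.
    modules.append("text_version" if a.reads_comfortably else "narrated_version")
    return modules
```

Every additional tailoring rule requires another assessment item, which illustrates the trade-off noted above: richer tailoring lengthens the assessment phase that learners must complete first.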

Many questions remain as to how technology-based interventions can be most effectively tailored to an individual or targeted to a specific population. A good example of how complicated this can become is the design of technology-based interventions that incorporate video. Interestingly, even though the producers of any video segment need to make decisions about the race, gender, age, and other sociodemographic characteristics of every person who appears in front of the camera, definitive evidence does not yet exist to indicate whether people onscreen should match, or should intentionally not match, the demographics of the viewer [1].

Bandura [43, 44] wrote extensively about the power of vicarious learning. To increase the impact of educational modeling, he recommended that “characteristics of models such as their age, sex, and status, the type of problems with which they cope, and the situation in which they apply their skills,” be made similar to those of the viewers [44] (p. 37). However, empirical trials do not always support the idea that audiences will be more receptive when models are racially congruent with the viewer. For instance, Gerbert et al. [45] randomized participants into groups that watched multiple video segments to examine preferences for onscreen physicians by gender and affect. They found that preference for same-race doctors was mediated by the perceived warmth of the doctor. Aronson and Bania [1] randomized emergency department patients into four groups, each shown a different computer-based video about HIV prevention and testing (the study is described in more detail in the next section). Perhaps the most important result of this research was that different population groups responded differently to the same set of video segments viewed in the same context.

In addition, dynamic assessment and/or tailoring may address readiness to change or characteristics of the disease or problem behavior the intervention is targeting (e.g., specific diagnosis, severity, comorbid conditions, chronicity, type of problem behavior, or frequency of problem behavior). While many argue that tailoring on these learner characteristics may enhance outcomes, findings in these areas are mixed (e.g., McKay et al. [46], Project MATCH Research Group [47, 48], Strecher et al. [17], Swartz et al. [49]).

Emerging technologies can enable interventions to measure clients’ physical responses and quickly adapt content accordingly. This work with sensors and other real-time data collection tools, along with additional technology-based indicators of clients’ physiological and/or subjective states, may allow for tailoring on a whole new array of dimensions. More specifically, these tools may enable interventions that are dynamically tailored “in the moment” as needed to be responsive to the client’s state. As Riley et al. [32] explained, to take full advantage of the opportunities presented by new technologies, health behavior models must guide not only tailoring at the start of an intervention but also the dynamic process of frequent adjustment during its course.

Due to the many possibilities and unresolved questions related to tailoring, consensus has yet to be reached in a number of areas. Although the issue is still widely discussed, there remains comparatively little agreement as to whether materials should be tailored to individual learners, and if so, how. It also remains to be seen whether, or at what point, tailoring may limit an intervention’s reach, generalizability, or cost effectiveness.

EXAMPLES OF INTERVENTIONS DEVELOPED TO INCORPORATE BEHAVIORAL SCIENCE AND MULTIMEDIA LEARNING THEORY

To illustrate how some of the theories and findings discussed so far can be applied to technology-based interventions, we provide two examples. Other interventions also combine behavioral science and multimedia learning theory, but space does not permit describing them here.

Bickel, Marsch, and colleagues [22] developed an interactive, web-based psychosocial intervention for individuals with substance use disorders, called the Therapeutic Education System (TES), which is based on the empirically supported Community Reinforcement Approach (CRA) to behavior therapy. TES was developed to combine evidence-based content from CRA with evidence-based informational technologies that promote optimal learning and skills acquisition. In a randomized, controlled trial with opioid-dependent outpatients, TES was as efficacious as clinician-delivered CRA and more efficacious than standard counseling: patients in the TES and clinician-delivered CRA conditions achieved a similar number of weeks of abstinence, and both groups achieved greater abstinence than patients in standard counseling. However, TES required substantially less clinician time than clinician-delivered CRA, making it more cost effective [22].

The TES web-based intervention was designed to address a number of concepts reviewed in the current paper. For example, intervention content was broken into chunks, or modules, to reduce cognitive load, and clients controlled how quickly they moved from one module to the next (the benefits of this approach are discussed in the earlier section on “Learner control”). As described below, TES also integrated assessment measures at the end of each module to help clients organize intervention content in long-term memory and to measure their ability to do so. Specifically, TES employed a Fluency-based Computer-Assisted Instruction (CAI) approach (HealthSim, LLC). Behavioral fluency ensures mastery of key content by promoting both accuracy in the learner’s understanding of program content and speed of learner response [50], and is associated with retention and maintenance of skills and knowledge, as well as resistance to distraction [51]. The intervention assessed participants using randomly presented multiple-choice and fill-in-the-blank questions and recorded how long participants took to respond to each question. The pace at which the program presented these questions varied with each participant’s demonstrated speed and accuracy of responding. This procedure was designed to respond to each user’s understanding of the material and to help them achieve fluency in key information and skills. The intervention also gave participants immediate feedback on their responses. Participant usage of the program was tracked in a back-end database and made available for secure electronic viewing by participants’ clinicians.
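The adaptive pacing described above might be sketched as follows. The source does not specify HealthSim’s algorithm, so this is a hypothetical stand-in: the response window, its floor and ceiling, the shrink factor, and the run-length fluency criterion are all assumptions. A fast, correct answer shrinks the time allowed for the next question; a slow or incorrect answer widens it.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FluencyTracker:
    """Hypothetical adaptive pacing: tighten the time window as responses become fast and accurate."""
    window_s: float = 10.0      # assumed starting time allowed per question (seconds)
    min_window_s: float = 3.0   # assumed fastest pace the program will demand
    max_window_s: float = 20.0  # assumed slowest pace the program will allow
    shrink: float = 0.8         # assumed multiplier applied after a fluent response
    history: List[bool] = field(default_factory=list)

    def record(self, correct: bool, latency_s: float) -> float:
        """Log one response and return the time window for the next question."""
        fluent = correct and latency_s <= self.window_s
        self.history.append(fluent)
        if fluent:
            self.window_s = max(self.min_window_s, self.window_s * self.shrink)
        else:
            self.window_s = min(self.max_window_s, self.window_s / self.shrink)
        return self.window_s

    def reached_fluency(self, run_length: int = 5) -> bool:
        """Assumed criterion: the last `run_length` responses were all fast and correct."""
        recent = self.history[-run_length:]
        return len(recent) == run_length and all(recent)
```

Requiring both accuracy and speed before declaring fluency mirrors the definition above; a correct but slow answer counts against fluency in this sketch, just as an incorrect one does.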

As another example, the Aronson and Bania [1] intervention to educate emergency department patients about HIV testing, mentioned earlier in this paper, thoroughly integrates evidence-based educational theories (cognitive load theory and the Cognitive Theory of Multimedia Learning) and an understanding of cognitive processes, including the working memory functions described in the previous sections. The intervention is also based on the Information-Motivation-Behavioral Skills (IMB) model of HIV prevention, which states that information, while essential to risk reduction efforts, is not enough to produce behavior change on its own; motivation and behavioral skills specific to target populations must also be addressed [52, 53]. The emergency department intervention was designed to measure both cognitive and behavioral outcomes, and the clinical trial documented statistically significant increases in knowledge of HIV prevention and testing, intent to use a condom during vaginal sex, and acceptance of an HIV test offered at the end of the intervention.

The intervention’s screen layout, information architecture, and videos were designed in accordance with the theories of multimedia learning described above, with the ultimate goal of focusing clients’ cognitive resources on the intervention content rather than on the delivery technology. Although the intervention presented several sets of pre- and post-intervention instruments measuring knowledge, intent to use a condom, and acceptance of HIV testing, only a few questions were displayed on the screen at a time, each in large black type on a white background. Rather than using a decorative background image or a complicated color scheme, Aronson and Bania selected a uniform, solid white background to avoid extraneous cognitive load. Additionally, rather than placing large groups of questions on each screen, the intervention generally presented three or four questions at a time, along with large, clearly labeled controls enabling clients to move to the next screen of questions or return to previous screens. This design was intended to help clients process information and move through the intervention at their own pace (for more on the benefits of learner control and streamlined presentations, please see the earlier section on REDUCING EXTRANEOUS COGNITIVE LOAD).
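The screen-by-screen presentation of questions described above can be sketched in a few lines. This is a hypothetical Python illustration; the function `paginate`, the class `ScreenNavigator`, and the four-question maximum are assumptions rather than details of the Aronson and Bania software. It splits a questionnaire into screens of at most four questions, balancing screen sizes so that, for example, a ten-item instrument becomes screens of four, three, and three questions, and it provides next and back controls that never move past the first or last screen.

```python
import math


def paginate(questions, max_per_screen=4):
    """Split questions into screens of at most max_per_screen, sized as evenly as possible."""
    if not questions:
        return []
    n_screens = math.ceil(len(questions) / max_per_screen)
    base, extra = divmod(len(questions), n_screens)
    screens, start = [], 0
    for i in range(n_screens):
        # The first `extra` screens take one additional question.
        size = base + (1 if i < extra else 0)
        screens.append(questions[start:start + size])
        start += size
    return screens


class ScreenNavigator:
    """Hypothetical next/back controls over a non-empty list of screens."""

    def __init__(self, screens):
        self.screens = screens
        self.index = 0

    def current(self):
        return self.screens[self.index]

    def next(self):
        # Stay on the last screen rather than running past it.
        if self.index < len(self.screens) - 1:
            self.index += 1
        return self.current()

    def back(self):
        # Stay on the first screen rather than running before it.
        if self.index > 0:
            self.index -= 1
        return self.current()
```

Balancing screen sizes, rather than filling each screen to four and leaving a short remainder, is one way to keep the number of questions visible at any moment consistently small.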

Because there were only a few questions on the screen at any one time, each could be presented in a larger type size. The resulting large black type on a plain white background proved especially easy to read, even for people with limited vision. During initial user testing, this emerged as an unexpected benefit of the design because people who rush to a hospital for emergency medical treatment frequently forget to bring their eyeglasses. Therefore, a design that was intended to work within the limits of clients’ cognitive ability also helped make the intervention accessible to people with impaired sight. (For more on user testing and related methodologies, please see the following section.)

The videos themselves were also designed to focus clients’ attention and avoid extraneous detail. As described earlier, the videos depicted healthcare professionals speaking with patients about the importance of HIV testing. Instead of using colored arrows to indicate which part of the screen clients should watch for important information (please see the description of signaling in Mayer and Moreno [33] above), the videos framed the person speaking as the focus of each shot and used the composition of the image to direct clients’ attention to key content. All video dialog was carefully edited to include only the most essential material and to avoid redundant or unnecessary information. The developers did not add music or narration, and the videos displayed no onscreen text or graphics that could have distracted clients from the dialog. For the same reason, the video segments omitted production credits, because reading the names of the people involved in the videos’ creation could distract clients from the more important content they had just watched. Further, the editing was designed not to break clients’ concentration on the content: rather than drawing on a full library of available effects and transitions, the developer used a very subtle editing style intentionally designed not to call attention to itself.

NEEDS ANALYSIS AND USER TESTING

In light of the issues described in the current paper, and the effort often required to address them successfully in an intervention, two processes may prove especially helpful in designing and evaluating projects that employ multimedia. The first is a needs analysis conducted at the start of a project; the second is user testing, which can be ongoing and conducted at various stages. Both can help ensure that multimedia technology is used effectively and that a given approach suits the client population. Well-executed user testing can also be used to measure the cognitive load imposed by an intervention.

Needs analysis

In the field of multimedia learning, designers complete what is known as a needs analysis and create a design document that describes, among other things, the characteristics of the intended audience. (From a computer science perspective, the design document may be called a “functional specification.”) The characteristics noted in a needs analysis and design document would detail literacy levels, computer skills, access to technology, demographics including socioeconomic status, and physical or other disabilities relevant to the project. Additional factors would include specifics about the technology required to deliver the intervention. For instance, does an intervention require a high-speed Internet connection or can it be viewed over a dial-up connection by people who cannot afford or access broadband? Does it contain large images intended for a widescreen computer monitor or can it be viewed on a small mobile device? For more detail on needs analysis, please see Rossett [54] or Smith and Ragan [55].
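One way to make a needs analysis actionable is to record audience characteristics and an intervention’s delivery requirements in a structured form and check them against each other. The Python below is a minimal sketch under that assumption; the class names, field names, and the `check_fit` function are hypothetical and do not represent a standard design-document or functional-specification format. It flags the kinds of mismatches raised above, such as text written above the audience’s reading level, or an intervention that needs broadband or a widescreen layout when the target audience relies on slower connections or small mobile devices.

```python
from dataclasses import dataclass


@dataclass
class AudienceProfile:
    """Hypothetical summary of needs-analysis findings about the audience."""
    reading_grade_level: int        # typical literacy level of the target audience
    has_broadband: bool             # can most clients stream video reliably?
    smallest_screen_width_px: int   # narrowest device clients are likely to use


@dataclass
class DeliveryRequirements:
    """Hypothetical summary of what the planned intervention demands."""
    reading_grade_level: int        # reading level of the intervention's text
    needs_broadband: bool           # e.g., streams high-resolution video
    min_screen_width_px: int        # narrowest screen the layout supports


def check_fit(audience, design):
    """Return a list of mismatches between the audience and the design."""
    problems = []
    if design.reading_grade_level > audience.reading_grade_level:
        problems.append("text exceeds the audience's reading level")
    if design.needs_broadband and not audience.has_broadband:
        problems.append("requires broadband the audience may lack")
    if design.min_screen_width_px > audience.smallest_screen_width_px:
        problems.append("layout is too wide for clients' devices")
    return problems
```

An empty list suggests, under these simplified assumptions, that the planned delivery technology fits the audience; any entries point to design decisions to revisit before development begins.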

Ideally, the results of a needs analysis would inform not only the content of an intervention but also the selection of technology used to deliver it. For example, an intervention aimed at adolescents might employ text messages sent to mobile phones, because text messaging is relatively inexpensive and widely used [56] and adolescents are among the heaviest users of mobile phones [57]. A needs analysis would also consider the goals of the intervention, how related outcomes could be measured to determine success, and what type of implementation technology would best facilitate these goals and outcomes.

User testing

Throughout the process, developers can work with members of the target user population to seek their input and later, once a prototype has been created, to test the intervention. Developers can, and should, solicit feedback from both end users and stakeholders. In the case of the intervention to increase HIV testing among emergency department patients described above, patients would be the end users, while hospital staff, including doctors and nurses, would be stakeholders. Developers can solicit input at all stages of the process, including the creation of the design document or functional specification, interface mockups, and so on. Some software developers argue that it is best to give potential users a very rough sketch, possibly in pencil, of interfaces and features so that users feel free to recommend changes.

Once an early stage prototype exists, developers can work with end users to test and then refine the intervention based on user response. This type of testing can be as simple as watching users interact with the intervention and observing their reactions, or interviewing them afterward to examine issues such as acceptability or feasibility. Other methods of user testing can involve structured, or semi-structured, discussions with users while they interact with an intervention.

Chan and Kaufman [58] describe a methodology in which a group of participants were asked to perform the same computer-based tasks while thinking aloud, verbalizing their thoughts as they worked. The researchers made audio recordings of each participant and used software to capture all actions on the computer screen. Later, researchers performed a step-by-step analysis of each task using the audio recordings, screen captures, and observation notes, examining how users completed each step and noting any problems they encountered. Implementing similar examinations during the development of health interventions can enable researchers to assess how much mental effort clients expend on using a given technology and how much of their attention they can effectively apply to understanding the intervention content.
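The step-by-step analysis described by Chan and Kaufman [58] depends on researchers coding each session and then identifying the steps where users struggle. As a hypothetical sketch of the bookkeeping side only (the coding itself is done by researchers reviewing recordings and notes), the Python below assumes each coded observation has been reduced to a (participant, step, problem) tuple, with the problem set to None when the step went smoothly, and counts how many distinct participants encountered a problem at each step. The tuple format and the function name are assumptions, not part of Chan and Kaufman’s published method.

```python
from collections import defaultdict


def problems_by_step(observations):
    """Count distinct participants who encountered a problem at each task step.

    observations: iterable of (participant_id, step, problem) tuples, where
    problem is a researcher's coded note, or None if the step went smoothly.
    Returns {step: number_of_affected_participants}, most problematic first.
    """
    affected = defaultdict(set)
    for participant, step, problem in observations:
        if problem is not None:
            # A set ensures one participant with several problems counts once.
            affected[step].add(participant)
    counts = {step: len(people) for step, people in affected.items()}
    # Sort so that the steps causing the most difficulty are listed first.
    return dict(sorted(counts.items(), key=lambda item: item[1], reverse=True))
```

Counting affected participants rather than raw problem notes keeps one especially talkative participant from dominating the picture of which steps need redesign.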

Kalyuga and Plass [59] describe using a think-aloud protocol to measure participants’ cognitive load. Participants using an interactive simulation designed to teach students the scientific principles governing gases (i.e., the effects of temperature and pressure) were asked to talk about their perceptions of the simulation while using it. The researchers then examined participants’ remarks and, where applicable, related them to different sources of cognitive load. For example, they determined that the participant comment “Watching them all at the same time could be difficult” [59] (p. 731) indicated that the design had induced extraneous cognitive load. Kalyuga and Plass [59] also note that a very rough measure of cognitive load can be obtained by asking people about their experience using a technology; for example, researchers can ask video game players how difficult a game was to play or understand. Cognitive load can also be measured objectively using the dual-task methodology employed by Brünken et al. [34], which is described earlier in the section on “Dual-coding theory”.
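The logic of the dual-task approach can be expressed as a simple calculation. In a dual-task study, participants respond to a simple secondary task while working through the primary learning material; slower secondary-task responses suggest that the primary material is consuming more working-memory capacity. The Python below is a hypothetical sketch, not the analysis used by Brünken et al. [34]: it expresses load as the relative slowing of mean secondary-task reaction time compared with a baseline measured without the primary task. The function name and all reaction times in the example are invented for illustration.

```python
from statistics import mean


def dual_task_load(baseline_rts, dual_rts):
    """Relative slowing of secondary-task reaction times under the primary task.

    0.0 means no slowing; 0.25 means responses were 25% slower on average.
    Higher values suggest the primary material imposes more cognitive load.
    """
    baseline = mean(baseline_rts)
    return (mean(dual_rts) - baseline) / baseline


# Invented reaction times (ms) for two versions of the same intervention.
baseline = [400, 410, 390]
streamlined = dual_task_load(baseline, [450, 470, 460])
cluttered = dual_task_load(baseline, [560, 590, 575])
print(f"streamlined: {streamlined:.2f}, cluttered: {cluttered:.2f}")
```

In this invented example, the cluttered version slows secondary-task responses more than the streamlined one, which, under the dual-task rationale, would be read as evidence of greater extraneous load; a real study would also need appropriate statistical comparisons across participants.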

User testing can be done as part of a summative evaluation, at the end of a project, or within a formative evaluation process conducted while the project is still ongoing and elements of the intervention are revised according to the results of the user testing. For example, if during a formative evaluation elements of an intervention were found to induce extraneous cognitive load, the intervention materials might be re-worked to reduce distractions. For more information on summative and formative evaluations, please see Gagné et al. [60]. If budgets and timelines permit, conducting repeated formative evaluations, analyzing the results, and then refining the intervention accordingly may yield an especially strong project.

Conclusion

Each intervention is different, but if the development process incorporates contributions from a team of people with technical expertise, knowledge of behavioral science, and an understanding of instructional design (as well as detailed responses from actual clients), the end product can employ multimedia elements just as effectively as it employs evidence-based behavioral science content. As described in detail above, people can process only a limited amount of information at any one time. Designing multimedia presentations that focus clients’ attention on important content, rather than on extraneous details or the delivery technology itself, can lead to more effective outcomes.

Because much of the evidence in multimedia learning comes from research with college students in academic settings, it remains unknown how some of these findings will apply to underserved populations in clinical environments, and additional research is needed. Further, it remains unknown how technology-based interventions can be made most effective for specific populations, e.g., whether tailoring is generally more effective and, if so, exactly how material should be tailored. Lastly, further research is needed to understand how the ubiquitous, real-time nature of new technologies can create opportunities to adjust content dynamically and frequently during the course of an intervention. In short, a major goal of the current publication is to help clinicians, researchers, developers, and others not only use technology to reach more people but also implement increasingly effective, evidence-based behavioral health interventions.

Footnotes

This work was partially supported by P30 Center grant # P30DA029926 from the National Institute on Drug Abuse.

Implications

Practice: Technology-based interventions can be considerably strengthened by applying evidence-based findings of how people learn from multimedia, especially because these findings have generally not been applied in clinical contexts.

Policy: Resources can be directed not only at using technology to reach more people, including those most in need, but also at ensuring that technology-based interventions are optimized for greatest effectiveness.

Research: Questions of how existing multimedia learning findings apply to low-literacy populations in clinical settings remain underexplored and deserve further examination.

References

- 1. Aronson ID, Bania TC. Race and emotion in computer-based prevention videos for emergency department patients. AIDS Educ Prev. 2011;23:91–104. doi: 10.1521/aeap.2011.23.2.91.

- 2. Marsch LA. Technology-based interventions targeting substance use disorders and related issues: an editorial. Subst Use Misuse. 2011;46:1–3. doi: 10.3109/10826084.2011.521037.

- 3. Ritterband LM, Thorndike FP, Cox DJ, Kovatchev BP, Gonder-Frederick LA. A behavior change model for Internet interventions. Ann Behav Med. 2009;38:18–27. doi: 10.1007/s12160-009-9133-4.

- 4. Crankshaw T, Corless IB, Giddy J, Nicholas PK, Eichbaum Q, Butler LM. Exploring the patterns of use and the feasibility of using cellular phones for clinic appointment reminders and adherence messages in an antiretroviral treatment clinic, Durban, South Africa. AIDS Patient Care STDS. 2010;24:729–734. doi: 10.1089/apc.2010.0146.

- 5. Kunutsor S, Walley J, Katabira E, et al. Using mobile phones to improve clinic attendance amongst an antiretroviral treatment cohort in rural Uganda: a cross-sectional and prospective study. AIDS Behav. 2010;14:1347–1352. doi: 10.1007/s10461-010-9780-2.

- 6. Shet A, de Costa A. India calling: harnessing the promise of mobile phones for HIV healthcare. Trop Med Int Health. 2011;16:214–216. doi: 10.1111/j.1365-3156.2010.02678.x.

- 7. Armstrong AW, Watson AJ, Makredes M, Frangos JE, Kimball AB, Kvedar JC. Text-message reminders to improve sunscreen use: a randomized, controlled trial using electronic monitoring. Arch Dermatol. 2009;145:1230–1236. doi: 10.1001/archdermatol.2009.269.

- 8. Brendryen H, Kraft P. Happy ending: a randomized controlled trial of a digital multi-media smoking cessation intervention. Addiction. 2008;103:478–484. doi: 10.1111/j.1360-0443.2007.02119.x.

- 9. Free C, Whittaker R, Knight R, Abramsky T, Rodgers A, Roberts IG. Txt2stop: a pilot randomised controlled trial of mobile phone-based smoking cessation support. Tob Control. 2009;18:88–91. doi: 10.1136/tc.2008.026146.

- 10. Free C, Knight R, Robertson S, et al. Smoking cessation support delivered via mobile phone text messaging (txt2stop): a single-blind, randomised trial. Lancet. 2011;378:49–55. doi: 10.1016/S0140-6736(11)60701-0.

- 11. Gustafson DH, Shaw BR, Isham A, Baker T, Boyle MG, Levy M. Explicating an evidence-based, theoretically informed, mobile technology-based system to improve outcomes for people in recovery for alcohol dependence. Subst Use Misuse. 2011;46:96–111. doi: 10.3109/10826084.2011.521413.

- 12. Clarke G, Eubanks D, Reid E, et al. Overcoming Depression on the Internet (ODIN) (2): a randomized trial of a self-help depression skills program with reminders. J Med Internet Res. 2005;7:e16. doi: 10.2196/jmir.7.2.e16.

- 13. Titov N. Status of computerized cognitive behavioural therapy for adults. Aust N Z J Psychiatry. 2007;41:95–114. doi: 10.1080/00048670601109873.

- 14. van Straten A, Cuijpers P, Smits N. Effectiveness of a web-based self-help intervention for symptoms of depression, anxiety, and stress: randomized controlled trial. J Med Internet Res. 2008;10:e7. doi: 10.2196/jmir.954.

- 15. Brendryen H, Drozd F, Kraft P. A digital smoking cessation program delivered through internet and cell phone without nicotine replacement (happy ending): randomized controlled trial. J Med Internet Res. 2008;10:e51. doi: 10.2196/jmir.1005.

- 16. Dallery J, Raiff BR. Contingency management in the 21st century: technological innovations to promote smoking cessation. Subst Use Misuse. 2011;46:10–22. doi: 10.3109/10826084.2011.521067.

- 17. Strecher VJ, Shiffman S, West R. Randomized controlled trial of a web-based computer-tailored smoking cessation program as a supplement to nicotine patch therapy. Addiction. 2005;100:682–688. doi: 10.1111/j.1360-0443.2005.01093.x.

- 18. Tate DF, Wing RR, Winett RA. Using Internet technology to deliver a behavioral weight loss program. JAMA. 2001;285:1172–1177. doi: 10.1001/jama.285.9.1172.

- 19. Jones M, Luce KH, Osborne MI, et al. Randomized, controlled trial of an internet-facilitated intervention for reducing binge eating and overweight in adolescents. Pediatrics. 2008;121:453–462. doi: 10.1542/peds.2007-1173.

- 20. Gerber BS, Solomon MC, Shaffer TL, Quinn MT, Lipton RB. Evaluation of an Internet diabetes self-management training program for adolescents and young adults. Diabetes Technol Ther. 2007;9:60–67. doi: 10.1089/dia.2006.0058.

- 21. Marsch LA, Grabinski MJ, Bickel WK, et al. Computer-assisted HIV prevention for youth with substance use disorders. Subst Use Misuse. 2011;46:46–56. doi: 10.3109/10826084.2011.521088.

- 22. Bickel WK, Marsch LA, Buchhalter A, Badger G. Computerized behavior therapy for opioid-dependent outpatients: a randomized, controlled trial. Exp Clin Psychopharmacol. 2008;16:132–143. doi: 10.1037/1064-1297.16.2.132.

- 23. Ondersma SJ, Chase SK, Svikis DS, Schuster CR. Computer-based brief motivational intervention for perinatal drug use. J Subst Abuse Treat. 2005;28:305–312. doi: 10.1016/j.jsat.2005.02.004.

- 24. Ondersma SJ, Svikis DS, Schuster CR. Computer-based brief intervention: a randomized trial with postpartum women. Am J Prev Med. 2007;32:231–238. doi: 10.1016/j.amepre.2006.11.003.

- 25. Mayer RE. Multimedia Learning. New York: Cambridge University Press; 2001.

- 26. Mayer RE, editor. The Cambridge Handbook of Multimedia Learning. New York: Cambridge University Press; 2005.

- 27. Plass JL, Homer BD, Hayward E. Design factors for educationally effective animations and simulations. J Comput High Educ. 2009;21:31–61. doi: 10.1007/s12528-009-9011-x.

- 28. Sweller J. Cognitive load theory, learning difficulty, and instructional design. Learn Instr. 1994;4:295–312. doi: 10.1016/0959-4752(94)90003-5.

- 29. Consolvo S, McDonald DW, Landay JA. Theory-driven design strategies for technologies that support behavior change in everyday life. Paper presented at the meeting of the Association for Computing Machinery Special Interest Group on Computer Human Interaction, Boston, MA; 2009.

- 30. Durand M, Stiel M, Boivin J, Elwyn G. Where is the theory? Evaluating the theoretical frameworks described in decision support technologies. Patient Educ Couns. 2008;71:125–135. doi: 10.1016/j.pec.2007.12.004.

- 31. Webb TL, Joseph J, Yardley L, Michie S. Using the Internet to promote health behavior change: a systematic review and meta-analysis of the impact of theoretical basis, use of behavior change techniques, and mode of delivery on efficacy. J Med Internet Res. 2010;12:e4. doi: 10.2196/jmir.1376.

- 32. Riley WT, Rivera DE, Atienza AA, Nilsen W, Allison SM, Mermelstein R. Health behavior models in the age of mobile interventions: are our theories up to the task? Transl Behav Med. 2011;1:53–71. doi: 10.1007/s13142-011-0021-7.

- 33. Mayer RE, Moreno R. Nine ways to reduce cognitive load in multimedia learning. Educ Psychol. 2003;38:43–52. doi: 10.1207/S15326985EP3801_6.

- 34. Brünken R, Steinbacher S, Plass JL, Leutner D. Assessment of cognitive load in multimedia learning using dual-task methodology. Exp Psychol. 2002;49:109–119. doi: 10.1027//1618-3169.49.2.109.

- 35. Baddeley A. Working memory. Science. 1992;255:556–559. doi: 10.1126/science.1736359.

- 36. Paas F, Renkl A, Sweller J. Cognitive Load Theory: instructional implications of the interaction between information structures and cognitive architecture. Instr Sci. 2004;32:1–8. doi: 10.1023/B:TRUC.0000021806.17516.d0.

- 37. Mayer RE, Sims VK. For whom is a picture worth a thousand words? Extensions of a dual-coding theory of multimedia learning. J Educ Psychol. 1994;86:389–401. doi: 10.1037/0022-0663.86.3.389.

- 38. Harp SF, Mayer RE. How seductive details do their damage: a theory of cognitive interest in science learning. J Educ Psychol. 1998;90:414–434. doi: 10.1037/0022-0663.90.3.414.

- 39. Kalyuga S, Ayres P, Chandler P, Sweller J. The expertise reversal effect. Educ Psychol. 2003;38:23–31. doi: 10.1207/S15326985EP3801_4.

- 40. Sweller J. The redundancy principle in multimedia learning. In: Mayer RE, editor. The Cambridge Handbook of Multimedia Learning. New York: Cambridge University Press; 2005. pp. 159–167.

- 41. Cennamo K, Kalk D. Real World Instructional Design. Belmont: Thomson Wadsworth; 2005.

- 42. Kreuter MW, Strecher VJ, Glassman B. One size does not fit all: the case for tailoring print materials. Ann Behav Med. 1999;21:276–283. doi: 10.1007/BF02895958.

- 43. Bandura A. Social Foundations of Thought and Action: A Social Cognitive Theory. Englewood Cliffs: Prentice Hall; 1986.

- 44. Bandura A. Social cognitive theory and exercise of control over HIV infection. In: DiClemente R, Peterson J, editors. Preventing AIDS: Theories and Methods of Behavioral Interventions. New York: Plenum; 1994. pp. 173–201.

- 45. Gerbert B, Berg-Smith S, Mancuso M, et al. Video study of physician selection: preferences in the face of diversity. J Fam Pract. 2003;52:552–559.

- 46. McKay HG, Danaher BG, Seeley JR, Lichtenstein E, Gau JM. Comparing two web-based smoking cessation programs: randomized controlled trial. J Med Internet Res. 2008;10:e40. doi: 10.2196/jmir.993.

- 47. Project MATCH Research Group. Matching alcoholism treatments to client heterogeneity: Project MATCH posttreatment drinking outcomes. J Stud Alcohol. 1997;58:7–29.

- 48. Project MATCH Research Group. Project MATCH secondary a priori hypotheses. Addiction. 1997;92:161–1698. doi: 10.1111/j.1360-0443.1997.tb02889.x.

- 49. Swartz LH, Noell JW, Schroeder SW, Ary DV. A randomised control study of a fully automated internet based smoking cessation programme. Tob Control. 2006;15:7–12. doi: 10.1136/tc.2003.006189.

- 50. Binder C. Behavioral fluency: evolution of a new paradigm. Behav Anal. 1996;19:163–197. doi: 10.1007/BF03393163.

- 51. Binder C. Behavioral fluency: a new paradigm. Educ Technol. 1993 Oct:8–14.

- 52. Fisher JD, Fisher WA. Changing AIDS-risk behavior. Psychol Bull. 1992;111:455–474. doi: 10.1037/0033-2909.111.3.455.

- 53. Fisher JD, Fisher WA. Theoretical approaches to individual level change in HIV risk behavior. In: Peterson J, DiClemente R, editors. Handbook of HIV Prevention. New York: Kluwer Academic/Plenum; 2000. pp. 3–35.

- 54. Rossett A. Training Needs Assessment. Englewood Cliffs: Educational Technology; 1987.

- 55. Smith PL, Ragan TJ. Instructional Design. New York: Wiley/Jossey-Bass Education; 2004.

- 56. Cole-Lewis H, Kershaw T. Text messaging as a tool for behavior change in disease prevention and management. Epidemiol Rev. 2010;32:56–69. doi: 10.1093/epirev/mxq004.

- 57. Fjeldsoe BS, Marshall AL, Miller YD. Behavior change interventions delivered by mobile telephone short-message service. Am J Prev Med. 2009;36:165–173. doi: 10.1016/j.amepre.2008.09.040.

- 58. Chan CV, Kaufman DR. A framework for characterizing eHealth literacy demands and barriers. J Med Internet Res. 2011;13:e94. doi: 10.2196/jmir.1750.

- 59. Kalyuga S, Plass JL. Evaluating and managing cognitive load in games. In: Ferdig RE, editor. Handbook of Research on Effective Electronic Gaming in Education. Hershey: Information Science Reference; 2008. pp. 719–737.

- 60. Gagné RM, Wager WW, Golas KC, Keller JM. Principles of Instructional Design. Belmont: Wadsworth/Thomson Learning; 2005.