Abstract

The brain representation of the body, called the body schema, is susceptible to plasticity. For instance, subjects experiencing a rubber hand illusion develop a sense of ownership of a mannequin hand when they view it being touched while tactile stimuli are simultaneously applied to their own hand. Here, the cortical basis of such an embodiment was investigated through concurrent recordings from primary somatosensory (i.e., S1) and motor (i.e., M1) cortical neuronal ensembles while two monkeys observed an avatar arm being touched by a virtual ball. Following a period when virtual touches occurred synchronously with physical brushes of the monkeys' arms, neurons in S1 and M1 started to respond to virtual touches applied alone. Responses to virtual touch occurred 50 to 70 ms later than to physical touch, consistent with the involvement of polysynaptic pathways linking the visual cortex to S1 and M1. We propose that S1 and M1 contribute to the rubber hand illusion and that, by taking advantage of plasticity in these areas, patients may assimilate neuroprosthetic limbs as parts of their body schema.

Keywords: multielectrode recordings, cortical plasticity

In the early 1900s, Head and Holmes introduced the concept of the “body schema” to describe the spatial model of the body that the brain builds from sensory inputs from the skin, joints, and muscles, as well as from visual and auditory signals (1). Numerous studies since then have explored different aspects of the body schema (2–6), particularly the role of cortical areas (7, 8). The accumulated literature indicates that the body schema is plastic and can even incorporate artificial tools (5, 9, 10). A striking example of body schema plasticity is the rubber hand illusion (RHI), in which subjects start to perceive a mannequin hand as their own after their real hand, hidden from sight, and the mannequin hand are repeatedly touched at the same time (11–13). Subjects do not perceive a third limb but report a shift in position sense from the real arm toward the fake one (11–14), and the skin temperature of the real arm even decreases (15). The incorporation of artificial limbs into the body schema has received further attention with the advancement of brain-machine interfaces (BMIs), hybrid systems that connect the brain with external devices (16–19). Here, we recorded cortical ensemble activity in monkeys exposed to the paradigm that elicits the RHI in humans (11–14, 20).

Results

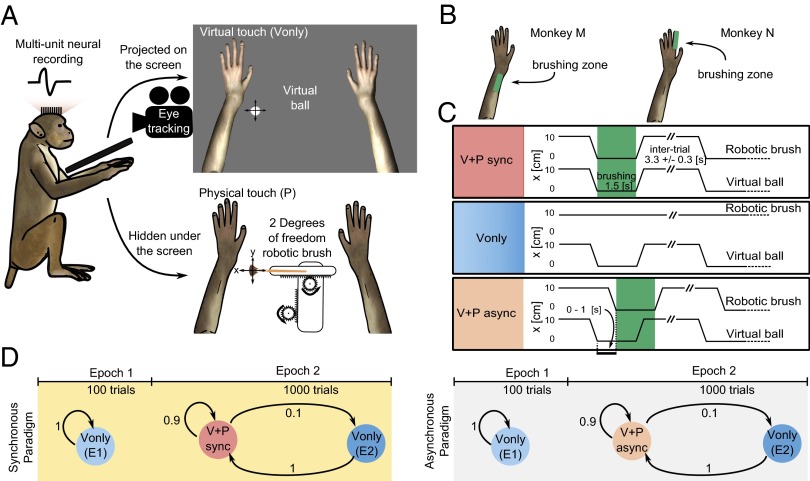

Monkeys M and N were chronically implanted with microwire arrays in the primary motor (M1) and primary somatosensory (S1) cortices. They observed a 3D image of a virtual arm (an avatar arm) being touched by a virtual ball on an LCD screen while a robot slid a physical brush across the skin of their real arms (Fig. 1 A and B). The virtual touch (V) and physical touch (P) were either synchronous or asynchronous (Fig. 1C). In a subset of trials, the virtual touch was presented alone (Vonly).

Fig. 1.

Experimental paradigm. (A) The monkey’s arms were restrained underneath a computer screen. Three-dimensional virtual arms were shown on the screen, with size and position approximating those of the real arms. Physical touches were applied with a brush mounted on a robot. (B) Skin locations to which brushing was applied. (C) Combinations of virtual and physical touch: V+P sync (Top), Vonly (Middle), and V+P async (Bottom). (D) Trial sequence: E1 consisted of 100 Vonly trials, and E2 consisted of 100 Vonly trials intermingled with 900 V+P trials. The synchronous paradigm is shown on the left (yellow background) and the asynchronous paradigm on the right (gray).

Excitatory and Inhibitory Responses to Physical Touch.

Experiments with monkey M started with epoch 1 (E1), which consisted of 100 Vonly trials, followed by epoch 2 (E2), in which 100 Vonly trials were intermingled with 900 virtual plus physical (V+P) trials (Fig. 1D). For monkey N, experiments contained only E2. Two synchronous V+P (V+P sync) sessions and one asynchronous V+P (V+P async) session were conducted on separate days for each monkey. Neurons in S1 and M1 clearly responded in V+P sync trials (Figs. 2 and 3), as confirmed by cumulative sum analysis (21, 22). Excitatory responses prevailed (122 excitatory vs. 8 inhibitory responses in the two monkeys combined; Table S1). For monkey M, neuronal responses occurred throughout the brushing period because the receptive fields (RFs) of its neurons were comparable in size with the brushed area on the forearm and were distributed over that area. For monkey N, responses were prominent toward the end of the brushing period, when the brush entered the smaller RFs at the base of the index finger.
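
The cumulative sum analysis is cited here (21, 22) but not specified. As an illustration only, here is a minimal sketch of one common CUSUM variant. It assumes a PSTH in fixed-width bins whose first bins form the prestimulus baseline. Deviations from the baseline mean are accumulated after stimulus onset, and a response is flagged when the running sum leaves a band that grows with the square root of the number of accumulated bins. The function name `cusum_response` and the threshold multiplier `k` are hypothetical and are not parameters reported in the study.

```python
from statistics import mean, stdev


def cusum_response(psth, n_baseline, k=3.0):
    """Detect an excitatory or inhibitory response in a binned PSTH via CUSUM.

    psth       -- firing rate per bin (spikes/s); the first n_baseline bins are prestimulus
    n_baseline -- number of baseline bins (at least 2, so that an SD can be computed)
    k          -- hypothetical threshold multiplier
    Returns (label, onset_bin): label is "excitatory", "inhibitory", or None.
    """
    baseline = psth[:n_baseline]
    mu, sd = mean(baseline), stdev(baseline)
    total = 0.0
    for i, rate in enumerate(psth[n_baseline:]):
        total += rate - mu  # cumulative deviation from the baseline mean
        # A sum of (i + 1) independent deviations has SD of about sd * sqrt(i + 1).
        limit = k * sd * (i + 1) ** 0.5
        if total > limit:
            return "excitatory", n_baseline + i
        if total < -limit:
            return "inhibitory", n_baseline + i
    return None, None
```

The square-root band keeps the false-alarm rate roughly constant as more post-stimulus bins are accumulated. A fixed threshold would make late, random drifts increasingly likely to be flagged.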

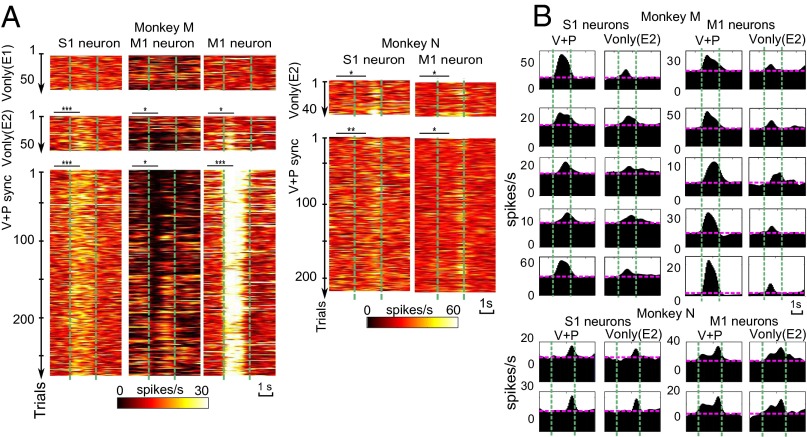

Fig. 2.

Example neurons. (A) Single-trial PSTHs for three neurons in monkey M and two neurons in monkey N. From top to bottom: Vonly trials during E1 (monkey M only), Vonly trials during E2, and V+P sync trials during E2. Vertical lines represent the onset and offset of the brushing. Asterisks indicate significant deviations of neuronal rates from baseline by Wilcoxon test: *P < 0.05, **P < 0.01, and ***P < 0.001. (B) Representative PSTHs for V+P sync and Vonly conditions. Pink horizontal lines represent baseline rates.

Fig. 3.

Population responses in monkeys M and N. (A, B, F, and G) PSTHs for responses of S1 and M1 neurons in synchronous (shaded yellow) and asynchronous (gray) sessions. Firing rates are normalized to z-scores. In all plots, only neurons with significant excitatory responses to physical touch are shown. The neurons are shown in ranked order from the weakest (Top) to the strongest (Bottom) responses. Color plots show the same set of neurons for Vonly from E1 (Top), Vonly from E2 (Middle), and V+P (Bottom). Vertical green lines represent onsets and offsets of the stimuli. Average PSTHs for the population are indicated by black lines superimposed on the color plots. Asterisks indicate significant differences between response rate and baseline by Wilcoxon test: *P < 0.05, **P < 0.01, and ***P < 0.001. (C, D, H, and I) Average and cumulative PSTHs for the population for monkey M (C and D) and monkey N (H and I). Pink lines represent the mean and SD. (E and J) Distribution histograms for the difference in response onsets between V+P sync trials and Vonly trials for S1 (Upper) and M1 (Lower) neurons. Positive values indicate that V+P sync responses occurred earlier than Vonly responses. The red dashed line is the Gaussian fit. Arrows mark mean values. Mean and SEM are indicated.

In monkey M, 62 neurons were sampled in S1 and 82 in M1. In V+P sync trials, 71% of S1 neurons (n = 44) had excitatory responses, none had inhibitory responses, and 29% (n = 18) were not modulated (Table S1). In M1, 78% of neurons (n = 64) were excited, 6% (n = 5) were inhibited, and 16% (n = 13) were not modulated. In monkey N, 15 neurons were sampled in S1 and 34 in M1. In V+P sync trials, 33% of S1 neurons (n = 5) were excited, none had inhibitory responses, and 67% (n = 10) did not respond (Table S1). In M1, 26% of neurons (n = 9) were excited, 9% (n = 3) were inhibited, and 65% (n = 22) did not respond.

Responses to Virtual Touch.

A substantial number of S1 and M1 neurons with V+P responses started to respond in Vonly trials during E2 (Figs. 2 and 3 and Tables S1 and S2). In monkey M, such Vonly responses occurred in 50% (n = 31) of the 62 S1 neurons (all excited, none inhibited) and in 70% (n = 57) of the 82 M1 neurons (51 excited vs. 6 inhibited; Table S1). In monkey N, Vonly responses occurred in 40% (n = 6) of 15 S1 neurons (five excited vs. one inhibited) and in 38% (n = 13) of 34 M1 neurons (eight excited vs. five inhibited). Fig. 2A, Left, illustrates peristimulus time histograms (PSTHs) for all individual trials of one S1 neuron and two M1 neurons from monkey M. Arm electromyograms (EMGs) were sampled simultaneously (Fig. S1). These neurons did not have Vonly responses during E1 but responded in both V+P sync and Vonly trials during E2. One neuron (Fig. 2A, Center) was inhibited, whereas the other two were excited. Fig. 2A, Right, shows two monkey N neurons with excitatory responses during both V+P sync and Vonly trials of E2, and Fig. 2B depicts average PSTHs with excitatory responses for both monkeys.

Cortical responses during Vonly trials could not be explained by arm movements, as shown by EMG controls (Fig. S1). EMG activity occurred sporadically but was not time locked to the stimuli (P > 0.5, Wilcoxon test), and it decreased as the session continued, whereas the S1 and M1 responses remained high (Fig. 2A).
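
The Wilcoxon tests used here compare paired values, for example trial-by-trial rates in a response window against the same trials' baseline rates. Below is a minimal pure-Python sketch of the two-sided signed-rank test using the normal approximation. It assumes pairing by trial and omits tie and continuity corrections. For real analyses, a library routine such as `scipy.stats.wilcoxon` would be preferable. The function name is hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired samples (normal approximation).

    x, y -- paired per-trial values, e.g., response-window rate and baseline rate.
    Returns (w_plus, p_value). Zero differences are dropped; tied |d| get average
    ranks. No tie or continuity correction is applied, so p is approximate.
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal
    return w_plus, p
```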

Fig. 3 depicts population PSTHs, their averages, and their cumulative sums for neurons with significant V+P responses. Vonly responses occurred only during E2 of the synchronous paradigm.

Response Magnitude.

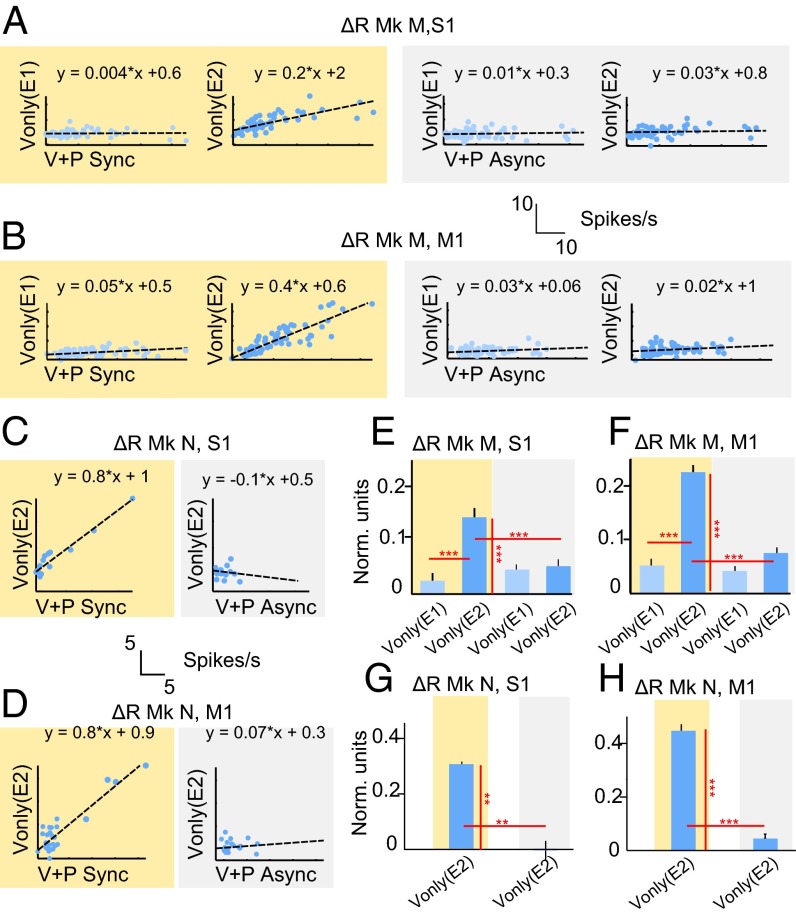

For the neurons shown in Fig. 3, firing rates were measured before and after stimulus onset for each monkey, area, and paradigm (Table S2). Response magnitude was quantified as the rate change from the prestimulus baseline (ΔR) and was expressed both in spikes per second and in normalized units (z-scores).

In monkey M, during the synchronous paradigm, ΔR in Vonly trials was approximately half of ΔR in V+P sync trials (Table S2). S1 neurons with excitatory responses increased their firing rate from a baseline of 34.9 ± 2.7 to 44.8 ± 3.5 spikes per second in V+P sync trials (ΔR of 9.9 ± 2.7 spikes per second) and from 34.9 ± 2.7 to 39.0 ± 2.9 spikes per second (ΔR of 4.2 ± 2.7 spikes per second) in Vonly trials of E2. M1 neurons increased their rate from 17.7 ± 2.0 to 29.6 ± 2.9 spikes per second (ΔR of 12.0 ± 2.0 spikes per second) in V+P sync trials and from 17.1 ± 1.9 to 22.6 ± 2.4 spikes per second (ΔR of 5.5 ± 1.9 spikes per second) in Vonly trials of E2. Neither S1 nor M1 neurons responded in Vonly trials of E1 (ΔR of 0.7 ± 3.0 and 1.1 ± 2.0 spikes per second, respectively).

In monkey N, for which only E2 data were collected, Vonly responses were of the same magnitude as V+P sync responses, which may indicate a stronger RHI in that monkey than in monkey M (Table S2). S1 neurons with excitatory responses increased their rates from 16.0 ± 1.4 to 23.5 ± 4.0 spikes per second (ΔR of 7.5 ± 1.4 spikes per second) in V+P sync trials and from 15.8 ± 1.5 to 22.2 ± 3.4 spikes per second (ΔR of 6.5 ± 1.5 spikes per second) in Vonly trials. Excited M1 neurons increased their rates from 25.8 ± 6.1 to 35.0 ± 6.2 spikes per second (ΔR of 9.2 ± 6.1 spikes per second) in V+P sync trials and from 24.9 ± 5.8 to 33.7 ± 6.2 spikes per second (ΔR of 8.9 ± 5.8 spikes per second) in Vonly trials.

Vonly responses, measured in normalized units, were significantly higher during E2 than during E1 for both S1 (P < 0.001, Wilcoxon test; Fig. 4E) and M1 (P < 0.001; Fig. 4F).
Furthermore, the magnitude of Vonly responses during E2 was correlated with the magnitude of V+P sync responses for monkey M (P < 0.001 for S1 and P < 0.001 for M1, t test; Fig. 4 A and B, Left) and for monkey N (P < 0.001 for S1 and M1; Fig. 4 C and D, Left). No such correlation was found between Vonly responses during E1 and V+P sync responses during E2 in monkey M (P > 0.1 for S1 and M1, t test; Fig. 4 A and B, Left).
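
The regression lines in Fig. 4 A–D relate each neuron's Vonly response magnitude to its V+P response magnitude. Below is a minimal sketch of an ordinary least-squares fit with the usual t statistic for a nonzero correlation. It assumes one (V+P, Vonly) pair per neuron. The function name is hypothetical. The P value would come from Student's t distribution with n − 2 degrees of freedom, for example via `scipy.stats.t.sf`.

```python
import math


def regress_vonly_on_vp(vp, vonly):
    """Least-squares fit vonly = slope * vp + intercept across neurons.

    vp, vonly -- per-neuron response magnitudes (spikes/s) in V+P and Vonly trials.
    Returns (slope, intercept, r, t), where t = r * sqrt(n - 2) / sqrt(1 - r**2)
    tests r != 0 against Student's t with n - 2 degrees of freedom.
    """
    n = len(vp)
    mx = sum(vp) / n
    my = sum(vonly) / n
    sxx = sum((x - mx) ** 2 for x in vp)
    syy = sum((y - my) ** 2 for y in vonly)
    sxy = sum((x - mx) * (y - my) for x, y in zip(vp, vonly))
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / math.sqrt(sxx * syy)
    # A perfect correlation makes the denominator zero; report an infinite t.
    t = math.inf if abs(r) == 1 else r * math.sqrt(n - 2) / math.sqrt(1 - r * r)
    return slope, intercept, r, t
```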

Fig. 4.

Analysis of responses to virtual and physical touch. (A–D) Scatter plots for the correlation analysis of responses in different conditions. Each dot represents an individual neuron. V+P response magnitude (in spikes per second) is plotted against Vonly response magnitude. The results of linear regression are indicated by dashed lines, and corresponding functions are shown above the figures. (E–H) Bar plots representing mean and SEM for response amplitude in Vonly trials across cortical areas, monkeys, epochs (i.e., E1 and E2), and experimental conditions. Synchronous sessions are indicated by yellow background, asynchronous by gray background. Firing rates are normalized to z-scores. Only the neurons with significant excitatory responses to physical touch were considered. Asterisks indicate significant differences by Wilcoxon test: *P < 0.05, **P < 0.01, and ***P < 0.001.

Asynchronous Physical and Virtual Touch.

Vonly responses did not emerge under the asynchronous paradigm (Fig. 4 A–D and Tables S1 and S2). In monkey M, they occurred in only 4 of 62 S1 neurons (6%; one excited vs. three inhibited) and 4 of 82 M1 neurons (5%; two excited vs. two inhibited; Table S1). In monkey N, Vonly responses occurred in 2 of 15 S1 neurons (13%; one excited vs. one inhibited) and 2 of 34 M1 neurons (6%; one excited vs. one inhibited). In addition, V+P async responses in monkey N were somewhat weaker than V+P sync responses in the same monkey (Fig. 3 F–I); whether this difference reflects statistical variability remains to be tested in future studies. The absence of Vonly responses under the asynchronous paradigm is clear from the average and cumulative PSTHs (Fig. 3 C, D, H, and I).

Additionally, the analyses of response magnitude and correlation (Fig. 4 E–H) indicate clear differences between synchronous and asynchronous sessions. Vonly responses during E2 were significantly higher under the synchronous paradigm than under the asynchronous one for both monkey M (Fig. 4 E and F) and monkey N (Fig. 4 G and H). Finally, no correlation was found between Vonly and V+P async responses during E2 for either monkey (P > 0.1, t test; Fig. 4 A–D).

For the asynchronous sessions, we investigated whether the time lag between the virtual and physical touches had an effect on cortical responses. These analyses did not reveal any significant effect.

Response Latencies.

For monkey M, the Vonly responses of S1 neurons lagged the V+P sync responses by 67 ± 3.5 ms (mean ± SEM), and those of M1 neurons lagged by 55 ± 3.8 ms (Fig. 3E). For monkey N, the lag was 53 ± 20 ms for S1 and 60 ± 12 ms for M1 (Fig. 3J). For monkey M, whose neurons responded clearly to the onset of brushing, the response latency to physical touch was 30 ± 7 ms for S1 neurons and 25 ± 10 ms for M1 neurons (not significantly different). Thus, V+P sync responses occurred ∼30 ms after stimulus onset, and Vonly responses occurred ∼90 ms after stimulus onset.
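
The ∼90 ms estimate combines the physical-touch latency with the cross-correlation lags reported above. The worked check below uses the rounded ∼30 ms latency and the range of mean lags across monkeys and areas (53 to 67 ms):

```latex
t_{\text{Vonly}} \approx t_{\text{V+P}} + \Delta t_{\text{lag}}
  \approx 30\ \text{ms} + (53 \text{ to } 67)\ \text{ms}
  = 83 \text{ to } 97\ \text{ms} \approx 90\ \text{ms}
```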

Discussion

Our experiments showed that, following synchronous V+P stimulation, neurons in S1 and M1 start to respond within minutes to virtual stimuli delivered to the avatar arm alone. This time course is consistent with the development of the sense of ownership of an artificial limb examined in humans (11–13). Although our monkeys did not report their perceptions during virtual touches, the presence of cortical responses to virtual stimulation supports our assumption that a perceptual illusion was elicited in these nonhuman primate subjects and that it was similar to the RHI in humans. Additional evidence indicates that the monkeys could treat the avatar as an integral part of their bodies (Fig. S2). Indeed, the avatar was realistic enough for the monkeys to treat it as a conspecific animal (Fig. S2 A–C). In our previous experiments, the same avatar arm served as the BMI actuator that performed active tactile exploration of virtual objects (18). Moreover, M1 and S1 neurons were attuned to the avatar movements when the monkeys passively observed it moving on the screen (Fig. S2D), and the avatar trajectory could be derived from cortical activity (Fig. S2E).

On the whole, these results suggest that the avatar was realistic enough to become an equivalent of a “rubber hand” for our monkeys. Accordingly, we propose that the occurrence of sensory-evoked responses in S1 and M1 neurons to virtual touches of the avatar may contribute to the experience of the RHI. Future studies will have to address whether these S1 and M1 firing modulations are essential for the illusion or are secondary to the activity of associative areas, for example, the activity of bimodal neurons in premotor and posterior parietal areas previously shown to play a role in the RHI in humans (12).

Several previous studies reported visually evoked responses in S1. Activation of somatosensory cortical areas has been reported in human fMRI (2, 4, 5) and EEG (8) studies in which subjects observed images of body parts being touched. Such cortical activations are often interpreted as mirror responses to the observation of actions (5). Mirror responses are an unlikely explanation for our present results because simply viewing virtual touches without synchronous physical stimulation was insufficient for visually evoked responses to develop. S1 modulations by visual stimuli that differ from mirror responses have previously been reported in monkeys trained to perform a visuohaptic task that required short-term retention of tactile information (23).

A somewhat surprising finding was the observation of prominent V+P and Vonly responses not only in S1 but also in M1. These responses could not be explained by overt movements because there were no accompanying EMG modulations. Our additional experiments showed that S1 and M1 responded to air-puff stimulation of the skin and that these responses were also unrelated to EMGs (Fig. S3). In our opinion, the presence of somatosensory and visual responses in M1 indicates that the cortical mediation of the RHI is widely distributed throughout the frontoparietal circuit. The rich interconnections between M1 and S1, as well as their connections with adjacent areas in the frontal and parietal lobes, provide a neuroanatomical substrate for such a distributed representation (24). The absence of strict segregation of physiological functions between somatosensory and motor areas has been postulated since the mid-1900s (25). Several studies that used multielectrode recordings in rodents and primates have provided further support for this notion (26, 27). Our recent BMI studies suggest that individual S1 and M1 neurons may contribute to multiple computations simultaneously (28). Moreover, both multielectrode recordings (29) and optical imaging (30) have revealed a highly distributed and dynamic representation of multiple information streams in the cortex.

Although our data do not pinpoint the source of visually driven responses in S1 and M1 neurons, the long response latencies suggest that such responses may emerge through polysynaptic pathways linking visual cortical areas to the sensorimotor cortex (24). Because evidence for direct projections from visual areas to S1 and M1 in monkeys is lacking to date, the most likely sources of such inputs are posterior parietal and premotor areas, which are one synapse away from S1 and M1. Traditionally, S1 and M1 have been associated with primary processing of sensory and motor signals, whereas posterior parietal and premotor areas have been considered multimodal, integrative areas where the body schema is constructed. However, in a highly interconnected frontoparietal circuitry, it is practically impossible for information to remain confined to a single area. Therefore, it is not surprising that the responses of bimodally driven S1 and M1 neurons resemble those reported by Graziano et al. in the premotor and posterior parietal cortices (31, 32).

Previously, posterior parietal and premotor neurons were shown to generate anticipatory activity preceding the tactile responses during active touch (33). That study suggested that predictive and planning components of active touch were processed mostly in the posterior parietal and premotor areas, whereas S1 and M1 handled performance and feedback. However, this functional dissociation was not absolute. Furthermore, S1 neurons in rats exhibit anticipatory activity before whisker contact with an object (34). Avillac et al. (35) argued that the ventral intraparietal cortex represents visual and tactile information through a process of predictive multisensory integration. In theory, similar anticipatory mechanisms could have played a role in our study. However, we did not observe considerable activity preceding the skin touch. In particular, the Vonly responses were rather late, lagging the V+P responses by 50 to 70 ms. This lack of anticipatory firing may reflect the fact that our monkeys were not performing an active tactile discrimination. Rather than anticipatory activity, a more plausible explanation is that S1 neurons were conditioned by V+P sync trials to respond to the virtual touches. Such an interpretation would still be consistent with the common representation of visual and tactile information suggested by Avillac et al. (35).

Our present study has implications for the future design of neuroprosthetic devices controlled by BMIs (17). It suggests that rehabilitation training paradigms that take advantage of the cross-modal cortical plasticity observed here will likely not only enhance patients’ proficiency in the use of neuroprostheses but also provide them with a renewed sense of ownership of these devices.

Methods

Subjects and Implants.

All animal procedures were performed in accordance with the National Research Council’s Guide for the Care and Use of Laboratory Animals and were approved by the Duke University Institutional Animal Care and Use Committee. Two adult rhesus macaque monkeys (Macaca mulatta) were implanted with 96-microwire arrays made of 304 stainless steel (16–18). Within each array, microwires were grouped in two four-by-four grids, each consisting of 16 electrode triplets. The separation between adjacent triplets was 1 mm. The electrodes in each triplet had three different lengths, increasing in 300-μm steps. The penetration depth of each triplet was adjusted with a miniature screw. The longest electrode in each triplet penetrated to a depth of 2 mm, measured from the cortical surface. Each hemisphere received two arrays: one in the upper-limb representation area and one in the lower-limb representation area. These arrays sampled neurons in both M1 and S1. Because the dura mater was opened during surgery, all cortical surface features were clearly identified before implantation. We recorded from the right-hemisphere arm arrays in each monkey.
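
The array geometry described above can be made explicit as a small data structure. The sketch below assumes that all three electrodes of a triplet share the same insertion reference, so the 300-μm length steps place their tips at 2.0, 1.7, and 1.4 mm below the cortical surface. Constant and function names are hypothetical. The offset between the two grids is not given in the text, so positions are expressed within each grid.

```python
from itertools import product

# Hypothetical constants transcribed from the Methods text.
N_GRIDS = 2            # two four-by-four grids per array
GRID_SIZE = 4          # 4 x 4 triplets per grid
PITCH_MM = 1.0         # separation between adjacent triplets
WIRES_PER_TRIPLET = 3
DEPTH_STEP_MM = 0.3    # 300-um length steps within a triplet
MAX_DEPTH_MM = 2.0     # depth of the longest electrode


def electrode_layout():
    """List (grid, x_mm, y_mm, depth_mm) for every microwire in one array.

    x and y are positions within a grid; the offset between grids is unknown
    and therefore omitted.
    """
    layout = []
    for grid, row, col in product(range(N_GRIDS), range(GRID_SIZE), range(GRID_SIZE)):
        for k in range(WIRES_PER_TRIPLET):
            depth = round(MAX_DEPTH_MM - k * DEPTH_STEP_MM, 3)
            layout.append((grid, col * PITCH_MM, row * PITCH_MM, depth))
    return layout
```

The count is a consistency check on the text: 2 grids × 16 triplets × 3 wires = 96 microwires.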

RFs and Identification of Recording Sites.

We recorded from the right cerebral hemisphere while delivering tactile and visual stimuli to the left side of the body and/or the avatar. Physical brushing was applied to the forearm for monkey M and to the index finger for monkey N (Fig. 1B). These were the locations where the RFs of most S1 neurons were found in an examination that involved manually touching the skin with a cotton swab. S1 neurons had sensitive RFs clearly localizable on the animal’s arm or hand. The S1 somatotopic maps were consistent with those of previous studies. RFs were less clearly localized for M1 neurons than for S1 neurons. Passive movements of the fingers, hand, and arm were typically effective in activating M1 neurons.

An additional test that we conducted to physiologically distinguish M1 from S1 was intracortical microstimulation. Low-current microstimulation (biphasic pulses at 100 Hz; 100-µs pulse width; 75 µA) applied to M1 evoked clear motor responses and EMG bursts (36), whereas the same low currents did not evoke motor responses when applied to S1.

Overall, the anatomical landmarks found during the implantation surgery, examination of RFs, and microstimulation tests assured us that M1 and S1 were localized correctly.

Primate Setup.

We used a custom-modified primate chair (Crist Instruments). The chair had armrests angled at 23° from the horizontal plane to position the monkeys’ arms parallel to the screen. The monkeys’ arms were placed on the armrests and restrained with soft braces. Special care was taken to remove postural incongruency that could weaken or eliminate the RHI. We started with pilot studies to find positions for the armrests, the chair, and the monitor that prevented a direct view of the arms but allowed an appropriate view of the virtual arms. We scaled the virtual arms in the visualization software to approximate the size of the physical arms. The LCD screen was positioned 40 cm from the monkeys’ eyes and raised by only 10 cm relative to the armrests.

Behavioral Training.

Monkeys were introduced to the experimental apparatus several weeks before the recording sessions. Calm sitting in the chair with restrained arms while gazing at the screen was reinforced with fruit juice. The brushing stimuli were then introduced. Although both monkeys initially reacted with movements upon being brushed, gradually they acclimated to the stimuli and relaxed.

EMG Recording.

Surface EMGs were recorded with a 16-channel EMG system (Delsys). We recorded from the following muscles on both arms: biceps, deltoid, triceps, wrist flexors, and wrist extensors.

Robotic Brush.

To ensure spatial congruency between the visual and physical stimuli, we used a custom 2D serial Cartesian robot with a fast control loop (1 kHz) and precise (submillimeter) positioning. A soft brush was mounted on the end effector of the robot. A position-controlled Maxon DC motor, equipped with encoders, precisely moved the robotic actuator along the trajectory that matched the brush location shown on the screen. This control strategy resulted in accurate, reliable, and repeatable touches of the monkey’s arm. The skin locations touched by the physical brush corresponded closely to the locations touched by the virtual ball. This was ensured through the following steps. The monkey’s arm was shaved before the experimental sessions so that we could precisely designate the location and onset of the physical touch and match the spatial and temporal characteristics of the physical brushing with the virtual touch. To set the touch points, the robot end effector was brought into close contact with the monkey’s arm. The motors were turned off, but the encoders remained active and recorded the precise position of each touch point. The corresponding touch points for the virtual ball were programmed in the virtual reality software (Autodesk MotionBuilder). The instants at which the brush made contact with the skin were detected offline from the positions registered by the encoders of the robot motors. Fig. S4 shows the robot’s path recorded by the encoders.
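
The offline contact detection is described only in outline. The minimal sketch below shows one way it could be done. It assumes that the encoders log 2D positions once per 1-ms control cycle and that contact onset is the first sample at which the end effector comes within a small tolerance of a calibrated touch point. `contact_onsets` and `tol_mm` are hypothetical, and this is not the authors' code.

```python
import math


def contact_onsets(positions, touch_points, tol_mm=0.5):
    """Return sorted sample indices at which the brush enters each touch point.

    positions    -- (x, y) encoder positions in mm, assumed logged once per 1-ms cycle
    touch_points -- (x, y) positions recorded with motors off and the brush on the skin
    tol_mm       -- hypothetical proximity tolerance
    """
    onsets = []
    for tx, ty in touch_points:
        inside = False
        for i, (x, y) in enumerate(positions):
            near = math.hypot(x - tx, y - ty) <= tol_mm
            if near and not inside:  # entering the tolerance zone marks an onset
                onsets.append(i)
            inside = near
    return sorted(onsets)
```

With a 1-ms sampling period, an onset index converts directly to milliseconds from the start of the log.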

Eye Tracking and Rewards.

The monkeys’ heads were unrestrained, and their gaze direction was monitored continuously with a custom eye tracker designed for this experiment and based on the open-source TLD/Predator tracking algorithm (37). The monkey was rewarded for looking at the screen at random intervals of 350 ± 50 ms. The reward duration started at 35 ms and increased to 70 ms by the end of the session to maintain motivation. Periods when the monkey looked away or closed its eyes were not rewarded. Trials in which the monkey looked at the screen for less than 90% of the trial duration were excluded from the analyses.
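
The gaze-based trial exclusion and the reward timing can be sketched as follows. The sketch assumes per-sample on-screen flags from the tracker, a uniform distribution for the 350 ± 50 ms reward interval, and a linear ramp of reward duration from 35 to 70 ms. The distribution and the ramp shape are illustrative assumptions, and all names are hypothetical.

```python
import random


def keep_trial(on_screen, min_fraction=0.9):
    """Return True if gaze was on the screen for at least min_fraction of the trial.

    on_screen -- per-sample gaze flags (1 or True = looking at the screen)
    """
    if not on_screen:
        return False
    return sum(on_screen) / len(on_screen) >= min_fraction


def reward_times(session_s, rng=None, start_ms=35.0, end_ms=70.0):
    """Yield (time_ms, duration_ms) for candidate rewards over a session.

    Intervals are drawn uniformly from 300 to 400 ms (350 +/- 50 ms), and the
    duration ramps linearly from start_ms to end_ms. A reward would be delivered
    only if the monkey is looking at the screen at that moment.
    """
    rng = rng or random.Random()
    total_ms = session_s * 1000.0
    t = 0.0
    while True:
        t += rng.uniform(300.0, 400.0)
        if t > total_ms:
            return
        yield t, start_ms + (t / total_ms) * (end_ms - start_ms)
```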

Neural Responses Analysis.

To evaluate response magnitude, a 200-ms window was centered on the peak response estimated from the PSTH, and the response rate was calculated as the average firing rate within this window. Response amplitude, ΔR, was calculated as the difference between this response rate and the mean baseline rate before the stimulus (from −1.2 to −0.2 s relative to stimulus onset). We used both raw and normalized rates to calculate ΔR. Normalized rates (z-scores) were derived from the raw rates by subtracting the mean and dividing by the SD. Normalized rates were useful for population plots and population averages because they removed the bias in favor of neurons with high firing rates.
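
The ΔR computation can be written out as a minimal sketch under several assumptions. The PSTH is given as bin centers (in seconds, relative to stimulus onset) and rates (spikes per second). The peak is taken as the post-stimulus bin with the largest absolute deviation from baseline, so that inhibitory responses are also captured. Z-scoring uses the mean and SD of the baseline bins. The text does not specify which mean and SD were used, so that last choice is an assumption. The function name is hypothetical.

```python
from statistics import mean, stdev

BASELINE_S = (-1.2, -0.2)  # baseline window relative to stimulus onset (from the Methods)
HALF_WINDOW_S = 0.1        # half-width of the 200-ms response window


def delta_r(times_s, rates):
    """Return (delta_r_spikes_per_s, delta_r_z) for one neuron's PSTH.

    times_s -- bin centers in seconds relative to stimulus onset
    rates   -- firing rate per bin in spikes/s
    """
    base = [r for t, r in zip(times_s, rates) if BASELINE_S[0] <= t < BASELINE_S[1]]
    mu, sd = mean(base), stdev(base)
    post = [(t, r) for t, r in zip(times_s, rates) if t >= 0]
    # Peak = post-stimulus bin deviating most from baseline (up or down).
    t_peak, _ = max(post, key=lambda tr: abs(tr[1] - mu))
    window = [r for t, r in zip(times_s, rates) if abs(t - t_peak) <= HALF_WINDOW_S]
    dr = mean(window) - mu
    return dr, dr / sd  # raw and baseline-normalized amplitudes
```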

Monkey Avatar.

The monkey avatar was designed with Autodesk 3ds Max software based on the morphology of monkey M. A custom texture was designed from a mix of the real textures of monkeys M and N. The avatar arms were shown from a first-person perspective on a uniform gray background. The virtual ball was represented by a white sphere.

Cross-Correlation Analysis of Response Latency.

Latencies of Vonly and V+P sync responses were compared using cross-correlation of the corresponding PSTHs. The lag between these responses was taken as the time of the peak of the cross-correlation function. We preferred this method to calculating latencies directly from the PSTHs because it avoids overestimating the latency of the weaker Vonly responses. Cross-correlation functions were calculated for all possible pairs of neurons. Distributions of the peak times of these functions (i.e., lags between Vonly and V+P sync responses) are shown in Fig. 3 E and J.
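
The cross-correlation lag estimate can be sketched as follows, assuming equally binned Vonly and V+P sync PSTHs aligned to stimulus onset. Both PSTHs are mean-subtracted before correlation, which is an assumption. A positive lag means that the Vonly response occurs later, matching the sign convention of Fig. 3 E and J. The function name and parameters are hypothetical.

```python
def xcorr_lag(vp, vonly, bin_ms, max_lag_bins):
    """Lag (ms) at which the Vonly PSTH best matches the V+P sync PSTH.

    vp, vonly    -- equally binned PSTHs of the same length, aligned to stimulus onset
    bin_ms       -- bin width in milliseconds
    max_lag_bins -- largest shift examined in either direction
    Positive lag means the Vonly response occurs later than the V+P response.
    """
    n = len(vp)
    ma, mb = sum(vp) / n, sum(vonly) / n
    # Mean-subtract so the peak reflects response shape, not baseline rate.
    a = [x - ma for x in vp]
    b = [x - mb for x in vonly]
    best_lag, best_val = 0, float("-inf")
    for lag in range(-max_lag_bins, max_lag_bins + 1):
        # c(lag) = sum over t of a[t] * b[t + lag], using indices valid in both series
        val = sum(a[t] * b[t + lag] for t in range(max(0, -lag), min(n, n - lag)))
        if val > best_val:
            best_lag, best_val = lag, val
    return best_lag * bin_ms
```

Using the peak of the whole cross-correlation function, rather than a threshold crossing, makes the estimate less sensitive to response amplitude. This is the rationale the text gives for preferring the method with weaker Vonly responses.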

Supplementary Material

Acknowledgments

We thank Dragan Dimitrov, Laura Oliveira, and Tamara Phillips for conducting neurosurgeries; Johan Fruh for the design of the avatar; Laura Santos Carreras, Gary Lehew, and James Meloy for engineering support; and Susan Halkiotis for editorial support. This research was supported by National Institutes of Health/National Institute of Mental Health Award DP1MH099903 (to M.A.L.N.), National Institute of Neurological Disorders and Stroke Grant R01NS073952 (to M.A.L.N.), and Ecole Polytechnique Fédérale de Lausanne, School of Engineering, Institute of Microengineering, Laboratoire de Systèmes Robotiques 1 Grant 1016-1 (to H.B.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1308459110/-/DCSupplemental.

References

- 1. Head H, Holmes G. Sensory disturbances from cerebral lesions. Brain. 1911;34:102–254.

- 2. Blakemore SJ, Bristow D, Bird G, Frith C, Ward J. Somatosensory activations during the observation of touch and a case of vision-touch synaesthesia. Brain. 2005;128(Pt 7):1571–1583. doi: 10.1093/brain/awh500.

- 3. Holmes NP, Spence C. The body schema and the multisensory representation(s) of peripersonal space. Cogn Process. 2004;5(2):94–105. doi: 10.1007/s10339-004-0013-3.

- 4. Keysers C, et al. A touching sight: SII/PV activation during the observation and experience of touch. Neuron. 2004;42(2):335–346. doi: 10.1016/s0896-6273(04)00156-4.

- 5. Schaefer M, Xu B, Flor H, Cohen LG. Effects of different viewing perspectives on somatosensory activations during observation of touch. Hum Brain Mapp. 2009;30(9):2722–2730. doi: 10.1002/hbm.20701.

- 6. Iriki A, Tanaka M, Iwamura Y. Coding of modified body schema during tool use by macaque postcentral neurones. Neuroreport. 1996;7(14):2325–2330. doi: 10.1097/00001756-199610020-00010.

- 7. Blanke O. Multisensory brain mechanisms of bodily self-consciousness. Nat Rev Neurosci. 2012;13(8):556–571. doi: 10.1038/nrn3292.

- 8. Bufalari I, Aprile T, Avenanti A, Di Russo F, Aglioti SM. Empathy for pain and touch in the human somatosensory cortex. Cereb Cortex. 2007;17(11):2553–2561. doi: 10.1093/cercor/bhl161.

- 9. Maravita A, Iriki A. Tools for the body (schema). Trends Cogn Sci. 2004;8(2):79–86. doi: 10.1016/j.tics.2003.12.008.

- 10.Pazzaglia M, Galli G, Scivoletto G, Molinari M. A functionally relevant tool for the body following spinal cord injury. PLoS ONE. 2013;8(3):e58312. doi: 10.1371/journal.pone.0058312. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Botvinick M, Cohen J. Rubber hands ‘feel’ touch that eyes see. Nature. 1998;391(6669):756. doi: 10.1038/35784. [DOI] [PubMed] [Google Scholar]

- 12.Ehrsson HH, Holmes NP, Passingham RE. Touching a rubber hand: Feeling of body ownership is associated with activity in multisensory brain areas. J Neurosci. 2005;25(45):10564–10573. doi: 10.1523/JNEUROSCI.0800-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Tsakiris M, Haggard P. The rubber hand illusion revisited: Visuotactile integration and self-attribution. J Exp Psychol Hum Percept Perform. 2005;31(1):80–91. doi: 10.1037/0096-1523.31.1.80.

- 14. Armel KC, Ramachandran VS. Projecting sensations to external objects: Evidence from skin conductance response. Proc Biol Sci. 2003;270(1523):1499–1506. doi: 10.1098/rspb.2003.2364.

- 15. Moseley GL, et al. Psychologically induced cooling of a specific body part caused by the illusory ownership of an artificial counterpart. Proc Natl Acad Sci USA. 2008;105(35):13169–13173. doi: 10.1073/pnas.0803768105.

- 16. Nicolelis MA. Actions from thoughts. Nature. 2001;409(6818):403–407. doi: 10.1038/35053191.

- 17. Nicolelis MA, Lebedev MA. Principles of neural ensemble physiology underlying the operation of brain-machine interfaces. Nat Rev Neurosci. 2009;10(7):530–540. doi: 10.1038/nrn2653.

- 18. O’Doherty JE, et al. Active tactile exploration using a brain-machine-brain interface. Nature. 2011;479(7372):228–231. doi: 10.1038/nature10489.

- 19. Rincon-Gonzalez L, Warren JP, Meller DM, Tillery SH. Haptic interaction of touch and proprioception: Implications for neuroprosthetics. IEEE Trans Neural Syst Rehabil Eng. 2011;19(5):490–500. doi: 10.1109/TNSRE.2011.2166808.

- 20. Ehrsson HH, et al. Upper limb amputees can be induced to experience a rubber hand as their own. Brain. 2008;131(Pt 12):3443–3452. doi: 10.1093/brain/awn297.

- 21. Nicolelis MA, Chapin JK. Spatiotemporal structure of somatosensory responses of many-neuron ensembles in the rat ventral posterior medial nucleus of the thalamus. J Neurosci. 1994;14(6):3511–3532. doi: 10.1523/JNEUROSCI.14-06-03511.1994.

- 22. Lebedev MA, Nelson RJ. Rhythmically firing (20–50 Hz) neurons in monkey primary somatosensory cortex: Activity patterns during initiation of vibratory-cued hand movements. J Comput Neurosci. 1995;2(4):313–334. doi: 10.1007/BF00961443.

- 23. Zhou YD, Fuster JM. Visuo-tactile cross-modal associations in cortical somatosensory cells. Proc Natl Acad Sci USA. 2000;97(17):9777–9782. doi: 10.1073/pnas.97.17.9777.

- 24. Pandya DN, Kuypers HG. Cortico-cortical connections in the rhesus monkey. Brain Res. 1969;13(1):13–36. doi: 10.1016/0006-8993(69)90141-3.

- 25. Lilly JC. Distribution of ‘motor’ functions in the cerebral cortex in the conscious, intact monkey. Science. 1956;124:937.

- 26. Carmena JM, et al. Learning to control a brain-machine interface for reaching and grasping by primates. PLoS Biol. 2003;1(2):E42. doi: 10.1371/journal.pbio.0000042.

- 27. Matyas F, et al. Motor control by sensory cortex. Science. 2010;330(6008):1240–1243. doi: 10.1126/science.1195797.

- 28. Fitzsimmons NA, Lebedev MA, Peikon ID, Nicolelis MA. Extracting kinematic parameters for monkey bipedal walking from cortical neuronal ensemble activity. Front Integr Neurosci. 2009;3:3. doi: 10.3389/neuro.07.003.2009.

- 29. Nicolelis MA, Baccala LA, Lin RC, Chapin JK. Sensorimotor encoding by synchronous neural ensemble activity at multiple levels of the somatosensory system. Science. 1995;268(5215):1353–1358. doi: 10.1126/science.7761855.

- 30. Petreanu L, Mao T, Sternson SM, Svoboda K. The subcellular organization of neocortical excitatory connections. Nature. 2009;457(7233):1142–1145. doi: 10.1038/nature07709.

- 31. Graziano MS. Where is my arm? The relative role of vision and proprioception in the neuronal representation of limb position. Proc Natl Acad Sci USA. 1999;96(18):10418–10421. doi: 10.1073/pnas.96.18.10418.

- 32. Graziano MSA. The representation of extrapersonal space: A possible role for bimodal, visual-tactile neurons. In: Gazzaniga MS, editor. The Cognitive Neurosciences. Cambridge, MA: MIT Press; 1995. pp. 1021–1034.

- 33. Gardner EP, Ro JY, Babu KS, Ghosh S. Neurophysiology of prehension. II. Response diversity in primary somatosensory (S-I) and motor (M-I) cortices. J Neurophysiol. 2007;97(2):1656–1670. doi: 10.1152/jn.01031.2006.

- 34. Pais-Vieira M, Lebedev MA, Wiest MC, Nicolelis MA. Simultaneous top-down modulation of the primary somatosensory cortex and thalamic nuclei during active tactile discrimination. J Neurosci. 2013;33(9):4076–4093. doi: 10.1523/JNEUROSCI.1659-12.2013.

- 35. Avillac M, Denève S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci. 2005;8(7):941–949. doi: 10.1038/nn1480.

- 36. Hanson TL, et al. High-side digitally current controlled biphasic bipolar microstimulator. IEEE Trans Neural Syst Rehabil Eng. 2012;20(3):331–340. doi: 10.1109/TNSRE.2012.2187219.

- 37. Kalal Z, Mikolajczyk K, Matas J. Tracking-learning-detection. IEEE Trans Pattern Anal Mach Intell. 2011;34(7):1409–1422. doi: 10.1109/TPAMI.2011.239.
