Abstract

In this paper, we consider the batch mode reinforcement learning setting, where the central problem is to learn from a sample of trajectories a policy that satisfies or optimizes a performance criterion. We focus on the continuous state space case for which usual resolution schemes rely on function approximators either to represent the underlying control problem or to represent its value function. As an alternative to the use of function approximators, we rely on the synthesis of “artificial trajectories” from the given sample of trajectories, and show that this idea opens new avenues for designing and analyzing algorithms for batch mode reinforcement learning.

Keywords: Reinforcement Learning, Optimal Control, Artificial Trajectories, Function Approximators

1 Introduction

Optimal control problems arise in many real-life applications, such as engineering [40], medicine [41,35,34] or artificial intelligence [43]. Over the last decade, techniques developed by the Reinforcement Learning (RL) community [43] have become increasingly popular for addressing those types of problems. RL initially focused on how to design intelligent agents able to interact with their environment so as to optimize a given performance criterion [43]. Since the end of the nineties, many researchers have focused on the resolution of a subproblem of RL: computing high performance policies when the only information available on the environment is contained in a batch collection of trajectories. This subproblem of RL is referred to as batch mode RL [18].

Most of the techniques proposed in the literature for solving batch mode RL problems over large or continuous spaces combine value or policy iteration schemes from the Dynamic Programming (DP) theory [2] with function approximators (e.g., radial basis functions, neural networks, etc.) representing (state-action) value functions [7]. These approximators have two main roles: (i) to offer a concise representation of state-action value functions defined over continuous spaces and (ii) to generalize the information contained in the finite sample of input data. Another family of algorithms, less studied in RL, adopts a two-stage process for solving these batch mode RL problems. First, they train function approximators to learn a model of the environment and, afterwards, they use various optimization schemes (e.g., direct policy search, dynamic programming) to compute a policy which is (near-)optimal with respect to this model.

While successful in many studies, the use of function approximators for solving batch mode RL problems also has drawbacks. In particular, the black box nature of this approach makes performance analysis very difficult, and hence severely hinders the design of new batch mode RL algorithms presenting some a priori desired performance guarantees. Also, the policies inferred by these algorithms may have counter-intuitive properties. For example, in a deterministic framework, for a fixed initial state, and when the input sample contains a trajectory that has been generated by an optimal policy starting from this initial state, there is no guarantee that a function approximator-based policy will reproduce this optimal behavior. This is surprising, since a simple “imitative learning” approach would have such a desirable property.

The above observations have led us to develop a new line of research based on the synthesis of “artificial trajectories” for addressing batch mode RL problems. In our approach, artificial trajectories are rebuilt from the tuples extracted from the given batch of trajectories with the aim of achieving an optimality property. In this paper, we revisit our work on this topic [19–23], with the objective of showing that these ideas open avenues for addressing many batch mode RL related problems. In particular, four algorithms that exploit artificial trajectories will be presented. The first one computes an estimate of the performance of a given control policy [22]. The second one provides a way for computing performance guarantees in deterministic settings [19]. The third one leads to the computation of policies having high performance guarantees [20, 23], and the fourth algorithm presents a sampling strategy for generating additional trajectories [21]. Finally, we highlight connections between the concept of artificial trajectory synthesis and other standard batch mode RL techniques.

The paper is organized as follows. First, Section 2 gives a brief review of the field of batch mode RL. Section 3 presents the batch mode RL setting adopted in this paper and several of the generic problems it raises. In Section 4, we present our new line of research articulated around the synthesis of artificial trajectories. Finally, Section 5 relates this paradigm to existing batch mode RL techniques, and Section 6 concludes.

2 Related Work

Batch mode RL techniques are probably rooted in the works of Bradtke and Barto [6] and Boyan [4] related to the use of least-squares techniques in the context of Temporal Difference learning methods (LSTD) for estimating the return of control policies. Those works have been extended to address optimal control problems by Lagoudakis and Parr [27], who introduced the Least-Squares Policy Iteration (LSPI) algorithm that mimics the policy iteration algorithm of the DP theory [2]. Several papers provide theoretical analyses of least-squares TD-based algorithms, for example Nedić and Bertsekas [36] and Lazaric et al. [29,30].

Another algorithm from the DP theory, the value iteration algorithm, has also served as inspiration for designing batch mode RL algorithms. For example, Ormoneit and Sen have developed a batch mode RL algorithm in 2002 [37] using kernel approximators, for which theoretical analyses are also provided. Reference [12] proposes an algorithm that combines value iteration with any type of regressors (e.g., regression trees, SVMs, neural networks). Reference [13] has named this algorithm Fitted Q Iteration (FQI) and provides a careful empirical analysis of its performance when combined with ensembles of regression trees.

References [40, 28] and [44] study the performance of this FQI algorithm with (deep) neural networks and CMACs (Cerebellar Model Articulation Controllers). The Regularized FQI algorithm proposes to use penalized least-squares regression as function approximator to limit the model complexity of the original FQI algorithm [17]. Extensions of the FQI algorithm to continuous action spaces have also been proposed [1]. More theoretical works related to FQI have also been published [33, 10].

Applications of these batch mode RL techniques have already led to promising results in robotics [38,3,45], power systems [14], image processing [15], water reservoir optimization [9,8], medicine [34,16,26] and driving assistance strategies [39].

3 Batch Mode RL: Formalization and Typical Problems

We consider a stochastic discrete-time system whose dynamics is given by

$$x_{t+1} = f(x_t, u_t, w_t), \qquad \forall t \in \{0, \ldots, T-1\},$$

where $x_t$ belongs to a state space $\mathcal{X} \subset \mathbb{R}^d$, $\mathbb{R}^d$ is the $d$-dimensional Euclidean space, and $T \in \mathbb{N} \setminus \{0\}$ denotes the finite optimization horizon. At every time $t \in \{0, \ldots, T-1\}$, the system can be controlled by taking an action $u_t \in \mathcal{U}$, and is subject to a random disturbance $w_t \in \mathcal{W}$ drawn according to a probability distribution $p_{\mathcal{W}}(\cdot)$¹. To each system transition from time $t$ to $t+1$ is associated a reward signal:

$$r_t = \rho(x_t, u_t, w_t) \in \mathbb{R}, \qquad \forall t \in \{0, \ldots, T-1\}.$$

Let $h : \{0, \ldots, T-1\} \times \mathcal{X} \to \mathcal{U}$ be a control policy. When starting from a given initial state $x_0$ and following the control policy $h$, an agent will get a random sum of rewards signal $R^h(x_0)$:

$$R^h(x_0) = \sum_{t=0}^{T-1} \rho(x_t, h(t, x_t), w_t) \quad \text{with} \quad x_{t+1} = f(x_t, h(t, x_t), w_t), \quad w_t \sim p_{\mathcal{W}}(\cdot).$$

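In RL, the classical performance criterion for evaluating a policy $h$ is its expected $T$-stage return:

Definition 1 (Expected T–stage Return)

$$J^h(x_0) = \mathbb{E}\left[ R^h(x_0) \right],$$

but, when searching for risk-aware policies, it is also of interest to consider the value at risk criterion:

Definition 2 (T–stage Value at Risk)

Let $b \in \mathbb{R}$ and $c \in [0, 1[$.

$$J^{h,(b,c)}_{RS}(x_0) = \begin{cases} -\infty & \text{if } P\left( R^h(x_0) < b \right) > c, \\ J^h(x_0) & \text{otherwise.} \end{cases}$$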

The central problem of batch mode RL is to find a good approximation of a policy $h^*$ that optimizes one such performance criterion, given the fact that the functions $f$, $\rho$ and $p_{\mathcal{W}}(\cdot)$ are unknown, and thus not accessible to simulation. Instead, they are “replaced” by a batch collection of $n \in \mathbb{N} \setminus \{0\}$ elementary pieces of trajectories, defined according to the following process.

Definition 3 (Sample of transitions)

Let $\mathcal{P}_n = \{(x^l, u^l)\}_{l=1}^{n}$ be a given set of state-action pairs. Consider the ensemble of samples of one-step transitions of size $n$ that could be generated by complementing each pair $(x^l, u^l)$ of $\mathcal{P}_n$ by drawing for each $l$ a disturbance signal $w^l$ at random from $p_{\mathcal{W}}(\cdot)$, and by recording the resulting values of $\rho(x^l, u^l, w^l)$ and $f(x^l, u^l, w^l)$. We denote by $\tilde{\mathcal{F}}_n(\mathcal{P}_n, w^1, \ldots, w^n)$ one such “random” set of one-step transitions defined by a random draw of $n$ disturbance signals $w^l$, $l = 1 \ldots n$. We assume that we know one realization of the random set $\tilde{\mathcal{F}}_n(\mathcal{P}_n, w^1, \ldots, w^n)$, which we denote by $\mathcal{F}_n$:

$$\mathcal{F}_n = \left\{ (x^l, u^l, r^l, y^l) \right\}_{l=1}^{n}$$

where, for all $l \in \{1, \ldots, n\}$,

$$r^l = \rho(x^l, u^l, w^l), \qquad y^l = f(x^l, u^l, w^l),$$

for some realizations of the disturbance process $w^l \sim p_{\mathcal{W}}(\cdot)$.
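The sampling process of Definition 3 can be illustrated with a short Python sketch. The toy dynamics, reward and disturbance below (`toy_f`, `toy_rho`, `toy_noise`) are hypothetical stand-ins introduced only for this example; in the batch mode setting these functions are unknown and only the resulting tuples are observed.

```python
import random

def generate_transitions(pairs, f, rho, draw_disturbance, rng):
    """Complement each (x, u) pair with one sampled disturbance w and
    record the resulting one-step transition (x, u, r, y)."""
    transitions = []
    for x, u in pairs:
        w = draw_disturbance(rng)          # w^l ~ p_W(.)
        r = rho(x, u, w)                   # r^l = rho(x^l, u^l, w^l)
        y = f(x, u, w)                     # y^l = f(x^l, u^l, w^l)
        transitions.append((x, u, r, y))
    return transitions

# Hypothetical toy system, used only for illustration.
def toy_f(x, u, w):
    return max(-1.0, min(1.0, 0.9 * x + 0.5 * u + w))

def toy_rho(x, u, w):
    return -(x * x) - 0.1 * (u * u)

def toy_noise(rng):
    return rng.uniform(-0.05, 0.05)

grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
pairs = [(x, u) for x in grid for u in grid]   # the set of state-action pairs
sample = generate_transitions(pairs, toy_f, toy_rho, toy_noise, random.Random(0))
```

Each call with a different random generator yields another realization of the random set of transitions built over the same state-action pairs.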

Notice first that finding a good approximation of an optimal policy $h^*$ is closely related to the problem of estimating the performance of a given policy. Indeed, when this latter problem is solved, the search for an optimal policy can in principle be reduced to an optimization problem over the set of candidate policies. We will thus start by addressing the problem of characterizing the performance of a given policy.

It is sometimes desirable to be able to compute policies having good performance guarantees. Indeed, for many applications, even if it is perhaps not paramount to have a policy h which is very close to the optimal one, it is however crucial to be able to guarantee that the considered policy h leads to high-enough cumulated rewards. The problem of computing such policies will also be addressed later in this paper.

In many applications, one has the possibility to move away from a pure batch setting by carrying out a limited number of experiments on the real system in order to enrich the available sample of trajectories. We thus also consider the problem of designing strategies for generating optimal experiments for batch mode RL.

4 Synthesizing Artificial Trajectories

We first formalize the concept of artificial trajectories in Section 4.1. In Section 4.2, we detail, analyze and illustrate on a benchmark how artificial trajectories can be exploited for estimating the performances of policies. We focus in Section 4.3 on the deterministic case, and we show how artificial trajectories can be used for computing bounds on the performances of policies. Afterwards, we exploit these bounds for addressing two different problems: the first problem (Section 4.4) is to compute policies having good performance guarantees. The second problem (Section 4.5) is to design sampling strategies for generating additional system transitions.

4.1 Artificial Trajectories

Artificial trajectories are made of elementary pieces of trajectories (one-step system transitions) taken from the sample $\mathcal{F}_n$. Formally, an artificial trajectory is defined as follows:

Definition 4 (Artificial Trajectory)

An artificial trajectory is an (ordered) sequence of $T$ one-step system transitions:

$$\left[ (x^{l_0}, u^{l_0}, r^{l_0}, y^{l_0}), \ldots, (x^{l_{T-1}}, u^{l_{T-1}}, r^{l_{T-1}}, y^{l_{T-1}}) \right] \in \mathcal{F}_n^T$$

where

$$l_t \in \{1, \ldots, n\}, \qquad \forall t \in \{0, \ldots, T-1\}.$$

We give in Figure 1 an illustration of one such artificial trajectory.

Fig. 1.

An example of an artificial trajectory rebuilt from 4 one-step system transitions from $\mathcal{F}_n$.

Observe that one can synthesize $n^T$ different artificial trajectories from the sample of transitions $\mathcal{F}_n$. In the rest of this paper, we present various techniques for extracting and exploiting “interesting” subsets of artificial trajectories.

4.2 Evaluating the Expected Return of a Policy

A major subproblem of batch mode RL is to evaluate the expected return $J^h(x_0)$ of a given policy $h$. Indeed, when such an oracle is available, the search for an optimal policy can be in some sense reduced to an optimization problem over the set of all candidate policies. When a model of the system dynamics, reward function and disturbances probability distribution is available, Monte Carlo estimation techniques can be run to estimate the performance of any control policy. This is, however, not possible in the batch mode setting. In this section, we detail an approach that estimates the performance of a policy by rebuilding artificial trajectories so as to mimic the behavior of the Monte Carlo estimator. We assume in this section (and also in Section 4.3) that the action space $\mathcal{U}$ is continuous and normed.

4.2.1 Monte Carlo Estimation

The Monte Carlo (MC) estimator works in a model-based setting (i.e., in a setting where $f$, $\rho$ and $p_{\mathcal{W}}(\cdot)$ are known). It estimates $J^h(x_0)$ by averaging the returns of several (say $p \in \mathbb{N} \setminus \{0\}$) trajectories which have been generated by simulating the system from $x_0$ using the policy $h$. More formally, the MC estimator of the expected return of the policy $h$ when starting from the initial state $x_0$ is written:

Definition 5 (Monte Carlo Estimator)

$$\mathfrak{M}^h_p(x_0) = \frac{1}{p} \sum_{i=1}^{p} \sum_{t=0}^{T-1} \rho\left( x^i_t, h(t, x^i_t), w^i_t \right)$$

with, $\forall t \in \{0, \ldots, T-1\}$, $\forall i \in \{1, \ldots, p\}$,

$$x^i_0 = x_0, \qquad w^i_t \sim p_{\mathcal{W}}(\cdot), \qquad x^i_{t+1} = f\left( x^i_t, h(t, x^i_t), w^i_t \right).$$

The bias and variance of the MC estimator are:

Proposition 1 (Bias and Variance of the MC Estimator)

$$\mathbb{E}\left[ \mathfrak{M}^h_p(x_0) - J^h(x_0) \right] = 0, \qquad \mathrm{Var}\left[ \mathfrak{M}^h_p(x_0) \right] = \frac{\sigma^2_{R^h}(x_0)}{p},$$

where $\sigma^2_{R^h}(x_0)$ denotes the assumed finite variance of $R^h(x_0, w_0, \ldots, w_{T-1})$:

$$\sigma^2_{R^h}(x_0) = \mathrm{Var}_{w_0, \ldots, w_{T-1} \sim p_{\mathcal{W}}(\cdot)}\left[ R^h(x_0, w_0, \ldots, w_{T-1}) \right] < \infty.$$
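As a minimal sketch of the model-based MC estimator described in this section, the Python function below averages the $T$-stage returns of $p$ simulated trajectories. The toy system (`toy_f`, `toy_rho`, `toy_policy`, `toy_noise`) is a hypothetical example and not the benchmark used later in the paper.

```python
import random

def mc_estimate(f, rho, h, draw_disturbance, x0, T, p, rng):
    """Average the T-stage returns of p trajectories simulated from x0."""
    total = 0.0
    for _ in range(p):
        x = x0
        for t in range(T):
            u = h(t, x)                    # action chosen by the policy
            w = draw_disturbance(rng)      # fresh disturbance at every step
            total += rho(x, u, w)          # accumulate the reward
            x = f(x, u, w)                 # move to the next state
    return total / p

# Hypothetical toy system, used only for illustration.
def toy_f(x, u, w):
    return max(-1.0, min(1.0, 0.9 * x + 0.5 * u + w))

def toy_rho(x, u, w):
    return -(x * x) - 0.1 * (u * u)

def toy_policy(t, x):
    return -0.5 * x

def toy_noise(rng):
    return rng.uniform(-0.05, 0.05)

estimate = mc_estimate(toy_f, toy_rho, toy_policy, toy_noise,
                       x0=-0.5, T=15, p=1000, rng=random.Random(0))
```

Because every trajectory uses independent disturbances, the estimate is unbiased and its variance shrinks as $1/p$, which is the behavior the model-free estimator of the next section tries to imitate.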

4.2.2 Model-free Monte Carlo Estimation

From a sample $\mathcal{F}_n$, our model-free MC (MFMC) estimator works by rebuilding $p \in \mathbb{N} \setminus \{0\}$ artificial trajectories. These artificial trajectories will then serve as proxies of $p$ “actual” trajectories that could be obtained by simulating the policy $h$ on the given control problem. Our estimator averages the cumulated returns over these artificial trajectories to compute its estimate of the expected return $J^h(x_0)$. The main idea behind our method amounts to selecting the artificial trajectories so as to minimize the discrepancy of these trajectories with a classical MC sample that could be obtained by simulating the system with policy $h$.

To rebuild a sample of $p$ artificial trajectories of length $T$ starting from $x_0$ and similar to trajectories that would be induced by a policy $h$, our algorithm uses each one-step transition in $\mathcal{F}_n$ at most once; we thus assume that $pT \leq n$. The $p$ artificial trajectories of $T$ one-step transitions are created sequentially. Every artificial trajectory is grown in length by selecting, among the sample of not yet used one-step transitions, a transition whose first two elements minimize the distance (using a distance metric $\Delta$ in $\mathcal{X} \times \mathcal{U}$) with the couple formed by the last element of the previously selected transition and the action induced by $h$ at the end of this previous transition. Because we do not re-use one-step transitions, the disturbances associated with the selected transitions are i.i.d., which provides the MFMC estimator with interesting theoretical properties (see Section 4.2.3). Not re-using transitions also ensures that the $p$ rebuilt artificial trajectories will be distinct.

Algorithm 1 MFMC algorithm to rebuild a set of size $p$ of $T$-length artificial trajectories from a sample of $n$ one-step transitions.

Input: $\mathcal{F}_n$, $h(\cdot, \cdot)$, $x_0$, $\Delta(\cdot, \cdot)$, $T$, $p$

Let $\mathcal{G}$ denote the current set of not yet used one-step transitions in $\mathcal{F}_n$; initially, $\mathcal{G} \leftarrow \mathcal{F}_n$;

for $i = 1$ to $p$ (extract an artificial trajectory) do
    $t \leftarrow 0$;
    $x^i_t \leftarrow x_0$;
    while $t < T$ do
        $u^i_t \leftarrow h(t, x^i_t)$;
        $\mathcal{H} \leftarrow \arg\min_{(x, u, r, y) \in \mathcal{G}} \Delta\left( (x, u), (x^i_t, u^i_t) \right)$;
        $l^i_t \leftarrow$ lowest index in $\mathcal{F}_n$ of the transitions that belong to $\mathcal{H}$;
        $t \leftarrow t + 1$;
        $x^i_t \leftarrow y^{l^i_{t-1}}$;
        $\mathcal{G} \leftarrow \mathcal{G} \setminus \left\{ (x^{l^i_{t-1}}, u^{l^i_{t-1}}, r^{l^i_{t-1}}, y^{l^i_{t-1}}) \right\}$; \\ do not re-use transitions
    end while
end for

Return the set of indices $\{l^i_t\}_{i=1, t=0}^{i=p, t=T-1}$.
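The selection procedure of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration for scalar states and actions; the function names and the choice of `l1_distance` as the metric $\Delta$ are assumptions made for the example. Ties are broken by lowest index, and each transition is used at most once.

```python
def l1_distance(a, b):
    """Assumed metric on state-action pairs: |x - x'| + |u - u'| (scalar case)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def mfmc_estimate(transitions, h, x0, T, p, dist=l1_distance):
    """Rebuild p artificial trajectories of length T from (x, u, r, y) tuples
    and return (average return, list of index sequences)."""
    if p * T > len(transitions):
        raise ValueError("MFMC needs p * T <= n because transitions are not re-used")
    unused = list(range(len(transitions)))   # kept in increasing index order
    index_sets = []
    total = 0.0
    for _ in range(p):
        x = x0
        trajectory = []
        for t in range(T):
            u = h(t, x)
            # min() returns the first minimizer, i.e. the lowest index on ties.
            best = min(unused, key=lambda l: dist(transitions[l][:2], (x, u)))
            unused.remove(best)               # do not re-use transitions
            trajectory.append(best)
            total += transitions[best][2]     # reward of the selected transition
            x = transitions[best][3]          # continue from its end state
        index_sets.append(trajectory)
    return total / p, index_sets

# Tiny hand-made sample: two copies of a two-step chain with different rewards.
demo = [(0.0, 1.0, 1.0, 1.0), (1.0, 1.0, 2.0, 2.0),
        (0.0, 1.0, 5.0, 1.0), (1.0, 1.0, 7.0, 2.0)]
value, indices = mfmc_estimate(demo, lambda t, x: 1.0, x0=0.0, T=2, p=2)
```

On this sample the first trajectory uses transitions 0 and 1 (return 3) and the second uses transitions 2 and 3 (return 12), so the estimate is 7.5. The linear scan over the unused transitions costs $O(n)$ per step, hence $O(npT)$ overall.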

A tabular version of the algorithm for building the set of artificial trajectories is given in Algorithm 1. It returns a set of indices of one-step transitions $\{l^i_t\}_{i=1, t=0}^{i=p, t=T-1}$ from $\mathcal{F}_n$ based on $h$, $x_0$, the distance metric $\Delta$ and the parameter $p$. Based on this set of indices, we define our MFMC estimate of the expected return of the policy $h$ when starting from the initial state $x_0$:

Definition 6 (Model-free Monte Carlo Estimator)

$$\mathfrak{M}^h_p(\mathcal{F}_n, x_0) = \frac{1}{p} \sum_{i=1}^{p} \sum_{t=0}^{T-1} r^{l^i_t}.$$

Figure 2 illustrates the MFMC estimator. Note that the computation of the MFMC estimator $\mathfrak{M}^h_p(\mathcal{F}_n, x_0)$ has a linear complexity with respect to the cardinality $n$ of $\mathcal{F}_n$, the number of artificial trajectories $p$ and the optimization horizon $T$.

Fig. 2.

Rebuilding three 4-length trajectories for estimating the return of a policy.

4.2.3 Analysis of the MFMC Estimator

In this section we characterize some main properties of our estimator. To this end, we study the distribution of our estimator $\mathfrak{M}^h_p(\tilde{\mathcal{F}}_n(\mathcal{P}_n, w^1, \ldots, w^n), x_0)$, seen as a function of the random set $\tilde{\mathcal{F}}_n(\mathcal{P}_n, w^1, \ldots, w^n)$. In order to characterize this distribution, we express its bias and its variance as a function of a measure of the density of the sample $\mathcal{P}_n$, defined by its “$k$-dispersion”; this is the smallest radius such that all $\Delta$-balls in $\mathcal{X} \times \mathcal{U}$ of this radius contain at least $k$ elements from $\mathcal{P}_n$. The use of this notion implies that the space $\mathcal{X} \times \mathcal{U}$ is bounded (when measured using the distance metric $\Delta$).

The bias and variance characterization will be done under some additional assumptions detailed below. After that, we state the main theorems formulating these characterizations. Proofs are given in [22].

Assumption: Lipschitz continuity of the functions f, ρ and h

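We assume that the dynamics $f$, the reward function $\rho$ and the policy $h$ are Lipschitz continuous, i.e., we assume that there exist finite constants $L_f$, $L_\rho$ and $L_h \in \mathbb{R}^+$ such that, $\forall (x, x', u, u', w) \in \mathcal{X}^2 \times \mathcal{U}^2 \times \mathcal{W}$ and $\forall t \in \{0, \ldots, T-1\}$:

$$\| f(x, u, w) - f(x', u', w) \|_{\mathcal{X}} \leq L_f \left( \| x - x' \|_{\mathcal{X}} + \| u - u' \|_{\mathcal{U}} \right),$$

$$| \rho(x, u, w) - \rho(x', u', w) | \leq L_\rho \left( \| x - x' \|_{\mathcal{X}} + \| u - u' \|_{\mathcal{U}} \right),$$

$$\| h(t, x) - h(t, x') \|_{\mathcal{U}} \leq L_h \| x - x' \|_{\mathcal{X}},$$

where $\| \cdot \|_{\mathcal{X}}$ and $\| \cdot \|_{\mathcal{U}}$ denote the chosen norms over the spaces $\mathcal{X}$ and $\mathcal{U}$, respectively.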

Assumption: $\mathcal{X} \times \mathcal{U}$ is bounded

We suppose that $\mathcal{X} \times \mathcal{U}$ is bounded when measured using the distance metric $\Delta$.

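Definition 7 (Distance Metric Δ)

$$\forall (x, x', u, u') \in \mathcal{X}^2 \times \mathcal{U}^2, \qquad \Delta\left( (x, u), (x', u') \right) = \| x - x' \|_{\mathcal{X}} + \| u - u' \|_{\mathcal{U}}.$$

Given $k \in \mathbb{N} \setminus \{0\}$ with $k \leq n$, we define the $k$-dispersion $\alpha_k(\mathcal{P}_n)$ of the sample $\mathcal{P}_n$:

Definition 8 (k–Dispersion)

$$\alpha_k(\mathcal{P}_n) = \sup_{(x, u) \in \mathcal{X} \times \mathcal{U}} \Delta_k^{\mathcal{P}_n}(x, u),$$

where $\Delta_k^{\mathcal{P}_n}(x, u)$ denotes the distance of $(x, u)$ to its $k$-th nearest neighbor (using the distance metric $\Delta$) in the $\mathcal{P}_n$ sample.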
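The $k$-dispersion involves a supremum over the whole state-action space, which cannot be evaluated exactly in general. As a rough numerical sketch, one can take the maximum over a finite set of query points, which yields a lower approximation of the supremum. The helper names and the scalar metric below are assumptions made for this illustration.

```python
def l1_distance(a, b):
    """Assumed metric on state-action pairs: |x - x'| + |u - u'| (scalar case)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def kth_neighbor_distance(query, pairs, k, dist=l1_distance):
    """Distance from `query` to its k-th nearest neighbor in `pairs` (k >= 1)."""
    return sorted(dist(query, pair) for pair in pairs)[k - 1]

def approx_k_dispersion(pairs, k, queries, dist=l1_distance):
    """Maximum over the finite set `queries` of the k-th neighbor distance.
    This never exceeds the true supremum over the continuous space."""
    return max(kth_neighbor_distance(q, pairs, k, dist) for q in queries)

pairs = [(0.0, 0.0), (1.0, 0.0)]
queries = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)]
alpha_1 = approx_k_dispersion(pairs, 1, queries)   # 0.5, reached at (0.5, 0.0)
alpha_2 = approx_k_dispersion(pairs, 2, queries)   # 1.0, reached at either end
```

The example also shows that the dispersion grows with $k$: requiring more neighbors inside every ball forces a larger radius.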

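Definition 9 (Expected Value of the MFMC Estimator)

We denote by $E^h_{p, \mathcal{P}_n}(x_0)$ the expected value:

$$E^h_{p, \mathcal{P}_n}(x_0) = \mathbb{E}_{w^1, \ldots, w^n \sim p_{\mathcal{W}}(\cdot)}\left[ \mathfrak{M}^h_p\left( \tilde{\mathcal{F}}_n(\mathcal{P}_n, w^1, \ldots, w^n), x_0 \right) \right].$$

We have the following theorem:

Theorem 1 (Bias Bound for the MFMC Estimator)

$$\left| J^h(x_0) - E^h_{p, \mathcal{P}_n}(x_0) \right| \leq C \, \alpha_{pT}(\mathcal{P}_n) \quad \text{with} \quad C = L_\rho \sum_{t=0}^{T-1} \sum_{i=0}^{T-t-1} \left( L_f (1 + L_h) \right)^i.$$

The proof of this result is given in [22]. This formula shows that the bound on the bias gets tighter as the sample dispersion gets smaller. Note that the sample dispersion itself actually only depends on the sample $\mathcal{P}_n$ and on the value of $p$ (it will increase with the number of trajectories used by our algorithm).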

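Definition 10 (Variance of the MFMC Estimator)

We denote by $V^h_{p, \mathcal{P}_n}(x_0)$ the variance of the MFMC estimator defined by

$$V^h_{p, \mathcal{P}_n}(x_0) = \mathbb{E}_{w^1, \ldots, w^n \sim p_{\mathcal{W}}(\cdot)}\left[ \left( \mathfrak{M}^h_p\left( \tilde{\mathcal{F}}_n(\mathcal{P}_n, w^1, \ldots, w^n), x_0 \right) - E^h_{p, \mathcal{P}_n}(x_0) \right)^2 \right]$$

and we give the following theorem:

Theorem 2 (Variance Bound for the MFMC Estimator)

$$V^h_{p, \mathcal{P}_n}(x_0) \leq \left( \frac{\sigma_{R^h}(x_0)}{\sqrt{p}} + 2 C \, \alpha_{pT}(\mathcal{P}_n) \right)^2 \quad \text{with} \quad C = L_\rho \sum_{t=0}^{T-1} \sum_{i=0}^{T-t-1} \left( L_f (1 + L_h) \right)^i.$$

The proof of this theorem is given in [22]. We see that the variance of our MFMC estimator is guaranteed to be close to that of the classical MC estimator if the sample dispersion is small enough.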

• Illustration

In this section, we illustrate the MFMC estimator on an academic problem. The system dynamics and the reward function are given by

and

with the state space $\mathcal{X}$ being equal to $[-1, 1]$ and the action space $\mathcal{U}$ to $[-1, 1]$. The disturbance $w_t$ is an element of the interval

with $\epsilon = 0.1$ and $p_{\mathcal{W}}$ is a uniform probability distribution over this interval. The optimization horizon $T$ is equal to 15. The policy $h$ whose performances have to be evaluated is

The initial state of the system is set to $x_0 = -0.5$.

For our first set of experiments, we choose to work with a value of $p = 10$, i.e., the MFMC estimator rebuilds 10 artificial trajectories to estimate $J^h(-0.5)$. In these experiments, for different cardinalities $n_j$, we build a sample $\mathcal{P}_{n_j} = \{(x^l, u^l)\}$ that uniformly covers the space $\mathcal{X} \times \mathcal{U}$ as follows:

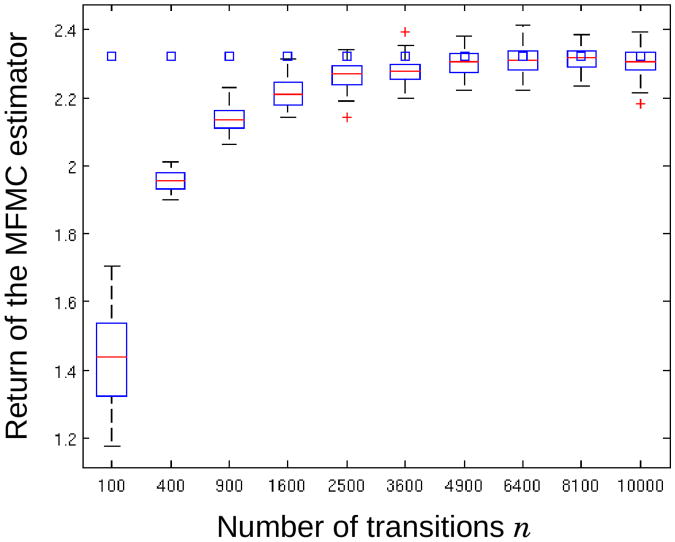

Then, we generate 50 random sets of one-step transitions over $\mathcal{P}_{n_j}$ and run our MFMC estimator on each of these sets. The results of this first set of experiments are gathered in Figure 3. For every value of $n_j$ considered in our experiments, the 50 values computed by the MFMC estimator are concisely represented by a boxplot. The box has lines at the lower quartile, median, and upper quartile values. Whiskers extend from each end of the box to the adjacent values in the data within 1.5 times the interquartile range from the ends of the box. Outliers are data with values beyond the ends of the whiskers and are displayed with a red + sign. The squares represent an accurate estimate of $J^h(-0.5)$ computed by running thousands of Monte Carlo simulations. As we observe, when the samples increase in size (which corresponds to a decrease of the $pT$-dispersion $\alpha_{pT}(\mathcal{P}_n)$), the MFMC estimator is more likely to output accurate estimations of $J^h(-0.5)$. As explained throughout this paper, there exist many similarities between the model-free MFMC estimator and the model-based MC estimator. These can be empirically illustrated by putting Figure 3 in perspective with Figure 4. This latter figure reports the results obtained by 50 independent runs of the MC estimator, each of these runs also using $p = 10$ trajectories. As expected, one can see that the MFMC estimator tends to behave similarly to the MC estimator when the cardinality of the sample increases.

Fig. 3.

Computations of the MFMC estimator with p = 10, for different cardinalities n of the sample of one-step transitions. For each cardinality, 50 independent samples of transitions have been generated. Squares represent Jh (x0).

Fig. 4.

Computations of the MC estimator with p = 10. 50 independent runs have been computed.

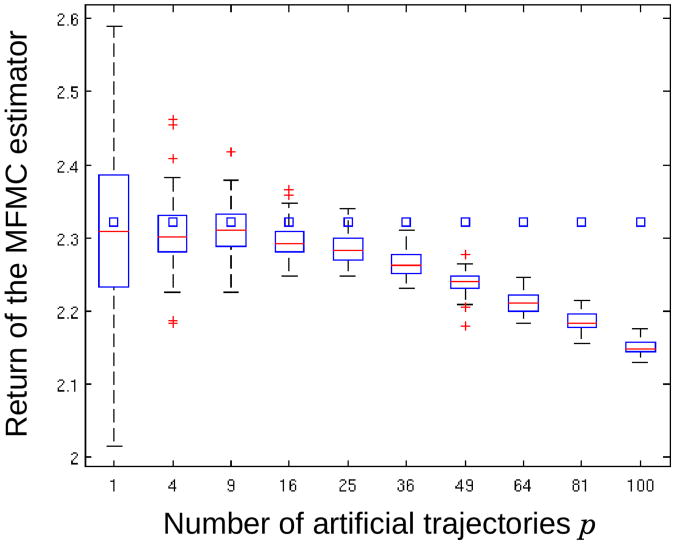

In our second set of experiments, we choose to study the influence of the number of artificial trajectories $p$ upon which the MFMC estimator bases its prediction. For each value $p_j = j^2$, $j = 1 \ldots 10$, we generate 50 samples of one-step transitions of cardinality 10,000 (using the sample $\mathcal{P}_{10000}$ defined in the first set of experiments) and use these samples to compute the MFMC estimator. The results are plotted in Figure 5. This figure shows that the bias of the MFMC estimator seems to be relatively small for small values of $p$ and to increase with $p$. This is in accordance with Theorem 1, which bounds the bias with an expression that is increasing with $p$.

Fig. 5.

Computations of the MFMC estimator for different values of the number p of artificial trajectories extracted from a sample of n = 10,000 tuples. For each value of p, 50 independent samples of transitions have been generated. Squares represent Jh (x0).

In Figure 6, we have plotted the evolution of the values computed by the model-based MC estimator when the number of trajectories it considers in its prediction increases. While, for small numbers of trajectories, it behaves similarly to the MFMC estimator, the quality of its predictions steadily increases with p, while it is not the case for the MFMC estimator whose performances degrade once p crosses a threshold value. Notice that this threshold value could be made larger by increasing the size of the samples of one-step system transitions used as input of the MFMC algorithm.

Fig. 6.

Computations of the MC estimator for different values of the number of trajectories p. For each value of p, 50 independent runs of the MC estimator have been computed. Squares represent Jh(x0).

4.2.4 MFMC Estimation of the value at risk

In order to take into consideration the riskiness of policies (and not only their good performance “on average”), one may prefer to consider a Value-at-Risk–type performance criterion instead of expected return. Notice that this type of criterion has received increasing attention in recent years within the RL community [11,31,32].

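If we consider the $p$ artificial trajectories that are rebuilt by the MFMC estimator, the value at risk can be efficiently approximated by the value $\tilde{J}^{h,(b,c)}_{RS}(\mathcal{F}_n, x_0)$ defined as follows:

Definition 11 (Estimate of the T–stage Value at Risk)

Let $b \in \mathbb{R}$ and $c \in [0, 1[$.

$$\tilde{J}^{h,(b,c)}_{RS}(\mathcal{F}_n, x_0) = \begin{cases} -\infty & \text{if } \frac{1}{p} \sum_{i=1}^{p} \mathbb{I}_{\{ r^i < b \}} > c, \\ \mathfrak{M}^h_p(\mathcal{F}_n, x_0) & \text{otherwise,} \end{cases}$$

where $r^i$ denotes the return of the $i$-th artificial trajectory:

$$r^i = \sum_{t=0}^{T-1} r^{l^i_t}.$$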
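A minimal Python sketch of such an estimate is given below. It assumes that the criterion takes the usual risk-sensitive form: the value is $-\infty$ when the empirical fraction of trajectory returns falling below $b$ exceeds $c$, and the average return otherwise. This form and the function name `var_estimate` are assumptions made for the illustration.

```python
def var_estimate(returns, b, c):
    """Risk-sensitive estimate from the returns of p artificial trajectories.

    Assumed rule: -inf if the fraction of returns below b exceeds c,
    otherwise the plain average of the returns.
    """
    p = len(returns)
    fraction_below = sum(1 for r in returns if r < b) / p
    if fraction_below > c:
        return float("-inf")
    return sum(returns) / p

returns = [1.0, 2.0, 3.0, 4.0]
safe = var_estimate(returns, b=2.0, c=0.5)    # 25% of returns below 2.0
risky = var_estimate(returns, b=4.0, c=0.5)   # 75% of returns below 4.0
```

Here `safe` is the average return 2.5, whereas `risky` is $-\infty$ because too many trajectories fall below the threshold.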

4.3 Artificial Trajectories in the Deterministic Case: Computing Bounds

From this subsection to the end of Section 4, we assume a deterministic environment. More formally, we assume that the disturbances space is reduced to a single element $\mathcal{W} = \{0\}$ which concentrates the whole probability mass $p_{\mathcal{W}}(0) = 1$. With a slight abuse of notation, we use the convention:

$$\forall (x, u) \in \mathcal{X} \times \mathcal{U}, \qquad f(x, u) = f(x, u, 0), \qquad \rho(x, u) = \rho(x, u, 0).$$

We still assume that the functions f, ρ and h are Lipschitz continuous. Observe that, in a deterministic context, only one trajectory is needed to compute Jh(x0) by Monte Carlo estimation. We have the following result:

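Proposition 2 (Lower Bound from the MFMC)

Let $\left[ (x^{l_t}, u^{l_t}, r^{l_t}, y^{l_t}) \right]_{t=0}^{T-1}$ be an artificial trajectory rebuilt by the MFMC algorithm when using the distance measure $\Delta$. Then, we have

$$J^h(x_0) \geq \sum_{t=0}^{T-1} r^{l_t} - \sum_{t=0}^{T-1} L_{Q_{T-t}} \, \Delta\left( (y^{l_{t-1}}, h(t, y^{l_{t-1}})), (x^{l_t}, u^{l_t}) \right)$$

where

$$L_{Q_{T-t}} = L_\rho \sum_{i=0}^{T-t-1} \left( L_f (1 + L_h) \right)^i$$

and $y^{l_{-1}} = x_0$.

The proof of this proposition can be found in [19]. Since the previous result is valid for any artificial trajectory, we have:

Corollary 1 (Lower Bound from any Artificial Trajectory)

Let $\left[ (x^{l_t}, u^{l_t}, r^{l_t}, y^{l_t}) \right]_{t=0}^{T-1}$ be any artificial trajectory. Then,

$$J^h(x_0) \geq \sum_{t=0}^{T-1} r^{l_t} - \sum_{t=0}^{T-1} L_{Q_{T-t}} \, \Delta\left( (y^{l_{t-1}}, h(t, y^{l_{t-1}})), (x^{l_t}, u^{l_t}) \right).$$

This suggests identifying an artificial trajectory that maximizes the previous lower bound:

Definition 12 (Maximal Lower Bound)

$$L^h(\mathcal{F}_n, x_0) = \max_{\left[ (x^{l_t}, u^{l_t}, r^{l_t}, y^{l_t}) \right]_{t=0}^{T-1} \in \mathcal{F}_n^T} \; \sum_{t=0}^{T-1} r^{l_t} - \sum_{t=0}^{T-1} L_{Q_{T-t}} \, \Delta\left( (y^{l_{t-1}}, h(t, y^{l_{t-1}})), (x^{l_t}, u^{l_t}) \right).$$

Note that in the same way, a minimal upper bound can be computed:

Definition 13 (Minimal Upper Bound)

$$U^h(\mathcal{F}_n, x_0) = \min_{\left[ (x^{l_t}, u^{l_t}, r^{l_t}, y^{l_t}) \right]_{t=0}^{T-1} \in \mathcal{F}_n^T} \; \sum_{t=0}^{T-1} r^{l_t} + \sum_{t=0}^{T-1} L_{Q_{T-t}} \, \Delta\left( (y^{l_{t-1}}, h(t, y^{l_{t-1}})), (x^{l_t}, u^{l_t}) \right).$$

Additionally, we can prove that both the lower and the upper bound are tight, in the sense that they both converge towards $J^h(x_0)$ when the dispersion of the sample of system transitions $\mathcal{F}_n$ decreases towards zero.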

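Proposition 3 (Tightness of the Bounds)

Denoting by $L^h(\mathcal{F}_n, x_0)$ and $U^h(\mathcal{F}_n, x_0)$ the maximal lower bound and the minimal upper bound,

$$\exists C_b > 0 : \quad J^h(x_0) - L^h(\mathcal{F}_n, x_0) \leq C_b \, \alpha_1(\mathcal{P}_n), \qquad U^h(\mathcal{F}_n, x_0) - J^h(x_0) \leq C_b \, \alpha_1(\mathcal{P}_n),$$

where $\alpha_1(\mathcal{P}_n)$ denotes the 1-dispersion of the sample of system transitions $\mathcal{F}_n$.

This result is proved in [19]. Note that the computation of both the maximal lower bound and minimal upper bound can be reformulated as a shortest path problem in a graph, for which the computational complexity is linear with respect to the optimization horizon $T$ and quadratic with respect to the cardinality $n$ of the sample of transitions $\mathcal{F}_n$.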
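The shortest-path reformulation can be sketched as a dynamic program over time steps. The Python code below is an illustration under stated assumptions, not the authors' implementation: it assumes the lower bound is the sum of rewards minus distance penalties weighted by constants $L_{Q_{T-t}} = L_\rho \sum_{i=0}^{T-t-1} (L_f(1+L_h))^i$, with scalar states and actions and an assumed metric `l1_distance`. Transitions may be re-used along a trajectory here.

```python
def l1_distance(a, b):
    """Assumed metric on state-action pairs: |x - x'| + |u - u'| (scalar case)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def lipschitz_constants(L_f, L_rho, L_h, T):
    """Return [L_Q_T, ..., L_Q_1], indexed by time step t (assumed form)."""
    base = L_f * (1.0 + L_h)
    return [L_rho * sum(base ** i for i in range(T - t)) for t in range(T)]

def max_lower_bound(transitions, h, x0, T, L_f, L_rho, L_h, dist=l1_distance):
    """Maximize (sum of rewards - weighted distance penalties) over all
    artificial trajectories, in O(n^2 * T) time."""
    L_Q = lipschitz_constants(L_f, L_rho, L_h, T)
    n = len(transitions)
    # value[l]: best score of a partial trajectory ending with transition l.
    value = [r - L_Q[0] * dist((x0, h(0, x0)), (x, u))
             for (x, u, r, _) in transitions]
    for t in range(1, T):
        new_value = []
        for (x, u, r, _) in transitions:
            best_prev = max(
                value[k] - L_Q[t] * dist(
                    (transitions[k][3], h(t, transitions[k][3])), (x, u))
                for k in range(n))
            new_value.append(r + best_prev)
        value = new_value
    return max(value)

# Two transitions of a toy deterministic system with f(x, u) = x.
demo = [(0.0, 0.0, 1.0, 0.0), (5.0, 0.0, 4.0, 5.0)]
bound = max_lower_bound(demo, lambda t, x: 0.0, x0=0.0, T=3,
                        L_f=1.0, L_rho=1.0, L_h=0.0)
```

In this toy case the trajectory that repeats the first transition matches the policy exactly, so every penalty is zero and the bound equals the true return of 3. Replacing `max` by `min` and subtracting by adding the penalties gives the corresponding upper bound.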

4.3.1 Extension to Finite Action Spaces

The results given above can be extended to the case where the action space 𝒰 is finite (and thus discrete) by considering policies that are fully defined by a sequence of actions. Such policies can be qualified as “open-loop”. Let Π be the set of open-loop policies:

Definition 14 (Open-loop Policies)

Given an open-loop policy π, the (deterministic) T–stage return of π is written as:

With

In the context of a finite action space, the Lipschitz continuity of f and ρ is: ∀(x, x′, u) ∈ 𝒳² × 𝒰,

Since the action space is not normed anymore, we also need to redefine the sample dispersion.

Definition 15 (Sample Dispersion)

We assume that the state space is bounded, and we define the sample dispersion α*(ℱn) as the smallest α such that

Let π ∈ Π be an open-loop policy. We have the following result:

Proposition 4 (Lower Bound - Open-loop Policy π)

Let be an artificial trajectory such that

Then,

where

A maximal lower bound can then be computed by maximizing the previous bound over the set of all possible artificial trajectories that satisfy the condition $u^{l_t} = \pi(t)$, ∀t ∈ {0, …, T − 1}. In the following, we denote by the set of artificial trajectories that satisfy this condition:

Then, we have:

Definition 16 (Maximal Lower Bound - Open-loop Policy π)

Similarly, a minimal upper bound Uπ(ℱn, x0) can also be computed:

Definition 17 (Minimal Upper Bound - Open-loop Policy π)

Both bounds are tight in the following sense:

Proposition 5 (Tightness of the Bounds - Open-loop Policy π)

The proofs of the above stated results are given in [20].

4.4 Artificial Trajectories for Computing Safe Policies

As in Section 4.3.1, we still assume that the action space 𝒰 is finite, and we consider open-loop policies. To obtain a policy with good performance guarantees, we suggest finding an open-loop policy such that:

Recall that such an “open-loop” policy is optimized with respect to the initial state x0. Solving the above optimization problem can be seen as identifying an optimal rebuilt artificial trajectory and outputting as open-loop policy the sequence of actions taken along this artificial trajectory:

Finding such a policy can again be done in an efficient way by reformulating the problem as a shortest path problem in a graph. We provide in [20] an algorithm called CGRL (which stands for “Cautious approach to Generalization in RL”) of complexity 𝒪(n²T) for finding such a policy. A tabular version of the CGRL is given in Table 2 and an illustration that shows how the CGRL solution can be seen as a shortest path in a graph is also given in Figure 7. We now give a theorem which shows the convergence of this policy towards an optimal open-loop policy when the dispersion α*(ℱn) of the sample of transitions converges towards zero.

Fig. 7.

A graphical interpretation of the CGRL algorithm. The CGRL solution can be interpreted as a shortest path in a specific graph.

Theorem 3 (Convergence of )

Let

* (x0) be the set of optimal open-loop policies:


| Algorithm 2 CGRL algorithm. |
| --- |
| Input: |
| Initialization: |
| D ← n × (T − 1) matrix initialized to zero; |
| A ← n–dimensional vector initialized to zero; |
| B ← n–dimensional vector initialized to zero; |
| Computation of the Lipschitz constants: |
| ; |
| for k = 2 … T do |
| ; |
| end for |
| t ← T − 2; |
| while t > −1 do |
| for i = 1 … n do |
| ; |
| ; |
| A(i) ← m0; |
| D(i, t + 1) ← j0; \\ best tuple at t + 1 if in tuple i at time t |
| end for |
| B ← A; |
| t ← t − 1; |
| end while |
| Conclusion: |
| S ← (T + 1)–length vector of actions initialized to zero; |
| ; |
| ; \\ best lower bound |
| S(1) ← ul; \\ CGRL action for t = 0. |
| for t = 0 … T − 2 do |
| l′ ← D(l, t + 1); |
| S(t + 2,:) ← ul′; \\ other CGRL actions |
| l ← l′; |
| end for |
| Return: S |

and let us suppose that *(x0) ≠ Π (if *(x0) = Π, the search for an optimal policy is indeed trivial). We define

Then,

The proof of this result is also given in [20].
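The backward recursion of Algorithm 2 can be sketched in Python as follows. This is a minimal sketch, not the reference implementation from [20]: the function name `cgrl_sketch`, the use of the Euclidean norm, and above all the two formulas flagged as assumptions in the comments (the recursion for the constants `L_Q` and the form of the bound being maximized) are filled in here for illustration, since they are not spelled out in the text above.

```python
import numpy as np

def cgrl_sketch(X, U, R, Y, x0, T, L_f, L_rho):
    """Viterbi-style search for a sequence of T transitions with the best bound.

    X, Y: arrays of shape (n, d) with the states x^l and successors y^l.
    U: list of the n actions u^l. R: array of shape (n,) with rewards r^l.
    """
    n = len(R)
    # ASSUMPTION: L_Q[1] = L_rho and L_Q[k] = L_rho + L_f * L_Q[k - 1].
    L_Q = np.zeros(T + 1)
    for k in range(1, T + 1):
        L_Q[k] = L_rho + L_f * L_Q[k - 1]
    # dist[i, j] = ||y^i - x^j||: gap between the end of i and the start of j.
    dist = np.linalg.norm(Y[:, None, :] - X[None, :, :], axis=2)
    B = np.zeros(n)                   # best bound-to-go after each transition
    D = np.zeros((n, T), dtype=int)   # D[i, t + 1]: best successor of i
    for t in range(T - 2, -1, -1):
        # ASSUMPTION: using transition j at time t + 1 after transition i
        # contributes r^j - L_Q[T - t - 1] * ||y^i - x^j|| to the bound.
        scores = R[None, :] - L_Q[T - t - 1] * dist + B[None, :]
        D[:, t + 1] = scores.argmax(axis=1)
        B = scores.max(axis=1)
    # The first transition is penalized by its distance to the initial state.
    first = R - L_Q[T] * np.linalg.norm(X - x0, axis=1) + B
    l = int(first.argmax())
    bound = float(first[l])
    seq = [l]
    for t in range(1, T):
        l = int(D[l, t])
        seq.append(l)
    return [U[i] for i in seq], bound
```

When the sample contains transitions that chain exactly from x0, the distance penalties vanish and the returned bound is the sum of the rewards along that chain. Each of the T steps compares all pairs of transitions, which gives the n²T cost mentioned above.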

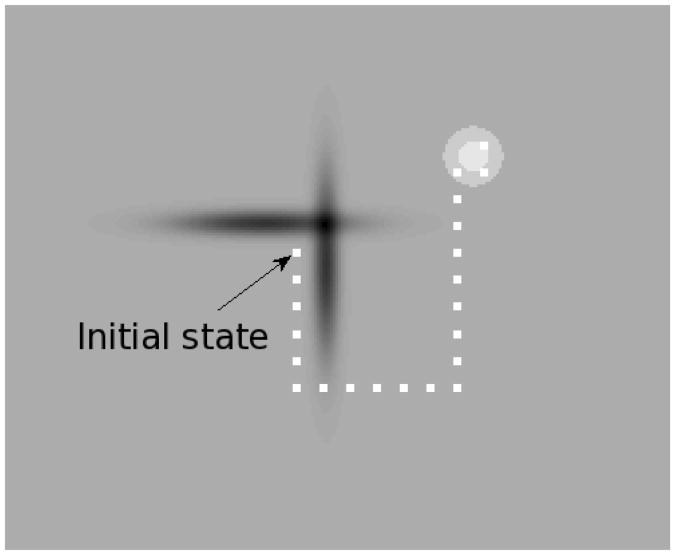

• Illustration

We now illustrate the performances of the policy

computed by the CGRL algorithm on a variant of the puddle world benchmark introduced in [42]. In this benchmark, a robot whose goal is to collect high cumulated rewards navigates on a plane. A puddle stands in between the initial position of the robot and the high reward area (see figure 8). If the robot is in the puddle, it gets highly negative rewards. An optimal navigation strategy drives the robot around the puddle to reach the high reward area. Two datasets of one-step transitions have been used in our example. The first set

contains elements that uniformly cover the area of the state space that can be reached within T steps. The set

contains elements that uniformly cover the area of the state space that can be reached within T steps. The set

′ has been obtained by removing from

′ has been obtained by removing from

the elements corresponding to the highly negative rewards. The full specification of the benchmark and the exact procedure for generating

the elements corresponding to the highly negative rewards. The full specification of the benchmark and the exact procedure for generating

and

and

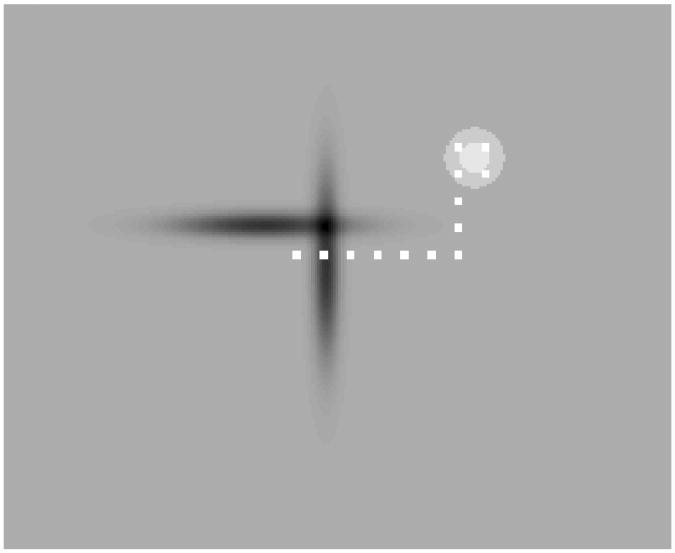

′ are given in [20]. On Figure 9, we have drawn the trajectory of the robot when following the policy

. Every state encountered is represented by a white square. The plane upon which the robot navigates has been colored such that the darker the area, the smaller the corresponding rewards are. In particular, the puddle area is colored in dark grey/black. We see that the policy

drives the robot around the puddle to reach the high-reward area – which is represented by the light-grey circles.

′ are given in [20]. On Figure 9, we have drawn the trajectory of the robot when following the policy

. Every state encountered is represented by a white square. The plane upon which the robot navigates has been colored such that the darker the area, the smaller the corresponding rewards are. In particular, the puddle area is colored in dark grey/black. We see that the policy

drives the robot around the puddle to reach the high-reward area – which is represented by the light-grey circles.

Fig. 8.

The Puddle World benchmark. Starting from x0, an agent has to avoid the puddles and navigate towards the goal.

Fig. 9.

CGRL with ℱ.

Figure 10 represents the policy inferred from ℱ by using the (finite-time version of the) Fitted Q Iteration algorithm (FQI) combined with extremely randomized trees as function approximators [13]. The trajectories computed by the CGRL and FQI algorithms are very similar and so are the sums of rewards obtained by following these two trajectories. However, by using ℱ′ rather than ℱ, the CGRL and FQI algorithms do not lead to similar trajectories, as shown in Figures 11 and 12. Indeed, while the CGRL policy still drives the robot around the puddle to reach the high reward area, the FQI policy makes the robot cross the puddle. In terms of optimality, this latter navigation strategy is much worse. The difference between both navigation strategies can be explained as follows. The FQI algorithm behaves as if it were associating to areas of the state space that are not covered by the input sample the properties of the elements of this sample that are located in the neighborhood of these areas. This in turn explains why it computes a policy that makes the robot cross the puddle. The same behavior could probably be observed by using other algorithms that combine dynamic programming strategies with kernel-based approximators or averagers [5,25,37]. The CGRL policy generalizes the information contained in the dataset by assuming, given the initial state, the most adverse behavior for the environment according to its weak prior knowledge about the environment. As a result, it penalizes sequences of decisions that could drive the robot into areas not well covered by the sample, and this explains why the CGRL policy drives the robot around the puddle when run with ℱ′.

Fig. 10.

FQI with ℱ.

Fig. 11.

CGRL with ℱ′.

Fig. 12.

FQI with ℱ′.

4.4.1 Taking Advantage of Optimal Trajectories

In this section, we give another result which shows that, if an optimal trajectory can be found in the sample of system transitions, then the policy computed by the CGRL algorithm is also optimal.

Theorem 4 (Optimal Policies computed from Optimal Trajectories)

Let

be an optimal open-loop policy. Let us assume that one can find in ℱn a sequence of T one-step system transitions

such that

Let be such that

Then,

Proof Let us prove the result by contradiction. Assume that is not optimal. Since is optimal, one has:

| (1) |

Let us now consider the lower bound on the return of the policy computed from the sequence of transitions

By construction of this sequence of transitions, we have:

By definition of the policy , we have:

| (2) |

Since is a lower bound on the return of , we have:

| (3) |

Combining inequalities (2) and (3) yields a contradiction with inequality (1).

4.5 Rebuilding Artificial Trajectories for Designing Sampling Strategies

We suppose in this section that additional system transitions can be generated, and we detail hereafter a sampling strategy to select state-action pairs (x, u) for generating f(x, u) and ρ(x, u) so as to be able to discriminate rapidly – as new one-step transitions are generated – between optimal and non-optimal policies from Π. This strategy is directly based on the previously described bounds.

Before describing our proposed sampling strategy, let us introduce a few definitions. First, note that a policy can only be optimal given a set of one-step transitions ℱ if its upper bound is not lower than the lower bound of any element of Π. We qualify as “candidate optimal policies given ℱ” and we denote by Π(ℱ, x0) the set of policies which satisfy this property:

Definition 18 (Candidate Optimal Policies Given ℱ)
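The property stated above (a policy remains a candidate as long as its upper bound is not lower than the lower bound of any policy) can be sketched as a simple filter. The function name, the dictionary containers and the numbers below are hypothetical and serve only as an illustration; the bounds themselves would come from Definitions 16 and 17.

```python
def candidate_optimal_policies(lower, upper):
    """Keep the policies whose upper bound is not below any lower bound.

    lower, upper: dicts mapping a policy label to its lower and upper
    bound on the return (hypothetical containers).
    """
    best_lower = max(lower.values())
    return {pi for pi in upper if upper[pi] >= best_lower}

# Illustrative numbers only, not taken from the paper.
lower = {"a": 0.2, "b": 0.5, "c": -0.1}
upper = {"a": 0.4, "b": 0.9, "c": 0.6}
candidates = candidate_optimal_policies(lower, upper)
```

Here policy "a" is discarded because its upper bound (0.4) is below the lower bound of policy "b" (0.5), so "a" cannot be optimal.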

We also define the set of “compatible transitions given ℱ” as follows:

Definition 19 (Compatible Transitions Given ℱ)

A transition (x, u, r, y) ∈ 𝒳 × 𝒰 × ℝ × 𝒳 is said to be compatible with the set of transitions ℱ if

We denote by 𝒞(ℱ) ⊂ 𝒳 × 𝒰 × ℝ × 𝒳 the set that gathers all transitions that are compatible with the set of transitions ℱ.

Our sampling strategy generates new one-step transitions iteratively. Given an existing set ℱm of m ∈ ℕ\{0} one-step transitions, which is made of the elements of the initial set ℱn and the m − n one-step transitions generated during the first m − n iterations of this algorithm, it selects as next sampling point (xm+1, um+1) ∈ 𝒳 × 𝒰 the point that minimizes, in the worst conditions, the largest bound width among the candidate optimal policies at the next iteration:

where

Based on the convergence properties of the bounds, we conjecture that the sequence (Π(ℱm, x0))m∈ℕ converges towards the set of all optimal policies in a finite number of iterations:

The analysis of the theoretical properties of the sampling strategy and its empirical validation are left for future work.

• Illustration

In order to illustrate how the bound-based sampling strategy detailed above makes it possible to discriminate among policies, we consider the following toy problem. The actual dynamics and reward functions are given by:

The state space is included in ℝ. The action space is set to

We consider a time horizon T = 3, which induces 5³ = 125 different policies. The initial state is set to x0 = −0.65. Consequently, there is only one optimal policy, which consists in applying action +0.20 three times.

In the following experiments, the arg min and max operators, whose computation is of huge complexity, are approximated using purely random search algorithms (i.e. by randomly generating feasible points and taking the optimum over those points). We begin with a small sample of n = 5 transitions (one for each action)

and iteratively augment it using our bound-based sampling strategy. We compare our strategy with a uniform sampling strategy (starting from the same initial sample of 5 transitions). We plot in Figure 13 the evolution of the empirical average number of candidate optimal policies (over 50 runs) with respect to the cardinality of the generated sample of transitions (see footnote 2). We empirically observe that the bound-based sampling strategy discriminates policies faster than the uniform sampling strategy. In particular, we observe that, on average, the bound-based strategy using 40 samples provides discriminating performances that are equivalent to those of the uniform sampling strategy using 80 samples, which represents a significant improvement. Note that in this specific benchmark, one should sample 5 + 25 + 125 = 155 state-action pairs (by trying all possible policies) in order to be sure to discriminate all non-optimal policies.
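The two counts quoted for this benchmark (125 open-loop policies and 155 state-action pairs) can be checked with a few lines of Python. The action labels 0 to 4 are placeholders, since only the number of actions matters for the count; the reasoning assumes a deterministic system, so that each sequence of t actions applied from x0 leads to exactly one state.

```python
from itertools import product

n_actions = 5  # five actions; placeholder labels 0..4
T = 3          # time horizon

# An open-loop policy is a sequence of T actions.
policies = list(product(range(n_actions), repeat=T))
n_policies = len(policies)

# Trying every policy from x0 visits one state per action prefix, and
# each of the 5 actions is applied there: 5 pairs at t = 0, 25 at t = 1,
# 125 at t = 2.
n_pairs = sum(n_actions ** t for t in range(1, T + 1))
```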

Fig. 13.

Evolution of the average number of candidate optimal policies with respect to the cardinality of the generated samples of transitions using our bound-based sampling strategy and a uniform sampling strategy (empirical average over 50 runs).

4.6 Summary

We summarize in Figure 14 the different settings and the corresponding results that have been presented in Section 4. These results are classified in two main categories: stochastic setting and deterministic setting. Within each setting, we make explicit the context (continuous/finite action space) and the nature of each result (theoretical result, algorithmic contribution, empirical evaluation) using a color code.

Fig. 14.

A schematic presentation of the results presented in Section 4.

5 Towards a New Paradigm for Batch Mode RL

In this concluding section, we highlight some connections between the approaches based on synthesizing artificial trajectories and a more standard batch mode RL algorithm, the FQI algorithm [13], when it is used for policy evaluation. From a technical point of view, we consider again in this section the stochastic setting that was formalized in Section 3. The action space 𝒰 is continuous and normed, and we consider a given closed-loop, time-varying, Lipschitz continuous control policy h: {0, …, T − 1} × 𝒳 → 𝒰.

5.1 Fitted Q Iteration for Policy Evaluation

The finite horizon FQI algorithm for policy evaluation (FQI-PE) works by recursively computing a sequence of functions as follows:

Definition 20 (FQI-PE Algorithm)

• ∀(x, u) ∈ 𝒳 × 𝒰.

• For t = T − 1…0, build the dataset :

and use a regression algorithm to infer from D the function:

The FQI-PE estimator of the policy h is given by:

Definition 21 (FQI Estimator)

5.2 FQI using k–Nearest Neighbor Regressors: an Artificial Trajectory Viewpoint

We propose in this section to use a k–Nearest Neighbor algorithm (k–NN) as regression algorithm. In the following, for a given state-action couple (x, u) ∈ 𝒳 × 𝒰, we denote by li(x, u) the lowest index in ℱn of the i-th nearest one-step transition from the state-action couple (x, u) using the distance measure Δ. Using this notation, the k–NN based FQI-PE algorithm for estimating the expected return of the policy h works as follows:

Definition 22 (k–NN FQI-PE Algorithm)

• ∀(x, u) ∈ 𝒳 × 𝒰,

• For t = T − 1…0, ∀(x, u) ∈ 𝒳 × 𝒰,

The k–NN FQI-PE estimator of the policy h is given by:

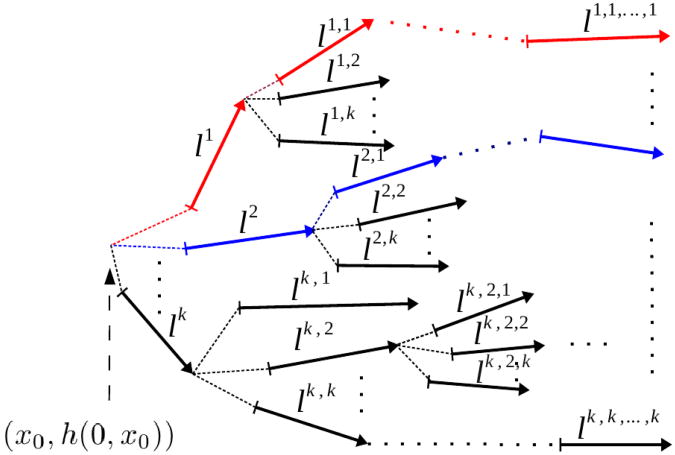

One can observe that, for a fixed initial state x0, the computation of the k–NN FQI-PE estimator of h works by identifying (k + k² + … + k^T) non-unique one-step transitions. These transitions are non-unique in the sense that some transitions can be selected several times during the process. In order to concisely denote the indexes of the one-step system transitions that are selected during the k–NN FQI-PE algorithm, we introduce the notation $l^{i_0, i_1, \ldots, i_t}$ for referring to the transition $l_{i_t}\big(y^{l^{i_0, \ldots, i_{t-1}}}, h(t, y^{l^{i_0, \ldots, i_{t-1}}})\big)$ for $i_0, \ldots, i_t \in \{1, \ldots, k\}$, $t \ge 1$, with $l^{i_0} = l_{i_0}(x_0, h(0, x_0))$. Using this notation, we illustrate the computation of the k–NN FQI-PE estimator in Figure 15. Then, we have the following result:

Fig. 15.

Illustration of the k–NN value iteration algorithm in terms of artificial trajectories.

Proposition 6 (k–NN FQI-PE using Artificial Trajectories)

where the set of rebuilt artificial trajectories

is such that ∀t ∈ {0, …, T − 1}, ∀(i0, …, it) ∈ {1, …, k}^(t+1),

Proof We propose to prove by induction the property

Basis: According to the definition of the k–NN estimator, we have:

which proves the property at rank 1.

Induction step: Let us assume that the property is true at rank t ∈ {1,…, T − 1}. Then, we have:

| (4) |

According to the definition of the k–NN FQI-PE algorithm, we have:

| (5) |

Equations (4) and (5) give

which proves the property at rank t + 1. The proof is completed by observing that

and by observing that the property

directly comes from the use of k–NN function approximators.

The previous result shows that the estimate of the expected return of the policy h computed by the k–NN FQI-PE algorithm is the average of the returns of k^T artificial trajectories. These artificial trajectories are built from (k + k² + … + k^T) non-unique one-step system transitions from ℱn that are also chosen by minimizing the distance between two successive one-step transitions.
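The recursive structure described above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the function name `knn_fqi_pe` is hypothetical, the distance Δ is taken to be the Euclidean distance on the concatenated state-action vector, and the value beyond the horizon is set to zero.

```python
import numpy as np

def knn_fqi_pe(X, U, R, Y, h, x0, T, k):
    """k-NN FQI-PE estimate of the T-stage return of policy h from x0.

    X, U, Y: arrays of shape (n, dx), (n, du), (n, dx); R: array of shape (n,).
    h(t, x) returns the action of the policy at time t in state x.
    """
    XU = np.hstack([X, U])  # one row per one-step transition

    def value(t, x):
        if t == T:
            return 0.0  # nothing left to collect after the horizon
        u = np.atleast_1d(h(t, x))
        # ASSUMPTION: Delta is the Euclidean distance on the joint (x, u) vector.
        d = np.linalg.norm(XU - np.concatenate([x, u]), axis=1)
        # Stable sort: ties are broken in favour of the lowest index.
        nearest = np.argsort(d, kind="stable")[:k]
        # Average, over the k neighbours, of reward plus value at the successor.
        return float(np.mean([R[l] + value(t + 1, Y[l]) for l in nearest]))

    return value(0, np.asarray(x0, dtype=float))
```

Each call to `value` selects k transitions and recurses from the successor state of each of them, so k transitions are selected at the first step, k² at the second, and so on. With k = 1 the recursion follows a single chain of nearest transitions.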

• Illustration. We empirically compare the MFMC estimator with the k–NN FQI-PE estimator on the toy problem presented in Section 4.2, but with a smaller time horizon T = 5. For a fixed cardinality n = 100, we consider all possible values of the parameter k (k ∈ {1,…, 100} since there are at most n nearest neighbours) and p (p ∈ {1,…, 20} since one can generate at most n/T different artificial trajectories without re-using transitions). For each value of p (resp. k), we generate 1000 samples of transitions using a uniform random distribution over the state-action space. For each sample, we run the MFMC (resp. the k–NN FQI-PE) estimator. As a baseline comparison, we also compute 1000 runs of the MC estimator for every value of p. Figure 16 (resp. 17 and 18) reports the obtained empirical average (resp. variance and mean squared error).

Fig. 16.

Empirical average observed for the MC estimator, the MFMC estimator and the k–NN FQI-PE estimator for different values of k and p (k ∈ {1,…, 100}, p ∈ {1,…, 20}, 1000 runs for each value of k, p).

We observe in Figure 16 that (i) the MFMC estimator with p ∈ {1,…, 3} is less biased than the k–NN FQI-PE estimator with any value of k ∈ {1,…, 100} and (ii) the bias of the MFMC estimator increases faster (with respect to p) than the bias of the k–NN FQI-PE estimator (with respect to k). The increase of the bias of the MFMC estimator with respect to p is suggested by Theorem 1, where an upper bound on the bias that increases with p is provided. This phenomenon seems to affect the k–NN FQI-PE estimator (with respect to k) to a lesser extent. In Figure 17, we observe that the k–NN FQI-PE estimator has a variance that is higher than that of the MFMC estimator for any k = p. This may be explained by the fact that for samples of n = 100 transitions, one-step transitions are often re-used by the k–NN FQI-PE estimator, which generates dependence between artificial trajectories. We finally plot in Figure 18 the observed empirical mean squared error (sum of the squared empirical bias and empirical variance) and observe that in our specific setting, the MFMC estimator offers for values of p ∈ {1,2,3,4} a better bias versus variance compromise than the k–NN FQI-PE estimator with any value of k.
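The decomposition of the empirical mean squared error into squared empirical bias plus empirical variance, used for Figure 18, can be checked numerically. The sketch below uses synthetic estimates drawn from a normal distribution, not the data of the experiment; the function name and the numbers are illustrative assumptions.

```python
import numpy as np

def bias_variance_mse(estimates, true_value):
    """Empirical bias, variance and mean squared error of a set of estimates."""
    estimates = np.asarray(estimates, dtype=float)
    bias = estimates.mean() - true_value
    variance = estimates.var()  # population variance (ddof = 0)
    mse = np.mean((estimates - true_value) ** 2)
    return bias, variance, mse

# Synthetic runs of a biased, noisy estimator of the value 1.0.
rng = np.random.default_rng(0)
estimates = 1.0 + 0.1 + 0.5 * rng.standard_normal(1000)
bias, variance, mse = bias_variance_mse(estimates, true_value=1.0)
```

The identity mse = bias² + variance holds exactly here because the variance is computed with the 1/N normalization.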

Fig. 17.

Empirical variance observed for the MC estimator, the MFMC estimator and the k–NN FQI-PE estimator for different values of k and p (k ∈ {1,…, 100}, p ∈ {1,…, 20}, 1000 runs for each value of k, p).

Fig. 18.

Empirical mean square error observed for the MC estimator, the MFMC estimator and the k–NN FQI-PE estimator for different values of k and p (k ∈ {1,…, 100}, p ∈ {1,…, 20}, 1000 runs for each value of k, p).

5.3 Kernel-based and Other Averaging type Regression Algorithms

The results presented in Section 5.2 can be extended to the case where the FQI-PE algorithm is combined with kernel-based regressors and in particular tree-based regressors. In such a context, the sequence of functions is computed as follows:

Definition 23 (KB FQI-PE Algorithm)

∀(x, u) ∈ 𝒳 × 𝒰,

For t = T – 1 … 0, ∀(x, u) ∈ 𝒳 × 𝒰,

with

where Φ: [0,1] → ℝ+ is a univariate non-negative “mother kernel” function, and bn > 0 is the bandwidth parameter. We also suppose that and Φ(x) = 0 ∀x > 1.

The KB estimator of the expected return of the policy h is given by:

Given an initial state x0 ∈ 𝒳, the computation of the KB FQI-PE algorithm can also be interpreted as an identification of a set of one-step transitions from ℱn. At each time step t, all the one-step transitions (xl, ul, rl, yl) that are not farther than a distance bn from (xt, h(t, xt)) are selected and weighted with a distance-dependent factor. Other one-step transitions are weighted with a factor equal to zero. This process is iterated with the output of each selected one-step transition. An illustration is given in Figure 19. The value returned by the KB estimator can be expressed as follows:

Fig. 19.

Illustration of the KB value iteration algorithm in terms of artificial trajectories.

Proposition 7

with

Proof We propose to prove by induction the property

Basis: One has

which proves the property at rank 1.

Induction step: Let us assume that the property is true at rank t ∈ {1,…, T − 1}. Then, one has

| (6) |

According to the KB value iteration algorithm, we have:

| (7) |

Equations (6) and (7) give

Since , one has

which proves the property at rank t + 1. The proof is completed by observing that

.

One can observe through Proposition 7 that the computation of the KB estimate of the expected return of the policy h can be expressed in the form of a weighted sum of the returns of n^T artificial trajectories. Each artificial trajectory is weighted with a factor. Note that some of these factors can be equal to zero. Similarly to the k–NN estimator, these artificial trajectories are also built from the T × n^T non-unique one-step system transitions from ℱn.
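In practice, the weighted sum over artificial trajectories does not need to be enumerated: the same value can be obtained by a backward recursion over the successor states of the sample. The sketch below illustrates this under several assumptions that are ours and not the paper's: the weights are the kernel values Φ(Δ/bn) normalized to sum to one, Δ is the Euclidean distance on the joint state-action vector, the default kernel is a triangular function, and a query with no transition within distance bn gets the value zero. The name `kb_fqi_pe` is hypothetical.

```python
import numpy as np

def kb_fqi_pe(X, U, R, Y, h, x0, T, b_n,
              phi=lambda z: 2.0 * max(0.0, 1.0 - z)):
    """Kernel-based FQI-PE estimate of the T-stage return of h from x0.

    X, U, Y: arrays of shape (n, dx), (n, du), (n, dx); R: array of shape (n,).
    phi: mother kernel, zero beyond 1 (triangular kernel by default).
    """
    XU = np.hstack([X, U])
    n = len(R)

    def weights(x, u):
        # ASSUMPTION: normalized kernel weights on Euclidean distances.
        d = np.linalg.norm(XU - np.concatenate([x, u]), axis=1)
        w = np.array([phi(z) for z in d / b_n])
        total = w.sum()
        return w / total if total > 0 else w  # all zeros if nothing is close

    # V[l] is the value-to-go at successor state y^l; it is zero at time T.
    V = np.zeros(n)
    for t in range(T - 1, 0, -1):
        V = np.array([weights(Y[l], np.atleast_1d(h(t, Y[l]))) @ (R + V)
                      for l in range(n)])
    x0 = np.asarray(x0, dtype=float)
    return float(weights(x0, np.atleast_1d(h(0, x0))) @ (R + V))
```

Each of the T − 1 backward steps evaluates n weight vectors of length n, so the cost is of order T × n² rather than n^T.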

More generally, we believe that the notion of artificial trajectory could also be used to characterize other batch mode RL algorithms that rely on other kinds of “averaging” schemes [24].

6 Conclusion

In this paper we have revisited recent works based on the idea of synthesizing artificial trajectories in the context of batch mode reinforcement learning problems. This paradigm proves to be of value for constructing novel algorithms and performance analysis techniques. We think that it is of interest to revisit in this light the existing batch mode reinforcement learning algorithms based on function approximators in order to analyze their behavior and possibly create new variants presenting interesting performance guarantees.

Acknowledgments

Raphael Fonteneau is a Post-doctoral Fellow of the F.R.S.-FNRS. This paper presents research results of the European excellence network PASCAL2 and of the Belgian Network DYSCO, funded by the Interuniversity Attraction Poles Programme, initiated by the Belgian State, Science Policy Office. We also acknowledge financial support from NIH grants P50 DA10075 and R01 MH080015. The scientific responsibility rests with its authors.

Footnotes

Here the fundamental assumption is that wt is independent of wt−1, wt−2, …, w0 given xt and ut; to simplify all notations and derivations, we furthermore impose that the process is time-invariant and does not depend on the states and actions xt, ut.

We have chosen to represent the average results obtained over 50 runs for both sampling methods rather than the results obtained over one single run since (i) the variance of the results obtained by uniform sampling is high and (ii) the variance of the results obtained by the bound-based approach is also significant since the procedures for approximating the arg min and max operators rely on a random number generator.

Contributor Information

Raphael Fonteneau, Email: raphael.fonteneau@ulg.ac.be, University of Liège, Belgium.

Susan A. Murphy, Email: samurphy@umich.edu, University of Michigan, USA.

Louis Wehenkel, Email: l.wehenkel@ulg.ac.be, University of Liège, Belgium.

Damien Ernst, Email: dernst@ulg.ac.be, University of Liège, Belgium.

References

- 1.Antos A, Munos R, Szepesvári C. Fitted Q-iteration in continuous action space MDPs. Advances in Neural Information Processing Systems 20 (NIPS) 2007 [Google Scholar]

- 2.Bellman R. Dynamic Programming. Princeton University Press; 1957. [Google Scholar]

- 3.Bonarini A, Caccia C, Lazaric A, Restelli M. Batch reinforcement learning for controlling a mobile wheeled pendulum robot. Artificial Intelligence in Theory and Practice II. 2008:151–160. [Google Scholar]

- 4.Boyan J. Technical update: Least-squares temporal difference learning. Machine Learning. 2005;49:233–246. [Google Scholar]

- 5.Boyan J, Moore A. Advances in Neural Information Processing Systems 7 (NIPS) MIT Press; Denver, CO, USA: 1995. Generalization in reinforcement learning: Safely approximating the value function; pp. 369–376. [Google Scholar]

- 6.Bradtke S, Barto A. Linear least-squares algorithms for temporal difference learning. Machine Learning. 1996;22:33–57. [Google Scholar]

- 7.Busoniu L, Babuska R, De Schutter B, Ernst D. Reinforcement Learning and Dynamic Programming using Function Approximators. Taylor & Francis CRC Press; 2010. [Google Scholar]

- 8.Castelletti A, Galelli S, Restelli M, Soncini-Sessa R. Tree-based reinforcement learning for optimal water reservoir operation. Water Resources Research. 2010;46 [Google Scholar]

- 9.Castelletti A, de Rigo D, Rizzoli A, Soncini-Sessa R, Weber E. Neuro-dynamic programming for designing water reservoir network management policies. Control Engineering Practice. 2007;15(8):1031–1038. [Google Scholar]

- 10.Chakraborty B, Strecher V, Murphy S. Workshop on Model Uncertainty and Risk in Reinforcement Learning. NIPS; Whistler, Canada: 2008. Bias correction and confidence intervals for fitted Q-iteration. [Google Scholar]

- 11.Defourny B, Ernst D, Wehenkel L. Workshop on Model Uncertainty and Risk in Reinforcement Learning. NIPS; Whistler, Canada: 2008. Risk-aware decision making and dynamic programming. [Google Scholar]

- 12.Ernst D, Geurts P, Wehenkel L. Iteratively extending time horizon reinforcement learning. European Conference on Machine Learning (ECML) 2003:96–107. [Google Scholar]

- 13.Ernst D, Geurts P, Wehenkel L. Tree-based batch mode reinforcement learning. Journal of Machine Learning Research. 2005;6:503–556. [Google Scholar]

- 14.Ernst D, Glavic M, Capitanescu F, Wehenkel L. Reinforcement learning versus model predictive control: a comparison on a power system problem. IEEE Transactions on Systems, Man, and Cybernetics - Part B: Cybernetics. 2009;39:517–529. doi: 10.1109/TSMCB.2008.2007630. [DOI] [PubMed] [Google Scholar]

- 15.Ernst D, Marée R, Wehenkel L. Reinforcement learning with raw image pixels as state input. International Workshop on Intelligent Computing in Pattern Analysis/Synthesis (IWICPAS) Proceedings series: Lecture Notes in Computer Science. 2006;4153:446–454. [Google Scholar]

- 16.Ernst D, Stan G, Goncalves J, Wehenkel L. Clinical data based optimal STI strategies for HIV: a reinforcement learning approach. Machine Learning Conference of Belgium and The Netherlands (BeNeLearn) 2006:65–72. [Google Scholar]

- 17.Farahmand A, Ghavamzadeh M, Szepesvári C, Mannor S. Regularized fitted Q-iteration: Application to planning. In: Girgin S, Loth M, Munos R, Preux P, Ryabko D, editors. Recent Advances in Reinforcement Learning, Lecture Notes in Computer Science. Vol. 5323. Springer Berlin; Heidelberg: 2008. pp. 55–68.

- 18.Fonteneau R. PhD thesis. University of Liège; 2011. Contributions to Batch Mode Reinforcement Learning.

- 19.Fonteneau R, Murphy S, Wehenkel L, Ernst D. IEEE Symposium on Adaptive Dynamic Programming and Reinforcement Learning (ADPRL) Nashville, TN, USA: 2009. Inferring bounds on the performance of a control policy from a sample of trajectories.

- 20.Fonteneau R, Murphy S, Wehenkel L, Ernst D. A cautious approach to generalization in reinforcement learning. Second International Conference on Agents and Artificial Intelligence (ICAART); Valencia, Spain. 2010.

- 21.Fonteneau R, Murphy S, Wehenkel L, Ernst D. Generating informative trajectories by using bounds on the return of control policies. Workshop on Active Learning and Experimental Design 2010 (in conjunction with AISTATS 2010); 2010.

- 22.Fonteneau R, Murphy S, Wehenkel L, Ernst D. Thirteenth International Conference on Artificial Intelligence and Statistics (AISTATS), JMLR: W&CP 9. Chia Laguna, Sardinia, Italy: 2010. Model-free Monte Carlo–like policy evaluation; pp. 217–224.

- 23.Fonteneau R, Murphy SA, Wehenkel L, Ernst D. Towards min max generalization in reinforcement learning. Revised Selected Papers Series: Communications in Computer and Information Science (CCIS); Agents and Artificial Intelligence: International Conference, ICAART 2010; Valencia, Spain. January 2010; Heidelberg: Springer; 2011. pp. 61–77.

- 24.Gordon G. Stable function approximation in dynamic programming. Twelfth International Conference on Machine Learning (ICML) 1995:261–268.

- 25.Gordon G. PhD thesis. Carnegie Mellon University; 1999. Approximate solutions to Markov decision processes.

- 26.Guez A, Vincent R, Avoli M, Pineau J. Adaptive treatment of epilepsy via batch-mode reinforcement learning. Innovative Applications of Artificial Intelligence (IAAI) 2008.

- 27.Lagoudakis M, Parr R. Least-squares policy iteration. Journal of Machine Learning Research. 2003;4:1107–1149.

- 28.Lange S, Riedmiller M. European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN) Brugge, Belgium: 2010. Deep learning of visual control policies.

- 29.Lazaric A, Ghavamzadeh M, Munos R. Tech rep, SEQUEL (INRIA) Lille - Nord Europe: 2010. Finite-sample analysis of least-squares policy iteration.

- 30.Lazaric A, Ghavamzadeh M, Munos R. Finite-sample analysis of LSTD. International Conference on Machine Learning (ICML) 2010:615–622.

- 31.Morimura T, Sugiyama M, Kashima H, Hachiya H, Tanaka T. Nonparametric return density estimation for reinforcement learning; 27th International Conference on Machine Learning (ICML); Haifa, Israel. Jun. 21-25; 2010.

- 32.Morimura T, Sugiyama M, Kashima H, Hachiya H, Tanaka T. Parametric return density estimation for reinforcement learning; 26th Conference on Uncertainty in Artificial Intelligence (UAI); Catalina Island, California, USA. Jul. 8-11; 2010. pp. 368–375.

- 33.Munos R, Szepesvári C. Finite-time bounds for fitted value iteration. Journal of Machine Learning Research. 2008:815–857.

- 34.Murphy S. Optimal dynamic treatment regimes. Journal of the Royal Statistical Society, Series B. 2003;65(2):331–366.

- 35.Murphy S, Van Der Laan M, Robins J. Marginal mean models for dynamic regimes. Journal of the American Statistical Association. 2001;96(456):1410–1423. doi: 10.1198/016214501753382327.

- 36.Nedić A, Bertsekas DP. Least squares policy evaluation algorithms with linear function approximation. Discrete Event Dynamic Systems. 2003;13:79–110. doi: 10.1023/A:1022192903948.

- 37.Ormoneit D, Sen S. Kernel-based reinforcement learning. Machine Learning. 2002;49(2-3):161–178.

- 38.Peters J, Vijayakumar S, Schaal S. Third IEEE-RAS International Conference on Humanoid Robots. Citeseer; 2003. Reinforcement learning for humanoid robotics; pp. 1–20.

- 39.Pietquin O, Tango F, Aras R. Computational Intelligence in Vehicles and Transportation Systems (CIVTS), 2011 IEEE Symposium on. IEEE; 2011. Batch reinforcement learning for optimizing longitudinal driving assistance strategies; pp. 73–79.

- 40.Riedmiller M. Sixteenth European Conference on Machine Learning (ECML) Porto, Portugal: 2005. Neural fitted Q iteration - first experiences with a data efficient neural reinforcement learning method; pp. 317–328.

- 41.Robins J. A new approach to causal inference in mortality studies with a sustained exposure period–application to control of the healthy worker survivor effect. Mathematical Modelling. 1986;7(9-12):1393–1512.

- 42.Sutton R. Advances in Neural Information Processing Systems 8 (NIPS) MIT Press; Denver, CO, USA: 1996. Generalization in reinforcement learning: Successful examples using sparse coding; pp. 1038–1044.

- 43.Sutton R, Barto A. Reinforcement Learning. MIT Press; 1998.

- 44.Timmer S, Riedmiller M. IEEE Symposium on Approximate Dynamic Programming and Reinforcement Learning (ADPRL) IEEE; 2007. Fitted Q iteration with CMACs; pp. 1–8.

- 45.Tognetti S, Savaresi S, Spelta C, Restelli M. Control Applications (CCA) & Intelligent Control (ISIC), 2009. IEEE; 2009. Batch reinforcement learning for semi-active suspension control; pp. 582–587.