Abstract

Although delay discounting, the attenuation of the value of future rewards, is a robust finding, the mechanism of discounting is not known. We propose a potential mechanism for delay discounting such that discounting emerges from a search process trying to determine what rewards will be available in the future. In this theory, the delay dependence of the discounting of future expected rewards arises from three assumptions. First, that evaluation of outcomes involves a search process. Second, that value is assigned to an outcome proportionally to how easy it is to find. Third, that outcomes that are less delayed are typically easier for the search process to find. By relaxing this third assumption (for example, by assuming that episodically cued outcomes are easier to find), our model suggests that it is possible to dissociate discounting from delay. Our theory thereby explains the empirical result that discounting is slower to episodically-imagined outcomes, because these outcomes are easier for the search process to find. Additionally, the theory explains why improving cognitive resources such as working memory slows discounting, by improving searches and thereby making rewards easier to find. The three assumptions outlined here are likely to be instantiated during deliberative decision-making, but unlikely in habitual decision-making. We model two simple implementations of this theory and show that they unify empirical results about the role of cognitive function in delay discounting, and make new neural, behavioral, and pharmacological predictions.

Introduction

Both human and non-human animals discount future rewards, preferring smaller rewards delivered sooner over larger rewards that will only be available after a delay (Madden and Bickel, 2010). In both human and non-human animals, the ability to wait for a larger reward is positively related to self-control abilities (Mischel and Underwood, 1974; Baumeister et al., 1994; Peters and Büchel, 2011) and inversely related to addiction liabilities (Heyman, 2009; Ainslie, 2001; Perry et al., 2005; Anker et al., 2009; Stanger et al., 2011; Odum et al., 2002; Bickel and Marsch, 2001). Most theories suggest that the temporal discounting of future rewards is related to issues of uncertainty, risk, and investment (Redish and Kurth-Nelson, 2010; Sozou, 1998; Samuelson, 1937); however, the mechanisms underlying the temporal discounting of future rewards remain unresolved. In non-human animals, discounting functions are usually measured through direct choices of actual rewards, often through the use of adjusting delay tasks (Mazur, 1997), in which selecting smaller-sooner or larger-later rewards changes the delay to the larger-later reward. Modeling adjusting delay tasks is possible using reinforcement learning models that adjust decisions based on feedback (Kurth-Nelson and Redish, 2009). Most human experiments, however, have measured discounting rates through questionnaires and single-trial events, in which discounting decisions are made in novel situations, often without any immediate feedback (Madden and Bickel, 2010). Modeling decision-making in novel conditions requires discounting functions that can arise from imagined futures.

Discounting experiments generally find reasonable fits to hyperbolic discounting functions, in which the value of the future reward is discounted by a factor of 1/(1 + k·delay), where k is a fitted discounting rate (Madden and Bickel, 2010). While this fit is often very good, other functions have been proposed, including exponential (Schweighofer et al., 2006), exponential with a bonus for immediate rewards (McClure et al., 2004; Laibson, 1997), and the sum of multiple exponentials (Kurth-Nelson and Redish, 2009; Sozou, 1998). These models all assume that the value of a delayed reward is a simple function of the delay to that reward, following simple delay-dependent assumptions of risk, uncertainty, and investment in economic (Samuelson, 1937; Sozou, 1998) and reinforcement learning (Sutton and Barto, 1998; Redish and Kurth-Nelson, 2010) models.
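As a minimal sketch, the four families of delay-only discounting functions named above can be written as follows. The function names and all parameter values (`k`, `beta`, `delta`, `rates`) are illustrative assumptions, not values fitted in any of the cited studies. The "exponential with a bonus for immediate rewards" is written here in the common beta-delta form.

```python
import math

def hyperbolic(delay, k=1.0):
    """Hyperbolic discounting: 1 / (1 + k * delay)."""
    return 1.0 / (1.0 + k * delay)

def exponential(delay, k=0.1):
    """Exponential discounting: exp(-k * delay)."""
    return math.exp(-k * delay)

def quasi_hyperbolic(delay, beta=0.7, delta=0.95):
    """Beta-delta form: immediate rewards keep full value; any
    delayed reward is scaled by beta * delta**delay."""
    return 1.0 if delay == 0 else beta * delta ** delay

def sum_of_exponentials(delay, rates=(0.01, 0.1, 1.0)):
    """Average of several exponentials with different rates."""
    return sum(math.exp(-k * delay) for k in rates) / len(rates)

if __name__ == "__main__":
    # Print the discount factor each function assigns at a few delays.
    for d in (0, 1, 5, 20):
        print(d, hyperbolic(d), exponential(d),
              quasi_hyperbolic(d), sum_of_exponentials(d))
```

In every one of these forms the discount factor depends on the delay alone, which is the assumption the present theory relaxes.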

Discounting functions, however, are also modulated by cognitive and representational factors. For example, subjects with increased cognitive resources tend to show slower discounting rates. As a trait, higher cognitive skills are correlated with better self-control and slower discounting rates (Mischel and Grusec, 1967; Mischel and Underwood, 1974; Burks et al., 2009). As a state, cognitive resources can be modulated by training working memory (presumably increasing cognitive resources), which slows discounting (Bickel et al., 2011), or by imposing a cognitive load (presumably decreasing cognitive resources), which speeds discounting (Vohs and Faber, 2007). Representationally, discounting rates depend on the episodic nature of the potential options in a questionnaire – if the delayed option is primed with an episodic cue, then subjects are more likely to select it, leading to a decrease in the rate of decay of the discounting function (Peters and Büchel, 2010; Benoit et al., 2011). Similarly, subjects prefer options presented with round numbers ($7 instead of $7.03), showing slower discounting functions when the delayed option is presented in round numbers ($7.03 now versus $20 next week) and faster discounting functions when the immediate option is presented in round numbers ($7 now versus $20.03 next week) (Ballard et al., 2009). Ebert and Prelec (2007) report that the time-sensitivity of discounting functions is susceptible to manipulations of attention, and that these manipulations differ between near-future and far-future options. These results suggest that there are other factors besides time involved in the delay-discounting phenomenon; standard economic and reinforcement-learning models do not capture these modulatory effects (Glimcher, 2008; Daw, 2003).

Current theories of decision-making systems suggest that there are multiple systems that can drive decision-making (Daw et al., 2005; Redish et al., 2008; van der Meer et al., 2012; Montague et al., 2012), including both deliberative and non-deliberative (habit) systems. Deliberative decision-making in particular has been proposed to depend on search processes which proceed through potential futures (Johnson and Redish, 2007; van der Meer et al., 2012) and/or the creation of imagined expectations (episodic future thinking; Schacter et al., 2007; Atance and O’Neill, 2001).

Here, we propose that discounting arises from a cognitive search process trying to identify rewarding situations in the future. We suggest that temporal discounting emerges from the correlation between delay and the ease of identifying future rewarding situations. Since cognitive processes underlie the computation of value, modulation of discounting by representation and cognitive resources emerges directly from the search process.

Theory

We present a theory of discounting in deliberative decision-making. The key to deliberative decision-making (sometimes referred to as “model-based reinforcement learning” (Kaelbling et al., 1996; Sutton and Barto, 1998)) is the ability to evaluate potential future outcomes on the fly, permitting flexible behavior in the face of changing contingencies or changing motivational states (Doya, 1999; van der Meer et al., 2012; Daw et al., 2005; Niv et al., 2006; Hill, 2008; Balleine and Ostlund, 2007; Johnson et al., 2007; Suri, 2002). Several authors have proposed that this evaluation process involves episodic projection of oneself into the future to vicariously sample the outcome and thereby establish a subjective value (van der Meer et al., 2012; Gilbert and Wilson, 2007; Buckner and Carroll, 2007; Johnson and Redish, 2007; van der Meer and Redish, 2009; Schacter et al., 2007).

Even in situations in which one is told the reward that one will receive (for example, if you are offered $100 next week), one needs to determine what the world-situation will be next week in order to evaluate the usefulness (the subjective value) of that $100 next week. The subjective value of that $100 next week is very different if one expects to win the lottery tomorrow. Thus, from a neural decision-making systems point of view, this situation still has to be evaluated like any other situation, in order to establish a subjective value for it. We suggest that while holding onto that semantic association of $100 next week (which modulates both the search process and the situation/reward association mapping), the agent projects an episodic self-representation forward in time. The ability to construct a vivid, associated forward projection will determine how easily one can evaluate the subjective value of that $100.

The exact mechanisms of this episodic projection in the brain are likely to be very complex. There is likely to be a combination of explicit search through a graph of states using causal models at mixed levels of abstraction (Rich and Knight, 1991; Nilsson, 1998; Newell and Simon, 1972; Botvinick and An, 2008; Smith et al., 2006); attractor networks settling into representations (Hertz et al., 1991) or following energetically-favorable pathways encoded by experienced sequences (Johnson and Redish, 2007); and application of correlational and associational knowledge. A complete theory of these processes is beyond the scope of this paper. However, we propose that episodic construction has some essential properties that give rise to temporal discounting and allow us to make qualitative neural, behavioral, and pharmacological predictions about discounting. We therefore first present the essential properties and then present two instantiations of this theory (one based on abstract search processes and one based on settling processes of attractor networks). We show the same qualitative results in each instantiation: temporal discounting of delayed rewards, and modulation of discounting by the representation of outcomes and by cognitive resources.

Assumption 1. Construction can be viewed as a search process (Winstanley et al., 2012)

Neurophysiologically, we suggest that search occurs through the activation space of the neural network that represents episodes. It follows a trajectory from the present toward a representation of a future outcome (Tolman, 1939; Buckner and Carroll, 2007; Johnson and Redish, 2007). As noted above, this is likely to be very complex, but ultimately, the representation starts from the present, and must reach a future outcome in order to value that outcome. For example, the search may involve multiple hierarchical levels, perhaps through cognitive chunks (Newell and Simon, 1972; Botvinick and An, 2008), but each step likely occurs through the settling of a dynamic attractor model, such as a content-addressable memory, which in itself is mathematically akin to a search process (Hertz et al., 1991). Of course, the topology of the neural activation space may look quite different from the topology of the representation space, but we assume that there is some degree of homology. In general, if something is farther away, there are more steps to go through, even though these steps may be in a variety of different spaces.

Assumption 2. The search process values rewards proportionally to how easy they are to find

The search process attempts to find rewards in the future, but in most real-world situations, and even in many experimental tasks, it is not feasible to fully search out every possible outcome. Naturally, the search process can only value rewards if it can find them. An all-or-nothing effect may be partially smoothed out by multiple searches (whether discrete or continuous, i.e. evolving a distribution through the search space). These multiple searches may occur serially (Johnson and Redish, 2007) or in parallel (Botvinick and An, 2008). From the point of view of making adaptive decisions, valuing rewards proportionally to how easy they are to find may reflect that easy-to-search-to rewards are also statistically easier to obtain in the real world. This is expected to be a consequence of the fact that the learning mechanisms that create the representation space through which the search operates tend to associate things that are linked in the real world.

Neurophysiologically, it is likely that evaluation occurs through a downstream brain network that has learned to associate which kinds of situations are valuable and which aren’t, probably involving orbitofrontal cortex (Padoa-Schioppa and Assad, 2006; Zald and Rauch, 2006; Schoenbaum et al., 2009) and ventral striatum (van der Meer and Redish, 2011; Pennartz et al., 2009; McDannald et al., 2011; Roesch et al., 2009). If we assume that the classification process is locally smooth, then rewards will be more valued if they are easier for the episodic projection to find, because having a representation closer to something that has been learned to be rewarded will be more subjectively valuable.

Assumption 3. Multiple factors influence how easy a reward is to find, including the temporal distance to the reward and the ease of constructing the future representation

Since events are generally continuous through time, there is an inherent overlap between “now” and any given future, which decays with a regular time-course (Rachlin, 2004; Howard et al., 2005). Previous researchers have suggested that this creates a separation between current and future “selves” such that future selves are farther away than one’s current “self” (Ainslie, 1992; Ainslie, 2001; Trope and Liberman, 2003; Rachlin, 2004). Thus, we assume that the ease of construction of a future outcome is correlated with the delay to the outcome. However, because the fundamental cause of temporal discounting in our theory is that more distant rewards are harder to find, it is possible to modulate discounting by creating situations where delay and ease of finding are dissociated. One interesting example is when outcomes are made easier to find by altering the energy landscape around them. We will show, for example, that in an attractor-network instantiation of this theory (Model 2, below), increased interconnection strength of a memory (producing a deeper basin of attraction) allows the network to find that memory faster and more reliably, leading to slower discounting. We argue that increased interconnection strength is a feature of vivid representations. Therefore, boosting the vividness of the outcome leads to slower discounting, as observed experimentally (Peters and Büchel, 2010; Benoit et al., 2011).

Neurophysiologically, assumption 3 is met because it is easier for the projection to reach temporally nearer outcomes because they also tend to be nearer in feature space. The distance in “search space” is the ease of constructing that future (Schacter and Addis, 2007; Schacter et al., 2008). Situations that are more separated in time tend to be more separated in feature space because everything in the world is changing, and by the nature of learning in associative networks, situations that are more separated in feature space are likely to be more separated in activation space. Of course, the topology of the activation space is determined by the dynamics of the network, and things may be topologically closer or further than their distance in raw activation space. We assume that there is a finite time available for searching, so the search can’t simply run forever until it finds all outcomes. Experimentally, discounting is often measured with questionnaires, where the experimenter asks the subject’s preference between, for example, $100 today or $1000 in 1 year. In this case, even though the subject “knows where the reward is” (e.g. $1000 in 1 year), his or her episodic representation must still follow a trajectory from the present to that outcome in order to perceive it as valuable. We suggest that the broader space of possibilities (“where will I be in a year?”) leads to a decrease in the expected value of that delayed outcome. In summary, this theory constitutes a novel explanation for the mechanism of delay discounting — rewards that are temporally near will be more likely to be found and will be valued higher. We now present two models that instantiate, respectively, the generic assumptions and their mapping onto episodic construction.

Materials and methods

Model 1: Abstract search

The model simulates the process of estimating the value of a situation by identifying the future rewards that will be available.

Searches in Model 1 were performed in the real number plane, $\mathbb{R}^2$. Searches always started from the origin. Certain locations within the representation space were defined as rewarded. For simplicity, we assumed that rewards are either present or not (i.e. they were not probabilistically delivered). Multiple searches were performed, and the value produced by the model was defined as the number of searches that found a reward, divided by the total number of searches. Delay to a reward was modeled as Euclidean distance (following Assumption 3); thus a reward available after 5 time-steps would be located 5 units from the origin.

Search dynamics proceeded by being updated through standard particle motion equations, stochastically following an imposed energy gradient,

| (1) |

| (2) |

where was the location of the particle at time t, and was the velocity at time t.

The inverse friction (slipperiness) F was set to 0.9 for all simulations. The thermal noise was isotropic; it was independently drawn at each time-step from a uniform distribution over the range (−0.5, 0.5) in each dimension. The inertia m was held at 0.1 for all simulations.

The energy gradient was defined by the surface

$$E(x, y) = \sum_i B_i(x, y) \tag{3}$$

where each $B_i$ is one basin. Basins were defined by the following equation:

| (4) |

where $D_i$ defined the depth of basin $i$, and $(x_i, y_i)$ defined the center of the basin. Qualitatively similar results were found with a number of different basin shapes. These parameters were chosen as being robust to allow examination of the effects of other parameters on the system. $D_i$ was set to 1 unless it was being explicitly manipulated. In Fig. 1, the number of basins was zero, so the energy landscape was flat. In Figs. 2 and 3, the number of basins was one and this basin was centered on the reward. In Fig. 4, there was a basin centered on the reward and also other basins centered at uniformly random locations within (−50, 50) in each dimension.

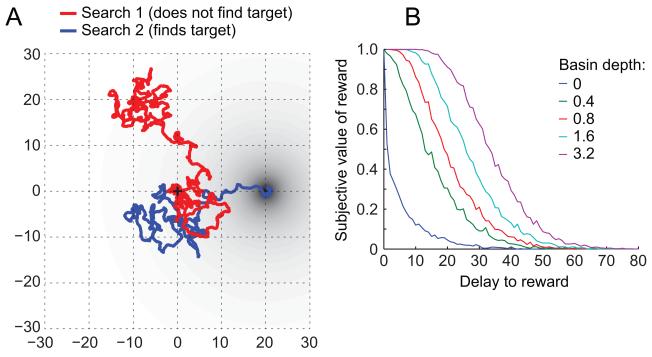

Figure 1.

Discounting arises from a set of simple assumptions about search. (A) Examples of two random searches on the plane. Black plus sign at origin indicates start of searches. Black circle indicates location of reward. (B) Subjective value (defined as the fraction of searches that find the target) is smaller when the delay (defined as distance between start of search and target reward) is larger.

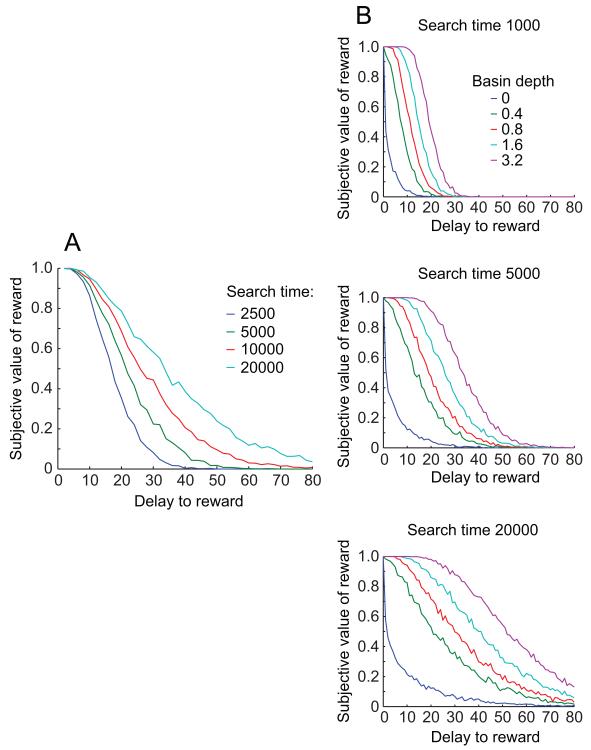

Figure 2.

Discounting is slower when the representation of the outcome is more energetically favorable. (A) Examples of two searches through an energy landscape (indicated by gray shading) with one basin, which is centered at (20,0). The reward is located at the center of the basin. (B) The attenuation of subjective value with increasing delay (i.e., discounting) is less pronounced when the basin is deeper. Note that the dark blue trace is the same as Fig. 1B.

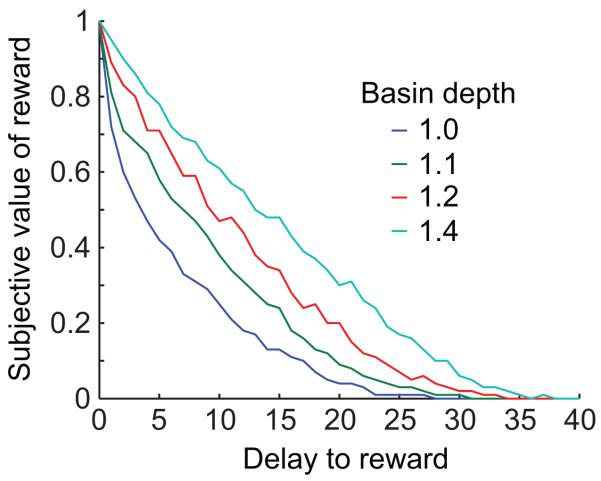

Figure 3.

Discounting is slower when more time is available to search, and this effect is greater when the outcome is more energetically favorable. (A) As maximum search time increases, the discounting function becomes shallower, meaning that the same delay produces less reduction in subjective value. (B) In each panel, discounting curves are shown for a range of different basin depths. As search time is increased from the top panel to the bottom panel, the separation between the curves increases, meaning the effects of search time and basin depth on the curves are supra-additive. The middle panel is the same as Fig. 2B.

If, at any time step, the search came within a Euclidean distance of 0.5 of a reward, the search terminated and returned a positive value indicating that the reward was found. If the search reached its maximum duration (held at 5000 time-steps for all simulations in which it was not explicitly manipulated) without finding reward, the search terminated and returned a value of 0. On each trial, 1000 independent searches were done. Subjective value was taken to be the proportion of searches that found a given reward.
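The procedure above can be illustrated with a minimal sketch of the flat-landscape case (no basins, as in Fig. 1). The sketch makes a simplifying assumption: each search is a pure random walk whose step is the uniform thermal noise, so the inertia and friction terms of the particle dynamics are omitted. The function name `search_value` and its parameter names are hypothetical; the default values (capture radius 0.5, noise range (−0.5, 0.5), 5000 steps, 1000 searches) follow the text.

```python
import numpy as np

def search_value(distance, n_searches=1000, max_steps=5000,
                 capture_radius=0.5, noise_half_width=0.5, seed=0):
    """Fraction of random-walk searches that reach a reward placed
    `distance` units from the origin within `max_steps` steps."""
    rng = np.random.default_rng(seed)
    target = np.array([distance, 0.0])
    pos = np.zeros((n_searches, 2))  # every search starts at the origin
    # A search succeeds as soon as it is within the capture radius.
    found = np.linalg.norm(pos - target, axis=1) < capture_radius
    for _ in range(max_steps):
        active = ~found
        if not active.any():
            break
        # Assumed dynamics: pure diffusion driven by uniform noise;
        # searches that already found the reward no longer move.
        step = rng.uniform(-noise_half_width, noise_half_width,
                           size=(int(active.sum()), 2))
        pos[active] += step
        found |= np.linalg.norm(pos - target, axis=1) < capture_radius
    return float(found.mean())

if __name__ == "__main__":
    # Subjective value as a function of delay (distance to the reward).
    for d in (2, 5, 10, 20, 40):
        print(d, search_value(d, n_searches=200))
```

Under these assumptions the returned fraction falls as the reward is placed farther from the origin, which is the qualitative pattern described for Fig. 1B; the exact curve will differ from the published one because the dynamics are simplified.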

Model 2: Hopfield network

This simulation used a standard Hopfield network (Hopfield, 1982; Hertz et al., 1991). Each unit $i$ had a state $s_i$ of 1 or −1. A set $P$ of randomly defined patterns was encoded in the weights of the network. The weights were defined as

$$w_{ij} = \sum_{p \in P} p_i \, p_j + N \tag{5}$$

where $p_i$ is the $i$th bit of pattern $p$, and $N$ is a noise contribution, drawn from a uniform random distribution centered about zero. The weights were symmetric, so $w_{ij} = w_{ji}$. New random patterns were used on each trial to reduce the systematic bias that would arise from any particular configuration of patterns (in other words, the weights were regenerated from scratch on each trial).

One of the encoded patterns was defined as the target, to simulate where reward was located. The other patterns were non-targets.

On each trial, the activation state of the network was initialized to a pattern that was generated by starting from the target pattern and flipping H bits. The delay on that trial was defined as H, the Hamming distance between the starting position and the target. The network was then updated asynchronously until either 1) the search time limit was reached, or 2) the target pattern was reached. Exactly one unit was randomly selected for update on each time step. The new state of this unit i was,

$$s_i = \operatorname{sgn}\left(\sum_j w_{ij} \, s_j\right) \tag{6}$$

where j indexes the set of units. Thus, on each time step one unit was selected for update, but that unit may or may not have changed its state on that time step, depending on whether it already had the same sign as the weighted sum of its inputs.

NT trials were run at each delay of each condition, and the fraction of these trials in which the network reached the target pattern was reported as the subjective value.

The depth of the basin for a particular pattern was manipulated by multiplying the contribution of that pattern to the weights by a constant.

In experiments where the pattern strength of the target pattern was varied, the weights were calculated as

$$w_{ij} = \sum_{p \in P} e_p \, p_i \, p_j + N \tag{7}$$

where $e_p$ is the strength of pattern $p$. $e_p$ was always set to 1 for all non-target patterns, but could be greater than 1 for target patterns.
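The trial procedure described in this section can be sketched as below. This is an illustration under stated assumptions, not the simulation code used for the figures: the network size, number of patterns, step limit, and number of trials are hypothetical values, the noise amplitude defaults to zero because the text does not give it, self-connections are zeroed, and a weighted input of exactly zero is resolved to +1. The function name `hopfield_value` is also hypothetical.

```python
import numpy as np

def hopfield_value(hamming, n_units=100, n_patterns=5, target_strength=1.0,
                   max_steps=2000, n_trials=50, noise_width=0.0, seed=0):
    """Fraction of trials in which the network reaches the target
    pattern, starting `hamming` flipped bits away from it."""
    rng = np.random.default_rng(seed)
    hits = 0
    for _ in range(n_trials):
        # New random +/-1 patterns on every trial; pattern 0 is the target.
        patterns = rng.choice([-1, 1], size=(n_patterns, n_units))
        strength = np.ones(n_patterns)
        strength[0] = target_strength
        # Weights: sum over patterns of e_p * p_i * p_j, plus noise.
        w = (patterns * strength[:, None]).T @ patterns
        noise = np.triu(rng.uniform(-noise_width, noise_width, w.shape), 1)
        w = w + noise + noise.T    # mirrored so that w_ij == w_ji
        np.fill_diagonal(w, 0.0)   # assumption: no self-connections
        target = patterns[0]
        state = target.copy()
        flipped = rng.choice(n_units, size=hamming, replace=False)
        state[flipped] *= -1       # start at Hamming distance `hamming`
        n_wrong = hamming          # running Hamming distance to target
        for _ in range(max_steps):
            if n_wrong == 0:
                break
            i = rng.integers(n_units)  # asynchronous: one unit per step
            new = 1 if w[i] @ state >= 0 else -1  # assumed tie-break: +1
            if new != state[i]:
                n_wrong += 1 if state[i] == target[i] else -1
                state[i] = new
        hits += (n_wrong == 0)
    return hits / n_trials

if __name__ == "__main__":
    for h in (0, 10, 30, 50, 70):
        print(h, hopfield_value(h, n_trials=20))
```

Passing `target_strength` greater than 1 corresponds to deepening the target's basin, and changing `max_steps` corresponds to changing the search time limit.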

Results

Model 1: Abstract search

This simple model instantiates the three assumptions of our theory in the simplest way: there is an abstract search process, which follows a trajectory through an abstract space. This space could correspond to anything from neural activation space to sensory feature space to a high-level hierarchical rule space. For this model, the search space is the real plane with a Euclidean distance metric.

In Figure 1, we used random diffusion as the search dynamics. This is the simplest possible search dynamics, and simply illustrates that the phenomenon of discounting arises from our three theoretical assumptions. Many other forms of search dynamics would produce the same results.

In Figures 2 and 3, we added the simplest possible extra assumption: that the search process has some non-random dynamics that favor particular subsets of the space. Again, we implement this with the simplest possible instantiation: a gravity-like attraction to radially symmetric energy basins. This gives an example of how the assumption of adding non-random dynamics can produce a change in discounting (slower discounting to outcomes in regions of the representation space that the dynamics favors).

Starting from the current location (without loss of generality defined to be (0, 0)), we allowed a large number of serial searches to evolve along random trajectories. Examples of these trajectories are shown in Figure 1A. Delay was modeled as Euclidean distance. As a consequence, more delayed outcomes were discounted relative to less delayed outcomes (Figure 1B).

Effect of changing basin depth

In order to encode memories within our representation space, we defined an energy function over the space, distorting the two-dimensional space with basins. The random diffusion of the search was modulated by a gravity-like effect based on the shape of the energy function (Hertz et al., 1991) (Fig. 2A). Deeper and broader basins were more likely to be found, meaning that changes in the basin shape will have profound effects on the discounting function. Searches probabilistically gravitate towards basins, meaning that searches are more likely to find targets at the bottom of deeper basins before the search’s maximum search-time expires. This mitigates the effect that distance (which stands in for delay) has in making it harder to find the target, thus effectively slowing the apparent discounting rate (Fig. 2B).

It is interesting to note that, under some conditions, the discounting functions produced by this model have an initial “plateau”: for example, in the purple curve in Fig. 2B, value remains near 1 until the delay reaches about 20. It is a fundamental prediction of the search+basins theory that when the search starts within some radius of an energetically favorable state, it is almost guaranteed to collapse to that state, minimizing the difference between small delays within that radius. Depending on the shape of the energy space, this effect could range from large to unnoticeable.

Effect of changing maximum search time

Finding a reward, particularly a distant reward, takes time. Presumably, a search can only proceed for a limited time before the agent will give up on the search. Therefore, to model this limitation, the available time for a given particle to find a reward was limited. If the particle did not find a reward within that limited number of steps, it returned a value of 0 or “no reward found”. Increasing the search time available to the particle increases the likelihood that the particle will find a distant reward, effectively slowing the apparent discounting rate. Figure 3A shows how changing the search time changes the discounting function. When we systematically varied both the maximum search time and the basin depth, we found a non-linear interaction between these two parameters (Fig. 3B), such that the extent to which a deeper basin slowed the discounting function became much larger when there was a greater maximum search time. This is a novel prediction: the effect of episodicity, as in Peters and Büchel (2010), should become more pronounced in subjects with more cognitive resources (e.g. following working memory training). It arose in the model because if the basin gradient was much less than the thermal noise, then extra search time had relatively little effect (the expected time to reach a given point was exponentially increasing with its distance). But if the basin gradient was non-negligible relative to thermal noise, then extra search time allowed the search to follow the gradient into the basin.

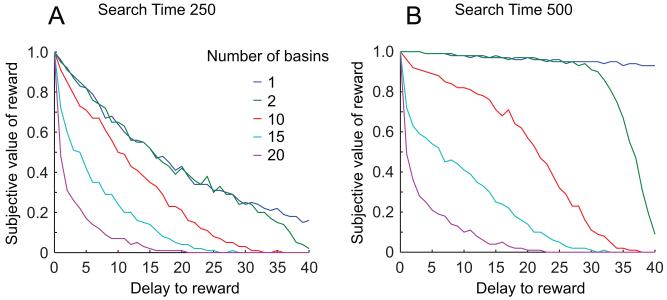

Effect of increasing the number of distractor basins

In Figure 3, there was only one basin, and it was centered on the rewarding target. We also tried adding other, non-target basins in the energy landscape, such that they contributed to the overall gradient, but reaching their center did not impart a reward. This simulates the effect of other coherent situations encoded by the representational system. We found that increasing the number of basins caused the model to discount faster (Fig. 4A), because these non-target basins could draw the search away from the rewarding target. In these simulations, the depth of each basin was 1.

Figure 4.

Discounting is slower when there are fewer incidental minima in the energy space; and this effect is greater with longer search time. (A) In figures 2 and 3, there was only one basin in the energy landscape, centered at the rewarding target. Now we add additional non-target basins. The depth of each basin, including the target basin, is 1. Increasing the number of basins increases the amount of discounting. (B) Increasing the maximum search time greatly enhances the effect of changing the number of basins, i.e., the separation between curves is greater in B than in A.

We also found that as search time increased, the effect of changing the number of basins became even more pronounced (compare Figs. 4A and B). This is because with many non-target basins, it becomes very likely that the search process will fall into one of these incidental basins between the starting point and the target, so that additional search time would be wasted on sitting at the bottom of that incidental basin.

This makes the novel prediction that increasing cognitive resources should have little effect on discount rates when there are many other targets encoded in the search space.

Model 2: Hopfield network

One interesting instantiation of our search assumptions is a settling attractor network representing an episodic future imagination process. In this section we show that a settling attractor network model exhibits the same discounting behavior as we showed for an abstract search process in the previous section. The model here consisted of a standard Hopfield network, with NP patterns encoded in the weights (Fig. 5A). One of the patterns was defined as the rewarding target.

Figure 5.

Discounting arises in the settling of an attractor network. (A) The units in the Hopfield network simulation are updated asynchronously until the target pattern is found or the maximum search time is reached. (B) Subjective value (defined as the fraction of trials where the network settles on the rewarding target) is smaller when the delay (defined as Hamming distance between the starting state of the network and the rewarding target) is larger.

Hopfield network discounts delayed rewards

We modeled the process of evaluating the subjective value of a future outcome. The system starts from a present state and projects forward to imagine a future state. Delay to the future outcome was modeled as the Hamming distance between the starting state and the outcome state. To generate a starting state for the units that was Hamming distance H from the rewarding target, we flipped a random H bits of the pattern representing the rewarding target.

After the units were initialized to a starting state, the network was allowed to run with asynchronous updates until either the network’s state matched the rewarding target, or the maximum number of asynchronous update steps (search time limit) was reached.

As we varied the delay, the subjective value calculated by the model decreased roughly exponentially (Fig. 5B).

Effect of changing basin depth

We were also interested in the effect of varying episodicity, or the strength or vividness of a future representation. We assume that the more vivid an outcome is, the stronger are the connections that encode it.

In a standard Hopfield network, the connection weight between two units is the sum (across encoded patterns) of the product of the states of those units in that pattern. In our simulations, we permitted a particular pattern to have stronger connections, by multiplying that pattern’s contribution to the weights by a constant greater than one.

When we boosted the vividness of the rewarding target (leaving all other encoded patterns the same), this had the effect of increasing the likelihood of the network settling onto the rewarding target, even when the starting state of the network had a large Hamming distance from the rewarding target. Therefore boosting vividness slowed the discount rate of the model (Fig. 6).

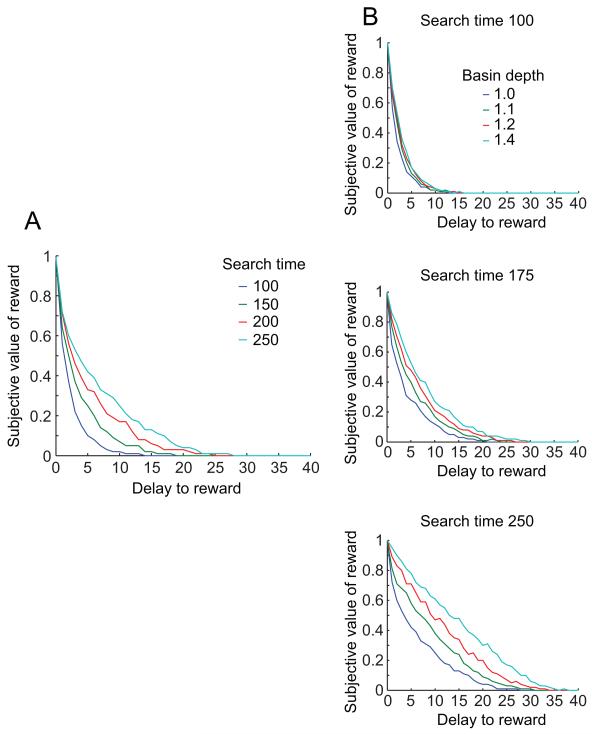

Figure 6.

Discounting is slower when the target pattern is more strongly encoded. As the weight contributions of the rewarding target pattern are increased relative to the other encoded patterns, the discounting function becomes slower.

Effect of changing maximum search time

To simulate the effect of changing the amount of cognitive resources available for construction, we varied the total number of time steps that the model was permitted to try to settle on the rewarding target. Increasing the available search time slowed the discount rate of the model (Fig. 7A), because with more time steps it was less likely that the search would time out before settling on the rewarding target.
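The manipulation can be sketched end to end as follows. This is an illustrative reimplementation under assumptions, not the code behind Fig. 7: subjective value is taken to be the fraction of trials on which the network reaches the rewarding target, the network is smaller than the one in Table 2 so that the example runs quickly, weight noise is omitted, and all function names are hypothetical.

```python
import random

def build_weights(patterns, strengths):
    """Hebbian weights with a per-pattern strength multiplier and zero diagonal."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p, e in zip(patterns, strengths):
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += e * p[i] * p[j]
    return w

def found_target(w, target, h, max_steps, rng):
    """Start h flips away from the target; report whether it is reached in time."""
    n = len(target)
    state = list(target)
    for i in rng.sample(range(n), h):
        state[i] = -state[i]
    for _ in range(max_steps):
        if state == target:
            return True
        i = rng.randrange(n)
        field = sum(w[i][j] * state[j] for j in range(n))  # w[i][i] is 0
        if field != 0:
            state[i] = 1 if field > 0 else -1
    return state == target

def discount_curve(w, target, distances, max_steps, n_trials):
    """Fraction of successful searches at each Hamming distance."""
    curve = []
    for h in distances:
        # Seeding by (h, trial) reuses the same start state and update order
        # across search limits, so a longer limit can only add successes.
        hits = sum(found_target(w, target, h, max_steps,
                                random.Random(1000 * h + t))
                   for t in range(n_trials))
        curve.append(hits / n_trials)
    return curve

setup = random.Random(0)
n_units, n_patterns = 40, 6  # smaller than Table 2, for speed
patterns = [[setup.choice((-1, 1)) for _ in range(n_units)]
            for _ in range(n_patterns)]
target = patterns[0]
w = build_weights(patterns, [1.4] + [1.0] * (n_patterns - 1))
distances = [0, 5, 10, 15, 20]
short_curve = discount_curve(w, target, distances, 50, 40)
long_curve = discount_curve(w, target, distances, 200, 40)
print(short_curve)
print(long_curve)
```

Because each trial is seeded identically under both limits, any difference between the two printed curves reflects searches that timed out under the shorter limit, which is the mechanism described in the paragraph above.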

Figure 7.

Discounting is slower when more time is available for the network to settle, and this effect is greater when the target pattern is more strongly encoded. (A) As maximum settling time increases, the discounting function becomes shallower, meaning that the same delay produces less reduction in subjective value. (B) In each panel, discounting curves are shown for a range of different basin depths. As search time is increased from the top panel to the bottom panel, the separation between the curves increases, meaning the effects of search time and basin depth on the curves are supra-additive. The bottom panel is the same as Fig. 2B.

As in the abstract search model, the effect of changing search time interacted with the effect of changing basin depth. The gap between the discounting curves at 1.0 basin depth and 1.4 basin depth grew larger as more search time was available (Fig. 7B). This supra-additive effect occurred because with a deeper basin, it became more likely that search time was the limiting factor in valuation. In other words, with a deeper basin, it was likely that even searches starting at a large Hamming distance from the rewarding target would eventually find the rewarding target; a larger search time allowed them to do so.

Effect of increasing the number of distractor basins

Auto-associative memories may encode many or few stored representations. Encoding more representations in the same network generally produces a more complex energy landscape with more local minima. We were interested in how the other patterns encoded in the network would interact with the search process to the rewarding target, so we varied the number of patterns NP encoded in the Hopfield network’s weights.

Increasing the number of encoded patterns caused discounting to become faster (Fig. 8A). As the number of encoded patterns increased, it became more likely that the network would either settle to one of the non-target patterns, or get trapped in a local minimum that did not correspond to any encoded pattern. It may have also made the paths through the space more tortuous, decreasing the likelihood of finding the rewarding target before the search time limit was reached.

Figure 8.

Discounting is slower when there are fewer patterns trained in the network, and this effect is greater when more time is available for the network to settle. (A) Increasing the number of patterns encoded in the network’s weights increases the amount of discounting. (B) Increasing the maximum search time enhances the effect of changing the number of basins; i.e., the separation between curves is greater in B than in A.

The effect of the number of encoded patterns also interacted with the effect of changing the maximum search time (Fig. 8, compare A and B). With 20 encoded patterns, the discount curves for search times of 250 and 500 were nearly identical. But with a smaller number of encoded patterns, the discount curve for a search time of 500 was much slower than the discount curve for a search time of 250. This is because, as more non-target basins crowded the space, it became more likely that movement in any direction away from the starting point would quickly fall into a non-target basin, negating the benefit of any further searching.

Correspondence to specific experimental results

Changes in the episodic cueing of the potential options

Peters and Büchel (2010) and Benoit et al. (2011) examined the effect of manipulating episodic cues to the delayed choice in a questionnaire task, comparing the effect of asking for a preference for larger-later (delayed) over smaller-sooner (immediate) choices when the delayed option was marked by a simple time (e.g. “26€ after 35 days”) or by an explicit (and correct) future cue (e.g. “35€ after 45 days during your future vacation in Paris”). Delayed options in the episodic cases were more likely to be preferred, implying a decrease in the discounting rate (Peters and Büchel, 2010; Benoit et al., 2011). We modeled this effect as an increase in the depth of the basin, under the assumption that more easily retrieved episodic memories would be at deeper spots in an attractor network (Kohonen, 1980; Hertz et al., 1991). As can be seen in Figures 4 and 8, increasing the depth of a basin has the effect of increasing the likelihood that a searching particle would find it, and thus decreasing the discounting rate to the episodically-marked future event. In a sense, this increase in depth can be interpreted as a change in the temporal attention being paid to the future event (Ebert and Prelec, 2007). Recently, Radu et al. (2011) found that changes in how classical discounting questionnaires are framed produced changes in discounting rates, and, by studying discounting related to both future and past events, concluded that the changes provided additional attention to the temporally-distant event. The changes in basin depth shown in Figures 2 and 6 model this effect.

Changes in cognitive abilities and cognitive load

Several researchers have noted that subjects with higher cognitive abilities show slower discounting functions than subjects with lower or impaired cognitive abilities. For example, Burks et al. (2009) correlated discounting rates, determined experimentally through questionnaires, with intelligence as measured by the Raven’s matrices nonverbal IQ test, the Hit-15 ability to plan task, and a quantitative literacy test. They found that increased cognitive intelligence as measured by these parameters (all of which were correlated with each other) correlated both with increased consistency between delays (later delays were more likely to be discounted more than earlier delays) and with slowed discounting rates. Franco-Watkins et al. (2006) suggest that cognitive load leads to decreased consistency, while Baumeister et al. (1994), Hinson et al. (2003), Gailliot et al. (2007), and Vohs and Faber (2007) suggest that cognitive load leads to impulsivity.

We can interpret the state of increased cognitive load and the trait of decreased cognitive skills as reductions in the available resources that can be applied to a search. We simulated this with reduced search times. As can be seen in Figure 3, decreasing the maximum time before a search gives up increases the discounting rate (speeds discounting), with an especially pronounced effect when the basin around the reward is deeper. Of course, manipulating the available search time is just a very crude proxy for a host of mechanisms that are likely part of the whole picture of cognitive resources. For example, ability to add more associations might make searches easier by reducing topological distance between points in the representation space. The general point is that by improving the search/construction process, it is more likely that the deliberative decision-making process will be able to find, and therefore value, delayed rewards.

Changes in working memory abilities

Recently, Bickel et al. (2011) examined the effects of training working memory on discounting rates and found that working memory training produced dramatic decreases (slowing) of discounting rates. In contrast, a control cohort that received the same training, but with the answers given, thus not requiring working memory (and producing no working memory improvements), showed no significant changes in discounting rates. Presumably, some aspects of working memory are required to hold on to a search in progress (Baddeley, 1986; Kliegel et al., 2008), and thus we can hypothesize that maximum search time is related to working memory. As with changes in cognitive abilities and cognitive load, this discounting-as-search-to-reward model suggests an explanation for the decreased discounting rates through modulations in the parameters, most likely the length of time a search can proceed before it is abandoned. As can be seen in Figure 2, providing increased potential search time to each particle leads to a slowing of discounting functions.

Relationship to neurophysiology

The discounting mechanism proposed here depends on the depth of the basin of attraction of representations during the imagination of future representations (i.e. during episodic future thinking). Episodic future thinking is known to depend on hippocampus and prefrontal cortex (Schacter et al., 2007; Hassabis et al., 2007; Addis et al., 2007; Buckner, 2010), brain structures that are often modeled as auto-associators with attractor dynamics (Ermentrout, 2005; Redish, 1999; Zhang, 1996; Wilson and Cowan, 1972; Durstewitz et al., 2000). Our theory suggests that the dynamics of the brain structures involved in episodic future thinking will translate directly into discounting rates during deliberative decision-making, in that more easily imagined futures will be discounted less than futures that are more difficult to imagine. From this correspondence, we can make several predictions.

Prediction 1. Episodic discounting rates will depend on the ability to access prefrontal representations

Extensive research in self-control has suggested that cognitive resources, in particular self-control-related resources, depend on a limited resource likely related to glucose availability in prefrontal cortical structures (Gailliot et al., 2007; Vohs and Faber, 2007). Our theory that discounting in deliberative decision-making depends on the ability to imagine future outcomes suggests that discounting rates will depend on this self-control-related resource and can be manipulated through the presence or depletion of these prefrontal glucose resources.

Prediction 2. Subjects unable to episodically imagine futures may still show temporal discounting, but that temporal discounting will not be modulated by cognitive resources or the representational nature of the options

Discounting likely arises from a number of processes, likely different for different decision-making systems. While subjects with damage to prefrontal cortex or hippocampus may still show discounting due to other processes (such as simple associations), the evidence that subjects with hippocampal or prefrontal damage cannot accomplish episodic future thinking (Hassabis et al., 2007; Addis et al., 2007) implies that any discounting functions they do show will be immune to cognitive-resource and representational manipulations. A recent study by Kwan et al. (2011) found that an amnesic patient showed linear discounting rates, while controls showed hyperbolic discounting rates.

Prediction 3. Depth of basin affects discounting rates

Several models (Seamans and Yang, 2004; Redish et al., 2007; Durstewitz et al., 2010; Yamashita and Tanaka, 2005) have suggested that basin depth in prefrontal and hippocampal representations depends on dopaminergic tone, with low tonic levels of dopamine implying shallow basins and fragile representations and high tonic levels of dopamine implying deep basins and rigid representations. This suggests an inverted-U relationship between dopaminergic tone and the ability to value delayed rewards (equivalently, a U-shaped dependence of discounting rate on dopaminergic tone), with overly low tonic dopamine leading to difficulty finding rewards, speeding up discounting rates, and overly high tonic dopamine leading to difficulty in moving through space, also speeding up discounting rates. This may also produce discounting effects as a function of genetic variation in dopamine SNPs (Frank et al., 2009; Frank et al., 2007).

Discussion

We propose that the discounting of expected rewards with time is due to a combination of three theoretical ideas: (1) that model-based reinforcement learning entails a search through the future (planning (Johnson and Redish, 2007), episodic future thinking (Buckner and Carroll, 2007)), (2) that temporally-delayed rewards are harder to find (because they are more contextually separated (Rachlin, 2004; Howard et al., 2005) and thus farther away in the representation space), and (3) that one calculates expected value as a function of the ability to find rewards (thus taking into account expected risk and hazard; Sozou, 1998; Redish and Kurth-Nelson, 2010). In this paper, we show that this simple model can provide explanations for the effects of cognitive and executive functions on discounting rates, including the effect of cueing episodicity of the future reward (Peters and Büchel, 2010; Benoit et al., 2011), the effect of inherent cognitive abilities (Burks et al., 2009; Franco-Watkins et al., 2006), of compromising cognitive abilities, such as providing a cognitive load (Vohs and Faber, 2007) or depleting cognitive resources (Hinson et al., 2003; Baumeister et al., 1994; Gailliot et al., 2007), and of training cognitive abilities (Bickel et al., 2011).

Although the extent to which deliberative (model-based) decision-making depends on vivid episodic construction of future events is not completely clear (van der Meer et al., 2012; Schacter et al., 2007; Simon and Daw, 2011; Daw et al., 2011), we assume here that subjects briefly project themselves into the future to evaluate the subjective value of the outcomes. When a concrete representation of the outcome is not critical (perhaps because things are represented as abstract states (Trope and Liberman, 2003)), our theory reduces to a search through a Markov decision process (Smith et al., 2006), and we would predict that although cognitive skills are still relevant because they are needed to efficiently search through the graph (Huys et al., 2012), the episodic modulation would have diminishing importance.

Search takes time

One of the key points in any model of deliberative decision-making is that the deliberative process takes time (Ratcliff and McKoon, 2008; Gold and Shadlen, 2002; Krajbich et al., 2010; Johnson and Redish, 2007; van der Meer and Redish, 2010). Forcing decisions to terminate early produces more inconsistent results (Ratcliff and McKoon, 2008; Gold and Shadlen, 2002), but can also lead to falling back on inherent biases and changing discounting functions (Simonson and Tversky, 1992; Lauwereyns, 2010). In the two models, limitations on search time are used as a proxy for available cognitive resources. This makes sense because increased cognitive resources should allow a larger exploration of the representation space. However, the implications of our search-to-find-reward model on forcing decisions to terminate early depend on whether the searches are done in parallel or serial. If the searches are done serially (Johnson et al., 2007), then early stopping would be modeled by decreasing the number of searches done, which decreases consistency. If the searches are done in parallel (Botvinick and An, 2008), then early stopping would be modeled by decreasing the maximum time available to each search, which increases impulsivity. However, one has to be careful with interpreting the model in this way because forcing decisions to terminate early may also drive an agent to access different decision-making systems. Decision-making in mammalian systems arises from multiple decision-making processes, which use different information-processing algorithms, including Pavlovian, deliberative (model-based), and habit (model-free) (Daw et al., 2005; Redish et al., 2008; van der Meer and Redish, 2010; van der Meer et al., 2012; Montague et al., 2012; Huys, 2007). 
Different training regimens lead to the selection of different strategies (Restle, 1957) that depend on different brain structures (Barnes, 1979; Packard and McGaugh, 1996; Yin and Knowlton, 2004) and different computations (Daw et al., 2005; van der Meer and Redish, 2010). One prediction of the theory presented in this paper is that discounting will be more stable and less influenced by cognitive phenomena when an agent is primarily accessing non-deliberative decision-making systems (such as Pavlovian or model-free situation-action systems). Any experiment designed to test this prediction, however, will need to ensure that the manipulation is not pushing the agent from one system to the other (Bechara et al., 2001; Bechara et al., 1998).

How much of delay discounting is really about delay?

The search-to-find-a-reward theory proposed here suggests that what appears to be delay discounting is not an inherent consequence of delay itself, but rather reflects the ability of a search process to find reward (which may often be correlated with delay). This implies that value can be dissociated from delay by manipulating the ease of imagining the future outcome (Peters and Büchel, 2010), which would change the ease with which a search process can find that outcome. The difference in discounting functions seen between an amnesic patient (linear) and controls (hyperbolic) (Kwan et al., 2011) may well be due to the inability of the amnesic patient to constructively imagine future outcomes, being dependent instead on semantic associations (Hassabis et al., 2007; Kwan et al., 2011), analogous to the difference seen between recognition and recall (Eichenbaum et al., 2007).

Delay and probability discounting

It is important to note that the probability of reward delivery in an experiment is not the same thing as ease of finding reward in a search through future outcomes. Species do show probability discounting (Madden and Bickel, 2010; Myerson et al., 2011), as one would expect (Stephens and Krebs, 1986; Glimcher, 2008). The two functions are correlated in pigeons (Green et al., 2010), but one needs to account for the inherent non-linearity of risk-aversion for gains and risk-seeking for losses (Kahneman and Tversky, 1979) in order to relate the two functions (Rachlin, 2006; Myerson et al., 2011). Nevertheless, there remain subtle differences in probability and delay discounting functions that are not easily reconciled (such as different relationships to amount of reward; Myerson et al., 2011). Since we do not know the animal’s representation of the space of situations in which probabilistic rewards are delivered (Redish et al., 2007), it is not necessarily true that a high probability of reward delivery translates directly into ease of finding reward.

Symmetric discounting and the symmetry between episodic memory and episodic future thinking

Yi et al. (2006) asked subjects the very strange question “which would you rather have had, a smaller amount in the recent past or a larger amount in the more distant past?” Since the large amount would have been available earlier, economically, all subjects should always have chosen the larger, earlier choice. This is not, however, what subjects did; instead, they showed a similar discounting function, preferring temporally-nearby choices and discounting values into the past. In a similar study looking at past discounting in smokers, smokers discounted the past more than non-smokers, in alignment with the smokers’ faster future discounting rates (Bickel et al., 2008). Our search-based model of discounting explains this effect through the distance to that episodic past memory outcome. In our model, discounting does not depend intrinsically on actual delay, but rather, the ease of finding the outcome. Theories of episodic memory suggest that episodic memories are “rebuilt” anew each time (Loftus and Palmer, 1974) and are akin to “episodic past thinking” using similar mechanisms to “episodic future thinking” (Buckner and Carroll, 2007). Our search-based model of discounting would thus suggest similar rates between forward and backward discounting. In fact, the discounting rates between past and future gains were strongly linearly correlated for both non-smokers and smokers (Bickel et al., 2008). This suggests that more powerful episodic memories should show slower discounting functions than less powerful episodic memories. Whether the same cognitive effects seen in discounting of future rewards (Peters and Büchel, 2010; Vohs and Faber, 2007; Benoit et al., 2011; Bickel et al., 2011) will also occur in the reflected discounting of past rewards remains unknown, but that would be a prediction of our hypotheses. New evidence examining the difference between attention to future and past events seems to support this hypothesis (Radu et al., 2011).

Hierarchical planning

Agents engaged in episodic future thinking rarely progress through a direct sequence of states leading to that episodic future. For example, a postdoc deciding between faculty jobs is unlikely to include all paths to reach those two jobs. (“First, find a moving truck. Second, drive to city X…”) Instead, the postdoc is likely to simply construct that future outcome. (“Imagine, I’m now a new professor at university X. I’ll interact with person Y. I’ll get grad students like those I saw in person Z’s lab...”) The specific path that needs to be taken to achieve the outcome is likely to be considered only if it affects the cost of reaching that outcome. There has been a lot of work integrating planning and the identification of hierarchical subgoals in reinforcement learning (Botvinick and An, 2008; Ribas-Fernandes et al., 2011; Barto and Mahadevan, 2003), but an actual model including subgoals is beyond the scope of this paper as it would depend on the specific nature of those subgoals. Nevertheless, our search-to-find-reward model suggests that the discounting rate seen will depend, not on the total time to reach a reward, but rather on the ability to imagine that reward episodically. This predicts that more complex paths to reward will speed up discounting rates and less complex paths to reward will slow them down. In a sense, discounting rates will depend, not on the actual time, but on the number of subgoals and substeps to reach that reward and the difficulty in finding the solution to each of those subgoals.

Summary and Conclusion

In this paper, we note that temporal discounting of future rewards occurs even in single-trial experiments (such as with questionnaires) and in novel situations (as in real-world deliberations). We therefore propose a model in which discounting arises from a correlation between time to a reward and the ability to find that reward in a search or settling process. This theory provides a ready explanation for the influence of cognitive phenomena on discounting functions and makes a number of predictions. Specifically, it predicts that the influence of these cognitive phenomena will be primarily seen when accessing model-based, deliberative decision-making systems and that the discounting function will depend, not on the absolute delay to a reward, but on the ease of finding that reward in an episodically imagined future.

Table 1.

Parameters used in Model 1 (abstract search)

| Parameter | Value |
| --- | --- |
| search time | 5000 |
| num searches | 1000 |
| reward radius | 0.5 |
| default basin depth | 1 |
| inverse friction F | 0.9 |
| thermal noise | uniform distribution over the range (−0.5, 0.5) in each dimension |
| inertia m | 0.1 |

Table 2.

Parameters used in Model 2 (Hopfield)

| Parameter | Value |
| --- | --- |
| size of the set of patterns | 15 |
| search time limit | 250 |
| number of units | 80 |
| NT, number of trials | 1000 |
| N, noise in weights | uniform distribution over (−0.05, 0.05) |
| ep, strength of pattern p when not directly modeled | 1 |

Acknowledgements

This work was supported by NIH grant R01 DA024080.

Contributor Information

Zeb Kurth-Nelson, Wellcome Trust Center for Neuroimaging, University College London, London WC1N 3BG.

Warren Bickel, Virginia Tech Carilion School of Medicine and Research Institute Virginia Tech, Roanoke VA 24016.

A. David Redish, Department of Neuroscience, University of Minnesota, 6-145 Jackson Hall, 321 Church St. SE, Minneapolis MN 55455.

Reference List

- Addis DR, Wong AT, Schacter DL. Remembering the past and imagining the future: Common and distinct neural substrates during event construction and elaboration. Neuropsychologia. 2007;45:1363–1377. doi: 10.1016/j.neuropsychologia.2006.10.016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ainslie G. Breakdown of will. Cambridge University Press; 2001. [Google Scholar]

- Ainslie G. Picoeconomics: the strategic interaction of successive motivational states within the person. Cambridge University Press; Cambridge [England]; New York, NY, USA: 1992. [Google Scholar]

- Anker JJ, Perry JL, Gliddon LA, Carroll ME. Impulsivity predicts the escalation of cocaine self-administration in rats. Pharmacology Biochemistry and Behavior Pharmacology Biochemistry and Behavior. 2009;93:343–348. doi: 10.1016/j.pbb.2009.05.013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Atance CM, O’Neill DK. Episodic future thinking. Trends in Cognitive Sciences. 2001;5:533–539. doi: 10.1016/s1364-6613(00)01804-0. [DOI] [PubMed] [Google Scholar]

- Baddeley AD. Working memory. Clarendon Press; Oxford University Press; Oxford [Oxfordshire]; New York: 1986. [Google Scholar]

- Ballard K, Houde S, Silver-Babaus S, McClure SM. The decimal effect: Nucleus acumens activation predicts within-subject increases in temporal discounting rates; In Society for Neuroscience Annual Meeting; 2009. [Google Scholar]

- Balleine BW, Ostlund SB. Still at the Choice-Point: Action Selection and Initiation in Instrumental Conditioning. Annals of the New York Academy of Sciences Annals of the New York Academy of Sciences. 2007;1104:147–171. doi: 10.1196/annals.1390.006. [DOI] [PubMed] [Google Scholar]

- Barnes CA. Memory deficits associated with senescence: a neurophysiological and behavioral study in the rat. Journal of comparative and physiological psychology. 1979;93:74–104. doi: 10.1037/h0077579. [DOI] [PubMed] [Google Scholar]

- Barto AG, Mahadevan S. Recent Advances in Hierarchical Reinforcement Learning. Discrete Event Dynamic Systems. 2003;13:341–379. [Google Scholar]

- Baumeister RF, Heatherton TF, Tice DM. Losing control: how and why people fail at self-regulation. Academic Press; San Diego: 1994. [Google Scholar]

- Bechara A, Dolan S, Denburg N, Hindes A, Anderson SW, Nathan PE. Decision-making deficits, linked to a dysfunctional ventromedial prefrontal cortex, revealed in alcohol and stimulant abusers. Neuropsychologia. 2001;39:376–389. doi: 10.1016/s0028-3932(00)00136-6. [DOI] [PubMed] [Google Scholar]

- Bechara A, Nader K, van der Kooy D. A two-separate-motivational-systems hypothesis of opioid addiction. Pharmacology, biochemistry, and behavior. 1998;59:1–17. doi: 10.1016/s0091-3057(97)00047-6. [DOI] [PubMed] [Google Scholar]

- Benoit RG, Gilbert SJ, Burgess PW. A Neural Mechanism Mediating the Impact of Episodic Prospection on Farsighted Decisions. J.Neurosci.Journal of Neuroscience. 2011;31:6771–6779. doi: 10.1523/JNEUROSCI.6559-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bickel WK, Yi R, Kowal BP, Gatchalian KM. Cigarette smokers discount past and future rewards symmetrically and more than controls: Is discounting a measure of impulsivity? Drug Alcohol Depend. Drug and Alcohol Dependence. 2008;96:256–262. doi: 10.1016/j.drugalcdep.2008.03.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bickel WK, Yi R, Landes RD, Hill PF, Baxter C. Remember the Future: Working Memory Training Decreases Delay Discounting Among Stimulant Addicts. Biological Psychiatry. 2011;69:260–265. doi: 10.1016/j.biopsych.2010.08.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bickel WK, Marsch LA. Toward a behavioral economic understanding of drug dependence: delay discounting processes. Addiction. 2001;96:73–86. doi: 10.1046/j.1360-0443.2001.961736.x. [DOI] [PubMed] [Google Scholar]

- Botvinick MM, An J. Goal-directed decision making in prefrontal cortex: a computational framework. In: Koller D, Bengio YY, Schuurmans D, Bouttou L, Culotta A, editors. Advances in Neural Information Processing Systems (NIPS) 2008. [PMC free article] [PubMed] [Google Scholar]

- Buckner RL, Carroll DC. Self-projection and the brain. in Cognitive Sciences. 2007;11:49–57. doi: 10.1016/j.tics.2006.11.004. [DOI] [PubMed] [Google Scholar]

- Buckner RL. The Role of the Hippocampus in Prediction and Imagination. Annual review of psychology. 2010;61:27. doi: 10.1146/annurev.psych.60.110707.163508. [DOI] [PubMed] [Google Scholar]

- Burks SV, Carpenter JP, Goette L, Rustichini A. Cognitive skills affect economic preferences, strategic behavior, and job attachment. Proc.Natl.Acad.Sci.U.S.A.Proceedings of the National Academy of Sciences of the United States of America. 2009;106:7745–7750. doi: 10.1073/pnas.0812360106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-based influences on humans’ choices and striatal prediction errors. Neuron. 2011;69:1204–1215. doi: 10.1016/j.neuron.2011.02.027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat.Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560. [DOI] [PubMed] [Google Scholar]

- Daw ND. School of Computer Science. Carnegie Mellon University; Pittsburgh, Pa: 2003. Reinforcement learning models of the dopamine system and their behavioral implications. [Google Scholar]

- Doya K. What are the computations of the cerebellum, the basal ganglia and the cerebral cortex? Neural networks: the official journal of the International Neural Network Society. 1999;12:961. doi: 10.1016/s0893-6080(99)00046-5. [DOI] [PubMed] [Google Scholar]

- Durstewitz D, Seamans JK, Sejnowski TJ. Neurocomputational models of working memory. Nature neuroscience. 2000;3:1184–1191. doi: 10.1038/81460. [DOI] [PubMed] [Google Scholar]

- Durstewitz D, Vittoz NM, Floresco SB, Seamans JK. Abrupt Transitions between Prefrontal Neural Ensemble States Accompany Behavioral Transitions during Rule Learning. Neuron. 2010;66:438–448. doi: 10.1016/j.neuron.2010.03.029. [DOI] [PubMed] [Google Scholar]

- Ebert JEJ, Prelec D. The Fragility of Time: Time-Insensitivity and Valuation of the Near and Far Future. Management Science Management Science. 2007;53:1423–1438. [Google Scholar]

- Eichenbaum H, Yonelinas AP, Ranganath C. The medial temporal lobe and recognition memory. Annual review of neuroscience. 2007;30:123–152. doi: 10.1146/annurev.neuro.30.051606.094328. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ermentrout B. Neural Oscillators. Lecture notes in mathematics. 2005:69–106. [Google Scholar]

- Franco-Watkins AM, Pashler H, Rickard TC. Does Working Memory Load Lead to Greater Impulsivity? Commentary on Hinson, Jameson, and Whitney (2003) Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32:443–447. doi: 10.1037/0278-7393.32.2.443. [DOI] [PubMed] [Google Scholar]

- Frank MJ, Doll BB, Oas-Terpstra J, Moreno F. Prefrontal and striatal dopaminergic genes predict individual differences in exploration and exploitation. Nat.Neurosci.Nature Neuroscience. 2009;12:1062–1068. doi: 10.1038/nn.2342. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Frank MJ, Moustafa AA, Haughey HM, Curran T, Hutchison KE. Genetic triple dissociation reveals multiple roles for dopamine in reinforcement learning. Proceedings of the National Academy of Sciences of the United States of America. 2007;104:16311–16316. doi: 10.1073/pnas.0706111104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gailliot MT, Baumeister RF, DeWall CN, Maner JK, Plant EA, Tice DM, et al. Self-control relies on glucose as a limited energy source: willpower is more than a metaphor. Journal of personality and social psychology. 2007;92:325–336. doi: 10.1037/0022-3514.92.2.325. [DOI] [PubMed] [Google Scholar]

- Gilbert DT, Wilson TD. Science. Vol. 317. New York, N.Y.: 2007. Prospection: experiencing the future; pp. 1351–1354. [DOI] [PubMed] [Google Scholar]

- Glimcher PW. Neuroeconomics: decision making and the brain. Academic Press; London; San Diego, CA: 2008.

- Gold JI, Shadlen MN. Banburismus and the brain: decoding the relationship between sensory stimuli, decisions, and reward. Neuron. 2002;36:299–308. doi: 10.1016/s0896-6273(02)00971-6.

- Green L, Myerson J, Calvert AL. Pigeons’ Discounting of Probabilistic and Delayed Reinforcers. Journal of the Experimental Analysis of Behavior. 2010;94:113–123. doi: 10.1901/jeab.2010.94-113.

- Hassabis D, Kumaran D, Vann SD, Maguire EA. Patients with hippocampal amnesia cannot imagine new experiences. Proceedings of the National Academy of Sciences of the United States of America. 2007;104:1726–1731. doi: 10.1073/pnas.0610561104.

- Hertz J, Krogh A, Palmer RG. Introduction to the theory of neural computation. Addison-Wesley Pub. Co.; Redwood City, Calif.: 1991.

- Heyman GM. Addiction: a disorder of choice. Harvard University Press; Cambridge, Mass.: 2009.

- Hill C. The rationality of preference construction (and the irrationality of rational choice). Minnesota Journal of Law, Science, and Technology. 2008;9:689–742.

- Hinson JM, Jameson TL, Whitney P. Impulsive decision making and working memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2003;29:298–306. doi: 10.1037/0278-7393.29.2.298.

- Hopfield JJ. Neural Networks and Physical Systems with Emergent Collective Computational Abilities. Proceedings of the National Academy of Sciences of the United States of America. 1982;79:2554–2558. doi: 10.1073/pnas.79.8.2554.

- Howard MW, Fotedar MS, Datey AV, Hasselmo ME. The Temporal Context Model in Spatial Navigation and Relational Learning: Toward a Common Explanation of Medial Temporal Lobe Function Across Domains. Psychological Review. 2005;112:75–116. doi: 10.1037/0033-295X.112.1.75.

- Huys QJ. Reinforcers and control: towards a computational aetiology of depression. University College London; 2007.

- Huys QJ, Eshel N, O’Nions E, Sheridan L, Dayan P, Roiser JP. Bonsai trees in your head: how the Pavlovian system sculpts goal-directed choices by pruning decision trees. PLoS Computational Biology. 2012. In press. doi: 10.1371/journal.pcbi.1002410.

- Johnson A, Redish AD. Neural ensembles in CA3 transiently encode paths forward of the animal at a decision point. Journal of Neuroscience. 2007;27:12176–12189. doi: 10.1523/JNEUROSCI.3761-07.2007.

- Johnson A, van der Meer MA, Redish AD. Integrating hippocampus and striatum in decision-making. Current Opinion in Neurobiology. 2007;17:692–697. doi: 10.1016/j.conb.2008.01.003.

- Kaelbling LP, Littman ML, Moore AW. Reinforcement Learning: A Survey. Journal of Artificial Intelligence Research. 1996;4:237–285.

- Kahneman D, Tversky A. Prospect Theory: An Analysis of Decision under Risk. Econometrica: Journal of the Econometric Society. 1979;47:263–292.

- Kliegel M, McDaniel MA, Einstein GO. Prospective memory: cognitive, neuroscience, developmental, and applied perspectives. 2008 [On-line]. Available: http://search.ebscohost.com/login.aspx?direct=true&scope=site&db=nlebk&db=nlabk&AN=208812.

- Kohonen T. Content-addressable memories. Springer-Verlag; Berlin; New York: 1980.

- Krajbich I, Rangel A, Armel C. Visual fixations and the computation and comparison of value in simple choice. Nature Neuroscience. 2010;13:1292–1298. doi: 10.1038/nn.2635.

- Kurth-Nelson Z, Redish AD. Temporal-difference reinforcement learning with distributed representations. PLoS ONE. 2009;4:e7362. doi: 10.1371/journal.pone.0007362.

- Kwan D, Craver CF, Green L, Myerson J, Boyer P, Rosenbaum RS. Future decision-making without episodic mental time travel. Hippocampus. 2011. doi: 10.1002/hipo.20981.

- Laibson D. Golden Eggs and Hyperbolic Discounting. The Quarterly Journal of Economics. 1997;112:443–477.

- Lauwereyns J. The anatomy of bias: how neural circuits weigh the options. 2010 [On-line]. Available: http://site.ebrary.com/id/10364165.

- Loftus EF, Palmer JC. Reconstruction of Automobile Destruction: An Example of the Interaction between Language and Memory. Journal of Verbal Learning and Verbal Behavior. 1974;13:585–589.

- Madden GJ, Bickel WK. Impulsivity: the behavioral and neurological science of discounting. American Psychological Association; Washington, DC: 2010.

- Mazur JE. Choice, Delay, Probability, and Conditioned Reinforcement. Animal Learning & Behavior. 1997;25:131.

- McClure SM, Laibson DI, Loewenstein G, Cohen JD. Separate neural systems value immediate and delayed monetary rewards. Science. 2004;306:503–507.

- McDannald MA, Schoenbaum G, Lucantonio F, Burke KA, Niv Y. Ventral striatum and orbitofrontal cortex are both required for model-based, but not model-free, reinforcement learning. Journal of Neuroscience. 2011;31:2700–2705. doi: 10.1523/JNEUROSCI.5499-10.2011.

- Mischel W, Grusec J. Waiting for rewards and punishments: effects of time and probability on choice. Journal of Personality and Social Psychology. 1967;5:24–31. doi: 10.1037/h0024180.

- Mischel W, Underwood B. Instrumental Ideation in Delay of Gratification. Child Development. 1974;45:1083–1088.

- Montague PR, Dolan RJ, Friston KJ, Dayan P. Computational psychiatry. Trends in Cognitive Sciences. 2012;16:72–80. doi: 10.1016/j.tics.2011.11.018.

- Myerson J, Green L, Morris J. Modeling the Effect of Reward Amount on Probability Discounting. Journal of the Experimental Analysis of Behavior. 2011;95:175–187. doi: 10.1901/jeab.2011.95-175.

- Newell A, Simon HA. Human problem solving. Prentice-Hall; 1972.

- Nilsson NJ. Artificial Intelligence: a new synthesis. Morgan Kaufmann Publishers; San Francisco, Calif.: 1998.

- Niv Y, Joel D, Dayan P. A normative perspective on motivation. Trends in Cognitive Sciences. 2006;10:375–381. doi: 10.1016/j.tics.2006.06.010.

- Odum A, Madden G, Bickel W. Discounting of delayed health gains and losses by current, never- and ex-smokers of cigarettes. Nicotine & Tobacco Research. 2002;4:295–303. doi: 10.1080/14622200210141257.

- Packard MG, McGaugh JL. Inactivation of hippocampus or caudate nucleus with lidocaine differentially affects expression of place and response learning. Neurobiology of Learning and Memory. 1996;65:65–72. doi: 10.1006/nlme.1996.0007.

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Pennartz CM, Berke JD, Graybiel AM, Ito R, Lansink CS, van der Meer M, et al. Corticostriatal Interactions during Learning, Memory Processing, and Decision Making. Journal of Neuroscience. 2009;29:12831–12838. doi: 10.1523/JNEUROSCI.3177-09.2009.

- Perry JL, Larson EB, German JP, Madden GJ, Carroll ME. Impulsivity (delay discounting) as a predictor of acquisition of IV cocaine self-administration in female rats. Psychopharmacology. 2005;178:2–3. doi: 10.1007/s00213-004-1994-4.

- Peters J, Büchel C. Episodic Future Thinking Reduces Reward Delay Discounting through an Enhancement of Prefrontal-Mediotemporal Interactions. Neuron. 2010;66:138–148. doi: 10.1016/j.neuron.2010.03.026.

- Peters J, Büchel C. The neural mechanisms of inter-temporal decision-making: understanding variability. Trends in Cognitive Sciences. 2011;15:227–239. doi: 10.1016/j.tics.2011.03.002.

- Rachlin H. Notes on discounting. Journal of the Experimental Analysis of Behavior. 2006;85:425–435. doi: 10.1901/jeab.2006.85-05.

- Rachlin H. The science of self-control. Harvard University Press; Cambridge, Mass.; London: 2004.

- Radu PT, Yi R, Bickel WK, Gross JJ, McClure SM. A mechanism for reducing delay discounting by altering temporal attention. Journal of the Experimental Analysis of Behavior. 2011;96:363–385. doi: 10.1901/jeab.2011.96-363.

- Ratcliff R, McKoon G. The diffusion decision model: Theory and data for two-choice decision tasks. Neural Computation. 2008;20:873–922. doi: 10.1162/neco.2008.12-06-420.

- Redish AD, Jensen S, Johnson A. A unified framework for addiction: vulnerabilities in the decision process. Behavioral and Brain Sciences. 2008;31:415–437. doi: 10.1017/S0140525X0800472X.

- Redish AD, Jensen S, Johnson A, Kurth-Nelson Z. Reconciling reinforcement learning models with behavioral extinction and renewal: implications for addiction, relapse, and problem gambling. Psychological Review. 2007;114:784–805. doi: 10.1037/0033-295X.114.3.784.

- Redish AD, Kurth-Nelson Z. Neural models of delay discounting. In: Madden GJ, Bickel WK, editors. Impulsivity: the behavioral and neurological science of discounting. American Psychological Association; Washington, DC: 2010.

- Redish AD. Beyond the cognitive map: from place cells to episodic memory. 1999 [On-line]. Available: http://search.ebscohost.com/login.aspx?direct=true&scope=site&db=nlebk&db=nlabk&AN=9360.

- Restle F. Discrimination of cues in mazes: a resolution of the place-vs.-response question. Psychological Review. 1957;64:217–228. doi: 10.1037/h0040678.

- Ribas-Fernandes JJF, Solway A, Diuk C, McGuire JT, Barto AG, Niv Y, et al. A Neural Signature of Hierarchical Reinforcement Learning. Neuron. 2011;71:370–379. doi: 10.1016/j.neuron.2011.05.042.

- Rich E, Knight K. Artificial intelligence. McGraw-Hill; New York: 1991.

- Roesch MR, Schoenbaum G, Singh T, Leon BP, Mullins SE. Ventral striatal neurons encode the value of the chosen action in rats deciding between differently delayed or sized rewards. Journal of Neuroscience. 2009;29:13365–13376. doi: 10.1523/JNEUROSCI.2572-09.2009.

- Samuelson PA. A Note on Measurement of Utility. The Review of Economic Studies. 1937;4:155–161.

- Schacter DL, Addis DR. Constructive memory: the ghosts of past and future. Nature. 2007;445. doi: 10.1038/445027a.

- Schacter DL, Addis DR, Buckner RL. Remembering the past to imagine the future: the prospective brain. Nature Reviews Neuroscience. 2007;8:657–661. doi: 10.1038/nrn2213.

- Schacter DL, Addis DR, Buckner RL. Episodic Simulation of Future Events: Concepts, Data, and Applications. Annals of the New York Academy of Sciences. 2008;1124:39–60. doi: 10.1196/annals.1440.001.

- Schoenbaum G, Stalnaker TA, Takahashi YK, Roesch MR. A new perspective on the role of the orbitofrontal cortex in adaptive behaviour. Nature Reviews Neuroscience. 2009;10:885–892. doi: 10.1038/nrn2753.

- Schweighofer N, Shishida K, Han CE, Okamoto Y, Tanaka SC, Yamawaki S, et al. Humans can adopt optimal discounting strategy under real-time constraints. PLoS Computational Biology. 2006;2. doi: 10.1371/journal.pcbi.0020152.

- Seamans JK, Yang CR. The principal features and mechanisms of dopamine modulation in the prefrontal cortex. Progress in Neurobiology. 2004;74:1–58. doi: 10.1016/j.pneurobio.2004.05.006.

- Simon DA, Daw ND. Neural correlates of forward planning in a spatial decision task in humans. Journal of Neuroscience. 2011;31:5526–5539. doi: 10.1523/JNEUROSCI.4647-10.2011.

- Simonson I, Tversky A. Choice in Context: Tradeoff Contrast and Extremeness Aversion. Journal of Marketing Research. 1992;29:281–295.

- Smith A, Li M, Becker S, Kapur S. Dopamine, prediction error and associative learning: A model-based account. Network: Computation in Neural Systems. 2006;17:61–84. doi: 10.1080/09548980500361624.

- Sozou PD. On hyperbolic discounting and uncertain hazard rates. Proceedings: Biological Sciences. 1998;265:2015.

- Stanger C, Ryan SR, Fu H, Landes RD, Jones BA, Bickel WK, et al. Delay discounting predicts adolescent substance abuse treatment outcome. Experimental and Clinical Psychopharmacology. 2011. doi: 10.1037/a0026543.

- Stephens DW, Krebs JR. Foraging theory. Princeton University Press; Princeton, N.J.: 1986.

- Suri RE. TD models of reward predictive responses in dopamine neurons. Neural Networks: the official journal of the International Neural Network Society. 2002;15. doi: 10.1016/s0893-6080(02)00046-1.

- Sutton RS, Barto AG. Reinforcement learning: an introduction. 1998 [On-line]. Available: http://search.ebscohost.com/login.aspx?direct=true&scope=site&db=nlebk&db=nlabk&AN=1094.

- Tolman EC. Prediction of vicarious trial and error by means of the schematic sowbug. Psychological Review. 1939;46:318–336.

- Trope Y, Liberman N. Temporal construal. Psychological Review. 2003;110:403–421. doi: 10.1037/0033-295x.110.3.403.