1 Summary

The learning and memory and navigation literatures emphasize interactions between multiple memory systems: a flexible, planning-based system and a rigid, cached-value system. This has profound implications for decision-making. Recent conceptualizations of flexible decision-making employ prospection and projection arising from a network involving the hippocampus. Recent recordings from rodent hippocampus in decision-making situations have found transient forward-shifted representations, which may serve as predictions of future situations. Evaluation of such predictions and subsequent action-selection likely occur downstream (e.g. in orbitofrontal cortex and in ventral and dorsomedial striatum). Classically, striatum has been identified as a critical component of the less flexible, incremental system. Current evidence, however, suggests that striatum is involved in both flexible and stimulus-response decision-making, with dorsolateral striatum involved in stimulus-response strategies and ventral and dorsomedial striatum involved in goal-directed strategies.

2 Introduction

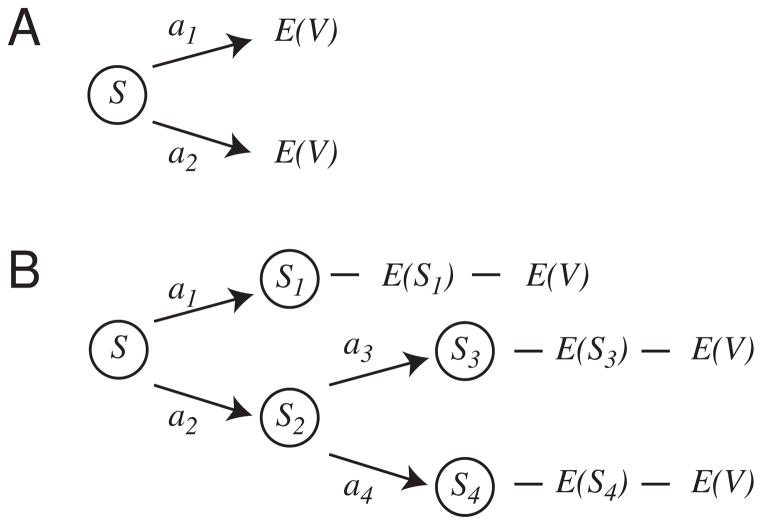

Theoretical perspectives on decision-making have traditionally treated it from a single-system perspective. Within many models of reinforcement learning, decision-making is viewed as learning a mapping from situations (world-states) s to actions a that maximizes reward, by calculating the expected value E(V(s, a)) of taking each action in each situation [1].
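In the standard notation of [1], this expected value and the resulting choice of action can be written as follows. The reward r_t and the discount factor γ (0 ≤ γ < 1) are introduced here only for illustration; they are not part of the original text.

```latex
E\bigl(V(s,a)\bigr) = \mathbb{E}\Bigl[\, \sum_{t=0}^{\infty} \gamma^{t} r_{t} \;\Big|\; s_{0} = s,\ a_{0} = a \Bigr],
\qquad
a^{*}(s) = \arg\max_{a} E\bigl(V(s,a)\bigr)
```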

In contrast, the learning and memory literature has emphasized the interaction of multiple memory systems [2–4]. In the memory literature, the distinction is drawn between declarative and procedural systems [3]. Declarative information is broadly accessible in a range of circumstances and from a variety of retrieval cues [5], whereas procedural information is narrowly bound and accessible only in rigid and specific sequences [6]. In the navigation literature, the distinction is drawn between cognitive map and stimulus-response (route-based) systems [7, 8]. The cognitive map system confers on animals the ability to plan trajectories within their environment and to flexibly integrate new information (such as novel stimuli and rewards). Stimulus-response systems provide the basis for simpler, non-integrated navigation functions such as stimulus recognition and approach.

Although many computational models of these systems have been presented, confirmation of their specific mechanisms in the neurophysiology has been limited [8, 9]. Even without specific mechanisms, there has been a convergence on the proposed anatomical substrates of each component. These theories generally distinguish between a flexible planning system critically dependent on intact hippocampal function and a more rigid, more efficient system critically dependent on intact striatal function [3, 4, 8, 10]. As we review below, recent data now suggest a role for the hippocampus in self-projection during planning strategies, and roles for the striatum in evaluation and action-selection in both planning and cache-based strategies.

3 The hippocampus and decision making

Originally proposed in contrast to stimulus-response approaches to learning, the cognitive map described learning in terms of ubiquitous observation rather than reward-driven association, and decision-making in terms of goals and expectations rather than drives and responses [11]. Animals were hypothesized to store associations between stimuli and to plan actions based on expectations arising from those associations. But without an available computational language, identifying the mechanisms underlying decision-making in the face of explicit expectations has been difficult [11–13].

Recent considerations of goal-directed decision-making have emphasized explicit search processes that predict and evaluate potential future situations [14–16], recalling early artificial-intelligence theories [17]. These hypotheses parallel recent considerations of active memory that have emphasized the functional potential for projecting the conceptual self beyond the current situation [5, 16, 18]. Behaviorally, expectation theory predicts that animals engaged in such active memory processing will pause at decision points as they mentally explore the available possibilities.

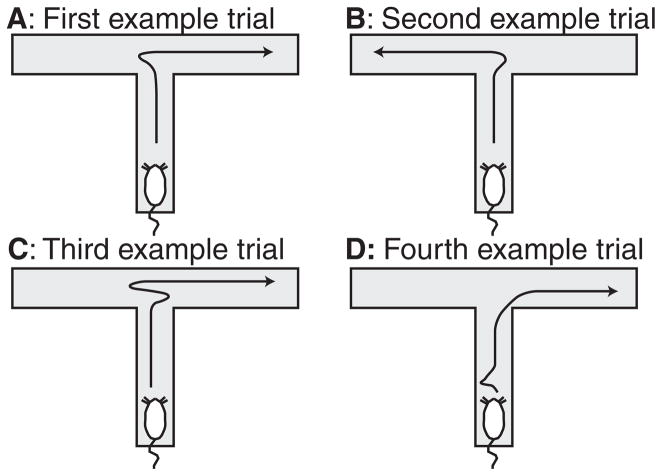

A key experimental observation supporting expectation-based theories of decision-making was that animals paused at choice points and oriented towards potential options. This behavior, termed vicarious trial and error (VTE), occurs during early learning, after initial exposure to a task but before the behavior becomes automated [11, 19]. (See Fig. 2 and supplemental movie.) Animals often show a sudden, non-linear increase in performance [20], which is correlated with VTE [19]. Furthermore, VTE behavior is related to hippocampal integrity: rats with hippocampal lesions display reduced VTE and reduced choice performance [21]. VTE behavior is also correlated with cytochrome oxidase activity in hippocampus on hippocampal-dependent tasks [22]. Within the expectation-based perspective on decision-making, VTE is hypothesized to be the behavioral residue of an animal considering different options as it plans a course of action.

Fig 2. Vicarious trial and error.

On the first example trial, the rat looks left and then goes right. On the second example trial, the rat looks right and then goes left. On the third example trial, the rat looks right, starts left, but then goes right. On the fourth example trial, the rat looks left before starting the journey down the central track and then does not pause at the actual choice point, suggesting that in this last example the decision may have been made before the journey down the central track. A video of a real rat running these four examples is shown in the supplemental movie.

To be useful for decision-making, planning requires several component processes: representation of the current situation (a classification process), prediction of the outcome of an action (representation of a future situation), evaluation of that predicted situation, and action-selection. Hippocampal place cell activity provides a basis for representing the current position (situation); in addition, recent reviews by Buckner and Carroll [5] and Schacter et al. [18] have suggested that self-projection processes, which could support the prediction component, require hippocampal function.
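The four component processes can be sketched as separate functions chained together. This is a minimal illustration under stated assumptions, not a model from the cited reviews: the toy T-maze, the functions `classify`, `predict`, `evaluate`, and `select_action`, and the tables `MODEL` and `OUTCOME` are all hypothetical.

```python
# Hypothetical toy T-maze: (situation, action) -> predicted next situation.
MODEL = {("choice_point", "left"): "left_arm", ("choice_point", "right"): "right_arm"}
OUTCOME = {"left_arm": "food", "right_arm": "water"}  # hypothetical outcomes

def classify(position):
    """Classification: map a raw observation (track position) onto a situation."""
    return "choice_point" if position >= 1.0 else "central_track"

def predict(situation, action):
    """Prediction: represent the situation that an action would lead to."""
    return MODEL.get((situation, action), situation)

def evaluate(predicted, needs):
    """Evaluation: value a predicted situation against the animal's current needs."""
    return needs.get(OUTCOME.get(predicted), 0.0)

def select_action(position, actions, needs):
    """Action-selection: take the action whose predicted situation is valued most."""
    situation = classify(position)
    values = {a: evaluate(predict(situation, a), needs) for a in actions}
    return max(values, key=values.get)

print(select_action(1.0, ["left", "right"], {"food": 1.0, "water": 0.2}))  # left
print(select_action(1.0, ["left", "right"], {"food": 0.1, "water": 0.9}))  # right
```

Because evaluation consults current needs at decision time, the same predictions yield a different choice when needs change, which is the flexibility the planning account relies on.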

Recent data showing that patients with hippocampal lesions are impaired at imagining potential futures support this hypothesis. In a study by Hassabis et al. [23], participants were asked to imagine and describe common scenes (e.g. a market or a beach). Although they reported similar task difficulty, participants with hippocampal damage gave descriptions with reduced content and profound deficits in spatial coherence compared to controls. These findings suggest that the hippocampal system plays a fundamental role in coherently representing imagined potential future situations.

The strong spatial correlates of rodent hippocampal pyramidal cells on spatial tasks (place fields [7, 8]) and the strong spatial effects of hippocampal manipulations in rodents [7, 8] led O’Keefe and Nadel to suggest that the hippocampus could provide a neural substrate for cognitive maps [7].¹ A number of studies have shown that changes in place cell mapping are associated with errors in behavior, implying a functional use of the spatial representation [26–28]. How these maps are used, particularly for planning, remains an open question.

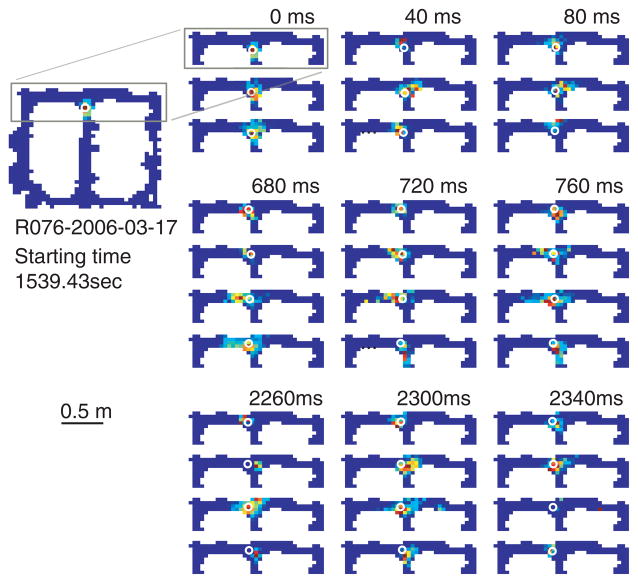

In our recent work on the fast dynamics of hippocampal representations, we found that spatial representations transiently shifted ahead of the animal at a ⊤ choice point [29]. Forward-shifted hippocampal representations coherently moved ahead of the animal into one arm of the ⊤ and then the other (see Fig. 3). These sweeps tended to occur during VTE-like behaviors, when animals paused and looked around at the choice point. And in much the same way that VTE is task- and experience-dependent [19, 21, 22], the behavior of forward-shifted representations was task- and experience-dependent. In a task with stable reward positions, forward-shifted representations first became biased toward one of the two options and then became truncated with further experience [29]. Forward-sweeping representations thus provide a potential representational mechanism for the prediction component of goal-directed decision-making.

Fig 3. Forward-shifted neural representations at the choice point.

Spatial representations were decoded from the activity of simultaneously recorded neural ensembles within the CA3 region of the hippocampus. Each panel shows a sample of the decoded hippocampal spatial representation in a cued choice task [29]. Panels are arranged at 40 ms intervals from left to right, then top to bottom. Representations are displayed as a probability distribution over space (red = high probability, blue = low probability), and the animal’s position is shown as a white ○. The representations closely tracked the rat’s position as the rat approached the choice point. As the rat paused at the choice point, hippocampal spatial representations moved forward of the animal into each arm (first to the left, then to the right). Forward-shifted neural representations provide a potential mechanism for consideration of future possibilities. Data are from a different example drawn from the data set originally reported in [29].
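The decoding step described in this caption can be illustrated with a one-step Bayesian decoder, a common approach for this kind of analysis. This is a sketch under stated assumptions (independent Poisson firing, a uniform spatial prior, and known tuning curves), not a reproduction of the exact procedure used in [29]; the function `decode_position` and the toy numbers are hypothetical.

```python
import numpy as np

def decode_position(spike_counts, tuning_curves, dt):
    """Posterior over spatial bins for one time window of ensemble activity.

    spike_counts:  shape (n_cells,), spikes fired by each cell in the window
    tuning_curves: shape (n_cells, n_bins), mean firing rate (Hz) of each cell in each bin
    dt:            window length in seconds (e.g. 0.04 for 40 ms)
    Assumes independent Poisson cells and a uniform prior over bins.
    """
    expected = tuning_curves * dt + 1e-12  # expected counts; epsilon avoids log(0)
    # Poisson log-likelihood per bin, dropping the bin-independent log(n!) term.
    log_like = spike_counts @ np.log(expected) - expected.sum(axis=0)
    log_like -= log_like.max()  # stabilize before exponentiating
    posterior = np.exp(log_like)
    return posterior / posterior.sum()

# Hypothetical toy ensemble: cell 0 prefers bin 0, cell 1 prefers bin 2.
tuning = np.array([[20.0, 2.0, 0.5],
                   [0.5, 2.0, 20.0]])
posterior = decode_position(np.array([3, 0]), tuning, dt=0.04)
print(posterior.argmax())  # 0
```

In this framing, a decoded representation is non-local when the posterior's mass lies away from the bin the animal actually occupies; a forward sweep appears as that mass moving ahead of the animal over successive windows.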

Representations of future possibilities are not sufficient for action-selection. Decision processes also require mechanisms for evaluating future expectations and for flexibly translating them into behavior. Although other candidates exist, three structures have been suggested as key to evaluation and action-selection processes in the planning system: orbitofrontal cortex [30–32], ventral striatum [32–34], and dorsomedial striatum [35, 36]. Hippocampal activity influences ventral striatal firing [37, 38]. Anticipatory representations in orbitofrontal cortex disappear after hippocampal lesions [39]. During learning, hippocampal and dorsomedial striatal local field potentials become transiently coherent at specific frequencies (theta, 7–10 Hz) [36]. Both ventral striatum [33, 40, 41] and orbitofrontal cortex [31, 42, 43] have been found to encode reward value and expectancy. Together, these findings suggest that the hippocampus may provide only the prediction component; evaluation of that prediction and the decision itself may occur downstream of the hippocampal prediction process.

4 The striatum and decision making

Classically, within the decision-making, memory, and navigation literatures, the dorsal striatum has been identified as a critical component of incremental (procedural, route-based) stimulus-response learning, particularly in contrast to the more flexible (declarative, map-based) hippocampally-dependent learning system [4, 8, 35]. Current evidence, however, suggests that striatum is involved in both flexible (planning) and stimulus-response (habit) strategies.

A distinction can be drawn between the more anterior dorsolateral and the more posterior dorsomedial components of striatum [6, 35]. Dorsolateral striatum is a critical component of incremental (procedural, route-based) stimulus-response learning [35, 44]. This idea has received extensive experimental support from lesion [10, 45, 46], pharmacological [47], and recording studies [48–51]. For example, rodent recording studies during spatial tasks are consistent with such a habit-learning role for dorsolateral striatum, in that firing patterns develop slowly [44, 48–50], but only under conditions in which the relationship is rewarded (N.C. Schmitzer-Torbert, PhD Thesis, University of Minnesota; A.M. Graybiel, Soc Neurosci Abstr, 2006).

In computational terms, this incremental-learning strategy is primarily captured by temporal difference reinforcement learning (TDRL) models, in which a learning process assigns a value E(V(s, a)) to taking an action a within a situation s [1]. A central characteristic of such models is that they select actions based purely on the scalar value cached for taking each action in the current situation. This means that such models cannot accommodate more flexible responses such as latent learning, devaluation, extinction, or reversal [15, 52].
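The defining property of such models, that cached values change only through experienced reward, can be illustrated with a minimal tabular TD update. This is a sketch, not the formulation of [1], [15], or [52]: the one-step lever task, the functions `td_update`, `train`, and `cached_choice`, and the parameter values are hypothetical. It shows why devaluing an outcome outside the task leaves the cached choice unchanged.

```python
import random

ACTIONS = ("press", "wait")

def td_update(q, state, action, reward, next_value, alpha=0.1, gamma=0.9):
    """One temporal-difference update of the cached value E(V(s, a))."""
    delta = reward + gamma * next_value - q[(state, action)]  # prediction error
    q[(state, action)] += alpha * delta
    return delta

def train(q, food_value, n_trials=500, seed=0):
    """Hypothetical one-step task: 'press' yields food, 'wait' yields nothing."""
    rng = random.Random(seed)
    for _ in range(n_trials):
        action = rng.choice(ACTIONS)  # sample both actions so both values are learned
        reward = food_value if action == "press" else 0.0
        td_update(q, "lever", action, reward, next_value=0.0)  # trial ends after one step
    return q

def cached_choice(q):
    """Select the action with the largest cached value; no outcome is consulted."""
    return max(ACTIONS, key=lambda a: q[("lever", a)])

q = train({("lever", a): 0.0 for a in ACTIONS}, food_value=1.0)
# Devaluing the food away from the lever (e.g. by pairing it with illness)
# never touches the table, so the cached choice is unchanged:
print(cached_choice(q))  # press
# Only re-experiencing the devalued outcome through the action lowers the value.
retrained = train(dict(q), food_value=0.0, seed=1)
print(round(retrained[("lever", "press")], 3))
```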

In contrast to the involvement of dorsolateral striatum in outcome-independent control, recent evidence indicates that dorsomedial striatum is involved in flexible goal-directed actions, including the map-based components of navigation tasks [53, 54] and the learning and performance of goal-directed actions of instrumental conditioning tasks [55–57].

As reviewed above, the expression of flexible goal-directed behavior requires at least two processes: access to the knowledge that a given action leads to a particular outcome, and an evaluation of the outcome that takes the organism’s current needs into account. These processes and their striatal underpinnings have been dissociated in instrumental conditioning experiments.

Knowledge of the relationship between action and outcome can be probed by observing rats’ responses to degradation of the contingency between them. When rats trained to lever-press for food X are given a session in which X is delivered independently of lever presses, they reduce lever pressing on a subsequent extinction test [58]. Rats with dorsomedial striatum lesions [57, 59] or with NMDA-receptor antagonist infusions into dorsomedial striatum [56], however, were insensitive to such contingency degradation, suggesting that dorsomedial striatum is a key component in the processing of action-outcome relationships.
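One common way to quantify the action-outcome contingency manipulated in such experiments is ΔP = P(O | A) − P(O | not A), the probability of the outcome given the action minus its probability without the action. The sketch below is illustrative only: the framing in terms of time bins, the function name `delta_p`, and the counts are hypothetical, not data from the cited studies.

```python
def delta_p(n_outcome_with_action, n_action, n_outcome_without_action, n_no_action):
    """Action-outcome contingency: P(O | A) - P(O | not A)."""
    return n_outcome_with_action / n_action - n_outcome_without_action / n_no_action

# Hypothetical counts over short time bins of a session.
# Training: food follows 40% of bins containing a press, and no bins without one.
print(delta_p(40, 100, 0, 100))   # 0.4
# Degradation: food is now also delivered in 40% of bins without a press.
print(delta_p(40, 100, 40, 100))  # 0.0
```

Degradation leaves P(O | A) intact but raises P(O | not A), so the contingency falls to zero even though pressing is still followed by food as often as before; sensitivity to this change is what the lesion and infusion studies probe.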

In contrast, the extant evidence suggests that ventral striatum, particularly the nucleus accumbens core,² is involved in the evaluation component [63, 64]. Recordings from accumbens core have found firing correlates of reward receipt [33, 65] as well as of anticipation of future rewards [37, 41, 66]. How such representations are integrated with action-selection [67] is still unknown, but it is clear that striatal contributions to decision-making are not restricted to incremental, inflexible habit learning.

5 Conclusion

At this point, it is an open question whether the striatum performs similar computational operations on different inputs to accommodate different strategies, or whether there are also internal differences within striatum. It is unknown whether computational models of dorsolateral striatum (e.g. TDRL models) can be extended to accommodate other, more flexible strategies. The most detailed theories of flexible decision-making are the self-projection theories [5, 18] and the expectancy theories [11], most recently instantiated computationally in the Daw et al. [15] and Hasselmo et al. [14, 16] models. These theories suggest that orbitofrontal cortex, dorsomedial striatum, and ventral striatum may select actions based on evaluations of expectancies derived from self-projection information (i.e. non-local representations) projecting in from the hippocampus and frontal cortices. The recent finding by Johnson and Redish [29] that hippocampal representations sweep down potential trajectories during flexible decision-making provides a potential instantiation of a search process. Consistent with a hippocampal-striatal role in early learning are recent local field potential data showing that hippocampal and dorsomedial striatal theta signals increase in coherence during early learning [36]. However, it is still unknown how stored contingencies are integrated with the evaluation of outcome expectancy to produce actions.

Supplementary Material

Vicarious trial and error entails behaviorally identifiable active processes. The video shows four example trials from a one-⊤ loop task. On the first example trial, the rat looks left and then goes right. On the second example trial, the rat looks right and then goes left. On the third example trial, the rat looks left, starts right, but then goes left. Of particular note is the fourth example trial, in which the rat looks right before starting the journey down the central track and then does not pause at the actual choice point, suggesting that in this last example the decision may have been made before the journey down the central track.

On this task, reward was delivered at feeders on either the left or right return rails under an all-Left, all-Right, or alternating schedule. The schedule was selected at random each day and switched to a new schedule at a random time approximately halfway through the 30-minute session.
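The reward protocol can be made concrete with a short simulation. This is a hypothetical sketch: the functions `rewarded_side` and `draw_daily_schedule`, the lap indexing, the assumption that alternation starts on the left, and the ±5-minute window around the session midpoint are assumptions, not details reported in the text.

```python
import random

SCHEDULES = ("all-Left", "all-Right", "alternating")

def rewarded_side(schedule, lap):
    """Feeder ('L' or 'R') that pays off on a given lap under a schedule."""
    if schedule == "all-Left":
        return "L"
    if schedule == "all-Right":
        return "R"
    return "L" if lap % 2 == 0 else "R"  # assumption: alternation starts on the left

def draw_daily_schedule(rng, session_min=30.0, jitter_min=5.0):
    """Initial schedule, a different second schedule, and the switch time (minutes)."""
    first = rng.choice(SCHEDULES)
    second = rng.choice([s for s in SCHEDULES if s != first])
    # assumption: "approximately halfway" modeled as uniform within +/- jitter_min of the midpoint
    switch_at = rng.uniform(session_min / 2 - jitter_min, session_min / 2 + jitter_min)
    return first, second, switch_at

first, second, switch_at = draw_daily_schedule(random.Random(7))
print(first, "->", second, f"at {switch_at:.1f} min")
print([rewarded_side("alternating", lap) for lap in range(4)])  # ['L', 'R', 'L', 'R']
```

Drawing the second schedule from the remaining two guarantees that the mid-session switch always changes the reward contingency.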

Trials are selected from a single session, and are in order, but are not consecutive.

Fig 1. Decision-making under formulations of cache-based stimulus-action (A) and expectation-based planning (B) strategies.

Both strategies require a situation-recognition component to produce a starting point S for predictions within the decision-making process. In cache-based models (A), decision-making entails selecting the action a with the maximum expected return E(V). This means that actions are judged only in terms of their cached expected return. In planning models (B), active memory processes allow exploration of potential future situations S1, …, S4. The outcome of each potential future, E(Si), can then be compared to the animal’s current needs to determine the expected value E(V). Because planning systems include predictions of future situations, they can remain flexible under conditions in which cache-based systems remain rigid. However, because the planning system must serially search into possible futures, it requires processing time not required by the cache-based system.
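The contrast in this figure can be sketched in a few lines. Everything here is a hypothetical illustration, not the models of [15] or [16]: the two-option world in `MODEL` and `OUTCOME`, the needs dictionary, and the step counter are assumptions. The step counter stands in for the serial processing time that the caption attributes to planning.

```python
# Hypothetical world model for one choice point: action -> predicted future situation.
MODEL = {"left": "S1", "right": "S2"}
OUTCOME = {"S1": "food", "S2": "water"}  # hypothetical outcome at each situation

def cached_choice(q):
    """Cache-based (A): choose the action with the largest stored E(V)."""
    return max(q, key=q.get)

def planned_choice(model, outcome, needs):
    """Planning (B): predict each future situation, then value it against current needs."""
    values, steps = {}, 0
    for action, predicted in model.items():
        steps += 1  # each prediction is one serial step of the search
        values[action] = needs.get(outcome[predicted], 0.0)
    return max(values, key=values.get), steps

# Values cached while food was desirable; afterwards, food is devalued.
q = {"left": 1.0, "right": 0.3}
needs_now = {"food": 0.0, "water": 0.3}
print(cached_choice(q))  # left: the cache still reflects the old value of food
print(planned_choice(MODEL, OUTCOME, needs_now))  # ('right', 2)
```

With more options, or deeper searches through S1, …, S4 and beyond, the number of serial steps grows, while the cache-based choice remains a single comparison over stored values.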

Acknowledgments

Adam Johnson was supported by a Dissertation Fellowship from the University of Minnesota. We thank Bruce Overmeier, Paul Schrater, and the Center for Cognitive Sciences at the University of Minnesota for helpful discussions. We thank John Ferguson for helpful discussions, for comments on a draft of the manuscript, and for collecting the data used in the supplemental movie. We thank Geoff Schoenbaum for comments on a draft of the manuscript.

Footnotes

¹ The extent to which place fields are a special case of more general non-spatial information processing continues to be vigorously debated [24, 25]; however, from the earliest descriptions of the cognitive map, it was described as both spatial and non-spatial [7, 11].


References

- 1. Sutton RS, Barto AG. Reinforcement Learning: An Introduction. MIT Press; Cambridge MA: 1998.

- 2. Schacter DL, Tulving E. Memory Systems 1994. MIT Press; 1994.

- 3. Squire LR. Memory systems of the brain: a brief history and current perspective. Neurobiology of Learning and Memory. 2004;82:171–177. doi: 10.1016/j.nlm.2004.06.005.

- 4. Poldrack RA, Packard MG. Competition among multiple memory systems: Converging evidence from animal and human studies. Neuropsychologia. 2003;41:245–251. doi: 10.1016/s0028-3932(02)00157-4.

- 5•. Buckner RL, Carroll DC. Self-projection and the brain. Trends in Cognitive Sciences. 2007;11:49–57. doi: 10.1016/j.tics.2006.11.004. This article brings together several areas of research, including prospection, episodic memory, theory of mind, and navigation, in terms of self-projection. The authors highlight the functional use of a core frontal-temporal network within self-projection tasks and suggest that such function requires a similar set of processes.

- 6. Hikosaka O, Nakahara H, Rand MK, Sakai K, Lu X, Nakamura K, Miyachi S, Doya K. Parallel neural networks for learning sequential procedures. Trends in Neurosciences. 1999;22:464–471. doi: 10.1016/s0166-2236(99)01439-3.

- 7. O’Keefe J, Nadel L. The Hippocampus as a Cognitive Map. Clarendon Press; Oxford: 1978.

- 8. Redish AD. Beyond the Cognitive Map: From Place Cells to Episodic Memory. MIT Press; Cambridge MA: 1999.

- 9. O’Reilly RC, Munakata Y. Computational Explorations in Cognitive Neuroscience. MIT Press; Cambridge MA: 2000.

- 10. Packard MG, McGaugh JL. Inactivation of hippocampus or caudate nucleus with lidocaine differentially affects expression of place and response learning. Neurobiology of Learning and Memory. 1996;65:65–72. doi: 10.1006/nlme.1996.0007.

- 11. Tolman EC. Cognitive maps in rats and men. Psychological Review. 1948;55:189–208. doi: 10.1037/h0061626.

- 12. Tulving E. Episodic memory: From mind to brain. Annual Review of Psychology. 2002;53:1–25. doi: 10.1146/annurev.psych.53.100901.135114.

- 13. Dayan P, Balleine BW. Reward, motivation, and reinforcement learning. Neuron. 2002;36:285–298. doi: 10.1016/s0896-6273(02)00963-7.

- 14. Koene RA, Gorchetchnikov A, Cannon RC, Hasselmo ME. Modeling goal-directed spatial navigation in the rat based on physiological data from the hippocampal formation. Neural Networks. 2003;16:577–584. doi: 10.1016/S0893-6080(03)00106-0.

- 15••. Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nature Neuroscience. 2005;8:1704–1711. doi: 10.1038/nn1560. The authors develop a computational framework for examining the differential contributions of multiple memory systems to decision-making across learning. The model provides a basis for understanding flexible behaviors such as devaluation and planning in terms of multiple memory systems.

- 16•. Zilli EA, Hasselmo ME. Modeling the role of working memory and episodic memory in behavioral tasks. Hippocampus. In press. doi: 10.1002/hipo.20382. This paper provides an early, integrated model of flexible decision-making by incorporating active working memory and episodic memory models into reinforcement learning.

- 17. Newell A. Unified Theories of Cognition. Harvard; 1990.

- 18. Schacter DL, Addis DR, Buckner RL. Remembering the past to imagine the future: the prospective brain. Nature Reviews Neuroscience. 2007;8:657–661. doi: 10.1038/nrn2213.

- 19. Tolman EC. The determiners of behavior at a choice point. Psychological Review. 1938;45:1–41.

- 20. Gallistel CR, Fairhurst S, Balsam P. The learning curve: Implications of a quantitative analysis. Proceedings of the National Academy of Sciences, USA. 2004;101:13124–13131. doi: 10.1073/pnas.0404965101.

- 21. Hu D, Amsel A. A simple test of the vicarious trial-and-error hypothesis of hippocampal function. PNAS. 1995;92:5506–5509. doi: 10.1073/pnas.92.12.5506.

- 22. Hu D, Xu X, Gonzalez-Lima F. Vicarious trial-and-error behavior and hippocampal cytochrome oxidase activity during Y-maze discrimination learning in the rat. International Journal of Neuroscience. 2006;116:265–280. doi: 10.1080/00207450500403108.

- 23••. Hassabis D, Kumaran D, Vann SD, Maguire EA. Patients with hippocampal amnesia cannot imagine new experiences. PNAS. 2007;104:1726–1731. doi: 10.1073/pnas.0610561104. In this study, patients with bilateral damage to the hippocampus displayed deficits in constructing newly imagined experiences. The authors suggest that constructing imagined experiences, like episodic memory, depends on hippocampal function.

- 24. Nadel L, Eichenbaum H. Introduction to the special issue on place cells. Hippocampus. 1999;9:341–345. doi: 10.1002/(SICI)1098-1063(1999)9:4<341::AID-HIPO1>3.0.CO;2-B.

- 25. Mizumori SJY. Hippocampal place fields: a neural code for episodic memory? Hippocampus. 2006;16:685–690. doi: 10.1002/hipo.20209.

- 26. O’Keefe J, Speakman A. Single unit activity in the rat hippocampus during a spatial memory task. Experimental Brain Research. 1987;68:1–27. doi: 10.1007/BF00255230.

- 27. Lenck-Santini PP, Muller RU, Save E, Poucet B. Relationships between place cell firing fields and navigational decisions by rats. Journal of Neuroscience. 2002;22:9035–9047. doi: 10.1523/JNEUROSCI.22-20-09035.2002.

- 28. Rosenzweig ES, Barnes CA. Impact of aging on hippocampal function: plasticity, network dynamics, and cognition. Progress in Neurobiology. 2003;69:143–179. doi: 10.1016/s0301-0082(02)00126-0.

- 29••. Johnson A, Redish AD. Neural ensembles in CA3 transiently encode paths forward of the animal at a decision point. Journal of Neuroscience. 2007. doi: 10.1523/JNEUROSCI.3761-07.2007. Spatial representations were decoded from the activity of simultaneously recorded neural ensembles within the CA3 region of the hippocampus. At decision points, decoded representations encoded non-local positions forward of the animal into each arm. Forward-shifted neural representations provide a potential mechanism for consideration of future possibilities.

- 30. Schoenbaum G, Roesch M, Stalnaker TA. Orbitofrontal cortex, decision making, and drug addiction. Trends in Neurosciences. 2006. doi: 10.1016/j.tins.2005.12.006.

- 31. Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- 32. Kalivas PW, Volkow ND. The neural basis of addiction: A pathology of motivation and choice. Am J Psychiatry. 2005;162:1403–1413. doi: 10.1176/appi.ajp.162.8.1403.

- 33. Lavoie AM, Mizumori SJY. Spatial-, movement- and reward-sensitive discharge by medial ventral striatum neurons in rats. Brain Research. 1994;638:157–168. doi: 10.1016/0006-8993(94)90645-9.

- 34. O’Doherty J, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable Roles of Ventral and Dorsal Striatum in Instrumental Conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- 35•. Yin HH, Knowlton BJ. The role of the basal ganglia in habit formation. Nature Reviews Neuroscience. 2006;7:464–476. doi: 10.1038/nrn1919. This review highlights the differential contributions of dorsolateral and dorsomedial striatal subregions to the classic place-versus-response plus-maze task.

- 36. DeCoteau WE, Thorn C, Gibson DJ, Courtemanche R, Mitra P, Kubota Y, Graybiel AM. Learning-related coordination of striatal and hippocampal theta rhythms during acquisition of a procedural maze task. PNAS. 2007;104:5644–5649. doi: 10.1073/pnas.0700818104.

- 37. Martin PD, Ono T. Effects of reward anticipation, reward presentation, and spatial parameters on the firing of single neurons recorded in the subiculum and nucleus accumbens of freely moving rats. Behavioural Brain Research. 2000;116:23–38. doi: 10.1016/s0166-4328(00)00249-7.

- 38. Pennartz CMA, Lee E, Verheul J, Lipa P, Barnes CA, McNaughton BL. The ventral striatum in off-line processing: Ensemble reactivation during sleep and modulation by hippocampal ripples. Journal of Neuroscience. 2004;24:6446–6456. doi: 10.1523/JNEUROSCI.0575-04.2004.

- 39. Ramus SJ, Davis JB, Donahue RJ, Discenza CB, Waite AA. Interactions between the orbitofrontal cortex and hippocampal memory system during the storage of long-term memory. Annals of the New York Academy of Sciences. In press. doi: 10.1196/annals.1401.038.

- 40. Schultz W, Apicella P, Scarnati E, Ljungberg T. Neuronal activity in monkey ventral striatum related to the expectation of reward. Journal of Neuroscience. 1992;12:4595–4610. doi: 10.1523/JNEUROSCI.12-12-04595.1992.

- 41. Yun IA, Wakabayashi KT, Fields HL, Nicola SM. The ventral tegmental area is required for the behavioral and nucleus accumbens neuronal firing responses to incentive cues. Journal of Neuroscience. 2004;24:2923–2933. doi: 10.1523/JNEUROSCI.5282-03.2004.

- 42. van Duuren E, Escámez FA, Joosten RN, Visser R, Mulder AB, Pennartz CM. Neural coding of reward magnitude in the orbitofrontal cortex of the rat during a five-odor olfactory discrimination task. Learning and Memory. 2007;14:446–456. doi: 10.1101/lm.546207.

- 43. Schoenbaum G, Roesch M. Orbitofrontal cortex, associative learning, and expectancies. Neuron. 2005;47:633–636. doi: 10.1016/j.neuron.2005.07.018.

- 44. Graybiel AM. The basal ganglia and chunking of action repertoires. Neurobiology of Learning and Memory. 1998;70:119–136. doi: 10.1006/nlme.1998.3843.

- 45. Kesner RP, Bolland BL, Dakis M. Memory for spatial locations, motor responses, and objects: Triple dissociation among the hippocampus, caudate nucleus, and extrastriate visual cortex. Experimental Brain Research. 1993;93:462–470. doi: 10.1007/BF00229361.

- 46. McDonald RJ, White NM. Parallel information processing in the water maze: Evidence for independent memory systems involving dorsal striatum and hippocampus. Behavioral and Neural Biology. 1994;61:260–270. doi: 10.1016/s0163-1047(05)80009-3.

- 47. Gold P. Coordination of multiple memory systems. Neurobiology of Learning and Memory. 2004;82:230–242. doi: 10.1016/j.nlm.2004.07.003.

- 48. Jog MS, Kubota Y, Connolly CI, Hillegaart V, Graybiel AM. Building neural representations of habits. Science. 1999;286:1745–1749. doi: 10.1126/science.286.5445.1745.

- 49. Schmitzer-Torbert NC, Redish AD. Neuronal activity in the rodent dorsal striatum in sequential navigation: Separation of spatial and reward responses on the multiple-T task. Journal of Neurophysiology. 2004;91:2259–2272. doi: 10.1152/jn.00687.2003.

- 50•. Barnes TD, Kubota Y, Hu D, Jin DZ, Graybiel AM. Activity of striatal neurons reflects dynamic encoding and recoding of procedural memories. Nature. 2005;437:1158–1161. doi: 10.1038/nature04053. A clear illustration of slowly developing dorsolateral striatal firing patterns thought to support habit learning.

- 51•. Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science. 2005;310:1337–1340. doi: 10.1126/science.1115270. This study shows that neurons in the primate caudate and putamen encode the value of actions, consistent with cache-based reinforcement learning models.

- 52.Redish AD, Jensen S, Johnson A, Kurth-Nelson Z. Reconciling reinforcement learning models with behavioral extinction and renewal: Implications for addiction, relapse, and problem gambling. Psychological Review. 2007;114:784–805. doi: 10.1037/0033-295X.114.3.784. [DOI] [PubMed] [Google Scholar]

- 53. Devan BD, White NM. Parallel information processing in the dorsal striatum: Relation to hippocampal function. Journal of Neuroscience. 1999;19:2789–2798. doi: 10.1523/JNEUROSCI.19-07-02789.1999.

- 54. Yin HH, Knowlton BJ. Contributions of striatal subregions to place and response learning. Learn Mem. 2004;11:459–463. doi: 10.1101/lm.81004.

- 55. Ragozzino ME, Jih J, Tzavos A. Involvement of the dorsomedial striatum in behavioral flexibility: role of muscarinic cholinergic receptors. Brain Research. 2002;953:205–214. doi: 10.1016/s0006-8993(02)03287-0.

- 56. Yin HH, Knowlton BJ, Balleine BW. Blockade of NMDA receptors in the dorsomedial striatum prevents action-outcome learning in instrumental conditioning. European Journal of Neuroscience. 2005;22:505–512. doi: 10.1111/j.1460-9568.2005.04219.x.

- 57. Yin HH, Ostlund SB, Knowlton BJ, Balleine BW. The role of the dorsomedial striatum in instrumental conditioning. European Journal of Neuroscience. 2005;22:513–523. doi: 10.1111/j.1460-9568.2005.04218.x.

- 58. Balleine BW, Dickinson A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37:407–419. doi: 10.1016/s0028-3908(98)00033-1.

- 59. Adams S, Kesner RP, Ragozzino ME. Role of the medial and lateral caudate-putamen in mediating an auditory conditional response association. Neurobiology of Learning and Memory. 2001;76:106–116. doi: 10.1006/nlme.2000.3989.

- 60. Zahm DS, Brog JS. On the significance of subterritories in the “accumbens” part of the rat ventral striatum. Neuroscience. 1992;50:751–767. doi: 10.1016/0306-4522(92)90202-d.

- 61. Pennartz CMA, Groenewegen HJ, Lopes da Silva FH. The nucleus accumbens as a complex of functionally distinct neuronal ensembles: An integration of behavioural, electrophysiological, and anatomical data. Progress in Neurobiology. 1994;42:719–761. doi: 10.1016/0301-0082(94)90025-6.

- 62. Baldo BA, Kelley AE. Discrete neurochemical coding of distinguishable motivational processes: insights from nucleus accumbens control of feeding. Psychopharmacology. 2007;191:439–459. doi: 10.1007/s00213-007-0741-z.

- 63. Corbit LH, Muir JL, Balleine BW. The role of the nucleus accumbens in instrumental conditioning: Evidence of a functional dissociation between accumbens core and shell. J Neurosci. 2001;21:3251–3260. doi: 10.1523/JNEUROSCI.21-09-03251.2001.

- 64. Kalivas PW, Volkow N, Seamans J. Unmanageable motivation in addiction: A pathology in prefrontal-accumbens glutamate transmission. Neuron. 2005;45:647–650. doi: 10.1016/j.neuron.2005.02.005.

- 65. Miyazaki K, Mogi E, Araki N, Matsumoto G. Reward-quality dependent anticipation in rat nucleus accumbens. NeuroReport. 1998;9:3943–3948. doi: 10.1097/00001756-199812010-00032.

- 66. German PW, Fields HL. Rat nucleus accumbens neurons persistently encode locations associated with morphine reward. J Neurophysiol. 2007;97:2094–2106. doi: 10.1152/jn.00304.2006.

- 67. Mogenson GJ, Jones DL, Yim CY. From motivation to action: Functional interface between the limbic system and the motor system. Progress in Neurobiology. 1980;14:69–97. doi: 10.1016/0301-0082(80)90018-0.

Supplementary Materials

Vicarious trial and error entails behaviorally identifiable active processes. The video shows four example trials from a one-T loop task. On the first example trial, the rat looks left and then goes right. On the second example trial, the rat looks right and then goes left. On the third example trial, the rat looks left, starts right, but then goes left. Of particular note is the fourth example trial, in which the rat looks right before starting the journey down the central track and then does not pause at the actual choice point, suggesting that in this last example the decision may have been made before the journey down the central track.

On this task, reward was delivered at feeders on either the left or right return rails under an all-Left, all-Right, or alternating schedule. The schedule was selected at random each day and switched to a new schedule at a random time approximately halfway through the 30-minute session.

Trials are selected from a single session, and are in order, but are not consecutive.
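The daily reward contingency described above can be sketched as a small simulation. This is a hypothetical illustration, not the authors' task-control code: the function names, the ±3-minute window around the session midpoint used for the switch time, the reading of "alternating" as "reward the side opposite the previous lap's choice", and the choice to reward the first lap under that schedule are all assumptions.

```python
import random
from typing import Optional, Tuple

SCHEDULES = ("all-Left", "all-Right", "alternating")
SESSION_MINUTES = 30.0


def draw_session_schedule(rng: random.Random,
                          jitter_minutes: float = 3.0) -> Tuple[str, str, float]:
    """Pick the opening schedule, a different second schedule, and a switch time.

    Assumption: the switch time is uniform within +/- jitter_minutes of the
    session midpoint; the source says only "approximately halfway".
    """
    first = rng.choice(SCHEDULES)
    # The schedule switches to a *new* one, so exclude the opening schedule.
    second = rng.choice([s for s in SCHEDULES if s != first])
    midpoint = SESSION_MINUTES / 2
    switch_at = rng.uniform(midpoint - jitter_minutes, midpoint + jitter_minutes)
    return first, second, switch_at


def active_schedule(t_minutes: float, first: str, second: str,
                    switch_at: float) -> str:
    """Return the schedule in force at time t_minutes into the session."""
    return first if t_minutes < switch_at else second


def is_rewarded(schedule: str, choice: str, previous_choice: Optional[str]) -> bool:
    """Return True if a lap ending on `choice` ('L' or 'R') reaches a baited feeder."""
    if schedule == "all-Left":
        return choice == "L"
    if schedule == "all-Right":
        return choice == "R"
    # Assumed meaning of "alternating": reward the side opposite the previous
    # lap's choice; the first lap (no previous choice) is treated as rewarded.
    return previous_choice is None or choice != previous_choice


if __name__ == "__main__":
    rng = random.Random(0)
    first, second, switch_at = draw_session_schedule(rng)
    print(first, "->", second, f"at {switch_at:.1f} min")
```

Drawing the second schedule from the two remaining options enforces the "new schedule" constraint directly rather than by rejection sampling.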