Abstract

Background

The framework of a just culture ensures balanced accountability for both individuals and the organization responsible for designing and improving systems in the workplace. Engineering principles and human factors analysis influence the design of these systems so they are safe and reliable.

Methods

Approaches for improving patient safety introduced here are (1) analysis of error, (2) specific tools to enhance safety, and (3) outcome engineering.

Conclusion

The just culture is a learning culture that is constantly improving and oriented toward patient safety.

Keywords: Behavior, medical errors, patient safety

INTRODUCTION

People make errors. Errors can cause accidents. In healthcare, errors and accidents result in morbidity and adverse outcomes and sometimes in mortality.

One organizational approach has been to seek out errors and identify the responsible individual. Individual punishment follows. This punitive approach does not solve the problem. People function within systems designed by an organization. An individual may be at fault, but frequently the system is also at fault. Punishing people without changing the system only perpetuates the problem rather than solving it.

A patient care system is obligated to collect productive investigative data that can be analyzed and acted upon to improve patient safety. This process is not possible unless members of the organization remain vigilant and mindful and maintain continuous surveillance. Similarly, people within the organization must believe that they are obligated to report errors. However, medical institutions cannot afford a blame-free culture: Some errors do warrant disciplinary action. Finding a balance between the extremes of punishment and blamelessness is the goal of developing a just culture.1

JUST CULTURE: CONCEPT AND PHILOSOPHY

A just culture balances the need for an open and honest reporting environment with the goal of a quality learning environment and culture. While the organization has a duty and responsibility to employees (and ultimately to patients), all employees are held responsible for the quality of their choices. Just culture requires a change in focus from errors and outcomes to system design and management of the behavioral choices of all employees.2

Consider the following situations:

- Two nurses select the (same) wrong vial of intravenous medication from the dispensing system. One nurse administers the drug, causing cardiac arrest. The other nurse realizes the switch when drawing the solution from the vial into the syringe at the bedside. How do we approach the nurses and investigate the situation?

- The attending physician tells a resident physician to obtain a specific blood test. The resident forgets. Fearing the wrath of the supervising physician, the resident reports that the result is normal. How do we deal with this breach?

- A surgical team does not perform a surgical time out on the grounds that no adverse events have occurred in the past. How do we handle this violation?

- The night nurse supervisor reports to a medical director that the lead respiratory therapist was in the hospital at 4:00 am with alcohol on his breath. At a later date, the physician confronts the employee who vehemently denies alcohol abuse. Should the matter be dropped?

In only one of these scenarios does an adverse event occur, yet a just culture, with its insistence on a value-based culture and shared accountability, demands that all of these situations be addressed. However, individual practitioners should not be held accountable for mistakes made in a system they cannot control.3

In the first example, further investigation showed that the 2 vials of entirely different medications looked alike in shape, size, color, and print. This accident waiting to happen did happen to the first nurse and her patient. Human error was involved, but this nurse should be consoled and supported rather than punished.

The resident physician falsified patient data, which cannot be condoned and must be addressed. Honest disclosure without fear of retribution is an important characteristic of a just culture.

The surgical team cannot function outside of the value-based principles designed by the organization. Although this surgical team has never been involved in an adverse event, one may occur in the future.

As for the respiratory therapist, in a just culture we are concerned for the safety of our patients and we are concerned for and care about each other. Further nonpunitive investigation is necessary.

These examples address an aspect of just culture that goes beyond ensuring that employees feel free to report errors. Highly reliable organizations and industries foster mindfulness in their workers. Weick and Sutcliffe4 describe mindfulness in terms of 5 components:

1. A constant concern about the possibility of failure

2. Deference to expertise regardless of rank or status

3. Ability to adapt when the unexpected occurs

4. Ability to concentrate on a task while having a sense of the big picture

5. Ability to alter and flatten the hierarchy to fit a specific situation

Mindfulness throughout an organization considers, but moves beyond, events and occurrences. Everyone in the organization is continually learning, adjusting, and redesigning systems for safety and managing behavioral choices.

INDUSTRIES OUTSIDE OF HEALTHCARE

While the concepts of developing a just culture and supporting team function may be new to healthcare (spurred by publication of Errors in Medicine in 2000), the just culture environment has been embedded in other industries for many years.5 The industries of aviation, train transportation, and nuclear power have been accepted as highly reliable and safe.6 For aviation, frequently compared to healthcare, these principles and their foundation span 45 years.7 Nonetheless, within these just culture industries are examples of errors, failures, and accidents that are insightful and address human behavior in complex systems.8

Chatsworth Train Collision, 2008

The Chatsworth train collision occurred on the afternoon of Friday, September 12, 2008, at the beginning of the evening commute in a high-density travel corridor.9 This mass casualty accident brought a massive emergency response by the city and county of Los Angeles, taxing resources to the breaking point. Twenty-five people died, and many survivors were hospitalized for an extended period. The Metrolink train company was exposed to more than $200 million in liability judgments.

Investigations revealed that the train engineer did not obey a signal to not enter a single-track segment (designated as such because of oncoming train traffic). The Metrolink passenger train and a freight train were headed toward one another, both moving at a speed of 40 miles per hour. The engineer of the freight train engaged his air brake 2 seconds before impact, while the engineer of the passenger train did not engage his brake. Further investigation revealed that the engineer in the commuter train had a habit of text messaging while operating the train and had been warned about this policy violation. Nevertheless, his cell phone history (delivered under subpoena) showed 2 text messages sent shortly before impact. A spokeswoman for the train company admitted the strong likelihood of operator error and was chastised by the firm as a result. She resigned.

U.S. Airways Flight 1549, 2009

Flight 1549, an Airbus A-320, departed LaGuardia Airport in New York City bound for Seattle-Tacoma International Airport with a stopover at the Charlotte-Douglas International Airport. The aircraft carried 150 passengers and a flight crew of 5. Three minutes into the flight, while still on the initial climb out of New York, the airplane encountered a flock of Canada geese. A multiple bird strike with a total loss of power in both engines is a highly improbable occurrence, but this event is exactly what occurred.10 Within seconds, the crew determined that the plane would be unable to reach an airfield; the captain turned south along the Hudson River in a glide mode and ditched the aircraft in the river near the U.S.S. Intrepid Museum within 3 minutes of losing power. All 155 persons aboard the aircraft were rescued while the aircraft was still sinking, and only minor injuries resulted. The accident investigation revealed crew management at its finest, with the pilot communicating and the copilot audibly reading aloud procedures to ditch the aircraft. Captain Sullenberger and his crew were widely acclaimed for their performance under pressure. A former Air Force pilot with a graduate degree from Purdue University in human factors, the captain asserted that his highest duty and obligation have been to safety. A pilot colleague commented, “If an unlikely tragedy like this had to occur, I can think of no other pilot I would pick to handle it other than Sully!”

The Chernobyl Accident, 1986

The Chernobyl accident in Ukraine is the only accident in the history of nuclear power that resulted in fatalities from radiation.11 An explosion and fires released the contents of the reactor core into the atmosphere, and radioactive material moved downwind. The 2 plant operators died immediately; another 28 people died of acute radiation poisoning. The disaster provides insight into the serious consequences of ignoring safety issues. Investigation after the event revealed a flawed reactor design compounded by inadequately trained personnel operating the facility. Twenty years later, the only resulting public health issue is an increased incidence of thyroid cancer.

Applications in Healthcare

These anecdotes and exhaustive investigational material from other industries indicate several points that apply to the healthcare environment.

1. As in aviation, aspects of medical care (anesthesiology, surgery, emergency medicine, intensive care medicine) are event driven and dynamic, complex and tightly coupled, and uncertain and risky.8

2. Written checklists help prevent crises.

3. Established written procedures are vital in crises.

4. Training in decisionmaking and crew resource management is valuable.

5. Systematic drills and practice using simulation technology address the ability to handle crisis situations.

ANALYSIS OF ERROR

In a landmark publication, Reason presented a detailed analysis of human error.12 Reason introduced his text by referring to the 1928 studies of Spearman but asserted that the decade prior to the publication of his book was characterized by public concern about the terrible cost of human error. He lists the Tenerife runway collision in 1977, the Three Mile Island crisis in 1979, the Bhopal methyl isocyanate disaster in 1984, the Challenger and Chernobyl explosions of 1986, the capsize of the Herald of Free Enterprise, the King's Cross tube station fire in 1987, and the Piper Alpha oil platform explosion in 1988 as causes for a collective impetus to address error. Furthermore, this decade made clear that the nature and scale of such tragedies impacted wide geographic areas and generations of humans.

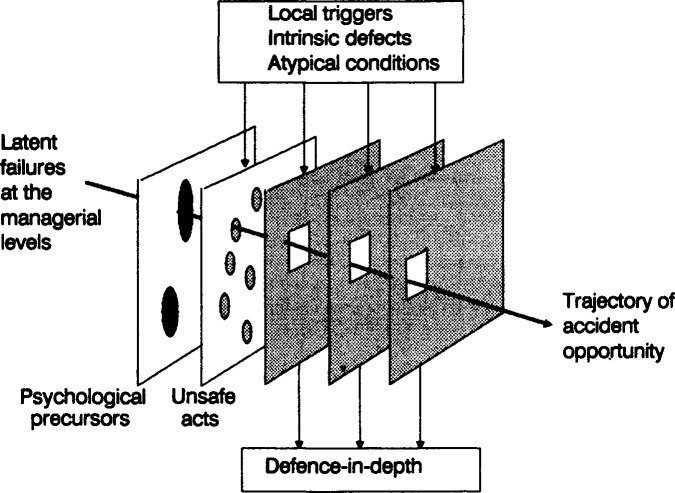

Perhaps the most significant contribution of Reason's work was his development of a model for the dynamics of accident causation, sometimes referred to as the Swiss cheese model (Figure 1). The author identified what he describes as latent failures at the managerial level before the possibility for human error is even involved. As described by Reason, latent errors are those

Figure 1.

Reason's model of accident causation. Accident opportunity and possible adverse outcomes stem from a combination of latent failures, psychological precursors, event triggers, and failure of the system's defenses. (From Reason12 with permission.)

… whose adverse consequences may lie dormant within the system for a long time, only becoming evident when they combine with other factors to breach the system's defences … Latent errors … are most likely to be spawned by those whose activities are removed in both time and space from the direct control interface: designers, high-level decision makers, construction workers, managers and maintenance personnel.

Latent errors exist in complex systems. Reason uses a medical metaphor, describing them as resident pathogens, much like microorganisms, that can exist in an organism only to emerge and proliferate when defenses are inadequate.

Reason's model of accident causation begins with latent failures, and the Swiss cheese plates represent psychological precursors and unsafe acts, local triggers, and failures of other defenses. The trajectory of error opportunity results from the alignment of the holes in the slices of Swiss cheese.
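As a rough numerical illustration of why aligned holes are rare but still possible, the sketch below multiplies hypothetical per-layer probabilities that a hole is present when a hazard arrives. The layer names follow the description above; the probabilities, and the assumption that the layers fail independently of one another, are illustrative only and are not taken from Reason.

```python
from math import prod


def breach_probability(hole_probabilities):
    """Chance that a hazard passes every layer, ASSUMING independent layers."""
    return prod(hole_probabilities)


# Hypothetical values chosen only for illustration (not from Reason).
layers = {
    "latent failures": 0.10,
    "psychological precursors": 0.20,
    "unsafe acts": 0.10,
    "system defenses": 0.05,
}

# Every slice in place: the hazard must find a hole in all four layers.
all_layers = breach_probability(layers.values())

# Same system with the final defensive layer removed.
without_defenses = breach_probability(
    p for name, p in layers.items() if name != "system defenses"
)

print(f"all layers present:     {all_layers:.6f}")
print(f"final defenses removed: {without_defenses:.6f}")
```

Under these assumptions, removing a single layer raises the chance of a breach by the inverse of that layer's hole probability, which is one way to read the emphasis on maintaining every defense.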

Gaba and colleagues13 adapted this model to the practice of anesthesiology. Some of the latent failures in this specialty include machine and process design flaws, manufacturing defects, maintenance failure, underlying disease, production pressure, fatigue, and environmental factors. With a triggering event and the failure of preventive measures (preoperative evaluation, checklists, etc), an event occurs. The clinician must respond with dynamic decisionmaking to detect and correct the critical incident before it results in an adverse outcome.

THE MILITARY AND THE AGENCY FOR HEALTHCARE RESEARCH AND QUALITY

Safety—fostered by teamwork, leadership, and communication—has been a major focus of military efforts. Historical events illustrate this focus.

Lord Nelson

Admiral Lord Nelson was renowned as a leader, military strategist, and communicator. His communication skills extended beyond interpersonal relationships. Communicating from his command ship through a flag system, he was able to instruct his entire fleet to come about as one to achieve unorthodox strategies. With fewer and smaller ships, but through precision ship positioning and gunnery training, he defeated the combined fleets of Spain and France at Trafalgar.14 Lord Nelson understood leadership and communication in a way that applies to medicine. His communication to his sailors was safe, timely, effective, and always oriented to the task at hand.

World War II

Officers on U.S. Navy warships during World War II had an established means of verbal communication during maneuvers:

CAPTAIN: Come right to 190, all ahead one-third.

BOSUN: Aye sir, right to 190, all ahead one-third.

The call out, check back, and other scripting techniques ensured that orders were received, confirmed, understood, and executed.

Military Aviation

Military aviation develops effective team leaders on the ground, at sea, and in the air. Whether on land or at sea, pilots assemble in a ready room to review the mission, understand objectives, and unify the team. Events are described, recorded, visually confirmed, and processed. After flying the mission, a second assembly of the pilots focuses on a thorough debriefing. What went well? What went wrong? Were objectives accomplished? What areas can be improved? What is the next step?

Coast Guard

The U.S. Coast Guard Academy has a unique method of emphasizing and developing teamwork and team leaders. All cadets serve aboard the Eagle,15 a square-rigger sailing ship with an intricate system of masts, stays, halyards, and sheets. Effective and coordinated teamwork is essential when underway, and the cadets learn not only seamanship but “team-man ship” on a 30- to 60-day cruise. The training emphasizes safety, as expressed in the published command philosophy of a recent captain of the Eagle15:

Safety. Above all, Safety. We learn safe practices and procedures. We follow them on board and ashore. We watch our shipmates to ensure they do too. We immediately speak up when we think we see an unsafe practice developing. We listen. We care about the safety of our shipmates and ourselves. If any situation tests our commitment to safety, safety comes first.

Strategies and Tools to Enhance Performance and Patient Safety

These same leadership and communication skills can be successfully applied to healthcare. The U.S. Congress commissioned the Department of Defense and the Agency for Healthcare Research and Quality (AHRQ) to translate the principles of a just culture into a format applicable to the delivery of healthcare.16 The result of this collaboration was the TeamSTEPPS (Strategies and Tools to Enhance Performance and Patient Safety) approach to patient safety. Consistent with the principles demonstrated in the above military examples, TeamSTEPPS focuses on leadership and communication skills (with scripting), mutual support of team members, and situation monitoring. It identifies barriers, suggests observation tools, and focuses on outcomes. TeamSTEPPS also helps participants assess whether the safety culture is being sustained.

The goal of TeamSTEPPS is the establishment, nurturing, and preservation of a just culture in which “we immediately speak up when we think we see an unsafe practice developing.”17

CREATING A JUST CULTURE

Functional issues must be addressed in a just culture.18,19 While encouraging personnel to report mistakes, identify the potential for error, and even stop work in acute situations, a just culture cannot be a blame-free enterprise. Competency is one issue, but even competent professionals make mistakes. In complex, dynamic, and inherently risky environments, society has an expectation for human performance.

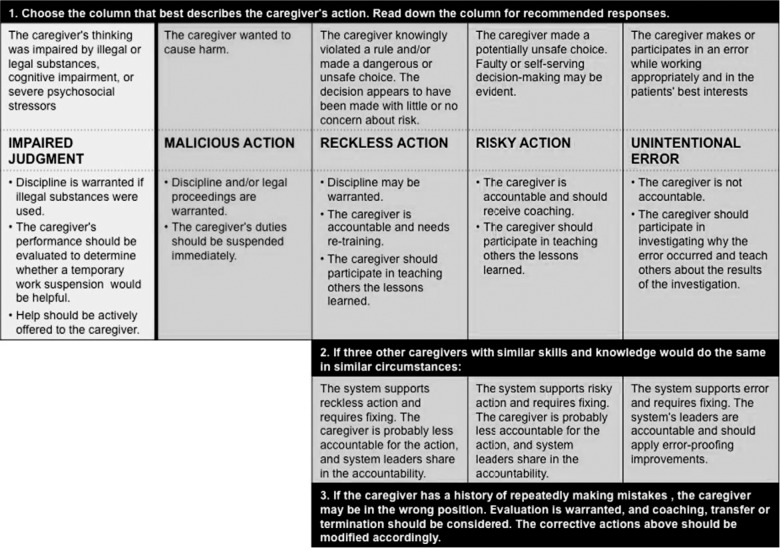

After identifying an adverse event or near miss, the investigation procedure technique becomes paramount (Figure 2). Two approaches have been successfully implemented in hospitals.

Figure 2.

Caregivers' actions and recommended responses for analyzing risks constructed from outcome engineering principles and analysis of human factors. (From Leonard and Frankel19 with permission.)

Outcome Engineering Algorithm

Marx2 coined the term outcome engineering, which is applicable to healthcare and other industries. His algorithm lists 3 basic duties:

1. Duty to produce an outcome

2. Duty to follow a procedural rule

3. Duty to avoid unjustifiable risk

Consequently, with breach of duty, the mechanism of the breach is categorized into one of the following:

1. Human error

2. At-risk behavior (a conscious drift from safe behavior)

3. Reckless behavior (conscious of conduct and risk)

Reason12 highlights the notion of intent when considering the nature of error. Slips (eg, Freudian slips) lack intention; that is, the actions are not carried out as intended or planned. Lapses are missed actions or omissions, with the perpetrator often conscious of the action and believing that it will not lead to harm.

Mistakes involve error, ie, faulty planning or intention; the individual involved believes the action to be correct. Corrective action and coaching, not punishment, are indicated for improving the system.

At-risk behavior includes both intention and the violation of rules, policies, and procedures and makes a system vulnerable, increasing risk. The individual should be coached to understand the risks resulting from his or her action.

Reckless behavior may be grounds for disciplinary action, and civil or criminal charges may be filed against the individual. Punishment, including termination, may be the appropriate consequence.

Repetitive patient safety problems must be addressed, whether caused by individual error or system weakness.20
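The categories and responses described above can be sketched as a small lookup. This is a minimal sketch and not Marx's published algorithm: the names `Behavior`, `classify`, and `recommended_response` are hypothetical, and reducing the classification to two yes/no questions (was the conduct a conscious choice, and was the risk consciously appreciated) is a simplifying assumption drawn from the parenthetical definitions in the list.

```python
from enum import Enum


class Behavior(Enum):
    HUMAN_ERROR = "human error"
    AT_RISK = "at-risk behavior"
    RECKLESS = "reckless behavior"


# Responses paraphrased from the text; the wording here is illustrative.
RESPONSES = {
    Behavior.HUMAN_ERROR: "corrective action and coaching, not punishment",
    Behavior.AT_RISK: "coach the individual to understand the risks",
    Behavior.RECKLESS: "consider disciplinary action, up to termination",
}


def classify(conscious_of_conduct: bool, conscious_of_risk: bool) -> Behavior:
    """Simplified two-question classification (an assumption, see lead-in)."""
    if not conscious_of_conduct:
        return Behavior.HUMAN_ERROR   # unintended slip, lapse, or mistake
    if not conscious_of_risk:
        return Behavior.AT_RISK       # conscious drift from safe behavior
    return Behavior.RECKLESS          # conscious of both conduct and risk


def recommended_response(behavior: Behavior) -> str:
    return RESPONSES[behavior]


# Example: the surgical team that knowingly skips the time out,
# believing that no harm will follow.
category = classify(conscious_of_conduct=True, conscious_of_risk=False)
print(category.value, "->", recommended_response(category))
```

A real investigation would weigh far more context than two booleans; the sketch only shows how the category, rather than the outcome, drives the response.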

United Kingdom National Health Service Algorithm

The National Health Service in the United Kingdom and the National Patient Safety Agency published an incident decision tree and a guide for its use.21 The algorithm on which the decision tree is based identifies the role of an individual and the given specific outcome. The decision tree has 4 main elements:

- The deliberate harm test: a conscious and deliberate breach of duty resulting in patient harm. The goal of the institution or system is to establish or refute this violation immediately as a first step.

- The physical/mental health test: a provider is impaired for any reason, including substance abuse. The impact of impairment or the patient outcome must be established.

- The foresight test: once the deliberate intent to harm and physical/mental health tests have been discounted, this analysis establishes whether protocols, policies, and procedures have been followed.

- The substitution test: this test asks the question, “Would another provider put in the same circumstances in the same systems environment make the same error?”
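The sequential nature of the 4 tests can be sketched as follows. This is a hedged illustration rather than the published decision tree: the `Incident` fields and the function name `first_flagged_test` are hypothetical, and stopping at the first test that raises a concern, as well as reading a clean pass through all 4 tests as pointing toward the system, are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Incident:
    deliberate_harm: bool      # conscious, deliberate breach of duty
    provider_impaired: bool    # impairment for any reason
    protocols_followed: bool   # protocols, policies, and procedures
    peer_would_err_too: bool   # answer to the substitution question


def first_flagged_test(incident: Incident) -> Optional[str]:
    """Apply the 4 tests in order; return the first that raises a concern."""
    if incident.deliberate_harm:
        return "deliberate harm"
    if incident.provider_impaired:
        return "physical/mental health"
    if not incident.protocols_followed:
        return "foresight"
    if not incident.peer_would_err_too:
        return "substitution"
    return None  # assumption: no individual concern, so examine the system


# Example: the look-alike vial scenario, where a second nurse
# selected the same wrong vial under the same conditions.
vial_mixup = Incident(
    deliberate_harm=False,
    provider_impaired=False,
    protocols_followed=True,
    peer_would_err_too=True,
)
print(first_flagged_test(vial_mixup))
```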

Combination of Models

Because both algorithms have merits, Leonard and Frankel19 schematically integrated them into a single format that involves a 3-step process. The first step analyzes the individual caregiver's actions via 5 measures: impaired judgment, malicious action, reckless action, risky action, and unintentional error. The second step determines if other caregivers with similar skills and knowledge would react the same way in similar circumstances. The final step is the important determination of whether the present system supports reckless or risky behavior and thus requires redesign.
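The 3 steps can be recorded in a simple structure, sketched here under stated assumptions. The 5 measures and the link between step 3 and redesign come from the description above; the class and field names are hypothetical, and the sketch records the findings only and does not reproduce the recommended responses shown in Figure 2.

```python
from dataclasses import dataclass
from enum import Enum


class CaregiverAction(Enum):
    IMPAIRED_JUDGMENT = "impaired judgment"
    MALICIOUS_ACTION = "malicious action"
    RECKLESS_ACTION = "reckless action"
    RISKY_ACTION = "risky action"
    UNINTENTIONAL_ERROR = "unintentional error"


@dataclass
class Review:
    action: CaregiverAction               # step 1: the caregiver's action
    peers_would_act_the_same: bool        # step 2: similar skills, similar setting
    system_supports_risky_behavior: bool  # step 3: reckless or risky behavior

    def requires_redesign(self) -> bool:
        # Per the text, a system that supports reckless or risky
        # behavior requires redesign.
        return self.system_supports_risky_behavior

    def summary(self) -> dict:
        return {
            "step 1: caregiver's action": self.action.value,
            "step 2: peers would act the same": self.peers_would_act_the_same,
            "step 3: system redesign required": self.requires_redesign(),
        }


review = Review(
    action=CaregiverAction.UNINTENTIONAL_ERROR,
    peers_would_act_the_same=True,
    system_supports_risky_behavior=True,
)
print(review.summary())
```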

CONCLUSION

A fair and just culture improves patient safety by empowering employees to proactively monitor the workplace and participate in safety efforts in the work environment. Improving patient safety reduces risk by its focus on managing human behavior (or helping others to manage their own behavior) and redesigning systems. In a just culture, employees are not only accountable for their actions and choices, but they are also accountable to each other, which may help some overcome the inherent resistance to dealing with impaired or incompetent colleagues.22

Secondary benefits of a just culture include the ability to develop a positive patient safety profile to respond to outside auditors such as The Joint Commission.23 When implemented, a just culture fosters innovation and cross-departmental communication. An example is the opportunity to revitalize the morbidity and mortality conference to cross specialty lines and develop a patient-centered focus.24

In a just culture, both the organization and its people are held accountable while focusing on risk, systems design, human behavior, and patient safety.

Safety. Above all, Safety.

Footnotes

The author has no financial or proprietary interest in the subject matter of this article.

This article meets the Accreditation Council for Graduate Medical Education and the American Board of Medical Specialties Maintenance of Certification competencies for Patient Care and Professionalism.

REFERENCES

- 1. Dekker S. Just Culture: Balancing Safety and Accountability. Burlington, VT: Ashgate Publishing; 2008.

- 2. Marx D. Patient Safety and the Just Culture: A Primer for Health Care Executives. New York, NY: Trustees of Columbia University; 2001.

- 3. Griffith KS. Column: the growth of a just culture. Jt Comm Perspect Patient Saf. 2009 Dec;9(12):8–9.

- 4. Weick KE, Sutcliffe KM. Managing the Unexpected: Assuring High Performance in an Age of Complexity. San Francisco, CA: Jossey Bass; 2001.

- 5. Leape LL. Errors in medicine. Clin Chim Acta. 2009 Jun;404(1):2–5. doi: 10.1016/j.cca.2009.03.020. Epub 2009 Mar 18.

- 6. Gaba DM. Structural and organizational issues in patient safety: a comparison of health care to other high hazard industries. Calif Manage Rev. Fall 2000;43(1):83–102.

- 7. Helmreich RL. On error management: lessons from aviation. BMJ. 2000 Mar 18;320(7237):781–785. doi: 10.1136/bmj.320.7237.781.

- 8. Woods DD. Coping with complexity: the psychology of human behavior in complex systems. In: Goodstein LP, Olsen SE, Anderson HB, editors. Tasks, Errors, Mental Models: A Festschrift to Celebrate the 60th Birthday of Professor Jens Rasmussen. London, England: Taylor and Francis; 1988. pp. 128–148.

- 9. National Transportation Safety Board. Collision of Metrolink Train 111 with Union Pacific Train LOF65–12, Chatsworth, California, September 12, 2008. Railroad Accident Report NTSB/RAR-10/01. 2010. http://www.ntsb.gov/doclib/reports/2010/RAR1001.pdf. Accessed November 30, 2012.

- 10. National Transportation Safety Board. Loss of Thrust in Both Engines After Encountering a Flock of Birds and Subsequent Ditching on the Hudson River, US Airways Flight 1549, Airbus A320-214, N106US, Weehawken, New Jersey, January 15, 2009. Aircraft Accident Report NTSB/AAR-10/03. 2010. http://www.ntsb.gov/doclib/reports/2010/aar1003.pdf. Accessed November 30, 2012.

- 11. World Nuclear Association. Chernobyl Accident 1986. 2012. http://www.world-nuclear.org/info/Safety-and-Security/Safety-of-Plants/Chernobyl-Accident. Accessed June 4, 2013.

- 12. Reason J. Human Error. New York, NY: Cambridge University Press; 1990.

- 13. Gaba DM, Fish KJ, Howard SK. Crisis Management in Anesthesiology. New York, NY: Churchill Livingstone; 1994.

- 14. Keegan J. The Price of Admiralty: The Evolution of Naval Warfare. Philadelphia, PA: Penguin Books; 1990.

- 15. Boysen PG. Integrating Quality: Linking Clinical and Educational Excellence. https://www.aamc.org/download/129632/data/boysen.pdf.pdf. Accessed November 30, 2012.

- 16. Kirch DG, Boysen PG. Changing the culture in medical education to teach patient safety. Health Aff (Millwood). 2010 Sep;29(9):1600–1604. doi: 10.1377/hlthaff.2010.0776.

- 17. Agency for Healthcare Research and Quality. TeamSTEPPS: National Implementation. http://teamstepps.ahrq.gov/about-2cl_3.htm. Accessed June 28, 2013.

- 18. Frankel AS, Leonard MW, Denham CR. Fair and just culture, team behavior, and leadership engagement: The tools to achieve high reliability. Health Serv Res. 2006 Aug;41(4 Pt 2):1690–1709. doi: 10.1111/j.1475-6773.2006.00572.x.

- 19. Leonard MW, Frankel A. The path to safe and reliable healthcare. Patient Educ Couns. 2010 Sep;80(3):288–292. doi: 10.1016/j.pec.2010.07.001. Epub 2010 Aug 4.

- 20. Dekker S. Patient Safety: A Human Factor Approach. Boca Raton, FL: CRC Press; 2011.

- 21. National Patient Safety Agency. Seven Steps to Patient Safety in General Practice. http://www.npsa.nhs.uk/EasySiteWeb/GatewayLink.aspx?alId=43743. Accessed June 28, 2013.

- 22. Wachter R. Personal Accountability in Healthcare: Searching for the Right Balance. The Health Foundation. May 2012. http://www.health.org.uk/public/cms/75/76/313/3426/Personal%20accountability%20in%20healthcare%20searching%20for%20the%20right%20balance%20thought%20paper.pdf?realName=Al5J91.pdf. Accessed June 28, 2013. doi: 10.1136/bmjqs-2012-001227.

- 23. The Joint Commission. Leadership Committed to Safety. http://www.jointcommission.org/assets/1/18/SEA_43.PDF. Accessed June 28, 2013.

- 24. Orlander JD, Barber TW, Fincke BG. The morbidity and mortality conference: the delicate nature of learning from error. Acad Med. 2002 Oct;77(10):1001–1006. doi: 10.1097/00001888-200210000-00011.