Abstract

Context

Rigorous outcome evaluation is essential to monitor progress toward achieving goals and objectives in comprehensive cancer control plans (CCCPs).

Objective

This report describes a systematic approach for an initial outcome evaluation of a CCCP.

Design

Using the Centers for Disease Control and Prevention evaluation framework, the evaluation focused on (1) organizing cancer plan objectives by anatomic site and risk factors, (2) rating each according to clarity and data availability, (3) evaluating clearly stated objectives with available outcome data, and (4) mapping allocation of implementation grants for local cancer control back to the CCCP objectives.

Setting

South Carolina.

Main Outcome Measures

Evaluation outcomes included (1) a detailed account of CCCP objectives by topic area, (2) a systematic rating of level of clarity and availability of data to measure CCCP objectives, (3) a systematic assessment of attainment of measurable objectives, and (4) a summary of how cancer control grant funds were allocated and mapped to CCCP objectives.

Results

A system was developed to evaluate the extent to which cancer plan objectives were measurable as written with data available for monitoring. Twenty-one of 64 objectives (33%) in South Carolina's CCCP were measurable as written with data available. Of the 21 clear and measurable objectives, 38% showed little or no progress, 38% were partially met, and 24% were met. Grant allocations were summarized across CCCP chapters, revealing that prevention and early detection were the most heavily funded CCCP areas.

Conclusions

This evaluation highlights a practical, rigorous approach for generating evidence required to monitor progress, enhance planning efforts, and recommend improvements to a CCCP.

Keywords: cancer control, cancer prevention, program evaluation, state cancer plan

The Centers for Disease Control and Prevention (CDC) partners with US states, tribes, and territories to develop and implement comprehensive cancer control plans (CCCPs).1 The goals, objectives, and strategies delineated in CCCPs represent the priorities for reducing each state's cancer burden,2 making CCCPs the central blueprint for each state's cancer control activities. Evaluation is recognized as essential to successful comprehensive cancer control,3–6 and a robust evaluation plan is needed to monitor progress toward each CCCP's goals, objectives, and strategies. In fact, demonstrating outcomes through evaluation is one of the 6 priorities of the CDC-funded National Comprehensive Cancer Control Program.

However, despite numerous reports of CCCP formative evaluations describing coalition models and highlighting successes,1–17 CCCP outcome evaluation has rarely been addressed. In a CDC study, a Performance Measures Worksheet was developed and pilot tested among 61 CCCPs to track performance in meeting CDC's goals for CCCPs.18 Grantees completed worksheets assessing stakeholder engagement, programmatic elements, funding, and policy outcomes. The results indicated that, on average, 64% of objectives were currently being addressed and only 66% of CCCPs included a written evaluation plan. Centers for Disease Control and Prevention investigators also developed the Cancer Plan Index to assess the coverage of 11 core CCCP components.19 Across CCCPs, the results indicated goals and objectives tended to be comprehensively described, but, in general, the evaluation plans of CCCPs were not well described and lacked funding.

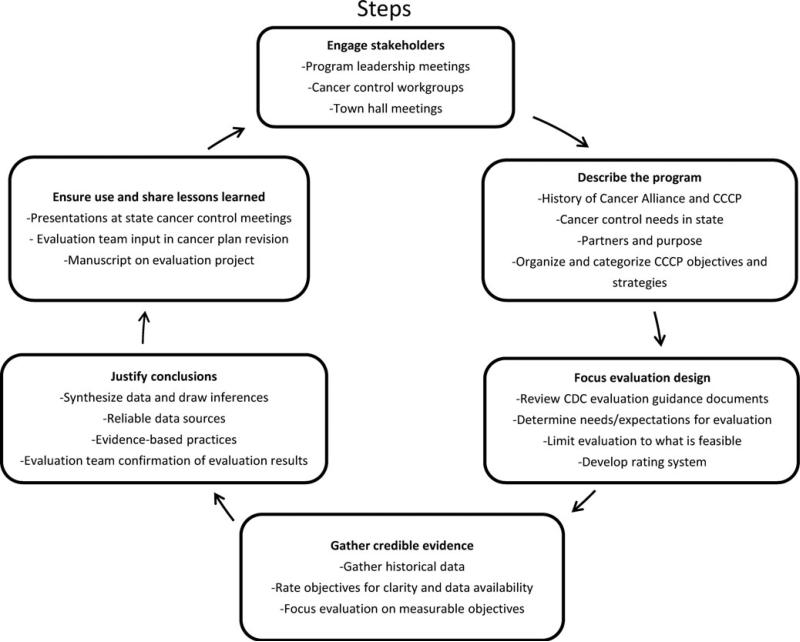

Building on these growing efforts in CCCP evaluation, the present report describes an initial process and outcome evaluation of South Carolina's CCCP, conducted to generate evidence for monitoring progress, enhancing planning efforts, and recommending improvements to the CCCP. The evaluation plan was guided by the CDC's Framework for Program Evaluation20 (Figure 1), a model well suited to evaluating CCCPs, which rely on a diverse group of stakeholders. This cyclical approach begins with engaging stakeholders, proceeds through establishing an evaluation design and gathering the evidence needed to measure results, and ends with ensuring that stakeholders use the evaluation results.

FIGURE 1.

Centers for Disease Control and Prevention Framework for Program Evaluation.

Methods

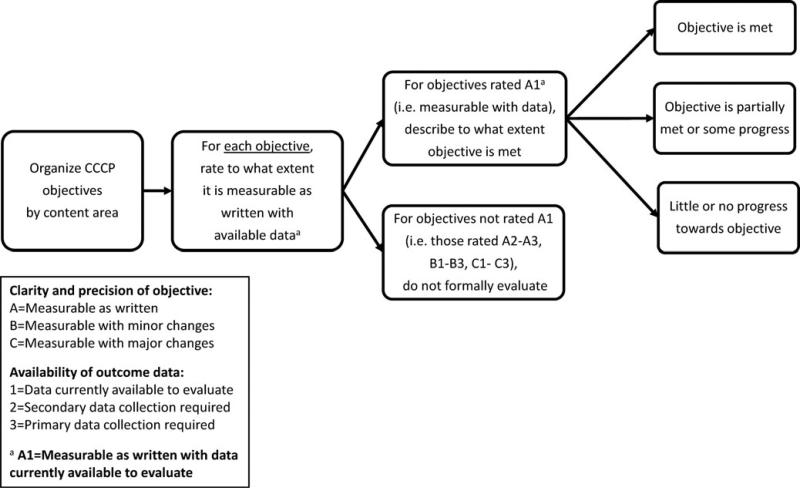

South Carolina's CCCP was first published in 2005.21 It had 8 chapters: 4 chapters related to primary, secondary, and tertiary prevention (Prevention, Early Detection, Patient Care, and Survivor and Family) and the remaining 4 related to crosscutting domains (Health Disparities, Advocacy and Policy, Research, and Genetics). In total, the CCCP had 5 goals, 64 objectives, and 203 strategies. The evaluation model was designed to measure a combination of processes and outcomes related to the quality of CCCP indicators and the extent to which CCCP objectives were achieved (Figure 2). The evaluation was designed to address the following specific aims: (1) to record cancer plan objectives and related strategies by anatomical site (eg, breast, colorectal) and risk factor (eg, tobacco, physical activity); (2) to rate each objective according to clarity and data availability; (3) to focus subsequent evaluation on clearly stated objectives with available outcome data; and (4) to map allocation of implementation grants for local cancer control back to the CCCP objectives.

FIGURE 2.

Process of Evaluation of CCCP Objectives.

Stakeholder engagement

The evaluation team was led by university-based researchers and included members from the state's cancer registry and cancer control program. The South Carolina Cancer Alliance (SCCA) is the organization charged with devising and implementing the plan; to ensure the evaluation plan was rooted in the reality of ongoing CCCP implementation activities, the evaluation team communicated with SCCA leadership, workgroups, and general membership throughout the 18-month evaluation process (July 1, 2009, to December 31, 2010). In the first 6 months, the evaluation team worked with SCCA leadership groups to ensure that the evaluation methodology was consistent with the organization's needs and expectations. In months 7 to 12, more than 100 key stakeholders with content area expertise and interest were contacted by phone or e-mail. In months 10 to 16, after it became clear that consensus within the organization's workgroups would be necessary to accurately describe SCCA activities and accomplishments, the evaluation team convened these workgroups for the sole purpose of collectively describing their progress toward the CCCP objectives. Input from broader statewide constituents was received at 4 town hall meetings and at 1 statewide SCCA meeting during months 12 to 18. New information obtained from stakeholders was recorded immediately in an evaluation-tracking database. Stakeholder feedback informed all aspects of the evaluation, including the needs and expectations for the evaluation, insight into activities stakeholders undertook to achieve CCCP objectives and the barriers and facilitators they encountered, the history of how the CCCP had evolved over time, and suggestions for the direction of the upcoming 5-year CCCP.

Organizing the fluid cancer plan

The initial evaluation task was to dissect the CCCP's goals, objectives, and strategies. A database constructed to consolidate key data was populated with information such as current/historical goals, objectives, and strategies; history of modifications; data sources for tracking indicators; benchmarks; outcomes; and key words. This database was the central repository for tracking evaluation findings. To account for objectives and strategies that were modified, added, or removed since the initial CCCP, a numeric classification system was developed to track each indicator's initial and current location in the CCCP. During this process, 2 of the 8 chapters were identified as outliers: (1) the Genetics chapter had never been directly addressed and (2) the Health Disparities chapter was primarily a compilation of objectives/strategies from other CCCP chapters, so its content overlapped with content elsewhere in the plan. These 2 chapters were thus excluded from subsequent evaluation activities. These initial evaluation steps of organizing the fluid cancer plan took place in the first 3 months of the evaluation process.
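The article does not publish its numeric classification scheme. As a minimal sketch, assuming a hypothetical dotted code such as "2.1" (chapter 2, objective 1) and a record layout of our own choosing, one way to keep each indicator's initial and current location together with its modification history is:

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Indicator:
    """One CCCP objective or strategy (hypothetical record layout)."""

    text: str
    initial_code: Optional[str]  # location in the original plan, e.g. "2.1"; None if added later
    current_code: Optional[str]  # location in the current plan; None if removed
    history: List[str] = field(default_factory=list)

    def relocate(self, new_code: str, reason: str) -> None:
        """Move the indicator to a new location, keeping an audit trail."""
        self.history.append(f"{self.current_code} -> {new_code}: {reason}")
        self.current_code = new_code

    def remove(self, reason: str) -> None:
        """Mark the indicator as dropped from the current plan."""
        self.history.append(f"{self.current_code} -> removed: {reason}")
        self.current_code = None

    @property
    def status(self) -> str:
        """Compare initial and current locations to summarize what happened."""
        if self.initial_code is None:
            return "added"
        if self.current_code is None:
            return "removed"
        if self.current_code != self.initial_code:
            return "relocated"
        return "unchanged"


# Usage with made-up codes and text
obj = Indicator("Hypothetical screening objective", initial_code="2.1", current_code="2.1")
obj.relocate("2.3", "renumbered after two objectives were inserted")
new = Indicator("Hypothetical objective added in a later revision", None, "4.5")
print(obj.status, new.status)  # relocated added
```

Keeping both codes on the same record, rather than overwriting the location, is what lets a later evaluator trace an indicator back to the original plan.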

Prioritizing the outcome evaluation by rating the evaluability of cancer plan indicators

Each objective was rated on 2 dimensions: the extent to which it (1) was measurable as written and (2) had available outcome data. This step took place in months 7 to 12 of the evaluation process. The first dimension measured the objective's clarity and precision. An “A,” “B,” or “C” rating referred to objectives that could be evaluated directly as written, with minor changes, or only with major revisions, respectively. Examples of “minor changes” were defining baseline and benchmark values or more precisely characterizing the target population. An example of a “major change” was splitting an objective that comprised multiple objectives into separate components. Objectives were also rated on the availability of outcome data. A “1,” “2,” or “3” rating meant outcome data were readily available, available with secondary data analyses, or would require new primary data collection, respectively.

These 2 separate ratings were combined into a single summary measure to jointly characterize the extent to which an indicator was clear/precise and had available outcome data to assess achievement. An “A1” rating meant an objective was clear and precise and had available data to evaluate progress. In contrast, a “C3” objective lacked both clarity/precision and available outcome data. Each objective was rated independently by 2 reviewers, with differences resolved by consensus.
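The two-dimensional rating and the two-reviewer consensus step can be sketched as follows. The objective IDs, the reviewers' ratings, and the helper names `composite` and `reconcile` are hypothetical; the sketch only shows how the letter and number combine into codes such as "A1" and how disagreements would be flagged for a consensus discussion.

```python
from typing import Dict, List, Tuple

CLARITY = ("A", "B", "C")  # as written / minor changes / major revisions
DATA = (1, 2, 3)           # readily available / secondary analysis / new primary data

Rating = Tuple[str, int]


def composite(clarity: str, data: int) -> str:
    """Combine the two ratings into one code such as 'A1' or 'C3'."""
    if clarity not in CLARITY or data not in DATA:
        raise ValueError(f"invalid rating: {clarity!r}, {data!r}")
    return f"{clarity}{data}"


def reconcile(r1: Dict[str, Rating], r2: Dict[str, Rating]):
    """Return agreed composite codes and the objectives needing a consensus discussion."""
    agreed: Dict[str, str] = {}
    to_discuss: List[str] = []
    for obj_id in sorted(set(r1) | set(r2)):
        if obj_id in r1 and r1.get(obj_id) == r2.get(obj_id):
            agreed[obj_id] = composite(*r1[obj_id])
        else:
            to_discuss.append(obj_id)  # missing or conflicting rating
    return agreed, to_discuss


# Hypothetical ratings from two independent reviewers
reviewer1 = {"obj1": ("A", 1), "obj2": ("B", 2), "obj3": ("A", 3)}
reviewer2 = {"obj1": ("A", 1), "obj2": ("C", 2), "obj3": ("A", 3)}

agreed, to_discuss = reconcile(reviewer1, reviewer2)
a1_objectives = [o for o, code in agreed.items() if code == "A1"]  # go on to outcome evaluation
print(agreed)         # {'obj1': 'A1', 'obj3': 'A3'}
print(to_discuss)     # ['obj2']
print(a1_objectives)  # ['obj1']
```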

The rating system was used to prioritize the objectives evaluated for outcomes. Because of resource constraints, outcome data were obtained and summarized solely for the indicators rated “A1,” namely, those that were clearly written and could be linked to available outcome data. Indicators that could be measured (ie, those rated A1) were then categorized as follows: “little or no progress,” no evidence of measurable progress to meet the objective; “partially met or some progress,” evidence of progress that could result in achievement of the objective; and “met,” the objective was accomplished.
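The article defines the 3 status categories qualitatively and does not state a numeric rule. The sketch below assumes one such rule, in which any movement from baseline toward the target counts as progress unless it falls at or below a hypothetical `min_change` threshold; the function name and the example values are illustrative only.

```python
def progress_status(baseline: float, target: float, observed: float,
                    min_change: float = 0.0) -> str:
    """Classify an A1 objective with an assumed numeric rule.

    min_change is a hypothetical threshold for "measurable" progress;
    the evaluation did not publish one.
    """
    if target == baseline:
        raise ValueError("target must differ from baseline")
    direction = 1 if target > baseline else -1  # objectives may aim up or down
    gain = (observed - baseline) * direction     # movement in the intended direction
    needed = (target - baseline) * direction     # always positive
    if gain >= needed:
        return "met"
    if gain > min_change:
        return "partially met or some progress"
    return "little or no progress"


# Hypothetical objectives: (baseline, target, observed)
print(progress_status(50, 60, 61))  # met
print(progress_status(50, 60, 55))  # partially met or some progress
print(progress_status(30, 20, 31))  # little or no progress (moved the wrong way)
```

In practice, a threshold tied to the sampling error of the data source would be a natural choice for `min_change`, but that too is an assumption.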

Mapping allocation of grant funding back to CCCP indicators

In South Carolina, a primary method of CCCP implementation is via allocation of grant funds for local projects. These funds are allocated by the South Carolina Department of Health and Environmental Control (SC DHEC) Cancer Control Program in 2 separate funding streams. One funding stream runs through the SCCA, which awards competitive grants to SCCA partners for projects that address CCCP objectives (SCCA grants). The other funding stream is to the state's 8 public health regions through a regional mini-grant mechanism (SC DHEC grants) focused exclusively on prevention and early detection activities. These funding streams generate “on the ground” cancer control activity. Therefore, stakeholders felt it was important to map the funded projects back to the CCCP indicators to assess the effectiveness of distributing funding across the CCCP elements.

Projects funded through the SCCA and SC DHEC mini-grant mechanisms were ascertained for the period July 1, 2005, to June 30, 2010. Data collected from the funding agency, grantee, or both included title, funding period, region served, statement of work, and a description of project components. The scarce data available for most grants precluded a summary of project outcomes. To describe the distribution of funding across the CCCP, the topics of the funded grants were mapped to pertinent CCCP indicators. This mapping was coded by 1 rater. When the most precise indicator(s) to which a grant mapped was unclear, 2 raters discussed the mapping until consensus was achieved.

Funded projects could address more than 1 CCCP objective, either through separate activities directed at different objectives or through a single activity that cut across multiple objectives. An example of a crosscutting activity is advocacy for increasing the tobacco tax, which addressed objectives in both the Advocacy and Policy chapter and the Prevention chapter; such a grant therefore contributed toward both chapters. For DHEC mini-grants that addressed both prevention and early detection, the funding amount was split evenly between the 2. The total number of unduplicated grants was calculated, as were the numbers of grants that addressed prevention and that addressed early detection. The process of mapping the allocation of grant projects back to the CCCP objectives took place in months 13 to 18 of the evaluation process.
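The counting conventions described above (a grant counted in every chapter it maps to, dollars split evenly across those chapters, advocacy work credited in full to a separate overlapping tally, and an unduplicated grant total) can be sketched as follows. The `Grant` record and the example grants are hypothetical, and applying the even split to every multichapter grant is an assumption; the article describes it only for DHEC mini-grants addressing both prevention and early detection.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Grant:
    grant_id: str
    amount: float
    chapters: Tuple[str, ...]  # content chapters the project maps to
    advocacy: bool = False     # also credited to the overlapping Advocacy and Policy tally


def summarize(grants):
    """Return per-chapter grant counts, per-chapter dollars, and the unduplicated count."""
    counts: Dict[str, int] = defaultdict(int)
    dollars: Dict[str, float] = defaultdict(float)
    for g in grants:
        if not g.chapters:
            raise ValueError(f"grant {g.grant_id} is not mapped to any chapter")
        share = g.amount / len(g.chapters)  # even split across content chapters
        for ch in g.chapters:
            counts[ch] += 1                 # counted once in every chapter it touches
            dollars[ch] += share
        if g.advocacy:
            # Overlap tally: full amount, reported separately from the content chapters
            counts["Advocacy and Policy"] += 1
            dollars["Advocacy and Policy"] += g.amount
    unduplicated = len({g.grant_id for g in grants})
    return dict(counts), dict(dollars), unduplicated


# Hypothetical grants
grants = [
    Grant("dhec-01", 30_000, ("Prevention", "Early Detection")),
    Grant("dhec-02", 20_000, ("Prevention",)),
    Grant("scca-01", 10_000, ("Prevention",), advocacy=True),
]
counts, dollars, unduplicated = summarize(grants)
print(counts)        # {'Prevention': 3, 'Early Detection': 1, 'Advocacy and Policy': 1}
print(dollars)       # {'Prevention': 45000.0, 'Early Detection': 15000.0, 'Advocacy and Policy': 10000.0}
print(unduplicated)  # 3
```

Because each grant is counted in every chapter it touches, per-chapter grant counts can sum to more than the unduplicated total, while the content-chapter dollars still sum to the amount actually awarded.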

Results

Assessment of cancer plan objectives

Of the 64 objectives, 47% were measurable as written, with the balance evenly divided between those requiring minor and major changes (Table 1). Data were readily available for 36% of the objectives, whereas secondary and primary data collection was required for 28% and 36%, respectively. At the extremes of the composite ratings, 33% were A1 and 16% were C3. These were the 2 largest cells in Table 1, reflecting a positive correlation between clarity and data availability.

TABLE 1.

Summary of Ratings for the South Carolina Comprehensive Cancer Control Plan Objectives (n = 64) According to the Clarity/Precision of the Written Objective (A, B, and C) and the Availability of Outcome Data (1, 2, and 3) to Evaluate It

| Availability of Outcome Data | A = Measurable as Written, n (%) | B = Measurable With Minor Changes, n (%) | C = Measurable With Major Changes, n (%) | Total, n (%) |
|---|---|---|---|---|
| 1 = Data currently available to evaluate | 21 (33) | 2 (3) | 0 (0) | 23 (36) |
| 2 = Secondary data collection required | 4 (6) | 7 (11) | 7 (11) | 18 (28) |
| 3 = Primary data collection required | 5 (8) | 8 (13) | 10 (16) | 23 (36) |
| Total, n (%) | 30 (47) | 17 (27) | 17 (27) | 64 (100) |
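The marginal totals and percentages in Table 1 can be recomputed from the cell counts. This sketch assumes the percentages were rounded half up (Python's built-in `round` rounds halves to even, which would give 12% rather than 13% for 8 of 64), so it uses exact integer arithmetic instead:

```python
# Cell counts from Table 1: rows are data availability (1-3), columns are clarity (A-C)
counts = {
    1: {"A": 21, "B": 2, "C": 0},
    2: {"A": 4, "B": 7, "C": 7},
    3: {"A": 5, "B": 8, "C": 10},
}

total = sum(n for row in counts.values() for n in row.values())


def pct(n: int) -> int:
    """Percentage of all objectives, rounded half up with exact integer arithmetic."""
    return (200 * n + total) // (2 * total)


row_totals = {d: sum(row.values()) for d, row in counts.items()}
col_totals = {c: sum(counts[d][c] for d in counts) for c in "ABC"}
cells = {f"{c}{d}": n for d, row in counts.items() for c, n in row.items()}
two_largest = sorted(cells, key=cells.get, reverse=True)[:2]

print(total)                                       # 64
print({d: pct(n) for d, n in row_totals.items()})  # {1: 36, 2: 28, 3: 36}
print({c: pct(n) for c, n in col_totals.items()})  # {'A': 47, 'B': 27, 'C': 27}
print(two_largest)                                 # ['A1', 'C3']
```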

The outcome evaluation was limited to the 33% (n = 21) of A1-rated objectives that were measurable as written with available outcome data. Of these, 38% showed little or no progress, 38% were partially met, and 24% were met (Table 2). The percentage of objectives met ranged across the CCCP chapters from 0% (Prevention, Patient Care) to 67% (Research). These results were partly a function of how ambitiously the target was set. An example of a very ambitious objective was “by June 2010, decrease the rate of tobacco use among South Carolinian adults (older than 18 years) from 26% to 12%.” Thus, although smoking prevalence decreased substantially to 21%, this objective was not met. An example of a more modest target that was met was “by June 2010, increase the proportion of newly diagnosed prostate cancers that are detected at the localized stage from 73% to 75%.” These examples illustrate why precisely quantifying progress toward each objective is preferable to a simple met/not-met classification.
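One way to quantify progress more precisely than a met/not-met label is the fraction of the planned baseline-to-target change actually achieved. This metric and the function name are our illustration, not something reported in the evaluation; applied to the adult tobacco-use objective above, it shows that about 36% of the targeted reduction was achieved:

```python
def share_of_target_achieved(baseline: float, target: float, observed: float) -> float:
    """Fraction of the planned baseline-to-target change actually achieved.

    1.0 or more means the objective was met; values between 0 and 1 quantify
    partial progress; values at or below 0 mean no progress or movement the
    wrong way.
    """
    if target == baseline:
        raise ValueError("target must differ from baseline")
    return (observed - baseline) / (target - baseline)


# Adult tobacco-use objective from the text: 26% -> 12% targeted, 21% observed
share = share_of_target_achieved(26, 12, 21)
print(f"{share:.0%}")  # 36%
```

Because the ratio is normalized by the size of the planned change, it makes an ambitious target that was partly achieved comparable with a modest target that was fully achieved.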

TABLE 2.

Status of Progress Toward Measurable Objectives and Strategies in the Cancer Plan

| Cancer Plan Chapter | Number of Measurable Objectives and Strategies | Statusᵃ: Little or No Progress, n (%) | Status: Partially Met or Some Progress, n (%) | Status: Met, n (%) |
|---|---|---|---|---|
| Prevention | 4 | 2 (50) | 2 (50) | 0 (0) |
| Early Detection | 8 | 6 (75) | 0 (0) | 2 (25) |
| Advocacy and Policy | 4 | 0 (0) | 3 (75) | 1 (25) |
| Patient Care | 2 | 0 (0) | 2 (100) | 0 (0) |
| Research | 3 | 0 (0) | 1 (33) | 2 (67) |
| Survivor and Family | 0 | 0 (0) | 0 (0) | 0 (0) |
| Total | 21 | 8 (38) | 8 (38) | 5 (24) |

ᵃ Status: little or no progress, no evidence of measurable progress to meet the objective; partially met or some progress, evidence of progress that could result in achievement of the objective; met, the objective was accomplished.
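The chapter-level percentages quoted in the text can be checked against the counts in Table 2. A minimal sketch, with the counts transcribed from the table:

```python
# Table 2 counts per chapter: (little or no progress, partially met, met)
status = {
    "Prevention": (2, 2, 0),
    "Early Detection": (6, 0, 2),
    "Advocacy and Policy": (0, 3, 1),
    "Patient Care": (0, 2, 0),
    "Research": (0, 1, 2),
    "Survivor and Family": (0, 0, 0),
}

# Percentage of measurable objectives met, per chapter
pct_met = {}
for chapter, (little, partial, met) in status.items():
    n = little + partial + met
    if n:  # Survivor and Family had no measurable objectives
        pct_met[chapter] = round(100 * met / n)

# Column totals across chapters and their share of all measurable objectives
totals = [sum(col) for col in zip(*status.values())]
n_total = sum(totals)

print(min(pct_met.values()), max(pct_met.values()))         # 0 67
print(totals, [round(100 * t / n_total) for t in totals])  # [8, 8, 5] [38, 38, 24]
```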

Distribution of grants across CCCP objectives

During the 5-year evaluation period, a total of $1.73 million was awarded in grants for local cancer control projects: 29% via the SCCA grant mechanism and 71% via the DHEC mini-grant mechanism (Table 3). Partly because the DHEC mini-grants focused exclusively on prevention and early detection, these 2 topic areas received by far the largest allocations: 47% and 43%, respectively.

TABLE 3.

Summary of Grants Awarded Through State Health Department Funds to Target Cancer Prevention and Control Activities

SCCA and SC DHEC Grant Awards (2005-2010)

| Cancer Plan Chapter | SCCA Grants: Number | SCCA Grants: Amount | SC DHEC Grantsᵃ: Number | SC DHEC Grantsᵃ: Amount | Total Grants: Number | Total Grants: Amount |
|---|---|---|---|---|---|---|
| Prevention | 15 | $138 380 | 32 | $671 538 | 47 | $809 918 |
| Early Detection | 16 | $179 559 | 27 | $558 785 | 43 | $738 344 |
| Patient Care | 9 | $84 374 | 0 | $0 | 9 | $84 374 |
| Research | 2ᵇ | $28 389 | 0 | $0 | 2 | $28 389 |
| Survivor and Family | 14 | $69 940 | 0 | $0 | 14 | $69 940 |
| Total | 56 | $500 642 | 42 | $1 230 323 | 98 | $1 730 965 |
| Advocacy and Policyᶜ | 14 | $133 949 | . . . | . . . | . . . | $133 949 |

Abbreviations: SCCA, South Carolina Cancer Alliance; SC DHEC, South Carolina Department of Health and Environmental Control.

ᵃ SC DHEC grants often addressed both prevention and early detection. To account for this, in the "number of grants" columns, the Prevention and Early Detection rows each include every grant with a focus in that area, whereas the Total row reports the number of unduplicated grants awarded. In the SC DHEC "grant amount" column, the amount of each grant addressing both Prevention and Early Detection was split equally between the 2 chapters.

ᵇ These grants funded evaluations, which were themselves objectives within the Research chapter of the cancer plan.

ᶜ Advocacy and Policy overlapped with other chapters. To account for this, grants funded for advocacy work were allocated to the content area addressed, and total funds distributed to Advocacy and Policy are also shown separately.

There were 56 SCCA grants totaling $500 642 distributed to partners, ranging from $500 to $30 000 each. Projects addressing objectives in Early Detection accounted for 36% of SCCA funding, followed by Prevention (28%) and Advocacy and Policy (27%, which overlapped with other chapters), whereas Patient Care (17%), Survivor and Family (14%), and Research (6%) received substantially less. The funding was strongly aligned with CCCP objectives in areas such as tobacco cessation, legislative advocacy, colorectal cancer awareness, and cancer education.

A total of 41 SC DHEC grants totaling $1 230 323 were awarded to public health regions to carry out cancer prevention and control projects. Total awards ranged from $160 000 to $315 000 per year; most individual grants ranged from $20 000 to $35 000. These grants addressed solely prevention (22%), solely early detection (34%), or both (44%). They were strongly aligned with the CCCP in the areas of cancer education and screening, nutrition and physical activity education, tobacco control, and sun safety education and policy.
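The funding shares quoted above can be recomputed from the dollar amounts in Table 3. The sketch transcribes the SCCA and SC DHEC amounts by chapter; the Advocacy and Policy amount is kept separate because it overlaps the other chapters:

```python
# Dollar amounts by chapter, transcribed from Table 3
scca = {
    "Prevention": 138_380,
    "Early Detection": 179_559,
    "Patient Care": 84_374,
    "Research": 28_389,
    "Survivor and Family": 69_940,
}
dhec = {"Prevention": 671_538, "Early Detection": 558_785}
scca_advocacy_overlap = 133_949  # already counted within the chapters above

scca_total = sum(scca.values())
dhec_total = sum(dhec.values())
grand_total = scca_total + dhec_total


def share(part: int, whole: int) -> int:
    """Whole-number percentage of `whole` represented by `part`."""
    return round(100 * part / whole)


combined = {ch: scca.get(ch, 0) + dhec.get(ch, 0) for ch in scca}

print(scca_total, dhec_total, grand_total)                             # 500642 1230323 1730965
print(share(scca_total, grand_total), share(dhec_total, grand_total))  # 29 71
print(share(combined["Prevention"], grand_total),
      share(combined["Early Detection"], grand_total))                 # 47 43
print(share(scca_advocacy_overlap, scca_total))                        # 27
```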

Discussion

A novel, practical, and methodologically rigorous approach was used to implement a state CCCP outcome evaluation. The evaluation methods generated evidence to monitor progress, enhance planning efforts, and recommend improvements to the CCCP. In line with CDC's evaluation framework (step 1: engage stakeholders),20 feedback from the diverse SCCA membership was proactively sought throughout the evaluation process to strengthen the usefulness of the evaluation and the commitment to a data-driven approach to future planning and priority setting.

To lay the groundwork for the evaluation, CCCP objectives and strategies were organized and categorized (step 2: describe the program).20 This foundational step was critical to understanding the CCCP's history, organization, and format and led to the design of an appropriate and feasible evaluation plan (step 3: focus the evaluation design).20 A rating system was developed to assess the clarity of the CCCP objectives and strategies and to determine the availability of necessary outcome data, enabling the outcome evaluation to focus on clear indicators with available data. This rating system is likely to generalize to other CCCPs and, because it promotes scrutiny of the plan itself, is likely to lead to improved CCCPs.

Data were then assembled to evaluate the objectives (step 4: gather credible evidence).20 Focusing the outcome evaluation exclusively on objectives with available outcome data streamlined this process. In contrast, the grant allocation portion of the evaluation exposed an information deficit, so most of the evaluation time was spent assembling data rather than analyzing and synthesizing it.

The final evaluation steps were to synthesize the data and draw inferences (step 5: justify conclusions) and to share the results with stakeholders (step 6: ensure use and share lessons learned).20 Evaluation results and recommendations were compiled in a report to the SCCA and shared at various stakeholder meetings. Recommendations centered on 2 key areas. First, recommendations were provided to improve the CCCP's quality, including (1) prospective centralized recordkeeping to track the status of past and current indicators and the reasons indicators had changed; (2) addressing inactive CCCP chapters; (3) enhancing the clarity/precision of objectives and/or the availability of outcome data; (4) ensuring causal alignment between strategies and related objectives; (5) using evaluation findings to refine objectives, and/or the interventions addressing them, for measures that were not met or were met too easily; and (6) limiting CCCP efforts to evidence-based objectives and strategies. These evaluation findings have already been integrated into South Carolina's revised CCCP. For example, all objectives in the new CCCP were designed to be measurable, and the CCCP now has a standalone Health Disparities section with a unique set of objectives. Second, recommendations were provided to routinize CCCP evaluation and monitoring, including (1) developing an evaluation database and allocating resources to properly populate and maintain it; (2) initiating a workgroup to provide a data-driven focus on CCCP outcome evaluation; (3) relying as far as possible on existing data sources to evaluate indicators, given the resource-intensive nature of primary data collection; and (4) developing new data sources when critical data gaps exist. In South Carolina, for example, this included developing data sources to evaluate the adequacy of support resources for cancer patients and survivors, prostate cancer informed decision-making practices, and sun safety practices.

Recommendations concerning the allocation of funded projects included ensuring (1) alignment of funding allocations with CCCP goals; (2) review of the relationship between grants funded in particular content areas and achievement of related objectives, with refinement of grant priorities as necessary; and (3) planning for detailed tracking of grants. For example, if CCCP priorities were not being addressed by funded projects, these underaddressed areas could be prioritized in future requests for applications. Conversely, funding priorities could be downgraded if well-funded project areas showed little or no evidence of improvement in related CCCP objectives. The evaluation provided a critical comparison between the organization's priorities for statewide cancer control, its historical allocation of grant resources across these priority areas, and the impact of level of grant funding on these outcomes.

The lack of real-time information collected on funded projects precluded any summaries of grant deliverables or outcomes. An outcome evaluation of each project is essential to distinguish effective from ineffective strategies. There are many reasons why strategies may or may not lead to objectives being met, such as objectives being set too high, misalignment between funded strategies and objectives, or ineffective interventions. For this reason, detailed, prospective monitoring of funded interventions is essential for tracking progress and setting priorities. These projects represent a core investment in statewide cancer control, which underscores the need for accountability and for rigorous monitoring and evaluation of each funded project.

The evaluation identified the lack of a Health Disparities section containing a unique set of objectives to address cancer-related health disparities. Because South Carolina has large minority and rural populations, the evaluation report highlighted the need to develop a robust, freestanding section on health disparities. In response to this recommendation, the 2010-2015 CCCP had an independent, more thorough focus on health disparities.

The proposed evaluation model provides a useful initial outcome evaluation step but is limited by the fact that the outcome evaluation focused on only a portion of the CCCP. Knowing that 24% of the indicators with A1 ratings were met is a helpful beginning, but if the A1 indicators received the highest priority in the state's efforts, this figure could give an overly optimistic picture of the CCCP as a whole. Furthermore, the evaluation of the distribution of funding for community-based cancer control grants may not be relevant to all states and territories. Nevertheless, this portion of the evaluation provides an example of focusing on a local CCCP element of central importance. In South Carolina, describing the distribution of funds according to the CCCP's objectives provided information to assess whether funding allocations were aligned with organizational priorities. A further limitation was that the outcomes of the individual funded projects were not evaluated, highlighting a local need to track these data more carefully in future funded projects. Future CCCP efforts should consider using a comprehensive planning and evaluation framework (eg, reach, effectiveness, adoption, implementation, and maintenance [RE-AIM])22 to facilitate the selection and implementation of appropriate intervention strategies for optimal public health impact. Using such a framework in the planning stages would also help embed appropriate evaluation strategies from the beginning; for example, considering each RE-AIM dimension can guide planning for both intervention and evaluation efforts.

Despite these limitations, the present evaluation represents a novel model for an initial evaluation of a state's CCCP. The evaluation strategy provides a method to prioritize the most readily evaluable CCCP elements, allowing a streamlined approach to generate thorough outcome data for as much of the CCCP as possible while identifying CCCP elements in need of revision. This approach is practical because clarity was correlated with data availability and hence with ease of evaluation. This evaluation model may provide a practical and useful start to assessing the progress made toward CCCP objectives and to highlighting future data collection and resource needs for more thorough, long-term evaluation strategies.

Acknowledgments

This research was funded by the South Carolina Cancer Alliance, the Hollings Cancer Center (P30 CA138313), the Medical University of South Carolina (UL1 RR029882), the Centers for Disease Control and Prevention (U48 DP001936), and the National Cancer Institute (U54 CA153461 [to J.R.H.] and K05 CA136975 [to J.R.H.]).

Footnotes

The authors declare no conflicts of interest.

REFERENCES

1. Rochester PW, Townsend JS, Given L, Krebill H, Balderrama S, Vinson C. Comprehensive cancer control: progress and accomplishments. Cancer Causes Control. 2010;21(12):1967–1977. doi: 10.1007/s10552-010-9657-8.

2. Behringer B, Lofton S, Knight ML. Models for local implementation of comprehensive cancer control: meeting local cancer control needs through community collaboration. Cancer Causes Control. 2010;21(12):1995–2004. doi: 10.1007/s10552-010-9655-x.

3. Given LS, Hohman K, Graaf L, Rochester P, Belle-Isle L. From planning to implementation to outcomes: comprehensive cancer control implementation building blocks. Cancer Causes Control. 2010;21(12):1987–1994. doi: 10.1007/s10552-010-9650-2.

4. Hohman K, Rochester P, Kean T, Belle-Isle L. The CCC National Partnership: an example of organizations collaborating on comprehensive cancer control. Cancer Causes Control. 2010;21(12):1979–1985. doi: 10.1007/s10552-010-9644-0.

5. Hager P, Given L, Redmond J, Rogers K. Revision of comprehensive cancer control plans: experiences shared by three states. Cancer Causes Control. 2010;21(12):2005–2013. doi: 10.1007/s10552-010-9662-y.

6. Belle Isle L, Plescia M, La Porta M, Shepherd W. In conclusion: looking to the future of comprehensive cancer control. Cancer Causes Control. 2010;21(12):2049–2057. doi: 10.1007/s10552-010-9666-7.

7. Pollack LA, Greer GE, Rowland JH, et al. Cancer survivorship: a new challenge in comprehensive cancer control. Cancer Causes Control. 2005;16(suppl 1):S51–S59. doi: 10.1007/s10552-005-0452-x.

8. True S, Kean T, Nolan PA, Haviland ES, Hohman K. In conclusion: the promise of comprehensive cancer control. Cancer Causes Control. 2005;16(suppl 1):S79–S88. doi: 10.1007/s10552-005-0491-3.

9. Erdman L, Shepherd WL, Choudry M. The North Carolina cancer plan: a living plan for the people of North Carolina. N C Med J. 2008;69(4):292–295.

10. Selig WK, Jenkins KL, Reynolds SL, Benson D, Daven M. Examining advocacy and comprehensive cancer control. Cancer Causes Control. 2005;16(suppl 1):S61–S68. doi: 10.1007/s10552-005-0485-1.

11. Rochester P, Chapel T, Black B, Bucher J, Housemann R. The evaluation of comprehensive cancer control efforts: useful techniques and unique requirements. Cancer Causes Control. 2005;16(suppl 1):S69–S78. doi: 10.1007/s10552-005-0510-4.

12. Given LS, Black B, Lowry G, Huang P, Kerner JF. Collaborating to conquer cancer: a comprehensive approach to cancer control. Cancer Causes Control. 2005;16(suppl 1):S3–S14. doi: 10.1007/s10552-005-0499-8.

13. Hayes N, Rollins R, Weinberg A, et al. Cancer-related disparities: weathering the perfect storm through comprehensive cancer control approaches. Cancer Causes Control. 2005;16(suppl 1):S41–S50. doi: 10.1007/s10552-005-0487-z.

14. Steger C, Daniel K, Gurian GL, et al. Public policy action and CCC implementation: benefits and hurdles. Cancer Causes Control. 2010;21(12):2041–2048. doi: 10.1007/s10552-010-9668-5.

15. Seeff LC, Major A, Townsend JS, et al. Comprehensive cancer control programs and coalitions: partnering to launch successful colorectal cancer screening initiatives. Cancer Causes Control. 2010;21(12):2023–2031. doi: 10.1007/s10552-010-9664-9.

16. Given LS, Hohman K, La Porta M, Belle-Isle L, Rochester P. Comprehensive cancer control in the United States: progress and opportunity. Cancer Causes Control. 2010;21(12):1965. doi: 10.1007/s10552-010-9670-y.

17. Weinberg AD, Jackson PM, DeCourtney CA, et al. Progress in addressing disparities through comprehensive cancer control. Cancer Causes Control. 2010;21(12):2015–2021. doi: 10.1007/s10552-010-9649-8.

18. Rochester P, Porterfield DS, Richardson LC, McAleer K, Adams E, Holden D. Piloting performance measurement for Comprehensive Cancer Control programs. J Public Health Manag Pract. 2011;17(3):275–282. doi: 10.1097/PHH.0b013e3181fd4d19.

19. Rochester P, Adams E, Porterfield DS, Holden D, McAleer K, Steele CB. Cancer Plan Index: a measure for assessing the quality of cancer plans. J Public Health Manag Pract. 2011;17(6):E12–E17. doi: 10.1097/PHH.0b013e318215a603.

20. Centers for Disease Control and Prevention. Framework for program evaluation in public health. MMWR Recomm Rep. 1999;48(RR-11):1–40.

21. National Cancer Institute. State, tribe and territory plans. Cancer Control Planet. http://cancercontrolplanet.cancer.gov/state plans.jsp. Published 2011. Accessed November 27, 2011.

22. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–1327. doi: 10.2105/ajph.89.9.1322.