Abstract

Various methods in auditory neuroscience have been used to gain knowledge about the structure and function of the human auditory cortical system. Regardless of method, hemispheric differences are evident in the normal processing of speech sounds. This review, augmented by the authors’ own work, provides evidence that asymmetries exist in both cortical and subcortical structures of the human auditory system. Asymmetries are affected by stimulus type; for example, hemispheric activation patterns have been shown to shift from the left to the right cortex as stimuli change from speech to non-speech. In addition, the presence of noise has differential effects on the contribution of the two hemispheres. Typical asymmetric cortical patterns are modified when pathology is present, as in hearing loss or tinnitus. We show that in response to speech sounds, individuals with unilateral hearing loss lose the normal asymmetric pattern because of both a decrease in activity in the contralateral hemisphere and an increase in activity in the ipsilateral hemisphere. These studies demonstrate the utility of modern neuroimaging techniques in functional investigations of the human auditory system. Neuroimaging techniques may provide additional insight into how the cortical auditory pathways change with experience, including sound deprivation (e.g., hearing loss) and sound experience (e.g., training). Such investigations may explain why some populations, such as children with learning problems and the elderly, appear to be more vulnerable to changes in hemispheric symmetry.

Keywords: auditory cortex, hemispheric asymmetry, lateralization, sound perception, electrophysiology, neuroimaging

Introduction

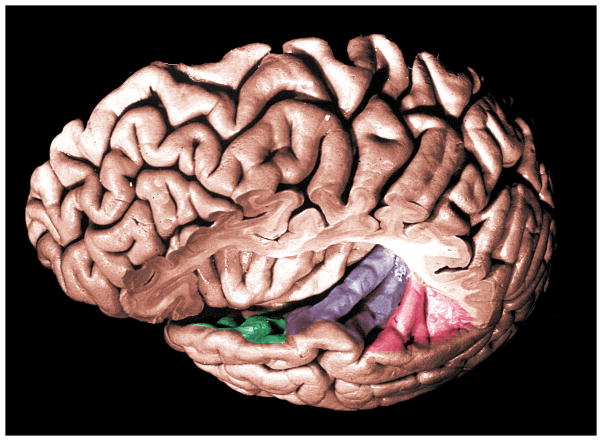

Sound can be characterized by three physical parameters: frequency, starting phase, and amplitude. Thus, acoustic stimuli provide spectral, temporal, and intensity cues that can be used for communication (e.g., speech), safety (e.g., a car horn), and pleasure (e.g., listening to music). In the auditory cortex, these cues are represented by neural activity that is ultimately linked to perceptual performance (Phillips, 1993). Although the perception of speech sounds can be assessed with behavioral measures in some populations, much less is known about the neurophysiology underlying speech encoding in the central auditory system. It is generally accepted that the primary auditory cortex lies deep within the lateral (Sylvian) fissure on the transverse gyrus of Heschl (Brodmann’s area 41; Brodmann, 1909) and that it is involved in speech processing. The secondary auditory cortex, or association cortex, lies in surrounding anatomic regions of the superior temporal gyrus (Brodmann’s areas 21, 22, 42, and 52) (Brodmann, 1909; Celesia, 1976; Talairach and Tournoux, 1988) and is also implicated in the processing of sound. Speech perception relies on an anatomical network within the temporal lobe that includes the planum polare, the transverse temporal gyrus, and the planum temporale (see Figure 1). However, the precise contribution of each region to this process is not completely understood.

Figure 1.

Superior aspect of the left superior temporal gyrus: planum polare (green), anterior and posterior transverse temporal gyri (purple), planum temporale (red). Adapted with permission from Duvernoy (1999).

Input to the central auditory system comes from both ears. At all levels central to the point where the cochlear nerve enters the brainstem, speech cues are represented bilaterally. The superior olivary complex plays a major role in binaural hearing and is the first site at which information arriving from the cochlear nuclei of the two sides is combined (Brugge, 1992). From this point onward, crossed and uncrossed fibers extend between the nuclei of the superior olivary complex, trapezoid body, lateral lemnisci, and inferior colliculi and contribute to the redistribution of auditory information (Clark, 1975).

The complexity of the parallel and crossed fiber tracts, ascending and descending pathways, multiple subcortical nuclei, and primary and secondary auditory cortices makes it difficult to follow the central representation of auditory signals, particularly in humans. Much of our knowledge about structure and function has been inferred from studies of the central auditory system in animals, which bears some similarities to the central auditory pathway in humans. Recently, results from neuroimaging studies have provided additional insight into the organization and function of the human auditory cortex and its role in the processing of speech stimuli. This review highlights some of the relationships between regional brain activity and auditory function, as illustrated with various methods in auditory neuroscience, including recent neuroimaging techniques. In particular, it addresses right and left hemispheric activation patterns and the structural and functional implications of cortical asymmetry, bearing in mind the binaural nature of the normal auditory system.

Methods of Study in Auditory Neuroscience

Our present knowledge of the structure and function of the central auditory system in humans stems from a combination of studies that employ behavioral, anatomical (e.g., cytoarchitecture), electrophysiologic, and neuroimaging measures. Early researchers measured brain activity through direct recording of electrical events from the auditory nerve (Wever and Bray, 1930) and the brain (Woolsey and Walzl, 1942). Single neuron recordings using microelectrode techniques (Galambos and Davis, 1943) broadened the understanding of neuronal encoding mechanisms, but were limited to certain experimental conditions, primarily in animal models. Subsequent studies using noninvasive electrophysiologic recordings (e.g., brainstem to cortical responses) via surface electrodes were applied in humans. In the past decade, however, there have been further advancements in methodologies to study the cortical auditory system in vivo in the human brain using multi-electrode electrophysiology and neuroimaging techniques (Kwong et al., 1992; Ogawa et al., 1992). Although it is beyond the scope of this article to describe in detail the methods used in auditory neuroscience, a brief summary is provided to facilitate understanding of the research studies discussed. In addition, the subset of studies reviewed includes those with an emphasis on the acoustic features of the speech stimulus rather than linguistic aspects. Finally, it is essential to bear in mind that neuroimaging techniques, such as functional magnetic resonance imaging (fMRI), are still evolving. As these techniques are refined, each will impact the design of research questions in the field of auditory neuroscience in humans and the eventual application of findings to clinical populations.

EEG and MEG

Electrophysiologic methods, such as electroencephalography (EEG, i.e., recording electrical potentials from the scalp) and evoked potentials (i.e., electrical potentials evoked by an external stimulus), allow the evaluation of responses that arise from populations of neurons. The ability to record these evoked responses depends inherently on neural synchrony and is greatly influenced by stimulus parameters (Picton et al., 1974). Magnetoencephalography (MEG) measures the magnetic fields associated with intracellular ionic current flow in the brain (Hari, 1993; Lounasmaa et al., 1993). An advantage of EEG and MEG is their excellent temporal resolution, which is on the order of milliseconds. A limitation of surface-recorded electrophysiologic responses, however, is the inability to differentiate specific anatomical structures, which makes it difficult to know the exact generator sites or the extent of the cortical areas involved. In addition, magnetic field recordings are insensitive to radially oriented currents and therefore reflect only tangentially oriented currents. Although modeling methods (e.g., dipole source reconstruction) can be used to derive the underlying sources of surface recordings, questions remain about the modeling assumptions to be used in these analyses (Pascual-Marqui, 1999; Scherg and Picton, 1991).

PET and fMRI

Neuroimaging techniques are based on the principle that neuronal activity requires energy. Increased energy demands are reflected in increased blood flow and metabolism, and the resulting changes can be visualized in response to a task. With the introduction of positron emission tomography (PET), it became possible to conduct such in vivo experiments in human subjects (Frackowiak et al., 1980; Phelps et al., 1981; Raichle et al., 1983). PET relies on positron-emitting radioactive isotopes attached to biologically active compounds (tracers); the distribution of these tracers in the brain reflects, and therefore traces, physiological processes. Changes in blood flow and glucose metabolism associated with the delivery of auditory stimuli can be reconstructed from the decay of the radioisotopes. PET can be conducted in quiet test environments and is not susceptible to the scanner noise that occurs in the MRI environment. A disadvantage of PET is the need for injections of radioactive tracers, which are considered invasive and therefore not acceptable for certain populations (e.g., children).

Functional magnetic resonance imaging (fMRI) detects increases in blood oxygenation in response to a stimulus or task. The blood oxygenation level dependent (BOLD) response was originally described in rat experiments by Ogawa and colleagues (1990) and subsequently used in human studies of the brain (Kwong et al., 1992; Ogawa et al., 1992). fMRI has become more commonly used than PET for experimental purposes because of its widespread availability and because it does not require exposure to ionizing radiation or radiopharmaceuticals. In studies that employ fMRI with auditory stimuli, one complicating factor is the intensity of the scanner noise produced as the gradient fields are switched (Bandettini et al., 1998; Ulmer et al., 1998). The effect of this noise can be reduced through the choice of earphones and, more importantly, by synchronizing the stimuli with the MR pulse sequence so that the auditory stimuli are presented during a quiet period (Edmister et al., 1999; Hall et al., 1999).
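To make the idea of synchronizing stimuli with the pulse sequence concrete, the sketch below lays out a sparse-sampling timeline: each stimulus window falls in a silent gap and is followed by a brief acquisition that captures the delayed hemodynamic response. The parameter values are assumptions loosely modeled on the authors’ protocol described later in this review (a 9.3-second quiet period and 30-second ON-OFF blocks); the roughly 0.7-second acquisition time is our assumption, and `sparse_schedule` is a hypothetical helper, not part of any scanner or analysis package.

```python
def sparse_schedule(n_volumes, quiet_s, acq_s, block_s):
    """List (gap_start, acq_start, condition) for each volume of a sparse-sampling run.

    Each cycle is a silent gap of quiet_s seconds, during which the stimulus (if any)
    is played, followed by one image acquisition lasting acq_s seconds. The condition
    alternates between OFF and ON every block_s seconds, starting with OFF.
    """
    cycle_s = quiet_s + acq_s
    schedule = []
    for k in range(n_volumes):
        gap_start = k * cycle_s
        acq_start = gap_start + quiet_s
        condition = "ON" if int(gap_start // block_s) % 2 == 1 else "OFF"
        schedule.append((round(gap_start, 2), round(acq_start, 2), condition))
    return schedule


# Hypothetical values: 9.3 s quiet gap, ~0.7 s acquisition, 30 s ON/OFF blocks.
for gap, acq, cond in sparse_schedule(8, quiet_s=9.3, acq_s=0.7, block_s=30.0):
    print(f"{gap:6.1f} s  stimulus window ({cond:3s})  -> scan at {acq:5.1f} s")
```

With these assumed values, each acquisition samples the response to the stimulus that played during the preceding silent gap, so the scanner noise never overlaps the stimulus.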

Both PET and fMRI responses are considered indirect measures of brain activity. The two techniques provide good spatial resolution, with the best spatial resolution obtained with fMRI (approximately 3–5, 2, and 1 mm for 1.5, 3.0 and 7.0 Tesla, respectively) compared to the resolution with PET (approximately 6–10 mm). However, the temporal resolution is poor with both methods because of the slow time course of the hemodynamic response itself, which takes seconds rather than milliseconds. Anatomical images can be easily obtained and combined with neuroimaging data to provide detail of the auditory cortex in each subject. In this way, it is possible to localize maximally activated regions and to determine how these patterns correlate with anatomy and function. Table 1 provides a summary of the temporal and spatial resolution characteristics of PET, fMRI, MEG, and EEG.

Table 1.

Methods of Study in Auditory Neuroscience.

| Method | Response Mechanism | Temporal Resolution | Spatial Resolution |
|---|---|---|---|
| EEG | Electrical potentials measured by multiple surface electrodes | < 1 ms | 8–14 mm (depends on number of channels) |
| MEG | Magnetic fields generated by neural currents, measured by superconducting coils | < 1 ms | 8–14 mm (depends on number of channels) |
| PET | Cerebral metabolic changes measured by radioactive tracers | > 2 min | 6–10 mm |
| fMRI | Cerebral metabolic changes measured by changes in magnetic properties that depend on blood oxygen levels | 0.25–3 s | 3–5 mm (1.5 T); 2 mm (3 T); < 1 mm (7 T) |

Improved knowledge of auditory cortex organization and function in humans can be achieved by combining electrophysiologic, neuroimaging, and behavioral responses obtained from the same subject. This would allow temporal and spatial information to be acquired and analyzed from the same set of neural responses. Such simultaneous recordings have been made in the visual system (Bonmassar et al., 1999) and, only recently, in the human auditory system (Liebenthal et al., 2003; Scarff et al., 2004). Combining temporal resolution with spatial localization will require new methods to merge data that rely on different experimental designs, data analyses, and physiologic mechanisms (e.g., instantaneous neural activity with poor localizing power versus slow hemodynamic changes with high spatial resolution) (Wagner and Fuchs, 2001).

In the following sections, data collected by the authors using fMRI with either normal-hearing subjects or subjects with unilateral hearing loss are presented. In these experiments, auditory stimuli were tailored to each study and delivered with Avotech Inc. electrostatic headphones. A sparse sampling paradigm (Hall et al., 1999) was employed to record cortical responses, with a 9.3-second quiet period between MR acquisitions and a 30-second ON-OFF block design, for a total duration of 5.5 minutes. Data were collected on a 1.5 Tesla GE Signa CV MR scanner using a standard quadrature head coil. Ten axial slices of T1-weighted anatomical images (TE = 4.2 ms, TR = 265 ms, flip angle = 80°; slice thickness 5 mm; FOV = 240 × 240 mm; resolution 256 × 256 points) covering the primary auditory cortex and surrounding area were acquired as a template for the functional MR images. Functional MRI data were collected with a gradient-echo EPI sequence (TE = 40 ms, TR = 700 ms, flip angle = 90°; 34 volumes; resolution 64 × 64 points). At the end of the scanning session, a high-resolution T1-weighted SPGR anatomical image (TE = 3 ms, TR = 25 ms, flip angle = 30°) was recorded with 124 sagittal slices of 1.3 mm thickness (FOV = 240 × 240 mm; resolution 256 × 256 points) covering the whole head. Data analysis was completed using AFNI (Cox, 1996) and included motion correction, cross-correlation analysis, and spatial clustering. The ideal cluster size, minimum voxel distance, and correlation threshold (correlation coefficient > 0.40) were determined by Monte Carlo analysis, given the size and spatial correlation of the region of interest (ROI; specifically, voxels within the transverse temporal gyrus and planum temporale of the left and right hemispheres).
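The cross-correlation and clustering steps can be illustrated with a simplified sketch. It correlates each voxel’s time series with an ON-OFF boxcar reference, keeps voxels whose correlation exceeds 0.40 (the threshold reported above), and then discards suprathreshold voxels that do not belong to a contiguous cluster of a minimum size. This is not AFNI’s implementation: the function names, the single-slice clustering with 4-connectivity, the three-volumes-per-block reference, the synthetic data, and the minimum cluster size of three voxels are all assumptions chosen for illustration, whereas in the study the cluster parameters were set by Monte Carlo analysis.

```python
import numpy as np


def boxcar_reference(n_volumes, volumes_per_block):
    """ON-OFF reference: blocks of OFF (0) and ON (1) volumes, starting with OFF."""
    idx = np.arange(n_volumes)
    return ((idx // volumes_per_block) % 2).astype(float)


def correlation_map(data, reference):
    """Pearson correlation of every voxel time series (last axis) with the reference."""
    x = data - data.mean(axis=-1, keepdims=True)
    r = reference - reference.mean()
    num = (x * r).sum(axis=-1)
    den = np.sqrt((x ** 2).sum(axis=-1) * (r ** 2).sum())
    with np.errstate(invalid="ignore", divide="ignore"):
        return np.where(den > 0, num / den, 0.0)  # flat voxels get r = 0


def clusters_2d(mask):
    """Group True voxels of a 2D mask into 4-connected clusters (lists of (row, col))."""
    seen = np.zeros(mask.shape, dtype=bool)
    clusters = []
    n_rows, n_cols = mask.shape
    for i in range(n_rows):
        for j in range(n_cols):
            if not mask[i, j] or seen[i, j]:
                continue
            stack, members = [(i, j)], []
            seen[i, j] = True
            while stack:  # flood fill from the seed voxel
                a, b = stack.pop()
                members.append((a, b))
                for da, db in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    na, nb = a + da, b + db
                    if 0 <= na < n_rows and 0 <= nb < n_cols and mask[na, nb] and not seen[na, nb]:
                        seen[na, nb] = True
                        stack.append((na, nb))
            clusters.append(members)
    return clusters


def active_voxels(data, reference, r_threshold=0.40, min_cluster=3):
    """Keep voxels with r > r_threshold that lie in clusters of at least min_cluster voxels."""
    corr = correlation_map(data, reference)
    keep = np.zeros(corr.shape, dtype=bool)
    for members in clusters_2d(corr > r_threshold):
        if len(members) >= min_cluster:
            for a, b in members:
                keep[a, b] = True
    return corr, keep


# Synthetic 8 x 8 slice with 34 volumes: a 2 x 2 responsive patch plus one isolated responsive voxel.
rng = np.random.default_rng(0)
ref = boxcar_reference(34, volumes_per_block=3)
data = np.full((8, 8, 34), 100.0)  # flat background with no stimulus-related signal
for a, b in [(2, 2), (2, 3), (3, 2), (3, 3), (7, 7)]:
    data[a, b] += 2.0 * ref + rng.normal(0.0, 0.5, 34)
corr, keep = active_voxels(data, ref)
print(int(keep.sum()))
```

In this toy example the isolated voxel passes the correlation threshold but is rejected by the cluster criterion, which is the purpose of combining the two steps: a single voxel exceeding the threshold by chance is less credible than a contiguous group.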

Effects of Acoustic Stimulus Parameters on Cortical Activation

Acoustic stimuli that are used to evoke either neural or vascular responses can be described in terms of frequency, level or intensity, and time. There can be complex interactions among stimulus factors themselves, for example, the duration of a stimulus is closely related to its frequency and presentation rate. Changes in stimulus parameters are known to influence electrophysiologic responses. For example, an increase in stimulus intensity typically results in an increase in the magnitude of the electrophysiologic response. Responses of the central auditory system also are affected by the listening condition, such as whether the stimuli are presented monaurally or binaurally. In addition, properties of the stimulus can interact with subject characteristics, such as age and auditory pathology. The effects of acoustic stimulus properties on auditory cortical activation using neuroimaging techniques have not been thoroughly explored. Likewise, the optimal properties of acoustic stimuli to evoke spatially specific responses are not fully known.

Stimulus Frequency

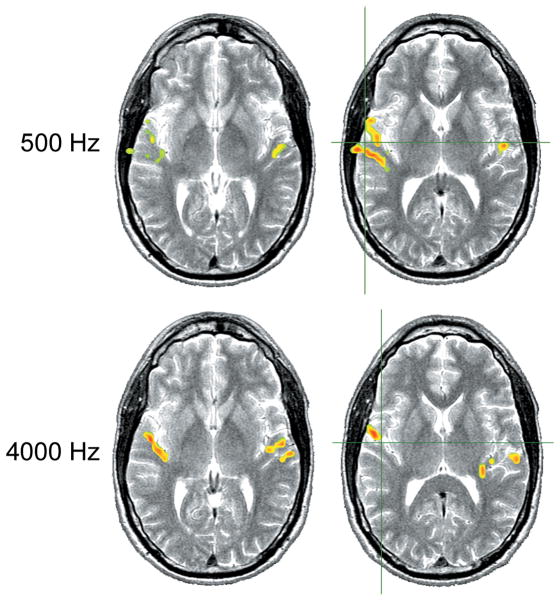

The auditory system, from the basilar membrane of the cochlea to the auditory cortex, is tonotopically organized. Neurons that are most sensitive to similar frequencies tend to be located near each other, so that there is an orderly spatial representation of neurons with varying best frequencies throughout the auditory pathways. Human hemodynamic responses to binaurally presented 500 and 4000 Hz tones at 100 dB SPL showed that high-frequency stimuli were more effective than low-frequency stimuli in activating centers in more frontal and medial locations within the temporal lobe (Bilecen et al., 1998). For both high- and low-frequency stimulation, activation was also reported to be greater in the left than in the right hemisphere. A comparison of responses to 1000 and 4000 Hz tones presented to the right ear resulted in greater activation laterally in the transverse temporal gyrus for the 1000 Hz stimulus, whereas the 4000 Hz stimulus activated a more medial location (Strainer et al., 1997). These findings are generally consistent with earlier reports in humans, such as those of Pantev et al. (1995), who used MEG to compare responses to 500, 1000, and 4000 Hz at 60 dB nHL (normalized hearing level), and those of Lauter et al. (1985) and Lockwood et al. (1999b), who used PET to compare responses to 500 and 4000 Hz tone bursts. Figure 2 compares fMRI activation in response to low- and high-frequency stimuli in one of our normal-hearing subjects.

Figure 2.

Each row of axial slices displays fMRI activation for a pure-tone (1.5% warble, 1.5 Hz pulsed) at 80 dB HL to the left ear at two locations through the auditory cortex overlaid onto a T2 weighted MR image. Top row shows responses for a 500 Hz stimulus and bottom row for a 4000 Hz stimulus. Center of activation for 500 Hz is more lateral, center of activation for 4000 Hz is more medial along the transverse temporal gyrus. Axial slices shown in radiological orientation (right hemisphere to the left, left hemisphere to the right). The green cross-hairs approximate the center of mass for the response on the right hemisphere.

A microelectrode placed in the right Heschl’s gyrus of a patient with epilepsy provided a unique opportunity to record single-unit data from a human, using tones of 24 different frequencies presented monaurally to the left ear at 75 dB SPL (Howard et al., 1996). The best-frequency responses of cortical units indicated that units with higher best frequencies (e.g., 3360 Hz) were located more posterior-medially and those with lower best frequencies (e.g., 1489 Hz) more anterior-laterally. (Although these auditory cortex units responded in a frequency-specific manner, complex temporal processing was occurring in parallel.)

A neuroimaging study in humans by Talavage and colleagues (2000) identified multiple frequency response regions in the auditory cortex. Six subjects were stimulated binaurally with pairs of lower-frequency (i.e., below 660 Hz) and higher-frequency (i.e., above 2490 Hz) narrowband stimuli. The members of each pair were separated by two octaves so that their cortical representations would be approximately 6 mm apart and therefore distinguishable with neuroimaging methods. Most stimuli were presented 35 dB above behavioral threshold, which was determined for each subject in the scanner but in the absence of scanner noise. (The behavioral thresholds obtained in the scanner were not reported.) Eight frequency-dependent response regions were identified on the superior temporal lobe, four of which responded more strongly to high-frequency signals and four to low-frequency signals. In an earlier study by the same researchers (Talavage et al., 1997) that used frequency sweeps as stimuli (i.e., the center frequency of a narrowband noise swept from low to high or from high to low), the progression of cortical activation complemented the frequency specificity found in the later study (Talavage et al., 2000) by connecting seven of the eight identified frequency response regions. These studies suggest that multiple tonotopic activation patterns exist across the human auditory cortex, similar to those documented in animal models (Rauschecker et al., 1997; Reale and Imig, 1980).
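Because frequency separations in these tonotopy studies are described in octaves, they are easiest to compare on a logarithmic scale: the number of octaves between two frequencies is log2 of their ratio, so a two-octave separation corresponds to a fourfold frequency ratio. The short sketch below applies this arithmetic to frequencies mentioned in this section; the function names and the 600 Hz example are ours, chosen only for illustration.

```python
import math


def octaves_between(f1_hz, f2_hz):
    """Octave distance between two frequencies (one octave = a doubling of frequency)."""
    return abs(math.log2(f2_hz / f1_hz))


def octaves_above(f_hz, n_octaves):
    """Frequency lying n_octaves above f_hz."""
    return f_hz * 2 ** n_octaves


print(octaves_between(500, 4000))             # 500 vs 4000 Hz tones
print(octaves_between(1000, 4000))            # 1000 vs 4000 Hz tones
print(round(octaves_between(1489, 3360), 2))  # best frequencies reported by Howard et al. (1996)
print(octaves_above(600, 2))                  # hypothetical two-octave pair starting at 600 Hz
```

On this scale, the 500 and 4000 Hz tones used in several of the studies above are three octaves apart, and the 1000 and 4000 Hz tones two octaves apart.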

Little is known about the impact that hearing loss has on tonotopic organization in the human auditory cortex. Animal data indicate that tonotopic organization is disturbed in the presence of hearing impairment (Harrison et al., 1991; Kaas et al., 1983; Robertson and Irvine, 1989). Restricted cochlear damage results in significant reorganization of the representation of frequency in the auditory cortex and an expansion of frequency representation into the regions located near the deprived area (Rajan et al., 1993; Robertson and Irvine, 1989). Although peripheral damage can alter the cortical spatial representation of frequency in animals, corresponding studies in human auditory cortex have not been conducted. At this time, the functional significance of tonotopic organization is not completely understood.

Stimulus Level

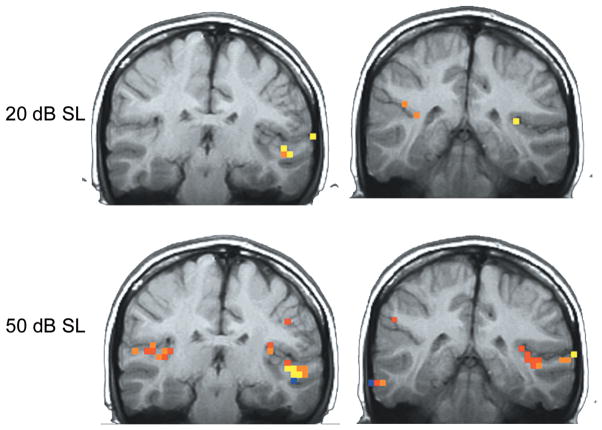

The effects of stimulus level (intensity) on cortical activation measured with neuroimaging have been investigated in a few studies, with variable results. Jäncke et al. (1998) used pure tones and consonant-vowel-consonant speech stimuli presented binaurally at 75, 85, and 95 dB SPL. The results suggested that, for speech stimuli, activation in Brodmann’s area 22 was greater in the left than in the right hemisphere at the higher levels of 85 and 95 dB SPL compared with 75 dB SPL. Strainer et al. (1997) evaluated two intensity levels, 20 and 50 dB SL (sensation level), and showed an increase in the volume of activation at the higher level when imaging the primary auditory cortex. These studies demonstrate an increase in the cortical response with increasing stimulus level (see Figure 3), although the specifics of the loudness growth function have not been fully addressed.

Figure 3.

fMRI activation for two stimulus levels presented above auditory threshold for a 1 kHz pure tone stimulus. The top row shows responses for 20 dB above threshold at two coronal locations through the cortex, whereas the bottom row demonstrates responses at a sound level of 50 dB above threshold. Note the larger area of activation and the higher (red) correlation magnitude of the response at the higher stimulus level. Adapted with permission from Lasota et al., 2003.

In a more extensive investigation of the effects of signal intensity, Hart et al. (2002) presented a 300 Hz tone monaurally to the left ear at 10 levels between 42 and 96 dB SPL in 6 dB steps. Three regions were analyzed: a primary area on Heschl’s gyrus and two non-primary areas, one lateral to Heschl’s gyrus and one in the posterior auditory cortex (planum temporale). As signal intensity increased, there was a non-linear increase in the extent and magnitude of cortical activation; Heschl’s gyrus was more sensitive to increases in level than the two non-primary areas. In a 2003 follow-up study, Hart and colleagues examined a similar intensity range (i.e., 42–96 dB SPL) in Heschl’s gyrus using a low-frequency (300 Hz) and a high-frequency (4750 Hz) tone presented monaurally to the left ear. Analysis of the number of activated voxels suggested that activation grew steadily across levels for the 4750 Hz stimulus, whereas the 300 Hz stimulus showed smaller changes below 66 dB SPL followed by a sharp increase up to the highest intensity tested. This difference in the growth of activation was less pronounced when the mean percentage signal change was analyzed; in that case, the difference in growth functions between frequencies was significant only at the highest intensity, 96 dB SPL. Additional studies are needed to determine the effects of intensity relative to frequency on the hemodynamic response in normal-hearing individuals. This information is required before fMRI can be applied in the hearing-impaired population, in which detection thresholds and loudness growth vary across frequencies and subjects as well as within subjects (i.e., between ears).
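The level manipulations described above follow standard decibel arithmetic. The sketch below generates the 42–96 dB SPL series in 6 dB steps, shows that a 6 dB step corresponds to roughly a doubling of sound pressure (level in dB = 20 log10 of the pressure ratio), and expresses a presentation level as sensation level (dB SL), i.e., relative to an individual’s threshold for the same stimulus. The function names and the 15 dB threshold in the last line are hypothetical.

```python
def level_series(start_db, stop_db, step_db):
    """Levels from start_db to stop_db inclusive in fixed steps."""
    n = int(round((stop_db - start_db) / step_db)) + 1
    return [start_db + k * step_db for k in range(n)]


def pressure_ratio(delta_db):
    """Sound-pressure ratio for a level difference in dB (L = 20 * log10(p / p_ref))."""
    return 10 ** (delta_db / 20)


def sensation_level(level_db, threshold_db):
    """Level relative to an individual's threshold for the same stimulus (dB SL)."""
    return level_db - threshold_db


levels = level_series(42, 96, 6)
print(len(levels), levels)                   # level series used by Hart et al. (2002)
print(round(pressure_ratio(6), 3))           # one 6 dB step
print(round(pressure_ratio(96 - 42)))        # the full 54 dB range
print(sensation_level(65, threshold_db=15))  # hypothetical listener with a 15 dB threshold
```

The last line illustrates why sensation level matters for hearing-impaired listeners: the same physical level yields a different dB SL for each ear whose threshold differs.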

Stimulus Rate

Given that the hemodynamic response stems from neural activity, and that every neural event, such as an action potential or a postsynaptic membrane potential, is followed by a refractory period during which the unit may not respond, stimulus rate could influence the magnitude of the BOLD response. In addition, it is uncertain whether measurements made with different techniques would show the same dependence on stimulus rate, because the physiologic responses on which the techniques are based differ. For example, in an fMRI study using presentation rates of 0.17 to 2.5 Hz for speech syllables, the percent signal change in activated areas increased with increasing rate, and this increase was monotonic and nonlinear (Binder et al., 1994). Similarly, a single-subject case study that evaluated the effects of presentation rate using fMRI also found the response to be nonlinear (Rees et al., 1997). The same study assessed the cerebral blood flow response to the same stimuli using PET and, in contrast, found a linear response. The differing results between fMRI and PET suggest a more complex relationship among neural activity, cerebral blood flow, and changes in oxygenation. Methodological differences between the two studies could also account for some of the discrepancy, such as passive listening to nouns by a single subject (Rees et al., 1997) compared with an active phoneme discrimination task performed by five subjects (Binder et al., 1994).
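The terms “linear” and “nonlinear” here describe how the response scales with the amount of stimulation. As a minimal sketch, assuming a generic linear time-invariant model with a hypothetical gamma-shaped hemodynamic response function (HRF) normalized to unit area (not the analysis used by Binder et al. or Rees et al.), the predicted response is the stimulus train convolved with the HRF. Its integrated amplitude then grows in exact proportion to the number of stimuli per unit time; a measured response that departs from this proportionality is what the studies above call nonlinear. The HRF shape, the 60-second window, and all function names are assumptions.

```python
import numpy as np


def gamma_hrf(dt=0.1, length_s=30.0, shape=6.0, scale=1.0):
    """Hypothetical gamma-shaped hemodynamic response, normalized to unit area (sum)."""
    t = np.arange(0.0, length_s, dt)
    h = t ** (shape - 1.0) * np.exp(-t / scale)
    return h / h.sum()


def impulse_train(rate_hz, duration_s=60.0, dt=0.1):
    """Unit impulse at each stimulus onset for a fixed presentation rate."""
    n = int(round(duration_s / dt))
    stim = np.zeros(n)
    onsets = np.arange(0.0, duration_s, 1.0 / rate_hz)
    idx = np.minimum(np.round(onsets / dt).astype(int), n - 1)
    stim[idx] = 1.0
    return stim


def linear_prediction(rate_hz, duration_s=60.0, dt=0.1):
    """Linear time-invariant prediction: stimulus train convolved with the HRF."""
    return np.convolve(impulse_train(rate_hz, duration_s, dt), gamma_hrf(dt))


# Rates spanning the range used by Binder et al. (1994)
for rate in (0.17, 0.5, 1.0, 2.5):
    n_stim = int(impulse_train(rate).sum())
    total = linear_prediction(rate).sum()
    print(f"{rate:4.2f} Hz: {n_stim:3d} stimuli, integrated response {total:7.2f}")
```

Under this model, refractory effects or saturation of the vascular response would appear as a measured integrated response that falls below the count of stimuli at the faster rates.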

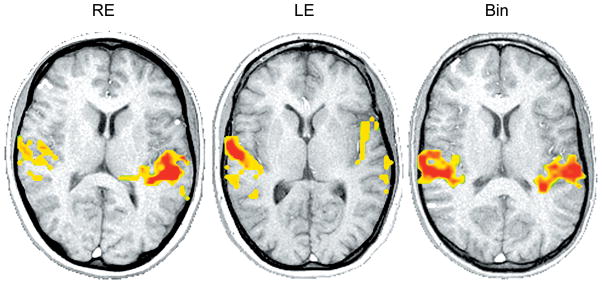

Monaural Compared to Binaural Stimulation

In the auditory system, the pathway from each ear to the contralateral cortical hemisphere contains more nerve fibers than the pathway from each ear to the ipsilateral hemisphere. Evoked responses to monaural stimulation are stronger in the contralateral than in the ipsilateral hemisphere (Wolpaw and Penry, 1977). With binaural stimulation, however, EEG and MEG recordings have shown greater responses in both hemispheres and more bilateral cortical activation patterns (Loveless et al., 1994). Similar findings have been reported for fMRI hemodynamic responses when tonal and speech (consonant-vowel syllable) stimuli were presented in monaural and binaural conditions (Jäncke et al., 2002; Scheffler et al., 1998). That is, activation was more strongly contralateral for monaural than for binaural stimulation, and the strongest responses were evoked by binaural presentation. (An example of the effects of monaural and binaural stimulation using fMRI in one of our normal-hearing subjects is shown in Figure 4.) Therefore, it is imperative that the listening condition (monaural or binaural) be taken into consideration when interpreting activation patterns in the left and right cortical hemispheres.
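One widely used way to summarize such left-right differences is a laterality index, LI = (L − R) / (L + R), computed from a measure of activation in each hemisphere such as the number of activated voxels; it is not necessarily the measure used in the studies cited here. Because monaural responses are best described relative to the stimulated ear, the sketch below also re-signs the index so that positive values mean contralateral dominance. The function names and voxel counts are hypothetical.

```python
def laterality_index(left, right):
    """LI = (L - R) / (L + R): +1 = left only, -1 = right only, 0 = symmetric."""
    total = left + right
    if total <= 0:
        raise ValueError("no activation in either hemisphere")
    return (left - right) / total


def contralaterality_index(left, right, ear):
    """LI re-signed relative to the stimulated ear: positive = contralateral dominance."""
    li = laterality_index(left, right)
    if ear == "right":  # the left hemisphere is contralateral to the right ear
        return li
    if ear == "left":   # the right hemisphere is contralateral to the left ear
        return -li
    raise ValueError("ear must be 'left' or 'right'")


# Hypothetical activated-voxel counts (left hemisphere, right hemisphere)
print(contralaterality_index(40, 120, ear="left"))  # monaural left-ear stimulation
print(round(laterality_index(100, 96), 3))          # binaural stimulation
```

On this index, the pattern described in the Abstract for unilateral hearing loss, with less contralateral and more ipsilateral activity, moves the value toward zero: changing the hypothetical left-ear counts from (40, 120) to (60, 100) lowers the contralaterality index from 0.5 to 0.25.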

Figure 4.

fMRI activations for the speech stimulus /ba/ presented in the right ear (RE), left ear (LE), and binaurally (Bin) at 80 dB HL in normal-hearing subjects. Cortical activation of the transverse temporal gyrus and planum temporale is shown for each slice. Axial slices displayed in radiological orientation (right hemisphere to the left, left hemisphere to the right). Note the more contralateral activation for the monaural presentations and the nearly symmetric activation in response to the binaural stimulus.

Stimuli Presented in Quiet Compared to Noise

In animal studies, single auditory nerve fibers (Kiang, 1965) and cochlear nucleus units (Burkard and Palmer, 1997) show a decrease in response magnitude to click stimuli in the presence of broadband noise. In humans, electrophysiologic studies of click-evoked brainstem responses in noise indicate that response magnitude diminishes and latency increases as the noise level is increased (Burkard and Hecox, 1983). In a study of children diagnosed with learning problems, brainstem and cortical neurophysiologic responses were abnormal compared with those of normal controls, but only when the stimuli were presented in background noise (Cunningham et al., 2001; Kraus, 2001). In neuroimaging studies, attention has focused on the effects of scanner background noise rather than on the effects of noise deliberately presented through headphones as a masker. Because background noise challenges the auditory system, its presence may change the functional asymmetry of speech and sound processing.

In summary, patterns of cortical activation evoked with auditory signals are dependent on the stimulus parameters (e.g., frequency, stimulus level, rate), listening condition (e.g., monaural or binaural), and complexity (e.g., tonal, speech, presence of background noise). In order to compare results of studies that have used different methodologies (i.e., electrophysiology, MEG, PET, or fMRI), it will be important to fully understand the neural mechanisms at play with each technique. Careful consideration of the stimulus variables associated with each experimental design is necessary for comparison and interpretation of data across studies using different methods. The establishment of normative data for an identified set of stimulus parameters, recording procedures, and data analyses for a given technique would provide an avenue for comparison of outcomes.

Hemispheric Asymmetries

Hemispheric differences are evident in the normal processing of speech sounds (Phillips and Farmer, 1990). The related term laterality refers to the dominance of one hemisphere for a particular function. Lateralization of auditory language function to the left hemisphere was reported in early studies (Geschwind, 1972; Geschwind and Levitsky, 1968; Kimura, 1961). However, more recent studies suggest that lateralization may be related more to the presence of rapidly changing acoustic cues than to whether an acoustic signal is speech or non-speech (Phillips and Farmer, 1990; Tallal et al., 1994; Zatorre et al., 1992). Even in infancy, evoked potentials elicited with strings of syllables are significantly larger over the left hemisphere than over the right, suggesting a possible functional asymmetry favoring the left hemisphere for processing short syllables (Dehaene-Lambertz and Dehaene, 1994). In dichotic listening tasks, in which a different auditory stimulus is presented to each ear at the same time, subjects recognize stimuli presented to the right ear more accurately than those presented to the left ear. This “right-ear advantage” supported the theory that the left hemisphere, contralateral to the right ear, was specialized for language (Kimura, 1967).

Current studies support the notion that the left auditory cortex responds to temporal changes, whereas the right auditory cortex responds to frequency or spectral content (Liégeois-Chauvel et al., 1999, 2001; Zatorre et al., 2002). Even when non-linguistic stimuli (i.e., pure tones with frequency glides of either short or long duration) were presented to normal-hearing subjects, PET results demonstrated blood flow changes in left cortical areas and the right cerebellum, supporting left-hemisphere processing of acoustic transients (Johnsrude et al., 1997). In a study by Belin and colleagues (1998), PET data indicated that the right auditory cortex responded only to slow formant transitions of 200 ms, whereas the left auditory cortex responded to transitions of either 40 or 200 ms. These data suggested that the left cortex had an enhanced ability to respond to fast formant transitions. Taken together, these studies challenge the notion that the left hemisphere is specialized for “speech” and the right hemisphere for “music.” Instead, the data indicate that fast temporal cues, albeit critical for speech processing, are processed best in the left auditory cortex, and that tonal or spectral information is processed more efficiently in the right auditory cortex (Zatorre et al., 2002).

At the same time, studies of linguistic processing in tonal languages (Gandour et al., 1998, 2004) and of left-hemisphere processing of visual sign languages by individuals with profound hearing impairment (Finney et al., 2001; Petitto et al., 2000) suggest that the left hemisphere may have a specialized role in processing language and communication. When subjects listened to a vowel that existed in their native language, larger electrophysiologic responses were recorded over the left than over the right hemisphere (Näätänen et al., 1997). In contrast, when subjects were presented with a non-prototype of the vowel, responses were similar in magnitude over the two hemispheres. Thus, the linguistic or acoustic nature of the stimulus may dictate the involvement of each cortical hemisphere. In a recent review, Zatorre and colleagues (2002) proposed that hemispheric asymmetries may exist to optimize both temporal and spectral processing during everyday listening and under challenging communication demands. With two systems, one in each cortical hemisphere, temporal and spectral processing demands can be shared between them according to the listening environment.

Anatomical Asymmetries in Humans

The left and right human auditory cortices differ anatomically. For example, Penhune et al. (1996) studied the location and extent of the primary auditory cortex in humans and found that the left primary auditory cortex was larger than the right and that white matter volume was greater in the left Heschl’s gyrus than in the right. Differences in the cellular organization of the two hemispheres have also been observed. Compared with the right hemisphere, the left auditory cortex has larger layer-III pyramidal cells and wider cell columns, and it makes contact with a greater number of afferent fibers (Hutsler and Gazzaniga, 1996; Seldon, 1981a, b, c).

Planum temporale, posterior to Heschl’s gyrus, is thought to be a key site for communication processing in humans. Measurements of its surface area (Geschwind and Levitsky, 1968) and cytoarchitectonic studies (Galaburda et al., 1978) indicate that planum temporale is larger on the left than on the right. More recent findings suggest that left and right planum temporale also differ in white matter volume and in the extent of axonal myelination (Anderson et al., 1999). Because myelination and axon number are greater in the left than in the right planum temporale and auditory cortex, the left hemisphere may support faster transmission and better temporal resolution, which are ideal for processing rapidly changing speech cues (Hutsler and Galuske, 2003; Zatorre et al., 2002).

The corpus callosum, the largest fiber tract in the brain and the main pathway connecting the left and right hemispheres, is thought to contribute to hemispheric asymmetries through either inhibitory or excitatory functions (for review, see Bloom and Hynd, 2005). Enhancement of the contralateral hemisphere and suppression of the ipsilateral hemisphere via the corpus callosum may result in dominance of one hemisphere for a particular stimulus or function. At this time, the role of interhemispheric connections and the transfer of auditory information between the hemispheres remain uncertain.

Anatomical Asymmetries in Animals

Although anatomical hemispheric asymmetries were originally thought to exist only in humans, subsequent studies support asymmetries in animals as well. For example, Gannon et al. (1998) showed a leftward asymmetry of planum temporale in chimpanzees: 94% of the 18 subjects (17 of 18) had a larger left than right planum temporale. The significance of this finding for human evolution and for the role of planum temporale in communication is not entirely clear. Further evidence of laterality in nonhuman primates was provided by Petersen and colleagues (1978), who noted a right-ear advantage in Japanese macaques when they analyzed communicatively relevant acoustic dimensions of their calls (i.e., peak fundamental frequency). In a study of avian song perception (Cynx et al., 1992), hemispheric dominance was assessed by lesioning the ipsilateral auditory nucleus of the thalamus, which interrupted the input to either the right or the left hemisphere. In a behavioral song discrimination task, the birds demonstrated a task-specific left-side dominance, suggesting that the right and left hemispheres process sounds differently. In mice (Ehret, 1987), the left hemisphere of mothers showed preferential recognition of the communication calls of their pups. These results support a right-ear, and therefore left-hemisphere, advantage for processing communication sounds in mice.

Subcortical Asymmetries

There is evidence that asymmetric patterns of activation also occur at a subcortical level. King et al. (1999) studied differences in neural representation by recording from the right and left medial geniculate bodies of anesthetized guinea pigs. Stimuli were 2000-Hz tone bursts, clicks, and a speech stimulus (synthesized /da/), presented at 85 dB SPL to the right ear, the left ear, and both ears. Onset response amplitudes were larger in the left than in the right auditory thalamus in 10 of 12 animals, suggesting some degree of asymmetry at a subcortical level.

Asymmetry at the level of the cochlea was suggested in a study of infants using either transient-evoked otoacoustic emissions (TEOAEs, elicited with clicks) or distortion product otoacoustic emissions (DPOAEs, elicited with continuous tones) (Sininger and Cone-Wesson, 2004). The effect of ear of stimulation depended significantly on OAE type: click-evoked TEOAEs were larger in right ears, whereas tonal DPOAEs were larger in left ears. This pattern parallels the cortical specialization described above, since the right ear, which projects mainly to the left hemisphere, favored the transient stimulus and the left ear favored the tonal stimulus. Because otoacoustic emissions reflect the activity of the outer hair cells, these findings suggest that some differentiation in acoustic stimulus processing may already occur at the periphery, which could facilitate hemispheric sound processing at higher levels.

Effects of Stimulus Type on Asymmetrical Activation Patterns

Asymmetric response patterns are also affected by the type of stimulus (e.g., tones, clicks, speech). For example, the degree of asymmetry in evoked responses recorded from the medial geniculate bodies of guinea pigs was significantly different for a synthesized speech stimulus /da/ than for a 2000-Hz tone or a click (King et al., 1999). In this study, asymmetry appeared to fall along a continuum: it was greatest for the speech stimulus, smaller for the click, and absent for the tone. Using a novel continuum of auditory signals in humans, Rinne et al. (1999) tested the hypothesis that activation would shift from the right to the left hemisphere as stimuli changed from non-speech to speech. Electrophysiologic responses showed that activation was greater in the right hemisphere for a tonal stimulus and shifted to the left hemisphere as the stimuli became more phonetic. Taken together, these results indicate that asymmetric patterns are stimulus dependent and that the right and left hemispheres have different roles in processing acoustically complex signals.

Hemispheric asymmetries have also been reported to vary with stimulus intensity. Hart et al. (2002) reported that for monaural presentation of tones to the left ear at low stimulus levels (i.e., below 72 dB SPL), the extent of activation in right and left Heschl’s gyrus (HG) was similar. At higher levels, such as 92 and 96 dB SPL, activation in the contralateral HG was significantly greater than in the ipsilateral HG. This result was not observed for two other regions studied: an area lateral to HG and planum temporale, in the posterior part of the auditory cortex. The authors noted that these findings suggest that HG may play a greater role in processing sound level than the non-primary areas and that intensity may affect hemispheric asymmetric response patterns.
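
The dB SPL values above are logarithmic, so the gap between the low and high levels is larger than the numbers suggest. As a minimal sketch based on the standard acoustic definition (not on data from Hart et al., 2002), sound pressure level relates to RMS pressure p by L = 20 log10(p / p_ref), with p_ref = 20 µPa. The helper names `spl_to_pascals` and `pressure_ratio` are our own. The formula does not apply to dB HL values, which are referenced to normal audiometric thresholds rather than to a fixed pressure.

```python
import math

P_REF_PA = 20e-6  # reference pressure for dB SPL in air: 20 micropascals


def spl_to_pascals(level_db_spl: float) -> float:
    """Convert a sound pressure level (dB SPL) to RMS pressure in pascals."""
    return P_REF_PA * 10 ** (level_db_spl / 20)


def pressure_ratio(level_a_db: float, level_b_db: float) -> float:
    """How many times larger the RMS pressure at level_a is than at level_b."""
    return 10 ** ((level_a_db - level_b_db) / 20)


if __name__ == "__main__":
    # Levels mentioned in the text; the conversions follow from the dB definition alone.
    for level in (72, 92, 96):
        print(f"{level} dB SPL = {spl_to_pascals(level):.3f} Pa")
    print(f"92 vs 72 dB SPL: {pressure_ratio(92, 72):.1f}x the pressure")
    print(f"log10 check: {math.log10(pressure_ratio(92, 72)):.1f}")
```

By this definition, a 20-dB step (72 to 92 dB SPL) is a tenfold increase in pressure, and going from 72 to 96 dB SPL is roughly a 16-fold increase.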

The effects of background noise on hemispheric asymmetry were assessed in human subjects using the magnetic counterpart of the mismatch negativity response (MMNm) (Shtyrov et al., 1998). In an oddball paradigm, the speech stimuli /pa/ (standard stimulus) and /ka/ (deviant stimulus) were presented binaurally at 60 dB above threshold to normal hearing subjects. The MMNm is obtained by subtracting the responses to the standard stimuli from those elicited by the deviant stimuli. Three stimulus conditions were evaluated: speech in quiet and speech in white noise at two signal-to-noise ratios (+15 and +10 dB). MMNm peak amplitudes and dipole moments indicated that responses were larger in the left hemisphere in the quiet condition. In the noise conditions, the hemispheric asymmetry decreased and right-hemisphere responses increased. These results suggest that noise may disrupt the typical leftward asymmetry by redistributing activity toward the right hemisphere.
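
The subtraction step that defines the MMNm, and the meaning of the two noise conditions, can be sketched in a few lines. This is a minimal illustration, assuming averaged responses to the standard and deviant stimuli sampled on a common time axis; the function names, the toy waveforms, and the 100 to 250 ms search window are hypothetical choices, not parameters or data from Shtyrov et al. (1998). The peak is taken as the most negative point, as is conventional for the electric MMN; MEG studies often quantify the MMNm by peak field strength or dipole moment instead.

```python
from typing import List, Sequence, Tuple


def difference_wave(deviant: Sequence[float], standard: Sequence[float]) -> List[float]:
    """Deviant-minus-standard difference wave, computed sample by sample."""
    if len(deviant) != len(standard):
        raise ValueError("waveforms must have the same number of samples")
    return [d - s for d, s in zip(deviant, standard)]


def mismatch_peak(
    diff: Sequence[float],
    times_ms: Sequence[float],
    window_ms: Tuple[float, float] = (100.0, 250.0),  # hypothetical search window
) -> Tuple[float, float]:
    """Return (amplitude, latency) of the most negative point inside the window."""
    candidates = [(v, t) for v, t in zip(diff, times_ms) if window_ms[0] <= t <= window_ms[1]]
    if not candidates:
        raise ValueError("no samples fall inside the latency window")
    return min(candidates)  # smallest amplitude; ties resolved by earliest latency


def noise_rms_for_snr(signal_rms: float, snr_db: float) -> float:
    """RMS noise level giving the requested SNR (dB) for a given signal RMS."""
    return signal_rms / 10 ** (snr_db / 20)


if __name__ == "__main__":
    times = [0, 100, 150, 200, 300]         # toy time axis (ms), not real data
    deviant = [0.0, -1.0, -3.0, -2.0, 0.0]  # toy averaged response to the deviant
    standard = [0.0, 0.0, -1.0, 0.0, 0.0]   # toy averaged response to the standard
    diff = difference_wave(deviant, standard)
    print("difference wave:", diff)
    print("peak (amplitude, latency):", mismatch_peak(diff, times))
    for snr in (15, 10):
        print(f"+{snr} dB SNR: noise RMS = {noise_rms_for_snr(1.0, snr):.3f} x speech RMS")
```

A positive SNR means the speech is more intense than the noise, so the +10 dB condition uses more noise relative to the speech than the +15 dB condition.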

Effects of Unilateral Hearing Loss on Hemispheric Asymmetries

Animal studies indicate that unilateral hearing loss modifies asymmetric response patterns in the central auditory system (Kitzes, 1984; Reale et al., 1987). In humans, the amount of asymmetry has also been shown to differ between normal hearing (NH) and unilateral hearing loss (UHL) subjects in response to tone bursts measured with magnetoencephalography (Vasama and Makela, 1995), clicks measured with auditory evoked potentials (Ponton et al., 2001), and pulsed tonal stimuli measured with fMRI (Scheffler et al., 1998). In a study of click-evoked activity recorded ipsilateral and contralateral to the stimulated ear, subjects with profound unilateral hearing loss showed hemispheric differences that depended on which ear was affected (Khosla et al., 2003).

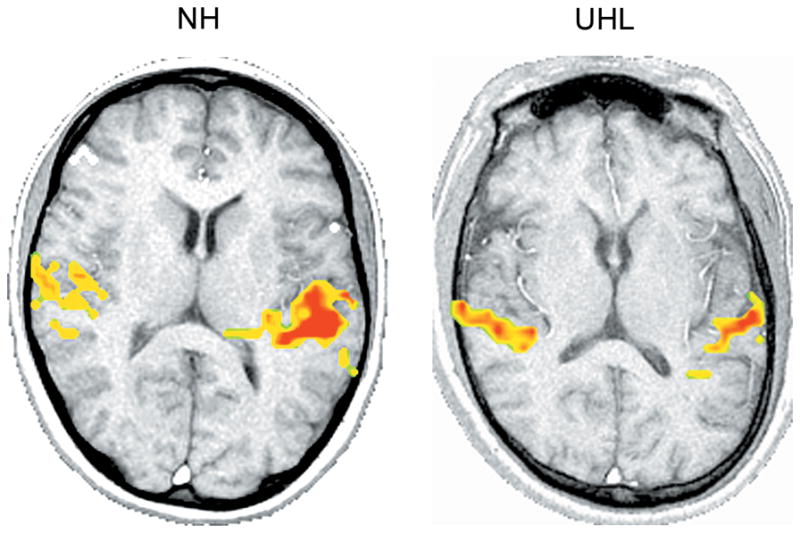

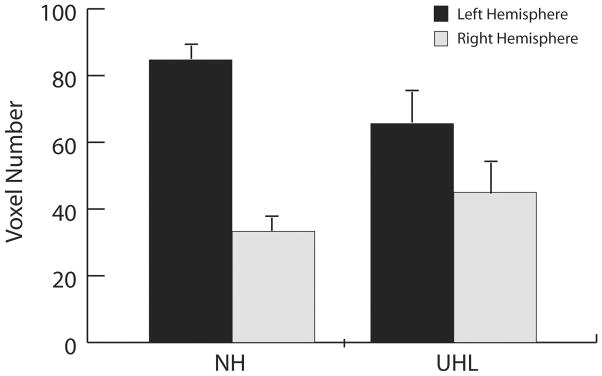

In a current study (Firszt et al., 2005), the effects of unilateral profound hearing loss on hemispheric activation patterns were investigated using speech stimuli. Normal hearing subjects (n = 9) and subjects with profound hearing loss in the left ear and normal hearing in the right ear (n = 7) were presented with the speech stimulus /ba/ in the right ear at 80 dB HL. Hemispheric activation was measured with fMRI on a 1.5-T scanner using a sparse temporal sampling method (Hall et al., 1999). In normal hearing subjects, stimulation of the right ear resulted in greater activation in the contralateral (left) hemisphere than in the ipsilateral (right) hemisphere. In the unilateral hearing loss subjects, compared with normal hearing subjects, activation decreased in the contralateral hemisphere and increased in the ipsilateral hemisphere. Examples from one normal hearing subject and one unilateral hearing loss subject are displayed in Figure 5, and group data are shown in Figure 6. Comparison of the contralateral and ipsilateral hemispheres indicates that cortical asymmetry in response to a speech stimulus is strong for the normal hearing subjects and notably reduced for the unilateral hearing loss subjects. For this group, the reduction in asymmetry results from both a decrease in the left (contralateral) hemisphere and an increase in the right (ipsilateral) hemisphere. These findings are consistent with previous reports in humans (Scheffler et al., 1998), and this study extends them to a speech stimulus. Further experiments are underway to explore the functional significance of changes in hemispheric asymmetry in individuals with unilateral profound hearing loss using complex stimuli. These directions should provide insight into the reorganization of the auditory system when sound deprivation occurs.

Figure 5.

Comparison of a normal hearing (NH) subject (left image) and a unilateral hearing loss (UHL) subject (right image). The speech stimulus /ba/ is presented at 80 dB HL to the right ear of both subjects. The UHL subject has normal hearing thresholds in the right ear and profound hearing loss in the left ear. Axial slices are shown in radiological orientation (right hemisphere on the left, left hemisphere on the right). The NH subject shows greater asymmetry between hemispheres than the UHL subject, whose activation is more balanced.

Figure 6.

Averaged hemispheric activation (number of activated voxels in the transverse temporal gyrus and planum temporale) in the left and right hemispheres of normal hearing (NH) and unilateral hearing loss (UHL) subjects for the speech stimulus /ba/ presented to the right ear at 80 dB HL. In NH subjects, stimulation results in greater activation in the contralateral (left) hemisphere than in the ipsilateral (right) hemisphere. In UHL subjects (right-ear stimulation, left-ear deafness), activation decreases in the contralateral hemisphere and increases in the ipsilateral hemisphere, resulting in less asymmetry than in NH subjects.
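
Figure 6 reports voxel counts for each hemisphere rather than a single asymmetry score. One common way to summarize such counts, offered here only as an illustration and not necessarily the measure used by Firszt et al. (2005), is a laterality index LI = (L − R)/(L + R), which ranges from +1 (activation entirely in the left hemisphere) through 0 (symmetric) to −1 (entirely in the right). Because the comparisons above are framed as contralateral versus ipsilateral, the sketch also defines a hypothetical contralaterality index (C − I)/(C + I) relative to the stimulated ear. The function names and the counts in the example are invented, not data from the study.

```python
from typing import Literal

Ear = Literal["left", "right"]


def laterality_index(left_voxels: float, right_voxels: float) -> float:
    """(L - R) / (L + R): +1 fully left-lateralized, -1 fully right, 0 symmetric."""
    total = left_voxels + right_voxels
    if left_voxels < 0 or right_voxels < 0 or total == 0:
        raise ValueError("voxel counts must be non-negative and not both zero")
    return (left_voxels - right_voxels) / total


def contralaterality_index(left_voxels: float, right_voxels: float, stimulated_ear: Ear) -> float:
    """(C - I) / (C + I), where C is the hemisphere opposite the stimulated ear."""
    if stimulated_ear not in ("left", "right"):
        raise ValueError("stimulated_ear must be 'left' or 'right'")
    li = laterality_index(left_voxels, right_voxels)
    # Right-ear stimulation: contralateral = left hemisphere, so the index equals LI.
    # Left-ear stimulation: contralateral = right hemisphere, so the sign flips.
    return li if stimulated_ear == "right" else -li


if __name__ == "__main__":
    # Invented counts for illustration only (not Figure 6 data).
    examples = {"more asymmetric": (300, 100), "more balanced": (200, 160)}
    for label, (left, right) in examples.items():
        ci = contralaterality_index(left, right, stimulated_ear="right")
        print(f"{label}: contralaterality index = {ci:+.2f}")
```

An index of this kind is scale-free, so groups with different overall amounts of activation can be compared; the trade-off is that it hides whether a change in asymmetry came from the contralateral hemisphere, the ipsilateral hemisphere, or both, which is why the raw counts in Figure 6 remain informative.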

Hemispheric Asymmetries in Pathology and Aging

Changes in hemispheric asymmetry may impair the ability to process fast acoustic transitions, such as those necessary for speech perception. In addition, some clinical populations, or individuals with certain characteristics, may be more vulnerable to such changes than others. Individuals with left-hemisphere cortical damage show speech perception difficulties (Auerbach et al., 1982). Electrical stimulation mapping studies in patients with epilepsy show left-hemisphere specialization for language (Ojemann, 1983). In children with learning problems, atypical hemispheric specialization has been reported both behaviorally and at the neural level (Dawson et al., 1989; Mattson et al., 1992). Neuroimaging studies have found a loss of the typical asymmetries between the left and right hemispheres in language-impaired populations, including individuals with dyslexia (Galaburda et al., 1994; Leonard et al., 1993).

An electrophysiologic study indicated age-related changes in cortical hemispheric patterns (Bellis et al., 2000). Synthetic speech syllables were used to elicit the P1-N1 response in children, young adults, and older adults (over 55 years of age). Stimuli were presented monaurally to the right ear at 75 dB SPL. The cortical response was asymmetric, favoring the left hemisphere, in the two younger groups, whereas it was symmetric in the older group, a change attributable to an increase in right-hemisphere activation. The older subjects also showed significantly poorer discrimination of rapid spectro-temporal changes in speech than the two younger groups, a finding consistent with many reports of speech perception difficulties in the elderly (Fitzgibbons and Gordon-Salant, 2001; Jerger et al., 1994).

Neuroimaging techniques have been used to study the physiologic mechanisms of tinnitus and have shown that tinnitus loudness is reflected in increases and decreases in cortical responses (Cacace et al., 1996; Giraud et al., 1999; Lockwood et al., 1999a) and that asymmetric patterns are disturbed (Melcher et al., 1999). In subjects with normal hearing and monaural (i.e., lateralized) tinnitus, responses in the inferior colliculi were more asymmetric than in control subjects (Levine et al., 1998; Melcher et al., 1999). Taken together with the results reviewed above, these findings suggest that atypical hemispheric patterns may have functional implications and that the loss of normal asymmetries (i.e., symmetric responses or exaggerated asymmetric responses) should be explored further. Neuroimaging tools may ultimately provide insight into the functional significance of cortical hemispheric differences in individuals with clinically significant impairments.

Effects of Plasticity and Training on Hemispheric Asymmetries

Auditory hemispheric patterns have been shown to change or reorganize with sound deprivation (e.g., unilateral hearing loss) and may therefore also change with sound experience or training specific to the auditory system. Behavioral studies indicate that auditory training improves speech recognition in individuals with hearing loss and is a critical component for the development of communication in children with substantial hearing impairment. If auditory training results in greater and more precise neural activity, changes in cortical activation may follow.

Few studies have recorded neurophysiologic changes before and after training of the auditory pathway. In normal hearing subjects, cortical electrophysiologic responses (i.e., the mismatch negativity response, or MMN) evoked with non-native speech syllables were initially symmetric but were larger over the left than over the right hemisphere after training (Tremblay et al., 1997). In children with learning problems who received training, some cortical responses improved in morphology and response areas shifted toward the left hemisphere (King et al., 2002).

A number of recent studies have used neuroimaging measures to examine training effects in other cortical regions, such as the visual cortex (Kourtzi et al., 2005; Sigman et al., 2005) and motor cortex (Nyberg et al., 2005; Puttemans et al., 2005). For example, Little et al. (2004) used fMRI to assess neural changes associated with category learning by comparing regions of activation before and after training. Early training produced behavioral improvements in accuracy and response time, as well as increases in the volume of activation in regions known to be involved in visual and spatial processing. As training progressed, activation decreased, suggesting that the time course of regional activation varies with the course of learning.

Additional neuroimaging experiments on the effects of auditory training and plasticity on hemispheric activation patterns in human subjects may provide further insight into how the auditory system changes with experience. If behavioral changes are supported by neural changes that produce more efficient connections within a specific auditory region, activation in that region may increase. Behavioral improvements may also manifest as the recruitment of additional regions, thereby redefining the activated region and its volume.

Conclusions

Knowledge about the structure and function of the human auditory cortex, including hemispheric asymmetry, has been advanced by various methods in auditory neuroscience, including recent neuroimaging techniques. Anatomic and physiologic studies in humans and animals provide evidence that asymmetries exist in both cortical and subcortical structures. Hemispheric asymmetries are affected by a number of variables, including stimulus type (e.g., tones compared with speech, speech in quiet compared with speech in noise), the presence of pathology (e.g., hearing loss, tinnitus), and subject characteristics (e.g., children with learning problems, dyslexia). Although the right and left hemispheres appear to differ in structure and function, and anatomic and functional asymmetries appear to be correlated, much remains to be learned about how speech sounds are represented differently in the two cerebral hemispheres. Neuroimaging techniques such as fMRI will accelerate our understanding of the human auditory cortex and of the relationship between its structure and function.

Acknowledgments

Grant source: National Institute on Deafness and Other Communication Disorders, grant number K23 DC05410.

Grant source: Radiological Society of North America Research & Education Fund Scholar’s Grant.

LITERATURE CITED

- Anderson B, Southern BD, Powers RE. Anatomic asymmetries of the posterior superior temporal lobes: a postmortem study. Neuropsychiatry Neuropsychol Behav Neurol. 1999;12:247–254.

- Auerbach SH, Allard T, Naeser M, Alexander MP, Albert ML. Pure word deafness. Analysis of a case with bilateral lesions and a defect at the prephonemic level. Brain. 1982;105:271–300. doi: 10.1093/brain/105.2.271.

- Bandettini PA, Jesmanowicz A, Van Kylen J, Birn RM, Hyde JS. Functional MRI of brain activation induced by scanner acoustic noise. Magn Reson Med. 1998;39:410–416. doi: 10.1002/mrm.1910390311.

- Belin P, Zilbovicius M, Crozier S, Thivard L, Fontaine A, Masure MC, Samson Y. Lateralization of speech and auditory temporal processing. J Cogn Neurosci. 1998;10:536–540. doi: 10.1162/089892998562834.

- Bellis TJ, Nicol T, Kraus N. Aging affects hemispheric asymmetry in the neural representation of speech sounds. J Neurosci. 2000;20:791–797. doi: 10.1523/JNEUROSCI.20-02-00791.2000.

- Bilecen D, Scheffler K, Schmid N, Tschopp K, Seelig J. Tonotopic organization of the human auditory cortex as detected by BOLD-FMRI. Hear Res. 1998;126:19–27. doi: 10.1016/s0378-5955(98)00139-7.

- Binder JR, Rao SM, Hammeke TA, Frost JA, Bandettini PA, Hyde JS. Effects of stimulus rate on signal response during functional magnetic resonance imaging of auditory cortex. Brain Res Cogn Brain Res. 1994;2:31–38. doi: 10.1016/0926-6410(94)90018-3.

- Bloom JS, Hynd GW. The role of the corpus callosum in interhemispheric transfer of information: excitation or inhibition? Neuropsychol Rev. 2005;15:59–71. doi: 10.1007/s11065-005-6252-y.

- Bonmassar G, Anami K, Ives J, Belliveau JW. Visual evoked potential (VEP) measured by simultaneous 64-channel EEG and 3T fMRI. Neuroreport. 1999;10:1893–1897. doi: 10.1097/00001756-199906230-00018.

- Brodmann K. Vergleichende Lokalisationslehre der Grosshirnrinde. Leipzig: JA Barth; 1909.

- Brugge JF. An overview of central auditory processing. In: Popper A, Fay R, editors. The mammalian auditory pathway: neurophysiology. New York: Springer-Verlag; 1992. pp. 1–33.

- Burkard R, Hecox K. The effect of broadband noise on the human brainstem auditory evoked response. I. Rate and intensity effects. J Acoust Soc Am. 1983;74:1204–1213. doi: 10.1121/1.390024.

- Burkard R, Palmer AR. Responses of chopper units in the ventral cochlear nucleus of the anaesthetised guinea pig to clicks-in-noise and click trains. Hear Res. 1997;110:234–250. doi: 10.1016/s0378-5955(97)00084-1.

- Cacace AT, Cousins J, Moonen CWT, Van Gelderen P, Parnes Lovely TJ. In vivo localization of phantom auditory percepts during functional magnetic resonance imaging of the human brain. In: Reich G, Vernon J, editors. Proceedings of the Fifth International Tinnitus Seminar. Portland: American Tinnitus Association; 1996. pp. 397–401.

- Celesia GG. Organization of auditory cortical areas in man. Brain. 1976;99:403–414. doi: 10.1093/brain/99.3.403.

- Clark RG. Neuroanatomy and neurophysiology. In: Clark RG, editor. Essentials of clinical neuroanatomy and neurophysiology. Philadelphia: Davis; 1975. pp. 86–90.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–73. doi: 10.1006/cbmr.1996.0014.

- Cunningham J, Nicol T, Zecker SG, Bradlow A, Kraus N. Neurobiologic responses to speech in noise in children with learning problems: deficits and strategies for improvement. Clin Neurophysiol. 2001;112:758–767. doi: 10.1016/s1388-2457(01)00465-5.

- Cynx J, Williams H, Nottebohm F. Hemispheric differences in avian song discrimination. Proc Natl Acad Sci U S A. 1992;89:1372–1375. doi: 10.1073/pnas.89.4.1372.

- Dawson G, Finley C, Phillips S, Lewy A. A comparison of hemispheric asymmetries in speech-related brain potentials of autistic and dysphasic children. Brain Lang. 1989;37:26–41. doi: 10.1016/0093-934x(89)90099-0.

- Dehaene-Lambertz G, Dehaene S. Speech and cerebral correlates of syllable discrimination in infants. Nature. 1994;370:292–295. doi: 10.1038/370292a0.

- Duvernoy HM. The human brain: surface, three-dimensional sectional anatomy with MRI, and blood supply. 2nd ed. Vienna: Springer; 1999. p. 22.

- Edmister WB, Talavage TM, Ledden PJ, Weisskoff RM. Improved auditory cortex imaging using clustered volume acquisitions. Hum Brain Mapp. 1999;7:89–97. doi: 10.1002/(SICI)1097-0193(1999)7:2<89::AID-HBM2>3.0.CO;2-N.

- Ehret G. Left hemisphere advantage in the mouse brain for recognizing ultrasonic communication calls. Nature. 1987;325:249–251. doi: 10.1038/325249a0.

- Finney E, Fine I, Dobkins K. Visual stimuli activate auditory cortex in the deaf. Nat Neurosci. 2001;4:1171–1173. doi: 10.1038/nn763.

- Firszt JB, Gaggl W, Runge-Samuelson C, Wackym PA, Ulmer JL, Prost RW, DeYoe EA. Asymmetric hemodynamic responses of the auditory cortex in normal hearing and unilateral hearing loss subjects. Assoc Res Otolaryngol Abstr. 2005;164:465.

- Fitzgibbons PJ, Gordon-Salant S. Aging and temporal discrimination in auditory sequences. J Acoust Soc Am. 2001;109:2955–2963. doi: 10.1121/1.1371760.

- Frackowiak RSJ, Lenzi GL, Jones T, Heather JD. Quantitative measurement of regional cerebral blood flow and oxygen metabolism using 15O and positron emission tomography: Theory, procedures and normal values. J Comput Assist Tomogr. 1980;4:730–736. doi: 10.1097/00004728-198012000-00001.

- Galaburda A, Menard MT, Rosen GD. Evidence for aberrant auditory anatomy in developmental dyslexia. Proc Natl Acad Sci USA. 1994;91:8010–8013. doi: 10.1073/pnas.91.17.8010.

- Galaburda A, Sanides F, Geschwind N. Human brain: Cytoarchitectonic left-right asymmetries in the temporal speech region. Arch Neurol. 1978;35:812–817. doi: 10.1001/archneur.1978.00500360036007.

- Galambos R, Davis H. The response of single auditory-nerve fibers to acoustic stimulation. J Neurophysiol. 1943;6:39–58.

- Gandour J, Tong Y, Wong D, Talavage T, Dzemidzic M, Xu Y, Li X, Lowe M. Hemispheric roles in the perception of speech prosody. NeuroImage. 2004;23:344–357. doi: 10.1016/j.neuroimage.2004.06.004.

- Gandour J, Wong D, Hutchins G. Pitch processing in the human brain is influenced by language experience. NeuroReport. 1998;9:2115–2119. doi: 10.1097/00001756-199806220-00038.

- Gannon PJ, Holloway RL, Broadfield DC, Braun AR. Asymmetry of chimpanzee planum temporale: Humanlike pattern of Wernicke’s brain language area homolog. Science. 1998;279:220–222. doi: 10.1126/science.279.5348.220.

- Geschwind N. Language and the brain. Sci Am. 1972;226:76–83. doi: 10.1038/scientificamerican0472-76.

- Geschwind N, Levitsky W. Human brain: Left-right asymmetries in temporal speech region. Science. 1968;161:186–187. doi: 10.1126/science.161.3837.186.

- Giraud AL, Chéry-Croze S, Fischer G, Fischer C, Vighetto A, Grégoire M-C, Lavenne F, Collet L. A selective imaging of tinnitus. NeuroReport. 1999;10:1–5. doi: 10.1097/00001756-199901180-00001.

- Hall DA, Haggard MP, Akeroyd MA, Palmer AR, Summerfield AQ, Elliott MR, Gurney EM, Bowtell RW. “Sparse” temporal sampling in auditory fMRI. Hum Brain Mapp. 1999;7:213–223. doi: 10.1002/(SICI)1097-0193(1999)7:3<213::AID-HBM5>3.0.CO;2-N.

- Hari R. Magnetoencephalography reveals functions of the human brain. News Physiol Sci. 1993;8:213–215.

- Harrison RV, Nagasawa A, Smith DW, Stanton S, Mount RJ. Reorganization of auditory cortex after neonatal high frequency cochlear hearing loss. Hear Res. 1991;54:11–19. doi: 10.1016/0378-5955(91)90131-r.

- Hart HC, Palmer AR, Hall DA. Heschl’s gyrus is more sensitive to tone level than non-primary auditory cortex. Hear Res. 2002;171:177–190. doi: 10.1016/s0378-5955(02)00498-7.

- Hart HC, Hall DA, Palmer AR. The sound-level-dependent growth in the extent of fMRI activation in Heschl’s gyrus is different for low- and high-frequency tones. Hear Res. 2003;179:104–112. doi: 10.1016/s0378-5955(03)00100-x.

- Howard MA, III, Volkov IO, Abbas PJ, Damasio H, Ollendieck MC, Granner MA. A chronic microelectrode investigation of the tonotopic organization of human auditory cortex. Brain Res. 1996;724:260–264. doi: 10.1016/0006-8993(96)00315-0.

- Hutsler J, Galuske RA. Hemispheric asymmetries in cerebral cortical networks. Trends Neurosci. 2003;26:429–435. doi: 10.1016/S0166-2236(03)00198-X.

- Hutsler JJ, Gazzaniga MS. Acetylcholinesterase staining in human auditory and language cortices: regional variation of structural features. Cereb Cortex. 1996;6:260–270. doi: 10.1093/cercor/6.2.260.

- Jancke L, Shah NJ, Posse S, Grosse-Ryuken M, Muller-Gartner HW. Intensity coding of auditory stimuli: an fMRI study. Neuropsychologia. 1998;36:875–883. doi: 10.1016/s0028-3932(98)00019-0.

- Jancke L, Wustenberg T, Schulze K, Heinze HJ. Asymmetric hemodynamic responses of the human auditory cortex to monaural and binaural stimulation. Hear Res. 2002;170:166–178. doi: 10.1016/s0378-5955(02)00488-4.

- Jerger J, Chmiel R, Allen J, Wilson A. Effects of age and gender on dichotic sentence identification. Ear Hear. 1994;15:274–286. doi: 10.1097/00003446-199408000-00002.

- Johnsrude IS, Zatorre RJ, Milner BA, Evans AC. Left-hemisphere specialization for the processing of acoustic transients. Neuroreport. 1997;8:1761–1765. doi: 10.1097/00001756-199705060-00038.

- Kaas JH, Merzenich MM, Killackey HP. The reorganization of somatosensory cortex following peripheral nerve damage in adult and developing mammals. Annu Rev Neurosci. 1983;6:325–356. doi: 10.1146/annurev.ne.06.030183.001545.

- Khosla D, Ponton CW, Eggermont JJ, Kwong B, Don M, Vasama JP. Differential ear effects of profound unilateral deafness on the adult human central auditory system. J Assoc Res Otolaryngol. 2003;4:235–249. doi: 10.1007/s10162-002-3014-x.

- Kiang NYS, Watanabe T, Thomas EC, Clark LF. Discharge patterns of single nerve fibers in the cat’s auditory nerve. Research Monograph No. 35. Cambridge, MA: MIT Press; 1965.

- Kimura D. Cerebral dominance in the perception of verbal stimuli. Can J Psychol. 1961;15:166–171. [Google Scholar]

- Kimura D. Functional asymmetry of the brain in dichotic listening. Cortex. 1967;3:163–178. [Google Scholar]

- King C, Nicol T, McGee T, Kraus N. Thalamic asymmetry is related to acoustic signal complexity. Neurosci Lett. 1999;267:89–92. doi: 10.1016/s0304-3940(99)00336-5. [DOI] [PubMed] [Google Scholar]

- King C, Warrier CM, Hayes E, Kraus N. Deficits in auditory brainstem pathway encoding of speech sounds in children with learning problems. Neurosci Lett. 2002;319:111–115. doi: 10.1016/s0304-3940(01)02556-3.

- Kitzes LM. Some physiological consequences of neonatal cochlear destruction in the inferior colliculus of the gerbil, Meriones unguiculatus. Brain Res. 1984;306:171–178. doi: 10.1016/0006-8993(84)90366-4.

- Kourtzi Z, Betts LR, Sarkheil P, Welchman AE. Distributed neural plasticity for shape learning in the human visual cortex. PLoS Biol. 2005;3:e204. doi: 10.1371/journal.pbio.0030204.

- Kraus N. Auditory pathway encoding and neural plasticity in children with learning problems. Audiol Neurootol. 2001;6:221–227. doi: 10.1159/000046837.

- Kwong KK, Belliveau JW, Chesler DA, Goldberg IE, Weisskoff RM, Poncelet BP, Kennedy DN, Hoppel BE, Cohen MS, Turner R. Dynamic magnetic resonance imaging of human brain activity during primary sensory stimulation. Proc Natl Acad Sci U S A. 1992;89:5675–5679. doi: 10.1073/pnas.89.12.5675.

- Lasota KJ, Ulmer JL, Firszt JB, Biswal BB, Daniels DL, Prost RW. Intensity-dependent activation of the primary auditory cortex in functional magnetic resonance imaging. J Comput Assist Tomogr. 2003;27:213–218. doi: 10.1097/00004728-200303000-00018.

- Lauter JL, Herscovitch P, Formby C, Raichle ME. Tonotopic organization in human auditory cortex revealed by positron emission tomography. Hear Res. 1985;20:199–205. doi: 10.1016/0378-5955(85)90024-3.

- Leonard CM, Voeller KK, Lombardino LJ, Morris MK, Hynd GW, Alexander AW, Andersen HG, Garofalakis M, Honeyman JC, Mao J. Anomalous cerebral structure in dyslexia revealed with magnetic resonance imaging. Arch Neurol. 1993;50:461–469. doi: 10.1001/archneur.1993.00540050013008.

- Liebenthal E, Ellingson ML, Spanaki MV, Prieto TE, Ropella KM, Binder JR. Simultaneous ERP and fMRI of the auditory cortex in a passive oddball paradigm. Neuroimage. 2003;19:1395–1404. doi: 10.1016/s1053-8119(03)00228-3.

- Liégeois-Chauvel C, de Graaf JB, Laguitton V, Chauvel P. Specialization of left auditory cortex for speech perception in man depends on temporal coding. Cereb Cortex. 1999;9:484–496. doi: 10.1093/cercor/9.5.484.

- Liégeois-Chauvel C, Giraud K, Badier JM, Marquis P, Chauvel P. Intracerebral evoked potentials in pitch perception reveal a functional asymmetry of the human auditory cortex. Ann N Y Acad Sci. 2001;930:117–132. doi: 10.1111/j.1749-6632.2001.tb05728.x.

- Levine RA, Melcher JR, Sigalovsky I, Guinan JJ. Abnormal inferior colliculus activation in subjects with lateralized tinnitus. Ann Neurol. 1998;44:441.

- Little DM, Klein R, Shobat DM, McClure ED, Thulborn KR. Changing patterns of brain activation during category learning revealed by functional MRI. Brain Res Cogn Brain Res. 2004;22:84–93. doi: 10.1016/j.cogbrainres.2004.07.011.

- Lockwood AH, Salvi RJ, Coad ML, Arnold SA, Wack DS, Murphy BW, Burkard RF. The functional anatomy of the normal human auditory system: responses to 0.5 and 4.0 kHz tones at varied intensities. Cereb Cortex. 1999;9:65–76. doi: 10.1093/cercor/9.1.65.

- Lockwood AH, Salvi RJ, Burkard RF, Galantowicz PJ, Coad ML, Wack DS. Neuroanatomy of tinnitus. Scand Audiol Suppl. 1999;51:47–52.

- Lounasmaa OV, Sams M, Makela J. Brain research with a whole head, 122-channel neuromagnetometer. IBRO News (International Brain Research Organization). 1993;21:6–7.

- Loveless N, Vasama JP, Makela J, Hari R. Human auditory cortical mechanisms of sound lateralisation: III. Monaural and binaural shift responses. Hear Res. 1994;81:91–99. doi: 10.1016/0378-5955(94)90156-2.

- Mattson AJ, Sheer DE, Fletcher JM. Electrophysiological evidence of lateralized disturbances in children with learning disabilities. J Clin Exp Neuropsychol. 1992;14:707–716. doi: 10.1080/01688639208402857.

- Melcher JR, Sigalovsky I, Levine RA. Tinnitus related fMRI activation patterns in human auditory nuclei. In: Hazell J, editor. Proceedings of the Sixth International Tinnitus Seminar. London: Tinnitus and Hyperacusis Centre; 1999. pp. 166–170.

- Näätänen R, Lehtokoski A, Lennes M, Cheour M, Huotilainen M, Iivonen A, Vainio M, Alku P, Ilmoniemi RJ, Luuk A, Allik J, Sinkkonen J, Alho K. Language-specific phoneme representations revealed by electric and magnetic brain responses. Nature. 1997;385:432–434. doi: 10.1038/385432a0.

- Nyberg L, Eriksson J, Larsson A, Marklund P. Learning by doing versus learning by thinking: an fMRI study of motor and mental training. Neuropsychologia. 2005. In press. doi: 10.1016/j.neuropsychologia.2005.08.006.

- Ogawa S, Lee TM, Kay AR, Tank DW. Brain magnetic resonance imaging with contrast dependent on blood oxygenation. Proc Natl Acad Sci U S A. 1990;87:9868–9872. doi: 10.1073/pnas.87.24.9868.

- Ogawa S, Tank DW, Menon R, Ellermann JM, Kim SG, Merkle H, Ugurbil K. Intrinsic signal changes accompanying sensory stimulation: functional brain mapping with magnetic resonance imaging. Proc Natl Acad Sci U S A. 1992;89:5951–5955. doi: 10.1073/pnas.89.13.5951.

- Ojemann G. Brain organization for language from the perspective of electrical stimulation mapping. Behav Brain Sci. 1983;6:189–230.

- Pantev C, Bertrand O, Eulitz C, Verkindt C, Hampson S, Schuierer G, Elbert T. Specific tonotopic organizations of different areas of the human auditory cortex revealed by simultaneous magnetic and electric recordings. Electroencephalogr Clin Neurophysiol. 1995;94:26–40. doi: 10.1016/0013-4694(94)00209-4.

- Pascual-Marqui RD. Review of methods for solving the EEG inverse problem. Int J Bioelectromag. 1999;1:75–86.

- Penhune VB, Zatorre RJ, MacDonald JD, Evans AC. Interhemispheric anatomical differences in human primary auditory cortex: probabilistic mapping and volume measurement from magnetic resonance scans. Cereb Cortex. 1996;6:661–672. doi: 10.1093/cercor/6.5.661.

- Petersen MR, Beecher MD, Zoloth SR, Moody DB, Stebbins WC. Neural lateralization of species-specific vocalizations by Japanese macaques (Macaca fuscata). Science. 1978;202:324–327. doi: 10.1126/science.99817.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans AC. Speech-like cerebral activity in profoundly deaf people processing signed languages: implications for the neural basis of human language. Proc Natl Acad Sci U S A. 2000;97:13961–13966. doi: 10.1073/pnas.97.25.13961.

- Phelps ME, Kuhl DE, Mazziotta JC. Metabolic mapping of the brain’s response to visual stimulation: studies in humans. Science. 1981;211:1445–1448. doi: 10.1126/science.6970412.

- Phillips DP, Farmer ME. Acquired word deafness, and the temporal grain of sound representation in the primary auditory cortex. Behav Brain Res. 1990;40:85–94. doi: 10.1016/0166-4328(90)90001-u.

- Phillips DP. Representation of acoustic events in the primary auditory cortex. J Exp Psychol Hum Percept Perform. 1993;19:203–216. doi: 10.1037//0096-1523.19.1.203.

- Picton TW, Hillyard SA, Krausz HI, Galambos R. Human auditory evoked potentials. I. Evaluation of components. Electroencephalogr Clin Neurophysiol. 1974;36:179–190. doi: 10.1016/0013-4694(74)90155-2.

- Ponton CW, Vasama JP, Tremblay K, Khosla D, Kwong B, Don M. Plasticity in the adult human central auditory system: evidence from late-onset profound unilateral deafness. Hear Res. 2001;154:32–44. doi: 10.1016/s0378-5955(01)00214-3.

- Puttemans V, Wenderoth N, Swinnen SP. Changes in brain activation during the acquisition of a multifrequency bimanual coordination task: from the cognitive stage to advanced levels of automaticity. J Neurosci. 2005;25:4270–4278. doi: 10.1523/JNEUROSCI.3866-04.2005.

- Raichle ME, Martin WR, Herscovitch P, Mintun MA, Markham J. Brain blood flow measured with intravenous H2(15)O. II. Implementation and validation. J Nucl Med. 1983;24:790–798.

- Rajan R, Irvine DR, Wise LZ, Heil P. Effect of unilateral partial cochlear lesions in adult cats on the representation of lesioned and unlesioned cochleas in primary auditory cortex. J Comp Neurol. 1993;338:17–49. doi: 10.1002/cne.903380104.

- Rauschecker JP, Tian B, Pons T, Mishkin M. Serial and parallel processing. 1997.

- Reale RA, Imig TJ. Tonotopic organization in auditory cortex of the cat. J Comp Neurol. 1980;192:265–291. doi: 10.1002/cne.901920207.

- Reale RA, Brugge JF, Chan JC. Maps of auditory cortex in cats reared after unilateral cochlear ablation in the neonatal period. Brain Res. 1987;431:281–290. doi: 10.1016/0165-3806(87)90215-x.

- Rees G, Howseman A, Josephs O, Frith CD, Friston KJ, Frackowiak RS, Turner R. Characterizing the relationship between BOLD contrast and regional cerebral blood flow measurements by varying the stimulus presentation rate. Neuroimage. 1997;6:270–278. doi: 10.1006/nimg.1997.0300.

- Rinne T, Alho K, Alku P, Holi M, Sinkkonen J, Virtanen J, Bertrand O, Näätänen R. Analysis of speech sounds is left-hemisphere predominant at 100–150 ms after sound onset. Neuroreport. 1999;10:1113–1117. doi: 10.1097/00001756-199904060-00038.

- Robertson D, Irvine DR. Plasticity of frequency organization in auditory cortex of guinea pigs with partial unilateral deafness. J Comp Neurol. 1989;282:456–471. doi: 10.1002/cne.902820311.

- Scarff CJ, Reynolds A, Goodyear BG, Ponton CW, Dort JC, Eggermont JJ. Simultaneous 3-T fMRI and high-density recording of human auditory evoked potentials. Neuroimage. 2004;23:1129–1142. doi: 10.1016/j.neuroimage.2004.07.035.

- Scheffler K, Bilecen D, Schmid N, Tschopp K, Seelig J. Auditory cortical responses in hearing subjects and unilateral deaf patients as detected by functional magnetic resonance imaging. Cereb Cortex. 1998;8:156–163. doi: 10.1093/cercor/8.2.156.

- Scherg M, Picton TW. Separation and identification of event-related potential components by brain electric source analysis. Electroencephalogr Clin Neurophysiol Suppl. 1991;42:24–37.

- Seldon HL. Structure of human auditory cortex. I. Cytoarchitectonics and dendritic distributions. Brain Res. 1981;229:277–294. doi: 10.1016/0006-8993(81)90994-x.

- Seldon HL. Structure of human auditory cortex. II. Axon distributions and morphological correlates of speech perception. Brain Res. 1981;229:295–310. doi: 10.1016/0006-8993(81)90995-1.

- Seldon HL. Structure of human auditory cortex. III. Statistical analysis of dendritic trees. Brain Res. 1982;249:211–221. doi: 10.1016/0006-8993(82)90055-5.

- Shtyrov Y, Kujala T, Ahveninen J, Tervaniemi M, Alku P, Ilmoniemi RJ, Näätänen R. Background acoustic noise and the hemispheric lateralization of speech processing in the human brain: magnetic mismatch negativity study. Neurosci Lett. 1998;251:141–144. doi: 10.1016/s0304-3940(98)00529-1.

- Sigman M, Pan H, Yang Y, Stern E, Silbersweig D, Gilbert CD. Top-down reorganization of activity in the visual pathway after learning a shape identification task. Neuron. 2005;46:823–835. doi: 10.1016/j.neuron.2005.05.014.

- Sininger YS, Cone-Wesson B. Asymmetric cochlear processing mimics hemispheric specialization. Science. 2004;305:1581. doi: 10.1126/science.1100646.

- Strainer JC, Ulmer JL, Yetkin FZ, Haughton VM, Daniels DL, Millen SJ. Functional MR of the primary auditory cortex: an analysis of pure tone activation and tone discrimination. AJNR Am J Neuroradiol. 1997;18:601–610.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. Stuttgart: Georg Thieme Verlag; 1988.

- Talavage TM, Ledden PJ, Benson RR, Rosen BR, Melcher JR. Frequency-dependent responses exhibited by multiple regions in human auditory cortex. Hear Res. 2000;150:225–244. doi: 10.1016/s0378-5955(00)00203-3.

- Talavage TM, Ledden PJ, Sereno MI, Rosen BR, Dale AM. Multiple phase encoded tonotopic maps in human auditory cortex. NeuroImage. 1997;5:S8.

- Tallal P, Miller S, Fitch R. Neurobiological basis of speech: a case for the preeminence of temporal processing. Ann NY Acad Sci. 1994;682:27–47. doi: 10.1111/j.1749-6632.1993.tb22957.x.

- Tremblay K, Kraus N, Carrell TD, McGee T. Central auditory system plasticity: generalization to novel stimuli following listening training. J Acoust Soc Am. 1997;102:3762–3773. doi: 10.1121/1.420139.

- Ulmer JL, Biswal BB, Yetkin FZ, Mark LP, Mathews VP, Prost RW, Estkowski LD, McAuliffe TL, Haughton VM, Horzewski D. Cortical activation response to acoustic echo planar scanner noise. J Comput Assist Tomogr. 1998;22:111–119. doi: 10.1097/00004728-199801000-00021.

- Vasama J-P, Makela JP. Auditory pathway plasticity in adult humans after unilateral idiopathic sudden sensorineural hearing loss. Hear Res. 1995;87:132–140. doi: 10.1016/0378-5955(95)00086-j.

- Wagner M, Fuchs M. Integration of functional MRI, structural MRI, EEG and MEG. Int J Bioelectromag. 2001;3:1–15.

- Wever EG, Bray CW. Present possibilities of auditory theory. Psychol Rev. 1930;37:365–380.