Abstract

The feeling of being observed or merely participating in an experiment can affect individuals’ behavior. Referred to as the Hawthorne effect, this inconsistently observed phenomenon can both provide insight into individuals' behavior and confound the interpretation of experimental manipulations. Here, we pursue both topics in examining how the Hawthorne effect emerges in a large field experiment focused on residential consumers’ electricity use. These consumers received five postcards notifying, and then reminding, them of their participation in a study of household electricity use. We found evidence for a Hawthorne (study participation) effect, seen in a reduction of their electricity use—even though they received no information, instruction, or incentives to change. Responses to a follow-up survey suggested that the effect reflected heightened awareness of energy consumption. Consistent with that interpretation, the treatment effect vanished when the intervention ended.

Keywords: environmental decision making, energy conservation, electricity consumption, behavioral decision research

How to substitute human responsibility for futile strife and hatred—this is one of the most important researches of our time.

Elton Mayo, in Roethlisberger and Dickson (1)

Beginning in 1924, the Western Electric Company Hawthorne plant was the site of some of the most influential studies in the formative years of the social sciences: the illumination experiments, examining the effects of artificial lights on worker behavior. Although workers seemed to increase their productivity when lighting regimes changed, the researchers eventually concluded that those changes actually reflected psychological factors, such as workers’ responses to receiving special attention or being aware of the experiment. Subsequent studies at Hawthorne reached similar conclusions (1). Such changes came to be called Hawthorne effects (2, 3), although, ironically, secondary analyses concluded that there was no effect in the original studies or, more precisely, that the studies’ design was too flawed to establish whether the effect was, in fact, observed there (4–7).

The mythical status of the initial observation notwithstanding, the Hawthorne effect is a fundamental concern for scientists studying any program designed to change human behavior, who must distinguish the effects of the program from the effects of being in the study. As a result, the Hawthorne effect has been examined in many areas, including worker performance (8), education (9, 10), health (11), and voting (12). The evidence from these studies is mixed. Some of the variability in their results may reflect differences in how they operationalized the concept of “being in a study.” At one extreme lie such minimal manipulations as telling people no more than that they are in a study. At the other extreme lie treatments known to have their own effects, such as directly monitoring specific behaviors (13), providing performance feedback (14), inadvertently communicating research hypotheses (15), and providing new resources or instruction (16). Here, we add to the relatively small set of experiments that have examined the effects of study participation per se, with a field experiment examining electricity use of several thousand consumers. Our results reveal evidence of a pure Hawthorne effect, the psychological mechanisms shaping its size, and its implications for field studies of policy interventions.

In addition to its obvious economic and environmental importance, household electricity consumption offers several attractive features as a research domain. It is routinely measured for many households. It is such a small part of most Americans’ budgets that it typically receives little attention, meaning that participating in a study might be enough to make it salient. Finally, most people know how to save electricity, even if they do not always know which ways are most effective (17, 18). Thus, if participating in a study increases the salience of electricity consumption, people should know what to do without further instruction, which matters because providing such instruction would confound the pure participation manipulation.

Although there are many studies of interventions seeking to affect energy consumption, few have assessed the impact of Hawthorne (study participation) effects on their results (19). Among those few, some used an opt-in design eliciting a commitment to participate (hence confounded the mere-participation manipulation), had small samples, used weak manipulations, or omitted essential details in the research report, making it hard to tell what they did and found (20–22). As a measure of the importance of even small changes in energy consumption, states have set goals ranging from 0.1% to 2.25% annual savings (23).

Our experiment sent five weekly postcards to a random sample of electricity customers, notifying them about their participation in a study about household electricity use. Monthly electricity use was collected before, during, and after the experimental period for the treatment group and for a similarly selected control group. One month after the last postcard was sent, we surveyed a random sample of participants, asking about their response to the study.

Experimental Design

Participants were randomly selected from residential customers of a mid-Atlantic electricity utility to be in treatment or control groups. Households in the treatment group received their first notification a few days before the start date through a postcard stating that they had been selected to be in a 1-mo study about electricity use in their home and that no action was required on their part. They then received four additional weekly postcard reminders about the study. Thus, the study’s sole stated goal was measuring electricity consumption. The control group received nothing. The observation period approximately spanned the interval between successive monthly readings. Table 1 summarizes household characteristics for the treatment and control groups. A subsample received a survey 1 mo after the end of the study period. Methods provides details on the postcards, survey, sampling, and data structure.

Table 1.

Household characteristics for control and treatment groups

| Characteristics | Control mean | Treatment mean | t | P value |
| --- | --- | --- | --- | --- |
| Household size* | 2.8 | 2.8 | −0.72 | 0.47 |
| % households renting* | 13.7 | 13.9 | −0.27 | 0.79 |
| % households with electric heating† | 33.1 | 33.3 | −0.27 | 0.78 |
| No. of rooms† | 6.5 | 6.5 | −1.71 | 0.09 |
| Household income (thousands of dollars)† | 101.7 | 102.0 | −0.32 | 0.75 |
| % households with low payment history‡ | 12.1 | 12.5 | −0.50 | 0.62 |
| % households with low income subsidy‡ | 1.6 | 1.9 | −1.01 | 0.31 |
| % Whites* | 47.2 | 47.4 | −0.25 | 0.81 |
| % Blacks* | 31.1 | 31.0 | 0.08 | 0.94 |
| % Asians* | 13.5 | 13.1 | 0.90 | 0.37 |
| % Hispanics* | 11.5 | 11.7 | −0.83 | 0.40 |
| Summer 2010 electricity use‡, kW⋅h/d | 42.0 | 41.8 | 0.36 | 0.72 |
| Fall 2010 electricity use‡, kW⋅h/d | 28.5 | 28.8 | −0.74 | 0.46 |
| Winter 2011 electricity use‡, kW⋅h/d | 40.9 | 41.9 | −1.19 | 0.23 |
| Spring 2011 electricity use‡, kW⋅h/d | 29.9 | 30.0 | −0.32 | 0.75 |

*Source: 2010 Census data (block-level information).

†Source: 2010 Census data (tract-level information).

‡Source: data provided by utility company (household-level information).

Results

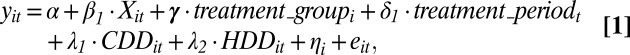

The main dependent variable was households’ electricity use. Although meter readings are scheduled for monthly intervals, there is some variability in when they are actually performed. To accommodate this variability, we adjusted each household’s electricity use by the number of days that fell during the postcard treatment period. We used this as our estimator of the intervention’s impact, comparing electricity use in treatment and control households, before and after the treatment period (Eq. 1):

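$$
y_{it} = \alpha + \beta_1 X_{it} + \gamma\,\text{treatment\_group}_i + \delta_1\,\text{treatment\_period}_t + \theta_1\,CDD_{it} + \theta_2\,HDD_{it} + \eta_i + e_{it} \tag{1}
$$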

where $y_{it}$ is the log of average daily electricity consumption for household $i$ in month (billing period) $t$, $\text{treatment\_group}_i$ indicates whether household $i$ is in the treatment (1) or the control group (0), and $\text{treatment\_period}_t$ is the fraction of days in the treatment period included in monthly electricity use for $t$. $X_{it}$ is the interaction between these two terms, equal to the fraction of days in the treatment period for month $t$ for households in the treatment group and 0 for households in the control group. Because most of the variation in electricity use in this region reflects demand for heating and air conditioning, our statistical model included heating and cooling degree days in each billing period: $CDD_{it}$ and $HDD_{it}$ are the average cooling and heating degree days for month $t$, respectively, using the weather station closest to household $i$. Several household characteristics (e.g., household size and electric heating) were included in the regression and are denoted as $\eta_i$ (see Supporting Information for details). The error term is $e_{it}$. $\beta_1$ is the average treatment effect of the intervention.
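The logic of this estimator can be illustrated with a minimal sketch. It assumes a simplified case that is not the authors' full model: the treatment-period variable is 0 or 1 rather than a fraction of days, and there are no weather or household covariates. Under those assumptions, the interaction coefficient equals the difference-in-differences of mean log use across the four group-by-period cells. The data values and function names below are hypothetical.

```python
import math
from statistics import mean

# Hypothetical toy data: (group, period, average daily use in kWh/d).
# group: 1 = treatment, 0 = control.
# period: 1 = treatment month, 0 = an earlier month.
observations = [
    (0, 0, 40.0), (0, 0, 30.0),
    (0, 1, 38.0), (0, 1, 29.0),
    (1, 0, 41.0), (1, 0, 31.0),
    (1, 1, 38.0), (1, 1, 29.5),
]


def cell_mean_log(data, group, period):
    """Mean of log daily use for one group-by-period cell."""
    return mean(math.log(kwh) for g, p, kwh in data if g == group and p == period)


def did_estimate(data):
    """Difference-in-differences of mean log use.

    In a two-group, two-period regression without covariates, this equals
    the coefficient on the group-by-period interaction term.
    """
    change_treatment = cell_mean_log(data, 1, 1) - cell_mean_log(data, 1, 0)
    change_control = cell_mean_log(data, 0, 1) - cell_mean_log(data, 0, 0)
    return change_treatment - change_control


beta1 = did_estimate(observations)
print(f"Difference-in-differences on the log scale: {beta1:.4f}")
```

A negative value indicates that log use fell more in the treatment group than in the control group over the same interval, which is the quantity the interaction term is meant to capture.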

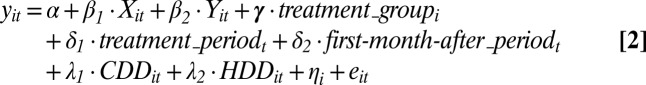

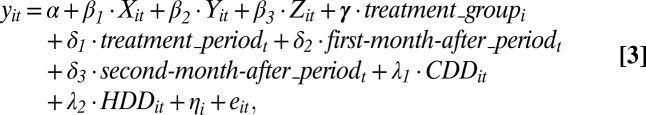

We calculated the average treatment effect estimators for the posttreatment period in the same way. We used two posttreatment periods, for the month after the intervention (Eq. 2) and for the following month (Eq. 3), to estimate carry-over effects, perhaps reflecting energy-saving habits that people formed during the intervention, and then maintained afterward.

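$$
y_{it} = \alpha + \beta_1 X_{it} + \beta_2 Y_{it} + \gamma\,\text{treatment\_group}_i + \delta_1\,\text{treatment\_period}_t + \delta_2\,\text{first-month-after\_period}_t + \theta_1\,CDD_{it} + \theta_2\,HDD_{it} + \eta_i + e_{it} \tag{2}
$$

$$
y_{it} = \alpha + \beta_1 X_{it} + \beta_2 Y_{it} + \beta_3 Z_{it} + \gamma\,\text{treatment\_group}_i + \delta_1\,\text{treatment\_period}_t + \delta_2\,\text{first-month-after\_period}_t + \delta_3\,\text{second-month-after\_period}_t + \theta_1\,CDD_{it} + \theta_2\,HDD_{it} + \eta_i + e_{it} \tag{3}
$$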

where the new interaction terms, $Y_{it}$ and $Z_{it}$, are equal to the fraction of days in the first and second months, respectively, after the intervention ended for households in the treatment group and 0 for households in the control group. $\beta_2$ and $\beta_3$ are the average treatment effects for the first and second month after the intervention, respectively. As in Eq. 1, $\text{first-month-after\_period}_t$ and $\text{second-month-after\_period}_t$ capture changes in use that hold for both control and treatment groups in each posttreatment period for $t$.

Table 2 presents ordinary least-squares (OLS) analyses, using robust SEs clustered by household. Models I, II, and III represent the treatment and posttreatment effects specified above, in Eqs. 1, 2, and 3, respectively. The table shows that the average household in the treatment group used 2.7% less electricity during the month of the study (model I, β1 = −0.027, P = 0.03), compared with the control group. These results are essentially the same when adjusted for observed days within the treatment month, as would be expected given the 97.9% overlap in the periods. As shown in models II and III, there was no posttreatment effect in either the month after the last postcard (model II, β2 = −0.007, P = 0.45) or in the second month after that (model III, β3 = −0.005, P = 0.55). Supporting Information shows that these results are highly robust to changes in model specification and that including temperature data and household characteristics markedly improves the fit of the regressions (24).
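As a reading aid, the following sketch shows how a coefficient on the log scale maps to a percentage change, and how the reported t statistics relate to the reported P values. It assumes a standard normal reference distribution for the t statistics, which is an approximation chosen here for illustration (reasonable with several thousand household clusters) and not necessarily the authors' exact procedure. The function names are hypothetical.

```python
import math


def percent_change_from_log_coef(beta):
    """Proportional change in the outcome implied by a coefficient on log(outcome)."""
    return math.exp(beta) - 1.0


def two_sided_p_normal(t_stat):
    """Two-sided P value for a test statistic, using a standard normal approximation."""
    return math.erfc(abs(t_stat) / math.sqrt(2.0))


# (coefficient, t statistic) pairs as reported in Table 2.
reported = {
    "treatment (model I)": (-0.027, -2.159),
    "first month after (model II)": (-0.007, -0.751),
    "second month after (model III)": (-0.005, -0.592),
}

for label, (coef, t_stat) in reported.items():
    change = 100.0 * percent_change_from_log_coef(coef)
    p_value = two_sided_p_normal(t_stat)
    print(f"{label}: {change:.2f}% change, P approx. {p_value:.2f}")
```

For small coefficients the log-scale value and the percentage change nearly coincide, which is why a coefficient of −0.027 is read as roughly a 2.7% reduction.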

Table 2.

OLS regressions and average treatment effects, indicating the percentage change (savings) in the treatment group compared with the control group

| DV: ln(kW⋅h/d) | Model I: Coefficient | Model I: SE | Model I: t | Model II: Coefficient | Model II: SE | Model II: t | Model III: Coefficient | Model III: SE | Model III: t |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Treatment effect | −0.027** | 0.012 | −2.159 | −0.026** | 0.012 | −2.151 | −0.026** | 0.012 | −2.153 |
| First-month-after effect | — | — | — | −0.007 | 0.010 | −0.751 | −0.007 | 0.010 | −0.697 |
| Second-month-after effect | — | — | — | — | — | — | −0.005 | 0.009 | −0.592 |
| Treatment group (= 1; 0 if not) | 0.006 | 0.014 | 0.47 | 0.007 | 0.014 | 0.48 | 0.007 | 0.014 | 0.488 |
| Treatment period | −0.038*** | 0.008 | −4.95 | −0.038*** | 0.008 | −4.966 | −0.038*** | 0.008 | −4.945 |
| First-month-after period | — | — | — | 0.033*** | 0.006 | 5.231 | 0.032*** | 0.006 | 5.075 |
| Second-month-after period | — | — | — | — | — | — | −0.032*** | 0.006 | −5.393 |
| Cooling degree days | 0.056*** | 0.001 | 107.622 | 0.056*** | 0.001 | 104.229 | 0.056*** | 0.001 | 103.964 |
| Heating degree days | 0.014*** | <0.001 | 54.985 | 0.014*** | <0.001 | 54.458 | 0.014*** | <0.001 | 54.418 |
| Constant | 1.439*** | 0.054 | 26.484 | 1.460*** | 0.054 | 27.026 | 1.473*** | 0.054 | 27.38 |
| Household characteristics | Yes | | | Yes | | | Yes | | |
| Adjusted R2 | 0.220 | | | 0.216 | | | 0.218 | | |
| Households | 5,598 | | | 5,598 | | | 5,598 | | |
| Observations | 113,624 | | | 119,087 | | | 124,578 | | |

Dependent variable (DV) is the log of each household’s average daily electricity use. *P < 0.10, **P < 0.05, ***P < 0.01. A log transformation of average daily use produced residuals closer to a normal distribution than did analyses using actual use, suggesting more robust results and inference. Without the transformation, the average treatment effect was −0.998 kW⋅h/d (P = 0.04), or 2.2% based on the control group’s average daily use of 44.5 kW⋅h/d during the treatment period.
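The two effect sizes in this note and in model I can be checked with a short piece of arithmetic, using only the figures reported above:

$$
\frac{0.998\ \text{kW⋅h/d}}{44.5\ \text{kW⋅h/d}} \approx 0.0224 \approx 2.2\%, \qquad e^{-0.027} - 1 \approx -0.0266 \approx -2.7\%.
$$

The first expression is the untransformed estimate relative to the control group's mean use; the second is the percentage change implied by the log-scale coefficient.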

Survey

The survey was conducted a month after the field intervention ended. It followed the tailored design method (25). The analysis focuses on treatment group respondents able to answer questions about the intervention. Most treatment group respondents (68.1%) reported remembering at least one postcard out of the five, with a mean of 3.0 (SD = 1.3). Respondents who could not remember a single postcard may simply have forgotten in the month since the study ended. Or, they may not be the person who got the card—although most did say that they were the person who usually picks up the mail. When asked an open-ended question about the purpose of the study, 30.2% of these respondents cited variants of “save, learn, or be more aware about electricity,” 29.6% offered variants of “study electricity usage” (as stated in the postcards); 20.4% said that they did not know. When asked how the study had affected them, 22.2% agreed or strongly agreed with the statement “the study made me reduce my electricity usage,” where 1 = “Strongly disagree” and 5 = “Strongly agree.” In addition, 36.4% thought that the study had made them more aware of their electricity use and 30.2% that they had learned more about what things use electricity.

As seen in Table 3, treatment group respondents were more likely to report that they had reduced their electricity consumption when they saw themselves as doing more than their neighbors to save electricity (model 1; β = 0.386, P < 0.01), and when they perceived the study as having heightened their awareness of electricity use (model 3; β = 0.669, P < 0.01). Whether they reported reducing their consumption was not related to whether they expressed concern about privacy with new metering devices that would constantly track their use, which could be seen as another form of awareness. Finally, even though perceived reductions were related to believing that the study was about reducing, learning, or being aware of electricity use (model 2; β = 0.545, P < 0.01), that effect disappeared when reported electricity awareness was added to the model (model 3). Thus, perceived reductions reflect heightened awareness of electricity use as a result of participating, rather than beliefs about the study’s purpose. Additional regression analyses found no relationship between actual use and these survey questions (See Supporting Information for details). However, only 162 respondents reported remembering at least one postcard, limiting the statistical power of this test.

Table 3.

Survey measures associated with reported-use electricity reduction

| DV: perceived electricity reduction caused by the study | Model 1: Coefficient | Model 1: SE | Model 1: t | Model 2: Coefficient | Model 2: SE | Model 2: t | Model 3: Coefficient | Model 3: SE | Model 3: t |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Do more than neighbors to save electricity | 0.386*** | 0.100 | 3.857 | 0.306*** | 0.101 | 3.023 | 0.120* | 0.070 | 1.71 |
| Concern about being observed with new metering devices | 0.139 | 0.089 | 1.565 | 0.132 | 0.087 | 1.528 | 0.027 | 0.060 | 0.446 |
| Study’s perceived purpose to save, learn, or be more aware about electricity (= 1; 0 if not) | — | — | — | 0.545*** | 0.188 | 2.893 | 0.186 | 0.131 | 1.420 |
| Became more aware of their electricity use because of the study | — | — | — | — | — | — | 0.669*** | 0.054 | 12.425 |
| Constant | 0.901** | 0.446 | 2.018 | 1.024** | 0.437 | 2.347 | 0.055 | 0.307 | 0.179 |
| Adjusted R2 | 0.097 | | | 0.145 | | | 0.604 | | |
| No. of observations | 136 | | | 136 | | | 136 | | |

Of the 162 treatment group respondents who reported remembering at least one postcard, and hence could answer questions about the study, 136 answered all of the questions used in this analysis. Coefficients are unstandardized. Unless otherwise noted, for all scales 1 = “Strongly disagree” to 5 = “Strongly agree.” *P < 0.10, **P < 0.05, ***P < 0.01.

When asked how often they had performed nine energy-saving actions (with “never” = 1 and “always” = 5), treatment group respondents who reported reducing their electricity consumption were also more likely to report turning off their air conditioning, unplugging devices when not in use, and using electrical devices less, compared with respondents in the control group (P = 0.07, P = 0.01, and P < 0.01, respectively). There were no differences in reports of the other six actions, whether routine (e.g., turning off computers and lights) or sustained (e.g., buying energy-efficient light bulbs or appliances). Comparing all actions reported by treatment group respondents who reported not reducing their electricity use with respondents in the control group revealed only one significant difference: Control group respondents reported using electrical devices less (P < 0.01).

Discussion

We find evidence for a “pure” (study participation) Hawthorne effect in electricity use. Residential consumers who received weekly postcards informing them that they were in a study reduced their monthly use by 2.7%—an amount greater than the annual conservation goal currently mandated by any state. A follow-up survey found that participants who reported having responded more to the study also reported greater awareness of their electricity consumption and saw themselves as already doing more than their neighbors. These results suggest that the Hawthorne effect occurred here because participation in the study increased the saliency of the focal behavior—an interpretation supported by the fact that the treatment effect vanished when the intervention ended.

The field study contributes to our understanding of the Hawthorne effect by addressing methodological limitations of previous studies. Namely, our design isolates the effect of participation in a study from potential confounds, such as providing information or feedback. The follow-up survey further clarifies the underlying mechanisms. Our results suggest that participation in a study can change behavior without providing feedback, resources, information, or direct observation. The results also suggest that participants’ interpretation of the study’s purpose was less relevant than their increased awareness of the study’s topic, in a context where attention alone might change behavior—because people already know how to respond. As with any self-reports, these responses may not have reflected participants’ actual behavior or perceptions. According to one well-known account, self-reports are more accurate when the stimulus is salient and the responses plausible (26). Those conditions should hold for our stimuli (postcards) and focal responses (energy-saving actions). According to another well-known account (27), retrospective self-reports are less valid than concurrent ones. Ours fall somewhere in between, with participants reporting on the actions that they remember having taken in response to the postcards. Still, it is possible that the relationships that we observed might have been obscured by cases in which participants failed to remember their own actions or succumbed to social desirability bias and reported actions that they had not actually taken. Further clarifying the mechanisms affecting Hawthorne responses is an important topic for future research. Another topic for future research is how the Hawthorne effect varies with the source of the observation or study (neighbors, utility company, a university, or a governmental agency) and how people view (e.g., trust) it.

The Hawthorne effect has long been known as a potential experimental artifact. The present results show the importance of assessing the impact of just being in an electricity-use study, before making inferences about the impact of experimental manipulations. Were people better informed about how best to save energy, then the energy saving might be larger. However, providing guidance regarding effective energy-saving actions (28) would have confounded our study of Hawthorne effects. These results also suggest how these behavioral changes come about: by heightening awareness of electricity use. That interpretation is consistent with the observation that the effects of sustained energy conservation programs seem to decay between quarterly use reports (29). Thus, any socially acceptable way of increasing awareness might reduce consumption for those motivated to do so, but only as long as the intervention continues, unless it creates sustained habits or prompts structural changes (e.g., buying efficient air conditioning or upgrading home insulation). We cannot know what effects a sustained Hawthorne intervention (e.g., a steady stream of postcards notifying consumers about new studies) would produce. It is possible that it would lose effect over time, as consumers habituate to the messages, or have increasing effect, as awareness becomes routine and energy-saving behaviors a matter of habit. However, if awareness alone can improve performance in contexts where people require no additional information, we might retire the “Hawthorne effect” in favor of a “Hawthorne strategy” (30) of reminding people about things that matter to them but can get neglected in the turmoil of everyday life.

Methods

Field Experiment Participants.

Participants (n = 6,350) were randomly selected from a dataset of residential customers who live in one of the regions served by a major mid-Atlantic electricity utility. Customers with scheduled meter reading dates close to July 20, 2011, and August 20, 2011, were eligible for the study. Other eligibility criteria were met by almost all customers: having an individual electricity meter and being a residential customer under a standard (i.e., flat-rate) price-scheme program. From the 45,509 eligible customers, 6,350 were randomly selected and divided between the treatment and control groups. This sample size was determined through statistical power analysis using 2010 electricity-use data, considering effect sizes of previous energy conservation programs and budget constraints.
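A minimal sketch of this kind of selection and assignment follows. It assumes an equal split between the two groups and uses placeholder integer customer IDs; the text states only that the 6,350 selected customers were "divided between" the groups, so the equal split, the seed, and the function name are assumptions made for illustration.

```python
import random


def select_and_assign(eligible_ids, n_selected, seed=0):
    """Draw a simple random sample, then split it into two equal-sized groups.

    random.Random.sample returns the selected items in random order, so taking
    the first half as treatment and the rest as control is a random assignment.
    """
    rng = random.Random(seed)
    selected = rng.sample(eligible_ids, n_selected)
    half = n_selected // 2
    return selected[:half], selected[half:]


# Placeholder IDs standing in for the 45,509 eligible customers.
eligible = list(range(45509))
treatment, control = select_and_assign(eligible, 6350)
print(len(treatment), len(control))
```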

Procedure.

The intervention lasted from July 20, 2011, to August 20, 2011. Households in the treatment group received postcard notification of participation in the study a few days before its beginning. Then, at weekly intervals, they received three postcards reminding them of their participation and a final postcard notifying them that the study was ending. All material said that the households were participating in a study conducted by researchers at Carnegie Mellon University, but with no explicit goal other than measuring their electricity consumption: “You have been selected to be part of a one-month study of how much electricity you use in your home. This study will start on Wednesday July 20, 2011, close to the day of your meter reading this month. No action is needed on your part. We will send you a weekly reminder postcard about the study. Thank you. This study is being conducted by researchers at Carnegie Mellon University with help from your utility company.” Customers’ names and addresses appeared as well, using the post office format. On the front of the postcard was a picture of a house with appliances connected to an electricity meter and the Carnegie Mellon University logo. The materials were pretested with a sample of people recruited from an online national subject pool, to assess their understanding and interpretations. Postcards were sent out on Fridays, so households would receive them the following Monday or Tuesday and be informed in a timely fashion about the beginning and end of the study. We also sent postcards to two households in the study’s service territory that were not part of the study, and these households confirmed the arrival dates. The control group received no experimental material.

The field study used an opt-out design, in which customers (in the treatment group) could call a 1-800 toll-free number or visit a Web site to request not being part of the study and not receiving additional material. None of these communication channels provided more information than that shown on the postcards. Thirty-six (treatment group) participants (1.28%) elected to opt out. Although they received no additional postcards, they were included in the analyses of energy use for two reasons: (i) We had intended to treat them and (ii) receiving one or two postcards (and actively opting out) may have increased their energy awareness, distinguishing them from the control group. Excluding them did not affect our results.

Attrition.

The sample was selected with 2010 data. By the start of the study (summer 2011), 9.7% of households in the treatment group and 9.3% in the control group had moved or closed their accounts and hence did not receive the materials. Between August and October 2011, an additional 2.1% of households in the treatment group and 2.6% in the control group had moved or closed their accounts. The postcards had a “sender request to be returned” stamp, and most returns indicated that customers had moved. As of October 2011, the final treatment and control groups had 2,802 and 2,796 participants, respectively. There was no significant difference between the treatment and control group in attrition rate [χ2 (1) = 0.04, P = 0.84]. Although there are no data on electricity consumption for these households for the study period, there is information about some characteristics, revealing that they had lower baseline electricity consumption and were more likely to be renting than those who received the study materials (P < 0.05). Thus, participants in the study (n = 5,598) represented more “settled” households in the area.
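The attrition comparison can be sketched as a 2 × 2 chi-square test. The initial group sizes are not reported above, so this sketch assumes 3,175 households per group (an equal split of 6,350), which implies 373 and 379 households lost, respectively. Whether the authors applied a continuity correction is also not stated; both versions are computed here for comparison, and the function names are hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d, yates=False):
    """Pearson chi-square statistic for the 2 x 2 table [[a, b], [c, d]].

    With yates=True, applies the Yates continuity correction.
    """
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        diff = max(diff - n / 2.0, 0.0)
    return n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


def p_value_df1(chi2):
    """Upper-tail P value for a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2.0))


# Assumed starting size per group (not reported in the text).
ASSUMED_GROUP_SIZE = 3175
retained_treatment, retained_control = 2802, 2796
lost_treatment = ASSUMED_GROUP_SIZE - retained_treatment
lost_control = ASSUMED_GROUP_SIZE - retained_control

chi2_plain = chi_square_2x2(lost_treatment, retained_treatment, lost_control, retained_control)
chi2_yates = chi_square_2x2(
    lost_treatment, retained_treatment, lost_control, retained_control, yates=True
)
print(f"uncorrected: chi2 = {chi2_plain:.3f}, P = {p_value_df1(chi2_plain):.2f}")
print(f"Yates:       chi2 = {chi2_yates:.3f}, P = {p_value_df1(chi2_yates):.2f}")
```

Under these assumptions, both versions give a small statistic and a large P value, in line with the reported absence of differential attrition.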

Electricity Consumption Data.

All participants had 2 y of prior monthly electricity use (133,545 observations), from November 2009 to October 2011. Of these, 6.7% had monthly electricity bills based on estimates, rather than actual consumption, as a result of scheduled meter readings not being performed, typically owing to weather conditions or meter inaccessibility. They were excluded, as were a few (<0.1%) with zero readings. These exclusions left 124,578 observations in the analysis.

Meter Reading Dates.

Meter readings are not always performed on the scheduled day, meaning that the treatment period did not fully coincide with a single billing cycle of participants. Overall, there was a 97.9% overlap between participants’ bill cycle and the month of the treatment period. For the months before and after the treatment, the overlap was 9.5% and 3.6%, respectively. We adjusted our analyses to consider the days in the monthly billing cycle that overlapped with the study treatment and posttreatment periods.
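The overlap adjustment can be sketched as the fraction of a billing cycle's days that fall inside the treatment window. The sketch treats both intervals as half-open, [start, end), which is an assumption about how boundary days are counted; the example billing dates and the function name are hypothetical.

```python
from datetime import date

# Treatment window as reported in the Procedure section.
TREATMENT_START = date(2011, 7, 20)
TREATMENT_END = date(2011, 8, 20)


def overlap_fraction(bill_start, bill_end,
                     window_start=TREATMENT_START, window_end=TREATMENT_END):
    """Fraction of billing-cycle days in [bill_start, bill_end) that fall
    within [window_start, window_end)."""
    bill_days = (bill_end - bill_start).days
    if bill_days <= 0:
        raise ValueError("bill_end must be after bill_start")
    overlap_start = max(bill_start, window_start)
    overlap_end = min(bill_end, window_end)
    overlap_days = max((overlap_end - overlap_start).days, 0)
    return overlap_days / bill_days


# Hypothetical household whose meter was read two or three days early.
fraction = overlap_fraction(date(2011, 7, 18), date(2011, 8, 17))
print(f"{fraction:.3f} of this billing cycle falls in the treatment period")
```

For a treatment group household, this fraction plays the role of the interaction term in Eq. 1; for a control group household, that term is 0.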

Weather and Household Data.

Cooling degree days in the billing period for each household were calculated as the sum, over the days in the billing period, of the degrees by which the average daily temperature exceeded 65 °F, using the weather station closest to the household’s ZIP code. Heating degree days were calculated similarly for average daily temperatures below 65 °F. Household data were obtained from the utility company and 2010 Census data, for which we geo-referenced addresses to block and tract numbers. Supporting Information details all variables.
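The degree-day calculation can be sketched as follows, using the conventional definition with a 65 °F base. The temperature values and function names are hypothetical, and the per-day averaging at the end reflects the "average" degree days used in the regression as described in the Results.

```python
BASE_TEMP_F = 65.0


def cooling_degree_days(daily_mean_temps_f, base=BASE_TEMP_F):
    """Sum of degrees by which each day's mean temperature exceeds the base."""
    return sum(max(temp - base, 0.0) for temp in daily_mean_temps_f)


def heating_degree_days(daily_mean_temps_f, base=BASE_TEMP_F):
    """Sum of degrees by which each day's mean temperature falls below the base."""
    return sum(max(base - temp, 0.0) for temp in daily_mean_temps_f)


# Hypothetical daily mean temperatures (degrees F) for a short billing period.
temps = [70.0, 60.0, 65.0, 80.0]
cdd = cooling_degree_days(temps)
hdd = heating_degree_days(temps)
print(f"CDD = {cdd}, HDD = {hdd}")
print(f"average per day: CDD = {cdd / len(temps)}, HDD = {hdd / len(temps)}")
```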

Survey Respondents.

Participants from the treatment (n = 600) and control (n = 370) groups were mailed a survey. Given our interest in how the intervention affected treatment group members, they were oversampled, with the control group’s survey responses used to compare self-reported energy-saving actions (See Supporting Information for details). Sixty-nine participants had moved or closed their accounts, hence did not receive the survey. From the 572 (treatment group) and 329 (control group) participants, the overall response rate was 42.5%, with no significant difference between groups (P = 0.97). This response rate is greater than the response rate normally reached by the utility company that serves these customers, which is around 10%. See Supporting Information for respondents’ and nonrespondents’ characteristics, using Census and electricity-use information, with a few seemingly minor differences.

Procedure.

Participants received a package containing a cover letter explaining the goal of the survey, a 5-min survey with questions about electricity, a $2 bill, and a postage-paid envelope. One week later, all participants received a thank-you-and-reminder postcard. Participants had the option of answering the survey online. Two-thirds of participants were randomly offered participation in a raffle if they returned the survey before the end of September, as part of our ongoing study of how to increase participation rates (it made no difference). Supporting Information details all questions included in the survey.


Acknowledgments

We thank W. Bruine de Bruin, D. Caruso, and J. Wang for their help in this study and The Energy and Behavior Group for valuable comments. This material is based upon work supported by the Department of Energy under Awards DE-OE0000300 and DE-OE0000204. This report was prepared as an account of work sponsored by an agency of the United States Government. Neither the United States Government nor any agency thereof, nor any of their employees, makes any warranty, express or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States Government or any agency thereof. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States Government or any agency thereof.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission. T.D. is a guest editor invited by the Editorial Board.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1301687110/-/DCSupplemental.

References

- 1.Roethlisberger FJ, Dickson WD. Management and the Worker. Cambridge, MA: Harvard Univ Press; 1939. [Google Scholar]

- 2.French JRJ. In: Experiments in Social Process: A Symposium on Social Psychology. Miller JG, editor. New York: McGraw-Hill; 1950. pp. 81–96. [Google Scholar]

- 3.French JRJ. In: Research Methods in the Behavioral Sciences. Festinger L, Katz D, editors. New York: Holt, Rinehart and Winston; 1953. pp. 98–135. [Google Scholar]

- 4.Jones S. Was there a Hawthorne effect? AJS. 1992;98(3):451–468. [Google Scholar]

- 5.Levitt SD, List JA. Was there really a Hawthorne effect at the Hawthorne plant? An analysis of the original illumination experiments. Am Econ J Appl Econ. 2011;3(1):224–238. [Google Scholar]

- 6.Izawa MR, French MD, Hedge a. Shining new light on the hawthorne illumination experiments. Human factors. J Human Factors Ergonom Soc. 2011;53(5):528–547. doi: 10.1177/0018720811417968. [DOI] [PubMed] [Google Scholar]

- 7. Carey A. The Hawthorne studies: A radical criticism. Am Sociol Rev. 1967;32(3):403–416.

- 8. Mangione-Smith R, Elliott MN, McDonald L, McGlynn EA. An observational study of antibiotic prescribing behavior and the Hawthorne effect. Health Serv Res. 2002;37(6):1603–1623. doi: 10.1111/1475-6773.10482.

- 9. Adair J, Sharpe D, Huynh C-L. Hawthorne control procedures in educational experiments: A reconsideration of their use and effectiveness. Rev Educ Res. 1989;59(2):215–228.

- 10. Cook DL. The Hawthorne effect in educational research. Phi Delta Kappan. 1962;44(3):116–122.

- 11. Bouchet C, Guillemin F, Briançon S. Nonspecific effects in longitudinal studies: Impact on quality of life measures. J Clin Epidemiol. 1996;49(1):15–20. doi: 10.1016/0895-4356(95)00540-4.

- 12. Gerber AS, Green DP, Larimer CW. Social pressure and voter turnout: Evidence from a large-scale field experiment. Am Polit Sci Rev. 2008;102(1):33–48.

- 13. Eckmanns T, Bessert J, Behnke M, Gastmeier P, Ruden H. Compliance with antiseptic hand rub use in intensive care units: The Hawthorne effect. Infect Control Hosp Epidemiol. 2006;27(9):931–934. doi: 10.1086/507294.

- 14. Parsons HM. What happened at Hawthorne?: New evidence suggests the Hawthorne effect resulted from operant reinforcement contingencies. Science. 1974;183(4128):922–932. doi: 10.1126/science.183.4128.922.

- 15. Adair JG. The Hawthorne effect: A reconsideration of the methodological artifact. J Appl Psychol. 1984;69(2):334–345.

- 16. Feil PH, Grauer JS, Gadbury-Amyot CC, Kula K, McCunniff MD. Intentional use of the Hawthorne effect to improve oral hygiene compliance in orthodontic patients. J Dent Educ. 2002;66(10):1129–1135.

- 17. Attari SZ, DeKay ML, Davidson CI, Bruine de Bruin W. Public perceptions of energy consumption and savings. Proc Natl Acad Sci USA. 2010;107(37):16054–16059. doi: 10.1073/pnas.1001509107.

- 18. Tompson T, et al. (2012) Energy issues: How the public understands and acts. Available at www.apnorc.org/PDFs/Energy/AP-NORC-Energy-Report.pdf. Accessed August 14, 2013.

- 19. Wood G, Newborough M. Dynamic energy-consumption indicators for domestic appliances: Environment, behaviour and design. Energy Build. 2003;35(8):821–841.

- 20. Kasulis JJ, Huettner DA, Dikeman NJ. The feasibility of changing electricity consumption patterns. J Consum Res. 1981;8(3):279–290.

- 21. Ossege A, Ozog M, Thompson P (2011) Comparison reports: Analytical insights and utility business case. Available at http://sage-view.com/wp-content/uploads/2012/01/AESP%20Comparison%20Reports.pdf. Accessed August 14, 2013.

- 22. Faruqui A, Sergici S, Akaba L (2011) Consumers Energy’s personal power plan pilot: Summer 2010 impact evaluation. Available at http://efile.mpsc.state.mi.us/efile/docs/16794/0003.pdf. Accessed August 14, 2013.

- 23. American Council for an Energy-Efficient Economy (2011) State Energy Efficiency Resource Standard (EERS) activity. Available at www.aceee.org/sites/default/files/publications/researchreports/u112.pdf. Accessed August 14, 2013.

- 24. US Department of Energy (2010) Design and implementation of program evaluations that utilize randomized experimental approaches. Available at www.smartgrid.gov/sites/default/files/doc/files/Guidance-doc-7-randomized-experimental-approaches.pdf. Accessed August 14, 2013.

- 25. Dillman D. Mail and Internet Surveys: The Tailored Design Method. New York: Wiley; 2000.

- 26. Nisbett R, Wilson T. Telling more than we can know: Verbal reports on mental processes. Psychol Rev. 1977;84(3):231–259.

- 27. Ericsson KA, Simon H. Protocol Analysis: Verbal Reports as Data. Cambridge, MA: MIT Press; 1993.

- 28. Gardner G, Stern PC. The short list: The most effective actions US households can take to curb climate change. Environment Magazine. 2008;50(5):12–24.

- 29. Allcott H. Social norms and energy conservation. J Public Econ. 2011;95(9):1082–1095.

- 30. Lied T, Kazandjian V. A Hawthorne strategy: Implications for performance measurement and improvement. Clin Perform Qual Health Care. 1998;6(4):201–204.
