Abstract

Context

The CAHPS® survey instruments are widely used to assess patient experiences with care but there is limited information about web-based data collection with them.

Objective

To compare web-based data collection with standard mail survey mode of collection of CAHPS® Clinician and Group survey data.

Design, setting, and patients

We randomized mode of data collection (web versus mail) of the CAHPS® Clinician and Group Survey to patients who had visited one of six clinics over a four-month period in Minnesota. A total of 410 patients responded to the web-based survey (14% response rate) and 982 patients responded to the mail survey (33% response rate).

Main outcome measures

Responses to CAHPS® survey dimensions and individual question responses, response rates, and participant characteristics.

Results

There were no significant differences in CAHPS® survey composites and individual question responses by mode, except for those addressing access. Those responding via the web reported less positive experiences with access to an appointment for urgent care as soon as needed, getting an appointment for routine care as soon as needed, getting answers to medical questions as soon as needed, and follow-up on test results (t’s=−3.64, −7.15, −2.58, −2.23; p’s=0.0003, <0.0001, 0.01, 0.03, respectively). Web respondents had more positive experiences about office wait time for the most recent visit (t = 2.32, p=0.021).

Those who participated in the study tended to be older than those who did not (χ²=247.51, df=8, p<0.0001 for mail; χ²=34.56, df=8, p<0.0001 for the web). Females were significantly more likely than males to respond to the survey overall (24% vs. 18%, χ²=6.45, 1 df, p=0.011) and relatively more likely than males to respond to web (15% vs. 13%, χ²=1.32, 1 df, p=0.25) than mail (34% vs. 30%, χ²=5.42, 1 df, p=0.02). Mail respondents were more likely than web respondents to be male (28% versus 18%, χ²=16.27, 1 df, p<0.0001) and older (27% of the mail respondents and 19% of the web respondents were 65 or older, χ²=10.88, 1 df, p=0.001). Web-based surveys cost less than mailed surveys and were returned more quickly. The correlations between reports and ratings of clinicians and clinics by mode were unreliable because of the relatively small number of web responses.

Conclusion

Web-based surveys yielded comparable results to mail (except for questions addressing access) more quickly at lower costs. The low response rates in this study are a concern although this was not intended as a test of increasing response rates. Strategies to increase response rates will be a key element of web-based data collection. The differences in costs will be an incentive for organizations to continue to pursue web-based surveying. Further studies are needed to evaluate the generalizability of the results of this one.

Keywords: CAHPS®, Mode effects, Consumer assessments, Patient evaluation of health care

Introduction

The Consumer Assessment of Healthcare Providers and Systems (CAHPS®) survey instruments are widely used to assess patient experiences with care. Both mail and telephone modes of data collection are commonly used. Physician groups and providers believe that driving improvement at the clinician level requires engaging clinicians with meaningful, timely feedback. Feedback from mailed surveys is often slow and can require 2–3 months to get information to clinicians. In addition, collecting data by mail or telephone survey is expensive, typically $10 per completed survey. Faster and less costly methods of data collection are desired. But there is limited information about the results and relative costs of alternative modes such as web-based data collection.

The intent of this study was to compare results of CAHPS® survey composites and individual question responses by mode in a real-world setting. Feedback intervals and costs by mode of survey administration were also studied.

Existing studies suggest that individuals who complete online surveys differ from those who complete mail or telephone surveys [1]. Web-based surveying has been found to have lower response rates than mail [2,3]. A recent mixed-mode study that used the web as the primary mode of data collection obtained a response rate of 53%, with 72% of the completes via the web [4]. Web respondents were significantly more likely to be white and perceived their health more positively than mail respondents, but there were no differences in perceptions of communication or global ratings of the doctor [5]. A study by Rodriguez et al. found few significant differences in responses to the CAHPS® Clinician and Group 1.0 survey between mail, web and interactive voice response, and rankings of clinics and individual clinicians were similar [2].

Electronic methods for collecting information are increasingly part of everyday life, although health care lags most other industries. Electronic methods for reporting health status have been advocated [3,6] and electronic tools to assess the patient experience have promise, especially for performance improvement and more rapid feedback [1,2,7,8].

Materials and Methods

Study Setting

Allina Health is a not-for-profit healthcare delivery system headquartered in Minnesota, comprising 11 hospitals and over 123 clinics, with a history of working to improve the clinical care and experience of care for patients. Clinicians working in outpatient settings are 75% primary care and 25% specialty care clinicians. Timely performance feedback of clinical quality and patient experience at the individual clinician level is a key feature of improvement efforts. Delays inherent with mailed patient experience surveys and their prohibitive costs for a large organization prompted this study of web-based surveying.

Voluntary public reporting of patient experiences with care data at the clinic level began in Minnesota in 2008 with the CAHPS® Clinician and Group 1.0 hybrid survey. In 2012, the Minnesota Department of Health (MN DOH) required all clinics with greater than 715 unique face-to-face patient visits in a quarter to administer the CAHPS® Clinician and Group Survey to a sample of patients by mail. Results will be publicly reported on the Minnesota Community Measurement (MNCM) website in the summer of 2013.

Public reporting in Minnesota requires an external vendor to administer the survey. The cost to survey patients for three months at the clinic level for the Allina Health clinics, in 2011 dollars, is approximately $265,000 based on $10 per completed mailed survey, which yields clinic-level but not clinician-level data. This study was planned when mandated participation in the MN DOH public reporting via a mailed survey had not been finalized.

We compared responses to CAHPS® survey composites and individual questions, response rates, participant characteristics, time required for response, and costs from web-based versus mail data collection.

Survey instrument

The CAHPS® Clinician and Group hybrid survey (visit-based) was used. This survey was first tested statewide in Minnesota during 2008 with results published on Minnesota Community Measurement at www.mncm.org. The survey asks respondents about experiences during their most recent outpatient doctor visit regarding doctor communication and office staff courtesy and respect, using a three-category response scale (Yes, definitely; Yes, somewhat; No). In addition, access to care during the last 12 months is assessed using a four-category response scale (Never; Sometimes; Usually; Always). Finally, patients are asked to provide a global rating of the doctor (on a 0–10 scale). Nine demographic questions are included in the CAHPS® Clinician and Group Survey.

Survey methods

We administered the CAHPS® Clinician and Group Survey to a sample of patients receiving care at six clinics over a four-month time frame. The clinics represented a mix of rural and urban locations drawn from a large clinic and hospital system in Minnesota. The clinics were characterized by a range of performance on a previous homegrown survey: one was above the clinic mean, two were near the mean, and the remaining three were below the mean. Patients from the six clinics were seen by 40 primary care physicians (family practice and internal medicine).

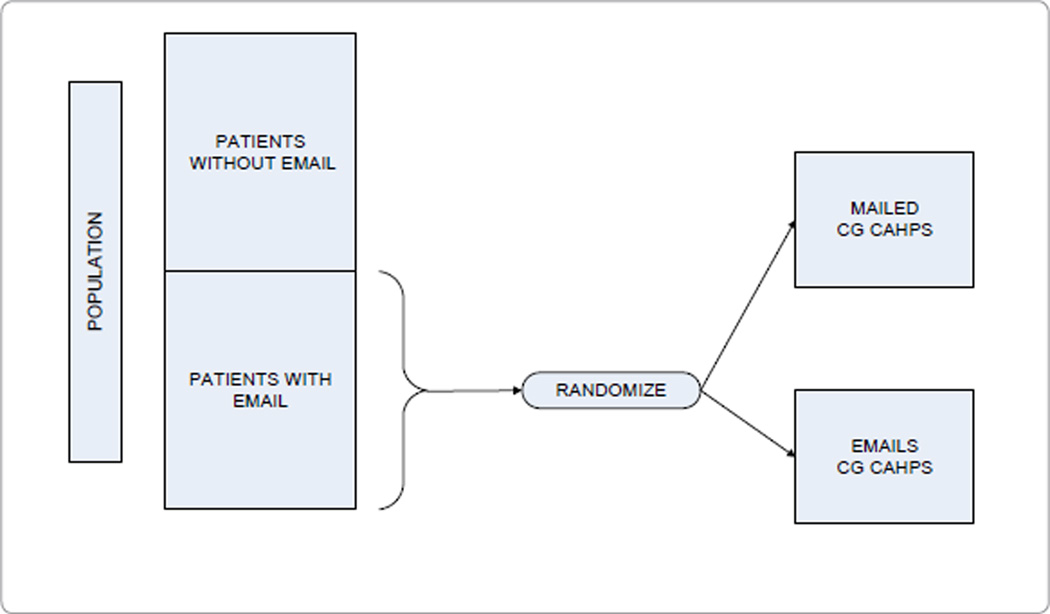

Patients from the clinic sites were randomized to receive either an e-mailed (web mode) or mailed (mail mode) invitation to complete the survey from the Executive Vice President of the organization (Figure 1). For patients randomized to the web mode (n=6,105), the e-mail contained an electronic link that brought the patient to the survey website. The e-mail included a code that the patient entered in the entry screen to initiate the survey. A three-wave survey process was administered, with a reminder sent to patients who had not yet responded three days after the first e-mail and a second reminder eight days after the first e-mail. The survey technology was compatible with browser-based devices (i.e., Droid®, iPhone®/iPad®) but not with all phone platforms (e.g., Blackberry®). For those randomized to the mail mode (n=3,208), the invitation letter wording was identical to the e-mailed invitation. A two-wave mailed survey was administered such that patients not responding within two weeks were mailed another invitation letter and a second survey.

Figure 1.

Study design.

Both web and mail surveys were administered by the Allina Health survey vendor for the HCAHPS® (Hospital Consumer Assessment of Healthcare Providers and Systems) survey, Avatar International, LLC. Lists of eligible patients seen in the prior week were forwarded to the survey partner, and patients were sent survey invitations on a weekly basis.

Sample

Patients surveyed were 18 years or older, and had a clinic visit during September through December of 2010 with one of the 40 primary care physicians in one of the six clinic study sites. The patients eligible for the survey were those with an e-mail address on file in the Electronic Medical Record (EMR). Most patient e-mails were known because patients had electronic access to their medical record through MyChart® (>90%), although there were patients with e-mails on file who did not have MyChart®. No special effort was made to update lists of e-mails prior to the study as the intent was to compare the modes as currently implemented.

We expected about 6,000 patients with an e-mail address on file to have visits at the six clinics during the four-month period (range of e-mail address prevalences at the six clinics: 16% to 31%). We anticipated about a 25–30% response rate to the web survey and about 37% response rate to the mail survey based on prior studies and recent experience.

We projected about 1,250 responses for each survey mode. With this number of completes, we would have 80% power to detect a 0.11 effect size (trivial difference) for a two-sided test at p<0.05. We ended up with 410 responses by web (14% response rate) and 982 by mail (33% response rate). With the obtained sample sizes, we had 80% power to detect a 0.17 effect size (also a trivial difference) by mode.
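The power figures above can be approximated with a short calculation. The sketch below is illustrative only: it assumes a two-sample comparison of means with a normal approximation, which the text does not confirm as the method actually used, and the function name `minimum_detectable_effect` is hypothetical. Under these assumptions it gives about 0.11 for the planned sample and roughly 0.16 to 0.17 for the obtained sample, in line with the values reported.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_effect(n_mail: int, n_web: int,
                              alpha: float = 0.05,
                              power: float = 0.80) -> float:
    """Smallest standardized mean difference detectable by a two-sided test.

    Normal approximation (an assumption, not the authors' stated method):
        d = (z_{1 - alpha/2} + z_{power}) * sqrt(1/n_mail + 1/n_web)
    """
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)  # critical value, two-sided
    z_power = standard_normal.inv_cdf(power)          # quantile for the target power
    return (z_alpha + z_power) * sqrt(1 / n_mail + 1 / n_web)


# Planned design: about 1,250 completes per mode.
planned = minimum_detectable_effect(1250, 1250)

# Obtained sample: 982 mail and 410 web completes.
obtained = minimum_detectable_effect(982, 410)

print(f"planned: {planned:.2f}, obtained: {obtained:.3f}")
```

The unequal group sizes matter here: the smaller web sample dominates the standard error, so the detectable effect grows even though the mail sample stayed close to its target.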

The study was declared exempt by the Allina IRB.

Analysis plan

To evaluate CAHPS® Clinician and Group Survey responses to composites and individual questions by mode of administration, we ran ordinary least squares regression models, adjusting for gender, age, education, race/ethnicity, and self-rated health. We compared the characteristics of those randomized to web versus mail using chi-square statistics. To evaluate the extent to which the two modes of survey administration yielded similar results, we computed intraclass and rank-order correlations among scores. We also estimated the cost per returned web-based survey.

Results

There were no significant differences in the doctor communication composite, the office staff composite, or willingness to recommend the doctor’s office question by mode (Table 2). Those who responded by web reported less positive experiences than mail respondents with access to care, including ‘In the last 12 months, when you phoned this provider’s office to get an appointment for care you needed right away, how often did you get an appointment as soon as you needed?’ (Web 74%, Mail 83%, p=0.0003); ‘In the last 12 months, when you made an appointment for a check-up or routine care with this provider, how often did you get an appointment as soon as you needed?’ (Web 74%, Mail 86%, p<0.0001); ‘In the last 12 months, when you phoned this provider’s office during regular office hours, how often did you get an answer to your medical question that same day?’ (Web 72%, Mail 79%, p=0.01); ‘In the last 12 months, when you phoned this provider’s office after regular office hours, how often did you get an answer to your medical question as soon as you needed?’ (Web 56%, Mail 73%, p=0.09); and ‘Did someone from this provider’s office follow up to give you those results?’ (Web 79%, Mail 85%, p=0.026). However, web respondents reported more positive experiences with ‘During your most recent visit, did you see this provider within 15 minutes of your appointment time?’ (Web 84%, Mail 78%, p=0.021). When we adjusted for differences in gender, age, education, race/ethnicity and self-rated health (Table 3), the results were the same as the unadjusted results except that follow-up on test results did not differ significantly by mode. Effect sizes for the significant mode differences were trivial to small in magnitude.

Table 2.

Unadjusted Means (SD) by Mode of Administration.

| Measure | Mail | Web | t-statistic | p-value |
|---|---|---|---|---|
| Doctor communication | 95 (15) | 94 (15) | −0.34 | 0.7331 |
| 18. explain things (recent visit) | 96 (16) | 96 (17) | 0.14 | 0.8909 |
| 19. listen carefully (recent visit) | 95 (17) | 95 (18) | −0.63 | 0.5265 |
| 21. easy to understand instructions (recent visit) | 94 (20) | 94 (19) | 0.70 | 0.4866 |
| 22. know important information (recent visit) | 92 (21) | 92 (22) | −0.01 | 0.9914 |
| 23. show respect (recent visit) | 96 (15) | 96 (16) | −0.53 | 0.5980 |
| 24. spend enough time (recent visit) | 95 (17) | 93 (21) | −1.12 | 0.2650 |
| Access to care | 78 (22) | 70 (31) | −5.43 | <0.0001 |
| 6. appointment for urgent care | 83 (23) | 74 (36) | −3.64 | 0.0003 |
| 8. appointment for routine care | 86 (21) | 74 (35) | −7.15 | <0.0001 |
| 10. got answer to questions same day | 79 (26) | 72 (34) | −2.58 | 0.0101 |
| 12. got answers to questions as soon as needed | 73 (37) | 56 (41) | −1.71 | 0.0926 |
| 13. seen within 15 minutes | 69 (32) | 66 (34) | −1.55 | 0.1210 |
| 15. office wait time (recent visit) | 78 (41) | 84 (37) | 2.32 | 0.0206 |
| 17. follow-up on test results (recent visit) | 85 (35) | 79 (41) | −2.23 | 0.0261 |
| Office staff | 94 (18) | 93 (17) | −0.51 | 0.6127 |
| 28. staff helpful (recent visit) | 93 (20) | 92 (19) | −0.40 | 0.6909 |
| 29. staff respectful (recent visit) | 95 (17) | 94 (17) | −0.90 | 0.3701 |
| Global rating of doctor | 91 (14) | 89 (15) | −1.56 | 0.1184 |
| Recommend doctor’s office | 92 (21) | 92 (21) | 0.07 | 0.9439 |

Note: All items and composites are scored on a 0–100 possible range, with higher being more positive experiences with care.

Table 3.

Adjusted Means by Mode of Administration.

| Measure | Mail | Web | t-statistic | p-value |
|---|---|---|---|---|
| Doctor communication | 95 | 96 | −0.59 | 0.5551 |
| 18. explain things (recent visit) | 97 | 98 | −0.99 | 0.3244 |
| 19. listen carefully (recent visit) | 95 | 95 | −0.17 | 0.8647 |
| 21. easy to understand instructions (recent visit) | 94 | 96 | −1.41 | 0.1595 |
| 22. know important information (recent visit) | 91 | 92 | −0.69 | 0.4905 |
| 23. show respect (recent visit) | 97 | 97 | −0.23 | 0.8159 |
| 24. spend enough time (recent visit) | 97 | 96 | 0.39 | 0.6939 |
| Access to care | 77 | 69 | 4.82 | <0.0001 |
| 6. appointment for urgent care | 83 | 74 | 3.32 | 0.0010 |
| 8. appointment for routine care | 85 | 74 | 6.65 | <0.0001 |
| 10. got answer to questions same day | 78 | 70 | 2.81 | 0.0051 |
| 12. got answers to questions as soon as needed | 73 | 48 | 1.90 | 0.0647 |
| 13. seen within 15 minutes | 67 | 65 | 0.95 | 0.3420 |
| 15. office wait time (recent visit) | 77 | 84 | −2.88 | 0.0041 |
| 17. follow-up on test results (recent visit) | 84 | 81 | 1.11 | 0.2691 |
| Office staff | 94 | 94 | 0.00 | 0.9964 |
| 28. staff helpful (recent visit) | 92 | 93 | −0.07 | 0.9450 |
| 29. staff respectful (recent visit) | 96 | 96 | 0.45 | 0.6514 |
| Global rating of doctor | 91 | 91 | 0.81 | 0.4190 |
| Recommend doctor’s office | 94 | 95 | −0.74 | 0.4618 |

Note: Significance testing was from multiple regression model, adjusting for gender, age, education, race/ethnicity and self-rated health. Holdout group is female, white, age 45–54, some college education, and very good self-rated health.

Those who participated in the study tended to be a little older than those who did not (χ²=247.51, df=8, p<0.0001 for mail; χ²=34.56, df=8, p<0.0001 for the web), and those responding to the mail and web surveys differed significantly on some characteristics. Females were significantly more likely than males to respond to the survey overall (24% vs. 21%, χ²=6.45, 1 df, p=0.011) and were relatively more likely than males to respond to web (15% vs. 13%, χ²=1.32, 1 df, p=0.25) than mail (34% vs. 30%, χ²=5.42, 1 df, p=0.02). As shown in Table 1 (i–iii), mail respondents were less likely than web respondents to report having visited the doctor in the last 12 months (96% versus 99.7%, χ²=14.97, 1 df, p=0.0001) and in the last month (63% versus 78%, χ²=28.41, 1 df, p<0.0001). However, mail respondents were more likely than web respondents to have been with the sampled doctor for more than six months (84% versus 81%, χ²=1.54, 1 df, p=0.22). Mail respondents were more likely than web respondents to be male (28% versus 18%, χ²=16.27, 1 df, p<0.0001). Older persons were much more likely to participate in the mail than web surveys (27% of the mail respondents and 19% of the web respondents were 65 or older, χ²=10.88, 1 df, p=0.001).

Table 1.

(i): Characteristics of Mail (n = 982) and Online/web (n = 410) Respondents.

| Characteristic | Mail | Web | χ² | p-value |
|---|---|---|---|---|
| Age (Q35) | | | 16.97 | 0.0094 |
| 18–24 | 20 (2%) | 14 (4%) | 2.38 | 0.1231 |
| 25–34 | 91 (10%) | 53 (13%) | 4.39 | 0.0361 |
| 35–44 | 109 (11%) | 57 (14%) | 2.33 | 0.1272 |
| 45–54 | 200 (21%) | 86 (22%) | 0.11 | 0.7451 |
| 55–64 | 267 (28%) | 109 (28%) | 0.02 | 0.8786 |
| 65–74 | 194 (20%) | 52 (13%) | 9.67 | 0.0019 |
| 75+ | 68 (7%) | 23 (6%) | 0.76 | 0.3830 |
| Gender (Q36) | | | 16.27 | 0.0001 |
| Female | 674 (72%) | 320 (82%) | 16.49 | <0.0001 |
| Male | 266 (28%) | 69 (18%) | 16.19 | 0.0001 |
| Education (Q37) | | | 3.15 | 0.6766 |
| 8th grade or less | 3 (0.3%) | 2 (0.5%) | 0.28 | 0.5975 |
| Some high school | 9 (1%) | 4 (1%) | 0.01 | 0.9053 |
| High school graduate or GED | 159 (17%) | 54 (14%) | 1.88 | 0.1700 |
| Some college | 308 (33%) | 122 (31%) | 0.25 | 0.6173 |
| 4-year college | 216 (23%) | 96 (25%) | 0.44 | 0.5079 |
| >4-year college | 247 (26%) | 113 (29%) | 1.06 | 0.3027 |

(ii): Characteristics of Mail (n = 982) and Online/web (n = 410) Respondents, Cont’d.

| Characteristic | Mail | Web | χ² | p-value |
|---|---|---|---|---|
| Race/ethnicity | | | 5.58 | 0.2325 |
| Hispanic (Q38) | 8 (1%) | 6 (2%) | 1.26 | 0.2612 |
| White (Q39A) | 896 (95%) | 362 (93%) | 2.51 | 0.1134 |
| Black (Q39B) | 9 (1%) | 9 (2%) | 3.79 | 0.0516 |
| Asian (Q39C) | 15 (2%) | 8 (2%) | 0.34 | 0.5575 |
| Other race (Q39F) | 17 (2%) | 6 (2%) | 0.11 | 0.7353 |
| Length of time going to doctor (Q3) | | | 3.02 | 0.5360 |
| < 6 months | 149 (16%) | 76 (19%) | 1.58 | 0.2090 |
| 6–11 months | 55 (6%) | 26 (6%) | 0.14 | 0.7118 |
| 1 year to < 3 years | 175 (19%) | 81 (20%) | 0.28 | 0.5955 |
| 3 years to < 5 years | 144 (16%) | 63 (16%) | 0.00 | 0.9555 |
| 5 years or more | 401 (43%) | 156 (39%) | 2.33 | 0.1269 |
| Doctor visits in last 12 months (Q4) | | | 24.46 | 0.0004 |
| None | 38 (4%) | 1 (0.3%) | 14.50 | 0.0001 |
| 1 | 174 (19%) | 92 (23%) | 3.12 | 0.0774 |
| 2 | 212 (23%) | 112 (28%) | 3.98 | 0.0461 |
| 3 | 229 (25%) | 85 (21%) | 1.82 | 0.1771 |
| 4 | 118 (13%) | 49 (12%) | 0.06 | 0.8118 |
| 5–9 | 111 (12%) | 59 (15%) | 1.93 | 0.1646 |
| 10 or more | 36 (4%) | 9 (2%) | 2.27 | 0.1319 |

(iii): Characteristics of Mail (n = 982) and Online/web (n = 410) Respondents, Cont’d.

| Characteristic | Mail | Web | χ² | p-value |
|---|---|---|---|---|
| Recency of last visit (Q14) | | | 32.89 | <0.0001 |
| 1 month | 540 (63%) | 317 (78%) | 32.99 | <0.0001 |
| 1 month to < 3 months | 176 (21%) | 46 (11%) | 15.06 | 0.0001 |
| 3 months to < 6 months | 59 (7%) | 10 (2%) | 10.02 | 0.001 |
| 6 months to 11 months | 45 (5%) | 18 (4%) | 0.31 | 0.5791 |
| 12 months or longer | 38 (4%) | 15 (4%) | 0.13 | 0.7150 |
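As a worked illustration of the chi-square comparisons of respondent characteristics, the sketch below recomputes the gender-by-mode statistic from the counts in Table 1 (674 female and 266 male mail respondents; 320 female and 69 male web respondents). It assumes a Pearson chi-square without continuity correction, which the text does not state explicitly; the function name `pearson_chi_square_2x2` is hypothetical. Under that assumption the result is about 16.27 on 1 df, matching the reported value.

```python
from math import erfc, sqrt


def pearson_chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns the statistic and its p-value on 1 degree of freedom.
    """
    observed = ((a, b), (c, d))
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    n = a + b + c + d

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected

    # For 1 df, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = erfc(sqrt(chi2 / 2))
    return chi2, p_value


# Rows: female, male. Columns: mail, web (counts from Table 1).
chi2, p = pearson_chi_square_2x2(674, 320, 266, 69)
print(f"chi-square = {chi2:.2f}, p = {p:.5f}")
```

The gender counts sum to fewer than the 982 mail and 410 web respondents because not every respondent answered the gender question.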

Intraclass correlations and Spearman correlations (respectively) at the clinic level (n=6) were low: access to care (0.00 and 0.31, p=0.54), communication (0.00 and −0.37, p=0.47), office staff (0.37 and 0.03, p=0.96), global rating of physician (0.32 and 0.43, p=0.40), and would recommend the doctor’s office (0.58 and 0.37, p=0.47). Intraclass correlations and Spearman correlations (respectively) at the physician level (n=40) also tended to be low: access to care (0.04 and 0.26, p=0.11), communication (0.48 and 0.56, p=0.0002), office staff (0.20 and 0.19, p=0.26), global rating of physician (0.62 and 0.72, p ≤ 0.0001), and would recommend the physician to family and friends (0.36 and 0.30, p=0.062).

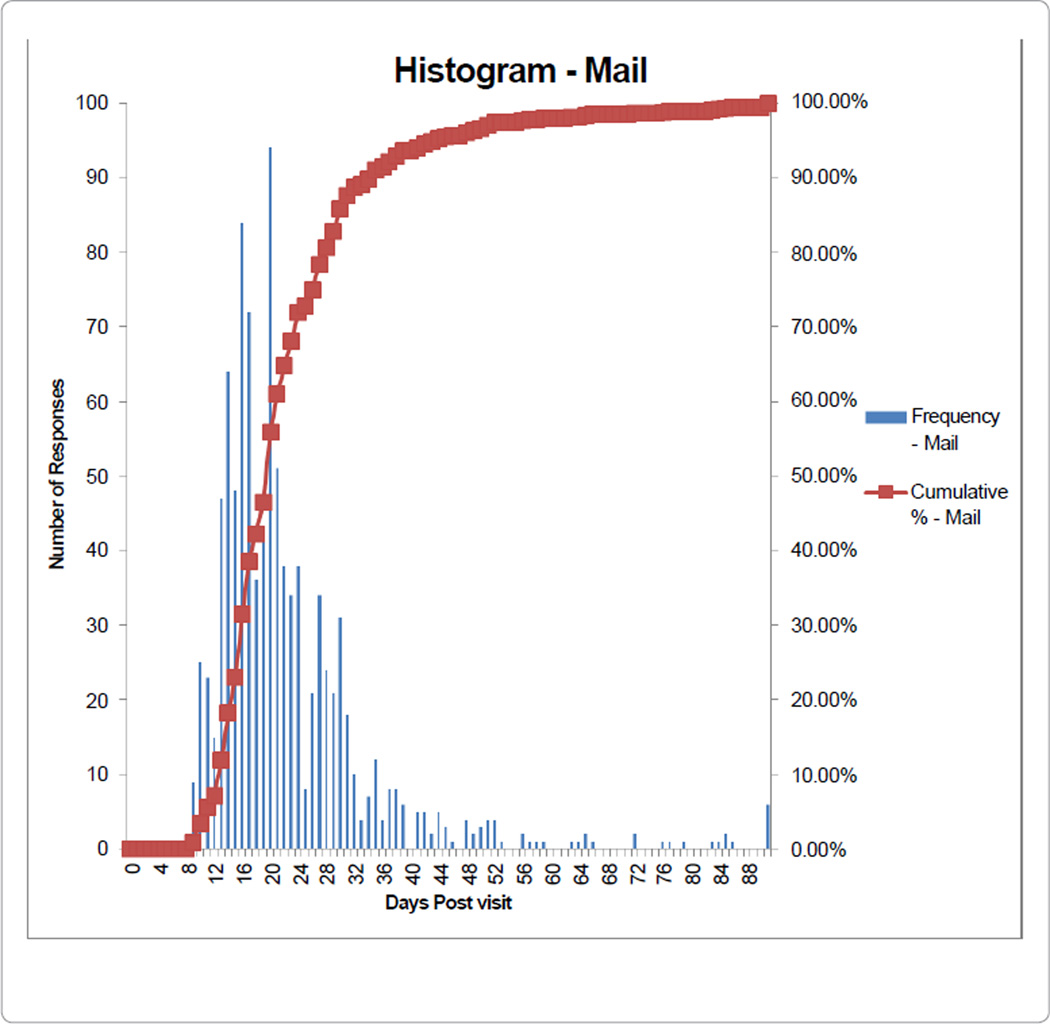

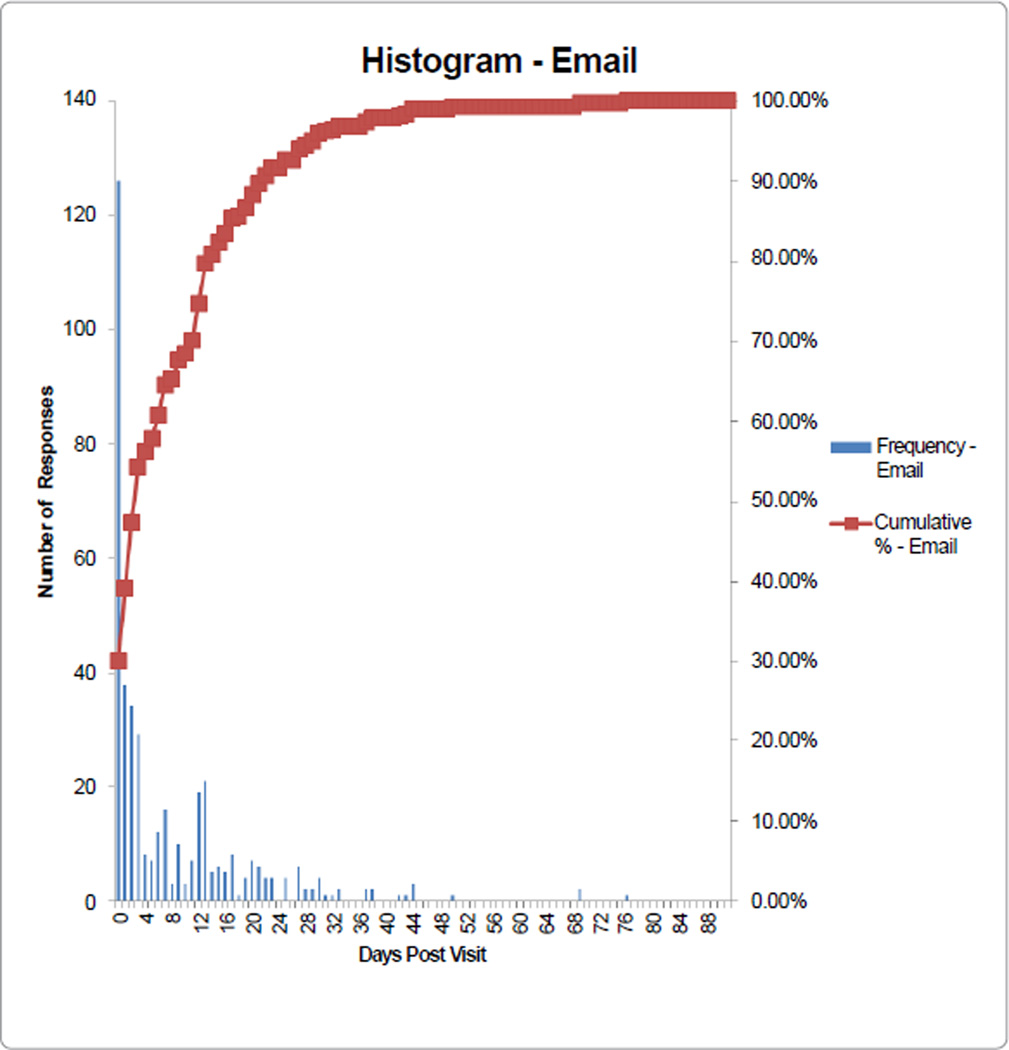

The cost per returned web-based survey was less than $2.00, much less than Allina Health’s historical cost of about $10 per completed survey, but it varied with each clinic’s volume of patients with e-mail addresses on file. Unlike mail surveys, once the one-time setup costs were incurred, the number of surveys could be increased without additional cost. Web surveys were returned more quickly than mail surveys (Figures 2 and 3). Specifically, while the mailed results are considered “complete” at about eight weeks from the date of service, the web surveys could be considered “complete” at about four weeks from the date of service.

Figure 2.

Response time to mailed survey.

Figure 3.

Response time to electronic survey.

Discussion

Despite the differences in those responding, there were no significant differences in the doctor communication composite, office staff composite, or willingness to recommend the doctor’s office question by mode. The questions addressing access were the only ones that differed significantly by mode of administration. Access to appointments for routine care had the largest difference (~1/3 SD) between the two modes. Office wait time was rated more positively on web surveys, but the magnitude of the difference was trivial (~1/6 SD). It is possible that the differences in reports about access to care by mode are in part due to delays inherent in delivering a survey via the mail. Persons who use electronic tools such as MyChart® and who are willing to complete a survey online may have higher expectations for response and wait times than persons not adopting these technologies. In addition, there may be flaws in the CAHPS® Clinician and Group Survey itself: the questions do not reflect current practice, because they ask about scheduling by phone when many patients are scheduling appointments online.
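The approximate effect sizes quoted above can be related to the unadjusted means and standard deviations in Table 2. The sketch below is an illustration under a stated assumption: the text does not say which standard deviation served as the denominator, and dividing by the web-group SD is simply the choice that comes closest to the quoted fractions. The function name `standardized_difference` is hypothetical.

```python
def standardized_difference(mean_mail: float, mean_web: float,
                            sd_reference: float) -> float:
    """Web-minus-mail difference in means, expressed in SD units.

    sd_reference is the standard deviation used as the yardstick; which SD
    the original analysis used is an assumption here.
    """
    return (mean_web - mean_mail) / sd_reference


# Item 8, appointment for routine care: mail 86 (SD 21), web 74 (SD 35).
routine_care = standardized_difference(86, 74, sd_reference=35)

# Item 15, office wait time: mail 78 (SD 41), web 84 (SD 37).
office_wait = standardized_difference(78, 84, sd_reference=37)

print(f"routine care: {routine_care:.2f} SD, office wait: {office_wait:.2f} SD")
```

A negative value means web respondents reported less positive experiences than mail respondents; the magnitudes are roughly one third and one sixth of an SD, respectively.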

Because the number of completes for individual physicians was relatively low (about 20 per physician for web surveys), our estimates of correlations between CAHPS® scores by mode had large standard errors. For both clinics and physicians, the rank order correlations for the CAHPS® office staff composite tended to be lower than for the communication composite.

Web-based surveys lived up to the expectation of obtaining patient feedback more quickly and were less expensive than mail surveys. Once the small one-time setup costs were borne, an unlimited number of patients could be surveyed or reminders sent without incremental costs. Had we used a three-wave survey process for mail surveys, the cost differential would have been even larger.

We were discouraged by the 14% response rate for the web survey, far below the 33% response rate for mailed surveys and lower than what we had predicted based on previous studies of web-based surveys. If the low response rates found in this study are characteristic of web-based data collection, this will offset the potential benefits of rapid web-based data collection. We learned that less than 5% of e-mails “bounced” or were undelivered, which indicates that for the most part the addresses on file were correct. About 80% of e-mails were never opened, highlighting the need to publicize the survey and encourage Allina Health patients to open the e-mail. Of the patients who opened the e-mail, almost half completed the survey.

This study confirmed our worries that those responding via the web would be different from those responding by mail. Persons 65 and older and males were more likely to respond by mail, and younger persons and females were more likely to respond via the web. Despite these findings, perceptions of office staff, global ratings of the doctor, and willingness to recommend the doctor did not differ by mode on average, findings similar to those reported by others [2]. When significant mode differences were found, they were small or trivial.

We found patients were not answering the first question in the survey (“Our records show that you got care from the doctor named below in the last 12 months, is that right?”) that asks them to verify they got care from the doctor for which they were sampled. This question must be answered for public reporting of results, so the process was modified to require this question to be answered to proceed to the other questions. Unopened e-mails were common and we hypothesized that having the e-mail originating from an unfamiliar e-mail address did not encourage participation. We changed the source of the e-mail from the less familiar survey partner address to Allina.com.

The technology supporting the survey was improved as well. The process for initiating the survey changed from clicking on a link and adding a code to having the link bring the patient to the survey without having to enter a code. Originally the web-based survey had a forward button to advance to the next question but no back button. A back button has been added and advancing to the next question does not require a forward button. We also shortened the “wave-time” after initial survey push to three and five days, respectively, for the e-mail invitation.

Efforts to increase response rates began shortly after the study period ended. Electronic surveys were continued in the pilot clinics to measure the effect of interventions to improve response rates. We learned that our existing process for collecting e-mail addresses was inadequate, so the registration process was changed. Collection of e-mail addresses has been incorporated into the registration process with a “soft stop” or “yield” sign in the electronic medical record to remind staff to check the current e-mail address at every visit rather than only at the time of signup for MyChart®. Finally, we learned that we needed to publicize the change in survey mode to patients. An awareness campaign was initiated with posters in clinics letting patients know they might receive a web-based survey and that it was their opportunity to “give the clinic a checkup.”

Mailed surveys were discontinued system-wide in March 2011, and all clinics have been surveyed exclusively with web-based CG CAHPS starting in June 2011. The recent response rate for the Allina Health clinics is nearing 20%, much higher than the 14% response rate during the study in the six clinics, but it remains lower than the previous 35% response rate for mailed surveys.

Limitations

Because this study was of adults evaluating primary care providers, the results may not be generalizable to all clinic settings. The sample was not diverse and other samples may give different results. Samples of patients drawn from specialty practices may not yield the same results.

Conclusions

Administering the CAHPS® Clinician and Group Survey via the web produced mean responses that were very similar to those from a mailed mode of data collection, except for questions addressing access, despite differences in the characteristics of the respondents by mode. Web-based surveying was faster and less costly than the standard mail mode of administration. Web-based surveys appear to be a viable option for quality improvement purposes, although the low response rates in this study are a concern. Strategies to increase response rates will be a key element of web-based data collection. The differences in costs will be an incentive for organizations to continue to pursue web-based surveying. Further studies are needed to evaluate the generalizability of the results of this one.

Acknowledgements

Funding: This work was funded by Allina Health. Ron Hays was supported by a cooperative agreement from the Agency for Healthcare Research and Quality (U18 HS09204) and by grants from the NIA (P30-AG021684) and the NIMHD (2P20MD000182). The authors wish to thank Janet Wied who was instrumental in preparing clinic staff for this study and in troubleshooting low response rates to web-based surveys and improving them. We also thank Dale Shaller, PhD for his advice during the design of this study and Mary Slaughter for data analyses.

Footnotes

This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

References

- 1. Kamo N, Dandapani SV, Miksad RA, Houlihan MJ, Kaplan I, et al. Evaluation of the SCA instrument for measuring patient satisfaction with cancer care administered via paper or via the Internet. Ann Oncol. 2011;22:723–729. doi: 10.1093/annonc/mdq417.

- 2. Rodriguez HP, von Glahn T, Rogers WH, Chang H, Fanjiang G, et al. Evaluating patients’ experiences with individual physicians: a randomized trial of mail, internet, and interactive voice response telephone administration of surveys. Med Care. 2006;44:167–174. doi: 10.1097/01.mlr.0000196961.00933.8e.

- 3. Link MW, Mokdad AH. Alternative modes for health surveillance surveys: an experiment with web, mail, and telephone. Epidemiology. 2005;16:701–704. doi: 10.1097/01.ede.0000172138.67080.7f.

- 4. McInnes DK, Brown JA, Hays RD, Gallagher P, Ralston JD, et al. Development and evaluation of CAHPS questions to assess the impact of health information technology on patient experiences with ambulatory care. Med Care. 2012;50:S11–S19. doi: 10.1097/MLR.0b013e3182610a50.

- 5. Paper presented at the Annual meeting of the AAPOR; Phoenix, AZ.

- 6. Coons SJ, Gwaltney CJ, Hays RD, Lundy JJ, Sloan JA, et al. Recommendations on evidence needed to support measurement equivalence between electronic and paper-based patient-reported outcome (PRO) measures: ISPOR ePRO Good Research Practices Task Force report. Value Health. 2009;12:419–429. doi: 10.1111/j.1524-4733.2008.00470.x.

- 7. Greene J, Speizer H, Wiitala W. Telephone and web: mixed-mode challenge. Health Serv Res. 2008;43:230–248. doi: 10.1111/j.1475-6773.2007.00747.x.

- 8. Harewood GC, Yacavone RF, Locke GR, 3rd, Wiersema MJ. Prospective comparison of endoscopy patient satisfaction surveys: e-mail versus standard mail versus telephone. Am J Gastroenterol. 2001;96:3312–3317. doi: 10.1111/j.1572-0241.2001.05331.x.