Abstract

Contemporary models of the neural system that supports reading propose that activity in a ventral occipitotemporal area (vOT) drives activity in higher-order language areas, for example, those in the posterior superior temporal sulcus (pSTS) and anterior superior temporal sulcus (aSTS). We used fMRI with dynamic causal modeling (DCM) to investigate evidence for other routes from visual cortex to the left temporal lobe language areas. First we identified activations in posterior inferior occipital (iO) and vOT areas that were more activated for silent reading than listening to words and sentences; and in pSTS and aSTS areas that were commonly activated for reading relative to false-fonts and listening to words relative to reversed words. Second, in three different DCM analyses, we tested whether visual processing of words modulates activity from the following: (1) iO→vOT, iO→pSTS, both, or neither; (2) vOT→pSTS, iO→pSTS, both or neither; and (3) pSTS→aSTS, vOT→aSTS, both, or neither. We found that reading words increased connectivity (1) from iO to both pSTS and vOT; (2) to pSTS from both iO and vOT; and (3) to aSTS from both vOT and pSTS. These results highlight three potential processing streams in the occipitotemporal cortex: iO→pSTS→aSTS; iO→vOT→aSTS; and iO→vOT→pSTS→aSTS. We discuss these results in terms of cognitive models of reading and propose that efficient reading relies on the integrity of all these pathways.

Introduction

Contemporary models of reading (Price, 2000; Pugh et al., 2000; Jobard et al., 2003) propose that the gateway from vision to language is in a left ventral occipitotemporal (vOT) area in the vicinity of the occipitotemporal sulcus, which lies between the lateral fusiform gyrus and the medial surface of the posterior inferior temporal gyrus. This region is thought to be special for reading (Cohen et al., 2000, 2002) because it is activated more by written words than by visually similar stimuli such as false-fonts or consonant letter strings (Price et al., 1996; Cohen et al., 2002); and damage to vOT impairs reading but not speech comprehension or production (Dejerine, 1891; Damasio and Geschwind, 1984; Binder and Mohr, 1992; Leff et al., 2001; Cohen et al., 2003, 2004; Gaillard et al., 2006; Starrfelt et al., 2009, 2010). However, the importance of a specific vOT region does not exclude the possibility that visual inputs can also access the temporal lobe language areas via other pathways (Booth et al., 2008). We investigated evidence for the contribution of alternative reading pathways using functional magnetic resonance imaging (fMRI) and dynamic causal modeling (DCM).

Early neurological models of reading (for review, see Ben-Shachar et al., 2007; Dejerine, 1892; Geschwind, 1965; Damasio and Damasio, 1983) proposed that visual processing in occipital cortex is relayed to the left angular gyrus before converging with the auditory language system in the left posterior superior temporal cortex (Wernicke's area). The problem with this account is that the left angular gyrus is not activated for reading aloud relative to lower-level baselines, unless participants are specifically required to make semantic decisions on the written words (Price, 2000; Price and Mechelli, 2005). An alternative possibility, based on cognitive studies (Ziegler and Goswami, 2005, 2006), is that learning to read establishes multiple links between the processing of visual features (in occipital cortex posterior to vOT) and phonological or semantic processing (in temporal lobe areas).

Our fMRI paradigm involved four conditions: silent reading, viewing meaningless false-fonts, listening to words, and listening to reversed words. We extracted the time course of activity from four different left-hemisphere regions: a posterior inferior occipital area (iO) that was more activated by written words than by heard words; vOT, which was more activated for written words than for false-fonts or auditory words; and posterior (pSTS) and anterior (aSTS) regions in the superior temporal sulcus that were commonly activated by written words, relative to false-fonts, and heard words, relative to reversed words. The effect of reading on functional connectivity was evaluated using DCM and Bayesian model comparison. If vOT is the only gateway from vision to language, then we would expect the best fit of the data to be the models in which reading modulated iO→vOT, vOT→pSTS, and vOT→aSTS. In contrast, if there are alternative routes to temporal language areas, we would expect evidence to be highest when the model allowed reading to modulate the connections from iO→pSTS as well as iO→vOT and vOT→pSTS.

Materials and Methods

This study was approved by the joint ethical committee of the Institute of Neurology and the National Hospital for Neurology and Neurosurgery, London, UK. Informed consent (written consent from a parent or guardian in the case of children <16 years old) was obtained from all participants.

Participants.

Data reported in this study came from 17 right-handed volunteers (11 males, mean age = 21 years, range ±9 years) who spoke English as their first language. All had normal or corrected-to-normal vision, with no reported hearing difficulties or problems with speech comprehension, speech production, or reading. We included a broad age range of participants to test functional connectivity across a general sample of typical readers. We also tested for any age-related differences in functional connectivity. An additional 12 volunteers participated in the same experiment (total 29 participants), but were excluded from DCM analysis because they (1) had poor memory of experimental stimuli (<60%) in the postscanning assessment (see below) and, therefore, we had no evidence that they were actively listening to or reading the stimuli (2 subjects); (2) moved too much in the scanner (1 subject); (3) had no task-dependent activation (p > 0.05 uncorrected) within a 6 mm sphere in one or more of the four left-hemisphere regions used in the DCM analyses: posterior superior temporal sulcus (pSTS), anterior superior temporal sulcus (aSTS), ventral occipitotemporal cortex (vOT), and inferior occipital cortex (iO) (see below for details); five subjects were excluded because they had no aSTS activation in the visual modality, and one of these also had no pSTS activation in the visual modality; or (4) had no ventral inferior frontal activation in either the visual modality (n = 3) or the auditory modality (n = 1); four other subjects were excluded on this criterion. These additional exclusions were made to ensure that we investigated neuronal interactions within a group of subjects who activated the same set of cortical regions regardless of whether these regions were included in the DCM model. This is because regions activated outside those included within a DCM model may still exert an indirect influence on the functional interactions of the included regions (for discussion, see Seghier et al., 2010).

Overall, the strict inclusion and exclusion criteria ensured that all participants included in subsequent DCM analyses exhibited both robust and consistent activation in regions of interest predicted on the basis of previous group studies of visual and auditory word and sentence processing (Scott et al., 2000; Marinkovic et al., 2003; Spitsyna et al., 2006; Carreiras et al., 2007; Lindenberg and Scheef, 2007; Leff et al., 2008; Richardson et al., 2010). Low activation in the excluded subjects was not the result of differences in functional anatomy because a direct comparison of excluded and included participants did not identify areas that were more activated in the excluded participants. We can therefore only infer that differences in hemodynamics or attention explained the weak activation in these individuals.

It is relevant to note that, in the current study, we are concerned with evidence of regional interactions within the same participants reading the same stimuli (Seghier and Price, 2010). This contrasts with other functional connectivity approaches that have investigated how regional interactions during reading are modulated by word type or subject group (Mechelli et al., 2005; Cao et al., 2008; Levy et al., 2009; Liu et al., 2010).

Experimental design.

There were 6 conditions of interest: 3 consisting of visual stimuli and 3 of auditory stimuli. Participants were instructed to silently read/view visual stimuli and listen to auditory stimuli. No explicit task response was required. However, after fMRI data collection, all participants had a surprise memory test so that we could assess evidence for written and auditory language comprehension and attention. Within each stimulus modality, the 3 conditions involved (1) meaningful sentences (e.g., “The cow chased the fat horse”); (2) scrambled sentences (strings of words that did not constitute a meaningful sentence)—intended to tap comprehension at the individual word level (this condition is henceforth referred to as “word strings”); and (3) baseline stimuli that were meaningless at both the sentence and word level (sequences of words printed in false-fonts in the visual condition and sequences of digitally reversed words in the auditory condition).

In addition to the six conditions of interest, there were four other conditions that were included in the same experimental session. These consisted of pictures of animals, objects, meaningless items, mouth shapes, or digits, with instructions to (1) make a hand action that indicated how an object was used; (2) make a rocking hand motion regardless of the picture content; (3) imitate the mouth shape; or (4) whisper “one, three”. Full details of these conditions are described by Richardson et al. (2010) and are not described further here because they did not activate the language regions of interest and were therefore not included in our DCM analyses.

Sentence stimuli.

Sentence stimuli consisted of 80 sentences, each formed by a sequence of 6–8 words. They were constructed using high-frequency (>20 per million) monosyllabic and bisyllabic nouns, verbs, and adjectives. All words were selected to be suitable for children as young as seven years old. Therefore, although older participants were expected to be more proficient readers, all teenagers included in this study were able to comprehend the sentence stimuli with ease. Sentence sets were split into two subsets (A and B) for the purpose of presenting equivalent stimuli in both visual and auditory formats. The sentences in both sets were matched at the word level in terms of mean frequency, imageability, age of acquisition, and word and syllable length. No sentence was repeated within or across sets. The presentation of subsets A and B in either visual or auditory format was counterbalanced across participants. Word sequences for scrambled sentences were constructed from the same sequences of words as meaningful sentences (e.g., “The cow chased the fat horse”), which were then assigned a pseudo-random word order that did not form a meaningful sentence (e.g., “Chased the the horse cow fat”). This condition is therefore matched at the lexical level.

Presentation parameters.

Stimuli were presented across four scanning sessions. Each session commenced with a visual cue to “Get Ready…,” followed by a countdown, during which dummy scans were acquired. Within each session there were 24 blocks of stimuli: 6 for visual language conditions and their baselines; 6 for auditory language conditions and their baselines, and 12 for the excluded additional conditions described above. Within the auditory and visual language conditions, there were 2 blocks of sentences, 1 block of word strings, and 3 blocks of baseline. Within these blocks, 5 sequences of words were presented with 0.5 s central fixation between sequences, with a total of 37 items (words/false-font words) per block. Within each sequence, items were presented at a rate of 1 per 0.4 s, resulting in a maximum duration of 3.2 s for an eight-item sequence. The visual and auditory word presentation rates were equated by recording the auditory stimuli from a female speaker reading aloud the visual stimuli presented using the same script that was to be used in the scanner. Visually, items were presented sequentially in the center of the screen in a Helvetica font, size 20. In the auditory condition, the start of a new sentence was indicated by a pure tone beep, while in the visual condition the first word of each sentence started with a capital letter. In both conditions, each sentence ended with a fixation cross.

Modality changes were cued with visually displayed instructions (Helvetica, size 80) to “Listen” when the stimuli were going to be presented in the auditory modality or “Read” when the stimuli were going to be presented in the visual modality. Instructions were displayed for 2.2 s, and followed by an auditory pure tone, which sounded for 0.3 s. The time from the start of the instruction to the end of the block was 18 s. Blocks were separated by a brief auditory pure tone which sounded for 0.3 s, followed by a 0.2 s fixation cross. At the end of each block there was a 1.5 s pause before the onset of the next block. This resulted in a total duration of 40.5 s for one activation block (sentences or word strings) and its corresponding baseline block.

MRI acquisition.

A Siemens 1.5T Sonata scanner was used to acquire a total of 768 T2*-weighted echoplanar images with BOLD contrast (192 scans in each of 4 sessions). Each image comprised 30 axial slices acquired sequentially with a 2 mm thickness, with 1 mm gap and 3 × 3 mm in-plane resolution. Volumes were acquired with an effective repetition time (TR) of 2.7 s/volume (suitable for DCM analysis) (Kiebel et al., 2007) and an echo time (TE) of 50 ms. The first six (dummy) volumes of each run were discarded to allow for T1 equilibration effects. A high-resolution, 1 mm³ volumetric T1-weighted anatomical image was also acquired for each participant.

fMRI data analysis.

Preprocessing was conducted using the Statistical Parametric Mapping SPM5 software package (Wellcome Trust Centre for Neuroimaging, London, UK; http://www.fil.ion.ucl.ac.uk/spm). All functional volumes were spatially realigned, unwarped, and normalized to MNI space using the normalization–segmentation procedure of SPM5, and smoothed with an isotropic 6 mm full-width at half-maximum Gaussian kernel, with a resulting voxel size of 2 × 2 × 2 mm³. Time series from each voxel were high-pass filtered (1/128 Hz cutoff) to remove low-frequency noise and signal drift. The preprocessed functional volumes were then entered into first-level statistical analysis, in which each sentence or scrambled sentence (word or baseline condition) was modeled as an event (according to stimulus duration). Regressors were also included to model the onset of all other stimuli (Richardson et al., 2010). Each event-related regressor was convolved with a canonical hemodynamic response function.

Second-level analyses.

We conducted two second-level analyses. These analyses were performed to identify key unimodal and amodal regions activated during reading across all 29 participants who took part in the study, and were used to select regions of interest (ROIs) and obtain peak coordinates for these regions for the purpose of data extraction and subsequent DCM analyses. The coordinates reported below are those that were used for the extraction of time-series data for DCM. Because some participants were excluded from subsequent DCM analyses following the extraction process, we repeated both analyses with the 17 subjects who were selected for the DCM analysis (see Participants). These analyses illustrate that the ROIs in this sample of participants remained unchanged. Peak coordinates for all amodal regions activated are listed in Table 1 and illustrated in Figure 1. The statistical threshold for all analyses was set at p < 0.05 in height after a familywise correction for multiple comparisons across the whole brain.

Table 1.

Amodal regions activated by sentences and words (17 subjects)

| Region | Left x | Left y | Left z | Left Z (main effect) | Left Z (visual) | Left Z (auditory) | Right x | Right y | Right z | Right Z (main effect) | Right Z (visual) | Right Z (auditory) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| **pSTS (P)** | −52 | −42 | 6 | Inf | Inf | 7.2 | | | | | | |
| STS | −64 | −38 | 4 | Inf | 7.7 | 6.9 | 54 | −32 | 4 | 6.6 | 5.8 | 5.8 |
| STS | −58 | −28 | 0 | Inf | 7.8 | 7.8 | | | | | | |
| STS | −52 | −26 | −4 | Inf | 7.2 | 7.5 | 54 | −26 | −4 | 6.8 | 5.9 | 6.4 |
| STS | −60 | −14 | −4 | 6.9 | 5.5 | 6.9 | | | | | | |
| STS | −54 | −6 | −10 | 7.7 | 6.5 | 7.1 | 58 | −2 | −10 | 6 | 4.7 | 5.9 |
| STS | −56 | 6 | −12 | 6.7 | 6.5 | 6.4 | | | | | | |
| **aSTS (A)** | −54 | 10 | −14 | 6.9 | 5.7 | 6.6 | 58 | 10 | −12 | 6.3 | 4.4 | 6.6 |
| | | | | | | | 52 | 18 | −20 | 5.7 | 4.2 | 5.8 |
| Anterior occipitotemporal sulcus | −42 | −40 | −16 | 5.8 | 5.3 | 4.4 | | | | | | |
| Temporoparietal | | | | | | | | | | | | |
| Posterior middle temporal gyrus | −50 | −56 | 12 | 5.1 | 4.2 | 4.6 | | | | | | |
| Posterior superior temporal gyrus | −60 | −48 | 14 | 6.6 | 5.4 | 6 | 54 | −42 | 12 | 5.2 | 4.8 | 4.6 |
| Ventral supramarginal gyrus | −54 | −42 | 24 | 6.2 | 5.5 | 5.3 | | | | | | |
| Inferior frontal lobe | | | | | | | | | | | | |
| Pars orbitalis | −46 | 24 | −6 | 6.8 | 5.9 | 6.5 | | | | | | |
| Pars triangularis | −40 | 30 | −2 | 7.8 | 6.9 | 7.0 | | | | | | |
| | −58 | 16 | 2 | 5.3 | 5.2 | 4.5 | | | | | | |
| Pars opercularis | −46 | 12 | 20 | 6.1 | 5.7 | 4.8 | | | | | | |
| | −52 | 16 | 24 | 6.6 | 6.2 | 5.2 | | | | | | |

Main effect of auditory and visual sentences and words at p < 0.001, inclusively masked at p < 0.001 by sentences and words for each modality, measured independently. Clusters represent local maxima. ROIs used in the DCM analysis are shown in bold.

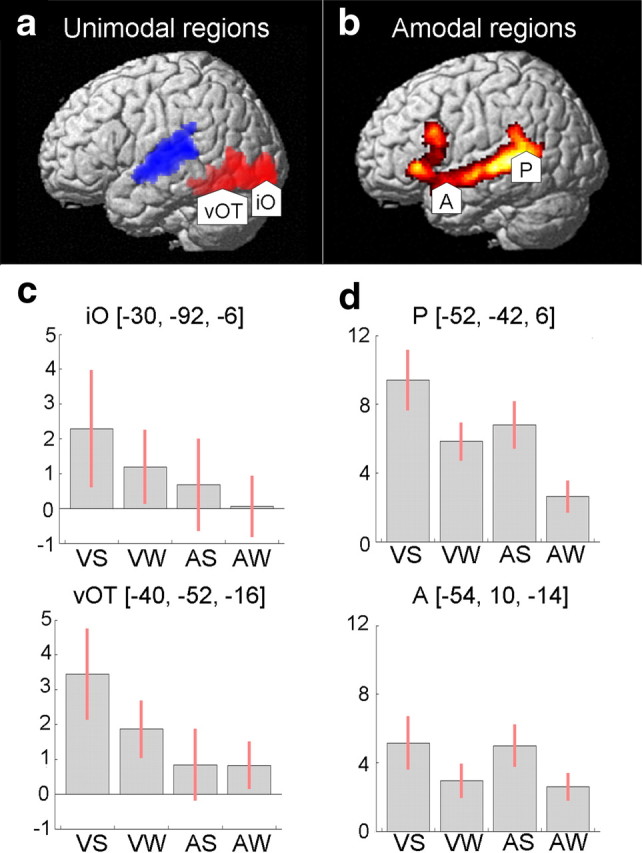

Figure 1.

Regions of interest. a, Activation for all visual and auditory conditions shown in red and blue, respectively (threshold p = 0.001, 100 voxels). Arrowheads indicate visual unimodal ROIs: iO and vOT. b, Main effect of meaningful language (threshold p < 0.001) inclusively masked by auditory sentences and scrambled sentences, and visual sentences and scrambled sentences (threshold p = 0.001, 100 voxels). Arrowheads indicate amodal ROIs: pSTS (P) and aSTS (A). c, d, Parameter estimates for visual unimodal ROIs (c) and for amodal ROIs (d). Conditions on the x-axis are as follows: visual sentences (VS), visual word strings (VW), auditory sentences (AS), and auditory word strings (AW). In all graphs, the y-axis shows effect sizes as the percentage increase relative to the global mean. The red bars are the 90% confidence intervals.

The first analysis identified unimodal visual processing areas assumed to be the source of inputs to amodal language areas. This analysis involved a one-sample t test using a contrast image from a comparison of all visual versus auditory conditions for each participant. As expected, a comparison of visual versus auditory conditions (which included baseline tasks) identified a large pattern of activation in the ventral visual stream, with two activation peaks located in (1) the left posterior inferior occipital gyrus (iO) [x = −30, y = −92, z = −6] and (2) the left ventral occipitotemporal sulcus (vOT) [x = −40, y = −52, z = −16] (Fig. 1a). As observed in previous studies of reading that do not involve a decision-making task, activation in the angular gyrus was not observed in any condition (for discussion, see Price, 2000; Price and Mechelli, 2005).

For the second analysis, we conducted an ANOVA to identify amodal regions commonly activated for auditory and visual language processing relative to baseline conditions. This analysis included four contrasts from each individual's first-level analysis: (a) visual sentences–visual baseline, (b) visual word strings–visual baseline, (c) auditory sentences–auditory baseline, and (d) auditory word strings–auditory baseline. Brain regions that were commonly activated in both modalities were identified by the main effect over all conditions (a–d), inclusively masked (inclusion threshold = p < 0.001) by visual sentences and word strings (a and b) and auditory sentences and word strings (c and d). This contrast identified activation peaks in pSTS [x = −52, y = −40, z = 4] and aSTS [x = −54, y = 10, z = −12], as well as other regions that were not included in further analyses (Table 1). In addition, the same analysis confirmed that activation in vOT was higher for written words and sentences relative to false-fonts [x = −40, y = −54, z = −16; Z score = 4.6].

Table 2.

Endogenous (average) connectivity probability values for the four-region model

| To (rows) / From (columns) | iO | vOT | pSTS | aSTS |
|---|---|---|---|---|
| iO | | 0.87 | 0.90 | |
| vOT | 1.00 | | 0.95 | 0.68 |
| pSTS | 0.85 | 0.95 | | 0.84 |
| aSTS | | 0.79 | 0.95 | |

ROI selection for DCM.

Our DCM analyses included four left-hemisphere regions: two unimodal (iO and vOT) and two amodal (pSTS and aSTS). The vOT region was more sensitive to written words than false-fonts, but the iO region was equally activated by written words and false-fonts. The pSTS and aSTS regions were located at the most posterior and anterior peaks of the temporal lobe activation during reading. The pSTS area is in the vicinity of Wernicke's area, where both classical and contemporary models of language processing propose that written word processing accesses the language system (Geschwind, 1965; Price, 2000), and where the response is sensitive to the phonological demands of the task (Turkeltaub and Coslett, 2010). Our motivation for including the aSTS was based on the association of this region with semantic processing (Scott et al., 2000; Crinion et al., 2003; Patterson et al., 2007; Binney et al., 2010); its inclusion allowed us to test whether activity in aSTS during reading was driven by activity in vOT as predicted on the basis of cognitive models of reading that propose written words can access semantics independently of phonology (Seidenberg and McClelland, 1989; Harm and Seidenberg, 2004).

Data from inferior frontal regions were not included in the DCM study because they were not regions of interest for the hypotheses being tested. However, the implicit influence of ventral inferior frontal regions was controlled for across subjects via our participant selection criteria (see Participants). While the role of these regions needs to be investigated in future studies, we constrained the number of regions in the current study to allow us to conduct a comprehensive comparison of different patterns of functional connectivity, which is less effective as the number of regions increases (Seghier et al., 2010; Stephan et al., 2010).

Finally, although the angular gyrus was not included as a region in the DCM analyses (because it was not activated in any of our second-level analyses), our four-region model allowed us to test whether there was evidence for connectivity between iO and pSTS that either involved, or did not involve, vOT activation.

Data extraction for the DCM analyses.

Following the identification and selection of our four left-hemisphere ROIs at the group level, eigenvectors (time series) were extracted from the first-level analysis of each subject. Coordinates for unimodal visual regions iO and vOT were extracted from an activation map comparing all visual with all auditory conditions (including baseline tasks, as visual regions were also activated by our false-fonts baseline). Coordinates for pSTS and aSTS (the amodal language areas) were extracted from an activation map for the main effect of meaningful language (sentences and word strings) across both modalities. All regions were extracted using the same thresholds and parameters. Activation maps were set at a threshold of p < 0.05 (uncorrected) at the closest local maxima within a distance of 6 mm of the group peak coordinates, and with a minimum extent of 4 voxels. This technique ensured that extracted regions were comparable across participants by incorporating consistent functional regions (for a similar rationale, see Stephan et al., 2007; Seghier and Price, 2010; Seghier et al., 2011).
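The peak-selection rule described above (closest subject-level local maximum within 6 mm of the group peak) can be illustrated with a minimal sketch. The function name, the subject peaks, and the choice of an inclusive 6 mm boundary are assumptions made for illustration; only the group vOT coordinate is taken from the text, and the actual extraction was performed within SPM.

```python
import math

def nearest_peak_within(group_peak, subject_peaks, max_dist_mm=6.0):
    """Return the subject peak closest to group_peak, or None if none lies within max_dist_mm."""
    best, best_dist = None, None
    for peak in subject_peaks:
        dist = math.dist(group_peak, peak)  # Euclidean distance in mm (MNI space)
        if dist <= max_dist_mm and (best_dist is None or dist < best_dist):
            best, best_dist = peak, dist
    return best

# Group vOT peak reported in the text.
GROUP_VOT = (-40, -52, -16)
# Hypothetical local maxima for one subject (illustrative values only).
SUBJECT_PEAKS = [(-42, -54, -18), (-40, -60, -14), (-30, -92, -6)]

CHOSEN = nearest_peak_within(GROUP_VOT, SUBJECT_PEAKS)
print(CHOSEN)
```

Returning `None` when no peak lies within the radius corresponds to the exclusion criterion for participants without task-dependent activation in a region.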

Mean coordinates across individual analyses were: iO [x = −30 ± 3, y = −92 ± 2, z = −4 ± 4], vOT [x = −40 ± 2, y = −53 ± 2, z = −18 ± 3], pSTS [x = −51 ± 4, y = −41 ± 3, z = 4 ± 3], and aSTS [x = −55 ± 3, y = 10 ± 3, z = −12 ± 4]. Time series were extracted separately for each session (within a 6 mm sphere) and were adjusted to the F-contrast (i.e., effects of interest) of each subject. ROI time series from each session were then entered into each individual's own session-specific bilinear deterministic DCM model (Friston et al., 2003).

DCM analyses.

DCM provides a framework for making inferences about the neuronal interactions between a fixed set of n predefined brain regions. Neuronal relationships are described in terms of endogenous connectivity (baseline connectivity that is present in the system in the absence of external inputs) and modulations of this connectivity by one or a series of experimental conditions (such as reading meaningful sentences and word strings). The optimal sites for connectivity modulations can then be tested in a systematic fashion by comparing multiple competing models, thereby identifying the most plausible (useful) models that provide the best explanation of the data.

All DCM analyses were performed using the most recent release of SPM8 (version DCM8). Three sets of parameters were estimated for each model, representing the following: (1) how the model responds to the influence of direct inputs or exogenous factors (i.e., our stimuli), (2) the endogenous (fixed) connectivity between regions (i.e., connectivity averaged across all stimuli), and (3) modulations in connection strength during reading. These parameters are expressed in terms of hertz within the DCM framework, and are estimated using Bayesian methods by means of the expectation maximization (EM) algorithm (as described by Friston et al., 2003). This estimation process generates two quantities: (1) the posterior distribution over model parameters, which can be used to make inferences about connectivity parameters, and (2) the probability of the model given the data, more commonly known as the model evidence, which is approximated by maximizing the negative free energy of any given model (Stephan et al., 2009).
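For orientation, the three parameter sets correspond to the terms of the standard bilinear state equation of deterministic DCM (Friston et al., 2003). The symbols below follow that general formulation and are not notation used elsewhere in this text: z is the vector of neuronal states in the four regions, u_j is the j-th experimental input, A holds the endogenous connections, B^(j) the modulation of those connections by input j (here, reading), and C the direct (driving) inputs.

```latex
\dot{z} = \Bigl( A + \sum_{j} u_j \, B^{(j)} \Bigr) z + C u
```

Under this reading, a connection "modulated by reading" is one whose entry in the reading-related B matrix is nonzero, and the rate constants in A, B, and C are expressed in hertz.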

The aim of our DCM analyses was to identify connections modulated by reading sentences and word strings and to test whether there were multiple routes to language from vision. Our four-region model included two unimodal (iO and vOT) and two amodal (pSTS and aSTS) regions. We investigated whether reading modulates access to the amodal regions from vOT, iO, both, or neither. Further details of these analyses are given below, following a description of the model space.

The model space.

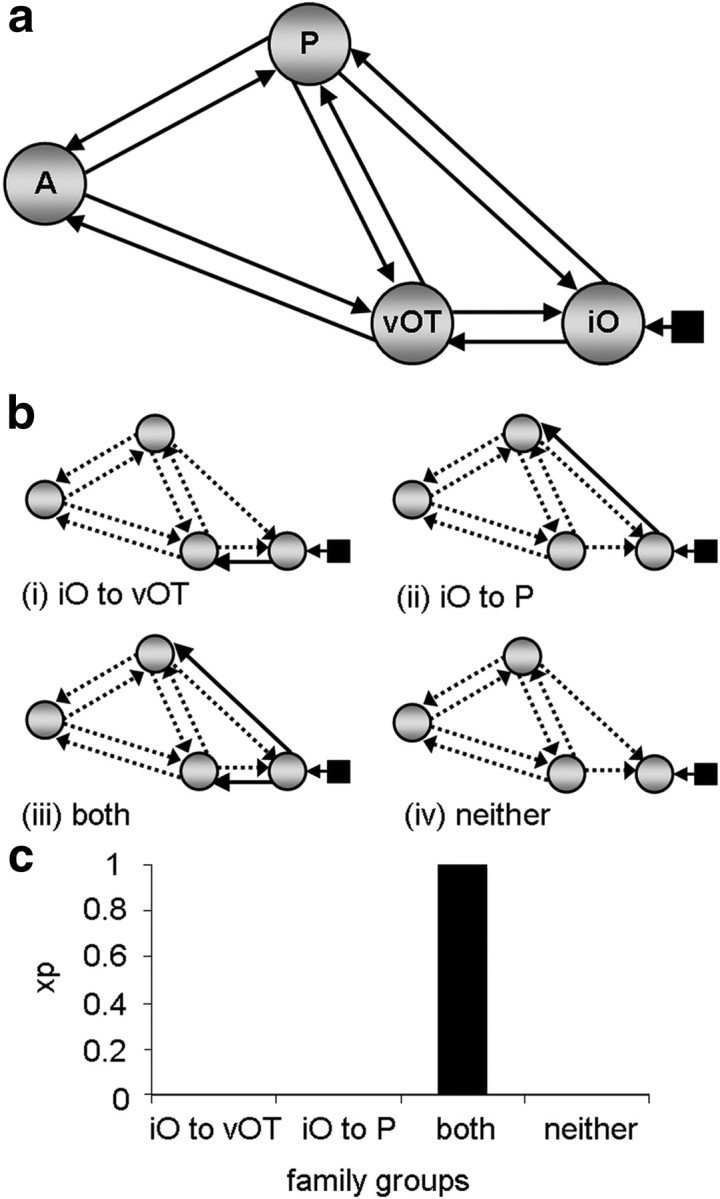

Both analyses 1 and 2 investigated how reading modulates access to pSTS. In analysis 1, we tested which connections from the driving input region (iO) were most likely to be modulated by reading sentences and word strings in the four-region model shown in Figure 2a. Since this analysis was specific to visual stimuli, the driving input to the model consisted of all conditions in the visual modality (reading sentences and word strings and viewing false-fonts). Input was assumed to enter the model at the most posterior visual region, iO. Direct connections between the input region iO and the aSTS were not included because, on the basis of previous studies (Catani et al., 2003; Schmahmann et al., 2007), we expected the connections from iO to aSTS to be either via vOT (and the inferior longitudinal fasciculus) or via pSTS (and the middle longitudinal fasciculus or the dorsal branch of the inferior longitudinal fasciculus). The resulting model therefore consisted of 10 endogenous connections. We tested all possible configurations of modulatory connectivity for these connections, resulting in a total of 1023 different models per subject with a minimum of 1, and a maximum of all 10 connections modulated (= 2¹⁰ − 1 models). A comprehensive model space such as this is advantageous during model comparison because the goodness of fit of any given model is relative to those models included in the comparison. Therefore, testing across a large number of models increases the reliability of the results obtained through model-comparison procedures.
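The size of this model space can be checked with a short enumeration. The sketch below is illustrative only: it assumes the 10 endogenous connections are the reciprocal links between every pair of regions except iO and aSTS, and it represents each model simply as the set of connections that reading is allowed to modulate. The names `CONNECTIONS` and `enumerate_models` are hypothetical and not part of SPM.

```python
from itertools import combinations

# The 10 endogenous connections (source, target); no direct iO<->aSTS link.
CONNECTIONS = [
    ("iO", "vOT"), ("vOT", "iO"),
    ("iO", "pSTS"), ("pSTS", "iO"),
    ("vOT", "pSTS"), ("pSTS", "vOT"),
    ("vOT", "aSTS"), ("aSTS", "vOT"),
    ("pSTS", "aSTS"), ("aSTS", "pSTS"),
]

def enumerate_models(connections):
    """Return every non-empty subset of connections that reading may modulate."""
    models = []
    for k in range(1, len(connections) + 1):  # from 1 up to all 10 modulated
        for subset in combinations(connections, k):
            models.append(frozenset(subset))
    return models

MODELS = enumerate_models(CONNECTIONS)
print(len(MODELS))  # 1023 == 2**10 - 1
```

The empty subset (no connection modulated) is excluded, which is why the count is 2¹⁰ − 1 rather than 2¹⁰.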

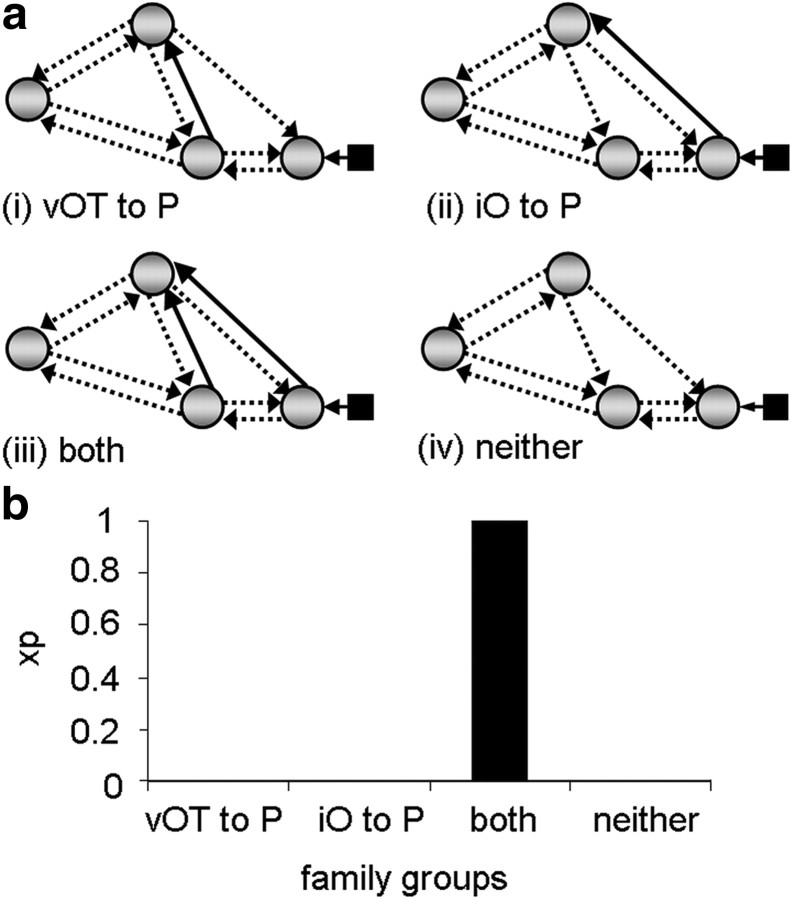

Figure 2.

Family analysis 1. a, The endogenous connections present within the four-region model. All connections were tested to determine whether they were modulated by reading. Input entered the model at region iO as indicated by the black square and arrow. b, Family groups divided according to connections modulated from input source. Solid black arrows indicate the connections used to partition model space into four families and are therefore present in all models within a given family. Dashed arrows indicate connections tested within each family across models. c, Family results—graph showing the exceedance probability (xp) for each family. A, aSTS; P, pSTS.

Because the purpose of the analyses was to identify the most likely route(s) across subjects, we performed a family-level analysis as opposed to a conventional model comparison (Penny et al., 2010). In a family analysis, models are grouped according to the presence of one or more features that are shared by that subset of models (i.e., the presence/absence of a particular connection between two regions). The results of this analysis are expressed quantitatively by an exceedance probability value for each family. These values range between 0 and 1, and sum to 1 across all families. The “family” with the highest exceedance probability value represents that which is most plausible given the data. Family analysis is most appropriate under circumstances where the model space (described above) consists of a large number of models. This is because model-comparison procedures that focus on identifying the “best” or “winning” model can become unstable, particularly when participants have different winning models, or when many models share similar parameters (Penny et al., 2010). We adopted a random-effects (RFX) Bayesian model selection (BMS) procedure using a Gibbs sampling method. RFX analyses are more suitable for modeling cognitive tasks when the winning model may differ between participants (Stephan et al., 2009). The use of Gibbs sampling is preferred when the number of models exceeds the number of participants in the analysis (Penny et al., 2010).
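To make the meaning of an exceedance probability concrete, the following sketch assumes that the random-effects procedure has already produced a Dirichlet posterior over family frequencies, as in the general RFX BMS framework of Stephan et al. (2009). The Dirichlet parameters in `ALPHA` are hypothetical values chosen for illustration, and the code does not reproduce the Gibbs sampling scheme used by SPM; it only estimates, by Monte Carlo sampling, how often each family is the most frequent one.

```python
import random

def exceedance_probabilities(alpha, n_samples=20000, seed=0):
    """Estimate P(family k is more frequent than every other family) under Dirichlet(alpha)."""
    rng = random.Random(seed)
    wins = [0] * len(alpha)
    for _ in range(n_samples):
        # A Dirichlet draw is a set of independent Gamma draws normalised to sum to 1.
        gammas = [rng.gammavariate(a, 1.0) for a in alpha]
        total = sum(gammas)
        freqs = [g / total for g in gammas]
        wins[freqs.index(max(freqs))] += 1  # count which family is largest in this draw
    return [w / n_samples for w in wins]

# Hypothetical Dirichlet parameters for four families (not values from this study).
ALPHA = [2.0, 3.0, 11.0, 1.5]
XP = exceedance_probabilities(ALPHA)
print([round(p, 3) for p in XP])
```

Because exactly one family is the largest in each draw, the estimated values lie between 0 and 1 and sum to 1 across families, matching the properties stated above.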

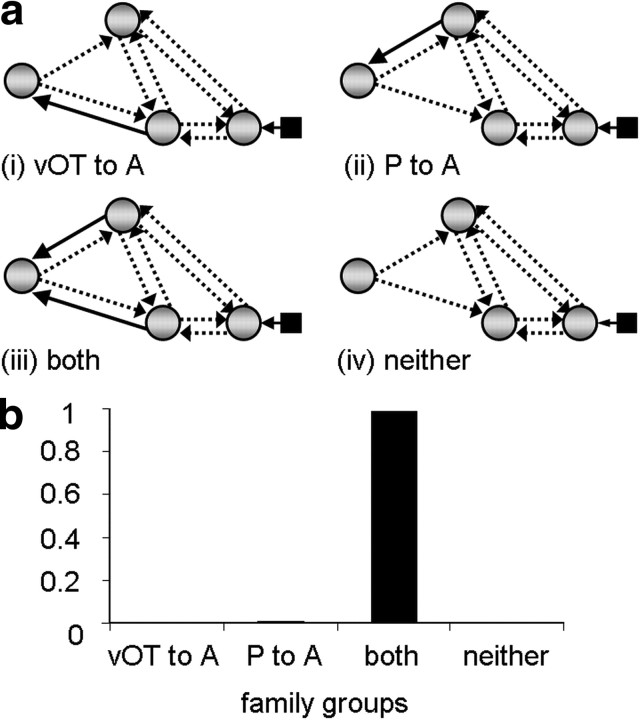

Family analyses: how does reading modulate connections to pSTS and aSTS?

We conducted 3 different family analyses. The aim of the first analysis was to identify which connections from iO were modulated by reading. The model space described above was partitioned into four mutually exclusive families according to the presence of modulatory connections from region iO to: (i) vOT only, (ii) pSTS only, (iii) vOT and pSTS, or (iv) neither vOT nor pSTS (Fig. 2b). Similarly, in the second analysis, the model space was partitioned according to whether inputs to pSTS were modulated from: (i) vOT only, (ii) iO only, (iii) both vOT and iO, or (iv) neither vOT nor iO (Fig. 3a). The motivation for this second analysis was to test whether there was evidence for connections from iO to pSTS after connectivity for vOT to pSTS had been accounted for. In the third analysis, model space was partitioned according to whether the modulatory connections to aSTS were from: (i) vOT only, (ii) pSTS only, (iii) both vOT and pSTS, or (iv) neither vOT nor pSTS (Fig. 4a). This third analysis was conducted to find evidence to confirm our hypothesis that reading would modulate connectivity from vOT to aSTS.
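The partition used in the first family analysis can be sketched as follows. This is an illustration under stated assumptions rather than the SPM implementation: each model is represented as the set of connections modulated by reading, the 10 connections are assumed to be the reciprocal links between all region pairs except iO and aSTS, and the helper names are hypothetical.

```python
from itertools import combinations

# The 10 endogenous connections (source, target); no direct iO<->aSTS link.
CONNECTIONS = [
    ("iO", "vOT"), ("vOT", "iO"), ("iO", "pSTS"), ("pSTS", "iO"),
    ("vOT", "pSTS"), ("pSTS", "vOT"), ("vOT", "aSTS"), ("aSTS", "vOT"),
    ("pSTS", "aSTS"), ("aSTS", "pSTS"),
]

def all_models(connections):
    """Every non-empty set of connections that reading may modulate."""
    return [frozenset(s)
            for k in range(1, len(connections) + 1)
            for s in combinations(connections, k)]

def family_analysis_1(model):
    """Assign a model to a family by which connections from iO are modulated."""
    to_vot = ("iO", "vOT") in model
    to_psts = ("iO", "pSTS") in model
    if to_vot and to_psts:
        return "vOT and pSTS"
    if to_vot:
        return "vOT only"
    if to_psts:
        return "pSTS only"
    return "neither"

def partition(models, family_of):
    """Group models into mutually exclusive families."""
    families = {}
    for model in models:
        families.setdefault(family_of(model), []).append(model)
    return families

FAMILIES = partition(all_models(CONNECTIONS), family_analysis_1)
SIZES = {name: len(members) for name, members in FAMILIES.items()}
print(SIZES)
```

Under these assumptions the first three families each contain 256 models and the "neither" family contains 255, because the model with no modulated connection is not part of the model space. The second and third analyses would use the same partitioning with a different membership rule (connections into pSTS, or into aSTS).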

Figure 3.

Family analysis 2. a, Family groups divided according to modulatory connections accessing pSTS (P)—solid black arrows indicate the connections used to partition model space into four families and therefore present in all models within a given family. Dashed arrows indicate connections tested within each family across models. b, Family results—graph showing the exceedance probability (xp) for each family.

Figure 4.

Family analysis 3. a, Family groups divided according to modulatory connections sending input to aSTS (A). Solid black arrows indicate the connections used to partition model space into four families and are therefore present in all models within a given family. Dashed arrows indicate connections tested within each family across models. b, Family results—graph showing the exceedance probability (xp) for each family. P, pSTS.

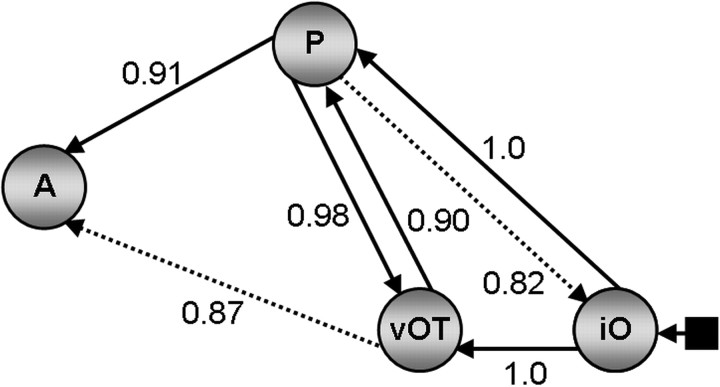

Bayesian model averaging analysis of model parameters.

Probability values for all connectivity parameters in the winning families were calculated by sampling the posterior distribution of model parameters in a Bayesian model averaging (BMA) analysis (Penny et al., 2010). This yields a weighted average for each model parameter (connection strength between two regions) by using the posterior distribution of model parameters calculated during the estimation process. Models with the highest probability make the largest contribution, while the contribution of low-probability models is minimized. BMA therefore generates a distribution of model parameters proportional to the likelihood of each model given the data, over subjects (Penny et al., 2010). We sampled 10,000 data points from the posterior distributions of model parameters generated by the BMA procedure for both endogenous and modulatory connections. The likelihood of the sample falling uniquely within the distribution of posterior densities was then calculated on a connection-by-connection basis. This allowed us to test the probability that samples for each connection differed from zero, and thus determine those connections with the highest probability of being modulated by reading sentences and word strings (for a similar procedure, see Seghier et al., 2011). Thus, a value of 0.9 on a given connection indicates that 90% of the samples for that connection differ from zero. Therefore connections at or above this threshold value have a high likelihood of being modulated by reading, while probability values ≥0.85 suggest a strong trend. In addition, mean parameter values for each subject for all endogenous and modulatory connections calculated during the BMA procedure were extracted to test for any age-related differences in connection strength.
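A minimal sketch of this sampling step is given below, under stated assumptions: each model is taken to contribute a Gaussian posterior for a given connection, models are drawn in proportion to their posterior probability, and the probability value is read as the fraction of samples lying on the same side of zero as the sample mean. The model probabilities, means, standard deviations, and function names are hypothetical; the exact computation used in the study may differ.

```python
import random

def bma_samples(model_probs, means, sds, n_samples=10000, seed=0):
    """Sample one connection parameter from a model-averaged posterior.

    model_probs -- posterior probability of each model (hypothetical)
    means, sds  -- Gaussian posterior of the parameter under each model
    """
    rng = random.Random(seed)
    model_ids = list(range(len(model_probs)))
    samples = []
    for _ in range(n_samples):
        # Pick a model in proportion to its posterior probability ...
        m = rng.choices(model_ids, weights=model_probs, k=1)[0]
        # ... then draw the parameter from that model's posterior.
        samples.append(rng.gauss(means[m], sds[m]))
    return samples

def prob_nonzero(samples):
    """Fraction of samples on the same side of zero as the sample mean."""
    mean = sum(samples) / len(samples)
    if mean >= 0:
        return sum(s > 0 for s in samples) / len(samples)
    return sum(s < 0 for s in samples) / len(samples)

# Hypothetical connection: strong under two models, centered on zero in a third.
samples = bma_samples(model_probs=[0.6, 0.3, 0.1],
                      means=[0.4, 0.3, 0.0],
                      sds=[0.2, 0.2, 0.2])
p = prob_nonzero(samples)
label = "above threshold" if p >= 0.9 else "strong trend" if p >= 0.85 else "below"
```

High-probability models dominate the pooled samples, so a connection that is weak or centered on zero in an unlikely model lowers the resulting probability value only slightly.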

Results

Family analyses: how does reading modulate connections to pSTS and aSTS?

The results from the family analyses unambiguously indicate (family exceedance probability = 1.0) that reading modulates (1) both connections from iO (iO→vOT and iO→pSTS); and (2) both connections to pSTS (iO→pSTS and vOT→pSTS). Together these analyses point to the existence of two functional pathways from iO to pSTS, one via vOT (iO→vOT→pSTS), and another that does not involve vOT. In addition, there was very strong evidence (family exceedance probability = 0.99) that (3) reading modulates the connections to aSTS from both vOT and pSTS. The results of these family comparisons are illustrated in Figures 2c, 3b, and 4b.

BMA analysis of model parameters

Probability values for all endogenous connections are reported in Table 2 and were equivalent across all three family analyses. Connections showing a strong probability of being modulated by reading sentences and word strings are illustrated in Figure 5. Modulatory connections from iO to vOT and from iO to pSTS show the maximum probability value (p = 1.0). This provides further evidence that iO sends parallel inputs to both pSTS and vOT. The interactions between pSTS and vOT were both significant (p = 0.98 for pSTS→vOT; p = 0.90 for vOT→pSTS), with the effect on pSTS→vOT being stronger than that on vOT→pSTS (t(16) = 3.140, p = 0.006). With respect to the connectivity to aSTS, this was significant from pSTS (p = 0.92 for pSTS→aSTS), with a corresponding trend from vOT (p = 0.87 for vOT→aSTS) and no significant difference between pSTS→aSTS and vOT→aSTS (t(16) = 1.481, p = 0.158). Finally, correlation analyses did not identify any significant relationship between age and connection strength for endogenous or modulatory connections.

Figure 5.

BMA parameter analysis results. Probability values for modulatory connections. Regions labeled P represent pSTS, and those labeled A represent aSTS. Connections above threshold are indicated by solid black arrows. Strong trends are indicated by black dashed arrows.

Discussion

This study investigated how visual input during reading accesses amodal language areas in the left temporal lobe (pSTS and aSTS in our analysis). The expectation from contemporary models of reading was that left vOT would be involved in relaying information from early visual areas (iO in our analysis) to pSTS and aSTS. The empirical question was whether our analyses would provide evidence for the contribution of an alternative input from iO to pSTS that did not involve vOT.

The results suggest multiple connections from visual to temporal lobe language areas. First, they provide unequivocal evidence that the best fit of the data was for the DCM models that allowed reading to modulate the connections from iO→pSTS and iO→vOT (rather than iO→vOT only, iO→pSTS only, or neither of these connections). A second finding was that reading modulated connectivity from pSTS to vOT more strongly than it modulated connectivity from vOT to pSTS, which is not consistent with pSTS being driven by vOT. A third finding was that activity in aSTS is influenced by that in both vOT and pSTS. Combined together, our results suggest at least three potential reading pathways in the occipitotemporal cortex: iO→pSTS→aSTS; iO→vOT→aSTS; and iO→vOT→pSTS→aSTS.
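The three pathways can be recovered by enumerating routes through the modulated connections. The sketch below assumes a directed graph containing only the forward connections named in the pathways above (the pSTS→vOT connection is deliberately left out), and the function name is hypothetical.

```python
def simple_paths(edges, start, goal, path=None):
    """Return every route from start to goal that visits no region twice."""
    path = (path or []) + [start]
    if start == goal:
        return [path]
    found = []
    for src, dst in edges:
        if src == start and dst not in path:
            found.extend(simple_paths(edges, dst, goal, path))
    return found

# Forward connections modulated by reading (assumption: feedback omitted).
FORWARD_EDGES = [("iO", "vOT"), ("iO", "pSTS"), ("vOT", "pSTS"),
                 ("vOT", "aSTS"), ("pSTS", "aSTS")]

routes = ["->".join(p) for p in simple_paths(FORWARD_EDGES, "iO", "aSTS")]
for route in routes:
    print(route)
```

Under these assumptions the enumeration yields exactly the three routes listed in the text.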

Our results were based on a family analysis approach (Penny et al., 2010) and were confirmed with BMA. These techniques have only recently become available, with limited application in the domain of reading (Seghier and Price, 2010; Seghier et al., 2011). Analyses at the family level provided a systematic investigation of the entire model space and identified the functional connections in our four-region model that were most consistently modulated by reading across subjects. The BMA parameter analysis supported these findings by showing that the probability values for all the identified modulatory connections were at or above threshold (p ≥ 0.9), except on the connection from vOT to aSTS, which was supported by a strong trend (p = 0.87). Below we discuss the results in terms of cognitive and anatomical models of reading.

Cognitive models of reading

It is well appreciated in the cognitive literature that there are multiple ways that written words can be processed. In alphabetic languages, phonology can be linked to multiple levels of the orthographic input ranging from single letters, bigrams, syllables through to whole words (Ziegler and Goswami, 2005, 2006). In addition, lesion studies and computational models (Patterson and Shewell, 1987; Seidenberg and McClelland, 1989; Coltheart et al., 1993, 2001; Plaut and Shallice, 1993; Plaut et al., 1996; Harm and Seidenberg, 2004) have demonstrated that written words can access semantics either via phonology (orthography to phonology to semantics) or without phonology (orthography to semantics).

Mapping cognitive functions to brain structure is not straightforward because multiple brain areas contribute to orthographic (Vinckier et al., 2007), semantic (Binder et al., 2009), and phonological (Vigneau et al., 2006) processing. Moreover, each of the brain regions associated with reading is also activated by non-reading tasks (Price et al., 2006). This many-to-many structure–function mapping makes it difficult to investigate functional connectivity within the reading system. Our conclusions are therefore limited to connectivity between small sets of areas.

For the regions involved in our DCM analysis, previous studies suggest that pSTS is involved in (though not specific to) phonological processing, aSTS is involved in (though not specific to) semantic processing, and vOT and iO are involved in (though not specific to) orthographic processing. These conclusions are based on findings that our pSTS region [x = −52, y = −40, z = 4] is activated by pseudo-words compared with tones (Binder et al., 2000; [x = −53, y = −43, z = 6]), is sensitive to the phonological neighborhood of words (Okada and Hickok, 2006; [x = −53, y = −37, z = 3]), and shows a positive correlation between reading activation and phonological awareness (Turkeltaub et al., 2003; [x = −51, y = −42, z = 8]). In contrast, the aSTS [x = −54, y = 10, z = −12] is engaged in processing meaningful language (Scott et al., 2000: [x = −54, y = 6, z = −16]; Crinion et al., 2003: [x = −52, y = 10, z = −18]) and making semantic judgments on words (Binney et al., 2010; [x = −57, y = 6, z = −18]), which is consistent with the long association of the anterior temporal lobes with semantic processing (Patterson et al., 2007). The designation of iO and vOT as orthographic processing areas is based on the location of these areas in the ventral visual processing stream (Ungerleider and Haxby, 1994) and the well established knowledge that, within the ventral visual stream, there is a posterior-to-anterior gradient of increasingly complex visual responses to both written words (Nobre et al., 1994; Vinckier et al., 2007) and objects (Simons et al., 2003).

On the basis of these previous findings, we can speculate that the iO→pSTS and vOT→pSTS connections are involved in linking orthography and phonology, the pSTS→aSTS connection is involved in linking phonology and semantics, and the iO→vOT→aSTS connection is involved in linking orthography and semantics. Extrapolating from these assumptions, our results provide novel evidence that activity in a phonological processing area (pSTS) is influenced by both early and late visual processing (in iO and vOT, respectively). This is consistent with the links between orthography and phonology being present in multiple “grain sizes” (Ziegler and Goswami, 2005, 2006). Evidence for the contribution of iO→pSTS as well as vOT→pSTS does not imply that these pathways are functionally equivalent or independent of one another. On the contrary, lesion studies suggest that each connection is likely to be involved in different computations. For example, damage to vOT cortex impairs fast parallel letter recognition (Cohen et al., 2004), but slower serial reading can be left intact. Slower serial translations between orthography and phonology might therefore be supported by the iO→pSTS connection or other pathways that we have not mapped in the current study, e.g., those implemented by regions located between iO and vOT, dorsal occipital areas, or right occipitotemporal cortex (Cohen et al., 2004).

Anatomical models of reading

Our findings highlight the importance of a functional link between iO and pSTS that does not involve vOT. Since a significant functional connection between pairs of regions does not necessarily imply direct anatomical pathways, there may be many cortical regions (other than vOT) that mediate information flow from iO to pSTS; or these regions might be directly connected by the inferior longitudinal fasciculus that carries information along both dorsal and ventral occipitotemporal pathways (Catani et al., 2003).

White matter tractography will be needed to study the anatomical connections between the iO and pSTS regions used in our DCM study, and functional connectivity analyses are needed to investigate intermediate cortical regions that relay information from iO to pSTS. Possible candidates for such intermediate regions include the occipital and posterior middle temporal areas that are posterior to the vOT area included in our DCM analysis (Fig. 1b). The angular gyrus may also play a role. Although it was not activated in the current study, a previous DCM analysis of reading relative to picture naming (Carreiras et al., 2009) indicated that reading increased functional connectivity from the angular gyrus to a dorsal occipital area that fed information to the left supramarginal gyrus and left superior temporal cortex. Thus, the angular gyrus appeared to be moderating rather than mediating the word recognition process.

In conclusion, our results point toward a model of reading that includes multiple pathways from vision to higher-order temporal lobe language areas. At the very least, these include: iO→pSTS→aSTS; iO→vOT→aSTS; and iO→vOT→pSTS→aSTS. Pathways involving vOT are consistent with functional imaging data. Pathways that do not include vOT will need to be considered in future anatomical and functional connectivity studies of the reading system; for example, those investigating how functional connectivity in the reading system is modulated by word type or participant group (Mechelli et al., 2005; Cao et al., 2008; Levy et al., 2009; Liu et al., 2010). It will also be important to establish how our four regions of interest interact with the many other left as well as right hemisphere frontal and temporoparietal areas that are involved in reading. This would provide a useful step toward understanding how networks of brain regions activated during reading interact to achieve successful comprehension and production.

Footnotes

This research was funded by the Wellcome Trust.

References

- Ben-Shachar M, Dougherty RF, Wandell BA. White matter pathways in reading. Curr Opin Neurobiol. 2007;17:258–270. doi: 10.1016/j.conb.2007.03.006.

- Binder JR, Mohr JP. The topography of callosal reading pathways. Brain. 1992;115:1807–1826. doi: 10.1093/brain/115.6.1807.

- Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Springer JA, Kaufman JN, Possing ET. Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512.

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex. 2009;19:2767–2796. doi: 10.1093/cercor/bhp055.

- Binney RJ, Embleton KV, Jefferies E, Parker GJ, Lambon-Ralph MA. The ventral and inferolateral aspects of the anterior temporal lobe are crucial in semantic memory: evidence from a novel direct comparison of distortion-corrected fMRI, rTMS, and semantic dementia. Cereb Cortex. 2010;20:2728–2738. doi: 10.1093/cercor/bhq019.

- Booth JR, Mehdiratta N, Burman DD, Bitan T. Developmental increases in effective connectivity to brain regions involved in phonological processing during tasks with orthographic demands. Brain Res. 2008;1189:78–89. doi: 10.1016/j.brainres.2007.10.080.

- Cao F, Bitan T, Booth JR. Effective brain connectivity with reading difficulties during phonological processing. Brain Lang. 2008;107:91–101. doi: 10.1016/j.bandl.2007.12.009.

- Carreiras M, Seghier ML, Baquero S, Estévez A, Lozano A, Devlin JT, Price CJ. An anatomical signature for literacy. Nature. 2009;461:983–986. doi: 10.1038/nature08461.

- Carreiras M, Mechelli A, Estévez A, Price CJ. Brain activation for lexical decision and reading aloud: two sides of the same coin? J Cogn Neurosci. 2007;19:433–444. doi: 10.1162/jocn.2007.19.3.433.

- Catani M, Jones DK, Donato RM, ffytche DH. Occipito-temporal connections in the human brain. Brain. 2003;126:2093–2107. doi: 10.1093/brain/awg203.

- Cohen L, Dehaene S, Naccache L, Lehéricy S, Dehaene-Lambertz G, Hénaff MA, Michel F. The visual word form area. Spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain. 2000;123:291–307. doi: 10.1093/brain/123.2.291.

- Cohen L, Lehéricy S, Chochon F, Lemer C, Rivaud S, Dehaene S. Language-specific tuning of visual cortex? Functional properties of the visual word form area. Brain. 2002;125:1054–1069. doi: 10.1093/brain/awf094.

- Cohen L, Martinaud O, Lemer C, Lehéricy S, Samson Y, Obadia M, Slachevsky A, Dehaene S. Visual word recognition in the left and right hemispheres: anatomical and functional correlates and peripheral alexias. Cereb Cortex. 2003;13:1313–1333. doi: 10.1093/cercor/bhg079.

- Cohen L, Henry C, Dehaene S, Martinaud O, Lehéricy S, Lemer C, Ferrieux S. The pathophysiology of letter-by-letter reading. Neuropsychologia. 2004;42:1768–1780. doi: 10.1016/j.neuropsychologia.2004.04.018.

- Coltheart M, Curtis B, Atkins P, Haller M. Models of reading aloud: dual-route and parallel-distributed processing approaches. Psychol Rev. 1993;100:589–608.

- Coltheart M, Rastle K, Perry C, Langdon R, Ziegler J. DRC: a dual-route cascaded model of visual word recognition and reading aloud. Psychol Rev. 2001;108:204–256. doi: 10.1037/0033-295x.108.1.204.

- Crinion JT, Lambon-Ralph MA, Warburton EA, Howard D, Wise RJ. Temporal lobe regions engaged during normal speech comprehension. Brain. 2003;126:1193–1201. doi: 10.1093/brain/awg104.

- Damasio AR, Damasio H. The anatomic basis of pure alexia. Neurology. 1983;33:1573–1583. doi: 10.1212/wnl.33.12.1573.

- Damasio AR, Geschwind N. The neural basis of language. Annu Rev Neurosci. 1984;7:127–147. doi: 10.1146/annurev.ne.07.030184.001015.

- Dehaene S, Le Clec'H G, Poline JB, Le Bihan D, Cohen L. The visual word form area: a prelexical representation of words in the fusiform gyrus. Neuroreport. 2002;13:321–325. doi: 10.1097/00001756-200203040-00015.

- Dejerine J. Sur un cas de cecite verbale avec agraphie, suivi d'autopsie. Mémoires de la Société Biologique. 1891;3:197–201.

- Dejerine J. Contribution a l'etude anatomoclinique et clinique des differentes varietes de cecite verbal. Compte Rendu Hebdomadaire des Séances et Mémoires de la Société de Biologie. 1892;4:61–90.

- Friston KJ, Harrison L, Penny W. Dynamic causal modelling. Neuroimage. 2003;19:1273–1302. doi: 10.1016/s1053-8119(03)00202-7.

- Gaillard R, Naccache L, Pinel P, Clémenceau S, Volle E, Hasboun D, Dupont S, Baulac M, Dehaene S, Adam C, Cohen L. Direct intracranial, fMRI, and lesion evidence for the causal role of the left inferotemporal cortex in reading. Neuron. 2006;50:191–204. doi: 10.1016/j.neuron.2006.03.031.

- Geschwind N. Disconnection syndromes in animals and man. Brain. 1965;88:237–294. doi: 10.1093/brain/88.2.237.

- Harm MW, Seidenberg MS. Computing the meanings of words in reading: cooperative division of labor between visual and phonological processes. Psychol Rev. 2004;111:662–720. doi: 10.1037/0033-295X.111.3.662.

- Jobard G, Crivello F, Tzourio-Mazoyer N. Evaluation of the dual route theory of reading: a metanalysis of 35 neuroimaging studies. Neuroimage. 2003;20:693–712. doi: 10.1016/S1053-8119(03)00343-4.

- Kiebel SJ, Klöppel S, Weiskopf N, Friston KJ. Dynamic causal modelling: a generative model of slice timing in fMRI. Neuroimage. 2007;34:1487–1496. doi: 10.1016/j.neuroimage.2006.10.026.

- Leff AP, Crewes H, Plant GT, Scott SK, Kennard C, Wise RJ. The functional anatomy of single word reading in patients with hemianopic and pure alexia. Brain. 2001;124:510–521. doi: 10.1093/brain/124.3.510.

- Leff AP, Schofield TM, Stephan KE, Crinion JT, Friston KJ, Price CJ. The cortical dynamics of intelligible speech. J Neurosci. 2008;28:13209–13215. doi: 10.1523/JNEUROSCI.2903-08.2008.

- Levy J, Pernet C, Treserras S, Boulanouar K, Aubry F, Démonet JF, Celsis P. Testing for the dual-route cascade reading model in the brain: an effective connectivity account of an efficient reading style. PLoS One. 2009;4:e6675. doi: 10.1371/journal.pone.0006675.

- Lindenberg R, Scheef L. Supramodal language comprehension: role of the left temporal lobe for listening and reading. Neuropsychologia. 2007;45:2407–2415. doi: 10.1016/j.neuropsychologia.2007.02.008.

- Liu L, Vira A, Friedman E, Minas J, Bolger D, Bitan T, Booth J. Children with reading disability show brain differences in effective connectivity for visual but not auditory word comprehension. PLoS One. 2010;5:e13492. doi: 10.1371/journal.pone.0013492.

- Marinkovic K, Dhond RP, Dale AM, Glessner M, Carr V, Halgren E. Spatiotemporal dynamics of modality-specific and supramodal word processing. Neuron. 2003;38:487–497. doi: 10.1016/s0896-6273(03)00197-1.

- Mechelli A, Crinion JT, Long S, Friston KJ, Lambon Ralph MA, Patterson K, McClelland JL, Price CJ. Dissociating reading processes on the basis of neuronal interactions. J Cogn Neurosci. 2005;17:1753–1765. doi: 10.1162/089892905774589190.

- Nobre AC, Allison T, McCarthy G. Word recognition in the human inferior temporal lobe. Nature. 1994;372:260–263. doi: 10.1038/372260a0.

- Okada K, Hickok G. Identification of lexical-phonological networks in the superior temporal sulcus using functional magnetic resonance imaging. Neuroreport. 2006;17:1293–1296. doi: 10.1097/01.wnr.0000233091.82536.b2.

- Patterson K, Shewell C. Speak and spell: dissociations and word-class effects. In: Coltheart M, Sartori G, Job R, editors. The cognitive neuropsychology of language. London: Erlbaum; 1987. pp. 273–294.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci. 2007;8:976–987. doi: 10.1038/nrn2277.

- Penny WD, Stephan KE, Daunizeau J, Rosa MJ, Friston KJ, Schofield TM, Leff AP. Comparing families of dynamic causal models. PLoS Comput Biol. 2010;6:e1000709. doi: 10.1371/journal.pcbi.1000709.

- Plaut DC, Shallice T. Deep dyslexia: a case study of connectionist neuropsychology. Cogn Neuropsychol. 1993;10:377–500.

- Plaut DC, McClelland JL, Seidenberg MS, Patterson K. Understanding normal and impaired word reading: computational principles in quasi-regular domains. Psychol Rev. 1996;103:56–115. doi: 10.1037/0033-295x.103.1.56.

- Price CJ. The anatomy of language: contributions from functional neuroimaging. J Anat. 2000;197:335–359. doi: 10.1046/j.1469-7580.2000.19730335.x.

- Price CJ, Mechelli A. Reading and reading disturbance. Curr Opin Neurobiol. 2005;15:231–238. doi: 10.1016/j.conb.2005.03.003.

- Price CJ, Wise RJ, Frackowiak RS. Demonstrating the implicit processing of visually presented words and pseudowords. Cereb Cortex. 1996;6:62–70. doi: 10.1093/cercor/6.1.62.

- Price CJ, McCrory E, Noppeney U, Mechelli A, Moore CJ, Biggio N, Devlin JT. How reading differs from object naming at the neuronal level. Neuroimage. 2006;29:643–648. doi: 10.1016/j.neuroimage.2005.07.044.

- Pugh KR, Mencl WE, Jenner AR, Katz L, Frost SJ, Lee JR, Shaywitz SE, Shaywitz BA. Functional neuroimaging studies of reading and reading disability (developmental dyslexia). Ment Retard Dev Disabil Res Rev. 2000;6:207–213. doi: 10.1002/1098-2779(2000)6:3<207::AID-MRDD8>3.0.CO;2-P.

- Richardson FM, Thomas MS, Price CJ. Neuronal activation for semantically reversible sentences. J Cogn Neurosci. 2010;22:1283–1298. doi: 10.1162/jocn.2009.21277.

- Schmahmann JD, Pandya DN, Wang R, Dai G, D'Arceuil HE, de Crespigny AJ, Wedeen VJ. Association fibre pathways of the brain: parallel observations from diffusion spectrum imaging and autoradiography. Brain. 2007;130:630–653. doi: 10.1093/brain/awl359.

- Scott SK, Blank CC, Rosen S, Wise RJ. Identification of a pathway for intelligible speech in the left temporal lobe. Brain. 2000;123:2400–2406. doi: 10.1093/brain/123.12.2400.

- Seghier ML, Price CJ. Reading aloud boosts connectivity through the putamen. Cereb Cortex. 2010;20:570–582. doi: 10.1093/cercor/bhp123.

- Seghier ML, Zeidman P, Neufeld NH, Leff AP, Price CJ. Identifying abnormal connectivity in patients using dynamic causal modeling of fMRI responses. Front Syst Neurosci. 2010;4:142. doi: 10.3389/fnsys.2010.00142.

- Seghier ML, Josse G, Leff AP, Price CJ. Lateralization for words is predicted by reduced coupling from left to right prefrontal cortex. Cereb Cortex. 2011 doi: 10.1093/cercor/bhq203.

- Seidenberg MS, McClelland JL. A distributed, developmental model of word recognition and naming. Psychol Rev. 1989;96:523–568. doi: 10.1037/0033-295x.96.4.523.

- Simons JS, Koutstaal W, Prince S, Wagner AD, Schacter DL. Neural mechanisms of visual object priming: evidence for perceptual and semantic distinctions in fusiform cortex. Neuroimage. 2003;19:613–626. doi: 10.1016/s1053-8119(03)00096-x.

- Spitsyna G, Warren JE, Scott SK, Turkheimer FE, Wise RJ. Converging language streams in the human temporal lobe. J Neurosci. 2006;26:7328–7336. doi: 10.1523/JNEUROSCI.0559-06.2006.

- Starrfelt R, Habekost T, Leff AP. Too little, too late: reduced visual span and speed characterize pure alexia. Cereb Cortex. 2009;19:2880–2890. doi: 10.1093/cercor/bhp059.

- Starrfelt R, Habekost T, Gerlach C. Visual processing in pure alexia: a case study. Cortex. 2010;46:242–255. doi: 10.1016/j.cortex.2009.03.013.

- Stephan KE, Harrison LM, Kiebel SJ, David O, Penny WD, Friston KJ. Dynamic causal models of neural system dynamics: current state and future extensions. J Biosci. 2007;32:129–144. doi: 10.1007/s12038-007-0012-5.

- Stephan KE, Penny WD, Daunizeau J, Moran RJ, Friston KJ. Bayesian model selection for group studies. Neuroimage. 2009;46:1004–1017. doi: 10.1016/j.neuroimage.2009.03.025.

- Stephan KE, Penny WD, Moran RJ, den Ouden HE, Daunizeau J, Friston KJ. Ten simple rules for dynamic causal modeling. Neuroimage. 2010;49:3099–3109. doi: 10.1016/j.neuroimage.2009.11.015.

- Turkeltaub PE, Coslett HB. Localization of sublexical speech perception components. Brain Lang. 2010;114:1–15. doi: 10.1016/j.bandl.2010.03.008.

- Turkeltaub PE, Gareau L, Flowers DL, Zeffiro TA, Eden GF. Development of neural mechanisms for reading. Nat Neurosci. 2003;6:767–773. doi: 10.1038/nn1065.

- Ungerleider LG, Haxby JV. ‘What’ and ‘where’ in the human brain. Curr Opin Neurobiol. 1994;4:157–165. doi: 10.1016/0959-4388(94)90066-3.

- Vigneau M, Beaucousin V, Hervé PY, Duffau H, Crivello F, Houdé O, Mazoyer B, Tzourio-Mazoyer N. Meta-analyzing left hemisphere language areas: Phonology, semantics, and sentence processing. Neuroimage. 2006;30:1414–1432. doi: 10.1016/j.neuroimage.2005.11.002.

- Vinckier F, Dehaene S, Jobert A, Dubus JP, Sigman M, Cohen L. Hierarchical coding of letter strings in the ventral stream: dissecting the inner organization of the visual word-form system. Neuron. 2007;55:143–156. doi: 10.1016/j.neuron.2007.05.031.

- Ziegler JC, Goswami U. Reading acquisition, developmental dyslexia, and skilled reading across languages: a psycholinguistic grain size theory. Psychol Bull. 2005;131:3–29. doi: 10.1037/0033-2909.131.1.3.

- Ziegler JC, Goswami U. Becoming literate in different languages: similar problems, different solutions. Dev Sci. 2006;9:429–436. doi: 10.1111/j.1467-7687.2006.00509.x.