Abstract

In a companion paper, we proposed that cognitive debiasing is a skill essential in developing sound clinical reasoning to mitigate the incidence of diagnostic failure. We reviewed the origins of cognitive biases and some proposed mechanisms for how debiasing processes might work. In this paper, we first outline a general schema of how cognitive change occurs and the constraints that may apply. We review a variety of individual factors, many of them biases themselves, which may be impediments to change. We then examine the major strategies that have been developed in the social sciences and in medicine to achieve cognitive and affective debiasing, including the important concept of forcing functions. The abundance and rich variety of approaches that exist in the literature and in individual clinical domains illustrate the difficulties inherent in achieving cognitive change, and also the need for such interventions. Ongoing cognitive debiasing is arguably the most important feature of the critical thinker and the well-calibrated mind. We outline three groups of suggested interventions going forward: educational strategies, workplace strategies and forcing functions. We stress the importance of ambient and contextual influences on the quality of individual decision making and the need to address factors known to impair calibration of the decision maker. We also emphasise the importance of introducing these concepts, and the corollary development of training in critical thinking, at the undergraduate level in medical education.

Keywords: Cognitive Biases, Decision Making, Diagnostic Errors, Patient Safety

Introduction

In the first of these two papers, we suggested that cognitive debiasing is an essential skill in developing sound clinical reasoning. We reviewed the origins of innate and acquired cognitive biases and some proposed mechanisms for how debiasing processes might work.1 In this paper, we first examine some barriers to debiasing and then review multiple strategies to address them.

Over the years, various strategies have been adopted to deal with shortcomings and failures in decision making. As early as 1772, Ben Franklin outlined a ‘moral algebra’ to improve his judgements and avoid rash decisions.2 Proverbs, aphorisms, caveats, mnemonics, lists and many other strategies have emerged that serve a similar purpose. Investigations of their effectiveness have been infrequent and, at present, cognitive debiasing is an inexact science. Here, we offer a variety of strategies from both behavioural sciences and medicine that have been developed in recent years, which vary from experimental studies to simple observations to opinions, with varying levels of evidentiary support. Our purpose has been to develop an inclusive collection of strategies in a framework for learners, researchers and educators that will provide a practical scaffold for the work ahead.

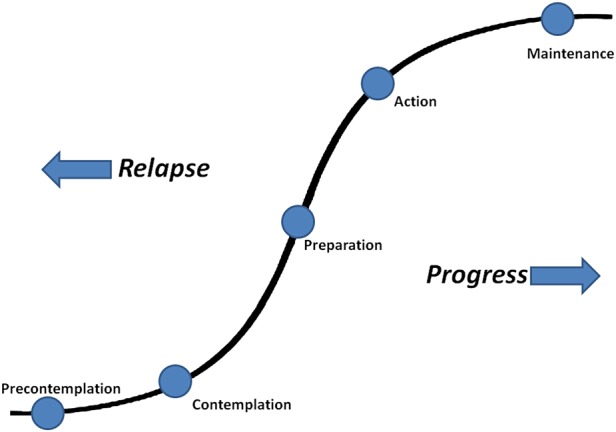

Cognitive debiasing involves changes that rarely come about through a discrete, single event but instead through a succession of stages—from a state of lack of awareness of bias, to awareness, to the ability to detect bias, to considering a change, to deciding to change, then initiating strategies to accomplish change, and finally, maintaining the change. These key steps are outlined in figure 1,3 which may help our understanding of how physicians might engage in debiasing. Several caveats need to be applied to this model: first, a clinician making a biased response does not necessarily mean that the decision maker was unaware of correct approaches to make decisions4; second, for biases to be successfully addressed, there needs to be such awareness as well as the motivation for change; third, the clinician needs to be aware of the direction in which the bias is taking him or her and its magnitude; and finally, even if the clinician is aware of a potential for bias in a particular situation, he or she might still be unable to shake the conviction of his or her biased judgement.5

Figure 1.

Transtheoretical model of change.

Many clinicians presently appear to be at the precontemplative level. They may be unaware of the powerful influence of unconscious factors on their reasoning, may not realise that cognitive and affective biases can affect their decision making, and therefore, see no reason to take any action to change their thinking. Introducing these ideas and raising awareness is a prerequisite for debiasing. Sometimes a sentinel event, such as the publication of Groopman's book How Doctors Think,6 can catalyse the uptake of an important idea. Conventional forms of information exchange, such as rounds, seminars, and morbidity and mortality conferences, may provide opportunities to address cognitive pitfalls within the context of engaging case examples. At times, however, a single experience, which can be shared in such moments, can change thinking. This happens especially if the event is emotionally laden because we tend to be particularly influenced by emotionally arousing experiences.7 8 For example, if a physician misdiagnoses a headache as benign and the patient subsequently dies from a subarachnoid haemorrhage, the powerful impact of this experience might produce long-standing changes in the physician's approach towards the diagnosis of patients presenting with headache.

Increasing physicians’ awareness of the need for debiasing does not guarantee, however, that debiasing actually occurs. Indeed, since Fischhoff's pioneering work,9 a general mood of gloom and doom towards cognitive debiasing in the psychology and medical literature seems to have prevailed5 10; it is accepted to be a difficult but not an impossible task. A variety of factors may explain the intractability of cognitive bias and why it is so difficult to change. In addition to lack of awareness of the impact of bias in clinical reasoning, clinicians’ overconfidence in their own judgements may be one of the most powerful factors preventing debiasing.11 Even those physicians who are aware of the potential impact of biases on clinical judgement may not believe that they are vulnerable to them. Moreover, it is a human tendency to bolster existing beliefs rather than search for new approaches, and it is easier to stay with the status quo than to make efforts to learn new approaches and change current practice. Physicians are not immune to these tendencies. Finally, the invariably abstract, arid form of the discussions of cognitive processes contributes to these impediments: they typically lack the vividness and concrete nature of clinical disease presentations that are more appealing to clinicians.

This becomes even more challenging if one considers that biases tend to act unconsciously. A general problem with debiasing, as Horton notes, is that ‘the same kinds of biases that distort our thinking in general also distort our thinking about the biases themselves’.8 Indeed, many biases are applied unconsciously, and if physicians are unaware of them, they will have difficulty recognising the need for debiasing. Clinicians themselves may be just as vulnerable as their patients to a number of psychological factors that might compromise decision making.12 13 Nevertheless, the topic has attracted considerable interest and effort, reflecting a widespread perception of the need for solutions to the vulnerability of human reasoning.14

Strategies for cognitive debiasing

In the first of these combined papers, we suggested that debiasing would require a physician to detect the need to override the initial intuitive responses to a problem in order to engage in analytical processes to restructure reasoning and find alternative solutions. The extent to which a physician tends to engage, and succeed, in debiasing depends not only on his/her prior knowledge and experiences but also on thinking dispositions. Some of the strategies described aim at making physicians aware of the risk of biases, intervening during the contemplation and preparation steps to enhance their ability to detect the need for debiasing in the future; we have grouped these under ‘educational strategies’ in table 1. While educational strategies aim mostly to enhance physicians’ ability to debias in the future, other strategies may be implemented by the physician at the time of problem-solving, while reasoning about the problem at hand. These strategies have been grouped under ‘workplace strategies’ in table 1 and include both strategies that depend basically on physicians’ cognitive processes and those that require interventions in the settings of practice. Whereas some of these strategies have been evaluated and some empirical evidence exists that supports their use, others are supported mostly by research in other domains; still others have a long-standing tradition in clinical practice but have not usually been subjected to formal investigation.

Table 1.

Educational and workplace strategies for cognitive debiasing

| Strategy | Comment | Examples |
|---|---|---|
| Educational | | |
| Training on theories of reasoning and medical decision making | Achieving improved diagnostic reasoning requires an understanding of cognitive theories about decision making and the impact of cognitive biases15–18 | |
| Bias inoculation | A key recommendation is to teach about cognitive and affective biases and develop specific tools to test for them22–24 and for debiasing | |
| Specific educational interventions | Teaching specific skills may mitigate particular biases by providing basic knowledge leading to greater insight | |
| Cognitive tutoring systems | Computer-based systems can be used to construct a learner's profile of decision making and provide feedback on specific biases and strategies to mitigate them | |
| Simulation training | Simulation may be a venue for teaching about, identifying and remediating cognitive errors31 | |
| Workplace | | |
| Get more information | Heuristics and biases often arise in the context of insufficient information. Diagnostic accuracy is related to thoroughness of cue acquisition33 | |
| Structured data acquisition | Forcing deliberate data acquisition may avoid ‘spot diagnoses’35 36 by ensuring that less obvious symptoms are considered | |
| Affective debiasing | Virtually all decision making involves some degree of affective influence. Many affective biases are hard-wired. Decision makers often are unaware of the affective influences on decision making38 39 | |
| Metacognition, decoupling, reflection, mindfulness | A deliberate disengagement or decoupling from intuitive judgements and engagement in analytical processes to verify initial impressions1 | |
| Slowing down strategies | Accuracy suffers when diagnoses are made too early and improves with slowing down | |
| Be more sceptical | A tendency in human thinking is to believe rather than disbelieve. Type 1 processing occurs by viewing something as more predictable and coherent than is really the case10 44 | |
| Recalibration | When the decision maker anticipates additional risks, recalibration may reduce error | |
| Group decision strategy | Seeking others’ opinions in complex situations may be of value. Crowd wisdom, at times, is greater than an individual decision maker46 | |
| Personal accountability | When people know their decisions will be scrutinised and they are accountable, their performance may improve | |
| Supportive environments | Friendly and supportive environments improve the quality of decision making49 | |
| Exposure control | Limit exposure to information that might influence judgement before an impression is formed51 | |
| Sparklines | Informational mini-graphics can be embedded in context in clinical data. Graphics have the potential to mitigate specific biases52 | |
| Decision support systems | Support systems have been developed for clinical use54 55 | |

In table 2, we describe a number of strategies that are collectively referred to as ‘forcing functions’. The degree of force can range from absolute constraints such that an erroneous response cannot be made, for example, removal of concentrated potassium solutions from hospital wards, to explicit ‘if this then this’ rules, to simply encouraging a desired response. Cognitive forcing functions are rules that depend on the clinician consciously applying a metacognitive step and cognitively forcing a necessary consideration of alternatives. Some of these functions can be easily recognised in clinical adages or warnings that, although rarely investigated, have long been part of clinical teaching. They do not all need to be explicit; sometimes it is possible to gently nudge people in a particular direction in order to obtain better outcomes.68

Table 2.

Forcing functions

| Forcing function | Comment | Examples |
|---|---|---|
| Statistical and clinical prediction rules (SPRs and CPRs) | Explicit SPRs and CPRs typically equal or exceed the reliability of expert ‘intuitive’ judgement. Easy to use, they address significant issues | |
| Cognitive forcing strategies (CFSs) | CFSs are special cases of forcing functions that require clinicians to internalise and apply the forcing function deliberately. They represent a systematic change in clinical practice. CFSs may range from universal to generic to specific | |
| Standing rules | May be used in certain clinical settings that require a given diagnosis not be made unless other must-not-miss diagnoses have been ruled out | |
| General diagnostic rules in clinical practice | Many diagnostic ‘rules’ are often passed to trainees that are intended to prevent diagnostic error | |
| Rule Out Worst-Case Scenario (ROWS) | A simple but useful general strategy to avoid missing important diagnoses | |
| Checklists | A standard in aviation and now incorporated into medicine in intensive care units, surgery and in the diagnostic process60 | |
| Stopping rules | Stopping rules are an important form of forcing functions; they determine when enough information has been gathered to make an optimal decision63 64 | |
| Consider the opposite | Seeking evidence to support a decision opposite to your initial impression may be a useful way of forcing consideration of other options | |
| Consider the control | Causal claims are often made without an appropriate control group67 | |
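To make the idea of an explicit ‘if this then this’ rule concrete, the standing rule listed in table 2 might be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a validated clinical tool: the names `StandingRule`, `Workup`, `outstanding_alternatives` and `may_finalise` are hypothetical, and the single rule shown simply reuses the headache example discussed earlier.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class StandingRule:
    """A diagnosis that should not be finalised until the listed
    must-not-miss alternatives have been ruled out."""
    diagnosis: str
    must_rule_out: FrozenSet[str]


@dataclass
class Workup:
    """The clinician's current working diagnosis and what has been excluded."""
    working_diagnosis: str
    ruled_out: Set[str] = field(default_factory=set)


def outstanding_alternatives(workup: Workup, rules: List[StandingRule]) -> List[str]:
    """Return the must-not-miss diagnoses that still need to be excluded."""
    pending: Set[str] = set()
    for rule in rules:
        if rule.diagnosis == workup.working_diagnosis:
            pending |= rule.must_rule_out - workup.ruled_out
    return sorted(pending)


def may_finalise(workup: Workup, rules: List[StandingRule]) -> bool:
    """The forcing step: finalising is allowed only when nothing is pending."""
    return not outstanding_alternatives(workup, rules)


# Hypothetical rule set for illustration only; not clinical guidance.
RULES = [
    StandingRule("benign headache", frozenset({"subarachnoid haemorrhage"})),
]

if __name__ == "__main__":
    workup = Workup("benign headache")
    print(may_finalise(workup, RULES), outstanding_alternatives(workup, RULES))
    workup.ruled_out.add("subarachnoid haemorrhage")
    print(may_finalise(workup, RULES))
```

In this sketch the rule acts only as a prompt to consider alternatives: a working diagnosis with no matching rule is never blocked, which sits nearer the ‘encouraging’ end of the spectrum of force than an absolute constraint.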

These three groupings show considerable overlap and are not intended to be seen as discrete but as a spectrum. We have not included here in detail the diverse initiatives in clinical research and practice that fall under Cognitive Bias Modification (CBM). The main purpose of CBM is to modify cognitive and affective biases that underlie psychological dysfunction, associated mostly with anxiety and depression.69 Insofar as cognitive debiasing and CBM are both directed at changing biased cognition and behaviour, it would be expected that some CBM techniques would be effective for those interested in debiasing outside the psychiatric setting, especially for affective bias.

Prescriptive debiasing: are there specific cognitive pills for cognitive ills?

The different sources of bias might have implications for the choice of strategies that can effectively counteract them. While standard biases such as availability and representativeness likely have an evolutionary origin, that is, derive from heuristics that were adaptive in ancient environments, other biases may be acquired through individuals’ particular experiences. Examples of the latter are emotional dispositions and specific biases towards particular classes of patients, for example, drug seekers, patients with psychiatric comorbidity or the ‘frequent flyer’. These biases are usually acquired unconsciously through simply being in specific environments and passively taking cues from others.

Given the differing aetiologies of bias, we might ask if some are more robust, and therefore, more resistant to change than others, and should there be different approaches to debiasing them?70 Perhaps the hard-wired ‘evolutionary’ biases would be the most resistant to change and may need several different debiasing strategies as well as multiple interventions. Major cultural, sociocentric and other biases that have been established through learning may be easier to change, although it would be preferable that these biases not be allowed to form in the first place. Good role modelling, good teaching programmes and optimal learning environments will help minimise them.49 Locally acquired and individualistic biases might be expected to be the least intransigent and the most amenable to change. Regardless of their origin, affective biases may need fundamentally different approaches from cognitive biases.

The recent literature is becoming more specific about biases and their defining characteristics. Various taxonomic strategies have been proposed,5 71–74 and future work may predict which particular types of strategies might work for certain classes of biases, as Arkes has proposed.71 Readers interested in the process of tailoring debiasing strategies to specific biases may find a suitable starting point in the taxonomies proposed in Stanovich's work75 or that by Wilson and Brekke.5

How do we go forward?

A major goal will be to identify the parameters of change. How might the workplace be optimised to avoid bias in the first place? Which interventions are appropriate for which biases and for which group (students, residents, practising clinicians)? What maintenance strategies will be required, and for how long?

While this and its companion paper were being prepared, a comprehensive narrative review listing 42 tested interventions to mitigate cognitive errors was published.76 The effectiveness of interventions and strategies for debiasing in clinical practice deserves the most attention, as studies on their use have largely been conducted in other domains.

Medical training has traditionally put an emphasis on declarative knowledge (knowing what, or information-based) rather than procedural knowledge (knowing how, or application-based). While clinical adages aimed at preventing cognitive pitfalls are a tradition in clinical teaching, they are addressed occasionally and without a theoretical basis. Recently, efforts have been made to increase emphasis on procedural knowledge by building critical thinking into the undergraduate curriculum.77 Not surprisingly, the ability to avoid bias is correlated with critical thinking ability.78 79 Many of the processes described above would be integral to this initiative: a knowledge and understanding of reasoning and decision-making theories, of cognitive and affective biases, of logical fallacies and of standards for clarity, precision, accuracy, relevance, logicalness, intellectual humility and other attributes.80 The strategies described in this paper can lead to an educational curriculum that brings the traditional clinical adages into a coherent framework and that engages students and residents with real case examples in which cognitive pitfalls and debiasing are showcased and studied.

Many decision makers in clinical practice appear to recognise at least some of their biases and put measures in place to control them. However, the interface between patient and doctor is unique and so dynamic that even the best minds are challenged. Many contextual influences are difficult to control: the patient's characteristics and personality, the demographics and presentation (both typical and atypical) of the disease process itself, the knowledge, experience, expertise, personality and other characteristics of the physician, and the ambient conditions under which the decision will be made. Cultural and other individual differences also play a role in the effectiveness of debiasing initiatives.

Type 1 processing is essential to cognitive functioning and generally serves us well; in fact we could not live without it.81 82 Given that the vast majority of our daily decisions involve Type 1 processes,83 there is considerable ground to be made in educating intuition.49 Better environments can be created by providing better mentoring and feedback, by having fewer insults to homeostasis (more rest, sleep and reduced cognitive overloading) and by having trainees learn their skills by making the scientific method intuitive. Strategies to avoid extraneous influences on decision making84 would also be worthwhile.

Clinicians must be informed and recognise the need for constant vigilance and surveillance of their thinking to mitigate diagnostic and other clinical errors. There is an ongoing imperative to self-monitor for bias and especially to be mindful of faulty decision making at vulnerable times, and for the risk of excessive reliance on intuitive judgements when further reflection is required. This is captured in a current definition of critical thinking: ‘the ability to engage in purposeful, self-regulatory judgment’.85 This paper has reviewed a rich variety of cognitive debiasing initiatives from social science and clinical medicine. Given recent advances in the understanding of clinical decision making, the time appears ripe for renewed research effort and we hope these two papers will provoke such effort.

Footnotes

Contributors: The article is based on a workshop conducted by the authors during the 2011 Diagnostic Error in Medicine Annual Conference in Chicago. PC conceived the work with substantial contributions from GS and SM. PC wrote the draft of the manuscript, and GS and SM revised it critically for important intellectual content. All authors approved the final version of the manuscript.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Croskerry P, Singhal G, Mamede S. Cognitive debiasing 1: origins of bias and theory of debiasing. BMJ Qual Saf 2013;0:1–7. doi:10.1136/bmjqs-2012-001712

- 2. Newell BR, Wong KY, Cheung JCH, et al. Think, blink or sleep on it? The impact of modes of thought on complex decision making. Q J Exp Psychol 2009;62:707–32

- 3. Prochaska JO, DiClemente CC, Norcross JC. In search of how people change. Am Psychol 1992;47:1102–4

- 4. De Neys W, Moyens E, Vansteenwegen D. Feeling we're biased: autonomic arousal and reasoning conflict. Cogn Affect Behav Neurosci 2010;10:208–16

- 5. Wilson TD, Brekke N. Mental contamination and mental correction: unwanted influences on judgments and evaluations. Psychol Bull 1994;116:117–42

- 6. Groopman J. How doctors think. New York: Houghton Mifflin Co, 2007

- 7. Zajonc RB. Feeling and thinking: preferences need no inferences. Am Psychol 1980;35:151–75

- 8. Horton K. Aid and bias. Inquiry 2004;47:545–61

- 9. Fischhoff B. Debiasing. In: Kahneman D, Slovic P, Tversky A, eds. Judgment under uncertainty: heuristics and biases. Cambridge, England: Cambridge University Press, 1982:422–44

- 10. Kahneman D. Thinking, fast and slow. New York: Doubleday Canada, 2011

- 11. Berner ES, Graber ML. Overconfidence as a cause of diagnostic error in medicine. Am J Med 2008;121(5 Suppl):S2–23

- 12. Beyerstein BL. Alternative medicine and common errors of reasoning. Acad Med 2001;76:230–7

- 13. Pronin E, Gilovich T, Ross L. Objectivity in the eye of the beholder: divergent perceptions of bias in self versus others. Psychol Rev 2004;111:781–99

- 14. Heath C, Heath D. Decisive. Toronto: Random House Canada, 2013

- 15. Stanovich KE, West RF. Individual differences in reasoning: implications for the rationality debate? Behav Brain Sci 2000;23:645–65

- 16. Croskerry P. A universal model for diagnostic reasoning. Acad Med 2009;84:1022–28

- 17. Croskerry P. Clinical cognition and diagnostic error: applications of a dual process model of reasoning. Adv Health Sci Educ Theory Pract 2009;14(Suppl 1):27–35

- 18. Croskerry P. The importance of cognitive errors in diagnosis and strategies to prevent them. Acad Med 2003;78:1–6

- 19. Croskerry P. Cognitive and affective dispositions to respond. In: Croskerry P, Cosby KS, Schenkel S, Wears R, eds. Patient safety in emergency medicine. Philadelphia: Lippincott Williams & Wilkins, 2008:219–27

- 20. Croskerry P, Abbass A, Wu A. Emotional issues in patient safety. J Patient Saf 2010;6:1–7

- 21. Jenicek M. Medical error and harm: understanding, prevention and control. New York: Productivity Press, 2011

- 22. Hershberger PJ, Part HM, Markert RJ, et al. Teaching awareness of cognitive bias in medical decision making. Acad Med 1995;70:661

- 23. Hershberger PJ, Markert RJ, Part HM, et al. Understanding and addressing cognitive bias in medical education. Adv Health Sci Educ 1997;1:221–6

- 24. Turk DC, Salovey P. Clinical information processing: bias inoculation. In: Ingram RE, ed. Information processing approaches to clinical psychology. Orlando, FL: Academic Press, 1986:305–23

- 25. Mumma GH, Wilson SB. Procedural debiasing of primacy/anchoring effects in clinical-like judgments. J Clin Psychol 1995;51:841–53

- 26. Sherbino J, Dore KL, Siu E, et al. The effectiveness of cognitive forcing strategies to decrease diagnostic error: an exploratory study. Teach Learn Med 2011;23:78–84

- 27. Fong GT, Krantz DH, Nisbett R. The effect of statistical training on thinking about everyday problems. Cogn Psychol 1986;18:253–92

- 28. Nisbett RE, Fong GT, Lehman DR, et al. Teaching reasoning. Science 1987;238:625–31

- 29. Eva KW, Hatala RM, LeBlanc VR, et al. Teaching from the clinical reasoning literature: combined reasoning strategies help novice diagnosticians overcome misleading information. Med Educ 2007;41:1152–8

- 30. Crowley RS, Legowski E, Medvedeva O, et al. Automated detection of heuristics and biases among pathologists in a computer-based system. Adv Health Sci Educ 2013;18:343–63. doi:10.1007/s10459-012-9374-z

- 31. Stiegler MP, Neelankavil JP, Canales C, et al. Cognitive errors detected in anaesthesiology: a literature review and pilot study. Br J Anaesth 2012;108:229–35

- 32. Bond WF, Deitrick LM, Arnold DC, et al. Using simulation to instruct emergency medicine residents in cognitive forcing strategies. Acad Emerg Med 2004;79:438–46

- 33. Elstein AS, Shulman LS, Sprafka SA. Medical problem solving: an analysis of clinical reasoning. Cambridge, MA: Harvard University Press, 1978

- 34. Umanath NS, Vessey I. Multiattribute data presentation and human judgment: a cognitive fit perspective. Decis Sci 1994;25:795–824

- 35. Campbell WW. Augenblickdiagnose. Semin Neurol 1998;18:169–76

- 36. Silen W. Cope's early diagnosis of the acute abdomen. 15th edn. New York: Oxford University Press, 1979

- 37. Diagnostic Checklist 2011. http://pie.med.utoronto.ca/dc/ (accessed 31 Mar 2013)

- 38. Whaley AL, Geller PA. Toward a cognitive process model of ethnic/racial biases in clinical judgment. Rev Gen Psychol 2007;11:75–96

- 39. Vohs KD, Baumeister RF, Loewenstein G, eds. Do emotions help or hurt decision making? A Hedgefoxian perspective. New York: Russell Sage Foundation, 2007

- 40. Mamede S, Penaforte JC, Schmidt HG. Effects of reflective practice on the accuracy of medical diagnoses. Med Educ 2008;42:468–5

- 41. Mamede S, van Gog T, van den Berge K, et al. Effect of availability bias and reflective reasoning on diagnostic accuracy among internal medicine residents. JAMA 2010;304:1198–203

- 42. Moulton CAE, Regehr G, Mylopoulos M, et al. Slowing down when you should: a new model of expert judgment. Acad Med 2007;82:S109–16

- 43. Moulton CA, Regehr G, Lingard L, et al. Slowing down to stay out of trouble in the operating room: remaining attentive in automaticity. Acad Med 2010;85:1571–7

- 44. Shermer M. The believing brain. New York: Times Books, Henry Holt and Company, 2011

- 45. Daumit GL, Pronovost PJ, Anthony CB, et al. Adverse events during medical and surgical hospitalizations for persons with schizophrenia. Arch Gen Psychiatry 2006;63:267–72

- 46. Surowiecki J. The wisdom of crowds. New York, NY: Anchor Books, 2005

- 47. Bornstein G, Yaniv I. Individual and group behavior in the ultimatum game: are groups more “rational” players? Exp Econ 1998;1:101–8

- 48. Tetlock PE, Kim JI. Accountability and judgment processes in a personality prediction task. J Pers Soc Psychol 1987;52:700–9

- 49. Hogarth RM. Educating intuition. Chicago: University of Chicago Press, 2001

- 50. Croskerry P. ED cognition: any decision by anyone at any time. ED administration series. CJEM. doi:10.2310/8000.2013.131053

- 51. Gilbert DT. The assent of man: mental representation and the control of belief. In: Wegner DM, Pennebaker JW, eds. The handbook of mental control. Englewood Cliffs, NJ: Prentice Hall, 1993:57–87

- 52. Radecki RP, Medow MA. Cognitive debiasing through Sparklines in clinical data displays. AMIA Annu Symp Proc 2007;11:1085

- 53. Bauer DT, Guerlain S, Brown PJ. The design and evaluation of a graphical display for laboratory data. J Am Med Inform Assoc 2010;17:416–24

- 54. Barnett GO, Cimino JJ, Hupp JA, et al. An evolving diagnostic decision-support system. JAMA 1987;258:67–74

- 55. Ramnarayan P, Roberts GC, Coren M, et al. Assessment of the potential impact of a reminder system on the reduction of diagnostic errors: a quasi-experimental study. BMC Med Inform Decis Mak 2006;6:22

- 56. Grove WM, Meehl PE. Comparative efficiency of informal (subjective, impressionistic) and formal (mechanical, algorithmic) prediction procedures: the clinical–statistical controversy. Psychol Public Policy Law 1996;2:293–323

- 57. McGinn T, Jervis R, Wisnivesky J, et al. Tips for teachers of evidence-based medicine: clinical prediction rules (CPRs) and estimating pretest probability. J Gen Intern Med 2008;23:1261–8

- 58. Croskerry P. Cognitive forcing strategies in clinical decision making. Ann Emerg Med 2003;41:110–20

- 59. Trowbridge RL. Twelve tips for teaching avoidance of diagnostic errors. Med Teach 2008;30:496–500

- 60. Ely J, Graber M, Croskerry P. Checklists to reduce diagnostic errors. Acad Med 2011;86:307–13

- 61. Pronovost P, Needham D, Berenholtz S, et al. An intervention to decrease catheter-related bloodstream infections in the ICU. N Engl J Med 2006;355:2725–32

- 62. Haynes AB, Weiser TG, Berry WR, et al. A surgical safety checklist to reduce morbidity and mortality in a global population. N Engl J Med 2009;360:491–9

- 63. Simon HA. The sciences of the artificial. Cambridge, MA: MIT Press, 1981

- 64. Bazerman MH. Judgment in managerial decision making. 5th edn. New York: Wiley & Sons, 2002

- 65. Lord CG, Lepper MR, Preston E. Considering the opposite: a corrective strategy for social judgment. J Pers Soc Psychol 1984;47:1231–43

- 66. Galinsky AD, Moskowitz GB. Counterfactuals as behavioral primes: priming the simulation heuristic and consideration of alternatives. J Exp Soc Psychol 2000;36:384–409

- 67. Bishop MA, Trout JD. Epistemology and the psychology of human judgment. New York, NY: Oxford University Press, 2005:148–52

- 68. Thaler RH, Sunstein CR. Nudge: improving decisions about health, wealth, and happiness. New York: Penguin Books, 2009

- 69. MacLeod C, Koster EHW, Fox E. Whither cognitive bias modification research? Commentary on the special section articles. J Abnorm Psychol 2009;118:89–99

- 70. Keren G. Cognitive aids and debiasing methods: can cognitive pills cure cognitive ills? Adv Psychol 1990;68:523–52

- 71. Arkes HR. Costs and benefits of judgment errors: implications for de-biasing. Psychol Bull 1991;110:486–98

- 72. Arnott D. Cognitive biases and decision support systems development: a design science approach. Info Systems J 2006;16:55–78

- 73. Campbell SA, Croskerry P, Bond W. Profiles in patient safety: a “perfect storm” in the emergency department. Acad Emerg Med 2007;14:743–9

- 74. Lawson AE, Daniel ES. Inferences of clinical diagnostic reasoning and diagnostic error. J Biomed Inform 2011;44:402–12

- 75. Stanovich KE. Rationality and the reflective mind. New York: Oxford University Press, 2011:19

- 76. Graber ML, Kissam S, Payne VL, et al. Cognitive interventions to reduce diagnostic error: a narrative review. BMJ Qual Saf 2012;21:535–57

- 77. Millennium Conference 2011 on Critical Thinking. The Carl J. Shapiro Institute for Education and Research at Harvard Medical School and Beth Israel Deaconess Medical Center, Babson College, Wellesley, MA, May 19–21, 2011

- 78. Elder L, Paul R. Critical thinking development: a stage theory with implications for instruction. Tomales, CA: Foundation for Critical Thinking, 2010

- 79. West RF, Toplak ME, Stanovich KE. Heuristics and biases as measures of critical thinking: associations with cognitive ability and thinking dispositions. J Educ Psychol 2008;100:930–41

- 80. Lilienfeld SO, Ammirati R, Landfield K. Giving debiasing away: can psychological research on correcting cognitive errors promote human welfare? Perspect Psychol Sci 2009;4:390–8

- 81. Croskerry P. Context is everything or how could I have been that stupid? Healthc Q 2009;12:167–73

- 82. Smallberg G. Bias is the nose for the story. In: Brockman J, ed. This will make you smarter. New York: Harper Perennial, 2012:43–5

- 83. Lakoff G, Johnson M. Philosophy in the flesh: the embodied mind and its challenge to Western thought. New York: Basic Books, 1999

- 84. Danziger S, Levav J, Avnaim-Pesso L. Extraneous factors in judicial decisions. Proc Nat Acad Sci 2011;108:6889–92

- 85. Facione PA. Critical thinking: what it is and why it counts. Millbrae, CA: Insightassessment.com, 2011