Abstract

Numerous studies have shown that diagnostic failure depends upon a variety of factors. Psychological factors are fundamental in influencing the cognitive performance of the decision maker. In this first of two papers, we discuss the basics of reasoning and the Dual Process Theory (DPT) of decision making. The general properties of the DPT model, as it applies to diagnostic reasoning, are reviewed. A variety of cognitive and affective biases are known to compromise the decision-making process. They mostly appear to originate in the fast intuitive processes of Type 1 that dominate (or drive) decision making. Type 1 processes work well most of the time but they may open the door for biases. Removing or at least mitigating these biases would appear to be an important goal. We will also review the origins of biases. The consensus is that there are two major sources: innate, hard-wired biases that developed in our evolutionary past, and acquired biases established in the course of development and within our working environments. Both are associated with abbreviated decision making in the form of heuristics. Other work suggests that ambient and contextual factors may create high risk situations that dispose decision makers to particular biases. Fatigue, sleep deprivation and cognitive overload appear to be important determinants. The theoretical basis of several approaches towards debiasing is then discussed. All share a common feature that involves a deliberate decoupling from Type 1 intuitive processing and moving to Type 2 analytical processing so that eventually unexamined intuitive judgments can be submitted to verification. This decoupling step appears to be the critical feature of cognitive and affective debiasing.

Keywords: Patient safety, Cognitive biases, Decision making, Diagnostic errors

Introduction

Clinical decision making is a complex process. Clinical decisions about patients' diagnoses are made in one of two modes, intuitive or analytical, also referred to, respectively, as Type 1 and Type 2 processes. Intuitive processes are the more commonly used. They are fast and usually effective, but also more likely to fail. Because they are largely unconscious, mistakes, when they occur, are seldom corrected.1–3 In contrast, Type 2 processes are fairly reliable, safe and effective, but slow and resource intensive. The intuitive mode of decision making is characterised by heuristics: short-cuts, abbreviated ways of thinking, maxims and ‘seen this many times before’ ways of thinking. Heuristics represent an adaptive mechanism that saves us time and effort while making daily decisions. Indeed, it is a rule of thumb among cognitive psychologists that we spend about 95% of our time in the intuitive mode.4 We perform many of our daily activities through serial associations, in which one event automatically triggers the next, with few episodes of deliberate, focused, analytical thinking. We have a prevailing disposition to use heuristics, and while they work well most of the time, they are vulnerable to error. Our systematic errors are termed biases,3 and there are many of them: over a hundred cognitive biases5 and about a dozen affective biases (ways in which our feelings influence our judgment).6 Bias is inherent in human judgment, and physicians are, of course, also subject to it.

Indeed, one of the principal factors underlying diagnostic error is bias.7–9 Post hoc analyses of diagnostic errors10 11 have suggested that flaws in clinical reasoning, rather than lack of knowledge, underlie cognitive diagnostic errors, and there is some experimental evidence that, at least when problems are complex, errors are associated with intuitive judgments and can be repaired by analytical reasoning.12–14 Moreover, a few experimental studies have supported the claim that bias may misdirect diagnostic reasoning and thus lead to errors.15 16 While the evidence for the role of bias in medical diagnostic error is still scarce, research findings in other domains1 3 are sufficient to justify concern about the potential adverse influence of bias on diagnostic reasoning. Two clinical examples of biased decision making leading to diagnostic failure are given in boxes 1 and 2 (Case 1 and Case 2, respectively). From the case descriptions, the mode of decision making on which the physicians were relying cannot be determined. This is indeed a limitation of studies of diagnostic reasoning, which can draw only on indirect evidence or post hoc inference about reasoning processes, at least until other tools such as functional MRI (fMRI) can be employed experimentally.

Box 1. Example of biased decision making leading to diagnostic failure: case 1.

A 55-year-old man presents to a walk-in clinic towards the end of the evening. It has been a busy day for the clinic and they are about to close. His chief complaint is constipation. He has not had a bowel movement in 4 days, which is unusual for him. He complains of pain in his lower back and lower abdomen and also some tingling in his legs. He thinks that he will feel better with a laxative because what he has tried so far has not worked. He is briefly examined by the physician, who does not find anything remarkable on his abdominal examination. There is some mild suprapubic tenderness, which is attributed to the patient's need to urinate. Bowel sounds are good, his abdomen is soft and there are no masses or organomegaly. The physician prescribes a stronger laxative and advises the patient to contact his family doctor for further follow-up.

During the night, the patient was unable to urinate and went to the emergency department. On examination, his lower abdomen appeared distended and he was found to have a residual volume of 1200 cc on catheterisation. His rectum was markedly distended with soft stool that required disimpaction. He recalled straining his lower back while lifting about 4 days earlier. The emergency physician suspected cauda equina syndrome and this was confirmed on MRI. He was taken immediately to the operating room for surgical decompression. He did well postoperatively and regained full bladder control.

Comment: The patient was initially misdiagnosed and might have suffered permanent loss of bladder function requiring lifelong catheterisation. The principal biases for the physician who saw him in the clinic were framing, search satisficing and premature diagnostic closure. These may have led to incomplete history-taking, an incomplete physical examination, a failure to consider symptoms that appeared discordant with constipation (back pain, leg paresthesias) and a failure to consider other diagnoses. Fatigue may have been a contributing factor; it is known to increase the likelihood of defaulting to Type 1 processing and vulnerability to bias (for a description of particular cognitive biases, see ref. 7 17 18).

Box 2. Example of biased decision making leading to diagnostic failure: case 2.

A mildly obese, 19-year-old woman is admitted to a psychiatric hospital for stabilisation and investigation. She has suffered depressive symptoms accompanied by marked anxiety. Over the last week she has had bouts of rapid breathing which have been attributed to her anxiety. However, she has also exhibited mild symptoms of a respiratory infection and the psychiatry resident transfers her to a nearby emergency department (ED) of a tertiary care hospital to ‘rule out pneumonia’. She is on no medications other than birth control pills. At triage, she is noted to have an elevated heart rate and respiratory rate. She is uncomfortable, anxious and impatient, and does not want to be in the ED. She is noted to be ‘difficult’ with the nurses.

After several hours she is seen by an emergency medicine resident who finds her very irritable but notes nothing remarkable on her chest or cardiac examination. However, to ensure that pneumonia is ruled out, he orders a chest X-ray. He reviews the patient with his attending, noting that the patient is anxious to return to the psychiatric facility and is only at the ED ‘because she was told to come’. He expresses his view that her symptoms are attributable to her anxiety and that she does not have pneumonia. Nevertheless, he asks the attending to review the chest X-ray to ensure he is not missing something. The attending confirms that there is no evidence of pneumonia and agrees with the resident that the patient can be returned to the psychiatric hospital.

While awaiting transfer back to the psychiatric hospital, the patient requests permission on several occasions to go outside for a cigarette and is allowed to do so. Later, on the sidewalk outside the ED, the patient has a cardiac arrest. She is immediately brought back into the ED but cannot be resuscitated. At autopsy, massive pulmonary saddle emboli are found, as well as multiple small emboli scattered throughout both lungs.

Comment: The patient died following a diagnostic failure. Various cognitive and affective biases are evident. The principal ones are framing, diagnostic momentum, premature diagnostic closure and psych-out error (see ref. 7 for a description of the biases). The patient's demeanour towards staff and the resident may have engendered some antipathy, which may have further compromised decision making.

In this first paper, we discuss how biases are generated, and situations that make physicians more vulnerable to bias. Building upon dual process theories (DPTs) of reasoning,1 3 we discuss the origins of biases, and the theoretical basis of how cognitive debiasing actually works. In the second paper, we summarise the known strategies for cognitive debiasing that have been proposed to counteract different types of biases.

Biases are ‘predictable deviations from rationality’.19 Many of the biases to which diagnosticians are prone can potentially be recognised and corrected. Essentially, this is the process that underlies learning and the refinement of clinical behaviour. We may have acquired an inappropriate response to a particular situation that, in turn, leads to a maladaptive habit. Through feedback or other processes, however, some insight or revelation occurs and we are able to change our thinking to achieve a more successful outcome. The basic premise is that if we can effectively rid our thinking of innate and learned biases, we will be better thinkers and more accurate diagnosticians.

Besides the general vulnerability of the human mind to bias in decision making, it is widely appreciated that the quality of decision making is also influenced by ambient conditions, that is, prevailing conditions in the immediate environment: context, team factors, patient factors, resource limitations, physical plant design and ergonomic factors. Individual factors such as affective state, general fatigue, cognitive load, decision fatigue, interruptions and distractions, sleep deprivation and sleep debt are influential too, as are other individual variables such as personality, intelligence, rationality and gender. Since our judgments are so vulnerable to bias and so many different factors affect decision making, the challenge of developing clinical decision makers who consistently make optimal and reliable decisions appears daunting. Two questions need to be answered. First, can we improve our performance by using cognitive debiasing to repair incorrect judgments made under the influence of bias? This means appropriately alerting the analytical mode to situations in which a bias might arise, so that the bias can be detected and a debiasing intervention applied. Second, can we mitigate the impact of adverse ambient conditions, either by improving conditions in the decision-making environment or by changing the threshold for detecting bias and initiating debiasing strategies?

The origins of cognitive biases

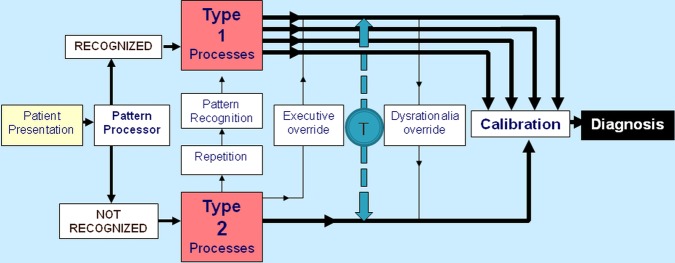

There appears to be a prevailing assumption that all biases are created equal, that all are difficult to overcome and that some common debiasing strategy might work for all of them. However, as Larrick points out, many biases have multiple determinants, and it is unlikely that there is a ‘one-to-one mapping of causes to bias or of bias to cure’;20 nor is it likely that one-shot debiasing interventions will be effective.21 From DPT and other work by cognitive psychologists we know that, although biases can occur in both types of processes, most are associated with heuristics and typically arise in Type 1 (intuitive) processes. Other theories of reasoning exist, but DPT has become the prevailing one, gaining increasing support, including from functional MRI studies.22 DPT has been used as a template for the diagnostic process (figure 1).23

Figure 1.

Dual process model for decision making. From: Croskerry23 (T is the toggle function, which means that the decision maker is able to move back and forth between Type 1 and Type 2 processes).

In the figure, the intuitive system is schematised as Type 1 processes and the analytic system as Type 2 processes. There are eight major features of the model:

1. Type 1 processing is fast and autonomous, and it is where we spend most of our time. It usually works well, but because it occurs largely unconsciously and relies heavily on heuristics, unexamined decision making in the intuitive mode is more prone to bias.

2. Type 2 processing is slower, deliberate and rule based, and takes place under conscious control, which may prevent mistakes.

3. The predictable deviations from rationality that eventually lead to errors tend to occur more frequently in Type 1 processes, in line with findings of dual-process researchers in other domains.24–26

4. Repeated processing of a task in Type 2 may eventually allow it to be handled in Type 1. This is the basis of skill acquisition.

5. Biases that negatively affect judgments, often unconsciously, can be overridden by an explicit effort at reasoning. Type 2 processes can perform an executive override function, which is key to debiasing.

6. Excessive reliance on Type 1 processes can override Type 2, preventing reflection and leading to unexamined decisions; this works against debiasing.

7. The decision maker can toggle (T) back and forth between the two systems, shown as a broken line in figure 1.

8. The brain generally tries to default to Type 1 processing whenever possible.

These operating characteristics have been described in more detail elsewhere.22 23 27 The model does not imply that a single reasoning mode accounts for a diagnostic decision or that one mode is always preferable to the other. Current thinking is that making diagnoses usually involves an interactive combination of intuitive and analytical processing in varying degrees.28 Whereas in some circumstances a high degree of Type 1 processing may work well or even be lifesaving, such as in imminently life-threatening conditions, in others a high degree of reflection (Type 2) may be required. Optimal diagnostic reasoning would appear to be a blend of the two reasoning modes in appropriate doses.22 Further, not all biases originate in Type 1 processing, but when a bias does occur it can only be dealt with by activating Type 2 processing. Thus, a good balance of Type 1 and Type 2 processes is required for well-calibrated performance.

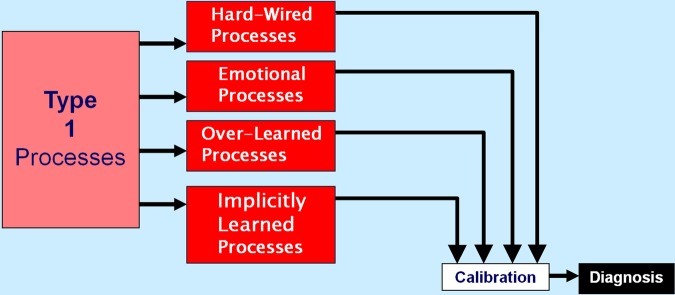

Importantly, the intuitive processes are multiple and varied. Stanovich29 has recently categorised these ‘autonomous’ Type 1 processes according to their origins, and describes four main groups (figure 2).

1. Processes that are hard-wired. These were naturally selected (in the Darwinian sense) in our evolutionary past for their adaptive value. Examples of such ‘innate’ heuristics that may induce biases are the metaheuristics (anchoring and adjustment, representativeness, availability), search satisficing, overconfidence and others.

2. Processes that are regulated by our emotions. These too may be evolved (hard-wired) adaptations; the underlying emotions are commonly grouped into six major categories: happiness, sadness, fear, surprise, anger and disgust.30 Fear of snakes, for example, is present in all cultures. Alternatively, these processes may be socially constructed (acquired, learned) or a combination of the two, that is, hard-wired responses modified by learning, for example, visceral reactions against particular types of patients.31

3. Processes that become firmly embedded in our cognitive and behavioural repertoires through overlearning. These might include explicit cultural and social habits, but also those associated with specific knowledge domains. An example of a bias acquired through repeated exposure might involve a ‘frequent flyer’ in a family doctor's office or in the emergency department, where the bias may be the expectation that no significant new diagnosis will be found.

4. Processes that have developed through implicit learning. It is well recognised that we learn in two fundamental ways: first, through deliberate, explicit learning, such as occurs in school and in formal training, and second, through implicit learning, which occurs without intent or conscious awareness. Implicit learning plays an important role in our skills, perceptions, attitudes and overall behaviour. It allows us to detect and appreciate incidental covariance and complex relationships between things in the environment without necessarily being able to articulate that understanding. Thus, some biases may be acquired unconsciously. Medical students and residents might subtly acquire particular biases simply by spending time in environments where others hold these biases, even though the bias is never deliberately articulated or overtly expressed to them, that is, through the hidden curriculum. Examples might be the acquisition of biases related to age, socioeconomic status, gender, race, psychiatric comorbidity, obesity and others.

Figure 2.

Origins of biases in Type 1 processes. This is a modified section of the dual process model of diagnosis expanding upon the origins of Type 1 processes (based on Stanovich).27

Although Type 1 processes appear to be the most vulnerable to bias and suboptimal decision making, they are not the only source of impaired judgment. Cognitive error may also arise through biases that have become established through inferior strategies or imperfect decision rules. Arkes points out that error due to bias also occurs with Type 2 processes;32 that is, even though the decision maker may be deliberately and analytically applying accepted strategies or rules, those strategies or rules may be flawed. Thus, a poorly chosen initial strategy may lead the decision maker to underestimate or overestimate the likelihood of a diagnosis. Of the two, it would seem preferable to overestimate (as in the forcing strategy ‘rule out worst case scenario’) so that important diagnoses are not missed; however, this can sometimes waste resources. Generally, suboptimal strategies tend to be selected when the stakes are not high.

Situation-dependent biases: An important question is whether there are situations in which biases are more likely. Evidence suggests that certain conditions, such as fatigue, sleep deprivation and cognitive overload, predispose decision makers to using Type 1 processes.33 In addition, specific clinical situations might increase vulnerability to specific biases. Some expose the physician to particular biases, whereas others expose the physician to a wide range of biases. Some common situations are described in table 1.

Table 1.

High-risk situations for biased reasoning

| High-risk situation | Potential biases |
|---|---|
| 1. Was this patient handed off to me from a previous shift? | Diagnosis momentum, framing |
| 2. Was the diagnosis suggested to me by the patient, nurse or another physician? | Premature closure, framing |
| 3. Did I just accept the first diagnosis that came to mind? | Anchoring, availability, search satisficing, premature closure |
| 4. Did I consider other organ systems besides the obvious one? | Anchoring, search satisficing, premature closure |
| 5. Is this a patient I don't like, or like too much, for some reason? | Affective bias |
| 6. Have I been interrupted or distracted while evaluating this patient? | All biases |
| 7. Am I feeling fatigued right now? | All biases |
| 8. Did I sleep poorly last night? | All biases |
| 9. Am I cognitively overloaded or overextended right now? | All biases |
| 10. Am I stereotyping this patient? | Representativeness, affective bias, anchoring, fundamental attribution error, psych-out error |
| 11. Have I effectively ruled out must-not-miss diagnoses? | Overconfidence, anchoring, confirmation bias |

How does debiasing work? Although debiasing is an integral part of everyday living, some people do it better than others. Those who are successful learn the consequences of their actions and take steps to avoid falling into the same thinking traps. Often this can be done using forcing strategies or by deliberately suppressing impulsivity in certain situations. After failing to find our car keys when we are in a hurry, many of us learn the forcing strategy of always putting them in a specific place as soon as we arrive home. In some situations, we can adopt simple, protective forcing rules whenever we are about to do something irreversible, for example, by following the maxim ‘measure twice, cut once’. In other domains, we have learned that it is wise to withhold belief and be sceptical when we are offered deals that are too good to be true, such as an email notifying us that we have just won a large sum of money. Interestingly, greater intelligence does not protect against such follies.29

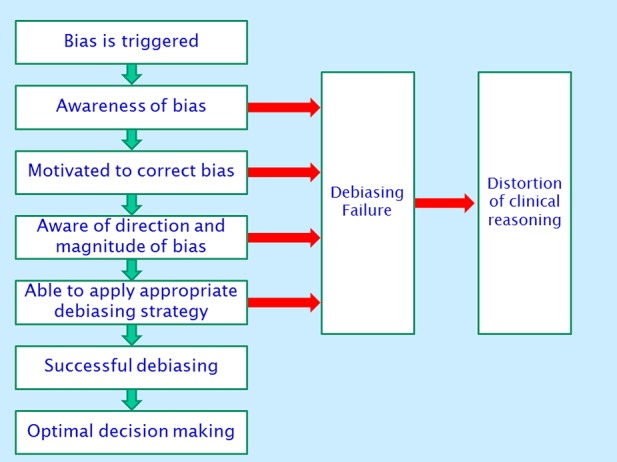

Wilson and Brekke,35 in their extensive review, refer to cognitive bias as ‘mental contamination’ and debiasing as ‘mental correction’. They suggest an algorithmic approach, delineating a series of steps to avoid bias (figure 3). Bazerman sees the first key to debiasing as some disequilibrium in the decision maker, so that the individual wants to move away from a previously established response and change.36 This could come about by the individual simply being informed of a potential bias, by learning that a past judgment has raised the possibility of bias, or by developing insight into the adverse consequences of bias. This critical step may require more than simply becoming aware of the existence of biases and their causes; sometimes a vivid, perhaps emotion-laden experience needs to occur to precipitate cognitive change. The next step involves learning how the change will occur and what alternative strategies need to be learned. Finally, the new approach is incorporated into the cognitive make-up of the decision maker and (with maintenance) becomes part of their regular thinking behaviour. An algorithmic approach has also been proposed by Stanovich and West,37 in which they further delineate the characteristics a decision maker needs in order to inhibit bias. Importantly, the decision maker must (1) be aware of the rules, procedures and strategies (mindware)38 needed to overcome the bias, (2) have the ability to detect the need for bias override, and (3) be cognitively capable of decoupling from the bias. Stanovich has examined the theoretical basis of debiasing in considerable depth.29 He proposes that a critical feature of debiasing is the ability to suppress automatic responses in the intuitive mode by decoupling from it. This is depicted in figure 1 as the executive override function.
The decision maker must be able to use situational cues to detect the need to override the heuristic response and sustain the inhibition of the heuristic response while analysing alternative solutions,37 and must have knowledge of these alternative solutions. These solutions must of course have been learned and previously stored in memory as mindware. Debiasing involves having the appropriate knowledge of solutions and strategic rules to substitute for a heuristic response as well as the thinking dispositions that are able to trigger overrides of Type 1 (heuristic) processing.

Figure 3.

Successive steps in cognitive debiasing (adapted from Wilson and Brekke).35 Green arrows=yes; Red arrows=no

Caveats abound in medical training, and at its completion we are probably at our most cautious because of our lack of experience and high levels of uncertainty. Experience subsequently accumulates but does not guarantee expertise. Nevertheless, many clinicians develop their own debiasing strategies to avoid the predictable pitfalls that they have experienced or have learned about through the experience of others. Morbidity and mortality rounds may be a good opportunity for such vicarious learning, provided that they are carefully and thoughtfully moderated, because such rounds inevitably tend to remove the presented case from its context and make it unduly salient in attendees’ minds, which may hinder rather than improve future judgment.

Although a general pessimism appears to prevail about the feasibility of cognitive debiasing,3 clearly people can change their minds and behaviours for the better. While evidence of debiasing in medicine is lacking, shaping and otherwise modifying our behaviours, extinguishing old habits, and developing new strategies and approaches are features of everyday life. Overall, we are faced with the continual challenge of debiasing our judgments throughout our careers. In our second paper, we review a number of general and specific strategies that have been grouped under the rubric of cognitive debiasing.

Footnotes

Contributors: The article is based on a workshop conducted by the authors during the 2011 Diagnostic Error in Medicine Annual Conference in Chicago. PC conceived the work with substantial contributions from GS and SM. PC wrote the draft of the manuscript, and GS and SM revised it critically for important intellectual content. All authors approved the final version of the manuscript.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

1. Evans JStB, Frankish K. In two minds: dual processes and beyond. Oxford, England: Oxford University Press, 2009

2. Gilovich T, Griffin D, Kahneman D. Heuristics and biases: the psychology of intuitive judgment. Cambridge, UK: Cambridge University Press, 2002

3. Kahneman D. Thinking, fast and slow. Canada: Doubleday, 2011

4. Lakoff G, Johnson M. Philosophy in the flesh: the embodied mind and its challenge to Western thought. New York: Basic Books, 1999

5. Jenicek M. Medical error and harm: understanding, prevention and control. New York: Productivity Press, 2011

6. Croskerry P, Abbass AA, Wu AW. How doctors feel: affective issues in patients’ safety. Lancet 2008;372:1205–6

7. Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med 2003;78:775–80

8. Klein JG. Five pitfalls in decisions about diagnosis and prescribing. BMJ 2005;330:781–3

9. Redelmeier DA. Improving patient care. The cognitive psychology of missed diagnoses. Ann Intern Med 2005;142:115–20

10. Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med 2005;165:1493–9

11. Singh H, Thomas E, Khan MM, et al. Identifying diagnostic errors in primary care using an electronic screening algorithm. Arch Intern Med 2007;167:302–8

12. Mamede S, Schmidt HG, Penaforte JC. Effects of reflective practice on the accuracy of medical diagnoses. Med Educ 2008;42:468–75

13. Mamede S, Schmidt HG, Rikers RM, et al. Conscious thought beats deliberation without attention in diagnostic decision-making: at least when you are an expert. Psychol Res 2010;74:586–92

14. Coderre S, Mandin H, Harasym PH, et al. Diagnostic reasoning strategies and diagnostic success. Med Educ 2003;37:695–703

15. Schmidt HG, Mamede S, van den Berge K, et al. Exposure to media information causes availability bias in medical diagnosis. Submitted 2013

16. Mamede S, van Gog T, van den Berge K, et al. Effect of availability bias and reflective reasoning on diagnostic accuracy among internal medicine residents. JAMA 2010;304:1198–203. doi:10.1001/jama.2010.1276

17. Barger LK, Ayas NT, Cade BE, et al. Impact of extended-duration shifts on medical errors, adverse events, and attentional failures. PLoS Med 2006;3:e487

18. Landrigan CP, Rothschild JM, Cronin JW, et al. Effect of reducing interns’ work hours on serious medical errors in intensive care units. N Engl J Med 2004;351:1838–48

19. Arnott D. Cognitive biases and decision support systems development: a design science approach. Info Systems J 2006;16:55–78

20. Larrick R. Debiasing. In: Koehler D, Harvey N, eds. The Blackwell handbook of judgment and decision making. Oxford: Blackwell Publishing, 2004:316–37

21. Lilienfeld S, Ammirati R. Giving debiasing away. Can psychological research on correcting cognitive errors promote human welfare? Perspect Psychol Sci 2009;4:390–8

22. Croskerry P. Clinical cognition and diagnostic error: applications of a dual process model of reasoning. Adv Health Sci Educ Theory Pract 2009;14(Suppl 1):27–35

23. Croskerry P. A universal model of diagnostic reasoning. Acad Med 2009;84:1022–8

24. Kahneman D. A perspective on judgment and choice: mapping bounded rationality. Am Psychol 2003;58:697–720

25. Evans JS. Dual-processing accounts of reasoning, judgment, and social cognition. Annu Rev Psychol 2008;59:255–78

26. Evans JS, Curtis-Holmes J. Rapid responding increases belief bias: evidence for the dual-process theory of reasoning. Think Reasoning 2005;11:382–9

27. Croskerry P. Context is everything or How could I have been that stupid? Healthcare Quarterly 2009;12:167–73

28. Graber ML, Kissam S, Payne VL, et al. Cognitive interventions to reduce diagnostic error: a narrative review. BMJ Qual Saf 2012;21:535–57

29. Stanovich KE. Rationality and the reflective mind. New York: Oxford University Press, 2011:19–22

30. Ekman P, Friesen W, Ellsworth P. Emotion in the human face: guidelines for research and an integration of findings. New York: Pergamon Press, 1972

31. Groves JE. Taking care of the hateful patient. N Engl J Med 1978;298:883–7

32. Arkes HR. Costs and benefits of judgment errors: implications for de-biasing. Psychol Bull 1991;110:486–98

33. Croskerry P. ED Cognition: any decision by anyone at any time. ED administration series. CJEM In press 2012

34. Graber ML, Singh H, Sorensen A, et al. Checklists to reduce diagnostic error in emergency medicine. AHRQ ACTION 1 Contract Task Order #8 to RTI International: Develop Patient Safety Interventions to Reduce Diagnostic Error in the Ambulatory Setting. AHRQ Contract Number HHSA290200600001I, 2010

35. Wilson TD, Brekke N. Mental contamination and mental correction: unwanted influences on judgments and evaluations. Psychol Bull 1994;116:117–42

36. Bazerman M. Judgment in managerial decision making. 5th edn. New York: Wiley & Sons, 2002

37. Stanovich KE, West RF. On the relative independence of thinking biases and cognitive ability. J Pers Soc Psychol 2008;94:672–95

38. Perkins D. Outsmarting IQ: the emerging science of learnable intelligence. New York: Free Press, 1995