Abstract

Risk is a ubiquitous feature of life. It plays an important role in economic decisions by affecting subjective reward value. Informed decisions require accurate risk information for each choice option. However, risk is often not constant but changes dynamically in the environment. Therefore, risk information should be updated to the current risk level. Potential mechanisms involve error-driven updating, whereby differences between current and predicted risk levels (risk prediction errors) are used to obtain currently accurate risk predictions. As a major reward structure, the orbitofrontal cortex is involved in coding key reward parameters such as reward value and risk. In this study, monkeys viewed different visual stimuli indicating specific levels of risk that deviated from the overall risk predicted by a common earlier stimulus. A group of orbitofrontal neurons displayed a risk signal that tracked the discrepancy between current and predicted risk. Such neuronal signals may be involved in the updating of risk information.

Introduction

Very few things in life are certain. Most of our decisions involve some degree of uncertainty. Even if we know the values of desired objects, we often don't know for sure whether we will obtain them. Our decisions benefit greatly from knowing how certain these objects are. To make reasonable decisions, we appreciate two vital pieces of information, namely the value of each desired object and the uncertainty of obtaining it. More formally, the two main parameters influencing our decisions are the first two statistical moments of reward probability distributions, namely the expected value (anticipated mean) and the variance. The variance and its square root, standard deviation (SD), are commonly referred to as risk. Importantly, these parameters are not constants but vary dynamically in the environment. Therefore, it is important to update our knowledge to the currently valid levels of expected value and risk.

Error-driven mechanisms provide common methods for updating knowledge about important decision variables. For example, reward prediction errors, which capture the discrepancy between current and predicted reward values, are thought to be critical for learning about future reward values (Rescorla and Wagner, 1972; Sutton and Barto, 1981). A similar mechanism may serve to update knowledge about risk. Specifically, a risk prediction error that captures the discrepancy between current and predicted risk may be used to update risk predictions (Preuschoff et al., 2008).

Reward value and risk are encoded by neurons in the orbitofrontal cortex (Thorpe et al., 1983; Tremblay and Schultz, 1999; Hikosaka and Watanabe, 2000; Wallis and Miller, 2003; Roesch and Olson, 2004; Padoa-Schioppa and Assad, 2006; Kepecs et al., 2008; Kennerley et al., 2009, 2011; O'Neill and Schultz, 2010). Moreover, human imaging studies suggest coding of reward value prediction errors in orbitofrontal cortex (O'Doherty et al., 2003; Dreher et al., 2006). These data suggest the presence of error-driven mechanisms in the orbitofrontal cortex. Given the involvement of orbitofrontal cortex in risk processing and the possibility of error-driven mechanisms, we investigated whether single neurons in the orbitofrontal cortex code risk prediction errors. In a simple task, monkeys viewed visual cues that indicated transitions in risk. We identified a population of orbitofrontal neurons that tracked the discrepancies between current and predicted risk, thus displaying a neurophysiological risk prediction error signal.

Materials and Methods

Subjects.

We used two adult male rhesus monkeys (Macaca mulatta), weighing 10–14 kg. The monkeys were implanted, under general anesthesia, with a head holder and a stainless steel chamber on the skull to enable daily electrophysiological recordings from single neurons. All surgical and experimental procedures were performed under a Home Office License according to the United Kingdom Animals (Scientific Procedures) Act 1986.

Behavioral task.

During training and testing, the monkeys were on a restricted water schedule for 6 d of the week, with water available ad libitum for the remaining 24 h. The monkeys were trained to sit in a restraining chair in front of a computer monitor with the head fixed and to perform a memory-guided saccade task. An aperture in the front of the chair provided access to a touch-sensitive key. To commence a trial, the monkey fixated on a red spot in the center of the monitor and contacted the key. After 1.5 s, a visual cue appeared in pseudorandom alternation to either the left or right of the fixation spot for 0.5 s (Fig. 1A). The animal maintained fixation for an additional 2 s before the center spot was extinguished, which was the signal for the monkey to saccade to the left or right cue location. A successful saccade led to the appearance of a red fixation spot at the peripheral location. After fixation for 1 s, the spot turned green, and the animal released the key. Juice reward was delivered 1 s later. The next trial started with the appearance of the central fixation spot at 3.5 s after the reward. Thus, the intertrial interval was 3.5 s, and the total cycle time (trial duration plus intertrial interval) was 10.5 s. For neuronal recordings, only one cue was shown per trial. We assessed the monkey's risk preferences in a subset of behavioral sessions in which the animal chose between two cues (one safe and one risky).

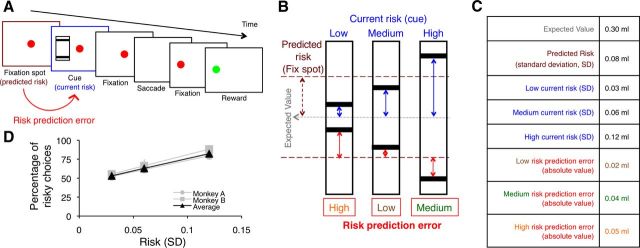

Figure 1.

Risk prediction error. A, B, Task design. An initial ocular fixation spot predicts the overall risk, derived from the overall reward probability distribution (maroon arrow in B). The subsequent appearance of one of the risk cues defines the current risk for each trial. Each risk cue predicts an equiprobable (p = 0.5 each), high or low amount of liquid reward delivered after saccadic eye movement to the cue location. Thus, each risk cue indicates current risk (defined as standard deviation of each specific reward probability distribution) (blue arrows in B). The risk prediction error on any given trial is the specific risk indicated by the risk cue for the current trial minus the predicted, overall risk indicated by the fixation spot common for all trials (red arrows in B). C, Actual measures used. D, Behavioral responses. As risk increased, both monkeys showed an increasing preference for the risky cues compared with the safe cue (which had an outcome that equaled the expected value of the risky cues). Thus, the monkeys showed risk preference. Data points show mean percentage preference. Error bars show SEM.

Stimuli and independent variables.

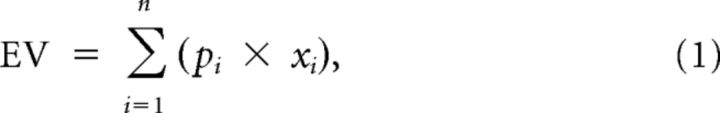

As risk cues, we used black bars on framed, rectangular white backgrounds. The vertical position of the bar indicated juice volume. Two bars within the rectangle indicated that one of two possible juice volumes would be delivered with equal probability (p = 0.5 each), thus explicitly indicating the risk of the outcomes (Fig. 1B). We used three pseudorandomly alternating gambles whose reward volumes were 0.27 and 0.33 ml for gamble 1, 0.24 and 0.36 ml for gamble 2, and 0.18 and 0.42 ml for gamble 3, resulting in the same mathematical expected value (EV; Eq. 1) of 0.3 ml for each gamble (and thus a common EV of 0.3 ml). Only one reward volume was delivered at trial end. The gambles had three different levels of risk (Fig. 1B). We defined risk as SD of a probability distribution with the following:

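$$EV = \sum_{i=1}^{n} p_i x_i \tag{1}$$

$$SD = \sqrt{\sum_{i=1}^{n} p_i (x_i - EV)^2} \tag{2}$$

where n is the number of possible juice volumes (two volumes for current risk at each cue, six volumes for overall risk at the fixation spot), $x_i$ is the ith juice volume, and $p_i$ is its probability (all outcomes being equiprobable, $p_i = 1/n$).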

Using Equation 2, the current risk is defined as the SD of each risk cue, and the predicted risk is defined as the SD of all possible reward outcomes (Fig. 1B,C). We defined risk prediction error as the current risk minus the predicted risk (Eq. 3). Thus, the risk prediction error (RiPE) was calculated as the SD indicated by each risk cue minus the predicted SD indicated by the preceding fixation spot:

$$\text{RiPE} = SD_{\text{current}} - SD_{\text{predicted}} \tag{3}$$

This measure provided three different levels of signed RiPE, as indicated by the red arrows above and below the maroon horizontal dashed line in Figure 1B. The signed RiPEs were correlated with risk because the overall risk prediction, which is subtracted from current risk, was constant for all three gambles. Therefore, we considered unsigned, absolute RiPEs, which were 0.05, 0.02, and 0.04 ml for the low-, medium-, and high-risk cues, respectively (Fig. 1B,C).
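
The risk measures described above can be checked with a short calculation. The following is a minimal sketch, not the authors' analysis code: it assumes that SD is the population SD over equiprobable volumes, and the names `GAMBLES` and `risk_prediction_errors` are introduced here for illustration only.

```python
from statistics import mean, pstdev

# Reward volumes (ml) of the three equiprobable binary gambles given in the text.
GAMBLES = {
    "low": (0.27, 0.33),
    "medium": (0.24, 0.36),
    "high": (0.18, 0.42),
}


def risk_prediction_errors(gambles):
    """Return the predicted risk and, per cue, the EV, current risk, and signed RiPE."""
    # Predicted risk at the fixation spot: population SD over all six volumes.
    all_volumes = [v for pair in gambles.values() for v in pair]
    predicted_risk = pstdev(all_volumes)
    per_cue = {}
    for name, volumes in gambles.items():
        current_risk = pstdev(volumes)  # population SD of the two volumes of this cue
        per_cue[name] = {
            "ev": mean(volumes),
            "risk": current_risk,
            "ripe": current_risk - predicted_risk,  # current minus predicted risk
        }
    return predicted_risk, per_cue


predicted_risk, per_cue = risk_prediction_errors(GAMBLES)
print(f"predicted risk = {predicted_risk:.3f} ml")
for name, values in per_cue.items():
    print(
        f"{name}: EV = {values['ev']:.2f} ml, risk = {values['risk']:.2f} ml, "
        f"RiPE = {values['ripe']:+.3f} ml, |RiPE| = {abs(values['ripe']):.2f} ml"
    )
```

Under these assumptions the predicted risk is about 0.079 ml, and the absolute RiPEs round to the 0.05, 0.02, and 0.04 ml stated in the text. Because the largest absolute error belongs to the low-risk cue, |RiPE| is not monotonic in risk.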

The red fixation spot at trial onset was identical in all trial types and predicted that one of the three possible risk cues would follow. Therefore, the global, predicted risk at the time of the fixation spot was identical in all trials (Fig. 1B, maroon arrow; Eq. 2). The subsequent appearance of the explicit risk cue indicated the specific risk in the current trial (Fig. 1B, blue arrows; Eq. 2). In addition to the explicit risk information, with each risk cue there was a transition from the global, predicted risk at the time of the fixation spot to the specific risk signaled by the explicit risk cue in the current trial (Fig. 1B, red arrows; Eq. 3). Therefore, appearance of a specific cue indicated the risk per se in any given trial and also elicited a RiPE between the risk indicated by that cue and the global risk predicted by the fixation spot. Note that the expected value was constant for all cues. A reward value prediction error is calculated as the difference in reward value from expected value and is thus zero at the time of cue presentation.

However, monkeys displayed subjective preferences for risk, as described before (McCoy and Platt, 2005; O'Neill and Schultz, 2010). Thus, although all outcomes had the same expected objective value, their subjective values varied with risk level. Hence, there was a subjective value prediction error at the time of cue presentation. To assess the subjective values derived from the influence of risk on value, we investigated behavioral choices between a safe cue and each of the risky cues. The safe cue consisted of a single bar that indicated a safe juice volume equal to the expected value of the risky cues (p = 1.0). The identical expected objective values of juice volumes of the safe and the risky cues allowed us to assess the subjective value derived from risk sensitivity without confounding differences in expected objective value. We used two-way ANOVA to assess the monkeys' preferences in choices between safe and risky cues (percentage of choice of the risky over the safe option), with level of risk and monkey as factors. The percentage preference for each of the risky cues compared with the safe cue was taken as a numerical measure of subjective value. Thus, the subjective value prediction error (SVPE) was calculated as the subjective value (SV) of each risk cue minus the predicted subjective value indicated by the preceding fixation spot:

$$\text{SVPE} = SV_{\text{current}} - SV_{\text{predicted}} \tag{4}$$

The predicted SV at the fixation spot was the numerical average of the subjective values of the three risk cues as assessed by the respective behavioral preferences. The current SV was derived from the numerical preference measure (percentage) for each risk cue. Because we were interested in comparing the neuronal responses to the unsigned, absolute RiPEs, we considered the absolute SVPEs, which were 10, 0, and 18% for the low-, medium-, and high-risk cues, respectively.

Neuronal recording and data analysis.

We isolated and recorded the activity of single neurons in the orbitofrontal cortex while monkeys performed the task, according to procedures described previously (O'Neill and Schultz, 2010). In the first step of analysis, we defined the presence of cue-related neuronal responses by the Wilcoxon test, which compared neuronal activity during a period of 0.1–0.6 s following cue onset against a control period of 1.0 s before the fixation spot. In the second step, we performed a multiple linear regression analysis on the cue responses identified by the Wilcoxon test.
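
The first analysis step can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the text specifies only a "Wilcoxon test", so a paired signed-rank test across trials with a normal approximation is assumed here, and the trial layout (`spikes`, `fix_onset`, times in seconds relative to cue onset) and the synthetic spike trains are hypothetical.

```python
import math

CUE_WINDOW = (0.1, 0.6)   # s after cue onset (from the text)
CONTROL_DURATION = 1.0    # s before fixation-spot onset (from the text)


def rate_in_window(spike_times, start, stop):
    """Firing rate (spikes/s) in the half-open window [start, stop)."""
    count = sum(1 for t in spike_times if start <= t < stop)
    return count / (stop - start)


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x, y):
    """Two-sided paired test; normal approximation without tie or continuity correction."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean_w) / sd_w
    return w_plus, math.erfc(abs(z) / math.sqrt(2))


def make_trial(k, fix_onset=-2.0):
    """Hypothetical trial: evenly spaced spikes in the control and cue windows."""
    n_control, n_cue = 1 + k % 3, 3 + k % 4
    control = [fix_onset - CONTROL_DURATION + (j + 0.5) / n_control for j in range(n_control)]
    cue = [CUE_WINDOW[0] + 0.5 * (j + 0.5) / n_cue for j in range(n_cue)]
    return {"spikes": control + cue, "fix_onset": fix_onset}


trials = [make_trial(k) for k in range(10)]
cue_rates = [rate_in_window(t["spikes"], *CUE_WINDOW) for t in trials]
control_rates = [
    rate_in_window(t["spikes"], t["fix_onset"] - CONTROL_DURATION, t["fix_onset"])
    for t in trials
]
w_plus, p_value = wilcoxon_signed_rank(cue_rates, control_rates)
print(f"W+ = {w_plus}, p = {p_value:.4f}")
```

Converting spike counts to rates matters here because the cue window (0.5 s) and the control period (1.0 s) differ in duration. For small trial numbers, an exact test would be preferable to the normal approximation used in this sketch.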

The cues indicated both the RiPE and the risk per se on each trial. Therefore, both these terms were included in a multiple regression model used to assess the relationship of the cue responses to each of these variables:

$$Y = \beta_0 + \beta_1 |\text{RiPE}| + \beta_2\,\text{risk} + e \tag{5}$$

where Y is the neuronal firing rate; |RiPE| is the unsigned, absolute risk prediction error; risk is the current risk (SD) indicated by the cue; β1 and β2 are the corresponding regression coefficients; β0 is the intercept; and e is error. Note that because of the risk-seeking attitude of our animals (Fig. 1D), risk covaried with subjective value, neither of which was of primary interest for this study. However, the prediction errors in these variables might correlate. Therefore, we disambiguated RiPE from SVPE as described below (Eqs. 7, 8).

To confirm the capacity of RiPE for explaining variance of neuronal activity in addition to that accounted for by the risk regressor of Equation 5, we used a hierarchical approach in which we compared the full regression model against a reduced model with the F test (Snedecor and Cochran, 1989). Thus, we compared Equation 5 with the reduced model:

$$Y = \beta_0 + \beta_2\,\text{risk} + e \tag{6}$$

For comparisons between different regressors, we normalized their slopes (β) and calculated the standardized regression coefficient (SRC) for the ith regressor $x_i$ as $a_i \times s_i / s_y$, with $a_i$ as the original slope regression coefficient (β) and $s_i$ and $s_y$ as the SDs of $x_i$ and the dependent variable $y$. To quantify the extent to which the regressors accounted for the variance of the neuronal data, we used the coefficient of partial determination (CPD).
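
The hierarchical comparison and the standardized coefficients can be illustrated with a small, self-contained sketch. It is not the authors' code. The firing rates are synthetic, the helper names `ols_fit` and `nested_f` are hypothetical, and the CPD is assumed to follow its standard definition: the reduction in residual sum of squares from adding a regressor, divided by the residual sum of squares of the model without it.

```python
from statistics import pstdev


def ols_fit(X, y):
    """Ordinary least squares via the normal equations; returns (betas, residual SS)."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    M = [
        [sum(row[i] * row[j] for row in X) for j in range(k)]
        + [sum(row[i] * yi for row, yi in zip(X, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(k):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    beta = [M[i][k] / M[i][i] for i in range(k)]
    rss = sum(
        (yi - sum(b * x for b, x in zip(beta, row))) ** 2 for row, yi in zip(X, y)
    )
    return beta, rss


def nested_f(rss_reduced, rss_full, p_reduced, p_full, n):
    """F statistic for a full model against a nested reduced model."""
    return ((rss_reduced - rss_full) / (p_full - p_reduced)) / (rss_full / (n - p_full))


# Regressor values per cue (ml), taken from the text.
ABS_RIPE = {"low": 0.05, "medium": 0.02, "high": 0.04}
RISK = {"low": 0.03, "medium": 0.06, "high": 0.12}

# Synthetic firing rates (hypothetical): 5 + 100*|RiPE| + 20*risk + balanced noise.
rows_full, rows_reduced, rates = [], [], []
for cue in ("low", "medium", "high"):
    for eps in (1.0, -1.0, 0.5, -0.5):
        rows_full.append([1.0, ABS_RIPE[cue], RISK[cue]])  # full model (Eq. 5)
        rows_reduced.append([1.0, RISK[cue]])               # reduced model (Eq. 6)
        rates.append(5.0 + 100.0 * ABS_RIPE[cue] + 20.0 * RISK[cue] + eps)

beta_full, rss_full = ols_fit(rows_full, rates)
beta_reduced, rss_reduced = ols_fit(rows_reduced, rates)
f_stat = nested_f(rss_reduced, rss_full, 2, 3, len(rates))
cpd_ripe = (rss_reduced - rss_full) / rss_reduced

# Standardized regression coefficients: slope * SD(regressor) / SD(firing rate).
s_y = pstdev(rates)
src_ripe = beta_full[1] * pstdev([row[1] for row in rows_full]) / s_y
src_risk = beta_full[2] * pstdev([row[2] for row in rows_full]) / s_y

print(f"F(1,{len(rates) - 3}) = {f_stat:.2f}, CPD(|RiPE|) = {cpd_ripe:.2f}")
print(f"SRC(|RiPE|) = {src_ripe:.2f}, SRC(risk) = {src_risk:.2f}")
```

The F statistic would be referred to an F distribution with (1, n − 3) degrees of freedom to obtain a p value, which this sketch does not compute. With three cues, the full model with an intercept and two regressors already reproduces the three condition means, which is why a third regressor cannot be added.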

To assess the potential influence of SVPE, we performed separate single linear regressions using SVPE and RiPE as regressors, as adding SVPE to Equation 5 would exceed the number of trial types allowed in the configuration of our multiple regression model.

$$Y = \beta_0 + \beta_1 |\text{RiPE}| + e \tag{7}$$

$$Y = \beta_0 + \beta_1 |\text{SVPE}| + e \tag{8}$$

where |SVPE| indicates the unsigned, absolute SVPE. Comparisons of the r² between these two regressions served to assess whether the |RiPE| captured more of the variance in the data than the |SVPE|.

Results

We recorded the extracellular activity of 242 single neurons in the orbitofrontal cortex during task performance. Of these, 180 neurons (74%) responded significantly to the cues (p < 0.05, Wilcoxon test).

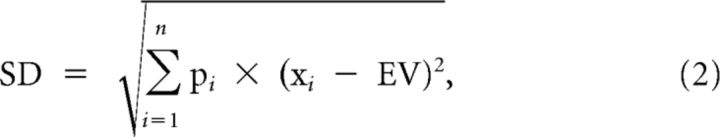

The multiple regression analysis (Eq. 5) revealed that the cue responses of 33 of 180 neurons (18%) coded the unsigned, absolute RiPE (all p < 0.05), with 15 of 33 showing significant positive and 18 of 33 showing significant negative correlation coefficients (Fig. 2A–C, left and right, respectively). The hierarchical regression analysis revealed that RiPE explained additional variance after risk was taken into account in 30 of the 33 neurons identified by Equation 5 (p ≤ 0.05; F test on Eq. 5 vs Eq. 6; remaining three neurons, p ≤ 0.06). The standardized regression coefficients were significantly higher for RiPE than for risk in the 15 neurons with positive coefficients (Fig. 2D, left; F(1,28) = 5.31, p = 0.03, one-way ANOVA) and lower in the 18 neurons with negative coefficients (Fig. 2D, right; F(1,34) = 48.36, p < 0.001, one-way ANOVA).

Figure 2.

Orbitofrontal neurons code risk prediction error. A, Single neuron examples. Smoothed histograms show responses from two example neurons coding risk prediction error with positive slope (left) and negative slope (right). B, Population responses. Smoothed histograms show averaged population responses from all neurons coding risk prediction error with positive slopes (left) and negative slopes (right). Gray bars indicate the time windows for analysis. C, Regressions on risk prediction error. Data points show mean firing rates averaged from all neurons during the shaded periods in B. Error bars show SEM. D, SRCs from linear regressions on neurons with significant positive betas (left) and negative betas (right) for RiPE at cue presentation. E, Scatterplot of CPDs for neurons with significant correlation coefficients for risk prediction error (red dots), risk (blue dots), and both (green dots) at cue presentation (left). R² values from single linear regressions with risk prediction error and subjective value prediction errors as regressors (right).

Of the 180 neurons with cue responses, 23 coded only RiPE, 42 coded only risk per se, and 10 coded both RiPE and risk (p < 0.05). A χ² test failed to detect a significant difference between the likelihood of a RiPE-coding neuron to code risk compared with any task-related neuron coding risk (χ² = 0.127, p = 0.721), suggesting that risk coding did not occur preferentially in RiPE-coding neurons. In addition, the amount of variance explained was not correlated between RiPE and risk (Fig. 2E, left). Thus, RiPE coding was mostly distinct from risk coding.

To control for subjective value prediction error (SVPE) coding, we derived a measure of subjective value for the three risky cues from the monkeys' behavioral preferences (Fig. 1D). The monkeys preferred the risky option more as the risk increased (main effect of risk: F(2,51) = 28.02, p < 0.001, two-way ANOVA), and this effect was not statistically different between the two monkeys (main effect of monkey: F(1,51) = 2.87, p = 0.1, n.s.; risk × monkey interaction: F(2,51) = 0.2, n.s.). Therefore, we averaged the monkeys' preference ratings (Fig. 1D, black triangles) to calculate the SVPE (Eq. 4). The variance in the neuronal data was better accounted for by a RiPE than by an SVPE in the regressions (Fig. 2E, right; p = 0.02, Wilcoxon signed-rank test between r² derived from Eqs. 7 and 8). Thus, RiPE coding was not explained by SVPE coding in this neuronal population.

As an additional test on the suitability of a linear regression analysis on our data of neuronal firing rates, we log transformed the data from all neurons and re-ran the full analysis. This resulted in 31 of 180 neurons (17%) with significant regression coefficients for RiPE at cue presentation, comparable with the results from the analysis on the raw data.

The distribution of the 33 orbitofrontal neurons with cue-related RiPE responses was not significantly different between orbitofrontal areas 11 (14 of 83 neurons), 12 (2 of 5 neurons), 13 (15 of 88 neurons), and 14 (2 of 4 neurons) (χ² = 4.5, p = 0.216, χ² test).
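
The regional comparison can be reproduced from the reported counts. The following is a minimal sketch that assumes a Pearson χ² test on the 2 × 4 table of RiPE-coding versus other cue-responsive neurons per area. The function names are hypothetical, and the p value uses the closed-form upper tail of the χ² distribution, which is valid only for 3 degrees of freedom.

```python
import math

# Counts from the text: (RiPE-coding neurons, cue-responsive neurons) per area.
AREAS = {"11": (14, 83), "12": (2, 5), "13": (15, 88), "14": (2, 4)}


def chi_square_2xk(counts):
    """Pearson chi-square for a 2 x k table given (coding, total) counts per group."""
    coding_total = sum(coding for coding, _ in counts.values())
    grand_total = sum(total for _, total in counts.values())
    rate = coding_total / grand_total  # pooled proportion of coding neurons
    chi2 = 0.0
    for coding, total in counts.values():
        cells = ((coding, total * rate), (total - coding, total * (1 - rate)))
        for observed, expected in cells:
            chi2 += (observed - expected) ** 2 / expected
    return chi2, len(counts) - 1


def chi2_sf_df3(x):
    """Upper-tail probability of the chi-square distribution (3 df only)."""
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


chi2, dof = chi_square_2xk(AREAS)
p_value = chi2_sf_df3(chi2)
print(f"chi2({dof}) = {chi2:.2f}, p = {p_value:.3f}")
```

Under these assumptions the statistic is about 4.46 with 3 degrees of freedom and the p value is about 0.216, in line with the reported values.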

Discussion

This study investigated the neurophysiological coding of RiPEs, as defined by the difference between the predicted risk and the current risk. A group of orbitofrontal neurons coded the unsigned, absolute RiPE with a positive or negative slope. This error coding was mostly distinct from risk coding per se and subjective value coding.

Prediction error is a general term that can be derived from any predictable variable. It is defined as the difference between the current measure and the predicted measure. For example, reward value prediction error is defined as current reward value minus predicted reward value and constitutes a crucial component in reinforcement learning (Rescorla and Wagner, 1972). In analogy, RiPE is defined as current risk minus predicted risk, as used here and in previous studies (Preuschoff et al., 2008; d'Acremont et al., 2009). This definition allows calculation of RiPE at the cue and the outcome, as both events are preceded by well defined levels of risk prediction. This definition follows that of previous studies that also used binary gambles (Preuschoff et al., 2008; d'Acremont et al., 2009). The previous studies derived risk from value prediction error, whereas we calculated the SD directly. Although the calculations for deriving risk differ between the previous studies and ours, in binary gambles these two measures are numerically identical (Preuschoff et al., 2008, their Table S1). Therefore, with either approach, the difference between the current and the predicted risk, the RiPE, is equivalent. In our experiment, RiPEs calculated in this way amount to zero at reward outcome. Therefore, we were only able to test for RiPEs at cue presentation.

Investigations of neuronal risk processing have revealed the involvement of several brain structures. Studies defining risk as statistical variance or SD have identified risk processing in frontal cortex, parietal cortex, cingulate cortex, striatum, amygdala, and insula (Sanfey et al., 2003; Hsu et al., 2005; McCoy and Platt, 2005; Huettel et al., 2006; Kepecs et al., 2008; Preuschoff et al., 2008; Christopoulos et al., 2009; Tobler et al., 2009; O'Neill and Schultz, 2010). In addition, activity in the human insula correlates with RiPEs (Preuschoff et al., 2008; d'Acremont et al., 2009). Together with our findings, these data suggest that the orbitofrontal cortex and the insula are involved in processing both risk per se and deviations from predicted risk. In addition, the orbitofrontal cortex is involved in encoding deviations from expected reward value (value prediction errors; O'Doherty et al., 2003; Dreher et al., 2006). These findings suggest an important role of orbitofrontal cortex in updating the key variables of reward probability distributions, namely expected value and risk.

Prediction errors can be signed or unsigned. Signed prediction errors are positive for greater-than-predicted outcomes and negative for less-than-predicted outcomes. In reinforcement learning about reward value, signed prediction errors serve for updating the value function. In contrast, unsigned, absolute value prediction errors serve for adjusting the value learning coefficient in the associability learning rules (Mackintosh, 1975; Pearce and Hall, 1980). These distinct roles are a direct consequence of the prediction errors being signed or unsigned; signed value prediction errors signal that values are less than or greater than predicted, whereas unsigned prediction errors simply track the difference from prediction regardless of whether it is greater than or less than predicted. Similar roles may hold for risk; signed RiPEs could be involved in the main process of risk updating (Preuschoff et al., 2008; d'Acremont et al., 2009). In contrast, the currently reported unsigned RiPE signal in orbitofrontal neurons could serve to set the coefficient of error-driven risk learning to adjust or modulate the main process of updating risk information, analogous to unsigned value prediction errors. Thus, an organism conceivably would learn most efficiently and flexibly about risks by using different forms of RiPE signals. These signals may be coded within the same brain area or in different areas, such as the insular (Preuschoff et al., 2008; d'Acremont et al., 2009) and orbitofrontal cortex.

Footnotes

This work was supported by the Wellcome Trust, the European Research Council, the Behavioural and Clinical Neuroscience Institute Cambridge, and the Human Frontier Science Program. We thank Peter Bossaerts, Armin Lak, and William Stauffer for discussions and Mercedes Arroyo for technical support.

The authors declare no competing financial interests.

References

- Christopoulos GI, Tobler PN, Bossaerts P, Dolan RJ, Schultz W. Neural correlates of value, risk, and risk aversion contributing to decision making under risk. J Neurosci. 2009;29:12574–12583. doi: 10.1523/JNEUROSCI.2614-09.2009.

- d'Acremont M, Lu ZL, Li X, Van der Linden M, Bechara A. Neural correlates of risk prediction error during reinforcement learning in humans. Neuroimage. 2009;47:1929–1939. doi: 10.1016/j.neuroimage.2009.04.096.

- Dreher JC, Kohn P, Berman KF. Neural coding of distinct statistical properties of reward information in humans. Cereb Cortex. 2006;16:561–573. doi: 10.1093/cercor/bhj004.

- Hikosaka K, Watanabe M. Delay activity of orbital and lateral prefrontal neurons of the monkey varying with different rewards. Cereb Cortex. 2000;10:263–271. doi: 10.1093/cercor/10.3.263.

- Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF. Neural systems responding to degrees of uncertainty in human decision-making. Science. 2005;310:1680–1683. doi: 10.1126/science.1115327.

- Huettel SA, Stowe CJ, Gordon EM, Warner BT, Platt ML. Neural signatures of economic preferences for risk and ambiguity. Neuron. 2006;49:765–775. doi: 10.1016/j.neuron.2006.01.024.

- Kennerley SW, Dahmubed AF, Lara AH, Wallis JD. Neurons in the frontal lobe encode the value of multiple decision variables. J Cogn Neurosci. 2009;21:1162–1178. doi: 10.1162/jocn.2009.21100.

- Kennerley SW, Behrens TE, Wallis JD. Double dissociation of value computations in orbitofrontal and anterior cingulate neurons. Nat Neurosci. 2011;14:1581–1589. doi: 10.1038/nn.2961.

- Kepecs A, Uchida N, Zariwala HA, Mainen ZF. Neural correlates, computation and behavioural impact of decision confidence. Nature. 2008;455:227–231. doi: 10.1038/nature07200.

- Mackintosh NJ. A theory of attention: variations in the associability of stimuli with reinforcement. Psychol Rev. 1975;82:276–298. doi: 10.1037/h0076778.

- McCoy AN, Platt ML. Risk-sensitive neurons in macaque posterior cingulate cortex. Nat Neurosci. 2005;8:1220–1227. doi: 10.1038/nn1523.

- O'Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi: 10.1016/S0896-6273(03)00169-7.

- O'Neill M, Schultz W. Coding of reward risk by orbitofrontal neurons is mostly distinct from coding of reward value. Neuron. 2010;68:789–800. doi: 10.1016/j.neuron.2010.09.031.

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Pearce JM, Hall G. A model for Pavlovian learning: variations in the effectiveness of conditioned but not of unconditioned stimuli. Psychol Rev. 1980;87:532–552. doi: 10.1037/0033-295X.87.6.532.

- Preuschoff K, Quartz SR, Bossaerts P. Human insula activation reflects risk prediction errors as well as risk. J Neurosci. 2008;28:2745–2752. doi: 10.1523/JNEUROSCI.4286-07.2008.

- Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: variations in the effectiveness of reinforcement and nonreinforcement. In: Black AH, Prokasy WF, editors. Classical conditioning, Vol II, Current research and theory. New York: Appleton-Century-Crofts; 1972. pp. 64–99.

- Roesch MR, Olson CR. Neuronal activity related to reward value and motivation in primate frontal cortex. Science. 2004;304:307–310. doi: 10.1126/science.1093223.

- Sanfey AG, Hastie R, Colvin MK, Grafman J. Phineas gauged: decision-making and the human prefrontal cortex. Neuropsychologia. 2003;41:1218–1229. doi: 10.1016/S0028-3932(03)00039-3.

- Snedecor GW, Cochran WG. Statistical methods. Ed. 8. Ames, IA: Iowa State UP; 1989. Extension of the analysis of variance. Chap 17.4.

- Sutton RS, Barto AG. Toward a modern theory of adaptive networks–expectation and prediction. Psychol Rev. 1981;88:135–170. doi: 10.1037/0033-295X.88.2.135.

- Thorpe SJ, Rolls ET, Maddison S. The orbitofrontal cortex: neuronal activity in the behaving monkey. Exp Brain Res. 1983;49:93–115. doi: 10.1007/BF00235545.

- Tobler PN, Christopoulos GI, O'Doherty JP, Dolan RJ, Schultz W. Risk-dependent reward value signal in human prefrontal cortex. Proc Natl Acad Sci U S A. 2009;106:7185–7190. doi: 10.1073/pnas.0809599106.

- Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- Wallis JD, Miller EK. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. Eur J Neurosci. 2003;18:2069–2081. doi: 10.1046/j.1460-9568.2003.02922.x.