Abstract

Context

“Meaningful use” of electronic health records to improve quality of care has remained understudied. We evaluated an approach to improving patients’ safety and quality of care involving the secondary use of data from a hospital electronic prescribing and decision support system (ePDSS).

Methods

We conducted a case study of a large English acute care hospital with a well-established ePDSS. Our study was based on ethnographic observations of clinical settings (162 hours) and meetings (28 hours), informal conversations with clinical staff, semistructured interviews with ten senior executives, and the collection of relevant documents. Our data analysis was based on the constant comparative method.

Findings

This hospital's approach to quality and safety could be characterized as “technovigilance.” It involved treating the ePDSS as a warehouse of data on clinical activity and performance. The hospital converted the secondary data into intelligence about the performance of individuals, teams, and clinical services and used this as the basis of action for improvement. Through a combination of rapid audit, feedback to clinical teams, detailed and critical review of apparent omissions in executive-led meetings, a focus on personal professional responsibility for patients’ safety and quality care, and the correction of organizational or systems defects, technovigilance was—based on the hospital's own evidence—highly effective in improving specific indicators. Measures such as the rate of omitted doses of medication showed marked improvement. As do most interventions, however, technovigilance also had unintended consequences. These included the risk of focusing attention on aspects of patient safety made visible by the system at the expense of other, less measurable but nonetheless important, concerns.

Conclusions

The secondary use of electronic data can be effective for improving specific indicators of care if accompanied by a range of interventions to ensure proper interpretation and appropriate action. But care is needed to avoid unintended consequences.

Keywords: electronic health records, meaningful use, patient safety, quality of care, ethnography, medication errors

If one consistent message has emerged from the literature on improving quality and safety in health care, it is that high-quality intelligence is indispensable (Pronovost, Miller, and Wachter 2007; Wachter 2012). Without good evidence about how well systems and individuals are functioning, organizations may be misled as to the quality of their services, fail to recognize and reward excellence where it exists, or miss opportunities to identify and remedy weaknesses (Francis 2010). Yet producing intelligence that is credible, timely (Bradley et al. 2004; Pronovost et al. 2004), close to the point of care (Weiner et al. 2005), and actionable (Szent-Gyorgyi et al. 2011; Whippy et al. 2011) continues to pose frustrating challenges. Some challenges relate to the practical difficulties of obtaining good-quality data; others relate to how data can best be turned into intelligence and then used to inform effective action.

One important challenge relates to the burden of data collection for assessing performance or identifying potential defects in care. Traditional clinical audits, whether local or national, continue to be important but suffer from many limitations. For example, chart review is expensive, time-consuming, and dependent on specialist skills that are in short supply (Stanfill et al. 2010). Local organizations often struggle to set up high-quality data collection systems (Dixon-Woods et al. 2012), and feedback from national audits or other large-scale data collection efforts may lag so far behind practice that its value in influencing change is diminished (Bradley et al. 2004). Administrative data are rarely satisfactory for purposes of monitoring quality and safety (Donaldson and Darzi 2012; Sinha et al. 2012), and the validity of “data for improvement” collected as part of quality improvement cycles is often dubious (Benn et al. 2009).

The secondary use of electronic health records (EHRs) to generate information on quality and safety offers a promising means of addressing at least some of these problems (Szent-Gyorgyi et al. 2011). The primary purpose of EHRs is recording information about the care of individual patients, though they are increasingly complemented by prescribing and decision-support software. To date, much research has focused on evaluating the primary uses of information technology (IT), with studies examining, for instance, how well IT can increase productivity through efficiency gains (Jones et al. 2012) and the effectiveness of its direct impacts on clinical decisions and actions (Huckvale et al. 2010). The evidence remains equivocal (Black et al. 2011) on these issues, and they are not the focus of this article. Instead, we concentrate on secondary use.

The potential for the secondary use of routinely collected electronic data to facilitate the evaluation and enhancement of system performance and professional practice (Ash et al. 2012; Friedman, Wong, and Blumenthal 2010; Weiner, Fowles, and Chan 2012) while at the same time improving timeliness and reducing the effort associated with data capture has attracted increasing interest (Glance et al. 2011). Recent reports have identified the possibilities of using electronic data to track patient safety and quality of care in organizations (Institute of Medicine Committee on Patient Safety and Health Information Technology 2011). Interest has been stimulated as well in the United States by the Health Information Technology for Economic and Clinical Health Act, which mandates the “meaningful use” of EHRs, defined as use by providers to achieve significant improvements in care (Blumenthal and Tavenner 2010; Jones et al. 2011). Research on secondary use has thus far focused mainly on how it can be used for research purposes (Sittig et al. 2012) and public health surveillance (Safran et al. 2007), as well as on technical questions such as those relating to data validity and interoperability (Chan, Fowles, and Weiner 2010; Kern et al. 2013; Parsons et al. 2012; Weiner, Fowles, and Chan 2012). Evaluations of specific models of “meaningful use” for purposes of improving quality and safety of care in health care organizations remain relatively rare, however. What is clear is that such models must recognize the social complexities of information and communication technologies in health care organizations (Greenhalgh et al. 2009; Takian et al. 2012).

In this article, we report on an ethnographic case study of a large National Health Service (NHS) acute care hospital in England (referred to here as “Rayburn”) that made the secondary use of data from its electronic prescribing and decision-support system (ePDSS; see box 1) a key part of its strategy for ensuring and improving quality and safety.

BOX 1 History and Features of the Electronic Prescribing and Decision Support System (ePDSS) at Rayburn Hospital

The ePDSS was developed in-house over several years, beginning in 1999.

It gradually spread throughout all clinical areas in the hospital (except the emergency department, where paper-based prescribing was still being used while a new IT system was introduced).

The primary function of the ePDSS is to provide an electronic prescribing, clinical decision support, and alerting system.

It is used in all inpatient wards, covering approximately 1,200 inpatient beds, and for all inpatient prescriptions except some chemotherapy regimens that were still being developed at the time of our study.

The system is accessed by clinical staff (around 600 doctors, 1,600 nurses, and 300 other health care professionals such as pharmacists and dieticians) using passwords, allowing records to be maintained of each action taken on the system and of who undertook the action.

Around 125,000 drug administrations each week (mostly by nurses) are recorded, with the time and date of administration automatically recorded when staff click “administered” on the system to show the drug has been given to the patient.

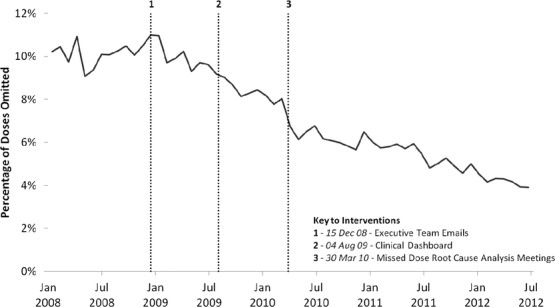

The case study hospital reported high performance across a number of domains, and one particularly remarkable improvement was a substantial and sustained reduction in the number of missed doses of prescribed medicines (figure 1). Medicines that are prescribed but never delivered to patients, or are delivered late, are an important source of medication-related harm in hospitals (Coleman, McDowell, and Ferner 2012). The UK's National Patient Safety Agency (NPSA) received reports of 27 deaths, 68 severe injuries, and 21,383 patient safety incidents related to omitted or delayed medicines in the UK between 2006 and 2009 (National Patient Safety Agency 2010). Many of these were doses that were prescribed but not administered (as distinct from those that should have been prescribed but were not). Particularly serious are delays or omissions of antibiotics, which are implicated in poor outcomes of sepsis (Kumar et al. 2006). The problem has proved hard to monitor, however. Most UK hospitals continue to use paper-based prescribing and medical notes, so calculating rates of omitted doses has traditionally required laborious manual review of patients’ records (Warne et al. 2010). The challenges associated with generating data on missed doses are thus a good example of the problems associated with traditional chart-based audits when real-time intelligence is needed and when “meaningful use” might offer some opportunities for improvement.

FIGURE 1.

Antibiotic dose omission rates at Rayburn Hospital, plotted at four-week intervals.

Source: Data from ePDSS at Rayburn Hospital.

The case study hospital sought to improve the rate of missed doses and other areas using an approach that we termed “technovigilance,” which drew extensively on the audit capability of its ePDSS and combined it with an emphasis on personal accountability and organizational systems improvement. Consistent with good practice in the evaluation of improvement interventions (Shojania and Grimshaw 2005), in this article we describe this approach, explore how it worked in practice, and characterize the mechanisms by which it worked. We also tried to identify the unanticipated consequences of technovigilance, given evidence that improvement interventions may have inadvertent adverse effects (Huskins et al. 2011; Morgan et al. 2009; Weiner, Fowles, and Chan 2012).

Methods

Our study design was a case study (Yin 2009) in which the hospital was the unit of analysis. Ethnographic methods are especially well suited to research questions like ours (Greenhalgh and Swinglehurst 2011). We spent 162 hours in ethnographic observation, recorded as field notes, in four clinical areas (two wards, the pharmacy, and a specialist clinical unit within the hospital). These settings were chosen to represent a range of environments that provided both acute and complex care. We did not conduct formal recorded interviews with clinical and ward-level staff, as our early observations showed that it was difficult for them to schedule time during working hours to participate. Instead, we held (with their permission) informal conversations with front-line staff whom we encountered during our ethnographic observations. To this extent, these staff were not intentionally sampled but instead were included in the study because of their relevance to the areas under study. Informal conversations were recorded as field notes. Cooperation with the researchers during ethnographic observations was very high, characterized by the ward staff's willingness to be “shadowed” by researchers and to answer detailed questions. Staff did not refuse any researcher's requests to observe or to talk informally.

We conducted ten semistructured interviews with hospital senior executives, including all executive members of the board and those executives with senior clinical or operational responsibility within the hospital. We refer to these executives collectively as “the executive team.” These interviews were recorded using a digital recorder; they were fully transcribed, and the interviewees’ names were deleted. We also observed five care omission meetings and eight additional meetings (28 hours) relating to the ePDSS. Relevant documents, including nonconfidential in-house policies and external hospital reports, also were collected.

We based our data analysis on the constant comparative method (Glaser and Strauss 1967), revising, expanding, and collapsing the initial open codes through detailed analysis and eventually organizing them into categories in a coding scheme. After discussing these with the project team, we then used them to build a theoretically informed interpretation. Coding was facilitated by NVivo software. Myles Leslie did the coding, and Mary Dixon-Woods verified it. Extracts from the interviews and field notes are presented in the findings to support the analysis. In order to preserve anonymity, some identifying details have been altered.

The research was led and coordinated by a team outside the hospital. Researchers independent of those who introduced the ePDSS conducted the data collection and analysis. One of the attending physicians involved in system development participated in the research to help identify suitable locations for data collection, secure access, clarify issues of context, and aid interpretation of the results. An NHS Research Ethics Committee (REC) gave the study a favorable opinion, and NHS research governance approval was obtained. Patient and staff information sheets were distributed on the wards and clinical areas before and during the fieldwork, and posters relating to the study were displayed on notice boards. Participation in the study was voluntary, and participants could decline or withdraw at any time. Signed consent was obtained from staff who were interviewed, and verbal permission for observing activities was obtained.

Findings

Our analysis suggested that technovigilance at Rayburn emerged over time and came to display a set of distinctive features (box 2). The hospital relied on the secondary use of electronic data to provide information on a wide range of quality indicators. This information was made available to both executive and clinical staff and was, combined with close surveillance of individual behavior, sufficient to secure substantial changes in some areas. Some issues, however, including that of missed doses, proved less tractable to an information-and-feedback approach. For these problems, technovigilance was escalated to include carefully strategized and choreographed meetings led by the executive team to call clinical teams to account and to take action through reinforcing personal accountability and/or improving organizational systems.

BOX 2 What Did Technovigilance Involve at the Case Study Hospital?

Technovigilance at Rayburn Hospital involved the purposive secondary use of electronic health records to generate intelligence on clinical performance combined with focused intervention to understand and address identified problems with organizational systems and/or to improve personal accountability.

Technovigilance's surveillance strategies included

- Rapid (and some instant) audit of quality indicators.

- Monitoring care processes.

- Monitoring individual performance.

- Complementing electronically generated data with other forms of intelligence, including letters from patients and/or caregivers, and ePDSS user forums.

And its interventions included

- Regular feedback of performance against indicators to clinical teams, with requests when appropriate to make improvements.

- Dashboard displays specific to clinical areas.

- Identification, support, and remedial action for individuals whose performance appeared to be of concern.

- Automated emails about specific care omissions, which would escalate upward through the organizational hierarchy if no action were taken.

- Automated emails notifying all cases of death to senior members of the organization, with requests to review and identify lessons.

- Care omission meetings led by the hospital's most senior executives with clinical teams, focused on understanding causes of problems in carefully selected cases and then improving organizational systems and/or reinforcing personal accountability.

Emergence of Technovigilance

In both interviews and observed meetings, the executive team expressed a deep commitment to ensuring the safety and quality of the services provided by the hospital. Members of the team identified the ePDSS as a major strategic component of this commitment and made an accordingly heavy investment (approximately UK£25 million or US$38 million over ten years). Interviewees emphasized the system's primary purpose as improving patient safety, particularly in directly providing decision support and a graded series of alerts and warnings for averting errors. Thought by the executive team to be very successful—capturing each year an estimated 78,000 errors of varying types and severity—the ePDSS was not originally designed to do more than serve its primary function. Over time, however, a latent secondary use of the system—one that allowed scrutiny of practice and performance and provided the basis for action—became evident. As a consequence, the ePDSS was re-tasked so that data created as a “by-product” of its primary function could be used to monitor quality of care close to real time at very low marginal cost.

Participants described the ePDSS's large-scale re-tasking as dating back to the discovery in late 2008 of the system's ability to make visible those aspects of care that had previously been opaque. Before this point, the executive team had been using the ePDSS for certain quality-monitoring purposes. They had also been running root cause analysis (RCA) meetings to address issues relating to methicillin-resistant Staphylococcus aureus (MRSA) infections. A shift followed what was described as a eureka moment during one of these meetings, when the executive team recognized the ePDSS's ability to provide data on missed doses.

It happened by accident. [The executive team] was in an RCA, and we started discussing this MRSA bacteremia, and an issue came up around how quickly the MRSA specimen had got back to the lab and … whether decolonization therapy had started. [A colleague] said, “I know, I'll look it up on ePDSS” … [he] suddenly put the drug chart up, and we were going, “Whoa! Look at all those red crosses [signaling omitted doses]!” Now it's not just [the missed] antibiotics; this patient hasn't had this, this and this. … You started to look into it, and we found all sorts of stuff that was going on. (executive team member 1)

From then onward, the data generated by the system were used in multiple new ways to generate intelligence; to make practices, behaviors, and performance visible; and to take the necessary action. All the interviewees were adamant, however, that the information generated by ePDSS did not secure change by itself; it needed to be supplemented with other interventions and activities.

Until we interrogated [ePDSS] and had … conversations with staff and did all that, that is when we got the cultural change. It did not just happen by putting in ePDSS. I think that is what we need to remember and not get carried away with [saying] “The system is amazing!” Yes, but you won't get that change unless you do all the stuff around it. (executive team member 4)

The “stuff around it” included using electronic data for surveillance, instituting care omission meetings, emphasizing professional responsibility, and improving organizational systems.

Using Electronic Data for Surveillance and Feedback on Individual Behavior and Quality Indicators

Secondary data from the ePDSS were used extensively to provide information to the executive team about individual, team, and unit-level behavior and performance, including prescribing and drug administration activities, staff response to warnings and alerts issued by the system, and staff use of information supplied by the system. One of its key features was that it was able to produce, very quickly, data that would otherwise involve time-consuming chart-based audits. For example, it enabled rapid audits of quality indicators such as length of time from prescription of antibiotic to administration of the first dose, rates of completion of venous thromboembolism risk assessment, completeness of clinical observations aimed at detecting patient deterioration, and rates of specific infections (e.g., Clostridium difficile). The executive team saw this very close monitoring of staff behavior as an explicit part of their strategy for improvement.

We get told we're very big brotherish here, and we are big brotherish here. I mean we're watching what everybody's doing or not doing, and we're only able to do that via the electronic systems that we've got. So we measure and quantify and drive forward improvements in patient safety and quality, fundamentally using data. Absolutely. (executive team member 8)

The system allowed the hospital to discover which staff members were generating high numbers of alerts and warnings from the system in response to their prescribing decisions and also what action they took as a result of the alerts (for example, changing the prescription or proceeding with the prescription despite the alert). Data generated by the system also made it possible to determine which individuals had looked at the dashboard displays. It further allowed scrutiny of specific behaviors that the executive team believed indicated individuals’ diligence, such as checking the online version of a pharmaceutical reference book, the British National Formulary (BNF), for information on dosing and contraindications before prescribing or administering medication.

Reports generated by the system were discussed in different forums, ranging from the hospital's “blunt end” (the board of the hospital and various strategic and operational committees) through to the frontline staff at the “sharp end.” Monthly performance reporting based on ePDSS data was used at operational, executive, and board levels to identify trends, and detailed performance reviews took place three times a year. The hospital's quality meeting, chaired by the medical director, met every month to examine evidence from the system regarding quality and safety. In addition, emails were triggered automatically for each death in the hospital and were expected to provoke scrutiny by both the executive team and the clinical team that had cared for the patient.

Every patient that dies in this organization, there's an email that goes out automatically every day at 11:45 a.m. to all of the exec team and a whole bunch of other people. And they sit there and they review the mortalities on a day-by-day basis. We have between five and six deaths a day. And if you get admitted electively off a waiting list to this hospital and you die, the consultant [attending doctor] that was in charge of your care gets sent an electronic questionnaire that they have to complete online. Was it a high-risk case? Could anything have been done better? Could you get better support from the hospital? Cause of death? That goes into a central pod that's reviewed by the medical director every month. (executive team member 8)

Much of the ePDSS information on quality indicators was made directly available to frontline clinical staff and middle managers in a variety of ways. When logged into the system, staff could choose to look at dashboards that converted raw ePDSS data into displays of calibrated dials of thirty-four indicators that showed their clinical area's performance against the hospital's average performance. Information on individual patients’ missed doses and infections could be viewed by clinical staff at any time by logging on to a hospital computer. Clinical staff regularly received feedback in various forms (conversations, emails, and meetings) on how their areas were performing against a defined standard. The surgery division received feedback on the percentage of patients undergoing cardiac surgery who received care compliant with best-practice standards, for example, and they were expected to use this feedback to identify opportunities for improvement. This “gather-data-and-feedback” approach produced many locally led improvements, which often involved clarifying processes or responsibilities—for example, allocating clearly defined tasks to specific named individuals rather than assuming the tasks would somehow get done.

In addition to audit and feedback mechanisms, Rayburn made some real-time use of the data to intervene in care. This was evident, for example, in a system of automated emails that were triggered when specific breaches of quality standards occurred and escalated if a response was not received. For example, a warning email was automatically issued to the ward matron (senior nurse) in the event of a patient's missing more than two doses of antibiotics, and further emails would—if no action was taken—escalate upward, eventually reaching the chief executive. Signals received by the chief executive that a failure of patient care had occurred were taken seriously at all levels and were used to initiate both remedial action in the short term and an investigation and improvement in the longer term.
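The escalation rule described above can be illustrated with a minimal sketch. It assumes a three-step chain in which the intermediate recipient ("divisional director") is hypothetical: the hospital's actual chain, timing, and software are not described here, beyond the ward matron as first recipient, the chief executive as the last, and the trigger of more than two missed antibiotic doses.

```python
# Hypothetical escalation chain. Only the first and last recipients are
# named in the text; the middle step is an assumption for illustration.
ESCALATION_CHAIN = ["ward matron", "divisional director", "chief executive"]

# An alert is triggered when a patient misses MORE than two antibiotic doses.
MISSED_DOSE_THRESHOLD = 2


def alert_recipient(missed_antibiotic_doses, unanswered_alerts):
    """Return who should receive the next alert, or None if no alert is due.

    missed_antibiotic_doses: number of antibiotic doses the patient has missed.
    unanswered_alerts: number of earlier alerts for this breach with no action.
    """
    if missed_antibiotic_doses <= MISSED_DOSE_THRESHOLD:
        return None
    # Each unanswered alert moves one step up the chain, stopping at the top.
    level = min(unanswered_alerts, len(ESCALATION_CHAIN) - 1)
    return ESCALATION_CHAIN[level]
```

Under these assumptions, `alert_recipient(3, 0)` returns the ward matron, and repeated inaction eventually returns the chief executive.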

Care Omissions Meetings

The executive team found that for some problems, strategies of tight surveillance, “gather and feedback,” using escalating emails, and reliance on locally led improvement were not sufficient. Missed doses fell into this category. Missed doses were defined by the hospital as individual doses that were due for administration but were recorded as “not given” and were distinguished from “noncharted doses” (failure to record a drug that had been administered). Because the executive team found that rates of missed doses had remained stubbornly high over a year of monitoring and feedback (figure 1), they decided on a new approach. This involved a special dedicated root cause analysis (RCA) meeting, held monthly and initially known as the “missed doses” meeting. The executive team explained that these meetings were held to investigate the reasons for prescribed medicines not being administered and to take action. Over time, the meetings evolved and were renamed “care omissions” meetings when they began to deal with a wider range of incidents affecting the quality and safety of care.
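The hospital's definition of a missed dose can be made concrete with a short sketch of how an omission rate might be derived from dose records. The status labels and the choice of denominator (all doses due, including noncharted ones) are assumptions made for illustration; the source does not specify how the rates in figure 1 were calculated.

```python
from collections import Counter

# Hypothetical status labels. The text distinguishes doses recorded as
# "not given" (missed doses) from noncharted doses (given but not recorded).
ADMINISTERED = "administered"
NOT_GIVEN = "not given"
NONCHARTED = "noncharted"


def omission_rate(statuses):
    """Fraction of due doses recorded as "not given".

    statuses: one status label per dose that was due in the period.
    Noncharted doses count toward doses due but not toward missed doses,
    which is an assumption of this sketch.
    """
    counts = Counter(statuses)
    due = sum(counts.values())
    if due == 0:
        return 0.0
    return counts[NOT_GIVEN] / due
```

Applied to the doses due in each four-week period, a function of this kind would yield a series comparable in form to the one plotted in figure 1.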

As we discuss next, care omission meetings had a number of important features: (1) they were used to demonstrate organizational purpose; (2) they involved the strategic selection of cases to produce specific intelligence for analysis, learning, and comprehensive review, as well as structuring the discussion of clinical cases; (3) they drew on additional or complementary intelligence; and (4) they were used as the basis of remedial action targeting both organizational systems and individuals.

Demonstration of Organizational Purpose

The executive team used the care omissions meetings as an opportunity to demonstrate the organization's values and commitments to patients. Our observations showed that they were always chaired by a very senior person in the hospital—usually the chief executive or the medical director. This level of leadership was seen by interviewees as essential to signaling the executive team's seriousness of intent.

You know it sends a message out into the organization that this is something that's going to be focused on, that's really important and that the execs and everybody needs to take and are taking very seriously and we have to get this right. (executive team member 8)

Strategic Selection and Structured Discussion of Cases

Cases to be discussed at the care omissions meetings were found by querying the ePDSS to identify patients who had been prescribed medicines but were recorded as either not receiving them or not receiving them on time. The choice of which of these cases to discuss was carefully strategized. The executive team examined data relating to the nonadministration of prescribed doses on the ePDSS, including any reasons for the nonadministration recorded by staff either by using a drop-down menu or by entering free text. In their selection of cases, the executive team favored examples of what appeared to be potential failures of process, such as poor prescribing, inadequate stock, and apparent defects in training or behavior, as well as patient factors such as refusal of medication. Especially in the initial meetings, they purposely chose cases that seemed to illustrate egregious failures of care.

You have to be absolutely rigid about making sure that you're choosing examples which no sane person is going to argue is not bad care. … So the first cases we chose were people who had a high white [cell] count [and had] missed two or more doses of antibiotics consecutively. ’Cuz [that gave us] evidence that they probably [had an] infection and nobody is bothering to give them the treatment for it. And there is pretty clear evidence that if you delay and screw up the administration of antibiotics, mortality rises. So you're immediately in a space that nobody is going to say, “Why are you making such a fuss? That doesn't really matter. …” You know, [then we're able to ask,] “Why wasn't it given?” (executive team member 3)

The incontestability of the selected cases was also seen as critical to the deliberately challenging nature of the meetings. The executive team wanted to focus the discussion on figuring out what had gone wrong rather than arguing about whether it had gone wrong.

The meetings are deliberately deeply unpleasant … because you get a room full of senior professionals and you use the very precise and detailed unarguable information that you have on the screen … you put people in a position where they have to say, “Yeah, you're right, that wasn't acceptable, was it?” (executive team member 3)

Clinical and managerial teams on the wards where the selected incidents had occurred were invited to meetings. The invited teams were made up of the senior nurses and physicians from the relevant wards, as well as divisional directors when relevant, though in practice it was mostly the nurses who attended. Before coming to the meetings, these teams were required to fully review the patient's care from admission to discharge using a modified root cause analysis methodology and then to make a presentation to the committee. A structured discussion then took place, with the electronic information from the ePDSS and the patient's treatment record displayed throughout. During the meetings, examination of cases by the executive team was detailed and focused on trying to understand what had happened to cause the omission of care.

[Person A] made a considerable effort at various points during the meeting to ask questions and understand the practices and systems in place within the hospital about which they did not have firsthand experience or knowledge. Nurses were frequently asked to describe and explain how specific procedures took place on the wards. (field notes, meeting observations)

Use of Complementary Forms of Intelligence

Although the data from the ePDSS were a key focus of discussion at the care omission meetings, other forms of information also were converted into intelligence, including correspondence from patients. In one meeting, for example, a letter of complaint from a patient whose chemotherapy administration was severely delayed on the day she attended was discussed in detail. The patient reported that she had suffered distress and anxiety and had missed collecting her children from school. Her letter was treated in the meeting as a valuable source of insight into systems problems, particularly as a way of discovering problems known to exist at certain levels of the organization but not articulated or discussed.

The issue was in part slow delivery from the pharmacy. … The patient did indicate in her letter that she asked a nurse why things were taking so long and the nurse replied with something to the effect of the whole chemo process is not working, and this is an ongoing issue. The consensus of the executive team was that this is just not good enough in terms of service delivery. … [Name] was observing how the nurses seemed to be well aware of this—of problems in the system—but weren't in a position where they could do much about them, and she asked the question “what opportunity are the nurses given to make changes?” (field notes, meeting observations)

Basis for Action

A strong feature of the meetings was an emphasis on what action should be taken as a result of the new understandings gained. Through their detailed examinations, the executive team identified different kinds of reasons for care omissions. They sometimes discovered benign explanations for patients apparently not receiving their medicines at the right time. For instance, sometimes patients were absent from the ward for reasons relating to their treatment (e.g., undergoing procedures or investigations) when the drug was supposed to be administered. This meant that it was not possible for the drug to be administered, but it was not, strictly speaking, a care omission. This issue was addressed through improved data capture on the ePDSS; nurses were enabled to record “off ward” more readily. Two apparently more tractable areas for intervention were, however, consistently identified: cultural tolerance of missing doses, and weaknesses in organizational systems that interfered with the timely administration of medicines. As we discuss next, actions for improvement tended to focus on these.

Action: Emphasis on Personal Accountability

Technovigilance was intentionally used as a way of ensuring that individuals knew that their behavior could be monitored and that they personally would be open to scrutiny. Linked to this, the executive team explicitly rejected the idea of a “no blame” culture.

[You're] delivering the best in care, you're reducing emergency mortality, you're providing better care to the patients. That's got to be why you are here, and if that's not why you are here, go and get a job somewhere else. There's plenty of other organizations will take you if you don't want to work in an organization where errors and omissions are not tolerated. We have a fair blame culture. (executive team member 1)

One way in which this was manifested was in the use of data from the ePDSS to identify those individuals about whom there were concerns. Personal professional accountability was strongly stressed during the care omission meetings, especially when the executive team believed that specific nurses had made little effort to ensure that patients received their drugs on time, instead seeing it as acceptable to omit the dose if the medicines could not readily be found or there was some other obstruction.

We've now got a tool—a live tool—that will tell me, by individual nurse, what their missed dose rate is. And there's one nurse who's been responsible for just over 1,400 doses of antibiotics in the last year, and they [only] failed to give seven [of those], which is not bad, really, to be fair. And there's somebody else who's responsible for virtually the same number—1,450 [doses] or something like that—who's failed to give 319. So somebody needs to be sitting down with the 319 person and unpacking that. (executive team member 3)

What I say to them in there is this is not punitive, so I might be cross because care wasn't great, but I'm not going to cut your head off and stick it on a pole at the Tower of London here. I'm doing this so you learn to not do it again this way, and I think it's about being fair, it's the fair blame thing. (executive team member 9)

For specific individuals who were identified as underperforming, corrective strategies included requiring education and training and, in some cases, adding comments to personnel records as a disciplinary measure or conducting disciplinary interviews.

[Name] commented that the nurse's explanation for the missed dose was “Woolly [i.e., vague, confused and unconvincing]. Very woolly.” This raised the issue of putting these mistakes on nurses’ records so that “sloppy repetitive mistakes don't get repeated.” (field notes, meeting observations)

Though not strictly intended to be punitive, being called to a care omissions meeting was itself recognized by the executive team as a deeply unpleasant and uncomfortable experience for staff, who were therefore highly motivated to avoid it. The executive team reported that the emphasis on individual professional accountability was important in bringing about a cultural shift among ward staff, so that it was no longer regarded as acceptable to omit patients’ medication.

We have definitely changed the culture of nursing here, I am confident of that, so they now know it is not acceptable to miss, it is not acceptable to not bother to go find that drug just because it is not available in your ward. So we have really changed that mentality in most of the wards. (executive team member 4)

Action: Improving Organizational Systems

The executive team repeatedly emphasized that reinforcing personal professional accountability was not the answer to all problems. Often, they suggested, challenges in delivering safe, high-quality care arose because of deficiencies in organizational systems. Care omission meetings frequently made visible weaknesses that previously had not been clear to the executive team. For instance, secondary analysis of ePDSS data appeared to suggest that sometimes patients did not receive antibiotics when they were due. During meetings, the executive team discovered that doctors might order the drugs early to make sure they would definitely be available on the ward when the patient needed them because these doctors were concerned about delivery times from the pharmacy. But if the patient was not scheduled to start the antibiotics until the next day, the doses appeared on the system as “not administered,” so it looked as though doses had been omitted. In reality, they were simply not due to be given to the patient on that day. Through discussion, the problem became understood as one of delivery times from pharmacy to ward rather than as a failure in the conscientiousness of individual ward staff. The action to address the problem involved channeling resources toward better delivery times and reliability to ensure that drugs were available on the ward when needed.

In actual fact, all they'd done is get the doctor to prescribe it early so they could get it ordered from the pharmacy so they were sure it was there when the patient [needed it]. So people were doing clever things because we'd not got the system [for delivering drugs to the ward] right to start with. So we can put those systems right. (executive team member 10)
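
The distinction the meetings drew between true omissions and records that only looked like omissions can be illustrated with a minimal sketch. It is not the hospital's ePDSS logic, which the study did not examine at this level; the record fields (`scheduled_at`, `course_starts_at`, `off_ward`) and the category labels are hypothetical names introduced here for illustration only.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class DoseRecord:
    """Hypothetical dose record; all field names are assumptions for illustration."""
    scheduled_at: datetime       # time the dose appears as due on the system
    course_starts_at: datetime   # time the prescriber intended the course to begin
    administered: bool           # whether the dose was recorded as given
    off_ward: bool = False       # whether the patient was recorded as "off ward"


def classify(dose: DoseRecord) -> str:
    """Separate apparent omissions from records with a benign explanation."""
    if dose.administered:
        return "given"
    # Prescribed early to secure pharmacy delivery: the dose was never due.
    if dose.scheduled_at < dose.course_starts_at:
        return "not yet due"
    # Patient away for a procedure or investigation when the dose fell due.
    if dose.off_ward:
        return "off ward"
    # No benign explanation on record: a candidate for detailed review.
    return "apparent omission"
```

Under these assumptions, only records in the last category would count toward an omission rate or be selected for review, which mirrors how the meetings reinterpreted early-ordered antibiotics and off-ward patients.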

In another instance, problems were identified with locating drugs on wards. Through the care omissions meetings, the executive team came to understand that medications could be stored in any one of a number of places and that the systems for allocating drugs to these places were poorly specified and inconsistently operated.

When a patient is on a ward, some drugs are kept in the patient locker at the bedside, and some are kept on the trolley [cart] to be administered during the drugs rounds. (field notes, sharp end observations)

When nurses were unable to easily find where the drugs were stored, delays could occur and patients might miss a dose. Again, the solution was seen to lie in fixing the organizational systems rather than reinforcing personal accountability. For example, a pharmacy stock indicator function was introduced to enable nurses to locate drugs on another ward if necessary.

Intended and Unintended Consequences of Technovigilance

The hospital's executive team credited technovigilance with delivering important improvements, including those measured against specific performance indicators. The “gather-and-feedback” approach, leaving it to clinical teams on the front line to secure local action without further intervention by the executive team, was reported to have made important improvements in several areas. For example, the proportion of patients undergoing coronary artery bypass graft who were discharged on angiotensin converting enzyme inhibitors was 89.7 percent (262 of 292 patients) in 2009 and rose to 100 percent (235 of 235) by 2011, a compliance rate that persisted into 2012.

According to a consultant at the meeting, this was due to that particular percentage […] being fed back [so] that the staff were able to improve their quality of care. (field notes, meeting observations)

The care omissions meetings were also credited with much of the specific improvement in relation to missed doses (figure 1 and table 1).

TABLE 1.

Antibiotic Dose Omission Rates and Prescribing Totals per 24-Week Period at Rayburn Hospital

| Start date | End date | Doses not administered | Total doses due | Non-administration rate |
| --- | --- | --- | --- | --- |
| 01 Jan 08 | 16 Jun 08 | 20084 | 201608 | 10.0% |
| 17 Jun 08 | 01 Dec 08 | 19580 | 191202 | 10.2% |
| 02 Dec 08 | 18 May 09 | 20620 | 202230 | 10.2% |
| 19 May 09 | 02 Nov 09 | 18091 | 200084 | 9.0% |
| 03 Nov 09 | 19 Apr 10 | 17491 | 220815 | 7.9% |
| 20 Apr 10 | 04 Oct 10 | 13758 | 219118 | 6.3% |
| 05 Oct 10 | 21 Mar 11 | 12982 | 218951 | 5.9% |
| 22 Mar 11 | 05 Sep 11 | 11612 | 211706 | 5.5% |
| 06 Sep 11 | 20 Feb 12 | 10611 | 224773 | 4.7% |
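
The rates in table 1 follow directly from the two count rows: doses not administered divided by total doses due in each 24-week period. The short sketch below recomputes them from the published counts as a check. The function and variable names are ours, and the relative reduction between the first and last periods is our own derived figure, not one reported by the hospital.

```python
# Counts transcribed from table 1 (nine consecutive 24-week periods).
not_administered = [20084, 19580, 20620, 18091, 17491, 13758, 12982, 11612, 10611]
total_due = [201608, 191202, 202230, 200084, 220815, 219118, 218951, 211706, 224773]


def non_admin_rate(missed: int, due: int) -> float:
    """Return the percentage of due doses that were not administered."""
    return 100.0 * missed / due


# Unrounded rates, and the same rates rounded to one decimal place as in the table.
raw_rates = [non_admin_rate(m, d) for m, d in zip(not_administered, total_due)]
rates = [round(r, 1) for r in raw_rates]

# Relative fall in the rate between the first and last periods (derived here).
relative_reduction = 100.0 * (raw_rates[0] - raw_rates[-1]) / raw_rates[0]

if __name__ == "__main__":
    for period, rate in enumerate(rates, start=1):
        print(f"period {period}: {rate:.1f}%")
    print(f"relative reduction, first to last period: {relative_reduction:.0f}%")
```

The recomputed values match the published rate row, and the fall from roughly 10 percent to under 5 percent corresponds to a relative reduction of a little over half.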

If you look at that, the trend over a period of time, we've dropped our omitted antibiotic dose rate from 12 percent about eighteen months ago down to now 5 percent. So that's a big reduction, it's more than halved in eighteen months. And if you look at other hospitals like [Name 1] and [Name 2], they're still up at around 12 percent at the moment. And when you look at the graph over a trend period of time, there are two what statisticians call step changes in reductions. One is due to the introduction of the clinical dashboard. The second is when the RCAs first started. (executive team member 8)

Technovigilance was further seen as consistent with a deeply held value of honesty.

One of our values is honesty … we should know how good our outcomes are: not say, “Well, we think they're quite good,” we should actually know. And we've spent a huge amount of effort and resource on knowing what our outcomes are. … It's warts and all. (executive team member 1)

Technovigilance was not, however, without unanticipated or unwanted consequences. Some of these arose because of the very close surveillance of staff behavior. Users logging on to the system were told that “activities on this system are monitored and recorded and are subject to audit; use of this system provides express consent to such monitoring and recording.” Staff were aware that each of their interactions with the system, or failure to interact with it, left behind a trace that was visible to unseen observers. This could be used to call them to account through either individual feedback (including automated emails) or attendance at a care omissions meeting. Observations and chats with staff on the wards showed that the meetings were regarded as a stressful, high-impact ordeal that they wished to avoid.

Five consequences of technovigilance, carried out in this way, were evident from our ethnography. First, in their routine work, staff were inclined to direct their attention to activities that they knew would be monitored by the ePDSS and for which they could be “caught” because their compliance (or lack of it) could be easily discovered.

[The ward nurse was] saying she feels like she spends a lot of time checking things, not because she knows [they might reveal something] detrimental to the patient, but because … she's being tracked and she needs to make sure … all the boxes have been ticked and everything has been stamped. (field notes and sharp end observations)

Given that staff have limited time, attention, and capacity (Dixon-Woods et al. 2009), there was some evidence that activities not subject to ePDSS surveillance sometimes tended to be downgraded in priority. Our observations suggested an emphasis in some settings on task-based activities (recorded on ePDSS) at the expense of less easily measured caring activities that involved engaging with patients on a personal level. For example, in one ward, immobilized patients were left in the dark during winter afternoons because staff said they did not have time to turn on the lights.

I am still struck by the task-based nature of interactions between patients and nurses. Nurses interact with patients seemingly only when a task needs to be completed. I saw no attempt being made at comforting a patient or initiating a conversation about how a patient was feeling. (field notes, sharp end observations)

Second, staff sometimes spent more time justifying why they had not done something than seemed appropriate. For example, although the intention was that nurses would choose an option from a drop-down menu on ePDSS to explain their nonadministration (e.g., “no intravenous access”), they occasionally wrote lengthy accounts or “essays” justifying why a dose had not been administered. This latter behavior seemed to be a defense against being called to a care omissions meeting at which they might have to explain themselves.

[They feel that] if they don't give full chapter and verse on why they didn't give it, then it's going to come back on them. But the trouble is they might be taking several minutes to … not administer a drug, which just delays the whole round. (executive team member 11)

A third unwanted consequence was that technovigilance produced incentives for staff to “game” the system. For example, newly qualified nurses knew that the number of times they clicked through to check the British National Formulary was tracked by the system, and an informal folklore rule had therefore developed that they should “click through” at least twice per shift. A small number of staff also suggested that sometimes nurses clicked that a dose had been given when in fact it had not, though we did not witness any instances of this occurring.

He spoke about missing doses, and he said—his exact words were—sometimes people just click “Dose Given” anyway, so that they don't get into trouble. And that there was this general feeling that they need to be really vigilant about that and in a fearful kind of way. And he said, instead of being able to look after the patient, he spends his day looking back, making sure everything is as it should be on the system. (field notes, sharp end observations)

Fourth, there was evidence that some ward-based staff believed that relying on the ePDSS as a source of data, together with the RCAs, had increased the distance between the “sharp end” and the “blunt end” of the organization and, in particular, had diminished the executive team's insight into the realities of looking after patients on the front line. Despite the opportunities identified during the care omissions meetings for nurses’ intelligence to contribute to improving organizational functioning, nurses often perceived that they were not listened to and that they lacked influence in securing change. They also pointed out what they perceived as the distorting effects of technovigilance, arguing that the ePDSS's focus was narrow, even though caring was complex.

She said that the executive team believes that “technology doesn't lie,” but she thinks it does [in failing to represent the totality of caring for patients]. She said, “Well, it doesn't tell the whole truth is the answer. And what worries me is that [the executive team] makes judgments, remote judgments. Whereas they used to come and visit the wards, they would meet the people involved, they would see the challenges on those wards.” (field notes, sharp end observations)

Finally, the nursing staff in particular believed that the way in which technovigilance operated in the hospital did not distribute blame fairly and that nurses ended up being watched more carefully and punished more frequently than other kinds of staff. Nurses, for example, were almost invariably called to account at the RCA meetings, yet physicians attended much less frequently and were able to escape the scrutiny and rebuke. The executive team was aware of this issue and made a number of attempts to resolve it, though, at the time of the observations, without notable success.

[The chair] admonished senior medical staff for not attending the meeting along with the rest of the team presenting a case. The chair suggested that there was no point in calling only the nursing staff to task. The chair also was willing to hold a special meeting for doctors who weren't able to attend. (field notes, meeting observations)

Discussion

Our ethnographic study described an approach to quality of care that turned the secondary use of data from an electronic prescribing and decision support system into a key resource for improvement. Technovigilance, as we dubbed it, treated the ePDSS as a warehouse of data regarding clinical activity and performance and made “meaningful use” of these data by seeking to turn them into intelligence about the performance of individuals, teams, and clinical services and to use that intelligence as the basis of action for improvement. A defining characteristic of our case study hospital's model of technovigilance was that the data did not have to be retrieved or reconstructed through cumbersome and expensive chart reviews. Instead, this information could be used in, or very close to, real time to monitor quality and safety of care, and it could be complemented by other forms of intelligence, including patients’ complaints. Based on the hospital's own evidence, technovigilance was highly effective in securing improvement against specific indicators through a combination of rapid audit, detailed and critical reviews of apparent omissions of care in meetings led by senior hospital figures, emphasis on personal accountability, and changes in organizational systems. Measures such as rate of omitted doses showed marked improvement. As do most interventions, however, technovigilance (at least in this form) had some unwanted consequences.

We are aware that our study has a number of limitations. It is a single case study in an acute care hospital in a single country. We did not conduct formal interviews with those at the sharp end of practice and thus may have missed some opportunities to explore their views in more detail, or given undue prominence to the views of the executive team. We focused primarily on the secondary use of data from the system and did not analyze in detail how secondary and primary uses of the system might mutually inform each other. Nonetheless, we found little to suggest that technovigilance depends on the use of a specific computer system in a specific setting. We did find much to suggest that some of its useful principles could be transferred to other settings and that the specific model could be updated to benefit from the learning generated by our study—in particular to address its unintended consequences.

The case study hospital's version of technovigilance was notable for a number of important features, some of them contradicting current orthodoxies about how to improve quality. Although in the “gather-and-feedback” mode, it stimulated clinical teams to make improvements for themselves, technovigilance could not be characterized more generally as participatory and “bottom-up” (Bate, Robert, and Bevan 2004). It was not typically led by professionals at the sharp end (Dopson et al. 2002), nor did it rely on mutual cooperation and collaboration (Hulscher, Schouten, and Grol 2009), conventional quality improvement methods such as Plan-Do-Study-Act (PDSA) cycles (Varkey, Reller, and Resar 2007), or large cross-institution collaborative activity (Dixon-Woods et al. 2011). The executive team was clearly and explicitly in charge, and it drove improvement activities mainly through hierarchical structures and the exercise of power. It demanded accountability, exercised detailed scrutiny, and did not shirk from intervening coercively.

The practice of technovigilance was distinctive in the executive team's explicit rejection of the influential view that a “no blame” culture was the one most likely to foster patients’ safety and quality of care (Hudson 2003). Instead, consistent with some recent arguments (Wachter and Pronovost 2009), individual personal responsibility was strongly emphasized. Also critical to technovigilance was linking intelligence to action. The executive team was adamant that simply using the ePDSS as a means of surveillance was not enough to secure change. Various forms of real-time feedback were used to stimulate clinical teams to find for themselves ways of doing better, and examples of where this had been successful were evident across the hospital. Going beyond feedback, the care omissions meetings brought together the blunt end and the sharp end of the organization to discuss situations in which some failing was evident. These were highly structured and strategized encounters, designed to show organizational purpose, demand accountability, gain organizational learning, and initiate action. The executive team used the intelligence created by the ePDSS to allocate culpability for failure to provide high-quality and safe care to patients, and the team also used this intelligence to detect and correct weaknesses in organizational systems. Actions were therefore not confined to blaming individuals for apparent failures of care; instead, the emphasis on personal responsibility was combined with a genuine commitment to improving organizational systems.

Technovigilance, to some extent, might be regarded as a special case of performance management. Part of what explained the improvement seen in missed doses and other indicators was the clinical staff's increasing sensitization to the expectations of management and awareness that their performance was open to easy scrutiny. The positive effects of assessing performance against specific standards in other contexts include the ability to identify variations in quality of care, to signal an organization's priority on quality, to enhance accountability, and to create opportunities for remedial action (Spertus et al. 2005). We found evidence of all these positive effects in how technovigilance was used in our case study. Perhaps intensified in the case of technovigilance, however, were the risks of producing important perverse effects. Re-tasking the ePDSS took advantage of its supervisory capacity (Freidson 1988), turning it into an accountability machine that made visible—and thus much more controllable—those aspects of staff performance that had previously been opaque to the senior management team. Perverse effects of performance management include effort substitution, which involves staff directing their behavior toward the things being measured to the possible exclusion of other valuable activities (Kelman and Friedman 2009). They also include gaming, in which staff manipulate reporting in order to create the appearance of better performance (Bevan and Hamblin 2009; Bird et al. 2005; Kelman and Friedman 2009), and the risk of eliciting ritualized displays of compliance without necessarily improving underlying quality (Power 2003).

These effects occur in part because the time and attention of health care staff are limited, and they must choose what to prioritize (Dixon-Woods et al. 2009). Faced with the possibility of being called to a “deliberately unpleasant” meeting and having negative comments entered into their personnel record, it was not surprising that activities that were less easy to capture on the ePDSS—such as talking to patients and ensuring their comfort—were given lower priority or that staff sometimes found ways of suggesting that performance on monitored indicators was better than it really was. Another interesting and unintended consequence of technovigilance, in this context, was that the ePDSS's re-tasking meant that a system developed to support prescribing by doctors was now being used predominantly to control and discipline the work of nurses. The lower professional status of nurses and the kinds of tasks they undertook may have been contributing factors; technovigilance operated in ways that meant it was easier to secure accountability from nurses than doctors. It was also perceived by some as increasing the remoteness of the executive team from the realities of the sharp end of practice. What was made visible to the executive team was determined by what was collected, displayed, and prioritized by the ePDSS, rather than by the human interactions that derived from visiting clinical areas in person.

One risk of technovigilance is that the executive team may believe that they are more in control than they in fact are, or they may gain a misleading impression of quality of care. The sense of control and full scrutiny offered by technovigilance may, to some extent, be illusory: just as some aspects of performance come more sharply into focus and can easily be monitored, others recede and may be diminished. Technovigilance may thus conceal even as it reveals. It may focus too much on specific aspects of practice or on the performance of easily identifiable professional groups while neglecting other parts of sharp-end reality. It may therefore need to draw on a wider range of strategies to gain insights into where the problems lie and how they can best be addressed. Using alternative and complementary forms of intelligence, including evidence of patients’ experience and executive visits to clinical areas, may therefore be especially important to avoiding the privileging of technologically derived data and to gaining a more complete picture of the quality and safety of patient care. Finally, promoting more participatory approaches (Dixon-Woods et al. 2011) may also help counter some of the perverse effects associated with the top-down nature of technovigilance as practiced in this hospital.

Conclusions

This case study examined a large hospital that appears to have secured substantial improvements in the quality and safety of care across a number of indicators by using a novel and distinctive approach that combined real-time surveillance of care with senior-level intervention. Although technovigilance appears to have had important positive effects, it also had side effects, such as the risk that those at the sharp end may engage in behaviors that are mere displays of compliance, may evade compliance, or may focus most of their energies on those areas for which compliance can most easily be checked.

Acknowledgments

We thank the participants in our study and also Anna Rajakumar for conducting ethnographic observations and contributing to our team discussions. This study was funded as part of a wider program of work by the Department of Health Policy Research Program (award no. 0770017). We thank all our colleagues on this project, including Michael West and Lorna McKee, who provided helpful comments on this manuscript. Jamie Coleman and Sabi Redwood were funded by the National Institute for Health Research (NIHR) through the Collaborations for Leadership in Applied Health Research and Care for Birmingham and Black Country (CLAHRC-BBC) program. The views expressed in this publication are not necessarily those of the NIHR, the Department of Health, any NHS hospitals, the University of Leicester, or the University of Birmingham.

References

- Ash JS, Sittig DF, Guappone KP, Dykstra RH, Richardson J, Wright A, Carpenter J, McMullen C, Shapiro M, Bunce A, Middleton B. Recommended Practices for Computerized Clinical Decision Support and Knowledge Management in Community Settings: A Qualitative Study. BMC Medical Informatics and Decision Making. 2012;12:6. doi: 10.1186/1472-6947-12-6. doi: 10.1186/1472-6947-12-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bate P, Robert G, Bevan H. The Next Phase of Healthcare Improvement: What Can We Learn from Social Movements. Quality & Safety in Health Care. 2004;13(1):62–66. doi: 10.1136/qshc.2003.006965. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Benn J, Burnett S, Parand A, Pinto A, Iskander S, Vincent C. Studying Large-Scale Programmes to Improve Patient Safety in Whole Care Systems: Challenges for Research. Social Science & Medicine. 2009;69(12):1767–76. doi: 10.1016/j.socscimed.2009.09.051. [DOI] [PubMed] [Google Scholar]

- Bevan G, Hamblin R. Hitting and Missing Targets by Ambulance Services for Emergency Calls: Effects of Different Systems of Performance Measurement within the UK. Journal of the Royal Statistical Society. 2009;172(1):161–90. doi: 10.1111/j.1467-985X.2008.00557.x. Series A (Statistics in Society) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bird SM, Cox D, Farewell VT, Goldstein H, Holt T, Smith PC. Performance Indicators: Good, Bad, and Ugly. Journal of the Royal Statistical Society. 2005;168(1):1–27. Series A (Statistics in Society) [Google Scholar]

- Black AD, Car J, Pagliari C, Anandan C, Cresswell K, Bokun T, McKinstry B, Procter R, Majeed A, Sheikh A. The Impact of eHealth on the Quality and Safety of Health Care: A Systematic Overview. PLoS Medicine. 2011;8(1):e1000387. doi: 10.1371/journal.pmed.1000387. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blumenthal D, Tavenner M. The “Meaningful Use” Regulation for Electronic Health Records. New England Journal of Medicine. 2010;363(6):501–4. doi: 10.1056/NEJMp1006114. [DOI] [PubMed] [Google Scholar]

- Bradley EH, Holmboe ES, Mattera JA, Roumanis SA, Radford MJ, Krumholz HM. Data Feedback Efforts in Quality Improvement: Lessons Learned from US Hospitals. Quality and Safety in Health Care. 2004;13(1):26–31. doi: 10.1136/qhc.13.1.26. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chan KS, Fowles JB, Weiner JP. Review: Electronic Health Records and the Reliability and Validity of Quality Measures: A Review of the Literature. Medical Care Research and Review. 2010;67(5):503–27. doi: 10.1177/1077558709359007. [DOI] [PubMed] [Google Scholar]

- Coleman JJ, McDowell SE, Ferner RE. Dose Omissions in Hospitalized Patients in a UK Hospital: An Analysis of the Relative Contribution of Adverse Drug Reactions. Drug Safety. 2012;35(8):677–83. doi: 10.1007/BF03261964. [DOI] [PubMed] [Google Scholar]

- Dixon-Woods M, Bosk CL, Aveling EL, Goeschel CA, Pronovost PJ. Explaining Michigan: Developing an Ex Post Theory of a Quality Improvement Program. The Milbank Quarterly. 2011;89(2):167–205. doi: 10.1111/j.1468-0009.2011.00625.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dixon-Woods M, Leslie M, Bion J, Tarrant C. What Counts? An Ethnographic Study of Infection Data Reported to a Patient Safety Program. The Milbank Quarterly. 2012;90(3):548–91. doi: 10.1111/j.1468-0009.2012.00674.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dixon-Woods M, Suokas A, Pitchforth E, Tarrant C. An Ethnographic Study of Classifying and Accounting for Risk at the Sharp End of Medical Wards. Social Science & Medicine. 2009;69(3):362–69. doi: 10.1016/j.socscimed.2009.05.025. [DOI] [PubMed] [Google Scholar]

- Donaldson LJ, Darzi A. Quality Measures: Bridging the Cultural Divide. BMJ Quality & Safety. 2012;21(11):969–72. doi: 10.1136/bmjqs-2012-001477. [DOI] [PubMed] [Google Scholar]

- Dopson S, Fitzgerald L, Ferlie E, Gabbay J, Locock L. No Magic Targets! Changing Clinical Practice to Become More Evidence-Based. Health Care Management Review. 2002;27(3):35–47. doi: 10.1097/00004010-200207000-00005. [DOI] [PubMed] [Google Scholar]

- Francis R. Independent Inquiry into Care Provided by Mid Staffordshire NHS Foundation Trust: January 2005–March 2009. London: Stationery Office; 2010. [Google Scholar]

- Freidson E. Profession of Medicine: A Study of the Sociology of Applied Knowledge. Chicago: University of Chicago Press; 1988.

- Friedman CP, Wong AK, Blumenthal D. Achieving a Nationwide Learning Health System. Science Translational Medicine. 2010;2(57):57cm29. doi: 10.1126/scitranslmed.3001456.

- Glance LG, Neuman M, Martinez EA, Pauker KY, Dutton RP. Performance Measurement at a “Tipping Point”. Anesthesia & Analgesia. 2011;112(4):958–66. doi: 10.1213/ANE.0b013e31820e778d.

- Glaser B, Strauss A. The Discovery of Grounded Theory. Hawthorne, NY: Aldine; 1967.

- Greenhalgh T, Potts H, Wong G, Bark P, Swinglehurst D. Tensions and Paradoxes in Electronic Patient Record Research: A Systematic Literature Review Using the Meta-Narrative Method. The Milbank Quarterly. 2009;87(4):729–88. doi: 10.1111/j.1468-0009.2009.00578.x.

- Greenhalgh T, Swinglehurst D. Studying Technology Use as Social Practice: The Untapped Potential of Ethnography. BMC Medicine. 2011;9(1):45. doi: 10.1186/1741-7015-9-45.

- Huckvale C, Car J, Akiyama M, Jaafar S, Khoja T, Bin Khalid A, Sheikh A, Majeed A. Information Technology for Patient Safety. Quality and Safety in Health Care. 2010;19(suppl. 2):i25–i33. doi: 10.1136/qshc.2009.038497.

- Hudson P. Applying the Lessons of High Risk Industries to Health Care. Quality and Safety in Health Care. 2003;12(suppl. 1):i7–i12. doi: 10.1136/qhc.12.suppl_1.i7.

- Hulscher M, Schouten L, Grol R. Collaboratives. London: Health Foundation; 2009.

- Huskins WC, Huckabee CM, O'Grady NP, Murray P, Kopetskie H, Zimmer L, Walker ME, Sinkowitz-Cochran RL, Jernigan JA, Samore M, Wallace D, Goldmann DA, STAR*ICU Trial Investigators. Intervention to Reduce Transmission of Resistant Bacteria in Intensive Care. New England Journal of Medicine. 2011;364(15):1407–18. doi: 10.1056/NEJMoa1000373.

- Institute of Medicine Committee on Patient Safety and Health Information Technology. Health IT and Patient Safety: Building Safer Systems for Better Care. Washington, DC: National Academies Press; 2011.

- Jones SS, Heaton P, Friedberg MW, Schneider EC. Today's “Meaningful Use” Standard for Medication Orders by Hospitals May Save Few Lives; Later Stages May Do More. Health Affairs. 2011;30(10):2005–12. doi: 10.1377/hlthaff.2011.0245.

- Jones SS, Heaton PS, Rudin RS, Schneider EC. Unraveling the IT Productivity Paradox—Lessons for Health Care. New England Journal of Medicine. 2012;366(24):2243–45. doi: 10.1056/NEJMp1204980.

- Kelman S, Friedman JN. Performance Improvement and Performance Dysfunction: An Empirical Examination of Distortionary Impacts of the Emergency Room Wait-Time Target in the English National Health Service. Journal of Public Administration Research and Theory. 2009;19(4):917–46.

- Kern LM, Malhotra S, Barron Y, Quaresimo J, Dhopeshwarkar R, Pichardo M, Edwards AM, Kaushal R. Accuracy of Electronically Reported “Meaningful Use” Clinical Quality Measures: A Cross-Sectional Study. Annals of Internal Medicine. 2013;158(2):77–83. doi: 10.7326/0003-4819-158-2-201301150-00001.

- Kumar A, Roberts D, Wood KE, Light B, Parrillo JE, Sharma S, Suppes R, Feinstein D, Zanotti S, Taiberg L, Gurka D, Kumar A, Cheang M. Duration of Hypotension before Initiation of Effective Antimicrobial Therapy Is the Critical Determinant of Survival in Human Septic Shock. Critical Care Medicine. 2006;34(6):1589–96. doi: 10.1097/01.CCM.0000217961.75225.E9.

- Morgan DJ, Diekema DJ, Sepkowitz K, Perencevich EN. Adverse Outcomes Associated with Contact Precautions: A Review of the Literature. American Journal of Infection Control. 2009;37(2):85–93. doi: 10.1016/j.ajic.2008.04.257.

- National Patient Safety Agency. Rapid Response Report NPSA/2010/RRR009: Reducing Harm from Omitted and Delayed Medicines in Hospital. February 2010. London: 2010.

- Parsons A, McCullough C, Wang J, Shih S. Validity of Electronic Health Record-Derived Quality Measurement for Performance Monitoring. Journal of the American Medical Informatics Association. 2012;19(4):604–9. doi: 10.1136/amiajnl-2011-000557.

- Power M. Auditing and the Production of Legitimacy. Accounting, Organizations and Society. 2003;28(4):379–94.

- Pronovost PJ, Miller M, Wachter RM. The GAAP in Quality Measurement and Reporting. JAMA. 2007;298(15):1800–1802. doi: 10.1001/jama.298.15.1800.

- Pronovost PJ, Nolan T, Zeger S, Miller M, Rubin H. How Can Clinicians Measure Safety and Quality in Acute Care? The Lancet. 2004;363(9414):1061–67. doi: 10.1016/S0140-6736(04)15843-1.

- Safran C, Bloomrosen M, Hammond WE, Labkoff S, Markel-Fox S, Tang PC, Detmer DE. Toward a National Framework for the Secondary Use of Health Data: An American Medical Informatics Association White Paper. Journal of the American Medical Informatics Association. 2007;14(1):1–9. doi: 10.1197/jamia.M2273.

- Shojania KG, Grimshaw JM. Evidence-Based Quality Improvement: The State of the Science. Health Affairs. 2005;24(1):138–50. doi: 10.1377/hlthaff.24.1.138.

- Sinha S, Peach G, Poloniecki JD, Thompson MM, Holt PJ. Studies Using English Administrative Data (Hospital Episode Statistics) to Assess Health-Care Outcomes—Systematic Review and Recommendations for Reporting. European Journal of Public Health. 2012;23(1):86–92. doi: 10.1093/eurpub/cks046.

- Sittig DF, Hazlehurst BL, Brown J, Murphy S, Rosenman M, Tarczy-Hornoch P, Wilcox AB. A Survey of Informatics Platforms That Enable Distributed Comparative Effectiveness Research Using Multi-Institutional Heterogeneous Clinical Data. Medical Care. 2012;50(suppl. 1):S49–S59. doi: 10.1097/MLR.0b013e318259c02b.

- Spertus JA, Eagle KA, Krumholz HM, Mitchell KR, Normand ST. American College of Cardiology and American Heart Association Methodology for the Selection and Creation of Performance Measures for Quantifying the Quality of Cardiovascular Care. Journal of the American College of Cardiology. 2005;45(7):1147–56. doi: 10.1016/j.jacc.2005.03.011.

- Stanfill MH, Williams M, Fenton SH, Jenders RA, Hersh WR. A Systematic Literature Review of Automated Clinical Coding and Classification Systems. Journal of the American Medical Informatics Association. 2010;17(6):646–51. doi: 10.1136/jamia.2009.001024.

- Szent-Gyorgyi LE, Coblyn J, Turchin A, Loscalzo J, Kachalia A. Building a Departmental Quality Program: A Patient-Based and Provider-Led Approach. Academic Medicine. 2011;86(3):314–20. doi: 10.1097/ACM.0b013e318209346e.

- Takian A, Petrakaki D, Cornford T, Sheikh A, Barber N. Building a House on Shifting Sand: Methodological Considerations When Evaluating the Implementation and Adoption of National Electronic Health Record Systems. BMC Health Services Research. 2012;12:105. doi: 10.1186/1472-6963-12-105.

- Varkey P, Reller MK, Resar RK. Basics of Quality Improvement in Health Care. Mayo Clinic Proceedings. 2007;82(6):735–39. doi: 10.4065/82.6.735.

- Wachter RM. Understanding Patient Safety. 2nd ed. New York: McGraw-Hill Medical; 2012.

- Wachter RM, Pronovost PJ. Balancing “No Blame” with Accountability in Patient Safety. New England Journal of Medicine. 2009;361(14):1401–6. doi: 10.1056/NEJMsb0903885.

- Warne S, Endacott R, Ryan H, Chamberlain W, Hendry J, Boulanger C, Donlin N. Non-Therapeutic Omission of Medications in Acutely Ill Patients. Nursing in Critical Care. 2010;15(3):112–17. doi: 10.1111/j.1478-5153.2010.00365.x.

- Weiner JP, Fowles JB, Chan KS. New Paradigms for Measuring Clinical Performance Using Electronic Health Records. International Journal for Quality in Health Care. 2012;24(3):200–205. doi: 10.1093/intqhc/mzs011.

- Weiner M, Stump TE, Callahan CM, Lewis JN, McDonald CJ. Pursuing Integration of Performance Measures into Electronic Medical Records: Beta-Adrenergic Receptor Antagonist Medications. Quality and Safety in Health Care. 2005;14(2):99–106. doi: 10.1136/qshc.2004.011049.

- Whippy A, Skeath M, Crawford B, Adams C, Marelich G, Alamshahi M, Borbon J. Kaiser Permanente's Performance Improvement System, Part 3: Multisite Improvements in Care for Patients with Sepsis. Joint Commission Journal on Quality and Patient Safety. 2011;37(11):483–93. doi: 10.1016/s1553-7250(11)37061-4.

- Yin RK. Case Study Research: Design and Methods. 4th ed. London: Sage; 2009.