Abstract

Context

Evidence briefs have emerged as a promising approach to synthesizing the best available research evidence for health system policymakers and stakeholders. An evidence brief may draw on systematic reviews and many other types of policy-relevant information, including local data and studies, to describe a problem, options for addressing it, and key implementation considerations. We conducted a systematic review to examine the ways in which context- and issue-related factors influence the perceived usefulness of evidence briefs among their intended users.

Methods

We used a critical interpretive synthesis approach to review both empirical and nonempirical literature and to develop a model that explains how context and issues influence policymakers’ and stakeholders’ views of the utility of evidence briefs prepared for priority policy issues. We used a “compass” question to create a detailed search strategy and conducted electronic searches in CINAHL, EMBASE, HealthSTAR, IPSA, MEDLINE, OAIster (gray literature), ProQuest A&I Theses, ProQuest (Sociological Abstracts, Applied Social Sciences Index and Abstracts, Worldwide Political Science Abstracts, International Bibliography of Social Sciences, PAIS, Political Science), PsycINFO, Web of Science, and WilsonWeb (Social Science Abstracts). Finally, we used a grounded and interpretive analytic approach to synthesize the results.

Findings

Of the 4,461 papers retrieved, 3,908 were excluded and 553 were assessed for “relevance,” with 137 included in the initial sample of papers to be analyzed and an additional 23 purposively sampled to fill conceptual gaps. Several themes emerged: (1) many established types of “evidence” are viewed as useful content in an evidence brief, along with several promising formatting features; (2) contextual factors, particularly the institutions, interests, and values of a given context, can influence views of evidence briefs; (3) whether an issue is polarizing and whether it is salient (or not) and familiar (or not) to actors in the policy arena can influence views of evidence briefs prepared for that issue; (4) influential factors can emerge in several ways (as context driven, issue driven, or a result of issue-context resonance); (5) these factors work through two primary pathways, affecting either the users or the producers of briefs; and (6) these factors influence views of evidence briefs through a variety of mechanisms.

Conclusions

Those funding and preparing evidence briefs need to consider a variety of context- and issue-related factors when deciding how to make them most useful in policymaking.

Keywords: health policy, systematic review, evidence brief, context, issues, politics, knowledge translation and exchange

A consensus is emerging that efforts to strengthen health systems need to be informed by the best available research evidence in both high- and low-income settings (Frenk 2010; Lavis 2009; Lavis et al. 2005a, 2009b, 2010; Mitton et al. 2007; The Lancet 2008; WHO 2004). However, there is an undesirable gap between what is known from research evidence and the policies pursued by health policymakers and stakeholders, and this gap cannot be explained solely by the influence of the many political factors that compete for their attention. The existence of this “know-do” gap suggests that many of the findings from high-quality health research are not mobilized in efforts to improve health systems and population health.

Despite the call for an increased use of research evidence in policymaking worldwide, several barriers constrain the use of research evidence in health policymaking processes. The first (often taken as given) challenge is that the policy process is complex and that research evidence is only one of several factors competing for policymakers’ attention, along with institutional constraints, interest-group pressure, values, and “external” events (IDRC 2011; Lavis et al. 2006b; WHO 2004). Second, research evidence is not easy to use, and the ways in which results are packaged and presented often are unhelpful for the types of decisions that policymakers face and the settings in which they work (Innvaer et al. 2002; Oxman et al. 2009a). Additional barriers that have been acknowledged in the literature include the mutual mistrust that often exists between policymakers and researchers, and policymakers’ tendency to place little value on research evidence as an input into policy decisions (Innvaer et al. 2002; Lavis et al. 2006b; Oxman et al. 2009a).

One way of overcoming some of these barriers is a type of policy-relevant research synthesis commonly referred to as “evidence briefs” (or, sometimes, “policy briefs”). These syntheses differ from other knowledge synthesis products in that they begin by identifying a priority policy issue, rather than starting with the research evidence. They then work backward to mobilize the full range of synthesized research (e.g., systematic reviews) and local evidence (e.g., local program evaluations) to help policymakers and those who support them understand and systematically think through (1) the problem underlying the priority policy issue; (2) the potential options available for addressing the issue; and (3) the factors that need to be considered when implementing the options (Lavis and Panisset 2010; Lavis et al. 2009d). Recent examples are an evidence brief written to inform a deliberative dialogue on task shifting for maternal and child health in Uganda (Nabudere, Asiimwe, and Mijumbi 2010) and a brief written to inform a deliberative dialogue on strengthening primary health care in Canada (Lavis and Boyko 2009).

Evidence briefs are viewed as a promising approach because they build on factors that two systematic reviews found to increase the likelihood that research evidence will be used by policymakers. First, they address the need for timeliness, because briefs can be prepared in days or weeks rather than in the months or years it takes to produce single studies or reviews. Second, they provide a basis for facilitating interactions among researchers, policymakers, and stakeholders, particularly when used as an input into deliberative dialogues. Third, they promote consideration of how values, beliefs, and political goals accord with the best available research evidence (Lavis et al. 2005a, 2005b, 2009d). Furthermore, they package research evidence in a way that both showcases its relevance to policymakers and is easy to use, thereby addressing the problem that research evidence is generally not presented in this way (Innvaer et al. 2002; Lavis et al. 2005a, 2009d; Mays, Pope, and Popay 2005; Oxman et al. 2009a).

Although other review-derived synthesis products tailored for use by policymakers are currently being developed and tested (Rosenbaum et al. 2011), neither the types of content that should be included in briefs nor the ways in which briefs should be formatted to best suit the needs of their intended audiences have been explored (Lavis et al. 2009d). Furthermore, despite the availability of theoretical frameworks that can help explain the broad application of knowledge-translation efforts (Contandriopoulos et al. 2010; Dobbins et al. 2002; Ebener et al. 2006; Hanney et al. 2003; Lavis et al. 2003) or assess knowledge translation efforts on a country level (Lavis et al. 2006b), a theoretical foundation that can inform inquiries focused on specific knowledge translation mechanisms has not been created. In particular, almost nothing is known about whether and how the ways in which evidence briefs are designed, the types of information that they contain, the contexts in which they are prepared, and the issue(s) that they address will influence how useful these syntheses are likely to be in supporting the use of research evidence by policymakers and stakeholders (Lavis and Panisset 2010; Lavis et al. 2009d). The paucity of evidence to inform the development of particular strategies to encourage evidence-informed policymaking, such as evidence briefs, is a serious deficiency in evidence-informed health policy (Mitton et al. 2007, 2009).

Given the lack of theoretical development to explain evidence briefs’ role in translating knowledge, we need focused and systematic efforts to gain insights into how their intended users’ views about different content and design features may be affected by various contextual and issue-related factors (Lavis et al. 2009d). This deeper understanding could help tailor future evidence briefs so that they achieve their intended results. It could also serve as a theoretical touchstone for empirical studies of the influence of briefs in policy processes and inform decisions about whether to scale up their preparation. Using a systematic review of the literature, this article aims to fill these conceptual gaps and to offer a theoretical framework that identifies important context- and issue-related variables and explains how they are likely to influence policymakers’ and stakeholders’ views of briefs.

Methods

We considered several approaches to systematically reviewing the heterogeneous literature that can help inform questions related to policymaking (Dixon-Woods et al. 2005; Mays, Pope, and Popay 2005; Petticrew and Roberts 2006). Because of the lack of available literature specifically on evidence briefs, we knew that our primary objective could not be a synthesis of what is currently known about whether, how, and why they work as a mechanism for knowledge translation. This in turn prompted us to adopt the critical interpretive synthesis (CIS) approach to qualitative systematic review, as it is ideally suited to deal with a heterogeneous body of literature that is not amenable to the application of traditional systematic review methods (Dixon-Woods et al. 2005, 2006; Greenhalgh et al. 2005; Noblit and Hare 1988; Pawson et al. 2005). The core objective of the CIS approach is the development of a theoretical framework based on insights and interpretation drawn from relevant sources, not just those that meet particular design or quality criteria. This is a strength of the method that is useful when the question addressed is likely to draw on literature that is not particularly well developed or focused, as is the case with much of the literature on mechanisms to support the use of research evidence in policymaking, especially evidence briefs (Boyko 2010; Dixon-Woods et al. 2006; Lavis et al. 2009d; Mitton et al. 2007).

In designing the review, we used a two-pronged approach that would complement a systematic literature review with purposive sampling and inductive analysis. First, we employed a very explicit and structured approach—not unlike traditional systematic review methodology—to search the indexed literature electronically. Second, we borrowed the inductive methods often associated with qualitative research designs to ensure that our final sample of included papers was theoretically rich and relevant to the question posed. These methodological approaches were integrated (1) while compiling the keywords used in the search strategies, (2) while narrowing the search results to a manageable size, (3) while purposively sampling documents for inclusion in the analysis from the pool of retrieved and potentially relevant documents generated by electronic searches, (4) while analyzing them, and (5) while carrying out additional purposive sampling concurrently during the analysis to fill conceptual gaps in our initial sample of literature.

We adopted a “compass” question to underpin the design and conduct of the review (Boyko 2010; Dixon-Woods et al. 2006): “How do the contexts in which evidence briefs are prepared, and the issues that they address, influence policymakers’ and stakeholders’ views of their content and design features?” As the compass question suggests, the main purpose of the synthesis was to explain how context- and issue-related factors may influence their intended audience's views of evidence briefs when prepared in a particular context and for a particular issue. However, the precise meaning of these factors and what they refer to is often only vaguely described in the literature. For example, “historical, cultural, health services, system, and resource” factors, “political, ideological and economic factors,” as well as networks can influence the pathway of evidence in policy (Bowen and Zwi 2005). Moreover, these factors are seldom sorted into meaningful analytic categories. Accordingly, in addition to explaining the how, we also defined more precisely what context- and issue-related factors were of interest. The investigative team's training background (primarily in the fields of health services and policy research, health policy analysis, and political science) shaped this approach. In particular, we sought a clear framing of these factors by drawing on concepts related to political context—including a range of institutional (and historical), interest-group, and idea-related factors well established in political science literature—while remaining open to other factors that emerged as important contributions during the analysis. Finally, we note that consistent with an interpretive synthesis method, the strategy outlined next aimed for the relevance, rather than the comprehensiveness, of the included papers.

Electronic Searches

Using the compass question and relying on prior knowledge of the topic addressed by the review, we constructed a table of Boolean-linked keywords and their synonyms and then tested several search strategies. After adjusting some elements of these strategies, we searched the following electronic databases: CINAHL, EMBASE, HealthSTAR, IPSA, MEDLINE, OAIster (gray literature), ProQuest A&I Theses, ProQuest (Sociological Abstracts, Applied Social Sciences Index and Abstracts, Worldwide Political Science Abstracts, International Bibliography of Social Sciences, PAIS, Political Science), PsycINFO, Web of Science, and WilsonWeb (Social Science Abstracts). We wanted to use a similar search string for each database, based on the strings developed in the pilot testing, but found that each search interface required small adjustments to ensure that the formatting was optimized for the database functionality. We carried out our searches between October and November 2011 (although additional papers were purposively sampled in 2012 to fill conceptual gaps throughout the stages of analysis). The details of each search string we used are in a supplementary appendix that is available on request.
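To illustrate how a table of Boolean-linked keywords and synonyms can be turned into a search string, the following is a minimal Python sketch. The concept groups and terms are hypothetical placeholders, not the strings used in this review (those are in the supplementary appendix), and the generic syntax shown would still need adjusting for each database interface.

```python
# Hypothetical concept groups for illustration only; the review's actual
# keywords and synonyms are in its supplementary appendix.
CONCEPT_GROUPS = {
    "product": ["evidence brief", "policy brief", "research synthesis"],
    "audience": ["policymaker", "stakeholder", "decision maker"],
    "setting": ["health policy", "health system"],
}


def quote(term: str) -> str:
    """Wrap multiword terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(groups: dict[str, list[str]]) -> str:
    """OR the synonyms within each concept group, then AND the groups together."""
    clauses = [
        "(" + " OR ".join(quote(term) for term in terms) + ")"
        for terms in groups.values()
    ]
    return " AND ".join(clauses)


query = build_query(CONCEPT_GROUPS)
print(query)
```

Keeping the synonyms in one table and generating the string from it makes it easier to apply the same logic across databases while changing only interface-specific syntax.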

Article Selection

Excluding Irrelevant Articles

We created an explicit set of exclusion criteria in order to remove, on the basis of title and abstract, any retrieved articles that were obviously not relevant to the purpose of our study. Among those removed were papers that provided no insights into the political, economic, and social contexts in which policymaking takes place, the policymaking process and the factors that influence it, or the nature of the issues addressed in the policymaking process.

First, we excluded those articles focusing on patients, including papers on shared decision making, facilitation of patient decision making, patient choice, and education of patients about their care. Second, we excluded those with a clinical focus, such as papers on evidence-based medicine; clinical practice guidelines; clinical programs or interventions; implementation of clinical practice guidelines; influences on clinical decision making; epidemiology; burden of disease; ethical dimensions of treatment or health services programs; primary/single studies or cost-effectiveness analyses evaluating health care services; and frameworks for analyzing the content, implementation, uptake, and impacts of health care services. Third, we excluded papers on public health programs and services unless they pertained to the policymaking processes related to public health programs and services. The excluded papers included those detailing the strategies that could be used to address the social determinants of health and population-based health promotion or disease prevention strategies. Fourth and finally, we excluded papers that assessed the effectiveness of policy options or approaches to their monitoring and evaluation. These were papers on the effects of options, on the methodology for developing policy-relevant indicators, on performance measurement, and on using performance indicators to inform policy decisions (but if the papers discussed how these types of information could be translated to decision makers, we did consider them).

Purposive Sampling and Inclusion of Relevant Papers

All records remaining after the exclusion phase were deemed “potentially relevant,” and the principal investigator (Kaelan A. Moat) read each title and abstract in the pool of papers. Rather than applying a predefined list of inclusion criteria to identify an exhaustive inventory, we constructed a schema for selecting relevant papers from this pool through purposive sampling. Moat discussed this schema at length with another member of the study team (John N. Lavis), and we revised it during several more assessments of the titles and abstracts deemed “potentially relevant.” Once the method was established, we read titles, abstracts, and full-text papers in order to identify and purposively sample from the pool of “potentially relevant” papers those that were most relevant and likely to offer important conceptual insights that would help us answer our research question. The sampling schema helped ensure that this stage was as transparent as possible while acknowledging the necessity of interpretation during the process (a cornerstone of the CIS methodology). Two guidelines served as broad grounding principles in this approach: (1) a paper had to provide clear insights into the political, economic, and social contexts in which policymaking takes place, the policymaking process and the factors that influence it, or the nature of the issues addressed in the policymaking process; and (2) a paper had to contribute concepts that helped answer the compass question underpinning our study. As in a grounded theory approach (Glaser and Strauss 1967), additional stages of purposive sampling of papers other than those returned through electronic searches proceeded in tandem with data analysis in order to fill conceptual gaps and tie themes together as they emerged during the interpretive synthesis. The investigative team's training background and knowledge of relevant sources, in addition to input from colleagues working in the same field, helped us identify additional papers in these sampling stages and also in the final selection of papers.

Data Analysis and Synthesis

We read all the included papers in full and prepared a one- to two-paragraph summary of each. We also put into a standardized form the citations and data related to the year published, the disciplines from which the papers came, and the methods employed. Our data analysis proceeded in five stages. First, we noted recurring concepts that helped contribute to an understanding of how context- and issue-related factors might influence views about an evidence brief (e.g., descriptions of political contestation and division), and we used high-level categories of these concepts to group key points found in the summaries (e.g., “polarization”). Second, we developed “synthetic constructs” for each category by interpreting the underlying evidence found in the included papers (e.g., polarization as a representation of heterogeneous views of an issue). We used the constant comparative method throughout our analysis to ensure that the emerging synthetic constructs were grounded in the data. This consisted of comparing the emerging synthetic constructs and the data at various levels of abstraction (including the original summaries prepared for each paper and memos prepared during coding stages). We then critiqued the emerging synthetic constructs as a whole in light of the included literature to identify conceptual gaps in the available evidence in relation to the compass question. Third, we continued our purposive sampling of additional papers that were not retrieved in electronic searches (described earlier), in order to fill the gaps identified in the previous analytic stages. As in grounded theory analysis, this sampling continued in tandem with analysis until theoretical saturation was reached (Creswell 2007; Glaser and Strauss 1967). Fourth, we integrated the emerging synthetic constructs and themes to form a “synthesizing argument” as an interpretive theoretical model to explain how context and issues may influence how policymakers and stakeholders perceive the various design and content features of an evidence brief. Finally, we cross-validated the emerging synthesizing argument, as well as each synthetic construct, at various stages throughout the analysis. We did this through ongoing consultation with other members of the investigative team, through discussions of core concepts emerging in the study with other researchers engaged in work on health policymaking, and by searching for both confirmatory and disconfirmatory data in the sampled documents.

Results

Search Results and Article Selection

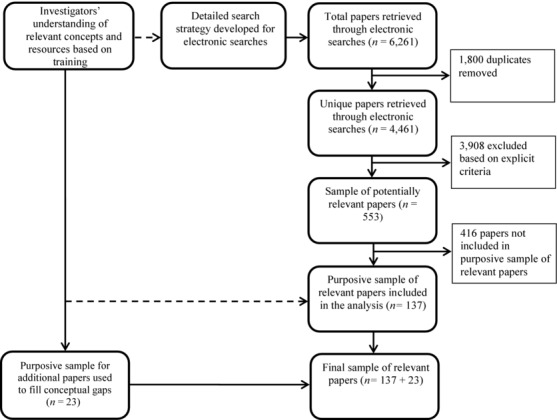

We retrieved 4,461 documents through electronic searches, from which we excluded 3,908 after reading the titles and abstracts. We deemed the remaining 553 “potentially relevant,” and we read each title, abstract, and, when necessary, the full text to determine the relevance of the sources and to construct our purposive sampling schema. We selected 137 documents according to our schema to include in our final analysis. After more stages of purposive sampling that proceeded in tandem with data analysis, we added twenty-three more documents (see figure 1). The majority (73%) of documents selected from electronic search results were published after 2004, whereas the majority of those included during additional stages of purposive sampling (which typically were “classic” papers in relevant fields) were published before 2000. Of the empirical studies, the most common designs were case descriptions (which we defined as describing a policy case or decision-making process without clearly employing a particular methodological approach) and single case studies. Table 1 provides an overview of the characteristics of included studies. The full list of included studies is available on request.
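The counts reported above can be checked with simple arithmetic. The short Python sketch below uses only figures stated in the text and the per-year counts in table 1; the variable names are ours and purely illustrative.

```python
# Counts reported in the text.
retrieved = 4461
excluded_on_title_abstract = 3908
selected_from_searches = 137
added_by_purposive_sampling = 23

potentially_relevant = retrieved - excluded_on_title_abstract
total_included = selected_from_searches + added_by_purposive_sampling

# Documents from electronic searches published 2005 through 2011,
# summed from the per-year "Searches" counts in table 1.
search_docs_after_2004 = 14 + 11 + 12 + 12 + 20 + 21 + 11
share_after_2004 = search_docs_after_2004 / selected_from_searches

print(potentially_relevant)       # 553
print(total_included)             # 160
print(f"{share_after_2004:.1%}")  # 73.7%
```

The share of search-derived documents published after 2004 works out to roughly 73.7%, in line with the 73% reported above, and the total of 160 included documents is the denominator for the overall percentages in table 1.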

FIGURE 1.

QUOROM flow chart of the inclusion/exclusion process.

TABLE 1.

Characteristics of Included Studies Retrieved in Searches and with Additional Purposive Sampling

| Characteristic | Category | Searches (number) | Purposive (number) | Percentage of Total<sup>a</sup> |
|---|---|---|---|---|
| Year published | Before 2000 | 12 | 17 | 18.1 |
| | 2000–2004 | 24 | 3 | 16.9 |
| | 2005 | 14 | 0 | 8.8 |
| | 2006 | 11 | 1 | 7.5 |
| | 2007 | 12 | 0 | 7.5 |
| | 2008 | 12 | 0 | 7.5 |
| | 2009 | 20 | 0 | 12.5 |
| | 2010 | 21 | 1 | 13.8 |
| | 2011 | 11 | 0 | 6.9 |
| | 2012 | 0 | 1 | 0.6 |
| Discipline | Health policy; health services and policy research/health economics | 104 | 4 | 67.5 |
| | Population health policy research | 13 | 0 | 8.1 |
| | Social policy/public administration/political science | 16 | 19 | 21.9 |
| | International development | 4 | 0 | 2.5 |
| Empirical versus conceptual | Empirical studies | 98 | 7<sup>b</sup> | 65.6 |
| | Conceptual papers and opinion pieces | 39 | 16<sup>b</sup> | 34.4 |
| Study designs (empirical papers) | Case description | 42 | 0 | 40.0 |
| | Case study (single) | 17 | 1 | 17.1 |
| | Key informant interviews and/or focus groups | 9 | 0 | 8.6 |
| | Mixed/multiple methods | 9 | 0 | 8.6 |
| | Survey | 7 | 0 | 6.7 |
| | Case study (multiple) | 6 | 6 | 11.4 |
| | Systematic review | 3 | 0 | 2.9 |
| | Narrative review | 3 | 0 | 2.9 |
| | Document analysis | 2 | 0 | 2.9 |

Notes:

<sup>a</sup> Total percentages may be over 100 due to rounding.

<sup>b</sup> Distinguishing between conceptual and empirical was less meaningful for the papers that were purposively sampled to fill conceptual gaps, as all of them were sampled because it was known by members of the research team that they had important theoretical concepts to offer. Papers sampled purposively and coded above as “empirical” are those that rely primarily on a clearly defined policy case or cases to develop, illustrate, and support their theoretical arguments.

Policy-Relevant Research Evidence versus Policy-Relevant Evidence

Our analysis soon showed that in the policymaking process, research evidence is difficult to separate from other types of information that may be considered “evidence” by policymakers and stakeholders. This tension has been highlighted in other studies and has led scholars to recommend integrating research evidence and other policy-relevant evidence in a way that does not prioritize one over the other but offers them as complementary inputs into the policymaking process (Contandriopoulos et al. 2010; Dobrow et al. 2006; Dobrow, Goel, and Upshur 2004; Mays, Pope, and Popay 2005; Pope, Mays, and Popay 2006a). To address this challenge, we adopted the more holistic term “policy-relevant information,” so that both the information derived from research evidence (e.g., systematic reviews) and the information derived from other sources that might be conceived of as “evidence” in the context of policymaking could be considered together. This fits well with the purpose of evidence briefs as we defined them in our study, which are meant to combine various types of research evidence (mainly from systematic reviews) and other types of evidence (such as local health system indicators) in a way that is relevant to the policy process. Overall, this approach allowed us to focus on interpreting the themes that emerged from the included papers in a way that helped address our primary objective (to determine how the context in which an evidence brief is prepared, and the issues that it addresses, may influence policymakers’ and stakeholders’ views of its content and design features) rather than debating what constitutes “evidence” in the context of policymaking. The types and sources of research evidence (systematic reviews in particular) that can be consulted to provide a range of policy-relevant information for those preparing evidence briefs have been described elsewhere (Lavis 2009; Lavis et al. 2009a, 2009c, 2009e), so they will not be discussed here.

Conceptualizing What Is Meant by “Context” and “Issues”

Context is often thought to be essential to determining what types of information policymakers and stakeholders consider relevant to policy (Dobrow et al. 2006; Dobrow, Goel, and Upshur 2004). Context can also dictate the “realm of the possible” when developing knowledge translation strategies to inform policymaking (Contandriopoulos et al. 2010). The specific characteristics of policy issues also are important to determining the ways in which stakeholders and policymakers view research evidence as an input in the policy process. Different issues, for example, can result in very different reactions by the public and those involved in the policymaking process and, as a result, may either lead to or halt any related political activity (Contandriopoulos et al. 2010; Mebane and Blendon 2001). Taken together, both the context in which a brief is produced and the issues that it addresses have implications for the types of policy-relevant information (content) that will be viewed as useful, along with the preferred presentation of this content (formatting). They can also influence policymakers’ and stakeholders’ views of a particular evidence brief as a whole and its usefulness as an input in the policymaking process.

As we explained earlier, the term “context” and its associated variables are often only vaguely defined in the literature focused on the role of evidence in the policy process (Bowen and Zwi 2005). Our analysis found that a traditional political science framework provides three very useful categories of contextual variables (often referred to as the “3i's”) that can be found in a given political setting and can influence the policymaking process: institutions, interests, and ideas (Lavis et al. 2002, 2012; Weatherford and Mayhew 1995). Institutions include factors such as government structures (e.g., whether policy is made in a unitary or a federal state) and the legacies of past policies that may shape the policy process by creating incentives and giving some political actors access to more or fewer resources than others, and by creating policy networks that can determine who has access to the policy process (Arnold 1990; Pierson 1993). The interests category captures the characteristics of political actors (e.g., traits of interest groups, civil society, and legislators), whether they win or lose as a result of a given policy, and by how much (Coleman and Skogstad 1990; Olson 1965). Ideas include the societal values that characterize a particular policy arena, and the knowledge that actors in that arena have (e.g., values about what ought to be or beliefs about what is) (Hall 1993). The framework also considers what are often referred to as “external events,” such as economic downturns or the outbreak of a disease pandemic. We adopted these concepts as they emerged as the most useful in shaping our ongoing analysis, and they were essential to identifying contextual factors that could influence the intended users’ views of an evidence brief.

In contrast to contextual factors, the characteristics of issues relate to a separate set of factors tied to how a given issue can shape the policy arena, depending on whether it is polarizing, salient, or familiar (Carden 2009; Contandriopoulos et al. 2010). First, certain issues can be inherently polarizing; that is, they cause fragmentation in the positions held by various interests represented in the policy process (Contandriopoulos et al. 2010). This can pit actors against one another in policy debates and divide the public. Specifically, low issue polarization refers to situations in which actors engaged in the policy process have similar preferences and ideas about the way the problem underlying the issue has been framed, about the priorities that need to be addressed, and about the criteria against which potential solutions should be assessed (Contandriopoulos et al. 2010). In contrast, as the consensus on these key components diminishes, issue polarization increases (Contandriopoulos et al. 2010).

Second, issue salience can help determine how various policy actors perceive the importance of an issue. High-salience issues are those perceived to be top priorities in the policy arena by those involved with, or likely to be affected by, a decision about the issue, including members of the public (Contandriopoulos et al. 2010; Mebane and Blendon 2001; Steele et al. 2008). They are more likely to engage large numbers of interested stakeholders and to be covered more extensively by the media (Mebane and Blendon 2001; Steele et al. 2008). Issues are more likely to be of high salience if they affect many people or if they threaten the status quo (Ssengooba et al. 2011).

In contrast, low-salience issues in the policy arena receive little public attention and no media attention, involve fewer high-profile policy actors, and are often seen by the actors in the policy arena as the “nitty gritty,” less urgent, and lower-priority issues (Mebane and Blendon 2001). Finally, whether the policymakers and stakeholders are familiar with policy issues matters, as some issues can gain prominence on the agenda while others fall off (Downs 1972; Kingdon 2003). As this process of agenda setting proceeds, some issues may recur because they are common to the government's policy agenda, making them more familiar to the policymakers and stakeholders engaged in the policy process, when compared with other issues. This level of familiarity can also influence the type of information desired by the policymakers and stakeholders engaged in the policy process (Carden 2009).

Because both these approaches provided fruitful avenues for exploring and, ultimately, determining how context and issues influence how policymakers and stakeholders view an evidence brief as an input in the policy process, we draw on them extensively in the remainder of this article.

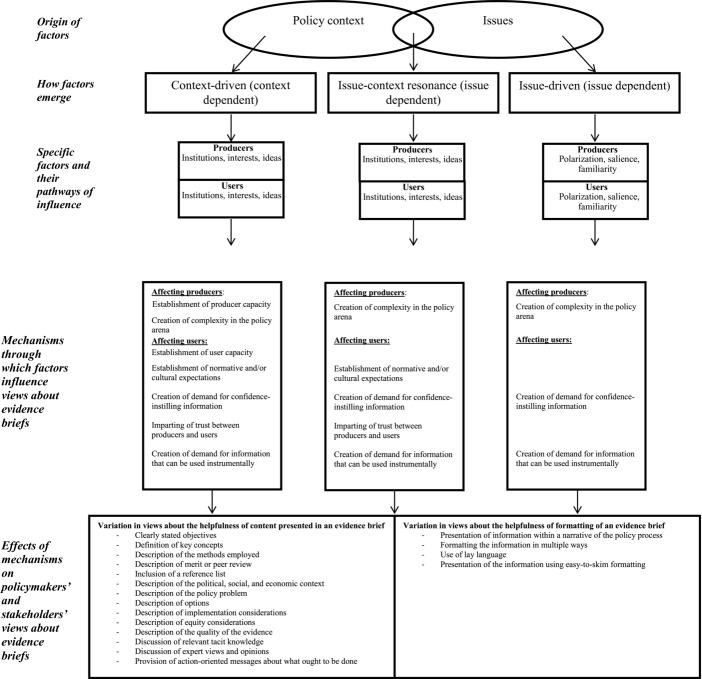

Understanding How Context and Issues Influence Views of Evidence Briefs for Policy

Figure 2 provides an overview of the concepts that emerged from our analysis and represents how contexts and issues may influence policymakers’ and stakeholders’ views of an evidence brief. Factors related to the contexts in which a particular brief is prepared and the issues that it addresses were found to emerge in three ways, as context-driven, issue-context resonance, or issue-driven factors. They also were found to influence both the producers and the users of a brief, to manifest as several types of specific factors (e.g., institutions, interests, and ideas for context-related factors, and polarization, salience, and familiarity for issue-related factors), and to influence views of an evidence brief through a number of mechanisms (e.g., by creating complexity for producers or capacity among users of a brief). These mechanisms may produce different views of evidence briefs among their intended users, particularly of the types of policy-relevant information they contain and the formats in which this information is presented.

FIGURE 2.

How issues and contexts create factors that influence views of evidence briefs.

One noteworthy theme that emerged during the early stages of the analysis is that much of the current work on synthesizing policy-relevant information to support health policy decision making is concerned with determining (often normatively) what information should be provided to policymakers and what formats should be used to present this information. This is captured at the bottom of figure 2 and is presented as two groups of features (i.e., content and formatting). First, the specific types of information, or content, that are important to making policy decisions (i.e., policy-relevant information) and should be included in a synthesis serving as an input in the policy process are regularly discussed. For example, clearly stated objectives, a description of the policy problem, and options to address the problem are content that should be included. Second, the formats in which this content should be presented to policymakers and stakeholders to maximize its usefulness are commonly suggested, such as using lay language and presenting content in ways that make it easy to skim. The items in these two thematic categories are relatively consistent with the characteristics commonly found across countries in evidence briefs (Lavis and Panisset 2010; Lavis et al. 2009d; Nabudere, Asiimwe, and Mijumbi 2010; Wilson and Lavis 2011). They are summarized in table 2. Interestingly, the most frequently discussed feature in the reviewed literature is the inclusion of a comprehensive and detailed description of the underlying policy problem related to the policy issue being addressed (Bero and Jadad 1997; Colby et al. 2008; Durrant 1995; Kapiriri, Norheim, and Heggenhougen 2003; Lavis 2009; Lavis et al. 2009f; Lomas 2005; Mays, Pope, and Popay 2005; Mercer et al. 2010; Milstien et al. 2010; Pope, Mays, and Popay 2006a; Williams et al. 2009; Williams and Bryan 2007), suggesting its relative importance to efforts to synthesize policy-relevant information to support policymakers and stakeholders.

TABLE 2.

Content and Formatting Features to Be Considered When Preparing Evidence Briefs for Policy

| | Features | Sources Discussing These Themes |
|---|---|---|
| Content | 1. Clearly stated objectives | Lomas 2005; Rosenbaum et al. 2011 |
| Content | 2. Explanation/definition of key concepts related to the content included in the evidence brief | Rosenbaum et al. 2011 |
| Content | 3. Description of the methods employed to prepare the evidence brief | Chambers 2011; Hanvoravongchai 2008; Kiefer et al. 2005; Lavis et al. 2006a, 2008a, 2008c; Mercer et al. 2010; Mindell et al. 2004; Oxman et al. 2009b; Pope, Mays, and Popay 2006a; Samet, McMichael, and Wilcox 2010; Tantivess, Teerawattananon, and Mills 2009; Yothasamut, Tantivess, and Teerawattananon 2009 |
| Content | 4. Description of whether the evidence brief has been subjected to peer/merit review | Department for Business Innovation and Skills 2010; Lavis et al. 2009d; Mindell et al. 2004 |
| Content | 5. Inclusion of a reference list | Rosenbaum et al. 2011 |
| Content | 6. Description of the political, social, and economic context in relation to the research evidence addressing various aspects of the policy issue | Cherney and Head 2010; Cummins and Macintyre 2002; Golden 2007; Gruen 2005; Hoffmann et al. 2002; Kapiriri, Norheim, and Heggenhougen 2003; Kothari and Armstrong 2011; Landry 2006; Lavis et al. 2009c; Lehoux et al. 2004; Sexton 1995; Williams and Bryan 2007 |
| Content | 7. Description of the policy problem related to the issue addressed by the evidence brief | Bero and Jadad 1997; Colby et al. 2008; Durrant 1995; Kapiriri, Norheim, and Heggenhougen 2003; Lavis 2009; Lavis et al. 2009f; Lomas 2005; Mays, Pope, and Popay 2005; Mercer et al. 2010; Milstien et al. 2010; Pope, Mays, and Popay 2006a; Williams et al. 2009; Williams and Bryan 2007 |
| Content | 8. Description of several viable options that can address the problem, along with what is known, based on research evidence about each option (including benefits/harms, costs/cost-effectiveness/technical feasibility/budget workability) | Achenbaum 1988; Ayuk and Ali Marouani 2007; Baum et al. 2007; Bellew, Bauman, and Brown 2010; Bero and Jadad 1997; Burris et al. 2011; Campbell et al. 2009; Cherney and Head 2010; Colby et al. 2008; Contandriopoulos et al. 2010; Department for Business Innovation and Skills 2010; Elliot and Popay 2000; Gold 2009; Gruen 2005; Hanvoravongchai 2008; Haynes et al. 2011; Kouri 2009; Lavis 2009; Lavis et al. 2005a, 2009e; Lomas 2005; Mays, Pope, and Popay 2005; McCaughey 2010; Mercer et al. 2010; Milstien et al. 2010; Pawson et al. 2005; Pope, Mays, and Popay 2006a; Williams et al. 2009 |
| Content | 9. Description of key implementation considerations related to the options | Chambers 2011; Dobrow, Goel, and Upshur 2004; Dobrow et al. 2006; Lavis 2009; McCaughey 2010; Oxman et al. 2009a, 2009b; Pawson et al. 2005; Ssengooba et al. 2011; Williams et al. 2009; Williams and Bryan 2007 |
| Content | 10. Description of equity considerations as well as the perceived positive or negative impact that options may have on various members of society | Adeoye and Bozic 2007; Baum et al. 2007; Hanvoravongchai 2008; Hoffmann et al. 2002; Hyder et al. 2011; Lavis et al. 2009e; Milstien et al. 2010; Rijkom et al. 2000 |
| Content | 11. Description of the quality of the research evidence related to the various aspects of the policy issue and highlighting any uncertainty associated with the research evidence | Ayuk and Ali Marouani 2007; Carter 2010; Chambers 2011; Colby et al. 2008; Court 2005; Daniels 2008; Department for Business Innovation and Skills 2010; Golden 2007; Greenfield et al. 2004; Hanney et al. 2003; Hoffmann and der Schulenburg 2000; Lavis et al. 2004, 2005a, 2006a, 2009e; Mays, Pope, and Popay 2005; Mindell et al. 2004; Pope, Mays, and Popay 2006a; Tantivess, Teerawattananon, and Mills 2009; Theobald and Nhlema-Simwaka 2008; Tulloch et al. 2011; Wirtz, Cribb, and Barber 2003; Yothasamut, Tantivess, and Teerawattananon 2009 |
| Content | 12. Discussion about stakeholders’ relevant tacit knowledge about the various aspects of the policy issue | Kothari and Armstrong 2011; Kouri 2009; Lomas 2005 |
| Content | 13. Discussion about the views held by those considered to be experts on the various aspects of the policy issue | Bellew, Bauman, and Brown 2010; Chambers 2011; Department for Business Innovation and Skills 2010; Kiefer et al. 2005; Linder 1994; Lomas 2005; Madden 2009; Milstien et al. 2010; Pilli-Sihvola et al. 2010; Rijkom et al. 2000; Ruane 2010; Smith 2007; Ssengooba et al. 2011; Williams et al. 2009; Wirtz, Cribb, and Barber 2003 |
| Content | 14. Provision of action-oriented messages about what course of action might be taken in light of the information presented in the evidence brief | Adeoye and Bozic 2007; Burris et al. 2011; Colby et al. 2008; Contandriopoulos and Brousselle 2010; Court 2005; Haynes et al. 2011; Lomas 2005; Mercer et al. 2010; Milstien et al. 2010; Rosenbaum et al. 2011; Williams and Bryan 2007; Wirtz, Cribb, and Barber 2003 |
| Formatting | 15. Presentation of information within a narrative of the policy process | Cherney and Head 2010; Golden 2007; Hoffmann and der Schulenburg 2000; Kapiriri, Norheim, and Heggenhougen 2003; Kothari 2002; Landry 2006; Lavis et al. 2006a; Lehoux et al. 2004; Sexton 1995; Williams and Bryan 2007 |
| Formatting | 16. Formatting the information in multiple ways (e.g., graded entry, electronic/hard copies) | Bero and Jadad 1997; Kiefer et al. 2005; Lavis et al. 2005a; Lomas 2005; Mercer et al. 2010; Pope, Mays, and Popay 2006a, 2006b; Rosenbaum et al. 2011 |
| Formatting | 17. Use of lay language | Adeoye and Bozic 2007; Bero and Jadad 1997; Colby et al. 2008; Court 2005; Daniels 2008; Hyder et al. 2011; Kiefer et al. 2005; Kouri 2009; Lavis et al. 2005a; McCaughey 2010; Mindell et al. 2004; Rosenbaum et al. 2011 |
| Formatting | 18. Use of easy-to-skim formatting, enabling quick identification of headings and key words | Colby et al. 2008; Hoffmann et al. 2002 |

As our analysis suggested and the corresponding model illustrates, despite the preoccupation in the literature with suggestions for content and formatting, such prescriptions are only one element of the larger picture. Often these features are presented without considering how the views of them may be influenced by the first three-quarters of figure 2 and are therefore promoted as a “one-size-fits-all” approach that will help support the use of evidence in health policymaking, regardless of the contexts in which they are prepared and the issues that they address. Determining how contexts and issues may influence views of these features served as the main driver of our study and constitutes the bulk of what is presented in figure 2. It is these aspects of the model that will ultimately determine views of the various features of an evidence brief, and thus they need to be considered before we can understand how the different content and formatting features discussed earlier will be perceived by the intended users of an evidence brief. Next we discuss how these factors emerge, what the specific factors are, and what their pathways of influence are, as well as the mechanisms by which these factors influence views of an evidence brief prepared in a particular context and focused on a particular policy issue.

How Factors Emerge from Context and Issues to Shape Views of Evidence Briefs

As noted in the previous section, the factors identified as influential in determining policymakers’ and stakeholders’ views of evidence briefs emerged in three different ways: (1) as context-driven factors, (2) as issue-driven factors, and (3) as issue-context resonance factors.

Context-driven factors are the relatively stable (in the short term) attributes of the policy context—in particular, the attributes of the existing institutions, interests, and ideas—that may influence how an evidence brief is viewed, independently of the issue's characteristics. For example, the prevailing values (which are one type of “ideas”) in a polity may help create a climate that supports the use of research evidence in general, and evidence briefs in particular, in policymaking, regardless of the nature of the policy issue considered (Lavis et al. 2006b). For this reason, we conceived context-driven factors as having context-dependent origins. That is, these factors are independent of the issue.

We conceived the second and third categories of factors as having issue-dependent origins, which are dynamic in that the characteristics of priority policy issues are responsible for defining the factors that are influential in determining policymakers’ and stakeholders’ views of an evidence brief. In other words, they are dependent on the nature of the emerging policy issues and cannot be determined without first considering the issue's specific attributes.

The first type of issue-dependent factor is what we will refer to as an “issue-driven factor.” These factors influence the policy process according to whether the issues are polarizing, whether they are salient, and how familiar they are to relevant policymakers, stakeholders, and the public (Contandriopoulos and Brousselle 2010; Contandriopoulos et al. 2010). For example, a controversial issue like abortion may polarize the public and the policy community, be highly salient when it does emerge as a priority, and, as a result, change these actors’ views of how useful an evidence brief about the issue is as an input in the policy process.

Finally, issue-context resonance factors emerge at the intersection between an issue's characteristics and its context. In particular, they refer to contextual factors (institutions, interests, and ideas) that become important considerations as a result of the characteristics of the policy issue addressed, which also makes them issue dependent. Framed in another way, these factors emerge as a result of contextual resonance with attributes of a policy issue. For example, given the constitutional rules about jurisdictional authority (an institutional feature), a policy issue may implicate two levels of government in the policy process, whereas the characteristics of another issue could mean that only one level of government (or no level of government) is involved in decisions about that issue. Similarly, an issue may imply the involvement of a broad array of interests. In contrast, other issues may relegate policy decision-making involvement to a few actors in closed policy networks, in which they are insulated from the rest of the policy arena and have greater access to (and influence over) the decision-making process.

Our analysis also identified two major pathways through which these factors might influence stakeholders’ and policymakers’ views of an evidence brief. First, factors may influence views about the content, formatting, and usefulness of an evidence brief by modifying the producers’ ability to craft documents that will be useful to those reading them. Second, factors may influence views of evidence briefs through their users. In particular, factors that emerge as a result of the context in which a brief is prepared and the issue that it addresses may define the types of content desired by those engaged in the policy process, or the formats that these actors are most likely to find useful. Next we use these concepts to organize our discussion of the various factors found to influence views of evidence briefs, as well as the specific mechanisms for such influence.

The Mechanisms through Which Factors Influence Views of Evidence Briefs

Table 3 summarizes the specific context-driven, issue-driven, and issue-context resonance factors that we found in our analysis as likely influences on views of an evidence brief. The specific mechanisms through which they can affect views of evidence briefs, to which we return later, are also mapped onto each set of factors. These factors influence both the producers and the users through seven distinct mechanisms, by (1) establishing producer capacity, (2) creating complexity in the policy arena, (3) establishing user capacity, (4) establishing normative and/or cultural expectations, (5) imparting trust between the producers and the users of evidence briefs, (6) creating a demand for information that promotes confidence in evidence briefs, and (7) creating a demand for information that can be used by policymakers and stakeholders in instrumental ways. Next, we discuss and provide illustrative examples from the results of our analysis of the types of mechanisms through which these context-driven, issue-driven, and issue-context resonance factors may influence views of an evidence brief.

TABLE 3.

List of Factors and Examples Found in the Synthesis, along with the Mechanisms through Which They Affect Policymakers’ and Stakeholders’ Views of Evidence Briefs

Context-Driven

| Pathway of Influence | Type of Factor | Example | Mechanisms of Influence |
|---|---|---|---|
| Producers | Institutions | • Formal/institutionalized interactions between producers and users of briefs (versus no formal/institutionalized interactions)<br>• High frequency of government turnover (versus low frequency of government turnover) | Establishment of producer capacity<br>Creation of complexity in the policy arena |
| Producers | Interests | • High levels of civil freedom (versus low levels of civil freedom)<br>• Producers of briefs have training/past experience in policymaking (versus no training/past experience in policymaking)<br>• Producers of briefs have training in communications (versus no training in communications)<br>• Producers of briefs are multidisciplinary (versus focused on a particular discipline) | Establishment of producer capacity<br>Creation of complexity in the policy arena |
| Users | Institutions | • Institutional/organizational incentives exist to promote the use of research evidence in policy processes (versus institutional/organizational incentives don't exist)<br>• Universal publicly financed health system (versus fragmented sources of health system financing)<br>• Institutionalized research units in government (versus no institutionalized research units in government)<br>• Formal/institutionalized interactions between producers and users of briefs (versus no formal/institutionalized interactions)<br>• Interactions facilitated by actors who are intermediaries (versus not facilitated by intermediaries)<br>• High frequency of government turnover (versus low frequency of government turnover)<sup>a</sup><br>• Bureaucracy characterized by specialists (versus generalists) | Establishment of normative and/or cultural expectations<br>Imparting of trust between producers and users<br>Establishment of user capacity<br>Creation of demand for confidence-instilling information |
| Users | Interests | • Producers of briefs perceived as credible sources of policy-relevant information (versus not perceived as credible sources)<br>• Producers of briefs perceived as biased interest (versus perceived as unbiased intermediaries)<br>• High levels of civil freedom (versus low levels of civil freedom)<br>• Users of briefs have past training/experience as a researcher (versus no past training/experience as a researcher)<br>• Users of briefs have roles focused on policy making at the subnational or local level of the health system (versus focused on the national level of the health system) | Imparting of trust between producers and users<br>Creation of demand for confidence-instilling information<br>Establishment of normative and/or cultural expectations<br>Establishment of user capacity<br>Creation of demand for information that can be used instrumentally |
| Users | Ideas | • Cultural values place emphasis on use of research evidence as an input into policymaking (versus no emphasis on use of research evidence)<br>• Cultural values place emphasis on equality and social collectivism (versus no emphasis on equality and social collectivism) | Establishment of normative and/or cultural expectations |

Issue-Driven

| Pathway of Influence | Category of Factor | Example of Factor | Mechanisms of Influence |
|---|---|---|---|
| Producers | Polarization | • Issue is highly polarizing (versus not polarizing) | Creation of complexity in the policy arena |
| Producers | Salience | • Issue is highly salient (versus not salient) | Creation of complexity in the policy arena |
| Producers | Familiarity | • Issue is new (versus familiar) | Creation of complexity in the policy arena |
| Users | Polarization | • Issue is highly polarizing (versus not polarizing) | Creation of demand for confidence-instilling information<br>Creation of demand for information that can be used instrumentally |
| Users | Salience | • Issue is highly salient (versus not salient) | Creation of demand for confidence-instilling information<br>Creation of demand for information that can be used instrumentally |
| Users | Familiarity | • Issue is new (versus familiar)<sup>b</sup> | Creation of demand for information that can be used instrumentally |

Issue-Context Resonance

| Pathway of Influence | Category of Factor | Example of Factor | Mechanisms of Influence |
|---|---|---|---|
| Producers | Institutions | • Issue implies diffuse decision-making authority (versus concentrated decision-making authority)<br>• Issue implies involvement of many levels of government (versus few levels of government)<br>• Issue implies involvement of a broad array of actors who compete to have their positions considered by decision makers (versus few actors with exclusive access to and influence over decision makers) | Creation of complexity in the policy arena |
| Producers | Interests | • Issue motivates many interests to mobilize (versus few interests to mobilize) | Creation of complexity in the policy arena |
| Producers | Ideas | • High levels of uncertainty in research evidence related to the issue (versus low uncertainty) | Creation of complexity in the policy arena |
| Users | Institutions | • Issue implies involvement of many levels of government (versus few levels of government)<br>• Issue implies involvement of a broad array of actors who compete to have their positions considered by decision makers (versus few actors with exclusive access to and influence over decision makers)<sup>c</sup> | Creation of demand for confidence-instilling information<br>Imparting of trust between producers and users<br>Creation of demand for information that can be used instrumentally |
| Users | Interests | • Issue motivates many interests to mobilize (versus few interests to mobilize) | Creation of demand for information that can be used instrumentally<br>Creation of demand for confidence-instilling information |
| Users | Ideas | • High levels of uncertainty in research evidence related to the issue (versus low uncertainty) | Creation of demand for information that can be used instrumentally |

Notes:

<sup>a</sup> In this instance, the low frequency of government turnover (rather than high frequency) is associated with the mechanism that emerged in the analysis. In particular, a lower frequency of government turnover could serve to establish (and maintain) user capacity as well as create an atmosphere more amenable to the development of trusted relationships between users and producers.

<sup>b</sup> Our analysis found that new issues would create a demand for information that can be used instrumentally and, in particular, information that can be used to understand the problem underlying the policy issue. However, it is conceivable that familiar issues would also create a demand for information that could be used instrumentally to help find new solutions to familiar problems (which would make this factor work in the opposite direction with respect to this mechanism as well). Our analysis, however, did not find the latter to be the case.

<sup>c</sup> In this instance, the relationship between the type of network that the issue implies would be involved in the policy process and the influence that this has on the users of briefs is more nuanced than when considered as an influence on producers of briefs. In particular, a network characterized by a broad array of actors that compete to have their positions considered by decision makers could create demand for confidence-instilling information and for information that can be used instrumentally, whereas an exclusive network may impart trust (depending on the dynamics within the network).

Hypothesized Mechanisms: Producer Influences

Context-driven, issue-driven, and issue-context resonance factors all were found to influence the producers in ways that affect users’ views of evidence briefs because they either affect the capacity of those preparing them to produce useful documents or they increase the complexity of the policy arena in ways that would make it more difficult to prepare a useful evidence brief.

The first mechanism—the establishment of producer capacity—results from factors that influence producers by either reducing or enhancing their ability to prepare a brief in a way that will be useful for their intended audiences. For example, institutionalized interactions between producers and potential users (a context-driven institutional factor), or past experience as a policymaker (a context-driven interest factor), can help promote a better understanding of the policy process among those preparing the brief and, as a result, will increase their capacity to assess and appropriately respond to the information needs of those engaged in the policy process (Ayuk and Ali Marouani 2007; Buse 2008; Colby et al. 2008; Daniels 2008; Gold 2009; Golden 2007; Haynes et al. 2011; Hyder et al. 2011; Kuruvilla, Mays, and Walt 2007; Landry 2006; Lehoux et al. 2005; McCaughey 2010; Mebane and Blendon 2001; Mercer et al. 2010; Ssengooba et al. 2011). Presumably, the resulting effect of this mechanism would be to improve policymakers’ and stakeholders’ views of the usefulness of the evidence brief as a whole.

The second mechanism that can influence views of a brief through the producer pathway is the creation of complexity in the policy arena by a contextual or issue factor. This mechanism emerges as a result of factors that influence producers by creating a more (or less) heterogeneous policy arena, or a more (or less) complex institutional framework, in which the likelihood of preparing a useful evidence brief that meets diverse needs and targets the information and formatting appropriately is reduced (or enhanced). For example, context-driven institutional factors, such as high rates of turnover in government, may continually change the target audience for evidence briefs (Carden 2009; Lehoux et al. 2005). This increasing complexity of the policy arena makes it more difficult for those preparing a brief to tailor it to the preferences of their intended audience. Similar complexity may result from the emergence of issue-driven factors such as high polarization and of issue-context resonance factors such as the need to consider policy actors at multiple levels of government for a given issue—both of which may complicate the policy arena by making it more heterogeneous and thus more difficult to prepare evidence briefs that meet the demands of all potential users (Carden 2009; Contandriopoulos et al. 2010; Eddama and Coast 2009; Gold 2009; Kapiriri, Norheim, and Heggenhougen 2003; Wright, Parry, and Mathers 2007).

One interesting aspect of these mechanisms is that they seem to be able to influence views of a brief overall, rather than views of particular content and formatting features. This contrasts with the more nuanced effects that influence views through the demand side, to which we now turn.

Hypothesized Mechanisms: User Influences

Our analysis suggested that context-driven, issue-driven, and issue-context resonance factors can also affect the target audiences or users in ways that will influence their views of an evidence brief, and they do so through the following mechanisms: (1) the establishment of user capacity to engage with policy-relevant information, (2) the establishment of normative rules and/or cultural expectations that influence the types of policy-relevant information demanded by users, (3) the creation of a demand for confidence-instilling information, (4) the imparting of trust between the users and the producers of an evidence brief, and (5) the creation of a demand for information that can be used instrumentally, based on an identified practical need for a particular type of policy-relevant information.

The first identified mechanism is through the establishment of user capacity, which results from factors that influence users by either reducing or enhancing the ability of those reading briefs to utilize the policy-relevant information presented to them. For example, past experience/training in research can ensure that those reading an evidence brief are comfortable reading and digesting policy-relevant information that includes research evidence (a context-driven interest factor) (Anderson, Cosby, and Swan 1999; Campbell et al. 2009; Carden 2009; Cherney and Head 2010; Crosswaite and Curtice 1994; Daniels 2008; Gold 2009; Golden 2007; Hoffmann and der Schulenburg 2000; Hoffmann et al. 2002; Kiefer et al. 2005; Mosquera, Gomez, and Mendez 2005; Niedzwiedzka 2003; Sheldon 2005; Williams and Bryan 2007; Yothasamut, Tantivess, and Teerawattananon 2009). The resulting influence might be that as a result of their capacity to understand it, those reading a brief view the research evidence as particularly helpful.

The second important mechanism through which factors may affect users’ views of briefs is establishing normative rules or cultural expectations that influence the types of information that are expected inputs in the policymaking process. For example, the promotion of an evidence-based approach to policy development by powerful interests engaged in a particular issue may create expectations by all actors that evidence briefs should be consulted (an issue-context resonance interest factor) (Burris et al. 2011; Gold 2009; Shiffman and Smith 2007). The creation of a demand for information that instills confidence is the third mechanism through which factors may influence views of evidence briefs, and it emerges as the result of factors that influence users by increasing or decreasing the perceived need for information that helps establish confidence in the validity, rigor, and trustworthiness of an evidence brief. For example, when issues are polarizing and thus more likely to create situations in which evidence briefs are used to support an argument, users want reassurance that the evidence and messages provided in the brief have little chance of being discredited or found questionable as grounds for a particular position in a debate (an issue-driven polarization factor) (Bekker et al. 2010; Contandriopoulos et al. 2010; Cummins and Macintyre 2002).

The fourth mechanism that can influence users’ views of evidence briefs results from factors that increase trust between the producers and the users of evidence briefs, minimizing the users’ demand for information that justifies confidence in the validity, rigor, and trustworthiness of an evidence brief while increasing the likelihood that explicit action-oriented messages are viewed as helpful. For example, when interactions are ongoing and institutionalized, trust can develop between those preparing and those reading briefs, obviating the need for information that promotes confidence in the source and increasing the expectation that clear, action-oriented decision support is provided (a context-driven institutional factor) (Ayuk and Ali Marouani 2007; Bekker et al. 2010; Bellew, Bauman, and Brown 2010; Bero and Jadad 1997; Best et al. 2009; Cherney and Head 2010; Colby et al. 2008; Crosswaite and Curtice 1994; Durrant 1995; Elliot and Popay 2000; Franklin et al. 2004; Hanney et al. 2003; Hyder et al. 2011; Kapiriri, Norheim, and Heggenhougen 2003; Kennedy et al. 2010; Kiefer et al. 2005; Kouri 2009; Landry 2006; Lavis et al. 2005a, 2008b; Logar 2011; Lomas 2005; Madden 2009; McCaughey 2010; McGregor and Brophy 2005; Mubyazi and Gonzalez-Block 2005; Oxman et al. 2009a; Pope, Mays, and Popay 2006a; Teerawattananon 2009; Theobald and Nhlema-Simwaka 2008; Tran et al. 2009). Although related, this mechanism differs from the third mechanism (the creation of a demand for confidence-instilling information), in that it is linked to trust in the producers of briefs themselves, which then spurs (or depresses) the demand for specific content such as recommendations (when producers are trusted) or information about the methods used (when producers are not trusted as much). In contrast, the need for confidence-instilling information is more directly linked to the ways in which factors in the policy arena shape the demand for content, regardless of the level of trust (e.g., the need to have confidence in the information if engaged in debate).

The fifth and final mechanism that affects users is the creation of a demand for information that can be used instrumentally. This mechanism is the result of the emergence of factors that stimulate the need for information that serves a pragmatic or instrumental purpose in light of the specific characteristics of a given policy process. For example, unfamiliar issues create a demand for information that helps those reading a brief understand the problem underlying the issue (an issue-driven familiarity factor) (Campbell et al. 2009; Carden 2009; Downs 1972; Hanney et al. 2003; Kingdon 2003; Pilli-Sihvola et al. 2010; Sexton 1995). As a result, views about content that highlights the various aspects of the policy problem addressed by the evidence brief are likely to be more favorable.

Discussion

This study and the theoretical insights summarized in figure 2 are an important first step in understanding how factors related to the political context and the characteristics of policy issues may influence the way the intended audience views a particular strategy for supporting the use of research evidence in policymaking (in this case, evidence briefs) as an input in the policy process. These factors were found to emerge in different ways: as context-dependent, as issue-dependent, and as a result of issue-context resonance. They also were found to work through various mechanisms that influence both the producers of evidence briefs (by establishing producer capacity and creating complexity in the policy arena) and the users (by establishing user capacity and normative/cultural expectations, by creating demand for confidence-instilling information, by imparting trust between users and producers, and by creating demand for instrumentally useful information). As our analysis suggests, these factors, as well as the mechanisms through which they work, can affect policymakers’ and stakeholders’ views of an evidence brief overall, as well as its particular content and formatting features.

Although we based the methodology used in this study on a relatively new approach to systematic review that required us to remain flexible, we believe that our adoption of a two-pronged strategy, in which a structured and systematic electronic search was complemented by inductive, iterative, and purposive sampling, enabled us to be rigorous and transparent while overcoming some of the inherent difficulties in approaching a broad question that had only sparse and heterogeneous sources of literature from which to draw. Our strategy also ensured that we were able to answer the question with the available literature. Indeed, the fact that “case description” was the most frequently used study design in the included empirical papers that we retrieved using electronic searches suggests that some of the traditional methods of systematic review would have excluded some of the most important sources of insight for addressing this study's question. Furthermore, our purposive sampling of key sources that helped fill conceptual gaps and tie together the themes that emerged during the analysis was an additional strength of this approach. In particular, many of the sources retrieved electronically were recently published, whereas some of the more seminal political science papers that helped bridge conceptual gaps were published before 2000 and were not retrieved from the electronic searches. When the goal of a synthesis or review is interpretive rather than summative, this additional step is especially important, as it enables those undertaking the analysis to present a more logical and cohesive theoretical argument that has identified and overcome any obvious gaps.

Despite the merits of this approach, some challenges need to be highlighted. First, our electronic searches sought literature related to health and health care policy, rather than policy in general, as the uniqueness of health care issues and policymaking (e.g., when compared with pension reform) seemed to warrant attention. Nevertheless, the literature unrelated to health issues could have been useful as well (although our purposive sample did draw mainly from the policy analysis and political science literature that is not focused on health per se). Second, we had difficulty using the structured and explicit approaches to searching and article selection that are commonly associated with traditional systematic review methodology, mainly during the inclusion/exclusion phase. Specifically, our aim to prioritize the relevance of a paper over specific design or quality criteria for inclusion led to the need for extensive discussions by the research team about inclusions and exclusions. For example, while the relevance of a particular paper often seemed obvious to the principal investigator (Kaelan A. Moat), who selected the bulk of studies and did most of the purposive sampling from the initial pool of potentially relevant papers, what was relevant versus not relevant could be quite ambiguous and could vary depending on each investigator's own understanding of the study's purpose, as reflected in the compass question. The only way to overcome this was extensive discussion until we reached a shared understanding, which extended the process of article selection. Finally, the additional stages of purposive sampling, as well as the analysis and interpretation of results, were likely influenced and framed by the investigative team's training in health services and policy research, health policy analysis, and political science. The results may therefore speak mainly to those who also work in these disciplines, and the purposive sampling may have overlooked some relevant sources of literature from disciplines that were less familiar to the team. Accordingly, we may have overlooked some contextual and issue-related factors in this study, along with some mechanisms that affect users’ views of evidence briefs.

On the whole, the theoretical propositions developed here are just a first attempt to understand a very complex field of inquiry. Nevertheless, the results of our study carry several implications. First, they provide important insights for those supporting the use of research evidence, particularly the producers of evidence briefs. The results can be used in the preparation of briefs because they highlight important considerations that need to be acknowledged and incorporated when working in the complex process of policymaking. Second, the results may also enable potential users to consider how various factors shape their own views of evidence briefs and help them communicate their preferences for policy-relevant information to those preparing briefs as a way to optimize efforts to support the use of research evidence in policymaking. Third, the results serve as a point of departure for researchers undertaking empirical work on the ways in which contextual factors and the characteristics of policy issues affect the influence of, and views about, evidence briefs and other strategies for supporting the use of research evidence in policymaking processes. Finally, this study can be seen as an attempt to advance the theoretical and conceptual conversation regarding political context, as well as issue characteristics, among those who are studying or engaged in efforts to support the use of research evidence in health policymaking. At present, conceptual and empirical gaps in our understanding of these factors still exist, even though research evidence is only one potential input in complex policy processes.

References

- Achenbaum A. The Place of Researchers in Social Security Policy Making. Journal of Aging Studies. 1988;2(4):301–9.

- Adeoye S, Bozic K. Influence of Economic Evaluations on Public Health Policy. Current Opinion in Orthopaedics. 2007;18(1):28–32.

- Anderson M, Cosby J, Swan B. The Use of Research in Local Health Service Agencies. Social Science & Medicine. 1999;49(8):1007–19. doi: 10.1016/s0277-9536(99)00179-3.

- Arnold R. The Logic of Congressional Action. New Haven, CT: Yale University Press; 1990.

- Ayuk E, Ali Marouani M. Policy Paradox in Africa: Strengthening Links between Economic Research and Policymaking. Ottawa: International Development Research Centre/Africa World Press; 2007.

- Baum NM, Gollust SE, Goold SD, Jacobson PD. Looking Ahead: Addressing Ethical Challenges in Public Health Practice. Journal of Law, Medicine and Ethics. 2007;35(4):657–67. doi: 10.1111/j.1748-720X.2007.00188.x.

- Bekker M, Van Egmond S, Wehrens R, Putters K, Bal R. Linking Research and Policy in Dutch Healthcare: Infrastructure, Innovations and Impacts. Evidence and Policy. 2010;6(2):237–53.

- Bellew B, Bauman A, Brown W. Evidence-Based Policy and Practice of Physical Activity in Australia: Awareness and Attitudes of Attendees at a National Physical Activity Conference (the PAPPA Study). Health Promotion Journal of Australia: Official Journal of Australian Association of Health Promotion Professionals. 2010;21(3):222–28. doi: 10.1071/he10222.

- Bero L, Jadad A. How Consumers and Policymakers Can Use Systematic Reviews for Decision Making. Annals of Internal Medicine. 1997;127:37–42. doi: 10.7326/0003-4819-127-1-199707010-00007.

- Best A, Terpstra JL, Moor G, Riley B, Norman C, Glasgow RE. Building Knowledge Integration Systems for Evidence-Informed Decisions. Journal of Health Organization and Management. 2009;23(6):627–41. doi: 10.1108/14777260911001644.

- Bowen S, Zwi AB. Pathways to “Evidence-Informed” Policy and Practice: A Framework for Action. PLoS Medicine. 2005;2(7):600–605, e166. doi: 10.1371/journal.pmed.0020166.

- Boyko J. Deliberative Dialogues as a Mechanism for Knowledge Translation and Exchange. McMaster University; 2010. PhD diss.

- Burris H, Parkhurst J, Adu-Sarkodie Y, Mayaud P. Getting Research into Policy—Herpes Simplex Virus Type-2 (HSV-2) Treatment and HIV Infection: International Guidelines Formulation and the Case of Ghana. Health Research Policy and Systems. 2011;9(suppl. 1):S5. doi: 10.1186/1478-4505-9-S1-S5.

- Buse K. Addressing the Theoretical, Practical and Ethical Challenges Inherent in Prospective Health Policy Analysis. Health Policy and Planning. 2008;23(5):351–60. doi: 10.1093/heapol/czn026.

- Campbell DM, Redman S, Jorm L, Cooke M, Zwi AB, Rychetnik L. Increasing the Use of Evidence in Health Policy: Practice and Views of Policy Makers and Researchers. Australia and New Zealand Health Policy. 2009;6(21). doi: 10.1186/1743-8462-6-21.

- Carden F. Knowledge to Policy: Making the Most of Development Research. Ottawa: International Development Research Centre/Sage; 2009.

- Carter BJ. Evidence-Based Decision-Making: Practical Issues in the Appraisal of Evidence to Inform Policy and Practice. Australian Health Review. 2010;34:435–40. doi: 10.1071/AH09778.

- Chambers D. Maximizing the Impact of Systematic Reviews in Health Care Decision Making: A Systematic Scoping Review of Knowledge-Translation Resources. The Milbank Quarterly. 2011;89(1):131–56. doi: 10.1111/j.1468-0009.2011.00622.x.

- Cherney A, Head B. Evidence-Based Policy and Practice: Key Challenges for Improvement. Australian Journal of Social Issues. 2010;45(4):440–509.

- Colby DC, Quinn BC, Williams CH, Bilheimer LT, Goodell S. Research Glut and Information Famine: Making Research Evidence More Useful for Policymakers. Health Affairs. 2008;27(4):1177–82. doi: 10.1377/hlthaff.27.4.1177.

- Coleman WD, Skogstad GD. Policy Communities and Public Policy in Canada: A Structural Approach. Mississauga, ON: Copp Clark Pitman; 1990.

- Contandriopoulos D, Brousselle A. Reliable in Their Failure: An Analysis of Healthcare Reform Policies in Public Systems. Health Policy. 2010;95(2/3):144–52. doi: 10.1016/j.healthpol.2009.11.018.

- Contandriopoulos D, Lemire M, Denis J, Tremblay E. Knowledge Exchange Processes in Organizations and Policy Arenas: A Narrative Systematic Review of the Literature. The Milbank Quarterly. 2010;88(4):444–83. doi: 10.1111/j.1468-0009.2010.00608.x.

- Court J. Workshop to Promote Evidence-Based Policy Making in the Small and Medium Enterprise Sector in Egypt. Cairo. London: Overseas Development Institute; 2005. 27 Feb.–1 Mar. 2005.

- Creswell J. Qualitative Inquiry & Research Design: Choosing among Five Approaches. Thousand Oaks, CA: Sage; 2007.

- Crosswaite C, Curtice L. Disseminating Research Results—The Challenge of Bridging the Gap between Health Research and Health Action. Health Promotion International. 1994;9(4):289–96.

- Cummins S, Macintyre S. Food Deserts Evidence and Assumption in Health Policy Making. BMJ. 2002;325:436–38. doi: 10.1136/bmj.325.7361.436.

- Daniels K. Translating Research into Maternal Health Care Policy: A Qualitative Case Study of the Use of Evidence in Policies for the Treatment of Eclampsia and Pre-Eclampsia in South Africa. Health Research Policy and Systems. 2008;6(12). doi: 10.1186/1478-4505-6-12.

- Department for Business Innovation and Skills. Guidelines on Scientific Analysis in Policy Making. London: 2010.