Abstract

Survey researchers are making increasing use of paradata – such as keystrokes, clicks, and timestamps – to evaluate and improve survey instruments but also to understand respondents and how they answer surveys. Since the introduction of paradata, researchers have been asking whether and how respondents should be informed about the capture and use of their paradata while completing a survey. In a series of three vignette-based experiments, we examine alternative ways of informing respondents about capture of paradata and seeking consent for their use. In all three experiments, any mention of paradata lowers stated willingness to participate in the hypothetical surveys. Even the condition where respondents were asked to consent to the use of paradata at the end of an actual survey resulted in a significant proportion declining. Our research shows that requiring such explicit consent may reduce survey participation without adequately informing survey respondents about what paradata are and why they are being used.

Keywords: paradata, web surveys, informed consent

1 Introduction

The two most important ethical principles for survey researchers are protecting respondents from potential harm and assuring their autonomy in deciding whether or not to participate in the research (National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research, 1979).1 In practice, this means safeguarding the confidentiality of the data researchers collect and obtaining respondents’ informed consent. The latter requirement has nothing to do with protecting subjects from harm, and everything to do with assuring that they are treated as autonomous individuals with the right to make informed, voluntary decisions about participation.

This article is concerned with the ethical and practical questions arising from the growing use of paradata – the data collected by computerized systems during data collection – in surveys, especially those conducted online. The term “paradata” was coined by Couper (1998; see Couper and Lyberg 2005) to refer to the data automatically generated by computer-assisted interviewing systems, including keystroke files or audit trails. The early use of paradata focused on identifying problems with the survey instruments or evaluating interviewer performance. More recently, the term paradata has been expanded to include a broad range of auxiliary data on the survey process, most notably call record information (see Kreuter, Couper and Lyberg 2010). In this paper, “paradata” is used in its original sense of data automatically generated by computer-assisted interviewing systems.

The collection and use of paradata in Web surveys is widespread (e.g., Baker and Couper 2007; Callegaro 2010; Callegaro et al. 2009; Couper et al. 2006; Haraldsen, Kleven and Stålnacke 2006; Heerwegh 2003; McClain and Crawford 2011; Stern 2008; Stieger and Reips 2010; Yan and Tourangeau 2008). Most of these studies focus on improving the quality of research procedures, particularly the questionnaire. Increasingly, however, paradata are being used to enhance other information provided by respondents, a shift from purely methodological research to more substantive research. There is no consensus on whether, or under what conditions, respondents should be informed that paradata are being collected and may be used. Arguably, they ought to be informed if researchers plan to use such data in conjunction with other information provided by respondents in order to make inferences about individuals. In other words, as the paradata (information about the process) are turned into data (information about respondents), informed consent issues may arise. If this is the case, we need to find ways to inform respondents about the collection and use of such paradata without impeding the research.

This is not a paper on whether or not informed consent should be obtained for paradata use. Rather, it is a paper exploring the effects on survey participation of asking for such consent.

2 Background

Web Survey Paradata

There are several different types of paradata that can be collected in Web surveys, and different ways of collecting them. For example, every time a browser connects to a Website (such as the home page of a survey), it transmits a user agent string. This information can be used to identify the device connected to the Internet, the operating system being used, the type of browser, the screen and browser resolution, and whether the user has JavaScript, Flash, cookies, etc., enabled (Callegaro 2012). Such information is typically used to deliver an optimal browsing experience, or to redirect people to a customized Website, e.g., for those using smartphones. But each such connection also reveals the IP address associated with the device, which can be used to identify individual machines. The ESOMAR Guideline for Online Research notes that there is no international consensus about the status of IP addresses, as they “can often identify a unique computer or other device, but may or may not identify a unique user” (ESOMAR 2011:8). The Guideline also notes that “in general, the user is unable to prevent the capture of the IP address from taking place”. While the user agent string tells us something about the browsers respondents use to access the Web survey, it tells us nothing about the users themselves or about their online behavior.

A second level of paradata – server-side paradata – captures the information received by the server when the user presses the “next” or “continue” button. This includes the information entered on that Web page and the time and date the page was submitted. Client-side paradata use active scripting (such as JavaScript) to capture user behavior while on a Web page (see Heerwegh 2011). These could include, for example, the elapsed time to each mouse click or key press, and the keystrokes, clicks, or mouse movements on the page.
Client-side paradata (CSP) for Web surveys was first developed by Heerwegh (see https://perswww.kuleuven.be/~u0034437/public/csp.htm), who cautioned: “Use CSP only for genuine (methodological) research needs. Do not use CSP simply to ‘spy’ on your respondents, and never use the information from CSP to replace the final answers given by the respondent on the web survey.”
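To make the distinction between raw client-side events and derived measures concrete, the following Python sketch turns a hypothetical event log into two measures discussed in this article: how long a respondent took before first answering a question, and how often the answer was changed. The `Event` record and its field names are illustrative assumptions, not the format of Heerwegh's CSP scripts or of any survey platform.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """One hypothetical client-side paradata record (field names are illustrative)."""
    question: str  # question identifier on the page
    t_ms: int      # milliseconds elapsed since the page was loaded
    value: str     # answer value selected or typed at that moment


def summarize(events: list[Event]) -> dict[str, dict[str, int]]:
    """Return, per question, the latency to the first answer and the number of answer changes."""
    summary: dict[str, dict[str, int]] = {}
    last_value: dict[str, str] = {}
    # Process events in time order so "first answer" and "change" are well defined.
    for ev in sorted(events, key=lambda e: e.t_ms):
        if ev.question not in summary:
            summary[ev.question] = {"first_answer_ms": ev.t_ms, "changes": 0}
        elif ev.value != last_value[ev.question]:
            summary[ev.question]["changes"] += 1
        last_value[ev.question] = ev.value
    return summary


log = [
    Event("q1", 4200, "yes"),
    Event("q1", 9100, "no"),   # the respondent revised the answer
    Event("q2", 12500, "3"),
]
print(summarize(log))
# {'q1': {'first_answer_ms': 4200, 'changes': 1}, 'q2': {'first_answer_ms': 12500, 'changes': 0}}
```

Repeated clicks on the same value are not counted as changes in this sketch; a real analysis would need its own rule for such cases.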

Paradata should be distinguished from other types of unobtrusive data captured online. Paradata are focused on the behavior of the respondent during completion of the survey only, and do not include any other browsing behavior. Further, paradata do not require the use of cookies or any other software to be downloaded to the user's computer.

Cookies (small text files stored on a user's computer) can be used for a variety of purposes, from keeping track of a respondent ID from one Web page to the next, to identifying previous respondents to prevent duplicates. Cookies also come in different forms. Transient cookies last only for the duration of a Web session, while persistent cookies reside on a user's machine and transmit information back to the Website when the user returns there at a later time. First-party cookies send information only to the site visited by the user, while third-party cookies send information to a third party such as an advertiser (see ESOMAR 2012). The use of cookies can range from the benign (e.g., remembering a user's preferences on a Website) to the more intrusive (e.g., delivering ads or special offers based on a user's browsing history). Cookies are thus not intrinsically harmful, and indeed are ubiquitous on the Web. But given the different ways cookies can be used, there is considerable concern about their use. For example, legislation is pending in the European Union that may allow a cookie to be placed or stored only with the user's consent, and only if the user has been provided with “clear and comprehensive information” about the cookie (see ESOMAR 2011).

Further along the continuum of unobtrusive data capture are a variety of active agent technologies or behavioral tracking data that capture a user's online activities. Keystroke loggers capture every keystroke entered by the user, and can be used (for example) to capture passwords and other private information. According to the Council of American Survey Research Organizations (CASRO 2011) code, “Active agent technology is defined as any software or hardware device that captures the behavioral data about data subjects in a background mode, typically running concurrently with other activities.” When this is done without the subject's knowledge, it is known as “spyware”, and the ESOMAR code makes clear that “the use of spyware by researchers is strictly prohibited” (ESOMAR 2011).

Existing ethical codes are not very clear on the issue of paradata. According to the ESOMAR code, privacy statements require “a clear statement of any processing and technologies related to the survey that is taking place. . . . Statements should say clearly what information is being captured and used during the interview (e.g. data collected for tracking purposes [or] to deliver a page optimized to suit the browser) and whether any of this information is being handled as part of the survey or administrative records” (ESOMAR 2011:5). The CASRO (2011) code notes that active agent technology “needs to be carefully managed by the research industry via the application of research best practices.” The CASRO code further notes that “the use of cookies is permitted if a description of the data collected and its use is fully disclosed in a Research Organizations’ privacy policy.” The CASRO code prohibits the use of spyware, and defines the use of keystroke loggers “without obtaining the data subject's affirmed consent” as an unacceptable practice. Although neither the ESOMAR nor the CASRO code2 speaks directly to the issue of paradata, both could be interpreted as covering it if regulations restricting active agent technologies or online behavioral tracking define these terms broadly.

On the one hand, paradata capture can be viewed as nothing more than collecting information about the process of completing a survey that is already covered by the informed consent statement for the survey itself. No behavior outside the survey is captured, whether during the process of completing the survey or afterwards, and no software is loaded onto a respondent's computer. Thus, it can be argued that no additional consent is needed, although the question of whether and how to inform respondents about the capture of paradata remains. On the other hand, it can be argued that respondents are not aware that such additional information is being collected, do not have a reasonable expectation of such capture and use, and, if they were aware of it, might change their behavior or decide not to participate in the survey. Under these circumstances, difficult questions arise about how best to provide information about the collection of paradata while at the same time maintaining respondent cooperation with the survey.

Informed Consent

The problem of obtaining consent for paradata use is not unlike that arising when consent for data linkage is required: the person may already have consented to the survey, but is now faced with a request for additional data. Surveys, especially longitudinal surveys, increasingly aim to link the answers provided in the survey itself to information stored in administrative records, for example Social Security or Medicare records, in order to expand the information available for a given respondent, improve accuracy, and reduce burden and cost. Typically, however, the agencies holding such records require evidence of respondents’ consent before providing the records to the survey organization (Bates 2005), and there is some evidence that such consent rates are declining (Bates 2005; Dahlhamer et al. 2007). Sakshaug and Kreuter (2012, Table 1) have documented the variation in consent rates among some 23 surveys, but, as Fulton notes, “there do not appear to be any widely accepted ‘best practices’ for soliciting permission to access respondent records” (2012, ch. 1, p. 16).

Table 1.

Mean Willingness to Participate and Percentage Willing to Participate, by Paradata Manipulations (Study I)

| Paradata description | Mean WTP (std. err.) | Percent willing |
|---|---|---|
| 1) No mention (n=1264) | 5.86 (0.089) | 65.3 |
| 2) Simple description (n=1267) | 5.09 (0.092) | 55.5 |
| 3) Explicit description (n=1383) | 5.32 (0.089) | 57.4 |
| 4) Simple description + link (n=1284) | 5.37 (0.090) | 60.1 |
| Overall (n=5198) | 5.40 (0.045) | 59.5 |

Nevertheless, we might speculate that the factors affecting willingness to participate in a survey in the first place would also affect willingness to consent to explicit auxiliary data requests, such as requests for consent to record linkage or requests to consent to the collection and use of paradata.

In thinking about why people participate, we make use of leverage-salience theory (Groves, Singer and Corning 2000), which takes into account the valence of specific factors (that is, whether they are seen as costs or benefits by a particular respondent), their leverage, or weight, for a particular respondent, and whether or not they are made salient at the time of decision.

Using open-ended questions to probe respondents’ reasons for being willing to participate in surveys described in vignettes administered to members of several Web panels, we found that the reasons given fell into several broad categories, which we described as altruistic (for example, “The research is important,” “I want to be helpful”); egoistic (for example, “I'd learn something,” “The money,” “I want my opinion heard”); and survey-related (“I like the topic,” “I trust the organization,” “the survey is short”). Reasons for not participating, on the other hand, fell into a number of general categories, such as not interested, too long, and too little time, as well as a large group of responses that were classified as privacy-related (e.g., “Don't like intrusions”; “don't like to give financial information”). A number of responses pertained to survey characteristics, such as the topic, the survey organization, or the mode, and a small number indicated that the survey did not offer enough benefits to make participation worthwhile (Singer 2011). It seemed reasonable to expect this broad set of reasons to influence decisions about allowing the collection and use of paradata as well, though their valence and leverage might well differ from those found in our earlier research. We return to this topic in the Discussion section.

This article reports on a series of three linked experiments bearing on the problem of how best to give respondents meaningful information about a topic that most of them have no experience with, and that is inherently difficult to comprehend, in a way that elicits their informed consent. None of the experiments provides a satisfactory answer to this question, but together they demonstrate that how the information is provided to respondents has substantial consequences for their cooperation with the study. We do not directly address whether the request for paradata collection and use should be a separate, explicit consent process; instead, we focus on how making such a request explicit may affect respondents’ willingness to participate in the survey and to consent to paradata use.

3 Study I

The first experiment was a very simple one: besides the description of paradata collection, which was our primary focus, we varied only the survey topic and the survey sponsor. This experiment was previously described in Singer and Couper (2011). On the basis of earlier research on the informed consent process (see Couper et al. 2008, 2010; Singer and Couper 2010), we expected the information given to respondents about the collection and use of paradata to interact with certain survey and personal characteristics in affecting their willingness to participate in the survey and to permit the use of the paradata collected.

Sample

As in our earlier experiments, we used hypothetical vignettes embedded in a survey administered to a web panel – for the first study, the Longitudinal Internet Studies for the Social Sciences (LISS) panel, administered by CentERdata at Tilburg University in the Netherlands.3 We added questions about privacy attitudes and concerns about confidentiality to the July 2008 LISS survey, and the hypothetical vignettes, together with questions about willingness to participate in the survey, to permit use of paradata, and reasons for willingness or lack of willingness, to the August 2008 survey. The response rate for the July survey was 67% and for August, 69%; a total of 5,198 respondents completed both questionnaires.

Experimental Design

Vignettes were constructed using one of four descriptions about the collection of paradata:

1) No mention of paradata

2) Simple description of what is collected: “In addition to your responses to the survey, we collect other data including keystrokes, time stamps, and characteristics of your browser. Like your answers themselves, this information is confidential.”

3) Explicit mention of what will be done with the paradata: “In addition to your responses to the survey, we collect other data including keystrokes, time stamps, and characteristics of your browser. Among other things, this makes it possible to see whether people change their answers, measure how long they take to answer, and keep the answers from questions they answered before they quit the survey. Like your answers themselves, this information is confidential.”

4) Simple description with a hyperlink to additional information: “In addition to your responses to the survey, we collect other data including keystrokes, time stamps, and characteristics of your browser. (Click here for more information.) Like your answers themselves, this information is confidential.” The hyperlink contained the additional information presented in version 3 above.

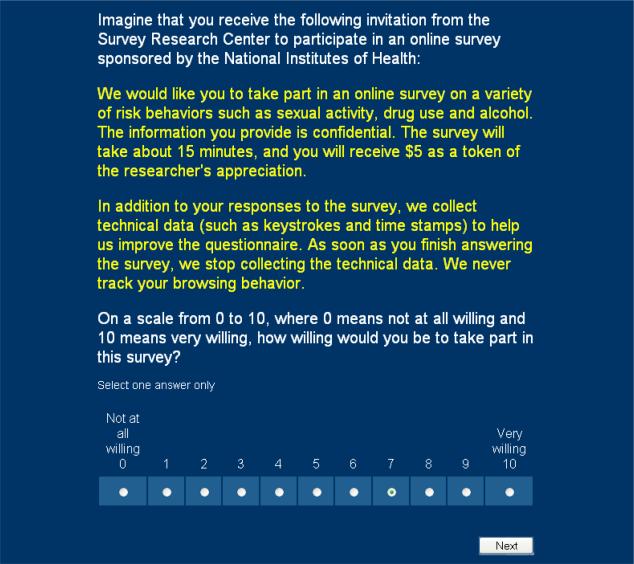

The paradata factor was crossed with two other factors: topic (risk behaviors, such as sexual behaviors and drug or alcohol use; and leisure activities, such as sports and other recreational activities) and sponsorship (a government health agency vs. a market research firm). Thus, we had a fully crossed 4 × 2 × 2 design with 16 cells, yielding an average of about 325 respondents per cell. A sample vignette (translated from the Dutch) is shown below:

Imagine that you receive the following e-mail invitation from a market research company to complete an online survey:

“We would like you to take part in an online survey on a variety of risk behaviors such as sexual activity, drug use and alcohol use. The information you provide is confidential. The survey will take about 15 minutes, and you will receive €5 as a token of the researcher's appreciation. In addition to your responses to the survey, we collect other data including characteristics of your browser, keystrokes, and time stamps. Like your answers themselves, this information is confidential.”

On a scale from 0 to 10, where 0 means not at all willing and 10 means very willing, how willing would you be to take part in this survey?

Following the vignette, respondents were asked how willing they would be to participate in the hypothetical survey, using a response scale ranging from 0 (not at all willing) to 10 (very willing). They were then asked an open-ended follow-up question about why they would or would not participate. Those in the conditions mentioning paradata (conditions 2, 3, and 4 of the paradata factor) who indicated willingness to participate (WTP) in the survey (6 or higher on the willingness scale) were also asked whether they would be willing to permit use of the paradata.
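The design and question routing just described can be sketched in Python as follows. The factor labels mirror the text; simple random assignment to the 16 cells and the function names are assumptions for illustration, not a description of how CentERdata implemented the study.

```python
import itertools
import random

# Factor levels as described in the text (paradata conditions coded 1-4).
PARADATA = [1, 2, 3, 4]  # 1 = no mention, 2 = simple, 3 = explicit, 4 = simple + link
TOPIC = ["risk behaviors", "leisure activities"]
SPONSOR = ["government health agency", "market research firm"]

# Fully crossed design: every combination of factor levels is one vignette cell.
CELLS = list(itertools.product(PARADATA, TOPIC, SPONSOR))


def assign_vignette(rng: random.Random) -> tuple[int, str, str]:
    """Assign a respondent to one cell by simple random assignment (an assumption)."""
    return rng.choice(CELLS)


def asks_paradata_consent(paradata_condition: int, wtp: int) -> bool:
    """Consent follow-up only in paradata conditions (2-4) and only if WTP is 6 or higher."""
    return paradata_condition != 1 and wtp >= 6


rng = random.Random(2008)
print(len(CELLS))                   # 16
print(round(5198 / len(CELLS), 1))  # 324.9, the average number of respondents per cell
print(assign_vignette(rng))         # one (paradata, topic, sponsor) combination
```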

Hypotheses

On the basis of cost-benefit considerations, we developed the following hypotheses:

1) We expected WTP in the survey to be highest in the control condition (1), and higher in the condition that gives only a simple description of paradata (2) than in the condition that also describes how paradata might be used (a potential cost) (3). Because of the degree of control offered by the hyperlink (a potential benefit), we also expected WTP in that condition (4) to be higher than in condition 3.

2) On the basis of our earlier research (Couper et al. 2008, 2010), we expected an interaction between the sensitivity of the survey topic and information about paradata collection on WTP. That is, we expected the effect of the paradata manipulations to be stronger for the more sensitive topic (risk behaviors) than for the less sensitive one (leisure activities).

3) We also expected an interaction between privacy and confidentiality concerns (measured in an earlier survey) and the paradata manipulation on WTP. That is, we expected the mention of paradata to have a stronger effect on WTP for those with heightened concerns about privacy and confidentiality.

The main effects of the topic and sponsorship manipulations are reported elsewhere (Singer and Couper 2011); our focus here is on the paradata manipulations.

Analysis and Results

The mean WTP in the survey and the percent willing (those scoring 6-10 on WTP) are presented in Table 1. Regressing WTP on the three experimental factors (using OLS regression), we found a significant (p<.0001) main effect of the paradata manipulation, controlling for topic and sponsorship. The overall model with all three experimental factors yielded an R² of just 0.023. Based on sums of squares from the model, the effect of the paradata manipulation is smaller than that of topic but larger than that of survey sponsor.
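The two summary measures in Table 1 can be computed as in this minimal Python sketch, assuming a list of 0 to 10 scores for one condition. The standard error here is the simple standard error of the mean; the values reported in the tables may have been derived differently, so the function is illustrative only.

```python
import math
import statistics


def wtp_summary(scores: list[int], threshold: int = 6) -> tuple[float, float, float]:
    """Return mean WTP, its standard error, and the percentage scoring at or above threshold."""
    mean = float(statistics.mean(scores))
    se = statistics.stdev(scores) / math.sqrt(len(scores))  # sample SD / sqrt(n)
    pct_willing = 100 * sum(s >= threshold for s in scores) / len(scores)
    return mean, se, pct_willing


# Hypothetical scores, not data from the study.
print(wtp_summary([0, 5, 6, 8, 10, 7]))
```

The `threshold` parameter encodes the 6-10 cut-off used in the text to dichotomize WTP.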

All three conditions that mentioned paradata had significantly lower WTP relative to the control condition but did not differ significantly from one another in mean score on the 11-point scale. While those given a simple description of paradata, plus a hyperlink, had higher WTP than those given either a simple description or an explicit description of paradata (as hypothesized), these differences were not statistically significant. Collapsing the WTP variable to a dichotomy (% willing) yields similar results (also shown in Table 1). While we found significant main effects of topic sensitivity and the privacy and confidentiality measures on WTP, we found no significant interactions of these with the paradata manipulation. That is, we did not find support for our second or third hypotheses.

Those who agreed to participate in the survey were asked, in addition, whether or not they would be willing to permit use of their paradata. Across the three paradata conditions, 67% of those who agreed to do the hypothetical survey also agreed to permit use of their paradata, but with only 58% agreeing to do the survey, this means that only about 38% of respondents were willing to do the survey and permit collection and use of the paradata. The details of these responses by experimental condition are presented in Table 2. None of the differences reaches statistical significance.
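The 38% figure is the product of two rates: the share agreeing to the survey and, among them, the share permitting paradata use. The Python sketch below pools the three paradata conditions using the counts and percentages reported for Study I in Table 2; the function name and the pooling by reconstructed counts are our illustrative choices.

```python
def joint_consent(rows: list[tuple[float, int, int]]) -> tuple[float, float, float]:
    """rows: (% permitting paradata use among survey agreers, n agreers, n assigned) per condition.

    Returns P(agree to survey), P(permit paradata | agree), and their product,
    which equals the share of all respondents who did both.
    """
    consenters = sum(pct / 100 * n_agree for pct, n_agree, _ in rows)
    agreers = sum(n_agree for _, n_agree, _ in rows)
    assigned = sum(n_total for _, _, n_total in rows)
    p_survey = agreers / assigned
    p_paradata_given_survey = consenters / agreers
    return p_survey, p_paradata_given_survey, p_survey * p_paradata_given_survey


study1 = [(65.0, 703, 1267), (70.4, 794, 1383), (64.2, 771, 1284)]  # Table 2 rows
p_survey, p_cond, p_joint = joint_consent(study1)
print(f"{p_survey:.1%} x {p_cond:.1%} = {p_joint:.1%}")  # 57.7% x 66.6% = 38.4%
```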

Table 2.

Percentage Agreeing to the Use of Paradata, Among Those Agreeing to Do Survey, and Among All Respondents (Study I)

| Paradata description | Among those agreeing to do survey: percent | n | Among all respondents: percent | n |
|---|---|---|---|---|
| 2) Simple description | 65.0 | 703 | 36.1 | 1267 |
| 3) Explicit description | 70.4 | 794 | 40.4 | 1383 |
| 4) Simple description + link | 64.2 | 771 | 38.6 | 1284 |

CentERdata staff coded a random subset of 1000 responses to an open question about why respondents would not be willing to participate in the survey (Cohen's kappa=0.798 for 102 double-coded cases). Approximately one third of the reasons made some reference to privacy concerns; there were no significant differences among the four conditions, including the control condition. Nor were there any significant differences among the three paradata conditions in the percentage making an explicit reference to paradata as a reason for unwillingness to participate in the survey. However, when these reasons were added to the privacy concerns, they accounted for just about half of all reasons given for unwillingness to participate. A substantial number of reasons mentioning paradata indicated that respondents were concerned about the risk of someone's “messing” with their browser, and continuing to do so even after the survey had been concluded. This suggests that many confused paradata collection with behavioral tracking.
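Cohen's kappa compares the observed agreement between two coders with the agreement expected by chance, given each coder's category frequencies. A minimal Python implementation is sketched below; the category labels in the example are hypothetical and do not reproduce the CentERdata coding scheme.

```python
from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Cohen's kappa for two coders who each assigned one category per response."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equally long, non-empty code lists")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: sum over categories of the product of marginal proportions.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / n**2
    return (observed - expected) / (1 - expected)  # undefined if expected == 1


a = ["privacy", "privacy", "other", "other"]
b = ["privacy", "other", "other", "other"]
print(cohens_kappa(a, b))  # 0.5
```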

In the prior month of data collection, we elicited panelists’ views on issues related to privacy and confidentiality, along with trust in surveys. We found main effects of these variables on WTP in the expected direction (i.e., those with greater concerns about privacy or confidentiality were less willing to participate), but no interactions with the experimental manipulations (see Singer and Couper 2011).

Study I indicated that we had succeeded in making paradata collection salient to respondents, and that many of them considered this a “cost” rather than a benefit of participation. In addition, a substantial number did not really understand what collecting paradata entailed.

4 Study II

The second study tried to address some of the problems encountered in the first. We tried to make clear that we would not track respondents’ browser behavior beyond the specific study for which paradata were needed, and we tried to give respondents a good reason for allowing researchers to make use of their paradata – that is, we tried to indicate the benefits of paradata use for the researchers. We did this in two ways – one giving a technical reason for paradata collection, and the other giving a reason related to respondent behavior.

Sample and Data Collection

Study II was a Web survey funded by Time-Sharing Experiments for the Social Sciences (TESS; see http://www.tessexperiments.org) and fielded by Knowledge Networks (see http://www.knowledgenetworks.com), which, like LISS, maintains a panel based on a sample initially recruited by probability methods, in this case of US adults. Our experiment was included with two other unrelated experiments in the survey, which was fielded in December 2009. The order of the three experiments was randomized to minimize possible context effects. A total of 8,188 panelists were invited to the survey, of whom 5,550 completed it, for a completion rate of 67.8%. The recruitment rate for this study, reported by Knowledge Networks, was 21.2% and the profile rate was 54.1%, for a cumulative response rate of 7.8% (see Callegaro and DiSogra 2008).
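For a multi-stage panel, the cumulative response rate can be reproduced as the product of the stage-specific rates. The Python sketch below assumes that simple product of recruitment, profile, and completion rates (our reading of Callegaro and DiSogra 2008) and computes the completion rate from the 5,550 respondents reported in the analysis below and the 8,188 invitations.

```python
def cumulative_response_rate(recruitment: float, profile: float, completion: float) -> float:
    """Multiply stage-specific rates for a probability-recruited panel (simplified)."""
    return recruitment * profile * completion


completion = 5550 / 8188
print(f"completion rate: {completion:.1%}")  # 67.8%
print(f"cumulative rate: {cumulative_response_rate(0.212, 0.541, completion):.1%}")  # 7.8%
```

Because each stage multiplies in, a low recruitment rate dominates the cumulative figure even when the within-panel completion rate is high.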

Experimental Design

The design of the second study was similar to that of the first. We created six experimental conditions:

1) No mention of paradata

2) Basic description of paradata: “In addition to your responses to the survey, we collect technical data (such as keystrokes and time stamps). As soon as you finish answering the survey, we stop collecting the technical data. We never track your browsing behavior.”

3) Respondent behavior explanation: Added “. . . to help us better understand your answers” to the first sentence.

4) Technical quality explanation: Added “. . . to help us improve the questionnaire.”

5) Respondent behavior and technical quality explanation: Added “. . . to help us better understand your answers and to improve the questionnaire.”

6) Hyperlink to respondent behavior and technical quality explanation: as in condition 5, but with the explanation replaced by “(Click here for more information about how these data are used.)” and a hyperlink.

These six conditions were crossed with the same two topics used in Study I, one sensitive and the other not; sponsorship was held constant for all the vignettes (National Institutes of Health). Thus, Study II uses a fully crossed 6 × 2 = 12-cell experimental design, with approximately 460 respondents per cell. Willingness to participate in the survey was measured on the same 11-point scale used in Study I, and willingness to permit paradata use with a Yes/No question. We were unable to include open-ended questions on reasons for these responses. An example vignette is shown in Figure 1.

Figure 1.

Example Vignette from Study II, Showing Condition (4)

Note that although we tried to reassure respondents about their browser, and included reasons for the collection of paradata, we did not mention any benefits to respondents, either of participating in the survey or of permitting the use of paradata by researchers. We did, however, hypothesize that reassuring respondents about the limited use made of their browser would reduce the cost of responding affirmatively, relative to Study I. We also hypothesized that permitting such use for technical reasons would be less threatening than permitting it in order to enhance understanding of respondents’ behavior, and therefore expected a higher rate of consent for that condition.

Analysis and Results

Of the 5,550 respondents to the survey, 32 did not answer the key dependent variable, so our analyses are based on 5,518 cases. Key outcomes are shown in Table 3. We used OLS regression to analyze responses to the 11-point scale and logistic regression to analyze willingness to permit collection and use of paradata, controlling for sensitivity of the topic. As in Study I, we found a main effect of topic sensitivity, with the sensitive topic reducing willingness to participate in the survey, but no interaction of topic sensitivity with the paradata manipulation.

Table 3.

Mean Willingness to Participate and Percentage Willing to Participate, by Paradata Manipulations (Study II)

| Paradata description | Mean WTP (std. err.) | Percent willing |
|---|---|---|
| 1) No mention (n=915) | 7.32 (0.10) | 72.9 |
| 2) Simple description (n=911) | 6.18 (0.12) | 60.5 |
| 3) Respondent behavior (n=911) | 6.96 (0.11) | 69.6 |
| 4) Technical quality (n=947) | 6.33 (0.11) | 62.3 |
| 5) Respondent behavior + technical quality (n=910) | 5.98 (0.11) | 58.0 |
| 6) Hyperlink to respondent behavior + technical quality (n=924) | 6.92 (0.11) | 70.5 |
| Overall (n=5518) | 6.62 (0.046) | 65.6 |

Although overall willingness to participate is higher than in Study I, this may be due to differences in the sample and/or country of administration rather than to any effect of the manipulations. The overall model R² is again small, at 0.021; however, unlike in Study I, the effect of the paradata manipulation is larger than that of topic: the paradata effect on WTP is highly significant (p<.0001), while that for topic sensitivity is weaker (p=0.033). As can be seen in Table 3, mention of paradata reduces willingness to participate relative to the control condition.

However, the effects for individual conditions are quite different from those in Study I. Providing a simple description of paradata use is associated with significantly (p<.0001)4 lower WTP than in the control group. As in Study I, offering a hyperlink to a detailed explanation of use appears to elicit the highest percentage willing to participate among the paradata conditions (and a mean WTP not significantly different from the control). However, contrary to our expectations, mentioning technical reasons for paradata capture lowered rather than raised willingness to participate in the survey relative to mentioning respondent behavior (cf. group 4 and group 3, p<.001 for the mean difference), and the group mentioning both behavioral and technical reasons (group 5) had the lowest level of WTP.
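As a rough check on contrasts like these, two condition means can be compared using only the means and standard errors in Table 3. The Python sketch below uses a large-sample z test for independent groups; this is a simplification chosen for illustration and not necessarily the regression-based test from which the reported p-values come.

```python
import math


def z_test(mean1: float, se1: float, mean2: float, se2: float) -> tuple[float, float]:
    """Two-sided z test for the difference between two independent means."""
    z = (mean1 - mean2) / math.sqrt(se1**2 + se2**2)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Respondent behavior (group 3) vs. technical quality (group 4), from Table 3
z, p = z_test(6.96, 0.11, 6.33, 0.11)
print(f"z = {z:.2f}, p = {p:.1e}")

# No mention (group 1) vs. simple description (group 2), from Table 3
z2, p2 = z_test(7.32, 0.10, 6.18, 0.12)
print(f"z = {z2:.2f}, p = {p2:.1e}")
```

Both approximate contrasts fall below the significance thresholds reported in the text (p<.001 and p<.0001, respectively).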

Across the five paradata conditions, 64% agreed to do the survey; of these, 84% also agreed to permit use of their paradata. This means that about 54% of respondents were willing to do the survey and permit collection and use of the paradata – much higher than the 38% for Study I. The details of these responses by experimental condition are presented in Table 4.

Table 4.

Percentage Agreeing to the Use of Paradata, Among Those Agreeing to Do Survey, and Among All Respondents (Study II)

| Paradata description | % agreeing, among those agreeing to do survey | n | % agreeing, among all respondents | n |
|---|---|---|---|---|
| 2) Simple description | 82.6 | 551 | 49.8 | 914 |
| 3) Respondent behavior | 87.7 | 634 | 60.5 | 919 |
| 4) Technical quality | 81.4 | 590 | 50.3 | 954 |
| 5) Respondent behavior + technical quality | 81.8 | 528 | 47.4 | 912 |
| 6) Hyperlink to respondent behavior + technical quality | 86.5 | 651 | 60.3 | 933 |

While we find stronger effects of the individual conditions in Study II than in Study I, the effects are not always consistent with our hypotheses. What is again clear, however, is that any attempt to describe the collection and use of paradata results in lower willingness to participate in the hypothetical survey relative to the group not told anything, and that restricting the analytic data set to those who additionally agree to permit their paradata to be used results in further sample losses.

We should again note that none of our descriptions of paradata use mentions any direct benefit to the respondent. One interpretation of these results, consistent with leverage-salience theory (Groves, Singer and Corning 2000), is that when a feature of the survey whose valence is unknown to the respondent is made salient, respondents may assume that it has negative valence, which in turn lowers willingness to participate.

5 Study III

Sample and Data Collection

Study III was embedded in a larger Web study of how information about disclosure risk might affect survey participation (Couper et al. 2010). The sample consisted of 9,200 respondents who were members of an opt-in panel in the US. Like all opt-in panels, this is essentially a volunteer sample, but its members were randomly assigned to the experimental conditions.

Experimental Design

The third study consisted of three independent experiments embedded in the sample described above, designed to test (1) whether placement of the request for consent to paradata use (at the beginning or at the end of the survey) would affect the rate of consent and (2) whether there were differences in the rate of consent between requests in hypothetical vignettes and actual surveys.

Group 1 used essentially the same design as Studies I and II. Respondents received a vignette describing a hypothetical survey and were asked whether they would be willing to participate in it; those who said yes were then asked whether they were willing to permit use of their paradata. As in Study II, the six paradata conditions were crossed with two topics varying in sensitivity, yielding a 6 by 2 design.

Respondents in Group 2 received a vignette describing a hypothetical survey that they were to assume they had already completed, and were then asked whether they would be willing to permit use of their paradata. Because completion of the survey was assumed, the “no mention of paradata” condition from Group 1 was dropped; the remaining five paradata conditions were crossed with the two topics varying in sensitivity, yielding a 5 by 2 design.

Respondents in Group 3 received the request for paradata use at the end of the actual survey in which they had just participated, which had made salient issues of privacy and trust in survey organizations. They were asked only for consent to use paradata that had already been collected, using the same five paradata conditions as Groups 1 and 2 (again with the “no mention” group excluded). Since this was a request at the end of an actual survey, topic was not varied. Thus Group 3 only had 5 conditions.
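The three arms of Study III can be summarized as factorial layouts. The sketch below simply enumerates the experimental cells in each group; the condition labels follow Tables 5 to 7, while the two topic labels are placeholders, since the vignette topics are identified here only by their relative sensitivity.

```python
from itertools import product

# Paradata conditions, labeled as in Tables 5-7.
PARADATA = [
    "1) No mention",
    "2) Simple description",
    "3) Respondent behavior",
    "4) Technical quality",
    "5) Respondent behavior + technical quality",
    "6) Hyperlink to respondent behavior + technical quality",
]
# Placeholder topic labels (the actual vignette topics are not named here).
TOPICS = ["more sensitive topic", "less sensitive topic"]

# Group 1: prospective vignette, all six conditions x two topics (6 by 2).
group1 = list(product(PARADATA, TOPICS))
# Group 2: vignette assumed completed, "no mention" dropped (5 by 2).
group2 = list(product(PARADATA[1:], TOPICS))
# Group 3: request at the end of the actual survey; topic not varied (5 cells).
group3 = [(p, "actual survey") for p in PARADATA[1:]]

for name, cells in [("Group 1", group1), ("Group 2", group2), ("Group 3", group3)]:
    print(name, len(cells), "cells")
```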

Study III extends the exploration of this issue in several ways. First, Group 1 allows us to replicate the Study I and Study II results in a third sample. Second, the Group 2 treatments allow us to explore whether the sunk costs of having already participated in the hypothetical survey might affect willingness to provide paradata. That is, in the earlier experiments, respondents were first asked about willingness to participate in the hypothetical survey, then about willingness to permit capture and use of paradata. The two-step request may lower overall agreement rates in that, having just acceded to one request, subjects may be more inclined to say no to an additional request. Finally, Group 3 allows us to move away from hypothetical vignettes, to examine the request in an actual survey.

Analysis and Results

If we assume that those in Group 1 who did not express willingness to participate in the survey were also unwilling to permit paradata use, we can compare overall consent to paradata use across the groups (collapsing across the five paradata conditions). Overall, 63.4% of those in Group 1 agreed to do the survey and consented to paradata use (61.8% for the more sensitive topic and 64.9% for the less sensitive one), compared with 59.2% in Group 2 (58.0% for the more sensitive topic and 60.3% for the less sensitive one) and 68.9% in Group 3. Differences among the groups are statistically significant (χ²=52.66, d.f.=2, p<.0001). As we hypothesized, consent to the use of paradata that had actually been collected (Group 3) was higher than consent in either of the hypothetical vignettes. However, we had also hypothesized that consent in Group 2, which received a vignette stipulating that the survey had already been completed, would be higher than consent in Group 1, which was asked to consent prospectively; in fact, consent in Group 2 was lower than in Group 1, and also, of course, lower than in Group 3.

For the remainder of the analyses, we examine each of the three groups separately. Group 1 is a conceptual replication of Study II, using an opt-in panel of volunteers rather than a probability-based sample. We expected similar effects of the experimental treatments as in Study II, but with different overall levels of willingness. All other things being equal, we would expect the volunteer participants to be more willing to participate in the hypothetical survey, and more willing to permit paradata use. We found this to be the case – in the “no paradata” condition, 78.8% expressed willingness to participate in the survey (see Table 5), compared with 72.9% in the same condition in Study II.

Table 5.

Mean Willingness to Participate and Percentage Willing to Participate in Survey, by Paradata Manipulations (Study III, Group 1)

| Paradata description | Mean WTP (std. err.) | Percent willing |
|---|---|---|
| 1) No mention (n=462) | 7.54 (0.14) | 78.8 |
| 2) Simple description (n=484) | 6.86 (0.15) | 69.0 |
| 3) Respondent behavior (n=471) | 6.67 (0.15) | 64.5 |
| 4) Technical quality (n=526) | 6.88 (0.14) | 70.2 |
| 5) Respondent behavior + technical quality (n=502) | 6.82 (0.14) | 70.5 |
| 6) Hyperlink to respondent behavior + technical quality (n=493) | 6.52 (0.15) | 65.1 |
| Overall (n=2938) | 6.88 (0.059) | 69.6 |

As in Studies I and II, all paradata conditions resulted in lower willingness to participate in the survey than the control condition (see Table 5). In contrast to Study II, however, the respondent behavior condition (3) elicited lower levels of WTP than the technical quality condition (4).

Table 6 provides details of the willingness to provide paradata for each of the experimental conditions mentioning paradata. Consistent with Studies I and II, the mention of paradata, and the explicit request for their use, result in a further drop-off in the number of respondents willing to provide this information. Differences among the paradata conditions are not significant.

Table 6.

Percentage Agreeing to the Use of Paradata, Among Those Agreeing to Do Survey, and Among All Respondents (Study III, Group 1)

| Paradata description | % agreeing, among those agreeing to do survey | n | % agreeing, among all respondents | n |
|---|---|---|---|---|
| 2) Simple description | 85.0 | 334 | 58.7 | 484 |
| 3) Respondent behavior | 84.9 | 304 | 54.8 | 471 |
| 4) Technical quality | 84.0 | 369 | 58.9 | 526 |
| 5) Respondent behavior + technical quality | 84.5 | 354 | 59.6 | 502 |
| 6) Hyperlink to respondent behavior + technical quality | 83.5 | 321 | 54.4 | 493 |

Group 2 of Study III allows us to explore the hypothesis that asking for permission twice (first for willingness to participate in the survey, then for consent to paradata use) may lower agreement rates. Group 2 avoids the first question by assuming that the respondent has already completed the hypothetical survey. We found no significant difference in consent to paradata use by topic, so we focus on the paradata manipulations. These results are presented in Table 7.

Table 7.

Percentage Agreeing to the Use of Paradata (Study III, Groups 2 and 3)

| Paradata description | Group 2 (assuming completion of hypothetical survey): % agreeing | n | Group 3 (at end of actual survey): % agreeing | n |
|---|---|---|---|---|
| 2) Simple description | 62.3 | 515 | 71.1 | 492 |
| 3) Respondent behavior | 58.8 | 532 | 71.9 | 513 |
| 4) Technical quality | 57.8 | 542 | 69.3 | 514 |
| 5) Respondent behavior + technical quality | 59.9 | 528 | 65.5 | 539 |
| 6) Hyperlink to respondent behavior + technical quality | 57.2 | 507 | 66.7 | 501 |

Overall, about 59% of those asked about paradata use in the hypothetical survey they had just completed were willing to permit the use of paradata. We found no significant differences among the various conditions (χ²=3.52, d.f.=4, p=0.47).

Finally, the results for Group 3 (those asked about paradata use at the end of the actual survey they had just completed) are also presented in Table 7. The overall rate of agreement (68.9%) is higher than for the hypothetical survey, but this still means that almost one-third of respondents would not consent to paradata use at the end of an actual survey. As in Group 2, we found no significant differences among the five conditions (χ²=7.46, d.f.=4, p=0.11).
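The chi-square statistics reported for Groups 2 and 3 can be approximately recovered from Table 7. The sketch below assumes a Pearson chi-square test of independence on the 5-by-2 table of condition by consent (the text does not name the test), with consent counts reconstructed by rounding percent × n, so the results are approximate. The p-value uses the closed-form upper tail of the chi-square distribution, which is exact for even degrees of freedom such as d.f.=4.

```python
import math

# Table 7 (Study III): (percent consenting, n) for paradata conditions 2-6.
GROUP2 = [(62.3, 515), (58.8, 532), (57.8, 542), (59.9, 528), (57.2, 507)]
GROUP3 = [(71.1, 492), (71.9, 513), (69.3, 514), (65.5, 539), (66.7, 501)]


def pearson_chi2(rows):
    """Pearson chi-square for a k-by-2 (condition x consent) table.

    Consent counts are reconstructed by rounding percent * n, so the
    statistic is only approximate.
    """
    yes = [round(pct / 100 * n) for pct, n in rows]
    totals = [n for _, n in rows]
    p = sum(yes) / sum(totals)  # pooled consent rate across conditions
    # Per row, the "yes" and "no" cells together contribute (O - E)^2 / (n p (1 - p)).
    chi2 = sum((y - n * p) ** 2 / (n * p * (1 - p)) for y, n in zip(yes, totals))
    return chi2, len(rows) - 1, p


def chi2_sf_even_df(x, df):
    """Upper-tail probability of a chi-square variable with even df (closed form)."""
    half = x / 2
    return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))


for name, rows in [("Group 2", GROUP2), ("Group 3", GROUP3)]:
    chi2, df, pooled = pearson_chi2(rows)
    print(f"{name}: pooled consent {pooled:.1%}, chi2={chi2:.2f}, "
          f"df={df}, p={chi2_sf_even_df(chi2, df):.2f}")
```

With these reconstructed counts, the pooled consent rates come to about 59.2% and 68.9%, and the statistics land close to the reported χ²=3.52 and χ²=7.46.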

The survey included a set of questions about privacy and confidentiality concerns and trust in surveys. For those in Group 3, these were asked prior to the request for paradata consent. While we find the expected main effects of these variables (i.e., those with greater privacy concerns, less trust in the confidentiality of survey data, and more negative views of the value of surveys were less likely to consent), we found no interactions with the experimental manipulations. That is, the versions we tested were not differentially effective for subgroups defined by levels of concern and trust.

Summary of Study III Results

Study III was designed to test (1) whether placement of the request for consent to paradata use (at the beginning or at the end of the survey) would affect the rate of consent, and (2) whether the rate of consent differed between requests in hypothetical vignettes and in actual surveys. We hypothesized that consent would be higher when the request was placed at the end of a survey, both because respondents could better judge the sensitivity of their answers and because they had already invested time in completing the survey. When tested with otherwise identical hypothetical vignettes, however, the data did not support this hypothesis: those asked to assume they had already completed the survey were, if anything, less likely to consent than those asked at the beginning. A request for consent at the end of an actual survey did elicit a higher consent rate than the hypothetical vignettes, but because the topics of the actual survey differed from those described in the vignettes, these results are at best suggestive. Even so, 31% of respondents in Group 3 refused consent. Conditional on having agreed to do the survey (Groups 2 and 3), the different descriptions of paradata did not differ significantly in their effect on consent.

We analyzed the responses of 150 sample members in each of the three experimental groups who had refused consent and were then asked for their reasons. Some 38% raised concerns about aspects of paradata, with more than a third of these (14% of all concerns) mentioning the tracking of browsing behavior (e.g., “I feel such tracking goes beyond the stated purpose of the questions. All you need is the answers to the text questions”), and an additional 27% raised more general privacy-related concerns (e.g., “Don't trust,” “Invasion of privacy”). In Group 3, who were asked for permission to use their actual paradata from the survey, many responses suggested confusion about the extent of paradata capture, e.g., “Don't like being tracked on things other than the survey I'm answering,” “I don't want anyone to track my browsing history,” “Too invasive. I never allow downloads or any kind of tracking,” and the like. Thus, it appears that our attempt to defuse concerns about tracking was not very effective or, perhaps, that given the salience of the request, respondents did not see the benefits of permitting such use as outweighing the costs.

6 Discussion

So far, three experiments soliciting consent to paradata use appear to have failed both to inform respondents adequately about this methodology and to elicit their consent. We can only speculate about some reasons why this might be so.

First, the concept of paradata is inherently difficult to grasp and is unfamiliar to virtually all respondents. The potential uses of such data are equally mysterious and, despite our brief efforts, seem to be assimilated to the tracking behavior of advertisers and potential hackers or phishers. Inevitably, such associations lessen the likelihood of consent. Second, placing the request for consent in a brief vignette heightens the salience of paradata and, as a result, may give it undue influence relative to other features of the survey. More experiments with such a request at the end of actual surveys, or in a statement that makes other, attractive features of the survey more salient relative to the paradata request, might give a more accurate picture of the likelihood of consent. Third, in none of the three experiments did we attempt to explain what benefits, if any, the collection of paradata might have for respondents. Both “better understanding of your answers” and “to improve the questionnaire” are benefits for researchers. Future research should include appeals to respondents’ altruism, or mentions of possible respondent benefits, which might offset the perceived costs of consenting to paradata use. Fourth, paradata are unavoidably collected in the process of responding to a survey, although respondents are in all probability not aware of this. The only relevant question, therefore, is whether respondents would consent to their “use”. Future experimentation should therefore focus on this question, rather than on asking about both willingness to participate and consent to paradata use. Fifth, experimental evidence from requests for consent to link survey data with administrative records suggests that a procedure requiring respondents to opt out, rather than opt in, achieves higher consent rates (Bates 2005; Dahlhamer and Cox 2007; Pascale 2011), presumably because it makes refusal harder. It remains to be seen whether such a procedure would in fact increase consent to paradata use as it has increased consent to data linkage, whether respondents would have an accurate perception that they had consented, and whether they would understand what they had consented to.

Finally, is it necessary to request consent to paradata use at all? Whether the use of paradata collected in Web surveys rises to the level of requiring explicit mention to respondents remains an open question, with both ethical and legal implications. From an ethical perspective, the question is how much detail ought to be provided to respondents who participate in surveys, and where and how that information should be provided. For instance, is it sufficient to include information about paradata capture as part of a general privacy statement available upon request (e.g., through a hyperlink, as is common on commercial Websites), or does the capture and use of paradata require explicit mention at the time of consenting to the survey (as we have tested in our experiments)? From a legal perspective, recent EU online privacy legislation (the so-called “Cookie Law”; see ESOMAR 2012) and pending US regulations (such as the Federal Trade Commission's proposed Do Not Track rules; see http://www.ftc.gov/opa/reporter/privacy/donottrack.shtml) may end up requiring informed consent for the collection of any data other than the responses to the survey, depending on the rules adopted by each country. While the intent of these regulations is to limit online behavioral tracking, they may encompass a number of more benign activities such as paradata capture in surveys (see http://www.w3.org/2011/track-privacy/papers/CASRO-ESOMAR.pdf).

We are not advocating for one position or another. Rather, we believe that as concerns about online privacy drive new regulations that could include online surveys in their sweep, it is important for us to gather information on the likely consequences of such regulations and on the best ways to fulfill their intent without jeopardizing the public's willingness to participate in surveys. Our first efforts, described in this article, suggest that explicitly mentioning paradata in the introductory description of the survey, and explicitly requesting consent to their use, lowers participation. This may be due to an association with online behavioral tracking, spyware, or the use of keystroke loggers for identity theft or other nefarious activities, rather than to a genuine objection to the capture and use of survey paradata. Clearly, more research is needed to explore this and related issues.

Acknowledgements

The first experiment was supported by the MESS (Measurement and Experimentation in the Social Sciences) project and carried out by CentERdata, University of Tilburg. Support for the second experiment came from TESS (Time-Sharing Experiments for the Social Sciences, funded by the US National Science Foundation), with data collection by Knowledge Networks. The third experiment was supported in part by a grant from the US National Institutes of Health (NICHD Grant #P01 HD045753-01), and carried out by Market Strategies International. We are grateful to all funders and organizations for their support of this work.

Footnotes

1. The third principle, justice, is more important in biomedical research, where it aims to assure that subjects who bear the risks and costs of research also reap its benefits, and vice versa.

2. The AAPOR code (revised May 2010) does not address the issue of online research specifically, but includes the broad statement, “We shall provide all persons selected for inclusion with a description of the research study sufficient to permit them to make an informed and free decision about their participation.”

3. The LISS panel consists of about 5,000 households (about 8,000 persons) recruited initially by probability sampling from the Dutch-speaking population of the Netherlands; those lacking access to the internet are provided such access. Members complete online questionnaires every month, and are paid for each completed questionnaire. See www.lissdata.nl.

4. Bonferroni adjustments for multiple comparisons are used for all of the individual contrasts.

References

- American Association for Public Opinion Research (AAPOR). The Code of Professional Ethics and Practices. AAPOR; Deerfield, IL: 2010.

- Baker RP, Couper MP. The Impact of Screen Size and Background Color on Response in Web Surveys. Paper presented at the General Online Research conference (GOR07); Leipzig, Germany. 2007.

- Bates NA. Development and Testing of Informed Consent Questions to Link Survey Data with Administrative Records. Proceedings of the AAPOR-ASA Section on Survey Methods Research. American Statistical Association; Washington, DC: 2005. pp. 3786–3792.

- Callegaro M. Do You Know Which Device Your Respondent Has Used to Take Your Online Survey? Using Paradata to Collect Information on Device Type. Survey Practice. 2010.

- Callegaro M. A Taxonomy of Paradata for Web Surveys and Computer Assisted Self Interviewing. Poster presented at the General Online Research Conference; Mannheim, Germany. March 2012.

- Callegaro M, DiSogra C. Computing Response Metrics for Online Panels. Public Opinion Quarterly. 2008;72(5):1008–1032.

- Callegaro M, Yang Y, Bhola DS, Dillman DA, Chin T-Y. Response Latency as an Indicator of Optimizing in Online Questionnaires. Bulletin de Méthodologie Sociologique. 2009;103:10–25.

- Council of American Survey Research Organizations (CASRO). Code of Standards and Ethics for Survey Research. CASRO; Port Jefferson, NY: 2011.

- Couper MP. Measuring Survey Quality in a CASIC Environment. Invited paper presented at the Joint Statistical Meetings of the American Statistical Association; Dallas. August 1998.

- Couper MP, Lyberg LE. The Use of Paradata in Survey Research. Proceedings of the 55th Session of the International Statistical Institute; Sydney, Australia. April 2005. [CD]

- Couper MP, Singer E, Conrad FG, Groves RM. Risk of Disclosure, Perceptions of Risk, and Concerns about Privacy and Confidentiality as Factors in Survey Participation. Journal of Official Statistics. 2008;24(2):255–275.

- Couper MP, Singer E, Conrad FG, Groves RM. An Experimental Study of Disclosure Risk, Disclosure Harm, Incentives, and Survey Participation. Journal of Official Statistics. 2010;26(2):287–300.

- Couper MP, Singer E, Tourangeau R, Conrad FG. Evaluating the Effectiveness of Visual Analog Scales: A Web Experiment. Social Science Computer Review. 2006;24(2):227–245.

- Dahlhamer JM, Cox CS. Respondent Consent to Link Survey Data with Administrative Records: Results from a Split-ballot Field Test with the 2007 National Health Interview Survey. Proceedings of the Federal Committee on Statistical Methodology Research Meeting; Washington, DC. 2007.

- ESOMAR. ESOMAR Guideline for Online Research. 2011. http://www.esomar.org/knowledge-and-standards/codes-and-guidelines/guideline-for-online-research.php.

- ESOMAR. ESOMAR Practical Guide on Cookies. 2012. http://www.esomar.org/uploads/public/knowledge_and_standards/codes_and_guidelines/ESOMAR_Practical_Guide_on_Cookies_July_2012.pdf.

- Fulton J. Respondent Consent to Use Administrative Data. PhD dissertation, Joint Program in Survey Methodology, University of Maryland; College Park, MD: 2012.

- Groves RM, Singer E, Corning A. Leverage-saliency theory of survey participation: Description and an illustration. Public Opinion Quarterly. 2000;64(3):299–308. doi: 10.1086/317990.

- Haraldsen G, Kleven Ø, Stålnacke M. Paradata Indications of Problems in Web Surveys. Paper presented at the European Conference on Quality in Survey Statistics; Cardiff, Wales. April 2006.

- Heerwegh D. Explaining Response Latencies and Changing Answers Using Client-Side Paradata from a Web Survey. Social Science Computer Review. 2003;21(3):360–373.

- Heerwegh D. Internet Survey Paradata. In: Das M, Ester P, Kaczmirek L, editors. Social Research and the Internet. Taylor and Francis; New York: 2011. pp. 325–348.

- Kreuter F, Couper MP, Lyberg LE. The Use of Paradata to Monitor and Manage Survey Data Collection. Proceedings of the Joint Statistical Meetings; Vancouver. August 2010. pp. 282–296.

- McClain C, Crawford SD. Web Survey Live Validations: What Are They Doing? Paper presented at the annual conference of the American Association for Public Opinion Research; Phoenix, AZ. 2011.

- National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research. The Belmont Report: Ethical Principles and Guidelines for the Protection of Human Subjects of Research. US Department of Health and Human Services; Washington, DC: 1979.

- Pascale J. Requesting Consent to Link Survey Data to Administrative Records: Results from a Split-Ballot Experiment in the Survey of Health Insurance and Program Participation (SHIPP). Center for Survey Measurement, Research and Methodology Directorate, US Census Bureau; Washington, DC: 2011. Survey Methodology Study Series #2011-03.

- Sakshaug J, Kreuter F. Assessing the magnitude of non-consent biases in linked survey and administrative data. Survey Research Methods. 2012;6(2):113–122.

- Singer E. Toward a Benefit-Cost Theory of Survey Participation: Evidence, Further Tests, and Implications. Journal of Official Statistics. 2011;27(2):379–392.

- Singer E, Couper MP. Communicating Disclosure Risk in Informed Consent Statements. Journal of Empirical Research on Human Research Ethics. 2010;5(3):1–8. doi: 10.1525/jer.2010.5.3.1.

- Singer E, Couper MP. Ethical Considerations in Web Surveys. In: Das M, Ester P, Kaczmirek L, editors. Social Research and the Internet. Taylor and Francis; New York: 2011. pp. 133–162.

- Stern MJ. The Use of Client-Side Paradata in Analyzing the Effects of Visual Layout on Changing Responses in Web Surveys. Field Methods. 2008;20(4):377–398.

- Stieger S, Reips U-D. What Are Participants Doing While Filling in an Online Questionnaire: A Paradata Collection Tool and an Empirical Study. Computers in Human Behavior. 2010;26(6):1488–1495.

- Yan T, Tourangeau R. Fast Times and Easy Questions: The Effects of Age, Experience and Question Complexity on Web Survey Response Times. Applied Cognitive Psychology. 2008;22(1):51–68.