Abstract

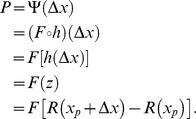

In a 2-alternative forced-choice (2AFC) discrimination task, observers choose which of two stimuli has the higher value. The psychometric function for this task gives the probability of a correct response for a given stimulus difference,  . This paper proves four theorems about the psychometric function. Assuming the observer applies a transducer and adds noise, Theorem 1 derives a convenient general expression for the psychometric function. Discrimination data are often fitted with a Weibull function. Theorem 2 proves that the Weibull “slope” parameter,

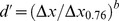

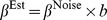

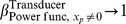

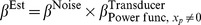

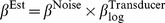

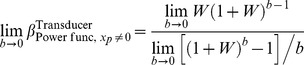

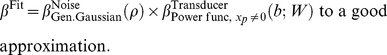

. This paper proves four theorems about the psychometric function. Assuming the observer applies a transducer and adds noise, Theorem 1 derives a convenient general expression for the psychometric function. Discrimination data are often fitted with a Weibull function. Theorem 2 proves that the Weibull “slope” parameter,  , can be approximated by

, can be approximated by  , where

, where  is the

is the  of the Weibull function that fits best to the cumulative noise distribution, and

of the Weibull function that fits best to the cumulative noise distribution, and  depends on the transducer. We derive general expressions for

depends on the transducer. We derive general expressions for  and

and  , from which we derive expressions for specific cases. One case that follows naturally from our general analysis is Pelli's finding that, when

, from which we derive expressions for specific cases. One case that follows naturally from our general analysis is Pelli's finding that, when  ,

,  . We also consider two limiting cases. Theorem 3 proves that, as sensitivity improves, 2AFC performance will usually approach that for a linear transducer, whatever the actual transducer; we show that this does not apply at signal levels where the transducer gradient is zero, which explains why it does not apply to contrast detection. Theorem 4 proves that, when the exponent of a power-function transducer approaches zero, 2AFC performance approaches that of a logarithmic transducer. We show that the power-function exponents of 0.4–0.5 fitted to suprathreshold contrast discrimination data are close enough to zero for the fitted psychometric function to be practically indistinguishable from that of a log transducer. Finally, Weibull

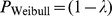

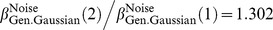

. We also consider two limiting cases. Theorem 3 proves that, as sensitivity improves, 2AFC performance will usually approach that for a linear transducer, whatever the actual transducer; we show that this does not apply at signal levels where the transducer gradient is zero, which explains why it does not apply to contrast detection. Theorem 4 proves that, when the exponent of a power-function transducer approaches zero, 2AFC performance approaches that of a logarithmic transducer. We show that the power-function exponents of 0.4–0.5 fitted to suprathreshold contrast discrimination data are close enough to zero for the fitted psychometric function to be practically indistinguishable from that of a log transducer. Finally, Weibull  reflects the shape of the noise distribution, and we used our results to assess the recent claim that internal noise has higher kurtosis than a Gaussian. Our analysis of

reflects the shape of the noise distribution, and we used our results to assess the recent claim that internal noise has higher kurtosis than a Gaussian. Our analysis of  for contrast discrimination suggests that, if internal noise is stimulus-independent, it has lower kurtosis than a Gaussian.

for contrast discrimination suggests that, if internal noise is stimulus-independent, it has lower kurtosis than a Gaussian.

Introduction

On each trial of a 2-alternative forced-choice (2AFC) discrimination task, observers are presented with two stimuli, one (often called the pedestal) with stimulus value  , and one (the target) with value

, and one (the target) with value  , where

, where  represents a value along some stimulus dimension, such as contrast, luminance, frequency, sound intensity, etc., and

represents a value along some stimulus dimension, such as contrast, luminance, frequency, sound intensity, etc., and  represents a (usually) positive increment in

represents a (usually) positive increment in  . The observer has to say which stimulus contained the higher value,

. The observer has to say which stimulus contained the higher value,  . For this task, the function relating stimulus difference,

. For this task, the function relating stimulus difference,  , to the probability of a correct response,

, to the probability of a correct response,  , is called the psychometric function. The form of the psychometric function can reveal characteristics of the underlying mechanisms, helping to constrain the set of possible models. In this paper we present four theorems that help us to understand the properties of the psychometric function and clarify the relationship between the psychometric function and the underlying model.

, is called the psychometric function. The form of the psychometric function can reveal characteristics of the underlying mechanisms, helping to constrain the set of possible models. In this paper we present four theorems that help us to understand the properties of the psychometric function and clarify the relationship between the psychometric function and the underlying model.

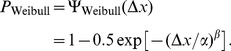

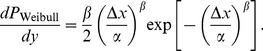

In order to fit the psychometric function to data, we need a mathematical function whose parameters can be adjusted to fit the kind of data set usually obtained. A widely used function is the Weibull function, and two of our theorems relate specifically to this function. Letting  represent the Weibull function, and letting

represent the Weibull function, and letting  represent its output (i.e., the predicted proportion correct), the Weibull function is given by

represent its output (i.e., the predicted proportion correct), the Weibull function is given by

|

(1) |

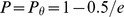

is the “threshold” parameter, the stimulus increment that gives rise to a proportion correct of

is the “threshold” parameter, the stimulus increment that gives rise to a proportion correct of  . In what follows, we will frequently refer to this threshold performance level as

. In what follows, we will frequently refer to this threshold performance level as  , so this term should be read as the constant,

, so this term should be read as the constant,  .

.  is often referred to as the “slope” parameter, because it is proportional to the gradient of the Weibull function at

is often referred to as the “slope” parameter, because it is proportional to the gradient of the Weibull function at  when

when  is plotted on a log abscissa.

is plotted on a log abscissa.

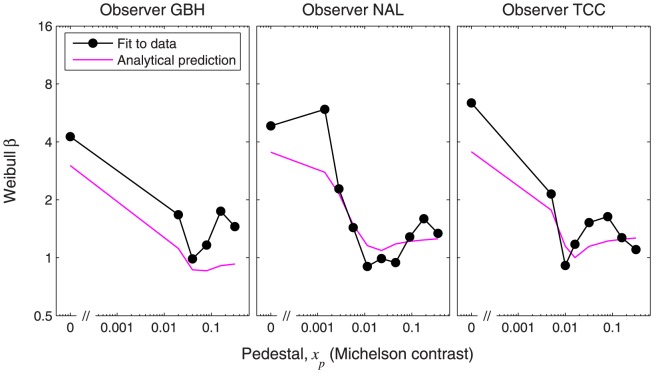

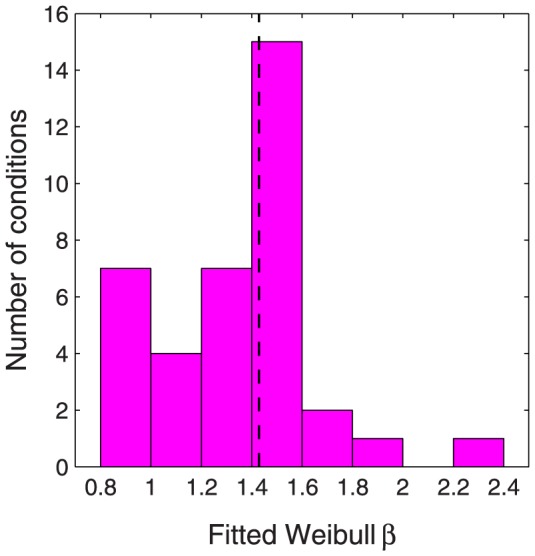

In 2AFC visual contrast discrimination experiments where the contrasts of both stimuli are at least as high as the detection threshold,  usually falls between 1 and 2, with a median of around 1.4 (see Table 1 and Figure 1). As the pedestal contrast approaches zero (making it a 2AFC contrast detection task),

usually falls between 1 and 2, with a median of around 1.4 (see Table 1 and Figure 1). As the pedestal contrast approaches zero (making it a 2AFC contrast detection task),  increases to a value of around 3 [1]–[5].

increases to a value of around 3 [1]–[5].

Table 1. Fitted Weibull function parameters for 2AFC contrast discrimination.

| λ = 0 | λ fitted | ||||||||

| Study | Condition/observer | Pedestal | α | β | W | α | β | λ | W |

| Bird et al. [34] | CMB | 0.03 | 0.00735 | 1.12 | 0.245 | 0.00735 | 1.12 | 5×10−13 | 0.245 |

| CMB | 0.3 | 0.0779 | 1.11 | 0.260 | 0.0692 | 1.21 | 0.0309 | 0.231 | |

| GBH | 0.03 | 0.00737 | 0.734 | 0.246 | 0.00643 | 0.832 | 0.0251 | 0.214 | |

| GBH | 0.3 | 0.0574 | 0.952 | 0.191 | 0.0541 | 0.993 | 0.0128 | 0.180 | |

| Foley & Legge [1] | JMF, 0.5 cpd | 0.00400 | 0.00165 | 1.35 | 0.412 | 0.00165 | 1.35 | 3×10−9 | 0.412 |

| JMF, 2 cpd | 0.00230 | 0.00111 | 1.56 | 0.484 | 0.00111 | 1.56 | 5×10−12 | 0.484 | |

| JMF, 8 cpd | 0.00300 | 0.00125 | 1.44 | 0.418 | 0.00123 | 1.46 | 0.0071 | 0.410 | |

| GW, 0.5 cpd | 0.00400 | 0.00134 | 1.50 | 0.335 | 0.00134 | 1.50 | 2×10−12 | 0.335 | |

| GW, 2 cpd | 0.00229 | 0.000923 | 1.58 | 0.404 | 0.000923 | 1.58 | 1×10−12 | 0.404 | |

| GW, 8 cpd | 0.00330 | 0.00117 | 1.40 | 0.353 | 0.000996 | 1.94 | 0.0544 | 0.301 | |

| Henning et al. [47] | CMB 2.09 cpd | 0.15 | 0.0421 | 1.49 | 0.281 | 0.0421 | 1.49 | 1×10−12 | 0.281 |

| CMB 8.37 cpd | 0.15 | 0.0461 | 1.81 | 0.307 | 0.0379 | 2.21 | 0.0796 | 0.253 | |

| GBH 2.09 cpd | 0.15 | 0.0363 | 1.49 | 0.242 | 0.0363 | 1.49 | 2×10−12 | 0.242 | |

| GBH 8.37 cpd | 0.15 | 0.0401 | 1.21 | 0.267 | 0.0244 | 6.70 | 0.0645 | 0.163 | |

| Henning & Wichman [40] | GBH* | 0* | 0.0219* | 4.26* | –* | ||||

| GBH* | 0.01* | 0.0102* | 13.1* | 1.02* | |||||

| GBH* | 0.02* | 0.00562* | 1.67* | 0.281* | |||||

| GBH | 0.04 | 0.00705 | 0.987 | 0.176 | |||||

| GBH | 0.08 | 0.0156 | 1.16 | 0.195 | |||||

| GBH | 0.16 | 0.0322 | 1.75 | 0.201 | |||||

| GBH | 0.32 | 0.0773 | 1.45 | 0.241 | |||||

| NAL* | 0* | 0.00619* | 4.84* | –* | |||||

| NAL* | 0.00141* | 0.00492* | 5.90* | 3.48* | |||||

| NAL* | 0.00283* | 0.00407* | 2.28* | 1.44* | |||||

| NAL* | 0.00566* | 0.00224* | 1.43* | 0.395* | |||||

| NAL | 0.0113 | 0.00272 | 0.902 | 0.241 | |||||

| NAL | 0.0226 | 0.00707 | 0.990 | 0.312 | |||||

| NAL | 0.0453 | 0.0150 | 0.943 | 0.331 | |||||

| NAL | 0.0905 | 0.0233 | 1.28 | 0.257 | |||||

| NAL | 0.181 | 0.0424 | 1.59 | 0.234 | |||||

| NAL | 0.362 | 0.0658 | 1.33 | 0.182 | |||||

| TCC* | 0* | 0.00838* | 6.38* | –* | |||||

| TCC* | 0.005* | 0.00443* | 2.14* | 0.886* | |||||

| TCC | 0.01 | 0.00339 | 0.912 | 0.339 | |||||

| TCC | 0.016 | 0.00787 | 1.17 | 0.492 | |||||

| TCC | 0.032 | 0.0126 | 1.52 | 0.393 | |||||

| TCC | 0.08 | 0.0301 | 1.64 | 0.377 | |||||

| TCC | 0.16 | 0.0381 | 1.27 | 0.238 | |||||

| TCC | 0.32 | 0.0686 | 1.10 | 0.214 | |||||

| Meese et al. [4] | Pedestal −∞ dB* | 0* | 0.00855* | 3.32* | –* | ||||

| Pedestal −10 dB* | 0.00316* | 0.00557* | 2.44* | 1.76* | |||||

| Pedestal −5 dB* | 0.00562* | 0.00346* | 1.47* | 0.615* | |||||

| Pedestal 0 dB | 0.01 | 0.00340 | 1.47 | 0.340 | |||||

| Pedestal 5 dB | 0.0178 | 0.00654 | 1.48 | 0.368 | |||||

| Pedestal 10 dB | 0.0316 | 0.0110 | 1.40 | 0.348 | |||||

| Pedestal 15 dB | 0.0562 | 0.0176 | 1.58 | 0.313 | |||||

| Pedestal 20 dB | 0.1 | 0.0233 | 1.47 | 0.233 | |||||

| Pedestal 25 dB | 0.178 | 0.0339 | 1.47 | 0.191 | |||||

| Pedestal 30 dB | 0.316 | 0.0536 | 1.36 | 0.170 | |||||

| Nachmias & Sansbury [6] | CS | 0.0079 | 0.00387 | 1.27 | 0.489 | ||||

| Mean of suprathreshold (non-starred) conditions | 1.32 | 0.298 | 1.82 | 0.297 | |||||

| Median of suprathreshold conditions | 1.38 | 0.274 | 1.49 | 0.267 | |||||

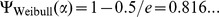

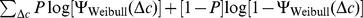

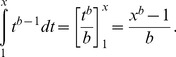

This table shows Weibull parameters fitted to 2AFC contrast discrimination data from six studies. The data from Meese et al. [4] are for their Binocular condition (plotted as squares in their Figure 5); these data were kindly provided by Tim Meese. For the other five papers, we read off the data points from digital scans of the figures (Bird et al. [34], Figure 1; Foley and Legge [1], Figure 1; Henning et al. [47], Figure 4 (sine wave stimuli only); Henning & Wichmann [40], Figure 4; Nachmias & Sansbury [6], Figure 2). In most cases, these figures plotted the proportion correct,  , for several different contrast differences,

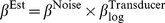

, for several different contrast differences,  , and we fitted the Weibull function using a maximum-likelihood method; specifically, we fitted the Weibull function by maximizing the expression

, and we fitted the Weibull function using a maximum-likelihood method; specifically, we fitted the Weibull function by maximizing the expression  , where

, where  is the Weibull function whose parameters were being fitted. In Henning & Wichmann's [40] paper, the figures plotted the

is the Weibull function whose parameters were being fitted. In Henning & Wichmann's [40] paper, the figures plotted the  values corresponding to 60%, 75%, and 90% correct on the fitted psychometric functions, so we had to fit Weibull functions to points sampled from Henning & Wichmann's own fitted psychometric functions, rather than to the raw data. Where possible, we fitted both the lapse-free Weibull function of Equation (1), and the Weibull function of Equation (2), which includes a fitted lapse rate parameter,

values corresponding to 60%, 75%, and 90% correct on the fitted psychometric functions, so we had to fit Weibull functions to points sampled from Henning & Wichmann's own fitted psychometric functions, rather than to the raw data. Where possible, we fitted both the lapse-free Weibull function of Equation (1), and the Weibull function of Equation (2), which includes a fitted lapse rate parameter,  . Parameters for the former fit appear under the heading “

. Parameters for the former fit appear under the heading “ ”, and those for the latter appear under the heading “

”, and those for the latter appear under the heading “ fitted”. In many cases, the data did not sufficiently constrain

fitted”. In many cases, the data did not sufficiently constrain  because there were no data points on the saturating portion of the psychometric function; in addition, Meese et al.'s Weibull fits did not include a lapse rate parameter. The Weber fraction,

because there were no data points on the saturating portion of the psychometric function; in addition, Meese et al.'s Weibull fits did not include a lapse rate parameter. The Weber fraction,  , is given by

, is given by  , where

, where  is the pedestal value. The means and medians at the bottom of the table are calculated from those studies for which the pedestal level exceeds the detection threshold, so that both stimuli were clearly visible. The cases where the pedestal is below detection threshold are starred in the table, and these were excluded from the means and medians.

is the pedestal value. The means and medians at the bottom of the table are calculated from those studies for which the pedestal level exceeds the detection threshold, so that both stimuli were clearly visible. The cases where the pedestal is below detection threshold are starred in the table, and these were excluded from the means and medians.

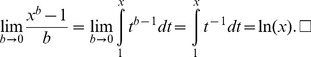

Figure 1. Distribution of fitted Weibull  values in Table 1.

values in Table 1.

The fitted  values from the suprathreshold (non-starred) conditions of Table 1 were dropped into bins with edges that stepped from 0.8 to 2.4 in jumps of 0.2 (the histogram thus excludes one outlier, the value 6.70 for Henning et al. 's [47] observer GBH at 8.37 cpd). For this histogram, we used the

values from the suprathreshold (non-starred) conditions of Table 1 were dropped into bins with edges that stepped from 0.8 to 2.4 in jumps of 0.2 (the histogram thus excludes one outlier, the value 6.70 for Henning et al. 's [47] observer GBH at 8.37 cpd). For this histogram, we used the  values that had been fitted using a nonzero lapse rate parameter where available, as this is more likely to reflect the true

values that had been fitted using a nonzero lapse rate parameter where available, as this is more likely to reflect the true  . The median of this hybrid population (some including a lapse rate parameter, some not) was 1.43 (indicated by the vertical dashed line).

. The median of this hybrid population (some including a lapse rate parameter, some not) was 1.43 (indicated by the vertical dashed line).

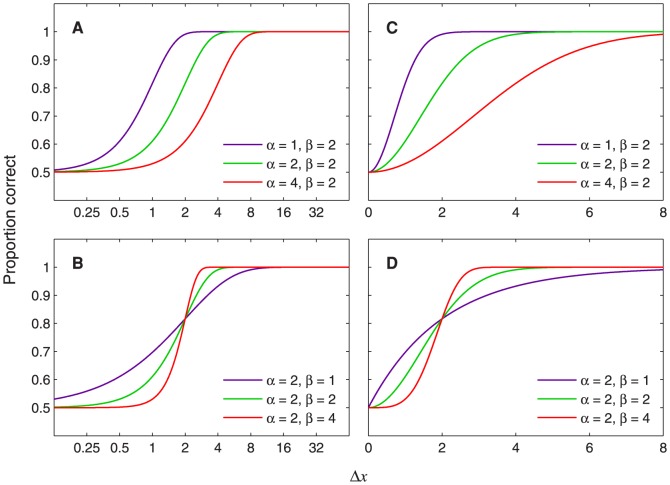

When  is plotted on a log abscissa, changing the value of

is plotted on a log abscissa, changing the value of  shifts the function horizontally, but otherwise leaves it unchanged (Figure 2A), and changing the value of

shifts the function horizontally, but otherwise leaves it unchanged (Figure 2A), and changing the value of  linearly stretches or compresses the function horizontally, leading to a change of slope (Figure 2B). On this log abscissa, the Weibull function always has the same basic shape, up to a linear horizontal scaling. When

linearly stretches or compresses the function horizontally, leading to a change of slope (Figure 2B). On this log abscissa, the Weibull function always has the same basic shape, up to a linear horizontal scaling. When  is plotted on a linear abscissa, changing the value of

is plotted on a linear abscissa, changing the value of  linearly stretches or compresses the function horizontally as well as changing the threshold (Figure 2C), while changing the value of

linearly stretches or compresses the function horizontally as well as changing the threshold (Figure 2C), while changing the value of  changes the shape of the function in a way that cannot be described as a linear horizontal scaling (Figure 2D).

changes the shape of the function in a way that cannot be described as a linear horizontal scaling (Figure 2D).

Figure 2. Effect of varying Weibull  and

and  on log and linear abscissas.

on log and linear abscissas.

(A) Varying  on a log abscissa: The curve shifts horizontally. (B) Varying

on a log abscissa: The curve shifts horizontally. (B) Varying  on a log abscissa: The curve is linearly stretched or compressed horizontally. (C) Varying

on a log abscissa: The curve is linearly stretched or compressed horizontally. (C) Varying  on a linear abscissa: The curve undergoes a linear horizontal stretch and a change of threshold. (D) Varying

on a linear abscissa: The curve undergoes a linear horizontal stretch and a change of threshold. (D) Varying  on a linear abscissa: The shape changes in a way that cannot be described as a linear scaling.

on a linear abscissa: The shape changes in a way that cannot be described as a linear scaling.

Since  is proportional to the slope of the Weibull function on a log abscissa, the low value of

is proportional to the slope of the Weibull function on a log abscissa, the low value of  for contrast discrimination (compared with detection) often leads to the psychometric function for discrimination being described as “shallow”, and that for detection as “steep”. However, psychometric functions for contrast discrimination can be steeper than for detection when plotted on a linear contrast abscissa (e.g., Nachmias & Sansbury [6], Figure 2; Foley & Legge [1], Figure 1). We must therefore be vigilant not to be misled by the common practice of referring to

for contrast discrimination (compared with detection) often leads to the psychometric function for discrimination being described as “shallow”, and that for detection as “steep”. However, psychometric functions for contrast discrimination can be steeper than for detection when plotted on a linear contrast abscissa (e.g., Nachmias & Sansbury [6], Figure 2; Foley & Legge [1], Figure 1). We must therefore be vigilant not to be misled by the common practice of referring to  as the “slope” parameter.

as the “slope” parameter.  does control the slope of the Weibull function on a log abscissa, and this fact plays a key role in the proof of Theorem 2 of this paper, but the psychometric function is often plotted on a linear abscissa, and, in this case,

does control the slope of the Weibull function on a log abscissa, and this fact plays a key role in the proof of Theorem 2 of this paper, but the psychometric function is often plotted on a linear abscissa, and, in this case,  and

and  both affect the slope (Figures 2C and 2D); on a linear abscissa,

both affect the slope (Figures 2C and 2D); on a linear abscissa,  additionally controls the threshold and

additionally controls the threshold and  additionally controls the overall shape of the psychometric function. Thus, when considering a linear abscissa, it would be more appropriate to describe

additionally controls the overall shape of the psychometric function. Thus, when considering a linear abscissa, it would be more appropriate to describe  as the “shape” parameter, rather than the “slope” parameter.

as the “shape” parameter, rather than the “slope” parameter.

The Weibull function defined in Equation (1) asymptotes to perfect performance ( ). This is rarely achieved by human observers due to lapses of concentration, etc., and this can lead to a dramatic underestimation of

). This is rarely achieved by human observers due to lapses of concentration, etc., and this can lead to a dramatic underestimation of  if the observer makes just one lapse on an easy trial [7]. Because of this problem, many researchers use a version of the Weibull function that includes a “lapse rate” parameter,

if the observer makes just one lapse on an easy trial [7]. Because of this problem, many researchers use a version of the Weibull function that includes a “lapse rate” parameter,  :

:

| (2) |

This function asymptotes to  , and reduces to Equation (1) in the case of

, and reduces to Equation (1) in the case of  . The psychometric function described by Equation (2) would result if the observer performed according to Equation (1) on a proportion

. The psychometric function described by Equation (2) would result if the observer performed according to Equation (1) on a proportion  of trials, and guessed randomly on the remaining trials.

of trials, and guessed randomly on the remaining trials.

The Weibull function was originally proposed by Weibull [8] as a useful, general-purpose statistical distribution. Its widespread use as a psychometric function can be traced back to Quick [9], who was apparently unaware of Weibull's prior work. Quick proposed this function because, given certain assumptions, the Weibull function makes it easy to calculate how detection performance will be affected by adding extra stimulus components or increasing the size or duration of the stimulus, an approach that has become known as probability summation [10]–[13]. Quick focused on yes/no detection tasks, where the observer has to make a binary decision about a single stimulus (as opposed to the 2AFC tasks that we consider in this paper, in which the observer makes a binary decision about a pair of stimuli), but a similar analysis can be applied to 2AFC tasks [2].

Most treatments of probability summation with the Weibull function invoke the “high threshold assumption” that a zero-contrast stimulus never elicits a response in the detection mechanism, so detection errors are always unlucky guesses. This assumption makes a number of predictions that have turned out to be false [2], [14]–[16]. Furthermore, the convenient mathematics of probability summation with the Weibull function only applies to detection. For suprathreshold discrimination, where both stimuli are easily detectable, these computational benefits do not apply. Despite this, many researchers have continued to use the Weibull function to fit data from both detection and discrimination experiments for three perfectly valid reasons: it is well-known, fits well to the data, and is built into QUEST [17], probably the most widely used adaptive psychophysical method.

Different models of visual processing will deliver different mathematical forms for the psychometric function. Therefore, because of the widespread practice of fitting a Weibull function to data, it is of interest to know what happens when we fit a Weibull function to a psychometric function that is not a Weibull. In Theorem 2 of this paper, we derive a general analytical expression that gives a very accurate approximation of  when the Weibull function is fitted to non-Weibull psychometric functions.

when the Weibull function is fitted to non-Weibull psychometric functions.

Although the usage of the Weibull function has its origin in outdated theoretical views, the Weibull function has very recently become more relevant again, due to the work of Neri [18]. He argues that the internal noise on the decision variable has a Laplace distribution, which, as we explain later in this Introduction, can lead to a psychometric function that has the form of a Weibull function with  .

.

First, we consider how the psychometric function might arise from the properties of the observer. In 2AFC discrimination experiments, the observer can be modelled using a transducer, followed by constant additive noise. The transducer converts the stimulus value,  , into some internal scalar signal value,

, into some internal scalar signal value,  .

.  is called the transducer function. A noise sample from a stationary, stimulus-invariant distribution is then added to the internal signal,

is called the transducer function. A noise sample from a stationary, stimulus-invariant distribution is then added to the internal signal,  , to give a noisy internal signal value. If the noise has zero mean, then

, to give a noisy internal signal value. If the noise has zero mean, then  will be the mean internal signal for stimulus value

will be the mean internal signal for stimulus value  . The observer compares the noisy internal signal values from the two stimuli, and chooses the stimulus that gave the higher value.

. The observer compares the noisy internal signal values from the two stimuli, and chooses the stimulus that gave the higher value.

From the experimenter's perspective, the observer behaves as if a sample of noise,  , is added to the difference of mean signals,

, is added to the difference of mean signals,  , given by

, given by

| (3) |

The observer is correct when  , i.e. when

, i.e. when  . The probability,

. The probability,  , of this happening is given by

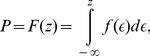

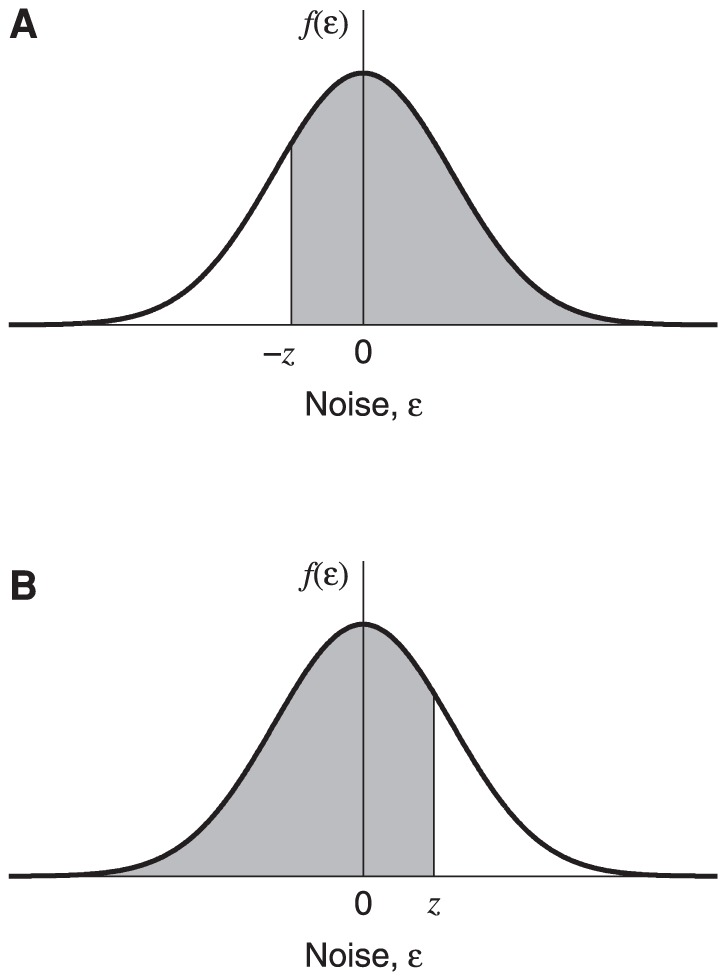

, of this happening is given by

|

(4) |

where  is the probability density function (PDF) of the noise,

is the probability density function (PDF) of the noise,  . This integral corresponds to the shaded area in Figure 3A.

. This integral corresponds to the shaded area in Figure 3A.  has to be even-symmetric, even if the noise added to the output of the transducer is not. This is because the noise sample on

has to be even-symmetric, even if the noise added to the output of the transducer is not. This is because the noise sample on  is equal to the noise sample on the target minus the noise sample on the nontarget. This is equivalent to swapping the sign of the nontarget noise sample and adding it to the target noise sample. The sign-reversed noise sample on the nontarget will have a PDF with mirror symmetry relative to the PDF of the noise sample on the target, so the sum of these two values will have an even-symmetric PDF. From the even symmetry of

is equal to the noise sample on the target minus the noise sample on the nontarget. This is equivalent to swapping the sign of the nontarget noise sample and adding it to the target noise sample. The sign-reversed noise sample on the nontarget will have a PDF with mirror symmetry relative to the PDF of the noise sample on the target, so the sum of these two values will have an even-symmetric PDF. From the even symmetry of  we have

we have

|

(5) |

where  is the cumulative distribution function (CDF) of the observer's noise on the internal difference signal, and corresponds to the shaded area in Figure 3B. So the psychometric function for 2AFC discrimination, expressed as a function of

is the cumulative distribution function (CDF) of the observer's noise on the internal difference signal, and corresponds to the shaded area in Figure 3B. So the psychometric function for 2AFC discrimination, expressed as a function of  , will trace out the positive half of the internal noise CDF, increasing from 0.5 to 1 as

, will trace out the positive half of the internal noise CDF, increasing from 0.5 to 1 as  increases from 0 to

increases from 0 to  .

.

Figure 3. Graphical representation of the probability of a correct response.

The shaded areas in A and B correspond to the integrals in Equations (4) and (5), respectively. The smooth curves trace out the PDF of the noise,  , on the internal difference signal,

, on the internal difference signal,  . As explained in the text,

. As explained in the text,  has to be even-symmetric, and this means that the two integrals in Equations (4) and (5) are equal. The shaded areas correspond to the probability of a correct response. The psychometric function (expressed as a function of

has to be even-symmetric, and this means that the two integrals in Equations (4) and (5) are equal. The shaded areas correspond to the probability of a correct response. The psychometric function (expressed as a function of  ) is the CDF of the noise, increasing from 0.5 to 1 as

) is the CDF of the noise, increasing from 0.5 to 1 as  increases from 0 to

increases from 0 to  .

.

Figure 4 plots the CDFs and PDFs for several different forms of noise distribution (the mathematical definitions of these distributions will be given later). These CDFs (plotted as functions of  ) do not have a sigmoidal shape: The point of inflection is at zero on the abscissa. This is because the point of inflection corresponds to the peak of the derivative, and the derivative of these functions is the noise PDF, which peaks at 0 in each case.

) do not have a sigmoidal shape: The point of inflection is at zero on the abscissa. This is because the point of inflection corresponds to the peak of the derivative, and the derivative of these functions is the noise PDF, which peaks at 0 in each case.

Figure 4. CDFs and PDFs of four different noise distributions.

The top row shows noise CDFs,  , for (A) a Laplace distribution (generalized Gaussian with

, for (A) a Laplace distribution (generalized Gaussian with  ), (B) a Gaussian distribution (generalized Gaussian with

), (B) a Gaussian distribution (generalized Gaussian with  ), (C) a generalized Gaussian with

), (C) a generalized Gaussian with  , (D) a logistic distribution. Each panel in the bottom row shows the PDF,

, (D) a logistic distribution. Each panel in the bottom row shows the PDF,  , corresponding to the CDF above it. Only the positive halves of the distributions are shown (i.e.

, corresponding to the CDF above it. Only the positive halves of the distributions are shown (i.e.  ). Note that the use of these colours for the different noise distributions is maintained in Figures 7, 8, 10, 11, 12, 15, and 16.

). Note that the use of these colours for the different noise distributions is maintained in Figures 7, 8, 10, 11, 12, 15, and 16.

In summary,  is the CDF of the internal noise, and takes an input of

is the CDF of the internal noise, and takes an input of  (Equation (5));

(Equation (5));  is the output of

is the output of  , a function that is determined by the transducer and pedestal, and takes an input of

, a function that is determined by the transducer and pedestal, and takes an input of  (Equation (3)). The composition of these two functions,

(Equation (3)). The composition of these two functions,  , gives the observer's psychometric function when it is plotted as a function of

, gives the observer's psychometric function when it is plotted as a function of  . We use

. We use  to represent this composition of functions:

to represent this composition of functions:

|

(6) |

If we fit the Weibull function,  , of Equation (1) to the psychometric function,

, of Equation (1) to the psychometric function,  , of Equation (6), then the Weibull slope parameter,

, of Equation (6), then the Weibull slope parameter,  , will be determined by both the noise CDF,

, will be determined by both the noise CDF,  , and the transducer,

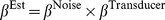

, and the transducer,  . In Theorem 2, we show that, to a good approximation,

. In Theorem 2, we show that, to a good approximation,  can be partitioned into a product of two factors,

can be partitioned into a product of two factors,  and

and  .

.  estimates the

estimates the  of the Weibull function that fits best to the noise CDF, while

of the Weibull function that fits best to the noise CDF, while  depends on the transducer function. Weibull

depends on the transducer function. Weibull  is found by multiplying these two factors together. We derive general analytical formulae for both factors, and then derive, from these formulae, specific expressions for

is found by multiplying these two factors together. We derive general analytical formulae for both factors, and then derive, from these formulae, specific expressions for  for a variety of noise distributions, and specific expressions for

for a variety of noise distributions, and specific expressions for  for several commonly used transducer functions.

for several commonly used transducer functions.

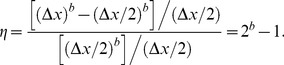

Our work greatly extends a result previously published by Pelli [19]. He showed that, for 2AFC detection or discrimination,

| (7) |

where  is the slope of

is the slope of  [20] against

[20] against  on log-log axes. Pelli derived this relationship using the concrete example of contrast detection, but it is a purely mathematical relationship (outlined in his “Analysis” section, Ref. [19], p. 121), which makes no assumptions about the underlying model, and could equally well be applied to discrimination along any unspecified stimulus dimension by replacing the contrast term,

on log-log axes. Pelli derived this relationship using the concrete example of contrast detection, but it is a purely mathematical relationship (outlined in his “Analysis” section, Ref. [19], p. 121), which makes no assumptions about the underlying model, and could equally well be applied to discrimination along any unspecified stimulus dimension by replacing the contrast term,  , with

, with  in his Equations (14) to (21).

in his Equations (14) to (21).

Pelli's analysis ran as follows. Given the definition of  for 2AFC,

for 2AFC,

| (8) |

(where  is the cumulative Gaussian), and the observation or assumption

is the cumulative Gaussian), and the observation or assumption

(where

(where  is the value of

is the value of  corresponding to a proportion correct of

corresponding to a proportion correct of  , giving

, giving  , and

, and  is the log-log slope of

is the log-log slope of  against

against  ), we have

), we have

| (9) |

Note that Equation (9) has the same form as Equation (6) if the pedestal,  , is zero, the transducer is a power function,

, is zero, the transducer is a power function,  , and the internal noise CDF is the cumulative Gaussian (as is usually assumed). If we let

, and the internal noise CDF is the cumulative Gaussian (as is usually assumed). If we let  represent the

represent the  of the Weibull function,

of the Weibull function,  , that fits best to the cumulative Gaussian,

, that fits best to the cumulative Gaussian,  , then, substituting this Weibull function for

, then, substituting this Weibull function for  in Equation (9) yields a Weibull function with

in Equation (9) yields a Weibull function with  given by

given by  , which is Relation (7).

, which is Relation (7).

In our terms, the “ ” part of Relation (7) is

” part of Relation (7) is  , the factor determined by the transducer; we will show that, in the case of a power-function transducer and zero pedestal, our general expression for

, the factor determined by the transducer; we will show that, in the case of a power-function transducer and zero pedestal, our general expression for  reduces to

reduces to  . We obtain Weibull

. We obtain Weibull  by multiplying

by multiplying  and

and  together, resulting in an estimated

together, resulting in an estimated  given by

given by  , which is equal to

, which is equal to  in the scenario just described. In this paper, we derive general analytical expressions for

in the scenario just described. In this paper, we derive general analytical expressions for  and

and  so that we can easily estimate Weibull

so that we can easily estimate Weibull  for any combination of noise distribution and transducer function, not just the specific case considered by Pelli.

for any combination of noise distribution and transducer function, not just the specific case considered by Pelli.

In many situations, the observer can be modelled using a linear filter. This is equivalent to using a linear transducer,  , where

, where  is a constant. For this transducer, Equation (6) gives

is a constant. For this transducer, Equation (6) gives

| (10) |

Thus, the linear observer's psychometric function (plotted on a linear abscissa,  ) will have the same basic shape as the internal noise CDF,

) will have the same basic shape as the internal noise CDF,  , just differing by a horizontal scaling factor,

, just differing by a horizontal scaling factor,  . So, if the observer behaves in a linear fashion, the psychometric function plotted on linear axes gives us a direct plot of the shape of the internal noise CDF. In this situation, since

. So, if the observer behaves in a linear fashion, the psychometric function plotted on linear axes gives us a direct plot of the shape of the internal noise CDF. In this situation, since  controls the Weibull function's shape on linear axes, the

controls the Weibull function's shape on linear axes, the  that fits best to the psychometric function will be the

that fits best to the psychometric function will be the  that fits best to the noise CDF (the sensitivity parameter,

that fits best to the noise CDF (the sensitivity parameter,  , will determine the best-fitting

, will determine the best-fitting  , since

, since  controls the Weibull function's horizontal scaling on linear axes).

controls the Weibull function's horizontal scaling on linear axes).

The internal noise is usually assumed to be Gaussian, but Neri [18] has recently disputed this assumption. Using reverse correlation methods, he attempted to measure both the “deterministic transformation” (in our terms, the transducer function for contrast), and the shape of the internal noise distribution. He concluded that, for temporal 2AFC detection of a bright bar in noise, the contrast transducer was linear, and the internal noise had a Laplace distribution (whose CDF and PDF are given in Figures 4A and 4E, respectively). This is a radical departure from the Gaussian assumption that has usually been made since the invention of signal detection theory in the 1950s [14]. The Laplace distribution has higher kurtosis (i.e., has a sharper peak and heavier tails) than the Gaussian (compare Figure 4E with 4F). As we shall see later on, for positive  , the Laplace distribution has a CDF that takes the form of a Weibull function with

, the Laplace distribution has a CDF that takes the form of a Weibull function with  . Since the psychometric function has the same shape as the internal noise CDF for a linear observer, Neri's proposal that the transducer is linear and the internal noise has a stimulus-independent Laplace distribution predicts that the observer's psychometric function should, like the Laplace CDF, be a Weibull function with

. Since the psychometric function has the same shape as the internal noise CDF for a linear observer, Neri's proposal that the transducer is linear and the internal noise has a stimulus-independent Laplace distribution predicts that the observer's psychometric function should, like the Laplace CDF, be a Weibull function with  . As noted earlier (and shown in Table 1 and Figure 1), this does not generally seem to be the case – with noise-free stimuli,

. As noted earlier (and shown in Table 1 and Figure 1), this does not generally seem to be the case – with noise-free stimuli,  is around 3 for contrast detection and, even for suprathreshold contrast discrimination, where

is around 3 for contrast detection and, even for suprathreshold contrast discrimination, where  is substantially lower, it is still usually found to be greater than 1; later, we shall show that, assuming additive noise, these

is substantially lower, it is still usually found to be greater than 1; later, we shall show that, assuming additive noise, these  values are more consistent with a distribution that has lower kurtosis than a Gaussian.

values are more consistent with a distribution that has lower kurtosis than a Gaussian.

Although the whole of this paper is couched in terms of the transducer model, it is not necessary to accept the transducer model to find the results useful; we just have to assume that the psychometric function has a form consistent with a particular combination of internal noise distribution and transducer function. For example, the intrinsic uncertainty model produces psychometric functions that are consistent with additive noise following an expansive power-function transducer with exponent that increases with channel uncertainty [21], but the model itself has no explicit transducer. Alternatively, suppose the observer carries out the discrimination task by making noisy estimates of each stimulus value and comparing them. Due to the noise, repeated presentations of the same stimulus value,  , will give a distribution of estimated values,

, will give a distribution of estimated values,  , around the mean estimate. If we can find a function,

, around the mean estimate. If we can find a function,  , such that the shape and width of the distribution of

, such that the shape and width of the distribution of  is independent of

is independent of  , then the observer is equivalent to a transducer model with additive noise. In this class of model, the stimulus value,

, then the observer is equivalent to a transducer model with additive noise. In this class of model, the stimulus value,  , is transduced to give

, is transduced to give  , and then stimulus-independent noise is added to the signal. But we do not have to assume that this is literally how the observer works – the noisy estimates of the stimulus values could have arisen from all sorts of mechanisms, not just a transducer followed by additive noise.

, and then stimulus-independent noise is added to the signal. But we do not have to assume that this is literally how the observer works – the noisy estimates of the stimulus values could have arisen from all sorts of mechanisms, not just a transducer followed by additive noise.

In keeping with our terminology of  for the threshold performance level, we introduce the terms

for the threshold performance level, we introduce the terms  and

and  to represent the values of

to represent the values of  and

and  at threshold, i.e. the values of

at threshold, i.e. the values of  and

and  when the proportion correct is

when the proportion correct is  , which we define as

, which we define as  .

.

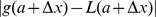

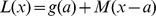

Theorem 1: A General Expression for the Psychometric Function in Terms of the Stimulus Values and the Threshold

Introduction

Equation (6) gives a general equation for the psychometric function in terms of the transducer function,  , and the noise CDF,

, and the noise CDF,  . The sensitivity of the system (which determines the discrimination threshold,

. The sensitivity of the system (which determines the discrimination threshold,  ) can be adjusted either by changing the gain of the transducer function (i.e., stretching or compressing

) can be adjusted either by changing the gain of the transducer function (i.e., stretching or compressing  along its vertical axis), or by adjusting the spread of the noise CDF (i.e., stretching or compressing

along its vertical axis), or by adjusting the spread of the noise CDF (i.e., stretching or compressing  along its horizontal axis), or both. Since the units in which we express the internal signal are arbitrary, researchers will usually either (1) fix the spread of the noise CDF at some convenient standard value (say, unit variance), and vary the transducer gain to achieve the desired threshold, or (2) fix the gain of the transducer at some convenient standard value (say, unit gain), and vary the spread of the noise CDF to achieve the desired threshold. However, for our purposes, it is more convenient to reformulate Equation (6) so that both the spread of the noise CDF and the gain of the transducer are set to convenient values, and the threshold is specified directly. This allows us to consider general forms of the transducer and noise, without having to worry about specifying the gain of the transducer or spread of the noise correctly – the reformulated equation will take care of the spread of the psychometric function automatically. Theorem 1 derives an expression for the psychometric function that meets these requirements.

along its horizontal axis), or both. Since the units in which we express the internal signal are arbitrary, researchers will usually either (1) fix the spread of the noise CDF at some convenient standard value (say, unit variance), and vary the transducer gain to achieve the desired threshold, or (2) fix the gain of the transducer at some convenient standard value (say, unit gain), and vary the spread of the noise CDF to achieve the desired threshold. However, for our purposes, it is more convenient to reformulate Equation (6) so that both the spread of the noise CDF and the gain of the transducer are set to convenient values, and the threshold is specified directly. This allows us to consider general forms of the transducer and noise, without having to worry about specifying the gain of the transducer or spread of the noise correctly – the reformulated equation will take care of the spread of the psychometric function automatically. Theorem 1 derives an expression for the psychometric function that meets these requirements.

Statement of Theorem 1

Theorem 1 has three parts:

- The expression for the psychometric function,

, in Equation (6) can be rewritten as

, in Equation (6) can be rewritten as

where

(11)  is the stimulus difference corresponding to a performance level of

is the stimulus difference corresponding to a performance level of  .

. If we change the gain of the transducer by replacing the function

with

with  , this will have no effect on the psychometric function,

, this will have no effect on the psychometric function,  , in Equation (11).

, in Equation (11).Similarly, if we change the spread of the noise CDF by replacing the function

with

with  , this will have no effect on

, this will have no effect on  in Equation (11).

in Equation (11).

Proof of Theorem 1

First, let us substitute the threshold values of  and

and  into Equation (6):

into Equation (6):

| (12) |

Equation (12) can be rearranged to give

| (13) |

Since the left hand side of Equation (13) is equal to 1, we can multiply anything by this expression, and leave it unchanged. Multiplying the argument of  in (6) by this expression, we obtain Equation (11), which proves Part 1 of the theorem. If we replace the transducer,

in (6) by this expression, we obtain Equation (11), which proves Part 1 of the theorem. If we replace the transducer,  , in Equation (11) with one that has a different gain,

, in Equation (11) with one that has a different gain,  , the

, the  's will obviously cancel out, leaving the psychometric function,

's will obviously cancel out, leaving the psychometric function,  , unchanged, which proves Part 2 of the theorem. To prove Part 3 of the theorem, consider what happens if we replace the function,

, unchanged, which proves Part 2 of the theorem. To prove Part 3 of the theorem, consider what happens if we replace the function,  , in Equation (11) with one that has a different spread,

, in Equation (11) with one that has a different spread,  . Then the inverse function is given by

. Then the inverse function is given by  , and the

, and the  's cancel out:

's cancel out:

|

which is identical to Equation (11).□

Discussion of Theorem 1

Equation (11) gives us an expression for the psychometric function (parameterized by the threshold,  ) in which we can use any convenient standard form of the transducer function or noise distribution, without having to worry about setting the right gain or spread.

) in which we can use any convenient standard form of the transducer function or noise distribution, without having to worry about setting the right gain or spread.

Although, for most of this paper, we define the threshold as the stimulus difference that gives rise to a performance level,  , defined as

, defined as  , Theorem 1 actually holds for any value that

, Theorem 1 actually holds for any value that  could have taken.

could have taken.

Note that, in the special case of a zero pedestal ( ) and a transducer that gives zero output for zero input (

) and a transducer that gives zero output for zero input ( ), Equation (11) reduces to

), Equation (11) reduces to

| (14) |

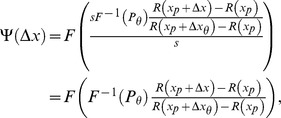

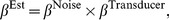

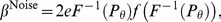

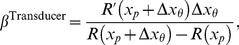

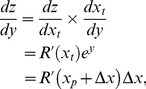

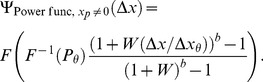

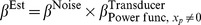

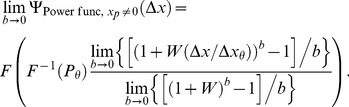

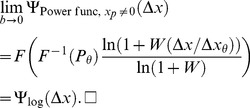

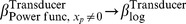

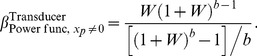

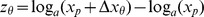

Theorem 2. An Expression That Estimates the Best-Fitting Weibull β for Unspecified Noise and Transducer

Statement of Theorem 2

Theorem 2 has two parts:

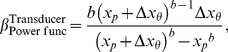

- If the parameters of the Weibull function,

, of Equation (1) can be set to provide a good fit to Equation (6), then the best-fitting beta will be well approximated by

, of Equation (1) can be set to provide a good fit to Equation (6), then the best-fitting beta will be well approximated by

where

(15)  and

and  are given by the following expressions:

are given by the following expressions:

(16)

and

(17)  and

and  are the derivatives of, respectively,

are the derivatives of, respectively,  and

and  with respect to their inputs.

with respect to their inputs.  is an estimate of the

is an estimate of the  of the Weibull function that fits best to the noise CDF,

of the Weibull function that fits best to the noise CDF,  , in Equation (6).

, in Equation (6).

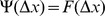

Proof of Theorem 2

By assumption, the Weibull function provides a close fit to  of Equation (6), so the gradient of

of Equation (6), so the gradient of  at threshold will closely match the gradient of the best-fitting Weibull function at threshold. Therefore, since

at threshold will closely match the gradient of the best-fitting Weibull function at threshold. Therefore, since  is proportional to the gradient of the Weibull function at threshold with an abscissa of

is proportional to the gradient of the Weibull function at threshold with an abscissa of  , we can derive a close approximation to

, we can derive a close approximation to  from the gradient of

from the gradient of  at threshold on this abscissa. To create a log abscissa, let

at threshold on this abscissa. To create a log abscissa, let  , so that

, so that

| (18) |

If we substitute  for

for  in Equation (1), we find that the gradient of the Weibull function on the log abscissa,

in Equation (1), we find that the gradient of the Weibull function on the log abscissa,  , is given by

, is given by

|

(19) |

For the Weibull function at threshold performance ( ), it follows that

), it follows that  . Substituting

. Substituting  for

for  in (19) gives

in (19) gives

| (20) |

and so

| (21) |

To evaluate Equation (21), we use the chain rule to expand the derivative:

| (22) |

As noted above, the assumed good fit of the Weibull function,  , of Equation (1) to

, of Equation (1) to  of Equation (6) means that the output,

of Equation (6) means that the output,  , of the Weibull function is close to the output of

, of the Weibull function is close to the output of  , which is the proportion correct,

, which is the proportion correct,  . Substituting

. Substituting  for

for  in Equation (22) therefore gives us a good estimate of

in Equation (22) therefore gives us a good estimate of  , which we call

, which we call  :

:

| (23) |

From Equation (6), we see that  , so

, so  is given by

is given by  , the noise PDF (which is the derivative of

, the noise PDF (which is the derivative of  with respect to

with respect to  ). At threshold,

). At threshold,  , and so,

, and so,

| (24) |

We will see that the first part of Equation (24),  , is proportional to

, is proportional to  , the

, the  -estimate of the Weibull function that fits best to the noise CDF, and the second part,

-estimate of the Weibull function that fits best to the noise CDF, and the second part,  at threshold, is proportional to

at threshold, is proportional to  defined above. Most of the work involves deriving an expression for

defined above. Most of the work involves deriving an expression for  at threshold.

at threshold.

Using Equation (18) to substitute for  in Equation (3), we get

in Equation (3), we get

| (25) |

Let us define  as the target stimulus value:

as the target stimulus value:

| (26) |

Using Equation (26) to substitute for  in Equation (25), we have

in Equation (25), we have

| (27) |

Then,

|

(28) |

where  is the derivative of

is the derivative of  with respect to its input. At threshold, we can substitute

with respect to its input. At threshold, we can substitute  for

for  in Equation (28), giving

in Equation (28), giving

| (29) |

Using Equation (29) to substitute for  in Equation (24), we obtain

in Equation (24), we obtain

| (30) |

From Equation (6), we have  , so, considering the values of

, so, considering the values of  and

and  at threshold,

at threshold,

| (31) |

Using Equation (31) to substitute for  in Equation (30), we have

in Equation (30), we have

| (32) |

To evaluate this expression as written in Equation (32), we need to know the gain of the transducer and the spread of the noise CDF, or at least their ratio. However, if we know the shape of the transducer (apart from the gain), and we know the shape of the noise CDF (apart from the spread), we can work out the ratio of gain to spread from  . But it is much more convenient to reformulate Equation (32) so that this is taken care of, and we can arbitrarily set the spread of the noise CDF and the gain of the transducer to any convenient values. We can use the same trick that we used in Theorem 1: We multiply the expression in Equation (32) by the left hand side of Equation (13), which equals 1. After doing this, and rearranging the terms, we obtain

. But it is much more convenient to reformulate Equation (32) so that this is taken care of, and we can arbitrarily set the spread of the noise CDF and the gain of the transducer to any convenient values. We can use the same trick that we used in Theorem 1: We multiply the expression in Equation (32) by the left hand side of Equation (13), which equals 1. After doing this, and rearranging the terms, we obtain

| (33) |

Equation (33) can be written in the form given by Equations (15) to (17), which proves the Part 1 of the theorem.

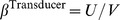

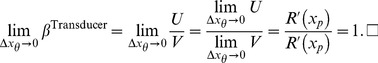

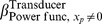

We now prove Part 2, that  is the estimated β of the Weibull function that fits best to the noise CDF,

is the estimated β of the Weibull function that fits best to the noise CDF,  . First, note that all linear transducers have the form

. First, note that all linear transducers have the form  . This gives

. This gives  , and so, from Equation (17),

, and so, from Equation (17),  , regardless of the value of

, regardless of the value of  ,

,  or

or  . Therefore, from (15),

. Therefore, from (15),  for a linear transducer. Now, consider the linear transducer

for a linear transducer. Now, consider the linear transducer  . For this transducer, Equation (6) gives

. For this transducer, Equation (6) gives  . The estimate of β when the Weibull function,

. The estimate of β when the Weibull function,  , is fitted to

, is fitted to  is given by

is given by  , as it will be for any linear transducer. Since, in this case,

, as it will be for any linear transducer. Since, in this case,  , the Weibull function has also been fitted to the noise CDF, and the estimated

, the Weibull function has also been fitted to the noise CDF, and the estimated  of this fitted function is given by

of this fitted function is given by  .□

.□

Discussion of Theorem 2

To get an intuition into how Weibull  is partitioned into the two terms,

is partitioned into the two terms,  and

and  , let us refer back to Equation (21). This equation shows that

, let us refer back to Equation (21). This equation shows that  is proportional to

is proportional to  at threshold. We used the chain rule to express

at threshold. We used the chain rule to express  as

as  , which is approximately equal to

, which is approximately equal to  .

.  depends only on the noise distribution, and is proportional to

depends only on the noise distribution, and is proportional to  ;

;  at threshold generally depends on the transducer, the pedestal and the threshold, and is proportional to

at threshold generally depends on the transducer, the pedestal and the threshold, and is proportional to  ; their product is proportional to Weibull

; their product is proportional to Weibull  . This is essentially where Equations (15) to (17) come from. The equations were tidied up by specifying the constants of proportionality, and defining

. This is essentially where Equations (15) to (17) come from. The equations were tidied up by specifying the constants of proportionality, and defining  and

and  in such a way that they are independent of any horizontal scaling of the noise distribution, or any vertical scaling of the transducer function. Thus, the

in such a way that they are independent of any horizontal scaling of the noise distribution, or any vertical scaling of the transducer function. Thus, the  term will be the same for, for example, all Gaussian distributions, whatever the spread, and the

term will be the same for, for example, all Gaussian distributions, whatever the spread, and the  term will be the same for, for example, all power functions with a particular exponent, whatever the gain.

term will be the same for, for example, all power functions with a particular exponent, whatever the gain.

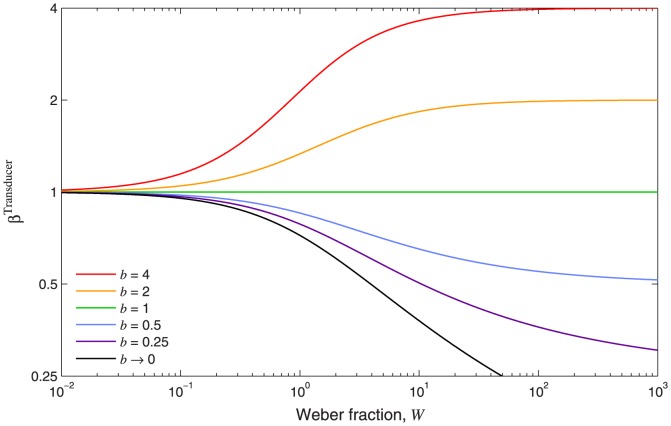

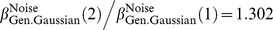

Equation (17) expresses  as a function of the threshold stimulus difference,

as a function of the threshold stimulus difference,  . Alternatively, for nonzero pedestals, we can reformulate Equation (17) as a function of the Weber fraction,

. Alternatively, for nonzero pedestals, we can reformulate Equation (17) as a function of the Weber fraction,  , defined as the ratio

, defined as the ratio  at threshold:

at threshold:

| (34) |

From Equation (34), we obtain  , and, using this expression to substitute for

, and, using this expression to substitute for  in Equation (17), we can rewrite the expression for

in Equation (17), we can rewrite the expression for  in terms of

in terms of  :

:

| (35) |

The Weber fraction can only be defined if  . If

. If  and

and  , Equation (17) reduces to

, Equation (17) reduces to

| (36) |

When the stimulus dimension of interest is contrast, a discrimination experiment with a zero pedestal is called a contrast detection experiment.

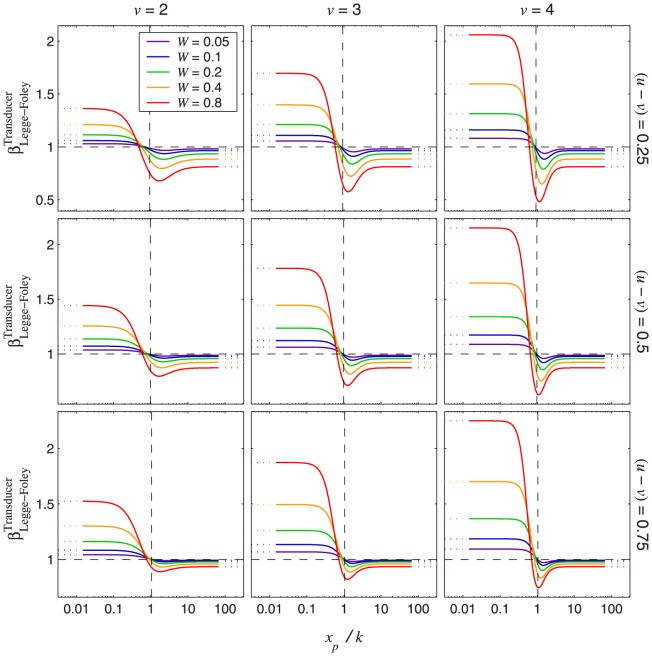

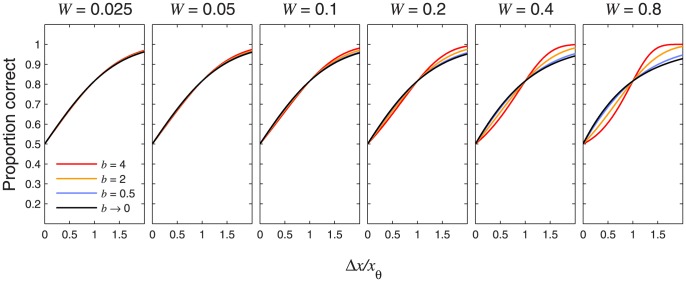

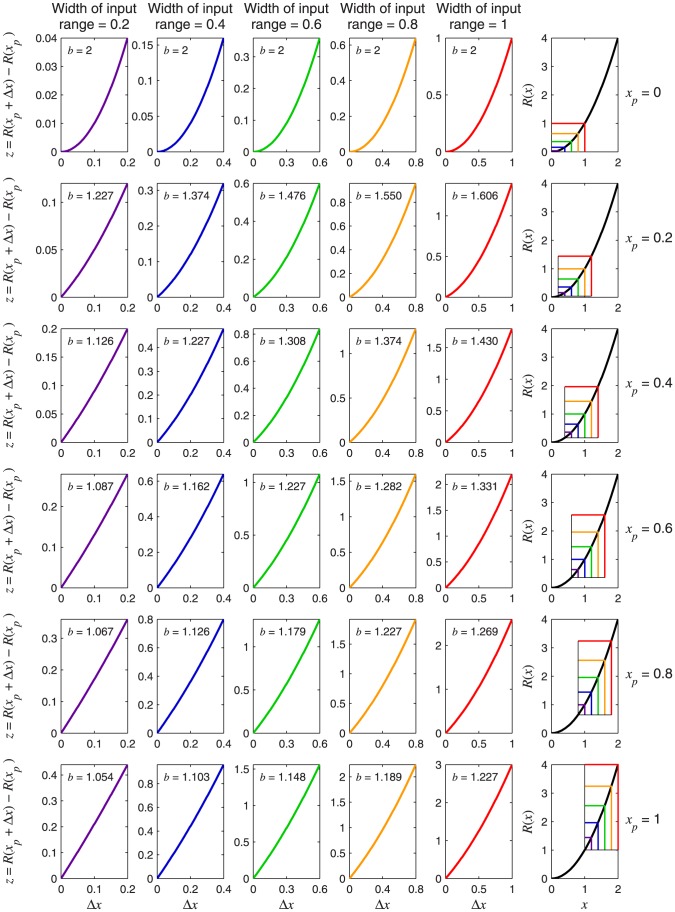

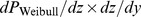

One important property of  is that it is always greater than 1 for a fully expansive transducer function (i.e., one for which the slope always increases away from zero with increasing input), and is always less than 1 for a fully compressive transducer function (i.e., one for which the slope always decreases towards zero with increasing input). Here we provide a geometrical argument (illustrated in Figure 5) to explain why this is the case.

is that it is always greater than 1 for a fully expansive transducer function (i.e., one for which the slope always increases away from zero with increasing input), and is always less than 1 for a fully compressive transducer function (i.e., one for which the slope always decreases towards zero with increasing input). Here we provide a geometrical argument (illustrated in Figure 5) to explain why this is the case.

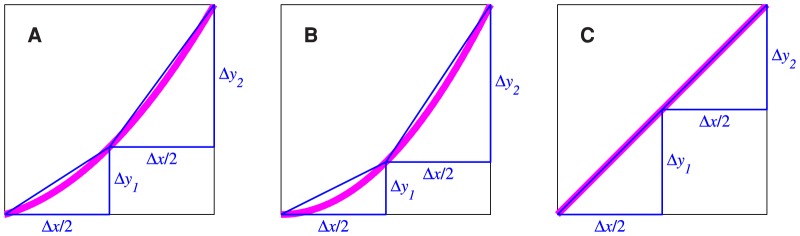

Figure 5. Geometrical interpretation of the expression for  .

.

In each panel, the thick, magenta curve represents the transducer function. The horizontal axes represent the transducer input, and the vertical axes represent the transducer output.  is the pedestal level, and

is the pedestal level, and  is the discrimination threshold. The gradient of the blue line,

is the discrimination threshold. The gradient of the blue line,  , is equal to

, is equal to  , defined in Equation (39). The green line is the tangent to the transducer at point

, defined in Equation (39). The green line is the tangent to the transducer at point  ; its gradient is equal to

; its gradient is equal to  , defined in Equation (38). The ratio

, defined in Equation (38). The ratio  is equal to

is equal to  . For an expansive transducer (panel A),

. For an expansive transducer (panel A),  , so

, so  . For a compressive transducer (panel B),

. For a compressive transducer (panel B),  , so

, so  . For a linear transducer (panel C),

. For a linear transducer (panel C),  , so

, so  .

.

First, note that we can rewrite Equation (17) as

| (37) |

where

| (38) |

and

| (39) |

with

| (40) |

These quantities are illustrated for an expansive transducer in Figure 5A, where the thick, magenta curve represents the transducer. The filled circles mark points  and

and  . The gradient of the blue line connecting these two points is

. The gradient of the blue line connecting these two points is  , defined in Equation (39). The short, green, line segment is the tangent to the transducer at

, defined in Equation (39). The short, green, line segment is the tangent to the transducer at  ; its gradient is

; its gradient is  , defined in Equation (38). It is clear from the diagram that, for an expansive transducer, like the one illustrated, the gradient of the transducer at

, defined in Equation (38). It is clear from the diagram that, for an expansive transducer, like the one illustrated, the gradient of the transducer at  must always be steeper than the blue line, because, as we travel along the transducer function from

must always be steeper than the blue line, because, as we travel along the transducer function from  to

to  , the transducer approaches the second point from below the blue line. Therefore,

, the transducer approaches the second point from below the blue line. Therefore,  must always be greater than

must always be greater than  , so, from Equation (37),

, so, from Equation (37),  must always be greater than 1.

must always be greater than 1.

Figure 5B illustrates the situation for a compressive transducer. Here, as we travel along the transducer function from  to

to  , the transducer approaches the second point from above the blue line, and so the gradient of the transducer at the second point must be lower than the gradient of the blue line. Thus,

, the transducer approaches the second point from above the blue line, and so the gradient of the transducer at the second point must be lower than the gradient of the blue line. Thus,  must always be less than

must always be less than  , so, from Equation (37),

, so, from Equation (37),  must always be less than 1.

must always be less than 1.

Finally, Figure 5C illustrates the situation for a linear transducer, i.e. one that is neither expansive nor compressive. Here, the gradient of the transducer is equal to the gradient of the blue line, so  , and therefore

, and therefore  . This provides a geometrical insight into the previously proved fact that

. This provides a geometrical insight into the previously proved fact that  for a linear transducer.

for a linear transducer.

In conclusion, Weibull  can be partitioned into two factors:

can be partitioned into two factors:  (Equation (16)), which estimates the

(Equation (16)), which estimates the  of the Weibull function that fits best to the noise CDF,

of the Weibull function that fits best to the noise CDF,  ; and

; and  (Equation (17), (35) or (36)), which is determined partly (or, as we shall see, sometimes completely) by the shape of the transducer function,

(Equation (17), (35) or (36)), which is determined partly (or, as we shall see, sometimes completely) by the shape of the transducer function,  .

.  is greater than 1 for an expansive transducer, less than 1 for a compressive transducer, and equal to 1 for a linear transducer.

is greater than 1 for an expansive transducer, less than 1 for a compressive transducer, and equal to 1 for a linear transducer.  is independent of the spread (i.e. horizontal scaling) of the CDF (analogously, Weibull β is independent of the spread of the Weibull function on linear axes);

is independent of the spread (i.e. horizontal scaling) of the CDF (analogously, Weibull β is independent of the spread of the Weibull function on linear axes);  is independent of the gain (i.e. vertical scaling) of the transducer. Multiplying

is independent of the gain (i.e. vertical scaling) of the transducer. Multiplying  and

and  together gives us

together gives us  , the estimate of Weibull

, the estimate of Weibull  . The expressions for

. The expressions for  and

and  derived above are completely general. In later sections, we derive values for

derived above are completely general. In later sections, we derive values for  given specific noise distributions, and expressions for

given specific noise distributions, and expressions for  given specific transducers.

given specific transducers.

There are two possible sources of error in the Weibull  estimate,

estimate,  . Firstly, the derivation of the expression for

. Firstly, the derivation of the expression for  relies on the use of

relies on the use of  as an approximation of

as an approximation of  at threshold in the step from Equation (22) to (23), where

at threshold in the step from Equation (22) to (23), where  is the output of the psychometric function,

is the output of the psychometric function,  , and

, and  is the output of the best-fitting Weibull function. The accuracy of

is the output of the best-fitting Weibull function. The accuracy of  relies on these two slopes being close at the threshold performance level. A second potential source of inaccuracy is that, even if these two slopes are very close at the threshold level, the overall psychometric function,

relies on these two slopes being close at the threshold performance level. A second potential source of inaccuracy is that, even if these two slopes are very close at the threshold level, the overall psychometric function,  , might still not be well fit by a Weibull function, in which case the best-fitting Weibull

, might still not be well fit by a Weibull function, in which case the best-fitting Weibull  could deviate substantially from

could deviate substantially from  . However, as we will show, in the range of conditions usually encountered, the Weibull function does provide a good fit to the psychometric function, so

. However, as we will show, in the range of conditions usually encountered, the Weibull function does provide a good fit to the psychometric function, so  is accurate. In cases where

is accurate. In cases where  is a Weibull function, the best-fitting Weibull function will fit exactly, and

is a Weibull function, the best-fitting Weibull function will fit exactly, and  gives the exact value of the best-fitting Weibull

gives the exact value of the best-fitting Weibull  .

.

Deriving  for Specific Noise Distributions

for Specific Noise Distributions

As proved in Theorem 2,  is an estimate of the

is an estimate of the  of the Weibull function that fits best to the noise CDF. In this section, we evaluate the analytical expression for

of the Weibull function that fits best to the noise CDF. In this section, we evaluate the analytical expression for  (Equation (16)) for several different noise distributions. We also compare each value with the

(Equation (16)) for several different noise distributions. We also compare each value with the  value obtained by fitting the Weibull function to the noise CDF numerically. There is of course no single correct answer to the question of what is the best-fitting

value obtained by fitting the Weibull function to the noise CDF numerically. There is of course no single correct answer to the question of what is the best-fitting  – it depends on both the fitting criterion and the points on the psychometric function that are sampled. When Pelli [19] fitted the Weibull function to the Gaussian CDF, he minimized the maximum error over all positive inputs. We instead performed a maximum-likelihood fit over all inputs from 0 to twice the threshold (actually, we approximated this by sampling the psychometric function in discrete steps of one thousandth of the threshold). Our rationale for this approach was that fitting the psychometric function is usually done by maximum likelihood, and the threshold usually falls around the middle of the set of stimulus values.

– it depends on both the fitting criterion and the points on the psychometric function that are sampled. When Pelli [19] fitted the Weibull function to the Gaussian CDF, he minimized the maximum error over all positive inputs. We instead performed a maximum-likelihood fit over all inputs from 0 to twice the threshold (actually, we approximated this by sampling the psychometric function in discrete steps of one thousandth of the threshold). Our rationale for this approach was that fitting the psychometric function is usually done by maximum likelihood, and the threshold usually falls around the middle of the set of stimulus values.

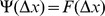

Evaluating  for a generalized Gaussian noise CDF

for a generalized Gaussian noise CDF

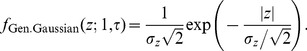

Most psychophysical models use Gaussian noise. This is partly because the Gaussian is often easy to handle analytically, but also because, according to the Central Limit Theorem, the sum of independent sources of noise tends towards a Gaussian-distributed random variable, whatever the distribution of the individual noise sources. However, as noted earlier, Neri [18] has recently argued that internal sensory noise is closer to a Laplace distribution. Both the Gaussian and the Laplace are parameterizations of the generalized Gaussian, which we consider in this section.

The generalized Gaussian CDF is given by the following expression, with horizontal scaling (i.e. spread) determined by  , and shape determined by

, and shape determined by  :

:

| (41) |

where  for

for  and

and  for

for  , and

, and  is the lower incomplete gamma function, defined as

is the lower incomplete gamma function, defined as

|

(42) |

in Equation (42) is the gamma function, which is a continuous generalization of the factorial, given by

in Equation (42) is the gamma function, which is a continuous generalization of the factorial, given by

|

(43) |

Note, the lower incomplete gamma function is often defined without the normalization term,  , but it is more convenient for us to define it as in Equation (42), because otherwise we would just have to divide by

, but it is more convenient for us to define it as in Equation (42), because otherwise we would just have to divide by  anyway, complicating the expression for the generalized Gaussian in Equation (41); in addition, the MATLAB function gammainc evaluates the function as defined in Equation (42).

anyway, complicating the expression for the generalized Gaussian in Equation (41); in addition, the MATLAB function gammainc evaluates the function as defined in Equation (42).

The variance of the generalized Gaussian distribution is given by

| (44) |

We use the subscript,  , in Equation (44) to indicate that this is the variance of the noise on the difference of mean signals,

, in Equation (44) to indicate that this is the variance of the noise on the difference of mean signals,  , as opposed to the variance of the noise on the transducer outputs, which we could call

, as opposed to the variance of the noise on the transducer outputs, which we could call  . As long as the noise on the two transducer outputs within a trial is uncorrelated and has zero mean, then we have

. As long as the noise on the two transducer outputs within a trial is uncorrelated and has zero mean, then we have  , and so

, and so  , whatever form the noise CDF takes.

, whatever form the noise CDF takes.

The PDF of the generalized Gaussian distribution is given by the derivative of the CDF:

| (45) |

As noted above, the shape of the distribution is determined by the parameter,  . When

. When  , Equation (45) describes the Gaussian PDF:

, Equation (45) describes the Gaussian PDF:

| (46) |

When  , Equation (45) describes the Laplace PDF:

, Equation (45) describes the Laplace PDF:

|

(47) |

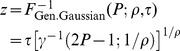

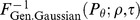

For positive  , the inverse of the generalized Gaussian CDF is given by

, the inverse of the generalized Gaussian CDF is given by

|

(48) |

(we don't need to worry about negative  , because, for any monotonically increasing transducer, and positive

, because, for any monotonically increasing transducer, and positive  ,

,  as defined in Equation (3) is always positive). The inverse of the lower incomplete gamma function,

as defined in Equation (3) is always positive). The inverse of the lower incomplete gamma function,  , in Equation (48) can be evaluated using the MATLAB function gammaincinv. At threshold,

, in Equation (48) can be evaluated using the MATLAB function gammaincinv. At threshold,  . Substituting these values into Equation (48), we get

. Substituting these values into Equation (48), we get

| (49) |

We can use the expression for  in Equation (49) to substitute for

in Equation (49) to substitute for  in Equation (16), and we can use the expression for

in Equation (16), and we can use the expression for  in Equation (45) to substitute for

in Equation (45) to substitute for  in Equation (16). The different instances of

in Equation (16). The different instances of  cancel out, giving us an expression for

cancel out, giving us an expression for  for the generalized Gaussian noise distribution that is a function of

for the generalized Gaussian noise distribution that is a function of  :

:

| (50) |

where

| (51) |

The subscript, “Gen.Gaussian”, on  in Equation (50) indicates the general form of the noise CDF.

in Equation (50) indicates the general form of the noise CDF.

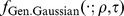

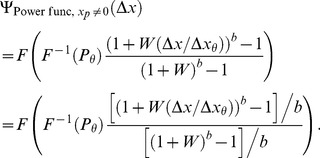

Figure 6 plots  as a function of

as a function of  . As proved in Appendix S1,

. As proved in Appendix S1,  as

as  . Values of

. Values of  for

for  = 1, 2, and 4 are given by

= 1, 2, and 4 are given by

| (52) |

| (53) |

| (54) |

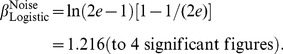

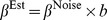

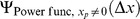

The value of  for the Laplace distribution (Equation (52)) is exactly 1. This is because the positive half of its CDF is a Weibull function with

for the Laplace distribution (Equation (52)) is exactly 1. This is because the positive half of its CDF is a Weibull function with  . This can be seen from the fact that

. This can be seen from the fact that  , and so Equation (41) gives, for positive

, and so Equation (41) gives, for positive  ,

,

| (55) |

The Weibull function with  therefore gives an exact fit to the Laplacian noise CDF, and the estimated Weibull

therefore gives an exact fit to the Laplacian noise CDF, and the estimated Weibull  , given by

, given by  , is exactly correct.

, is exactly correct.

Figure 6.

plotted as a function of

plotted as a function of  .

.

This curve plots the predicted  when the Weibull function is fitted to the CDF of generalized Gaussian distributions with a range of different

when the Weibull function is fitted to the CDF of generalized Gaussian distributions with a range of different  values. The graph asymptotes to a value of

values. The graph asymptotes to a value of  (see Appendix S1), indicated by the horizontal dashed line. The shape of the generalized Gaussian distribution is determined by

(see Appendix S1), indicated by the horizontal dashed line. The shape of the generalized Gaussian distribution is determined by  .

.  -values of 1 and 2 are special cases:

-values of 1 and 2 are special cases:  gives a Laplace distribution, and

gives a Laplace distribution, and  gives a Gaussian distribution.

gives a Gaussian distribution.

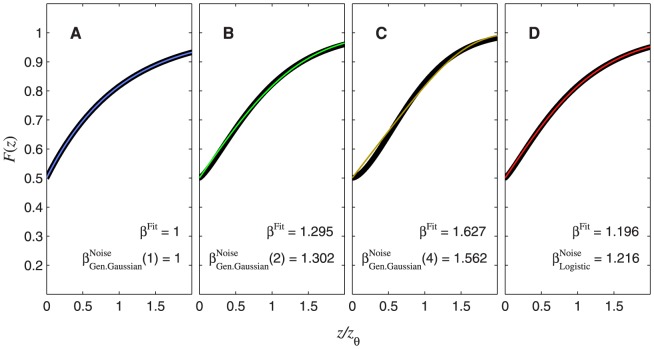

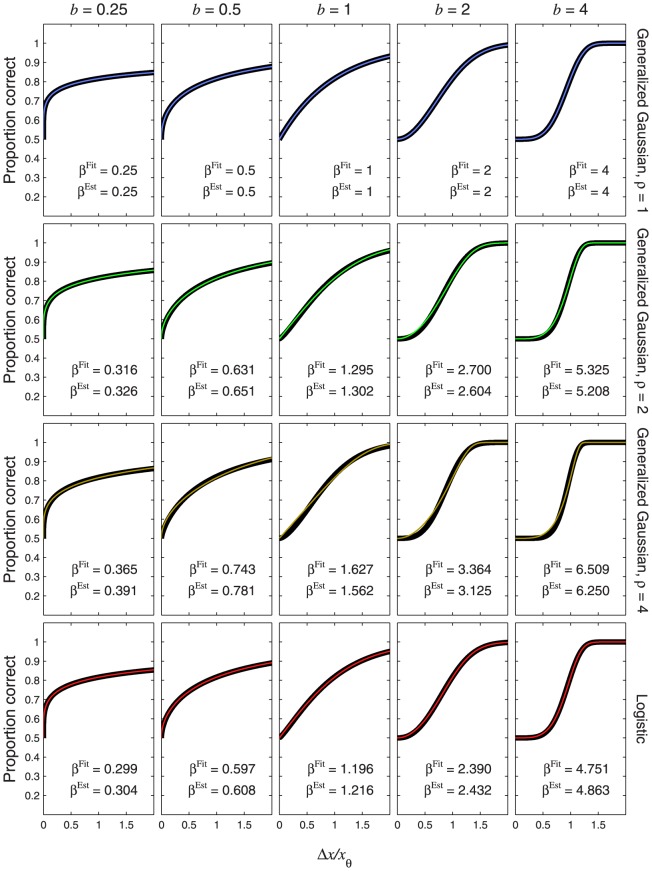

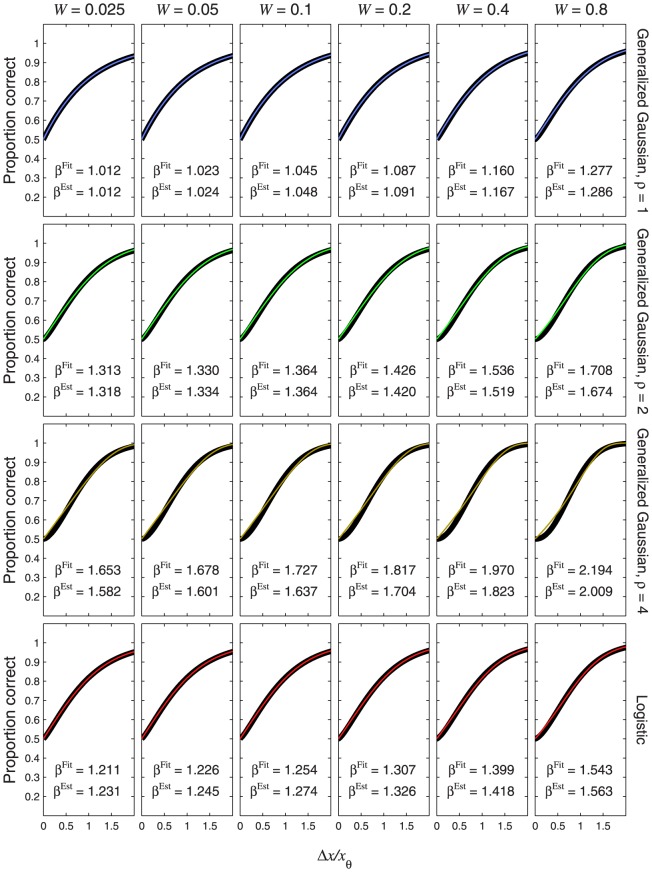

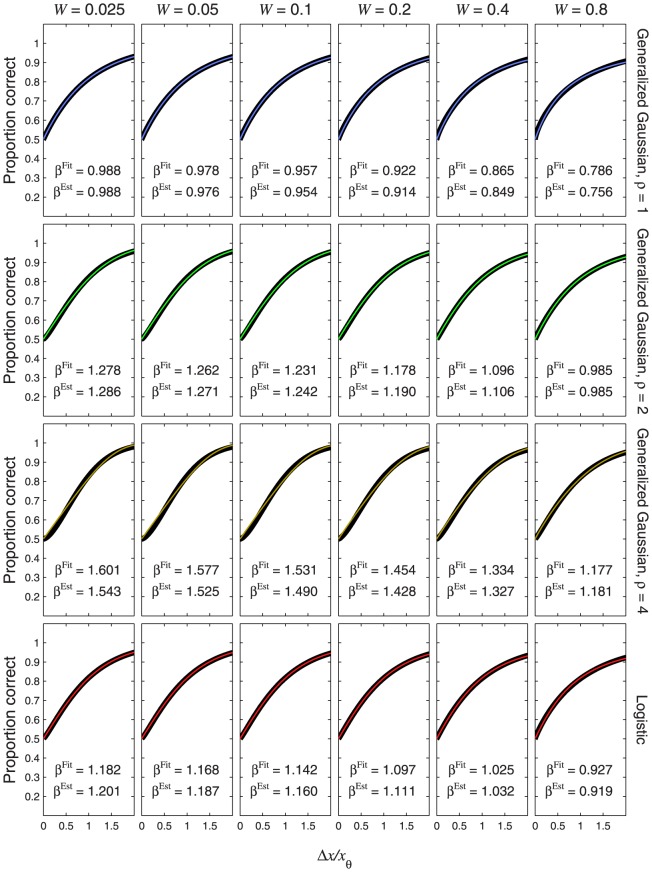

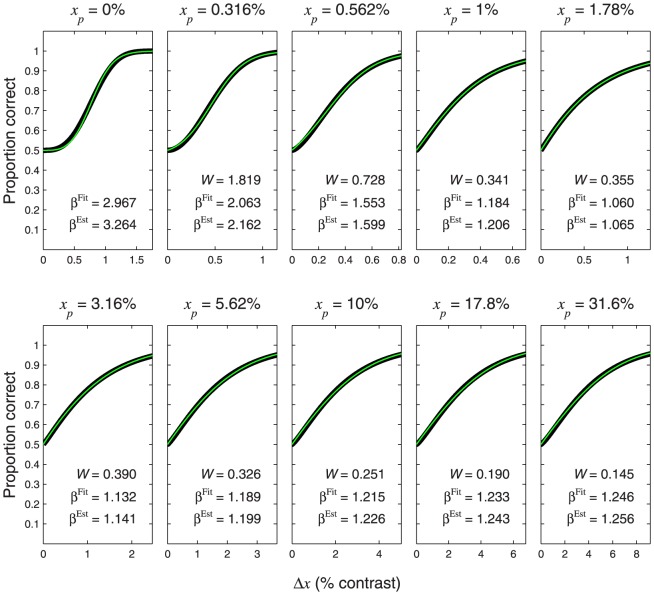

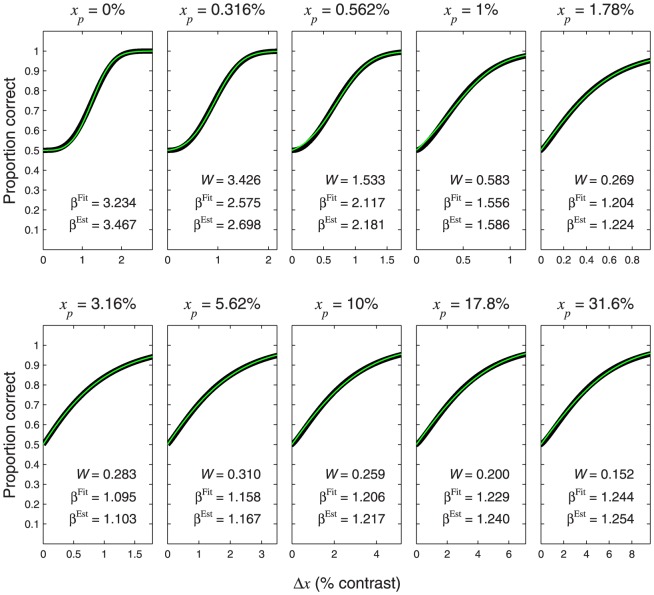

The coloured curves in Figures 7A, 7B, and 7C show the generalized Gaussian noise CDFs for  = 1, 2, and 4, respectively, and the thick, black curves show the best-fitting Weibull functions (maximum-likelihood fit over inputs from 0 to twice the threshold). Also shown in each panel is the appropriate value of

= 1, 2, and 4, respectively, and the thick, black curves show the best-fitting Weibull functions (maximum-likelihood fit over inputs from 0 to twice the threshold). Also shown in each panel is the appropriate value of  from equations (52) to (54), and the best-fitting Weibull