Abstract

The capacity of faces and (non-facial) emotional scenes, the two types of non-symbolic emotional stimuli most widely used in research on affective processes, to capture exogenous attention was compared. Negative, positive and neutral faces and affective scenes were presented as distracters to 34 participants while they carried out a demanding digit categorization task. Behavioral (reaction times and number of errors) and electrophysiological (event-related potentials—ERPs) indices of exogenous attention were analyzed. Globally, facial expressions and emotional scenes showed similar capabilities to attract exogenous attention. Electrophysiologically, attentional capture was reflected in the P2a component of ERPs at the scalp level, and in left precentral areas at the source level. Negatively charged faces and scenes elicited maximal P2a/precentral gyrus activity. In the case of scenes, this negativity bias was also evident at the behavioral level. Additionally, a specific effect of facial distracters was observed in N170 at the scalp level, and in the fusiform gyrus and inferior parietal lobule at the source level. This effect revealed maximal attention to positive expressions. This facial positivity offset was also observed at the behavioral level. Taken together, the present results indicate that faces and non-facial scenes elicit partially different and, to some extent, complementary exogenous attention mechanisms.

Keywords: exogenous attention, emotion, ERPs, fusiform gyrus, precentral gyrus

INTRODUCTION

Evolutionary success depends heavily on the efficiency of the nervous system in detecting biologically important events and reorienting processing resources to them. This efficiency relies on exogenous attention, also called automatic, stimulus-driven, or bottom–up attention, among several other terms. Therefore, exogenous attention can be understood as an adaptive tool that permits the detection and processing of biologically salient events even when the individual is engaged in a resource-consuming task. Indeed, several experiments show that emotional stimuli (by definition, important for the individual) presented as distracters interfere with the ongoing task (Constantine et al., 2001; Vuilleumier et al., 2001; Eastwood et al., 2003; Carretié et al., 2004a, 2009, 2011; Doallo et al., 2006; Huang and Luo, 2007; Thomas et al., 2007; Yuan et al., 2007). However, emotional stimuli need to exceed a critical threshold value to capture attention (Mogg and Bradley, 1998; Koster et al., 2004). This threshold depends on several factors, such as level of involvement in the ongoing cognitive task (Pessoa and Ungerleider, 2004; Schwartz et al., 2005) and the individual’s state and trait characteristics (Mogg and Bradley, 1998; Bishop, 2008). A third factor, particularly relevant for this study, is the nature and intensity of the emotional stimulus itself.

The rich variety of emotional stimuli that humans process in their everyday life has been categorized by experimental practice according to their physical nature. Within the visual modality, stimuli can be divided into symbolic (e.g. written emotional language, signs or simple drawings) and non-symbolic material. The latter, important in evolutionary terms and the focus of this study, can be further subdivided into facial and non-facial affective stimuli (non-facial affective stimulation will be referred to as ‘emotional scenes’ from now on). Indeed, several research-oriented databases have been created for each of these categories (Lundqvist et al., 1998; Bradley and Lang, 1999; Lang et al., 2005). This segregation is reasonable bearing in mind that each category has its own affective frame (e.g. its own valence and arousal scale), and is managed, in some phases of processing, by a differential neural circuitry whose activity is reflected in specific temporal and spatial patterns in the main neural signals.

A question that arises is whether there is some type of inter-categorical (together with intra-categorical) differentiation in the capacity to capture exogenous attention. Previous studies have clearly shown differences between symbolic and non-symbolic categories. For example, there is broad agreement that verbal emotional material is less arousing than other types of visual affective items such as facial expressions or emotional scenes (Vanderploeg et al., 1987; Mogg and Bradley, 1998; Keil, 2006; Kissler et al., 2006; Frühholz et al., 2011). Consequently, verbal emotional material would be less capable of capturing attention than emotional pictorial stimuli (Hinojosa et al., 2009; Frühholz et al., 2011; Rellecke et al., 2011). In the case of non-symbolic stimuli, both facial (Vuilleumier et al., 2001; Eastwood et al., 2003) and non-facial distracters (Doallo et al., 2006; Carretié et al., 2009) have been shown to interfere with the ongoing task. However, there have up to now been no direct comparisons within non-symbolic categories (faces and scenes) investigating whether they have a different impact on exogenous attention.

Indirect data do not provide enough information to solve this question. On the one hand, facial expressions are the most important visual signal of emotion from others: they are omnipresent in our daily life, either in natural situations or through visual media. This communicative function of facial expressions is in addition to their intrinsic affective meaning (Izard, 1992), and is absent in the majority of emotional scenes usually employed in research. On the other hand, there are also several arguments suggesting the superiority of emotional scenes for capturing attention. First, facial expressions are less often associated with extreme affective reactions, such as phobias, than are the stimuli usually depicted in emotional scenes (e.g. blood, snakes or spiders). This is probably due to the omnipresence of facial expressions, which makes them unavoidable (even already-phobic stimuli cease to be so when they cannot be avoided: Linden, 1981; Zlomke and Davis, 2008; Cloitre, 2009). Second, facial expressions are not always reliable indices of emotion, as their affective meaning is ambiguous in some circumstances, particularly when they are presented in a decontextualized—disembodied—fashion (e.g. social smile vs spontaneous, happiness-related smile; Ekman, 1993; Fridlund, 1997). This ambiguity contrasts with the more direct and explicit affective meaning of non-facial scenes usually employed in research.

The present study sought to clarify the relative role of facial expressions and emotional scenes in exogenous attention. Unlike previous studies, we used a single experimental design to investigate the commonalities and differences of the effect of faces and scenes on exogenous attention, in order to further the understanding of the influence of affective visual events on attention. To this end, emotional faces and affective scenes were presented as distracters while participants carried out a demanding digit categorization task under the same experimental conditions. The main behavioral index of exogenous attention to distracters is the extent of disruption in the ongoing task. This disruption is reflected in significantly longer reaction times or lower accuracy in the task whenever a distracter captures attention to a greater extent than other distracters.

At the neural level, exogenous attention effects are expected to be observed in two ERP components. First, the anterior P2 (P2a) component of ERPs (i.e. a positive component peaking between 150 and 250 ms at anterior scalp, although it is labeled in different ways) shows significant amplitude increments when visual stimuli capture exogenous attention in a wide variety of tasks (Kenemans et al., 1989, 1992; Carretié et al., 2004a, 2011; Doallo et al., 2006; Huang and Luo, 2007; Thomas et al., 2007; Yuan et al., 2007; Kanske et al., 2011), in contrast to other components, such as late positive potentials, which rather reflect endogenous attention to emotional events (see the review by Olofsson et al., 2008; see also Hajcak and Olvet, 2008). Second, facial distracters are expected to elicit specific electrophysiological effects, particularly in the N170 component. N170 amplitude is enhanced in response to faces (Bentin et al., 1996), and is modulated by their affective charge (e.g. Pizzagalli et al., 2002; Batty and Taylor, 2003; Miyoshi et al., 2004; Stekelenburg and Gelder, 2004; Blau et al., 2007; Krombholz et al., 2007; Japee et al., 2009; Vlamings et al., 2009; Marzi and Viggiano, 2010; Rigato et al., 2010; Wronka and Walentowska, 2011; but see Eimer et al., 2003; Leppänen et al., 2007). Interestingly, N170 sensitivity to faces with respect to other visual stimuli (Carmel and Bentin, 2002), as well as N170 sensitivity to facial affective meaning (Pegna et al., 2011), has been reported even when endogenous attention is not directed to faces, as in the present experiment (but see Wronka and Walentowska, 2011).

An important difficulty in the study of these two ERP components is that they overlap in time: their reported peaks usually occur between 150 and 200 ms. Thus, despite their different polarity, they may influence each other. Spatial analyses might help to segregate them, since N170 is maximal at lateral parietal and occipital areas, while P2a is maximal at frontal sites. Effects observed in N170 are expected to be associated with face processing areas, such as superior temporal or fusiform gyri. Meanwhile, P2a effects are most likely related to activity in non-specialized—multicategorical—areas of visual cortex or in networks underlying exogenous attention (dorsal/ventral attention networks: Posner et al., 2007; Corbetta et al., 2008). These behavioral and electrophysiological responses to facial and scene distracters during the same digit categorization task are expected to reveal differential mechanisms underlying exogenous attention to the two types of emotional non-symbolic visual stimuli.

METHODS

Participants

Thirty-eight individuals participated in this experiment, although data from only 34 of them could eventually be analyzed, as explained later (28 women, age range of 18–51 years, mean = 22.79, s.d. = 7.75). The study was approved by the Universidad Autónoma de Madrid’s Ethics Committee. All participants were students of Psychology at that university and took part in the experiment voluntarily after providing informed consent. They reported normal or corrected-to-normal visual acuity.

Stimuli and procedure

Participants were placed in an electrically shielded, sound-attenuated room. According to the type of distracter, six types of stimuli were presented to participants in two blocks separated by a rest period: negative, neutral and positive faces (F−, F0, F+; positioned in the centre of the picture), and negative, neutral and positive scenes¹ (S−, S0, S+). The complete set of stimuli employed in this experiment is available at http://www.uam.es/CEACO/sup/CaraEscena12.htm. Happy expressions were employed as positive faces. Since expressions other than happiness are problematic with respect to recognition rate at the positive valence extreme (Tracy and Robins, 2008), a single expression was also employed in the negative counterpart so as to make the experimental design symmetrical. Disgust faces were selected since, along with sad faces, their effect in ERPs has been shown to be quicker and more widely distributed than that of other negative expressions, such as anger or fear (Esslen et al., 2004). Moreover, the disgust expression is better recognized (in terms of both reaction time and accuracy) than other negative expressions, such as fear or sadness (Tracy and Robins, 2008). Finally, disgust-related visual stimuli have been shown to capture exogenous attention to a greater extent than other negative stimuli (Charash and McKay, 2002; Carretié et al., 2011).

The size of all stimuli, which were presented on a back-projection screen through an RGB projector, was 75.17° (width) × 55.92° (height). Each of these pictures contained two central digits (4.93° height), yellow in color and outlined in solid black, so that they could be clearly distinguished from the background. Each picture was displayed on the screen for 350 ms, and stimulus onset asynchrony was 3000 ms. The task was related to the central digits: participants were required to press, ‘as accurately and rapidly as possible’, one key if both digits were even or if both were odd (i.e. if they were ‘concordant’), and a different key if one central digit was even and the other was odd (i.e. if they were ‘discordant’). There were 40 combinations of digits in all, half of them concordant and the other half discordant. The same combination of digits was repeated across emotional conditions in order to ensure that task demands were the same for F−, F0, F+, S−, S0, and S+. Therefore, 40 trials of each type were presented (20 different pictures, each of them presented twice, i.e. with concordant and discordant central digits). Stimuli were presented in semi-random order in such a way that there were never more than three consecutive trials of the same emotional or numerical category. Participants were instructed to look continuously at a fixation mark located in the centre of the screen and to blink preferably after a beep that sounded 1300 ms after each stimulus onset.
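The ordering constraint above (240 trials, never more than three consecutive trials of the same emotional or numerical category) cannot be met by a plain shuffle, because long runs are very likely in a random permutation of 240 items. The following is a minimal sketch of one way to generate such an order, not the authors' procedure. It assumes that "emotional category" means valence (negative, neutral, positive, pooled over faces and scenes) and "numerical category" means concordant vs discordant digits; the names `TYPES`, `VALENCE`, `make_sequence` and `longest_run` are hypothetical. Trials are drawn one at a time from groups that would not extend a run past three, and the whole sequence is restarted if it gets stuck.

```python
import random
from itertools import groupby

# Hypothetical labels for the six distracter types and their valence.
TYPES = ["F-", "F0", "F+", "S-", "S0", "S+"]
VALENCE = {"F-": "neg", "F0": "neu", "F+": "pos",
           "S-": "neg", "S0": "neu", "S+": "pos"}
PARITIES = ("concordant", "discordant")
MAX_RUN = 3  # never more than three consecutive trials of the same category


def longest_run(values):
    """Length of the longest block of identical consecutive values."""
    return max((len(list(g)) for _, g in groupby(values)), default=0)


def extends_run_too_far(seq, label_of, label):
    """True if appending an item labelled `label` would make MAX_RUN + 1 in a row."""
    tail = seq[-MAX_RUN:]
    return len(tail) == MAX_RUN and all(label_of(t) == label for t in tail)


def make_sequence(n_pictures=20, seed=None, max_attempts=10_000):
    """Return (type, parity, picture_index) trials satisfying both run constraints."""
    rng = random.Random(seed)
    n_trials = len(TYPES) * len(PARITIES) * n_pictures
    for _ in range(max_attempts):
        # Each picture appears once with concordant and once with discordant digits.
        pool = {(t, p): list(range(n_pictures)) for t in TYPES for p in PARITIES}
        for pics in pool.values():
            rng.shuffle(pics)
        seq = []
        while True:
            allowed = [
                (t, p) for (t, p), pics in pool.items()
                if pics
                and not extends_run_too_far(seq, lambda tr: VALENCE[tr[0]], VALENCE[t])
                and not extends_run_too_far(seq, lambda tr: tr[1], p)
            ]
            if not allowed:
                break  # either every trial is placed or the sequence is stuck
            # Weight groups by how many trials they still hold to keep the pool balanced.
            t, p = rng.choices(allowed, weights=[len(pool[g]) for g in allowed])[0]
            seq.append((t, p, pool[(t, p)].pop()))
        if len(seq) == n_trials:
            return seq
    raise RuntimeError("no valid order found; increase max_attempts")
```

For example, `make_sequence(seed=1)` returns a list of 240 trials whose valence and parity labels can be checked with `longest_run`. Restarting on failure is crude but simple; a backtracking search would be an alternative if stricter constraints made dead ends common.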

Facial stimuli were taken both from the MMI Facial Expression DataBase (http://www.mmifacedb.com/) and from our own emotional picture database (EmoMadrid; http://www.uam.es/CEACO/EmoMadrid.htm). At the end of the recording session, participants were instructed to indicate, through an open response questionnaire (i.e. a priori emotional categories to choose among were not provided), the emotion expressed by each face. Average recognition rates (ranging from 0 to 1) were 0.876, 0.846 and 0.963 for F−, F0 and F+, respectively. Scenes were also taken from EmoMadrid. These images were selected according to valence and arousal average assessments provided in that database (n > 30 in all pictures), the latter being similar for positive and negative scenes. Moreover, after the recording session participants themselves filled out a bidimensional scale for each scene, so that their assessments on valence and arousal were recorded (Table 1).

Table 1.

Means and S.E.M. (in parentheses) of (i) subjective ratings of each of the six types of stimuli used as distracters, (ii) behavioral responses (reaction times, RTs, and accuracy) and (iii) neural responses (P2a and N170 temporal factor scores, linearly related to amplitudes) to each distracter type

| | Faces: Negative | Faces: Neutral | Faces: Positive | Scenes: Negative | Scenes: Neutral | Scenes: Positive |
|---|---|---|---|---|---|---|
| Subjective ratings | | | | | | |
| Valence (1, negative, to 5, positive) | 2.157 (0.017) | 2.921 (0.019) | 3.853 (0.020) | 1.715 (0.032) | 3.149 (0.020) | 4.103 (0.027) |
| Arousal (1, calming, to 5, arousing) | 3.410 (0.020) | 3.013 (0.016) | 3.047 (0.030) | 4.396 (0.025) | 2.874 (0.026) | 4.132 (0.034) |
| Behavior | | | | | | |
| RTs (ms) | 929.200 (26.802) | 927.701 (28.322) | 947.976 (26.359) | 938.673 (27.249) | 939.912 (26.894) | 917.448 (25.266) |
| Accuracy (0–1) | 0.878 (0.013) | 0.894 (0.010) | 0.867 (0.013) | 0.856 (0.016) | 0.892 (0.014) | 0.890 (0.013) |
| Scalp-level ERPs | | | | | | |
| P2a factor scores | 0.433 (0.119) | 0.278 (0.120) | 0.346 (0.121) | 0.201 (0.116) | 0.157 (0.095) | 0.153 (0.103) |
| N170 factor scores | −1.362 (0.195) | −1.401 (0.208) | −1.576 (0.199) | −0.899 (0.197) | −0.930 (0.202) | −0.653 (0.191) |

Recording

Electroencephalographic (EEG) activity was recorded using an electrode cap (ElectroCap International) with tin electrodes. Thirty electrodes were placed at the scalp following a homogeneous distribution. All scalp electrodes were referenced to the nosetip. Electrooculographic (EOG) data were recorded supra- and infraorbitally (vertical EOG) as well as from the left vs right orbital rim (horizontal EOG). A bandpass filter of 0.3–40 Hz was applied. Recordings were continuously digitized at a sampling rate of 230 Hz. The continuous recording was divided into 1000 ms epochs for each trial, beginning 200 ms before stimulus onset. Behavioral activity was recorded by means of a two-button keypad whose electrical output was also continuously digitized at a sampling rate of 230 Hz. Trials in which participants responded erroneously or did not respond were eliminated. Ocular artifacts were removed through an independent component analysis (ICA)-based strategy (see a description of this procedure and its advantages over traditional regression/covariance methods in Jung et al., 2000), as implemented in the Fieldtrip software (http://fieldtrip.fcdonders.nl, Oostenveld et al., 2011). After ICA-based artifact removal, the EEG data were visually inspected in a second stage, and any trial still containing artifacts was discarded. This artifact and error rejection procedure resulted in average retention rates of 84.93% of F− trials, 87.21% of F0, 84.04% of F+, 83.16% of S−, 86.91% of S0 and 86.54% of S+. The minimum proportion of trials accepted for averaging was 66% (2/3) per participant and condition. As already mentioned, data from four participants were eliminated because they did not meet this criterion.
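The epoching parameters above translate into sample counts at 230 Hz: the 200 ms prestimulus interval spans 46 samples and each 1000 ms epoch spans 230 samples. The sketch below, assuming continuous data stored as a NumPy array of shape (channels, samples) and onset positions given as sample indices, cuts the epochs and subtracts the mean prestimulus voltage (the baseline subtraction mentioned for Figure 1). It also expresses the 2/3 inclusion rule; with 40 trials per condition, at least 27 must survive. The function names are hypothetical and this is not the Fieldtrip pipeline used in the study.

```python
from fractions import Fraction

import numpy as np

FS = 230.0                                     # sampling rate reported in the text (Hz)
PRE_MS, EPOCH_MS = 200.0, 1000.0
N_PRE = int(round(PRE_MS * FS / 1000.0))       # 46 prestimulus samples
N_EPOCH = int(round(EPOCH_MS * FS / 1000.0))   # 230 samples per epoch


def extract_epochs(eeg, onsets):
    """Cut baseline-corrected epochs from continuous data.

    eeg: array (n_channels, n_samples); onsets: stimulus-onset sample indices.
    Returns an array of shape (n_trials, n_channels, N_EPOCH).
    """
    eeg = np.asarray(eeg, dtype=float)
    segments = []
    for s in onsets:
        start, stop = s - N_PRE, s - N_PRE + N_EPOCH
        if start < 0 or stop > eeg.shape[1]:
            raise ValueError(f"onset {s} is too close to the edge of the recording")
        segments.append(eeg[:, start:stop])
    epochs = np.stack(segments)
    # Subtract the mean prestimulus (-200 to 0 ms) voltage per trial and channel.
    baseline = epochs[:, :, :N_PRE].mean(axis=2, keepdims=True)
    return epochs - baseline


def meets_trial_criterion(n_accepted, n_presented=40, min_prop=Fraction(2, 3)):
    """Keep a participant/condition only if at least 2/3 of its trials survive."""
    return n_accepted >= min_prop * n_presented
```

Using `Fraction` keeps the 2/3 threshold exact, so `meets_trial_criterion(27)` is true while `meets_trial_criterion(26)` is false.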

Data analysis

Detection and quantification of P2a and N170

Detection and quantification of P2a and N170 were carried out through a covariance-matrix-based temporal principal components analysis (tPCA), a strategy that has repeatedly been recommended for these purposes (e.g. Chapman and McCrary, 1995; Dien, 2010). In brief, tPCA computes the covariance between all ERP time points, which tends to be high between time points involved in the same component and low between those belonging to different components. The temporal factor score, the tPCA-derived parameter in which extracted temporal factors can be quantified, is linearly related to amplitude. The decision on the number of factors to select was based on the scree test (Cliff, 1987). Extracted factors were submitted to promax rotation (Dien et al., 2005).
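The core of a covariance-based tPCA can be sketched in a few lines of NumPy, assuming the averaged ERPs are arranged as a matrix with one waveform per row (for example participant × condition × electrode) and one time point per column. The sketch returns the eigenvalues, which are what a scree plot inspects, the factor loadings (the time course of each temporal factor) and standardized factor scores, which are a linear function of the waveform and hence of amplitude. It deliberately stops before the promax rotation used in the study, so its factors are unrotated; the name `temporal_pca` is hypothetical.

```python
import numpy as np


def temporal_pca(erps, n_factors):
    """Covariance-based temporal PCA (unrotated sketch).

    erps: array (n_observations, n_timepoints), one averaged ERP waveform per row.
    Returns eigenvalues (descending), loadings (n_timepoints, n_factors)
    and standardized factor scores (n_observations, n_factors).
    """
    X = np.asarray(erps, dtype=float)
    Xc = X - X.mean(axis=0)                 # centre each time point across observations
    cov = np.cov(Xc, rowvar=False)          # covariance between all pairs of time points
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh: the covariance matrix is symmetric
    order = np.argsort(eigvals)[::-1]       # largest-variance factors first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    keep = eigvecs[:, :n_factors]
    sd = np.sqrt(eigvals[:n_factors])
    loadings = keep * sd                    # time course of each temporal factor
    scores = Xc @ keep / sd                 # unit-variance scores, linear in amplitude
    return eigvals, loadings, scores
```

With these definitions the centred data are recovered as `scores @ loadings.T` when all non-zero factors are kept, which is one way to see that factor scores scale linearly with component amplitude.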

Analyses on experimental effects

In all contrasts described below, the Huynh–Feldt (HF) epsilon correction was applied to adjust degrees of freedom where necessary. Effect sizes were computed using the partial eta-squared (η²p) method. Post hoc comparisons to determine the significance of pairwise contrasts were performed using the Bonferroni correction procedure and the Tukey HSD method. As recently recommended (NIST/SEMATECH, 2010), the former procedure was employed when only a subset of pairwise comparisons was of interest, whereas the latter was computed when all pairwise levels submitted to the ANOVA required post hoc contrast. The specific characteristics of analyses according to the nature of the dependent variable were as follows.
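For a single within-subject factor, such as the 3-level Emotion ANOVAs later run on each ROI, the F ratio, partial eta-squared and the sphericity epsilons can be computed directly. The sketch below is an illustration under stated assumptions, not the software used in the study: it handles one factor only (the Category × Emotion design would need a two-way extension), it uses the Greenhouse–Geisser epsilon from the double-centred covariance matrix and the single-group Huynh–Feldt formula capped at 1, and it leaves p-values aside. Corrected degrees of freedom are the nominal ones multiplied by the epsilon. The function name `rm_anova_oneway` is hypothetical.

```python
import numpy as np


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA with sphericity epsilons.

    data: array (n_subjects, k_levels), e.g. 34 participants x 3 emotions.
    Returns F, (df1, df2), partial eta-squared, Greenhouse-Geisser epsilon
    and Huynh-Feldt epsilon.
    """
    Y = np.asarray(data, dtype=float)
    n, k = Y.shape
    grand = Y.mean()
    ss_cond = n * ((Y.mean(axis=0) - grand) ** 2).sum()
    ss_subj = k * ((Y.mean(axis=1) - grand) ** 2).sum()
    ss_error = ((Y - grand) ** 2).sum() - ss_cond - ss_subj  # condition x subject residual
    df1, df2 = k - 1, (n - 1) * (k - 1)
    F = (ss_cond / df1) / (ss_error / df2)
    eta_p2 = ss_cond / (ss_cond + ss_error)

    # Greenhouse-Geisser epsilon from the double-centred covariance matrix.
    C = np.eye(k) - np.ones((k, k)) / k
    S = C @ np.cov(Y, rowvar=False) @ C
    eps_gg = np.trace(S) ** 2 / ((k - 1) * (S ** 2).sum())
    # Huynh-Feldt correction for a single-group design, capped at 1.
    eps_hf = (n * (k - 1) * eps_gg - 2) / ((k - 1) * (n - 1 - (k - 1) * eps_gg))
    return F, (df1, df2), eta_p2, eps_gg, min(eps_hf, 1.0)
```

With two levels both epsilons equal 1, since sphericity cannot be violated; with three levels the Greenhouse–Geisser epsilon lies between 0.5 and 1.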


Behavior. Performance in the digit categorization task was analyzed. To this end, reaction times (RTs) and accuracy (proportion of correct responses) were submitted to repeated-measures ANOVAs introducing Category (Face, Scene) and Emotion (Negative, Neutral, Positive) as factors. In the case of RTs, outliers, defined as responses >2000 or <200 ms, were omitted in the analyses.
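The per-condition behavioral measures can be derived as in the sketch below, assuming trial records stored as dictionaries with hypothetical keys `category`, `emotion`, `correct` and `rt`. Accuracy is the proportion of correct responses over all trials of a condition. For RTs, responses outside the 200–2000 ms window are dropped, and, as an additional assumption not stated in the text, only correct responses enter the RT mean. The function name `summarize_behavior` is hypothetical.

```python
from statistics import mean

RT_MIN_MS, RT_MAX_MS = 200, 2000  # outlier bounds reported in the text


def summarize_behavior(trials):
    """Per-condition accuracy and mean RT.

    trials: iterable of dicts with keys 'category' ('Face'/'Scene'),
    'emotion' ('Negative'/'Neutral'/'Positive'), 'correct' (bool) and
    'rt' (ms, or None when no response was given).
    """
    by_cond = {}
    for tr in trials:
        by_cond.setdefault((tr["category"], tr["emotion"]), []).append(tr)
    summary = {}
    for cond, rows in by_cond.items():
        accuracy = sum(r["correct"] for r in rows) / len(rows)
        # Assumption: RTs are averaged over correct, non-outlier responses only.
        rts = [r["rt"] for r in rows
               if r["correct"] and r["rt"] is not None
               and RT_MIN_MS <= r["rt"] <= RT_MAX_MS]
        summary[cond] = {"accuracy": accuracy,
                         "mean_rt": mean(rts) if rts else float("nan")}
    return summary
```

The resulting per-participant values for the six Category × Emotion cells are what would be entered into the repeated-measures ANOVAs.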

Scalp P2a and N170 (2D). Experimental effects on P2a and N170 at the scalp level (2D) were also tested. Category, Emotion and Electrode Site were introduced as factors in ANOVAs performed on factor scores (or amplitudes) corresponding to both ERP components.

Source (3D) analyses. Temporal factor scores corresponding to relevant components were submitted to standardized low-resolution brain electromagnetic tomography (sLORETA). sLORETA is a 3D, discrete linear solution for the EEG inverse problem (Pascual-Marqui, 2002). Although solutions provided by EEG-based source-location algorithms should be interpreted with caution due to their potential error margins, the use of tPCA-derived factor scores instead of direct voltages (which leads to more accurate source-localization analyses: Dien et al., 2003; Carretié et al., 2004b) and the relatively large sample size employed in the present study (n = 34) contribute to reducing such error margins. Pairwise differences at the voxel level between stimulus conditions showing significant effects at the behavioral and scalp levels were computed in order to detect potential sources of variability. Regions of interest (ROIs) were defined for those sources and ROI current densities were submitted to a repeated-measures ANOVA using Category and Emotion as factors.
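To make the idea behind sLORETA concrete, the toy sketch below follows the general recipe described by Pascual-Marqui (2002): a regularized minimum-norm estimate of current density, standardized by the diagonal of the resolution matrix. Several simplifications are assumptions of this sketch and differ from the actual sLORETA software: a random lead field replaces a realistic head model, each source has a single fixed orientation instead of three dipole components per voxel, and the regularization value `alpha` is arbitrary. The input `phi` would be one scalp topography, such as a tPCA factor-score map. The function name `sloreta_power` is hypothetical.

```python
import numpy as np


def sloreta_power(K, phi, alpha=0.01):
    """Standardized current-density power for fixed-orientation sources (toy sketch).

    K: lead field (n_electrodes, n_sources); phi: scalp data (n_electrodes,),
    e.g. one factor-score topography; alpha: Tikhonov regularization.
    """
    n_el = K.shape[0]
    H = np.eye(n_el) - np.ones((n_el, n_el)) / n_el  # average-reference operator
    K = H @ K
    phi = H @ phi
    G = np.linalg.pinv(K @ K.T + alpha * H)
    T = K.T @ G                # regularized minimum-norm inverse operator
    j = T @ phi                # unstandardized current-density estimate
    R = T @ K                  # resolution matrix
    return j ** 2 / np.diag(R)  # standardize each source by its own variance
```

In this noise-free toy setting, a single active source is recovered at its true location, which is the property that motivated the standardization; with real data, noise and head-model errors still limit spatial accuracy, as the text cautions.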

RESULTS

Experimental effects on behavior

Accuracy (hit rate, ranging from 0 to 1) and RTs in the digit categorization task are shown in Table 1. Repeated-measures ANOVAs showed no significant main effects of distracter Category (Faces vs Scenes) on either RTs or accuracy (P > 0.05 in both cases). However, these ANOVAs revealed significant main effects of distracter Emotion (Negative, Neutral, Positive) on accuracy [F(2,66) = 4.342, HF corrected P < 0.025, η²p = 0.116]. Emotional distracters (both negative and positive) caused lower accuracy than neutral distracters, the difference between negative and neutral being significant according to post hoc tests. A Category × Emotion interaction was also observed in accuracy (F(2,66) = 3.685, HF corrected P < 0.05). Within facial distracters, post hoc tests showed lower accuracy for F+ than for F0 (which elicited maximal accuracy), whereas within scene distracters, S− was associated with lower accuracy than both S0 and S+.
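Two figures in this paragraph can be checked with simple arithmetic. Partial eta-squared follows from the F ratio and its degrees of freedom, η²p = F·df1 / (F·df1 + df2), so F(2,66) = 4.342 gives 8.684 / 74.684 ≈ 0.116, matching the reported value. Averaging the accuracy means of Table 1 over faces and scenes (valid here because the design is balanced) reproduces the ordering described for the Emotion main effect: negative (0.867) < positive (0.8785) < neutral (0.893). The helper names below are hypothetical.

```python
def partial_eta_squared(F, df1, df2):
    """Partial eta-squared recovered from an F ratio and its degrees of freedom."""
    return F * df1 / (F * df1 + df2)


# Accuracy means from Table 1, as (faces, scenes) for each emotion.
ACCURACY = {"Negative": (0.878, 0.856),
            "Neutral": (0.894, 0.892),
            "Positive": (0.867, 0.890)}
marginal = {emo: sum(v) / len(v) for emo, v in ACCURACY.items()}

print(round(partial_eta_squared(4.342, 2, 66), 3))  # 0.116
```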


Detection and quantification of P2a and N170

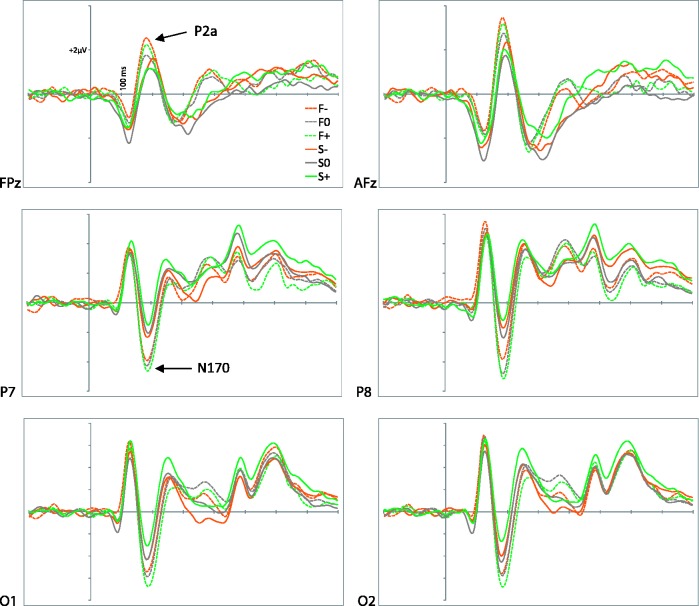

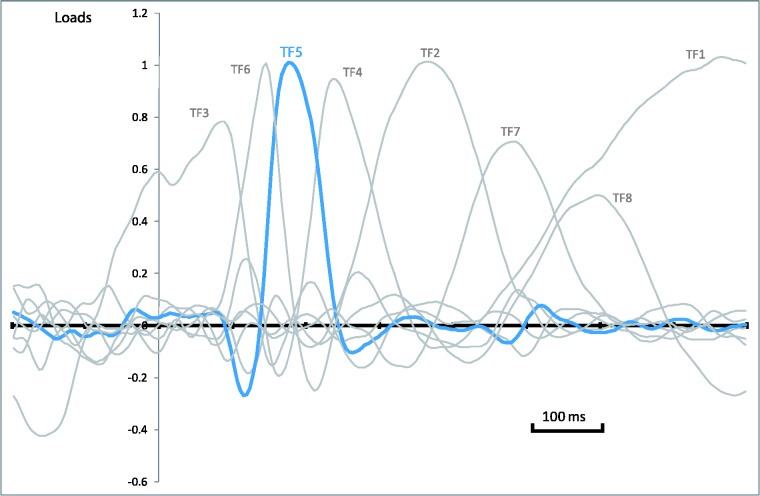

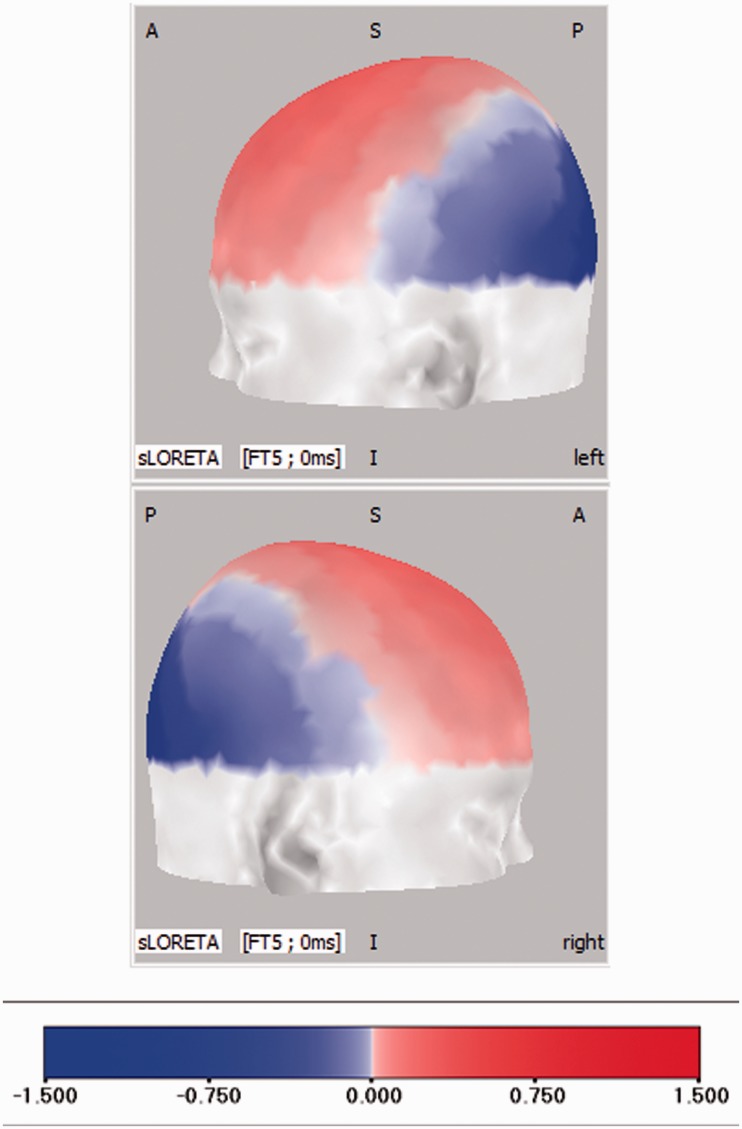

Figure 1 shows a selection of grand averages after subtracting the baseline (prestimulus) activity from each ERP. These grand averages correspond to frontal and parieto-occipital areas, where the critical ERP components, P2a and N170, are most prominent. The first analytical step consisted in detecting and quantifying these components (see section on Data Analysis). Seven temporal factors (TF) were extracted by tPCA and submitted to promax rotation (Figure 2). Factor peak-latency and topography characteristics revealed TF5 as the key component, being associated with both P2a and N170 (Figure 3). Indeed, tPCA revealed that the two components were evoked at the same latency (peaking at 180 ms). N170 and P2a were nevertheless distinguishable both in polarity and at the spatial level (i.e. scalp topography and 3D sources, as described later).

Fig. 1.

Grand averages at anterior and posterior areas, where P2a and N170, respectively, are prominent (F−, negative faces; F0, neutral faces; F+, positive faces; S−, negative scenes; S0, neutral scenes; S+, positive scenes).

Fig. 2.

tPCA: Factor loadings after promax rotation. Temporal factor 5 (P2a/N170) is drawn in blue (TF, temporal factor).

Fig. 3.

P2a/N170 temporal factor scores in the form of scalp maps.

Experimental effects on scalp P2a and N170 (2D)

P2a

Table 1 shows means and standard errors of the mean (S.E.M.) of P2a parameters. Temporal factor scores (linearly related to amplitude, as explained) of P2a were computed for frontal pole channels (Fp1, Fpz, Fp2 and AFz), where it was especially prominent. ANOVAs detailed in the Data Analysis section showed significant main effects of Category [Faces vs Scenes; F(1,33) = 8.949, HF corrected P < 0.01], faces eliciting greater amplitudes than scenes. Also, main effects of Emotion (negative, neutral, positive) were observed [F(2,66) = 3.265, HF corrected P < 0.05]. Amplitudes were maximal in response to negative distracters, which post hoc tests showed to be significantly greater than those elicited by neutral distracters. No significant Category × Emotion interaction was detected (P > 0.05), suggesting that the emotional content of faces and scenes affected P2a amplitudes similarly.

Latency was also analyzed for P2a, since grand averages suggested a face-scene differentiation in the temporal dimension (Figure 1). Given that latency, by definition, cannot be measured from tPCA-derived factor scores, P2a peak latencies were computed directly from voltages at frontal pole channels (Fp1, Fpz, Fp2 and AFz). To this end, the latency of the maximal voltage within the 148–235 ms time window was computed. ANOVAs revealed a significant effect of Category [F(3,96) = 21.316, HF corrected P < 0.001], facial distracters eliciting earlier latencies than scene distracters. No effects of Emotion or of the Category × Emotion interaction were observed (P > 0.05 in both cases).
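A peak-latency measure of the kind described above can be sketched as follows, assuming each waveform is a 1-D array sampled at 230 Hz and starting 200 ms before stimulus onset, as in the recording settings. Restricting the search to 148–235 ms means that larger deflections outside the window cannot be picked up. At 230 Hz one sample lasts about 4.35 ms, which bounds the temporal resolution of this measure. The function name `peak_latency` and its `polarity` argument are hypothetical.

```python
import numpy as np

FS = 230.0               # sampling rate (Hz)
EPOCH_START_MS = -200.0  # epochs begin 200 ms before stimulus onset


def peak_latency(waveform, t_min=148.0, t_max=235.0, polarity=+1):
    """Latency (ms) of the maximal voltage within [t_min, t_max].

    waveform: 1-D array sampled at FS, starting at EPOCH_START_MS.
    polarity=+1 finds a positive peak (P2a); -1 finds a negative one.
    """
    w = np.asarray(waveform, dtype=float)
    times = EPOCH_START_MS + np.arange(w.size) * 1000.0 / FS
    mask = (times >= t_min) & (times <= t_max)
    idx = np.argmax(polarity * w[mask])
    return float(times[mask][idx])
```

For instance, a synthetic waveform peaking at 180 ms plus a larger deflection at 300 ms yields a latency within one sample of 180 ms, because the later peak falls outside the window.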

N170

Table 1 shows means and S.E.M. of N170 temporal factor scores. They were computed for occipital and lateral parieto-occipital channels (P7, P8, O1, Oz, O2), where it was prominent. As expected for this face-specific component, ANOVAs revealed conspicuous main effects of Category [Faces vs Scenes; F(1,33) = 38.444, HF corrected P < 0.001], which showed larger amplitudes for faces than for scenes. No significant main effects of Emotion (negative, neutral, positive) were observed (P > 0.05). In contrast, the Category × Emotion interaction yielded significant effects [F(2,66) = 7.414, HF corrected P < 0.01]. Within facial distracters (the relevant stimulus category with respect to N170), F+ elicited the greatest (i.e. the most negative) amplitudes, significantly differing from F− according to post hoc tests.

Experimental effects on 3D sources

Region-of-interest (ROI) analyses

As explained in the Methods section, several ROIs were defined following data-driven criteria. First, given that faces elicited greater amplitudes both in P2a and N170, a ROI was defined as those voxels in which maximal faces > scenes current densities were observed. Second, ROIs comprising voxels showing maximal S− > S+ and S− > S0 (both contrasts being significant with respect to accuracy) and maximal F+ > F0 and F+ > F− (significant with respect to accuracy and N170, respectively) current densities were also computed. Table 2 describes the ROIs obtained in both cases.

Table 2.

ROI characteristics, data-based justification, category analyzed and statistical results regarding each of the ROIs submitted to analyses

| Anatomical location | Fusiform gyrus (BA20) | BA40 | BA8 | Precentral gyrus (BA6/4) | BA9 | IPL (BA40) | BA22 |
|---|---|---|---|---|---|---|---|
| Peak voxel coordinates (x, y, z) | −50, −25, −30 | 55, −50, 50 | −5, 35, 50 | −40, −10, 40 | 35, 35, 45 | 55, −45, 50 | 65, −15, 10 |
| Data-based ROI justification (voxelwise maximal differences) | Faces > Scenes | Scenes > Faces | S− > S+ | S− > S0 | F+ > F− | F+ > F0 | F− > F0 |
| 3-level ANOVA on | Faces | Scenes | Scenes | Scenes | Faces | Faces | Faces |
| F(2,66) | P < 0.05 | n.s. | n.s. | P < 0.05 | n.s. | P < 0.05 | n.s. |
| Post hoc comparisons (Tukey) | F+ > F−; F+ > F0 | | | S− > S0; S− > S+ | | F+ > F−; F+ > F0 | |

Statistical contrasts yielded significant results in the fusiform gyrus, precentral gyrus and IPL ROIs. Precentral gyrus, dorsolateral frontal cortex; IPL, inferior parietal lobule; n.s., non-significant; F−, negative faces; F0, neutral faces; F+, positive faces; S−, negative scenes; S0, neutral scenes; S+, positive scenes.

Current densities associated with each of these ROIs were quantified and submitted to ANOVAs using Emotion (Negative, Neutral, Positive) as the factor. Three ROIs showed significant sensitivity to this factor (Figure 4). First, the ANOVA on the left fusiform ROI, focused on current densities evoked by Facial distracters, revealed a significant effect of Emotion [F(2,66) = 3.227, HF corrected P < 0.05]. Maximal current densities were elicited by F+, which significantly differed from F0 and F− according to post hoc tests. Similarly, analyses on the right inferior parietal lobule (IPL) ROI, also focused on current densities to Facial distracters, yielded significant effects of Emotion [F(2,66) = 3.648, HF corrected P < 0.05]. Again, maximal current densities were elicited by F+, which significantly differed from F0 and F−. Finally, the analysis of the left precentral ROI with respect to scene distracters also revealed a significant effect of Emotion [F(2,66) = 4.851, HF corrected P < 0.025]. In this case, maximal current densities were elicited by S−, which significantly differed from S0 and S+.

Fig. 4.

ROIs in which current densities were sensitive to the experimental conditions. Average current densities for each condition are also shown (F−, negative faces; F0, neutral faces; F+, positive faces; S−, negative scenes; S0, neutral scenes; S+, positive scenes). Error bars represent S.E.M.

Source (3D)–scalp (2D) associations

Although these results suggest that the fusiform and IPL ROIs were more involved in N170 than in P2a scalp activity (since they showed maximal activity in response to F+ distracters), whereas the precentral ROI was mainly related to P2a (negative stimuli were associated with greater activity), two linear regression analyses (forward procedure) were carried out to test ROI–scalp associations statistically. In the first regression analysis, average P2a factor scores (amplitudes) at frontal pole channels (Fp1, Fpz, Fp2 and AFz) were introduced as the dependent variable, and current densities in fusiform, IPL and precentral ROIs as independent variables. A positive association was observed between both precentral (beta = 0.481) and fusiform (beta = 0.136) current densities and frontal pole scalp P2a amplitudes (P < 0.05 in both cases). In the second regression analysis, average N170 amplitude at parieto-occipital electrodes (PO7, PO8, O1, Oz and O2) was introduced as the dependent variable. Independent variables were the same as in the previous analysis. A significant association was observed for fusiform (beta = −0.517) and IPL (beta = −0.122) current densities (P < 0.05 in both cases).
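A forward regression of the kind described above adds predictors one at a time, each time choosing the candidate that most reduces the residual error, and stops when no remaining candidate improves the fit enough. The sketch below reports standardized betas, as in the text, by z-scoring all variables first. It is an illustration rather than the statistical package's procedure: a fixed F-to-enter threshold of 4.0 is an assumed stand-in for the usual p-value entry criterion, and the function names are hypothetical. `X` would hold the ROI current densities (one column per ROI) and `y` the scalp factor scores.

```python
import numpy as np


def zscore(a):
    """Standardize columns to zero mean and unit sample variance."""
    a = np.asarray(a, dtype=float)
    return (a - a.mean(axis=0)) / a.std(axis=0, ddof=1)


def forward_regression(X, y, names, f_to_enter=4.0):
    """Forward stepwise OLS returning standardized betas of the entered predictors.

    X: (n_obs, n_predictors), e.g. ROI current densities; y: (n_obs,) scalp scores.
    A predictor enters while its partial F exceeds `f_to_enter` (assumed threshold).
    """
    Z, zy = zscore(X), zscore(y)
    n = zy.size
    selected, rss_current = [], float(zy @ zy)  # intercept-only model on z-scored y
    while True:
        best = None
        for j in range(Z.shape[1]):
            if j in selected:
                continue
            A = Z[:, selected + [j]]
            beta, *_ = np.linalg.lstsq(A, zy, rcond=None)
            rss = float(((zy - A @ beta) ** 2).sum())
            df_resid = n - A.shape[1] - 1        # -1 for the (zero) intercept
            f = (rss_current - rss) / (rss / df_resid)
            if best is None or f > best[0]:
                best = (f, j, rss)
        if best is None or best[0] < f_to_enter:
            break                                 # nothing left, or no useful gain
        selected.append(best[1])
        rss_current = best[2]
    if not selected:
        return {}
    beta, *_ = np.linalg.lstsq(Z[:, selected], zy, rcond=None)
    return {names[j]: float(b) for j, b in zip(selected, beta)}
```

Because all variables are z-scored, the intercept is zero and the returned coefficients are directly comparable across ROIs, which is what the beta values in the text express.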

DISCUSSION

The behavioral and electrophysiological indices presented in this study reveal that, beneath their common capability to capture exogenous attention, faces and non-facial scenes show relevant differential characteristics. Below, the main findings are described and discussed from a comparative perspective, rather than providing a parallel description for the two categories of affective visual stimuli. Implications and conclusions are presented at the end of this section.

Behavior

Behavioral indices of attentional capture to distracters, consisting of lower accuracy in the digit task, did not show significant differences depending on the facial/non-facial nature of stimulation. However, both categories showed an interaction with emotional charge when capturing attention. A face-scene differential pattern was observed in this respect. Among faces, accuracy level was minimal (i.e. interference with the ongoing task was maximal) in response to positive (happy) expressions. This pattern is probably related to two well-known processing biases. The first one is the ‘happy face advantage’, which favors the processing of happy faces in expression recognition tasks. Indeed, happy expressions are identified more accurately and/or more quickly than other emotional expressions (Leppänen and Hietanen, 2004; Palermo and Coltheart, 2004; Tracy and Robins, 2008; Calvo and Nummenmaa, 2011). This recognition bias toward happy expressions would have favored their attentional capture when employed as distracters. On the other hand, the ‘positivity offset’, a processing bias important in social interaction which facilitates processing of appetitive stimulation (e.g. Cacioppo and Gardner, 1999), may also lead to enhanced attention to expressions communicating positive states. Additional discussion on this bias is provided below. Disgust expressions were also associated with lower accuracy than neutral faces, although the differences did not reach significance, despite their having been reported to be better recognized than other negative expressions such as fear or sadness (Tracy and Robins, 2008). Therefore, present results suggest that positivity is the characteristic that preferentially captures attention in the case of faces.

As regards scenes, negative pictures elicited the lowest accuracy. This result is in line with previous behavioral data showing that negative non-facial distracter scenes capture attention more than positive and/or neutral ones when participants are engaged in a cognitive task (Constantine et al., 2001; Carretié et al., 2004a, 2009, 2011; Doallo et al., 2006; Huang and Luo, 2007; Thomas et al., 2007; Yuan et al., 2007). This pattern has been related to the 'negativity bias', a term describing the tendency of danger- or harm-related stimuli to elicit faster and more prominent responses than neutral or positive events (Taylor, 1991; Cacioppo and Gardner, 1999). As discussed later, electrophysiological indices of a bias toward negative faces were also found in this study. A behavioral correlate was not found in this case, probably because behavioral performance reflects the final single output of several cognitive and affective processes that do not necessarily converge. As we will see, some of these processes point in the opposite direction: maximal responses to positive facial expressions.

Electrophysiology: scalp and source levels

P2a

As expected, P2a was sensitive to the emotional content of distracters. P2a, a positive component peaking between 150 and 250 ms over anterior scalp regions (labeled in different ways across studies), has been reported to increase in amplitude when a stimulus attracts attention in a bottom-up fashion (Kenemans et al., 1989, 1992; Carretié et al., 2004a, 2011; Doallo et al., 2006; Huang and Luo, 2007; Thomas et al., 2007; Yuan et al., 2007; Kanske et al., 2011). Negative stimuli, both faces and scenes, elicited the maximal amplitude in this component. In the case of scenes, this negativity advantage was reflected in behavior, as previously indicated, whereas the net effect of faces on performance was dominated by processes reflected in N170, as explained below. The present data support those of previous studies reporting P2a as particularly sensitive to the negativity bias toward visually presented emotional scenes (Carretié et al., 2001a, 2001b, 2004a; Delplanque et al., 2004; Doallo et al., 2006; Huang and Luo, 2006). A question that arises is why the bias toward negative facial distracters reflected in P2a did not significantly affect behavior (negative faces caused lower accuracy than neutral faces, but the difference did not reach significance).
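The definition of P2a above rests on a peak measure within a latency window. As a minimal sketch only, assuming a single averaged waveform sampled at a fixed rate with a known pre-stimulus baseline, the hypothetical function below returns the most positive value (or, with polarity set to -1, the most negative value, as for a component such as N170) and its latency inside a window that defaults to the 150 to 250 ms range quoted for P2a. The function name, argument layout and toy data are illustrative assumptions; this is a generic peak-picking approach, not the quantification procedure used in the present study.

```python
from typing import Optional, Sequence, Tuple


def peak_in_window(
    waveform: Sequence[float],
    srate_hz: float,
    baseline_ms: float,
    window_ms: Tuple[float, float] = (150.0, 250.0),
    polarity: int = 1,
) -> Tuple[float, float]:
    """Return (peak_amplitude, peak_latency_ms) inside window_ms.

    Hypothetical layout: waveform holds one averaged ERP channel, and
    sample 0 corresponds to -baseline_ms relative to stimulus onset.
    polarity=+1 picks the most positive value (e.g. P2a);
    polarity=-1 picks the most negative value (e.g. N170, which would
    need its own window; none is assumed here).
    """
    if polarity not in (1, -1):
        raise ValueError("polarity must be +1 or -1")
    ms_per_sample = 1000.0 / srate_hz
    best_idx: Optional[int] = None
    best_score = float("-inf")
    for i, value in enumerate(waveform):
        latency = i * ms_per_sample - baseline_ms
        # Only samples inside the latency window are candidates.
        if window_ms[0] <= latency <= window_ms[1]:
            score = polarity * value  # flip sign so "largest" always means "peak"
            if score > best_score:
                best_score = score
                best_idx = i
    if best_idx is None:
        raise ValueError("latency window lies outside the recorded epoch")
    return waveform[best_idx], best_idx * ms_per_sample - baseline_ms


# Toy example: 250 Hz sampling (4 ms per sample), 100 ms pre-stimulus baseline.
wave = [0.0] * 200
wave[30] = 9.0  # 20 ms: larger value, but outside the 150-250 ms window
wave[75] = 5.0  # 200 ms: inside the window
print(peak_in_window(wave, srate_hz=250.0, baseline_ms=100.0))  # (5.0, 200.0)
```

In this toy waveform the larger deflection at 20 ms is ignored because it falls outside the window, which is the point of restricting the search to the component's expected latency range.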

One reason could be the already mentioned fact that the final motor response is a single output resulting from a complex balance of different neural processes. As discussed below, N170 responded to faces in the opposite direction: positive facial distracters elicited the highest amplitude. Another, complementary reason is that P2a overlaps in time, space and polarity with the frontal face positivity (FFP), a component typically elicited by faces that is sensitive to their emotional charge (Eimer et al., 2003; Holmes et al., 2003; Wronka and Walentowska, 2011). Interestingly, when faces are displayed centrally, as in the present experiment, FFP is sensitive to facial expression even when endogenous attention is focused on non-emotional characteristics of stimulation (Wronka and Walentowska, 2011). In other words, P2a to faces probably reflects mixed processes: attentional capture processes sensitive to the negativity bias, discussed earlier, and face-processing mechanisms. This overlap could explain why P2a showed greater amplitudes and shorter latencies in response to faces, in addition to the general negativity bias effect.

Indeed, further analyses revealed two sources significantly associated with P2a scalp activity. One of them was located in the fusiform gyrus (BA20), a region well known to be involved in face processing (Perrett et al., 1982; Halgren et al., 2000; Kanwisher and Yovel, 2006). Although, to the best of our knowledge, no source location data on the FFP have been reported, the present data suggest that the fusiform gyrus contributes to its generation and underlies the significant face > scene differences. Moreover, fusiform ROI current densities were sensitive to facial expression (they were maximal to positive expressions). The second source associated with scalp P2a was the left precentral gyrus (BA6), whose current density was greater for negative than for neutral scenes but was not sensitive to the emotional content of faces. This source would underlie the multicategorical, unspecific exogenous attention effect linked to P2a. Indeed, the left precentral gyrus is one of the main areas activated when a salient distracter exogenously captures attention (see the review by Theeuwes, 2010). The area discussed here lies at the intersection of two relevant functional regions: the frontal eye fields and the inferior frontal junction. Both are consistently activated in experimental paradigms in which different stimuli or tasks compete for access to attentional resources, such as the present one (see reviews in Derrfuss et al., 2005; Corbetta et al., 2008). The precentral gyrus has been linked to the 'dorsal attention network', which is responsible for overt and covert reorienting of processing resources toward distracters according to their priority (Gottlieb, 2007; Bisley and Goldberg, 2010; Ptak, in press). Negative events would have 'biological priority': the consequences of ignoring or reacting slowly to a negative event are often much more dramatic than those of ignoring or reacting slowly to neutral or even appetitive stimuli (Ekman, 1992; Öhman et al., 2000).

N170

With regard to the N170 results, two relevant conclusions can be drawn. First, this component showed greater amplitudes to facial than to non-facial distracters. Although data on whether N170 amplitude is greater to endogenously attended than to endogenously unattended faces are still inconclusive (see a review in Palermo and Rhodes, 2007), the present data support the idea that this component responds more strongly to faces than to non-facial visual stimuli even when endogenous attention is directed elsewhere. Second, N170 was sensitive to the affective meaning of faces, in line with previous findings (e.g. Pizzagalli et al., 2002; Batty and Taylor, 2003; Miyoshi et al., 2004; Stekelenburg and Gelder, 2004; Blau et al., 2007; Krombholz et al., 2007; Japee et al., 2009; Vlamings et al., 2009; Marzi and Viggiano, 2010; Rigato et al., 2010; Wronka and Walentowska, 2011). Consistent with the present experiment, other recent data suggest that this sensitivity of N170 to emotional expression does not require endogenous attention to be directed to faces (Pegna et al., 2011). However, since data showing insensitivity of N170 to emotional expression also exist (Eimer et al., 2003; Leppänen et al., 2007), the characterization of this component in response to affective facial information, which was beyond the scope of the present study, requires additional research.

Happy faces elicited greater N170 amplitudes than disgust faces. Advantages of faces charged with positive affective meaning over faces charged with negative meaning have been reported previously for both N170 latency (Batty and Taylor, 2003) and N170 amplitude (Pizzagalli et al., 2002; Marzi and Viggiano, 2010; these studies compared faces judged as pleasant and unpleasant, but did not present evident facial expressions). The effect of happy vs disgust facial expressions on N170 amplitude has scarcely been explored, and no significant differences have been reported (Batty and Taylor, 2003; Eimer et al., 2003). However, there are marked differences between the present experimental design and previous ones, so further research on this issue is recommended. During binocular rivalry, both happy and disgust faces predominate over neutral faces and, additionally, happy faces predominate over disgust faces (Yoon et al., 2009). The latter result has been interpreted in terms of the 'positivity offset' (Yoon et al., 2009). As already mentioned, this processing bias facilitates the processing of appetitive stimulation and would have been favored by evolution to facilitate exploratory and approach behaviors (e.g. Cacioppo and Gardner, 1999). The positivity offset would be especially relevant in social interactions, where friendly faces preferentially attract attention. Thus, a link could be established between the 'happy face advantage' described earlier and the 'positivity offset'.

Two sources were linked to N170 scalp effects. First, fusiform gyrus (BA20) activity was also reflected in this component (to a greater extent than in P2a, according to the regression results). This result is in line with previous studies exploring the origin of N170, which reported the critical involvement of the fusiform gyrus (Itier and Taylor, 2004; Sadeh et al., 2010). As at the behavioral and scalp levels, fusiform ROI current densities were maximal to positive facial distracters. This pattern suggests two important ideas. First, the fusiform gyrus could underlie the 'happy face advantage' described earlier. Second, the fusiform gyrus is able, under some circumstances, to process facial information even when controlled resources are directed elsewhere. The fact that the left rather than the right fusiform gyrus showed significant effects of facial expression may seem inconsistent with previous literature, since face identity processing tends to preferentially involve the right fusiform face area (see a review in Yovel et al., 2008, but see Proverbio et al., 2010). However, for emotional expression processing, either no right fusiform superiority or even left dominance has been reported (see a meta-analysis in Fusar-Poli et al., 2009; see also Japee et al., 2009; Hung et al., 2010; Monroe et al., in press).

The second source associated with scalp N170 effects was the inferior parietal lobule (IPL, BA40). Two different functions of the IPL are, a priori, relevant to the present results. First, recent models of exogenous attention propose that the posterior parietal cortex (PPC), including the IPL, represents the salience or priority map responsible for bottom-up attention (Ptak, in press; Theeuwes, 2010). Second, the IPL has been shown to be strongly involved in facial expression processing (see a review in Haxby and Gobbini, 2011). Given that this ROI was sensitive to the emotional meaning of faces but not of scenes at this latency and under the present experimental conditions, the second explanation seems more plausible in this particular case.

CONCLUSIONS

Globally, facial expressions and emotional scenes showed similar capabilities for attracting exogenous attention under equivalent experimental conditions for the two categories. This conclusion was evident at the behavioral level, where no main effect of stimulus category (faces or scenes) was found. At the electrophysiological level, there was at first sight an advantage of faces in terms of both amplitude and latency. However, this facial advantage should be interpreted with caution, since face-specific processing mechanisms overlapped in time, and partially in space, with more general, multicategorical mechanisms usually involved in exogenous attention. The face-specific mechanism was mainly (but not exclusively) reflected in N170 at the scalp level, and in the fusiform gyrus and IPL at the source level. This specific mechanism was biased toward positive faces. Multicategorical mechanisms underlying exogenous attention were reflected in P2a at the scalp level, and at the source level in the precentral gyrus, a part of the dorsal attention network. This unspecific mechanism showed a negativity bias: both faces and scenes elicited greater P2a amplitudes when they had negative valence. Taken together, the present results indicate that faces and non-facial scenes elicit different and, to some extent, complementary processing mechanisms, which should be taken into account in attempts to produce a complete picture of attention to emotional visual stimuli.

ACKNOWLEDGEMENTS

This work was supported by the grants PSI2008-03688, PSI2009-08607 and PSI2011-26314 from the Ministerio de Economía y Competitividad (MINECO) of Spain. MINECO also supports Jacobo Albert through a Juan de la Cierva contract (JCI-2010-07766).

Footnotes

1 It is important to note that 8.33% of scenes (distributed among the three emotional categories) included central views of distinguishable human facial elements. These images were retained because cutting, occluding or blurring the faces in the natural scenes they depicted would have introduced artificial elements whose effects could have been more detrimental than retaining this facial information.

REFERENCES

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17:613–20. doi: 10.1016/s0926-6410(03)00174-5. [DOI] [PubMed] [Google Scholar]

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–65. doi: 10.1162/jocn.1996.8.6.551. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bishop SJ. Neural mechanisms underlying selective attention to threat. Annals of the New York Academy of Sciences. 2008;1129:141–52. doi: 10.1196/annals.1417.016. [DOI] [PubMed] [Google Scholar]

- Bisley JW, Goldberg ME. Attention, intention, and priority in the parietal lobe. Annual Review of Neuroscience. 2010;33:1–21. doi: 10.1146/annurev-neuro-060909-152823. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blau VC, Maurer U, Tottenham N, McCandliss BD. The face-specific N170 component is modulated by emotional facial expression. Behavioral and Brain Functions. 2007;3:1–13. doi: 10.1186/1744-9081-3-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bradley MM, Lang PJ. Affective Norms for English Words (ANEW): Instruction Manual and Affective Ratings. Gainesville, FL: University of Florida; 1999. [Google Scholar]

- Cacioppo JT, Gardner WL. Emotion. Annual Review of Psychology. 1999;50:191–214. doi: 10.1146/annurev.psych.50.1.191. [DOI] [PubMed] [Google Scholar]

- Calvo MG, Nummenmaa L. Time course of discrimination between emotional facial expressions: the role of visual saliency. Vision Research. 2011;51:1751–9. doi: 10.1016/j.visres.2011.06.001. [DOI] [PubMed] [Google Scholar]

- Carmel D, Bentin S. Domain specificity versus expertise: factors influencing distinct processing of faces. Cognition. 2002;83:1–29. doi: 10.1016/s0010-0277(01)00162-7. [DOI] [PubMed] [Google Scholar]

- Carretié L, Hinojosa JA, López-Martín S, Albert J, Tapia M, Pozo MA. Danger is worse when it moves: neural and behavioral indices of enhanced attentional capture by dynamic threatening stimuli. Neuropsychologia. 2009;47:364–9. doi: 10.1016/j.neuropsychologia.2008.09.007. [DOI] [PubMed] [Google Scholar]

- Carretié L, Hinojosa JA, Martín-Loeches M, Mercado F, Tapia M. Automatic attention to emotional stimuli: neural correlates. Human Brain Mapping. 2004a;22:290–9. doi: 10.1002/hbm.20037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carretié L, Martín-Loeches M, Hinojosa JA, Mercado F. Emotion and attention interaction studied through event-related potentials. Journal of Cognitive Neuroscience. 2001a;13:1109–28. doi: 10.1162/089892901753294400. [DOI] [PubMed] [Google Scholar]

- Carretié L, Mercado F, Tapia M, Hinojosa JA. Emotion, attention, and the ‘negativity bias’, studied through event-related potentials. International Journal of Psychophysiology. 2001b;41:75–85. doi: 10.1016/s0167-8760(00)00195-1. [DOI] [PubMed] [Google Scholar]

- Carretié L, Ruiz-Padial E, López-Martín S, Albert J. Decomposing unpleasantness: differential exogenous attention to disgusting and fearful stimuli. Biological Psychology. 2011;86:247–53. doi: 10.1016/j.biopsycho.2010.12.005. [DOI] [PubMed] [Google Scholar]

- Carretié L, Tapia M, Mercado F, Albert J, López-Martin S, de la Serna JM. Voltage-based versus factor score-based source localization analyses of electrophysiological brain activity: a comparison. Brain Topography. 2004b;17:109–15. doi: 10.1007/s10548-004-1008-1. [DOI] [PubMed] [Google Scholar]

- Chapman RM, McCrary JW. EP component identification and measurement by principal components analysis. Brain and Cognition. 1995;27:288–310. doi: 10.1006/brcg.1995.1024. [DOI] [PubMed] [Google Scholar]

- Charash M, McKay D. Attention bias for disgust. Journal of Anxiety Disorders. 2002;16:529–41. doi: 10.1016/s0887-6185(02)00171-8. [DOI] [PubMed] [Google Scholar]

- Cliff N. San Diego, Calif: Harcourt Brace Jovanovich; 1987. Analyzing multivariate data. [Google Scholar]

- Cloitre M. Effective psychotherapies for posttraumatic stress disorder: a review and critique. CNS Spectrums. 2009;14(1 Suppl. 1):32–43. [PubMed] [Google Scholar]

- Constantine R, McNally RJ, Hornig CD. Snake fear and the pictorial emotional stroop paradigm. Cognitive Therapy and Research. 2001;25:757–64. [Google Scholar]

- Corbetta M, Patel G, Shulman GL. The reorienting system of the human brain: from environment to theory of mind. Neuron. 2008;58:306–24. doi: 10.1016/j.neuron.2008.04.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Delplanque S, Lavoie ME, Hot P, Silvert L, Sequeira H. Modulation of cognitive processing by emotional valence studied through event-related potentials in humans. Neuroscience Letters. 2004;356:1–4. doi: 10.1016/j.neulet.2003.10.014. [DOI] [PubMed] [Google Scholar]

- Derrfuss J, Brass M, Neumann J, Von Cramon DY. Involvement of the inferior frontal junction in cognitive control: meta–analyses of switching and stroop studies. Human Brain Mapping. 2005;25:22–34. doi: 10.1002/hbm.20127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dien J. Evaluating two step PCA of ERP data with geomin, infomax, oblimin, promax, and varimax rotations. Psychophysiology. 2010;47:170–83. doi: 10.1111/j.1469-8986.2009.00885.x. [DOI] [PubMed] [Google Scholar]

- Dien J, Beal DJ, Berg P. Optimizing principal components analysis of event-related potentials: matrix type, factor loading weighting, extraction, and rotations. Clinical Neurophysiology. 2005;116:1808–25. doi: 10.1016/j.clinph.2004.11.025. [DOI] [PubMed] [Google Scholar]

- Dien J, Spencer KM, Donchin E. Localization of the event-related potential novelty response as defined by principal components analysis. Cognitive Brain Research. 2003;17:637–50. doi: 10.1016/s0926-6410(03)00188-5. [DOI] [PubMed] [Google Scholar]

- Doallo S, Holguin SR, Cadaveira F. Attentional load affects automatic emotional processing: evidence from event-related potentials. Neuroreport. 2006;17:1797–801. doi: 10.1097/01.wnr.0000246325.51191.39. [DOI] [PubMed] [Google Scholar]

- Eastwood JD, Smilek D, Merikle PM. Negative facial expression captures attention and disrupts performance. Attention, Perception, and Psychophysics. 2003;65:352–8. doi: 10.3758/bf03194566. [DOI] [PubMed] [Google Scholar]

- Eimer M, Holmes A, McGlone FP. The role of spatial attention in the processing of facial expression: an ERP study of rapid brain responses to six basic emotions. Cognitive, Affective, and Behavioral Neuroscience. 2003;3:97–110. doi: 10.3758/cabn.3.2.97. [DOI] [PubMed] [Google Scholar]

- Ekman P. An argument for basic emotions. Cognition and Emotion. 1992;6:169–200. [Google Scholar]

- Ekman P. Facial expression and emotion. American Psychologist. 1993;48:384. doi: 10.1037//0003-066x.48.4.384. [DOI] [PubMed] [Google Scholar]

- Esslen M, Pascual-Marqui RD, Hell D, Kochi K, Lehmann D. Brain areas and time course of emotional processing. NeuroImage. 2004;21:1189–203. doi: 10.1016/j.neuroimage.2003.10.001. [DOI] [PubMed] [Google Scholar]

- Fridlund AJ. The new ethology of human facial expressions. In: Russell JA, Fernández-Dols JM, editors. The Psychology of Facial Expression. Cambridge: Cambridge University Press; 1997. pp. 103–29. [Google Scholar]

- Frühholz S, Jellinghaus A, Herrmann M. Time course of implicit processing and explicit processing of emotional faces and emotional words. Biological Psychology. 2011;87:265–74. doi: 10.1016/j.biopsycho.2011.03.008. [DOI] [PubMed] [Google Scholar]

- Fusar-Poli P, Placentino A, Carletti F, et al. Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. Journal of Psychiatry and Neuroscience. 2009;34:418. [PMC free article] [PubMed] [Google Scholar]

- Gottlieb J. From thought to action: the parietal cortex as a bridge between perception, action, and cognition. Neuron. 2007;53:9–16. doi: 10.1016/j.neuron.2006.12.009. [DOI] [PubMed] [Google Scholar]

- Hajcak G, Olvet DM. The persistence of attention to emotion: brain potentials during and after picture presentation. Emotion. 2008;8:250–5. doi: 10.1037/1528-3542.8.2.250. [DOI] [PubMed] [Google Scholar]

- Halgren E, Raij T, Marinkovic K, Jousmäki V, Hari R. Cognitive response profile of the human fusiform face area as determined by MEG. Cerebral Cortex. 2000;10:69–81. doi: 10.1093/cercor/10.1.69. [DOI] [PubMed] [Google Scholar]

- Haxby JV, Gobbini MI. Distributed neural systems for face perception. In: Calder AJ, Rhodes G, Johnson MH, Haxby JV, editors. The Oxford Handbook of Face Perception. Oxford: Oxford University Press; 2011. pp. 93–107. [Google Scholar]

- Hinojosa JA, Carretié L, Valcárcel MA, Méndez-Bértolo C, Pozo MA. Electrophysiological differences in the processing of affective information in words and pictures. Cognitive, Affective and Behavioral Neuroscience. 2009;9:173. doi: 10.3758/CABN.9.2.173. [DOI] [PubMed] [Google Scholar]

- Holmes A, Vuilleumier P, Eimer M. The processing of emotional facial expression is gated by spatial attention: evidence from event-related brain potentials. Brain Research. Cognitive Brain Research. 2003;16:174–84. doi: 10.1016/s0926-6410(02)00268-9. [DOI] [PubMed] [Google Scholar]

- Huang YX, Luo YJ. Temporal course of emotional negativity bias: an ERP study. Neuroscience Letters. 2006;398:91–6. doi: 10.1016/j.neulet.2005.12.074. [DOI] [PubMed] [Google Scholar]

- Huang YX, Luo YJ. Attention shortage resistance of negative stimuli in an implicit emotional task. Neuroscience Letters. 2007;412:134–8. doi: 10.1016/j.neulet.2006.10.061. [DOI] [PubMed] [Google Scholar]

- Hung Y, Smith ML, Bayle DJ, Mills T, Cheyne D, Taylor MJ. Unattended emotional faces elicit early lateralized amygdala-frontal and fusiform activations. NeuroImage. 2010;50:727–33. doi: 10.1016/j.neuroimage.2009.12.093. [DOI] [PubMed] [Google Scholar]

- Itier RJ, Taylor MJ. Source analysis of the N170 to faces and objects. Neuroreport. 2004;15:1261–5. doi: 10.1097/01.wnr.0000127827.73576.d8. [DOI] [PubMed] [Google Scholar]

- Izard CE. Basic emotions, relations among emotions, and emotion-cognition relation. Psychological Review. 1992;99:561–5. doi: 10.1037/0033-295x.99.3.561. [DOI] [PubMed] [Google Scholar]

- Japee S, Crocker L, Carver F, Pessoa L, Ungerleider LG. Individual differences in valence modulation of face-selective m170 response. Emotion. 2009;9:59. doi: 10.1037/a0014487. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jung TP, Makeig S, Humphries C, Lee TW, Mckeown MJ, Iragui V, et al. Removing electroencephalographic artifacts by blind source separation. Psychophysiology. 2000;37:163–78. [PubMed] [Google Scholar]

- Kanske P, Plitschka J, Kotz SA. Attentional orienting towards emotion: P2 and N400 ERP effects. Neuropsychologia. 2011;49:3121–9. doi: 10.1016/j.neuropsychologia.2011.07.022. [DOI] [PubMed] [Google Scholar]

- Kanwisher N, Yovel G. The fusiform face area: a cortical region specialized for the perception of faces. Philosophical Transactions of the Royal Society B: Biological Sciences. 2006;361:2109–28. doi: 10.1098/rstb.2006.1934. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Keil A. Macroscopic brain dynamics during verbal and pictorial processing of affective stimuli. Progress in Brain Research. 2006;156:217–32. doi: 10.1016/S0079-6123(06)56011-X. [DOI] [PubMed] [Google Scholar]

- Kenemans JL, Verbaten MN, Melis CJ, Slangen JL. Visual stimulus change and the orienting reaction: event-related potential evidence for a two-stage process. Biological Psychology. 1992;33:97–114. doi: 10.1016/0301-0511(92)90026-q. [DOI] [PubMed] [Google Scholar]

- Kenemans JL, Verbaten MN, Roelofs JW, Slangen JL. Initial- and change-orienting reactions: an analysis based on visual single-trial event-related potentials. Biological Psychology. 1989;28:199–226. doi: 10.1016/0301-0511(89)90001-x. [DOI] [PubMed] [Google Scholar]

- Kissler J, Assadollahi R, Herbert C. Emotional and semantic networks in visual word processing: insights from ERP studies. Progress in Brain Research. 2006;156:147–83. doi: 10.1016/S0079-6123(06)56008-X. [DOI] [PubMed] [Google Scholar]

- Koster EH, Crombez G, Van Damme S, Verschuere B, De Houwer J. Does imminent threat capture and hold attention? Emotion. 2004;4:312–7. doi: 10.1037/1528-3542.4.3.312. [DOI] [PubMed] [Google Scholar]

- Krombholz A, Schaefer F, Boucsein W. Modification of N170 by different emotional expression of schematic faces. Biological Psychology. 2007;76:156–62. doi: 10.1016/j.biopsycho.2007.07.004. [DOI] [PubMed] [Google Scholar]

- Lang PJ, Bradley MM, Cuthbert BN. International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual. Gainesville, FL: University of Florida; 2005. [Google Scholar]

- Leppänen JM, Hietanen JK. Positive facial expressions are recognized faster than negative facial expressions, but why? Psychological Research. 2004;69:22–9. doi: 10.1007/s00426-003-0157-2. [DOI] [PubMed] [Google Scholar]

- Leppänen JM, Kauppinen P, Peltola MJ, Hietanen JK. Differential electrocortical responses to increasing intensities of fearful and happy emotional expressions. Brain Research. 2007;1116:103–9. doi: 10.1016/j.brainres.2007.06.060. [DOI] [PubMed] [Google Scholar]

- Linden W. Exposure treatments for focal phobias: a review. Archives of General Psychiatry. 1981;38:769. doi: 10.1001/archpsyc.1981.01780320049005. [DOI] [PubMed] [Google Scholar]

- Lundqvist D, Flykt A, Ohman A. Karolinska Directed Emotional Faces. Stockholm: Psychology Section, Department of Clinical Neuroscience, Karolinska Institutet; 1998. [Google Scholar]

- Marzi T, Viggiano M. When memory meets beauty: insights from event-related potentials. Biological Psychology. 2010;84:192–205. doi: 10.1016/j.biopsycho.2010.01.013. [DOI] [PubMed] [Google Scholar]

- Miyoshi M, Katayama J, Morotomi T. Face-specific N170 component is modulated by facial expressional change. Neuroreport. 2004;15:911–4. doi: 10.1097/00001756-200404090-00035. [DOI] [PubMed] [Google Scholar]

- Mogg K, Bradley BP. A cognitive-motivational analysis of anxiety. Behaviour Research and Therapy. 1998;36:809–48. doi: 10.1016/s0005-7967(98)00063-1. [DOI] [PubMed] [Google Scholar]

- Monroe JF, Griffin M, Pinkham A, Loughead J, Gur RC, Roberts TPL, et al. The fusiform response to faces: explicit versus implicit processing of emotion. Human Brain Mapping. in press doi: 10.1002/hbm.21406. [DOI] [PMC free article] [PubMed] [Google Scholar]

- NIST/SEMATECH. E-Handbook of Statistical Methods. 2010 http://www.itl.nist.gov/div898/handbook/ [Google Scholar]

- Öhman A, Hamm A, Hugdahl K. Cognition and the autonomic nervous system: orienting, anticipation, and conditioning. In: Cacioppo JT, Tassinary LG, Bernston GG, editors. Handbook of Psychophysiology. 2nd edn. Cambridge: Cambridge University Press; 2000. pp. 533–75. [Google Scholar]

- Olofsson JK, Nordin S, Sequeira H, Polich J. Affective picture processing: an integrative review of ERP findings. Biological Psychology. 2008;77:247–65. doi: 10.1016/j.biopsycho.2007.11.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oostenveld R, Fries P, Maris E, Schoffelen JM. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Computational Intelligence and Neuroscience 2011. 2011 doi: 10.1155/2011/156869. Article ID 156869, 9 pages. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Palermo R, Coltheart M. Photographs of facial expression: accuracy, response times, and ratings of intensity. Behavior Research Methods. 2004;36:634–8. doi: 10.3758/bf03206544. [DOI] [PubMed] [Google Scholar]

- Palermo R, Rhodes G. Are you always on my mind? A review of how face perception and attention interact. Neuropsychologia. 2007;45:75–92. doi: 10.1016/j.neuropsychologia.2006.04.025. [DOI] [PubMed] [Google Scholar]

- Pascual-Marqui RD. Standardized low-resolution brain electromagnetic tomography (sLORETA): technical details. Methods and Findings in Experimental and Clinical Pharmacology. 2002;24(Suppl. D):5–12. [PubMed] [Google Scholar]

- Pegna AJ, Darque A, Berrut C, Khateb A. Early ERP modulation for task-irrelevant subliminal faces. Frontiers in Psychology. 2011;2:88. doi: 10.3389/fpsyg.2011.00088. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Perrett DI, Rolls ET, Caan W. Visual neurones responsive to faces in the monkey temporal cortex. Experimental Brain Research. 1982;47:329–42. doi: 10.1007/BF00239352. [DOI] [PubMed] [Google Scholar]

- Pessoa L, Ungerleider LG. Neuroimaging studies of attention and the processing of emotion-laden stimuli. Progress in Brain Research. 2004;144:171–82. doi: 10.1016/S0079-6123(03)14412-3. [DOI] [PubMed] [Google Scholar]

- Pizzagalli DA, Lehmann D, Hendrick AM, Regard M, Pascual-Marqui RD, Davidson RJ. Affective judgments of faces modulate early activity (<160 ms) within the fusiform gyri. NeuroImage. 2002;16:663–77. doi: 10.1006/nimg.2002.1126. [DOI] [PubMed] [Google Scholar]

- Posner MI, Rueda MR, Kanske P. Probing the mechanisms of attention. In: Cacioppo JT, Tassinary JG, Berntson GG, editors. The Handbook of Psychophysiology. 3rd edn. Cambridge: Cambridge University Press; 2007. pp. 410–32. [Google Scholar]

- Proverbio AM, Riva F, Martin E, Zani A. Face coding is bilateral in the female brain. PloS One. 2010;5:e11242. doi: 10.1371/journal.pone.0011242. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ptak R. The frontoparietal attention network of the human brain: action, saliency, and a priority map of the environment. The Neuroscientist. in press doi: 10.1177/1073858411409051. [DOI] [PubMed] [Google Scholar]

- Rellecke J, Palazova M, Sommer W, Schacht A. On the automaticity of emotion processing in words and faces: event-related brain potentials evidence from a superficial task. Brain and Cognition. 2011;77:23–32. doi: 10.1016/j.bandc.2011.07.001. [DOI] [PubMed] [Google Scholar]

- Rigato S, Farroni T, Johnson MH. The shared signal hypothesis and neural responses to expressions and gaze in infants and adults. Social Cognitive and Affective Neuroscience. 2010;5:88–97. doi: 10.1093/scan/nsp037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sadeh B, Podlipsky I, Zhdanov A, Yovel G. Event-related potential and functional MRI measures of face–selectivity are highly correlated: a simultaneous ERP fMRI investigation. Human Brain Mapping. 2010;31:1490–501. doi: 10.1002/hbm.20952. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schwartz S, Vuilleumier P, Hutton C, Maravita A, Dolan RJ, Driver J. Attentional load and sensory competition in human vision: modulation of fMRI responses by load at fixation during task-irrelevant stimulation in the peripheral visual field. Cerebral Cortex. 2005;15:770–86. doi: 10.1093/cercor/bhh178. [DOI] [PubMed] [Google Scholar]

- Stekelenburg JJ, Gelder B. The neural correlates of perceiving human bodies: an ERP study on the body-inversion effect. Neuroreport. 2004;15:777. doi: 10.1097/00001756-200404090-00007. [DOI] [PubMed] [Google Scholar]

- Taylor SE. Asymmetrical effects of positive and negative events: the mobilization-minimization hypothesis. Psychological Bulletin. 1991;110:67–85. doi: 10.1037/0033-2909.110.1.67. [DOI] [PubMed] [Google Scholar]

- Theeuwes J. Top-down and bottom-up control of visual selection. Acta Psychologica. 2010;135:77–99. doi: 10.1016/j.actpsy.2010.02.006.

- Thomas SJ, Johnstone SJ, Gonsalvez CJ. Event-related potentials during an emotional Stroop task. International Journal of Psychophysiology. 2007;63:221–31. doi: 10.1016/j.ijpsycho.2006.10.002.

- Tracy JL, Robins RW. The automaticity of emotion recognition. Emotion. 2008;8:81. doi: 10.1037/1528-3542.8.1.81.

- Vanderploeg RD, Brown WS, Marsh JT. Judgments of emotion in words and faces: ERP correlates. International Journal of Psychophysiology. 1987;5:193–205. doi: 10.1016/0167-8760(87)90006-7.

- Vlamings PHJM, Goffaux V, Kemner C. Is the early modulation of brain activity by fearful facial expressions primarily mediated by coarse low spatial frequency information? Journal of Vision. 2009;9:1–13. doi: 10.1167/9.5.12.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–41. doi: 10.1016/s0896-6273(01)00328-2.

- Wronka E, Walentowska W. Attention modulates emotional expression processing. Psychophysiology. 2011;48:1047–56. doi: 10.1111/j.1469-8986.2011.01180.x.

- Yoon KL, Hong SW, Joormann J, Kang P. Perception of facial expressions of emotion during binocular rivalry. Emotion. 2009;9:172–82. doi: 10.1037/a0014714.

- Yovel G, Tambini A, Brandman T. The asymmetry of the fusiform face area is a stable individual characteristic that underlies the left-visual-field superiority for faces. Neuropsychologia. 2008;46:3061–8. doi: 10.1016/j.neuropsychologia.2008.06.017.

- Yuan J, Zhang Q, Chen A, Li H, Wang Q, Zhuang Z, et al. Are we sensitive to valence differences in emotionally negative stimuli? Electrophysiological evidence from an ERP study. Neuropsychologia. 2007;45:2764–71. doi: 10.1016/j.neuropsychologia.2007.04.018.

- Zlomke K, Davis TE III. One-session treatment of specific phobias: a detailed description and review of treatment efficacy. Behavior Therapy. 2008;39:207–23. doi: 10.1016/j.beth.2007.07.003.