Abstract

Numerous event-related brain potential (ERP) studies reveal the differential processing of emotional and neutral stimuli. Yet, it is an ongoing debate to what extent the ERP components found in previous research are sensitive to physical stimulus characteristics or emotional meaning. This study manipulated emotional meaning and stimulus orientation to disentangle the impact of stimulus physics and semantics on emotional stimulus processing. Negative communicative hand gestures of Insult were contrasted with neutral control gestures of Allusion to manipulate emotional meaning. An elementary physical manipulation of visual processing was implemented by presenting these stimuli vertically and horizontally. The results showed dissociable effects of stimulus meaning and orientation on the sequence of ERP components. Effects of orientation were pronounced in the P1 and N170 time frames and attenuated during later stages. Emotional meaning affected the P1, evincing a distinct topography to orientation effects. Although the N170 was not modulated by emotional meaning, the early posterior negativity and late positive potential components replicated previous findings with larger potentials elicited by the Insult gestures. These data suggest that the brain processes different attributes of an emotional picture in parallel and that a coarse semantic appreciation may already occur during relatively early stages of emotion perception.

Keywords: attention, emotion, ERP, gestures, non-verbal communication

INTRODUCTION

Reacting rapidly and appropriately to threatening or life-sustaining stimuli is imperative for an organism’s survival in a complex environment. Thus, motivationally relevant external stimuli may automatically induce a state of natural selective attention (Lang et al., 1997) and effectively guide ensuing perceptual processes. Research utilizing event-related brain potentials (ERPs) has determined several indicators of preferential emotion processing along the time scale of visual perception (Schupp et al., 2006; Flaisch et al., 2008b). As emotionally guided perceptual amplification supposedly occurs from very early to relatively late stages of visual processing, the extent to which the observed emotional effects are driven by stimulus differences on a purely physical level or the semantic information associated with such differences is under debate. In this study, we provide evidence that early indicators of emotion perception conceivably reflect semantic stimulus content and demonstrate that the effects of physical and semantic stimulus attributes can be differentiated along the sequence of emotion perception.

Preferential processing of affective pictorial stimuli unfolds in a temporal sequence from relatively early to later stages (Schupp et al., 2006). Studies utilizing ERP measures identified several electro-cortical components presumably reflecting distinct sub-processes of emotion perception. A large body of research has established the ‘early posterior negativity’ (EPN) and the ‘late positive potential’ (LPP) as indices of emotion processing. The EPN is characterized by a more negative-going deflection of the surface potential when viewing emotional compared with neutral pictures (Schupp et al., 2007; Flaisch et al., 2008a). It is typically observed over temporo-parieto-occipital sensors and occurs around 150–350 ms after stimulus onset. Subsequently, this difference is followed by the LPP which occurs around 350–750 ms over fronto-parieto-central positions as a relative positivity to emotional stimuli (Schupp et al., 2006; Flaisch et al., 2008b). These effects may reflect the amplified or prioritized processing of emotional stimuli as they are observed with striking similarity in passive task contexts across a wide variety of visual stimulus materials, including naturalistic scenes (International Affective Picture System (IAPS), Schupp et al., 2007; Flaisch et al., 2008b), facial expressions (Schupp et al., 2004b; Leppänen et al., 2007), written words (Kissler et al., 2009; Herbert et al., 2008) and communicative hand gestures (Flaisch et al., 2009, 2011).

There is some evidence that emotional pictures are detected even earlier in the processing stream. Several studies have reported differences between emotional and neutral picture stimuli as early as the P1, occurring around 60–120 ms over parieto-occipital sensor positions (Pourtois et al., 2005; van Heijnsbergen et al., 2007; Flaisch et al., 2011). In addition, several studies have investigated the N170 component observed over occipito-temporal sites with a latency around 170 ms, which is held to reflect the perceptual encoding of faces and body parts (for a recent review see Minnebusch and Daum, 2009). Mixed evidence has been obtained regarding the susceptibility of the N170 to the affective nature of face stimuli. Although some studies do report emotional modulations of the N170 (Stekelenburg and De Gelder, 2004; Mühlberger et al., 2009; Frühholz et al., 2011), others have failed to find such effects (Schupp et al., 2004b; Pourtois et al., 2005; Leppänen et al., 2007). In sum, the perceptual processing of emotional picture stimuli unfolds in a temporal sequence which may be traced by various emotion-sensitive ERP components.

However, such modulations may not exclusively indicate semantic evaluative processes. Instead, similar ERP effects may be observed in purely physical manipulations that either affect perceptual processes or interact with the semantic evaluation of according stimuli. Several studies hint at various physical parameters that may influence emotion perception at various stages, effectively impeding clear emotion interpretation. One such parameter is visual noise since degrading the perceptual quality of a picture stimulus generally diminishes the P3/LPP, a component widely held to reflect semantic stimulus encoding (Kok, 2001). Perceptually degraded pictures also invoke reduced or even abolished P1 and EPN amplitudes (Schupp et al., 2008), most likely reflecting hampered object recognition. A differentiated picture emerges when image size is varied: smaller pictures elicit less preferential processing at the level of the EPN, but later processes as indexed by the LPP seem to be largely unaffected by the physical size of the stimulus (De Cesarei and Codispoti, 2006). This suggests that these components reflect physical-perceptual and semantic processing differentially. Interestingly, emotional pictures presented in the periphery, compared with the fovea, appear to lose the ability to induce preferential processing (De Cesarei et al., 2009). Other studies have focused on the relation of spatial frequency and preferential emotion processing (Vuilleumier et al., 2003; Holmes et al., 2005; Pourtois et al., 2005). The results suggest that, in particular, early brain responses to emotional pictures rely on the information contained in the low-frequency spectrum of the images (Pourtois et al., 2005; Alorda et al., 2007; Vlamings et al., 2009). In contrast, subsequent ERP indices, i.e. the N170 and the LPP, showed a much smaller or even no preference for frequency filtered emotional pictures (Pourtois et al., 2005; Alorda et al., 2007; Vlamings et al., 2009; De Cesarei and Codispoti, 2011). Picture composition may also obscure semantic emotion effects. Comparing emotional and neutral pictures with a simple figure-ground composition to more complex images revealed pronounced ERP effects in the EPN time window due to picture complexity while emotional effects were limited to the LPP (Bradley et al., 2007). This finding was interpreted as evidence that the EPN would mainly reflect the perceptual organization of a stimulus while the LPP would indicate its actual emotional appreciation. In sum, existing research shows that the different ERP indices of preferential emotion processing may be susceptible to various physical stimulus manipulations. Thus, the exact nature of the various emotion-sensitive ERP components, in particular whether they reflect purely physical-perceptual or semantic-evaluative processes, remains to be determined.

Fully appreciating physical and semantic processing differences along the sequence of emotion perception requires assessing effects from relatively early up to the later stages in the same participants utilizing the same stimulus materials. Although previous studies sporadically speak to this issue, a comprehensive assessment of this question is still lacking. One reason for this may be found in the specifics of conventional stimulus materials. The results from research using emotional facial expressions are difficult to generalize as faces have a unique status in the brain (Bentin et al., 1996; Kanwisher et al., 1997; Farah et al., 1998), presumably due to their evolutionary significance (Öhman et al., 2000). This restriction also applies to naturalistic pictures, most notably to those most reliably eliciting emotion processing, i.e. erotic or violent content (Schupp et al., 2004a). Furthermore, the vastly differing physical composition of IAPS pictures makes it difficult to disentangle physical from semantic effects based on these materials. Finally, no previous study has succeeded in delineating physical from semantic processes while also assessing the full sequence of emotion-modulated ERP components.

Here, we approached this question by utilizing communicative hand gestures (Efron, 1972/1941; Morris, 1994). Specifically, we compared negative and neutral gestures rotated to either vertical or horizontal orientation. These materials seem particularly suited to the present research question. First, emotional gestures are processed preferentially by the brain (Flaisch et al., 2009), as demonstrated for the P1, the subsequent EPN and the later LPP (Flaisch et al., 2011). In addition, body stimuli elicit an N170 (Minnebusch and Daum, 2009); however, no emotion modulation has yet been reported for non-facial body stimuli. Second, the hand gestures used share a very comparable and simple picture composition, minimizing the effects of uncontrolled stimulus physics, such as perceptual quality, visual eccentricity, spatial frequency or visual complexity. Third, the semantic meaning attached to the hand gestures used does not rely on evolutionary significance as it is based on cultural convention and social learning (Buck and VanLear, 2002). Together, this allowed manipulating stimulus orientation, a low-level visual attribute known to elicit early processing differences between horizontal and vertical stimuli (Kenemans et al., 1993, 1995; Grill-Spector and Malach, 2004), while at the same time preserving overall recognizability and semantic meaning. Based on previous research, emotion and stimulus orientation effects were expected to differ across the processing stream. Whereas stimulus orientation effects were expected to be most pronounced during early processing stages, emotion effects are usually most prominent during subsequent stimulus processing. High-density ERP recordings served to differentiate between emotion and orientation effects regarding time course and topographical appearance.

MATERIALS AND METHODS

Participants

Twenty (10 females) volunteers aged between 20 and 32 years (mean = 23.6) participated in the study. The experimental procedures were approved by the ethical committee of the University of Konstanz, and all participants provided informed consent. They received monetary compensation or course credit for participation.

Stimuli

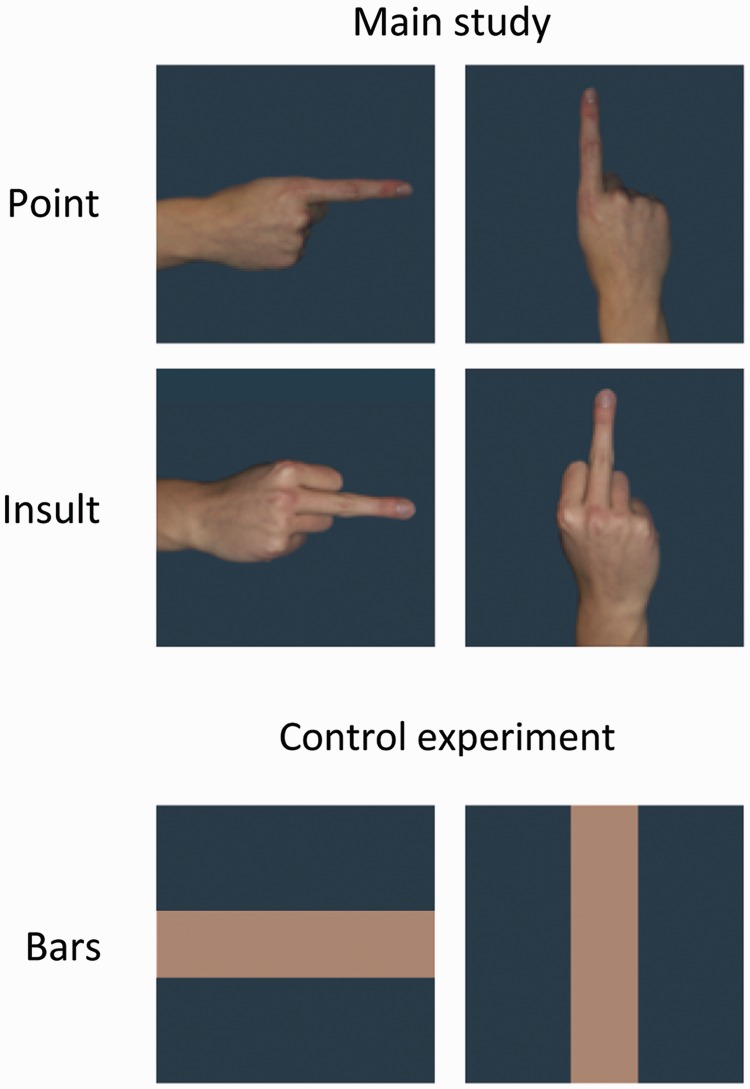

The middle finger jerk, which is among the strongest gestures of sexual insult and is produced by the upward thrusting of the stiff middle finger, served as the emotional gesture (‘Insult’), while the index finger pointing in a specific direction served as the emotionally neutral control gesture (‘Point’; Morris, 1979). Both gestures were rotated to appear in vertical and horizontal orientations (Figure 1). Each gesture was posed by four women and four men. All gestures were displayed with the back of the hand facing the viewer and with a neutral, monotone gray-blue background. All pictures also appeared mirrored along the vertical axis to control for possible lateralization effects.

Fig. 1.

Examples of stimuli. Stimuli from one actor for all experimental cells in the main study (top) and bar stimuli utilized in the control experiment (bottom).

Self-report

Following ERP measurement, participants were asked to rate the viewed gestures according to their perceived pleasantness (1 = most unpleasant; 9 = most pleasant) and arousal (1 = least arousing; 9 = most arousing) using the Self-Assessment Manikin (SAM; Bradley and Lang, 1994). For statistical analysis, these ratings were entered into a two-factorial repeated-measures analysis of variance (ANOVA; Gesture: Point vs Insult and Orientation: vertical vs horizontal).
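The logic of this two-factorial design can be illustrated with a minimal Python sketch. In a 2 × 2 repeated-measures ANOVA each effect has a single numerator degree of freedom, so its F value equals the squared one-sample t statistic of the corresponding per-participant contrast. The function names and the toy ratings below are hypothetical and serve illustration only; they are not the software or the data used in the study.

```python
from math import sqrt
from statistics import fmean, stdev


def contrast_f(scores):
    """Return F and its degrees of freedom (1, n-1) for one contrast.

    For a single-df within-subject effect, F is the squared one-sample
    t statistic of the per-participant contrast scores.
    """
    n = len(scores)
    t = fmean(scores) / (stdev(scores) / sqrt(n))
    return t * t, (1, n - 1)


def rm_anova_2x2(cells):
    """cells: one dict per participant, keyed by (gesture, orientation)."""
    gesture, orientation, interaction = [], [], []
    for c in cells:
        pv, ph = c["Point", "vertical"], c["Point", "horizontal"]
        iv, ih = c["Insult", "vertical"], c["Insult", "horizontal"]
        gesture.append((iv + ih) / 2 - (pv + ph) / 2)      # Insult minus Point
        orientation.append((pv + iv) / 2 - (ph + ih) / 2)  # vertical minus horizontal
        interaction.append((iv - ih) - (pv - ph))          # difference of differences
    return {
        "Gesture": contrast_f(gesture),
        "Orientation": contrast_f(orientation),
        "Gesture x Orientation": contrast_f(interaction),
    }


def participant(pv, ph, iv, ih):
    """Helper that builds one participant's four cell means (toy values)."""
    return {
        ("Point", "vertical"): pv, ("Point", "horizontal"): ph,
        ("Insult", "vertical"): iv, ("Insult", "horizontal"): ih,
    }


# Toy values for four hypothetical participants (not data from the study).
toy = [participant(1, 0, 2, 1), participant(0, 0, 2, 2),
       participant(0, 1, 4, 3), participant(1, 1, 3, 3)]
results = rm_anova_2x2(toy)
for effect, (f_value, df) in results.items():
    print(f"{effect}: F{df} = {f_value:.2f}")
```

With 20 participants, the same computation yields the F(1,19) tests reported for the ratings.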

Procedure, ERP data acquisition and analysis

To limit eye movements to a single fixation, a stimulus was presented for 117 ms followed by a blank interstimulus interval of 900 ms. The entire picture set consisting of the differentially oriented and mirrored gestures (8 × 2 × 2 × 2 = 64) was repeated 20 times resulting in a total of 1280 picture presentations. The pictures were shown in a randomized order in which no more than three pictures of the same experimental category were presented in succession and the transition frequencies between all categories were controlled. The session was divided into four blocks, allowing for posture adjustments during the pauses in between. Participants were instructed to simply view the pictures.
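The trial arithmetic and the run-length constraint described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the presentation script used in the study: the function names are hypothetical, a simple retry strategy stands in for whatever procedure was actually used, and the additional control of transition frequencies between categories is not implemented.

```python
import random
from itertools import product

ACTORS = range(8)                       # four women and four men
GESTURES = ("Point", "Insult")
ORIENTATIONS = ("vertical", "horizontal")
MIRRORED = (False, True)
REPETITIONS = 20
MAX_RUN = 3                             # at most three same-category trials in a row


def build_trials():
    """All 8 x 2 x 2 x 2 = 64 pictures, each repeated 20 times."""
    pictures = list(product(ACTORS, GESTURES, ORIENTATIONS, MIRRORED))
    return pictures * REPETITIONS


def category(trial):
    """Experimental category = gesture type x orientation."""
    return trial[1], trial[2]


def constrained_shuffle(trials, max_run=MAX_RUN, rng=None, max_attempts=1000):
    """Random order in which no category occurs more than max_run times in a row."""
    rng = rng or random.Random()
    for _ in range(max_attempts):
        pool = list(trials)
        rng.shuffle(pool)
        order = []
        while pool:
            blocked = None
            if len(order) >= max_run:
                tail = {category(t) for t in order[-max_run:]}
                if len(tail) == 1:      # the last max_run trials share one category
                    blocked = next(iter(tail))
            idx = next((i for i, t in enumerate(pool)
                        if category(t) != blocked), None)
            if idx is None:             # dead end: only the blocked category is left
                break
            order.append(pool.pop(idx))
        else:
            return order                # pool emptied without hitting a dead end
    raise RuntimeError("no valid order found")


trials = build_trials()
order = constrained_shuffle(trials, rng=random.Random(0))
print(len(trials), len(order))
```

Each of the four experimental categories contributes 320 presentations, so dead ends are rare and a retry is seldom needed.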

Brain and ocular scalp potential fields were measured with a 256-lead geodesic sensor net (GSN 200 v2.0; EGI: Electrical Geodesics Inc., Eugene, OR), on-line bandpass filtered from 0.01 to 100 Hz and sampled at 250 Hz using Netstation acquisition software and EGI amplifiers. Using EMEGS software (Peyk et al., 2011), data editing and artifact rejection were based on an elaborate method for statistical artifact control, specifically tailored for the analysis of dense sensor ERP recordings (Junghöfer et al., 2000). Data were converted to an average reference and stimulus synchronized epochs extracted from 200 ms pre- until 1000 ms post-stimulus onset. After baseline adjustment (100 ms pre-stimulus), separate average waveforms were calculated for all experimental categories for each sensor and participant.
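The two referencing steps named above, conversion to an average reference and baseline adjustment, can be sketched in a few lines. This is a simplified illustration using plain Python lists, not the EMEGS implementation; the function names are hypothetical, and the epoch layout (200 ms pre-stimulus at 250 Hz, i.e. stimulus onset at sample index 50) is an assumption derived from the parameters given in the text.

```python
from statistics import fmean


def average_reference(data):
    """Subtract the mean across sensors from every sensor at each sample.

    data: list of sensors, each a list of samples of equal length.
    """
    n_samples = len(data[0])
    means = [fmean(ch[t] for ch in data) for t in range(n_samples)]
    return [[ch[t] - means[t] for t in range(n_samples)] for ch in data]


def baseline_correct(epoch, sfreq=250, epoch_start_ms=-200, baseline_ms=100):
    """Subtract each sensor's mean over the last baseline_ms before onset."""
    onset = round(-epoch_start_ms * sfreq / 1000)   # sample index of stimulus onset
    n_base = round(baseline_ms * sfreq / 1000)      # 100 ms at 250 Hz = 25 samples
    corrected = []
    for ch in epoch:
        base = fmean(ch[onset - n_base:onset])
        corrected.append([v - base for v in ch])
    return corrected


# Toy epoch: two sensors, 300 samples (-200 to 1000 ms at 250 Hz).
toy_epoch = [[float(i % 7) for i in range(300)],
             [0.5 * i for i in range(300)]]
referenced = average_reference(toy_epoch)
corrected = baseline_correct(referenced)
```

After both steps, the sensors sum to zero at every sample and each sensor has a mean of zero in the 100 ms preceding stimulus onset.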

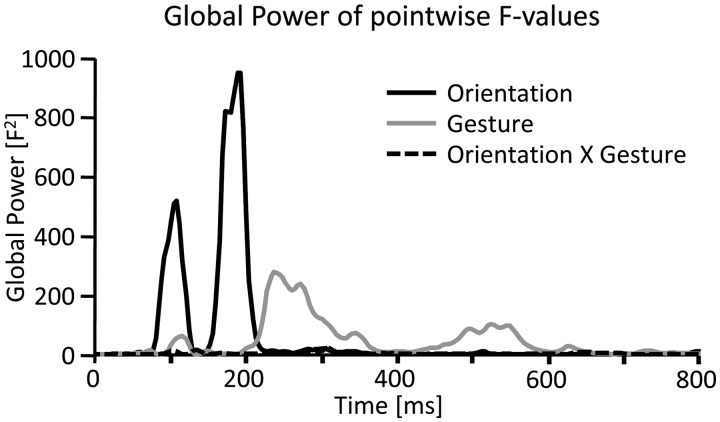

In a first exploratory analysis, each time point and sensor was individually tested using a two-factorial ANOVA (Gesture: Point vs Insult and Orientation: vertical vs horizontal). Effects were considered significant only if they reached P < 0.05 for at least eight continuous data points (32 ms) and two neighboring sensors, to guard conservatively against chance findings (Sabbagh and Taylor, 2000). Based on the resulting F values, the Global Power across all sensors was calculated to determine the time course of modulation as a function of both the main effects and the interaction. From this, five modulations became readily apparent, two as a function of Orientation and three for Gesture, respectively (Figure 2). To further detail these effects, specific time intervals and sensor clusters were defined. Averaged data within these intervals and clusters were subjected to three-factorial ANOVAs with repeated measurement on the factors Gesture, Orientation and Cluster.
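The temporal part of the thresholding criterion can be illustrated with a short sketch: at a sampling rate of 250 Hz one sample spans 4 ms, so eight consecutive significant samples correspond to 32 ms. The function name and the toy P values are hypothetical, and the additional requirement of two neighboring sensors is not covered here because it depends on the sensor layout.

```python
def sustained_mask(p_values, alpha=0.05, min_run=8):
    """Mark samples belonging to runs of at least min_run consecutive P < alpha."""
    mask = [False] * len(p_values)
    run_start = None
    # A trailing sentinel of 1.0 closes a run that reaches the last sample.
    for i, p in enumerate(list(p_values) + [1.0]):
        if p < alpha:
            if run_start is None:
                run_start = i
        else:
            if run_start is not None and i - run_start >= min_run:
                for j in range(run_start, i):
                    mask[j] = True
            run_start = None
    return mask


# Toy time course for one sensor: a run of 8 samples passes, a run of 7 does not.
toy_p = [0.2] * 3 + [0.01] * 8 + [0.2] * 2 + [0.01] * 7 + [0.2]
toy_mask = sustained_mask(toy_p)
print(sum(toy_mask))  # number of samples retained
```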

Fig. 2.

Global power of exploratory single sensor analysis. Global power plot of the F values resulting from the 2 × 2 ANOVAs (Gesture × Orientation) calculated for each sensor and time point separately. Statistical modulations for the main effect of stimulus orientation were clearly apparent in relatively early time frames, i.e. around 80–120 ms and 160–210 ms (black line), while modulations due to gesture type were most pronounced during later time windows, i.e. around 200–320 ms and 450–600 ms (gray line). No clear interaction effects were obvious from this analysis.

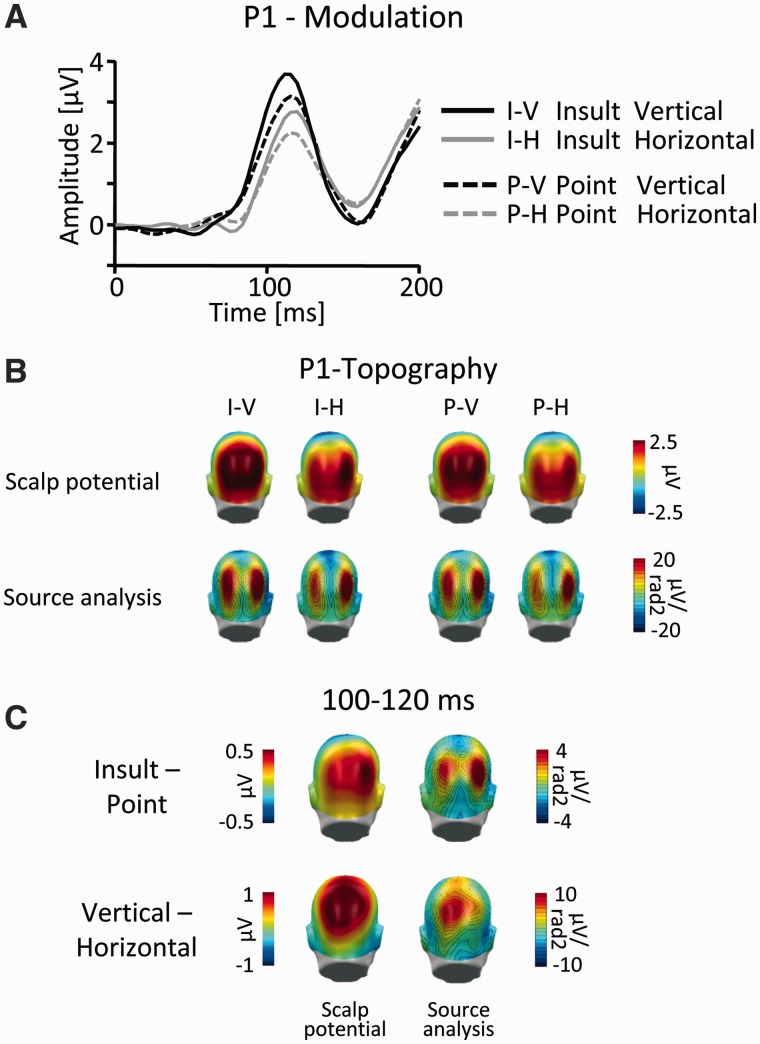

Visual inspection of the two main effects of Gesture and Orientation in the P1 time frame suggested that the two effects differed regarding topographical appearance. Specifically, although the main effect of Gesture appeared to closely mirror the topographical distribution of the visual P1, the main effect of Orientation suggested a more superior and comparatively central localization (Figure 3). Thus, to accurately capture possible distinctions between the two effects, they were assessed in three sensor clusters in the interval 100–120 ms. Two lateralized parieto-occipital clusters were centered on the P1 peak of the overall ERP across all conditions (Figure 4), and a centro-parietal sensor cluster was positioned at sensor sites showing the most pronounced effects of stimulus orientation (Figure 4).

Fig. 3.

P1 component. (A) Illustration of the ERP waveforms for a right parieto-occipital sensor (EGI #152; Figure 4). (B) Scalp topography maps (top) and according source analysis (bottom) for all picture categories in the time window 100–120 ms. (C) Scalp difference maps (vertical–horizontal and Insult–Point; left) and according source analysis (right) in the time window 100–120 ms. Please note the different scales.

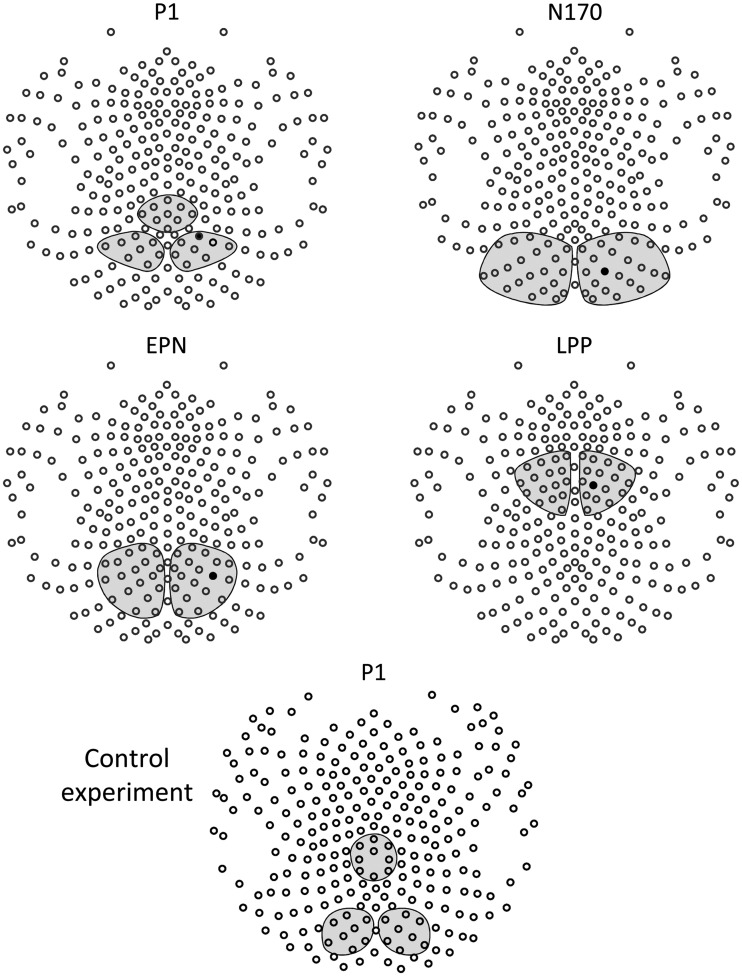

Fig. 4.

Analyzed sensor clusters. Illustration of all sensor clusters as analyzed for each ERP effect. Black sensors indicate channels presented in detail in Figures 3 and 6–8. The sensor configuration is displayed from the top with the nose pointing up.

The N170 was scored in the interval 160–200 ms in two lateralized occipito-temporal clusters (Figure 4). The EPN was captured in the interval 220–320 ms in two lateralized parieto-occipital clusters (Figure 4). The LPP was scored in the interval 460–560 ms in two lateralized fronto-central sensor clusters (Figure 4).
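Scoring a component in this way amounts to averaging the ERP over all samples of the interval and all sensors of the cluster. The sketch below illustrates this for the N170 window under stated assumptions: the epoch is taken to start 200 ms before stimulus onset and to be sampled at 250 Hz, both interval endpoints are treated as inclusive, and the sensor labels and amplitudes are invented for illustration.

```python
from statistics import fmean

SAMPLING_RATE_HZ = 250
EPOCH_START_MS = -200   # assumed epoch start relative to stimulus onset


def ms_to_sample(t_ms):
    """Convert a latency in ms (relative to onset) to a sample index."""
    return round((t_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000)


def mean_amplitude(erp, sensors, start_ms, end_ms):
    """Mean over all samples in [start_ms, end_ms] and all listed sensors.

    erp: dict mapping a sensor label to its list of samples.
    """
    first, last = ms_to_sample(start_ms), ms_to_sample(end_ms)
    return fmean(erp[s][i] for s in sensors for i in range(first, last + 1))


# Toy ERP with two hypothetical sensors; values differ only in the N170 window.
toy_erp = {"s1": [0.0] * 300, "s2": [0.0] * 300}
for i in range(ms_to_sample(160), ms_to_sample(200) + 1):
    toy_erp["s1"][i] = 2.0
    toy_erp["s2"][i] = 4.0

n170_score = mean_amplitude(toy_erp, ["s1", "s2"], 160, 200)
```

The resulting per-participant, per-condition scores are the values that enter the three-factorial ANOVAs.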

To assess topographic differences between ensuing effects in more detail, current source densities were calculated (Junghöfer et al., 1997). This approach is based on a physiological volume conductor model that is well suited for dense array electroencephalography (EEG) data and which indicates a focal generator source by a sink/source pattern of inward/outward flow of current.

Control experiment

To provide an empirical control for orientation effects independent of semantic meaning, a second study utilizing simple horizontal and vertical bar stimuli was conducted (Figure 1). The width and color of the bars closely mirrored the gesture images, and the same background was utilized. Twelve volunteers (7 females; age: 20–37 years, mean = 25.7) participated in the study. Participants passively viewed 640 (2 × 320) picture presentations of the bar stimuli and all further experimental parameters were identical to the main study. Continuous EEG was recorded using 256-channel HydroCel Geodesic Sensor Nets (EGI) and was processed as described for the main study.

Visual inspection of the difference wave (vertical–horizontal) revealed a modulation in the P1 time frame over parieto-central sensors, which preceded the P1 peak (∼110 ms). To statistically assess this effect and to test for its topographical accordance with the visual P1, the same approach was chosen as for the P1 analysis in the main study. Specifically, the time interval 72–92 ms post-stimulus was analyzed in two lateralized parieto-occipital sensor clusters, which were centered on the peak of the visual P1 (Figure 4), and in a centro-parietal sensor cluster, which was positioned at sensor sites showing the most pronounced effects of stimulus orientation (Figure 4). The extracted data were entered into a two-factorial ANOVA with repeated measurement on the factors Orientation and Cluster.

RESULTS

SAM-ratings

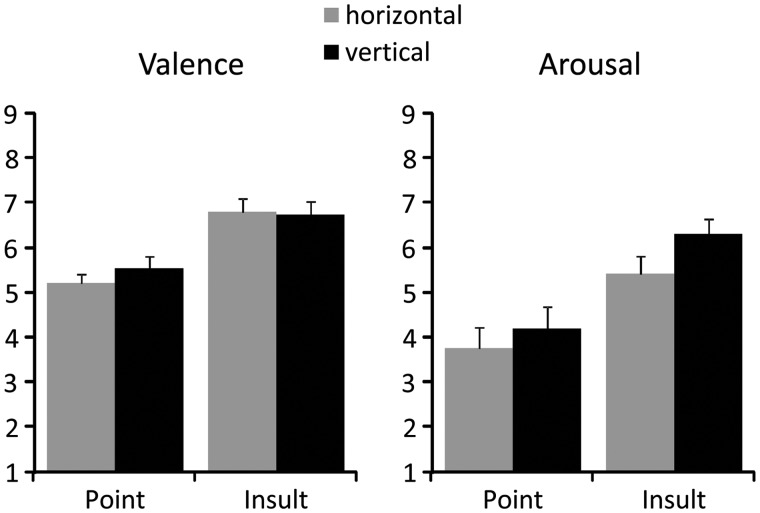

Participants perceived the Insult as more unpleasant than the Point gestures, regardless of stimulus orientation (Gesture: F(1,19) = 21.2, P < 0.001; Point: mean = 4.63, SEM = 0.2; Insult: mean = 3.23, SEM = 0.23; Figure 5). Similarly, the Insult gestures were also rated as more arousing (Gesture: F(1,19) = 21.9, P < 0.001; Point: mean = 3.98, SEM = 0.43; Insult: mean = 5.86, SEM = 0.32; Figure 5). In addition, the vertical gestures were also perceived as slightly more arousing than the horizontal stimuli (Orientation: F(1,19) = 5.1, P < 0.05; vertical: mean = 5.25, SEM = 0.35; horizontal: mean = 4.59, SEM = 0.35; Figure 5).

Fig. 5.

Subjective self-report. Mean valence and arousal ratings as a function of gesture type and stimulus orientation.

Event-related potentials

P1 modulation

A first modulation of the scalp potential emerged around 80–120 ms post-stimulus. Visual inspection of the Global Power (Figure 2) suggested significant effects of both experimental factors in this time frame. However, the two main effects seemed to differ regarding their scalp topography (Figure 3C). Although effects of gesture type closely mirrored the appearance of the visual P1 (Figure 3B), effects of stimulus orientation appeared maximally pronounced at more superiorly and centrally located parietal sensor positions. To capture these differences, the ERP was scored in three sensor clusters in the time window 100–120 ms and submitted to a three-factorial repeated measures ANOVA (Gesture: Point vs Insult; Orientation: vertical vs horizontal and Cluster: left parieto-occipital vs right parieto-occipital vs centro-parietal). Interactions of Gesture by Cluster (F(2,38) = 5.9, P = 0.01) and Orientation by Cluster (F(2,38) = 5.3, P = 0.01) confirmed the observations suggested by visual inspection. These interactions were followed up by reduced model ANOVAs for each of the three clusters, incorporating the factors Gesture and Orientation, respectively.

Main effects of Gesture were found in all three sensor clusters, albeit most pronounced over left and right parieto-occipital (P1 centered) sensor clusters (left: F(1,19) = 11.9, P < 0.01; Point: mean = 1.99, SEM = 0.35; Insult: mean = 2.32, SEM = 0.42; right: F(1,19) = 22.2, P < 0.001; Point: mean = 2.22, SEM = 0.28; Insult: mean = 2.63, SEM = 0.34; central: F(1,19) = 5.6, P < 0.05; Point: mean = 0.94, SEM = 0.31; Insult: mean = 1.15, SEM = 0.35).

A somewhat different picture emerged for the main effect of Orientation, which was also significant in all sensor clusters but maximally pronounced for the parieto-central cluster (left: F(1, 19) = 28.0, P < 0.001; vertical: mean = 2.62, SEM = 0.42; horizontal: mean = 1.68, SEM = 0.37; right: F(1, 19) = 16.7, P < 0.001; vertical: mean = 2.78, SEM = 0.33; horizontal: mean = 2.06, SEM = 0.31; central: F(1,19) = 54.7, P < 0.001; vertical: mean = 1.66, SEM = 0.33; horizontal: mean = 0.43, SEM = 0.35).

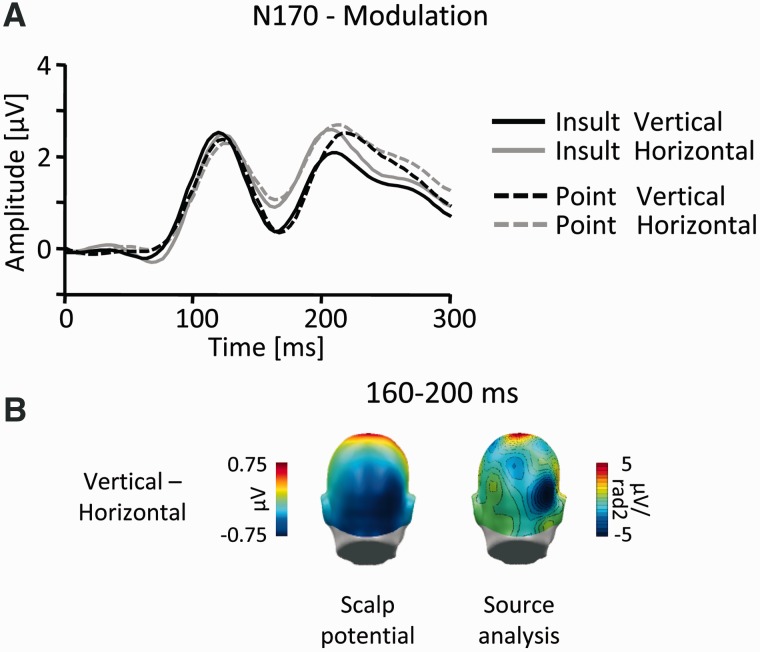

N170 modulation

The next modulation emerged around 150–210 ms post-stimulus as a main effect of stimulus orientation (Figure 2). To assess this modulation in detail, the N170 was scored in the time window 160–200 ms in two lateralized occipito-temporal sensor clusters, and the data were submitted to a three-factor repeated measures ANOVA (Gesture: Point vs Insult; Orientation: vertical vs horizontal and Cluster: left vs right). The results revealed a highly significant main effect for Orientation (F(1,19) = 53.8, P < 0.001; vertical: mean = 0.45, SEM = 0.43; horizontal: mean = 0.99, SEM = 0.43), indicating an increased negative-going deflection of the ERP to vertically oriented gestures (Figure 6).

Fig. 6.

N170 component. (A) Illustration of the ERP waveforms for a right temporo-occipital sensor (EGI #159; Figure 4). (B) Scalp difference maps (vertical–horizontal, left) and according source analysis (right) in the interval 160–200 ms.

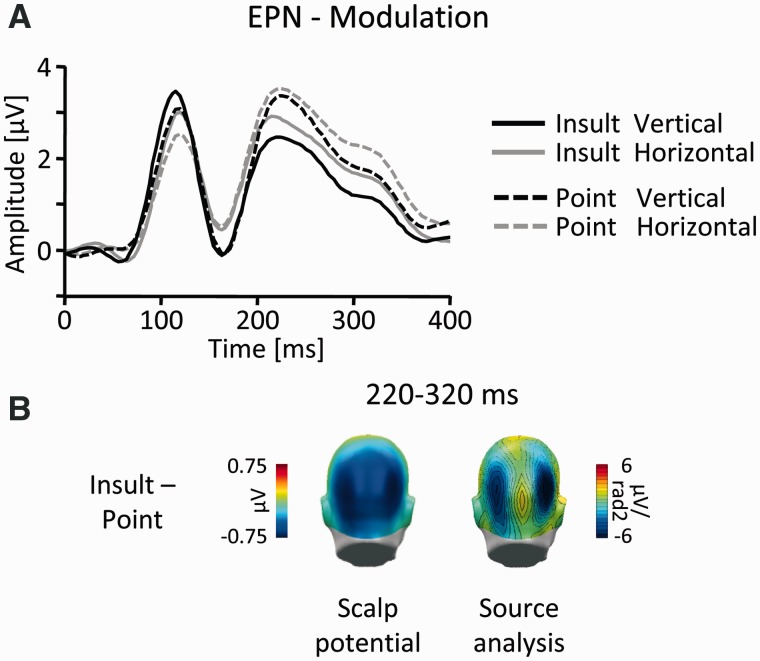

EPN modulation

Further down the temporal cascade, the Global Power yielded a third modulation over posterior leads (Figures 2 and 7B), beginning around 200 ms and lasting until around 350 ms post-stimulus. This effect was captured in a time window from 220 to 320 ms in two lateralized parieto-occipital sensor clusters with a slightly more superior localization. Again, the data were submitted to a three-way ANOVA (Gesture: Point vs Insult; Orientation: vertical vs horizontal and Cluster: left vs right). A highly significant main effect of Gesture (F(1,19) = 45.6, P < 0.001; Point: mean = 2.15, SEM = 0.36; Insult: mean = 1.62, SEM = 0.37) indicated that the modulation was exclusively due to more negative amplitudes to Insult gestures (Figure 7).

Fig. 7.

EPN component. (A) Illustration of the ERP waveforms for a right temporo-occipital sensor (EGI #161; Figure 4). (B) Scalp difference maps (Insult–Point, left) and according source analysis (right) in the interval 220–320 ms.

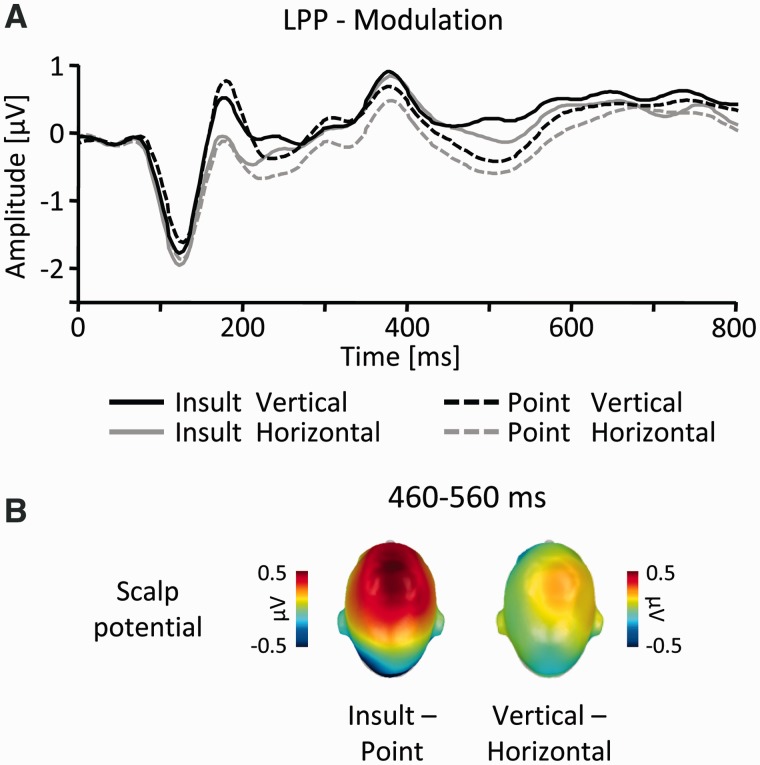

LPP modulation

A final modulation emerged around 440–580 ms and was located over fronto-central regions (Figures 2 and 8B). Accordingly, the ERP was assessed in two lateralized fronto-central sensor clusters in the time window 460–560 ms. The three-way ANOVA (Gesture: Point vs Insult; Orientation: vertical vs horizontal and Cluster: left vs right) revealed a significant higher order interaction between Gesture, Orientation and Cluster (F(1,19) = 6.9, P < 0.05). This was followed up by reduced-model ANOVAs for each lateralized cluster, incorporating the factors Gesture and Orientation, respectively. Significant main effects of Gesture (F’s(1,19) > 20.3, P’s < 0.001) were observed for left (Point: mean = –0.36, SEM = 0.18 and Insult: mean = 0.02, SEM = 0.19) and right (Point: mean = –0.09, SEM = 0.18 and Insult: mean = 0.32, SEM = 0.21) sensor clusters, indicating an increased positivity for Insult as compared with Point gestures. In contrast, an effect of Orientation (F(1, 19) = 7.3, P < 0.05; vertical: mean = 0.2, SEM = 0.2; horizontal: mean = 0.03, SEM = 0.19) was specific to right sensor sites, with vertical compared with horizontal gestures eliciting a slightly increased positive deflection (Figure 8).

Fig. 8.

LPP component. (A) Illustration of the ERP waveforms for a right fronto-central sensor (EGI #186; Figure 4). (B) Scalp difference maps (Insult–Point, left; vertical–horizontal, right) in the interval 460–560 ms.

Control experiment

Visual inspection revealed an early difference between vertical and horizontal bar stimuli around 60–100 ms post-stimulus over centro-parietal leads, which preceded the P1 peak by ∼30–40 ms. This effect was scored in the time window 72–92 ms in three sensor clusters (Figure 4) and submitted to a repeated-measures ANOVA (Orientation: vertical vs horizontal and Cluster: left parieto-occipital vs right parieto-occipital vs centro-parietal). A significant interaction of Orientation by Cluster (F(2,22) = 7.3, P < 0.01) confirmed that vertical bars elicited increased amplitudes over parieto-central leads (F(1,11) = 10.6, P < 0.01; vertical: mean = 0.14, SEM = 0.10; horizontal: mean = –0.27, SEM = 0.13) but not over P1-centered left and right sensor clusters (F’s(1,11) < 0.5, ns.; vertical left: mean = –0.13, SEM = 0.18; horizontal left: mean = –0.18, SEM = 0.23; vertical right: mean = 0.00, SEM = 0.22; horizontal right: mean = –0.11, SEM = 0.25). Inspection of the N170, EPN and LPP time frames revealed no further orientation differences.

DISCUSSION

This study aimed to dissociate the processing differences of emotional gestures according to simple physical features and effects due to the semantic content of the stimuli. Varying the orientation of emotional and neutral hand gestures allowed for the manipulation of a fundamental sensory attribute while largely preserving the inherent semantic meaning of the stimuli. Consistent with the notion of hierarchical visual processing (Grill-Spector and Malach, 2004), results demonstrate physical processing differences predominantly during early stages of visual perception, while semantic content gained increasing ascendancy over relatively later processing stages. Interestingly, the impact of semantic content was not exclusively restricted to later stages, commonly assumed to reflect elaborate stimulus processing, but was also distinctly apparent at the early P1 and EPN components. These results provide evidence that the brain processes physical and semantic stimulus attributes in parallel and that emotional meaning may be detected at relatively early stages of visual perception.

The first differentiation between the experimental categories emerged around the P1 peak (80–120 ms) over parieto-occipital sensors, appearing as relatively augmented amplitudes to vertical stimuli on the one hand and Insult gestures on the other hand. However, while the modulation due to gesture type appeared to act directly on the perceptual processes reflected by the visual P1, effects of stimulus orientation appeared as differential ERP activity superimposing the P1 (Luck, 2005). This interpretation is supported by the results of the control experiment, which also showed increased amplitudes in the P1 time frame to vertical stimuli over centro-parietal but not over P1-centered parieto-occipital sensor clusters. In the control experiment, effects of stimulus orientation were also characterized by a relatively earlier onset. Interestingly, analyzing an even earlier time frame in the main study (i.e. 80–100 ms) revealed very comparable orientation effects but no hint of modulation due to gesture type. Thus, it seems likely that the orientation effect observed in the two experiments reflects purely physically driven low-level perceptual processing in early visual cortical areas. As such, this observation is reminiscent of studies comparing vertical to horizontal spatial gratings (Kenemans et al., 1993, 1995). Interestingly, another study found that mental rotation elicited increased neural activity in dorsal visual pathways located in the superior parietal lobe (Gauthier et al., 2002). In contrast, the P1 modulation, due to gesture type, may possibly reflect an early semantic differentiation of physically simple stimuli based on coarse stimulus features. Although it may not conclusively be ruled out that minor physical differences other than stimulus orientation may account for these findings, several studies speak to the brain’s ability to distinguish between stimuli at this early stage. 
Consistent with the assumption of motivationally guided attention allocation to Insult gestures, enhanced P1 amplitudes have been observed repeatedly in classical spatial attention research for attended when compared with unattended stimuli (for an overview see Luck et al., 2000). More importantly, it has been shown that the P1 is affected by object-based attention (Taylor, 2002), and a recent study reported that the P1 is selectively modulated by relatively complex visual stimuli depicting fearful, disgusting and neutral contents (Krusemark and Li, 2011). These observations are also highly consistent with other results showing emotionally augmented P1 amplitudes over lateral occipital regions (Pourtois et al., 2004, 2005). Finally, in the study by Gauthier et al. (2002), object recognition was associated with activations along ventral visual areas but was not affected by mental rotation suggesting different mechanisms underlying the resolution of geometric deviance on the one hand and object perception on the other hand. In sum, the present effects in the P1 time frame are consistent with the notion of parallel and distinct processing mechanisms subserving mental rotation and visual orientation on the one hand and early emotion recognition on the other hand.

Subsequently, a second ERP modulation over temporo-occipital leads was revealed with a latency around 160–200 ms post-stimulus. This difference appeared as a more negative-going ERP deflection to vertical when compared with horizontal stimuli. Time course and localization of this effect are reminiscent of the face-processing-related N170. Although the N170 is widely regarded as an indicator of face-specific categorical processing (see e.g. Bentin et al., 1996; Itier and Taylor, 2004), recent studies have reported a very similar component related to the processing of bodies and body parts (Stekelenburg and De Gelder, 2004; Meeren et al., 2005; Thierry et al., 2006; Minnebusch and Daum, 2009; De Gelder et al., 2010). Notably, only hand gestures, but not meaningless bars, elicited a marked N170 difference as a function of stimulus orientation, suggesting the structural encoding of body parts. However, the N170 component was not affected by emotional meaning. Although mixed results have been reported regarding the emotional modulation of the N170 for both facial and body part stimuli (De Gelder et al., 2010), the present results may relate to a study directly comparing emotional and neutral face and body expressions (Stekelenburg and De Gelder, 2004). Although emotional modulations of the N170 to face stimuli were readily apparent in that study, no such effect was found for the body-related N170. The finding that the N170 was strongly influenced by stimulus orientation may in turn relate to studies examining mental rotation of hand stimuli (Thayer et al., 2001; Thayer and Johnson, 2006). In these studies, increased ERP negativities with very similar time course and topography to the present N170 were found when processing rotated hands. Interestingly, the same studies also reported rotation effects on the earlier P1. Taken together, the present N170 effects are consistent with the notion of physically driven processing differences during the encoding of body parts in higher order visual regions.

A further ERP difference emerged as an EPN for emotional when compared with neutral gestures around 200–360 ms post-stimulus. This effect closely resembles emotion modulations as reported in previous studies using gestures (Flaisch et al., 2009, 2011) but also in studies using physically and semantically different stimulus materials including naturalistic scenes (Schupp et al., 2007; Flaisch et al., 2008a), facial expressions (Schupp et al., 2004b; Leppänen et al., 2007) and written words (Kissler et al., 2007, 2009; Herbert et al., 2008). However, comparing images with simple figure-ground configuration to complex scenes, another study reported very comparable effects due to picture composition (Bradley et al., 2007). In this regard, the present EPN findings provide new evidence supporting the notion of semantic differentiation at the level of the EPN. Utilizing stimuli with very simple basic physical configuration on the one hand and very high perceptual similarity on the other hand enables the effects of emotion selection to be dissociated from purely physically driven ERP differences. This notion is strengthened not only by the null effect of stimulus orientation on the EPN but, even more so, by the finding that the applied physical manipulation indeed had a profound impact on the ERP, i.e. on the P1 and the N170 components. This dissociates physical from semantic processing along the time course of stimulus perception. In this respect, these data are consistent with explicit attention studies showing that simple and complex target stimuli are differentiated as early as 150 ms post-stimulus (Thorpe et al., 1996; Smid et al., 1999; Codispoti et al., 2006). In sum, these data suggest that the EPN, as observed for a variety of stimulus categories, likely reflects stimulus selection based on the emotional meaning of the stimuli.

The latest modulations emerged in the time frame 440–580 ms post-stimulus as increased positivity over fronto-central sensors, as a function of both emotional meaning and stimulus orientation. The appearance of this effect is reminiscent of the LPP, a component typically found across a variety of visual stimulus materials when comparing emotional to neutral stimuli (Schupp et al., 2004b; Leppänen et al., 2007; Flaisch et al., 2008b; Herbert et al., 2008). The LPP has been related to elaborate stimulus processing and semantic evaluation (Schupp et al., 2006) and fits into the overall scheme of P300-like modulations, which assumedly index stimulus discrimination and resource allocation at a post-perceptual processing stage (Johnson, 1988; Schupp et al., 2006; Bradley et al., 2007). Thus, these results may indicate the selection and increased allocation of attention to emotionally charged Insult gestures. The effect of stimulus orientation at this stage may seem puzzling, although it was comparatively small and showed a distinct right-lateralized topographical distribution. However, the self-report data and the absence of any LPP-like modulation in the control experiment hint at the possibility of an emotional differentiation even between vertical and horizontal gestures. Specifically, vertical gestures were rated as more arousing than the horizontal ones, suggesting that vertical gestures may have a higher inherent significance, possibly reflected by the present orientation effect on the LPP. Consistent with this notion, signalling and warning hand signs intended to catch other people’s attention typically involve raising the arm above one’s head with an upright gesture. Taken together, the observed LPP effects are consistent with the notion of stimulus selection and the elaborate processing of emotionally significant visual stimuli.

The aims of this study were twofold: (i) isolating physical from semantic processing differences using a set of highly comparable emotional stimuli under preservation of their natural appearance and (ii) tracing potential effects of stimulus physics and semantics across the sequence of emotion perception as typically observed in passive viewing experimental contexts. The results show dissociable effects of stimulus meaning and orientation on all emotion-relevant ERP components. The result pattern suggests a predominance of low-level physical stimulus features in early time windows encompassing the P1 and the N170, while the impact of stimulus meaning markedly surpassed that of orientation on the later EPN and LPP components. Effects of stimulus semantics were observable as early as the P1 and, most importantly, for the EPN. These results suggest that the brain processes different attributes of an emotionally significant picture stimulus in parallel and that a coarse semantic appreciation may already occur during early stages of emotion perception.
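To make the logic of the dissociation concrete, the following is a minimal sketch of how mean amplitudes in the time windows named above (N170: 160–200 ms, EPN: 200–360 ms, LPP: 440–580 ms) could be scored and how the two main effects of a 2 (meaning) × 2 (orientation) design could be read off the four cell means. It is not the analysis pipeline used in this study: the function names, the condition labels, the 4 ms sampling step and the toy waveforms are all illustrative assumptions.

```python
from statistics import fmean

# Time windows in ms post-stimulus, as named in the discussion.
WINDOWS_MS = {"N170": (160, 200), "EPN": (200, 360), "LPP": (440, 580)}


def mean_amplitude(erp, times_ms, window):
    """Average one waveform (list of amplitudes) over an inclusive time window."""
    start, stop = window
    samples = [v for v, t in zip(erp, times_ms) if start <= t <= stop]
    return fmean(samples)


def main_effects(cells):
    """Main effects from the four cell means of the 2 x 2 design.

    `cells` maps (meaning, orientation) to a mean amplitude, using the
    hypothetical labels "insult"/"allusion" and "vertical"/"horizontal".
    """
    meaning = (
        (cells[("insult", "vertical")] + cells[("insult", "horizontal")]) / 2
        - (cells[("allusion", "vertical")] + cells[("allusion", "horizontal")]) / 2
    )
    orientation = (
        (cells[("insult", "vertical")] + cells[("allusion", "vertical")]) / 2
        - (cells[("insult", "horizontal")] + cells[("allusion", "horizontal")]) / 2
    )
    return {"meaning": meaning, "orientation": orientation}


if __name__ == "__main__":
    # Toy data only: samples every 4 ms (an assumed 250 Hz rate), -100 to 696 ms.
    times_ms = list(range(-100, 700, 4))
    lo, hi = WINDOWS_MS["EPN"]

    def toy_waveform(meaning):
        # Flat 1.0 signal; Insult is 0.5 more negative inside the EPN window.
        shift = -0.5 if meaning == "insult" else 0.0
        return [1.0 + (shift if lo <= t <= hi else 0.0) for t in times_ms]

    cells = {
        (m, o): mean_amplitude(toy_waveform(m), times_ms, WINDOWS_MS["EPN"])
        for m in ("insult", "allusion")
        for o in ("vertical", "horizontal")
    }
    print(main_effects(cells))
```

In this toy case only the meaning effect differs from zero in the EPN window, which mirrors the pattern of a semantic effect without an orientation effect; real data would of course require inferential statistics across participants and sensors.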

Acknowledgements

We thank Julia Moser and Anna Kenter for their assistance in data collection.

REFERENCES

- Alorda C, Serrano-Pedraza I, Campos-Bueno JJ, Sierra-Vázquez V, Montoya P. Low spatial frequency filtering modulates early brain processing of affective complex pictures. Neuropsychologia. 2007;45:3223–33. doi: 10.1016/j.neuropsychologia.2007.06.017.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. J Cogn Neurosci. 1996;8:551–65. doi: 10.1162/jocn.1996.8.6.551.

- Bradley MM, Hamby S, Löw A, Lang PJ. Brain potentials in perception: picture complexity and emotional arousal. Psychophysiology. 2007;44:364–73. doi: 10.1111/j.1469-8986.2007.00520.x.

- Bradley MM, Lang PJ. Measuring emotion: the Self-Assessment Manikin and the Semantic Differential. J Behav Ther Exp Psychiatry. 1994;25:49–59. doi: 10.1016/0005-7916(94)90063-9.

- Buck R, van Lear CA. Verbal and nonverbal communication: distinguishing symbolic, spontaneous, and pseudo-spontaneous nonverbal behavior. J Commun. 2002;52:522–41.

- Codispoti M, Ferrari V, Junghöfer M, Schupp HT. The categorization of natural scenes: brain attention networks revealed by dense sensor ERPs. Neuroimage. 2006;32:583–91. doi: 10.1016/j.neuroimage.2006.04.180.

- de Cesarei A, Codispoti M. When does size not matter? Effects of stimulus size on affective modulation. Psychophysiology. 2006;43:207–15. doi: 10.1111/j.1469-8986.2006.00392.x.

- de Cesarei A, Codispoti M. Scene identification and emotional response: which spatial frequencies are critical? J Neurosci. 2011;31:17052–7. doi: 10.1523/JNEUROSCI.3745-11.2011.

- de Cesarei A, Codispoti M, Schupp HT. Peripheral vision and preferential emotion processing. Neuroreport. 2009;20:1439–43. doi: 10.1097/WNR.0b013e3283317d3e.

- de Gelder B, van den Stock J, Meeren HKM, Sinke CBA, Kret ME, Tamietto M. Standing up for the body. Recent progress in uncovering the networks involved in the perception of bodies and bodily expressions. Neurosci Biobehav Rev. 2010;34:513–27. doi: 10.1016/j.neubiorev.2009.10.008.

- Efron D. Gesture, Race and Culture. The Hague: Mouton; 1972/1941.

- Farah MJ, Wilson KD, Drain M, Tanaka JN. What is “special” about face perception? Psychol Rev. 1998;105:482–98. doi: 10.1037/0033-295x.105.3.482.

- Flaisch T, Häcker F, Renner B, Schupp HT. Emotion and the processing of symbolic gestures: an event-related brain potential study. Soc Cogn Affect Neurosci. 2011;6:109–18. doi: 10.1093/scan/nsq022.

- Flaisch T, Junghöfer M, Bradley MM, Schupp HT, Lang PJ. Rapid picture processing: affective primes and targets. Psychophysiology. 2008a;45:1–10. doi: 10.1111/j.1469-8986.2007.00600.x.

- Flaisch T, Schupp HT, Renner B, Junghöfer M. Neural systems of visual attention responding to emotional gestures. Neuroimage. 2009;45:1339–46. doi: 10.1016/j.neuroimage.2008.12.073.

- Flaisch T, Stockburger J, Schupp HT. Affective prime and target picture processing: an ERP analysis of early and late interference effects. Brain Topogr. 2008b;20:183–91. doi: 10.1007/s10548-008-0045-6.

- Frühholz S, Jellinghaus A, Herrmann M. Time course of implicit processing and explicit processing of emotional faces and emotional words. Biol Psychol. 2011;87:265–74. doi: 10.1016/j.biopsycho.2011.03.008.

- Gauthier I, Hayward WG, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. BOLD activity during mental rotation and viewpoint-dependent object recognition. Neuron. 2002;34:161–71. doi: 10.1016/s0896-6273(02)00622-0.

- Grill-Spector K, Malach R. The human visual cortex. Annu Rev Neurosci. 2004;27:649–77. doi: 10.1146/annurev.neuro.27.070203.144220.

- Herbert C, Junghöfer M, Kissler J. Event related potentials to emotional adjectives during reading. Psychophysiology. 2008;45:487–98. doi: 10.1111/j.1469-8986.2007.00638.x.

- Holmes A, Winston JS, Eimer M. The role of spatial frequency information for ERP components sensitive to faces and emotional facial expression. Brain Res Cogn Brain Res. 2005;25:508–20. doi: 10.1016/j.cogbrainres.2005.08.003.

- Itier RJ, Taylor MJ. N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb Cortex. 2004;14:132–42. doi: 10.1093/cercor/bhg111.

- Johnson R., Jr. The amplitude of the P300 component of the event-related potential: review and synthesis. In: Ackles PK, Jennings JR, Coles MGH, editors. Advances in Psychophysiology. Vol. 3. Greenwich, CT: JAI Press; 1988. pp. 69–138.

- Junghöfer M, Elbert T, Leiderer P, Berg P, Rockstroh B. Mapping EEG-potentials on the surface of the brain: a strategy for uncovering cortical sources. Brain Topogr. 1997;9:203–17. doi: 10.1007/BF01190389.

- Junghöfer M, Elbert T, Tucker DM, Rockstroh B. Statistical control of artifacts in dense array EEG/MEG studies. Psychophysiology. 2000;37:523–32.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–11. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kenemans JL, Kok A, Smulders FT. Event-related potentials to conjunctions of spatial frequency and orientation as a function of stimulus parameters and response requirements. Electroencephalogr Clin Neurophysiol. 1993;88:51–63. doi: 10.1016/0168-5597(93)90028-n.

- Kenemans JL, Smulders FT, Kok A. Selective processing of two-dimensional visual stimuli in young and old subjects: electrophysiological analysis. Psychophysiology. 1995;32:108–20. doi: 10.1111/j.1469-8986.1995.tb03302.x.

- Kissler J, Herbert C, Peyk P, Junghöfer M. Buzzwords: early cortical responses to emotional words during reading. Psychol Sci. 2007;18:475–80. doi: 10.1111/j.1467-9280.2007.01924.x.

- Kissler J, Herbert C, Winkler I, Junghöfer M. Emotion and attention in visual word processing: an ERP study. Biol Psychol. 2009;80:75–83. doi: 10.1016/j.biopsycho.2008.03.004.

- Kok A. On the utility of P3 amplitude as a measure of processing capacity. Psychophysiology. 2001;38:557–77. doi: 10.1017/s0048577201990559.

- Krusemark EA, Li W. Do all threats work the same way? Divergent effects of fear and disgust on sensory perception and attention. J Neurosci. 2011;31:3429–34. doi: 10.1523/JNEUROSCI.4394-10.2011.

- Lang PJ, Bradley MM, Cuthbert BN. Motivated attention: affect, activation, and action. In: Lang PJ, Simons RF, Balaban M, editors. Attention and Orienting: Sensory and Motivational Processes. Mahwah, NJ: Erlbaum; 1997. pp. 97–135.

- Leppänen JM, Kauppinen P, Peltola MJ, Hietanen JK. Differential electrocortical responses to increasing intensities of fearful and happy emotional expressions. Brain Res. 2007;1166:103–9. doi: 10.1016/j.brainres.2007.06.060.

- Luck S. Ten simple rules for designing and interpreting ERP experiments. In: Handy TC, editor. Event-Related Potentials. A Methods Handbook. Cambridge, MA: MIT Press; 2005. pp. 17–32.

- Luck SJ, Woodman GF, Vogel EK. Event-related potential studies of attention. Trends Cogn Sci. 2000;4:432–40. doi: 10.1016/s1364-6613(00)01545-x.

- Meeren HKM, van Heijnsbergen CCRJ, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proc Natl Acad Sci U S A. 2005;102:16518–23. doi: 10.1073/pnas.0507650102.

- Minnebusch DA, Daum I. Neuropsychological mechanisms of visual face and body perception. Neurosci Biobehav Rev. 2009;33:1133–44. doi: 10.1016/j.neubiorev.2009.05.008.

- Morris D. Gestures: Their Origins and Distribution. London: J. Cape; 1979.

- Morris D. Bodytalk: The Meaning of Human Gestures. New York, NY: Crown Publishers; 1994.

- Mühlberger A, Wieser MJ, Herrmann MJ, Weyers P, Tröger C, Pauli P. Early cortical processing of natural and artificial emotional faces differs between lower and higher socially anxious persons. J Neural Transm. 2009;116:735–46. doi: 10.1007/s00702-008-0108-6.

- Öhman A, Flykt A, Lundqvist D. Unconscious emotion: evolutionary perspectives, psychophysiological data and neuropsychological mechanisms. In: Lane RD, Nadel L, Ahern G, editors. Cognitive Neuroscience of Emotion. New York, NY: Oxford University Press; 2000. pp. 296–327.

- Peyk P, De Cesarei A, Junghöfer M. ElectroMagnetoEncephalography software: overview and integration with other EEG/MEG toolboxes. Comput Intell Neurosci. 2011;2011:861705. doi: 10.1155/2011/861705.

- Pourtois G, Dan ES, Grandjean D, Sander D, Vuilleumier P. Enhanced extrastriate visual response to bandpass spatial frequency filtered fearful faces: time course and topographic evoked-potentials mapping. Hum Brain Mapp. 2005;26:65–79. doi: 10.1002/hbm.20130.

- Pourtois G, Grandjean D, Sander D, Vuilleumier P. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cereb Cortex. 2004;14:619–33. doi: 10.1093/cercor/bhh023.

- Sabbagh MA, Taylor M. Neural correlates of theory-of-mind reasoning: an event-related potential study. Psychol Sci. 2000;11:46–50. doi: 10.1111/1467-9280.00213.

- Schupp HT, Cuthbert BN, Bradley MM, Hillman CH, Hamm AO, Lang PJ. Brain processes in emotional perception: motivated attention. Cogn Emot. 2004a;18:593–611.

- Schupp HT, Flaisch T, Stockburger J, Junghöfer M. Emotion and attention: event-related brain potential studies. Prog Brain Res. 2006;156:31–51. doi: 10.1016/S0079-6123(06)56002-9.

- Schupp HT, Öhman A, Junghöfer M, Weike AI, Stockburger J, Hamm AO. The facilitated processing of threatening faces: an ERP analysis. Emotion. 2004b;4:189–200. doi: 10.1037/1528-3542.4.2.189.

- Schupp HT, Stockburger J, Codispoti M, Junghöfer M, Weike AI, Hamm AO. Selective visual attention to emotion. J Neurosci. 2007;27:1082–9. doi: 10.1523/JNEUROSCI.3223-06.2007.

- Schupp HT, Stockburger J, Schmälzle R, Bublatzky F, Weike AI, Hamm AO. Visual noise effects on emotion perception: brain potentials and stimulus identification. Neuroreport. 2008;19:167–71. doi: 10.1097/WNR.0b013e3282f4aa42.

- Smid HG, Jakob A, Heinze HJ. An event-related brain potential study of visual selective attention to conjunctions of color and shape. Psychophysiology. 1999;36:264–79. doi: 10.1017/s0048577299971135.

- Stekelenburg JJ, de Gelder B. The neural correlates of perceiving human bodies: an ERP study on the body-inversion effect. Neuroreport. 2004;15:777–80. doi: 10.1097/00001756-200404090-00007.

- Taylor MJ. Non-spatial attentional effects on P1. Clin Neurophysiol. 2002;113:1903–8. doi: 10.1016/s1388-2457(02)00309-7.

- Thayer ZC, Johnson BW. Cerebral processes during visuo-motor imagery of hands. Psychophysiology. 2006;43:401–12. doi: 10.1111/j.1469-8986.2006.00404.x.

- Thayer ZC, Johnson BW, Corballis MC, Hamm JP. Perceptual and motor mechanisms for mental rotation of human hands. Neuroreport. 2001;12:3433–7. doi: 10.1097/00001756-200111160-00011.

- Thierry G, Pegna AJ, Dodds C, Roberts M, Basan S, Downing P. An event-related potential component sensitive to images of the human body. Neuroimage. 2006;32:871–9. doi: 10.1016/j.neuroimage.2006.03.060.

- Thorpe S, Fize D, Marlot C. Speed of processing in the human visual system. Nature. 1996;381:520–2. doi: 10.1038/381520a0.

- van Heijnsbergen CCRJ, Meeren HKM, Grèzes J, de Gelder B. Rapid detection of fear in body expressions, an ERP study. Brain Res. 2007;1186:233–41. doi: 10.1016/j.brainres.2007.09.093.

- Vlamings PHJM, Goffaux V, Kemner C. Is the early modulation of brain activity by fearful facial expressions primarily mediated by coarse low spatial frequency information? J Vis. 2009;9(12):1–13. doi: 10.1167/9.5.12.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat Neurosci. 2003;6:624–31. doi: 10.1038/nn1057.