Abstract

More than a decade of research has demonstrated that faces evoke prioritized processing in a ‘core face network’ of three brain regions. However, whether these regions prioritize the detection of global facial form (shared by humans and mannequins) or the detection of life in a face has remained unclear. Here, we dissociate form-based and animacy-based encoding of faces by using animate and inanimate faces with human form (humans, mannequins) and dog form (real dogs, toy dogs). We used multivariate pattern analysis of BOLD responses to uncover the representational similarity space for each area in the core face network. Here, we show that only responses in the inferior occipital gyrus are organized by global facial form alone (human vs dog) while animacy becomes an additional organizational priority in later face-processing regions: the lateral fusiform gyri (latFG) and right superior temporal sulcus. Additionally, patterns evoked by human faces were maximally distinct from all other face categories in the latFG and parts of the extended face perception system. These results suggest that once a face configuration is perceived, faces are further scrutinized for whether the face is alive and worthy of social cognitive resources.

Keywords: face perception, social cognition, mind perception, MVPA

INTRODUCTION

At one level of analysis, a face is simply a visual object; a pattern of light cast across the retina that can be described in terms of lines, colors and textures. Yet, at some point, these visual features give rise to the recognition that the face belongs to another living being, a minded agent with the potential for thoughts, feelings and actions. For this reason, faces are exceptionally salient. Although much is known about the brain regions activated when viewing a face, how the brain determines that a face is an emblem of a mind is less well understood.

Here we propose that the perception of animacy is the crucial step between viewing a face as a collection of visual features in a particular configuration and viewing a face as a social entity. By animacy we mean being alive, with the capacity for self-propelled motion. Converging evidence suggests that animacy, defined in this way, is a fundamental conceptual categorization schema based on domain-specific neural mechanisms (Caramazza and Shelton, 1998). The ability to discriminate animate agents from inanimate objects develops early (Gelman and Spelke, 1981; Legerstee, 1992; Rakison and Poulin-Dubois, 2001) and is one of the most robust conceptual discriminations to survive in patients with semantic dementia (Hodges et al., 1995). Furthermore, healthy volunteers are highly sensitive to static visual cues that convey animacy in faces (Looser and Wheatley, 2010).

Neuroimaging and single-cell recording in non-human primates have indicated that there are several face-sensitive patches of cortex in each individual’s brain (Tsao et al., 2008), yet three regions are consistently and robustly activated across subjects. This network has been characterized as the ‘core face perception system’ and comprises three areas: the inferior occipital gyrus (IOG), posterior superior temporal sulcus (pSTS) and the lateral aspect of the mid-fusiform gyrus (latFG) (Haxby et al., 2000). The conceptualization of this core face perception system arose from multiple findings comparing neural activity when viewing human faces to activity when viewing non-face stimuli [e.g. chairs, tools (e.g. Haxby et al., 2001); scrambled faces (e.g. Puce et al., 1995) and houses (e.g. Kanwisher et al., 1997)]. However, human faces differ from non-face stimuli in at least two ways: animacy (faces are living while non-face objects are not) and global form (faces have particular features arranged in a particular configuration that is distinct from non-face objects). Thus, increased activation to human faces vs non-faces cannot determine whether observed activations reflect the processing of global form (‘does it look like a face?’), the processing of animacy (‘does it look alive?’) or both. A number of findings suggest that it may not be only shape-based form, because cues to animacy activate regions coincident with human face processing even in the absence of human faces [e.g. biological motion (Grossman and Blake, 2002); animal faces (Tong et al., 2000); non-face animations (Castelli et al., 2000; Gobbini et al., 2007); robot faces (Gobbini et al., 2011)]. Further, the inference of animacy activates regions within the face perception system even when form and motion cues are held constant (Wheatley et al., 2007), suggesting that face-processing regions may be broadly tuned to animacy cues that include, but are not limited to, facial form.

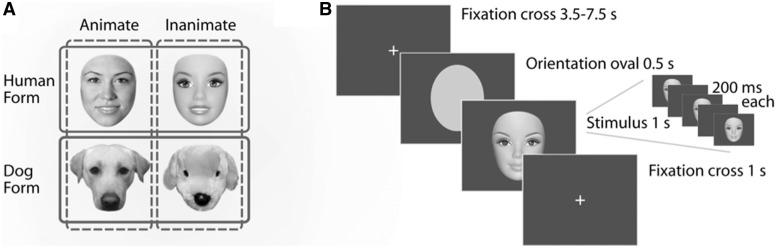

The present study manipulated global form and animacy orthogonally to determine if regions of the core face network represent faces based on their overall configuration, as markers of living agents or both. We recorded BOLD activity as 30 subjects viewed images of living faces (humans and dogs) and life-like faces (dolls and toy dogs). Although all categories have subtle differences in their visual features, these categories can be grouped by animacy or by form, based on their overall structure (Figure 1a). Although the nodes of the face network responded strongly to all face images, multivariate pattern analyses revealed distinct similarity structures within each region of interest (ROI).

Fig. 1.

Stimuli and paradigm. (A) Examples of the four stimulus categories. Solid line indicates similarity by form; dashed line indicates similarity by animacy. (B) Each trial consisted of an orientation oval followed by a single image flashed three times at 200 ms intervals. Trials were separated by variable (4.5–8.5 s) periods of fixation.

METHODS

All 30 participants (17 females and 13 males) were right-handed, with normal or corrected to normal vision and no history of neurological illness. Participants received partial course credit or were financially compensated. Informed consent was obtained for each participant in accordance with procedures approved by the Committee for the Protection of Human Subjects at Dartmouth College.

Stimuli

Stimuli were five grayscale exemplars from each of the following categories: human faces, doll faces, dog faces, toy dog faces and clocks (Figure 1a). Images were cropped to exclude all information outside of the face, resized to the same height (500 pixels) and placed on a medium gray background. All categories were matched for mean luminance.

Scanning procedure

Over the course of 10 runs, a 3.0-T Philips fMRI scanner acquired 1250 BOLD responses (TR = 2 s) from each participant. Each trial consisted of an orientation oval to signal the start of a trial, followed by a ‘stimulus triplet’ consisting of a single image flashed three times at 200 ms intervals (Figure 1b). To ensure attention to all trials, each run also contained five probe trials (one from each category) in which the third image of the triplet did not match the first two. Participants were instructed to press a button each time this non-match occurred. These trials were defined as a condition of no interest and were not analyzed. All trials were separated by variable (4.5–8.5 s) periods of fixation with a low-level attention task in which participants were instructed to press a button each time the fixation cross changed color.

ANALYSIS

fMRI preprocessing

Three subjects were excluded because of malfunctions with the stimulus presentation software. For the remaining 27 subjects, functional and anatomical images were pre-processed and analyzed using AFNI (Cox, 1996). As the slices of each volume were not acquired simultaneously, a timing correction procedure was used. All volumes were motion corrected to align to the functional volume acquired closest to the anatomical image. Transient spikes in the signal were suppressed with the AFNI program 3dDespike and head motion was included as a regressor to account for signal changes due to motion artifact. Data were normalized to the standardized space of Talairach and Tournoux (1988), and smoothed with a 4-mm full width at half maximum (FWHM) Gaussian smoothing kernel.

Regions of interest selection

In order to select ROIs that were not biased toward any particular face category, we avoided a traditional face localizer (which would have selected voxels responsive only to human faces) and instead included voxels that were more active for faces than clocks in at least one of the following four comparisons: human > clock, doll > clock, real dog > clock, and toy dog > clock. Because a traditional face localizer was not used, we avoided labels associated with traditional face localizers (occipital face area, OFA; fusiform face area, FFA; and face superior temporal sulcus, fSTS) and instead used the anatomical nomenclature of inferior occipital gyrus (IOG), lateral fusiform gyrus (latFG) and posterior superior temporal sulcus (pSTS). The union analysis was performed at the group level and warped into individual space for each subject to provide consistent ROIs across participants (Mahon and Caramazza, 2010). ROIs were thresholded at P < 0.05, FDR corrected for multiple comparisons, except for pSTS, which was thresholded at P < 0.10, FDR corrected for multiple comparisons, in order to have enough voxels to perform the multivariate pattern analysis. A summary of all clusters that survived this face-sensitive union mask is shown in Table 1.

Table 1.

Cluster summary for each ROI

| Region | Hemi | Voxels | Center of mass x | Center of mass y | Center of mass z | Extent min. x | Extent max. x | Extent min. y | Extent max. y | Extent min. z | Extent max. z |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Inf. frontal | R | 568 | −45.8 | −20.5 | 20.3 | −61.5 | −28.5 | −43.5 | 1.5 | −3.5 | 38.5 |
| Sup. frontal | | 224 | −1.2 | −9 | 49.8 | −13.5 | 10.5 | −28.5 | 16.5 | 35.5 | 56.5 |
| Precentral gyrus | L | 201 | 36.1 | 22.6 | 54.1 | 22.5 | 52.5 | 13.5 | 34.5 | 44.5 | 65.5 |
| Fusiform gyrus | L | 51 | 38.3 | 45.9 | −16.7 | 34.5 | 43.5 | 37.5 | 52.5 | −21.5 | −12.5 |
| | R | 121 | −36.9 | 43.3 | −19.4 | −46.5 | −22.5 | 31.5 | 55.5 | −27.5 | −12.5 |
| Insula | L | 162 | 29.2 | −17.7 | 5.2 | 22.5 | 40.5 | −28.5 | −1.5 | −6.5 | 17.5 |
| | R | 224 | −37.4 | −19.7 | 3.2 | −49.5 | −25.5 | −28.5 | −7.5 | −9.5 | 11.5 |
| Sup. temp. sulcus | R | 56 | −49.4 | 40.7 | 9.1 | −64.5 | −40.5 | 31.5 | 52.5 | 5.5 | 14.5 |
| Inf. occip. gyrus | R | 96 | −43.4 | 67.9 | −3.2 | −49.5 | −37.5 | 61.5 | 76.5 | −12.5 | 5.5 |
| Amygdala | L | 52 | 15.7 | 4.5 | −7.7 | 7.5 | 22.5 | −1.5 | 10.5 | −12.5 | −0.5 |
| | R | 99 | −20.7 | 2.3 | −9.4 | −28.5 | −10.5 | −10.5 | 13.5 | −15.5 | −0.5 |
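The union rule used to define the face-sensitive mask can be illustrated with a short sketch. This is not the AFNI pipeline used in the study: the function name `face_sensitive_union`, the array layout and the toy t- and q-values are all assumptions made for the example.

```python
import numpy as np

def face_sensitive_union(t_maps, q_maps, q_threshold=0.05):
    """Boolean mask of voxels that pass at least one face > clock contrast.

    t_maps, q_maps: arrays of shape (n_contrasts, n_voxels) holding contrast
    t-values and FDR-corrected q-values (hypothetical inputs).
    """
    t_maps = np.asarray(t_maps, dtype=float)
    q_maps = np.asarray(q_maps, dtype=float)
    # A voxel passes a contrast when faces > clocks (t > 0) and q < threshold.
    passes = (t_maps > 0) & (q_maps < q_threshold)
    # Union: keep the voxel if any of the contrasts passes.
    return passes.any(axis=0)

# Toy values: rows are human, doll, real dog and toy dog vs clock; 5 voxels.
t_maps = np.array([
    [3.0, 0.5, -2.5, 0.1, 2.0],
    [0.2, 0.3, -3.0, 0.2, 2.5],
    [0.1, 2.8, -2.0, 0.0, 0.3],
    [0.4, 0.2, -2.2, 0.3, 0.1],
])
q_maps = np.array([
    [0.01, 0.60, 0.02, 0.90, 0.04],
    [0.80, 0.70, 0.01, 0.85, 0.03],
    [0.90, 0.02, 0.04, 0.95, 0.70],
    [0.70, 0.80, 0.03, 0.75, 0.90],
])
mask = face_sensitive_union(t_maps, q_maps)
print(mask.tolist())  # [True, True, False, False, True]
```

In this toy case the third voxel is excluded even though its q-values are small, because its responses favor clocks over faces in every contrast.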

Multivariate pattern analysis

Each individual’s ROIs were interrogated with multivariate pattern analyses to identify the similarity structure of the population responses in each participant. Analyses were performed separately in all ROIs for each of the 27 subjects.

Within each voxel, responses at 6, 8 and 10 s after stimulus presentation were averaged to capture the peak of the hemodynamic response function for each trial. These values were z-scored across all trials of interest, within each individual voxel. All trials for each category were averaged to represent each voxel’s response to each of the four categories. Thus, each voxel contributed one value to each of the four pattern vectors. The response of all voxels in an ROI for a category represented the pattern of activation in that ROI for that category.
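The steps in this paragraph (average the 6, 8 and 10 s samples, z-score each voxel across trials, then average trials within category) can be sketched as follows. This is a minimal illustration on simulated data, not the authors' code; the function names, the epoch layout (sample k acquired k × TR seconds after onset) and the use of the population standard deviation for z-scoring are assumptions.

```python
import numpy as np

def peak_response(epochs, tr=2.0, peak_times=(6.0, 8.0, 10.0)):
    """Average the samples at the given post-stimulus times for every trial.

    epochs: array (n_trials, n_samples, n_voxels); sample k is assumed to be
    acquired k * tr seconds after stimulus onset (hypothetical layout).
    """
    idx = [int(round(t / tr)) for t in peak_times]
    return np.asarray(epochs, dtype=float)[:, idx, :].mean(axis=1)

def category_patterns(trial_values, labels, categories):
    """Z-score each voxel across trials, then average trials per category."""
    values = np.asarray(trial_values, dtype=float)
    z = (values - values.mean(axis=0)) / values.std(axis=0)
    labels = np.asarray(labels)
    # One row per category, one column per voxel.
    return np.stack([z[labels == c].mean(axis=0) for c in categories])

categories = ("human", "doll", "dog", "toydog")
rng = np.random.default_rng(0)
n_per_category, n_samples, n_voxels = 10, 8, 20
labels = np.repeat(categories, n_per_category)
epochs = rng.normal(size=(labels.size, n_samples, n_voxels))  # simulated data

patterns = category_patterns(peak_response(epochs), labels, categories)
print(patterns.shape)  # (4, 20)
```

Each row of `patterns` is one category's pattern vector for the ROI, with one value per voxel.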

These category patterns were correlated with each other and converted into a correlation distance, here defined as 1 − Pearson correlation, resulting in a 4 × 4 dissimilarity matrix for each ROI in each subject. Correlation distance is a useful metric for quantifying the relationship between populations of voxels or neurons and can range from 0 (maximally similar patterns) to 2 (maximally dissimilar patterns). Importantly, using 1 − Pearson correlation as the distance metric ensures that the overall magnitude of activation is orthogonal to the relationship between activation patterns (Haxby et al., 2001; Kriegeskorte et al., 2008). This is because two distributions with very different overall means can be perfectly correlated, while two distributions with identical overall means may not be correlated at all. As the information used to create the ROIs (i.e. mean intensity) is not taken into account when performing the pattern analysis, selecting face-sensitive regions based on magnitude cannot influence the relationship between patterns that exist within these regions. Used together, these approaches can provide complementary insight because the analysis used to define ROIs captures global magnitude while MVPA examines the relationship between normalized patterns.
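The claim that correlation distance ignores overall magnitude can be checked numerically. The sketch below uses simulated patterns and a hypothetical helper, `correlation_distance_matrix`; it rescales and shifts each category pattern and confirms that the 4 × 4 dissimilarity matrix is unchanged.

```python
import numpy as np

def correlation_distance_matrix(patterns):
    """Matrix of 1 - Pearson r between all rows of `patterns`."""
    return 1.0 - np.corrcoef(np.asarray(patterns, dtype=float))

rng = np.random.default_rng(1)
patterns = rng.normal(size=(4, 50))  # 4 categories x 50 voxels (simulated)
rdm = correlation_distance_matrix(patterns)

# Give every category a different positive gain and offset, mimicking
# differences in mean activation; the distances should not change.
gain = np.array([1.0, 2.0, 0.5, 3.0])[:, None]
offset = np.array([0.0, 5.0, -2.0, 10.0])[:, None]
rdm_rescaled = correlation_distance_matrix(patterns * gain + offset)

print(np.allclose(rdm, rdm_rescaled))  # True
```

The invariance holds for any positive gain and any offset applied to a whole pattern, which is why mean activation level in an ROI does not by itself determine the similarity structure.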

RESULTS

Multivariate pattern analysis results

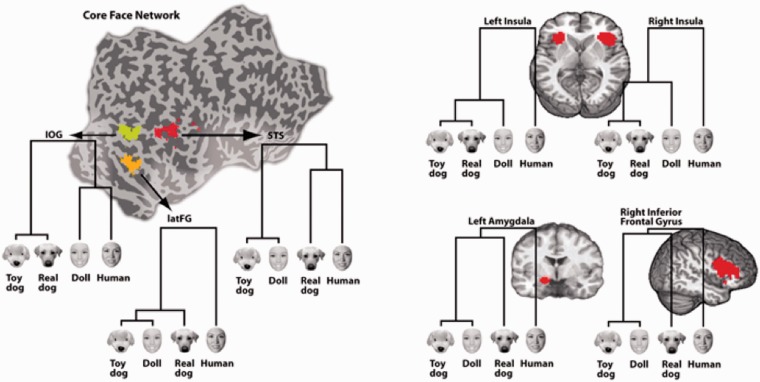

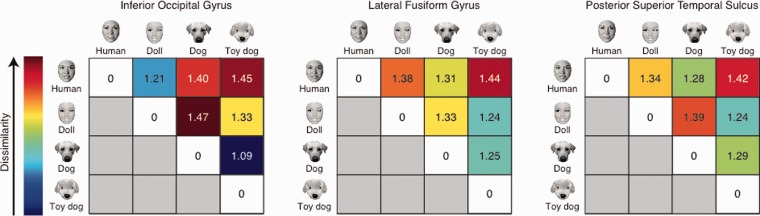

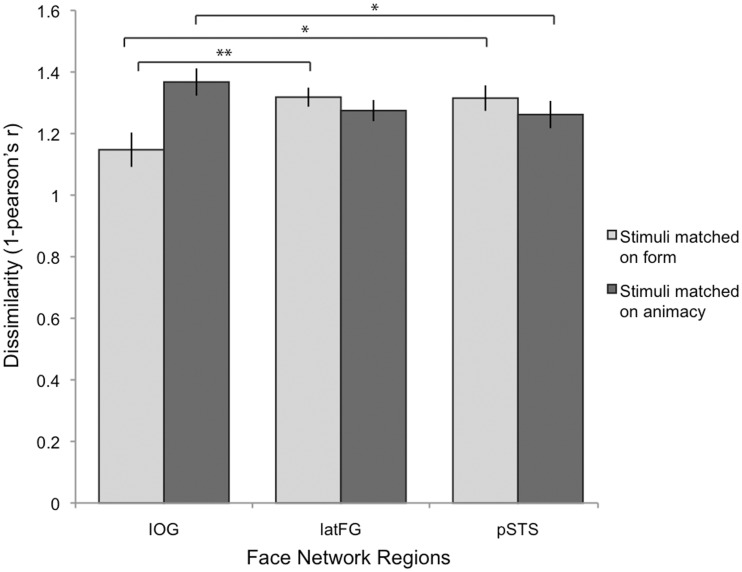

Pair-wise correlation distances were visualized with dendrograms (Figure 2) computed using correlation distances (Figure 3) and a single linkage algorithm (dendrogram function in Matlab) and quantified using a repeated-measures ANOVA. To test for effects of animacy and form across the ROIs, we calculated the pair-wise dissimilarity (1 − Pearson correlation) between all face category patterns and then calculated an average correlation distance for the dimension of form ([ρ(human, doll) + ρ(dog, toy dog)]/2) and the dimension of animacy ([ρ(human, dog) + ρ(doll, toy dog)]/2) for each participant and submitted these values to a 2 (Dimension: form, animacy) × 3 (ROI: IOG, latFG, pSTS) repeated-measures ANOVA (Figure 4). There was no significant main effect of the type of correlation distance, Wilks’ Lambda = 0.976, F(1,26) = 0.646, P = 0.429, or of ROI, Wilks’ Lambda = 0.870, F(2,25) = 1.86, P = 0.177. However, there was a significant interaction between Dimension and ROI, Wilks’ Lambda = 0.759, F(2,25) = 3.963, P = 0.032, suggesting that the three ROIs encoded animacy and form in different ways. Planned comparisons tested the hypotheses that: (i) IOG population responses prioritize form information more than latFG and STS, which would be evidenced by a smaller correlation distance between faces that share the same form (e.g. humans and dolls) in IOG than in latFG and STS and (ii) latFG and STS population responses prioritize animacy information more than IOG, which would be evidenced by a smaller correlation distance for pairs that share animacy (e.g. humans and dogs). Unless otherwise noted, all statistical tests are two-tailed.
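The form and animacy summaries described above are simple averages over two cells of each participant's 4 × 4 dissimilarity matrix. A minimal sketch follows, assuming a category order of human, doll, dog, toy dog; the function name and the distance values are made up for illustration.

```python
import numpy as np

CATEGORIES = ("human", "doll", "dog", "toydog")

def dimension_distances(rdm, categories=CATEGORIES):
    """Return (form, animacy) mean correlation distances from a 4 x 4 matrix."""
    rdm = np.asarray(rdm, dtype=float)
    i = {name: k for k, name in enumerate(categories)}
    # Form: pairs that share global form (human/doll, dog/toy dog).
    form = (rdm[i["human"], i["doll"]] + rdm[i["dog"], i["toydog"]]) / 2
    # Animacy: pairs that share animacy (human/dog, doll/toy dog).
    animacy = (rdm[i["human"], i["dog"]] + rdm[i["doll"], i["toydog"]]) / 2
    return float(form), float(animacy)

# Made-up distances for one hypothetical participant and ROI.
toy_rdm = np.array([
    [0.0, 1.0, 1.3, 1.4],
    [1.0, 0.0, 1.4, 1.5],
    [1.3, 1.4, 0.0, 1.2],
    [1.4, 1.5, 1.2, 0.0],
])
form, animacy = dimension_distances(toy_rdm)
print(round(form, 2), round(animacy, 2))  # 1.1 1.4
```

A smaller form value than animacy value, as in this toy matrix, is the signature of a region whose patterns are organized more by form than by animacy.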

Fig. 2.

Regions of Interest (ROI) masks and response pattern similarity for the core face network and four regions of the extended face network. Dendrograms display the results of a hierarchical cluster analysis on response patterns within each ROI.
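For readers unfamiliar with single linkage, the clustering behind such dendrograms can be sketched directly. The study used the Matlab dendrogram function; the code below is only a small re-implementation of the single-linkage rule for illustration, and the distance values are invented to resemble a form-organized region.

```python
import numpy as np

def single_linkage(rdm, names):
    """Agglomerative clustering with the single-linkage rule.

    Returns a list of (cluster_a, cluster_b, distance) merges in order.
    """
    rdm = np.asarray(rdm, dtype=float)
    index = {name: k for k, name in enumerate(names)}
    clusters = [(name,) for name in names]
    merges = []
    while len(clusters) > 1:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Single linkage: distance between the two closest members.
                dist = min(rdm[index[a], index[b]]
                           for a in clusters[i] for b in clusters[j])
                if best is None or dist < best[0]:
                    best = (dist, i, j)
        dist, i, j = best
        merges.append((clusters[i], clusters[j], float(dist)))
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)]
        clusters.append(merged)
    return merges

names = ("human", "doll", "dog", "toydog")
toy_rdm = np.array([  # made-up distances
    [0.00, 0.90, 1.30, 1.40],
    [0.90, 0.00, 1.40, 1.35],
    [1.30, 1.40, 0.00, 1.00],
    [1.40, 1.35, 1.00, 0.00],
])
for a, b, d in single_linkage(toy_rdm, names):
    print(a, b, d)
```

With these invented values, humans join dolls first and dogs join toy dogs second, so the tree splits by form at its top level.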

Fig. 3.

Mean pair-wise correlation distance matrices for the core face network ROIs.

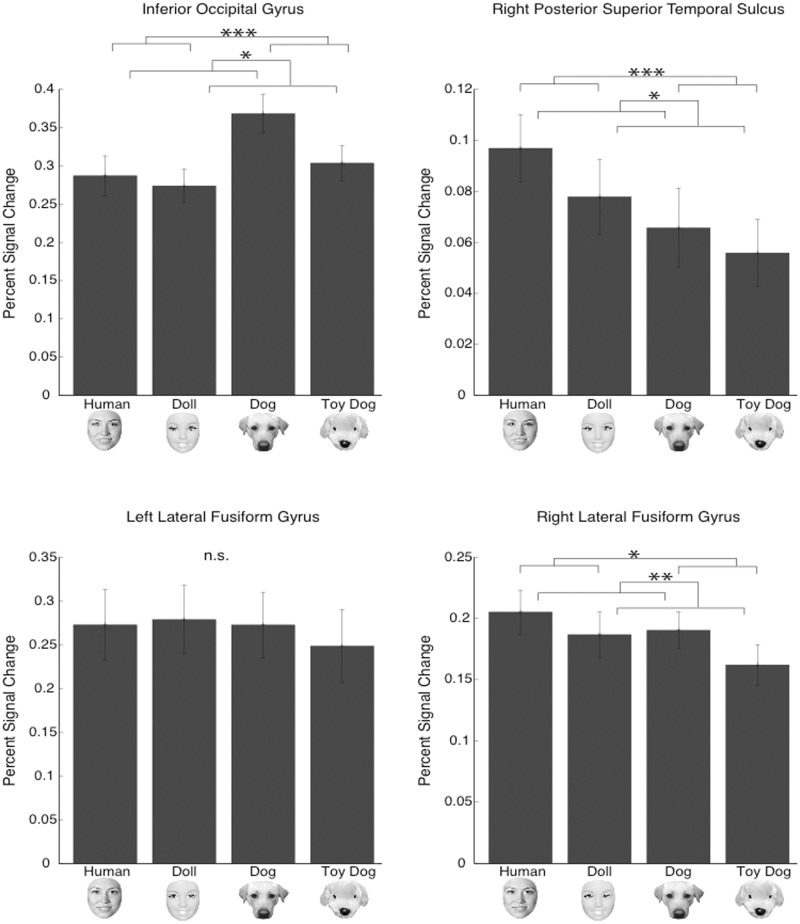

Fig. 4.

Average correlation distance (1 − Pearson’s r, from 0, maximally similar patterns, to 2, maximally dissimilar patterns) for form and animacy, by ROI (IOG, latFG, pSTS). Patterns for faces that matched on form [ρ(human, doll), ρ(dogs, toy dogs)] were more similar in IOG than in latFG and pSTS. Patterns for faces that matched on animacy [ρ(human, dogs), ρ(doll, toy dogs)] were more dissimilar in IOG than in pSTS (*P < 0.05; **P < 0.01).

Consistent with our hypotheses, IOG had smaller within-form correlation distances (M = 1.15, s.d. = 0.29) both compared to pSTS [M = 1.32, s.d. = 0.22; t(26) = 2.713, P = 0.012] and compared to latFG [M = 1.32, s.d. = 0.16; t(26) = 2.918, P = 0.007], whereas there were smaller within-animacy correlation distances in pSTS (M = 1.26, s.d. = 0.23) than in IOG [M = 1.36, s.d. = 0.23; t(26) = 2.065, P = 0.049]. There were also smaller within-animacy correlation distances in latFG (M = 1.27, s.d. = 0.18) than in IOG, yet this was only significant with a one-tailed test [t(26) = 1.726, P = 0.048]. A large body of literature (e.g. Kanwisher et al., 1997) suggests that latFG is specialized for human face processing, a view consistent with the structure visualized in latFG (see Figure 2). By combining human and dog faces, the animacy dimension in the ANOVA may have obscured a distinct pattern for human faces in latFG. To test the human-as-special hypothesis directly, we calculated whether the difference (correlation distance) between the pattern evoked by human faces and patterns evoked by non-human faces was greater than the difference (correlation distance) between any two non-human categories by conducting a 2 (Dimension: form, animacy) × 2 (Pair-type: human–non-human, non-human–non-human) repeated-measures ANOVA. Consistent with the human-as-special hypothesis for latFG, the results revealed only a main effect of pair type [Wilks’ Lambda = 0.627, F(1,26) = 15.46, P = 0.001]. Pair-wise correlation distances between human and non-human categories (M = 1.35, s.d. = 0.18) were greater than pair-wise correlation distances between any other two non-human categories (M = 1.24, s.d. = 0.21).
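The pair-type comparison can be sketched as follows, assuming the four cells of the 2 × 2 design are the same form and animacy pairs used in the earlier analysis (human–doll and human–dog as human–non-human pairs; dog–toy dog and doll–toy dog as non-human pairs). For a two-level within-subject factor, the main-effect F(1, n − 1) equals the square of a paired t statistic on the per-subject means, which is what the hypothetical `paired_t` helper computes. All numbers below are invented.

```python
import numpy as np

CATEGORIES = ("human", "doll", "dog", "toydog")

def pair_type_means(rdm, categories=CATEGORIES):
    """Mean distance for human-non-human pairs and for non-human pairs."""
    rdm = np.asarray(rdm, dtype=float)
    i = {name: k for k, name in enumerate(categories)}
    human_pairs = (rdm[i["human"], i["doll"]] + rdm[i["human"], i["dog"]]) / 2
    nonhuman_pairs = (rdm[i["dog"], i["toydog"]] + rdm[i["doll"], i["toydog"]]) / 2
    return float(human_pairs), float(nonhuman_pairs)

def paired_t(a, b):
    """Paired t statistic (df = n - 1) for two per-subject value lists."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return float(diff.mean() / (diff.std(ddof=1) / np.sqrt(diff.size)))

# Made-up distances for one hypothetical participant.
toy_rdm = np.array([
    [0.00, 1.40, 1.30, 1.45],
    [1.40, 0.00, 1.30, 1.25],
    [1.30, 1.30, 0.00, 1.20],
    [1.45, 1.25, 1.20, 0.00],
])
human_mean, nonhuman_mean = pair_type_means(toy_rdm)

# Made-up per-subject means for three hypothetical participants.
t_value = paired_t([1.4, 1.3, 1.5], [1.2, 1.2, 1.3])
print(round(human_mean, 3), round(nonhuman_mean, 3), round(t_value, 2))
```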

Magnitude analysis and results

Although the experiment was designed to investigate patterns within face-sensitive regions, we also analyzed the differences in average magnitude levels within the ROIs using a standard GLM. The combined approach of analyzing both patterns and overall magnitude allowed us to compare the information gleaned from each analysis. To investigate the relationship between the four face categories within each ROI, we performed a 2 (form: human, dog) × 2 (animacy: animate, inanimate) random effects ANOVA on the mean of the three time points (6, 8 and 10 s) that surrounded the peak activation for each condition, for each subject. As anticipated, all face stimuli robustly activated the face network (Figure 5), but a less consistent picture emerged with regard to the relationship between the stimulus categories when using the coarser GLM analysis.
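In a fully within-subject 2 × 2 design, each main effect and the interaction can be expressed as a per-subject contrast, and a one-sample t-test of that contrast against zero gives t² = F(1, n − 1). The sketch below illustrates the contrasts only; it is not the analysis code used in the study, and the function names, the column order (human, doll, dog, toy dog) and the magnitudes are assumptions.

```python
import numpy as np

def two_by_two_contrasts(magnitudes):
    """Per-subject form, animacy and interaction contrasts.

    magnitudes: array (n_subjects, 4) with columns ordered
    human, doll, dog, toydog (hypothetical layout).
    """
    m = np.asarray(magnitudes, dtype=float)
    human, doll, dog, toydog = m.T
    form = (human + doll) / 2 - (dog + toydog) / 2     # human form vs dog form
    animacy = (human + dog) / 2 - (doll + toydog) / 2  # animate vs inanimate
    interaction = (human - doll) - (dog - toydog)
    return form, animacy, interaction

def one_sample_t(x):
    """t statistic (df = n - 1) testing the mean of x against zero."""
    x = np.asarray(x, dtype=float)
    return float(x.mean() / (x.std(ddof=1) / np.sqrt(x.size)))

# Made-up percent signal change for three hypothetical subjects.
magnitudes = np.array([
    [1.0, 0.8, 0.6, 0.4],
    [1.2, 0.9, 0.7, 0.4],
    [0.9, 0.8, 0.5, 0.3],
])
form, animacy, interaction = two_by_two_contrasts(magnitudes)
print(np.round(form, 2), np.round(animacy, 2), np.round(interaction, 2))
print(round(one_sample_t(form), 2))
```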

Fig. 5.

Magnitude analysis. Mean magnitude in each ROI of the core face network. Percent signal change from mean across all runs: *P < 0.05; **P < 0.01; ***P < 0.001.

IOG, right STS and right latFG each showed a significant effect of form and a significant effect of animacy on the average magnitude response of that region (see Figure 5). There were no interactions between form and animacy in any of these regions. Post hoc comparisons revealed that real dogs elicited the greatest (magnitude) response in IOG compared to the other three face categories. In the right STS, humans and dolls elicited a significantly greater response than toy dog faces. In right latFG, human, doll and real dog faces elicited significantly greater responses compared to toy dog faces. In left latFG, there were no differences in average magnitude between the four face conditions. However, all of these comparisons should be cautiously interpreted because recent functional imaging work has demonstrated that many brain regions encode ‘distinct but overlapping’ neural representations (e.g. Haxby et al., 2001; Kriegeskorte et al., 2007; Peelen and Downing, 2007). Averaging over these patterns to produce a single magnitude value (GLM analysis) necessarily loses the information held in these patterns. For this reason, an equivalent averaged-magnitude response in left latFG to humans and dolls may belie two very distinct patterns: one to humans and another to dolls. Indeed, this is exactly what was uncovered by the MVPA. We suggest that the GLM analysis here can be taken as evidence that all faces, relative to clocks, robustly activated all four ROIs. However, interpretations based on finer-grained comparisons of magnitude between categories (or lack thereof) are to be avoided due to the likelihood that these regions contain distinct but spatially overlapping representations. In such a case, analyses that preserve the spatial patterns of activation, such as MVPA, are preferred to analyses that do not preserve these patterns.

DISCUSSION

These results suggest that face processing has two distinct priorities: the detection of global, shape-based form and the detection of animacy. IOG response patterns firmly evinced the former priority, being more strongly organized along the dimension of form than response patterns in pSTS and latFG. Conversely, the presumed later face-processing regions, pSTS and latFG, more strongly prioritized the dimension of animacy compared to IOG.

LatFG responses were best characterized as having a human-as-special organizational schema in which human faces evoked a response pattern distinct from the response patterns of all other stimulus categories. Although the extended face perception system was not the intended focus of this paper, this ‘human as distinct’ organizational schema was also observed in regions of the extended face perception system (left amygdala, bilateral anterior insula and right inferior frontal gyrus, Figure 2), which are thought to be recruited for face perception tasks related to social understanding (Haxby et al., 2000). This result is consistent with dynamic causal modeling of fMRI data, which suggests that the latFG exerts a dominant influence on the extended face perception system (Fairhall and Ishai, 2007). Taken together, these results suggest that the latFG may gate the recruitment of the extended face network in order to extract social meaning from animate faces.

The ability of the brain to process stimuli based on both global form and animacy suggests two stages of face processing, wherein objects that match a coarse face pattern are additionally analyzed for animacy (Looser and Wheatley, 2010; Wheatley et al., 2011). The prioritization of form may maximize survival: better to false alarm to a spurious face-like pattern in a rock than miss a predator. Indeed, newborns appear to be hard-wired for an orienting response to faces (Goren et al., 1975; Johnson et al., 1991) and all faces—even schematic line drawings and cartoon faces—capture attention more rapidly than other objects (Suzuki and Cavanagh, 1995; Ro et al., 2001; Bindemann et al., 2005; Jiang et al., 2007; Langton et al., 2008) and evoke a rapid electrocortical response (e.g. Bentin et al., 1996; Allison et al., 1999). This liberal, rapid face response is consistent with the tenets of signal detection theory (Green and Swets, 1966) in which the frequency of false-alarms is correlated with the importance of detecting a stimulus.

Yet false alarms are not without cost. The perception of other animate beings evokes intensive cognitive processes, such as those supporting person knowledge (Todorov et al., 2007), action prediction (Brass et al., 2007) and mentalizing (Castelli et al., 2000; Wagner et al., 2011). Thus, animacy-based encoding may serve to enhance processing of faces deemed worthy of additional mental resources. Recent work has shown that people are highly sensitive to the visual cues that indicate animacy in a face (Looser and Wheatley, 2010) and while mannequins, dolls, statues, and robots may evoke a rapid, face-specific electrocortical response, only human faces sustain a longer positivity potential (Wheatley et al., 2011). These findings, along with the present work, suggest a second stage of processing that discards false alarms before these simulacra unnecessarily tax social-cognitive resources. Such a dual processing system would allow rapid attention to face-like shapes while reserving social-cognitive resources for animate faces capable of thoughts, feelings, and action.

The present study replicates existing literature on the neural correlates of face perception and extends these findings by demonstrating that faces are represented in terms of their overall spatial configuration in IOG while animacy becomes an additional priority in pSTS and latFG. The latFG appears particularly tuned to human faces as a distinct category of animate agents consistent with theories of specialized processing in this region (e.g. Kanwisher et al., 1997). Distinctiveness of the human face was also observed in areas that comprise the extended face perception network. Taken together, these results suggest that once a face configuration is detected, it is processed to determine whether it is alive and worthy of our social-cognitive energies.

FUNDING

This work was supported by the Department of Psychological and Brain Sciences at Dartmouth College.

REFERENCES

- Allison T, Puce A, Spencer DD, McCarthy G. Electrophysiological studies of human face perception: potentials generated in occipitotemporal cortex by face and non-face stimuli. Cerebral Cortex. 1999;9:415–30. doi: 10.1093/cercor/9.5.415.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–65. doi: 10.1162/jocn.1996.8.6.551.

- Bindemann M, Burton AM, Hooge IT, Jenkins R, de Haan EH. Faces retain attention. Psychonomic Bulletin & Review. 2005;12:1048–53. doi: 10.3758/bf03206442.

- Brass M, Schmitt RM, Spengler S, Gergely G. Investigating action understanding: inferential processes versus action simulation. Current Biology. 2007;17:2117–21. doi: 10.1016/j.cub.2007.11.057.

- Caramazza A, Shelton JR. Domain-specific knowledge systems in the brain: the animate-inanimate distinction. Journal of Cognitive Neuroscience. 1998;10:1–34. doi: 10.1162/089892998563752.

- Castelli F, Happé F, Frith U, Frith C. Movement and mind: a functional imaging study of perception and interpretation of complex intentional movement patterns. Neuroimage. 2000;12:314–25. doi: 10.1006/nimg.2000.0612.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–73. doi: 10.1006/cbmr.1996.0014.

- Fairhall SL, Ishai A. Effective connectivity within the distributed cortical network for face perception. Cerebral Cortex. 2007;17:2400–6. doi: 10.1093/cercor/bhl148.

- Gelman R, Spelke ES. The development of thoughts about animate and inanimate objects: Implications for research on social cognition. In: Flavell JH, Ross L, editors. Social Cognitive Development. New York: Cambridge University Press; 1981. pp. 43–66.

- Gobbini MI, Koralek AC, Bryan RE, Montgomery KJ, Haxby JV. Two takes on the social brain: a comparison of theory of mind tasks. Journal of Cognitive Neuroscience. 2007;19:1803–14. doi: 10.1162/jocn.2007.19.11.1803.

- Goren CC, Sarty M, Wu PYK. Visual following and pattern discrimination of face-like stimuli by newborn infants. Pediatrics. 1975;56:544–9.

- Green DM, Swets JA. Signal Detection Theory and Psychophysics. New York: Wiley; 1966.

- Grossman ED, Blake R. Brain areas active during visual perception of biological motion. Neuron. 2002;35:1167–75. doi: 10.1016/s0896-6273(02)00897-8.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–30. doi: 10.1126/science.1063736.

- Haxby JV, Hoffman E, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4:223–33. doi: 10.1016/s1364-6613(00)01482-0.

- Hodges JR, Graham N, Patterson K. Charting the progression in semantic dementia: implications for the organisation of semantic memory. Memory. 1995;3:587–604. doi: 10.1080/09658219508253161.

- Jiang Y, Costello P, He S. Processing of invisible stimuli: faster for upright faces and recognizable words to overcome interocular suppression. Psychological Science. 2007;18:349–55. doi: 10.1111/j.1467-9280.2007.01902.x.

- Johnson MH, Dziurawiec S, Ellis H, Morton J. Newborns’ preferential tracking of face-like stimuli and its subsequent decline. Cognition. 1991;40:1–19. doi: 10.1016/0010-0277(91)90045-6.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4302–11. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kriegeskorte N, Formisano E, Sorger B, Goebel R. Individual faces elicit distinct response patterns in human anterior temporal cortex. Proceedings of the National Academy of Sciences. 2007;104:20600–05. doi: 10.1073/pnas.0705654104.

- Kriegeskorte N, Mur M, Bandettini P. Representational similarity analysis – connecting the branches of systems neuroscience. Frontiers in Systems Neuroscience. 2008;2:4. doi: 10.3389/neuro.06.004.2008.

- Langton SRH, Law AS, Burton AM, Schweinberger SR. Attention capture by faces. Cognition. 2008;107:330–42. doi: 10.1016/j.cognition.2007.07.012.

- Legerstee M. A review of the animate-inanimate distinction in infancy: implications for models of social and cognitive knowing. Early Development and Parenting. 1992;1:59–67.

- Looser CE, Wheatley T. The tipping point of animacy: how, when, and where we perceive life in a face. Psychological Science. 2010;21:1854–62. doi: 10.1177/0956797610388044.

- Mahon BZ, Caramazza A. Judging semantic similarity: An event-related fMRI study with auditory word stimuli. Neuroscience. 2010;169:279–86. doi: 10.1016/j.neuroscience.2010.04.029.

- Peelen MV, Downing PE. Using multi-voxel pattern analysis of fMRI data to interpret overlapping functional activations. Trends in Cognitive Sciences. 2007;11(1):4–5. doi: 10.1016/j.tics.2006.10.009.

- Puce A, Allison T, Gore JC, McCarthy G. Face-sensitive regions in human extrastriate cortex studied by functional MRI. Journal of Neurophysiology. 1995;74:1192–9. doi: 10.1152/jn.1995.74.3.1192.

- Rakison DH, Poulin-Dubois D. Developmental origin of the animate-inanimate distinction. Psychological Bulletin. 2001;127:209–28. doi: 10.1037/0033-2909.127.2.209.

- Ro T, Russell C, Lavie N. Changing faces: a detection advantage in the flicker paradigm. Psychological Science. 2001;12:94–9. doi: 10.1111/1467-9280.00317.

- Suzuki S, Cavanagh P. Facial organization blocks access to low-level features: an object inferiority effect. Journal of Experimental Psychology: Human Perception and Performance. 1995;21:901–13.

- Talairach J, Tournoux P. Co-planar Stereotactic Atlas of the Human Brain. Stuttgart: Georg Thieme-Verlag; 1988.

- Todorov A, Gobbini MI, Evans KK, Haxby JV. Spontaneous retrieval of affective person knowledge in face perception. Neuropsychologia. 2007;45:163–73. doi: 10.1016/j.neuropsychologia.2006.04.018.

- Tong F, Nakayama K, Moscovitch M, Weinrib O, Kanwisher N. Response properties of the human fusiform face area. Cognitive Neuropsychology. 2000;17:257–79. doi: 10.1080/026432900380607.

- Tsao DY, Moeller S, Freiwald W. Comparing face patch systems in macaques and humans. Proceedings of the National Academy of Sciences. 2008;105(49):19514–9. doi: 10.1073/pnas.0809662105.

- Wagner DD, Kelley WM, Heatherton TF. Individual differences in the spontaneous recruitment of brain regions supporting mental state understanding when viewing natural social scenes. Cerebral Cortex. 2011;21(12):2788–96. doi: 10.1093/cercor/bhr074.

- Wheatley T, Milleville SC, Martin A. Understanding animate agents: distinct roles for the social network and mirror system. Psychological Science. 2007;18:469–74. doi: 10.1111/j.1467-9280.2007.01923.x.

- Wheatley T, Weinberg A, Looser CE, Moran T, Hajcak G. Mind perception: real but not artificial faces sustain neural activity beyond the N170/VPP. PLoS One. 2011;6(3):e17960. doi: 10.1371/journal.pone.0017960.