Abstract

The emotions displayed by others can be cues to predict their behavior. Happy expressions are usually linked to positive consequences, whereas angry faces are associated with probable negative outcomes. However, there are situations in which the expectations we generate do not hold, and control mechanisms must then be put in place. We designed an interpersonal game in which participants received good or bad economic offers from several partners. A cue indicated whether the emotion of their partner could be trusted or not. Trustworthy partners with happy facial expressions were cooperative, and angry partners did not cooperate. Untrustworthy partners cooperated when their expression was angry and did not cooperate when they displayed a happy emotion. Event-Related Potential (ERP) results showed that executive attention already influenced the frontal N1. The brain initially processed emotional expressions regardless of their contextual meaning, but by the N300, associated with affective evaluation, emotion was modulated by control mechanisms. Our results suggest a cascade of processing that starts with the instantiation of executive attention, continues with a default processing of emotional features and is then followed by an interaction between executive attention and emotional factors before decision-making and motor stages.

Keywords: social interactions, emotion, executive attention, conflict, ERP, N1, N300

Every day, we experience different emotions and also perceive various emotional expressions in people around us. Such emotions prioritize goals, signal the relevance of events and prepare us to respond (see Frijda, 1986; Vuilleumier, 2005; Pessoa, 2009). We also use them to predict what is going on in other people’s minds (Frith and Frith, 2006); therefore, they play an essential role in social interactions (Manstead, 1991; Olsson and Ochsner, 2008). Evolutionary theory suggests that humans have learned to associate different emotions with specific meanings (Darwin, 1872). For example, previous research relates happy expressions to signals of cooperative intent (Fridlund, 1995) and trust (Eckel and Wilson, 2003). However, the effects that emotions have on our behavior sometimes have to be controlled to meet the goals set by the current situation. In this study, we used electrophysiological recordings to investigate the stages of information processing at which executive attention mechanisms modulate emotional processing in social contexts.

Facial displays of emotion are analyzed through fast and often implicit neural pathways (Vuilleumier and Pourtois, 2007). Research shows that emotional processing can be modulated by several factors, including attention and context. Many reports suggest that spatial attention enhances emotional processing (e.g. Pourtois et al., 2004). Other studies have shown that situational context (e.g. the emotional valence of background images or the meaning conveyed by stories accompanying facial expressions) often biases the ascription of facial displays to different emotional categories (e.g. Carroll and Russell, 1996; Righart and de Gelder, 2006). Regarding task context, emotionally incongruent stimuli generate interference in Stroop-type (Whalen et al., 1998) or flanker-type paradigms (Egner et al., 2008; Ochsner et al., 2009). In these cases, control mechanisms come into play to adjust behavioral responses to task demands (Ochsner and Gross, 2005; Wager et al., 2008).

Executive attention mediates stimulus and response conflict, as well as other forms of mental effort linked to cognitive control (e.g. Posner and Rothbart, 1998). When different dimensions of a situation lead to opposite action tendencies, conflict arises, and executive attention mechanisms bias processing to favor goal-relevant processes at the expense of irrelevant information. The need for control is also present in social interactions (see Zaki et al., 2010). People may need to control and/or camouflage their own emotional expressions, or they may learn that the emotional displays of some people cannot be taken as indicative of their future actions. In certain contexts, untrustworthy people (Todorov et al., 2008) may conceal their true emotional state and express a different one. Such situations may require executive attention mechanisms to adapt responses to identical emotional expressions that are contextually associated with different consequences.

Little is known about the temporal dynamics and stages of processing at which executive attention and emotion processing intersect. To study this, we employed the paradigm of Ruz and Tudela (2011) in which participants had to accept or reject divisions of money that different partners proposed to them. They were asked to use the emotions conveyed by their partners to anticipate their most likely behavior, which was either a good or bad economic offer. However, there were two different types of partners. The emotional expressions of trustworthy partners predicted their default or ‘natural’ consequences (i.e. happy expressions predicted good offers and angry expressions predicted bad ones), whereas the opposite happened for untrustworthy partners (i.e. happy expressions predicted bad offers, whereas angry expressions predicted good ones). On the basis of previous studies that suggest that happy expressions generate initial trust and cooperative tendencies (Scharlemann et al., 2001) and angry ones signal threat and lead to avoidance tendencies (Marsh et al., 2005), we hypothesized that untrustworthy partners would generate conflict (reflected in more errors and slower response times (RTs) in behavioral indexes) and would call for executive mechanisms, given that they required a response contrary to the natural tendencies that emotions generate (see Ruz and Tudela, 2011).

We focused our analyses on electrophysiological potentials that have been linked to emotion processing and attention by previous research. By 120 ms, the brain already differentiates emotional from neutral facial displays (see Vuilleumier and Pourtois, 2007). This effect, observed in the fronto-central N1, is thought to reflect the fast extraction of the affective meaning of stimuli through coarse visual cues (Vuilleumier et al., 2003). With a similar timing, the posterior P1 potential is often enhanced by increased attention to negative and also positive stimuli compared with neutral material (Brosch et al., 2008). As these two potentials reflect initial stages of emotional processing, if executive attention modulates emotion in a social context from an early point in time, we expected increased amplitudes of the N1 and/or P1 for untrustworthy compared with trustworthy partners.

Two negativities that appear from 200 to 300 ms in centro-medial and frontal channels were also of interest to our study. The amplitude of the N2 is usually more negative for high than for low-conflict stimuli (Folstein and Van Petten, 2008). Hence, we expected that untrustworthy partners would generate an N2 of larger amplitude than trustworthy ones. On the other hand, the N300 has been linked to the affective evaluation of stimuli (Carretié and Iglesias, 1995; Rossignol et al., 2005). This potential is supposed to reflect the depth of emotional processing, or the affective significance of stimuli rather than their physical characteristics. Our manipulation of trustworthiness was meant to modify the meaning of the emotional facial expressions, and with this, the emotional value attributed to the happy and angry facial displays of the game partners. Thus, we expected that the typical pattern of a heightened N300 for angry facial expressions (e.g. Schutter et al., 2004) would be observable in the trustworthy condition but absent for untrustworthy partners, which would lead to an interaction on this potential between the displayed emotions and their trustworthiness.

Finally, the P300 is also often modulated by the emotional and/or arousing content of stimuli. Several studies employing emotional facial expressions and affective pictures have shown that the amplitude of this slow positive wave is enhanced when participants view emotional compared with neutral material (e.g. Carretié et al., 1997; Schupp et al., 2004), and this modulation is stronger for highly arousing stimuli (e.g. Cacioppo et al., 1993). Emotional stimuli capture attention and receive increased resources, which facilitate their processing compared with neutral material, and such facilitation seems to relate to the modulation of the P300 (e.g. Rossignol et al., 2005). In addition, in domains separate from research on emotion, this potential is thought to reflect a cascade of attentional and mnemonic processes. Results mostly agree that the amplitude of the P300 reflects the amount of resources available to perform the task: the more resources, the larger its amplitude (see Polich, 2007). Therefore, although we did not expect differences in the P300 depending on the emotional expression of the partners, we hypothesized that its amplitude would be smaller for untrustworthy partners, as responding to them was more demanding.

To summarize, with the aim of studying the stages of information processing at which executive attention modulates perceived emotion in a social context, we analyzed the effect of these two factors in several electrophysiological potentials related to attention and/or emotional processing.

METHODS

Participants

Twenty-four right-handed healthy participants (mean age 20.7 years; six men) were recruited from the University of Granada. They all signed a consent form approved by the local Ethics Committee and received course credits and a chocolate token in exchange for their participation.

Stimuli and procedure

Participants played a game with several different partners, represented by pictures on the computer screen, who made them good or bad economic offers. They were told that the offers were taken from the responses of participants who had completed standardized questionnaires related to social situations and trustworthiness. Their goal was to accumulate more money than all of their partners combined; if they won the game, they would receive a chocolate token as a reward. Half of the offers would be beneficial to them (‘good offers’), in the sense that they received more money than the partner, and the other half would be beneficial to the partners (‘bad offers’) because they received less money than the partner. If participants accepted an offer, they would keep their share of the division and their partner would take the other amount. If they rejected it instead, no amount would be added to either account for that trial. Thus, the best strategy to win the game was to accept the good offers and reject the bad ones; participants were told so, although they were not instructed to always reject bad offers.

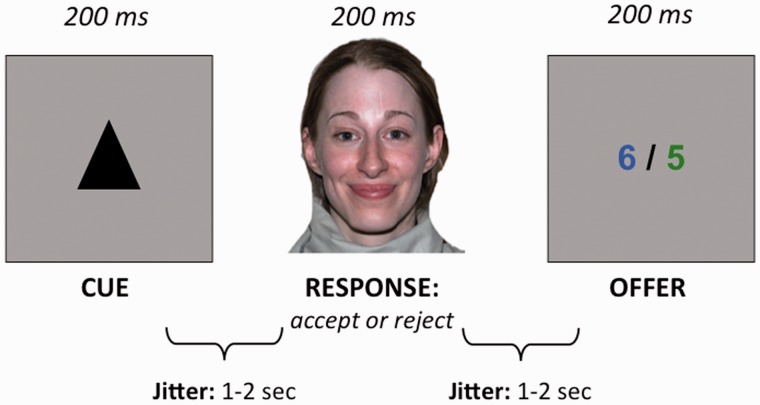

For each partner, we provided information about how trustworthy they were by means of a cue presented at the beginning of every trial. For trustworthy partners, a smile meant that the offer would most likely be good, and an angry expression predicted a probable bad offer. Untrustworthy partners, on the other hand, smiled in anticipation of bad offers and displayed an angry expression before a probable good offer. Participants had to combine the information provided by the cue with the emotion displayed by their partner to accept or reject each offer before it was shown; the offer itself was presented afterwards (see Figure 1 for the sequence of events in a trial). In addition, participants were asked to respond to their partner as fast as possible; they were told that if they took too long (>1.5 s), the highest amount in the offer would be added to their partner’s account.
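The payoff rules described in the two paragraphs above can be made explicit in a few lines. The following is a minimal Python sketch under our own assumptions: the `Offer` class and the `trial_payoff` and `optimal_choice` helpers, together with their parameters, are hypothetical names introduced for illustration and are not part of the software used in the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    """Hypothetical representation of one split of money."""
    participant: int  # amount in the participant's color
    partner: int      # amount in the partner's color

    @property
    def is_good(self) -> bool:
        # A 'good' offer gives the participant more money than the partner.
        return self.participant > self.partner


def trial_payoff(offer: Offer, accepted: bool, rt_s: float,
                 timeout_s: float = 1.5) -> tuple[int, int]:
    """Return (participant_gain, partner_gain) for one trial.

    Encodes the rules as described: a response slower than timeout_s gives
    the highest amount to the partner; accepting applies the split as offered;
    rejecting adds nothing to either account.
    """
    if rt_s > timeout_s:
        return 0, max(offer.participant, offer.partner)
    if accepted:
        return offer.participant, offer.partner
    return 0, 0


def optimal_choice(offer: Offer) -> bool:
    # Strategy stated to participants: accept good offers, reject bad ones.
    return offer.is_good


# Example: a good offer accepted in time, a bad one rejected, a late response.
print(trial_payoff(Offer(5, 4), accepted=True, rt_s=0.8))   # (5, 4)
print(trial_payoff(Offer(4, 5), accepted=False, rt_s=0.8))  # (0, 0)
print(trial_payoff(Offer(5, 4), accepted=True, rt_s=1.6))   # (0, 5)
```

Note that in the actual task the accept/reject response was given to the face, before the offer appeared, so `accepted` stands for that earlier decision.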

Fig. 1.

Schematic display of the sequence of events in a trial.

The cues were a black triangle and a black square (assignment counterbalanced across participants). One hundred and sixty faces from the Karolinska Directed Emotional Faces database (Lundqvist et al., 1998; 50% female), half displaying happy and half displaying angry facial expressions, were used as partners who offered the participants a split of a sum of money. There were 32 different offers, each displayed as a green and a blue number (from 1 to 9) separated by a slash. The difference between the two numbers was always 1. The left–right location of the highest number and the colors were matched across trials. Participants were assigned the amount coded in one color and the partners the other amount (counterbalanced across participants). In half of the trials, the highest number was displayed in the participant’s color and in the other half in the partner’s color. This manipulation was orthogonal to the emotion displayed by the partners and to the cue. The predictions of the cue and the emotional expression of the target were valid in 80% of the trials. That is, offers were good for the participants in 80% of the trials in which a trustworthy partner smiled or an untrustworthy partner had an angry expression, and they were bad in 80% of the trials in which a trustworthy partner had an angry expression or an untrustworthy partner smiled.
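The design constraints in this paragraph can be checked with a short sketch. Assuming that the 32 offers arise from crossing the eight adjacent-number pairs (1/2 to 8/9) with the side of the higher number and the color that carries it, and that the 480 trials are split evenly across the four Control × Emotion cells, the Python code below enumerates the offers and builds a trial list in which 80% of the offers match the prediction of cue and emotion. The function names and the trial dictionary format are hypothetical.

```python
import itertools
import random


def enumerate_offers():
    """List the 32 offers as ((left_number, left_color), (right_number, right_color)).

    Assumption: 8 adjacent pairs (1/2 ... 8/9) x side of the higher number
    x color of the higher number.
    """
    offers = []
    for low in range(1, 9):           # high = low + 1 never exceeds 9
        high = low + 1
        for high_on_left in (True, False):
            for high_color in ("green", "blue"):
                low_color = "blue" if high_color == "green" else "green"
                hi_item, lo_item = (high, high_color), (low, low_color)
                offers.append((hi_item, lo_item) if high_on_left else (lo_item, hi_item))
    return offers


def build_trials(n_trials=480, validity=0.8, seed=0):
    """Shuffled trial list in which `validity` of the offers match the cue x emotion prediction.

    Assumption: the four Control x Emotion cells are equally frequent.
    """
    cells = list(itertools.product(("trustworthy", "untrustworthy"), ("happy", "angry")))
    per_cell = n_trials // len(cells)          # 120 trials per cell
    n_valid = round(per_cell * validity)       # 96 valid, 24 invalid
    trials = []
    for control, emotion in cells:
        # A good offer is predicted for trustworthy-happy and untrustworthy-angry partners.
        predicts_good = (control == "trustworthy") == (emotion == "happy")
        for i in range(per_cell):
            valid = i < n_valid
            trials.append({"control": control, "emotion": emotion,
                           "good_offer": predicts_good if valid else not predicts_good})
    random.Random(seed).shuffle(trials)
    return trials


offers = enumerate_offers()
trials = build_trials()
print(len(offers), len(trials), sum(t["good_offer"] for t in trials))  # 32 480 240
```

Under these assumptions each cell holds 96 valid and 24 invalid trials, and exactly half of all offers are good, which agrees with the description.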

All stimuli were presented centrally on a 17-inch monitor controlled by Biological E-prime software (Schneider et al., 2002). Each trial comprised the following events (see Figure 1), all displayed on a grey background. The cue (2.5° × 2.5°) was flashed in the centre of the screen for 200 ms, followed by an interval displaying a fixation point (0.5° × 0.5°) with an average duration of 1500 ms (±500 ms). Then the picture of the partner (5.5° × 6.5°) was presented for 200 ms, followed by another variable interval with the same structure as the previous one. Finally, the offer (4° × 1°) was presented for 200 ms and was followed by a third variable interval with an average duration of 1500 ms (±500 ms). On average, a trial lasted 5.1 s. In total, there were 480 trials divided into four blocks of 120 trials each (approximately 41 min).
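The stated average trial length and session duration follow directly from the event durations. The sketch below reproduces that arithmetic; the uniform 1000 to 2000 ms distribution used for the jittered intervals in `sample_trial_ms` is an assumption, since the text only gives their mean and range.

```python
import random

# Event durations (ms) from the text.
CUE_MS = FACE_MS = OFFER_MS = 200
JITTER_MEAN_MS = 1500   # mean of each of the three variable intervals
JITTER_HALF_RANGE_MS = 500


def mean_trial_ms() -> int:
    # Three 200 ms events plus three intervals averaging 1500 ms each.
    return CUE_MS + FACE_MS + OFFER_MS + 3 * JITTER_MEAN_MS


def sample_trial_ms(rng: random.Random) -> float:
    # Assumption: each interval is drawn uniformly from 1000-2000 ms.
    intervals = [rng.uniform(JITTER_MEAN_MS - JITTER_HALF_RANGE_MS,
                             JITTER_MEAN_MS + JITTER_HALF_RANGE_MS) for _ in range(3)]
    return CUE_MS + FACE_MS + OFFER_MS + sum(intervals)


n_trials = 480
total_min = n_trials * mean_trial_ms() / 60_000   # excludes breaks between blocks
print(mean_trial_ms(), round(total_min, 1))        # 5100 40.8
```

The 40.8 min figure is the pure task time; it rounds to the approximately 41 min reported.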

Participants pressed one of two buttons with the index and middle fingers of their right (dominant) hand to make speeded accept/reject responses to the facial displays (response mapping counterbalanced across participants). Before performing the task, they completed a short training session with a different set of faces to become familiar with the task.

Electroencephalography acquisition and analyses

Electroencephalography (EEG) was recorded with a high-density EEG system (Geodesic Sensor Net with 128 electrodes; Electrical Geodesics, Inc.), referenced to the vertex channel. The electrodes located above and beneath the eyes and to the left and right of the external canthi were used to detect blinks and eye movements. The net was connected to an AC-coupled, high-input-impedance amplifier (200 MΩ). Electrode impedances were kept below 50 kΩ, as recommended for the Electrical Geodesics high-input-impedance amplifiers. The signal was amplified (0.1–100 Hz band pass) and digitized at a sampling rate of 250 Hz (16-bit A/D converter). Data were filtered off-line with a 30 Hz low-pass filter. The continuous EEG recording was then segmented into epochs from 200 ms before to 600 ms after target (face) onset, which were subsequently submitted to software processing for artifact identification. Epochs with excessively noisy EEG (±100 µV from one sample to the next) or eye-movement artifacts (blinks or saccades: ±70 µV on electrooculogram channels) were eliminated from the analyses. Data from individual channels that were consistently bad for a specific subject (>20% of trials) were replaced using a spherical interpolation algorithm (Perrin et al., 1989). Finally, we established a minimum criterion of 30 artifact-free trials per subject and condition to maintain an acceptable signal-to-noise ratio.
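The epoching and rejection criteria can be illustrated with NumPy. This is a simplified sketch, not the software actually used: it assumes that a noisy epoch is one with any sample-to-sample jump above 100 µV, that an eye artifact is any EOG sample beyond ±70 µV, and that a channel counts as consistently bad when it violates the jump criterion in more than 20% of epochs. The 30 Hz low-pass filter and the spherical interpolation step are omitted, and all function names are hypothetical.

```python
import numpy as np

FS = 250                         # sampling rate (Hz)
PRE = 200 * FS // 1000           # 50 samples before face onset
POST = 600 * FS // 1000          # 150 samples after face onset


def epoch(continuous, onsets):
    """Cut (n_epochs, n_channels, 200) epochs from continuous (n_channels, n_times) data.
    `onsets` are face-onset sample indices; each must be >= PRE and <= n_times - POST."""
    return np.stack([continuous[:, o - PRE:o + POST] for o in onsets])


def reject_epochs(epochs, eog_idx, step_uv=100.0, eog_uv=70.0):
    """Boolean mask of epochs to keep. Assumed criteria: no jump > step_uv between
    consecutive samples on any channel, and no EOG sample beyond +/- eog_uv."""
    max_jump = np.abs(np.diff(epochs, axis=-1)).max(axis=(1, 2))
    max_eog = np.abs(epochs[:, eog_idx, :]).max(axis=(1, 2))
    return (max_jump <= step_uv) & (max_eog <= eog_uv)


def flag_bad_channels(epochs, step_uv=100.0, max_bad_fraction=0.20):
    """Flag channels that violate the jump criterion in more than 20% of epochs;
    in the study such channels were replaced by spherical interpolation (not shown)."""
    bad = np.abs(np.diff(epochs, axis=-1)).max(axis=-1) > step_uv   # (n_epochs, n_channels)
    return bad.mean(axis=0) > max_bad_fraction


def has_enough_trials(keep, min_trials=30):
    # Minimum of 30 artifact-free trials per subject and condition.
    return int(np.sum(keep)) >= min_trials
```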

Four group-averaged Event-Related Potential (ERP) waveforms were constructed according to the trustworthiness of the partners (trustworthy vs untrustworthy) and their emotional expression (happy vs angry). ERPs were re-referenced to the average reference, and the 200 ms pre-stimulus interval served as baseline. Trials that did not meet the behavioral requirements (see subsequent sections) were not included in the ERP averages.
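The re-referencing, baseline correction and condition averaging steps can be sketched as follows. The array layout (epochs × channels × samples, 250 Hz, epoch starting at −200 ms) and the label arrays passed to the hypothetical `condition_erps` helper are conventions chosen for illustration.

```python
import numpy as np

FS = 250
N_BASELINE = 200 * FS // 1000   # 50 samples of pre-stimulus baseline


def average_reference(epochs):
    # Subtract the instantaneous mean across channels (axis 1) from every channel.
    return epochs - epochs.mean(axis=1, keepdims=True)


def baseline_correct(epochs, n_baseline=N_BASELINE):
    # Subtract each channel's mean over the 200 ms pre-stimulus interval.
    return epochs - epochs[..., :n_baseline].mean(axis=-1, keepdims=True)


def condition_erps(epochs, control, emotion):
    """Average kept epochs into the four Control x Emotion ERPs.
    `control` and `emotion` are per-epoch label arrays (hypothetical format)."""
    erps = {}
    for c in ("trustworthy", "untrustworthy"):
        for e in ("happy", "angry"):
            mask = (control == c) & (emotion == e)
            erps[(c, e)] = epochs[mask].mean(axis=0)   # (n_channels, n_samples)
    return erps
```

Group-averaged waveforms are then obtained by averaging each condition's ERP across participants. Because all three steps are linear, their order does not change the resulting averages.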

As a first step, the averaged files were submitted to a segmentation analysis performed with Cartool software (https://sites.google.com/site/fbmlab/cartool; see, for example, Murray et al., 2004; Brunet et al., 2011, for reviews of the usefulness of topographical analyses of EEG data). Cartool uses a clustering method to find, at the level of the group-average data, the set of topographies that are predominant for each experimental condition as a function of time. Scalp topographies do not vary randomly, but rather remain in a stable configuration for a period of time and then change to a different configuration. The segmentation approach finds such periods of stability in the map representation of the normalized ERP (with the constraint that maps should remain stable for at least 20 ms, and that the correlation between different maps should not be <92%) and computes the average map for each of these stable periods. Stable maps are thought to represent computational stages of information processing. Differences between maps indicate differences in the underlying brain sources (Lehmann, 1987). Variations in the amplitude of the signal between conditions do not affect results because data are normalized before comparisons. The number of maps that best explains the whole group-averaged data set is defined by a cross-validation criterion (Pascual-Marqui et al., 1995).
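To make the clustering idea concrete, the sketch below runs a plain k-means on amplitude-normalized topographies, using spatial correlation as the similarity measure and keeping map polarity, and then merges segments shorter than a minimum duration (20 ms corresponds to 5 samples at 250 Hz). This is a simplified stand-in, not Cartool's algorithm: the farthest-point initialization and the merging rule are our own choices, the function names are hypothetical, and the cross-validation step used to choose the number of maps is not shown.

```python
import numpy as np


def normalize_maps(erp):
    """Turn (n_channels, n_times) data into unit-norm, average-referenced maps
    of shape (n_times, n_channels), so that only topography (not strength) matters."""
    maps = erp.T - erp.T.mean(axis=1, keepdims=True)
    return maps / np.linalg.norm(maps, axis=1, keepdims=True)


def segment(erp, k, n_iter=100, seed=0):
    """Plain k-means on normalized maps; similarity = spatial correlation, polarity kept."""
    x = normalize_maps(erp)
    rng = np.random.default_rng(seed)
    idx = [int(rng.integers(len(x)))]
    for _ in range(1, k):                       # farthest-point initialization
        idx.append(int((x @ x[idx].T).max(axis=1).argmin()))
    templates = x[idx]
    for _ in range(n_iter):
        labels = (x @ templates.T).argmax(axis=1)   # dot product of unit maps = correlation
        new = np.array([x[labels == j].mean(axis=0) if np.any(labels == j) else templates[j]
                        for j in range(k)])
        new /= np.linalg.norm(new, axis=1, keepdims=True)
        converged = np.allclose(new, templates)
        templates = new
        if converged:
            break
    labels = (x @ templates.T).argmax(axis=1)
    return templates, labels


def enforce_min_duration(labels, min_samples):
    """Merge runs shorter than min_samples into the preceding run (or the next one
    at the very start). 20 ms at 250 Hz corresponds to min_samples = 5."""
    labels = labels.copy()
    while True:
        starts = np.flatnonzero(np.r_[True, labels[1:] != labels[:-1]])
        ends = np.r_[starts[1:], len(labels)]
        short = [(s, e) for s, e in zip(starts, ends) if e - s < min_samples]
        if not short or len(starts) == 1:
            return labels
        s, e = short[0]
        labels[s:e] = labels[s - 1] if s > 0 else labels[e]
```

Because each map is divided by its norm before clustering, two conditions that differ only in signal strength receive the same labels, which mirrors the point that amplitude variations do not affect the segmentation.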

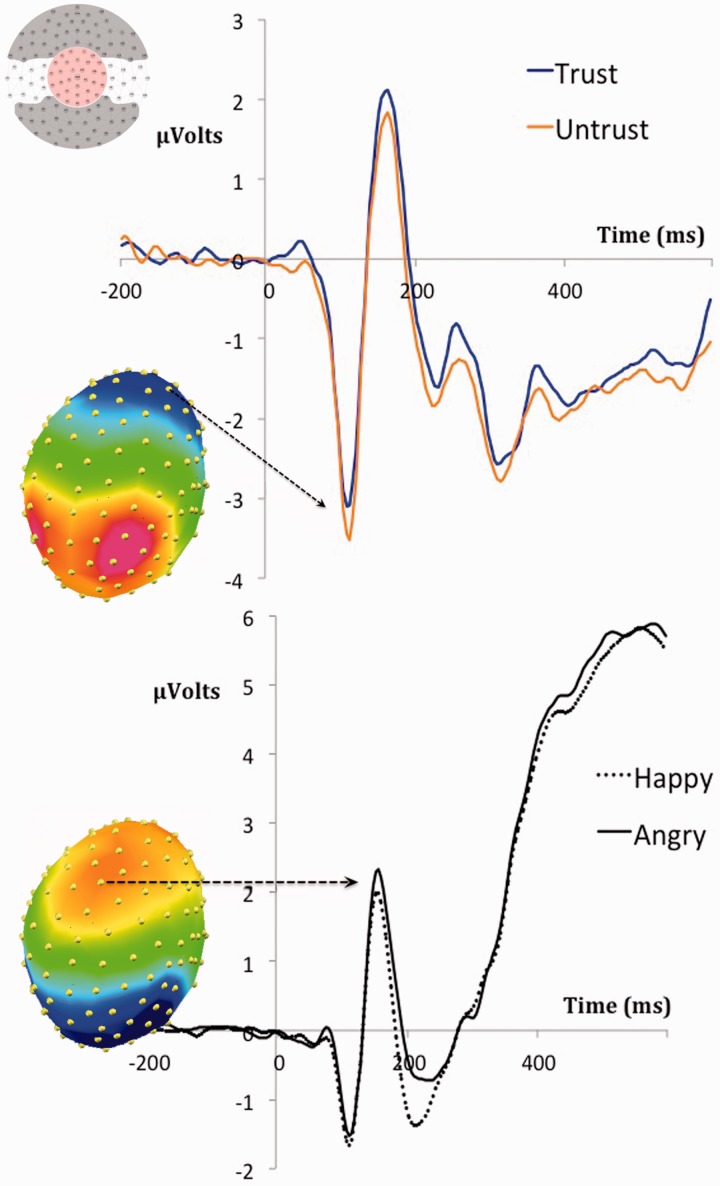

Afterwards, we tested for differences in ERP amplitude across the four task conditions. We focused on the target-locked ERP given that previous functional magnetic resonance imaging (fMRI) analyses using the same paradigm (Ruz and Tudela, 2011) did not reveal any differences between trustworthy and untrustworthy cues at standard statistical thresholds. Given their relevance in previous studies of emotion and attention, we analyzed the occipital P1, the fronto-medial N1, the fronto-central N2 and N300, and the P300 potentials elicited by the faces of the partners. For this, we selected sets of contiguous electrodes in anterior, centro-medial and posterior regions of the scalp (see the distribution of selected channels in Figure 3), which on initial visual inspection seemed to reflect task effects on the amplitude of the potentials of interest. The hemisphere factor was not included, as visual inspection of the data did not indicate any differences in this respect. We then calculated the average amplitude over the selected electrodes in each of the three spatial windows of the scalp (anterior, centro-medial and posterior). The peak latencies of the potentials of interest were used to define the temporal windows of the analyses, which always fell within the time windows revealed by the segmentation process.
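The amplitude measure described here reduces to averaging over a channel cluster and a latency window. The sketch below assumes epochs that start at −200 ms and are sampled at 250 Hz (4 ms per sample); the channel indices passed to the hypothetical `mean_amplitude` function are placeholders, since the exact electrode sets are only shown in Figure 3. The `peak_window` helper reproduces the 32 ms windows centred on the N2 and N300 peaks reported in the Results.

```python
import numpy as np

FS = 250
EPOCH_START_MS = -200   # latency of the first sample relative to face onset


def ms_to_sample(t_ms):
    # Index of latency t_ms within the epoch, rounded to the nearest sample.
    return int(round((t_ms - EPOCH_START_MS) * FS / 1000))


def peak_window(peak_ms, half_width_ms=16):
    # 32 ms window centred on a peak (e.g. N2 at 230 ms, N300 at 310 ms).
    return peak_ms - half_width_ms, peak_ms + half_width_ms


def mean_amplitude(erp, channels, start_ms, end_ms):
    """Average voltage over a channel cluster and an inclusive time window.
    erp: (n_channels, n_samples); channels: hypothetical cluster indices."""
    s, e = ms_to_sample(start_ms), ms_to_sample(end_ms)
    return float(erp[np.asarray(channels), s:e + 1].mean())


# Windows used in the Results: N1 100-120 ms, N2 and N300 peak-centred, P300 376-476 ms.
print(peak_window(230), peak_window(310))   # (214, 246) (294, 326)
```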

Fig. 3.

Face-locked ERPs showing the modulation of the frontal N1 by the control context (upper graph) and the modulation of the VPP by the emotion displayed by the partner (lower graph). Each ERP is presented together with the scalp topography dominant during the relevant time window (see Methods). The distribution of the spatial windows employed for all analyses is represented in the upper-left diagram.

We used the same factors in the analyses of variance (ANOVAs) for the behavioral and ERP data: Control (trustworthy, untrustworthy) and Emotion (happy, angry). We only included trials in which the participant’s response helped to fulfill the goal of the game and whose RT was within three standard deviations of the mean RT. The average numbers of trials entered in the analyses were 93 (trustworthy happy), 91 (trustworthy angry), 88 (untrustworthy happy) and 85 (untrustworthy angry). Only results that fulfill the P < 0.05 significance criterion are reported.
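With two-level factors, a 2 × 2 repeated-measures ANOVA can be computed from per-participant contrast scores: the F(1, n − 1) of each effect equals the squared one-sample t statistic of the corresponding contrast. The sketch below uses that identity together with a three-standard-deviation RT trim. Applying the trim per participant is our assumption, as the text does not specify the level at which the mean and standard deviation were computed; the function names are hypothetical.

```python
import numpy as np


def trim_rts(rts, n_sd=3.0):
    """Keep RTs within n_sd standard deviations of the mean.
    Assumption: applied per participant to goal-consistent trials."""
    rts = np.asarray(rts, dtype=float)
    m, sd = rts.mean(), rts.std(ddof=1)
    return rts[np.abs(rts - m) <= n_sd * sd]


def anova_2x2_within(cells):
    """Repeated-measures ANOVA for a 2 x 2 within-subject design.

    cells: (n_subjects, 2, 2) per-subject condition means, indexed
    [subject, control (0=trustworthy, 1=untrustworthy), emotion (0=happy, 1=angry)].
    Returns {effect: (F, (df1, df2))}, with F = t**2 of the per-subject contrast.
    """
    cells = np.asarray(cells, dtype=float)
    n = cells.shape[0]
    contrasts = {
        "control": cells[:, 1, :].mean(axis=1) - cells[:, 0, :].mean(axis=1),
        "emotion": cells[:, :, 1].mean(axis=1) - cells[:, :, 0].mean(axis=1),
        "interaction": (cells[:, 0, 1] - cells[:, 0, 0]) - (cells[:, 1, 1] - cells[:, 1, 0]),
    }
    results = {}
    for name, d in contrasts.items():
        t = d.mean() / (d.std(ddof=1) / np.sqrt(n))
        results[name] = (t ** 2, (1, n - 1))
    return results
```

For the 24 participants of this study, every effect is tested on (1, 23) degrees of freedom, matching the F values reported below; simple effects (e.g. emotion within the trustworthy condition) follow the same logic with the contrast `cells[:, 0, 1] - cells[:, 0, 0]`.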

RESULTS

Behavioral

Participants’ responses helped them to fulfill the goal of the game in 91.15% of the trials. Accuracy differed between the trustworthy (94%; standard error (SE) = 1.02) and the untrustworthy condition (86%; SE = 2.24), F(1,23) = 20.58, P < 0.001. The effect of emotion was also significant, F(1,23) = 10.11, P < 0.004 (angry 88% vs happy 92%), but the interaction between executive attention and emotion was not (F < 1).

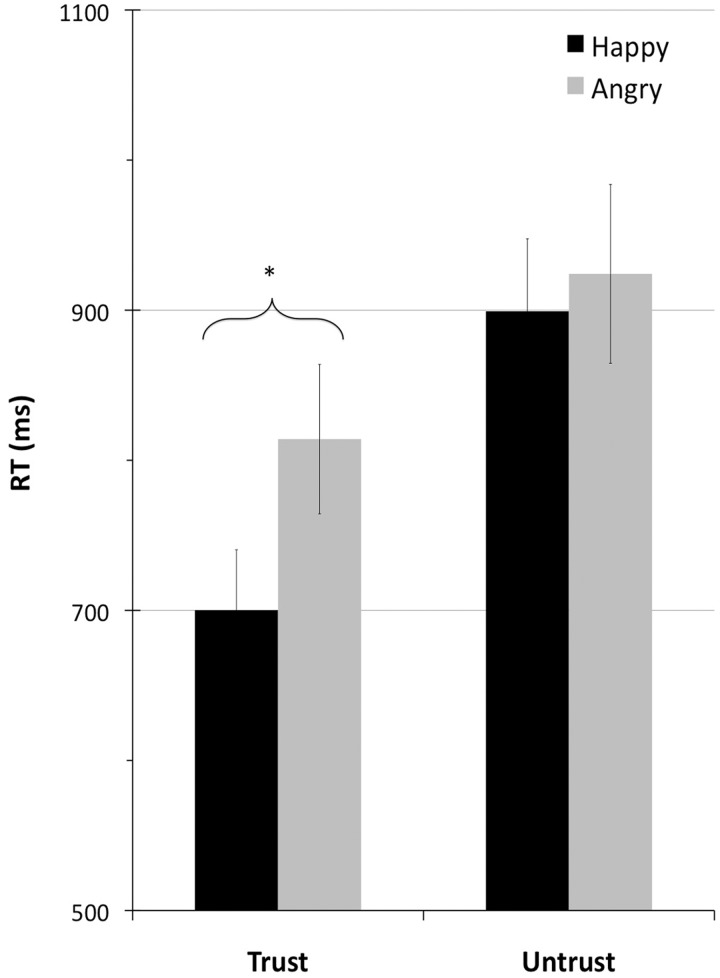

The average RT was 834.4 ms. Responses were faster in the trustworthy (757.1 ms; SE = 46.41) than in the untrustworthy (911.7 ms; SE = 46.0) condition, F(1,23) = 82.9, P < 0.001, and were also faster for happy (799.7 ms; SE = 48.88) than for angry (869.1 ms; SE = 45.86) partners, F(1,23) = 17.43, P < 0.001. The interaction between control and emotion was significant, F(1,23) = 28.6, P < 0.001, as the difference between emotions was significant in the trustworthy condition (120.3 ms), F(1,23) = 51.48, P < 0.001, but not in the untrustworthy condition (see Figure 2).¹

Fig. 2.

Reaction times (RTs) for trustworthy and untrustworthy partners displaying happy and angry emotional expressions.

Electrophysiological

The topographical analyses revealed a sequence of four different maps. The first one, from 80 to 130 ms, had a positive polarity in occipital channels and a negative polarity in frontal ones, and corresponded to the P1 and N1 potentials. The second map, from 130 to 180 ms, contained the N170 and the Vertex Positive Potential (VPP) and showed a reversed polarity. The third topography extended from 180 to 350 ms, encompassed the N2 and N300 potentials, and was again positive at posterior electrode locations and negative at anterior ones. The fourth map (from 350 to 600 ms) displayed the typical centro-medial positivity of the P300.

P1 and N1

Both the posterior P1 and the frontal N1 peaked at around 110 ms. The ANOVAs on average amplitude values from 100 to 120 ms showed a main effect of executive attention on the N1 at anterior electrode locations, F(1,23) = 4.507, P < 0.05, as the amplitude of the frontal N1 was larger for untrustworthy (−2.25 µV; SE = 0.22) than for trustworthy partners (−2.10 µV; SE = 0.22; see Figure 3). This difference was only marginal in centro-medial channels (−0.64 vs −0.48 µV, SE = 0.16), F(1,23) = 3.82, P = 0.063. There were no significant effects on the P1 at posterior channels, F(1,23) = 2.83, P > 0.1.

N2 and N300

The third topographical map contained the peaks of the N2 and N300 potentials, which had their respective maxima at 230 and 310 ms. We performed two separate ANOVAs on the average amplitudes in a 32 ms window centred on each peak (16 ms on each side). From 214 to 246 ms, encompassing the N2, there was an effect of emotion on this potential at centro-medial electrodes, F(1,23) = 9.17, P < 0.01, as it was more negative for happy (−0.29 µV; SE = 0.2) than for angry faces (0.01 µV; SE = 0.22). There was also a marginal effect of emotion at anterior locations, F(1,23) = 3.96, P = 0.058, again with a more negative deflection for happy (−1.96 µV; SE = 0.32) than for angry faces (−1.71 µV; SE = 0.35). All other contrasts during this temporal window were non-significant (all Fs < 1).

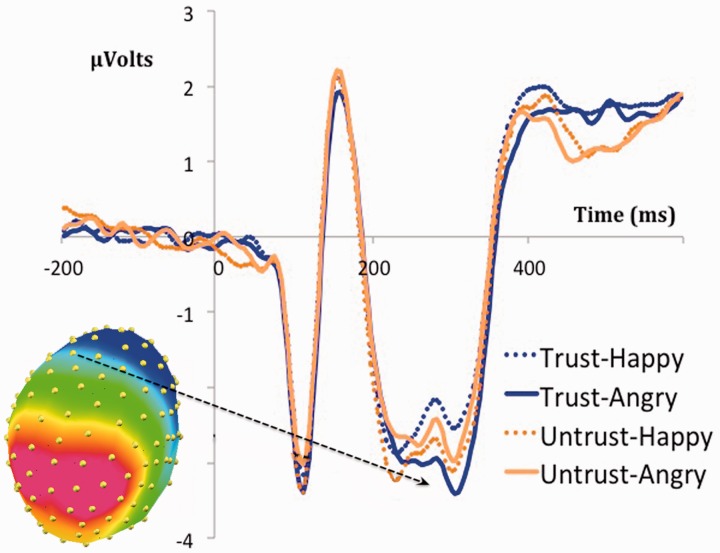

From 294 to 326 ms, encompassing the N300, there was an emotion × executive attention interaction on this potential at centro-medial electrodes, F(1,23) = 5.19, P < 0.05 (Figure 4). This interaction was due to an emotion effect for trustworthy partners, F(1,23) = 8.81, P < 0.01 (angry −1.23 µV, SE = 0.31, vs happy −0.72 µV, SE = 0.30), but not for untrustworthy partners, F < 1. All other contrasts were non-significant (all Fs < 1).

Fig. 4.

Interaction between emotion and cognitive control in the N300 potential.

P300

From 376 to 476 ms, there was an effect of executive attention on the centro-medial P300, F(1,23) = 8.45, P < 0.01 (see Figure 5). The average P300 amplitude was larger for trustworthy (3.57 µV; SE = 0.40) than for untrustworthy partners (3.14 µV; SE = 0.34). All other contrasts were non-significant (all Fs < 1).

Fig. 5.

Main effect of control in the P300 amplitude.

DISCUSSION

This study aimed to explore the temporal dynamics and stages of information processing at which executive attention interacts with emotion in a social context. First, our results show that when the emotions of happiness and anger displayed by partners in an economic game are not tied to their natural consequences, behavioral responses slow down. Second, ERP results indicate that in the present social context, attention mechanisms modulate processing from an early point in time, as reflected by modulations of the N1 potential, and interact with emotion analysis during the N300 time window, before the motor decision and execution stages indexed by the P300.

Our research manipulated the emotion displayed by the partner (see Van Kleef et al., 2010) within a social context (Parkinson, 1996). A few studies in the field of behavioral economic games show that social information can bias decision-making, especially under high levels of contextual uncertainty (Ruz et al., 2011; Gaertig, Moser and Ruz, 2012). These and other studies suggest that the emotions of happiness and anger by default lead to trust (cooperative) and distrust (competitive) behavior in this type of bargaining game (see Van Kleef et al., 2010). In addition, our study introduces the factor of control over the meaning of emotions. Although for trustworthy partners happiness and anger were associated with their default consequences, this pattern was reversed for untrustworthy partners, and thus participants had to adjust their responses accordingly. Admittedly, our use of the term ‘trustworthiness’ does not fully overlap with the construct of trustworthiness as an inherent characteristic that leads people to either trust or distrust one another (e.g. Todorov et al., 2008). In our case, the trustworthiness of partners was an experimental manipulation, instructed to participants, and it was not linked to specific facial features (see Vrtička et al., 2008, who used the terms ‘friend’ and ‘foe’ in a similar manipulation).

The behavioral results replicated previous findings (Ruz and Tudela, 2011). Responses were faster and more accurate to partners whose emotions indicated their default consequences than to untrustworthy partners, which may reflect the need for executive attention in the latter condition (see Egner et al., 2008). In the trustworthy condition, responses to happy partners were faster than responses to angry ones, which could be related to the avoidance tendencies associated with negative emotions (Marsh et al., 2005). This difference, however, disappeared in the untrustworthy condition. The reasons for this interaction are unclear. It could be due to a ceiling effect in the untrustworthy condition, or perhaps to differences in affective evaluation across conditions (see the related discussion of the N300 results).

Electrophysiological results showed that executive attention affected processing from an early point in time. The frontal N1 had a larger amplitude for untrustworthy partners. Such enhancement could be related to an initial amplification of relevant processing generated by top-down factors (Hillyard and Picton, 1987). Untrustworthy partners were associated with higher processing demands (as indicated by the behavioral data), and thus the N1 enhancement could reflect the allocation of more resources in this condition (e.g. Williams et al., 2003). Although the poor spatial resolution of EEG prevents us from drawing strong conclusions regarding the source of such modulations, the involvement of prefrontal regions would be plausible, given that a previous experiment using a very similar paradigm in combination with fMRI showed higher activations in bilateral middle frontal cortices for the untrustworthy condition (Ruz and Tudela, 2011). Thus, the more pronounced N1 could index activation in those or similar areas from an early point in time. The fact that the facial displays of the partners were preceded by cues could help explain the early onset of these effects.

Emotion also modulated the amplitude of the N2 in centro-medial channels irrespective of its contextual meaning (see Kanske and Kotz, 2010). We had predicted that the N2 would have a larger amplitude in the untrustworthy condition, given that this condition entails a conflict between the natural expectations that emotions generate and their meaning in the game. Trustworthiness, however, did not modulate this potential. This null result may be taken as an indication that the untrustworthy condition did not engage control mechanisms. Still, RT results show a marked slowing of responses in this condition. In addition, previous fMRI results (Ruz and Tudela, 2011) show that the anterior cingulate cortex and bilateral frontal cortices were more active in this condition, which suggests that it actually triggered some form of conflict. The lack of an effect on the N2, although puzzling, may be explained by differences between the current paradigm and previous emotional conflict (flanker) tasks. Further research will be needed to replicate these results and explain them in more detail.

As predicted, the N300 potential displayed an interaction between executive attention and emotion. Whereas there was no differentiation between the happy and angry expressions of untrustworthy partners, angry trustworthy partners generated a larger N300 than happy ones (see Schutter et al., 2004). This pattern fits nicely with previous reports relating the N300 to the emotional evaluation of stimuli (Carretié and Iglesias, 1995; Carretié et al., 1997; Rossignol et al., 2005). In this game, only trustworthy partners conveyed emotions that matched their natural effects. Thus, a normal emotional evaluation could only be performed in this condition, as reflected in N300 amplitude. Emotions displayed by untrustworthy partners, on the other hand, could not be integrated with the default evaluation associated with happy and angry expressions, and this could have led to a lack of differences in the affective processing indexed by this potential. Interestingly, the pattern of modulations in the N300 mimics the behavioral RT effects. This may suggest that the increased amplitude/RT for angry compared with happy partners in the trustworthy condition is related to a deeper affective analysis of angry partners in that context (Carretié and Iglesias, 1995), a difference that may be diluted in the untrustworthy condition due to the mismatch between the default and context-dependent meanings. This, however, is only a working hypothesis that needs further testing.

Finally, the P300 was modulated only by the level of executive attention triggered by the partners. The lack of differences between emotions was predicted on the basis of previous results (for a review, see Hajcak et al., 2010). Instead, the smaller P300 for untrustworthy partners may indicate that fewer resources were available in this more demanding condition (Polich, 2007), which agrees with the behavioral data. Differences between conditions in arousal levels could also be affecting the amplitude of this potential (Carretié and Iglesias, 1995).

Our investigation represents an advance in the study of the emotional displays of other people as predictors of their behavior in interpersonal interactions with differential control requirements. Results show a cascade of processing in which executive attention mechanisms operate from early on, as reflected by the frontal N1. Afterwards, emotions are processed differentially depending on their positive vs negative valence but irrespective of their contextual meaning (N2 modulation). At the level of the N300, associated with affective evaluation, emotion and attention factors interact, yielding emotional modulations only for trustworthy partners.

Future studies should explore whether the precedence of executive attention observed in the current experiment would remain in a game in which the trustworthy or untrustworthy nature of the partners was signaled not by a preceding cue but by the identity of each specific partner. Another open question is the extent to which our results depend on the use of faces as partners in a social context, or whether similar findings could be observed with other types of positive and negative, or even neutral, stimuli. Further experiments would be needed to study the potential effects of such stimuli on social decision-making and their neural correlates.

Acknowledgments

The Cartool software (brainmapping.unige.ch/cartool) has been programmed by Denis Brunet, from the Functional Brain Mapping Laboratory, Geneva, Switzerland, and is supported by the Center for Biomedical Imaging (CIBM) of Geneva and Lausanne.

Financial support for this research came from the Spanish Ministry of Science and Innovation through a ‘Ramón y Cajal’ research fellowship and grant PSI2010-16421 to M.R., and from the Andalusian Autonomous Government through grants SEJ2007.63247 and P09.HUM.5422 to P.T.

Footnotes

1 An additional analysis including all trials did not change the pattern of results (all significant p values remained < 0.001).

References

- Brosch T, Sander D, Pourtois G, Scherer KR. Beyond fear: rapid spatial orienting toward positive emotional stimuli. Psychological Science. 2008;19:362–370. doi: 10.1111/j.1467-9280.2008.02094.x.

- Brunet D, Murray MM, Michel CM. Spatiotemporal analysis of multichannel EEG: CARTOOL. Computational Intelligence and Neuroscience. 2011;2011:813870. doi: 10.1155/2011/813870.

- Cacioppo JT, Klein DJ, Berntson GG, Hatfield E. The psychophysiology of emotion. In: Lewis R, Haviland JM, editors. The Handbook of Emotion. New York: Guilford Press; 1993. pp. 119–142.

- Carretié L, Iglesias J. An ERP study on the specificity of facial expression processing. International Journal of Psychophysiology. 1995;19:183–192. doi: 10.1016/0167-8760(95)00004-c.

- Carretié L, Iglesias J, García T, Ballesteros M. N300, P300 and the emotional processing of visual stimuli. Electroencephalography and Clinical Neurophysiology. 1997;103:298–303. doi: 10.1016/s0013-4694(96)96565-7.

- Carroll JM, Russell JA. Do facial expressions signal specific emotions? Judging emotion from the face in context. Journal of Personality and Social Psychology. 1996;70:205–218. doi: 10.1037//0022-3514.70.2.205.

- Darwin C. The Expression of Emotions in Man and Animals. London: John Murray; 1872.

- Eckel CC, Wilson RK. The human face of game theory: trust and reciprocity in sequential games. In: Ostrom E, Walker J, editors. Trust and Reciprocity: Interdisciplinary Lessons from Experimental Research. New York: Russell Sage Foundation; 2003. pp. 245–274.

- Egner T, Etkin A, Gale S, Hirsch J. Dissociable neural systems resolve conflict from emotional versus non-emotional distracters. Cerebral Cortex. 2008;18:1475–1484. doi: 10.1093/cercor/bhm179.

- Folstein JR, Van Petten C. Influence of cognitive control and mismatch on the N2 component of the ERP: a review. Psychophysiology. 2008;45:152–170. doi: 10.1111/j.1469-8986.2007.00602.x.

- Frijda NH. The Emotions. Cambridge, UK: Cambridge University Press; 1986.

- Fridlund AJ. Human Facial Expression: An Evolutionary View. London: Academic Press; 1995.

- Frith CD, Frith U. How we predict what other people are going to do. Brain Research. 2006;1079:36–46. doi: 10.1016/j.brainres.2005.12.126.

- Gaertig C, Moser A, Alguacil S, Ruz M. Social information and economic decision-making in the ultimatum game. Frontiers in Neuroscience. 2012;6:103. doi: 10.3389/fnins.2012.00103.

- Hajcak G, MacNamara A, Olvet DM. Event-related potentials, emotion, and emotion regulation: an integrative review. Developmental Neuropsychology. 2010;35:129–155. doi: 10.1080/87565640903526504.

- Hillyard SA, Picton TW. Electrophysiology of cognition. In: Mountcastle VB, Plum F, Geiger ST, editors. Handbook of Physiology, Sect. 1, Vol. 5, Pt. 2. Bethesda (MD): American Physiological Society; 1987. pp. 519–584.

- Joyce C, Rossion B. The face-sensitive N170 and VPP components manifest the same brain processes: the effect of reference electrode site. Clinical Neurophysiology. 2005;116:2613–2631. doi: 10.1016/j.clinph.2005.07.005.

- Kanske P, Kotz SA. Modulation of early conflict processing: N200 responses to emotional words in a flanker task. Neuropsychologia. 2010;48:3661–3664. doi: 10.1016/j.neuropsychologia.2010.07.021.

- Lehmann D. Principles of spatial analyses. In: Gevins AS, Remond A, editors. Handbook of Electroencephalography and Clinical Neurophysiology: Methods of Analysis of Brain Electrical and Magnetic Signals. Amsterdam: Elsevier; 1987. pp. 309–354.

- Lundqvist D, Flykt A, Öhman A. The Karolinska Directed Emotional Faces (KDEF). CD ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet; 1998. ISBN 91-630-7164-9.

- Luo W, Feng W, He W, Wang NY, Luo YJ. Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage. 2010;49:1857–1867. doi: 10.1016/j.neuroimage.2009.09.018.

- Manstead ASR. Emotion in social life. Cognition & Emotion. 1991;5:353–362.

- Marsh AA, Ambady N, Kleck RE. The effects of fear and anger facial expressions on approach- and avoidance-related behaviors. Emotion. 2005;5:119–124. doi: 10.1037/1528-3542.5.1.119.

- Murray MM, Foxe DM, Javitt DC, Foxe JJ. Setting boundaries: brain dynamics of modal and amodal illusory shape completion in humans. Journal of Neuroscience. 2004;24:6898–6903. doi: 10.1523/JNEUROSCI.1996-04.2004.

- Ochsner KN, Gross JJ. The cognitive control of emotion. Trends in Cognitive Sciences. 2005;9:242–249. doi: 10.1016/j.tics.2005.03.010.

- Ochsner KN, Hughes B, Robertson ER, Cooper JC, Gabrieli JD. Neural systems supporting the control of affective and cognitive conflicts. Journal of Cognitive Neuroscience. 2009;21:1842–1855. doi: 10.1162/jocn.2009.21129.

- Olsson A, Ochsner KN. The role of social cognition in emotion. Trends in Cognitive Sciences. 2008;12:65–71. doi: 10.1016/j.tics.2007.11.010.

- Parkinson B. Emotions are social. British Journal of Psychology. 1996;87:663–683. doi: 10.1111/j.2044-8295.1996.tb02615.x.

- Pascual-Marqui RD, Michel CM, Lehmann D. Segmentation of brain electrical activity into microstates: model estimation and validation. IEEE Transactions on Biomedical Engineering. 1995;42:658–665. doi: 10.1109/10.391164.

- Perrin F, Pernier J, Bertrand O, Echallier JF. Spherical splines for scalp potential and current density mapping. Electroencephalography and Clinical Neurophysiology. 1989;72:184–187. doi: 10.1016/0013-4694(89)90180-6.

- Pessoa L. How do emotion and motivation direct executive control? Trends in Cognitive Sciences. 2009;13:160–166. doi: 10.1016/j.tics.2009.01.006.

- Polich J. Updating P300: an integrative theory of P3a and P3b. Clinical Neurophysiology. 2007;118:2128–2148. doi: 10.1016/j.clinph.2007.04.019.

- Posner MI, Rothbart MK. Attention, self-regulation and consciousness. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 1998;353:1915–1927. doi: 10.1098/rstb.1998.0344.

- Pourtois G, Dan ES, Grandjean D, Sander D, Vuilleumier P. Enhanced extrastriate visual response to band-pass spatial frequency filtered fearful faces: time-course and topographic evoked-potentials mapping. Human Brain Mapping. 2005;26:65–79. doi: 10.1002/hbm.20130.

- Pourtois G, Grandjean D, Sander D, Vuilleumier P. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cerebral Cortex. 2004;14:619–633. doi: 10.1093/cercor/bhh023.

- Righart R, de Gelder B. Context influences early perceptual analysis of faces: an electrophysiological study. Cerebral Cortex. 2006;16:1249–1257. doi: 10.1093/cercor/bhj066.

- Rossignol M, Philippot P, Douilliez C, Crommelinck M, Campanella S. The perception of fearful and happy facial expression is modulated by anxiety: an event-related potential study. Neuroscience Letters. 2005;377:115–120. doi: 10.1016/j.neulet.2004.11.091.

- Ruz M, Moser A, Webster K. Social expectations bias decision-making in uncertain inter-personal situations. PLoS One. 2011;6:e15762. doi: 10.1371/journal.pone.0015762.

- Ruz M, Tudela P. Emotional conflict in interpersonal interactions. Neuroimage. 2011;54:1685–1691. doi: 10.1016/j.neuroimage.2010.08.039.

- Scharlemann JPW, Eckel CC, Kacelnik A, Wilson RK. The value of a smile: game theory with a human face. Journal of Economic Psychology. 2001;22:617–640.

- Schneider W, Eschman A, Zuccolotto A. E-Prime User's Guide. Pittsburgh, PA: Psychology Software Tools, Inc; 2002.

- Schupp HT, Junghöfer M, Weike AI, Hamm AO. The selective processing of briefly presented affective pictures: an ERP analysis. Psychophysiology. 2004;41:441–449. doi: 10.1111/j.1469-8986.2004.00174.x.

- Schutter DJ, de Haan EH, van Honk J. Functionally dissociated aspects in anterior and posterior electrocortical processing of facial threat. International Journal of Psychophysiology. 2004;53:29–36. doi: 10.1016/j.ijpsycho.2004.01.003.

- Todorov A, Baron SG, Oosterhof NN. Evaluating face trustworthiness: a model based approach. Social Cognitive and Affective Neuroscience. 2008;3:119–127. doi: 10.1093/scan/nsn009.

- Van Kleef GA, De Dreu CKW, Manstead ASR. An interpersonal approach to emotions in social decision-making: the emotions as social information model. In: Zanna MP, editor. Advances in Experimental Social Psychology. Vol. 42. Burlington: Academic Press; 2010. pp. 45–96.

- Vrtička P, Andersson F, Grandjean D, Sander D, Vuilleumier P. Individual attachment style modulates human amygdala and striatum activation during social appraisal. PLoS ONE. 2008;3:1–11. doi: 10.1371/journal.pone.0002868.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nature Neuroscience. 2003;6:624–631. doi: 10.1038/nn1057.

- Vuilleumier P, Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia. 2007;45:174–194. doi: 10.1016/j.neuropsychologia.2006.06.003.

- Wager TD, Davidson ML, Hughes BL, Lindquist MA, Ochsner KN. Prefrontal-subcortical pathways mediating successful emotion regulation. Neuron. 2008;59:1037–1050. doi: 10.1016/j.neuron.2008.09.006.

- Whalen PJ, Bush G, McNally RJ, Wilhelm S, McInerney SC, Jenike MA, Rauch SL. The emotional counting Stroop paradigm: a functional magnetic resonance imaging probe of the anterior cingulate affective division. Biological Psychiatry. 1998;44:1219–1228. doi: 10.1016/s0006-3223(98)00251-0.

- Williams LL, Bahramali H, Hemsley DR, Harris AW, Brown K, Gordon E. Electrodermal responsivity distinguishes ERP activity and symptom profile in schizophrenia. Schizophrenia Research. 2003;59:115–125. doi: 10.1016/s0920-9964(01)00368-1.

- Zaki J, Hennigan K, Weber J, Ochsner KN. Social cognitive conflict resolution: contributions of domain-general and domain-specific neural systems. Journal of Neuroscience. 2010;30:8481–8488. doi: 10.1523/JNEUROSCI.0382-10.2010.