Abstract

Mixed reality environments for medical applications have been explored and developed over the past three decades in an effort to enhance the clinician’s view of anatomy and facilitate the performance of minimally invasive procedures. These environments must faithfully represent the real surgical field and require seamless integration of pre- and intra-operative imaging, surgical instrument tracking, and display technology into a common framework centered around and registered to the patient. However, in spite of their reported benefits, few mixed reality environments have been successfully translated into clinical use. Several challenges that contribute to the difficulty in integrating such environments into clinical practice are presented here and discussed in terms of both technical and clinical limitations. This article should raise awareness among both developers and end-users toward facilitating a greater application of such environments in the surgical practice of the future.

Keywords: Minimally invasive surgery and therapy, Image-guided interventions, Virtual, augmented and mixed reality environments, Clinical translation

1. Introduction

1.1. Virtual, augmented and mixed reality

Multi-modality data visualization has become a focus of research in medicine and surgical technology, toward improved diagnosis, surgical training, planning, and interventional guidance. Various approaches have been explored to alleviate the clinical issues of incomplete visualization of the entire surgical field during minimally invasive procedures by complementing the clinician’s visual field with necessary information that facilitates task performance. This technique is known as augmented reality (AR) and it was first defined by Milgram et al. [1], as a technique of “augmenting natural feedback to the operator with simulated cues”. This approach allows the integration of supplemental information with the real-world environment.

Augmented environments represent the “more real” subset of mixed reality environments. The latter spans the spectrum of the reality-virtuality continuum (Fig. 1) and integrates information ranging from purely real (i.e. directly observed objects) to purely virtual (i.e. computer graphic representations). The spatial and temporal relationship between the real and virtual components and the real world distinguishes AR environments from virtual reality (VR) environments. A common interpretation of a VR environment is one in which the operator is immersed into a synthetic world consisting of virtual representations of the real world that may or may not represent the properties of the real-world environment [2]. Moreover, both AR and VR environments belong to the larger class identified as mixed reality environments. Mixed realities may include either primarily real information complemented with computer-generated data, or mainly synthetic data augmented with real elements [2,3]. While the former case constitutes a typical AR environment, the latter extends beyond AR into augmented virtuality (AV) [4–6].

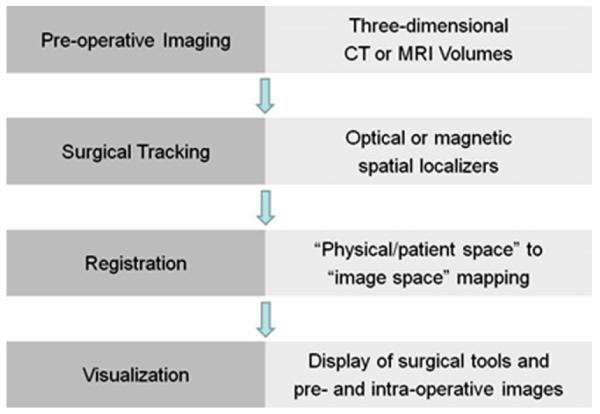

Fig. 1.

Components of a mixed reality image guidance process for surgical interventions: pre-operative imaging, surgical instrument localization, data integration, and lastly visualization, information display and surgical navigation.

The first attempt toward augmented reality occurred weeks after Roentgen announced his discovery of X-rays in 1896, when several inventors announced a fluoroscopic device under different names: the “Cryptoscope” by Enrico Salvioni, the “Skiascope” by William Francis Magie, and the “Vitascope” by Thomas Edison. These devices were all described as a “small darkroom adapted to the operator’s eyes and fitted with a fluorescent screen” [7]. This device was later referred to as the “Fluoroscope” in the English speaking countries [7], while in the French literature it was called the “Bonnette Radioscopique” [8].

The “more modern” forms of augmented and virtual realities have their beginnings in the 1980s with the development of LCD-based head-mounted displays; the first VR system known as VIVED (virtual visualization environmental display) [9] was developed in 1982; the VIEW (virtual interface environment workstation) system [10] was launched in the late 1980s; and one of the pioneer augmented virtuality (AV) systems was introduced in the context of surgery by Paul et al. [11] in the early 2000s.

1.2. Augmented environments in image-guided therapy

The advent of VR and AR environments in the medical world was driven by the need to enhance or enable therapy delivery under limited visualization and restricted access conditions. Computers have become an integral part of medicine: patient records are stored electronically, computer software enables the acquisition, visualization and analysis of medical images, and computer-generated environments enable clinicians to perform procedures that presented difficulties decades ago via new minimally invasive approaches [12,13]. Technological developments and advances in medical therapies have led to the use of less invasive treatment approaches for conditions that require surgery and involve patient trauma and complications.

Medical mixed realities have their beginnings in the 1980s [14,15]. Augmented reality surgical guidance began in neurosurgery in the 1980s with systems incorporated into the operating microscope [16–18]. The first simulated surgery for tendon transplants was published in 1989 and an abdominal surgery simulator was reported in 1991 [19]. Graphical representations of realistic images of the human torso, accompanied by deformable models, and later complemented by more realistic simulations of a variety of medical procedures using the Visible Human Dataset from the National Library of Medicine in 1994 were published in [20,21]. Virtual endoscopy had its beginnings in the mid-1990s and experienced simultaneous developments from several groups; by the late-1990s a wide variety of imaging, advanced visualization, and mixed reality systems were developed and employed in medicine [22]. The MAGI system described the technical stages required to provide AR guidance in the neurosurgical microscope and was one of the first to undergo significant clinical evaluation [23,24]. Today, VR and AR medical environments are employed for diagnosis and treatment planning [25], surgical training [26–30], pre- and intra-operative data visualization [31–34], and for intra-operative navigation [12,35–38].

2. Components and infrastructure

In spite of the wealth of information available due to the advances in medical technology, the extent of diagnostic data readily available to the clinician during therapy is still limited, emphasizing the need for interventional guidance platforms that enable the integration of pre- and intra-operative imaging and surgical navigation into a common environment (Fig. 1).

2.1. Imaging

Minimally invasive interventions benefit from enhanced visualization provided via medical imaging, enabling clinicians to “see” inside the body given the restricted surgical and visual access. Pre-operative images are necessary to understand the patient’s anatomy, identify a suitable treatment approach, and prepare a surgical plan. These data are often in the form of high-quality images that provide sufficient contrast between normal and abnormal tissues, along with a representation of the patient that is sufficiently faithful for accurate image guidance [39]. The most common imaging modalities used pre-operatively include computed tomography (CT) and magnetic resonance imaging (MRI).

Since the surgical field cannot be observed directly, intra-operative imaging is critical for visualization. To provide accurate guidance, the technology must operate in nearly real time with minimal latency (i.e., without interfering with the normal interventional workflow) and be compatible with standard operating room (OR) equipment; these requirements are, however, often met at the expense of spatial resolution or image fidelity. Common real-time intra-operative imaging modalities include ultrasound (US) imaging, X-ray fluoroscopy, and more recently, cone-beam CT and intra-operative MRI.

2.1.1. Computed tomography

Computed tomography produces 3D image volumes of tissue electron densities based on the attenuation of X-rays [40]. Conventional multi-detector CT imaging systems are rapidly competing with the latest generation of 320-slice scanners, including the Aquilion™ system from Toshiba [41] and the Brilliance™ iCT system from Philips [42], which can acquire an entire image of the torso in just a few seconds, as well as allowing “cine” imaging and dynamic visualization of the cardiac anatomy.

CT has been employed for both diagnostic imaging [43] and surgical planning [44]. However, classical CT scanners present limited intra-operative use. If used for direct guidance, physicians would need to reach with their hands inside the scanner [45]. This option is not feasible considering the increased radiation dose during prolonged procedures. In addition, since dynamic CT images are acquired in single axial slices, it is difficult to track a catheter or guide-wire that is advanced in the axial direction, as its tip is only visible in the image for a short time. Consequently, CT is more suitable for procedures where the tools are remotely manipulated and in the axial plane [46].

2.1.2. Magnetic resonance imaging

MR images are computed based on the changes in frequency and phase of the precessing hydrogen atoms in the water molecules present in soft tissue in response to a series of magnetic fields [47]. Magnetic resonance imaging (MRI) provides excellent soft tissue characterization, allowing a clear definition of the anatomical features of interest. In interventional radiology, where catheters and probes are navigated through the vasculature, MRI presents several advantages over the traditional X-ray fluoroscopy guidance. Not only does it provide superior visualization of the vasculature, catheter, and target organ, often without the need of contrast enhancement, but it spares both the patient and clinical staff from prolonged radiation exposure.

Several designs of intra-operative MR systems have been developed to date, including the General Electric Signa-SP “Double Doughnut” [48–50], the Siemens Medical Systems Magnetom Open interventional magnet [51], the Philips Panorama™ system [52], and the Medtronic Odin PoleStar™ N-20 system [53], which has been integrated with the StealthStation™ TREON navigation platform. Other MRI guidance systems employ modified versions of clinical MR scanners featuring shorter bore, larger diameter magnets that enable the physician to reach inside the magnet and manipulate instruments [54,55]: the 1.5 T Gyroscan ACS-NT15 [54] (Philips Medical Systems, Best, the Netherlands) and the 1.5 T iMotion [55] (Magnex, Abingdon, UK) system.

Intra-operative MRI suites are typically implemented as MR-compatible multi-room facilities. When not used for guidance, the MR scanner is used for routine diagnosis; for interventions, either the patient is moved to the adjacent room for imaging or the magnet is wheeled on overhead rails into the actual OR [56]. This enables integration of other devices, such as fluoroscopy, outside the field of the MRI scanner, a setup which has been adapted in the construction of XMR (combined X-ray and MR imaging) suites [57] for cardiac interventions. The main advantage of such suites is the acquisition and visualization of intrinsically registered morphological, functional, and guidance images. However, while their proponents are enthusiastic and supportive of their future use, their intra-operative adoption has, nevertheless, been slow. Intra-operative MR imaging systems have now been evaluated for at least a decade and there is still significant debate with regard to the costs associated with their use and the limitations they impose on the OR workflow.

2.1.3. Ultrasound

Ultrasound imaging transducers emit sonic pulses which propagate through tissue and reflect energy back when encountering tissue interfaces [58]. The reconstructed sonic images provide effective visualization of the morphology, as well as tissue stiffness, and blood flow. Ultrasound has been employed in interventional guidance since the early-1990s [59], initially for neurosurgical procedures. US systems are inexpensive, mobile, and compatible with the OR equipment and can acquire real-time images at various user-controlled positions and orientations, with a spatial resolution ranging from 0.2 to 2 mm.

Transducers are available in different forms. The most common probes consist of a linear array of elements, referred to as 2D transducers, as their field of view is a single image slice. Over the past decade, 3D transducers have become available; these probes consist of a multi-row array of elements or single array of elements equipped with electronic beam steering. Moreover, images can be acquired either from the body surface [60–62] or by inserting the transducer inside the body (trans-esophageal [63], trans-rectal [64] or intracardiac [65] probes), for close access to the anatomy being imaged.

In response to the slow progress of US for intra-operative use, common techniques to enhance anatomical visualization have included the generation of volumetric datasets from 2D US image series [66,67], optical or magnetic (also referred to by others as electromagnetic) tracking of the US probe to reconstruct 3D images [68–70], fusion of US and pre-operative CT or MRI images or models [71–74,63], and fusion of real-time US images with direct human vision [75].

2.1.4. X-ray fluoroscopy

Radiograph images were the first medical images employed for guidance. Despite concerns regarding radiation exposure, X-ray fluoroscopy is the standard imaging modality in interventional radiology. The X-ray tube and detector are mounted on a curved arm (C-arm) facing each other, with the patient table in-between [45], rapidly collecting 2D projection images through the body [76] at rates of over 30 Hz. Some C-arm systems are portable, allowing them to be rolled in and out of different operating rooms as needed [77]. Fluoroscopic images show clear contrast between different materials, such as a catheter and tissue or bone and soft tissue organs, and different tissue densities, such as heart and lungs. However, many soft tissues cannot be differentiated without the use of a contrast agent. Since fluoroscopic images are 2D projections through 3D anatomy, they appear as a series of “overlapping shadows”.
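The “overlapping shadows” appearance of projection images can be illustrated with a minimal sketch based on the Beer-Lambert attenuation law, in which each detector pixel records only the line integral of attenuation along its ray. The attenuation values, step length, and function name below are illustrative assumptions, not measured data or part of any system described here.

```python
import math

def project_ray(mu_values, step_cm, i0=1.0):
    """Transmitted intensity along one ray (Beer-Lambert law).

    mu_values: linear attenuation coefficients (1/cm) sampled along the ray.
    step_cm:   path length represented by each sample.
    i0:        incident intensity (arbitrary units).
    """
    line_integral = sum(mu * step_cm for mu in mu_values)
    return i0 * math.exp(-line_integral)

# Illustrative attenuation coefficients (assumed values).
SOFT, BONE = 0.2, 0.5

# Two rays crossing the same tissues, but at different depths along the ray.
ray_a = [SOFT, SOFT, BONE, SOFT]
ray_b = [BONE, SOFT, SOFT, SOFT]

intensity_a = project_ray(ray_a, step_cm=1.0)
intensity_b = project_ray(ray_b, step_cm=1.0)
```

Both rays yield the same detector value, since the ordering of structures along the ray does not affect the line integral; depth information is therefore not recoverable from a single projection.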

Several extensions of X-ray and fluoroscopy imaging such as CT-fluoroscopy [78–80] and cone-beam CT (CBCT) systems [81–83] have been employed for intra-operative guidance. CBCT has evolved from C-arm based angiography and can acquire high-resolution 3D images of organs in a single gantry rotation [84–87].

2.2. Modeling

The acquired image datasets can be quite large, making them challenging to manipulate in real time. A common approach is to extract the information of interest and generate models that can be used to plan the procedures and provide interactive visualization during guidance. Anatomical models are commonly employed in image-guided interventions (IGI) and they consist primarily of a surface extraction of the organ of interest, generated using image segmentation.

Although manual segmentation has been accepted as the gold-standard approach for segmentation, given the superior soft tissue contrast available in the MR and CT images, semi- or fully automatic techniques have been employed, as part of available packages, such as ITK-SNAP (http://www.itksnap.org/) or Analyze™ (Biomedical Imaging Resource, Mayo Clinic, Rochester, USA) [88]. In general, although progress has been made in automated segmentation, the methods are not perfect and some manual editing is still required. This process can be laborious and segmentation is one of the potential barriers to adoption of mixed reality guidance systems. Moreover, several model-based techniques, including classical deformable models [89,90], level sets [91], active shape and appearance models [92,93], and atlas-based approaches [94,95] have also been explored. A large and continuously growing body of literature is dedicated to image segmentation, ranging from low-level techniques such as thresholding, region growing, clustering methods, and morphology-based segmentation to more complex, model-based techniques. A review of these methods can be found in Ref. [96].

Surface extraction [97,98] is a trade-off between the fidelity of the surface and the amount of polygonal data used to represent the organ model: the denser the surface mesh, the better the surface features are preserved, but at the expense of manipulation times. These limitations are addressed via decimation – a process that identifies surface regions with low curvature and replaces several small polygons with a larger one [99]. This post-processing step reduces the amount of polygonal data, while preserving the desired surface features.

Recently, thanks to the advances in computing technology and the power of graphics processing units (GPU), 3D and 4D models of the anatomy can be rapidly generated using volume rendering of pre-operative MRI and CT datasets [100]. These techniques allow the user to appreciate the full multi-dimensional attributes of the pre-operative images, while maintaining all the original data, rather than discarding most of them during surface extraction using segmentation methods.

2.3. Surgical instrument localization

Most interventions require precise knowledge of the position and orientation of the surgical instrument with respect to the treatment target at all times during the procedure. Hence, the localization system is an essential component of any image-guided intervention platform. These devices define a coordinate system within the operating room in which surgical tools and the patient can be tracked.
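As a minimal sketch of how a localization system relates instruments to the treatment target, the example below chains homogeneous transforms to express a tracked tool tip in image coordinates. All matrices, the tip offset, and the frame names (`tracker_from_tool`, `image_from_tracker`) are hypothetical values chosen for illustration; in practice they would come from the tracking device, a tool calibration, and the patient registration.

```python
def mat_mul(a, b):
    """Multiply two 4x4 homogeneous matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def apply_transform(t, p):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))

def translation(tx, ty, tz):
    """Pure translation as a 4x4 homogeneous matrix."""
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def rotation_z_90():
    """Rotation of +90 degrees about the z axis."""
    return [[0.0, -1.0, 0.0, 0.0],
            [1.0, 0.0, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]

# Pose of the tool sensor as reported by the tracker (tracker <- tool).
tracker_from_tool = mat_mul(translation(100.0, 50.0, 20.0), rotation_z_90())
# Patient registration result (image <- tracker), assumed known here.
image_from_tracker = translation(-10.0, 0.0, 5.0)
# Tip position in the tool's own frame (assumed calibration result, in mm).
tip_in_tool = (0.0, 150.0, 0.0)

# Compose the chain once, then map the tip into image coordinates.
image_from_tool = mat_mul(image_from_tracker, tracker_from_tool)
tip_in_image = apply_transform(image_from_tool, tip_in_tool)
```

Naming each matrix as `target_from_source` makes the composition order easy to check by reading adjacent frame names.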

The tracking technologies most frequently employed in IGI use either optical [101] or magnetic [102–104] approaches (Fig. 2). Common optical tracking systems (OTS) include the Micron Tracker (Claron Technologies, Toronto, Canada), the Optotrak 3020 (Northern Digital Inc, Waterloo, Canada) [105,106], the Flashpoint 5000 (Boulder Innovation Group Inc., Boulder, CO, USA) [107–109], and the Polaris (Northern Digital Inc.) [110,111]. Despite their tracking accuracy on the order of 0.5 mm [101], they require an unobstructed line-of-sight between the transmitting device and the sensors mounted on the instrument, which prevents their use for within-body applications. As such, their application is limited to procedures performed outside the body, or interventions where the rigid delivery instruments extend outside the body. An exception is the use of vision-based tracking in endoscopic procedures, where the video view through the endoscope is used to provide camera tracking.

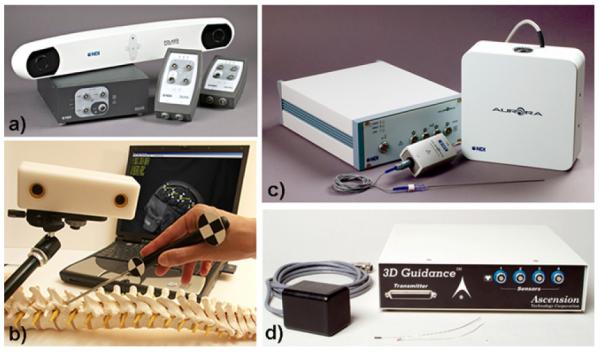

Fig. 2.

Commonly employed optical tracking systems (a) Polaris Spectra™ from NDI and (b) Micron Tracker™ from Claron Technologies (Toronto, Canada), and magnetic tracking systems: (c) Aurora™ from NDI and (d) 3D Guidance™ from Ascension.

Magnetic tracking systems (MTS), on the other hand, do not suffer from such limitations and allow the tracking of flexible instruments inside the body, such as a catheter tip [112], endoscope [103,113] or TEE transducer [114]. However, their performance may be limited by the presence of ferro-magnetic materials in the vicinity of the field generator, or inadequate placement of the “surgical field” within the tracking volume of the MTS. Three magnetic tracking systems typically used in IGI include the NDI Aurora™ (Northern Digital Inc., Waterloo, Canada), the 3D Guidance system from Ascension (Burlington, VT, USA), and the transponder-based system developed by Calypso Medical Systems (Seattle, WA, USA).

2.4. Environment integration: patient registration and data assembly

The development of surgical guidance platforms entails the spatial and temporal integration of various components, including images acquired before and during the procedure, representations of surgical instruments and targets, and the patient. The combined data are then displayed visually to the interventionalist within the mixed reality environment to access the target to be treated. The data displayed within the navigation framework must provide an accurate representation of the surgical field, which cannot be directly visualized by the clinician given the minimally invasive approach.

Image registration is an enabling technology for the development of surgical navigation environments. It plays a vital role in aligning images, features, and/or models with each other, as well as establishing the relationship between the virtual environment (pre- and intra-operative images and surgical tool representations), and the physical patient [115]. Registration is the process of aligning images such that corresponding features can be easily analyzed [116]. However, over time, the term has expanded to include the alignment of images, features or models with other image, features or models, as well as with corresponding features in the physical space of the patient, as defined by the tracking devices, i.e. patient registration.

Registration techniques vary from fully manual to completely automatic. A common manual approach is rigid-body landmark-based registration, which consists of the selection of homologous landmarks in multiple datasets [117]. While this approach may be suitable for applications involving rigid anatomy, such as orthopedic interventions, homologous rigid landmarks are very difficult to identify in soft tissue images.
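Once homologous landmarks have been selected, the rigid transform that best aligns them in the least-squares sense has a closed-form solution. The sketch below uses the well-known SVD-based solution with NumPy; the text does not specify a particular solver, so this choice, the function names, and the landmark coordinates are assumptions made for illustration.

```python
import numpy as np

def rigid_landmark_registration(moving, fixed):
    """Least-squares rigid transform (R, t) mapping moving -> fixed.

    moving, fixed: (N, 3) arrays of homologous landmarks, with N >= 3
    non-collinear points. Uses the SVD-based closed-form solution.
    """
    moving = np.asarray(moving, dtype=float)
    fixed = np.asarray(fixed, dtype=float)
    mc, fc = moving.mean(axis=0), fixed.mean(axis=0)
    h = (moving - mc).T @ (fixed - fc)           # 3x3 cross-covariance
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))       # guard against reflections
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = fc - r @ mc
    return r, t

def fiducial_registration_error(moving, fixed, r, t):
    """Root-mean-square distance between mapped and fixed landmarks."""
    moving = np.asarray(moving, dtype=float)
    fixed = np.asarray(fixed, dtype=float)
    mapped = (r @ moving.T).T + t
    return float(np.sqrt(np.mean(np.sum((mapped - fixed) ** 2, axis=1))))

# Hypothetical landmarks in image space and their positions in patient space.
image_pts = np.array([[0, 0, 0], [10, 0, 0], [0, 10, 0], [0, 0, 10]], float)
true_r = np.array([[0, -1, 0], [1, 0, 0], [0, 0, 1]], float)
true_t = np.array([5.0, -2.0, 1.0])
patient_pts = (true_r @ image_pts.T).T + true_t

r, t = rigid_landmark_registration(image_pts, patient_pts)
fre = fiducial_registration_error(image_pts, patient_pts, r, t)
```

With noise-free landmarks the residual error is numerically zero. With real, manually localized landmarks it is not, and a small residual at the landmarks does not by itself guarantee accuracy at the surgical target.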

Automatic registration methods require little or no input from the user and are generally classified into intensity- and geometry-based techniques. Intensity-based methods determine the optimal alignment using different similarity relationships [118,119] among voxels of the multiple image datasets. Geometry-based techniques provide a registration using homologous features or surfaces defined in multiple datasets. One of the first algorithms was the “head and hat” introduced by Pelizzari et al. [120] and served as precursor of what is now commonly known as the iterative closest point (ICP) method introduced independently under its current name by Besl and McKay [121]. These registration algorithms provide sufficient accuracy in the region of interest, involve structures (surfaces or point clouds) that can be generated with limited “manuality”, and can be performed sufficiently quickly during a typical surgical procedure. Care must be taken when performing surface-based registration, since regions that have translational or rotational symmetry are not well constrained. Ideally regions of higher curvature should be used, but here the solution can have local minima. Generally, surface registration can be accurate provided a good starting estimate is chosen.
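To illustrate how an intensity-based method scores candidate alignments, the sketch below evaluates one common similarity measure, normalized cross-correlation (NCC), over a set of integer shifts of a 1D intensity profile. The choice of NCC, the exhaustive search, and the toy profiles are assumptions for illustration; practical systems operate on 2D or 3D images and use an optimizer over more general transforms.

```python
import math

def ncc(a, b):
    """Normalized cross-correlation of two equal-length intensity lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) *
                    sum((y - mb) ** 2 for y in b))
    return num / den if den else 0.0

def best_shift(fixed, moving, max_shift):
    """Exhaustively search integer shifts; return (shift, score) maximizing NCC.

    A shift s pairs fixed[i] with moving[i - s] over the overlapping samples.
    """
    best = (None, -2.0)
    for s in range(-max_shift, max_shift + 1):
        lo, hi = max(0, s), min(len(fixed), len(moving) + s)
        score = ncc(fixed[lo:hi], [moving[i - s] for i in range(lo, hi)])
        if score > best[1]:
            best = (s, score)
    return best

# Toy profiles: "moving" is "fixed" displaced by 3 samples, with a different
# brightness and contrast (intensity scaled by 2 and offset by 10).
fixed = [0, 0, 0, 0, 0, 1, 4, 9, 4, 1, 0, 0, 0, 0, 0, 0]
moving = [2 * v + 10 for v in fixed[3:]] + [10, 10, 10]

shift, score = best_shift(fixed, moving, max_shift=5)
```

Because NCC is invariant to linear intensity changes, the correct shift is recovered even though the two profiles differ in brightness and contrast. Images from different modalities generally require other measures, such as mutual information.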

While conventional IGI does not provide direct feedback of changes in the inter-relationship between anatomical structures (i.e. organ deformation), such data could be provided by intra-operative sensing or imaging, used to update the pre-operative plan; such correspondence between pre- and intra-operative images or models of soft tissue structures is ideally established via non-rigid registration. Although such techniques are required for almost any soft tissue intervention using imaging for guidance, there have so far been only a few reports of image guidance systems that employ non-rigid registration. Nevertheless, it has been suggested that such technology would have a major impact on some of the most life-threatening conditions that require intervention [122]. Several examples of procedures that incorporate non-rigid registration include the use of statistical atlases for neurosurgical interventions [123]; deformable atlases of bony anatomy for spine interventions [124,125]; motion models for image-guided radio-therapy of the lung [126] and liver [127]; and interventional cardiac applications [128,92,129].

Despite the wealth of available registration algorithms, some approaches may not be suitable for use in time-critical interventional applications in the OR. Instead, fast and OR-friendly registration techniques are employed. Similar observations were raised by Yaniv et al. [118] in establishing criteria for evaluation of registration approaches in interventional guidance, which include metrics such as performance time, alignment accuracy, robustness, user interaction, and reliability.

A thorough description of image registration for interventional guidance is provided in chapters 6 and 7 of the book by Peters and Cleary [39], and additional references are available in Hajnal et al. [130].

2.5. Multi-modality data visualization and display

Visualization is an important component of any surgical guidance platform. Whatever the adopted approach may be, it must present the surgical scene in a 3D fashion and provide a high fidelity representation of the surgical field. For most minimally invasive interventions, this information is not accessible via direct sight. The advent of multi-modality 3D and 4D medical imaging has fueled the development of high-performance computing for effective manipulation and visualization of large image datasets in real time. Many computational visualization methods are available for clinical application, including both 2D and 3D techniques, along with specific display types.

2.5.1. Two-dimensional visualization

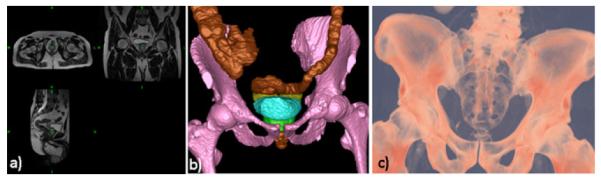

The most common 2D visualization methods include orthogonal and oblique planar views. Orthogonal multi-planar image visualization enables the exploration of 3D volumetric datasets along the non-acquired orthogonal orientations of the volume (i.e., the exploration of a CT dataset acquired transaxially along the coronal or sagittal directions). Multi-planar image displays usually consist of multi-panel displays: one panel typically shows the full 3D volumetric dataset via either intersecting orthogonal sections or a “cube of data” with interactive slider control, while the other panels display the 2D data along the three standard anatomical orientations (axial, coronal and sagittal) at the location of interest (Fig. 3).

Fig. 3.

Examples of common rendering paradigms: (a) orthogonal cuts; (b) segmented surface rendering; (c) volume rendering.
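The orthogonal multi-planar views described above amount to re-indexing the acquired volume along its three axes. The following minimal sketch assumes a volume stored as nested lists indexed `volume[z][y][x]`, with z as the transaxial acquisition direction; this indexing convention and the function names are illustrative assumptions.

```python
def make_volume(nz, ny, nx):
    """Toy volume indexed as volume[z][y][x]; each voxel encodes its index."""
    return [[[100 * z + 10 * y + x for x in range(nx)]
             for y in range(ny)] for z in range(nz)]

def axial(volume, z):
    """Acquired transaxial slice: fixed z, rows along y, columns along x."""
    return [row[:] for row in volume[z]]

def coronal(volume, y):
    """Reformatted slice: fixed y, rows along z, columns along x."""
    return [plane[y][:] for plane in volume]

def sagittal(volume, x):
    """Reformatted slice: fixed x, rows along z, columns along y."""
    return [[row[x] for row in plane] for plane in volume]

vol = make_volume(nz=3, ny=4, nx=5)
ax = axial(vol, 1)      # 4 x 5 slice
co = coronal(vol, 2)    # 3 x 5 slice
sa = sagittal(vol, 4)   # 3 x 4 slice
```

In clinical data the slice spacing usually differs from the in-plane pixel spacing, so reformatted coronal and sagittal views generally need rescaling along z before display; that step is omitted here.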

If the desired 2D image is at a particular orientation through the anatomy that is not parallel to one of the three standard planes, an oblique section is necessary. The specification and identification of such oblique sections is performed via well-defined landmarks, selected based on several orthogonal images from the volume dataset. For example, the selection of three landmarks in the image volume will identify a unique oblique plane through the dataset, which may need to be rotated within the volume to achieve the proper orientation. Similarly, two landmarks define an axis which can in turn serve as a normal along which orthogonal planes can be generated. Alternatively, a tracked, navigated probe can be used to define a plane through the dataset.
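The geometry behind landmark-defined oblique sections can be sketched as follows: three non-collinear landmarks fix a plane through its normal (a cross product), and two landmarks fix an axis that can serve as the normal of a stack of parallel planes. The helper names and coordinates are hypothetical.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def unit(v):
    """Scale a vector to unit length; reject degenerate input."""
    length = math.sqrt(dot(v, v))
    if length == 0.0:
        raise ValueError("degenerate landmarks: zero-length vector")
    return tuple(x / length for x in v)

def plane_from_three_landmarks(p0, p1, p2):
    """Return (unit normal, origin) of the plane through three landmarks."""
    return unit(cross(sub(p1, p0), sub(p2, p0))), p0

def axis_from_two_landmarks(p0, p1):
    """Unit vector from p0 to p1, usable as the normal of parallel planes."""
    return unit(sub(p1, p0))

def signed_distance(point, normal, origin):
    """Signed distance of a point from the plane (zero if on the plane)."""
    return dot(sub(point, origin), normal)

# Hypothetical landmark coordinates in volume space (mm).
p0, p1, p2 = (0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 10.0)
normal, origin = plane_from_three_landmarks(p0, p1, p2)
axis = axis_from_two_landmarks((0.0, 0.0, 0.0), (0.0, 0.0, 5.0))
```

Collinear landmarks produce a zero cross product and are rejected, since they do not determine a unique plane.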

2.5.2. Three-dimensional visualization

Three-dimensional visualization typically involves surface or volume rendering representations. Surface rendering requires the extraction of contours that define the surface of the structure to be visualized [131]. The marching cubes algorithm [97], cited earlier for surface extraction, then places tiles at each contour point and the surface is rendered visible. The advantage of this technique lies in the relatively small amount of data retained for manipulation and in the polygonal representation, which is easily handled by graphics hardware, resulting in fast rendering speeds. However, as discrete contour extraction is required prior to rendering, all volume information is lost.

On the other hand, volume rendering is one of the most versatile and powerful image display and manipulation techniques, based on ray-casting algorithms that provide direct visualization of the volumetric images without the need of prior segmentation and surface extraction, therefore preserving all the information and context of the original dataset [132,133]. Nevertheless, the significantly large size of the traditionally acquired high-resolution image volumes challenges the volume rendering algorithms themselves, as well as the computing systems on which they are implemented.
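The core of a ray-casting volume renderer can be sketched for a single viewing ray: intensities sampled along the ray are mapped to color and opacity by a transfer function and accumulated front to back. The transfer function, its threshold, and the sample values below are hypothetical; a full renderer would also interpolate samples from the volume and generate one ray per display pixel.

```python
def transfer_function(intensity):
    """Hypothetical mapping: intensity in [0, 1] -> (gray color, opacity).

    Intensities below 0.2 are treated as fully transparent background.
    """
    opacity = 0.0 if intensity < 0.2 else min(1.0, intensity)
    return intensity, opacity

def cast_ray(samples, early_stop=0.99):
    """Front-to-back alpha compositing of samples along one viewing ray."""
    color, alpha = 0.0, 0.0
    for s in samples:
        c, a = transfer_function(s)
        color += (1.0 - alpha) * a * c   # contribution attenuated by what lies in front
        alpha += (1.0 - alpha) * a       # accumulated opacity
        if alpha >= early_stop:          # early ray termination
            break
    return color, alpha

# Samples ordered from the viewer into the volume (illustrative values).
pixel_color, pixel_alpha = cast_ray([0.1, 0.5, 1.0, 0.8])
```

No segmentation is involved: every sample contributes according to the transfer function alone, which is why the original data are preserved. The per-ray loop is also what makes the method computationally demanding for large volumes.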

Examples of 3D visualization include virtual endoscopy, surgery planning and rehearsal and dynamic parametric mapping. An important visualization application that enables non-invasive exploration of the internal anatomy for screening purposes (i.e. colonoscopy) is virtual endoscopy. Such visualizations can be generated either by direct volume rendering, placing the perspective view point within the volume of interest and casting divergent rays in any viewing direction, or by first segmenting the image volume then allocating a common viewpoint within the anatomic model and obtaining a sequence of surface-rendered views by changing the camera position [22].

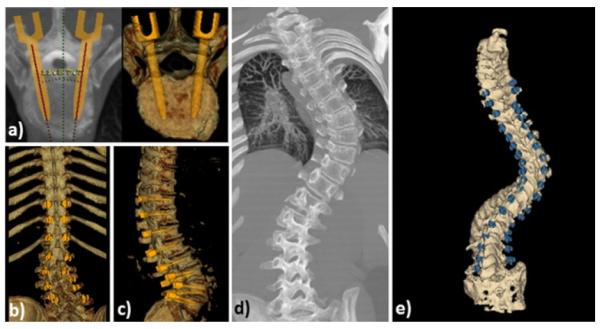

Visualization for interventional planning, rehearsal, and guidance has been successfully and increasingly used over the past three decades. Fig. 4 shows an application where volume rendering was employed to generate patient-specific models of the spine from 3D CT image volumes for pedicle screw implantation procedures. The visualization is augmented with virtual templating capabilities that enable the surgeon to select and “implant” virtual screws into the pre-operative virtual spine model and render the virtual “post-operative” model to visualize the procedure outcome [134].

Fig. 4.

Application of volume rendering visualization for planning of spinal deformity correction procedures. (a) Virtual templating performed by inserting virtual pedicle screw models into image and volume rendered image data; (b) coronal and (c) sagittal volume renderings of the spine showing the complete plan after virtual templating; (d) example of a clinical case showing the pre-operative patient CT image volume and volume rendered pre-operative plan after templating.

Finally, a significant application of interactive 3D visualization is the generation of electro-anatomical models of the heart for diagnosis, therapy planning, and guidance. Fig. 5 illustrates a dynamic and parametric mapping of the heart with electro-physiology data registered and superimposed onto the endocardial left atrial surface obtained from a CT dataset. As a result, such visualizations provide simultaneous hybrid multi-modal representations of both anatomy and function for intuitive and effective target localization and therapy delivery [22].

Fig. 5.

(a) Example of parametric mapping of electro-physiological activation times onto patient-specific model of the left atrium; (b) “cut-away” view into the electro-anatomical model showing the activation map on the posterior left atrial wall.

2.5.3. Information display

Another key aspect to robust visualization is choosing the most appropriate information display technology for the image guidance platform. It could become overwhelming for surgeons to visualize, analyze, interpret, and fuse all the possible information available during procedures for optimal therapy delivery. Provided adequate visualization, research studies have emphasized the importance of selecting the most relevant information from the available multi-modality imaging data to be displayed to the operator at a particular stage of the procedure. The solution proposed by Jannin et al. [135] relies on the modeling of the surgical workflow to identify the required information and deliver it at the right time. Mixed environments have provided solutions for enhanced visualization, ranging from fully immersive displays (Fig. 6) that do not provide the user with any real display of the surgical field, to those that combine computer graphics with a direct or video view of the actual surgical scene.

Fig. 6.

In vivo porcine study on mitral valve replacement performed via model-enhanced US guidance: the multi-modality guidance environment is displayed to the surgeons via stereoscopic head-mounted displays.

Reported systems combine computer graphics with a direct or video view of the real surgical scene. The first head-mounted display (HMD)-based AR system combined real and virtual images by means of a semi-transparent mirror and was introduced by Sutherland et al. in 1968. Operating binoculars and microscopes were also augmented using a similar approach, as described by Kelly et al. [16] and Edwards et al. [136] for applications in neurosurgery, and further improved and exploited by Birkfellner and colleagues [137,138] for maxillofacial surgery.

Distinct from the user-worn devices, AR window-based displays allow augmentation without using a tracking system. This technology emerged in 1995 with the device introduced by Masutani et al. [139], which consisted of a transparent mirror placed between the user and the object to be augmented. Another example was the tomographic overlay described in Refs. [140,141], which made use of a semi-transparent mirror to provide a direct view of the patient together with a CT slice correctly positioned within the patient’s anatomy. A comprehensive review of medical AR displays is provided by Sauer et al. [142] and Sielhorst et al. [143].

3. Challenges/barriers to clinical implementation

The introduction of new technology into the OR must be approached in a very systematic manner and for valid reasons: is there a real clinical need for the new technology? What are the clinical and technological obstacles that must be overcome to implement the new techniques into the OR? How will the new technology improve the overall outcome of the procedure? Cohen et al. apply this analysis for AR guidance in prostatectomy [144].

3.1. Technical barriers

3.1.1. Equipment and hardware

Most image-guided interventions require several pieces of complex equipment, and often several personnel are needed for the different facets of the operation in a constrained working environment. Despite their real-time benefits and high-quality images, systems that rely on real-time MRI guidance are not only expensive and dependent on special infrastructure, but also hamper the clinical workflow due to their incompatibility with OR instrumentation. Moreover, if surgical tracking is employed, the delivery instruments must be built or adapted from existing clinical tools, such that they incorporate the tracking sensors [145]. In addition, most off-the-shelf surgical instruments do not comply with the “ferromagnetic free environment” requirement imposed by the presence of magnetic tracking technologies in the OR. Therefore, medical device manufacturers must be engaged from the very start to design instruments that are both appropriate for the application and compatible with the guidance environment.

3.1.2. Calibration and communication

Assuming successful integration of the necessary hardware equipment into the surgical suite, adequate calibration and synchronization of all data sources is yet another challenge. All displayed images, models and surgical tool representations must be integrated into a common environment, which, in turn, must be registered to the patient. Moreover, several guidance platforms employ tracked US imaging, in which case additional calibration steps are required to ensure that the location and geometry of the imaged features are accurately presented [146,147].
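
The chain of calibrations and registrations described above can be sketched as a composition of rigid transforms. The following is a minimal illustration, assuming 4×4 homogeneous matrices; the frame names (image, sensor, tracker, patient), the helper functions, and all numeric values are hypothetical and are not taken from any of the cited systems.

```python
# Minimal sketch: mapping a point from a tracked US image into patient space.
# All frame names and numbers below are illustrative assumptions.

def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def translation(tx, ty, tz):
    """Homogeneous transform for a pure translation (mm)."""
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def rot_z_90():
    """Homogeneous transform for a 90 degree rotation about the z axis."""
    return [[0.0, -1.0, 0.0, 0.0],
            [1.0, 0.0, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]

def transform_point(t, p):
    """Apply a 4x4 transform to a 3D point."""
    v = [p[0], p[1], p[2], 1.0]
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))

# US calibration: image frame -> sensor mounted on the probe (hypothetical).
sensor_from_image = translation(0.0, 0.0, 20.0)
# Tracking: probe sensor -> tracker frame, updated in real time (hypothetical).
tracker_from_sensor = rot_z_90()
# Patient registration: tracker frame -> patient frame (hypothetical).
patient_from_tracker = translation(100.0, 0.0, 0.0)

# Compose right to left: image -> sensor -> tracker -> patient.
patient_from_image = matmul(patient_from_tracker,
                            matmul(tracker_from_sensor, sensor_from_image))

feature_mm = (10.0, 0.0, 0.0)  # a feature in the US image, already scaled to mm
feature_in_patient = transform_point(patient_from_image, feature_mm)
```

Because every displayed object passes through such a chain, an error in any single link (for example, the US calibration) shifts everything downstream of it, which is why each step must be calibrated and validated separately.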

Besides spatial calibration, temporal synchronization between the information displayed in the visualization environment and the actual anatomy needs to be maintained during real-time visualization. Ideally, a high-fidelity image guidance environment would enable image acquisition, registration, surgical tracking, and information display at ~20–30 frames per second. However, these processes take time and, in spite of the real-time intra-operative imaging, the virtual information is necessarily delayed due to the latency of image formation, tracking and rendering [148,149]. As reported by Azuma [150], end-to-end system delays of 100 ms are fairly typical on existing systems; simpler systems may experience less delay, while delays of 250 ms or more can be experienced by heavily loaded, networked systems [148].

One should note, nevertheless, that end-to-end system delays give rise to registration errors when motion occurs. In their work on assessing latency effects in a head-mounted medical augmented reality system, Figl et al. collected realistic data on surgeon head movement velocity in the operating theatre and assessed the resulting latency and spatial error [151]. Their study reported head movement slower than 10°/s, with velocities ranging from ~10 mm/s to ~28 mm/s, resulting in a spatial shift of ~1.24–2.62 mm and a temporal delay of ~0.12–0.09 s (i.e., an average temporal delay of ~100 ms).
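
The relationship between latency and misregistration can be made concrete with a first-order estimate, in which the spatial shift is approximately the product of velocity and end-to-end delay. The sketch below assumes constant velocity over the delay interval; the function name is hypothetical, and the inputs are the rounded values quoted above rather than the raw measurements of Figl et al.

```python
def latency_shift_mm(velocity_mm_per_s, delay_s):
    """First-order spatial shift caused by an end-to-end delay.

    Assumes the tracked object moves at constant velocity during the delay.
    """
    return velocity_mm_per_s * delay_s

# Rounded values quoted in the text (velocity in mm/s, delay in s).
shift_slow = latency_shift_mm(10.0, 0.12)
shift_fast = latency_shift_mm(28.0, 0.09)

# Frame periods (ms) at display rates of 20 and 30 frames per second.
frame_period_ms = [1000.0 / fps for fps in (20, 30)]
```

With these rounded inputs the estimate gives roughly 1.2 mm and 2.5 mm, the same order as the reported shifts. A delay of about 100 ms also spans two to three frames at 20–30 frames per second, so the overlay can lag visibly behind the anatomy during motion.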

The ultimate goal is to minimize latency to such an extent that any lag in the system’s response does not interfere with the normal workflow. The patient registration must also be updated in a nearly real-time fashion, leading to a trade-off between accuracy, simplicity, and invasiveness [143]. Thanks to the increasing computational power of modern GPUs, deformable image registration algorithms have been optimized to yield accuracies on the order of 1–2 mm in a few seconds [152,153].

3.1.3. Data representation and information delivery

To appreciate the full 3D attributes of medical image data, three major approaches have been undertaken for surgical planning and guidance: slice rendering, surface rendering, and volume rendering. The slice rendering approach is most commonly employed in radiology. Clinicians often use this technique to view CT or MR image volumes one slice at a time using the traditional ortho-plane display. This method has been established as the clinical standard approach for visualizing and analyzing medical images. However, this approach may not be optimal for navigation, as it only provides information in the viewing plane and requires further manipulation of the viewing planes to interactively scan through the acquired volume. Surface rendering provides information beyond that offered via slice rendering and shows three-dimensional representations of the structures of interest. However, the segmentation process involves a binary decision process to decide where the object lies, which affects the fidelity of the models. Volume rendering techniques, on the other hand, employ all of the original 3D imaging data, rather than discarding most of them when surfaces are extracted using segmentation. Viewing rays are cast through the intact volumes and individual voxels in the dataset are mapped onto the viewing plane, maintaining their 3D relationship while making the display appearance intuitive to the observer [154].
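
As an illustration of the ray-casting idea, the sketch below composites the samples along a single viewing ray from front to back. It is a simplified, assumed formulation (scalar intensity, a hypothetical linear opacity transfer function, and early ray termination) and is not the specific renderer used in the cited work.

```python
def opacity(sample, threshold=0.2):
    """Hypothetical transfer function: samples at or below threshold are transparent."""
    if sample <= threshold:
        return 0.0
    return min(1.0, (sample - threshold) / (1.0 - threshold))

def composite_ray(samples):
    """Front-to-back alpha compositing of normalized voxel samples along one ray."""
    intensity = 0.0      # accumulated intensity arriving at the viewing plane
    transmittance = 1.0  # fraction of light not yet absorbed along the ray
    for s in samples:
        a = opacity(s)
        intensity += transmittance * a * s
        transmittance *= (1.0 - a)
        if transmittance < 1e-3:  # early termination: ray is effectively opaque
            break
    return intensity

# Voxel intensities (normalized to [0, 1]) sampled along one ray, nearest first.
ray = [0.0, 0.1, 0.6, 1.0, 0.4]
pixel = composite_ray(ray)
```

In this example the fully opaque fourth sample terminates the ray, so the last sample does not contribute. Unlike surface rendering, no binary segmentation decision is made: every voxel contributes according to its opacity.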

3.1.4. Engineering evaluation and validation

From a clinical stand-point, the success of an intervention is judged by its therapeutic outcome. From an engineering perspective, navigation accuracy is constrained by the inherent limitations of the IGI system. The overall targeting error within an IGI framework is dependent on the uncertainties associated with each of the components, emphasizing the requirement that a proper overall validation of image-guided surgery systems should estimate the errors at each stage of the IGI process [155] and study their propagation through the entire system and workflow [156]. The accuracy challenge can be posed as a series of questions: how accurate is the pre-operative modeling and planning? How accurate is the image-/model-to-patient registration? How accurate is the surgical tracking? What is the overall targeting accuracy of the system? What is the ultimate accuracy required by the procedure, how is it determined and can it be fulfilled given the technology at hand?
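
One common first-order way to study how component errors propagate is to combine them in quadrature, which assumes that the error sources are independent and zero-mean. The sketch below uses that assumption; the function names and the component values are hypothetical placeholders, not measurements from any cited system.

```python
import math

def combined_error_mm(component_errors_mm):
    """Root-sum-square of independent error components (an assumed error model)."""
    return math.sqrt(sum(e * e for e in component_errors_mm))

def meets_requirement(overall_mm, required_mm):
    """Check the overall targeting error against a procedure-specific tolerance."""
    return overall_mm <= required_mm

# Hypothetical RMS error budget for one guidance pipeline (mm).
budget = {
    "pre-operative modeling": 1.0,
    "image-to-patient registration": 2.0,
    "surgical tracking": 2.0,
}
overall = combined_error_mm(budget.values())
```

Under this model the largest components dominate the total, so reducing the smallest error source yields little improvement. Correlated or systematic errors violate the independence assumption and call for a fuller propagation analysis.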

Validation and evaluation of augmented environment systems are crucial to ensure proper use and demonstrate their added value. The validation task involves different aspects from technical studies, impact on the surgeon’s cognitive processes, changes of surgical strategies and procedures, to impact on patient outcome [157]. The lack of adequate validation and evaluation is a major obstacle to the clinical introduction of augmented reality.

3.2. Clinical barriers

3.2.1. New technology footprint

Augmented reality has the potential to facilitate surgical interventions by providing the clinician with an enhanced view of the underlying patient anatomy. As high definition video has been introduced into the laparoscopic surgical environment, the surgeon has an excellent view of the surface anatomy. With the use of augmented reality, the underlying anatomic structures as visualized from intra-operative ultrasound or pre-operative tomographic imaging can be displayed to aid in surgical decision making. This display may provide an increased safety profile through decreased risk, increased surgeon confidence, and decreased operation time through increased efficiency.

One of the major barriers in introducing AR technology into the clinical environment is the perceived “cost vs. benefit” issue, although it might be argued that the benefit might not be known in advance. To introduce AR technology into a procedure, resources, including the technical team and equipment, are required. However, there are very few centers that are positioned to support this endeavor. A typical AR system requires a tracking device, special purpose software, and engineering support in the OR. This is expensive, potentially cumbersome, and can slow down the OR workflow and procedure time. Very little progress can be made without cooperative clinical partners who are forward thinking and research oriented with regard to evaluation of the technology and its impact on the surgical workflow, as suggested by Neumuth et al. [158] when studying the workflow-induced changes during AR-guided interventions.

3.2.2. What does the surgeon expect? Addressing a real clinical need

Surgical success is dependent upon an accurate knowledge of both normal and pathological patient-specific anatomy and physiology in a dynamic environment. This environment must be recognized at the onset of the surgical procedure and must be continuously re-assessed with the physical changes induced by the intervention. A robust, real-time integration of the relevant and surrounding anatomy into the visual surgical field permits the surgeon to direct the dissection more precisely toward the target tissues and away from adjacent uninvolved tissues. This information can limit collateral damage and inadvertent injury. Such real-time anatomic information is far more useful than simple pre-operative imaging, which has limited value once surgical exposure has begun. As the procedure continues, and the focus of the procedure narrows to the area of relevance, information regarding adjacent structures and the spatial orientation of the area of interest narrows and becomes even more important (Table 1).

Table 1.

Examples of clinical applications in abdominal interventions which would benefit from advanced clinically-reliable image guidance technology.

| Clinical applications in abdominal interventions | Potential uses of image guidance |
|---|---|
| Partial nephrectomy for tumor and congenital abnormality | Vascular anatomic mapping |
| Nerve-sparing radical prostatectomy | Nerve mapping |
| Partial pancreatectomy and splenic preserving operations | Selective vascular distribution |
| Biliary reconstruction | Tumor vascularization mapping |
| Retroperitoneal lymphadenectomy (including nerve sparing) | Adjacent structure localization |
| Retrovesical procedures for congenital anomalies | Tumor painting |

The importance of robust anatomical information is applicable to both reconstructive and ablative surgical procedures. In reconstructive procedures, the critical component is the exposure of the relevant anatomy to permit effective reconstruction. Avoiding collateral tissue damage with efficient exposure is essential to a successful procedure. In ablative surgery, the need for continuous anatomic information applies for most of the procedure. The most critical elements are those that involve vascular control: a clear view of the vascular supply to the affected area to be removed is as critical as the vascular supply to the healthy tissues. Other anatomic relationships at this point of the procedure can be important as well, including the urinary collecting system (in partial nephrectomy), the biliary tree (in hepato-biliary reconstruction), the prostate neurovascular bundles, and the pancreas. The ability to isolate and control specific vascular beds can be of critical importance to the success of any procedure, particularly in complex solid organs such as the liver and kidney in which partial resection is largely based upon vascular distribution. Therefore, robust, real-time presentation of the critical anatomic information to the operating team can be critical to the success of the procedure.

While these procedures can be successfully completed without augmented environments, the presence of AR may potentially reduce uncertainty, hesitancy, collateral tissue damage, and hemorrhage with its associated morbidity. Augmented reality depiction of the relevant structural, functional, and pathological anatomy, particularly in the increasingly digital surgical environment of minimally invasive procedures, will further serve to enhance surgical efficiency while minimizing peri-operative morbidity.

3.2.2.1. Clinical case example

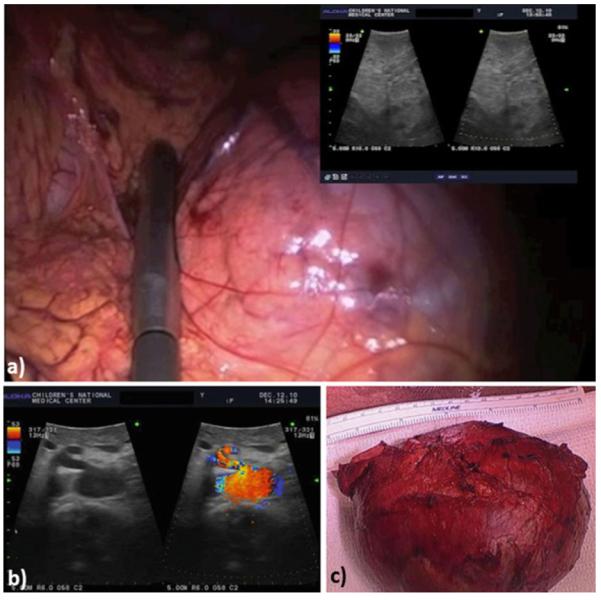

To outline the interventional workflow associated with a traditional laparoscopic abdominal intervention, a clinical example is presented. While the described experience does not rely on use of imaging for guidance, it is, nevertheless, intended to emphasize how image guidance or mixed reality visualization could enhance guidance and facilitate the procedure.

We present the case of a 13-year-old female who underwent laparoscopic-assisted resection of a large pancreatic mass. Identification of the surrounding vasculature by intra-operative ultrasound allowed for the safe resection of this mass by the laparoscopic method, as well as for the preservation of her spleen. Following diagnostic work-up, which revealed a large (13 cm × 11.9 cm × 11.2 cm) mass in the tail of the pancreas, it was recommended to the patient and her family that she undergo resection of this mass. Given the size and location of the mass, the possibility of using an open approach to remove the mass, as well as the possibility of splenectomy given the proximity to the spleen, was discussed. The abdomen was accessed via a 12 mm umbilical trocar. Additional trocars were placed in the left upper quadrant (5 mm), right lateral abdomen (5 mm), and left lateral abdomen parallel to the umbilicus (12 mm). An intra-operative ultrasound probe was used to identify the superior mesenteric artery (SMA) and the superior mesenteric vein (SMV) (Fig. 7). Following US confirmation of the vasculature, the body of the pancreas was dissected free from the splenic vein, then transected with a surgical stapler proximal to the mass. The remainder of the pancreas with the mass was mobilized distally and circumferentially, and removed while sparing the blood supply to the spleen (Fig. 7).

Fig. 7.

Clinical case report: 13-year-old female who underwent laparoscopic-assisted resection of a large pancreatic mass. Use of B-mode (a) and Doppler US (b) images acquired with a laparoscopic transducer helps identify the pancreatic vasculature; (c) post-resection image showing removed mass and part of the pancreas (lower panel).

In this case report, there was no co-registration of the US image with the intra-operative laparoscopic video. While US imaging was helpful in identifying the vasculature, registration of the US images with the intra-operative images would have been significantly helpful in enhancing the surgeon’s orientation in the operative field. In addition, continuous assessment of the associated vasculature via an AR-like fusion of US imaging and real-time video might have enabled a more reliable orientation for the surgeon since the tumor precluded direct observation of the vessels beneath the mass. Image-guided navigation and fusion of pre-operative images with real-time ultrasound has the potential to significantly benefit laparoscopic surgery, particularly tumor resection, where the ultimate goal is to ensure that no diseased tissue is left behind.

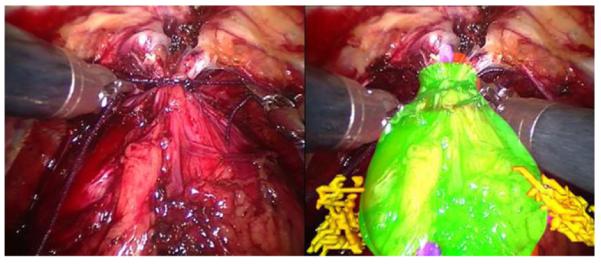

Fig. 8 shows an example of an augmented reality overlay onto the prostate, illustrating the position of the prostate itself and the location of the nerve bundles that need to be preserved during tumor resection to maintain the patient’s urinary and sexual function.

Fig. 8.

Example of an augmented reality overlay onto the prostate, showing the position of the prostate itself and the nerve bundles that need to be preserved to maintain urinary and sexual function.

3.2.3. Clinical evaluation and validation

While there is no formal definition of clinical accuracy, it may be defined as the maximum error that can be tolerated during an intervention without compromising therapy goals or causing increased risk to the patient. Such tolerances are difficult to define, as they are procedure and patient specific. Moreover, to identify a robust measure of the clinically imposed accuracy, in vivo experiments are required, where all variables must be closely controlled – a very challenging task for most in vivo interventional experiments. The translation of clinical accuracy expectations into engineering accuracy constraints is also difficult to formulate, especially when accuracy errors in the image guidance platforms begin to affect clinical performance. Consequently, the clinical accuracy requirements ultimately dictate the engineering approach used, therefore posing a higher level of complexity on the evaluation and validation process, as suggested by Jannin et al. [157].

According to the guidelines of Health Care Technology Assessment, any IGI platform undergoes a complex and diverse, multi-level evaluation process. At Level 1, the technical feasibility and behavior of the system are evaluated. These include characterization of the system’s intrinsic parameters, such as accuracy and response time. At Level 2, the diagnostic and therapeutic reliability of the system are assessed, including clinical accuracy, user and patient safety, as well as the system’s reliability in the clinical setting. The efficacy of the system is assessed via clinical trials at Level 3, including its direct and indirect impact on intervention strategy and performance. These studies are further extended to evaluate the system’s comparative effectiveness during large-scale multi-site randomized clinical trials at Level 4. Level 5 focuses on the assessment of the system’s economic impact, based on cost effectiveness and cost-benefit ratio. Finally, the system’s social, legal and ethical impact is assessed at Level 6. Based on these assessments, the regulatory agencies, namely the US Food and Drug Administration (FDA) or the European Community (CE), are advised on whether or not to allow the commercial and clinical use of the system.

3.2.4. User-dependent challenges

Additional constraints associated with the development and implementation of new visualization and navigation paradigms revolve around the clinician. In minimally invasive interventions the guidance environment is the surgeon’s only visual access to the surgical site, raising the following questions: what information is appropriate? How much is sufficient? When and how should it be displayed? How can the surgeon interact with the data?

These environments are different from those to which clinicians are accustomed. After looking at radiographs or conventional views of the anatomy provided via digital medical imaging, and after performing open surgery for decades, some clinicians may be intimidated by the novelty of the VR and AR environments and may find the complex displays confusing rather than intuitive. One approach may be to make “the new” look similar to “the old”, gradually introducing new information to avoid overload, employing standard anatomical views first and progressively establishing an intuitive transition toward 3D displays. Another approach may consist of making the new environment available to the clinicians, even if they are not fully convinced of its use, and providing them with the necessary training and sufficient time to explore and understand the guidance platform. It is also critical that clinicians be actively involved in the development of such environments, as they can raise important issues and concerns that may be overlooked by the engineers.

Common paradigms for information manipulation and user interaction with classical 2D medical displays include windows, mouse pointers, menus, and dials. Despite their extensive use, these approaches do not translate well for 3D displays. In their work, Bowman et al. [159] provide a comprehensive overview of 3D user interfaces along with detailed arguments as to why 3D information manipulation is difficult. Furthermore, Reitinger et al. [160] have proposed a 3D VR user interface for procedure planning of liver interventions. On a similar topic, Satava and Jones [161] stated that patient-specific models would permit surgeons to practice a delicate surgical procedure by means of a virtual environment registered to the patient intra-operatively. All these groups concluded that each procedure should involve a limited number of meaningful visualization poses that are suitable for the user. Therefore, environments must be modified to control the degree of user interaction by identifying the necessary navigation information at each stage in the workflow and ensuring its optimal delivery without information overload [162].

4. Examples of pre-clinical applications of mixed reality guidance

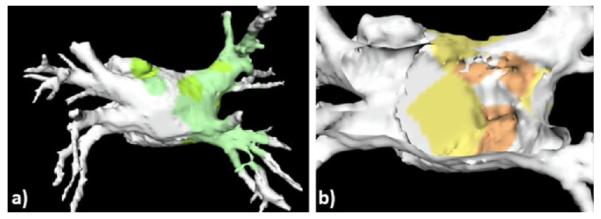

4.1. Mitral valve repair

Fig. 9 illustrates a novel use of the model-enhanced US mixed reality guidance platform for mitral valve repair. The paradigm is implemented via the integration of pre- and intra-operative data, with surgical instrument tracking [163]. The pre-operative virtual anatomical models of the heart augment the intra-operative transesophageal US imaging and act as guides to facilitate the tool-to-target navigation. Ultimately, the user positions the tool on target under real-time US image guidance.

Fig. 9.

Mixed reality guidance platform for intracardiac mitral valve repair showing real-time trans-esophageal echocardiography data combined with geometric models for trans-esophageal probe, NeoChord tool, mitral and aortic valve annuli.

In this surgical procedure, a novel tool (NeoChord DS1000, NeoChord, Minnetonka, MN, USA) is inserted into the apex of the beating heart and navigated to a prolapsing mitral valve leaflet [164]. The tool attaches a replacement artificial chord on the flail leaflet and re-anchors it at the apex, thus restoring proper valve function. Since this procedure is performed in the blood-filled environment of the beating heart, line-of-sight guidance is impossible, and echocardiography provides the primary method for guidance.

This image-guided surgery platform uses magnetic tracking technology (NDI Aurora, Northern Digital Inc., Waterloo, Canada) to track the locations of both real-time trans-esophageal US images and the NeoChord device, representing them in a more intuitive 3D context. Fig. 10 shows the platform in use during an animal study, with the cardiac surgeon using the mixed reality visualization data to plan an optimal surgical path.

Fig. 10.

Intracardiac mixed reality navigation as used in an in vivo porcine study.

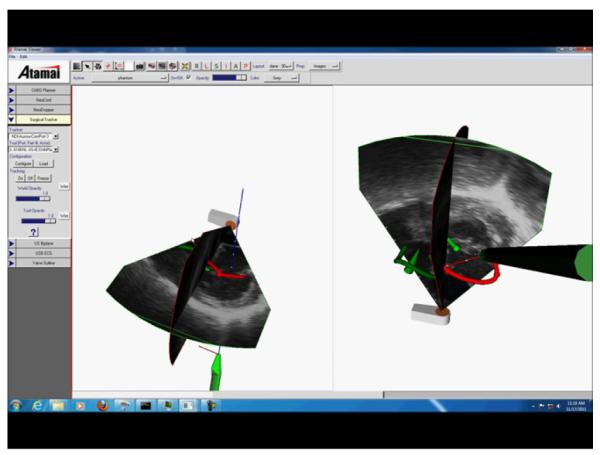

4.2. Needle-based spinal therapy delivery

US imaging is readily available, significantly less expensive and more OR compatible than other imaging modalities, and presents no risk of radiation dose exposure to the patient or clinical staff. However, on their own, US images can be difficult to interpret; moreover, in order to adequately visualize small needles, higher imaging frequencies are preferred, which, in turn, lead to higher attenuation and shallower penetration, making it difficult to identify the facet joints. The goal of the work proposed by Moore et al. [165] was to develop an anesthesia delivery system that allowed the integration of virtual models derived from diagnostic imaging such as CT and tracked instruments, all intended to enhance real-time US data. Their solution integrates 2D US with virtual representations of anatomical targets and the tracked therapy needle, enhancing the US imaging information available to the clinician.

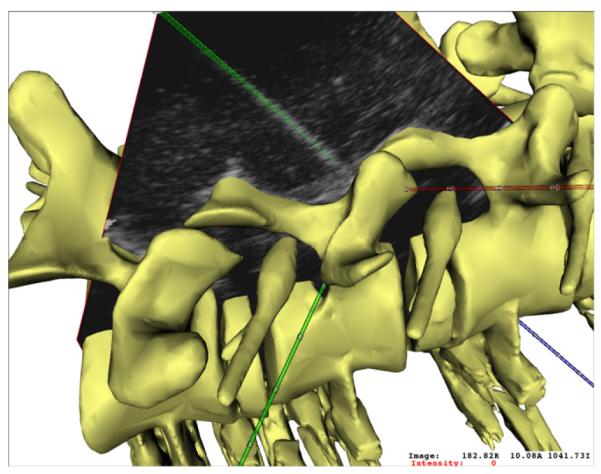

Fig. 11 shows a mixed reality visualization for delivery of spinal needle therapies. In this case, a magnetic tracking system was used to track an US transducer in concert with a cannulated needle. The real time 2D ultrasound image data is represented in a three-dimensional context with geometric representations of the ultrasound transducer, the needle, and patient-specific spine data derived from pre-procedural CT data. The system has been shown to improve accuracy of therapy delivery as well as procedural time and safety.

Fig. 11.

A mixed reality visualization platform for needle based spinal therapies, integrating real time ultrasound image data with geometric representations of a magnetically tracked needle and 3D spine model.

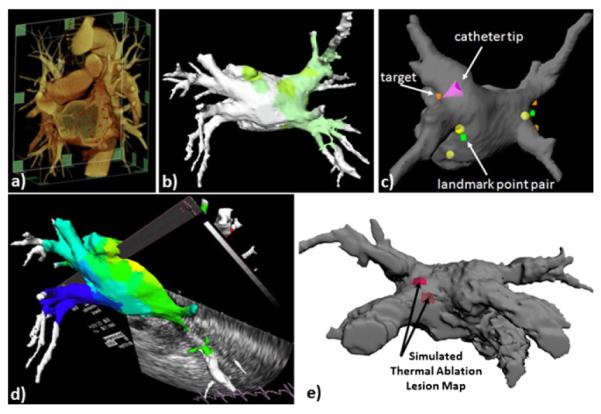

4.3. Advanced visualization and guidance for left atrial ablation therapy delivery

The prototype system for advanced visualization for image-guided left atrial ablation therapy developed at the Biomedical Imaging Resource (Mayo Clinic, Rochester, MN, USA) [166] is built on an architecture designed to integrate information from pre-operative imaging, intra-operative imaging, left atrial electrophysiology, and real-time positioning of the ablation catheter into a single user interface. The system interfaces to a commercial cardiac mapping system (Carto XP, Biosense Webster Inc., Diamond Bar, CA, USA), which transmits the location of the tracked catheter to the system. While sufficiently general to be utilized in various catheter procedures, the system has been primarily tested and evaluated for the treatment of left atrial fibrillation. The user interface displays a surface-rendered, patient-specific model of the left atrium (LA) and associated pulmonary veins (PV) segmented from a pre-operative contrast-enhanced CT scan, along with points sampled on the endocardial surface with the magnetically tracked catheter (Fig. 12). The model-to-patient registration is continuously updated during the procedure, as additional “intra-operative” locations are sampled within the left atrium [167]; these data are further augmented with tracked ICE imaging, enabling precise catheter positioning for therapy delivery [65]. Once on target, RF energy is delivered to the tissue. Ongoing developments focus on the real-time visualization of the changes occurring in the tissue in response to the RF energy delivery; these will be presented to the clinician via a thermal ablation model that provides local temperature maps and lesion size superimposed onto the patient-specific left atrial model (Fig. 12e).

Fig. 12.

Advanced platform for image guidance and visualization for left atrial ablation therapy. (a) Volume-rendered pre-operative patient-specific CT scan; (b) electro-anatomical model showing superimposed activation data, integrated with real-time magnetic catheter tracking (c) and real-time tracked US imaging (d); (e) simulated representation and visualization of thermal model maps for therapy monitoring and guidance of successive lesions obtained by superimposing local tissue temperature distribution and lesion size onto left atrial model.

4.4. Mixed reality applications for neurosurgical interventions

Some of the first applications of augmented reality in medicine were proposed at the University of North Carolina (Chapel Hill, NC, USA) and their implementation was in the form of a “see-thru” display [168,169]. Another system, developed by Sauer et al. [170] at Siemens Corporate Research (Princeton, NJ, USA), was a commercial VR HMD system known as RAMP (Reality Augmentation for Medical Procedures). In addition to the left- and right-eye stereoscopic cameras, the RAMP device was equipped with a third black-and-white camera instrumented with an infrared LED flash. This third camera worked in conjunction with retro-reflective markers framing the surgical workspace. This setup prevented the user from occluding the “sight-lines” of a traditional optical tracking system, therefore diminishing the limitations associated with the use of optical tracking systems. The RAMP system was adopted for neurosurgical procedures [171], later adapted for use with interventional MRI [172], integrated with an US scanner, and tested for CT- and MRI-guided needle placements [173].

Another form of mixed reality technology employed in neurosurgery guidance was a large-screen AR developed at MIT in collaboration with the Surgical Planning Laboratory at Brigham & Women’s Hospital (Boston, MA, USA) [174]. The system was equipped with optical tracking to localize the patient’s head and the surgical instruments, as well as a camera that acquired live images of the patient’s head. To achieve the image-to-patient registration prior to guidance, a set of 3D surface points acquired from the patient’s scalp using a tracked probe were registered with the skin surface extracted from the patient’s MRI scan [175]. As a result, the monitor displayed the live video image of the patient’s head shown in transparent fashion and augmented with internal anatomical structures overlaid onto the video of the head.

5. Summary and forecast: the future is bright

Mixed reality environments represent an advanced form of image guidance that enables physicians to look beyond the surface of the patient and “see” anatomy and surgical instruments otherwise hidden from direct view. Mixed reality visualization includes the patient, allowing the physician to interpret the diagnostic, planning and guidance information at the surgical site. To make this technology successful, it needs to be used in the appropriate applications. It may be argued that these augmented medical environments will have a greater positive impact on challenging, complex procedures. Simple surgical tasks that can be conducted under direct vision do not benefit from the use of mixed reality, and applying it to such tasks may even diminish its perceived value. Truly challenging clinical tasks allow the surgeon to appreciate the real added value of the image-guided navigation environment [176].

The registration requirement for AR is difficult to address and has yet to be solved; however, a few systems, as outlined here, have achieved adequate results. Open-loop systems provide no feedback on how closely the real and virtual components actually align; the open-loop system developed by Azuma and Bishop [177] shows registration typically within ±5 mm from several viewpoints for objects located at about arm’s length from the display. Closed-loop systems, which typically use a digitized image of the real environment to bring feedback into the system and enforce registration to the virtual counterparts, have, on the other hand, demonstrated nearly perfect registration, accurate to within a pixel [178–182]. Moreover, the clinical assessment study of the MAGI stereo AR navigation system developed by Edwards et al. demonstrated a registration accuracy of 1 mm or better in five and 2 mm or better in eleven of the 17 cases performed [24]. As also suggested by Azuma [150], duplicating the proposed registration methods remains a nontrivial task, due to the complexity of the algorithms and the required hardware. Simple, yet effective registration solutions could therefore speed the acceptance of AR systems.

As mentioned in Section 2.2, segmentation as a means for anatomical modeling is another potential barrier to the adoption of mixed reality guidance. The fidelity of the resulting anatomical models is typically expected to be on the order of 5 mm or better [183], owing to partial volume effects as well as motion artifacts and noise present in the acquired image datasets.

As for surgical tracking, optical systems provide instrument localization accuracy of 0.5 mm or better [110,101], but are limited to applications that allow a direct line-of-sight between the infrared camera and the tracked object. Magnetic tracking systems, required for instrument tracking inside the body, feature a localization accuracy of 2 mm or better [184,103,104], or as much as 2.5 mm in certain laboratory environments [185].
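To give a feel for how the figures quoted above might interact, the sketch below combines the model fidelity and tracking accuracy into a single nominal error estimate. It assumes the error sources are independent and can be combined as a root-sum-square, and it treats the quoted "or better" bounds as nominal values; both are simplifying assumptions made here for illustration and are not claims from the cited studies. The function and constant names are hypothetical.

```python
import math


def combined_error_mm(*components_mm):
    """Root-sum-square of error components, assuming they are independent."""
    return math.sqrt(sum(c * c for c in components_mm))


# Nominal values taken from the bounds quoted in the text (millimetres).
MODEL_MM = 5.0      # anatomical model fidelity
OPTICAL_MM = 0.5    # optical tracking
MAGNETIC_MM = 2.0   # magnetic tracking

optical_total = combined_error_mm(MODEL_MM, OPTICAL_MM)
magnetic_total = combined_error_mm(MODEL_MM, MAGNETIC_MM)

print(f"model + optical tracking:  {optical_total:.2f} mm")
print(f"model + magnetic tracking: {magnetic_total:.2f} mm")
```

Under these assumptions, the anatomical model term dominates both totals, so the choice between optical and magnetic tracking changes the combined estimate by only a few tenths of a millimetre.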

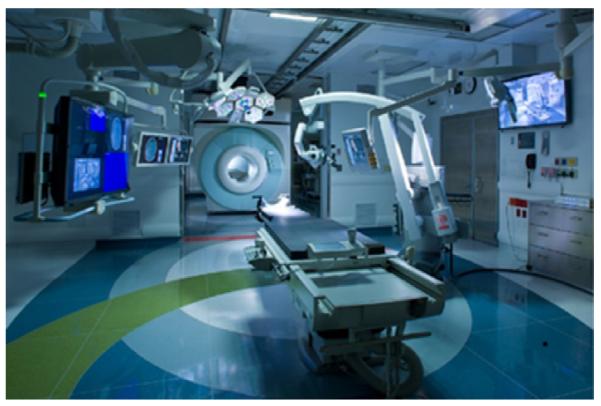

The system that is best placed to have an impact on clinical outcome will be a well-engineered system that not only offers accurate registration and tracking, robust visualization and convincing displays, but also fits seamlessly within the standard OR, provides smooth data transfer, and does not interfere with the traditional clinical workflow. The integration of advanced imaging and surgical tracking technology into interventional suites has, in fact, been achieved; one example of such a facility is the advanced multi-modality image guided operating (AMIGO) suite, part of the clinical arm of the National Center for Image Guided Therapy (NCIGT) at the Brigham & Women’s Hospital and Harvard Medical School [186]. AMIGO is a highly integrated, 5700 sq. ft., completely sterile, fully functional three-room procedural suite that allows a team of physicians to conduct interventions with access to advanced multi-modality intra-operative imaging. In AMIGO, real-time anatomical imaging modalities like X-ray fluoroscopy and US are combined with tomographic digital imaging systems such as CT and MRI. In addition, AMIGO takes advantage of the most advanced molecular imaging technologies like PET/CT and optical imaging to enable “molecular imaging-guided therapy”. Beyond multi-modality imaging, AMIGO also integrates guidance or navigational devices that can help localize tumors or other abnormalities that can be identified in the images, but may not be visible on the patient. This technology also enables real-time tracking of needles, catheters and endoscopes within the body, and combines imaging and guidance with the latest therapy and robotic devices (Fig. 13).

Fig. 13.

The advanced multi-modality image guided operating suite at Brigham & Women’s Hospital – a first step into the operating room of the future.

This high-technology clinical research environment allows the exploration, development, and refinement of new approaches that are less invasive than traditional open surgeries. Once perfected, these clinically tested procedures would migrate into the mainstream of major hospitals’ operating rooms and interventional suites.

A critical question is whether mixed reality environments show improvement in clinical utility. We believe that two important factors need to be addressed to evaluate the clinical impact of these systems: (1) perception studies will provide insights toward optimally fusing real images and computer generated data to ensure their correct registration in the user’s mind; and (2) usability studies will help identify the direct benefits to the user provided by AR and VR environments in comparison to other visualization approaches. However, such studies alone will not suffice, nor is it enough to limit the use of AR to new or complex procedures. The real impact of the technology will be felt when mixed reality environments become standard tools for routine surgical procedures. We must therefore make compelling arguments to convince clinicians that routine use could provide real benefits: reduced learning curves, reduced intervention times, lower patient risk, streamlined data management and, most importantly, improved patient outcome. It is our job as researchers in this field to ensure this message is passed on to our clinical colleagues.

Acknowledgments

We would like to thank our colleagues and clinical collaborators at Mayo Clinic, Robarts Research Institute, the Sheikh Zayed Institute for Pediatric Surgical Innovation, Brigham & Women’s Hospital and Harvard University, the Department of Surgery and Cancer at Imperial College London, the Center for Computer-Assisted Medical Procedures and Augmented Reality at the Technical University of Munich, and the INSERM Research group at Université de Rennes I for their invaluable support. We also acknowledge funding for this work provided by: the Natural Sciences and Engineering Research Council of Canada, the Canadian Institutes of Health Research (MOP-179298), the Surgical Planning Laboratory IGT and AMIGO Project Grants (P41 RR019703 and P41 EB015898), the Canada Foundation for Innovation, the Cancer Research UK (Project A8087/C24520), the Pelican Foundation PAM project, and the National Institute for Biomedical Imaging and Bioengineering (NIBIB-EB002834).

Footnotes

Conflict of interest statement: There is no conflict of interest to be declared.

References

- [1].Milgram P, Takemura H, Utsumi A, Kishino F. Augmented reality: a class of displays on the reality-virtuality continuum. Proc SPIE 1994: Telemanipulator and Telepresence Technology. 1994;vol. 2351:282–92.

- [2].Milgram P, Kishino F. A taxonomy of mixed reality visual displays. IEICE Trans Inform Syst. 1994;ED-77:1–15.

- [3].Metzger PJ. Adding reality to the virtual. Proc IEEE Virtual Reality Int Symp. 1993. pp. 7–13.

- [4].Takemura H, Kishino F. Cooperative work environment using virtual workspace. Proc Comput Support Cooper Work. 1992:226–32.

- [5].Kaneko M, Kishino F, Shimamura K, Harashima H. Toward the new era of visual communication. IEICE Trans Commun. 1993;E76-B(6):577–91.

- [6].Utsumi A, Milgram P, Takemura H, Kishino F. Investigation of errors in perception of stereoscopically presented virtual object locations in real display space. Proc Hum Factors Ergon Soc. 1994;38:250–4.

- [7].Grigg ERN. The trail of the invisible light. Charles C. Thomas Pub Ltd; Springfield, IL: 1965.

- [8].Jaugeas F. Précis de radiodiagnostic. Masson & Cie; Paris, France: 1918.

- [9].Fisher SS. Virtual environments, personal simulation and telepresence. In: Helsed S, Roth J, editors. Virtual reality: theory, practice and promise. Meckler Publishing; Westport, CT: 1991.

- [10].Fisher SS, McGreevy M, Humphries J, Robinet W. Virtual environment display system. ACM Workshop on Interactive 3D Graphics. 1986.

- [11].Paul P, Fleig O, Jannin P. Augmented virtuality based on stereoscopic reconstruction in multimodal image-guided neurosurgery: methods and performance evaluation. IEEE Trans Med Imaging. 2005;24:1500–11. doi: 10.1109/TMI.2005.857029.

- [12].Shuhaiber JH. Augmented reality in surgery. Arch Surg. 2004;139:170–4. doi: 10.1001/archsurg.139.2.170.

- [13].Peters TM. Image-guidance for surgical procedures. Phys Med Biol. 2006;51:R505–40. doi: 10.1088/0031-9155/51/14/R01.

- [14].Burdea G, Coiffet P. Virtual reality technology. Wiley; New York: 1994.

- [15].Akay M, March A. Information technologies in medicine. Wiley; New York: 2001.

- [16].Kelly PJ, Alker GJ, Jr, Goerss S. Computer-assisted stereotactic microsurgery for the treatment of intracranial neoplasms. Neurosurgery. 1982;10:324–31. doi: 10.1227/00006123-198203000-00005.

- [17].Roberts DW, Strohbehn JW, Hatch JF, Murray W, Kettenberger H. A frameless stereotaxic integration of computerized tomographic imaging and the operating microscope. J Neurosurg. 1986;65:545–9. doi: 10.3171/jns.1986.65.4.0545.

- [18].Friets EM, Strohbehn JW, Hatch JF, Roberts DW. A frameless stereotaxic operating microscope for neurosurgery. IEEE Trans Biomed Eng. 1989;36:608–17. doi: 10.1109/10.29455.

- [19].Satava R. Advanced technologies for surgical practice. Wiley; New York: 1998. Cybersurgery.

- [20].Robb RA. Virtual endoscopy: development and evaluation using the Visible Human datasets. Comput Med Imaging Graph. 2000;24:133–51. doi: 10.1016/s0895-6111(00)00014-8.

- [21].Robb RA, Hanson DP. Biomedical image visualization research using the visible human datasets. Clin Anat. 2006;18:240–53. doi: 10.1002/ca.20332.

- [22].Robb RA. Biomedical imaging: visualization and analysis. Wiley; New York: 2000.

- [23].Edwards PJ, King AP, Maurer CR, Jr, de Cunha DA, Hawkes DJ, Hill DLG, et al. Design and evaluation of a system for microscope-assisted guided interventions (MAGI). IEEE Trans Med Imaging. 2000;19:1082–93. doi: 10.1109/42.896784.

- [24].Edwards PJ, Johnson LG, Hawkes DJ, Fenlon MR, Strong AJ, Gleeson MJ. Clinical experience and perception in stereo augmented reality surgical navigation. Proc Medical Imaging and Augmented Reality. 2004;vol. 3150 of Lect Notes Comput Sci:369–76.

- [25].Ettinger GL, Leventon ME, Grimson WEL, Kikinis R, Gugino L, Cote W, et al. Experimentation with a transcranial magnetic stimulation system for functional brain mapping. Med Image Anal. 1998;2:477–86. doi: 10.1016/s1361-8415(98)80008-x.

- [26].Feifer A, Delisle J, Anidjar M. Hybrid augmented reality simulator: preliminary construct validation of laparoscopic smoothness in a urology residency program. J Urol. 2008;180:1455–9. doi: 10.1016/j.juro.2008.06.042.

- [27].Magee D, Zhu Y, Ratnalingam R, Gardner P, Kessel D. An augmented reality simulator for ultrasound guided needle placement training. Med Biol Eng Comput. 2007;45:957–67. doi: 10.1007/s11517-007-0231-9.

- [28].Kerner KF, Imielinska C, Rolland J, Tang H. Augmented reality for teaching endotracheal intubation: MR imaging to create anatomically correct models. Proc Annu AMIA Symp. 2003;88:8–9.

- [29].Rolland JP, Wright DL, Kancherla AR. Towards a novel augmented-reality tool to visualize dynamic 3-D anatomy. Stud Health Technol Inform. 1997;39:337–48.

- [30].Botden SM, Jakimowicz JJ. What is going on in augmented reality simulation in laparoscopic surgery? Surg Endosc. 2009;23:1693–700. doi: 10.1007/s00464-008-0144-1.

- [31].Koehring A, Foo JL, Miyano G, Lobe T, Winer E. A framework for interactive visualization of digital medical images. J Laparoendosc Adv Surg Tech. 2008;18:697–706. doi: 10.1089/lap.2007.0240.

- [32].Lovo EE, Quintana JC, Puebla MC, Torrealba G, Santos JL, Lira IH, et al. A novel, inexpensive method of image coregistration for applications in image-guided surgery using augmented reality. Neurosurgery. 2007;60:366–71. doi: 10.1227/01.NEU.0000255360.32689.FA.

- [33].Friets EM, Strohbehn JW, Roberts DW. Curvature-based nonfiducial registration for the stereotactic operating microscope. IEEE Trans Biomed Eng. 1995;42:477–86. doi: 10.1109/10.412654.

- [34].Kaufman S, Poupyrev I, Miller E, Billinghurst M, Oppenheimer P, Weghorst S. New interface metaphors for complex information space visualization: an ECG monitor object prototype. Stud Health Technol Inform. 1997;39:131–40.

- [35].Vosburgh KG, San José Estépar R. Natural Orifice Transluminal Endoscopic Surgery (NOTES): an opportunity for augmented reality guidance. Proc MMVR. 2007;vol. 125 of Stud Health Technol Inform:485–90.

- [36].Vogt S, Khamene A, Niemann H, Sauer F. An AR system with intuitive user interface for manipulation and visualization of 3D medical data. Proc MMVR. 2004;vol. 98 of Stud Health Technol Inform:397–403.

- [37].Teber D, Guven S, Simpfendörfer T, Baumhauer M, Güven EO, Yencilek F, et al. Augmented reality: a new tool to improve surgical accuracy during laparoscopic partial nephrectomy? Preliminary in vitro and in vivo results. Eur Urol. 2009;56:332–8. doi: 10.1016/j.eururo.2009.05.017.

- [38].Nakamoto M, Nakada K, Sato Y, Konishi K, Hashizume M, Tamura S. Intraoperative magnetic tracker calibration using a magneto-optic hybrid tracker for 3-D ultrasound-based navigation in laparoscopic surgery. IEEE Trans Med Imaging. 2008;27:255–70. doi: 10.1109/TMI.2007.911003.

- [39].Peters TM, Cleary K. Image-guided interventions: technology and applications. Springer; Heidelberg, Germany: 2008.

- [40].Hounsfield GN. Computerized transverse axial scanning (tomography). Part 1. Description of system. Br J Radiol. 1973;46:1016–22. doi: 10.1259/0007-1285-46-552-1016.

- [41].Mori S, Endo M, Tsunoo T, Kandatsu S, Kusakabe M, et al. Physical performance evaluation of a 256-slice CT-scanner for four-dimensional imaging. Med Phys. 2004;31:1348–56. doi: 10.1118/1.1747758.

- [42].Klass O, Kleinhans S, Walker MJ, Olszewski M, Feuerlein S, Juchems M, et al. Coronary plaque imaging with 256-slice multidetector computed tomography: interobserver variability of volumetric lesion parameters with semiautomatic plaque analysis software. Int J Cardiovasc Imaging. 2010;26:711–20. doi: 10.1007/s10554-010-9614-3.

- [43].van Mieghem CA, van der Ent M, de Feyter PJ. Percutaneous coronary intervention for chronic total occlusions: value of pre-procedural multislice CT guidance. Heart. 2007;93:1492–3. doi: 10.1136/hrt.2006.105031.