Abstract

A good understanding of the population dynamics of algal communities is crucial in many ecological and pollution studies of freshwater and oceanic systems. This paper reviews approaches to the automatic identification of algal communities from microscope images using image processing techniques. The techniques of image preprocessing, segmentation, feature extraction, and recognition are considered in turn, and their parameters are summarized. Automatic identification and classification of algal communities are difficult because of factors such as changes in size and shape with climatic conditions and growth stage, and the presence of other microbes. The significance and distinctiveness of the various approaches are therefore discussed, and the image processing methods are evaluated. Algal identification and the associated problems posed by other aquatic organisms are presented as challenges for image processing applications. Approaches based on texture, shape, and object boundaries are highlighted, together with segmentation methods such as edge detection and color segmentation. Finally, artificial neural networks and other machine learning algorithms used to classify and identify algae are described, and the benefits and drawbacks of these schemes are examined.

Keywords: Algae identification, segmentation, neural network, feature extraction, identification

Introduction

Algae are a large and diverse group of simple, usually autotrophic organisms, ranging from unicellular to multicellular forms. They affect water properties such as color, odor, taste, and chemical composition, and may pose hazards to human and animal health.1 They are highly sensitive to changes in their environment.2 Shifts in algal species composition and population can be used to identify environmental changes and the nutrient status of the water.3 Because algae are very good biological indicators for water pollution assessment, they have long been used to assess the quality of lakes, ponds, reservoirs, rivers, and other water bodies. However, identifying algae at the taxonomic level and applying the results in environmental assessment is difficult. Several studies have reported conventional identification of algae from microscopy images, which is a time-consuming process. This has led many researchers to develop systems that automate the analysis and classification of algal images.2,3 An automated computer-based recognition and classification system for the rapid identification of algae would reduce the burden of routine identification on taxonomists.4–6 Such a system would also allow people without specialist knowledge of algae to identify them.

Image processing is an effective technology for analyzing digital images in many applications, including medical, spatial, underwater, and other biological images. Several studies have been carried out on the biodiversity of algae in India.7–13 However, very little research has addressed automatic algal identification using image processing techniques.

Most research has applied image processing to detect, count, identify, and classify algal groups; one such approach achieved 92% accuracy.14 Some of the tools developed are used effectively for online monitoring, some for measuring the density of microorganisms in water, and others to assist the recognition process through image enhancement, noise elimination, and edge-based segmentation.15–17 Combinations of image processing techniques and Artificial Neural Network (ANN) algorithms have been used to automate detection and recognition.18 Other approaches combined image processing with genetic algorithms or ANNs for recognition.15,19–22 MATLAB-based image processing tools were used for the complete enhancement and analysis operations. An automated object recognition system segmented algal images and accurately located candidate objects by their boundary and texture without human interaction.23 Automatic identification and classification of circular diatoms were achieved using contour and texture analysis.24

Image Processing Methodology

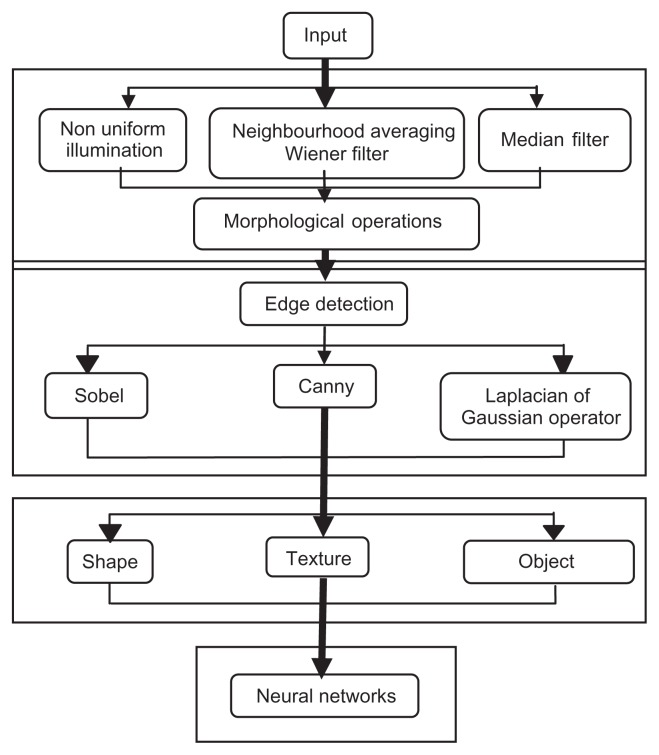

Identification of the algal community from images consists of several steps, namely preprocessing, segmentation, morphological operations, feature extraction, classification, and identification. Figure 1 gives the architectural layout of the image processing method used to identify and classify algae. The following sections discuss the functionality of each processing technique.

Figure 1.

Proposed methodology of automatic algal identification.

Image Preprocessing

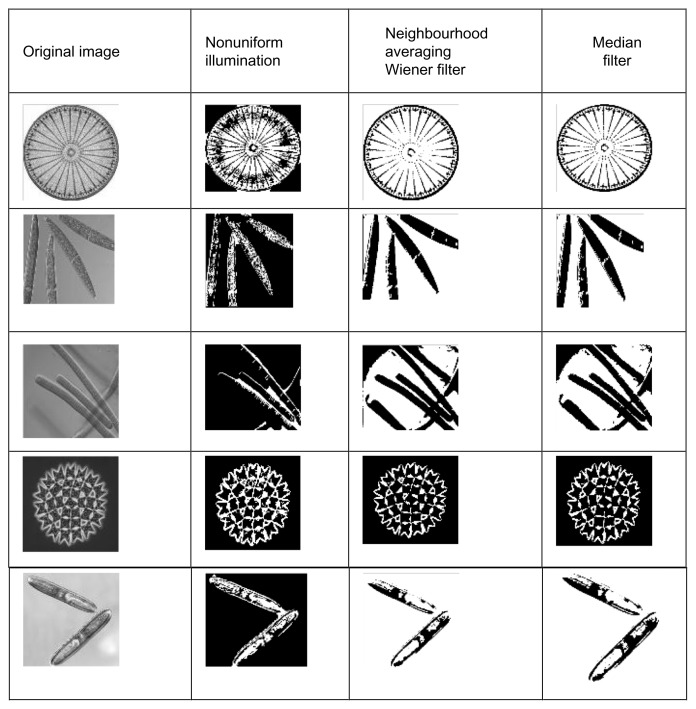

Correct object detection depends on many factors, such as the type of illumination, the presence of shadows, the noise level, the state of focus, overlapping objects, and the degree of similarity between the object and the background.25,26 The digital grayscale images captured from a microscope are preprocessed to reduce the effects of nonuniform illumination and other noise. A median filter (3×3 and 5×5) has been used to reduce image noise.15,27 In the present study, the neighborhood averaging technique was used to enhance the image, and morphological operations were applied to eliminate noise and keep the cyanobacterial structure clear (Fig. 2).
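The two denoising operations mentioned above, median filtering and neighborhood averaging, can be sketched in a few lines of NumPy. This is a minimal illustration assuming a 2-D grayscale image stored as a float array, an odd window size, and reflected borders; the function names are hypothetical and this is not the code used in the cited studies.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def neighborhoods(img: np.ndarray, size: int) -> np.ndarray:
    """Return an (H, W, size, size) view holding every pixel's neighborhood.

    size is assumed odd; borders are handled by reflecting the image
    (an assumption, other border modes are equally common)."""
    pad = size // 2
    padded = np.pad(img, pad, mode="reflect")
    return sliding_window_view(padded, (size, size))


def median_filter(img: np.ndarray, size: int = 3) -> np.ndarray:
    """Replace each pixel by the median of its size x size neighborhood."""
    return np.median(neighborhoods(img, size), axis=(-2, -1))


def mean_filter(img: np.ndarray, size: int = 3) -> np.ndarray:
    """Neighborhood averaging: replace each pixel by its local mean."""
    return neighborhoods(img, size).mean(axis=(-2, -1))


noisy = np.zeros((5, 5))
noisy[2, 2] = 1.0                    # a single "salt" pixel
print(median_filter(noisy).max())    # the isolated spike is removed
print(mean_filter(noisy)[2, 2])      # the spike is only spread out (1/9)
```

The median removes an isolated spike completely, while averaging only spreads it over the neighborhood, which is why the median is usually preferred for impulse noise, at the cost of a sort per pixel.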

Figure 2.

Images preprocessed with various filters.

Note: The original images were collected from the Algal Resource Database, Microbial Culture Collection, National Institute for Environmental Studies. http://www.shigen.nig.ac.jp/algae/.

Nonuniform illumination was corrected using the top-hat filter. Neighborhood averaging with the Wiener filter and the median filter was used to reduce image noise while preserving edges. The performance of the three methods was analyzed statistically, and the results are shown in Table 1. Based on the mean squared error (MSE) and peak signal-to-noise ratio (PSNR) values, the median method showed better results than the other two methods.
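The sketch below shows a grayscale white top-hat transform (the image minus its morphological opening with a flat square structuring element) together with the MSE and PSNR metrics used in Table 1. It assumes intensities scaled to [0, 1] and bright objects on a dark background; for dark objects on a bright field the black top-hat (closing minus image) would be used instead. The structuring-element size and the peak value of 1.0 are assumptions, and because the normalization behind Table 1 is not stated, numbers from this sketch are not expected to reproduce the tabulated values.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _local(img: np.ndarray, size: int, reducer) -> np.ndarray:
    """Apply reducer (np.min or np.max) over each size x size neighborhood."""
    pad = size // 2
    windows = sliding_window_view(np.pad(img, pad, mode="reflect"), (size, size))
    return reducer(windows, axis=(-2, -1))


def white_top_hat(img: np.ndarray, size: int = 15) -> np.ndarray:
    """Image minus its grayscale opening with a flat size x size square.

    The opening (erosion = local min, then dilation = local max) removes bright
    details smaller than the square and keeps the slowly varying background,
    so subtracting it flattens nonuniform illumination."""
    opened = _local(_local(img, size, np.min), size, np.max)
    return img - opened


def mse(reference: np.ndarray, test: np.ndarray) -> float:
    """Mean squared error between two images of the same shape."""
    diff = np.asarray(reference, float) - np.asarray(test, float)
    return float(np.mean(diff ** 2))


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB, assuming intensities in [0, max_value]."""
    err = mse(reference, test)
    return float("inf") if err == 0 else float(10.0 * np.log10(max_value ** 2 / err))


img = np.full((21, 21), 0.5)
img[10, 10] = 1.0
corrected = white_top_hat(img, size=5)
print(corrected[10, 10], corrected[0, 0])             # detail kept, background flattened to 0
print(psnr(np.zeros((4, 4)), np.full((4, 4), 0.1)))   # about 20 dB for MSE = 0.01
```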

Table 1.

Comparison of noise removal filters using MSE and PSNR metrics.

| Image | Median filter MSE | Median filter PSNR (dB) | Wiener filter MSE | Wiener filter PSNR (dB) | Top-hat filter (nonuniform illumination) MSE | Top-hat filter (nonuniform illumination) PSNR (dB) |
|---|---|---|---|---|---|---|
| Diatom | 0.0122 | 30.6193 | 0.0115 | 31.0761 | 0.3481 | 23.3133 |
| Closterium acerosum | 0.0152 | 30.8247 | 0.0120 | 35.4253 | 0.3542 | 23.1095 |
| Oscillatoria | 0.0076 | 33.4772 | 0.0078 | 43.4040 | 0.3090 | 23.9395 |
| Pediastrum | 0.0135 | 30.9478 | 0.0184 | 32.3668 | 0.4764 | 22.3336 |
| Pinnularia | 0.0058 | 35.6971 | 0.0069 | 36.3533 | 0.4965 | 24.4697 |

Image Segmentation

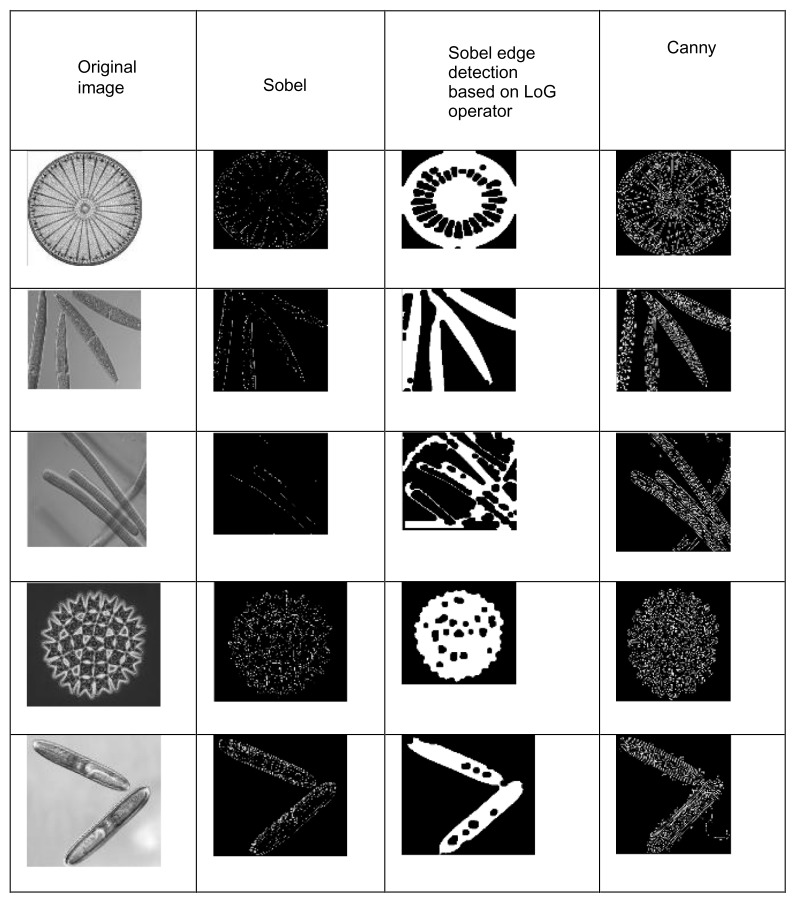

Segmentation separates the objects within each image from the background. It is a key step in image processing.25,26 Algae of the same species can appear in various shapes, so the edges and contours of the objects carry the most meaningful information. Much of the research on automatic identification of algae has therefore used edge detection, for example with the Sobel edge detector.28 The Canny edge detector is another powerful edge detection algorithm for image segmentation.15,24,29
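Sobel edge detection estimates horizontal and vertical derivatives with two 3×3 kernels and thresholds the gradient magnitude. A minimal NumPy sketch, assuming a grayscale float image, replicated borders, and a fixed threshold chosen by the user (the threshold value is a hypothetical parameter):

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

SOBEL_X = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])   # responds to left-to-right intensity change
SOBEL_Y = SOBEL_X.T                      # responds to top-to-bottom intensity change


def correlate3x3(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide a 3x3 kernel over the image (borders replicated)."""
    windows = sliding_window_view(np.pad(img, 1, mode="edge"), (3, 3))
    return np.einsum("ijkl,kl->ij", windows, kernel)


def sobel_edges(img: np.ndarray, threshold: float):
    """Return the gradient magnitude and a binary edge map (magnitude > threshold)."""
    img = np.asarray(img, float)
    gx = correlate3x3(img, SOBEL_X)
    gy = correlate3x3(img, SOBEL_Y)
    magnitude = np.hypot(gx, gy)
    return magnitude, magnitude > threshold


step = np.zeros((5, 6))
step[:, 3:] = 1.0                        # vertical step between columns 2 and 3
magnitude, edges = sobel_edges(step, threshold=2.0)
print(edges.astype(int))                 # columns 2 and 3 are marked as edges
```

Because the Sobel response is two pixels wide at a step, thin edge maps need further processing; the Canny detector builds this in through non-maximum suppression.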

In this study, both the Canny and Sobel edge detection methods were adopted for image segmentation.25 After the Sobel edge detector was applied, the resulting images had many discontinuities, so a Laplacian of Gaussian operator was applied to the Sobel image to smooth it.28 The Canny edge detector found the edges of the algae with minimal discontinuities; to avoid the remaining discontinuities, the method was applied repeatedly to the detected edges. The mean squared error of the Canny method is slightly greater, and its peak signal-to-noise ratio slightly lower, than those of the Sobel method. Overall, the Sobel method gave better object results than the Canny method, as shown in Figure 3 and Table 2.
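For comparison, the compact sketch below follows the usual Canny stages: Gaussian smoothing, Sobel gradients, non-maximum suppression along the quantized gradient direction, and double thresholding with hysteresis. It is a simplified illustration, not the implementation used in this study. The thresholds are taken as fractions of the strongest response, which is an assumption (implementations differ on this), and `sigma`, `low`, and `high` are illustrative defaults.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _correlate3x3(img, kernel):
    windows = sliding_window_view(np.pad(img, 1, mode="edge"), (3, 3))
    return np.einsum("ijkl,kl->ij", windows, kernel)


def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian smoothing with a kernel truncated at 3 sigma."""
    r = max(1, int(3 * sigma))
    x = np.arange(-r, r + 1)
    g = np.exp(-x ** 2 / (2 * sigma ** 2))
    g /= g.sum()
    padded = np.pad(img, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, g, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, g, mode="valid"), 0, rows)


def canny(img, sigma=1.0, low=0.1, high=0.2):
    """Simplified Canny detector; low/high are fractions of the peak response."""
    smooth = gaussian_blur(np.asarray(img, float), sigma)
    kx = np.array([[-1.0, 0.0, 1.0], [-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]])
    gx, gy = _correlate3x3(smooth, kx), _correlate3x3(smooth, kx.T)
    mag = np.hypot(gx, gy)

    # Non-maximum suppression: quantize the gradient direction to 0/45/90/135
    # degrees and keep a pixel only if it is >= both neighbors along it.
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180.0
    direction = np.round(angle / 45.0).astype(int) % 4
    steps = {0: (0, 1), 1: (1, 1), 2: (1, 0), 3: (1, -1)}   # (row, col) offsets
    h, w = mag.shape
    p = np.pad(mag, 1)
    nms = np.zeros_like(mag)
    for k, (dy, dx) in steps.items():
        ahead = p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        behind = p[1 - dy:1 - dy + h, 1 - dx:1 - dx + w]
        keep = (direction == k) & (mag >= ahead) & (mag >= behind)
        nms[keep] = mag[keep]

    peak = nms.max()
    if peak == 0:                                # flat image: no edges
        return np.zeros(mag.shape, dtype=bool)
    strong = nms >= high * peak
    weak = nms >= low * peak
    # Hysteresis: grow strong edges into 8-connected weak pixels until stable.
    edges = strong
    while True:
        grown = sliding_window_view(np.pad(edges, 1), (3, 3)).any(axis=(-2, -1)) & weak
        if np.array_equal(grown, edges):
            return edges
        edges = grown


step = np.zeros((20, 20))
step[:, 10:] = 1.0
edges = canny(step)
print(np.nonzero(edges[0])[0])                   # edge columns found in the first row
```

Unlike the thresholded Sobel map, non-maximum suppression leaves a thin edge at the step, and hysteresis keeps weak edge pixels only where they connect to strong ones, which is what reduces broken contours.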

Figure 3.

Edge detection methods.

Table 2.

Comparison of edge detection methods using MSE and PSNR metrics.

| Image | Sobel MSE | Sobel PSNR (dB) | Canny MSE | Canny PSNR (dB) |
|---|---|---|---|---|
| Diatom | 0.4546 | 25.7925 | 0.4236 | 27.8187 |
| Closterium acerosum | 0.3674 | 24.4938 | 0.3630 | 27.0445 |
| Gloeotrichia | 0.3016 | 26.2720 | 0.3097 | 27.6404 |
| Pediastrum | 0.5193 | 24.7969 | 0.4967 | 27.1131 |
| Pinnularia | 0.5087 | 25.9998 | 0.4941 | 27.4304 |

Feature Extraction

Feature extraction transforms the binary and color images from the preprocessing stage into a set of parameters that describe the algal features.15 Once a feature of interest has been detected, its representation is compared with all the possible features known to the processor.

There are two main methods for object identification that use boundary information.26 The first is the Fourier descriptor method, and the second is the moment invariant method. In the Fourier descriptor method, the boundary is divided into N = 2ⁿ parts to produce N equidistant boundary points. The coordinates of these points are then processed with the fast Fourier transform, which produces a frequency-domain description of the boundary. The second method uses moment invariants: seven moment invariants can be derived, all of which are invariant to object position, orientation, and magnification.23
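The Fourier descriptor method can be sketched as follows: the boundary is resampled to N equidistant points by arc length (a power of two, N = 2ⁿ, is convenient for the FFT), treated as complex numbers x + iy, and transformed. Dropping the zero-frequency term removes position, dividing by the largest remaining magnitude removes scale, and keeping only magnitudes removes rotation. The boundary is assumed to be an ordered list of (x, y) vertices of a closed contour; `n` and `keep` are illustrative parameters, and the index of the dominant coefficient depends on the traversal direction.

```python
import numpy as np


def resample_boundary(points: np.ndarray, n_points: int):
    """Resample a closed polygon (K x 2 array of x, y) at n_points equal arc-length steps."""
    pts = np.asarray(points, float)
    closed = np.vstack([pts, pts[:1]])                    # close the contour
    seg = np.hypot(*np.diff(closed, axis=0).T)            # edge lengths
    s = np.concatenate([[0.0], np.cumsum(seg)])           # cumulative arc length
    t = np.linspace(0.0, s[-1], n_points, endpoint=False)
    return np.interp(t, s, closed[:, 0]), np.interp(t, s, closed[:, 1])


def fourier_descriptors(points: np.ndarray, n: int = 6, keep: int = 10) -> np.ndarray:
    """Magnitude-based Fourier descriptors from N = 2**n boundary points."""
    x, y = resample_boundary(points, 2 ** n)
    F = np.fft.fft(x + 1j * y)          # boundary as a complex signal
    mags = np.abs(F[1:])                # drop F[0]: it only encodes position
    # Normalize scale; magnitudes are unchanged by rotation and cyclic shifts.
    return mags[:keep] / mags.max()


square = np.array([[0, 0], [1, 0], [1, 1], [0, 1]], float)
c, s_ = np.cos(0.7), np.sin(0.7)
moved = 3.0 * square @ np.array([[c, -s_], [s_, c]]).T + [5.0, -2.0]
print(np.allclose(fourier_descriptors(square), fourier_descriptors(moved)))  # True
```

The scaled, rotated, and translated square yields the same descriptors, which is the property that makes them usable for matching shapes of different sizes and orientations.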

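The two-dimensional moments of a digitally sampled M × M image f(x, y), x, y = 0, …, M − 1, are given by

$$m_{pq} = \sum_{x=0}^{M-1} \sum_{y=0}^{M-1} x^{p} y^{q} f(x, y), \qquad p, q = 0, 1, 2, 3 \qquad (1)$$

The moments of f(x, y) translated by an amount (a, b) are defined as

$$m'_{pq} = \sum_{x=0}^{M-1} \sum_{y=0}^{M-1} (x + a)^{p} (y + b)^{q} f(x, y) \qquad (2)$$

Thus, the central moments m′pq or μpq can be computed from (2) by substituting a = −x̄ and b = −ȳ, where x̄ = m10/m00 and ȳ = m01/m00:

$$\mu_{pq} = \sum_{x=0}^{M-1} \sum_{y=0}^{M-1} (x - \bar{x})^{p} (y - \bar{y})^{q} f(x, y) \qquad (3)$$

When scale normalization is applied, the central moments become

$$\eta_{pq} = \frac{\mu_{pq}}{\mu_{00}^{\gamma}}, \qquad \gamma = \frac{p + q}{2} + 1 \qquad (4)$$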

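In particular, Hu defined seven values, computed by normalizing the central moments up to order three, that are invariant to object scale, position, and orientation.30 In terms of the normalized central moments η_pq of (4), the seven moment invariants are

$$\phi_1 = \eta_{20} + \eta_{02} \qquad (5)$$

$$\phi_2 = (\eta_{20} - \eta_{02})^2 + 4\eta_{11}^2 \qquad (6)$$

$$\phi_3 = (\eta_{30} - 3\eta_{12})^2 + (3\eta_{21} - \eta_{03})^2 \qquad (7)$$

$$\phi_4 = (\eta_{30} + \eta_{12})^2 + (\eta_{21} + \eta_{03})^2 \qquad (8)$$

$$\phi_5 = (\eta_{30} - 3\eta_{12})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] + (3\eta_{21} - \eta_{03})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] \qquad (9)$$

$$\phi_6 = (\eta_{20} - \eta_{02})\left[(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] + 4\eta_{11}(\eta_{30} + \eta_{12})(\eta_{21} + \eta_{03}) \qquad (10)$$

$$\phi_7 = (3\eta_{21} - \eta_{03})(\eta_{30} + \eta_{12})\left[(\eta_{30} + \eta_{12})^2 - 3(\eta_{21} + \eta_{03})^2\right] - (\eta_{30} - 3\eta_{12})(\eta_{21} + \eta_{03})\left[3(\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2\right] \qquad (11)$$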
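Hu's seven invariants can be computed directly from the normalized central moments η_pq = μ_pq / μ00^((p+q)/2 + 1). The NumPy sketch below does this for a grayscale or binary image. It takes array rows as y and columns as x (an assumption about orientation); swapping the axes reflects the image, which leaves φ1 to φ6 unchanged but flips the sign of φ7.

```python
import numpy as np


def hu_moments(img: np.ndarray) -> np.ndarray:
    """Hu's seven moment invariants of a grayscale or binary image (rows = y, columns = x)."""
    f = np.asarray(img, float)
    y, x = np.mgrid[:f.shape[0], :f.shape[1]]
    m00 = f.sum()
    xbar, ybar = (x * f).sum() / m00, (y * f).sum() / m00   # centroid

    def eta(p: int, q: int) -> float:
        mu = ((x - xbar) ** p * (y - ybar) ** q * f).sum()  # central moment
        return mu / m00 ** ((p + q) / 2 + 1)                 # scale normalization (mu00 = m00)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03
    return np.array([
        n20 + n02,
        (n20 - n02) ** 2 + 4 * n11 ** 2,
        (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2,
        a ** 2 + b ** 2,
        (n30 - 3 * n12) * a * (a ** 2 - 3 * b ** 2) + (3 * n21 - n03) * b * (3 * a ** 2 - b ** 2),
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,
        (3 * n21 - n03) * a * (a ** 2 - 3 * b ** 2) - (n30 - 3 * n12) * b * (3 * a ** 2 - b ** 2),
    ])


img = np.zeros((40, 40))
img[5:15, 8:12] = 1.0                 # a 10 x 4 rectangle
moved = np.zeros((40, 40))
moved[20:30, 25:29] = 1.0             # the same rectangle, translated
print(np.allclose(hu_moments(img), hu_moments(moved)))   # True
```

For symmetric shapes such as this rectangle, φ3 to φ7 are essentially zero, which is consistent with the many near-zero higher-order values in Table 3.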

The moment invariant features are given in Table 3.

Table 3.

Moment invariants for the algae.

| Image | Moment invariants |
|---|---|
| Anabaena | 0.0211, 0.0004, 0.0000, 0.0000, 0.0000, 0.0000, 0 |
| Closte | 0.0189, 0.0004, 0.0000, 0.0000, 0.0000, 0.0000, 0 |
| Diatom | 0.0191, 0.0004, 0.0000, 0.0000, 0.0000, 0.0000, 0 |
| Eremo | 0.0183, 0.0003, 0.0000, 0.0000, 0.0000, 0.0000, 0 |
| Fibro | 0.0184, 0.0003, 0.0000, 0.0000, 0.0000, 0.0000, 0 |
| Gloeo | 0.0183, 0.0003, 0.0000, 0.0000, 0.0000, 0.0000, 0 |
| Microcystis | 0.0225, 0.0005, 0.0000, 0.0000, 0.0000, 0.0000, 0 |
| Oscillatoria | 0.0235, 0.0006, 0.0000, 0.0000, 0.0000, 0.0000, 0 |
| Penium | 0.0189, 0.0004, 0.0000, 0.0000, 0.0000, 0.0000, 0 |

Walker et al26 introduced new features for classification. To classify an object into one of a number of classes (eg, Microcystis or Anabaena), it is essential to measure quantitatively the characteristics of the object that may indicate its class membership. For example, area is an excellent feature for discriminating between the cyanobacteria Microcystis and Anabaena, because these two genera differ substantially in size. The features of each object used for identification include morphometric properties (area, circularity, and perimeter length), object boundary, shape features, frequency domain features, and spatial statistics such as gray-level co-occurrence matrix (GLCM) measures.
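The morphometric and texture features listed above can be sketched in NumPy as follows. Area is the pixel count of a binary mask; perimeter is estimated crudely as the number of pixel sides where object meets background; circularity is 4πA/P², which gives π/4 for a square under this estimate (the estimate overstates diagonal and curved boundaries, so digitized disks also score well below 1). The GLCM is built for a single non-negative pixel offset from an image already quantized to `levels` gray levels. The offset, the quantization, and the three statistics (contrast, homogeneity, energy) are illustrative assumptions, not necessarily the measures used by Walker et al.

```python
import numpy as np


def morphometrics(mask: np.ndarray):
    """Area, crack-length perimeter, and circularity 4*pi*A/P**2 of a binary mask."""
    m = np.pad(np.asarray(mask, bool), 1)
    area = int(m.sum())
    # Count pixel sides where object meets background (crude perimeter estimate).
    perimeter = int((m[1:, :] != m[:-1, :]).sum() + (m[:, 1:] != m[:, :-1]).sum())
    circularity = 4 * np.pi * area / perimeter ** 2 if perimeter else 0.0
    return area, perimeter, circularity


def glcm(gray: np.ndarray, levels: int, dx: int = 1, dy: int = 0) -> np.ndarray:
    """Normalized gray-level co-occurrence matrix for a non-negative offset (dx, dy).

    gray must already be quantized to integers in [0, levels)."""
    g = np.asarray(gray, int)
    h, w = g.shape
    ref = g[:h - dy, :w - dx]            # pixel (i, j)
    nbr = g[dy:, dx:]                    # neighbor (i + dy, j + dx)
    P = np.zeros((levels, levels))
    np.add.at(P, (ref.ravel(), nbr.ravel()), 1)
    return P / P.sum()


def glcm_features(P: np.ndarray) -> dict:
    """Three common GLCM statistics."""
    i, j = np.indices(P.shape)
    return {
        "contrast": float(np.sum(P * (i - j) ** 2)),
        "homogeneity": float(np.sum(P / (1.0 + np.abs(i - j)))),
        "energy": float(np.sum(P ** 2)),
    }


mask = np.zeros((7, 7), bool)
mask[2:5, 2:5] = True
print(morphometrics(mask))               # area 9, perimeter 12, circularity pi/4
gray = np.array([[0, 0, 1], [0, 0, 1]])
print(glcm_features(glcm(gray, levels=2)))
```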

Principal component analysis (PCA) is widely used in image processing applications to reduce the number of features.1 PCA is a mathematical procedure that transforms a number of (possibly) correlated variables into a (smaller) number of uncorrelated variables called principal components. The first principal component accounts for as much of the variability in the data as possible, and each succeeding component accounts for as much of the remaining variability as possible. The Fourier spectrum is well suited to describing the directionality of periodic or nearly periodic two-dimensional patterns in a round image.24,30
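A minimal PCA sketch via the singular value decomposition of the centered feature matrix (rows are objects, columns are features such as area or circularity). Because such features have very different units, the sketch optionally standardizes each column to zero mean and unit variance first; that choice is an assumption that is common, but not universal, in practice. The data in the usage example are synthetic.

```python
import numpy as np


def pca(features: np.ndarray, n_components: int, standardize: bool = True):
    """Project rows of an (objects x features) matrix onto the leading principal components."""
    X = np.asarray(features, float)
    Xc = X - X.mean(axis=0)
    if standardize:                        # put area, circularity, ... on a common scale
        std = Xc.std(axis=0)
        Xc = Xc / np.where(std > 0, std, 1.0)
    # Right singular vectors of the centered data are the principal axes.
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    components = vt[:n_components]
    scores = Xc @ components.T             # uncorrelated coordinates
    explained = s ** 2 / np.sum(s ** 2)    # fraction of variance per component
    return scores, components, explained[:n_components]


rng = np.random.default_rng(0)
X = rng.normal(size=(50, 4))
X[:, 3] = 2 * X[:, 0] + 0.01 * rng.normal(size=50)   # a nearly redundant feature
scores, components, explained = pca(X, 3)
print(explained)                           # variance share of the first three components
```

Because feature 3 nearly duplicates feature 0, most of their joint variance collapses onto one component, which is the redundancy PCA is meant to remove.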

Identification

The classification method uses a set of features or parameters, relevant to the task at hand, to differentiate each object. A human expert has to determine the classes into which an object may be categorized and provide a set of sample objects with known classes. This set of identified objects, called the training set, is used to train the classification program to classify objects.

In the automated recognition of blue-green algae,23 discriminant analysis was used for classification. This statistical method provides a discriminant function for each species. Discriminant analysis serves two objectives: assessing the adequacy of a classification, given the group memberships of the objects under study, or assigning objects to one of a number of known groups.
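To illustrate one discriminant function per class, the sketch below implements linear discriminant analysis with a pooled within-class covariance Σ: each class k gets g_k(x) = xᵀΣ⁻¹μ_k − ½μ_kᵀΣ⁻¹μ_k + ln π_k, and an object is assigned to the class with the largest score. The exact discriminant formulation of the cited study is not given here, so this is a generic sketch; the two-feature training data and class labels in the usage example are synthetic and hypothetical.

```python
import numpy as np


def fit_lda(X, y):
    """Linear discriminant analysis with a pooled within-class covariance."""
    X, y = np.asarray(X, float), np.asarray(y)
    classes = np.unique(y)
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    priors = np.array([np.mean(y == c) for c in classes])
    residuals = X - means[np.searchsorted(classes, y)]      # deviations from own class mean
    pooled_cov = residuals.T @ residuals / (len(X) - len(classes))
    inv_cov = np.linalg.pinv(pooled_cov)
    weights = means @ inv_cov                                # row k: mu_k^T Sigma^-1
    biases = -0.5 * np.sum(weights * means, axis=1) + np.log(priors)
    return classes, weights, biases


def predict_lda(model, X):
    """Evaluate every class's linear discriminant function and pick the largest."""
    classes, weights, biases = model
    scores = np.asarray(X, float) @ weights.T + biases
    return classes[np.argmax(scores, axis=1)]


rng = np.random.default_rng(0)
class_a = rng.normal([10.0, 0.3], [0.5, 0.05], (30, 2))    # synthetic size/shape values
class_b = rng.normal([2.0, 0.9], [0.5, 0.05], (30, 2))
X = np.vstack([class_a, class_b])
y = np.array(["A"] * 30 + ["B"] * 30)
model = fit_lda(X, y)
print(predict_lda(model, [[9.5, 0.35], [2.5, 0.85]]))
```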

Gao et al24 proposed a neural network classifier. To test performance, networks were designed with 15, 30, 40, 60, or 80 nodes in a single hidden layer and an output layer of six nodes, one for each class.

Mansoor et al1 presented a multilayer perceptron feed-forward ANN to identify selected cyanobacteria. The ANN architecture consists of six inputs, three outputs, and three neurons in the hidden layer, with a learning rate of 0.78 and a momentum of 0.5. During training, the classifier is used to index the database content for categorization.
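A minimal NumPy sketch of a one-hidden-layer feed-forward network trained by back-propagation with momentum. It uses the learning rate (0.78) and momentum (0.5) reported for Mansoor et al's network; the layer sizes (six inputs, three hidden neurons, three outputs), sigmoid activations, squared-error loss, weight initialization, and epoch count are assumptions for illustration, and the training data are synthetic.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_mlp(X, T, n_hidden=3, lr=0.78, momentum=0.5, epochs=5000, seed=0):
    """Full-batch back-propagation with momentum for a one-hidden-layer sigmoid network."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 0.5, (X.shape[1], n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(0.0, 0.5, (n_hidden, T.shape[1]))
    b2 = np.zeros(T.shape[1])
    params = [W1, b1, W2, b2]
    velocity = [np.zeros_like(p) for p in params]
    losses = []
    for _ in range(epochs):
        H = sigmoid(X @ W1 + b1)                       # forward pass
        Y = sigmoid(H @ W2 + b2)
        losses.append(0.5 * np.mean(np.sum((Y - T) ** 2, axis=1)))
        d_out = (Y - T) * Y * (1.0 - Y) / len(X)       # backward pass, squared-error loss
        d_hid = (d_out @ W2.T) * H * (1.0 - H)
        grads = [X.T @ d_hid, d_hid.sum(axis=0), H.T @ d_out, d_out.sum(axis=0)]
        for p, v, g in zip(params, velocity, grads):
            v *= momentum                              # momentum term
            v -= lr * g
            p += v                                     # in-place, so W1..b2 are updated
    return params, losses


def predict(params, X):
    W1, b1, W2, b2 = params
    return np.argmax(sigmoid(sigmoid(X @ W1 + b1) @ W2 + b2), axis=1)


rng = np.random.default_rng(1)
labels = np.repeat([0, 1, 2], 20)                      # three hypothetical classes
X = rng.normal(0.0, 0.05, (60, 6))                     # six synthetic features
X[np.arange(60), 2 * labels] += 1.0                    # class c raises features 2c and 2c+1
X[np.arange(60), 2 * labels + 1] += 1.0
T = np.eye(3)[labels]                                  # one-hot targets
params, losses = train_mlp(X, T)
accuracy = np.mean(predict(params, X) == labels)
print(losses[0], losses[-1], accuracy)
```

The momentum term reuses part of the previous update, which smooths the descent and usually speeds up training compared with plain gradient steps at the same learning rate.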

Walker et al26 implemented a general Bayes decision function for assumed Gaussian feature distributions with unequal variance–covariance matrices. The resulting decision surface is hyperquadric. The study targeted only the Anabaena and Microcystis genera, so the microalgae in the water samples were classified to genus level.
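Giving each class its own covariance matrix turns the Gaussian Bayes rule into a quadratic discriminant, which is why the decision surface is hyperquadric. The sketch below scores each class by its Gaussian log-likelihood plus log prior. It is a generic illustration rather than Walker et al's implementation; the synthetic example uses two classes with the same mean but different spreads, a case that a classifier with one shared covariance could not separate.

```python
import numpy as np


def fit_gaussian_bayes(X, y):
    """Per-class mean, inverse covariance, log-determinant, and log prior."""
    X, y = np.asarray(X, float), np.asarray(y)
    classes = np.unique(y)
    params = []
    for c in classes:
        Xc = X[y == c]
        cov = np.cov(Xc, rowvar=False)               # class-specific (unequal) covariance
        params.append((Xc.mean(axis=0), np.linalg.inv(cov),
                       np.linalg.slogdet(cov)[1], np.log(len(Xc) / len(X))))
    return classes, params


def predict_gaussian_bayes(model, X):
    """Assign each row to the class with the largest log posterior (up to a constant)."""
    classes, params = model
    X = np.asarray(X, float)
    scores = []
    for mean, inv_cov, logdet, logprior in params:
        d = X - mean
        mahalanobis = np.einsum("ij,jk,ik->i", d, inv_cov, d)   # quadratic in x
        scores.append(-0.5 * mahalanobis - 0.5 * logdet + logprior)
    return classes[np.argmax(np.vstack(scores), axis=0)]


rng = np.random.default_rng(0)
narrow = rng.normal(0.0, 0.5, (200, 2))              # synthetic, tightly clustered class
wide = rng.normal(0.0, 3.0, (200, 2))                # synthetic, widely spread class
X = np.vstack([narrow, wide])
y = np.array(["narrow"] * 200 + ["wide"] * 200)
model = fit_gaussian_bayes(X, y)
print(predict_gaussian_bayes(model, [[0.0, 0.0], [6.0, 6.0]]))
```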

Fang et al19 used a perceptron and a feed-forward back-propagation neural network. The perceptron, with six neurons, achieved 100% sensitivity but only 39.8% specificity. The result reported for this application was 97.8% sensitivity and 72.4% specificity.

Anggraini et al27 implemented a Bayes classifier at each node. The classification model was evaluated using 20 microphotographs obtained from different blood smears, and infected erythrocytes were identified with a sensitivity of 92.59% and a specificity of 99.65%.
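Sensitivity and specificity, the figures quoted for both studies above, come from the confusion counts: sensitivity = TP / (TP + FN) and specificity = TN / (TN + FP). The short sketch below computes them from binary labels (it assumes both classes occur in the ground truth) and shows why a classifier biased toward the positive class can reach 100% sensitivity with poor specificity. The labels are a made-up example.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels (truthy = positive)."""
    pairs = [(bool(t), bool(p)) for t, p in zip(y_true, y_pred)]
    tp = sum(t and p for t, p in pairs)              # positives correctly detected
    fn = sum(t and not p for t, p in pairs)          # positives missed
    tn = sum(not t and not p for t, p in pairs)      # negatives correctly rejected
    fp = sum(not t and p for t, p in pairs)          # false alarms
    return tp / (tp + fn), tn / (tn + fp)


truth = [1, 1, 1, 0, 0, 0, 0, 0]
print(sensitivity_specificity(truth, [1] * 8))                   # (1.0, 0.0)
print(sensitivity_specificity(truth, [1, 1, 0, 0, 0, 0, 0, 1]))  # (0.666..., 0.8)
```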

In this study, a back-propagation neural network was used to classify the images; it achieved 100% classification accuracy on the training images and 80% on the test images. The results are shown in Table 4.

Table 4.

Observations and analysis of existing systems.

| Author | Year | Objectives | Segmentation | Feature extraction | Classification | Results |
|---|---|---|---|---|---|---|
| Stefan et al | 1995 | Automated recognition of blue-green algae | Sobel edge detection | Fourier descriptors and moment invariants | Discriminant analysis | 98% |
| Gao et al | 2011 | Automatic identification of diatoms with circular shape using texture analysis | Canny edge detection | Fourier spectrum | Neural networks | 94.44% |
| Mansoor et al | 2011 | Automatic recognition system for some cyanobacteria using image processing techniques and ANN approach | Thresholding technique | Principal component analysis | Multilayer perceptron feed-forward artificial neural networks | 95% |
| Walker et al | 2002 | Fluorescence-assisted image analysis of freshwater microalgae | Binary segmentation | Co-occurrence matrix measures | Bayes decision function | – |
| Fang et al | 2011 | Automatic identification of Mycobacterium tuberculosis in acid-fast stain sputum smears with image processing and neural networks | – | – | Perceptron and FFNN | 100% |
| Anggraini et al | 2011 | Automated status identification of microscopic images obtained from malaria thin blood smears using Bayes decision | Edge detection, thresholding, segmentation, and watershed algorithm | – | Bayes classifier | 99.65% |

Conclusion

This paper reviewed various image processing techniques for preprocessing, segmentation, feature extraction, and classification. A detection rate of more than 98% was achieved by combining all the features. In particular, an identification rate of 86.5% was achieved using a neural network. An accuracy of 95% was achieved in the identification and classification of four genera of cyanobacteria using back propagation and shape boundary features, and a classification accuracy of 97% was achieved using feature extraction techniques based on object size, shape, and texture. For automatic algal identification, accuracy increased when several features, such as shape, size, object boundary, and texture, were combined with morphological operators. The automatic identification rate can be increased further by using different segmentation methods and developing new features for microscopic algal images.

Footnotes

Author Contributions

Conceived and designed the experiments: NS, CP, PS, SK. Analyzed the data: Wrote the first draft of the manuscript: NS, CP, PS. Contributed to the writing of the manuscript: NS, CP, PS. Agree with manuscript results and conclusions: NS, CP, PS. Jointly developed the structure and arguments for the paper: NS, CP, PS, SK. Made critical revisions and approved final version: NS, CP, PS, SK. All authors reviewed and approved the final manuscript.

Competing Interests

Author(s) disclose no potential conflicts of interest.

Disclosures and Ethics

As a requirement of publication the authors have provided signed confirmation of their compliance with ethical and legal obligations including but not limited to compliance with ICMJE authorship and competing interests guidelines, that the article is neither under consideration for publication nor published elsewhere, of their compliance with legal and ethical guidelines concerning human and animal research participants (if applicable), and that permission has been obtained for reproduction of any copyrighted material. This article was subject to blind, independent, expert peer review. The reviewers reported no competing interests.

Funding

The authors would like to thank the University Grants Commission, Government of India, for funding this project.

References

- 1. Mansoor H, Sorayya M, Aishah S, Moshleh MAA. Automatic recognition system for some cyanobacteria using image processing techniques and ANN approach. 2011 International Conference on Environmental and Computer Science. 2011;19:73–8.

- 2. Anton A. Algae in the conservation and management of freshwaters. Malayan Nature Society; International Development Research Centre of Canada; 1991.

- 3. Culverhouse PF, Williams R, Benfield M, et al. Automatic image analysis of plankton: future perspectives. Mar Ecol Prog Ser. 2006;312:297–309.

- 4. Weeks PJD, Gauld ID, Gaston KJ, O’Neill MA. Automating the identification of insects: a new solution to an old problem. Bull Entomol Res. 1997;87(2):203–11.

- 5. Culverhouse PF, Williams R, Reguera B, Herry V, González-Gil S. Do experts make mistakes? A comparison of human and machine identification of dinoflagellates. Mar Ecol Prog Ser. 2003;247:17–25.

- 6. Patrick R. What are the requirements for an effective biomonitor? In: Loeb SL, Spacie A, editors. Biological Monitoring of Aquatic Systems. Boca Raton, FL: Lewis Publishers; 1994. pp. 23–9.

- 7. Anand N. Indian Fresh Water Microalgae. Dehradun, India: Bishen Singh Mahendrapal Singh; 1998.

- 8. Mishra PK, Srivastava AK, Prakash J, Asthana DK, Rai SK. Some fresh water algae of Eastern Uttar Pradesh, India. Our Nature. 2005;3:77–80.

- 9. Mahendraperumal G, Anand N. Manual of Fresh Water Algae of Tamilnadu. Dehradun, India: Bishen Singh Mahendrapal Singh; 2008. p. 124.

- 10. Sankaran V. Fresh water algal biodiversity of the Anaimalai hills, Tamil Nadu-Chlorophyta-Chlorococcales. Biology and Biodiversity of Microalgae. 2009:84–93.

- 11. Arulmurugan PS, Nagaraj S, Anand N. Biodiversity of fresh water algae from temple tanks of Kerala. Recent Research in Science and Technology. 2010;2(6):58–72.

- 12. Makandar BM, Bhatnagar A. Biodiversity of microalgae and cyanobacteria from freshwater bodies of Jodhpur, Rajasthan (India). Journal of Algal Biomass Utilization. 2010;1(3):54–69.

- 13. Arulmurugan P, Nagaraj S, Anand N. Biodiversity of fresh water algae from Guindy campus of Chennai, India. Journal of Ecobiotechnology. 2011;3(10):19–29.

- 14. Jefferies HP, Berman MS, Poularikas AD, et al. Automated sizing, counting and identification of zooplankton by pattern recognition. Marine Biology. 1984;78:329–34.

- 15. Mosleh MA, Manssor H, Malek S, Milow P, Salleh A. A preliminary study on automated freshwater algae recognition and classification system. BMC Bioinformatics. 2012;13(Suppl 17):S25. doi: 10.1186/1471-2105-13-S17-S25.

- 16. Katsinis C, Poularikas AD. Image processing and pattern recognition with applications to marine biological images. Proceedings of the SPIE 7th Meeting on Applications of Digital Image Processing; San Diego, CA. 1984. pp. 324–9.

- 17. Estep KW, MacIntyre F, Hjörleifsson E, Sieburth JM. MacImage: a user-friendly image-analysis system for the accurate mensuration of marine organisms. Mar Ecol Prog Ser. 1986;33:243–53.

- 18. Kamath SB, Chidambar S, Brinda BR, Kumar MA, Sarada R, Ravishankar GA. Digital image processing-an alternate tool for monitoring of pigment levels in cultured cells with special reference to green alga Haematococcus pluvialis. Biosens Bioelectron. 2005;21(5):768–73. doi: 10.1016/j.bios.2005.01.022.

- 19. Cheng J, Ji G, Feng C, Zheng H. Application of connected morphological operators to image smoothing and edge detection of algae. International Conference on Information Technology and Computer Science; 2009. pp. 73–6.

- 20. Schultze-Lam S, Harauz G, Beveridge TJ. Participation of a cyanobacterial S layer in fine-grain mineral formation. J Bacteriol. 1992;174(24):7971–81. doi: 10.1128/jb.174.24.7971-7981.1992.

- 21. Blackburn N, Hagstrom A, Wikner J, Cuadros-Hansson R, Bjornsen PK. Rapid determination of bacterial abundance, biovolume, morphology, and growth by neural network-based image analysis. Appl Environ Microbiol. 1998;64(9):3246–55. doi: 10.1128/aem.64.9.3246-3255.1998.

- 22. Siena I, Adi K, Gernowo R, Miransari N. Development of algorithm tuberculosis bacteria identification using color segmentation and neural networks. International Journal of Video and Image Processing and Network Security. 2012;12(4):9–13.

- 23. Stefan UT, Ron JW, Davies LJ. Automated object recognition of blue-green algae for measuring water quality using digital image processing techniques. Environ Int. 1995;21(2):233–6.

- 24. Luo Q, Gao Y, Luo J, Chen C, Liang J, Yang C. Automatic identification of diatoms with circular shape using texture analysis. Journal of Software. 2011;6(3):428–35.

- 25. Gupta S, Purkayastha SS. Image enhancement and analysis of microscopic images using various image processing techniques. Proceedings of the International Journal of Engineering Research and Applications. 2012;2(3):44–8.

- 26. Walker RF, Ishikawa K, Kumagai M. Fluorescence-assisted image analysis of freshwater microalgae. J Microbiol Methods. 2002;51(2):149–62. doi: 10.1016/s0167-7012(02)00057-x.

- 27. Anggraini D, Nugroho AS, Pratama C, Rozi IE, Pragesjvara V, Gunawan M. Automated status identification of microscopic images obtained from malaria thin blood smears using Bayes decision: a study case in Plasmodium falciparum. Proceedings of the International Conference on Advanced Computer Science & Information Systems; Jakarta, Indonesia. Dec 17–18, 2011; pp. 347–52.

- 28. Gonzalez RC, Woods RE. Digital Image Processing. 3rd ed. Reading, MA: Addison-Wesley; 1992.

- 29. Canny J. A computational approach to edge detection. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1986;8(6):679–98.

- 30. Jolliffe IT. Principal Component Analysis. 2nd ed. New York, NY: Springer; 2002.