Summary

Background

Abnormal test results do not always receive timely follow-up, even when providers are notified through electronic health record (EHR)-based alerts. High workload, alert fatigue, and other demands on attention disrupt a provider’s prospective memory for tasks required to initiate follow-up. Thus, EHR-based tracking and reminding functionalities are needed to improve follow-up.

Objectives

The purpose of this study was to develop a decision-support software prototype enabling individual and system-wide tracking of abnormal test result alerts lacking follow-up, and to conduct formative evaluations, including usability testing.

Methods

We developed a working prototype software system, the Alert Watch And Response Engine (AWARE), to detect abnormal test result alerts lacking documented follow-up, and to present context-specific reminders to providers. Development and testing took place within the VA’s EHR and focused on four cancer-related abnormal test results. Design concepts emphasized mitigating the effects of high workload and alert fatigue while being minimally intrusive. We conducted a multifaceted formative evaluation of the software, addressing fit within the larger socio-technical system. Evaluations included usability testing with the prototype and interview questions about organizational and workflow factors. Participants included 23 physicians, 9 clinical information technology specialists, and 8 quality/safety managers.

Results

Evaluation results indicated that our software prototype fit within the technical environment and clinical workflow, and physicians were able to use it successfully. Quality/safety managers reported that the tool would be useful in future quality assurance activities to detect patients who lack documented follow-up. Additionally, we successfully installed the software on the local facility’s “test” EHR system, thus demonstrating technical compatibility.

Conclusion

To address the factors involved in missed test results, we developed a software prototype to account for technical, usability, organizational, and workflow needs. Our evaluation has shown the feasibility of the prototype as a means of facilitating better follow-up for cancer-related abnormal test results.

Key words: Diagnostic errors, patient safety, test results, care delays, health information technology

1. Background

Improving the timeliness of abnormal test result follow-up is a significant challenge for clinical informatics [1, 2]. Notifying providers of abnormal findings via electronic health record (EHR)-based alerts is insufficient because notification is no guarantee of follow-up. In previous work involving 2,500 asynchronous alerts related to abnormal laboratory and imaging results, we found that 7% of laboratory and 8% of imaging result alerts lacked timely follow-up at 4 weeks [3, 4], even in cases with explicit acknowledgement of the notification by the recipient. Such lapses could lead to diagnosis and treatment delays, which can have serious implications [5, 6], especially for cancer, which often benefits from early diagnosis and treatment [7].

Missed or delayed follow-up of abnormal test results is a multifaceted safety issue that occurs within the complex “sociotechnical” system of healthcare, involving interactions between technical and human elements, such as work processes and organizational factors [8–12]. The Department of Veterans Affairs (VA) uses an EHR called VistA, also known by its front-end component CPRS (Computerized Patient Record System). Similar to other EHRs, VistA automatically generates notifications in response to abnormal laboratory or radiology results. These notifications are transmitted to a receiving inbox, called the “ViewAlert” window in CPRS. This inbox is visible after logging in to the EHR and when switching between patient records. In principle, a provider would see an alert, open it to read it, make a care planning decision based on the results, place orders, and document the care plan. However, successful follow-up of an abnormal test result relies on many processes, involving various human and technical resources [13, 14]. Factors thought to contribute to missed results include information overload from all types of alerts [15], other competing demands on a provider’s attention and memory, handoffs and coordination challenges [16, 17], and system limitations related to tracking. Consequently, any intervention intended to improve follow-up must address these factors as well as the dimensions of the encompassing sociotechnical system [11, 18, 19], such as human-computer interaction, workflow, personnel, and organizational context [20].

2. Objectives

To address the aforementioned issues, we developed and evaluated a functional prototype of a multifaceted software system that provides cognitive support to providers in order to reduce missed test results. We focused on abnormal test result alerts related to four types of cancer: colorectal, lung, breast, and prostate. A facility can apply the tool to other types of asynchronous alerts, bearing in mind that use should be part of a quality improvement process and targeted at only a small set of alerts that have a high risk of missed or delayed follow-up with significant clinical consequences. The software is designed for CPRS, but the design concept is exportable to other EHRs.

2.1 Rationale and Design Goals

Providers manage multiple patients at one time and receive alerts related to many patients in their inbox, thus placing a high burden on the providers’ “prospective” memory, the cognitive process involved in remembering an intention to perform a task in the future [21]. The need for multitasking and the presence of frequent interruptions [22, 23] further strain prospective memory [24]. Simply communicating alerts, as most EHRs do, does not necessarily support prospective memory. In CPRS, alerts remain in the ViewAlert window until they are opened, or until a facility-specified time limit is reached (usually 14 or 30 days). Because alerts no longer appear after being viewed, they cannot serve as external memory aids [24–26]. At the same time, there are concerns about the high number of alerts received, including large numbers of alerts that providers do not consider important [20, 27–29], all of which contribute to “alert fatigue” [30], ignoring of alerts [31], and poor situation awareness [32]. Our prototype is designed to supplement (not replace) the ViewAlert window, helping to mitigate the challenges posed by the pressures of multitasking and alert fatigue.

Within the VA, revisions to the CPRS software code are managed at higher VA levels, owing to national implications for more than 200,000 users and over 8 million veterans (i.e., patients). Thus, in order to be successful, field-based innovations such as this one need to limit software coding changes and related interdependencies within the software code. This potentially reduces the extent of testing needed pre- and post-implementation and facilitates adoption of the solution across different VA facilities.

To address this multifaceted sociotechnical context, we intended the functional prototype design to meet the following goals, many of which were based on previous research findings [1, 3, 4, 27–29, 33–37]:

1. Mitigate strain on providers’ prospective memory by serving as an external memory aid to track the need for follow-up and, when necessary, remind providers.

2. Facilitate the process of ordering appropriate follow-up by minimizing provider effort.

3. Minimize provider interruptions and maximize compatibility with provider workflow.

4. Minimize changes to the underlying VistA/CPRS code to minimize conflicts with existing versions of VistA/CPRS and facilitate testing and adoption across the VA system.

5. Address the technical, organizational, and policy constraints of the complex sociotechnical system, such that the Information Technology (IT) staff can configure and maintain it within the facility policies.

6. Achieve high-reliability follow-up by tracking missed alerts at the clinic or facility level and supporting identification of patients with test results still requiring interventions to facilitate timely follow-up.

Thus, the sociotechnical dimensions to be addressed include software usability, technical compatibility, and fit with clinical workflow and organization.

3. Methods

To address these goals, we used a development and formative evaluation process that involved iterative testing and refinement with stakeholders representing different roles. The core team comprised clinician and informatician field-based researchers, VA contract usability professionals with expertise in health IT, and VA contract programmers with expertise in VistA/CPRS. The programmers’ VistA/CPRS expertise was also essential to achieving design goal 4 (minimization of VistA/CPRS code changes). Participants included VA primary care providers, quality and safety managers, and clinical IT specialists who were responsible for EHR configuration and maintenance regarding clinical content, including alerts; such configuration and maintenance was the focus of design goal 5. The development and evaluation project started in late 2010 and continued to the end of 2011.

The prototype software system called AWARE (Alert Watch And Response Engine) has two components. The first component is designed to provide cognitive support to busy providers in initiating follow-up (the Reminder Prompt), while the second component is designed to support the detection of alerts that likely did not receive any follow-up (the Quality Improvement Tool).

3.1 AWARE Software

3.1.1 Reminder Prompt

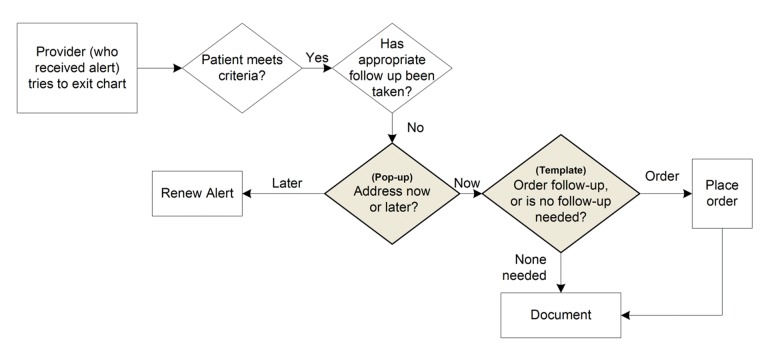

► Figure 1 depicts the actions of the Reminder Prompt. Based on previous evidence on diagnostic delays [38–42], we included four types of high-risk, high-priority outpatient alerts: abnormal chest imaging, positive fecal occult blood test (FOBT), abnormal mammogram, and abnormal prostate specific antigen (PSA). When the provider attempts to exit the patient’s chart, the Reminder Prompt software first checks whether the patient has one of these alerts, and whether the alert is assigned to the viewing provider. It then analyzes structured data in the documentation to see whether the provider has already placed any orders for appropriate follow-up actions for that alert. This series of checks helps minimize intrusion (design goal 3). Based on these checks, the provider may be presented with a Reminder Prompt pop-up (► Figure 2). This reminder supports information processing via distributed cognition [43] and aids the provider’s prospective memory [44] (design goal 1).

Fig. 1.

Process for checking for follow-up and launching reminder prompt.
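The three exit-time checks described above can be sketched as a single decision function. This is a minimal Python illustration under stated assumptions, not the AWARE implementation (which runs within VistA/CPRS): the `Alert` and `Chart` records, the alert-type keys, and the `FOLLOW_UP_ORDERS` mapping from alert types to qualifying order codes are all hypothetical placeholders, and the chart's structured orders are simplified to a set of codes.

```python
from dataclasses import dataclass, field

# Hypothetical keys for the four targeted high-risk alert types, each mapped to
# placeholder order codes that would count as "appropriate follow-up".
FOLLOW_UP_ORDERS = {
    "abnormal_chest_imaging": {"chest_ct", "pulmonology_consult"},
    "positive_fobt": {"colonoscopy", "gi_consult"},
    "abnormal_mammogram": {"diagnostic_mammogram", "breast_biopsy"},
    "abnormal_psa": {"repeat_psa", "urology_consult"},
}


@dataclass
class Alert:
    alert_type: str
    assigned_provider: str
    status: str = "active"  # set to "completed" once follow-up is addressed


@dataclass
class Chart:
    alerts: list = field(default_factory=list)
    orders: set = field(default_factory=set)  # structured order codes on the chart


def alerts_needing_prompt(chart, viewing_provider):
    """Run the exit-time checks; a non-empty result would trigger the pop-up."""
    pending = []
    for alert in chart.alerts:
        if alert.status != "active":
            continue
        # Check 1: is this one of the targeted high-risk alert types?
        expected = FOLLOW_UP_ORDERS.get(alert.alert_type)
        if expected is None:
            continue
        # Check 2: is the alert assigned to the provider exiting the chart?
        if alert.assigned_provider != viewing_provider:
            continue
        # Check 3: is a qualifying follow-up order already in the structured data?
        if chart.orders & expected:
            continue
        pending.append(alert)
    return pending


# Usage: a PSA order does not count as follow-up for a positive FOBT alert.
chart = Chart(alerts=[Alert("positive_fobt", "dr_a")], orders={"repeat_psa"})
print(len(alerts_needing_prompt(chart, "dr_a")))  # 1
```

Ordering the checks from cheapest to most specific means that most chart exits (no targeted alert, or an alert assigned to someone else) return early, which mirrors the intent of keeping the prompt minimally intrusive.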

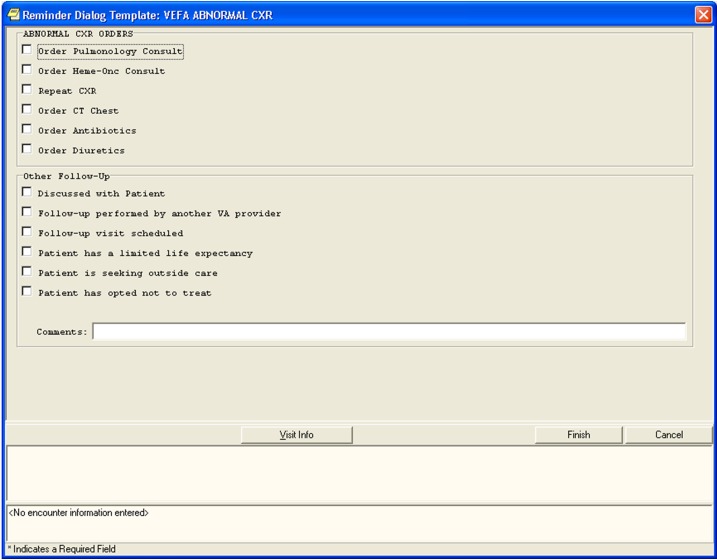

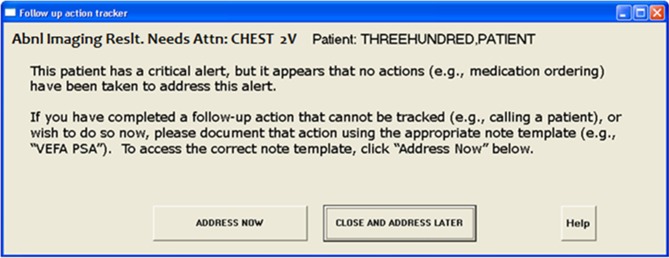

Fig. 2.

Pop-up for reminder prompt for abnormal chest radiograph.

The provider can postpone addressing the follow-up by selecting the “Close and Address Later” button, which keeps the alert in the provider’s ViewAlert inbox rather than letting it disappear, as currently happens in CPRS. This helps maximize fit with workflow (design goal 3). While the alert is still active, the software performs the same checks each time the provider exits the patient’s chart. If the provider chooses the “Address Now” button, he or she is prompted to select an “encounter and progress note title” with which to associate a follow-up action, and is then presented with a window containing follow-up action options specific to the particular alert type (► Figure 3). Based on the provider’s selections, a template-based progress note and selected orders are automatically generated for review, editing, and signature, thus facilitating documentation and order entry (design goal 2). Additionally, the status of the alert is changed to “completed,” which stops the pop-up from appearing again and keeps the alert from being put back in the ViewAlert inbox.

Fig. 3.

Reminder prompt template for abnormal chest radiograph.

The list of follow-up actions for each type of abnormal result was based on a review of the literature and expert consensus. However, this list is customizable at the facility-level, enabling the software to be adapted to different practice patterns. Furthermore, the prompt was designed to include a free-text field and additional structured fields for situations when follow-up actions might not be warranted, which allows more flexibility. See ► Figure 3.
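The effect of the two pop-up buttons on an alert’s state can be summarized in a short sketch. This is a hypothetical Python model, not CPRS code: the `TrackedAlert` record, the `in_inbox` flag, and the draft-note dictionary are illustrative assumptions; only the behaviors (deferral keeps the alert active and in the inbox; addressing it produces an unsigned draft note with orders and marks the alert “completed”) come from the description above.

```python
from dataclasses import dataclass


@dataclass
class TrackedAlert:
    alert_id: str
    status: str = "active"
    in_inbox: bool = True  # whether the alert is listed in the ViewAlert inbox


def close_and_address_later(alert):
    """Defer: the alert stays active and stays in the inbox (instead of being
    removed once viewed), so the exit-time checks run again next time."""
    alert.in_inbox = True
    return alert


def address_now(alert, note_title, selected_actions):
    """Build a draft note and order list for the provider to review, edit and
    sign, then mark the alert completed so the pop-up stops appearing."""
    draft = {
        "note_title": note_title,
        "note_text": f"Follow-up for alert {alert.alert_id}: " + "; ".join(selected_actions),
        "orders": list(selected_actions),
        "signed": False,  # signature remains a separate provider action
    }
    alert.status = "completed"
    alert.in_inbox = False  # not put back in the ViewAlert inbox
    return draft


# Usage: defer once, then address the alert on a later chart visit.
alert = TrackedAlert("A1")
close_and_address_later(alert)
draft = address_now(alert, "PRIMARY CARE NOTE", ["colonoscopy"])
print(alert.status)  # completed
```

Leaving the draft unsigned reflects the design choice that automation only prepares documentation and orders; the provider still reviews and signs them.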

3.1.2 Quality Improvement (QI) Tool

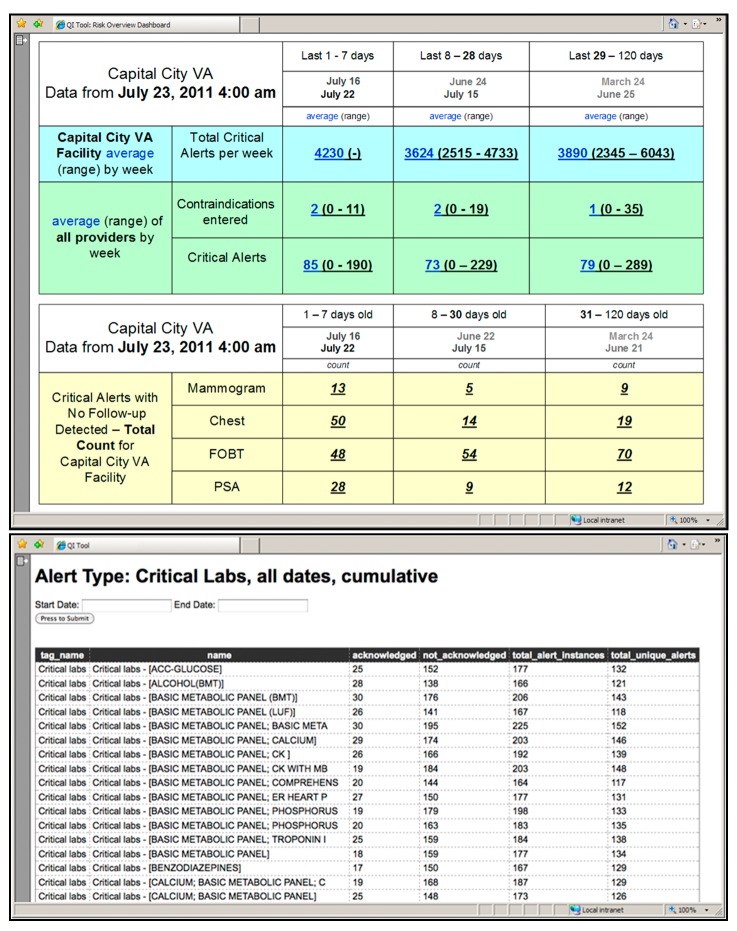

The QI Tool contains a database that stores information on alerts generated from the VistA alert tracking file and from follow-up actions generated from the AWARE Reminder Prompt. The QI Tool can generate reports for displaying transmitted alerts by provider, patient, time-frame, and/or alert type to facilitate system quality measurement. Users can sort and filter data and obtain more information on specific alerts, providers, or patients. For example, at periodic intervals, the user could identify patients for whom no follow-up has been detected (thus supporting design goal 6, identification of cases still requiring intervention). The QI Tool dashboard is a prototype interface that provides an overview and navigation support [45] for risk analysis and detection of patients without follow-up (► Figure 4).

Fig. 4.

QI tool dashboard and sample report.
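The kind of report the QI Tool supports (filtering alerts by provider, time frame, and alert type, then finding patients with no detected follow-up) can be sketched as follows. This is a minimal Python illustration, assuming a hypothetical flattened `AlertRecord` that joins alert-tracking data with follow-up actions; the field names, functions, and sample records are invented for the example and are not the QI Tool’s actual schema or data.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass
class AlertRecord:
    alert_type: str
    provider: str
    patient: str
    delivered: date
    acknowledged: bool
    follow_up_detected: bool


def report(records, start, end, alert_type=None, provider=None):
    """Select alerts delivered in [start, end], optionally filtered by type/provider."""
    return [
        r for r in records
        if start <= r.delivered <= end
        and (alert_type is None or r.alert_type == alert_type)
        and (provider is None or r.provider == provider)
    ]


def patients_without_follow_up(records):
    # De-duplicated, sorted list of patients with at least one alert lacking follow-up.
    return sorted({r.patient for r in records if not r.follow_up_detected})


def missing_by_provider(records):
    # Count of alerts lacking detected follow-up, per provider.
    return Counter(r.provider for r in records if not r.follow_up_detected)


# Usage with made-up sample records.
records = [
    AlertRecord("positive_fobt", "dr_a", "p1", date(2010, 12, 3), True, True),
    AlertRecord("positive_fobt", "dr_a", "p2", date(2010, 12, 9), True, False),
    AlertRecord("abnormal_psa", "dr_b", "p3", date(2010, 12, 20), False, False),
    AlertRecord("abnormal_psa", "dr_b", "p4", date(2011, 1, 5), False, False),
]
dec = report(records, date(2010, 12, 1), date(2010, 12, 31))
print(len(dec), sum(not r.acknowledged for r in dec))  # 3 1
print(patients_without_follow_up(dec))  # ['p2', 'p3']
```

Note that acknowledgement and follow-up are tracked separately: as the Background notes, an acknowledged alert (such as patient p2 here) can still lack follow-up.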

3.2 Software Evaluation

Throughout the software development process, we conducted formative evaluations to assess the software on various sociotechnical dimensions related to the design goals: the technical fit of the software within the VistA/CPRS platform, the software usability, and its compatibility with the workflow and the organization. See ► Table 1.

Table 1.

Design goals and corresponding formative evaluation elements.

| Design goal | Evaluation elements | |
|---|---|---|
| 1. Support prospective memory | Interviews | Reminder Prompt usability test |
| 2. Facilitate follow-up | | |
| 3. Minimize burden | | |
| 4. Minimize technical dependencies | Installation | |
| 5. Fit with organization and workflow | QI Tool usability test | |
| 6. Additional safety through test result follow-up at facility level | | |

3.2.1 Study Participants

We recruited a total of 40 participants. The 23 providers (15 residents and 8 staff physicians, all with both outpatient and inpatient experience) participated in the evaluation of the Reminder Prompt. The 17 non-physician participants evaluated the QI Tool. This group included 9 clinical IT (Information Technology) specialists, of whom 6 had clinical backgrounds, 2 had technical backgrounds, and 1 had both. Their responsibilities included the configuration of alerts, turning alerts on and off for providers, detecting and managing problems with alerts, and constructing templates and reports. Additionally, there were 8 quality/safety managers, consisting of 3 Patient Safety Managers, 2 clinical service chiefs, 2 performance and quality coordinators, and 1 chief of quality management, all of whom were involved with facility-level quality and safety activities.

3.2.2 Usability and Fit with Workflow and Organization

Each participant took part in an approximately 1-hour session involving both semi-structured interview questions and a usability test. For the physicians, the session focused on the Reminder Prompt. For the clinical IT specialists and quality/safety managers, the session focused on the QI Tool. The interviews and usability tests were conducted by a team of experienced usability professionals following a scripted protocol.

For half of the staff physicians and almost all of the clinical IT specialists and quality/safety managers, interviewing and usability testing were performed remotely, with the participant accessing the prototype from their own home or office computer and using computer audio or a speaker-phone to converse with the usability specialist. For all of the residents and the remaining participants, the interviewing and usability testing took place in a conference room at the local VA medical center, with the usability specialist present. In both situations, the participant was video- and audio-recorded, and the computer screen was video-captured.

Usability testing of the Reminder Prompt and the QI Tool was conducted using scenarios designed to identify potential usability problems [46, 47]. The tool was loaded onto a test copy of the VistA/CPRS EHR populated with artificial data. Participants were asked to think aloud [48] as they performed a small set of tasks using the functional prototype. Comments about the tool were captured. Additionally, two human factors experts individually performed heuristic evaluations [46, 49] by walking through each step in the use of the tool and reviewing it according to established usability heuristics [50], and afterwards collaborated to arrive at a consensus.

Usability testing of the Reminder Prompt was conducted with residents and staff physicians. Providers performed tasks that involved acknowledging alerts, accessing patients’ charts in VistA/CPRS, responding to the appearance of the Pop-up, and addressing follow-up needs with the Reminder Prompt template. For example, one task required the participant to acknowledge an abnormal alert and then exit the chart before ordering follow-up. The AWARE Pop-up appeared, and the participant responded to it and then used the Reminder Prompt to enter the follow-up order.

To provide context and enable the clinical IT specialists and quality/safety managers to learn about the components of the software to be used by providers, they were shown the Reminder Prompt and given the opportunity to interact with it prior to the usability testing of the QI Tool. The usability tasks for the QI Tool involved generating database reports for particular types of alerts for a specified time frame, as well as finding specific information within generated tables. For example, one task was: “Please find out how many total alerts were delivered to providers at the (City) VA Medical Center during the month of December 2010. Of those alerts, how many went unacknowledged by the providers?” Interview questions addressed both the usability of the software and the potential fit of the software within the larger sociotechnical system, particularly with the workflow and the organizational dimensions. Background questions were asked before the usability test. An example question asked of providers was: “Please explain the process you go through when managing your alerts.” An example question asked of clinical IT specialists and quality/safety managers was: “Can you tell me a little about your daily responsibilities?”

After the usability test, we asked participants about their impressions of the software and its potential impact on their work. For example, “How much time do you think this process [the Reminder Prompt] would add to the time you spend working with patient records?” We asked the clinical IT specialists about the tasks involved in configuring and managing the Reminder Prompt and how they envisioned using the QI Tool. For example: “How do you see [the QI Tool] affecting your role, positively or negatively?” Additionally, we asked the quality/safety managers about how they would use the QI Tool. For example: “How might you use this tool to look at issues related to risk of patients not getting timely follow-up to abnormal alerts?”

3.2.3 Installation

To ensure that the Reminder Prompt could run on a full-scale VistA/CPRS EHR with real medical records, it was installed on a non-production (testing/development) copy of VistA/CPRS populated with real but de-identified data.

3.2.4 Analysis

Using the recordings, the usability team reviewed the set of responses to each interview question to identify patterns. Each participant’s response was then categorized, and categories were aggregated. Additionally, other comments and “think aloud” verbalizations from the usability testing were reviewed, and items pertinent to the tool’s usability or fit with workflow or organization were noted. The initial findings were reviewed with the multidisciplinary research team to provide a secondary check on interpretation, particularly from the perspective of physicians, and from clinical IT and safety/quality perspectives.

Observations of performance on the usability tasks, along with verbalizations and impressions from participants, were used to identify problems with the usability of the software.

Analysis of the installation involved checking if the software correctly responded to patient records with and without test alerts lacking the corresponding follow-up orders. Additionally, a debriefing session was conducted with the IT staff to identify areas for improvement regarding the installation and configuration process.

4. Results

4.1 Usability

The usability testing of the Reminder Prompt indicated that many providers (11, 48%) experienced some confusion when using the tool for the first time. Most of the confusion was related to the wording on the Pop-up, which 6 (26%) providers identified as the primary source of confusion. One specific problem was the use of “Proceed” to label the Pop-up button responsible for advancing to the Reminder Prompt template; this label suggested to one provider that clicking the button would proceed with closing the chart. Based on this information, the button label was revised to “Address Now.” Other problems were that the text on the Pop-up did not adequately explain why the Pop-up appeared, and that the instructions provided were not specific enough to assist in choosing a note template. The text was modified to include this information.

No problems affecting task performance were observed with the Reminder Prompt template. However, the heuristic evaluation of the Reminder Prompt identified terminology inconsistencies and a layout issue that could lead to misinterpretation of a text field. These issues have been addressed via layout and wording changes.

The main usability problems identified for the QI Tool concerned navigation between and within screens. For example, all 17 clinical IT specialists and quality/safety managers (100%) expected to be able to drill down into hyperlinked data cells to obtain more detail, which was not consistently supported by the tested version of the QI Tool. The heuristic review of the QI Tool identified issues with the graphical formatting of the reports, the need for better navigation support, and an inconsistency in how date ranges are defined (compared with other tools in CPRS). Changes to navigation and formatting in the QI Tool have been made in response to these findings.

4.2 Fit with Workflow and Organization

Providers felt the Reminder Prompt would be useful: 100% of those responding said yes to the question “Do you think this feature would help you manage alerts?” However, 11 (48%) estimated that the tool would increase the time it took to process alerts, compared with 8 (35%) who estimated that it would take the same or less time with the tool active.

Specific features of interest included the ability of the tool to generate a semi-automated note documenting orders and the ability to enter contraindications to future work-up. Both residents and staff physicians commented that the tool would be more useful in the outpatient than in the inpatient setting, because multiple providers generally monitor hospitalized patients’ conditions and test results daily. Members of both groups initially expressed concern about the small number of options for follow-up actions in the Reminder Prompt for abnormal chest x-ray alerts, given the wide range of possibilities for those alerts.

The quality/safety managers and the clinical IT specialists saw utility in the QI Tool. When asked about what benefits this tool might provide, 100% of participants indicated patient safety benefits from monitoring. The quality/safety managers, and especially the three Patient Safety Managers, saw the QI Tool as being particularly useful for their roles, more so than the clinical IT specialists did. The quality/safety managers saw their roles as including the analysis of data to find and manage unacknowledged alerts, to identify trends, and to investigate alert-related patient safety issues. They emphasized the utility of actionable information where someone could intervene to prevent missed or delayed follow-up. On the other hand, the clinical IT specialists envisioned using the QI Tool primarily to obtain details on specific alert instances for purposes of troubleshooting. They indicated that their role in supporting the Reminder Prompt would primarily be to customize and create new reminder dialogues and to train and support providers.

4.3 Installation

Despite differences between the version of VistA/CPRS used during development and the version of VistA/CPRS being run at the facility, the clinical IT team and the programmers were able to run the Reminder Prompt on the full-scale, testing/development copy of VistA/CPRS. It operated successfully, including detecting the test alerts that lacked follow-up actions.

5. Discussion

The design of our software system addresses several features that have been proposed for reliable test result follow-up [51]. Our development and evaluation process took into account multiple sociotechnical factors involved with missed test results (technical, usability, and fit with workflow and organization), and incorporated input from key user- and stakeholder-groups (resident and staff physicians, clinical IT specialists, and quality/safety managers). The evaluation has shown that the prototype design is efficacious in detecting specific cancer-related abnormal test results lacking follow-up, presenting reminder notifications to providers, and enabling providers to enter orders for follow-up actions or other appropriate responses. Results suggest that this is a feasible design strategy for helping to reduce missed or delayed follow-up of certain high-risk abnormal test results. The six design goals are discussed below:

5.1 Support prospective memory

The Reminder Prompt can facilitate the follow-up of abnormal alerts by serving as an external prospective memory [21]. It provides an automated tracking system to serve as a back-up to protect patients from the risk of missed or delayed follow-up by directing the attention of the provider to an omission that would otherwise go undetected.

5.2 Facilitate follow-up

When the provider is too busy or distracted to place follow-up orders in response to an abnormal test result, no additional action is needed from the provider for the software tool to keep track of the need for follow-up. This has advantages over solutions that require the provider to actively set up future reminders [52]. Participants agreed that the software could help providers manage alerts and contribute to patient safety.

Because the Reminder Prompt pop-up appears when the provider is already within the specific patient’s chart, this information is provided just in time and facilitates generation of orders and notes at the point of care. Usability testing showed that providers were able to use the tool to respond with follow-up actions. An important design element for supporting successful performance is including information on the pop-up about the reason for the notification and what to do next.

5.3 Minimize burden

The prototype minimizes provider burden by appearing only when the provider has received one of the targeted alerts and no recommended follow-up action has been ordered by the time the provider exits the chart. Thus, the Reminder Prompt serves specifically as a critiquing system [53] and avoids influencing the decisions [54] of providers who might have already ordered follow-up actions. Moreover, this timing is least disruptive because the provider would have just completed other tasks [55] related to that patient.

The free-text field in the template ensures that unanticipated situations can be accommodated [43]. Additionally, the “Close and Address Later” button on the pop-up makes it easy for the provider to defer responding until a more convenient time without the risk of losing the alert, a significant advantage over the current EHR functionality. Nonetheless, many providers anticipated that they would take more time processing alerts with this software present.

5.4 Minimize technical dependencies

Because of the status of VistA as mission-critical legacy software, and the need for careful control over VistA modifications, our field-based software innovation was designed to minimize the required changes to the underlying VistA/CPRS software code. Furthermore, the successful installation of the software on a full-scale copy of a large VA facility’s VistA/CPRS EHR demonstrates that the software system fits with the hardware and software dimensions of the larger sociotechnical system.

5.5 Fit with organization and workflow

By addressing the needs of the different types of users [56] in the formative evaluation, we were able to make sure the Reminder Prompt and the QI Tool accommodated the workflow of these various stakeholders and the organizational structure of the facility. We were able to check that clinical IT specialists would be able to manage technical support tasks (i.e., configuration and maintenance). We also found that monitoring for alerts lacking follow-up (a potential main use of the QI Tool) is more of a patient safety function than a clinical IT function. Through the involvement of providers, we learned that the software fit better with outpatient than inpatient care, and that implementing a template for imaging results could be more challenging than for laboratory test results.

5.6 Additional safety through test result follow-up at facility level

Even with the Reminder Prompt system, there are still ways that an abnormal test result may not be addressed in a timely manner. For example, an over-burdened provider may fail to respond to the Reminder Prompt or may not access the patient’s chart again, or alerts might not be sent to the correct provider (such as with trainees who have rotated to another service). Quality/safety managers using the QI Tool could serve as independent monitors that could still detect gaps in test result follow-up, and initiate an escalation process if necessary. Furthermore, the tool can help quality/safety managers identify patterns in the management of alerts of different types, for different clinics or teams, and from different time frames. However, usability testing showed that adequate navigation methods are important for supporting this exploration and identification of patterns. Identifying such patterns could foster a better understanding of what factors in the facility lead to risks of missed or delayed follow-up. Thus, safety is reinforced at multiple organizational levels, i.e., at the provider, clinic/team and facility levels, avoiding the problems of ineffective redundancy in systems safety [57]. The Reminder Prompt captures information from the providers through a task that reduces memory demands and facilitates placing orders, while the QI Tool combines that information with other data to support the needs of the quality/safety managers. In this way AWARE avoids the problem of burdening providers with data entry tasks for administrative purposes [58].

Thus, AWARE could potentially help providers avoid missed test results by providing support for prospective memory and situation awareness [32], while also accounting for the side-effects and limitations of the reminder functionality itself.

6. Limitations

6.1 Limitations of Prototype Design

The software cannot detect contraindications or follow-up actions documented in free-text notes, so the Reminder Prompt might sometimes appear unnecessarily. However, the software is intended only for a small number of high-risk, high-priority alerts, and thus we expect these situations to occur infrequently. In some cases the Reminder Prompt may never be triggered, such as when the assigned provider or trainee is no longer at the facility. However, the QI Tool will still capture the lack of documented follow-up on the alert, enabling safety/quality personnel to respond. Additionally, follow-up action orders may be placed but not fulfilled; however, tracking these downstream events is beyond the scope of this tool.

6.2 Study Limitations and Next Steps

Because the software is not yet at a stage where it can be installed on a production EHR system in clinical use, our evaluation could assess only how the tool is likely to be used and how well it fits with workflow; it could not assess actual real-world use or follow-up rate outcomes. Our research on the organizational fit of the software was conducted within the VA system, which may limit its generalizability. However, many other EHR systems are beginning to notify providers of abnormal test results through the EHR, and early experience with commercial EHRs shows cognitive support problems [59] similar to those that led us to design this tool. The design goals we followed could therefore offer useful lessons for those systems.

Next steps involve bringing the software to a state where it can be tested in clinical use at a facility. An important part of implementing AWARE will be using the QI Tool to monitor the performance of the Reminder Prompt, and using those data to configure which alerts, for which providers, and in which time periods trigger the Reminder Prompt, in order to further reduce unnecessary intrusions while improving rates of timely follow-up for targeted results. The Reminder Prompt is thus not a stand-alone solution but part of a quality improvement process supported by the QI Tool.

7. Conclusions

Our field-based informatics research led to the development and evaluation of a novel functional prototype system to improve the safety of abnormal test result follow-up in EHR systems. The problem-specific Reminder Prompt supports the provider’s prospective memory while minimizing additional reminder burden and disruptions. Furthermore, because the notification system has limitations and overburdened providers deal with hundreds of test results per week [5], AWARE’s QI Tool supports other members of the organization in detecting patients at risk of missed abnormal test results.

Clinical Relevance

Previous research on follow-up of abnormal test results found that 7–8% lacked timely follow-up [3, 4], which can delay treatment with potentially negative consequences, especially for cancer [5, 6]. This work demonstrates a feasible solution to the problem of providers having difficulty keeping track of important abnormal test results that need follow-up.

Statement on competing interests

All authors declare that they have no competing interests. The views expressed in this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs.

Protection of Human Subjects

The Baylor College of Medicine Institutional Review Board approved the study.

Authors’ contributions

AE, DFS and HS conceived the product design and project. DM, AL, BR, HS and MS supervised and participated in product design and development, and study design and data collection. MS conducted analyses and drafted the manuscript. All authors read and approved the final manuscript.

Acknowledgements

The authors wish to acknowledge the expert contributions of: Dana Douglas, Mark Becker, and Dick Horst of UserWorks, Inc.; Bryan Campbell and Angela Schmeidel Randall of Normal Modes, LLC; and Ignacio Valdes and Fred Trotter of Astronaut Contracting, LLC. Additional thanks to Velma Payne, Varsha Modi, Kathy Taylor, Roxie Pierce, Pawan Gulati, and George Welch. Project supported by the VA Innovation Initiative (XNV 33–124), VA National Center of Patient Safety and in part by the Houston VA HSR&D Center of Excellence (HFP90–020).

References

- 1. Singh H, Naik A, Rao R, Petersen L. Reducing Diagnostic Errors Through Effective Communication: Harnessing the Power of Information Technology. Journal of General Internal Medicine 2008; 23(4): 489–494

- 2. Singh H, Graber M. Reducing diagnostic error through medical home-based primary care reform. JAMA 2010; 304(4): 463–464

- 3. Singh H, Thomas EJ, Mani S, Sittig DF, Arora H, Espadas D, Khan MM, Petersen LA. Timely follow-up of abnormal diagnostic imaging test results in an outpatient setting: are electronic medical records achieving their potential? Arch Intern Med 2009; 169(17): 1578–1586

- 4. Singh H, Thomas EJ, Sittig DF, Wilson L, Espadas D, Khan MM, Petersen LA. Notification of abnormal lab test results in an electronic medical record: do any safety concerns remain? Am J Med 2010; 123(3): 238–244

- 5. Poon E, Gandhi T, Sequist T, Murff H, Karson A, Bates D. “I wish I had seen this test result earlier!”: Dissatisfaction with test result management systems in primary care. Archives of internal medicine 2004; 164(20): 2223–2228

- 6. Wahls T. Diagnostic errors and abnormal diagnostic tests lost to follow-up: a source of needless waste and delay to treatment. The Journal of ambulatory care management 2007; 30(4): 338–343

- 7. Singh H, Sethi S, Raber M, Petersen LA. Errors in cancer diagnosis: current understanding and future directions. J Clin Oncol 2007; 25(31): 5009–5018

- 8. Nemeth CP, Cook RI, Woods DD. The Messy Details: Insights From the Study of Technical Work in Healthcare. IEEE Transactions on Systems, Man, and Cybernetics – Part A: Systems and Humans 2004; 34(6): 689–692

- 9. Carayon P, Schoofs Hundt A, Karsh BT, Gurses AP, Alvarado CJ, Smith M, Flatley BP. Work system design for patient safety: the SEIPS model. Quality & safety in health care 2006; 15(Suppl 1): i50–i58

- 10. Wears R, Berg M. Computer technology and clinical work: still waiting for Godot. JAMA 2005; 293(10): 1261–1263

- 11. Sittig DF, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Quality and Safety in Health Care 2010; 19(Suppl 3): i68–i74

- 12. Carayon P, Bass E, Bellandi T, Gurses A, Hallbeck S, Mollo V. Socio-Technical Systems Analysis in Health Care: A Research Agenda. IIE transactions on healthcare systems engineering 2011; 1(1): 145–60

- 13. Graber M, Franklin N, Gordon R. Diagnostic error in internal medicine. Archives of internal medicine 2005; 165(13): 1493–1499

- 14. Raab S, Grzybicki D. Quality in cancer diagnosis. CA: a cancer journal for clinicians 2010; 60(3): 139–65

- 15. Singh H, Spitzmueller C, Petersen NJ, Sawhney MK, Sittig DF. Information Overload and Missed Test Results in Electronic Health Record Based Settings. JAMA Internal Medicine 2013; 1–3

- 16. Wears R, Perry S, Patterson E. Handoffs and Transitions of Care. In: Carayon P, editor. Handbook of Human Factors and Ergonomics in Healthcare and Patient Safety. L. Erlbaum Associates Inc.; 2006: 163–172

- 17. Pennathur P, Bass E, Rayo M, Perry S, Rosen M, Gurses A. Handoff Communication: Implications For Design. Proceedings of the Human Factors and Ergonomics Society Annual Meeting 2012; 56(1): 863–866

- 18. Ash JS, Sittig DF, Poon EG, Guappone K, Campbell E, Dykstra RH. The extent and importance of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2007; 14(4): 415–423

- 19. Lawler E, Hedge A, Pavlovic-Veselinovic S. Cognitive ergonomics, socio-technical systems, and the impact of healthcare information technologies. International Journal of Industrial Ergonomics 2011 Apr

- 20. Singh H, Spitzmueller C, Petersen NJ, Sawhney MK, Smith MW, Murphy DR, Espadas D, Laxmisan A, Sittig DF. Primary care practitioners’ views on test result management in EHR-enabled health systems: a national survey. J Am Med Inform Assoc 2012

- 21. Dismukes K. Remembrance of Things Future: Prospective Memory in Laboratory, Workplace, and Everyday Settings. In: Reviews of human factors and ergonomics. Human Factors and Ergonomics Society 2010: 79–122

- 22. Westbrook J, Coiera E, Dunsmuir W, Brown B, Kelk N, Paoloni R, Tran C. The impact of interruptions on clinical task completion. Quality and Safety in Health Care 2010; 19(4): 284–289

- 23. Laxmisan A, Hakimzada F, Sayan O, Green R, Zhang J, Patel V. The multitasking clinician: decision-making and cognitive demand during and after team handoffs in emergency care. International Journal of Medical Informatics 2007; 76(11-12): 801–811

- 24. Reason J. Combating omission errors through task analysis and good reminders. Qual Saf Health Care 2002; 11(1): 40–44

- 25. Saleem JJ, Russ AL, Sanderson P, Johnson TR, Zhang J, Sittig DF. Current challenges and opportunities for better integration of human factors research with development of clinical information systems. Yearb Med Inform 2009; 48–58

- 26. Hutchins E. How a cockpit remembers its speeds. Cognitive Science 1995; 19(3): 265–288

- 27. Murphy D, Reis B, Sittig DF, Singh H. Notifications Received by Primary Care Practitioners in Electronic Health Records: A Taxonomy and Time Analysis. American Journal of Medicine 2012; 125(2): 209.e1

- 28. Hysong S, Sawhney M, Wilson L, Sittig D, Esquivel A, Singh S, Singh H. Understanding the Management of Electronic Test Result Notifications in the Outpatient Setting. BMC Med Inform Decis Mak 2011; 11(1): 22

- 29. Murphy D, Reis B, Kadiyala H, Hirani K, Sittig D, Khan M, Singh H. Electronic Health Record-Based Messages to Primary Care Providers: Valuable Information or Just Noise? Arch Intern Med 2012; 172(3): 283–285

- 30. van der Sijs H, Aarts J, Vulto A, Berg M. Overriding of drug safety alerts in computerized physician order entry. Journal of the American Medical Informatics Association : JAMIA 2006; 13(2): 138–147

- 31. Sorkin R, Woods D. Systems with Human Monitors: A Signal Detection Analysis. Human–Computer Interaction 1985; 1(1): 49–75

- 32. Singh H, Davis Giardina T, Petersen L, Smith M, Paul L, Dismukes K, Bhagwath G, Thomas E. Exploring situational awareness in diagnostic errors in primary care. BMJ Quality & Safety 2011 Sep 2

- 33. Singh H, Arora H, Vij M, Rao R, Khan MM, Petersen L. Communication outcomes of critical imaging results in a computerized notification system. J Am Med Inform Assoc 2007; 14(4): 459–466

- 34. Singh H, Wilson L, Petersen L, Sawhney MK, Reis B, Espadas D, Sittig DF. Improving follow-up of abnormal cancer screens using electronic health records: trust but verify test result communication. BMC Medical Informatics and Decision Making 2009; 9: 49

- 35. Singh H, Vij MS. Eight recommendations for policies for communicating abnormal test results. Jt Comm J Qual Patient Saf 2010; 36(5): 226–232

- 36. Hysong SJ, Sawhney MK, Wilson L, Sittig DF, Espadas D, Davis T, Singh H. Provider management strategies of abnormal test result alerts: a cognitive task analysis. J Am Med Inform Assoc 2010; 17(1): 71–77

- 37. Singh H, Wilson L, Reis B, Sawhney MK, Espadas D, Sittig DF. Ten Strategies to Improve Management of Abnormal Test Result Alerts in the Electronic Health Record. Journal of Patient Safety 2010; 6(2): 121–123

- 38. Gandhi TK, Kachalia A, Thomas EJ, Puopolo AL, Yoon C, Brennan TA, Studdert DM. Missed and delayed diagnoses in the ambulatory setting: A study of closed malpractice claims. Ann Intern Med 2006; 145(7): 488–496

- 39. Nepple KG, Joudi FN, Hillis SL, Wahls TL. Prevalence of delayed clinician response to elevated prostate-specific antigen values. Mayo Clin Proc 2008; 83(4): 439–448

- 40. Singh H, Kadiyala H, Bhagwath G, Shethia A, El-Serag H, Walder A, Velez ME, Petersen LA. Using a multifaceted approach to improve the follow-up of positive fecal occult blood test results. Am J Gastroenterol 2009; 104(4): 942–952

- 41. Singh H, Hirani K, Kadiyala H, Rudomiotov O, Davis T, Khan MM, Wahls TL. Characteristics and predictors of missed opportunities in lung cancer diagnosis: an electronic health record-based study. J Clin Oncol 2010; 28(20): 3307–3315

- 42. Zeliadt SB, Hoffman RM, Etzioni R, Ginger VA, Lin DW. What happens after an elevated PSA test: the experience of 13,591 veterans. J Gen Intern Med 2010; 25(11): 1205–1210

- 43. Saleem J, Patterson E, Militello L, Render M, Orshansky G, Asch S. Exploring Barriers and Facilitators to the Use of Computerized Clinical Reminders. J Am Med Inform Assoc 2005; 12(4): 438–447

- 44. Grundgeiger T, Sanderson PM, MacDougall HG, Venkatesh B. Distributed prospective memory: An approach to understanding how nurses remember tasks. In: Proceedings of the Human Factors and Ergonomics Society Annual Meeting. SAGE Publications; 2009: 759–763

- 45. Woods DD, Watts JC. How not to have to navigate through too many displays. In: Helander MG, Landauer TK, Prabhu PV, editors. Handbook of Human-Computer Interaction, Second Edition. North Holland; 1997: 617–50

- 46. Jaspers MW. A comparison of usability methods for testing interactive health technologies: Methodological aspects and empirical evidence. International Journal of Medical Informatics 2009; 78(5): 340–353

- 47. Smith PJ, Stone RB, Spencer AL. Applying cognitive psychology to system development. In: Marras WS, Karwowski W, editors. Fundamentals and Assessment Tools for Occupational Ergonomics (The Occupational Ergonomics Handbook, Second Edition). CRC Press; 2006: 24–1

- 48. Ericsson A, Simon H. Verbal Reports as Data. Psychological Review 1980; 87(3): 215–251

- 49. Dumas JS, Salzman MC. Usability Assessment Methods. Reviews of Human Factors and Ergonomics 2006; 2(1): 109–140

- 50. Nielsen J. Finding usability problems through heuristic evaluation. In: Proceedings of the SIGCHI conference on Human factors in computing systems. ACM; 1992: 373–380

- 51. Schiff G. Medical Error. JAMA 2011; 305(18): 1890–1898

- 52. Poon E, Wang S, Gandhi T, Bates D, Kuperman G. Design and implementation of a comprehensive outpatient Results Manager. Journal of Biomedical Informatics 2003; 36(1-2): 80–91

- 53. Guerlain S, Smith P, Obradovich J, Rudmann S, Strohm P, Smith J, Svirbely J, Sachs L. Interactive Critiquing as a Form of Decision Support: An Empirical Evaluation. Human Factors: The Journal of the Human Factors and Ergonomics Society 1999; 72–89

- 54. Smith PJ, McCoy CE, Layton C. Brittleness in the design of cooperative problem-solving systems: the effects on user performance. Systems, Man and Cybernetics, Part A: Systems and Humans, IEEE Transactions on 1997; 27(3): 360–371

- 55. Adamczyk P, Bailey B. If not now, when?: the effects of interruption at different moments within task execution. In: Proceedings of the SIGCHI conference on Human factors in computing systems. Vienna, Austria: ACM; 2004: 271–278

- 56. Grudin J. Groupware and social dynamics: eight challenges for developers. Communications of the ACM 1994; 37(1): 92–105

- 57. Leveson N, Dulac N, Marais K, Carroll J. Moving Beyond Normal Accidents and High Reliability Organizations: A Systems Approach to Safety in Complex Systems. Organization Studies 2009; 30(2-3): 227–249

- 58. Karsh BT, Weinger M, Abbott P, Wears R. Health information technology: fallacies and sober realities. J Am Med Inform Assoc 2010; 17(6): 617–623

- 59. McDonald C, McDonald M. INVITED COMMENTARY – Electronic Medical Records and Preserving Primary Care Physicians’ Time: Comment on “Electronic Health Record-Based Messages to Primary Care Providers”. Arch Intern Med 2012; 172(3): 285–287