Abstract

The diversity of neuron models used in contemporary theoretical neuroscience to investigate specific properties of covariances in the spiking activity raises the question how these models relate to each other. In particular it is hard to distinguish between generic properties of covariances and peculiarities due to the abstracted model. Here we present a unified view on pairwise covariances in recurrent networks in the irregular regime. We consider the binary neuron model, the leaky integrate-and-fire (LIF) model, and the Hawkes process. We show that linear approximation maps each of these models to either of two classes of linear rate models (LRM), including the Ornstein–Uhlenbeck process (OUP) as a special case. The distinction between both classes is the location of additive noise in the rate dynamics, which is located on the output side for spiking models and on the input side for the binary model. Both classes allow closed form solutions for the covariance. For output noise it separates into an echo term and a term due to correlated input. The unified framework enables us to transfer results between models. For example, we generalize the binary model and the Hawkes process to the situation with synaptic conduction delays and simplify derivations for established results. Our approach is applicable to general network structures and suitable for the calculation of population averages. The derived averages are exact for fixed out-degree network architectures and approximate for fixed in-degree. We demonstrate how taking into account fluctuations in the linearization procedure increases the accuracy of the effective theory and we explain the class dependent differences between covariances in the time and the frequency domain. Finally we show that the oscillatory instability emerging in networks of LIF models with delayed inhibitory feedback is a model-invariant feature: the same structure of poles in the complex frequency plane determines the population power spectra.

Keywords: correlations, linear response, Hawkes process, leaky integrate-and-fire model, binary neuron, linear rate model, Ornstein–Uhlenbeck process

1. Introduction

The meaning of correlated neural activity for the processing and representation of information in cortical networks is still not understood, but evidence for a pivotal role of correlations increases (recently reviewed in Cohen and Kohn, 2011). Different studies have shown that correlations can either decrease (Zohary et al., 1994) or increase (Sompolinsky et al., 2001) the signal to noise ratio of population signals, depending on the readout mechanism. The architecture of cortical networks is dominated by convergent and divergent connections among the neurons (Braitenberg and Schüz, 1991) causing correlated neuronal activity by common input from shared afferent neurons in addition to direct connections between pairs of neurons and common external signals. It has been shown that correlated activity can faithfully propagate through convergent-divergent feed forward structures, such as synfire chains (Abeles, 1991; Diesmann et al., 1999), a potential mechanism to convey signals in the brain. Correlated firing was also proposed as a key to the solution of the binding problem (von der Malsburg, 1981; Bienenstock, 1995; Singer, 1999), an idea that has been discussed controversially (Shadlen and Movshon, 1999). Independent of a direct functional role of correlations in cortical processing, the covariance function between the spiking activity of a pair of neurons contains the information about time intervals between spikes. Changes of synaptic coupling, mediated by spike-timing dependent synaptic plasticity (STDP, Markram et al., 1997; Bi and Poo, 1999), are hence sensitive to correlations. Understanding covariances in spiking networks is thus a prerequisite to investigate the evolution of synapses in plastic networks (Burkitt et al., 2007; Gilson et al., 2009, 2010).

On the other side, there is ubiquitous experimental evidence of correlated spike events in biological neural networks, going back to early reports on multi-unit recordings in cat auditory cortex (Perkel et al., 1967; Gerstein and Perkel, 1969), the observation of closely time-locked spikes appearing at behaviorally relevant points in time (Kilavik et al., 2009; Ito et al., 2011) and collective oscillations in cortex [recently reviewed in Buzsáki and Wang (2012)].

The existing theories explaining correlated activity use a multitude of different neuron models. Hawkes (1971) developed the theory of covariances for linear spiking Poisson neurons (Hawkes processes). Ginzburg and Sompolinsky (1994) presented the approach of linearization to treat fluctuations around the point of stationary activity and to obtain the covariances for networks of non-linear binary neurons. The formal concept of linearization allowed Brunel and Hakim (1999) and Brunel (2000) to explain fast collective gamma oscillations in networks of spiking leaky integrate-and-fire (LIF) neurons. Correlations in feed-forward networks of LIF models are studied in Moreno-Bote and Parga (2006), exact analytical solutions for such network architectures are given in Rosenbaum and Josic (2011) for the case of stochastic random walk models, and threshold crossing neuron models are considered in Tchumatchenko et al. (2010) and Burak et al. (2009). Covariances in structured networks are investigated for Hawkes processes (Pernice et al., 2011), and in linear approximation for LIF (Pernice et al., 2012) and exponential integrate-and-fire neurons (Trousdale et al., 2012). The latter three works employ an expansion of the propagator (time evolution operator) in terms of the order of interaction. Finally Buice et al. (2009) investigate higher order cumulants of the joint activity in networks of binary model neurons.

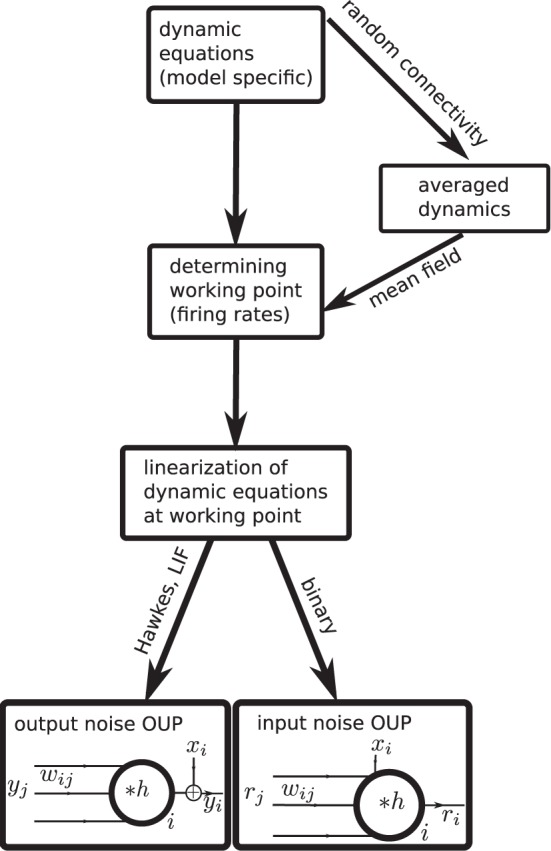

Analytical insight into a neuroscientific phenomenon based on correlated neuronal activity often requires a careful choice of the neuron model to arrive at a solvable problem. Hence a diversity of models has been proposed and is in use. This raises the question which features of covariances are generic properties of recurrent networks and which are specific to a certain model. Only if this question can be answered one can be sure that a particular result is not an artifact of oversimplified neuronal dynamics. Currently it is unclear how different neuron models relate to each other and whether and how results obtained with one model carry over to another. In this work we present a unified theoretical view on pairwise correlations in recurrent networks in the asynchronous and collective-oscillatory regime, approximating the response of different models to linear order. The joint treatment allows us to answer the question of genericness and moreover naturally leads to a classification of the considered models into only two categories, as illustrated in Figure 1. The classification in addition enables us to extend existing theoretical results to biologically relevant parameters, such as synaptic delays and the presence of inhibition, and to derive explicit expressions for the time-dependent covariance functions, in quantitative agreement with direct simulations, which can serve as a starting point for further work.

Figure 1.

Mapping different descriptions of neuronal dynamics to linear rate models (LRM). The arrows indicate analytical methods which enable a mapping from the original spiking (LIF model, Hawkes model) or binary neuron dynamics to the analytically more tractable linear rate models. Depending on the original dynamics (spiking or binary) the resulting LRM contains an additive noise component x either on the output side (left) or on the input side (right).

The remainder of this article is organized as follows. In the first part of our results in “Covariance structure of noisy rate models” we investigate the activity and the structure of covariance functions for two versions of linear rate models (LRM); one with input the other with output noise. If the activity relaxes exponentially after application of a short perturbation, both models coincide with the OUP. We mainly consider the latter case, although most results hold for arbitrary kernel functions. We extend the analytical solutions for the covariances in networks of OUP (Risken, 1996) to the neuroscientifically important case of synaptic conduction delays. Solutions are derived first for general forms of connectivity in “Solution of the convolution equation with input noise” for input noise and in “Solution of convolution equation with output noise” for output noise. After analyzing the spectral properties of the dynamics in the frequency domain in “Spectrum of the dynamics,” identifying poles of the propagators and their relation to collective oscillations in neuronal networks, we show in “Population-averaged covariances”' how to obtain pairwise averaged covariances in homogeneous Erdös-Rényi random networks. We explain in detail the use of the residue theorem to perform the Fourier back-transformation of covariance functions to the time domain in “Fourier back transformation” for general connectivity and in “Explicit expression for the population averaged cross covariance in the time domain” for averaged covariance functions in random networks, which allows us to obtain explicit results and to discuss class dependent features of covariance functions.

In the second part of our results in “Binary neurons,” “Hawkes processes,” and “Leaky integrate-and-fire neurons” we consider the mapping of different neuronal dynamics on either of the two flavors of the linear rate models discussed in the first part. The mapping procedure is qualitatively the same for all dynamics as illustrated in Figure 1: Starting from the dynamic equations of the respective model, we first determine the working point described in terms of the mean activity in the network. For unstructured homogeneous random networks this amounts to a mean-field description in terms of the population averaged activity (i.e., firing rate in spiking models). In the next step, a linearization of the dynamical equations is performed around this working point. We explain how fluctuations can be considered in the linearization procedure to improve its accuracy and we show how the effective linear dynamics maps to the LRM. We illustrate the results throughout by a quantitative comparison of the analytical results to direct numerical simulations of the original non-linear dynamics. The appendices “Implementation of noisy rate models,” “Implementation of binary neurons in a spiking simulator code,” and “Implementation of Hawkes neurons in a spiking simulator code.” describe the model implementations and are modules of our long-term collaborative project to provide the technology for neural systems simulations (Gewaltig and Diesmann, 2007).

2. Covariance structure of noisy rate models

2.1. Definition of models

Let us consider a network of linear model neurons, each characterized by a continuous fluctuating rate r and connections from neuron j to neuron i given by the element wij of the connectivity matrix w. We assume that the response of neuron i to input can be described by a linear kernel h so that the activity in the network fulfills

| (1) |

where f(◦ − d) denotes the function f shifted by the delay d, x is an uncorrelated noise with

| (2) |

e.g., a Gaussian white noise and (f * g)(t) = ∫t− ∞f(t − t′) g(t′)dt′ is the convolution. With the particular choice b = wδ(◦ − d)* we obtain

| (3) |

We call the dynamics (3) the linear noisy rate model (LRM) with noise applied to output, as the sum r + x appears on the right hand side. Alternatively, choosing b = 1 we define the model with input noise as

| (4) |

Hence, Equations (3) and (4) are special cases of (1). In the following we consider the particular case of an exponential kernel

| (5) |

where θ denotes the Heaviside function, θ(t) = 1 for t > 0, 0 else. Applying to (1) the operator which has h as a Green's function (i.e., Oh = δ) we get

| (6) |

which is the equation describing a set of delay coupled Ornstein-Uhlenbeck-processes (OUP) with input or output noise for b = 1 or b = wδ(◦ − d)*, respectively. We use this representation in “Binary neurons” to show the correspondence to networks of binary neurons.

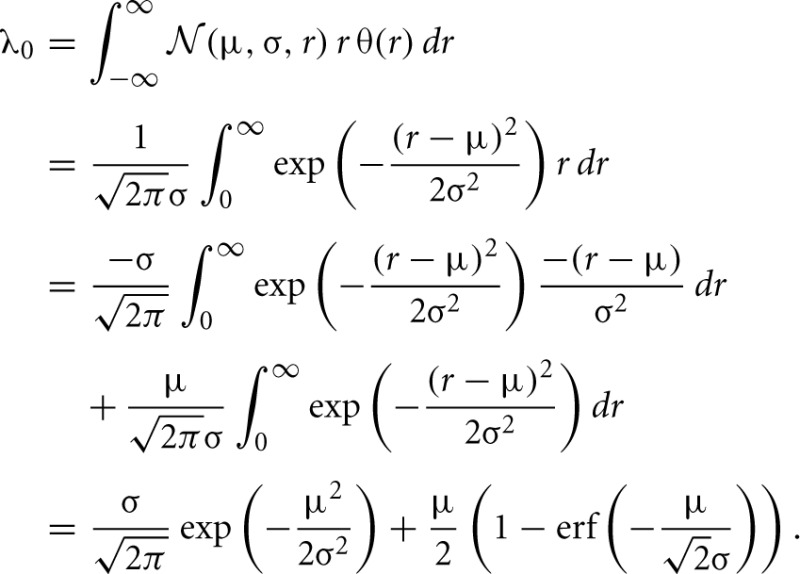

2.2. Solution of the convolution equation with input noise

The solution for the system with input noise obtained from the definition (4) after Fourier transformation is

| (7) |

where the delay is consumed by the kernel function . We use capital letters throughout the text to denote objects in the Fourier domain and lower case letters for objects in the time domain. Solved for R = (1 − Hdw)−1HX the covariance function of r in the Fourier domain is found with the Wiener–Khinchin theorem (Gardiner, 2004) as 〈R(ω)RT(−ω)〉, also called the cross spectrum

| (8) |

where we introduced the matrix D = 〈X(ω)XT(−ω)〉. From the second to the third line we used the fact that the non-delayed kernels H(ω) can be replaced by delayed kernels Hd(ω) and that the corresponding phase factors eiωd and e− iωd cancel each other. If x is a vector of pairwise uncorrelated noise, D is a diagonal matrix and needs to be chosen accordingly in order for the cross spectrum (8) to coincide (neglecting non-linear effects) with the cross spectrum of a network of binary neurons, as described in “Equivalence of binary neurons and Ornstein–Uhlenbeck processes”.

2.3. Solution of convolution equation with output noise

For the system with output noise we consider the quantity yi = ri + xi as the dynamic variable representing the activity of neuron i and aim to determine pairwise correlations. It is easy to get from (3) after Fourier transformation

| (9) |

which can be solved for R = (1 − Hdw)−1HdwX in order to determine the Fourier transform of Y as

| (10) |

The cross spectrum hence follows as

| (11) |

with D = 〈X(ω)XT(−ω)〉. D is a diagonal matrix with the i-th diagonal entry ρ2i. For the correspondence to spiking models D must be chosen appropriately, as discussed in “Hawkes processes” and “Leaky integrate-and-fire neurons” for Hawkes processes and LIF neurons, respectively.

2.4. Spectrum of the dynamics

For both linear rate dynamics, with output and with input noise, the cross spectrum C(ω) has poles at certain frequencies ω in the complex plane. These poles are defined by the zeros of det(Hd(ω)−1 − w) and the corresponding term with the opposite sign of ω. The zeros of det(Hd(ω)− 1 − w) are solutions of the equation

where Lj is the j-th eigenvalue of w. The same set of poles arises from (1) when solving for R. For d > 0 and the exponential kernel (5), the poles can be expressed as

| (12) |

where Wk is the k-th of the infinitely many branches of the Lambert-W function (Corless et al., 1996). For vanishing synaptic delay d = 0 there is obviously only one solution for every Lj given by .

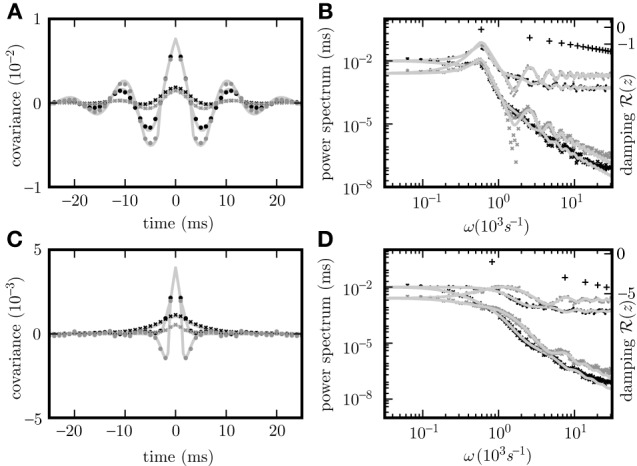

Given the same parameters d, w, τ, the pole structures of the cross spectra of both systems (8) and (11) are identical, since the former can be obtained from the latter by multiplication with (Hd(ω)Hd(−ω))−1 = (H(ω)H(−ω))−1, which has no poles. The only exception causing a different pole structure for the two models is the existence of an eigenvalue Lj = 0 of the connectivity matrix w, corresponding to a pole . However, this pole corresponds to an exponential decay of the covariance for input noise in the time domain and hence does not contribute to oscillations. For output noise, the multiplication with the term (H(ω)H(−ω))−1, vanishing at , cancels this pole in the covariance. Consequently both dynamics exhibit similar oscillations. A typical spectrum of poles for a negative eigenvalue Lj < 0 is shown in Figures 2B,D.

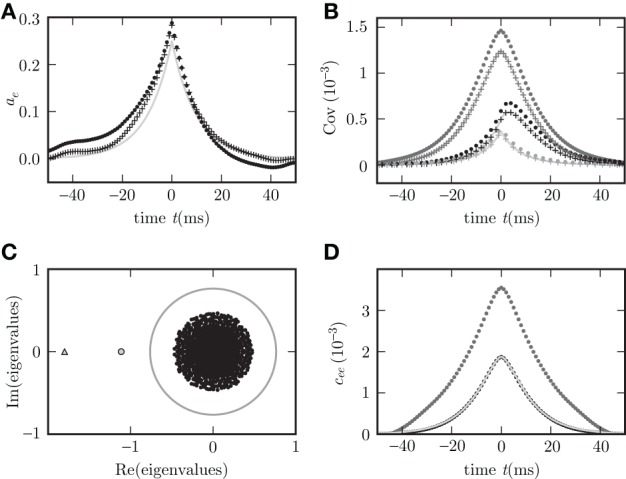

Figure 2.

Pole structure determines dynamics. Autocovariance of the population activity (A,C) measured in ρ2/τ and its Fourier transform called power spectrum (B,D) of the rate models with output noise (dots) (3) and input noise (diagonal crosses) (4) for delays d = 3 ms (A,B), and d = 1 ms (C,D). Black symbols show averages over the excitatory population activity and gray symbols over the inhibitory activity obtained by direct simulation. Light gray curves show theoretical predictions for the spectrum (20) and the covariance (21) for output noise and the spectrum (17) and the covariance (18) for input noise. Black crosses (12) in (B,D) denote the locations of the poles of the cross spectra - with the real parts corresponding to the damping (vertical axis), and the imaginary parts to oscillation frequencies (horizontal axis). The detailed parameters for this and following figures are given in “Parameters of simulations”.

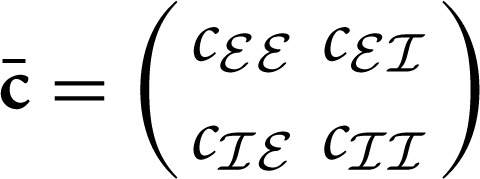

2.5. Population-averaged covariances

Often it is desirable to consider not the whole covariance matrix but averages over subpopulations of pairs of neurons. For instance the average over the whole network would result in a single scalar value. Separately averaging pairs, distinguishing excitatory and inhibitory neuron populations, yields a 2 by 2 matrix of covariances. For these simpler objects closed form solutions can be obtained, which already preserve some useful information and show important features of the network. Averaged covariances are also useful for comparison with simulations and experimental results.

In the following we consider a recurrent random network of Ne = N excitatory and Ni = γN inhibitory neurons with synaptic weight w for excitatory and −gw for inhibitory synapses. The probability p determines the existence of a connection between two randomly chosen neurons. We study the dynamics averaged over the two subpopulations by introducing the quantities and noise terms for a ∈ {![]() ,

, ![]() }; indices

}; indices ![]() and

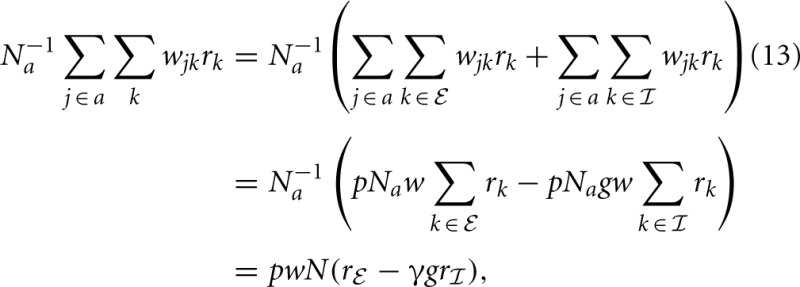

and ![]() stand for inhibitory and excitatory neurons and corresponding quantities. Calculating the average local input N−1a∑j ∈ awjkrk to a neuron of type a, we obtain

stand for inhibitory and excitatory neurons and corresponding quantities. Calculating the average local input N−1a∑j ∈ awjkrk to a neuron of type a, we obtain

where, from the second to the third line we used the fact that in expectation a given neuron k has pNa targets in the population a. The reduction to the averaged system in (13) is exact if in every column k in wjk there are exactly K non-zero elements for j ∈

where, from the second to the third line we used the fact that in expectation a given neuron k has pNa targets in the population a. The reduction to the averaged system in (13) is exact if in every column k in wjk there are exactly K non-zero elements for j ∈ ![]() and γK for j ∈

and γK for j ∈ ![]() , which is the case for networks with fixed out-degree (number of outgoing connections of a neuron to the neurons of a particular type is kept constant), as noted earlier (Tetzlaff et al., 2012). For fixed in-degree (number of connections to a neuron coming in from the neurons of a particular type is kept constant) the substitution of rj ∈ a by ra is an additional approximation, which could be considered as an average over possible realizations of the random connectivity. In both cases the effective population-averaged connectivity matrix M turns out to be

, which is the case for networks with fixed out-degree (number of outgoing connections of a neuron to the neurons of a particular type is kept constant), as noted earlier (Tetzlaff et al., 2012). For fixed in-degree (number of connections to a neuron coming in from the neurons of a particular type is kept constant) the substitution of rj ∈ a by ra is an additional approximation, which could be considered as an average over possible realizations of the random connectivity. In both cases the effective population-averaged connectivity matrix M turns out to be

| (14) |

with K = pN. So the averaged activities fulfill the same Equations (3) and (4) with the non-averaged quantities r, x, and w replaced by their averaged counterparts r = (r , r

, r )T, x = (x

)T, x = (x , x

, x )T, and M. The population averaged activities ra are directly related to the block-wise averaged covariance matrix

)T, and M. The population averaged activities ra are directly related to the block-wise averaged covariance matrix  , with cab = N−1aN−1b∑i ∈ a∑j ∈ b

cij. With

, with cab = N−1aN−1b∑i ∈ a∑j ∈ b

cij. With

| (15) |

we replace D by and c by c so that the same Equations (11) and (8) and their general solutions also hold for the block-wise averaged covariance matrices.

The covariance matrices separately averaged over pairs of excitatory, inhibitory or mixed pairs are shown in Figure 2 for both linear rate dynamics (3) and (4). (Parameters for all simulations presented in this article are collected in “Parameters of simulations,” the implementation of LRM is described in “Implementation of noisy rate models”). The poles of both models shown in Figure 2B are given by (12) and coincide with the peaks in the cross spectra (8) and (11) for output and input noise, respectively. The results of direct simulation and the theoretical prediction are shown for two different delays, with the longer delay leading to stronger oscillations.

Figure 3C shows the distribution of eigenvalues in the complex plane for two random connectivity matrices with different synaptic amplitudes w. The model exhibits a bifurcation, if at least one eigenvalue assumes a zero real part. For fixed out-degree the averaging procedure (13) is exact, reflected by the precise agreement of theory and simulation in Figure 3D. For fixed in-degree, the averaging procedure (13) is an approximation, which is good only for parameters far from the bifurcation. Even in this regime still small deviations of the theory from the simulation results are visible in Figure 3B. On the stable side close to a bifurcation, the appearance of long living modes causes large fluctuations. These weakly damped modes appearing in one particular realization of the connectivity matrix are not represented after the replacement of the full matrix w by the average M over matrix realizations. The eigenvalue spectrum of the connectivity matrix provides an alternative way to understand the deviations. By the averaging the set of N eigenvalues of the connectivity matrix is replaced withby the two eigenvalues of the reduced matrix M, one of which is zero due to identical rows of M. The eigenvalue spectrum of the full matrix is illustrated in Figure 3C. Even if the eigenvalue(s) LM of M are far in the stable region (corresponding to ![]() (z(LM)) > 0) some eigenvalues Lw of the full connectivity matrix in the vicinity of the bifurcation region may still have an imaginary part becoming negative and the system can feel their influence, shown in Figure 3D.

(z(LM)) > 0) some eigenvalues Lw of the full connectivity matrix in the vicinity of the bifurcation region may still have an imaginary part becoming negative and the system can feel their influence, shown in Figure 3D.

Figure 3.

Limits of the theory for fixed in-degree and fixed out-degree. Autocovariance (A) and covariance (B) in random networks with fixed in-degree (dots) and fixed out-degree (crosses). Simulation results for c

, c

, c

, and c

, and c

are shown in dark gray, black and light gray, respectively for synaptic weight w = 0.011 far from bifurcation. For larger synaptic weight w = 0.018 close to bifurcation (see text at the end of “Population-averaged covariances”), c

are shown in dark gray, black and light gray, respectively for synaptic weight w = 0.011 far from bifurcation. For larger synaptic weight w = 0.018 close to bifurcation (see text at the end of “Population-averaged covariances”), c

is also shown in (D) for fixed in-degree (dark gray dots) and for fixed out-degree (black dots). Corresponding theoretical predictions for the autocovariance (34) (A) and the covariance (18) (B,D) are plotted as light gray curves throughout. The set of eigenvalues is shown as black dots in panel (C) for the smaller weight. The gray circle denotes the spectral radius (Rajan and Abbott, 2006; Kriener et al., 2008) confining the set of eigenvalues for the larger weight. The small filled gray circle and the triangle show the effective eigenvalues L of the averaged systems for small and large weight, respectively.

is also shown in (D) for fixed in-degree (dark gray dots) and for fixed out-degree (black dots). Corresponding theoretical predictions for the autocovariance (34) (A) and the covariance (18) (B,D) are plotted as light gray curves throughout. The set of eigenvalues is shown as black dots in panel (C) for the smaller weight. The gray circle denotes the spectral radius (Rajan and Abbott, 2006; Kriener et al., 2008) confining the set of eigenvalues for the larger weight. The small filled gray circle and the triangle show the effective eigenvalues L of the averaged systems for small and large weight, respectively.

2.6. Fourier back transformation

Although the cross spectral matrices (8) and (11) for both dynamics look similar in the Fourier domain, the procedures for back transformation differ in detail. In both cases, the Fourier integral along the real ω-axis can be extended to a closed integration contour by a semi-circle with infinite radius centered at 0 in the appropriately chosen half-plane. The half-plane needs to be selected such that the contribution of the integration along the semi-circle vanishes. By employing the residue theorem (Bronstein et al., 1999) the integral can be replaced by a sum over residua of the poles encircled by the contour. For a general covariance matrix we only need to calculate c(t) for t ≥ 0, as for t < 0 the solution can be found by symmetry c(t) = cT(−t).

For input noise it is possible to close the contour in the upper half-plane where the integrand C(ω)eiωt vanishes for |ω| → ∞ for all t > 0, as |Cij(ω)| decays as |ω|−2. This can be seen from (8), because the highest order of H−1d ∝ ω appearing in det(H−1d − w) is equal to the dimensionality N of w (N = 2 for M), and in det(adjugate matrix ij of H−1d − w) it is N − 1 (i = j) or N − 2 (i ≠ j). So |(H−1d − w)−1| is proportional to |ω|−1|e− iωd| and |C(ω)|∝|ω|−2 for large |ω|.

For the case of output noise (11) C(ω) can be obtained from the C(ω) for input noise (8) multiplied with (Hd(ω)Hd(−ω))−1 ~ |ω|2 for large |ω|. The multiplication with this factor changes the asymptotic behavior of the integrand, which therefore contains terms converging to a constant value and terms decaying like |ω|−1 for |ω| → ∞. These terms result in non-vanishing integrals over the semicircle in the upper half-plane and have to be considered separately. To this end we rewrite (11) as

| (16) |

and find the constant term D which turns into a δ-function in the time domain. The first term in the second line of (16) decays like |ω|−2 and can be transformed just as C(ω) for input noise closing the contour in the upper half-plane. The second and third term are the transposed complex conjugates of each other, because of the dependence of H on −ω instead of ω, and require a special consideration. Multiplied by eiωt under the Fourier integral, the first term is proportional to Hdeiωt ~ ω−1eiω(t − d) and vanishes faster than |ω|−1 for large |ω| in the upper half-plane for t > d and in the lower half plane for t < d. For the second term the half planes are interchanged. The application of the residue theorem requires closing the integration contour in the half-plane where the integral over the semi-circle vanishes faster than |ω|−1. For w = M and in the general case of a stable dynamics all poles of the first term are in the upper half-plane ![]() (zk(Lj)) > 0, and have no contribution to c(t) for t < d. For the second term the same is true for t > −d; these terms correspond to the jumps of c(t) after one delay, caused by the effect of the sending neuron arriving at the other neurons in the system after one synaptic delay. These terms correspond to the response of the system to the impulse of the sending neuron – hence we call them “echo terms” in the following (Helias et al., 2013). The presence of such discontinuous jumps at time points d and −d in the case of output noise is reflected in the convolution of hw with D in the time domain in (37). For input noise the absence of discontinuities can be inferred from the absence of such terms in (33), where the derivative of the correlation function is equal to the sum of finite terms. The first summand in (16) corresponds to the covariance evoked by fluctuations propagating through the system originating from the same neuron and we call it “correlated input term”. In the system with input noise a similar separation into effective echo and correlated input terms can be performed. We obtain the correlated input term as the covariance in an auxiliary population without outgoing connections and echo terms as the difference between the full covariance between neurons within the network and the correlated input term.

(zk(Lj)) > 0, and have no contribution to c(t) for t < d. For the second term the same is true for t > −d; these terms correspond to the jumps of c(t) after one delay, caused by the effect of the sending neuron arriving at the other neurons in the system after one synaptic delay. These terms correspond to the response of the system to the impulse of the sending neuron – hence we call them “echo terms” in the following (Helias et al., 2013). The presence of such discontinuous jumps at time points d and −d in the case of output noise is reflected in the convolution of hw with D in the time domain in (37). For input noise the absence of discontinuities can be inferred from the absence of such terms in (33), where the derivative of the correlation function is equal to the sum of finite terms. The first summand in (16) corresponds to the covariance evoked by fluctuations propagating through the system originating from the same neuron and we call it “correlated input term”. In the system with input noise a similar separation into effective echo and correlated input terms can be performed. We obtain the correlated input term as the covariance in an auxiliary population without outgoing connections and echo terms as the difference between the full covariance between neurons within the network and the correlated input term.

2.7. Explicit expression for the population averaged cross covariance in the time domain

We obtain the population averaged cross spectrum in a recurrent random network of Ornstein–Uhlenbeck processes (OUP) with input noise by inserting the averaged connectivity matrix w = M (14) into (8). The explicit expression for the covariance function follows by taking into account all (both) eigenvalues of M with values 0 and L = Kw(1 − γg). The detailed derivation of the results presented in this section are documented in “Calculation of the Population Averaged Cross Covariance in Time Domain”. The expression for the cross spectrum (8) takes the form

| (17) |

where we introduced f(ω) = (Hd(ω)−1 − L)−1 as a short hand. Sorting the terms by their dependence on ω, introducing the functions Φ1(ω),…,Φ4(ω) for this dependence, and φ1(t),…,φ4(t) for the corresponding functions in the time domain, the covariance in the time domain takes the form

The previous expression is valid for arbitrary D. In simulations presented in this article we consider identical marginal input statistics for all neurons. In this case the averaged activities for excitatory and inhibitory neurons are the same, so we can insert the special form of D given in (15), which results in

| (18) |

The time-dependent functions φ1,…,φ4 are the same in both cases. Using the residue theorem for t ≥ 0 they can be expressed as a sum over the poles zk(L) given by (12) and the pole of Hd(ω). At ω = zk(L) the residue of f(ω) is Res(f, ω = zk(L)) = (idL + iτeiωd)−1, the residue of Hd(ω) at is , so that the explicit forms of φ1,…,φ4 follow as

| (19) |

The corresponding expression for C(ω) for output noise is obtained by multiplying (17) with H−1d(ω)H−1d(−ω) = (1 + ω2τ2)

| (20) |

which, after Fourier transform, provides the expression for c(t) in the time domain for t ≥ 0

| (21) |

As in (18), the first line holds for arbitrary D, and the second for D given by (15), valid if the firing rates are homogeneous. φ1 is defined as before, and

| (22) |

vanishes for t < d. All matrix elements of the first term in (21) are identical. Therefore all elements of c(t) are equal for 0 < |t| < d. Both rows of the matrix in front of φ0 are identical, so for t > 0 the off diagonal term c

coincides with c

coincides with c

and c

and c

with c

with c

and vice versa for t < 0.

and vice versa for t < 0.

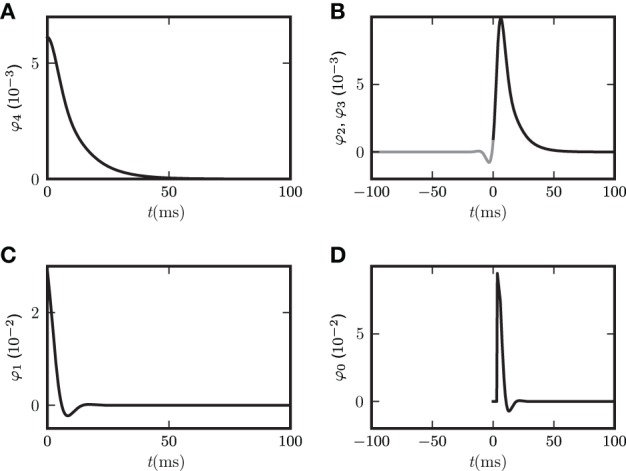

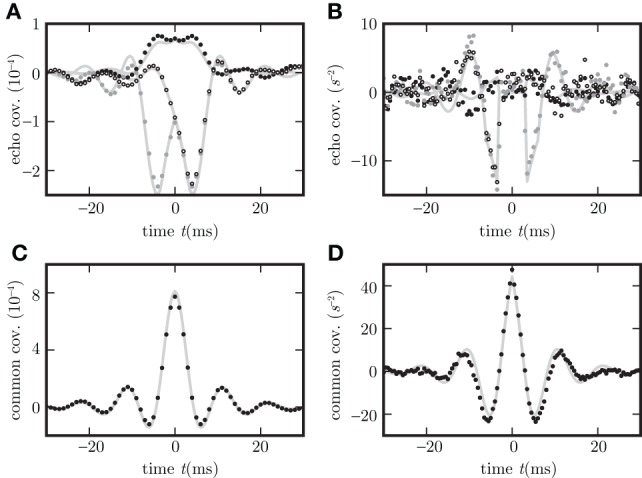

As an illustration we show the functions φ0,…,φ4 for one set of parameters in Figure 4. The left panels (A,C) correspond to contributions to the covariance caused by common input to a pair of neurons, the right panels (B,D) to terms due to the effect of one of the neurons' activities on the remaining network (echo terms). The upper panels (A,B) belong to the model with input noise, the lower panel (C,D) to the one with output noise.

Figure 4.

Functions φ0 (D), φ1 (C), φ2, φ3 (B), φ4 (A) introduced in (19) and (22) for decomposition of covariance c(t). In (B) φ3(−t) is shown in gray and φ2(t) in black. The two functions are continuations of each other, joint at t = 0. Both functions appear in the echo term for input noise. The function φ0 in (D) describing the corresponding echo term in the case of output noise is shifted to be aligned with the function in (B) to facilitate the comparison of (B,D). Parameters in all panels are d = 3 ms, τ = 10 ms, L = −1.72.

For the rate dynamics with output noise, the term with φ1 in (21) (shown in Figure 4C) is symmetric and describes the common input covariance and the term with φ0 (shown in Figure 4D) is the echo part of the covariance. For the rate dynamics with input noise (18) the term containing φ4 (shown in Figure 4A) is caused by common input and is hence also symmetric, the terms with φ2 and φ3 (shown in Figure 4B) correspond to the echo part and have hence their peak outside the origin. The second echo term in (18) is equal to the first one transposed and with opposite sign of the time argument, so we show φ2(t) and φ3(−t) together in one panel in Figure 4B. Note that for input noise, the term with φ1 describes the autocovariance, which corresponds to the term with the δ-function in case of output noise.

The solution (18) is visualized in Figure 6, the solution (21) in Figure 7, and the decomposition into common input and echo parts is also shown and compared to direct simulations in Figure 8.

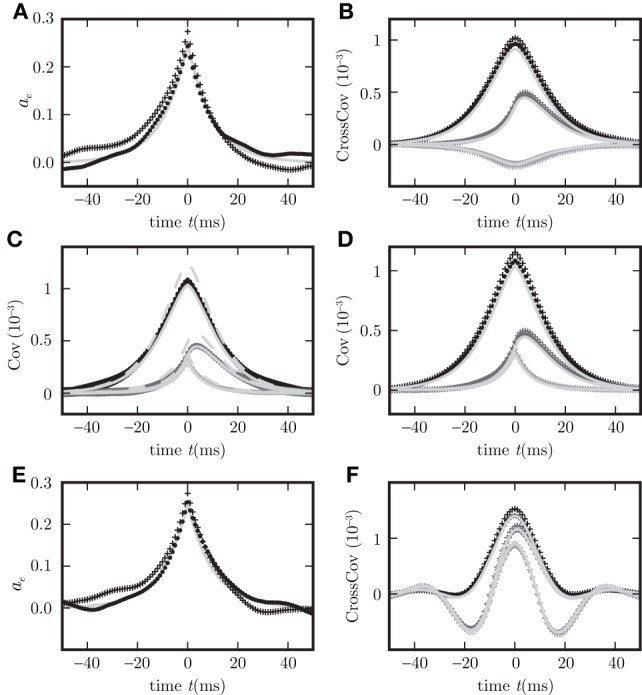

Figure 6.

Binary model neuron corresponds to OUP model with input noise. Autocovariance (A), crosscovarince (B), and autocovariance of population averaged activity (C,D), for binary neurons (dots) and rate model with input noise (crosses). c

, c

, c

and c

and c

are shown in black, gray, and light gray. Corresponding theoretical predictions (18) in (C,D), (34) in (A), their difference in (C) are plotted as light gray curves throughout. Dashed curve in (C) represents the theoretical prediction using the linearization with the slope at the mean activity (26), the solid curve shows the results for the slope averaged over Gaussian distributed input fluctuations (27). The spread of the simulation results for binary neurons in (C) is due to different realizations of the random connectivity. (E,F) are the same as (A,B) but for the presence of a synaptic delay d = 10 ms instead of d = 0.1 ms.

are shown in black, gray, and light gray. Corresponding theoretical predictions (18) in (C,D), (34) in (A), their difference in (C) are plotted as light gray curves throughout. Dashed curve in (C) represents the theoretical prediction using the linearization with the slope at the mean activity (26), the solid curve shows the results for the slope averaged over Gaussian distributed input fluctuations (27). The spread of the simulation results for binary neurons in (C) is due to different realizations of the random connectivity. (E,F) are the same as (A,B) but for the presence of a synaptic delay d = 10 ms instead of d = 0.1 ms.

Figure 7.

Covariance structure in spiking networks corresponds to OUP with output noise. (A) Autocovariance obtained by direct simulation of the LIF (black), Hawkes (gray), and OUP (light gray) models for excitatory (dots) and inhibitory neurons (crosses). (B) Covariance c

averaged over disjoint pairs of neurons for LIF (black dots), Hawkes (gray dots), and OUP with output noise (empty circles). (C) Covariance averaged over disjoint pairs of neurons of the same type. (D) Autocovariance of the population averaged activity. Averages in (C,D) over excitatory neurons as black dots, over inhibitory neurons as gray dots. Corresponding theoretical predictions (21) are plotted as light gray curves in all panels except (A). Light gray diagonal crosses in (A,D) denote theoretical peak positions determined by the firing rate r as rΔt (where Δt = 0.1 ms is the time resolution of the histogram).

averaged over disjoint pairs of neurons for LIF (black dots), Hawkes (gray dots), and OUP with output noise (empty circles). (C) Covariance averaged over disjoint pairs of neurons of the same type. (D) Autocovariance of the population averaged activity. Averages in (C,D) over excitatory neurons as black dots, over inhibitory neurons as gray dots. Corresponding theoretical predictions (21) are plotted as light gray curves in all panels except (A). Light gray diagonal crosses in (A,D) denote theoretical peak positions determined by the firing rate r as rΔt (where Δt = 0.1 ms is the time resolution of the histogram).

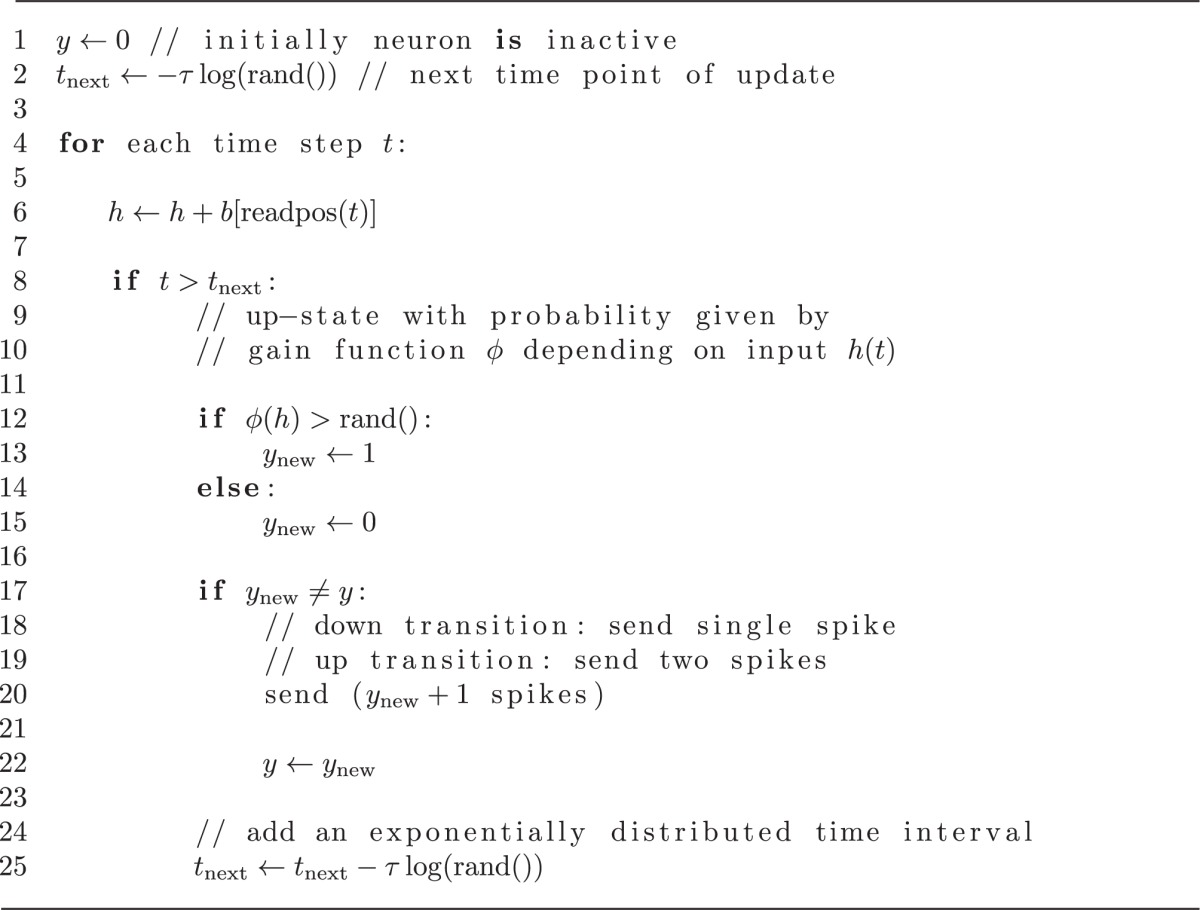

Figure 8.

Different echo terms for spiking and non-spiking neurons. Binary non-spiking neurons shown in (A,C) and LIF in (B,D). (A),(B) Echo terms by direct influence of the neuron's output on the network in dependence of neuron types (in A,B

c

,c

,c

, and c

, and c

are plotted as black, gray dots and circles). (C,D), Contributions to the covariance evoked by correlated and common input (black dots) measured with help of auxiliary model neurons which do not provide feedback to the network. Corresponding theoretical predictions (16) are plotted as light gray curves throughout.

are plotted as black, gray dots and circles). (C,D), Contributions to the covariance evoked by correlated and common input (black dots) measured with help of auxiliary model neurons which do not provide feedback to the network. Corresponding theoretical predictions (16) are plotted as light gray curves throughout.

3. Binary neurons

In the following sections we study, in turn, the binary neuron model, the Hawkes model and the LIF model and show how they can be mapped to one of the two OUPs; either the one with input or the one with output noise, so that the explicit solutions (18) and (21) for the covariances derived in the previous section can be applied. In the present section, we start with the binary neuron model (Ginzburg and Sompolinsky, 1994; Buice et al., 2009).

Following Ginzburg and Sompolinsky (1994) the state of the network of N binary model neurons is described by a binary vector n ∈ {0, 1}N and each neuron is updated at independently drawn time points with exponentially distributed intervals of mean duration τ. This stochastic update constitutes a source of noise in the system. Given the i-th neuron is updated, the probability to end in the up-state (ni = 1) is determined by the gain function Fi(n) which depends on the activity n of all other neurons. The probability to end in the down state (ni = 0) is 1 − Fi(n). Here we implemented the binary model in the NEST simulator (Gewaltig and Diesmann, 2007) as described in “Implementation of Binary Neurons in a Spiking Simulator~Code”. Such systems have been considered earlier (Ginzburg and Sompolinsky, 1994; Buice et al., 2009), and here we follow the notation employed in the latter work. In the following we collect results that have been derived in these works and refer the reader to these publications for the details of the derivations. The zero-time lag covariance function is defined as cij(t) = 〈ni(t)nj(t)〉 − ai(t)aj(t), with the expectation value 〈〉 taken over different realizations of the stochastic dynamics. Here a(t) = (a1(t),…,aN(t))T is the vector of mean activities ai(t) = 〈ni(t)〉. cij(t) fulfills the differential equation

In the stationary state, the covariance therefore fulfills

| (23) |

The time lagged covariance cij(t, s) = 〈ni(t)nj(s)〉 − ai(t)aj(s) fulfills for t > s the differential equation

| (24) |

This equation is also true for i = j, the autocovariance. The term 〈Fi(n, t)(nj(s) − aj(s))〉 has a simple interpretation: it measures the influence of a fluctuation of neuron j at time s around its mean value on the gain of neuron i at time t (Ginzburg and Sompolinsky, 1994). We now assume a particular form for the coupling between neurons

| (25) |

where Ji is the vector of incoming synaptic weights into neuron i and ϕ is a non-linear gain function. Assuming that the fluctuations of the total input Jin into the i-th neuron are sufficiently small to allow a linearization of the gain function ϕ, we obtain the Taylor expansion

where

| (26) |

is the slope of the gain function at the point of mean input.

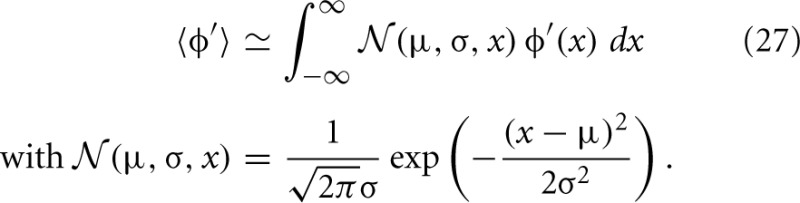

Up to this point the treatment of the system is identical to the work of Ginzburg and Sompolinsky (1994). Now we present an alternative approach for the linearization which takes into account the effect of fluctuations in the input. For sufficiently asynchronous network states, the fluctuations in the input Jin(t − d) to neuron i can be approximated by a Gaussian distribution ![]() (μ, σ). In the following we consider a homogeneous random network with fixed in-degree as described in “Population-averaged covariances”. As each neuron receives the same number K of excitatory and γK inhibitory synapses, the marginal statistics of the summed input to each neuron is identical. The mean input to a neuron then is μ = KJ(1 − γg)a, where a is the mean activity of a neuron in the network. If correlations are small, the variance of this input signal distribution can be approximated as the sum of the variances of the individual contributions from the incoming signals, resulting in σ2 = KJ2(1 + γg2)a(1 − a), where we used the fact that the variance of a binary variable with mean a is a(1 − a). This results from a direct calculation: since n ∈ {0, 1}, n2 = n, so that the variance is 〈n2〉 − 〈n〉2 = 〈n〉 − 〈n〉2 = a(1 − a). Averaging the slope ϕ′ of the gain function over the distribution of the input variable results in the averaged slope

(μ, σ). In the following we consider a homogeneous random network with fixed in-degree as described in “Population-averaged covariances”. As each neuron receives the same number K of excitatory and γK inhibitory synapses, the marginal statistics of the summed input to each neuron is identical. The mean input to a neuron then is μ = KJ(1 − γg)a, where a is the mean activity of a neuron in the network. If correlations are small, the variance of this input signal distribution can be approximated as the sum of the variances of the individual contributions from the incoming signals, resulting in σ2 = KJ2(1 + γg2)a(1 − a), where we used the fact that the variance of a binary variable with mean a is a(1 − a). This results from a direct calculation: since n ∈ {0, 1}, n2 = n, so that the variance is 〈n2〉 − 〈n〉2 = 〈n〉 − 〈n〉2 = a(1 − a). Averaging the slope ϕ′ of the gain function over the distribution of the input variable results in the averaged slope

The two alternative methods of linearization of ϕ are illustrated in Figure 5. In the given example, the linearization procedure taking into account the fluctuations of the input signal results in a smaller effective slope 〈ϕ′〉 than taking the slope ϕ′(a) at the mean activity a near its maximum. Averaging the slope 〈ϕ′〉 over this distribution fits simulation results better than ϕ′(a) calculated at the mean of a, as shown in Figure 6.

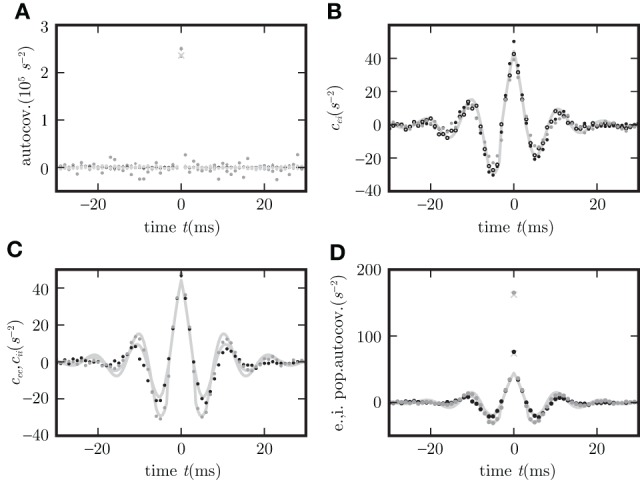

Figure 5.

Alternative linearizations of the binary neuron model. The black curve represents the non-linear gain function tanh(βx). The dashed gray line is its tangent at the mean input value (denoted by the diagonal cross). The solid curve is the slope 〈ϕ′〉 averaged over the distribution of the fluctuating input (27). This distribution estimated from direct simulation is presented by black dots, the corresponding theoretical prediction of a normal distribution  (μ,σ) (27) is shown as the light gray curve.

(μ,σ) (27) is shown as the light gray curve.

The finite slope of the non-linear gain function can be understood as resulting from the combination of a hard threshold with an intrinsic local source of noise. The inverse strength of this noise determines the slope parameter β (Ginzburg and Sompolinsky, 1994). In this sense, the network model contains two sources of noise, the explicit local noise, quantified by β and the fluctuating synaptic input interpreted as self-generated noise on the network level, quantified by σ. Even in the absence of local noise (β → ∞), the above mentioned linearization is applicable and yields a finite effective slope 〈ϕ′〉 (27). In the latter case the resulting effective synaptic weight is independent of the original synapse strength (Grytskyy et al., 2013).

We now extend the classical treatment of covariances in binary networks (Ginzburg and Sompolinsky, 1994) by synaptic conduction delays. In (25) Fi(n, t) must therefore be understood as a functional acting on the function n(t′) for t′ ∈ [−∞, t], so that also synaptic connections with time delay d can be realized. We define an effective weight vector to absorb the gain factor as wi = βiJi, with either βi = ϕ′(μ) or βi = 〈ϕ′〉 depending on the linearization procedure, and expand the right hand side of (24) to obtain

Thus the cross-covariance fulfills the matrix delay differential equation

| (28) |

This differential equation is valid for t > s. For the stationary solution, the differential equation only depends on the relative timing u = t − s

| (29) |

The same linearization applied to (23) results in the boundary condition for the solution of the previous equation

| (30) |

or, if we split c into its diagonal and its off-diagonal parts ca and c≠

| (31) |

In the following section we use this representation to demonstrate the equivalence of the covariance structure of binary networks to the solution for OUP with input noise.

3.1. Equivalence of binary neurons and ornstein–uhlenbeck processes

In the following subsection we show that the same Equations (29) and (31) for binary neurons also hold for the Ornstein-Uhlenbeck process (OUP) with input noise. In doing so here we also extend the existing framework of OUP (Risken, 1996) to synaptic conduction delays d. A network of such processes is described by

| (32) |

where x is a vector of pairwise uncorrelated white noise with 〈x(t)〉x = 0 and 〈xi(t)xj(t + t′)〉x = δijδ(t′)ρ2. With the help of the Green's function G satisfying , namely , we obtain the solution of Equation (32) as

The equation for the fluctuations δr(t) = r(t) − 〈r(t)〉x around the expectation value

coincides with the noisy rate model with input noise (4) with delay d and convolution kernel h = G. In the next step we investigate the covariance matrix cij(t, s) = 〈δri(t + s)δrj(t)〉x to show for which choice of parameters the covariance matrices for the binary model and the OUP with input noise coincide. To this end we derive the differential equation with respect to the time lag s for positive lags s > 0

| (33) |

where we used 〈x(t + s))δr(t)〉x = 0, because the noise is realized independently for each time step and the system is causal. Equation (33) is identical to the differential equation satisfied by the covariance matrix (28) for binary neurons (Ginzburg and Sompolinsky, 1994). To determine the initial condition of (33) we need to take the limit c(t, 0) = lims → +0c(t, s). This initial condition can be obtained as the stationary solution of the following differential equation

Here we used that 〈x(t + s)δrT(t)〉 vanishes due to independent noise realizations and causality and

In the stationary state, c only depends on the time lag s and is independent of the first time argument t, which, with the symmetry c(−d)T = c(d) yields the additional condition for the solution of (33)

or, if c is split in diagonal and off-diagonal parts ca and c≠, respectively,

with O = wca(−d) + (wca(−d))T. In the equation for the autocovariance ca the first two terms are contributions due to the cross covariance. In the state of asynchronous network activity with cij ~ N−1 for i≠ j these terms are typically negligible in comparison to the third term because ∑k wikcki ~ wKN−1 = pw, which is typically smaller than 1 for small effective weights w < 1 and small connection probabilities p « 1. In this approximation with (33) the temporal shape of the autocovariance function is exponentially decaying with time constant τ. With ca(0) ≈ D/2 the approximate solution for the autocovariance is

| (34) |

The cross covariance then satisfies the initial condition

which coincides with (31) for binary neurons if the diagonal matrix containing the zero time autocorrelations ca(0) for binary neurons is equal to D/2, i.e., if the amplitude of the input noise ρ2 = 2τ a(1 − a) and the effective linear coupling satisfies wi = βiJi. Figure 6 shows simulation results for population averaged covariance functions in binary networks and in networks of OUPs with input noise where the parameters of the OUP network are chosen according to the requirements derived above. The theoretical results (18) agree well with the direct simulations of both systems. For comparison, both methods of linearization, as explained above, are shown. The linearization procedure which takes into account the noise on the input side of the non-linear gain function results in a more accurate prediction. Moreover, the results derived here extend the classical theory (Ginzburg and Sompolinsky, 1994) by considering synaptic conduction delays. Figure 8 shows the decomposition of the covariance structure for a non-zero delay d = 3 ms. For details of the implementation see “Implementation of binary neurons in a spiking simulator code”. The explicit effect of introducing delays into the system, such as the appearance of oscillations in the time dependent covariance, is presented in (E,F) of Figure 6, differing from (A,B) of this figure, respectively, only in the delay (d = 10 ms for (E,F), d = 0.1 ms for (A,B)).

4. Hawkes processes

In the following section we show that to linear order the covariance functions in networks of Hawkes processes (Hawkes, 1971) are equivalent to those in the linear rate network with output noise. Hawkes processes generate spikes randomly with a time density given by r(t), where neuron i generates spikes at a rate ri(t), realized independently within each infinitesimal time step. Arriving spike trains s influence r according to

| (35) |

with the connectivity matrix J and the kernel function hd including the delay. Here ν is a constant base rate of spike emission assumed to be equal for each neuron. Here we employ the implementation of the Hawkes model in the NEST simulator (Gewaltig and Diesmann, 2007). The implementation is described in “Implementation of Hawkes neurons in a spiking simulator code”.

Given neuron j spiked at time u ≤ t, the probability of a spike in the interval [t, t+δt) for neuron i is 1 if i = j, u = t (the neuron spikes synchronously with itself) and ri(t)δt + o(δt2) otherwise. Considering the system in the stationary state with the time averaged activity r = 〈s(t)〉 we obtain a convolution equation for time lags τ ≥ 0 for the covariance matrix with the entry cij(τ) for the covariance between spike trains of neurons i and j

| (36) |

with the diagonal matrix Dr = δ(τ)diag(r), which has been derived earlier (Hawkes, 1971). If the rates of all neurons are equal, ri = r, all entries in the diagonal matrix are the same, Dr = δ(τ)1r. In the subsequent section we demonstrate that the same convolution Equation (36) holds for the linear rate with output noise.

4.1. Convolution equation for linear noisy rate neurons

For the linear rate model with output noise we use Equation (3) for time lags τ > 0 to obtain a convolution equation for the covariance matrix of the output signal vector y = r + x as

| (37) |

where we utilized that due to causality the random noise signal generated at t+τ has no influence on r(t), so the respective correlation vanishes. D is the covariance of the noise as in (11), Dij(τ) = 〈xi(t)xj(t + τ)〉 = δijδ(τ)ρ2. If ρ is chosen such that ρ2 coincides with the averaged activity r in a network of Hawkes neurons and the connection matrix w is identical to J of the Hawkes network, the Equations (36) and (37) are identical. Therefore the cross spectrum of both systems is given by (11).

4.2. Non-linear self-consistent rate in rectifying hawkes networks

The convolution Equation (36) for the covariance matrix of Hawkes neurons is exact if no element of r is negative, which is particularly the case for a network of only excitatory neurons. Especially in networks including inhibitory couplings, the intensity ri of neuron i may assume negative values. A neuron with ri < 0 does not emit spikes, so the instantaneous rate is given by λi = [ri(t)]+ = θ(ri(t)) ri(t), with the Heaviside function θ. We now take into account this effective nonlinearity –the rectification of the Hawkes model neuron– in a similar manner as we already used to linearize binary neurons. If the network is in the regime of low spike rates, the fluctuations in the input of each neuron due to the Poissonian arrival of spikes are large compared to the fluctuations due to the time varying intensities r(t). Considering the same homogeneous network structure as described in “Population-averaged covariances,” the input statistics is identical for each cell i, so the mean activity λ0 = 〈λi〉 is the same for all neurons i. The superposition of the synaptic inputs to neuron i cause an instantaneous intensity ri that follows approximately a Gaussian distribution ![]() (μ,σ,ri) with mean μ = 〈r〉 = ν+λ0KJ(1 − gγ) and standard deviation . These expressions hold for the exponential kernel (5) due to Campbell's theorem (Papoulis and Pillai, 2002), because of the stochastic Poisson-like arrival of incoming spikes, where the standard deviation of the spike count is proportional to the square root of the intensity λ0. The rate λ0 is accessible by explicit integration over the Gaussian probability density as

(μ,σ,ri) with mean μ = 〈r〉 = ν+λ0KJ(1 − gγ) and standard deviation . These expressions hold for the exponential kernel (5) due to Campbell's theorem (Papoulis and Pillai, 2002), because of the stochastic Poisson-like arrival of incoming spikes, where the standard deviation of the spike count is proportional to the square root of the intensity λ0. The rate λ0 is accessible by explicit integration over the Gaussian probability density as

This equation needs to be solved self-consistently (numerically or graphically) to determine the rate in the network, as the right hand side depends on the rate λ0 itself through μ and σ. Rewritten as

| (38) |

Pμ,σ(r > 0) is the probability that the intensity of a neuron is above threshold and therefore contributes to the transmission of a small fluctuation in the input. A neuron for which r < 0 acts as if it was absent. Hence we can treat the network with rectifying neurons completely analogous to the case of linear Hawkes processes, but multiply the synaptic weight J or −gJ of each neuron with Pμ,σ(r > 0), i.e., the linearized connectivity matrix is

| (39) |

Figure 7 shows the agreement of the covariance functions obtained from direct simulation of the network of Hawkes processes and the analytical solution (21) with average firing rate λ0 determined by (38), setting the effective strength of the noise ρ2 = λ0, and the linearized coupling as described above. The detailed procedure for choosing the parameters in the direct simulation is described together with the implementation of the Hawkes model in “Implementation of Hawkes neurons in a spiking simulator code”.

5. Leaky integrate-and-fire neurons

In this section we consider a network of LIF model neurons with exponentially decaying postsynaptic currents and show its equivalence to the network of OUP with output noise, valid in the asynchronous irregular regime. A spike sent by neuron j at time t arrives at the target neuron i after the synaptic delay d, elicits a synaptic current Ii that decays with time constant τs and causes a response in the membrane potential Vi proportional to the synaptic efficacy Jij. With the time constant τm of the membrane potential, the coupled set of differential equations governing the subthreshold dynamics of a single neuron i is (Fourcaud and Brunel, 2002)

| (40) |

where the membrane resistance was absorbed into the definitions of Jij and Ii. If Vi reaches the threshold Vθ at time point tik the neuron emits an action potential and the membrane potential is reset to Vr, where it is clamped for the refractory time τr. The spiking activity of neuron i is described by this sequence of action potentials, the spike train si(t) = ∑kδ(t − tik). The dynamics of a single neuron is deterministic, but in network states of asynchronous, irregular activity and in the presence of external Poisson inputs to the network, the summed input to each cell can well be approximated as white noise (Brunel, 2000) with first moment μi = τm∑jJijrj and second moment σ2i = τm∑jJ2ijrj, where rj is the stationary firing rate of neuron j. The stationary firing rate of neuron i is then given by Fourcaud and Brunel (2002)

| (41) |

with Riemann's zeta function ζ. The response of the LIF neuron to the injection of an additional spike into afferent j determines the impulse response wijh(t) of the system. The time integral wij = wij ∫∞0h(t)dt is the DC-susceptibility, which can formally be written as the derivative of the stationary firing rate by the rate of the afferent rj, which, evaluated by help of (41), yields (Helias et al., 2013, Results and App. A)

| (42) |

In the strongly fluctuation-driven regime, the temporal behavior of the kernel h is dominated by a single exponential decay, whose time constant can be determined empirically. In a homogeneous random network the firing rates of all neurons are identical ri = r and follow from the numerical solution of the self-consistency Equation (41). Approximating the autocovariance function of a single spike train by a δ-peak scaled by the rate rδ(t), one obtains for the covariance function c between pairs of spike trains the same convolution Equation (36) as for Hawkes neurons (Helias et al., 2013, cf. equation 5). As shown in “Convolution equation for linear noisy rate neurons” this convolution equation coincides with that of a linear rate model with output noise (37), where the diagonal elements of D are chosen to agree to the average spike rate ρ2 = r. The good agreement of the analytical cross covariance functions (21) for the OUP with output noise and direct simulation results for LIF are shown in Figure 7.

6. Discussion

In this work we describe the path to a unified theoretical view on pairwise correlations in recurrent networks. We consider binary neuron models, LIF models, and linear point process models. These models containing a non-linearity (spiking threshold in spiking models, non-linear sigmoidal gain function in binary neurons, strictly positive rates in Hawkes processes) are linearized, taking into account the distribution of the fluctuating input.

The work presents results for several neuron models: We derive analytical expressions for delay-coupled OUP with input and with output noise, we extend the analytical treatment for stochastic binary neurons to the presence of synaptic delays, present a method that takes into account network-generated noise to determine the effective gain function, extend the theory of Hawkes processes to the existence of delays and inhibition, and present in Equation (12) a condition for the onset of global oscillations caused by delayed feedback, generalized to feedback pathways through different eigenvalues of the connectivity.

Some results qualitatively extend the existing theory (delays, inhibition), others improve the accuracy of existing theories (linearization including fluctuations). More importantly, our approach enables us to demonstrate the equivalence of each of these models after linear approximation to a linear model with fluctuating continuous variables. The fact that linear perturbation theory leads to effective linear equations is of course not surprising, but the analytical procedure firstly enables a mapping between models that conserves quantitative results and secondly allows us to uncover common structures underlying the emergence of correlated activity in recurrent networks. For the commonly appearing exponentially decaying response kernel function, these rate models coincide with the OUP (OUP, Uhlenbeck and Ornstein, 1930; Risken, 1996). We find that the considered models form two groups, which, in linear approximation merely differ by a matrix valued factor scaling the noise and in the choice of variables interpreted as neural activity. The difference between these two groups corresponds to the location of the noise: spiking models—LIF models and Hawkes models—belong to the class with noise on the output side, added to the activity of each neuron. The non-spiking binary neuron model corresponds to an OUP where the noise is added on the input side of each neuron. The closed solution for the correlation structure of OUP holds for both classes.

We identify different contributions to correlations in recurrent networks: the solution for output noise is split into three terms corresponding to the δ-peak in the autocovariance, the covariance caused by shared input, and the direct synaptic influence of stochastic fluctuations of one neuron on another–the latter echo terms are equal to propagators acting with delays (Helias et al., 2013). A similar splitting into echo and correlated input terms for the case of input noise is shown in Figure 8. For increasing network size N → ∞, keeping the connection probability p fixed, so that K = pN, and with rescaled synaptic amplitudes J ~ 1/ (van Vreeswijk and Sompolinsky, 1996; Renart et al., 2010) the echo terms vanish fastest. Formally this can be seen from (18): the multiplicative factor of the common covariance term φ4 does not change with N while the other coefficients decrease. So ultimately all four entries of the matrix c have the same time dependence determined by the common covariance term φ4. In particular the covariance between excitation and inhibition c

becomes symmetric in this limit. This finally provides a quantitative explanation of the observation made in (Renart et al., 2010) that the time-lag between excitation and inhibition vanishes in the limit of infinitely large networks. For a different synaptic rescaling J ~ N−1 while keeping ρ2 constant by appropriate additional input to each neuron (see Helias et al., 2013 applied to the LIF model), all multiplicative factors decrease ~ N−1 and so does the amplitude of all covariances. Hence the asymmetry of c

becomes symmetric in this limit. This finally provides a quantitative explanation of the observation made in (Renart et al., 2010) that the time-lag between excitation and inhibition vanishes in the limit of infinitely large networks. For a different synaptic rescaling J ~ N−1 while keeping ρ2 constant by appropriate additional input to each neuron (see Helias et al., 2013 applied to the LIF model), all multiplicative factors decrease ~ N−1 and so does the amplitude of all covariances. Hence the asymmetry of c

does not vanish in this limit. The same results hold for the case of output noise where the term with φ1 describes the common input part of the covariance. In this case and for finite network size, c

does not vanish in this limit. The same results hold for the case of output noise where the term with φ1 describes the common input part of the covariance. In this case and for finite network size, c

coincides with c

coincides with c

and c

and c

with c

with c

for t > 0, having a discontinuous jump at the time of the synaptic delay t = d. For time lags smaller than the delay all four covariances coincide. This is due to causality, as the second neuron cannot feel the influence of a fluctuation that happened in the first neuron less than one synaptic delay before. The covariance functions for systems corresponding to an OUP with input noise contain neither discontinuities nor sharp peaks at t = d, but c

for t > 0, having a discontinuous jump at the time of the synaptic delay t = d. For time lags smaller than the delay all four covariances coincide. This is due to causality, as the second neuron cannot feel the influence of a fluctuation that happened in the first neuron less than one synaptic delay before. The covariance functions for systems corresponding to an OUP with input noise contain neither discontinuities nor sharp peaks at t = d, but c

and c

and c

have maxima and minima near this location. This observation can be interpreted as a result of the stochastic nature of the binary model where changes in the input influence the state of the neuron only with a certain probability. So, the entries of c in this case take different values for |t| < d but show the tendency to approach each other with increasing |t| » d. This tendency increases with network size. Our analytical solutions (18) for input noise and (21) for output noise hence explain the model-class dependent differences in the shape of covariance functions.

have maxima and minima near this location. This observation can be interpreted as a result of the stochastic nature of the binary model where changes in the input influence the state of the neuron only with a certain probability. So, the entries of c in this case take different values for |t| < d but show the tendency to approach each other with increasing |t| » d. This tendency increases with network size. Our analytical solutions (18) for input noise and (21) for output noise hence explain the model-class dependent differences in the shape of covariance functions.

The two above mentioned synaptic scaling procedures are commonly termed “strong coupling” (J ~ 1/) and “weak coupling” (J ~ 1/N), respectively. The results shown in Figure 6 were obtained for J = 2/ and β = 0.5, so the number of synapses required to cause a notable effect on the gain function is 1/(β J) = , which is small compared to the number of incoming synapses pN. Hence the network is in the strong coupling regime. Also note that for infinite slope of the gain function, β → ∞, the magnitude of the covariance becomes independent of the synaptic amplitude J, in agreement with the linear theory presented here. This finding can readily be understood by the linearization procedure, presented in the current work, that takes into account the network- generated fluctuations of the total input. The amplitude σ of these fluctuations scales linearly in J and the effective susceptibility depends on J/σ in the case β → ∞, explaining the invariance (Grytskyy et al., 2013). In the current manuscript we generalized this procedure to finite slopes β and to other models than the binary neuron model.

Our approach enables us to map results obtained for one neuron model to another, in particular we extend the theory of all considered models to capture synaptic conduction delays, and devise a simpler way to obtain solutions for systems considered earlier (Ginzburg and Sompolinsky, 1994). Our derivation of covariances in spiking networks does not rely on the advanced Wiener-Hopf method (Hazewinkel, 2002), as earlier derivations (Hawkes, 1971; Helias et al., 2013) do, but only employs elementary methods. Our results are applicable for general connectivity matrices, and for the purpose of comparison with simulations we explicitly derive population averaged results. The averages of the dynamics of the linear rate model equations are exact for random network architectures with fixed out-degree, and approximate for fixed in-degree. Still, for non-linear models the linearization for fixed in-degree networks are simpler, because the homogeneous input statistics results in an identical linear response kernel for all cells. Finally we show that the oscillatory properties of networks of integrate-and-fire models (Brunel, 2000; Helias et al., 2013) are model-invariant features of all of the studied dynamics, given inhibition acts with a synaptic delay. We relate the collective oscillations to the pole structure of the cross spectrum, which also determines the power spectra of population signals such as EEG, ECoG, and the LFP.

The presented results provide a further step to understand the shape and to unify the description of correlations in recurrent networks. We hope that our analytical results will be useful to constrain the inverse problem of determining the synaptic connectivity given the correlation structure of neurophysiological activity measurements. Moreover the explicit expressions for covariance functions in the time domain are a necessary prerequisite to understand the evolution of synaptic amplitudes in systems with spike-timing dependent plasticity and extend the existing methods (Burkitt et al., 2007; Gilson et al., 2009, 2010) to networks including inhibitory neurons and synaptic conduction delays.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We gratefully appreciate ongoing technical support by our colleagues in the NEST Initiative, especially Moritz Deger for the implementation of the Hawkes model. Binary and spiking network simulations performed with NEST (www.nest-initiative.org). Partially supported by the Helmholtz Association: HASB and portfolio theme SMHB, the Jülich Aachen Research Alliance (JARA), the Next-Generation Supercomputer Project of MEXT, and EU Grant 269921 (BrainScaleS).

Appendix

Calculation of the population averaged cross covariance in time domain

We obtain the population averaged cross spectrum for the Ornstein-Uhlenbeck process with input noise by inserting the averaged connectivity matrix w = M (14) into (8). The two eigenvalues of M are 0 and L = Kw(1 − γg). Taking these into account, we first rewrite the term

where we introduced f(ω) = (Hd(ω)−1 − L)−1. The corresponding transposed and conjugate complex term follows analogously. Hence we obtain the expression for the cross spectrum (17). The residue of f(ω) at ω = zk(L) is

where in the last step we used the condition for a pole Hd(zk)−1 = eizkd(1 + izkτ) = L (see “Spectrum of the dynamics”). The residue of Hd(ω) at is . Using the residue theorem, we need to sum over all poles within the integration contour to get the expression for Res(C(z), z)eizt for t ≥ 0. Sorting (17) to obtain four matrix prefactors and remainders with different frequency dependence, Φ1(ω) = f(ω)f(−ω), Φ2(ω) = f(ω)f(−ω)Hd(ω), Φ3(ω) = Φ2(−ω), and Φ4(ω) = f(ω)f(−ω)Hd(ω)Hd(−ω), we get (18). C(ω) for output noise (20) is obtained by multiplying the expression for C(ω) for input noise with H−1d(ω)H−1d(−ω) = (1 + ω2τ2). In order to perform the back Fourier transformation one first needs to rewrite the cross spectrum in order to isolate the frequency independent term and the two terms that vanish for either t < d or t > d, as described in “Fourier back transformation,”

where in the last step we used , because M is symmetric, obtaining (21). For each of the first three terms in the last expression the right integration contour needs to be chosen as described in “Fourier back transformation” on the example of the general expression (16).

Implementation of noisy rate models

The dynamics is propagated in time steps of duration Δt (note that in other works we use h as a symbol for the computation step size, which here is used as the symbol for the kernel). The product of the connectivity matrix with the vector of output variables at the end of the previous step i − 1 is the vector I(ti) of inputs at the current step i. The intrinsic time scale of the system is determined by the time constant τ. For sufficiently small time steps Δt « τ these inputs can be assumed to be time independent within one step. So we can use (3) or (4) and analytically convolve the kernel function h assuming the input to be constant over the time interval Δt. This corresponds to the method of exponential integration (Rotter and Diesmann, 1999, see App. C.6) requiring only local knowledge of the connectivity matrix w. Note that this procedure becomes exact for Δt → 0 and for finite Δt is an approximation. The propagation of the initial value rj(ti − 1) until the end of the time interval takes the form rj(ti − 1) e−Δt/τ because h(ti) = h(ti − 1) e−Δt/τ, so we obtain the expression rj(ti) at the end of the step as

| (43) |

where Ij denotes the input to the neuron j. For output noise the output variable of neuron j is yj = rj + xj, with the locally generated additive noise xj and hence the input is Ij(ti) = (w y(ti))j. In the case of input noise the output variable is rj and the additional noise is added to the input variable, Ij(ti) = (w r(ti))j + xj(ti). In both cases xj is implemented as a binary noise: in each time step, xj is independently and randomly chosen to be 1 or −1 with probability 0.5 multiplied with ρ/ to satisfy (2) for discretized time. Here the δ-function is replaced by a “rectangle” function that is constant on the interval of length Δt, vanishes elsewhere, and has unit integral. The factor Δt−1 in the expression for x2 ensures the integral to be unity. So far, the implementation assumes the synaptic delay to be zero. To implement a non-zero synaptic delay d, each object representing a neuron contains an array b of length ld = d/Δt acting as a ring buffer. The input Ij(ti) used to calculate the output rate at step i according to (43) is then taken from position i mod ld of this array and after that replaced by the input presently received from the network, so that the new input will be used only after one delay has passed. This sequence of buffer handling can be represented as

The model is implemented in Python version 2.7 (Python Software Foundation, 2008) using numpy 1.6.1 (Ascher et al., 2001) and scipy 0.9.0 (Jones et al., 2001).

Implementation of binary neurons in a spiking simulator code

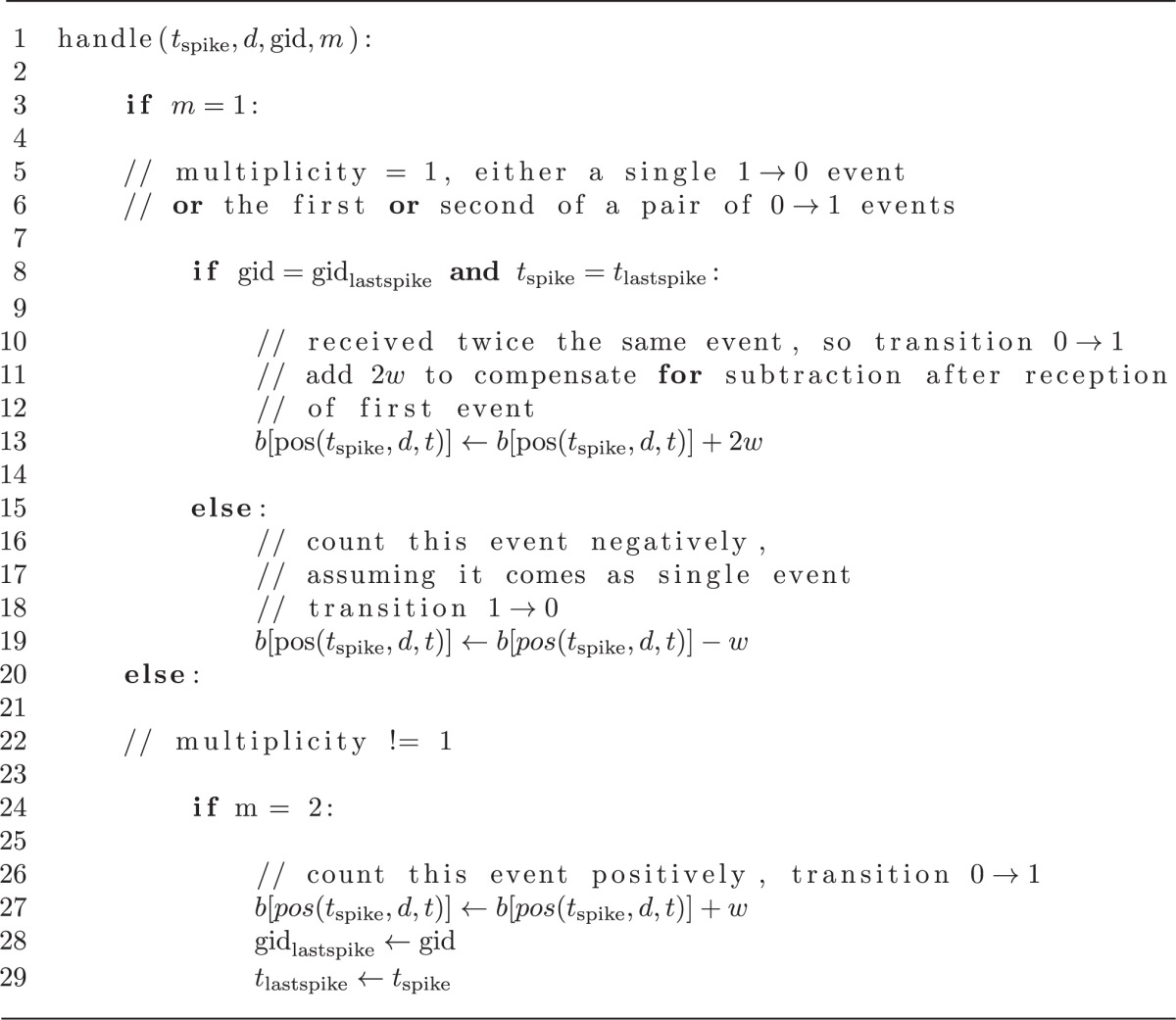

The binary neuron model is implemented in the NEST simulator, version 2.2.1 (Gewaltig and Diesmann, 2007), which allows distributed simulation on parallel machines and handles synaptic delays in the established framework for spiking neurons (Morrison et al., 2005). The name of the model is “ginzburg_neuron”. In NEST information is transmitted in form of point events, which in case of binary neurons are sent if the state of the neuron changes: one spike is sent for a down-transition and two spikes at the same time for an up-transition, so the multiplicity reflects the type of event. The logic to decode the original transitions is implemented in the function handle shown in Alg. 2. If a single spike is received, the synaptic weight w is subtracted from the input buffer at the position determined by the time point of the transition and the synaptic delay. In distributed simulations a single spike with multiplicity 2 sent to another machine is handled on the receiving side as two separate events with multiplicity 1 each. In order to decode this case on the receiving machine we memorize the time (tlast) and origin (global id gidlast of the sending neuron) of the last arrived spike. If both coincide to the spike under consideration, the sending neuron has performed an up transition 0 → 1. We hence add twice the synaptic weight 2w to the input buffer of the target neuron, one that reflects the real change of the system state and another that compensates the subtraction of w after reception of the first spike of a pair. The algorithm relies on the fact that within NEST two spikes that are generated by one neuron at the same time point are delivered sequentially to the target neurons. This is assured, because neurons are updated one by one: The update propagates each neuron by a time step equal to the minimal delay dmin in the network. All spikes generated within one update step are written sequentially into the communication buffers, and finally the buffers are shipped to the other processors (Morrison et al., 2005). Hence a pair of spikes generated by one neuron within a single update step will be delivered consecutively and will not be interspersed by spikes from other neurons with the same time stamp.