Summary

Although toxicology uses many stand-alone tests, a systematic combination of several information sources is very often required. Examples include: when not all possible outcomes of interest (e.g., modes of action), classes of test substances (applicability domains), or severity classes of effect are covered in a single test; when the positive test result is rare (low prevalence leading to excessive false-positive results); when the gold standard test is too costly or uses too many animals, creating a need for prioritization by screening. Similarly, tests are combined when the human predictivity of a single test is not satisfactory or when existing data and evidence from various tests will be integrated. Increasingly, kinetic information also will be integrated to make an in vivo extrapolation from in vitro data.

Integrated Testing Strategies (ITS) offer the solution to these problems. ITS have been discussed for more than a decade, and some attempts have been made in test guidance for regulations. Despite their obvious potential for revamping regulatory toxicology, however, we still have little guidance on the composition, validation, and adaptation of ITS for different purposes. Similarly, Weight of Evidence and Evidence-based Toxicology approaches require different pieces of evidence and test data to be weighed and combined. ITS also represent the logical way of combining pathway-based tests, as suggested in Toxicology for the 21st Century. This paper describes the state of the art of ITS and makes suggestions as to the definition, systematic combination, and quality assurance of ITS.

Keywords: Integrated testing strategies, prioritization, predictivity, quality assurance, Tox-21c

Introduction

Replacing a test on a living organism with a cellular, chemicoanalytical, or computational approach obviously is reductionistic. Sometimes this might work well, e.g., when an extreme pH is a clear indication of corrosivity. In general, however, it is naïve to expect a single system to substitute for all mechanisms, the entire applicability domain (substance classes), and degrees of severity. Still, toxicology has long neglected this when requesting a one-to-one replacement to substitute for the traditional animal test. We might even extend this to say it is similarly naïve to address an entire human health effect with a single animal experiment using inbred, young rodents … The only way to approximate human relevance is to mimic the complexity and responsiveness of the organ situation and to model the respective kinetics, i.e., the target hoped for from the human-on-a-chip approach (Hartung and Zurlo, 2012). Everything else requires making use of several information sources, if not compromising the coverage of the test. Genotoxicity is a nice example, where patches have continuously been added to cover the various mechanisms. However, here the simplest possible strategy, i.e., a battery of tests where every positive result is considered a liability, causes problems. We have seen where the inevitable accumulation of false-positives leads (Kirkland et al., 2005), ultimately undermining the credibility of in vitro approaches.
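The accumulation of false-positives in a battery can be made concrete with a small calculation. The following is a minimal sketch, assuming that the tests err independently of each other (a simplification that real genotoxicity assays will not fully satisfy) and using hypothetical specificities; the function name is illustrative only.

```python
def battery_false_positive_rate(specificities):
    """Chance that a non-toxic substance is flagged by at least one test.

    Assumption: the tests err independently of each other.
    """
    all_negative = 1.0
    for spec in specificities:
        all_negative *= spec  # probability that this test correctly stays negative
    return 1.0 - all_negative


# Hypothetical battery of three tests, each with 80% specificity
rate = battery_false_positive_rate([0.8, 0.8, 0.8])
print(f"False-positive rate of the battery: {rate:.3f}")
```

Under these assumptions, almost half of all non-toxic substances would be flagged by at least one of the three tests, although each single test is wrong for only one in five of them.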

The solution is the “intelligent” or “integrated” use of several information sources in a testing strategy (ITS). There is a lot of confusion around this term, especially regarding how to design, validate, and use ITS.

This article aims to elaborate on these aspects with examples and to outline the prospects of ITS in toxicology. It thereby expands the thoughts elaborated for the introduction to the road-map for animal-free systemic toxicity testing (Basketter et al., 2012). The underlying problems and the approach are not actually unique to toxicology. The most evident similarity is to diagnostic testing strategies in clinical medicine, where several sources of information are used, similarly, for differential diagnosis; we discussed these similarities earlier (Hoffmann and Hartung, 2005).

Consideration 1: The two origins of ITS in safety assessments

When do we need a test and when do we need a testing strategy? We need more than one test, if:

not all possible outcomes of interest (e.g., modes of action) are covered in a single test

not all classes of test substances are covered (applicability domains)

not all severity classes of effect are covered

the positive test result is rare (low prevalence) and the number of false-positive results becomes excessive (Hoffmann and Hartung, 2005)

the gold standard test is too costly or uses too many animals and substances need to be prioritized

the accuracy (human predictivity) is not satisfactory and predictivity can be improved

existing data and evidence from various tests shall be integrated

kinetic information shall be integrated to make an in vivo extrapolation from in vitro data (Basketter et al., 2012)
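The effect of low prevalence on false-positive results follows directly from Bayes' rule. As a minimal sketch, the positive predictive value (the share of positive results that are true positives) can be computed as below; the sensitivity, specificity, and prevalence values are hypothetical and chosen only for illustration.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Share of positive test results that are true positives."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical test with 90% sensitivity and 90% specificity
for prevalence in (0.5, 0.1, 0.01):
    ppv = positive_predictive_value(0.9, 0.9, prevalence)
    print(f"prevalence {prevalence}: PPV = {ppv:.2f}")
```

With these illustrative numbers, the same test that is right for 90% of its positive calls at 50% prevalence is right for only about 8% of them at 1% prevalence, which is why a rare hazard calls for a confirmatory step rather than a single screening test.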

Altogether, it is difficult to imagine a case where we should not apply a testing strategy. It is astonishing how long we have continued to pursue “one test suits all” solutions in toxicology. A restricted usefulness (applicability domain) was stated, but it was only within the discussion on integrated testing of in vitro, in silico, and toxicokinetics (absorption, distribution, metabolism, excretion, i.e., ADME) information that such integration was attempted. Bas Blaauboer and colleagues long ago spearheaded this (DeJongh et al., 1999; Forsby and Blaauboer, 2007; Blaauboer, 2010; Blaauboer and Barratt, 1999). The first ITS were accepted as OECD test guidelines in 2002 for eye and skin irritation (OECD TG 404, 2002a; OECD TG 405, 2002b). A major driving force then was the emerging REACH legislation, which sought to make use of all available information for registration of chemicals (especially existing chemicals) in order to limit costs and animal use. This prompted the call for Intelligent TS (Anon., 2005; Van Leeuwen et al., 2007; Ahlers et al., 2008; Schaafsma et al., 2009; Vonk et al., 2009; Combes and Balls, 2011; Leist et al., 2012; Gabbert and Benighaus, 2012; Rusyn et al., 2012). These two origins differ to some extent, as the REACH-ITS also include in vivo data and are somewhat restricted to the tools prescribed in legislation. This largely excludes the 21st century methodologies (van Vliet, 2011), i.e., omics, high-throughput, and high-content imaging techniques, which are not mentioned in the legislative text. The very narrow interpretation of the legislative text in administrating REACH does not encourage such additional approaches. This represents a tremendous lost opportunity, and some additional flexibility and “learning on the job” would benefit one of the largest investments in consumer safety ever attempted.

Astonishingly, despite these prospects and billions of euros spent for REACH, the literature on ITS for safety assessments is still poor, and little progress toward consensus and guidance has been made. For example, two In Vitro Testing Industrial Platform workshops were summarized stating (De Wever et al., 2012): “As yet, there is great dispute among experts on how to represent ITS for classification, labeling, or risk assessments of chemicals, and whether or not to focus on the whole chemical domain or on a specific application. The absence of accepted Weight of Evidence (WoE) tools allowing for objective judgments was identified as an important issue blocking any significant progress in the area.” Similarly, the ECVAM/EPAA workshop concluded (Kinsner-Ovaskainen et al., 2012): “Despite the fact that some useful insights and preliminary conclusions could be extracted from the dynamic discussions at the workshop, regretfully, true consensus could not be reached on all aspects.”

We earlier commissioned a white paper on ITS (Jaworska and Hoffmann, 2010) in the context of our transatlantic think tank for toxicology (t4) and a 2010 conference on 21st Century Validation Strategies for 21st Century Tools. It similarly concluded: “Although a pressing concern, the topic of ITS has drawn mostly general reviews, broad concepts, and the expression of a clear need for more research on ITS (Hengstler et al., 2006; Worth et al., 2007; Benfenati et al., 2010). Published research in the field remains scarce (Gubbels-van Hal et al., 2005; Hoffmann et al., 2008a; Jaworska et al., 2010a).”

It is worth noting, also, that testing strategies from the pharmaceutical industry do not help much. They try to identify an active compound (the future drug) out of thousands of substances, without regard to what they miss – but this approach is unacceptable in a safety ITS. Pharmacology screening also typically starts with a target, i.e., a mode of action, while toxicological assessments need to be open to various mechanisms, some as yet uncharacterized, until we have a comprehensive list of relevant pathways of toxicity (Hartung and McBride, 2011).

Because they grew out of alternative methods and REACH, ITS discussions are more prominent in Europe (Hartung, 2010d). However, in principle they resonate very strongly with the US approach of toxicity testing in the 21st century (Tox-21c) (Hartung, 2009c). The latter suggests moving regulatory toxicology to mechanisms (the pathways of toxicity, PoT). This means breaking the hazard down into its modes of action and combining them with chemico-physical properties (including QSAR) and PBPK models. This implies, similarly, that different pieces of evidence and tests be strategically combined.

Consideration 2: The need for a definition of ITS

Currently, the best reference for definitions of terminology is provided by OECD guidance document 34 on validation (OECD, 2005). An extract of the most relevant definitions is given in Box 1. Notably, the term (integrated) test strategy is not defined.

Following a series of ECVAM internal meetings, an ECVAM/EPAA workshop was held to address this (Kinsner-Ovaskainen et al., 2009), and it came up with a working definition: “As previously defined within the literature, an ITS is essentially an information-gathering and generating strategy, which in itself does not have to provide means of using the information to address a specific regulatory question. However, it is generally assumed that some decision criteria will be applied to the information obtained, in order to reach a regulatory conclusion. Normally, the totality of information would be used in a weight-of-evidence (WoE) approach.” WoE had been addressed in an earlier ECVAM workshop (Balls et al., 2006): “Weight of evidence (WoE) is a phrase used to describe the type of consideration made in a situation where there is uncertainty and which is used to ascertain whether the evidence or information supporting one side of a cause or argument is greater than that supporting the other side.” It is of critical importance to understand that WoE and ITS are two different concepts although they combine the same types of information! In WoE there is no formal integration, usually no strategy, and often no testing. WoE is much more a “polypragmatic shortcut” to come to a preliminary decision, where there is no or only limited certainty. As proponents of evidence-based toxicology (EBT) (Hoffmann and Hartung, 2006), we have to admit that the term EBT further contributes to this confusion (Hartung, 2009b). However, there is obvious cross-talk between these approaches when, for example, the quality scoring of studies developed for EBT (Schneider et al., 2009) helps to filter their use in WoE and ITS approaches.

The following definition was put forward by the ECVAM/EPAA workshop (Kinsner-Ovaskainen et al., 2009): “In the context of safety assessment, an Integrated Testing Strategy is a methodology which integrates information for toxicological evaluation from more than one source, thus facilitating decision-making. This should be achieved whilst taking into consideration the principles of the Three Rs (reduction, refinement and replacement).” In line with the proposal put forward in the 2007 OECD Workshop on Integrated Approaches to Testing and Assessment, they reiterated, “a good ITS should be structured, transparent, and hypothesis driven” (OECD, 2008).

Jaworska and Hoffmann (2010) defined ITS somewhat differently: “In narrative terms, ITS can be described as combinations of test batteries covering relevant mechanistic steps and organized in a logical, hypothesis-driven decision scheme, which is required to make efficient use of generated data and to gain a comprehensive information basis for making decisions regarding hazard or risk. We approach ITS from a system analysis perspective and understand them as decision support tools that synthesize information in a cumulative manner and that guide testing in such a way that information gain in a testing sequence is maximized. This definition clearly separates ITS from tiered approaches in two ways. First, tiered approaches consider only the information generated in the last step for a decision as, for example, in the current regulated sequential testing strategy for skin irritation (OECD, 2002[a]) or the recently proposed in vitro testing strategy for eye irritation (Scott et al., 2009). Secondly, in tiered testing strategies the sequence of tests is prescribed, albeit loosely, based on average biological relevance and is left to expert judgment. In contrast, our definition enables an integrated and systematic approach to guide testing such that the sequence is not necessarily prescribed ahead of time but is tailored to the chemical-specific situation. Depending on the already available information on a specific chemical the sequence might be adapted and optimized for meeting specific information targets.”

It might be useful to start from scratch with our definitions to avoid some glitches.

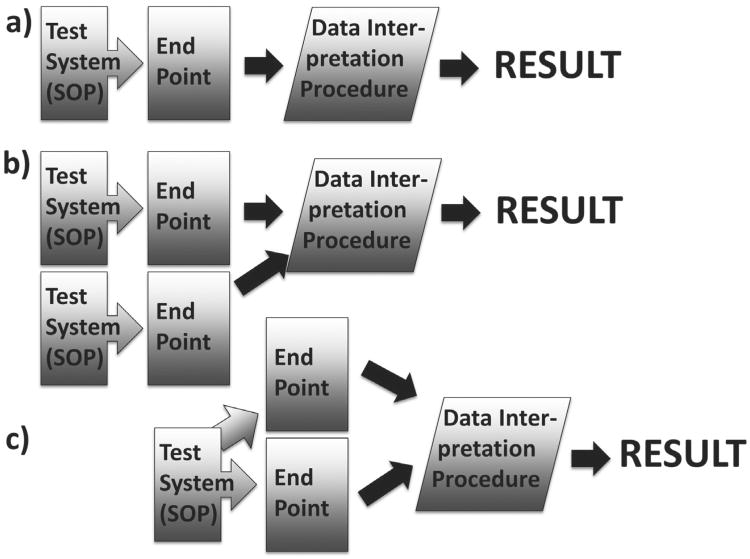

The leading principle should be that a test gives one result, and it does not matter how many endpoints (measurements) the test requires. Figure 1 shows these different scenarios. A test/assay thus consists of a test system (biological in vivo or in vitro model) and a Standard Operation Protocol (SOP) including endpoint(s) to measure, reference substance(s), data interpretation procedure (a way to express the result), information on reproducibility / uncertainty, applicability domain / information on limitations and favorable performance standards. Note that tests can include multiple test systems and/or multiple endpoints as long as they lead to one result.

An integrated test strategy is an algorithm to combine (different) test result(s) and, possibly, non-test information (existing data, in silico extrapolations from existing data or modeling) to give a combined test result. Such strategies often have interim decision points at which further building blocks may be considered.

A battery of tests is a group of tests that complement each other but are not integrated into a strategy. A classical example is the genotoxicity testing battery.

Tiered testing describes the simplest ITS, where a sequence of tests is defined without formal integration of results.

A probabilistic TS describes an ITS, where the different building blocks change the probability for a test result.

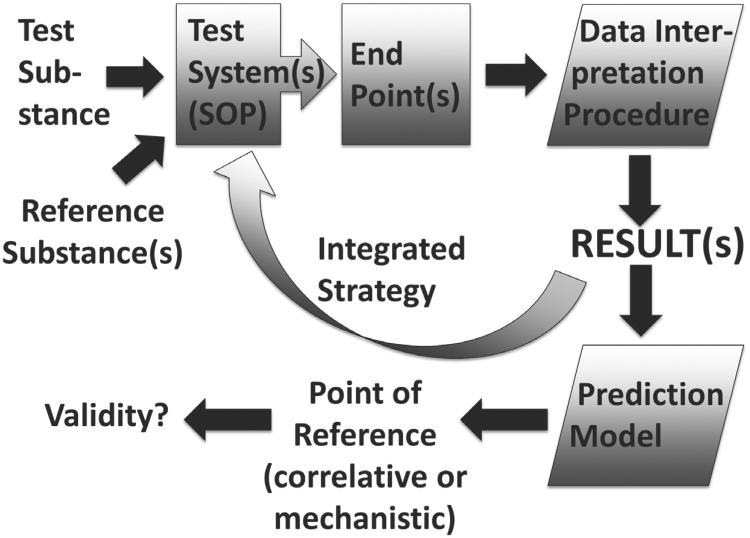

Validation of a test or an ITS requires a prediction model (a way to translate it to the point of reference) and the point of reference itself, which can be correlative on the basis of results, or mechanistic.

Fig. 1. Three prototypic tests.

(a) a simple test with one endpoint, (b) two test systems giving a joint result, and (c) multiple endpoints (including omics and other high-content analysis)

Some of these aspects are shown in Figure 2.

Fig. 2. Components of a test (strategy) and its traditional (correlative) or mechanistic validation.

Consideration 3: Composition of ITS – no GOBSATT!

The ITS in use to date are based on consensus processes often called “weight of evidence” (WoE) approaches. Such a “Good old boys sitting around the table” (GOBSATT) approach is not the method of choice to compose ITS. The complexity of data and the multiplicity of performance aspects to consider (costs, animal use, time, predictivity, etc.) (Nordberg et al., 2008; Gabbert and van Ierland, 2010) call for simulation based on test data. The shortcomings of existing ITS were recently analyzed in detail by Jaworska et al. (2010): “Though both current ITS and WoE approaches are undoubtedly useful tools for systemizing chemical hazard and risk assessment, they lack a consistent methodological basis for making inferences based on existing information, for coupling existing information with new data from different sources, and for analyzing test results within and across testing stages in order to meet target information requirements.” And in more detail in (Jaworska and Hoffmann, 2010): “The use of flow charts as the ITS' underlying structure may lead to inconsistent decisions. There is no guidance on how to conduct consistent and transparent inference about the information target, taking into account all relevant evidence and its interdependence. Moreover, there is no guidance, other than purely expert-driven, regarding the choice of the subsequent tests that would maximize information gain.” Hoffmann et al. (2008a) provided a pioneering example of ITS evaluation focused on skin irritation. They compiled a database of 100 chemicals. A number of strategies, both animal-free and inclusive of animal data, were constructed and subsequently evaluated considering predictive capacities, severity of misclassifications, and testing costs. Note that the different ITS to be compared were “hand-made,” i.e., based on scientific reasoning and intuition, but not on any construction principles.
They correctly conclude: “To promote ITS, further guidance on construction and multi-parameter evaluation need to be developed.” Similarly, the ECVAM/EPAA workshop only stated needs (Kinsner-Ovaskainen et al., 2009): “So far, there is also a lack of scientific knowledge and guidance on how to develop an ITS and, in particular, on how to combine the different building blocks for an efficient and effective decision-making process. Several aspects should be taken into account in this regard, including:

the extent of flexibility in combining the ITS components;

the optimal combination of ITS components (including the minimal number of components and/or combinations that have a desired predictive capacity);

the applicability domain of single components and the whole ITS; and

the efficiency of the ITS (cost, time, technical difficulties)”

Using this “wish list” as guidance, some aspects will be discussed.

Extent of flexibility in combining the ITS components

This is a key dilemma – any validation “sets tests into stone” and “freezes them in time” (Hartung, 2007). An ITS, however, is so much larger than individual tests that there are even more reasons for change (technical advances, limitations of individual ITS components for the given study substance, availability of all tests in a given setting, etc.). What is needed here is a measure of similarity of tests and performance standards. The latter concept was introduced in the modular approach to validation (Hartung et al., 2004) and is now broadly used for the new validations. It defines what criteria a “me-too” development (a term borrowed from the pharmaceutical industry, where a competitor follows the innovative, pioneering work of another company, introducing a compound with the same active principle) must fulfil to be considered equivalent to the original one. The idea is to avoid undertaking another full-blown validation ring trial, which requires enormous resources. There is some difference in interpretation as to whether there still needs to be a multi-laboratory exercise to establish inter-laboratory reproducibility and transferability as well. Note that this requires demonstrating the similarity of tests, for which we have no real guidance. It also implies, however, that any superiority of the new test compared to the originally validated one cannot be shown. For ITS components, in the same way, similarity and performance criteria need to be established to allow exchange for something different without a complete reevaluation of the ITS. This can first be based on the scientific relevance and the PoT covered, as argued earlier (Hartung, 2010b). This means that two assays that cover the same mechanism can substitute for each other. Alternatively, it can be based on correlation of results. Two assays that agree (concordance) to a sufficient degree can be considered similar. We might call these two options “mechanistic similarity” and “correlative similarity.”
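“Correlative similarity” can be illustrated with a simple concordance calculation. The following is a minimal sketch, assuming dichotomous results for the same set of substances; the threshold of 0.9, the function names, and the data are hypothetical, since no accepted criterion for “a sufficient degree” is given here.

```python
def concordance(results_a, results_b):
    """Fraction of substances on which two dichotomous assays agree."""
    if len(results_a) != len(results_b) or not results_a:
        raise ValueError("need two equally long, non-empty result lists")
    agreements = sum(a == b for a, b in zip(results_a, results_b))
    return agreements / len(results_a)


def correlatively_similar(results_a, results_b, threshold=0.9):
    """Hypothetical criterion: concordance at or above an agreed threshold."""
    return concordance(results_a, results_b) >= threshold


# Hypothetical results (True = positive) for the same ten substances
assay_a = [True, True, False, False, True, False, True, False, False, True]
assay_b = [True, True, False, False, True, False, True, False, True, True]

print(concordance(assay_a, assay_b))
print(correlatively_similar(assay_a, assay_b))
```

Raw concordance does not correct for agreement expected by chance, so a chance-corrected statistic might be preferred when one outcome class dominates the reference set.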

The optimal combination of ITS components

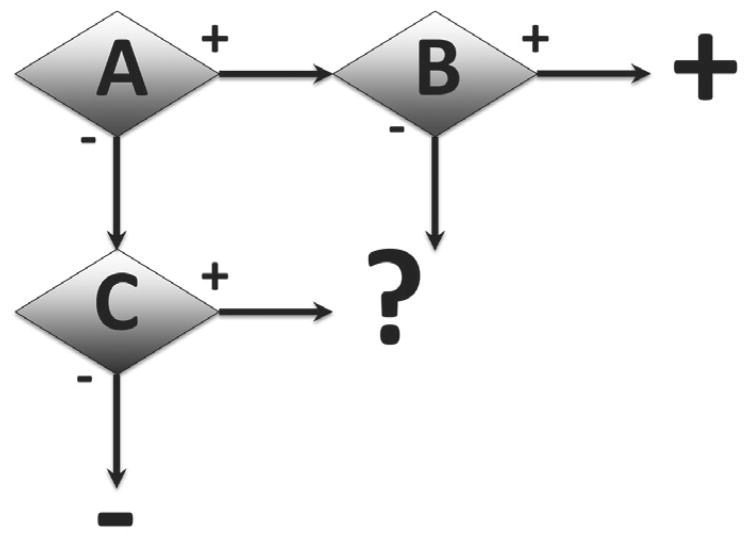

The typical combination of building blocks so far follows a Boolean logic, i.e., the logical combinations are AND, OR, and NOT. Table 1 gives the different examples for combining two tests with dichotomous (plus/minus) outcome with such logic and the consequences for the joint applicability domain and the validation need. Note that in most cases the validation of the building blocks will suffice, but the joint applicability domain will be just the overlap of the two tests' applicability domains. This is a simple application of set theory. Only if the two tests measure the same thing, but for different substances or severity classes, does the logical combination OR result in the combined applicability domain. If the result requires that both tests are positive, e.g., when a screening test and a confirmatory test are combined, it is necessary to validate the overall ITS outcome.

Tab. 1. Test combinations and consequences for applicability domain and validation needs.

| Logic | Example | Joint Applicability Domain | Validation Need |
|---|---|---|---|
| **Boolean** | | | |
| A AND B | Screening plus confirmatory test | Overlap | Total ITS |
| A OR B | Different Mode of Action | Overlap | Building Blocks |
| A OR B | Different Applicability Domain or Severity Grades | Combined | Building Blocks |
| A NOT B | Exclusion of a property (such as cytotoxicity) | Overlap | Total ITS |
| IF A positive: B; IF A negative: C (see Figure 3) | Decision points, here confirmation of result in a second test | Combined overlap A/B and overlap A/C | Total ITS |
| **Fuzzy / Probabilistic** | | | |
| p(A, B), i.e., probability as function of A and B | Combined change of probability, e.g., priority score | Overlap | Building Blocks |
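The Boolean rows of Table 1 can be sketched with ordinary set operations on the applicability domains. This is only an illustration: the class `AssayResult`, the substance classes, and the flag `same_property` are hypothetical names, and the OR combination is simplified in that it does not check which test actually covers a given substance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AssayResult:
    positive: bool
    domain: frozenset  # substance classes for which the test is applicable


def combine_and(a, b):
    """A AND B: both must be positive; usable only in the overlap."""
    return AssayResult(a.positive and b.positive, a.domain & b.domain)


def combine_or(a, b, same_property=False):
    """A OR B: the domains are merged only if both tests measure the same thing."""
    domain = a.domain | b.domain if same_property else a.domain & b.domain
    return AssayResult(a.positive or b.positive, domain)


def combine_not(a, b):
    """A NOT B: A is positive and property B (e.g., cytotoxicity) is excluded."""
    return AssayResult(a.positive and not b.positive, a.domain & b.domain)


screening = AssayResult(True, frozenset({"acids", "bases", "surfactants"}))
confirmatory = AssayResult(False, frozenset({"acids", "bases"}))

both = combine_and(screening, confirmatory)
either = combine_or(screening, confirmatory, same_property=True)
print(both.positive, sorted(both.domain))
print(either.positive, sorted(either.domain))
```

The intersection (`&`) corresponds to the “Overlap” entries and the union (`|`) to the “Combined” entry of the table.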

The principal opportunities in combining tests into the best ITS lie, however, in interim decision points (Figure 3 shows a simple example, where the positive or negative outcome is confirmed). Here, the consequences for the joint applicability domain are more complex and typically only the overall outcome can be validated. The other opportunity is to combine tests not with Boolean logic but with fuzzy/probabilistic logic. This means that the result is not dichotomous (toxic or not) but a probability or score is assigned. We could say that a value between 0 (non-toxic) and 1 (toxic) is assigned. Such combinations typically will only allow use in the overlapping applicability domains. It also implies that only the overall ITS can be validated. The challenge here lies mostly in the point of reference, which normally needs to be graded and not dichotomous as well.

Fig. 3. Illustration of a simple decision tree, where outcomes of test A are confirmed by different second tests B or C.

The advantages of a probabilistic approach were recently summarized by Jaworska and Hoffmann (2010): “Further, probabilistic methods are based on fundamental principles of logic and rationality. In rational reasoning every piece of evidence is consistently valued, assessed, and coherently used in combination with other pieces of evidence. While knowledge- and rule-based systems, as manifested in current testing strategy schemes, typically model the expert's way of reasoning, probabilistic systems describe dependencies between pieces of evidence (towards an information target) within the domain of interest. This ensures the objectivity of the knowledge representation. Probabilistic methods allow for consistent reasoning when handling conflicting data, incomplete evidence, and heterogeneous pieces of evidence.”
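How pieces of evidence can change a probability may be illustrated by sequential Bayesian updating. This is a deliberately simple sketch, not the approach of Jaworska and Hoffmann: it assumes that the tests are conditionally independent given the true hazard status, whereas the passage quoted above stresses that dependencies between pieces of evidence should be modeled. All sensitivities, specificities, and the prior are hypothetical.

```python
def update_probability(prior, sensitivity, specificity, positive):
    """Bayes' rule for one dichotomous test result."""
    if positive:
        like_toxic, like_nontoxic = sensitivity, 1.0 - specificity
    else:
        like_toxic, like_nontoxic = 1.0 - sensitivity, specificity
    numerator = like_toxic * prior
    return numerator / (numerator + like_nontoxic * (1.0 - prior))


def probabilistic_its(prior, evidence):
    """Update the probability of toxicity with each (sens, spec, result) item.

    Assumption: test results are conditionally independent.
    """
    probability = prior
    for sensitivity, specificity, positive in evidence:
        probability = update_probability(probability, sensitivity, specificity, positive)
    return probability


prior = 0.1  # hypothetical prevalence of the hazard
concordant = probabilistic_its(prior, [(0.9, 0.8, True), (0.7, 0.9, True)])
conflicting = probabilistic_its(prior, [(0.9, 0.8, True), (0.7, 0.9, False)])
print(f"two positives: {concordant:.2f}")
print(f"positive then negative: {conflicting:.2f}")
```

Conflicting results do not break the calculation; they simply pull the probability in opposite directions, which illustrates the consistent handling of conflicting data mentioned in the quotation.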

The applicability domain of single components and the whole ITS

Simple logic shows, as discussed above, that in most instances an ITS can be applied only where all building blocks used for a substance allow it. The picture changes only if the combination serves exactly the purpose of expanding the applicability domain (by combining two tests with OR). This implies, however, that essentially the same thing is measured (i.e., similarity of tests); if tests differ in applicability domain and what they measure, a hierarchy needs to be established first. This is one of the key arguments for flexibility of ITS, as we need to exchange building blocks for others to meet the applicability domain for a given substance.

The efficiency of the ITS

Typically, efficiency refers to resources such as cost and labor. Animal use and suffering, however, lie outside its scope. How to value the replacement of an animal test is a societal decision. In the EU legislation, the term “reasonably available” is used to mandate the use of an alternative (Hartung, 2010a). This leaves room for interpretation, but there certainly are limits: How much more costly can an alternative method be to be reasonably available? The cost/benefit calculation also needs to include societal acceptability. However, this is missing the point: In the end, the concept of efficiency centers on predicting human health and environmental effects. What are the costs of a test versus the risk of a scandal? If we only attempt to be as good as the animal test, however, this argument has no leverage. Thus we need to advance to human relevance if we really want impact. This is difficult on the level of correlation, because we typically do not have the human data for a statistically sufficient number of substances. More and more, however, we do know the mechanisms relevant to human health effects. Thus, the efficiency with which a test system covers relevant mechanisms for human health and environmental effects is becoming increasingly important. I have called this “mechanistic validation” (Hartung, 2007). This requires that we establish causality for a given mechanism to create a health or environmental effect. The classical frameworks of the Koch-Dale postulates (Dale, 1929) and Bradford-Hill criteria (Hill, 1965) for assessing evidence of causation come to mind first. Dale translated the Koch postulates that need to be fulfilled to prove a pathogen to be the cause of a certain disease to ones that prove a mediator (at the time histamine and neurotransmitters) causes a physiological effect. We recently applied this to systematically evaluate the nature of the Gram-positive bacterial endotoxin (Rockel and Hartung, 2012).
Similarly, we can translate this to a PoT being responsible for the manifestation of an adverse cellular outcome of substance X:

Evidence for presence of the PoT in affected cells

Perturbation/activation of the PoT leads to or amplifies the adverse outcome

Hindering PoT perturbation/activation diminishes manifestation of the adverse outcome

Blocking the PoT once perturbed/activated diminishes manifestation of the adverse outcome

Please note that the current debate as to whether a PoT represents a chemico-biological interaction impacting on the biological system or the perturbed normal physiology is reflected in using both terminologies.

Similarly, the Bradford-Hill criteria can be applied:

Strength: the stronger an association between cause and effect the more likely a causal interpretation, but a small association does not mean that there is not a causal effect.

Consistency: Consistent findings of different persons in different places with different samples increase the causal role of a factor and its effect.

Specificity: The more specific an association is between factor and effect, the bigger the probability of a causal relationship.

Temporality: The effect has to occur after the cause.

Biological gradient: Greater exposure should lead to greater incidence of the effect, with the exception that it can also be inverse, meaning greater exposure leads to lower incidence of the effect.

Plausibility: A possible mechanism between factor and effect increases the causal relationship, with the limitation that knowledge of the mechanism is limited by best available current knowledge.

Coherence: A coherence between epidemiological and laboratory findings leads to an increase in the likelihood of this effect. However, the lack of laboratory evidence cannot nullify the epidemiological effect on the associations.

Experiment: Similar factors that lead to similar effects increase the causal relationship of factor and effect.

Most recently, a new approach to causation was proposed, originating from ecological modeling (Sugihara et al., 2012; Marshall, 2012). Whether this offers an avenue to systematically test causality in large datasets from omics and/or high-throughput testing needs to be explored. It might represent an alternative to choosing meaningful biomarkers (Blaauboer et al., 2012), being always limited to the current state of knowledge.

As a more pragmatic approach, De Wever et al. (2012) suggested key elements of an ITS:

“(1) Exposure modelling to achieve fast prioritisation of chemicals for testing, as well as the tests which are most relevant for the purpose. Physiologically based pharmacokinetic modelling (PBPK) should be employed to determine internal doses in blood and tissue concentrations of chemicals and metabolites that result from the administered doses. Normally, in such PBPK models, default values are used. However, the inclusion of values or results form in vitro data on metabolism or exposure may contribute to a more robust outcome of such modelling systems.

(2) Data gathering, sharing and read-across for testing a class of chemicals expected to have a similar toxicity profile as the class of chemicals providing the data. In vitro results can be used to demonstrate differences or similarities in potency across a category or to investigate differences or similarities in bio-availability across a category (e.g. data from skin penetration or intestinal uptake).

(3) A battery of tests to collect a broad spectrum of data focussing on different mechanisms and mode of actions. For instance changes in gene expression, signalling pathway alterations could be used to predict toxic events which are meaningful for the compound under investigation.

(4) Applicability of the individual tests and the ITS itself has to be assured. The acceptance of a new method depends on whether it can be easily transferred from the developer to other labs, whether it requires sophisticated equipment and models, or if intellectual property issues and the costs involved are important. In addition, an accurate description of the compounds that can and cannot be tested is essential in this context.

(5) Flexibility allowing for adjustment of the ITS to the target molecule, exposure regime or application.

(6) Human-specific methods should be prioritised whenever possible to avoid species differences and to eliminate ‘low dose’ extrapolation. Thus, the in vitro methods of choice are based upon human tissues, human tissue slices or human primary cells and cell lines for in vitro testing. If in vivo studies be unavoidable, transgenic animals should be the preferred choice if available. If not, comparative genomics (animal versus human) and computational models of kinetics and dynamics in animals and humans may help to overcome species differences.”

This “shopping list” extends ITS from hazard identification to exposure considerations and the inclusion of existing data beyond de novo testing (including some quite questionable approaches of read-across and forming of chemical classes, for which no guidance or quality assurance is yet available). It similarly calls for flexibility, a key difference from the current guidance documents from ECHA or OECD. Compared to REACH, it calls for human predictivity and mode-of-action information in the sense of Toxicity Testing for the 21st Century. Similarly, an earlier report, also based on an IVTIP symposium to which the author contributed, made further recommendations relating to a concept based on pathways of toxicity (PoT) (Berg et al., 2011): “When selecting the battery of in vitro and in silico methods addressing key steps in the relevant biological pathways (the building blocks of the ITS) it is important to employ standardized and internationally accepted tests. Each block should be producing data that are reliable, robust and relevant (the alternative 3R elements) for assessing the specific aspect (e.g. biological pathway) it is supposed to address. If they comply with these elements they can be used in an ITS.”

Hoffmann et al. (2008a) added an important consideration: “Furthermore, the study underlined the need for databases of chemicals with testing information to facilitate the construction of practical testing strategies. Such databases must comprise a good spread of chemicals and test data in order that the applicability of approaches may be effectively evaluated. Therefore, the (non-) availability of data is a caveat at the start of any ITS construction. Whilst in silico and in vitro data may be readily generated, in vivo data of sufficient quality are often difficult to obtain.” This brings us back to both the need for data-sharing (Basketter et al., 2012) and the construction of a point of reference for validation exercises (Hoffmann et al., 2008b).

The most comprehensive framework for ITS composition so far was produced by Jaworska and Hoffmann as a t4-commissioned white paper (Jaworska and Hoffmann, 2010), see also (Jaworska et al., 2010):

“ITS should be:

a) Transparent and consistent

As a new and complex development, key to ITS, as to any methodology, is the property that they are comprehensible to the maximum extent possible. In addition to ensuring credibility and acceptance, this may ultimately attract the interest needed to gather the necessary momentum required for their development. The only way to achieve this is a fundamental transparency.

Consistency is of similar importance. While difficult to achieve for weight of evidence approaches, a well-defined and transparent ITS can and should, when fed with the same, potentially even conflicting and/or incomplete information, always (re-)produce the same results, irrespective of who, when, where, and how it is applied. In case of inconsistent results, reasons should be identified and used to further optimize the ITS consistency.

In particular, transparency and consistency are of utmost importance in the handling of variability and uncertainty. While transparency could be achieved qualitatively, e.g., by appropriate documentation of how variability and uncertainty were considered, consistency in this regard may only be achievable when handled quantitatively.

b) Rational

Rationality of ITS is essential to ensure that information is fully exploited and used in an optimized way. Furthermore, generation of new information, usually by testing, needs to be rational in the sense that it is focused on providing the most informative evidence in an efficient way.

c) Hypothesis-driven

ITS should be driven by a hypothesis, which will usually be closely linked to the information target of the ITS, a concept detailed below. In this way the efficiency of an ITS can be ensured, as a hypothesis-driven approach offers the flexibility to adjust the hypothesis whenever new information is obtained or generated.

… Having defined and described the framework of ITS, we propose to fill it with the following five elements:

Information target identification;

Systematic exploration of knowledge;

Choice of relevant inputs;

Methodology to evidence synthesis;

Methodology to guide testing.”

The reader is referred to the original article (Jaworska and Hoffmann, 2010) and its implementation for skin sensitization (Jaworska et al., 2011).

Consideration 4: Guidance from testing strategies in clinical diagnostics

We earlier stressed the principal similarities between a diagnostic and a toxicological test strategy (Hoffmann and Hartung, 2005). In both cases, different sources of information have to be combined to come to an overall result. Vecchio pointed out as early as 1966 the problem of single tests in unselected populations (Vecchio, 1966) leading to unacceptable false-positive rates. Systematic reviews of an evidence-based toxicology (EBT) approach (Hoffmann and Hartung, 2006; Hartung, 2009b) and meta-analysis could serve the evaluation and quality assurance of toxicological tests. The frameworks for evaluation of clinical diagnostic tests are well developed (Deeks, 2001; Devillé et al., 2002; Leeflang et al., 2008) and led to the Cochrane Handbook for Diagnostic Test Accuracy Reviews (Anon., 2011). Devillé et al. (2002) give very concise guidance on how to evaluate diagnostic methods. This is closely linked to efforts to improve reporting on diagnostic tests; a set of minimal reporting standards for diagnostic research has been proposed: the Standards for Reporting of Diagnostic Accuracy statement (STARD). We argued earlier that this represents an interesting approach to complement or substitute for traditional method validation (Hartung, 2010b). Deeks (2001) summarizes the experience as follows [with translation to toxicology inserted in brackets]: “Systematic reviews of studies of diagnostic [hazard assessment] accuracy differ from other systematic reviews in the assessment of study quality and the statistical methods used to combine results. Important aspects of study quality include the selection of a clinically relevant cohort [relevant test set of substances], the consistent use of a single good reference standard [reference data], and the blinding of results of experimental and reference tests. 
The choice of statistical method for pooling results depends on the summary statistic and sources of heterogeneity, notably variation in diagnostic thresholds [thresholds of adversity]. Sensitivities, specificities, and likelihood ratios may be combined directly if study results are reasonably homogeneous. When a threshold effect exists, study results may be best summarised as a summary receiver operating characteristic curve, which is difficult to interpret and apply to practice.”
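The prevalence problem raised by Vecchio can be made concrete with a short calculation. The following is a minimal sketch, not taken from any of the cited frameworks: the function name and the 90%/90% test characteristics are hypothetical, chosen only to show how the share of true positives among all positive results collapses when the hazard is rare.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Probability that a positive result is a true positive (Bayes' rule)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical test with 90% sensitivity and 90% specificity,
# applied to a balanced test set and to a low-prevalence population.
for prevalence in (0.5, 0.05):
    ppv = positive_predictive_value(0.9, 0.9, prevalence)
    print(f"prevalence={prevalence:.2f}  PPV={ppv:.2f}")
```

With these assumed numbers, the same test that is right in nine of ten positive calls on a balanced set is right in only about one of three when 5% of substances carry the hazard.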

Interestingly, Schünemann et al. (2008) developed GRADE for grading quality of evidence and strength of recommendations for diagnostic tests and strategies. This framework uses “patient-important outcomes” as measures, in addition to test accuracy. A less invasive test can be better for a patient even if it does not give the same certainty. Similarly, we might frame our choices by aspects such as throughput, costs, or animal use.

Consideration 5: The many faces of (I)TS for safety assessments

As defined earlier, any systematic combination of different (test) results represents a testing strategy. It does not really matter if these results already exist, are estimated from structures or related substances, measured by chemicophysical methods, or stem from testing in a biological system or from human observations and studies. Jaworska et al. (2010) and Basketter et al. (2012) list many of the more recently proposed ITS. One of the authors (THA) had the privilege to coordinate from the side of the European Commission the ITS development within the guidance for REACH implementation for industry, which formed the basis for current ECHA guidance. Classical examples in toxicology, some of them commonly used without the label ITS, are:

Test battery of genotoxicity assays

Several assays (3-6) depending on the field of use (Hartung, 2008) are carried out and, typically, any positive result is taken as an alert. They often are combined with further mutagenicity testing in vivo (Hartung, 2010c). The latter is necessary to reduce the tremendous rate of false-positive classifications of the battery, as discussed earlier (Basketter et al., 2012). Interestingly, Aldenberg and Jaworska (2010) applied a Bayesian network to the dataset assembled by Kirkland et al. (2005), showing the potential of a probabilistic network to analyze such datasets.
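Why an “any positive counts” battery accumulates false positives can be sketched in a few lines. This is an illustration under a strong assumption, namely that the assays err independently of each other, which real genotoxicity assays do not; the function name and the 70%/80% figures are hypothetical and do not describe any actual assay.

```python
from math import prod


def battery_any_positive(sensitivities, specificities):
    """Sensitivity and specificity of a battery in which any single
    positive result is an alert, assuming independent tests."""
    # A non-toxic substance passes only if every test is negative.
    specificity = prod(specificities)
    # A toxic substance is missed only if every test misses it.
    sensitivity = 1.0 - prod(1.0 - s for s in sensitivities)
    return sensitivity, specificity


# Three hypothetical assays, each 70% sensitive and 80% specific.
sens, spec = battery_any_positive([0.7] * 3, [0.8] * 3)
print(f"battery sensitivity={sens:.3f}, specificity={spec:.3f}")
```

Under these assumptions the battery gains sensitivity but flags roughly half of all non-toxic substances, which is the pattern that makes a follow-up step necessary.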

ITS for eye and skin irritation

As already mentioned, these were the first areas to introduce internationally accepted though relatively simple ITS, e.g., suggesting a pH test before progressing to corrosivity testing. The rich data available from six international validation studies, eight retrospective assessments, and three recently completed validation studies of new tests (Adler et al., 2011; Zuang et al., 2008) make it an ideal test case for ITS development. For ocular toxicity, the OECD TG 405 in 2002 provided an ITS approach for eye irritation and corrosion (OECD 2002a). In spite of this TG, the Office of Pesticide Programs (OPP) of the US EPA requested the development of an in vitro eye irritation strategy to register anti-microbial cleaning products. The Institute for In Vitro Sciences, in collaboration with industry partners, developed such an ITS of three in vitro approaches, which was then accepted by regulators (De Wever et al., 2012). ITS development has advanced greatly as a result of this test case (McNamee et al., 2009; Scott et al., 2009).

For skin irritation, we already referred to the work by Hoffmann et al. (2008a), which was based on an evaluation of the prevalence of this hazard among new chemicals (Hoffmann et al., 2005). The study showed the potential of simulations to guide ITS construction.

Embryonic Stem Cell test (EST) – an ITS?

The EST (Spielmann et al., 2006; Marx-Stoelting et al., 2009; Seiler and Spielmann, 2011) is an interesting test case for our definition of an ITS. It consists of two test systems (mouse embryonic stem cells and 3T3 fibroblasts) and two endpoints (cell differentiation into beating cardiomyocytes and cytotoxicity in both cell systems). The result (embryotoxicity), however, is only deduced from all this information. According to the suggested definition of tests and ITS, therefore, this represents a test and not an ITS. Note, however, that the EST formed a key element of the ITS developed at the end of the Integrated Project ReProTect (Hareng et al., 2005); a feasibility study showed the tremendous potential of this approach (Schenk et al., 2010).

Skin sensitization

This area has been subject to intense work over the last decade, which resulted in about 20 test systems. As outlined in the roadmap process (Basketter et al., 2012), the area now requires the creation of an ITS. It seems that only the gridlock of the political decision process on the 2013 deadline, which includes skin sensitization as an endpoint, hinders the finalization of this important work. Since, at the same time, this represents a critical endpoint for REACH (notably all chemicals under REACH currently require a local lymph node assay for skin sensitization), such delays are hardly acceptable. It is very encouraging that BASF has pushed the area by already submitting their ITS (Mehling et al., 2012) for ECVAM evaluation. Pioneering work to develop a Bayesian ITS for this hazard was referred to earlier (Jaworska et al., 2011).

In silico ITS

There also are attempts to combine only various in silico (QSAR) approaches. We have discussed some of the limitations of the in silico approaches in isolation earlier (Hartung and Hoffmann, 2009). Since they are referred to in REACH as “non-testing methods” they might actually be called “Integrated Non-Testing Strategies” (INTS). An example for bioaccumulation, already proposed to suit ITS (De Wolf et al., 2007; Ahlers et al., 2008), was reported recently (Fernández et al., 2012), showing improved prediction by combining several QSARs.

Consideration 6: Validation of ITS

Concepts for the validation of ITS are only now emerging. The ECVAM/EPAA workshop (Kinsner-Ovaskainen et al., 2009) noted only: “There is a need to further discuss and to develop the ITS validation principles. A balance in the requirements for validation of the individual ITS components versus the requirements for the validation of a whole ITS should be considered.” Later in the text, the only statement made was: “It was concluded that a formal validation should not be required, unless the strategy could serve as full replacement of an in vivo study used for regulatory purposes.” The workshop stated that for screening, hazard classification & labeling, and risk assessment neither a formal validation of the ITS components nor the entire ITS is required. We would respectfully disagree, as validation certainly is desirable for other uses, but it should be tailored to the use scenario and the available resources. The follow-up workshop (Kinsner-Ovaskainen et al., 2012) did not go much further with regard to recommendations for validation: “Firstly, it was agreed that the validation of a partial replacement test method (for application as part of a testing strategy) should be differentiated from the validation of an in vitro test method for application as a stand-alone replacement. It was also agreed that any partial replacement test method should not be any less robust, reliable or mechanistically relevant than standalone replacement methods. However, an evaluation of predictive capacity (as defined by its accuracy when predicting the toxicological effects observed in vivo) of each of these test methods would not necessarily be as important when placed in a testing strategy, as long as the predictive capacity of the whole testing strategy could be demonstrated. This is especially the case for test methods for which the relevant prediction relates to the impact of the tested chemical on the biological pathway of interest (i.e. biological relevance). 
The extent to which (or indeed how) this biological relevance of test methods could, and should, be validated, if reference data (a ‘gold standard’) were not available, remained unclear.

Consequently, a recommendation of the workshop was for ECVAM to consider how the current modular approach to validation could be pragmatically adapted for application to test methods, which are only used in the context of a testing strategy, with a view to making them acceptable for regulatory purposes.

Secondly, it was agreed that ITS allowing for flexible and ad hoc approaches cannot be validated, whereas the validation of clearly defined ITS would be feasible. However, even then, current formal validation procedures might not be applicable, due to practical limitations (including the number of chemicals needed, cost, time, etc).

Thirdly, concerning the added value of a formal validation of testing strategies, the views of the group members differed strongly, and a variety of perspectives were discussed, clearly indicating the need for further informed debate. Consequently, the workshop recommended the use of EPAA as a forum for industry to share case studies demonstrating where, and how, in vitro and/or integrated testing strategies have been successfully applied for safety decision-making purposes. Based on these case studies, a pragmatic way to evaluate the suitability of partial replacement test methods could be discussed, with a view to establishing conditions for regulatory acceptance and to reflect on the cost/benefit of formal validation, i.e. the confirmation of scientific validity of a strategy by a validation body and in line with generally accepted validation principles, as provided in OECD Guidance Document 34 (OECD, 2005).

Finally, the group agreed that test method developers should be encouraged to develop and submit to ECVAM, not only tests designed as full replacements of animal methods, but also partial replacements in the context of a testing strategy.”

Going somewhat further, De Wever et al. (2012) noted: “In some cases, the assessment of predictive capacity of a single building block may not be as important, as long as the predictive capacity of the whole testing strategy is demonstrated. However, … the predictive capacity of each single element of an ITS and that of the ITS as a whole needs to be evaluated.”

Berg et al. go even further, challenging the validation need and suggesting a more hands-on approach to gain experience (Berg et al., 2011): “Does it make sense to validate a strategy that builds upon tests for hazard identification which change over time, but is to be used for risk assessment? One needs to incorporate new thinking into risk assessment. Regulators are receptive to new technologies but concrete data are needed to support their use. Data documentation should be comprehensive, traceable and make it possible for other investigators to retrieve information as well as reliably repeat the studies in question regardless of whether the original work was performed to GLP standards.”

What is the problem? If we follow the traditional approach of correlating results, we need good coverage of each branch of the ITS with suitable reference substances to establish correct classification. Even for these very simple stand-alone tests, however, we are often limited by the low number of available well-characterized reference compounds and how much testing we can afford. However, such an approach would be valid only for static ITS anyway, and it would lose all the flexibility of exchanging building blocks. The opportunity lies in the earlier suggested “mechanistic validation.” If we can agree that a certain building block covers a certain relevant mechanism, we might relax our validation requirements and also accept as equivalent another test covering the same mechanism. This does not blunt the need for reproducibility assessments, but a few pertinent toxicants relevant to humans should suffice to show that we at least identify the liabilities of the past. The second way forward is to stop making any test a “game-changer”: If we accept that each and every test only changes probabilities of hazard, we can relax and fine-tune the weight added with each piece of evidence “on the job.” It appears that such probabilistic hazard assessment also should, ideally, be compatible with probabilistic PBPK modeling and probabilistic exposure modeling (van der Voet and Slob, 2007; Hartung et al., 2012). This is the tremendous opportunity of probabilistic hazard and risk assessment (Thompson and Graham, 1996; Hartung et al., 2012).
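The idea that each test only shifts the probability of hazard can be written down as a sequential Bayesian update on the odds scale. The sketch below is one simple way to do this, assuming the tests are conditionally independent given the true hazard status and that each test's sensitivity and specificity lie strictly between 0 and 1; the function names and all numbers are hypothetical.

```python
def update_probability(prior, sensitivity, specificity, positive):
    """Update P(hazard) after one test result using its likelihood ratio."""
    if positive:
        likelihood_ratio = sensitivity / (1.0 - specificity)
    else:
        likelihood_ratio = (1.0 - sensitivity) / specificity
    posterior_odds = prior / (1.0 - prior) * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


def combine_evidence(prior, results):
    """Apply a sequence of (sensitivity, specificity, positive) results.
    Assumes conditional independence between the tests."""
    probability = prior
    for sensitivity, specificity, positive in results:
        probability = update_probability(
            probability, sensitivity, specificity, positive
        )
    return probability


# Hypothetical case: 20% prior (e.g., from prevalence), one positive
# result from a strong test, then one negative from a weaker test.
evidence = [(0.8, 0.9, True), (0.7, 0.8, False)]
print(f"P(hazard) = {combine_evidence(0.2, evidence):.3f}")
```

In this reading no single result decides the classification; exchanging a building block only requires the accuracy estimates for the replacement test, which is where the flexibility discussed above would come from.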

Consideration 7: Challenges ahead

Regulatory acceptance

A key recommendation from the ECVAM/EPAA workshop (Kinsner-Ovaskainen et al., 2009) was: “It is necessary to initiate, as early as possible, a dialogue with regulators and to include them in the development of the principles for the construction and validation of ITS.” An earlier OECD workshop in 2008 (OECD, 2008) made some first steps and posed some of the most challenging questions addressing:

how these tools and methods can be used in an integrated approach to fulfil the regulatory endpoint, independent of current legislative requirements;

how the results gathered using these tools and methods can be transparently documented; and

how the degree of confidence in using them can be communicated throughout the decision-making process.

With impressive crowd-sourcing of about 60 nominated experts and three case studies, a number of conclusions were reached:

“There is limited acceptability for use of structural alerts to identify effects. Acceptability can be improved by confirming the mode of action (e.g., in vitro testing, in vivo information from an analogue or category).

There is a higher acceptability for positive (Q)SAR results compared to negative (Q)SAR results (except for aquatic toxicity).

The communication on how the decision to accept or reject a (Q)SAR result can be based on the applicability domain of a (Q)SAR model and/or the lack of transparency of the (Q)SAR model.

The acceptability of a (Q)SAR result can be improved by confirming the mechanism/mode of action of a chemical and using a (Q)SAR model applicable for that specific mechanism/ mode of action.

Read-across from analogues can be used for priority setting, classification & labeling, and risk assessment.

The combination of analogue information and (Q)SAR results for both target chemical and analogue can be used for classification & labeling and risk assessment for acute aquatic toxicity if the target chemical and the analogue share the same mode of action and if the target chemical and analogue are in the applicability domain of the QSAR.

Confidence in read-across from a single analogue improves if it can be demonstrated that the analogue is likely to be more toxic than the target chemical or if it can be demonstrated that the target chemical and the analogue have similar metabolization pathways.

Confidence in read-across improves if experimental data is available on structural analogues “bracketing” the target substance. The confidence is increased with an increased number of “good” analogues that provide concordant data.

Lower quality data on a target chemical can be used for classification & labeling and risk assessment if it confirms an overall trend over analogues and target.

Confidence is reduced in cases where robust study summaries for analogues are incomplete or inadequate.

It is difficult to judge analogues with missing functional groups compared to the target; good analogues have no functional group compared to the target and when choosing analogues, other information on similarity than functional groups is requested.”

Taken together, these conclusions address more a WoE approach and the use of non-testing information than actual ITS. They still present important information on the comfort zone of regulators and how to handle such information for inclusion into ITS. Note that the questions of documentation and expressing confidence were not tackled.

Flexibility by determining the Most Valuable (next) Test

A key problem is to break out of the rigid test guideline principles of the past. ITS must not be forced into a scheme with a yearlong debate of expert consensus and committees. Too often, technological changes to components, difficulties with availability and applicability of building blocks, and case-by-case adaptations for the given test sample will be necessary. For example, the integration of existing data, obviously at the beginning of an ITS, already creates a very different starting point. Chemico-physical and structural properties (including read-across or chemical category assignments) and prevalence also will change the probability of risk even before the first tests are applied. In order to maintain the desired flexibility in applying an ITS, the most valuable test (MVT) to follow needs to be determined at each moment. Such an approach should have the following features:

Assess, finally, the probability of toxicity from the different test results.

Determine the most valuable next test given previous test results and other information.

Have a measure of model stability (e.g., confidence intervals) and robustness.

Assessing the probability of toxicity for given tests can be done by machine learning tools. Generative models work best for providing the values needed to find a most valuable test given prior tests. One simple combined approach would predict the probability of toxicity using a discriminative model (e.g., Random Forests (Breiman, 2001)) and the test probabilities via a generative model (e.g., Naive Bayes). A classifier for determining risk of chemical toxicity must have the following traits:

Outputs: unbiased and consistent probability estimates for toxicity (e.g., by cross-validation).

Outputs: probability estimates even when missing certain results (both Random Forests and Naive Bayes can handle missing values).

Reliable and stable results based on cross-validation measures.

The MVT identification based on previous tests is not a direct consequence of building a toxicity probability estimator. To find MVTs we need a generative model capable of determining test probabilities. One simple and effective way to determine the MVT is via the same method that decision trees use, i.e., an iterative process of determining which test gives the most “information” on the endpoint. Information gain can be calculated given a generative model. To determine the test that gives the most information, we can find the test that yields the greatest reduction in Shannon entropy. This is basically a measure that quantifies information as a function of the probability of different values for a test and the impact those values have on the endpoint category (toxic vs. non-toxic). The mathematical formula is:
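In the standard form of expected information gain, which is assumed here because the text fixes only the symbols T, p(T_i), and n (the symbols IG, H, and Tox are notation introduced for this sketch):

$$
IG(T) = H(\mathrm{Tox}) - \sum_{i=1}^{n} p(T_i)\, H(\mathrm{Tox} \mid T_i),
\qquad
H(X) = -\sum_{x} p(x) \log_2 p(x)
$$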

Where T is the test in question and p(Ti) signifies the probability of a test taking on one of its values (enumerated by i). To determine the most valuable test we need not only the toxicity classifier but also the probability estimates for every test as a function of all other tests. To determine these transition probabilities we need to discretize every test into the n buckets shown in the above equation.

We can expect that users applying this model would want to determine probabilities of toxicity for their test item within some risk threshold in the fewest number of test steps or minimizing the costs. When we start testing for toxicity we may want to check the current level of risk before deciding on more testing. For example, we might decide to stop testing if a test item has less than 10% chance of being toxic or a greater than 90% chance. Finding MVTs from a generative model has an advantage over directly using decision trees. Unfortunately, decision trees cannot handle sparse data effectively. The amount of data needed to determine n tests increases exponentially with the number of tests. By calculating MVTs on top of a generative model we can leverage a simple calculation from a complex model that is not as heavily constrained by data size.
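The selection of the next test and the stopping rule can be sketched together. The code below is a minimal illustration, not the authors' implementation: it assumes a Naive Bayes style model in which each discretized test outcome has a known probability given toxic and given non-toxic (a simplification of the “every test as a function of all other tests” requirement), and all names, assays, and likelihood values are hypothetical.

```python
from math import log2


def entropy(p):
    """Shannon entropy in bits of a binary endpoint with P(toxic) = p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * log2(p) + (1.0 - p) * log2(1.0 - p))


def posterior(p_toxic, likelihoods, outcome):
    """Bayes update; likelihoods[outcome] is the pair
    (P(outcome | toxic), P(outcome | non-toxic))."""
    l_tox, l_non = likelihoods[outcome]
    numerator = l_tox * p_toxic
    return numerator / (numerator + l_non * (1.0 - p_toxic))


def information_gain(p_toxic, likelihoods):
    """Expected entropy reduction from running one discretized test."""
    expected = 0.0
    for outcome, (l_tox, l_non) in likelihoods.items():
        p_outcome = l_tox * p_toxic + l_non * (1.0 - p_toxic)
        if p_outcome > 0.0:
            expected += p_outcome * entropy(
                posterior(p_toxic, likelihoods, outcome)
            )
    return entropy(p_toxic) - expected


def most_valuable_test(p_toxic, tests, done=()):
    """Name of the not-yet-run test with the largest information gain."""
    gains = {
        name: information_gain(p_toxic, likelihoods)
        for name, likelihoods in tests.items()
        if name not in done
    }
    return max(gains, key=gains.get)


def should_stop(p_toxic, low=0.10, high=0.90):
    """Stop once the probability of toxicity leaves the gray zone."""
    return p_toxic < low or p_toxic > high


# Hypothetical assays, discretized into two and three buckets.
tests = {
    "assay_A": {"neg": (0.2, 0.9), "pos": (0.8, 0.1)},
    "assay_B": {"low": (0.1, 0.6), "mid": (0.3, 0.3), "high": (0.6, 0.1)},
}
p = 0.3  # assumed prior, e.g., from prevalence and existing data
best = most_valuable_test(p, tests)
p = posterior(p, tests[best], "neg")  # suppose the chosen test is negative
print(best, round(p, 3), should_stop(p))
```

A cost-aware variant could divide each gain by the price or animal use of the test before taking the maximum; that choice is left open here.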

Combining the ITS concept with Tox-21c

As discussed above, Tox-21c relies on breaking risk assessment down into many components. These need to be put together again in a way that allows decision making, ultimately envisioned as systems toxicology by simulation (Hartung et al., 2012). Before this, an ITS-like integration and possibly a probabilistic condensation of evidence into a probability of risk are the logical approaches. However, there are special challenges: Most importantly, the technologies promoted by Tox-21c, at this stage mainly omics and high-throughput (Hattis, 2009), are very different from the information sources promoted in the European ITS discussion. We see how the ITS discussion is crossing the Atlantic, however – in the context of endocrine disruptor testing, for example (Willett et al., 2011). The Tox-21c technologies are so data-rich that, from the beginning, a data-mining approach is necessary, which means that the weighing of evidence is left to the computer. Not all regulators are comfortable with this.

Our own research is approaching this for metabolomics (Bouhifd et al., in press); using endocrine disruption as a test case might illustrate some of the challenges of the high-throughput, systems biology methods and omics technologies. Metabolomics – defined as measuring the concentration of “all” low molecular weight (<1500 Da) molecules in a system of interest – is the closest “omics” technology to the phenotype, and it represents the downstream consequences of whatever changes are observed in proteomic or transcriptomic studies. Small changes in the concentration of a protein, which might be undetectable at the level of transcriptomics or proteomics, can result in large changes in the concentrations of metabolites. Changes that often are invisible at one level of analysis (e.g., co-factor regulation of an enzyme) are more likely to be apparent in a metabolic profile. By taking a global view, metabolomics provides clues to the systemic response to a challenge from a toxin and does so in a way that provides both mechanistic information and candidates for biomarkers (Griffin, 2006; Robertson et al., 2011). In other words, metabolomics offers both the possibility of seeing the high-level pattern of altered biological pathways while drilling down for relevant mechanistic details.

Metabolomics produces many of the same challenges as other high-content methods – namely, how to integrate the surfeit of data into a meaningful framework – but, at the same time, it has some unique challenges. In particular, metabolomics lacks the large-scale, integrated databases that have been crucial to the analysis of transcriptomic and proteomic data. As was the case in the early years of microarrays, we are still without established methods to interpret data. Exploring data sets via several methods (Sugimoto et al., 2012) (ORA, QEA, correlation analysis, and genome-scale network reconstruction) will, hopefully, provide some guidance for future toxicological applications of metabolomics and help us to better understand the puzzle as well as to develop and provide new perspectives on how to integrate several “-omics” technologies. At this stage, metabolomics remains a process of hypothesis generation and, potentially, biomarker discovery, and as such will be dependent on validation by other means.

One critical problem for metabolomics is that while a more-or-less complete “parts list” and wiring diagrams exist for genomic and proteomic networks, knowledge of metabolic networks is still relatively incomplete. Currently, there are three non-tissue specific genome-scale human metabolic networks: Recon 1 (Rolfsson et al., 2011), the Edinburgh Human Metabolic Network (EHMN) (Ma et al., 2007), and HumanCyc (Romero et al., 2005). These reconstructions are “first drafts”: they include genes and proteins of unknown function, as well as “dead end” or “orphaned” metabolites that are not associated with specific anabolic or catabolic pathways. Furthermore, the networks are not tissue-specific. Many toxicants, including endocrine disruptors, exhibit tissue-specific toxicity, and a cell or tissue-specific metabolic network (Hao et al., 2012) should provide a more accurate model of pathology than a generic, global human metabolic network. In the long term, a well-characterized, biochemically complete network will help make the leap from pathway identification to a parameterized model that can be used for more complex simulations such as metabolic control analysis, flux analysis, and systems control theory to clarify the wiring diagram that allows the cell to maintain homeostasis and to determine where, within that wiring diagram, there are vulnerabilities.

Steering the new developments

At this stage, no strategic planning and coordination for the challenge of ITS implementation exists. This was noted in most of the meetings so far, e.g., (Berg et al., 2011): “… there was a clear call from the audience for a credible leadership with the capacity to assure alignment of ongoing activities and initiation of concerted actions, e.g. a global human toxicology project.” The Human Toxicology Project Consortium is one of the advocates for such steering (Seidle and Stephens, 2009). There is still quite a way to go (Hartung, 2009a). While we aim to establish some type of coordinating center in the US at Johns Hopkins (working title PoToMaC – Pathway of Toxicity Mapping Center), no such effort is yet in place in Europe. We suggested the creation of a European Safety Sciences Institute (ESSI) in our policy program, but this discussion is only starting. It is evident, however, that we need such structures for developing the new toxicological toolbox, along with a global collaboration of regulators of the different sectors, to finally revamp regulatory safety assessment.

Box 1.

Relevant definitions from OECD Series on Testing and Assessment No. 34 (OECD, 2005)

Adjunct test

Test that provides data that add to or help interpret the results of other tests and provide information useful for the risk assessment process.

Assay

Used interchangeably with test.

Data interpretation procedure (DIP)

An interpretation procedure used to determine how well the results from the test predict or model the biological effect of interest. See Prediction Model.

Decision Criteria

The criteria in a test method protocol that describe how the test method results are used for decisions on classification or other effects measured or predicted by the test method.

Definitive test

A test that is considered to generate sufficient data to determine the specific hazard or lack of hazard of the substance without the need for further testing, and which may therefore be used to make decisions pertaining to hazard or safety of the substance.

Hierarchical (tiered) testing approach

An approach where a series of tests to measure or elucidate a particular effect are used in an ordered sequence. In a typical hierarchical testing approach, one or a few tests are initially used; the results from these tests determine which (if any) subsequent tests are to be used. For a particular chemical, a weight-of-evidence decision regarding hazard could be made at any stage (tier) in the testing strategy, in which case there would be no need to proceed to subsequent tiers.

In silico models

Approaches for the assessment of chemicals based on the use of computer-based estimations or simulations. Examples include structure-activity relationships (SAR), quantitative structure-activity relationships (QSARs), and expert systems.

(Q)SARs (Quantitative Structure-Activity Relationships)

Theoretical models for making predictions of physicochemical properties, environmental fate parameters, or biological effects (including toxic effects in environmental and mammalian species). They can be divided into two major types, QSARs and SARs. QSARs are quantitative models yielding a continuous or categorical result while SARs are qualitative relationships in the form of structural alerts that incorporate molecular substructures or fragments related to the presence or absence of activity.

Screen/screening test

A screen/screening test is often a rapid, simple test method conducted for the purpose of classifying substances into a general category of hazard. The results of a screening test generally are used for preliminary decision making in the context of a testing strategy (i.e., to assess the need for additional and more definitive tests). Screening tests often have a truncated response range in that positive results may be considered adequate to determine if a substance is in the highest category of a hazard classification system without the need for further testing, but are not usually adequate without additional information/tests to make decisions pertaining to lower levels of hazard or safety of the substance.

Test (or assay)

An experimental system used to obtain information on the adverse effects of a substance. Used interchangeably with assay.

Test battery

A series of tests usually performed at the same time or in close sequence. Each test within the battery is designed to complement the other tests and generally to measure a different component of a multi-factorial toxic effect. Also called base set or minimum data set in ecotoxicological testing.

Test method

A process or procedure used to obtain information on the characteristics of a substance or agent. Toxicological test methods generate information regarding the ability of a substance or agent to produce a specified biological effect under specified conditions. Used interchangeably with “test” and “assay”.
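
The hierarchical (tiered) testing approach defined in Box 1 can be illustrated with a minimal Python sketch. It is not part of the OECD guidance: the names `Tier` and `tiered_assessment`, the three outcome labels, and the pH cut-offs are assumptions chosen for illustration only. The first tier behaves like a screening test with a truncated response range (only a positive result permits a decision), whereas the last tier behaves like a definitive test (either result permits a decision).

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List, Sequence, Tuple

# Hypothetical outcome labels; the OECD definitions do not prescribe any.
POSITIVE, NEGATIVE, INCONCLUSIVE = "positive", "negative", "inconclusive"


@dataclass
class Tier:
    name: str
    run: Callable[[dict], str]   # returns one of the three outcome labels
    decisive: FrozenSet[str]     # outcomes that permit a decision at this tier


def tiered_assessment(substance: dict,
                      tiers: Sequence[Tier]) -> Tuple[str, List[Tuple[str, str]]]:
    """Run tiers in order and stop at the first decisive outcome."""
    history = []
    for tier in tiers:
        outcome = tier.run(substance)
        history.append((tier.name, outcome))
        if outcome in tier.decisive:
            return outcome, history      # no need to proceed to later tiers
    return INCONCLUSIVE, history         # all tiers exhausted without a decision


def ph_screen(substance: dict) -> str:
    # Illustrative cut-offs (assumption): an extreme pH is read as corrosive.
    if substance["ph"] <= 2.0 or substance["ph"] >= 11.5:
        return POSITIVE
    return INCONCLUSIVE


def definitive_test(substance: dict) -> str:
    # Stand-in for a definitive test result supplied with the substance record.
    return POSITIVE if substance["definitive_result"] else NEGATIVE


tiers = [
    Tier("pH screen", ph_screen, frozenset({POSITIVE})),
    Tier("definitive test", definitive_test, frozenset({POSITIVE, NEGATIVE})),
]

strong_acid = {"ph": 1.0, "definitive_result": False}
neutral = {"ph": 7.0, "definitive_result": False}

print(tiered_assessment(strong_acid, tiers))
print(tiered_assessment(neutral, tiers))
```

In this sketch the strongly acidic substance is classified at the first tier and never reaches the definitive test, while the neutral substance passes through the screen without a decision and is resolved at the second tier. A test battery, by contrast, would run all tests regardless of earlier outcomes and combine their results afterwards.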

Acknowledgments

Support from the NIH Transformative Research Grant “Mapping the Human Toxome by Systems Toxicology” (RO1ES020750) and the FDA grant “DNTox-21c Identification of pathways of developmental neurotoxicity for high throughput testing by metabolomics” (U01FD004230) is gratefully acknowledged.

References

- Adler S, Basketter D, Creton S, et al. Alternative (non-animal) methods for cosmetics testing: current status and future prospects – 2010. Arch Toxicol. 2011;85:367–485. doi: 10.1007/s00204-011-0693-2.

- Ahlers J, Stock F, Werschkun B. Integrated testing and intelligent assessment – new challenges under REACH. Env Sci Pollut Res Int. 2008;15:565–572. doi: 10.1007/s11356-008-0043-y.

- Aldenberg T, Jaworska JS. Multiple test in silico weight-of-evidence for toxicological endpoints. Issues Toxicol. 2010;7:558–583.

- Anon. REACH and the need for Intelligent Testing Strategies [EUR 21554 EN]. Institute for Health and Consumer Protection, EC Joint Research Centre; Ispra, Italy: 2005. http://reach-support.com/download/Intelligent%20testing.pdf.

- Anon. Cochrane Handbook for Systematic Reviews of Diagnostic Test Accuracy. 2011. doi: 10.1002/14651858.ED000163. http://srdta.cochrane.org/hand-book-dta-reviews.

- Balls M, Amcoff P, Bremer S, et al. The principles of weight of evidence validation of test methods and testing strategies. The report and recommendations of ECVAM workshop 58. Altern Lab Anim. 2006;34:603–620. doi: 10.1177/026119290603400604.

- Basketter DA, Clewell H, Kimber I, et al. A road-map for the development of alternative (non-animal) methods for systemic toxicity testing – t4 report. ALTEX. 2012;29:3–91. doi: 10.14573/altex.2012.1.003.

- Benfenati E, Gini G, Hoffmann S, Luttik R. Comparing in vivo, in vitro and in silico methods and integrated strategies for chemical assessment: problems and prospects. Altern Lab Anim. 2010;38:153–166. doi: 10.1177/026119291003800201.

- Berg N, De Wever B, Fuchs HW, et al. Toxicology in the 21st century – working our way towards a visionary reality. Toxicol In Vitro. 2011;25:874–881. doi: 10.1016/j.tiv.2011.02.008.

- Blaauboer BJ. Biokinetic modeling and in vitro-in vivo extrapolations. J Toxicol Environ Health B Crit Rev. 2010;13:242–252. doi: 10.1080/10937404.2010.483940.

- Blaauboer B, Barratt M. The integrated use of alternative methods in toxicological risk evaluation. Altern Lab Anim. 1999;27:229–237. doi: 10.1177/026119299902700211.

- Blaauboer BJ, Boekelheide K, Clewell HJ, et al. The use of biomarkers of toxicity for integrating in vitro hazard estimates into risk assessment for humans. ALTEX. 2012;29:411–425. doi: 10.14573/altex.2012.4.411.

- Bouhifd M, Hartung T, Hogberg HT, et al. Review: Toxicometabolomics. J Appl Toxicol. In press. doi: 10.1002/jat.2874.

- Breiman L. Random Forests. Statistics Department, University of California. Machine learning; 2001. http://oz.berkeley.edu/users/breiman/randomforest2001.pdf.

- Combes RD, Balls M. Integrated testing strategies for toxicity employing new and existing technologies. Altern Lab Anim. 2011;39:213–225. doi: 10.1177/026119291103900303.

- Dale HH. Croonian lectures on some chemical factors in the control of the circulation. Lancet. 1929;213:1285–1290.

- De Wever B, Fuchs HW, Gaca M, et al. Implementation challenges for designing integrated in vitro testing strategies (ITS) aiming at reducing and replacing animal experimentation. Toxicol In Vitro. 2012;26:526–534. doi: 10.1016/j.tiv.2012.01.009.

- De Wolf W, Comber M, Douben P. Animal use replacement, reduction, and refinement: Development of an integrated testing strategy for bioconcentration of chemicals in fish. Integr Environ Assess Manag. 2007;3:3–17. doi: 10.1897/1551-3793(2007)3[3:aurrar]2.0.co;2.

- Deeks JJ. Systematic reviews in health care: Systematic reviews of evaluations of diagnostic and screening tests. Brit Med J. 2001;323:157–162. doi: 10.1136/bmj.323.7305.157.

- Devillé WL, Buntinx F, Bouter LM, et al. Conducting systematic reviews of diagnostic studies: didactic guidelines. BMC Med Res Methodol. 2002;2:9. doi: 10.1186/1471-2288-2-9.

- Dejongh J, Forsby A, Houston JB, et al. An Integrated Approach to the Prediction of Systemic Toxicity using Computer-based Biokinetic Models and Biological In vitro Test Methods: Overview of a Prevalidation Study Based on the ECITTS Project. Toxicol In Vitro. 1999;13:549–554. doi: 10.1016/s0887-2333(99)00030-2.

- Fernández A, Lombardo A, Rallo R, et al. Quantitative consensus of bioaccumulation models for integrated testing strategies. Environ Intern. 2012;45:51–58. doi: 10.1016/j.envint.2012.03.004.

- Forsby A, Blaauboer B. Integration of in vitro neurotoxicity data with biokinetic modelling for the estimation of in vivo neurotoxicity. Hum Exp Toxicol. 2007;26:333–338. doi: 10.1177/0960327106072994.

- Gabbert S, van Ierland EC. Cost-effectiveness analysis of chemical testing for decision-support: How to include animal welfare? Hum Ecol Risk Assess. 2010;16:603–620.

- Gabbert S, Benighaus C. Quo vadis integrated testing strategies? Experiences and observations from the work floor. J Risk Res. 2012;15:583–599.

- Griffin JLJ. The Cinderella story of metabolic profiling: does metabolomics get to go to the functional genomics ball? Philosophical Transactions of the Royal Society B: Biological Sciences. 2006;361:147–161. doi: 10.1098/rstb.2005.1734.

- Gubbels-van Hal WM, Blaauboer BJ, Barentsen HM, et al. An alternative approach for the safety evaluation of new and existing chemicals, an exercise in integrated testing. Regul Toxicol Pharmacol. 2005;42:284–295. doi: 10.1016/j.yrtph.2005.05.002.

- Hao T, Ma HW, Zhao XM, Goryanin I. The reconstruction and analysis of tissue specific human metabolic networks. Molec BioSyst. 2012;8:663–670. doi: 10.1039/c1mb05369h.

- Hareng L, Pellizzer C, Bremer S, et al. The Integrated Project ReProTect: A novel approach in reproductive toxicity hazard assessment. Reprod Toxicol. 2005;20:441–452. doi: 10.1016/j.reprotox.2005.04.003.

- Hartung T, Bremer S, Casati S, et al. A modular approach to the ECVAM principles on test validity. Altern Lab Anim. 2004;32:467–472. doi: 10.1177/026119290403200503.

- Hartung T. Food for thought … on validation. ALTEX. 2007;24:67–73. doi: 10.14573/altex.2007.2.67.

- Hartung T. Food for thought … on alternative methods for cosmetics safety testing. ALTEX. 2008;25:147–162. doi: 10.14573/altex.2008.3.147.

- Hartung T. A toxicology for the 21st century – mapping the road ahead. Toxicol Sci. 2009a;109:18–23. doi: 10.1093/toxsci/kfp059.

- Hartung T. Food for thought … on evidence-based toxicology. ALTEX. 2009b;26:75–82. doi: 10.14573/altex.2009.2.75.

- Hartung T. Toxicology for the twenty-first century. Nature. 2009c;460:208–212. doi: 10.1038/460208a.

- Hartung T, Hoffmann S. Food for thought … on in silico methods in toxicology. ALTEX. 2009;26:155–166. doi: 10.14573/altex.2009.3.155.

- Hartung T. Comparative analysis of the revised Directive 2010/63/EU for the protection of laboratory animals with its predecessor 86/609/EEC – a t4 report. ALTEX. 2010a;27:285–303. doi: 10.14573/altex.2010.4.285.

- Hartung T. Evidence-based toxicology – the toolbox of validation for the 21st century? ALTEX. 2010b;27:253–263. doi: 10.14573/altex.2010.4.253.

- Hartung T. Food for thought … on alternative methods for chemical safety testing. ALTEX. 2010c;27:3–14. doi: 10.14573/altex.2010.1.3.

- Hartung T. Lessons learned from alternative methods and their validation for a new toxicology in the 21st century. J Toxicol Environ Health B Crit Rev. 2010d;13:277–290. doi: 10.1080/10937404.2010.483945.

- Hartung T, McBride M. Food for thought … on mapping the human toxome. ALTEX. 2011;28:83–93. doi: 10.14573/altex.2011.2.083.

- Hartung T, Zurlo J. Alternative approaches for medical countermeasures to biological and chemical terrorism and warfare. ALTEX. 2012;29:251–260. doi: 10.14573/altex.2012.3.251.

- Hartung T, van Vliet E, Jaworska J, et al. Food for thought … systems toxicology. ALTEX. 2012;29:119–128. doi: 10.14573/altex.2012.2.119.

- Hattis D. High-throughput testing – the NRC vision, the challenge of modeling dynamic changes in biological systems, and the reality of low-throughput environmental health decision making. Risk Anal. 2009;29:483–484. doi: 10.1111/j.1539-6924.2008.01167.x.

- Hengstler JG, Foth H, Kahl R, et al. The REACH concept and its impact on toxicological sciences. Toxicology. 2006;220:232–239. doi: 10.1016/j.tox.2005.12.005.

- Hill AB. The environment and disease: association or causation? Proc R Soc Med. 1965;58:295–300.

- Hoffmann S, Hartung T. Diagnosis: toxic! – trying to apply approaches of clinical diagnostics and prevalence in toxicology considerations. Toxicol Sci. 2005;85:422–428. doi: 10.1093/toxsci/kfi099.

- Hoffmann S, Cole T, Hartung T. Skin irritation: prevalence, variability, and regulatory classification of existing in vivo data from industrial chemicals. Regul Toxicol Pharmacol. 2005;41:159–166. doi: 10.1016/j.yrtph.2004.11.003.

- Hoffmann S, Hartung T. Toward an evidence-based toxicology. Hum Exp Toxicol. 2006;25:497–513. doi: 10.1191/0960327106het648oa.