Abstract

Introduction

The goal of this project was to develop and validate a new tool to evaluate learners’ knowledge and skills related to research ethics.

Methods

A core set of 50 questions from existing computer-based online teaching modules was identified, refined, and supplemented to create a set of 74 multiple-choice, true/false, and short answer questions. The questions were pilot tested, item discrimination (ID) was calculated for each question, and poorly performing items were eliminated or refined. Two comparable assessments were then created and administered as a pre-test and post-test to a cohort of 58 Indian junior investigators before and after exposure to a new course on research ethics. Half of the investigators took the course online and the other half in person. ID was calculated for each question and Cronbach’s alpha for each assessment. A final version of the assessment, incorporating the best questions from the pre-/post-test phase, was used to assess retention of research ethics knowledge and skills three months after course delivery.

Results

The final version of the Research Ethics Knowledge and Analytical Skills Assessment (REKASA) includes 41 items and had a Cronbach’s alpha of .837.

Conclusion

The results illustrate, in one sample of learners, the successful, systematic development and use of a knowledge and skills assessment in research ethics capable of reliably assessing the application of important analytic and reasoning skills, without reliance on essay or discussion-based examination.

INTRODUCTION

In 2000, the National Institutes of Health (NIH) instituted a policy “requiring education on the protection of human research participants” for all key personnel on federal grants and contracts involving human participants [1]. Human subjects training requirements are often satisfied through self-directed computer-based training (CBT) modules. Successful completion of the CBT, usually determined by a passing grade on a short multiple-choice quiz, certifies the trainee as eligible to compete for NIH funds. The advantage of multiple-choice quizzes is their ease of administration and scoring; the disadvantage is that it is hard to write multiple-choice items that test the application of ethical analysis skills. There is concern that such assessments measure only learners’ ability to memorize factual information or basic ethics knowledge. Thus, instructors in academic settings often have learners answer open-ended questions or write essays that demonstrate their ethical analysis skills. The primary disadvantage of this approach is the burden and potential inconsistency of scoring open-ended responses. While considerable attention has focused on the topics that must be covered in research ethics training, almost no comparable attention has been devoted to assessing the educational outcomes of such training. In our review of the literature, we found some published instruments and evaluative questions for assessing knowledge and skills in medical ethics, but no validated tools for testing knowledge and skills in research ethics and integrity [2–7].

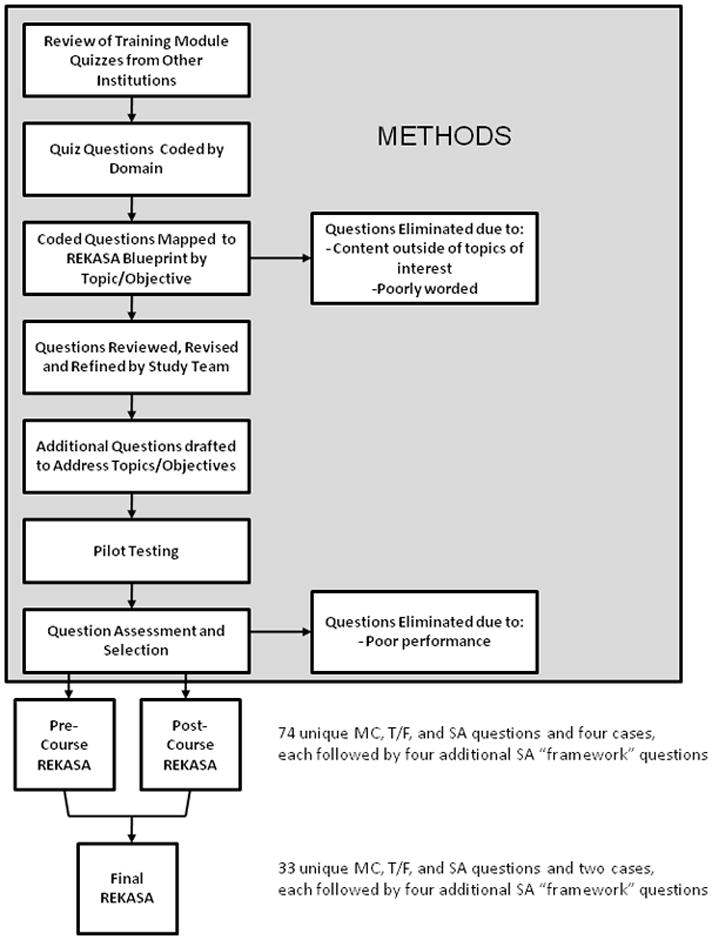

An NIH-sponsored effort in 2009 to build capacity in research ethics among junior health research investigators in India provided the opportunity to rigorously develop an original Research Ethics Knowledge and Analytical Skills Assessment (REKASA) tool. Figure 1 summarizes the steps taken to create this novel assessment tool. The REKASA was designed to measure baseline and post-test knowledge and analytic skills in research ethics among the targeted learners and to detect any differences in learning between those taught through different teaching strategies. This paper describes the development and reliability testing of the REKASA.

Figure 1.

Overview of REKASA Development

METHODS

Our ultimate goal was to create a single tool with a set of highly reliable and valid items to assess learner knowledge and skills. We describe below the process we followed to reach this goal. We first reviewed the websites of 22 high-volume academic medical centers in the US to identify the type of human subjects training they require of investigators and how investigator knowledge is assessed [8]. Of the 22 institutions, 13 used Collaborative Institutional Training Initiative (CITI) modules either as their primary required training method or as one of the options investigators could choose [9]. Two institutions used NIH-produced modules as their primary training method [10]. The remaining seven used tools unique to their institution. All of these tools were internet based and self-administered, and all used a multiple-choice, true/false, and/or short answer format. The tools ranged in length from 10 to 114 questions (mean = 30.8; median = 20).

We abstracted the publicly available quiz items from all of the modules and compiled a list of 271 items. Each item was then coded by source, question type (e.g., multiple choice [MC], true/false [T/F], short answer), and level of difficulty (easy, medium, difficult). Difficulty was rated by at least two members of the study team based on their experience and expertise. To organize the subject matter of the available questions, we also developed seven content categories: IRB procedures, regulatory requirements, facts related to the oversight system in general, notable events in the history of research ethics, ethical reasoning, application of ethical analysis skills, and ethical sensitivity. See Table 1 for definitions of each category and sample questions. Two research assistants first coded the 271 questions into these seven categories independently. Based on their results, two investigators discussed, modified, and refined the codes, and one of them re-coded the quiz questions. Forty percent of all items were coded as “Facts concerning the substance of regulations or other advisory documents,” and roughly one-fifth each were coded as “IRB procedures for which the Principal Investigator is responsible” (22%) and “Application of ethics analysis skills” (19%). None of the questions were coded as assessing “ethical sensitivity” (Table 1).

Table 1.

Domains Covered in Existing “Quizzes” from Computer Based Research Ethics Training Modules

| Domain | Description | Example from data | Cognitive Level | Number (%) (n=271) |
|---|---|---|---|---|
| IRB procedures for which the Principal Investigator is responsible | Questions that are specific to IRB procedures and ask for the identification of what the P.I. must do, procedurally. | Who can submit a research protocol for IRB review? | Low | 59 (22%) |
| Facts concerning the substance of regulations or other advisory documents | Questions that ask for the identification of requirements or certain features of regulations and guidelines concerning human subjects research. | What are the elements of informed consent as described in the Code of Federal Regulations? What three principles of ethics are found in the Belmont Report? | Low | 109 (40%) |
| General facts related to the oversight system | Questions that ask for the identification of the roles, responsibilities, and/or relationships of governing bodies and/or review boards. | What is the primary responsibility of the IRB? | Low | 20 (7%) |
| Ethics historical facts | Questions that ask about the historical sequence and/or significance of particular ethics-related events and documents. | Which of the following human subjects research documents resulted from World War II and the Nuremberg trials? | Low | 11 (4%) |
| Ethical reasoning | Questions that present a fact pattern with a clear ethical dilemma and ask for a conclusion about the appropriate course of action. | You are applying for a renewal of your grant. Although your postdoc’s term will expire just about the time the renewal would take effect and you have no intention of renewing her appointment because of behavior problems, you want to propose continuing the work she has done. You ask her to write a section of the proposal that describes how her work could be followed up in the proposed renewal, but you do not tell her that she would be a part of the continuing research team. You receive notice of funding of your proposal just before the postdoc is about to terminate. You do not renew her appointment and she sues you and the University for a commitment to renew her appointment. What should have been done to achieve a more satisfactory outcome? | Medium | 21 (8%) |
| Application of ethics analysis skills | Questions that ask for the translation of ethical theory into practice. | Identify the ethical principle that provides the foundation for the practice of Informed Consent. Which of the following principles of ethics found in the Belmont Report would prevent the unjustified recruitment of a study population consisting solely of men? | High | 51 (19%) |
| Ethical sensitivity | Questions that present a fact pattern where the ethical dilemma(s) is not clear and ask for the identification of the ethical dilemma(s). | None identified | High | 0 (0%) |
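As a quick arithmetic check on Table 1, the short Python sketch below recomputes each domain's share of the 271 abstracted items from the counts in the table, rounded to whole percentages as the table reports them. The shortened domain labels are our own; the counts come straight from Table 1.

```python
# Item counts per domain, copied from Table 1 (n = 271 abstracted quiz items).
DOMAIN_COUNTS = {
    "IRB procedures": 59,
    "Facts about regulations or advisory documents": 109,
    "General facts about the oversight system": 20,
    "Ethics historical facts": 11,
    "Ethical reasoning": 21,
    "Application of ethics analysis skills": 51,
    "Ethical sensitivity": 0,
}


def domain_percentages(counts):
    """Return each domain's share of all items, rounded to a whole percent."""
    total = sum(counts.values())
    return {domain: round(100 * n / total) for domain, n in counts.items()}


if __name__ == "__main__":
    shares = domain_percentages(DOMAIN_COUNTS)
    for domain, n in DOMAIN_COUNTS.items():
        print(f"{domain}: {n} ({shares[domain]}%)")
```

The seven counts sum to 271, and the rounded shares match the percentages in the table.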

Assessment Tool Development

Drawing on established principles of curriculum development and our experience teaching many courses and workshops on research ethics, we drafted a comprehensive list of learning objectives for a standard workshop or short training course in research ethics [11]. The list specified both the content areas relevant to training (e.g., ethical principles, informed consent, study design) and whether each learning goal was the acquisition of knowledge or of skills. These learning objectives formed the basis of a blueprint for the REKASA. Following guidance from experts in test development, the blueprint was organized by learning objective and specified, for each objective, whether it required assessment of knowledge, skills, or both; how many questions should be asked, based on the objective's relative importance; and how many questions of each difficulty level (easy, medium, hard) should be included. The blueprint included five broad learning objectives and eighteen sub-objectives regarding learners’ knowledge of research ethics and their skills in applying ethics principles and reasoning to ethical issues in human subjects research.

Our next step was to translate the blueprint into a list of course topics and related objectives. Overall, 11 topics were identified as relevant to research ethics instruction, covering 35 learning objectives. Investigators rated each learning objective, first independently and then through discussion, as being of high (n=23), medium (n=9), or low (n=3) importance to research ethics teaching. For example, the ability to apply ethical principles was rated high priority, while the ability to accurately define principles was rated medium priority. The importance of each objective determined the number of questions asked in relation to it. As a result of this process, the group removed the three objectives consistently rated as low priority, leaving a final total of 11 topics and 32 objectives to be measured by our new tool, the Research Ethics Knowledge and Analytical Skills Assessment (REKASA) (Table 2). Our goal was to generate enough questions to develop two parallel REKASA instruments for pre- and post-testing, each with a unique but comparable set of questions of similar difficulty covering all 32 learning objectives, with the intent of later combining the best questions from each into a final instrument.
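The text says that each objective's importance determined how many questions it received, but it does not state the rule used. The Python sketch below illustrates one possible rule. Everything in it is a hypothetical assumption rather than the authors' method: the weights (high = 2, medium = 1), the per-version budget of 37 items, and the largest-remainder method for splitting that budget across the 23 high- and 9 medium-importance objectives.

```python
from math import floor

# Hypothetical weights: the paper does not say how importance ratings
# were converted into question counts.
WEIGHTS = {"high": 2, "medium": 1}


def allocate_questions(ratings, total_questions, weights=WEIGHTS):
    """Split total_questions across objectives in proportion to their weights
    using the largest-remainder method.

    ratings maps an objective id to its importance label ("high"/"medium").
    Returns a dict mapping objective id -> number of questions.
    """
    total_weight = sum(weights[r] for r in ratings.values())
    quotas = {obj: total_questions * weights[r] / total_weight
              for obj, r in ratings.items()}
    alloc = {obj: floor(q) for obj, q in quotas.items()}
    leftover = total_questions - sum(alloc.values())
    # Give the remaining questions to objectives with the largest fractional parts
    # (sorted() is stable, so ties keep their original order).
    by_remainder = sorted(quotas, key=lambda o: quotas[o] - alloc[o], reverse=True)
    for obj in by_remainder[:leftover]:
        alloc[obj] += 1
    return alloc


# 23 high- and 9 medium-importance objectives, as in the text (ids are hypothetical).
ratings = {f"H{i}": "high" for i in range(1, 24)}
ratings.update({f"M{i}": "medium" for i in range(1, 10)})
alloc = allocate_questions(ratings, total_questions=37)
print(sum(alloc.values()), alloc["H1"], alloc["H23"], alloc["M1"])  # 37 2 1 1
```

With these assumed weights, every objective receives at least one item and a few high-importance objectives receive two. A different weighting would produce a different blueprint.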

Table 2.

Course Topics and Objectives

| Topic | Objectives |
|---|---|
| History of Research Ethics | |
| Ethical Principles | |
| Introduction to Ethical Framework | |
| Ethics Review Committees (RECs) | |
| Informed Consent Requirements | |
| Effective Informed Consent Practices | |
| Study Design | |
| Risk/Benefit Assessment | |
| Honesty in Science | |
| Privacy and Confidentiality | |
| Justice | |

At this point, the study team returned to the 271 items abstracted from the publicly available quizzes. First, items were matched to our objectives, and items unrelated to any of our 32 learning objectives were eliminated. Second, poorly worded questions were eliminated. These included questions with poor sentence structure or grammar and questions that violated basic survey methodology, such as those that were vague or double-barreled (a question that includes two different concepts but requires one answer) [12]. Third, questions with poor response choices (e.g., response options that were not mutually exclusive) were either eliminated or had their response options revised or rewritten [13]. Fifty questions remained, and each was matched to a particular REKASA objective. We wanted to develop up to 74 multiple-choice, true/false, and short answer questions to pilot test, with the goal of having at least 30 questions for each of two comparable instruments (pre- and post-test), each with a similar number of questions for each domain and level of difficulty. To reach 74 questions for piloting, we drafted an additional 24 items, many of them in the “difficult” category. Finally, certain questions were modified to be easier or more difficult based on the requirements outlined in the REKASA blueprint.

Our next step was to ask colleagues with expertise in research ethics to assess the face and content validity of our questions. Three experienced bioethics faculty, two international research ethics scholars, and one bioethics PhD student reviewed the 74 draft items and provided feedback. Items were revised and refined based on their feedback.

We then pilot tested the 74 items with a group of students similar to the Indian target learners. Fifty international students attending summer courses at [specifics removed for review] were recruited to complete the assessment online [14]. Our goals in pilot testing were to determine how long students would take to complete the assessment, to learn the spread of scores among students, and to make final determinations as to which items were equivalent in each content area, so that we could create two comparable versions of the REKASA and, ultimately, one reliable and valid tool. The draft assessment was uploaded into a web-based survey program for easy administration. Questions were ordered to avoid a “cueing” effect, as they would be in the actual tests.

The MC and T/F questions were scored automatically. We then reviewed the scores for each pair of questions linked to a single objective to determine whether the paired questions elicited similar rates of correct responses. Pairs of questions under the same objective whose rates of correct responses differed by less than 10% were deemed comparable. Where two “parallel” questions differed by more than 10%, we revised one of them to make it what we judged to be harder or easier, with the intent of making the pair more comparable. Three questions were revised in this way. The final items were then allocated to create two comparable instruments, each designed to have the same number of MC, T/F, and short answer (SA) questions within each content area and of comparable difficulty. The result was two versions, each with 37 unique MC, T/F, and SA items, comparable in style, length, concepts covered, and nature of the items asked.
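The pairing rule above can be sketched in a few lines of Python. This is a minimal illustration that assumes "10%" means an absolute difference of 10 percentage points in the proportion of correct responses. The text does not say how a difference of exactly 10% was handled; the sketch flags it for revision. The objective names and pilot responses are hypothetical.

```python
def proportion_correct(responses):
    """Share of 0/1 responses that are correct (1)."""
    return sum(responses) / len(responses)


def pairs_needing_revision(pairs, max_diff=0.10):
    """Return objectives whose two parallel items differ too much in difficulty.

    pairs maps an objective id to (responses_to_item_a, responses_to_item_b),
    each a list of 0/1 scores. A pair counts as comparable only when the
    absolute difference in proportion correct is below max_diff.
    """
    flagged = []
    for objective, (item_a, item_b) in pairs.items():
        diff = abs(proportion_correct(item_a) - proportion_correct(item_b))
        if diff >= max_diff:
            flagged.append(objective)
    return sorted(flagged)


# Hypothetical pilot data for two objectives (ten respondents each).
pilot = {
    "informed_consent_1": ([1] * 7 + [0] * 3, [1] * 7 + [0] * 3),  # 70% vs 70%
    "risk_benefit_2": ([1] * 8 + [0] * 2, [1] * 5 + [0] * 5),      # 80% vs 50%
}
print(pairs_needing_revision(pilot))  # ['risk_benefit_2']
```

A flagged pair would then be handled as the text describes, by rewriting one item to be harder or easier and re-checking.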

A final measurement goal was to assess learners’ analytic skills in research ethics. To this end, we created a Framework for Analyzing a Research Ethics Case, which draws on foundational ethics principles and their applications as outlined in the Belmont Report, the CIOMS Guidelines, and the work of other scholars [15–18]. The framework consists of seven questions that guide the user through the ethical analysis of a case, including identifying key facts, key moral concerns, and associated ethics principles and requirements; balancing competing concerns; identifying options for resolution; and making a recommendation. The framework was incorporated into the teaching of research ethics and then assessed in each pre- and post-test version. To test learners’ ability to apply the framework, two easy and two harder cases were developed. Each pre- and post-test included two of these cases, each followed by four short answer questions corresponding to different stages of the framework, adding eight framework items to each test. Each version therefore had 45 items in total (Table 3).

The final pre- and post-tests were administered online both to the cohort of trainees who took the research ethics course in person and to the cohort who took it online [19]. Twenty-nine trainees were exposed to each mode of training. The first cohort of 29 trainees completed a 3.5-week research ethics course online in July 2009, and the second cohort of 29 trainees completed a short 3.5-day course on-site ([specifics removed for review]) in September 2009. Each trainee was allotted 90 minutes (electronically timed) to complete the pre-test within a four-day window immediately before starting the course, and then 90 minutes to complete the parallel, unique post-test within a four-day window immediately after completing the course. On average, trainees spent 56 minutes completing each assessment.

The MC and T/F items were scored automatically. All short answer responses, including responses to the framework questions, were coded separately by two faculty investigators. Both coders were blinded to the trainees’ names and to whether responses came from the pre- or post-test. This was done to avoid any bias toward scoring the post-test more liberally, which would artificially inflate the apparent gain in knowledge and skills. Short answers were graded “0” for incorrect and “1” for correct. Inconsistencies between the two coders were identified by a third reviewer and reconciled through deliberation among all three reviewers.
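Finding the responses that the third reviewer must adjudicate amounts to a key-by-key comparison of the two coders' 0/1 grades. The Python sketch below shows that step under an assumed data layout: each coder's grades are stored in a dictionary keyed by a (trainee, item) pair, and the trainee codes and item ids are hypothetical. It does not model the deliberation that followed.

```python
def disagreements(coder_a, coder_b):
    """List the (trainee, item) keys that two coders graded differently.

    coder_a and coder_b map a (trainee_id, item_id) tuple to a 0/1 grade.
    Both coders must have graded exactly the same responses.
    """
    if coder_a.keys() != coder_b.keys():
        raise ValueError("coders graded different sets of responses")
    return sorted(key for key in coder_a if coder_a[key] != coder_b[key])


# Hypothetical blinded trainee codes and item ids.
coder_a = {("T01", "SA3"): 1, ("T01", "F2"): 0, ("T02", "SA3"): 1}
coder_b = {("T01", "SA3"): 1, ("T01", "F2"): 1, ("T02", "SA3"): 1}
print(disagreements(coder_a, coder_b))  # [('T01', 'F2')]
```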

Once the tests were scored and the data entered, we calculated the item discrimination (ID) for each item. Cronbach’s alpha for each version of the REKASA was calculated using the method of Ferguson and Takane [20]. Using pre-determined cutoffs, we retained items from either version of the REKASA that had an ID of 0.20 or higher, and set a target Cronbach’s alpha of 0.70 or higher for each instrument [21, 22] (Table 3).
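The two statistics can be sketched in Python as follows. For Cronbach's alpha, the sketch uses the standard formula α = k/(k − 1) · (1 − Σσᵢ²/σ²_total), which we assume is equivalent to the Ferguson and Takane computation cited above. The text does not say how ID was computed, so the sketch assumes the common upper-lower group index: the proportion answering correctly in the top 27% of total scorers minus that in the bottom 27%. Total scores include the item being evaluated. The four-trainee score matrix is hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents x items matrix of scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def item_discrimination(scores, item, fraction=0.27):
    """Upper-lower discrimination index for one item (assumed method).

    Respondents are ranked by total score; the index is the proportion
    answering the item correctly in the top group minus that in the
    bottom group, each group holding `fraction` of respondents.
    """
    n = max(1, round(fraction * len(scores)))
    ranked = sorted(scores, key=sum, reverse=True)
    upper, lower = ranked[:n], ranked[-n:]
    p_upper = sum(row[item] for row in upper) / n
    p_lower = sum(row[item] for row in lower) / n
    return p_upper - p_lower


# Hypothetical 0/1 scores: four trainees (rows) by three items (columns).
scores = [[1, 1, 1], [1, 1, 0], [0, 0, 1], [0, 0, 0]]
print(round(cronbach_alpha(scores), 3))                     # 0.6
print(item_discrimination(scores, item=0, fraction=0.5))    # 1.0
print(item_discrimination(scores, item=2, fraction=0.5))    # 0.0
```

Under the cutoffs in the text, item 0 here (ID = 1.0) would be retained and item 2 (ID = 0.0) would not. A point-biserial correlation is another common way to compute ID and may give somewhat different values.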

Table 3.

Comparison of Pre and Post Test Research Ethics Assessment Instruments

| | Pre-Test | Post-Test | 3-mo. Post-Test |
|---|---|---|---|
| # of questions by question type | | | |
| MCQ | 24 | 23 | 22 |
| SA | 11 | 11 | 9 |
| TF | 2 | 3 | 2 |
| Framework | 8 | 8 | 8 |
| Combined | 45 | 45 | 41 |
| # of points allocated to each question | 1 | 1 | 1 |
| Ave. time to complete (mins.) | 53 | 60 | 55 |
| Cronbach’s alpha | | | |
| Cohort 1 (online) | 0.798 | 0.827 | 0.879 |
| Cohort 2 (on-site) | 0.834 | 0.766 | 0.729 |
| Combined | 0.815 | 0.810 | 0.837 |
| Item discrimination, # of questions (%) | | | |
| ≥ 0.20 | 34 (75.5) | 20 (44.4) | 36 (87.8) |
| 0.10–0.19 | 8 (17.7) | 13 (28.8) | 3 (7.3) |
| < 0.10 | 3 (6.6) | 12 (26.6) | 2 (4.8) |

Based on these results, we selected items from the pre- and post-tests with ID values of 0.20 or higher for inclusion in a third and final REKASA, administered 3 months after the post-test. Our goal was to create a final version of the REKASA with the most discriminating items to test trainee retention across all of our course objectives. At this point, two new items were created to assess content areas for which no item had attained an ID of 0.20 or above on the previous instruments.
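The selection step above pools items from both versions, keeps those at or above the 0.20 ID cutoff, and identifies objectives left with no retained item, which are the gaps that new items had to fill. The Python sketch below illustrates that logic. The item ids, ID values, and objective labels are hypothetical, and the ranking by ID is our own illustrative choice.

```python
CUTOFF = 0.20  # ID cutoff stated in the text


def select_items(id_by_item, cutoff=CUTOFF):
    """Return item ids with ID >= cutoff, highest discrimination first."""
    kept = [item for item, d in id_by_item.items() if d >= cutoff]
    return sorted(kept, key=lambda item: id_by_item[item], reverse=True)


def uncovered_objectives(objective_by_item, kept):
    """Objectives with no retained item; these need newly written items."""
    covered = {objective_by_item[item] for item in kept}
    return sorted(set(objective_by_item.values()) - covered)


# Hypothetical IDs pooled from both versions (prefix marks the source test).
ids = {"pre_Q1": 0.35, "post_Q1": 0.12, "pre_Q2": 0.08, "post_Q2": 0.22, "pre_Q3": 0.05}
objective_by_item = {"pre_Q1": "consent", "post_Q1": "consent",
                     "pre_Q2": "justice", "post_Q2": "justice", "pre_Q3": "privacy"}
kept = select_items(ids)
print(kept)                                           # ['pre_Q1', 'post_Q2']
print(uncovered_objectives(objective_by_item, kept))  # ['privacy']
```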

RESULTS

The final version of the REKASA included 33 of the most statistically robust MC, T/F, and SA items and two “ethical framework cases,” each followed by four short answer questions, for a total of 41 questions. The final version of the REKASA had a Cronbach’s alpha of .837 (instrument available on request).

Finally, we made three additional revisions to the final instrument to maximize performance and simplify scoring. To maximize the performance of the REKASA, we removed questions with an item discrimination below .20. This removed five poorly performing questions (three MC and two SA) and increased Cronbach’s alpha to .843. With these five items removed, the REKASA still covers all eleven topics listed in Table 2, but not all of the objectives. The five poorly performing questions are marked on the final version of the REKASA, available on request.

An instrument that includes items requiring extensive faculty time to score, and to agree on acceptable answers, is likely to be less attractive to users. We therefore conducted two sensitivity analyses of the REKASA. First, we removed the open-ended framework questions (n=8), which were the most time-consuming to score. Cronbach’s alpha for this shortened REKASA was .720, just above our goal of ≥ .70. Second, we analyzed a version of the REKASA with all seventeen open-ended short answer questions removed. Cronbach’s alpha for this shorter version (24 items: 22 MC and 2 T/F questions) was .665, below our goal of ≥ .70. As with the version described above, each of these shortened versions included at least one question related to each of the 11 topics but did not cover all 32 objectives.
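Each sensitivity analysis recomputes Cronbach's alpha after dropping every item of a given type. The Python sketch below shows that step. It repeats the standard alpha formula so that the block is self-contained, and it assumes each item carries a type label (e.g. "MC", "Framework"). The toy scores are hypothetical and are not meant to reproduce the reported values. In this toy data, dropping the framework item raises alpha, whereas in the study, dropping open-ended items lowered it.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents x items score matrix."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def alpha_without(scores, item_types, drop_types):
    """Alpha after dropping every item whose type is in drop_types.

    Returns (alpha, number_of_items_kept).
    """
    keep = [j for j, t in enumerate(item_types) if t not in drop_types]
    subset = [[row[j] for j in keep] for row in scores]
    return cronbach_alpha(subset), len(keep)


# Hypothetical scores for four trainees on two MC items and one framework item.
scores = [[1, 1, 1], [1, 1, 0], [0, 0, 1], [0, 0, 0]]
types = ["MC", "MC", "Framework"]
full_alpha, _ = alpha_without(scores, types, drop_types=set())
short_alpha, n_items = alpha_without(scores, types, drop_types={"Framework"})
print(round(full_alpha, 3), round(short_alpha, 3), n_items)  # 0.6 1.0 2
```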

CONCLUSION

Using established principles of curriculum development, questionnaire construction, and reliability and validity testing, we developed a tool to evaluate learners on the topic of research ethics. In developing the REKASA, we found that most computer-based training modules include quizzes. However, no systematic assessment of the reliability and validity of these quizzes is available to assure institutions that scoring well on them indicates competence in research ethics or that they cover the broad domains of interest. Because research ethics education is required of investigators at institutions that receive U.S. federal funds, there is a need for a reliable and valid tool to assess knowledge of research ethics. It is also important to have a reliable and valid measure of learners’ analytic skills, not only of their knowledge of research ethics history and principles [1]. Indeed, if the goal of research ethics training is to encourage the adoption of ethical practice in the conduct of human subjects research, courses must introduce trainees to the skills of ethical analysis and problem solving, and assessments must measure their proficiency in such skills.

This study is an early step in developing such a tool. The results illustrate the successful, systematic development and use of a knowledge and skills assessment capable of reliably assessing the application of analytic skills, without reliance on essay or discussion-based examination.

There were several limitations to our study. First, only one group of trainees completed the multiple versions of the REKASA. Future studies will use the REKASA to assess knowledge and skills among diverse populations of learners to further refine the tool. Second, the tool was tested in a single population in India. Given that much of the push for research ethics education and quiz development has occurred in the United States, testing the tool in U.S. research populations is also critical. Third, the REKASA's validity was assessed only in terms of face and content validity. The lack of an existing validated instrument prevented us from assessing criterion validity, and further study is needed to assess predictive validity. Finally, it will be important to test the tool among groups with greater and lesser preexisting knowledge of research ethics to determine whether its ability to detect changes in knowledge holds across such groups.

To our knowledge, we are the first to systematically develop a novel instrument to assess knowledge and skills in research ethics based on a set of learning objectives. We hope that other researchers, ethicists, and investigators will also use and test this model in order to achieve a robust, reliable, and valid instrument for measuring baseline and changed knowledge and skills. Our ultimate goal is to contribute a reliable and valid tool for use by a variety of stakeholders interested in promoting proficiency in the ethics of human subjects research. In addition, the methods described here can be applied to other research-related activities that require the development of knowledge assessments, and can also inform academic (non-research) course and test development.

Contributor Information

Holly A. Taylor, Assistant Professor, Department of Health Policy and Management, Johns Hopkins Bloomberg School of Public Health, Core Faculty, Johns Hopkins Berman Institute of Bioethics, 624 N. Broadway, HH 353, Baltimore, MD 21205 USA.

Nancy E. Kass, Phoebe R. Berman Professor of Bioethics and Public Health, Johns Hopkins Bloomberg School of Public Health, Deputy Director for Public Health, Johns Hopkins Berman Institute of Bioethics, Baltimore, MD USA.

Joseph Ali, Research Scientist, Johns Hopkins Berman Institute of Bioethics, Baltimore, MD USA.

Stephen Sisson, Associate Professor of Medicine, Department of Medicine, Johns Hopkins School of Medicine, Director, Johns Hopkins Medicine Internet Learning Center, Baltimore, MD USA.

Amanda Bertram, Senior Research Program Coordinator, Department of Medicine, Johns Hopkins School of Medicine, Baltimore, MD USA.

Anant Bhan, Researcher in Bioethics and Global Health, Pune, India.

References

1. National Institutes of Health. Required Education in the Protection of Human Research Participants. 2000. http://grants.nih.gov/grants/guide/notice-files/NOT-OD-00-039.html. Accessed June 2, 2011.

2. Self DJ, Schrader DE, Baldwin DC Jr, et al. The moral development of medical students: a pilot study of the possible influence of medical education. Med Educ. 1993;27:26–34. doi: 10.1111/j.1365-2923.1993.tb00225.x.

3. McAlpine H, Kristjanson L, Poroch D. Development and testing of the ethical reasoning tool (ERT): an instrument to measure the ethical reasoning of nurses. J Adv Nurs. 1997;25:1151–61. doi: 10.1046/j.1365-2648.1997.19970251151.x.

4. Self DJ, Olivarez M, Baldwin DC Jr. Clarifying the relationship of medical education and moral development. Acad Med. 1998;73:517–20. doi: 10.1097/00001888-199805000-00018.

5. Patenaude J, Niyonsenga T, Fafard D, et al. Changes in the components of moral reasoning during students’ medical education: a pilot study. Med Educ. 2003;37:822–9. doi: 10.1046/j.1365-2923.2003.01593.x.

6. Packer S. The ethical education of ophthalmology residents: an experiment. Trans Am Ophthalmol Soc. 2005;103:240–269.

7. Shuman LJ, Sindelar MF, Besterfield-Sacre M, et al. Can our students recognize and resolve ethical dilemmas? Proceedings of the 2004 American Society for Engineering Education Annual Conference & Exposition; abstract 1526. Accessed June 2, 2011.

8. Brigham & Women’s Hospital, Case Western Reserve University, Cornell University, Emory University, Johns Hopkins University (2), Penn State University, Scripps Research Institute, Stanford University, University of California Los Angeles, University of California San Diego, University of California San Francisco, University of Chicago, University of North Carolina-Chapel Hill, University of Michigan, University of Minnesota, University of Pittsburgh, University of Washington, University of Wisconsin - Madison, Washington University, Vanderbilt University and Yale University.

9. Braunschweiger P. Collaborative Institutional Training Initiative (CITI). Journal of Clinical Research Best Practices. 2010;6:1–6.

10. National Institutes of Health. Protecting Human Research Subjects. http://phrp.nihtraining.com/users/login.php. Accessed June 2, 2010.

11. Kern DE, Thomas PA, Howard DM, Bass EB. Curriculum Development for Medical Education: A Six-Step Approach. Baltimore, MD: The Johns Hopkins University Press; 1998.

12. Dillman DA. Mail and Internet Surveys: The Tailored Design Method. 2nd ed. New York, NY: John Wiley & Sons, Inc; 2000.

13. Case SM, Swanson DB. Constructing Written Test Questions for the Basic and Clinical Sciences. 3rd ed. Philadelphia, PA: National Board of Medical Examiners; 2002.

14. Finley R. Survey Monkey. Portland, OR: SurveyMonkey.com. Accessed June 2, 2011.

15. National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research. The Belmont Report: Ethical Principles and Guidelines for the Protection of Human Subjects of Research. 1979. http://www.hhs.gov/ohrp/humansubjects/guidance/belmont.html. Accessed June 2, 2011.

16. Emanuel EJ, Wendler D, Grady C. What makes clinical research ethical? JAMA. 2000;283:2701–11. doi: 10.1001/jama.283.20.2701.

17. Council for International Organizations of Medical Sciences. International Ethical Guidelines for Biomedical Research Involving Human Subjects. Geneva, Switzerland: CIOMS; 2002. http://www.cioms.ch/publications/layout_guide2002.pdf. Accessed June 2, 2011.

18. Emanuel EJ, Wendler D, Killen J, et al. What makes clinical research in developing countries ethical? The benchmarks of ethical research. J Infect Dis. 2004;189:930–7. doi: 10.1086/381709.

19. [specifics removed for review]

20. Ferguson GA, Takane Y. Statistical Analysis in Psychology and Education. 6th ed. New York, NY: McGraw-Hill Book Company; 1989.

21. Cook DA, Beckman TJ. Current concepts in validity and reliability for psychometric instruments: theory and application. Am J Med. 2006;119:e7–16. doi: 10.1016/j.amjmed.2005.10.036.

22. Woodward CA. Questionnaire construction and question writing for research in medical education. Med Educ. 1988;22:345–63. doi: 10.1111/j.1365-2923.1988.tb00764.x.