Abstract

Background

Despite advances in surgical training, microsurgery is still based on an apprenticeship model. To evaluate skill acquisition and apply targeted feedback to improve our training model, we applied the Structured Assessment of Microsurgery Skills (SAMS) to the training of microsurgical fellows. We hypothesized that the fellows would show measurable improvement in performance over the study period and that this improvement would be rated consistently across evaluators.

Methods

Seven fellows were evaluated during 118 microsurgical cases by 16 evaluators over three 1-month evaluation periods in 1 year (2010-2011). Evaluators used SAMS, which consists of 12 items in four areas: dexterity, visuo-spatial ability, operative flow, and judgment. To validate the SAMS data, five blinded plastic surgeons used the SAMS questionnaire to evaluate microsurgical anastomoses that the fellows performed in rats in a laboratory at the beginning and end of the study period. Primary outcomes were the change in scores between evaluation periods and inter-evaluator reliability.

Results

Between the first two evaluation periods, all skill areas and overall performance significantly improved. Between the second and third periods, most skill areas improved, but only a few did so significantly. Operative errors decreased significantly between the first and subsequent periods (81 vs. 36; p<0.05). In the laboratory study, all skills were significantly (p<0.05) or marginally (0.05≤p<0.10) improved between time points. The overall inter-evaluator reliability of SAMS was acceptable (α=0.67).

Conclusions

SAMS is a valid instrument for assessing microsurgical skill, providing individualized feedback with acceptable inter-evaluator reliability. Between the first two evaluation intervals, the microsurgical fellows’ skills increased significantly, but they plateaued thereafter. The use of SAMS is anticipated to enhance microsurgical training.

INTRODUCTION

Concepts of technical training differ depending on the discipline. Many trainable tasks consist of a series of definable steps for which a systematic curriculum can be designed, developed, and executed and the results measured; flight schools, for example, follow this training model. Other disciplines that tend to be more artisanal have flourished under an apprenticeship model in which the trainee is expected to absorb much of what is needed through observation and then apply a diverse skill set under a variety of circumstances; most of the arts follow this model and so, traditionally, has surgical training.

Concepts of instructional design are focused on creating “instructional experiences which make the acquisition of knowledge and skill more efficient, effective, and appealing.”1 This process consists of determining the current skill level and needs of the learner, defining the goal of instruction, and designing an “intervention” to facilitate the transition. 1-2 Ideally, the surgical training process is informed by pedagogical (teaching) and andragogical (adult learning) theories and can include self-study, surgical simulation, and instructor-led scenarios. The outcome of instruction may be directly observed and scientifically measured, or it may be completely unexamined and assumed, as has historically been the case in surgical training. 3-4

Two forces have created a demand for a more systematic, measurable approach to surgical training: work hour restrictions and the desire for health outcomes assessment. Under restricted training time, concerns have arisen not only about compliance but also about the adequacy of surgical training compressed into less time. 5-8 To provide appropriate training within the Accreditation Council for Graduate Medical Education (ACGME) guidelines, a paradigm shift has emerged in which the training of surgical residents is no longer limited to the hospital and the operating theater. The other force behind the shift toward more systematic training is the desire for measurable quality outcomes in health care. The Institute of Medicine has made this a priority, and, for good or ill, the Affordable Care Act will use outcome measures to drive reimbursement and resource allocation.

The need for systematic evaluation of our surgical training programs cannot be overstated. In a world increasingly dominated by metrics, developing a reproducible, valid, low-cost, and easy-to-administer evaluation instrument is essential for self-improvement. Proactively pursuing this goal, besides being worthwhile in itself, is a critical defense against having such metrics imposed on us by external parties, who will likely have a dimmer understanding of the principles and practice of our profession. One instrument for surgical evaluation is the Structured Assessment of Microsurgery Skills (SAMS), which was designed to assess technical skills during microsurgery and was derived from other assessment tools such as the Imperial College Surgical Assessment Device (ICSAD) and the Objective Structured Assessment of Technical Skills (OSATS). 9-12 SAMS is an appropriate instrument for microsurgical assessment because it covers the core components of microsurgical skill. To ensure that the instrument was valid in the context of our clinical training program, we chose to validate it in a controlled laboratory setting.

The purpose of this study was to use SAMS to monitor the maturation process of microsurgical skills in a cohort of microsurgical fellows over a 1-year period, with the ultimate goal of providing targeted feedback to enhance our training model. To our knowledge, this is the first study that evaluates microsurgical skill in a cohort of microsurgical trainees in a clinical setting, while simultaneously validating the assessment instrument in a laboratory model.

MATERIALS AND METHODS

Microsurgery Fellowship Training Environment

Seven fellows were enrolled in a 1-year (July 2010-June 2011) microsurgery fellowship at The University of Texas MD Anderson Cancer Center after completing the requisite plastic surgery residency training. The training program has been in existence for 21 years, and more than 120 fellows have completed it. The program currently has 19 full-time microsurgery faculty members and emphasizes graduated responsibility in both patient care and operative experience. Fellows participate in pre- and postoperative clinic visits, operative cases, didactic education, and clinical research. Faculty are encouraged to give performance feedback during each case, and the program director (CEB) provides formal, structured written and face-to-face feedback twice yearly. Fellows are expected to progress during the training year and to perform microsurgical reconstructions competently and independently by the end of the year.

Experimental Design

The fellow cohort underwent identical didactic and clinical training during the same time period at the same institution. Their skills were evaluated with SAMS in two settings: a clinical assessment and a laboratory evaluation in which conditions were standardized.

Clinical Assessment

In the first part of this two-part study, 7 fellows were evaluated during 118 microsurgical cases by 16 faculty members in the Department of Plastic Surgery during three 1-month intervals at the beginning (August), middle (February), and end (May) of the training year. During each 1-month evaluation period, the fellows were evaluated after every consecutive microsurgical case. Each fellow participated in an average of 7 (range, 5-10) cases per month. Fellows selected cases weekly according to their preference, and each case had one faculty evaluator. Each participating faculty member had evaluated all the fellows by the end of the study period.

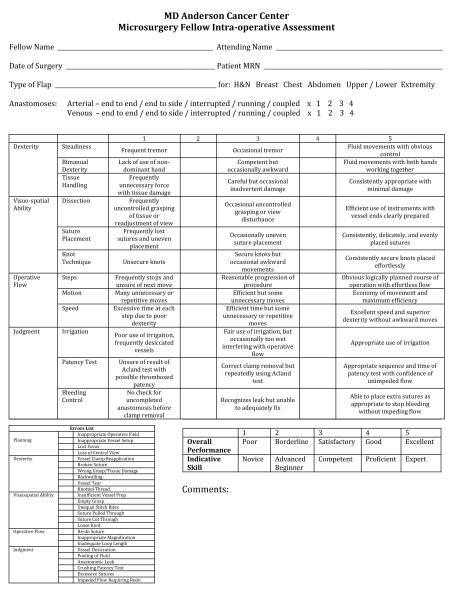

The evaluators used SAMS, which consists of 12 items grouped into four main categories of microsurgical skill: dexterity, visuo-spatial ability, operative flow, and judgment (Fig. 1). Each category is subdivided into three technical components. The overall score is the average of all 12 items and can range from 1 to 5. Specific errors (e.g., unequal stitch bites, backwalling) were recorded from a list of 25 common mistakes made during microsurgical anastomosis (Appendix 1).
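Because the overall score is defined as the unweighted average of the 12 item ratings, it can be computed mechanically from a completed form. The minimal Python sketch below assumes the form is stored as a dictionary of item ratings; the item names follow Table 5, while `SAMS_ITEMS`, `overall_sams_score`, and `category_means` are hypothetical helpers, not part of the published instrument.

```python
from statistics import mean

# Hypothetical encoding of the SAMS form: 4 categories x 3 items, names as in Table 5.
SAMS_ITEMS = {
    "Dexterity": ("Steadiness", "Bimanual Dexterity", "Tissue Handling"),
    "Visuo-spatial Ability": ("Dissection", "Suture Placement", "Knot Technique"),
    "Operative Flow": ("Steps", "Motion", "Speed"),
    "Judgment": ("Irrigation", "Patency Test", "Bleeding Control"),
}
ALL_ITEMS = [item for items in SAMS_ITEMS.values() for item in items]


def _check(ratings: dict[str, int]) -> None:
    """Reject incomplete forms and ratings outside the 1-5 scale."""
    missing = [item for item in ALL_ITEMS if item not in ratings]
    if missing:
        raise ValueError(f"missing items: {missing}")
    for item in ALL_ITEMS:
        if not 1 <= ratings[item] <= 5:
            raise ValueError(f"{item} must be rated 1-5, got {ratings[item]}")


def overall_sams_score(ratings: dict[str, int]) -> float:
    """Overall score = unweighted mean of the 12 item ratings (range 1-5)."""
    _check(ratings)
    return float(mean(ratings[item] for item in ALL_ITEMS))


def category_means(ratings: dict[str, int]) -> dict[str, float]:
    """Mean rating within each of the four skill categories."""
    _check(ratings)
    return {cat: float(mean(ratings[i] for i in items)) for cat, items in SAMS_ITEMS.items()}


# Example form: rated 4 on every item except Speed, rated 5.
form = {item: 4 for item in ALL_ITEMS}
form["Speed"] = 5
print(round(overall_sams_score(form), 3))  # 49 / 12, about 4.083
```

Because every category has exactly three items, the overall score also equals the mean of the four category means. If the Dexterity, Visuo-spatial Ability, Operative Flow, and Judgment rows of Table 1.1 are read as category means (an interpretation, not stated explicitly in the tables), their August 2010 values (3.74, 3.53, 3.53, 3.94) average to 3.685, consistent with the reported overall score of 3.68 after rounding.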

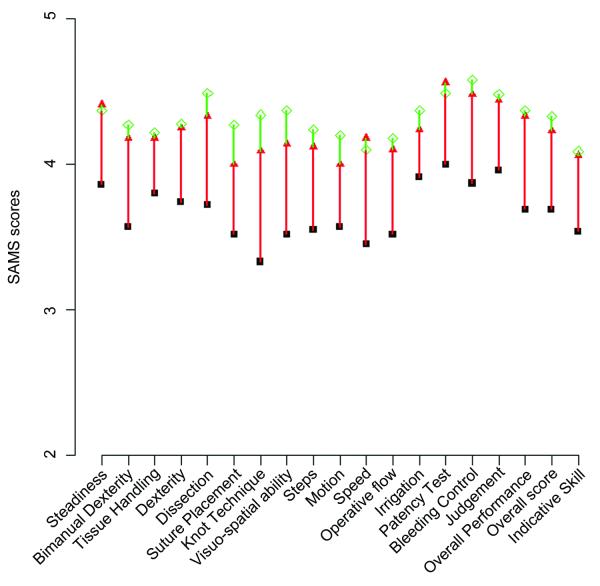

Figure 1.

Graph demonstrating the mean scores and changes in each measured technical component during all three study intervals. The black squares indicate the scores in August 2010; the red triangles show the scores in February 2011, and the green diamonds show the scores in May 2011. The red lines show the differences in scores from August to February, and the green lines show the difference in scores from February to May.

Laboratory Assessment

In part two, to validate SAMS as an evaluative instrument, the 7 fellows performed microsurgical rat femoral artery anastomoses at the beginning and end of the study period (two procedures per fellow). The procedures were videotaped, and five plastic surgeons evaluated each video using the SAMS questionnaire. The videos were de-identified and the audio was removed, so the evaluators were blinded to both the fellows’ identities and the time point.

Statistical Analysis

A paired t-test was used to compare changes in the SAMS score for each technical component for each fellow over the study period. Cronbach’s alpha coefficient was used to determine inter-evaluator reliability in both the clinical and laboratory settings for each fellow at each time point. Alpha values of less than 0.6 were considered unacceptable, values of 0.6 – 0.7 were considered acceptable, values of 0.7 – 0.8 were considered good, and values greater than 0.8 were considered excellent. 13 A P-value less than 0.05 was considered significant. All tests were two-sided. The analyses were performed using SAS 9.2 (SAS Institute Inc., Cary, NC) and R (The R Foundation for Statistical Computing).
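As a rough illustration of the paired comparison, the sketch below computes the paired t statistic, t = mean_diff / (sd_diff / sqrt(n)) with n - 1 degrees of freedom, where mean_diff and sd_diff are the mean and standard deviation of the per-fellow differences. It uses only the Python standard library and stops at the t statistic; a two-sided p-value can then be read from the t distribution (for example, `scipy.stats.ttest_rel` returns both from raw paired scores). The function names are hypothetical, and this is not the authors’ SAS or R code.

```python
import math
from statistics import mean, stdev


def paired_t_from_summary(mean_diff: float, sd_diff: float, n: int) -> tuple[float, int]:
    """Paired t statistic and degrees of freedom from summary statistics of n differences."""
    if n < 2 or sd_diff <= 0:
        raise ValueError("need n >= 2 and a positive SD of the differences")
    return mean_diff / (sd_diff / math.sqrt(n)), n - 1


def paired_t(before: list[float], after: list[float]) -> tuple[float, int]:
    """Paired t statistic for per-fellow scores at two time points."""
    if len(before) != len(after):
        raise ValueError("before and after must have one score per fellow")
    diffs = [a - b for b, a in zip(before, after)]
    if len(diffs) < 2:
        raise ValueError("need at least two fellows")
    return paired_t_from_summary(mean(diffs), stdev(diffs), len(diffs))


# Dexterity row of Table 1.1, assuming SD is the SD of the 7 paired differences.
t, df = paired_t_from_summary(0.53, 0.46, 7)
print(f"t = {t:.2f} on {df} df")  # t = 3.05 on 6 df
```

Under that assumption, the Dexterity row gives t of about 3.05 on 6 degrees of freedom, which corresponds to a two-sided p of roughly 0.02, in line with the reported value; several other rows of Tables 1.1 and 1.2 check out the same way.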

RESULTS

Clinical Assessment

Forty-four cases were evaluated in August 2010, 33 in February 2011, and 41 in May 2011. Table 1.1 lists the mean SAMS scores for each component during the first two study periods, August 2010 and February 2011. All technical component scores, as well as the overall performance and indicative skill scores, increased significantly over time, with the exception of tissue handling (p=0.20), motion (p=0.08), and irrigation (p=0.08). Speed improved the most (0.76 difference in mean scores), and tissue handling improved the least (0.36 difference).

Table 1.1.

Comparison of mean SAMS* scores in August 2010 and February 2011

| Microsurgery Skills | August 2010 Mean | February 2011 Mean | Diff. | SD | p-value |
|---|---|---|---|---|---|
| Dexterity | 3.74 | 4.26 | 0.53 | 0.46 | 0.02 |
| Bimanual Dexterity | 3.53 | 4.20 | 0.67 | 0.48 | 0.01 |
| Tissue Handling | 3.83 | 4.19 | 0.36 | 0.67 | 0.20 |
| Visuo-spatial Ability | 3.53 | 4.12 | 0.59 | 0.14 | <0.01 |
| Dissection | 3.71 | 4.36 | 0.65 | 0.33 | <0.01 |
| Suture Placement | 3.55 | 3.95 | 0.40 | 0.23 | <0.01 |
| Knot Technique | 3.34 | 4.03 | 0.69 | 0.32 | <0.01 |
| Operative Flow | 3.53 | 4.15 | 0.62 | 0.47 | 0.01 |
| Steps | 3.55 | 4.18 | 0.63 | 0.50 | 0.02 |
| Motion | 3.57 | 4.04 | 0.47 | 0.60 | 0.08 |
| Speed | 3.46 | 4.22 | 0.76 | 0.46 | <0.01 |
| Judgment | 3.94 | 4.49 | 0.55 | 0.32 | <0.01 |
| Irrigation | 3.90 | 4.33 | 0.43 | 0.54 | 0.08 |
| Patency Test | 3.98 | 4.59 | 0.60 | 0.38 | 0.01 |
| Bleeding Control | 3.84 | 4.44 | 0.63 | 0.41 | 0.01 |
| Overall Score | 3.68 | 4.25 | 0.57 | 0.29 | <0.01 |
| Overall Performance | 3.67 | 4.36 | 0.70 | 0.33 | <0.01 |
| Indicative Skill | 3.52 | 4.13 | 0.61 | 0.63 | 0.04 |

Table 1.2 compares the mean scores of the components in the second and third study periods, February 2011 and May 2011. Most of the technique component scores increased slightly between these two intervals. Overall visuo-spatial ability significantly improved (diff=0.28, p=0.01), as did knot technique and suture placement (0.34, p=0.03 and 0.37, p=0.05, respectively). The scores of speed and patency, as well as overall performance and indicative skill scores, demonstrated slight, non-statistically significant decreases (0.07, 0.06, 0.004, and 0.02, respectively). A graphic representation of changes in the mean SAMS scores over all three study intervals is shown in Fig. 1.

Table 1.2.

Comparison of mean SAMS* scores in February 2011 and May 2011

| Microsurgery Skills | February 2011 Mean | May 2011 Mean | Diff. | SD | p-value |
|---|---|---|---|---|---|
| Dexterity | 4.26 | 4.31 | 0.04 | 0.34 | 0.74 |
| Bimanual Dexterity | 4.20 | 4.28 | 0.08 | 0.39 | 0.61 |
| Tissue Handling | 4.19 | 4.22 | 0.03 | 0.47 | 0.88 |
| Visuo-spatial Ability | 4.12 | 4.40 | 0.28 | 0.21 | 0.01 |
| Dissection | 4.36 | 4.52 | 0.15 | 0.24 | 0.14 |
| Suture Placement | 3.95 | 4.32 | 0.37 | 0.40 | 0.05 |
| Knot Technique | 4.03 | 4.36 | 0.34 | 0.32 | 0.03 |
| Operative Flow | 4.15 | 4.23 | 0.08 | 0.30 | 0.51 |
| Steps | 4.18 | 4.29 | 0.11 | 0.22 | 0.24 |
| Motion | 4.04 | 4.27 | 0.23 | 0.46 | 0.24 |
| Speed | 4.22 | 4.15 | -0.07 | 0.37 | 0.61 |
| Judgment | 4.49 | 4.50 | 0.01 | 0.22 | 0.91 |
| Irrigation | 4.33 | 4.37 | 0.03 | 0.41 | 0.83 |
| Patency Test | 4.59 | 4.53 | -0.06 | 0.24 | 0.56 |
| Bleeding Control | 4.44 | 4.57 | 0.11 | 0.13 | 0.08 |
| Overall Score | 4.25 | 4.36 | 0.10 | 0.20 | 0.22 |
| Overall Performance | 4.36 | 4.36 | -0.004 | 0.30 | 0.97 |
| Indicative Skill | 4.13 | 4.11 | -0.02 | 0.38 | 0.88 |

*SAMS, Structured Assessment of Microsurgery Skills; Diff., difference between means at each interval; SD, standard deviation.

The number of tabulated errors decreased from 81 total errors in August to 36 total errors in both February and May. The most common error was unequal stitch bites (Table 2).

Table 2.

Occurrence of errors at the three time points

| Category | Error | Aug. 2010 | Feb. 2011 | May 2011 |
|---|---|---|---|---|
| Planning | Inappropriate Operative Field | 1 (2.27%) | 0 | 0 |
| | Inappropriate Vessel Setup | 1 (2.27%) | 0 | 0 |
| | Lost Focus | 5 (11.36%) | 0 | 1 (2.44%) |
| | Loss of Central View | 3 (6.82%) | 0 | 0 |
| Dexterity | Vessel Clamp Reapplication | 0 | 2 (6.06%) | 0 |
| | Broken Suture | 8 (18.18%) | 0 | 4 (9.76%) |
| | Wrong Grasp/Tissue Damage | 5 (11.36%) | 3 (9.09%) | 5 (12.2%) |
| | Backwalling | 3 (6.82%) | 1 (3.03%) | 0 |
| | Vessel Tear | 2 (4.55%) | 1 (3.03%) | 1 (2.44%) |
| | Knotted Thread | 0 | 1 (3.03%) | 0 |
| Visuo-spatial Ability | Insufficient Vessel Prep | 0 | 0 | 1 (2.44%) |
| | Empty Grasp | 0 | 2 (6.06%) | 1 (2.44%) |
| | Unequal Stitch Bites | 18 (40.91%) | 9 (27.27%) | 6 (14.63%) |
| | Suture Pulled Through | 4 (9.09%) | 1 (3.03%) | 2 (4.88%) |
| | Suture Cut Through | 0 | 0 | 3 (7.32%) |
| | Loose Knot | 11 (25.00%) | 3 (9.09%) | 2 (4.88%) |
| Operative Flow | Re-do Suture | 4 (9.09%) | 0 | 2 (4.88%) |
| | Inappropriate Magnification | 1 (2.27%) | 0 | 0 |
| | Inadequate Loop Length | 4 (9.09%) | 1 (3.03%) | 0 |
| Judgment | Vessel Desiccation | 4 (9.09%) | 2 (6.06%) | 2 (4.88%) |
| | Pooling of Fluid | 7 (15.91%) | 3 (9.09%) | 1 (2.44%) |
| | Anastomotic Leak | 2 (4.55%) | 7 (21.21%) | 4 (9.76%) |
| | Crushing Patency Test | 0 | 0 | 0 |
| | Excessive Sutures | 0 | 0 | 0 |
| | Impeded Flow Requiring Redo | 2 (4.55%) | 0 | 1 (2.44%) |
| Total | | 81 | 36 | 36 |
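The percentages in Table 2 appear to be expressed per evaluated case rather than as a share of all recorded errors: dividing each count by the number of cases evaluated in that period (44, 33, and 41) reproduces the tabulated values, which is also why the percentages in a column need not sum to 100%. The short sketch below checks this reading for the unequal stitch bites row; `CASES` and `per_case_rate` are illustrative names.

```python
# Number of clinical cases evaluated in each period (from the Results text).
CASES = {"Aug. 2010": 44, "Feb. 2011": 33, "May 2011": 41}


def per_case_rate(count: int, cases: int) -> float:
    """Percentage of evaluated cases in which a given error was recorded."""
    if cases <= 0:
        raise ValueError("cases must be positive")
    return round(100 * count / cases, 2)


# Unequal stitch bites, the most common error.
unequal_bites = {"Aug. 2010": 18, "Feb. 2011": 9, "May 2011": 6}
for period, count in unequal_bites.items():
    print(period, f"{per_case_rate(count, CASES[period])}%")
```

This yields 40.91%, 27.27%, and 14.63%, matching the table; the same calculation reproduces the other rows (for example, 7 anastomotic leaks in 33 cases gives 21.21%).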

Laboratory Assessment

Tables 3 and 4 show the pre- and post-training scores for overall performance and indicative skill. These and all other measurable SAMS parameters improved from the first to the second laboratory session, either significantly (p<0.05) or marginally (0.05≤p<0.10). The inter-evaluator reliability for each skill component is shown in Table 5. Reliability was good (α>0.7) for steadiness, tissue handling, dissection, speed, patency test, and overall performance; acceptable (0.6<α<0.7) for suture placement, motion, irrigation, bleeding control, and indicative skill; and unacceptable (α<0.6) for bimanual dexterity, knot technique, and steps. In addition to calculating inter-evaluator reliability for each microsurgery skill separately, we pooled all the skills and found that overall reliability for the laboratory component was acceptable (α=0.67).

Table 3.

Overall performance in laboratory evaluation

| Fellow | Pre* Mean | Pre* SD | Post* Mean | Post* SD | Diff. | p-value |
|---|---|---|---|---|---|---|
| 1 | 2.0 | 0.8 | 4.0 | 0.8 | 2.0 | |
| 2 | 3.3 | 1.0 | 4.0 | 0.8 | 0.8 | |
| 3 | 2.5 | 0.6 | 3.5 | 1.3 | 1.0 | |
| 4 | 3.0 | 0.8 | 3.0 | 0.8 | 0.0 | |
| 5 | 2.3 | 0.5 | 3.3 | 0.5 | 1.0 | |
| 6 | 2.5 | 1.0 | 4.0 | 0.0 | 1.5 | |
| 7 | 3.8 | 0.5 | 4.0 | 0.8 | 0.3 | |
| Overall | 2.8 | 0.6 | 3.7 | 0.4 | 0.9 | 0.01 |

Note: Pre indicates laboratory anastomoses performed at the beginning of the study period and Post indicates those performed at the end.

Table 4.

Indicative skill in laboratory evaluation

| Fellow | Pre Mean | Pre SD | Post Mean | Post SD | Diff. | p-value |
|---|---|---|---|---|---|---|
| 1 | 2.0 | 0.8 | 3.8 | 0.5 | 1.8 | |
| 2 | 3.5 | 0.6 | 3.8 | 1.0 | 0.3 | |
| 3 | 2.8 | 0.5 | 3.3 | 1.0 | 0.5 | |
| 4 | 2.8 | 1.0 | 3.0 | 0.8 | 0.3 | |
| 5 | 2.0 | 0.0 | 3.5 | 0.6 | 1.5 | |
| 6 | 2.5 | 1.0 | 3.5 | 0.6 | 1.0 | |
| 7 | 3.8 | 0.5 | 3.8 | 0.5 | 0.0 | |
| Overall | 2.8 | 0.7 | 3.5 | 0.3 | 0.7 | 0.03 |

Table 5.

Inter-evaluator reliability in laboratory evaluation

| Microsurgery Skills | Cronbach’s alpha* |
|---|---|
| Dexterity | |
| Steadiness | 0.73 |
| Bimanual Dexterity | 0.30 |
| Tissue Handling | 0.74 |
| Visuo-spatial Ability | |
| Dissection | 0.71 |
| Suture Placement | 0.64 |
| Knot Technique | 0.37 |
| Operative Flow | |
| Steps | 0.49 |
| Motion | 0.66 |
| Speed | 0.77 |
| Judgment | |
| Irrigation | 0.66 |
| Patency Test | 0.79 |
| Bleeding Control | 0.69 |
| Overall Performance | 0.72 |
| Indicative Skill | 0.68 |

*Cronbach’s alpha scale: <0.6 = unacceptable; 0.6-0.7 = acceptable; 0.7-0.8 = good; >0.8 = excellent.
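For readers who want to reproduce reliability figures of this kind, Cronbach’s alpha for k evaluators is α = k/(k − 1) × (1 − Σ s_j² / s_T²), where s_j² is the variance of evaluator j’s ratings across rated performances and s_T² is the variance of the summed ratings. The sketch below assumes a complete matrix with one row per rated performance (for example, one videotaped anastomosis) and one column per evaluator, and maps the result onto the scale above. The data layout, the handling of the band boundaries at 0.6, 0.7, and 0.8, and the function names are assumptions, not a description of the authors’ SAS or R analysis.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha with evaluators treated as the 'items'.

    scores[i][j] is the rating evaluator j gave to performance i.
    """
    if len(scores) < 2:
        raise ValueError("need at least two rated performances")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a complete matrix with at least two evaluators")
    evaluator_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("summed ratings do not vary; alpha is undefined")
    return k / (k - 1) * (1 - sum(evaluator_vars) / total_var)


def classify_alpha(alpha: float) -> str:
    """Label alpha on the study's scale (lower bound of each band assumed inclusive)."""
    if alpha < 0.6:
        return "unacceptable"
    if alpha < 0.7:
        return "acceptable"
    if alpha < 0.8:
        return "good"
    return "excellent"


# Toy data (not from the study): 3 performances, each rated by 2 evaluators.
toy = [[1, 2], [2, 1], [3, 3]]
a = cronbach_alpha(toy)
print(f"alpha = {a:.2f} ({classify_alpha(a)})")  # alpha = 0.67 (acceptable)
```

Sample and population variances give the same alpha as long as they are used consistently, because only their ratio enters the formula.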

The material cost for the laboratory component was $127.91 per fellow per anastomosis: $55.71 for the rat, $11.20 for 10-0 nylon suture ($5.60 per pack, 2 packs per anastomosis), and $61.00 for the technical fee for anesthetizing the rat. Videotaping and evaluation incurred no cost.
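The per-anastomosis cost is a simple sum, and the same arithmetic scales to a whole cohort. The sketch below works in integer cents to avoid floating-point rounding; the cohort total for 7 fellows performing 2 anastomoses each is a derived figure not reported in the study, and the constant and function names are illustrative.

```python
# Reported material costs for one laboratory anastomosis, in cents.
RAT = 5571             # $55.71 per rat
SUTURE_PACK = 560      # $5.60 per pack of 10-0 nylon suture
SUTURE_PACKS = 2       # packs used per anastomosis
ANESTHESIA_FEE = 6100  # $61.00 technical fee for anesthetizing the rat
# Videotaping and evaluation were reported as having no cost.


def cost_per_anastomosis() -> int:
    """Total material cost of one anastomosis, in cents."""
    return RAT + SUTURE_PACK * SUTURE_PACKS + ANESTHESIA_FEE


def cohort_cost(fellows: int, anastomoses_per_fellow: int = 2) -> int:
    """Total cost in cents for a cohort (two sessions per fellow by default)."""
    return fellows * anastomoses_per_fellow * cost_per_anastomosis()


def dollars(cents: int) -> str:
    """Format a non-negative amount in cents as a dollar string."""
    return f"${cents // 100:,}.{cents % 100:02d}"


print(dollars(cost_per_anastomosis()))  # $127.91
print(dollars(cohort_cost(7)))          # $1,790.74
```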

DISCUSSION

In this study, a cohort of microsurgical fellows trained under the same model demonstrated statistically significant improvement during the first 6 months of the training year in all main SAMS skill categories and in overall performance. During this time, the number of technical errors decreased by more than half. These improvements and error reductions tapered off, or plateaued, in the second half of the training year, although trends toward improvement were still seen. We further showed that an assessment instrument can be validated in the laboratory: evaluators rated videotaped microsurgical performances with relative consistency. Finally, we found that both the clinical and laboratory components can be administered easily, at low cost, and with minimal inconvenience to a busy microsurgical practice and training program.

Rather than relying on an apprenticeship model of learning, microsurgical training can be broken down into components that can be evaluated, measured, and analyzed. In the clinical portion of this evaluation, fellows demonstrated significant improvement in all measured areas over the first half of the study period, indicating that early in the fellowship, important technical experience and skills specific to performing a microvascular anastomosis were being imparted, whether actively or passively. In the second half of the study period, improvement in the technical aspects of performing a microsurgical anastomosis was considerably more modest. This perhaps suggests that skill acquisition related to the microvascular anastomosis itself had plateaued and that improvement was occurring in other surgical parameters intentionally not measured by SAMS, such as flap design, surgical planning, overall operative sequence, and decision-making. This explanation would be consistent with the educational model of our training program, which concentrates on the mechanics of the anastomosis early in the year and on the subtleties of surgical planning and flap design, elevation, and inset later in the year.

The laboratory portion of the study confirmed that SAMS is valid for measuring microsurgical ability because (1) evaluators consistently rated fellows as more skilled at the end of the year, even though they were blinded to when the videos were recorded, and (2) evaluators were in acceptable agreement about the performance characteristics of each fellow in most skill areas and in each evaluation period. Some features of the score distribution require explanation. Most fellows’ scores fell in the 3-to-4 range, and fellows who began with a lower baseline score (e.g., fellows 1, 5, and 6) tended to improve more than those who began with a higher baseline score (e.g., fellows 2 and 7). We hypothesize that fellows who enter the program at a lower skill level can improve dramatically because obvious deficiencies and overtly poor technique can be corrected quickly with simple interventions (penetrate the vessel wall at a right angle, withdraw the needle along its curve, set the knot down square, etc.). Conversely, fellows who begin at a very high level, or who have improved to a high level during the year, require less gross instruction, and their improvements will, by nature, be smaller and more subtle. This may reflect the asymptotic nature of skill acquisition.

Another observation is that there appears to be a ceiling effect among the study participants at a score of about 4. We propose the following explanation. The 1-to-5 scoring scale represents the entire spectrum of potential performance, from complete novice (1) to world-class expert (5). Not surprisingly, microsurgery fellows who start the program with some microsurgical experience score above complete novice but below world-class expert. It is difficult for a fellow to achieve a score of 5, just as it is difficult to score 1; both are outliers. Although skilled fellows can consistently achieve a good, proficient performance (score of 4) by the end of the fellowship, a perfect, expert performance (score of 5) is very difficult to achieve and may come only after years in practice, if ever. For this reason, scores of 5 were rare, perhaps again reflecting the reality of skill distribution and the asymptotic nature of skill acquisition.

The foundation of the field of instructional design was laid in World War II, when the U.S. military had to rapidly train large numbers of enlisted men to perform complex technical tasks. Drawing on B.F. Skinner’s research and theories on operant conditioning, training programs focused on observable behaviors.14 Tasks were broken down into components, and mastery was assumed to be possible for every learner, given enough repetition and feedback. After the war, this training model was replicated in business, in industrial training, and in the classroom.15 This systematic approach, although still common in the military, has not been applied in any meaningful way to surgical training, even though the two environments are analogous: both consist of definable tasks with severe consequences if performed unsuccessfully.

In contrast to the military’s systematic approach, there are few reports of novel microsurgical training approaches beyond the use of artificial tubes to simulate small vessels, in vitro animal models, and chicken parts, and even fewer studies validating the utility of these models. 16-18 Some studies have explored the use of simulators and surgical robots in microsurgery, with occasionally promising results, in an attempt to validate trainee competency with other instruments and parameters. 19,20 Although a variety of models exist to assess surgical technique and operative skills, a validated, reliable, and reproducible assessment of the microsurgical anastomosis per se has not been reported.

The strengths of this study include a large number of trainees, evaluators, and cases and a controlled validation process for our assessment instrument. Having 7 fellows and 16 faculty members participate in the evaluation process allowed us to generate a substantial body of data within a single training period. Because a 1-year fellowship is short, generating these data quickly was critical to the success of this study. The same trainees and evaluators participated in both the laboratory and clinical assessments, which allowed us to assess how consistently the instrument was applied and to validate the clinical component of the study.

This study has several inherent limitations. First, not all evaluators rated every fellow in each interval, so there may have been some psychometric divergence among evaluators within an evaluation period, even if the instrument was generally applied consistently. Similarly, evaluators and microsurgical cases were not evenly distributed: all evaluators reviewed all participating fellows, but not in each of the three study periods, and because of normal variation in individual faculty members’ clinical practices, busier microsurgeons completed more evaluations. The laboratory evaluators included both busier and less busy microsurgeons, as well as senior and junior faculty, to ensure inter-rater reliability across a diverse set of faculty characteristics. The consistency of their ratings gives us confidence that the larger number of evaluations completed by clinically busier faculty did not alter how the instrument was used or skew the results.

Second, every case and the circumstances of every anastomosis are different, which introduces some unavoidable variance into the process being evaluated. In addition, some evaluators assessed fewer cases, which leads to missing values and precludes certain types of calculations. Finally, expectations about how a trainee should progress over the course of the year might change the way the trainee is graded; for instance, a trainee may be graded more harshly for a similar performance at the end of the year than at the beginning because improvement is expected. Despite these challenges, the authors thought it was important to evaluate the trainees in actual clinical scenarios.

Tangible and immediate benefits of the proposed training program include the ability to provide specific, periodic feedback to our fellows, rather than anecdotal reports or arbitrary and retrospectively biased ordinal grades. The latter type of feedback is generally unhelpful, and occasionally hurtful, particularly when the trainee is offered no method of remediation. The next step in our maturation as a training program will be to design interventions that target the deficiencies exposed by the SAMS assessment and then to evaluate how effectively those interventions generate improvement. Although we believe that this study represents a considerable step forward in validating a microsurgical assessment instrument and providing specific feedback, we clearly have a long way to go before we reach the theoretical and practical goals of standardizing curricula, assessing learning needs, creating benchmarks, defining measurable outcomes, and creating targeted feedback and interventions.

This study is the first, modest step towards achieving those goals. Work hour restrictions and the increasing use of metrics to assess quality will bring the issue of trainee evaluation to our doorstep whether we like it or not. We are fortunate in microsurgery in that we have a set of clearly defined tasks that are amenable to this type of analysis, and it behooves microsurgery training programs to engage in this process in an effort to set our own standards, so that others do not set them for us.

CONCLUSION

SAMS is a valid instrument for assessing microsurgical skill, with acceptable inter-evaluator reliability. Over the course of a year’s training, microsurgical fellows’ skills increased significantly in the first half of the year but not in the second. Suture placement, knot tying, and dissection continued to show modest improvement throughout the year. The shape of this learning curve is consistent with a microsurgical curriculum that emphasizes anastomotic technique early in training and progresses to the subtleties of flap design and surgical planning later. We have moved one step closer to the goal of establishing training objectives, evaluating skill level, identifying deficiencies, and targeting feedback and interventions to enhance microsurgical training.

Acknowledgments

Funding Sources: This research is supported in part by the National Institutes of Health through MD Anderson’s Cancer Center Support Grant CA016672.

appendix 1.


Footnotes

Disclosure: The authors have no disclosures or conflicts of interest relevant to the content of this manuscript.

Products Mentioned: SAS 9.2 (SAS Institute Inc., Cary, NC) and R (The R Foundation for Statistical Computing)

This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

REFERENCES

1. Merrill MD, Drake L, Lacy MJ, Pratt J, ID2 Research Group. Reclaiming instructional design. Educational Technology. 1996;36(5):5–7.
2. Mayer RE. Cognition and instruction: their historic meeting within educational psychology. Journal of Educational Psychology. 1992;84(4):405–412.
3. Moalem J, Schwartz SI. Three-phase model for surgical training: a proposal for improved resident training, assessment, and satisfaction. J Surg Educ. 2012;69(1):70–76. doi: 10.1016/j.jsurg.2011.07.003.
4. Bhatti NI, Cummings CW. Competency in surgical residency training: defining and raising the bar. Acad Med. 2007;82(6):569–573. doi: 10.1097/ACM.0b013e3180555bfb.
5. Hope WW, Griner D, Van Vliet D, Menon RP, Kotwall CA, Clancy TV. Resident case coverage in the era of the 80-hour workweek. J Surg Educ. 2011;68(3):209–212. doi: 10.1016/j.jsurg.2011.01.005.
6. Tabrizian P, Rajhbeharrysingh U, Khaitov S, Divino CM. Persistent noncompliance with the work-hour regulation. Arch Surg. 2011;146(2):175–178. doi: 10.1001/archsurg.2010.337.
7. Raval MV, Wang X, Cohen ME, et al. The influence of resident involvement on surgical outcomes. J Am Coll Surg. 2011;212(5):889–898. doi: 10.1016/j.jamcollsurg.2010.12.029.
8. Freiburg C, James T, Ashikaga T, Moalem J, Cherr G. Strategies to accommodate resident work-hour restrictions: impact on surgical education. J Surg Educ. 2011;68(5):387–392. doi: 10.1016/j.jsurg.2011.03.011.
9. Balasundaram I, Aggarwal R, Darzi LA. Development of a training curriculum for microsurgery. Br J Oral Maxillofac Surg. 2010;48(8):598–606. doi: 10.1016/j.bjoms.2009.11.010.
10. Temple CL, Ross DC. A new, validated instrument to evaluate competency in microsurgery: the University of Western Ontario Microsurgical Skills Acquisition/Assessment instrument. Plast Reconstr Surg. 2011;127(1):215–222. doi: 10.1097/PRS.0b013e3181f95adb.
11. Chan W, Niranjan N, Ramakrishnan V. Structured assessment of microsurgery skills in the clinical setting. J Plast Reconstr Aesthet Surg. 2010;63(8):1329–1334. doi: 10.1016/j.bjps.2009.06.024.
12. Chan WY, Matteucci P, Southern SJ. Validation of microsurgical models in microsurgery training and competence: a review. Microsurgery. 2007;27(5):494–499. doi: 10.1002/micr.20393.
13. Cronbach LJ. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16(3):297–334; Peterson GB. A day of great illumination: B. F. Skinner’s discovery of shaping. J Exp Anal Behav. 2004;82(3):317–328. doi: 10.1901/jeab.2004.82-317.
14. Skinner BF. The operant side of behavior therapy. J Behav Ther Exp Psychiatry. 1988;19(3):171–179. doi: 10.1016/0005-7916(88)90038-9.
15. Parry-Cruwys DE, Neal CM, Ahearn WH, et al. Resistance to disruption in a classroom setting. J Appl Behav Anal. 2011;44(2):363–367. doi: 10.1901/jaba.2011.44-363.
16. Jeong HS, Moon MS, Kim HS, Lee HK, Yi SY. Microsurgical training with fresh chicken legs and their histological characteristics. Ann Plast Surg. 2011. doi: 10.1097/SAP.0b013e31822f9931.
17. Matsumura N, Horie Y, Shibata T, Kubo M, Hayashi N, Endo S. Basic training model for supermicrosurgery: a novel practice card model. J Reconstr Microsurg. 2011;27(6):377–382. doi: 10.1055/s-0031-1281518.
18. Phoon AF, Gumley GJ, Rtshiladze MA. Microsurgical training using a pulsatile membrane pump and chicken thigh: a new, realistic, practical, nonliving educational model. Plast Reconstr Surg. 2010;126(5):278e–279e. doi: 10.1097/PRS.0b013e3181ef82e2.
19. Karamanoukian RL, Bui T, McConnell MP, Evans GR, Karamanoukian HL. Transfer of training in robotic-assisted microvascular surgery. Ann Plast Surg. 2006;57(6):662–665. doi: 10.1097/01.sap.0000229245.36218.25.
20. Lee JY, Mattar T, Parisi TJ, Carlsen BT, Bishop AT, Shin AY. Learning curve of robotic-assisted microvascular anastomosis in the rat. J Reconstr Microsurg. 2011. doi: 10.1055/s-0031-1289166.