Abstract

Sensorimotor regions of the brain have been implicated in simulation processes such as action understanding and empathy, but their functional role in these processes remains unspecified. We used functional magnetic resonance imaging (fMRI) to demonstrate that postcentral sensorimotor cortex integrates action and object information to derive the sensory outcomes of observed hand–object interactions. When subjects viewed others' hands grasping or withdrawing from objects that were either painful or nonpainful, distinct sensorimotor subregions emerged as showing preferential responses to different aspects of the stimuli: object information (noxious vs. innocuous), action information (grasps vs. withdrawals), and painful action outcomes (painful grasps vs. all other conditions). Activation in the latter region correlated with subjects' ratings of how painful each object would be to touch and their previous experience with the object. Viewing others' painful grasps also biased behavioral responses to actual tactile stimulation, a novel effect not seen for auditory control stimuli. Somatosensory cortices, including primary somatosensory areas 1/3b and 2 and parietal area PF, may therefore subserve somatomotor simulation processes by integrating action and object information to anticipate the sensory consequences of observed hand–object interactions. Hum Brain Mapp, 2013. © 2012 Wiley Periodicals, Inc.

Keywords: action perception, pain observation, empathy, somatosensory, fMRI, tactile discrimination

INTRODUCTION

For the social brain, others' behavior supplies a rich source of information about objects, contexts, and even mental and emotional states. Our ability to readily recognize and process such information may rely on brain mechanisms that relate others' motor, sensory, and emotional experiences to our own. Indeed, converging evidence from studies using behavioral measures, transcranial magnetic stimulation, lesion mapping, and functional imaging shows that the observation of others' actions recruits regions that are crucially involved in the control of one's own actions, even at the level of single neurons [e.g., “mirror neurons,” di Pellegrino et al.,1992; see Keysers and Gazzola,2009; Rizzolatti and Sinigaglia,2010 for recent reviews]. Similarly, seeing a painful incident happen to someone else engages regions involved in representing the affective‐motivational [Hutchison et al.,1999; Jackson et al.,2005; Lamm et al.,2009; Morrison et al.,2004; Singer et al.,2004] and sensory [Avenanti et al.,2005; Betti et al.,2009; Bufalari et al.,2007; Cheng et al.,2008] aspects of our own pain. Observing others being touched recruits somatosensory areas [Blakemore et al.,2005; Ebisch et al.,2008; Keysers et al.,2004; Schaefer et al.,2009; see also Morrison et al.,2011a,b]. Indeed, there is mounting evidence that somatosensory areas are important in social perception [Keysers et al.,2010; Meyer et al.,2011].

We routinely witness others handling objects in daily life and can predict the sensations associated with these actions. For example, when a friend grasps the sharp end of a knife, we do not need to see her facial expression to know that the action is probably painful. What is the underlying sensory representation of the observed action? To derive the painful consequences that result from others' behavior, the brain needs to integrate action‐related information (for example, reaching with the intention to grasp a knife) with pain‐related information (for example, the recognition that the knife's blade is dangerously sharp). Observing hand–object interactions may, therefore, involve not only action representation but also an “expectation” of how the object's properties will affect the sensory surface of the acting person's hand. This “sensory expectation” (SE) proposal, therefore, extends two ideas from the action planning domain to the domain of action observation: first, that somatosensory and motor systems are necessarily closely functionally coupled in the visual guidance of action [Dijkerman and de Haan,2007]; second, that to this end, higher level somatosensory areas integrate multimodal sensory and motor information in a predictive manner [Eickhoff et al.,2006a,b; Gazzola and Keysers,2009]. Accumulating evidence points to postcentral somatosensory regions as likely candidates for encoding observed pain and action information.

A recent model specifies the involvement of secondary somatosensory cortex (SII) and primary somatosensory (SI) areas BA1 and BA2 (Brodmann Areas 1 and 2), alongside adjacent somatomotor regions PF and PFG, across numerous social observation experiments involving either touch, pain, or action [Keysers et al.,2010]. Could the functional contribution of these postcentral somatosensory regions lie in pain–action integration during observation of others?

To address this question, we devised a novel paradigm combining action observation with pain observation. Subjects viewed others' hands grasping or withdrawing from objects that were either painful or nonpainful, and judged in a delayed response whether action and object were appropriate to one another (i.e., grasping an innocuous object and withdrawing from a noxious object) or inappropriate (i.e., grasping a noxious object and withdrawing from an innocuous object).

This action–pain observation paradigm allowed us to distinguish between three ways—not mutually exclusive—in which relevant somatosensory brain regions may support the action understanding task. First, they may simply be involved in coding sensory‐tactile qualities of the objects. If this is the case, some regions should show a preference for actions involving noxious objects, irrespective of whether they are grasped (the main effect of noxious vs. innocuous objects). Second, they may also differentiate among different action types, for example, those involving tactile contact (grasps) and those that do not (withdrawals). Some somatosensory regions should then show increased activation for observed grasps, irrespective of whether the object is painful or not (the main effect of grasps vs. withdrawals). However, if certain somatosensory areas have an integrative role in representing painful sensory action outcomes, they should be specifically activated for painful grasps compared with all other conditions (painful grasps vs. all). Importantly, painful grasp stimuli did not depict any tissue damage to the actor, so any pain‐specific response would draw on the observer's object and action knowledge.

Forming SEs based on the viewing of others' actions may also be modulated by factors such as the individual's previous experience in interacting with a given object. In turn, predicting the sensory component of an observed action may modulate sensory perception itself. Therefore, we also explored the role of previous experience on neural responses in somatosensory regions. Importantly, we also investigated the effects of viewing others' painful actions on participants' own tactile detection. These experiments led to findings supporting the SE proposal during combined action and pain observation. They demonstrate that when someone else grasps a painful object, postcentral somatosensory and adjacent somatomotor regions use object and action information to anticipate the tactile consequences of the observed action. Further, these findings demonstrate that the somatosensory system also becomes biased toward detecting directly felt tactile stimulation—as if the viewers were actually interacting with the painful objects.

METHODS

Imaging Experiment

Participants

Participants (n = 14, seven females, 23–35 years, all right‐handed, normal or corrected‐to‐normal vision) satisfied all requirements in volunteer screening and gave informed written consent approved by the School of Psychology at Bangor University, Wales, and the North‐West Wales Health Trust, and in accordance with the Declaration of Helsinki.

Stimuli and experimental design

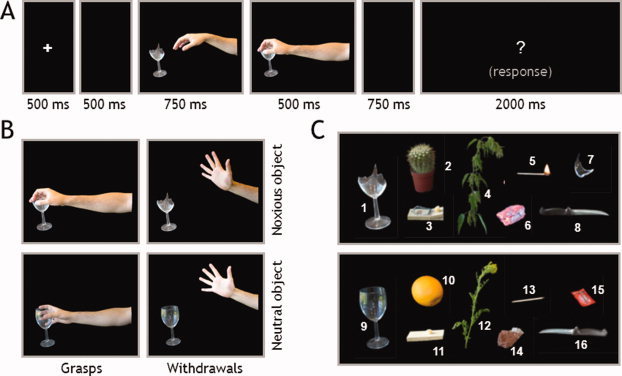

Participants viewed right hands either grasping (e.g., Fig. 1B, left column) or withdrawing (e.g., Fig. 1B, right column) from objects. Objects were either potentially noxious (e.g., a broken glass, see Fig. 1B, upper row) or neutral (e.g., an intact glass; Fig. 1B, lower row). Following each trial, the subjects judged whether the actions were appropriate (i.e., withdrawing from a painful object or grasping a neutral object) or inappropriate (i.e., grasping a painful object or withdrawing from a neutral object).

Figure 1.

(A) Trial structure; (B) example of the four possible final frames emerging from the combination of action types (grasps and withdrawals) and object types (noxious and neutral). (C) Object stimuli depicted as action targets (1–8: noxious, 9–16: innocuous).

Stimuli consisted of 1,250‐ms two‐frame sequences of photographs (see Fig. 1A, for an example). The first frame of each sequence (750 ms) showed a right hand in a neutral position beside a target object. In half of the sequences, the target object was one of eight potentially harmful objects (see Fig. 1C, objects 1–8), and in the other half it was one of eight visually matched neutral objects (see Fig. 1C, objects 9–16). The final frame (500 ms) showed the hand either grasping or withdrawing from the object. Because there was no interstimulus interval, a strong impression of apparent motion was created. There were a total of 32 two‐frame sequences in the set, balanced for precision grip and whole‐hand prehension, grasps and withdrawals, and noxious and neutral objects. Figure 1C shows noxious objects labeled as follows: (1) broken glass, (2) cactus, (3) sprung mousetrap, (4) stinging nettle (common in Britain), (5) lit match, (6) glowing coal, (7) glass shard, and (8) knife blade (turned toward hand); innocuous controls were as follows: (9) intact glass, (10) orange, (11) cheese on board, (12) flowering plant, (13) match, (14) stone, (15) ketchup packet, and (16) knife handle (turned toward hand).

Before the experiment, participants rated the objects with regard to (a) how painful they would be to touch and (b) to what degree they judged this from personal experience on a five‐point scale anchored by the terms “very much” and “not at all” [adapted from a questionnaire described in detail in Bach et al.,2005; see also Bach et al.,2010]. Each subject performed two runs of the experiment, lasting for about 12 min each. The second run of one subject was discarded because of excessive motion induced by a coughing fit. Each run contained 127 trials. The first two trials in each run served as a history for the critical trials and were not further analyzed. In the remaining 125 trials, the five conditions were presented at equal rates: 20% of the trials showed a hand grasping a nonpainful object, 20% a hand grasping a painful object, 20% a hand withdrawing from a nonpainful object, and 20% a hand withdrawing from a painful object. Thus, half of the stimulus trials required an “appropriate” response and the other half an “inappropriate” response. The remaining 20% were trials without any stimulation (black screen throughout), with the same duration as the trials in the other conditions [i.e., “null events,” Friston et al.,1999]. Trials were presented in a pseudo‐randomized order, controlling for both n − 2 and n − 1 condition repetitions. This was realized by a Matlab script that ensured that each of the five conditions (the four experimental conditions and the null condition) followed each condition in the previous trial and each condition in the trial before the previous trial an equal number of times. This resulted in a completely balanced but seemingly random design, in which trial‐to‐trial carry‐over effects (e.g., because of the overlap in hemodynamic responses or participants' strategies) were controlled.
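The balancing constraint described above can be illustrated with a short sketch. It is not a reconstruction of the original Matlab script, whose algorithm is not reported; it assumes one construction that happens to satisfy the stated constraints, namely a de Bruijn sequence of order 3 over the five conditions, in which every ordered triplet of conditions occurs exactly once across the 125 critical trials. The condition labels and function names are hypothetical.

```python
from collections import Counter

# Hypothetical labels: the four experimental conditions plus the null event.
CONDITIONS = ["grasp_neutral", "grasp_noxious",
              "withdraw_neutral", "withdraw_noxious", "null"]


def de_bruijn(k, n):
    """Cyclic de Bruijn sequence B(k, n) over symbols 0..k-1 (Lyndon-word method)."""
    a = [0] * (k * n)
    seq = []

    def db(t, p):
        if t > n:
            if n % p == 0:
                seq.extend(a[1:p + 1])
        else:
            a[t] = a[t - p]
            db(t + 1, p)
            for j in range(a[t - p] + 1, k):
                a[t] = j
                db(t + 1, t)

    db(1, 1)
    return seq


def build_run(k=5, order=3):
    """Return k**order critical trials preceded by (order - 1) history trials."""
    cyc = de_bruijn(k, order)            # 125 symbols; each triplet once, cyclically
    return cyc[-(order - 1):] + cyc      # prepend 2 history trials -> 127 trials


run = build_run()
critical = range(2, len(run))            # the first two trials are history only

# Count how often each condition follows each condition at lag 1 and lag 2.
lag1 = Counter((run[i - 1], run[i]) for i in critical)
lag2 = Counter((run[i - 2], run[i]) for i in critical)
per_condition = Counter(run[i] for i in critical)

print(len(run), set(lag1.values()), set(lag2.values()), set(per_condition.values()))
print([CONDITIONS[c] for c in run[:6]])
```

This textbook construction is deterministic and visibly regular, so it would not by itself give the "seemingly random" order described in the text; a randomized Eulerian circuit through the same de Bruijn graph would keep the balance while varying the order.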

Trial onsets were synchronized with the scanning sequence, with an intertrial interval of 6 s (2 repetition times, or TRs). Each trial began with the presentation of a fixation cross for 500 ms. After a 500‐ms blank screen, the stimulus sequence was presented (1,250 ms total). Following a subsequent 750‐ms blank screen, a question mark prompted the participants to make their response with previously designated “appropriate” or “inappropriate” keys with the right hand. Participants had 2,000 ms in which to make a response. No response feedback was given. Half of the participants pressed a left key to indicate an appropriate action and a right key for an inappropriate action. The other half used the reverse assignment. See Figure 1A for a schematic of the timing of a trial sequence.

Data acquisition and analysis

All data were acquired on a 1.5 T Philips magnetic resonance imaging (MRI) scanner, equipped with a parallel head coil. For functional imaging, an echo planar imaging (EPI) sequence was used (repetition time = 3,000 ms, echo time TE = 50 ms, flip angle 90°, field of view = 192, 30 axial slices, 64 × 64 in‐plane matrix, and 5 mm slice thickness). The scanned area covered the whole cortex and most of the cerebellum. Preprocessing and statistical analysis of MRI data were performed using BrainVoyager 4.9 and QX (Brain Innovation, Maastricht, The Netherlands). Functional data were motion‐corrected and spatially smoothed with a Gaussian kernel (6 mm FWHM, full width half‐maximum), and low‐frequency drifts were removed with a temporal high‐pass filter (0.006 Hz). Functional data were manually coregistered with 3D anatomical T1 scans (1 × 1 × 1.3 mm3 resolution) and then resampled to isometric 3 × 3 × 3 mm3 voxels with trilinear interpolation. The 3D scans were transformed into Talairach space [Talairach and Tournoux,1988], and the parameters for this transformation were subsequently applied to the coregistered functional data. To generate predictors for the multiple regression analyses, the event time series for each of the four experimental conditions were convolved with a delayed gamma function (delta = 2.5 s; tau = 1.25 s). The event time series for each trial in each condition was defined as a 1,250 ms interval, capturing both frames of the action of each trial, time‐locked to the onset of the first frame. The response period was not modeled. Voxel time series were z‐normalized for each run, and additional predictors accounting for baseline differences between runs were included in the design matrix. The regressors were fitted to the MR time series in each voxel. 
Whole‐brain random‐effects contrasts were corrected for multiple comparisons, using BrainVoyager's cluster threshold estimator plug‐in, which uses a Monte Carlo simulation procedure (1,000 iterations) to establish the critical cluster size threshold corresponding to a family‐wise alpha of 0.05 corrected for the whole brain.
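The construction of a condition predictor can be sketched as follows. This is an illustration under stated assumptions, not the BrainVoyager implementation: the delayed gamma function is assumed to take the common form h(t) = ((t − delta)/tau)^(n−1) · exp(−(t − delta)/tau) / (tau · (n − 1)!) with phase parameter n = 3, which is not given in the text. The onsets, the sampling step `dt`, and all function names are hypothetical.

```python
import math


def gamma_hrf(t, delta=2.5, tau=1.25, n=3):
    """Delayed gamma function; n = 3 is an assumption, not stated in the text."""
    if t <= delta:
        return 0.0
    x = (t - delta) / tau
    return x ** (n - 1) * math.exp(-x) / (tau * math.factorial(n - 1))


def make_predictor(onsets_s, n_volumes, tr=3.0, duration=1.25, dt=0.25, hrf_len=30.0):
    """Convolve 1.25-s event boxcars with the HRF, then sample once per TR."""
    n_fine = int(round(n_volumes * tr / dt))
    # Discretized kernel; multiplying by dt approximates the convolution integral.
    kernel = [gamma_hrf(i * dt) * dt for i in range(int(round(hrf_len / dt)))]
    fine = [0.0] * n_fine
    n_event = int(round(duration / dt))      # boxcar samples per event
    for onset in onsets_s:
        start = int(round(onset / dt))
        for s in range(start, min(start + n_event, n_fine)):
            for j, h in enumerate(kernel):
                if s + j >= n_fine:
                    break
                fine[s + j] += h
    step = int(round(tr / dt))               # fine samples per volume
    return [fine[v * step] for v in range(n_volumes)]


# Hypothetical example: two events of one condition, 10 volumes at TR = 3 s.
pred = make_predictor(onsets_s=[0.0, 12.0], n_volumes=10)
print([round(p, 3) for p in pred])
```

With these parameters the single-event response is zero until 2.5 s after onset and is largest around 5 to 6 s, so with a 3‐s TR each event mainly loads on the second volume after its onset.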

Pain and Action Meta‐Analyses

Two separate meta‐analyses were performed to determine the functional localization of pain‐ and action‐related activation over a large number of studies. We used the BrainMap database (http://www.brainmap.org), a comprehensive database that allows users to retrieve and analyze functional coordinates and accompanying metadata for a sampling of more than 1,700 papers [Fox and Lancaster,2002; Laird et al.,2009]. The Sleuth software (version 1.2) was used to identify all studies in the BrainMap database that reported activation in two separate behavioral domains: (1) pain and (2) action execution. For each meta‐analysis, studies involving drug manipulations or patient populations were only included if they reported contrasts for healthy, drug‐free controls.

Pain

The database search was limited to studies involving acute cutaneous pain (thermal including laser heat and cold pressor 58%, electrical 23%, mechanical 14%, and chemical 5%). Studies involving visceral, muscle, joint, or pathological pain were excluded, as were those involving semantic or graphic manipulations without a pain‐only condition (e.g., word stimuli independent of pain stimulation). The resulting data set consisted of 62 papers with a total N of 814 subjects.

Action execution

The database search was limited to studies involving actions made with the hand (irrespective of handedness). Studies involving eye movements were excluded unless they reported contrasts for hand‐only conditions. The resulting data set consisted of 234 papers with a total N of 4,019 subjects.

Coordinates for both meta‐analyses were transformed to MNI space (stereotaxic coordinates of the Montreal Neurological Institute), where necessary. To determine the likely spatial convergence of reported activations across studies, the resulting coordinates were submitted to an ALE (activation likelihood estimate) analysis, which takes spatial uncertainty into account, using GingerALE software [Laird et al.,2009] and thresholded with a false discovery rate of q < 0.05. The resulting statistical maps were visualized on the Montreal Neurological Institute (MNI) anatomical template using MRIcron software (http://www.cabiatl.com/mricro/).

Tactile Detection Experiment

Stimuli and apparatus

The visual stimulus material was identical to that of the imaging experiment. The stimuli were presented using Presentation (http://www.neurobs.com) on a 3.2‐GHz Pentium computer running Windows XP. Tactile stimulation was delivered via a custom‐built amplifier and Oticon BC462 bone conductors (100 Ω), which were attached with a gauze band to the underside of the tip of the participants' right index fingers. The bone conductors convert auditory input from the computer's sound card into vibrations that can be varied in terms of frequency and amplitude. The tactile stimulus was a 200 Hz sine wave overlaid with white noise of 50 ms duration. The first and last 10 ms were faded in and out to prevent sharp transients.

Procedure

The participants [n = 24, 15 females, 20–42 years [M = 26.4, standard deviation (SD) = 6.1], two left‐handed, normal or corrected‐to‐normal vision] were seated in a dimly lit room facing a color monitor at a distance of 60 cm. After the tactile stimulators were connected to their right index finger and the ear plugs were inserted, a calibration was performed to find each participant's approximate detection threshold for the experimental stimuli. The tactile stimuli later to be detected in the main experiment were delivered in a constant stream every 1,000 ms. Stimulation began at the lowest intensity and was slowly increased until the subject reliably reported detecting the tactile stimuli. This threshold was then validated in a simple tactile detection task lasting for about 3 min. Participants were instructed to press a space bar whenever they detected the stimuli. To match the visual input to the main experiment, participants were instructed to look at their own hand during this procedure. Sixty tactile stimuli were delivered in a constant train, every 1,500 ms, with 36 trials without stimulation randomly interspersed. Stimuli were delivered at 90, 88, 86, 84, and 82% of the threshold intensity established in the first calibration session. The experimenter then analyzed the detection probabilities across these intensities. If the data showed a decrease from accurate detection at 90% stimulus intensity to below chance performance at 82% stimulus intensity, the main experiment began. (Performance at 82% stimulus intensity was, on average, 19%, whereas at 90% stimulus intensity, participants accurately detected 90% of the tactile targets; see Supporting Information Fig. 1A.) If no such decrease was detectable, a new calibration session was performed.

The experiment proper began with a computer‐driven instruction and a short training phase of 32 trials, which was repeated until the experimenter was satisfied that the task was understood. The experiment lasted for about 45 min. During this time, the experimenter remained in the room and paused the experiment upon the participant's request. A total of 320 trials were presented, in which the two‐frame action sequences were presented at equal rates in a randomized order. Each of the 32 action sequences (two actions by eight painful and nonpainful objects) was presented 10 times, with tactile stimulation either absent or administered at 90, 88, 86, 84, and 82% of the stimulation threshold established in the first calibration session.

The overall trial structure and timing was identical to the imaging experiment. It differed in that the tactile stimulation was delivered 150 ms after onset of the critical second frame of the action sequence, and participants had to indicate whether they detected the stimulation by pressing the space bar with their left hand. No error feedback was given, but the instruction emphasized accuracy over response speed. As in the imaging experiment, participants judged the appropriateness of the seen action in a delayed response after a question mark appeared on the screen with a verbal response that was recorded by the experimenter.

Above‐threshold detection control experiment

A further experiment in which the tactile targets were presented well above threshold at supraliminal levels was used to replicate the effects previously obtained (see Supporting Information). Reaction times (RTs) were measured to test whether seeing painful grasps speeds up detection of supraliminal tactile stimuli.

Auditory control experiment

To ensure that the effects of the visual stimuli are specific to somatosensory perceptual systems rather than producing general arousal effects across sensory modalities, a control experiment was performed using auditory stimuli. This experiment was identical to the tactile detection experiment except that the tactile vibrators were not attached to participants' index fingers but placed in the same spatial location on the desktop occupied by participants' hands in the tactile detection experiment, while the right hand rested in the participants' lap (see Supporting Information).

RESULTS

Imaging Experiment

Behavioral data

Participants' error rates in the four conditions were entered into a 2 × 2 repeated‐measures analysis of variance (ANOVA) with the factors action (grasp and withdrawal) and object (noxious and neutral). The ANOVA yielded only a main effect of action (F[1,13] = 13.0; P < 0.005), with participants making more errors when judging withdrawals (M = 3.2% and SD = 2.1%) than grasps (M = 1.7% and SD = 2.6%). The reason for this difference is probably that grasping actions are easier to judge because, if inappropriate, they reflect an action that will have painful consequences. The same is not true for withdrawals, for which the appropriateness has to be encoded on a more abstract level. The main effect of object and the interaction of action and object were not significant (F[1,13] = 2.65, P > 0.1; F[1,13] < 1, respectively).
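For readers less familiar with this design, the following sketch shows how each effect in a fully within‐subject 2 × 2 ANOVA can be computed: every main effect and the interaction has one numerator degree of freedom, so its F(1, n − 1) equals the squared one‐sample t statistic of a per‐subject contrast score. The data below are invented for illustration and are not the study's error rates; the column order and function name are hypothetical.

```python
from statistics import mean, variance

# Column order (hypothetical): grasp_noxious, grasp_neutral,
# withdraw_noxious, withdraw_neutral.
ACTION = (1, 1, -1, -1)        # grasp vs. withdrawal
OBJECT = (1, -1, 1, -1)        # noxious vs. neutral
INTERACTION = (1, -1, -1, 1)   # action x object


def effect_F(data, weights):
    """F and df for a 1-df within-subject effect: squared t on contrast scores."""
    scores = [sum(w * x for w, x in zip(weights, row)) for row in data]
    n = len(scores)
    f_value = n * mean(scores) ** 2 / variance(scores)
    return f_value, (1, n - 1)


# Invented error rates (%) for four hypothetical subjects.
errors = [
    [2.0, 1.0, 4.0, 3.0],
    [0.0, 2.0, 3.0, 2.0],
    [1.0, 1.0, 5.0, 2.0],
    [3.0, 0.0, 2.0, 4.0],
]

for name, w in (("action", ACTION), ("object", OBJECT), ("interaction", INTERACTION)):
    f_value, df = effect_F(errors, w)
    print(name, df, round(f_value, 2))
```

With the 14 participants of the imaging experiment, the same computation gives the F[1,13] statistics reported here.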

Regions encoding SE

The main purpose of this study was to identify brain regions encoding the sensory consequences of observed painful hand–object interactions. To this end, we contrasted painful grasp trials with trials depicting neutral grasps, neutral withdrawals, and withdrawals from painful objects (painful grasps − 1/3 neutral grasps − 1/3 noxious withdrawal − 1/3 neutral withdrawal). This painful grasp contrast revealed significant activation in middle, superior, and inferior frontal cortices as well as posterior parietal and occipital regions (Table I; Fig. 2, green). As predicted, robust activation was seen in bilateral somatosensory regions, encompassing superior postcentral gyrus (including BA1/3b and BA2), inferior parietal lobule (IPL), and dorsocaudal secondary somatosensory territories with peaks on the postcentral gyrus (Fig. 2). At a noncluster corrected threshold of P < 0.005, the painful grasp contrast also revealed an area of midcingulate cortex (MCC; xyz = 3, −10, 40; maximum t = 4.32) previously implicated in coding affective and motoric components of pain observation [Morrison et al.,2007].

Table I.

Activations for painful grasps, main effects of actions, object, and the interaction, thresholded at P < 0.005

| Contrast/region | Peak coordinates (Talairach, x,y,z) | Maximum t‐score | Cluster size (mm3) |
|---|---|---|---|
| Painful grasp (pain grasp > all) | | | |
| SFG | −18, 50, 22 | 4.82 | 349 |
| MFG | −39, 38, 13 | 5.84 | 954 |
| IFG | −51, 11, 13 | 4.20 | 136 |
| PCG/IPL | −48, −25, 34 | 7.13 | 4,174 |
| PCG/IPL | 54, −22, 37 | 4.40 | 336 |
| Superior postcentral (SI) | −30, −37, 40 | 7.63 | 1,954 |
| Superior postcentral (SI) | 33, −31, 37 | 4.59 | 202 |
| Posterior parietal | −12, −52, 55 | 5.00 | 232 |
| Posterior parietal | 27, −43, 49 | 4.85 | 365 |
| Ventral occipital cortex | 36, −58, −8 | 6.85 | 5,189 |
| Ventral occipital cortex | 36, −79, 1 | 6.84 | 6,522 |
| Ventral occipital cortex | −12, −82, −8 | 4.58 | 1,031 |
| Main effect of action (grasp > withdraw) | | | |
| PCG/IPL | 63, −19, 36 | 5.41 | 96 |
| PCG/IPL | −63, −28, 28 | 5.05 | 1,158 |
| PCC | −6, −31, 37 | 4.97 | 258 |
| PMTC/LOC | 45, −55, −5 | 8.80 | 1,439 |
| Ventral occipital cortex | 36, −82, 4 | 6.71 | 5,125 |
| Ventral occipital cortex | −9, −85, −14 | 5.46 | 581 |
| Main effect of action (withdraw > grasp) | | | |
| Putamen | 21, 5, 13 | 4.99 | 380 |
| Ventral occipital cortex | −9, −70, −8 | 5.98 | 631 |
| Main effect of object (noxious > neutral) | | | |
| Temporal pole | 39, 11, −11 | 5.13 | 169 |
| PCG/IPL | −51, −25, 31 | 5.37 | 265 |
| IPL | −63, −25, 37 | 4.64 | 322 |
| Paracentral lobule | −15, −25, −52 | 5.21 | 206 |
| Cerebellum | −18, −52, −14 | 7.98 | 683 |
| Cerebellum | −1, −58, −29 | 5.78 | 191 |
| Cerebellum | −9, −64, −14 | 4.68 | 563 |
| Ventral occipital cortex | 18, −73, −11 | 6.06 | 2,214 |
| Occipital cortex | −18, −79, 13 | 5.26 | 508 |
| PMTC/LOC | 30, −88, 4 | 10.36 | 3,848 |
| PMTC/LOC | −30, −82, −2 | 4.59 | 276 |
| Interaction of object and action (appropriate > inappropriate actions) | | | |
| Superior frontal | −15, 53, 25 | 6.26 | 401 |
| Frontal | 27, 44, 28 | 4.70 | 163 |
| IFG | −51, 17, 4 | 5.20 | 547 |
| IFG | −27, 29, 4 | 4.93 | 156 |
| IFG | 52, 17, 1 | 4.56 | 174 |
| Midcingulate | 0, −10, 52 | 5.66 | 311 |
| Superior parietal | −9, −55, 58 | 5.25 | 1,087 |
| Superior parietal | 21, −49, 58 | 4.21 | 300 |
| PMTC/LOC | −42, −61, 7 | 4.86 | 392 |
| Cerebellum | 24, −73, −32 | 5.45 | 385 |
| Cerebellum | 27, −61, −23 | 4.85 | 305 |

Contrasts were whole‐brain corrected at a family‐wise alpha of 0.05. For each contrast, activations are ordered from rostral to caudal.

IPL, inferior parietal lobule; PCC, posterior cingulate cortex; PCG, postcentral gyrus; LOC, lateral occipital complex; MFG, middle frontal gyrus; PMTC, posterior middle temporal cortex; SFG, superior frontal gyrus; IFG, inferior frontal gyrus.

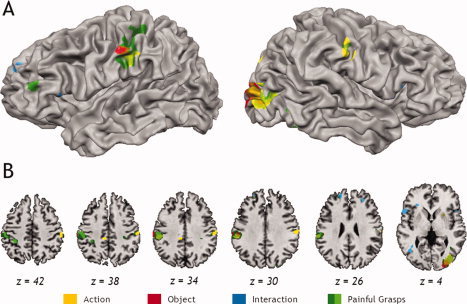

Figure 2.

Partly inflated cortical surface (A) and axial slices (B) showing selective activation for noxious objects (red), contact as consequence of grasping actions (yellow), interaction between pain and action (blue), and painful grasps (green). On axial slices (B), bright green color shows voxels that survived the painful grasp conjunction analysis (stronger activation in the painful grasp condition than any other condition plus an interaction). All activations thresholded at P < 0.005 at whole‐brain corrected family‐wise error P < 0.05, on the cluster level for all voxels in the brain volume. The left side of the image corresponds to the left side of brain in all images.

A second whole‐brain analysis aimed to confirm the specificity of the responses yielded by the painful grasp contrast and to identify only those voxels that showed both (a) larger responses in the painful grasp trials than in any of the other conditions that do not produce painful consequences (neutral grasps, neutral withdrawals, and withdrawals from painful objects) and (b) an interaction reflecting that the region distinguishes between noxious and neutral objects when they are grasped, but not when the hand withdraws from them. We computed the intersection of these four contrasts [painful grasps − neutral grasps ∩ painful grasps − noxious withdrawals ∩ painful grasps − neutral withdrawals ∩ (painful grasps + neutral withdrawals) − (neutral grasps + painful withdrawals)], the individual contrast maps being thresholded at P < 0.05 (uncorrected). The conjunction of these activations yielded two sites that passed family‐wise multiple comparison correction for the whole brain (P < 0.05, whole brain corrected): the ventral (xyz = −52, −23, 36; cluster size = 1,188 mm3; max t = 3.068) and dorsal (xyz = −34, −41, 45; cluster size = 1,917 mm3; max t = 3.15) regions of the left postcentral gyrus, overlapping with the two left‐hemispheric postcentral clusters identified by the painful grasp contrast. No other activation site passed cluster size correction for multiple comparisons. The conjunction analysis, therefore, identifies the left dorsal and ventral postcentral somatosensory cortex as the sole regions showing the features predicted for regions encoding the sensory consequences of painful hand–object interactions: both the interaction of object and action factors as well as stronger responses in the painful grasp condition than in any other condition.
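The logic of such an intersection can be sketched as a minimum‐statistic test: a voxel enters the conjunction only if its statistic exceeds the threshold in every one of the four contrast maps. The sketch below is a simplified illustration, not the BrainVoyager procedure; the t values are invented, the function name is hypothetical, and the threshold of 1.771 assumes a one‐tailed P < 0.05 with 13 degrees of freedom, whereas the text does not state whether the test was one‐ or two‐tailed. The subsequent cluster‐size correction is not shown.

```python
def conjunction_mask(t_maps, t_crit):
    """True where a voxel exceeds t_crit in every map (minimum-statistic logic)."""
    n_vox = len(t_maps[0])
    return [min(m[v] for m in t_maps) > t_crit for v in range(n_vox)]


# Invented t values for three voxels in each of the four contrasts.
t_maps = [
    [3.1, 2.4, 0.5],   # painful grasps - neutral grasps
    [2.9, 1.2, 2.2],   # painful grasps - noxious withdrawals
    [3.4, 2.8, 2.6],   # painful grasps - neutral withdrawals
    [2.2, 2.5, 2.1],   # interaction contrast
]

T_CRIT = 1.771  # assumption: one-tailed P < 0.05, df = 13

mask = conjunction_mask(t_maps, T_CRIT)
print(mask)
```

Only the first voxel survives, because the second and third each fall below threshold in at least one contrast even though they exceed it in the others.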

Action, object, and appropriateness discrimination

Other relevant task aspects were action (grasp or withdrawal) and the object of the action (painful or nonpainful), and whether the action was appropriate for the object (grasps for neutral objects and withdrawals from painful objects; this would also reflect an interaction between action and object factors). To ascertain which parts of the brain are involved in encoding these aspects, we computed three pairwise contrasts, corresponding to the two main effects and the interaction in a 2 × 2 ANOVA design. First, to find regions encoding grasping actions regardless of object painfulness, we contrasted painful and neutral grasps with painful and neutral withdrawals (action contrast; grasps vs. withdrawals: + painful grasp + neutral grasps − noxious withdrawals − neutral withdrawals). This contrast is equivalent to the main effect of action. Next, to find regions discriminating noxious object features regardless of action type, we contrasted the trials showing grasps of, and withdrawals from, noxious objects with those showing grasps of, and withdrawals from, neutral objects (object contrast; painful vs. nonpainful objects: + painful grasp − neutral grasps + noxious withdrawals − neutral withdrawals). This contrast is statistically equivalent to the main effect of object type in ANOVA models. Third, to find regions encoding the appropriateness of the actions in their object context, we contrasted appropriate actions (grasps of neutral objects and withdrawals from painful objects) with inappropriate actions (grasps of noxious objects and withdrawals from neutral objects) (appropriateness contrast: + painful grasp − neutral grasps − noxious withdrawals + neutral withdrawals). This contrast reflects the interaction of the action and object factors in an ANOVA model and identifies regions in which appropriate hand–object interactions yield either more or less activation than inappropriate actions.
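The contrast weights given in the text can be written out explicitly. The short sketch below, with hypothetical variable names and invented parameter estimates, checks two properties that follow directly from those weights: the three ANOVA‐style contrasts are mutually orthogonal, and three times the painful grasp contrast (1, −1/3, −1/3, −1/3) equals the sum of the action, object, and interaction contrasts. The second property offers one way to see why a painful grasp effect alone cannot separate an integrative response from additive main effects.

```python
from fractions import Fraction

# Condition order used for all weight vectors below.
CONDITIONS = ("painful_grasp", "neutral_grasp",
              "noxious_withdrawal", "neutral_withdrawal")

ACTION = (1, 1, -1, -1)         # grasps vs. withdrawals
OBJECT = (1, -1, 1, -1)         # noxious vs. neutral objects
INTERACTION = (1, -1, -1, 1)    # as written in the text: positive weights fall
                                # on the two inappropriate conditions
PAINFUL_GRASP = (Fraction(1), Fraction(-1, 3), Fraction(-1, 3), Fraction(-1, 3))


def dot(u, v):
    """Inner product of two weight vectors."""
    return sum(a * b for a, b in zip(u, v))


def contrast_value(weights, betas):
    """Weighted sum of per-condition parameter estimates."""
    return dot(weights, betas)


# Pairwise orthogonality of the three ANOVA-style contrasts.
print(dot(ACTION, OBJECT), dot(ACTION, INTERACTION), dot(OBJECT, INTERACTION))

# The painful grasp contrast is proportional to the sum of the other three.
summed = tuple(a + o + i for a, o, i in zip(ACTION, OBJECT, INTERACTION))
print(summed == tuple(3 * w for w in PAINFUL_GRASP))

# Invented betas: a purely additive pattern with no interaction.
betas = [2.0, 1.0, 1.0, 0.0]
print(contrast_value(INTERACTION, betas), float(contrast_value(PAINFUL_GRASP, betas)))
```

In the invented additive example the interaction contrast is zero while the painful grasp contrast is still positive, which is the case the conjunction with the interaction is designed to exclude.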

Again, these contrasts revealed activation throughout the brain (Table I). The object (red, Fig. 2) and action (yellow, Fig. 2) contrasts revealed stronger activation for grasps and noxious objects in visual areas, encompassing extrastriate body area (EBA)/middle temporal (MT) visual area for the action contrast, and more medial inferior temporal areas in the object contrast. The object contrast revealed additional painful object‐related activation in the cerebellum, and the action contrast identified withdrawal‐related responses in the putamen and the ventral occipital cortex. The appropriateness/interaction contrast (blue, Fig. 2) only revealed activation that showed stronger responses for inappropriate than appropriate actions. Activated regions included left and right inferior frontal regions, often implicated in processing action goals, as well as the superior frontal gyrus, the parietal lobe, cerebellum, and a region in posterior temporal lobe/superior temporal sulcus. Importantly, both the action and the object contrasts (but not the appropriateness contrast) revealed activation in left somatosensory cortices, adjacent to the cluster identified by the painful grasp contrast, and the action contrast revealed additional activation in the right ventral postcentral gyrus.

Post hoc 2 × 2 ANOVAs with the factors action (grasp and withdrawal) and object (noxious and neutral) tested whether these somatosensory regions exclusively respond to the aspect that was used to identify the region or whether they also respond to the other task aspects. This test was performed, first, on the general linear model (GLM) parameter estimates of the 14 subjects in each of the four conditions at the peak voxel (3 × 3 × 3 mm³). Only the ANOVA results of contrasts orthogonal to the voxel selection criteria are reported for each region‐of‐interest (ROI). Second, whole‐brain, low‐threshold maps (P < 0.05, uncorrected) were created for each of the three contrasts (action, object, and interaction) for all voxels activated in any of the contrasts of interest, providing a larger‐scale view of the presence of main effects or interactions in the activated clusters (Supporting Information). The results of the ANOVAs showed that the bilateral clusters identified by the action contrast (grasps > withdrawals) neither showed an interaction (left, F[1,13] = 0.04, P = 0.837; right, F[1,13] = 1.02, P = 0.330) nor responded strongly to whether the object was painful or neutral (left, F[1,13] = 4.82, P = 0.054; right, F[1,13] = 1.70, P = 0.214). Similarly, the more lateral cluster identified by the object contrast (noxious > neutral objects) neither showed significant responses for the interaction contrast (F[1,13] = 0.16, P = 0.694) nor whether the action was a grasp or a withdrawal (F[1,13] = 2.70, P = 0.124). Only the more medial object activation that overlapped with the larger ventral postcentral activation identified by the painful grasp contrast showed strong action activation (F[1,13] = 12.50, P = 0.004), while not showing any evidence for an interaction (F[1,13] = 2.35, P = 0.149).
See Supporting Information Figure 3 for low threshold maps showing the results of the action, object, and appropriateness (interaction) contrasts for all activated voxels in any contrast of interest in the main analysis.

Experience dependence of activation in postcentral somatosensory regions

Correlational analyses examined the extent to which the two left postcentral regions identified by the painful grasp contrasts reflect SE of painful action outcomes. This analysis was based on eight predictors, each modeling the responses to each of the eight different objects in the painful grasp condition. Twenty‐four additional predictors modeled the responses to seeing interactions with eight objects in the three other conditions (neutral grasps, painful withdrawals, and neutral withdrawals). Relatively large ROIs (cube of 9 mm side length, covering all significantly activated voxels in the painful grasp contrast, centered on the peak voxel) were used to increase power for detecting reliable variations across the eight different painful objects. For each participant separately, we correlated the GLM parameter estimates of viewing the interactions with each of the eight painful objects in the painful grasp condition with the participant's individual ratings of how painful each object would be to touch and to what extent this judgment was based on personal experience. (One participant did not fill out the questionnaire.) To assess significance of the correlations across subjects, Fisher‐transformed correlation coefficients of each rating were compared across participants with simple two‐sided t‐tests (df = 12) against zero. This analysis is completely independent of the selection criteria for the ROI, as the criteria are based on overall differences between conditions (averaging across objects), whereas the correlational analysis ignores this between‐condition variability and only takes within‐condition differences between the eight objects into account.
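The across‐subject test described above can be sketched as follows. This is an illustrative outline under stated assumptions rather than the original analysis code: the function names and the input layout (one pair of eight parameter estimates and eight ratings per participant) are hypothetical. Each participant contributes one Pearson correlation, which is Fisher‐transformed before the group mean is tested against zero.

```python
import math
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_z(r):
    """Fisher r-to-z transform (inverse hyperbolic tangent)."""
    return math.atanh(r)


def one_sample_t(values):
    """t statistic and degrees of freedom for a test of the mean against zero."""
    n = len(values)
    t = mean(values) / (stdev(values) / math.sqrt(n))
    return t, n - 1


def group_correlation_test(subjects):
    """subjects: list of (betas, ratings) pairs, one pair per participant.

    Hypothetical layout: each sequence holds one value per painful object.
    """
    z_scores = [fisher_z(pearson_r(betas, ratings))
                for betas, ratings in subjects]
    return one_sample_t(z_scores)
```

With 13 participants the test has 12 degrees of freedom, so a two‐sided test at the 0.05 level requires an absolute t of roughly 2.18. The sketch does not handle a participant who gives the same rating to every object, in which case the correlation is undefined.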

No significant relationships with either experience or painfulness were observed in the dorsal postcentral cluster identified by the painful grasp contrast. However, the left inferior postcentral cluster (Fig. 2, green) showed a significant negative across‐subject correlation with the participants' interaction experience with the objects (mean r = −0.22; P = 0.04) and a marginally significant across‐subjects correlation with the perceived painfulness of interacting with the objects (mean r = 0.17; P = 0.08). Thus, recruitment of this region increased when the hand–object interaction was perceived to be more painful and when the subject was less able to judge the interaction's painfulness based on experience. Importantly, these relationships were absent when the same correlations were calculated for the painful withdrawal condition, that is, when seeing a hand withdrawing from the same painful objects (experience, mean r = 0.11; P = 0.33; painfulness, r = −0.01; P = 0.96). Direct comparison of the mean correlations between viewing painful objects being grasped and seeing withdrawals revealed significant differences for experience (P < 0.01), but not painfulness, even though the mean strength of the correlation decreased numerically also for this comparison. This indicates that the responses in the inferior postcentral cluster to hands grasping painful objects (but not seeing a hand withdraw from these objects) are indeed related to participants' experience in interacting with the objects.

A similar pattern was found when the ROI was defined on the basis of the conjunction analysis (encompassing only voxels showing both stronger responses for painful grasps than any other condition, as well as an interaction). Again, responses in a left inferior postcentral cluster correlated negatively with the amount of experience people had in interacting with the objects (mean r = −0.20; P = 0.03) and differed significantly (P = 0.039) from the same correlations calculated for withdrawals from painful objects (mean r = −0.06; P = 0.57). The correlation with object painfulness was still positive but failed to reach significance (mean r = 0.13; P = 0.15). Supporting Information Table 1 shows the average Fisher‐transformed across‐subjects correlations in the other somatosensory and MCC ROIs identified by the painful grasp, action, and object contrasts. A similar pattern was also seen in the more medial cluster identified by the object contrast, which overlapped/was directly adjacent to the cluster identified by the painful grasp contrast, but this pattern was not seen in either of the other somatosensory clusters identified by the contrasts not related to sensory consequences.

Encoding of noxious object features

Regions that encode the noxious features of an object should show similar responses to seeing the different painful objects irrespective of whether the object is grasped or whether the hand withdraws from it. To test whether the regions identified by the object contrast indeed have such properties, we relied on the same regressor model as the experience/painfulness analysis above and computed whether the single subjects' responses to withdrawals from the eight different objects correlated with the responses to the same objects when the hand grasped them. Indeed, the only region showing such similar responses to painful objects irrespective of action type was the more medial postcentral cluster identified by the object contrast (across participant mean r = 0.142, t = 2.24, P < 0.05). This further supports the proposal that the object‐specific responses in inferior postcentral gyrus indeed reflect retrieval of sensory object information, irrespective of the action performed on the object. As shown by Supporting Information Table 1, however, no such relationship was found for the more lateral cluster identified by the object contrast (mean r = −0.126, t = 0.804, P = 0.434) nor for any of the postcentral activations identified by the painful grasp or action contrast (all ts < 1.5).

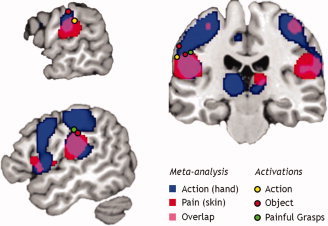

Pain and Action Meta‐Analyses

The pain meta‐analysis revealed activation foci in bilateral somatosensory cortices, midcingulate with supplementary and presupplementary motor cortices, bilateral insula, and thalamus, with smaller peaks in periaqueductal gray, cerebellum, perigenual frontal cortex, and precuneus. The action execution meta‐analysis revealed activation foci covering posterior parietal and somatosensory cortices, dorsal and ventral lateral prefrontal cortices, supplementary and presupplementary cortices, bilateral inferior frontal gyrus/anterior insula, thalamus, and cerebellum, with smaller peaks in bilateral posterior middle temporal gyrus.

When the pain and action execution maps were overlaid (Fig. 3), common activation fell on the postcentral gyrus bilaterally, with activation extending from the parietal operculum onto the free surface of the gyrus (from z = 11 to z = 34 on the left and z = 15 to z = 28 on the right in MNI coordinates), and small bilateral peaks in SI (z = 55 on the left and z = 54–60 on the right in MNI coordinates). Common activations also fell on inferior frontal gyrus (IFG) bilaterally, with a greater extent on the right (from z = −5 to z = 44 in MNI coordinates). Overlap also occurred in midcingulate, supplementary, and presupplementary cortices as well as in thalamus and cerebellum.

Figure 3.

Peak activation loci with respect to meta‐analyses of action execution and pain. A meta‐analysis of studies in the BrainMap database involving action execution with the hand (blue; N = 4,019 subjects) is overlaid on a meta‐analysis of studies involving cutaneous pain (red; N = 814 subjects). Peak action observation and pain activations in postcentral cortex show a degree of overlap (pink). Filled circles show MNI‐transformed peak activation loci in left somatosensory cortex for action (yellow; grasps > withdrawals; MNI xyz = −66, −25, 30), object (red; painful > nonpainful; MNI xyz = −66, −21, 40 and −53, −22, 33), and painful grasps (green; painful grasps > all; MNI xyz = −50, −22, 36). Sagittal views show x = −52 (top left) and x = −62 (bottom left); coronal view shows y = −23 (right). Meta‐analyses were thresholded at a false discovery rate of q < 0.05; statistical maps for this study were thresholded at P < 0.005, whole‐brain corrected for family‐wise error at P < 0.05. The left side of the image corresponds to the left side of the brain.

To relate our left somatosensory activations to the meta‐analysis results, peak coordinates were transformed to MNI space using GingerALE's foci conversion tool [Lancaster et al.,2007] and plotted on an MNI template. With the exception of the lateral object activation, all peaks fell on the superior boundary of the overlap area between action execution and pain (Fig. 3). The lateral object activation was located superior to this within the territory related to action execution activation.

Tactile Detection Experiment

Detection rates

For the analysis, the percentage of correct detections (“hits,” Supporting Information Fig. 1b) was entered into a repeated‐measures ANOVA with the factors action (grasp/withdrawal) and object (neutral/noxious). There were main effects of action (F[1,23] = 31.4, P < 0.001) and object (F[1,23] = 15.4, P = 0.001) and a significant interaction of both factors (F[1,23] = 5.6, P = 0.027). Post hoc t‐tests showed that participants responded more often to tactile stimuli when the model grasped a painful object compared with a neutral object (P < 0.001); for withdrawals there was no difference between the two object types (P = 0.37). The analysis of the false alarms (Supporting Information Fig. 1c) revealed a similar pattern. There was no main effect of action (F[1,23] < 1), but a main effect of object (F[1,23] = 6.3, P = 0.020), and a significant interaction of object and action (F[1,23] = 5.3, P = 0.031). Participants more often erroneously reported stimulation when they saw a hand grasp a painful object than when the hand grasped a neutral object (P = 0.01), but there was no difference between the two object types for withdrawals (P = 0.48).
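In a fully within‐subject 2 × 2 design, each effect has one numerator degree of freedom, and its F value equals the squared one‐sample t statistic computed on per‐participant contrast scores. The sketch below illustrates this equivalence; it is not the software used for the reported analysis, and the condition order, names, and input layout are assumptions.

```python
import math
from statistics import mean, stdev

# Assumed condition order within each participant's row:
# painful grasp, neutral grasp, painful withdrawal, neutral withdrawal
EFFECT_WEIGHTS = {
    "action": (1, 1, -1, -1),
    "object": (1, -1, 1, -1),
    "interaction": (1, -1, -1, 1),
}


def effect_f(subject_rows, weights):
    """F value and degrees of freedom for one effect of a 2 x 2
    repeated-measures design.

    subject_rows: one row of four condition scores per participant
    (for example, hit percentages). Hypothetical layout.
    """
    # Collapse each participant's four scores into a single contrast score
    scores = [sum(w * v for w, v in zip(weights, row)) for row in subject_rows]
    n = len(scores)
    # One-sample t test of the contrast scores against zero
    t = mean(scores) / (stdev(scores) / math.sqrt(n))
    # With one numerator degree of freedom, F equals t squared
    return t * t, (1, n - 1)
```

With 24 participants the denominator has 23 degrees of freedom, matching the F[1,23] values reported here.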

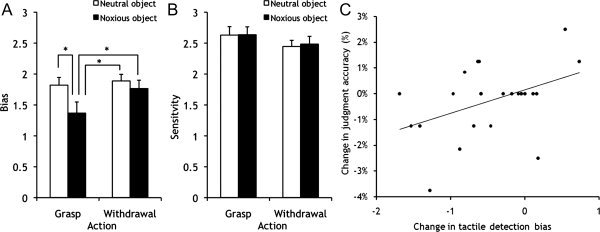

Signal detection analysis

The analysis of hits and false alarms suggests that observing painful grasps has a direct effect on the observers' own tactile processes. This effect does not appear to reflect a better differentiation between stimulation and no stimulation, but suggests an increased readiness to detect stimulation, even when there was none. To confirm this effect on bias rather than tactile sensitivity, we performed a signal detection analysis. This transforms the hit and false alarm data into measures of (a) the participants' ability to distinguish stimulation from no stimulation (d‐prime) and (b) their likelihood of reporting a tactile stimulus (bias). For the d‐prime measure (Fig. 4B), there was a trend toward a main effect of action (F[1,23] = 3.6, P = 0.070), but no main effect of object and no interaction (F < 1, for both), and planned comparisons failed to reveal differences in tactile acuity between the painful grasp condition and any of the other conditions. Effects were, however, found on the bias measure (Fig. 4A) and mirrored the data from the false alarms and hits. There were main effects of action (F[1,23] = 4.8, P = 0.039) and object (F[1,23] = 7.5, P = 0.012) that were qualified by a significant interaction (F[1,23] = 5.2, P = 0.032). Post hoc t‐tests showed that participants had a greater propensity to report tactile stimulation whilst viewing painful grasps compared with all other conditions (P < 0.01, for all). There were no significant differences between any of the other conditions.
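The transformation from hits and false alarms to sensitivity and bias can be illustrated with a short sketch. The text does not specify which bias index or which correction for extreme rates was used, so the choices here are assumptions: the criterion c as the bias index, and a log‐linear correction that adds 0.5 to each count. Function and parameter names are hypothetical.

```python
from statistics import NormalDist


def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, criterion) from trial counts in one condition.

    Assumption: a log-linear correction keeps both rates strictly between
    0 and 1, so the inverse normal transform is always defined.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)

    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    d_prime = z(hit_rate) - z(fa_rate)             # sensitivity
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))  # bias; lower = more "yes" responses
    return d_prime, criterion
```

Under this convention, a condition that raises hits and false alarms together lowers the criterion while leaving d‐prime largely unchanged, which is the pattern described for the painful grasp condition.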

Figure 4.

Mean bias (A) and sensitivity scores (B) for the detection of tactile stimuli, whilst observing hands approaching and grasping, or withdrawing from, potentially painful and neutral objects. *Significant difference between means (P < 0.001). Error bars represent SEM. (C) Correlation between the bias to report tactile stimulation for painful grasps and accurate judgments of action appropriateness, r = 0.43, P = 0.036.

Correlational analysis

The analysis of the bias measure suggests that participants map the painful outcome of observed actions onto their own sensory tactile system. To test whether this mapping serves a role in the participants' subsequent action judgments, we correlated each participant's tactile detection bias for seeing painful grasps relative to neutral grasps with the corresponding difference in their accuracy of judging painful grasps relative to neutral grasps. Indeed, those participants who showed a stronger bias to report tactile stimulation for painful grasps also made fewer errors in action judgments in the painful grasp condition relative to the neutral grasp condition (r = 0.43, P = 0.036; Fig. 4C), indicating a close linkage between sensory tactile mapping processes and subsequent grasp judgments. These relationships were not found for the corresponding comparisons for tactile detection bias and judgments of painful and neutral withdrawal actions (r = −0.18, P = 0.39).

Above‐threshold detection control

A further experiment, in which the tactile targets were presented well above threshold at supraliminal levels, replicated these effects (see Supporting Information). Namely, RTs to detect tactile targets were faster when the hand was seen to grasp a pain‐evoking object than in any other condition (Supporting Information Fig. 1).

Auditory control experiment

In contrast to tactile target detection, seeing painful grasps had no effect on detection rates for auditory targets presented while participants viewed the stimuli. This implies that the effects on bias in the tactile experiment reflected a perceptual effect (actual perception of a tactile stimulation even in the absence of stimulation) rather than a bias at the decision‐making level. For details, see Supporting Information Figure 2, Supporting Information Table 2, and Supporting Information.

DISCUSSION

This investigation used a novel task combining action and pain observation to elucidate specific functional contributions of sensorimotor systems to the processing of others' hand–object interactions. In both the functional magnetic resonance imaging (fMRI) experiment and the tactile detection experiment, participants watched hands either grasping or withdrawing from objects that were either noxious or neutral, and judged whether object and action were appropriate to one another. This task was designed to pinpoint the neural encoding of the expected sensory consequences of the observed actions (is the action potentially painful?). It also allowed us to ascertain how tactile object properties (is the object noxious or neutral?), action properties (does the action involve tactile contact?), and the overall appropriateness of action to the object are encoded in the brain.

Sensory Expectation

Multiple, spatially distinct subregions in anterior parietal cortex and parietal lobule were engaged by specific sensory‐tactile aspects of the observed actions and goal objects: object noxiousness, action type, and their integration. Separate somatosensory/IPL subregions responded more strongly when the observed actions targeted noxious objects compared with neutral objects, irrespective of the action carried out with them. This suggests an encoding of tactile object properties independent of action properties. Other subregions responded more strongly to observed grasps than to withdrawals, indicating a further discrimination of goal‐directed actions involving tactile contact from actions that do not involve contact, irrespective of whether the object is painful.

The largest and most robust activation was found in somatosensory cortices/IPL during the painful grasp condition compared with all other conditions, consistent with the hypothesis that these regions encode the painful consequences that emerge from actions on painful objects. No skin damage was ever shown in the stimuli. To derive these sensory outcomes of painful actions, the brain must combine knowledge that a grasping action has taken place (action recognition), and that the specific object is likely to imply pain (pain recognition). Activation in the painful grasp condition thus reflects not just a discriminative but an integrative role in action observation, with sensitivity to the consequences emerging from actions toward painful objects.

Converging lines of evidence from this study support the notion that activation in the painful grasp condition reflects encoding of expected painful action consequences. First, subjects' blood‐oxygen‐level‐dependent (BOLD) responses in somatosensory cortices/IPL when seeing painful grasps (but not when seeing withdrawals from the same objects) were related to their own previous experience with the stimulus objects. Despite a positive correlation with painfulness ratings for each object, the BOLD response was less when others' hands grasped objects with which the subjects reported a greater degree of previous painful experience in handling. This inverse relationship could indicate a heightened recruitment of visuotactile processing to “fill in” information that is relatively scantily supplied by real experience. This is consistent with the evidence that inexperienced controls show greater activation in somatosensory cortex than acupuncture specialists who are accustomed to the sight of needles entering skin [Cheng et al.,2007], and that IPL responses are higher for cartoon hands suffering mishaps when compared with their photographic counterparts [Gu and Han,2007]. In the domain of action observation, parietal and premotor cortices show similar inverse relationships with the subjects' sensorimotor experience with the observed tool actions [Bach et al.,2010]. Activation for predicting others' reach ranges also correlates with subjects' visual imagery skill [Lamm et al.,2007a]. Our activations could reflect sensory aspects of the painful stimuli independent of affective evaluations [Lamm et al.,2007b]. In that regard, it is interesting that despite a diminished ability to identify or describe their own emotions, individuals with alexithymia nevertheless show comparable bilateral activation in this region to that of nonalexithymic controls when viewing others' pain in transitive hand–object interactions [Moriguchi et al.,2007].

The proposal that the postcentral and IPL responses during painful grasp observation reflect SE is further supported by the finding that sensory detection thresholds were also altered in the painful grasp condition. In a separate psychophysical experiment outside the scanner, participants watched and judged the same actions as in the imaging experiment, but had to detect vibrotactile stimulation to their own fingers while viewing the actions. Object and action factors did not affect participants' ability to detect a stimulus, but seeing painful grasps increased the bias for reporting stimulation when none had occurred. Signal‐detection analysis showed differences in the bias measure, which reflects how much evidence participants need to accept that they felt stimulation. Sensitivity (d‐prime) was not influenced by the experimental conditions. Rather, viewing another person's hand grasping a pain‐evoking object shifted sensory expectancy such that participants were more likely to report the presence of a touch on trials where no stimulation was actually delivered (false alarms).

Other work has shown that when a visual stimulus predicts a tactile stimulus, postcentral cortex responds on trials where only the visual stimulus is presented [Carlsson et al.,2000]. This response in the absence of a tactile stimulus reflects an anticipation of touch. Our finding that seeing a painful action biases one's own tactile detection suggests a process by which visual and tactile processing converge or summate, with somatosensory/IPL regions likely playing a central role in their integration. In the case of an actual reaching‐grasping action, such a prediction would prepare motor and sensory circuits for performing the action. In the case of merely observing a painful action, processing within this system requires less evidence from first‐hand somatosensory information to detect a tactile stimulus. Low stimulation—or no stimulation—may become superthreshold as a result of SE. Seeing others' pain could make us “thin‐skinned” even to the point of hallucinating a feeling of touch. It must be emphasized that this skin‐thinning effect is not merely a passive, reactive response: the greater someone's bias, the more accurate they were at judging an action's appropriateness. This correlation provides the first evidence that such SE meaningfully enhances action understanding and may pave the way for empathy.

Somatosensory Cortices and IPL Areas in Pain and Action

Somatosensory cortices in anterior parietal cortex include primary somatosensory areas on the postcentral gyrus and sulcus as well as a complex of secondary somatosensory areas on and near the operculum [Eickhoff et al.,2006a,b, 2007]. Adjacent regions on the IPL include cytoarchitectonic area PF on the supramarginal gyrus [Caspers et al.,2006]. These regions have been consistently activated in both pain and action execution in neuroimaging experiments. This is reflected in the meta‐analysis results, in which a pain–action overlap encompasses parts of primary and secondary somatosensory cortices as well as part of IPL (Fig. 3). It is also consistent with other meta‐analyses that implicate subregions of somatosensory cortex and IPL in pain observation [Keysers et al.,2010; Lamm et al.,2011] and action observation [Caspers et al.,2010; Keysers et al.,2010].

The overlap territory in anterior parietal somatosensory cortex and IPL includes several functionally and anatomically distinct areas. Activations in these subregions have been labeled with varying degrees of consensus in the existing literature, but here we consider our activations with respect to observer‐independent cytoarchitectural boundaries and meta‐analytical functional localization [Caspers et al.,2008; Eickhoff et al.,2006a,b]. These methods reliably and usefully identify functional differentiation among brain areas by revealing statistical convergence of anatomical or functional boundaries across individuals and studies. Peak voxels for viewing interactions with painful objects, as well as for viewing painful grasps, fell in postcentral gyrus/sulcus, with activation clusters extending to left rostral IPL. Activation for viewing grasps regardless of the painfulness of the object also extended onto rostral IPL. The IPL subregion engaged here is likely area PF, with object‐related activation in rostral PFt, and action‐ and painful‐grasp‐related activation closer to the boundary between PFt and PFop subregions, taking into account the SD of cytoarchitectural boundaries among individual brains [Caspers et al.,2008].

PF is associated with sensorimotor processing during object manipulation [Binkofski et al.,1999], and rostral PF is also implicated in coding the sensory features of objects during haptic object exploration [Miquée et al.,2008]. It is densely interconnected with nearby somatosensory areas [e.g., Rozzi et al.,2006], and integrates visual with motor, sensory, and proprioceptive information [Eickhoff et al.,2006a,b; Gazzola and Keysers,2009; Hinkley et al.,2007; Naito and Ehrsson,2006; Oouchida et al.,2004]. Likewise, a high proportion of somatosensory and visual responses alongside action‐related responses have been reported in monkey PF [Dong et al.,1994; Rozzi et al.,2008]. It is also a specific locus of activation for the observation of object‐related actions [Caspers et al.,2010; Cunnington et al.,2006]. PF has been proposed as the human homolog of macaque PFG/PF, in which mirror‐neuron properties have been recorded [Fogassi et al.,2005; Rozzi et al.,2008].

Our findings are consistent with the view of IPL's role as part of a “mirroring” or “simulation” system for socially derived information [Gazzola and Keysers,2009; Keysers et al.,2010] and suggest that its contribution to such “mirroring” processes is related to sensory‐tactile aspects of the observed skin–object interactions. Possible roles for PF alongside other IPL subregions in action planning are in predictive coding of the next step in goal‐directed action sequences [Fogassi et al.,2005] and in transforming somatosensory information into motor terms [Bonini et al.,2011; Rozzi et al.,2008]. In the case of action observation, visual information about others' actions is similarly transformed. Our findings indicate that this area is sensitive not only to sensory information during action observation but also to goal object features to the extent that it can distinguish noxious from innocuous objects.

It has been proposed that SII is part of a parietal sensorimotor circuit including these more caudal anterior parietal areas [Binkofski et al.,1999; Bodegård et al.,2001]. SII activation alongside IPL engagement has been reported for tasks involving object manipulation, haptic perception, and kinesthetic imagery [e.g., Reed et al.,2004], though its engagement is inconsistent [e.g., Stoeckel et al.,2003]. Conversely, some reported activations for touch, pain, and action and their observation, localized to SII, may also involve anterior IPL subregions [e.g., Ebisch et al.,2008; Jackson et al.,2006; Keysers et al.,2004; Lamm et al., 2007a; Porro et al.,2004]. Left IPL activation extending into postcentral somatosensory areas has been found for observation of hands manipulating everyday objects [Meyer et al.,2011]. This recent study showed that activation patterns in SI (predominantly BA2) and SII region OP1 predicted which objects subjects had viewed, with better classification performance in SII than in SI.

SII is also consistently activated by pain and tactile stimulation [e.g., Mazzola et al.,2006], though the results of the pain–action meta‐analysis indicate that activations for painful cutaneous stimulation are not limited to somatosensory regions but also engage the nearby and closely interconnected parietal regions associated with action. The activation peaks from the experiment occupied a sensory‐action border territory here, except for one grasp‐related activation (Fig. 3). Consistent with this, most of these postcentral peak voxels resulting from action, pain, and painful grasp observation fell on boundaries between “sensory”‐ and “action”‐associated cytoarchitectonically defined regions. Painful object activations extended to left dorsocaudal SII (the free surface region of left OP1 bordering with PFt); a more medial activation for painful objects fell on the dorsal boundary of OP1; and the left painful grasp activation fell in PFt near the dorsal boundary of OP4. We conclude from these observations that the integrative processing of observed pain‐relevant tactile and action information may take place in zones of convergence between higher order somatosensory processing and adjacent sensorimotor territories.

The activated area of somatosensory cortex extended into likely SI (BA1/3b and BA2), consistent with a recruitment of primary sensory processing during pain observation [Betti et al.,2009; Cheng et al.,2008; Keysers et al.,2010]. Indeed, individuals who experience a higher degree of unpleasant somatic feelings when viewing others' pain show greater SI and SII activity compared with “nonresponders” [Osborn and Derbyshire,2010]. In the functional‐anatomical model of the human mirror system proposed by Gazzola and Keysers [Gazzola and Keysers,2009; Keysers et al.,2010], somatosensory areas, in particular area 3b of SI, are involved in tactile and proprioceptive associations during action observation [see also Meyer et al.,2011]. Motor preparation for grasping a goal object may, therefore, involve an expectation of the object's surface properties, such as its shape and texture. This is consistent with the “SE” proposal that sensorimotor areas can form an experience‐based expectation of tactile properties during action and pain observation.

Visuotactile Network Activations

As noted, the responses in somatosensory cortices/IPL were accompanied by other activation sites throughout the brain. Of key interest is the appropriateness contrast, which identified regions that responded more strongly to inappropriate than appropriate actions, for both grasps and withdrawals, irrespective of whether they produce painful action outcomes. These regions therefore do not encode consequences resulting simply from motor acts (whether they involve contact), sensory object features (whether they are noxious or neutral), or the sensory consequences of interacting with painful objects. Instead, they appear to encode actions as a whole—whether the observed action is appropriate to the object—responding more strongly for inappropriate actions.

This contrast revealed a network of regions spanning the bilateral IFG, superior parietal and frontal cortices, the left posterior middle temporal cortex/lateral occipital complex (PMTC/LOC), the midcingulate, and the cerebellum. In none of these regions, with the exception of the cerebellum, were any main effects for either object information (noxious vs. neutral) or action (grasps vs. withdrawals) found, even at liberal thresholds (Supporting Information). The IFG is often regarded as a key node in the human mirror neuron system. Our data suggest that this role does not emerge from an encoding of single action or object properties, but from an integration of the action's appropriateness in the object context [cf. de Lange et al.,2008]. This is consistent with recent findings that the motor priming effects during action observation reflect a matching of action to the affordances of the goal object [Bach et al.,2011; see also Lindemann et al.,2011]. Regions in the left PMTC/LOC identified by the appropriateness task are often seen in action observation tasks and have been associated with action semantics [Noppeney,2008], specifically the judgment of action–object appropriateness [Kellenbach et al.,2003] as was found here. This area is a vital node in a visual and tactile object recognition stream [Reed et al.,2004], which processes visual object information and is active during tactile imagery [Miquée et al.,2008] and action observation [Caspers et al.,2010]. The MCC, which contributes to grasp initiation and termination [Rostomily et al.,1991; Shima and Tanji,1998] and has been associated with motor‐related processing during pain observation [Morrison et al.,2007], also showed greater activation for inappropriate actions.

Regions showing main effects of object or action, but without their interaction, were seen in right hemisphere ventral visual areas, consistent with their role in discriminating objects during action observation [Meyer et al.,2011]. An area in the ventral visual stream corresponding to the EBA [Downing et al.,2001] was sensitive to action information, responding more strongly to grasps than to withdrawals. The involvement of this area may be connected to the presence of body parts (hands and arms) in the stimuli [Taylor et al.,2007]. The right PMTC/LOC showed stronger responses to grasping actions and noxious objects. Other areas yielded by the painful grasp contrast, such as superior and posterior parietal cortices, were primarily driven by object–action interactions and indeed overlapped with the regions identified by the appropriateness contrast.
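The overlap between the painful grasp and appropriateness contrasts follows from the arithmetic of a 2 × 2 design, which the sketch below illustrates. It is an illustration only and not the analysis pipeline used in this study: the condition labels, the unit contrast weights, and the toy response profiles are all assumptions, as is the coding of painful grasps and neutral withdrawals as the "inappropriate" actions. Under those assumptions, the painful grasp contrast is the sum of the object, action, and appropriateness (interaction) contrasts, so a region driven purely by appropriateness also yields a positive painful grasp contrast.

```python
# Assumed condition order for the 2 x 2 design (labels are hypothetical).
CONDITIONS = ["painful_grasp", "painful_withdrawal",
              "neutral_grasp", "neutral_withdrawal"]

# Assumed contrast weights, one weight per condition in the order above.
CONTRASTS = {
    "object": [1, 1, -1, -1],            # noxious vs. neutral objects
    "action": [1, -1, 1, -1],            # grasps vs. withdrawals
    "appropriateness": [1, -1, -1, 1],   # inappropriate vs. appropriate
    "painful_grasp": [3, -1, -1, -1],    # painful grasps vs. all others
}


def dot(a, b):
    """Inner product of two equal-length weight or response vectors."""
    return sum(x * y for x, y in zip(a, b))


def contrast_values(responses):
    """Apply every contrast to one region's per-condition responses."""
    return {name: dot(w, responses) for name, w in CONTRASTS.items()}


# The painful grasp contrast is the element-wise sum of the other three.
summed = [o + a + i for o, a, i in zip(CONTRASTS["object"],
                                       CONTRASTS["action"],
                                       CONTRASTS["appropriateness"])]

# Toy response profiles (arbitrary units, purely illustrative).
appropriateness_region = [1.0, 0.0, 0.0, 1.0]  # responds to inappropriate actions
painful_grasp_region = [1.0, 0.0, 0.0, 0.0]    # responds to painful grasps only

if __name__ == "__main__":
    print("sum of main effects and interaction:", summed)
    print("appropriateness-like region:", contrast_values(appropriateness_region))
    print("painful-grasp-like region:", contrast_values(painful_grasp_region))
```

In this toy example the appropriateness-like profile produces no object or action main effect but a positive painful grasp contrast, whereas the painful-grasp-like profile loads on all three component contrasts. Separating the two therefore requires inspecting the main effects alongside the interaction, as was done with the low-threshold maps.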

Empathy

Our findings provide an investigation of functional selectivity in anterior parietal somatosensory regions and IPL, indicating that their role in SE extends to social observation. Crucially, they also demonstrate a relationship between somatosensory responses and action understanding. Early functional imaging studies suggested that somatosensory areas contribute very little to the neural response when seeing others' pain or empathizing with it [Morrison et al.,2004; Singer et al.,2004]. Evidence from motor‐ and sensory‐evoked potentials [Avenanti et al.,2005,2007; Bufalari et al.,2007] as well as magnetoencephalography [Betti et al.,2009; Cheng et al.,2008] has challenged this conception. Subsequent neuroimaging studies have increasingly reported visual modulation of secondary somatosensory areas on the inferior parietal operculum [Jackson et al.,2006; Lloyd et al.,2006; Ogino et al.,2007; Saarela et al.,2007]. Indeed, SI and SII are more likely to be activated in experiments involving depictions of somatic, rather than more abstractly mediated, types of observed pain [Keysers et al.,2010; Lamm et al.,2011]. Yet, assigning a functional role to these regions has remained mostly speculative because direct investigations of their functional selectivity in empathy have so far been lacking.

This study provides a unifying framework for empathy, linking somatosensory/IPL activity to prediction of the sensory consequences of others' transitive actions. The observation of others' hand actions [Avikainen et al.,2002; Hasson et al.,2004; Meyer et al.,2011; Rossi et al.,2002] and touch interactions [Blakemore et al.,2005; Ebisch et al.,2008; Keysers et al.,2004; Schaefer et al.,2009] consistently recruits anterior parietal somatosensory areas and PF/PFG. These regions show shared specificity for both observation and execution of hand actions [Dinstein et al.,2007; Gazzola and Keysers,2009; Keysers and Gazzola,2009] and are engaged by watching human and robotic agents perform grasping actions on objects [Gazzola et al.,2007] as well as by a sensory illusion involving hand–object contact [Naito and Ehrsson,2006]. Strikingly, studies demonstrating pain empathy responses in these sensorimotor regions used stimuli in which hands interacted with objects. Anterior parietal somatosensory areas have responded to both painful objects [e.g., needles and knives; Jackson et al.,2006; Lloyd et al.,2006; Morrison and Downing,2007] and disgusting objects [e.g., beetles and worms; Benuzzi et al.,2008] touching hands and feet, with higher responses when the object touching the skin is potentially tissue damaging.

CONCLUSIONS

These findings reveal highly structured, selective responses in somatosensory cortices and IPL to distinct sensory aspects of observed actions. Responses here show a relationship with the degree of past experience the observer has had with the goal object. These regions likely receive inputs from earlier visual areas encoding body parts (EBA) and object identities (PMTC/LOC) as well as tactile sensory properties (SI). Importantly, this network can be activated by the mere observation of the hand–object interactions of others, providing “free” information about the consequences of potentially harmful encounters. The psychophysical results demonstrate that just viewing others' painful actions biases participants to report tactile stimulation even when none occurred. These findings not only demonstrate an integrative role for anterior parietal somatosensory cortices and IPL in action and pain observation but also more closely explore the precise nature of their engagement by action‐ and pain‐related social stimuli as a form of “SE.”

Supporting information

Additional Supporting Information may be found in the online version of this article.

Supplementary Figure 1. Tactile detection experiment: a) Mean tactile detection calibration results. Detections at stimulus intensity 0% are false alarms, whereas detections from 82–90% are hits. b) Mean correct tactile detections (hits). c) Incorrect tactile detections (false alarms). Tactile control experiment: d) Mean reaction times for suprathreshold detection. *Significant difference between means (p < .015). Error bars represent SEM.

Supplementary Figure 2. Auditory control experiment. a) Mean accurate auditory detection rates (hits). b) Incorrect auditory detections (false alarms). c) Likelihood of reporting auditory stimulation (bias). d) Ability to discriminate trials with no auditory stimulation from trials with auditory stimulation (sensitivity, d′). Error bars represent SEM.

Supplementary Figure 3. Cortical surface (Panel A) and axial slices (Panel B) showing low threshold maps (p < .05, uncorrected) of the object, action and interaction (appropriateness) contrasts in the regions significantly activated by the contrast of interest in the main analysis. Images shown are in radiological convention (left side of image corresponds to right side of brain).

Supplementary Table 1. Average across‐subject correlation coefficients are shown for regions of interest in somatosensory cortex/IPL and MCC, separately for grasps of and withdrawals from painful objects (* p < .1; ** p < .05). IPL = inferior parietal lobule, PCG = postcentral gyrus, SI = primary somatosensory cortex, MCC = midcingulate cortex.

Supplementary Table 2. Comparison of tactile detection and auditory control experiment results (* p < .1; ** p < .05; *** p < .01).
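The bias and sensitivity measures named in the supplementary figures are standard signal detection quantities, and the sketch below shows one common way to compute them from hit and false alarm counts. It is a minimal illustration under stated assumptions, not the exact procedure used in this study: the function name `sdt_measures`, the log-linear correction for extreme rates, and the example counts are all hypothetical.

```python
from statistics import NormalDist


def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, criterion) from trial counts.

    A log-linear correction (add 0.5 to each count, 1 to each trial total)
    is assumed here so that rates of exactly 0 or 1 stay finite after the
    z-transform. Other corrections exist; this one is illustrative.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)

    z = NormalDist().inv_cdf  # inverse of the standard normal CDF

    d_prime = z(hit_rate) - z(fa_rate)            # sensitivity
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))  # bias; negative = liberal
    return d_prime, criterion


if __name__ == "__main__":
    # Hypothetical counts: the second observer reports stimulation more
    # readily on both stimulus-present and stimulus-absent trials.
    print(sdt_measures(hits=15, misses=5, false_alarms=5, correct_rejections=15))
    print(sdt_measures(hits=18, misses=2, false_alarms=8, correct_rejections=12))
```

A more negative criterion indicates a greater tendency to report stimulation regardless of whether it occurred, which is the sense of "bias" relevant to reports of touch on trials without stimulation. Sensitivity (d′) instead indexes how well stimulated and unstimulated trials are told apart.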

Acknowledgements

The authors thank Matthew Richins, Astrid Roeh, the radiographers, and staff at Ysbyty Gwynedd Hospital in Bangor for valuable help with data collection and Håkan Olausson, Donna Lloyd, Paul Downing, and two anonymous reviewers for helpful comments on early drafts.

Re‐use of this article is permitted in accordance with the Terms and Conditions set out at http://wileyonlinelibrary.com/onlineopen#OnlineOpen_Terms

REFERENCES

- Avenanti A, Bueti D, Galati G, Aglioti SM (2005): Transcranial magnetic stimulation highlights the sensorimotor side of empathy for pain. Nat Neurosci 8: 955–960.

- Avenanti A, Bolognini N, Maravita A, Aglioti SM (2007): Somatic and motor components of action simulation. Curr Biol 17: 2129–2135.

- Avikainen S, Forss N, Hari R (2002): Modulated activation of the human SI and SII cortices during observation of hand actions. Neuroimage 15: 640–646.

- Bach P, Knoblich G, Gunter TC, Friederici AD, Prinz W (2005): Action comprehension: Deriving spatial and functional relations. J Exp Psychol Hum Percept Perform 31: 465–479.

- Bach P, Peelen MV, Tipper SP (2010): On the role of object information in action observation: An fMRI study. Cereb Cortex 20: 2798–2809.

- Bach P, Bayliss AP, Tipper SP (2011): The predictive mirror: Interactions of mirror and affordance processes during action observation. Psychon Bull Rev 18: 171–176.

- Benuzzi F, Lui F, Duzzi D, Nichelli PF, Porro CA (2008): Does it look painful or disgusting? Ask your parietal and cingulate cortex. J Neurosci 28: 923–931.

- Betti V, Zappasodi F, Rossini PM, Aglioti SM, Tecchio F (2009): Synchronous with your feelings: Sensorimotor γ band and empathy for pain. J Neurosci 29: 12384–12392.

- Binkofski F, Buccino G, Posse S, Seitz RJ, Rizzolatti G, Freund H (1999): A fronto‐parietal circuit for object manipulation in man: Evidence from an fMRI‐study. Eur J Neurosci 11: 3276–3286.

- Blakemore SJ, Bristow D, Bird G, Frith C, Ward J (2005): Somatosensory activations during the observation of touch and a case of vision‐touch synaesthesia. Brain 128: 1571–1583.

- Bonini L, Serventi FU, Simone L, Rozzi S, Ferrari PF, Fogassi L (2011): Grasping neurons of monkey parietal and premotor cortices encode action goals at distinct levels of abstraction during complex action sequences. J Neurosci 31: 5876–5886.

- Bodegård A, Geyer S, Grefkes C, Zilles K, Roland PE (2001): Hierarchical processing of tactile shape in the human brain. Neuron 31: 317–328.

- Bufalari I, Aprile T, Avenanti A, Di Russo F, Aglioti SM (2007): Empathy for pain and touch in the human somatosensory cortex. Cereb Cortex 17: 2553–2561.

- Carlsson K, Petrovic P, Skare S, Petersson KM, Ingvar M (2000): Tickling expectations: Neural processing in anticipation of a sensory stimulus. J Cogn Neurosci 12: 691–703.

- Caspers S, Geyer S, Schleicher A, Mohlberg H, Amunts K, Zilles K (2006): The human inferior parietal cortex: Cytoarchitectonic parcellation and interindividual variability. Neuroimage 33: 430–448.

- Caspers S, Eickhoff SB, Geyer S, Scheperjans F, Mohlberg H, Zilles K, Amunts K (2008): The human inferior parietal lobule in stereotaxic space. Brain Struct Funct 212: 481–495.

- Caspers S, Zilles K, Laird AR, Eickhoff SB (2010): ALE meta‐analysis of action observation and imitation in the human brain. Neuroimage 50: 1148–1167.

- Cheng Y, Lin C‐P, Liu H‐L, Hsu Y‐Y, Lim K‐E, Hung D, Decety J (2007): Expertise modulates the perception of pain in others. Curr Biol 17: 1708–1713.

- Cheng Y, Yang CY, Lin CP, Lee PL, Decety J (2008): The perception of pain in others suppresses somatosensory oscillations: A magnetoencephalography study. Neuroimage 40: 1833–1840.

- Cunnington R, Windischberger C, Robinson S, Moser E (2006): The selection of intended actions and the observation of others' actions: A time‐resolved fMRI study. Neuroimage 29: 1294–1302.

- de Lange FP, Spronk M, Willems RM, Toni I, Bekkering H (2008): Complementary systems for understanding action intentions. Curr Biol 18: 454–457.

- Dijkerman HC, de Haan EH (2007): Somatosensory processes subserving perception and action. Behav Brain Sci 30: 189–201.

- Dinstein I, Hasson U, Rubin N, Heeger DJ (2007): Brain areas selective for both observed and executed movements. J Neurophysiol 98: 1415–1427.

- di Pellegrino G, Fadiga L, Fogassi L, Gallese V, Rizzolatti G (1992): Understanding motor events: A neurophysiological study. Exp Brain Res 91: 176–180.

- Dong WK, Chudler EH, Sugiyama K, Roberts VJ, Hayashi T (1994): Somatosensory, multisensory, and task‐related neurons in cortical area 7b (PF) of unanesthetized monkeys. J Neurophysiol 72: 542–564.

- Downing PE, Jiang Y, Shuman M, Kanwisher N (2001): A cortical area selective for visual processing of the human body. Science 293: 2470–2473.

- Ebisch SJ, Perrucci MG, Ferretti A, Del Gratta C, Romani GL, Gallese V (2008): The sense of touch: Embodied simulation in a visuotactile mirroring mechanism for observed animate or inanimate touch. J Cogn Neurosci 20: 1611–1623.

- Eickhoff SB, Amunts K, Mohlberg H, Zilles K (2006a): Human parietal operculum I. Cytoarchitectonic mapping of subdivisions. Cereb Cortex 16: 254–267.

- Eickhoff SB, Lotze M, Wietek B, Amunts K, Enck P, Zilles K (2006b): Human parietal operculum II: Stereotaxic maps and correlation with functional imaging results. Cereb Cortex 16: 268–279.

- Eickhoff SB, Grefkes C, Zilles K, Fink GR (2007): The somatotopic organization of cytoarchitectonic areas on the human parietal operculum. Cereb Cortex 17: 1800–1811.

- Fogassi L, Ferrari PF, Gesierich B, Rozzi S, Chersi F, Rizzolatti G (2005): Parietal lobe: From action organization to intention understanding. Science 308: 662–667.

- Fox PT, Lancaster JL (2002): Mapping context and content: The BrainMap model. Nat Rev Neurosci 3: 319–321.

- Friston KJ, Zarahn E, Josephs O, Henson RN, Dale AM (1999): Stochastic designs in event‐related fMRI. Neuroimage 10: 607–619.

- Gazzola V, Keysers C (2009): The observation and execution of actions share motor and somatosensory voxels in all tested subjects: Single‐subject analyses of unsmoothed fMRI data. Cereb Cortex 19: 1239–1255.