Abstract

One of the most critical issues in mental health services research is the gap between what is known about effective treatment and what is provided to consumers in routine care. Concerted efforts are required to advance implementation science and produce skilled implementation researchers. This paper seeks to advance implementation science in mental health services by providing an overview of the emergence of implementation as an issue for research, by addressing key issues of language and conceptualization, by presenting a heuristic skeleton model for the study of implementation processes, and by identifying the implications for research and training in this emerging field.

Keywords: Implementation, Evidence-based practice, Mental health services, Translation two research

One of the most critical issues in mental health services research is the gap between what is known about effective treatment and what is provided to and experienced by consumers in routine care in community practice settings. While university-based controlled studies yield a growing supply of evidence-based treatments and while payers increasingly demand evidence-based care, there is little evidence that such treatments are either adopted or successfully implemented in community settings in a timely way (Bernfeld et al. 2001; Institute of Medicine 2001; National Advisory Mental Health Council 2001; President's New Freedom Commission on Mental Health 2003; U.S. Department of Health and Human Services 1999, 2001, 2006). Indeed new interventions are estimated to “languish” for 15–20 years before they are incorporated into usual care (Boren and Balas 1999). The implementation gap prevents our nation from reaping the benefit of billions of US tax dollars spent on research and, more important, prolongs the suffering of millions of Americans who live with mental disorders (President's New Freedom Commission on Mental Health 2003). Ensuring that effective interventions are implemented in diverse settings and populations has been identified as a priority by NIMH Director Thomas Insel (2007).

The gap between care that is known to be effective and care that is delivered reflects, in large measure, a paucity of evidence about implementation. Most information about implementation processes relies on anecdotal evidence, case studies, or highly controlled experiments that have limited external validity (Glasgow et al. 2006) and yield few practical implications. A true science of implementation is just emerging. Because of the pressing need to accelerate our understanding of successful implementation, concerted efforts are required to advance implementation science and produce skilled implementation researchers.

This paper seeks to advance implementation science in mental health services by providing an overview of the emergence of implementation as an issue for research, by addressing key issues of language and conceptualization, by presenting a skeleton heuristic model for the study of implementation processes, and by identifying the implications for research and training in this emerging field.

An Emerging Science

The seminal systematic review on the diffusion of service innovations conducted by Trisha Greenhalgh et al. (2004) included a small section on implementation, which was defined as “active and planned efforts to mainstream an innovation within an organization” (Greenhalgh et al. 2004, p. 582). Their review led these authors to conclude that “the evidence regarding the implementation of innovations was particularly complex and relatively sparse” and that “at the organizational level, the move from considering an adoption to successfully routinizing it is generally a nonlinear process characterized by multiple shocks, setbacks, and unanticipated events” (Greenhalgh et al. 2004, p. 610). They characterized the lack of knowledge about implementation and sustainability in health care organizations as “the most serious gap in the literature … uncovered” (Greenhalgh et al. 2004, p. 620) in their review.

Fortunately, there is evidence that the field of implementation science is truly emerging. In particular, the mental health services field appears to be primed to advance the science of implementation, as reflected by several initiatives. NIMH convened a 2004 meeting, “Improving the fit between mental health intervention development and service systems.” Its report underscored that “few tangible changes have occurred” in intervention implementation (National Institute of Mental Health 2004), requiring new and innovative efforts to advance implementation knowledge and the supply of implementation researchers. The meeting revealed a rich body of theory ripe for shaping testable implementation strategies and demonstrated that diverse scholars could be assembled around the challenge of advancing implementation science. This meeting was followed by other NIMH events, including a 2005 meeting, “Improving the fit between evidence-based treatment and real world practice,” a March 2007 technical assistance workshop for investigators preparing research proposals in the areas of dissemination or implementation, and sessions and an interest group devoted to implementation research at the 2007 NIMH Services Research Conference.

Implementation research is advancing in “real time.” The NIH released the Dissemination and Implementation Program Announcement (PAR-06-039), appointed an NIMH Associate Director for dissemination and implementation research, and established a cross-NIH ad hoc review committee on these topics. NIH has funded a small number of research grants that directly address dissemination and implementation (including randomized trials of implementation strategies). More recently, the Office of Behavioral and Social Sciences Research (OBSSR) launched an annual NIH Dissemination and Implementation conference, and the journal Implementation Science was launched in 2006. While these developments are important stepping stones to the development of the field of implementation science, they reflect only the beginnings of an organized and resourced approach to bridging the gap between what we know and what we deliver.

Evolving Language for an Emerging Field

In emerging fields of study, language and constructs are typically fluid and subject to considerable discussion and debate. Implementation research is no exception. Creating “a common lexicon…of implementation…terminology” is important both for the science of implementation and for grounding new researchers in crucial conceptual distinctions (National Cancer Institute 2004). Indeed, the development of theoretical frameworks and implementation models of change is currently hampered by “diverse terminology and inconsistent definition of terms such as diffusion, dissemination, knowledge transfer, uptake or utilization, adoption, and implementation” (Ellis et al. 2003).

Implementation Research Defined

Implementation research is increasingly recognized as an important component of mental health services research and as a critical element in the Institute of Medicine's translation framework, particularly its Roadmap Initiative on Re-Engineering the Clinical Research Enterprise (Rosenberg 2003; Sung et al. 2003). In their plenary address to the 2005 NIMH Mental Health Services Research Conference, “Challenges of Translating Evidence-Based Treatments into Practice Contexts and Service Sectors,” Proctor and Landsverk (2005) located implementation research within the second translation step, that is, between treatment development and the integration of efficacious treatments in local systems. The second translation step underscores the need for implementation research, distinct from efficacy and effectiveness research in outcomes, substance, and method. A number of similar definitions of implementation research are emerging (Eccles and Mittman 2006). For example, Rubenstein and Pugh (2006) propose a definition of implementation research for health services research:

“Implementation research consists of scientific investigations that support movement of evidence-based, effective health care approaches (e.g., as embodied in guidelines) from the clinical knowledge base into routine use…. Such investigations form the basis for health care implementation science…, a body of knowledge (that can inform)…the systematic uptake of new or underused scientific findings into the usual activities of regional and national health care and community organizations, including individual practice sites” (p. S58).

The CDC has defined implementation research as “the systematic study of how a specific set of activities and designated strategies are used to successfully integrate an evidence-based public health intervention within specific settings” (RFA-CD-07-005).

Dissemination Versus Implementation

NIH PAs on Dissemination and Implementation Research in Health distinguish between dissemination, “the targeted distribution of information and intervention materials to a specific public health or clinical practice audience” with “the intent to spread knowledge and the associated evidence-based interventions,” and implementation, “the use of strategies to introduce or change evidence-based health interventions within specific settings.” The CDC makes similar distinctions. Within this framework, evidence-based practices are first developed and tested through efficacy studies and then refined and adapted through effectiveness studies (which may entail adaptation and modification to increase external validity and feasibility). Resultant findings and EBPs are then disseminated, often passively via simple information dissemination strategies, usually with very little uptake. Considerable evidence suggests that active implementation efforts must follow, for creating evidence-based treatments does not ensure their use in practice (U.S. Department of Health and Human Services 2006). In addition to an inventory of evidence-based practices, the field needs carefully designed strategies developed through implementation research. Implementation research has begun with a growing number of observational studies to assess barriers and facilitators, which are now being followed by a very small number of experimental studies to pilot test, evaluate, and refine specific implementation strategies. This research may lead to further refinement and adoption, yielding implementation “programs” that are often multi-component. These implementation programs are then ready for “spread” to other sites. We would argue (as does The Road Ahead Report; U.S. Department of Health and Human Services 2006) that implementation research in the area of mental health care is needed in a variety of settings, including specialty mental health, medical settings such as primary care where mental health care is also delivered, and non-specialty settings such as criminal justice, school systems, and social services where there is increasing importation of mental health care delivery. In fact, we would also argue that a critical discussion is needed regarding whether implementation research models might differ significantly between these very different sectors or organizational platforms for mental health care delivery.

Diffusion and Translation Research

The CDC defines diffusion research as the study of factors necessary for successful adoption of evidence-based practices by stakeholders and the targeted population, resulting in widespread use (e.g., state or national) (RFA-CD-07-005). Greenhalgh et al. (2004) further distinguish between diffusion, which is the passive spread of innovations, and dissemination, which involves “active and planned efforts to persuade target groups to adopt an innovation” (p. 582). Thus implementation is the final step in a series of events, characterized under the broadest umbrella of translation research, that includes a wide range of complex processes (diffusion, dissemination, and implementation).

Practice or Treatment Strategies Versus Implementation Strategies

Two technologies are required for evidence-based implementation: practice or treatment technology, and a distinct technology for implementing those treatments into service system settings of care. Implementation is dependent on a supply of treatment strategies. Presently, a “short list” of interventions that have met a threshold of evidence (according to varying criteria) is ready for or has moved into implementation; examples include Multisystemic Therapy (MST), Assertive Community Treatment (ACT), supported employment, and chronic care management/collaborative care. Research suggests that features of the practices themselves bear upon “acceptability,” “uptake,” and “fit” or compatibility with the context for use (Cain and Mittman 2002; Isett et al. 2007). Typically, issues of fidelity, adaptation, and customization arise, leading ultimately to the question, “where are the bounds of flexibility before effectiveness is compromised?”

Implementation strategies are specified activities designed to put into practice an activity or program of known dimensions (Fixsen et al. 2005). In short, they comprise deliberate and purposeful efforts to improve the uptake and sustainability of treatment interventions. Implementation strategies must deal with the contingencies of various service systems or sectors (e.g., specialty mental health, medical care, and non-specialty) and practice settings, as well as the human capital challenge of staff training and support, and various properties of interventions that make them more or less amenable to implementation. They must be described in sufficient detail that independent observers can detect the presence and strength of the specific implementation activities. Successful implementation requires that specified treatments are delivered in ways that ensure their success in the field, that is, feasibly and with fidelity, responsiveness, and sustainability (Glisson and Schoenwald 2005).

Currently, the number of identifiable evidence-based treatments clearly outstrips the number of evidence-based implementation strategies. Herschell et al. (2004) review the progress, and lack thereof, in the dissemination of EBPs. Several groups of treatment and service developers have produced similar approaches to taking an effective model to scale, but methods have been idiosyncratic, and as likely to be informed by field experience as by theory and research. Most implementation strategies remain poorly defined, can be distinguished grossly as “top down” and “bottom up,” and typically involve a “package” of strategies. These include a variety of provider decision supports, EBP-related tool kits and algorithms, and practice guidelines; system and organizational interventions from management science; economic, fiscal, and regulatory incentives; multi-level quality improvement strategies (e.g., the Institute for Healthcare Improvement's Collaborative Breakthrough series, the VA QUERI program); and business strategies (e.g., the Deming/Shewhart Plan-Do-Check-Act Cycle). Some implementation strategies are becoming systematic, manualized, and subject to empirical test, including Glisson's ARC model and Chaffin and Aarons' “cascading diffusion” model based on work by Chamberlain et al. (in press). The field can ill afford to continue an idiosyncratic approach to a public health issue as crucial as the research-practice gap. The Road Ahead report calls for research that can develop better understanding of mechanisms underlying successful implementation of evidence-based interventions in varying service settings and with culturally and ethnically diverse populations.

Implementation Versus Implementation Research

Implementation research comprises study of processes and strategies that move, or integrate, evidence-based effective treatments into routine use, in usual care settings. Understanding these processes is crucial for improving care, but currently this research is largely case study or anecdotal report. Systematic, empirical or robust research on implementation is just beginning to emerge, and this field requires substantial methodological development.

Implementation Research: The Need for Conceptual Models

The emerging field of implementation research requires a comprehensive conceptual model to intellectually coalesce the field and guide implementation research. This model will require language with clearly defined constructs as discussed above, a measurement model for these key constructs, and an analytic model hypothesizing links between measured constructs. Grimshaw (2007) noted at the 2007 OBSSR D & I Conference that we now have >30 definitions of dissemination and implementation and called for the development of a theory and fewer small theories to guide this emerging field. In our view, no single theory exists because the range of phenomena of interest is broad, requiring different perspectives. This paper seeks to advance the field by proposing a “skeleton” model, upon which various theories can be placed to help explain aspects of the broader phenomena.

Stage, Pipeline Models

Our developing implementation research conceptual model draws from three extant frameworks. First is the “stage pipeline” model developed by the National Cancer Institute (2004) and adapted for health services by VA's QUERI program (Rubenstein and Pugh 2006). In the research pipeline, scientists follow a five-phase plan, beginning with hypothesis development and methods development (Phases 1 and 2), continuing into controlled intervention trials (Phase 3 efficacy) and then defined population studies (Phase 4 effectiveness), and ending with demonstration and implementation (Phase 5). Here the process is considered a linear progression with implementation as the “final” stage of intervention development (Proctor and Landsverk 2005). However, Addis (2002) has reviewed the limitations of unidirectional, linear models of dissemination. The NIH Roadmap (nihroadmap.nih.gov) has challenged the research community to re-engineer the clinical research enterprise, namely to move evidence-based treatments “to bedside” into service delivery settings and communities, thereby improving our nation's health. The Roadmap has compressed the five stages into two translation steps, with the first step moving from basic science to intervention development and testing, and the second translation step moving from intervention development to implementation in real world practice settings. However, “pipeline” models assume an unrealistic unilinear progression from efficacy to broad uptake, remaining unspecified regarding the organizational and practice contexts for these stages. Moreover, we would argue that NIH's primary focus, as indicated by resource allocation, remains the first translation step, with little specification of or emphasis on the second translation step.

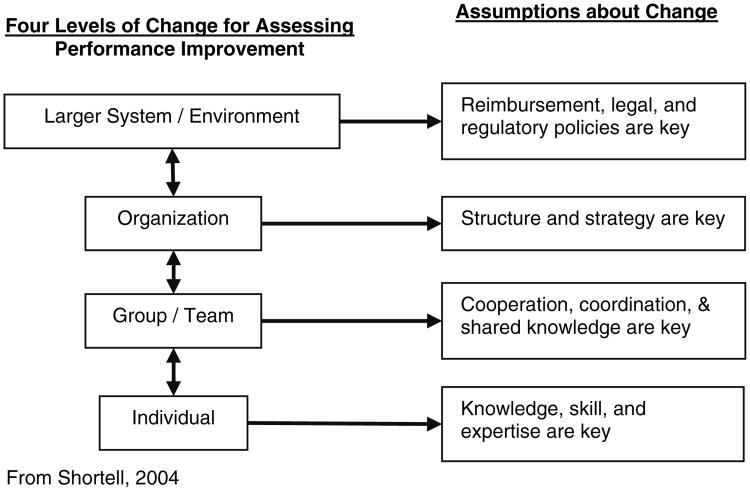

Multi-level Models of Change

Our heuristic model further draws from Shortell's (2004) multi-level model of “change for performance improvement”. This framework offers enormous benefit because it specifies multiple levels in the practice context that are likely to be a key to change. This model points to hierarchical levels ranging from what Greenhalgh and colleagues would characterize as the outer context (interorganizational) through the inner context (organizational) to the actual practice setting where providers and consumers interact. We posit that the four levels in the Shortell model provide contexts where concepts must be specified and addressed in implementation research as follows.

The model's top level, the policy context, is addressed in a wide range of disciplines. Implementation research has a long history in policy research, where most studies take a “top-down” (Van Meter and Van Horn 1975) or a “bottom-up” (Linder and Peters 1987) perspective. Legislatures mandate policies, with some form of implementation more or less assured. But policy translation into practice through corresponding regulation needs empirical study. Policy implementation research is often retrospective, using focus group or case study methodology (Conrad and Christianson 2004; Cooper and Zmud 1990; Essock et al. 2003; Herschell et al. 2004), which argues for greater use of hypothesis-driven statistical approaches in policy implementation research.

The middle two levels, “organization” and “group/team,” are informed by organizational research, with some rigorous study of topics such as business decision support systems (Alavi and Joachimsthaler 1992) and implementing environmental technology (Johnston and Linton 2000). Their themes echo those of health services: “champions” and environmental factors were associated with successful implementation (of material requirements planning) in manufacturing (Cooper and Zmud 1990). Also relevant to the organizational level are provider financial incentives to improve patient health outcomes and consumer satisfaction. Conrad and Christianson (2004) offer a well-specified graphic model of the interactions between local health care market and social environments (health plans, provider organizations, and decisions of organizations, physicians, and patients) with mathematically derived statements. Financial and market factors at the organizational level clearly affect evidence-based practice implementation in mental health services (Proctor et al. 2007). Moreover, agency organizational culture may wield the greatest influence on acceptance of empirically supported treatments and the willingness and capacity of a provider organization to implement such treatments in actual care. Indeed, the literature on the organizational context of implementation reflects the most substantial deviation from the linear, “pipeline” phase models found in the literature emphasizing development and spread of interventions. Complexity science (Fraser and Greenhalgh 2001; Litaker et al. 2006) aims to capture the practice landscape, while quality improvement approaches such as the IHI and QUERI models further inform implementation at the organizational level.

Finally, at the bottom level, the key role of individual behavior in implementation must be addressed. Individual providers have been the focus of a sizable body of research on implementing practice guidelines in medical settings and EBPs in mental health settings (Baydar et al. 2003; Blau 1964; Ferlie and Shortell 2001; Gray 1989; Herschell et al. 2004; Woolston 2005). Qualitative studies have documented barriers and stakeholders' attitudes toward EBP (Baydar et al. 2003; Corrigan et al. 2001; Ferlie and Shortell 2001). Essock et al. (2003) have identified stakeholder concerns about EBP that impede implementation. The limitations of the guideline literature prompted Rubenstein and Pugh (2006) to recommend that clinical guideline developers routinely incorporate implementation research findings into new guideline recommendations.

Models of Health Service Use

Models of implementation can further be informed by well-known and well-specified conceptual models of health services that distinguish structural characteristics, clinical care processes, and outcomes, including Aday and Andersen's (1974) comprehensive model of access to care, Pescosolido's “Network-Episode Model” of help-seeking behavior that has informed research on mental health care utilization (Costello et al. 1998; Pescosolido 1991, 1992), and Donabedian's (1980, 1988) pioneering work on quality of care (McGlynn et al. 1988). While these models do not directly address implementation, they underscore that active ingredients of strategy must be specified and linked to multiple types of outcomes, as discussed below.

A Draft Conceptual Model of Implementation Research

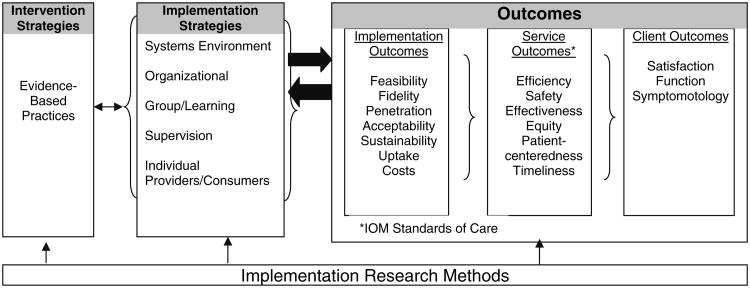

Informed by these three frameworks, we propose a heuristic model that posits nested levels, reflects prevailing quality improvement perspectives, and distinguishes but links key implementation processes and outcomes (Fig. 1). An outgrowth of Proctor & Landsverk's plenary address at the 2005 NIMH services research meeting, the model distinguishes two required “core technologies” or strategies: evidence-based intervention strategies and separate strategies for implementing those interventions in usual care. It also provides for classification of multi-level implementation strategies, drawing on Fig. 2. The model accommodates theories of dissemination (Torrey et al. 2001), transportability (Addis 2002; Hohmann and Shear 2002), implementation (Beutler et al. 1995), diffusion of innovation [posited most prominently by the seminal work of Rogers (1995) as a social process], and literatures that have been reviewed extensively and synthesized (Glasgow et al. 2001; Greenhalgh et al. 2004; Proctor 2004). Indeed, some implementation strategies (distinct from empirically based treatments) are emerging for facilitating the transport and implementation of evidence-based medical (Clarke 1999; Garland et al. 2001), substance abuse (Backer et al. 1995; Brown et al. 1995; Brown et al. 2000), and mental health (Blasé et al. 2005) treatments. While some heuristics (Ferlie and Shortell 2001; Hohmann and Shear 2002; Schoenwald and Hoagwood 2001) for transportability, implementation, and dissemination have been posited (Brown and Flynn 2002; Chambers et al. 2005), this literature is too often considered from a single disciplinary perspective (e.g., organizational, economic, or psychological), and has not “placed” key variables within levels. Nor has it distinguished types of outcomes.
Our draft model illustrates three distinct but interrelated types of outcomes (implementation, service, and client outcomes) that are geared to constructs from the four-level model (Committee on Crossing the Quality Chasm: Adaptation to Mental Health and Addictive Disorders 2006; Institute of Medicine 2001). Furthermore, this model informs methodology, which long has plagued diffusion research (Beutler et al. 1995; McGlynn et al. 1988; Rogers 1995). Systematic studies of implementation require creative multi-level designs to address the challenges of sample size estimation; by definition, larger system levels carry smaller sample sizes, and thus lower potential statistical power, than do individual-level analyses. The model requires involvement of multiple stakeholders at multiple levels.

Fig. 1. Conceptual model of implementation research.

Fig. 2. Levels of change.

Yet to be discovered is whether one comprehensive implementation model may emerge, or different models reflecting specific clinical conditions, treatment types (psycho-social vs. pharmacological, or staged interventions), or service delivery settings (specialty mental health vs. primary care vs. non-medical sectors such as child welfare, juvenile justice, geriatric, homeless services). The relationship between the first column, evidence-based practices, and the second column, implementation strategies, needs to be empirically tested, particularly in light of recent evidence that different evidence-based practices carry distinct implementation challenges (Isett et al. 2007).

Implications for Research and Training

Advancing the field of implementation science has important implications. This paper identifies and briefly discusses three issues: the methodological issues in studying implementation processes, who should conduct this important research, and the need to train for this emergent field.

The Challenge of Implementation Research Methods

The National Institute of Mental Health (2004) report on Advancing the Science of Implementation calls for advances in the articulation of constructs relevant to implementation, converting constructs into researchable questions, and advancing the measurement of constructs key to implementation research. Given its inherent multilevel nature, as demonstrated in the prior section, the advancement of implementation research requires attention to a number of formidable design and measurement challenges. While a detailed analysis of the methodological challenges in implementation research is beyond the scope of this paper, two of the major issues will be briefly identified, namely measurement and design.

Regarding the measurement challenge, the key processes involved (both ESTs and implementation processes) must be modeled, measured, and their fidelity assessed. Moreover, researchers must conceptualize and measure the distinct intervention outcomes and implementation outcomes (Fixsen et al. 2005). Improvements in consumer well-being provide the most important criteria for evaluating both treatment and implementation strategies: for the particular individuals who received treatments in the case of treatment research, and for the pool of individuals served by the providing system in the case of implementation research. But implementation research requires outcomes that are conceptually and empirically distinct from those of service and treatment effectiveness. These include the intervention's penetration within a target organization, its acceptability to and adoption by multiple stakeholders, the feasibility of its use, and its sustainability over time within the service system setting. The measurement challenge for intervention processes and outcomes requires that measures developed for the conduct of efficacy trials be adapted and tested for feasible and efficient use in ongoing service systems. It is unlikely that the extensive and data-rich batteries of measures developed for efficacy studies, including those developed for efficacy tests of organizational interventions, will be appropriate or feasible for implementation in service systems. Thus researchers need to find ways to shorten measurement tools, recalibrate “laboratory” versions, and link adapted measures to the outcomes monitored through service system administrative data.

In the area of design, studying EBP implementation in even one service system or organization is conceptually and logistically ambitious, given multiple stakeholders and levels of influence. Yet even complex studies have inherently limited sample size, so implementation research is typically beset by a “small n” problem. Moreover, to capture the multiple levels affecting implementation, researchers must employ multi-level designs and corresponding methods for statistical analysis. Other challenges include modeling and analyzing relationships among variables at multiple levels, and costing both interventions and implementation.
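The “small n” problem can be made concrete with a minimal sketch. It assumes the standard design-effect formula for equal-sized clusters, 1 + (m − 1) × ICC, which is not drawn from this paper; the function names and the numbers (12 clinics, 50 consumers per clinic, an intraclass correlation of 0.10) are hypothetical and chosen only for illustration.

```python
def design_effect(cluster_size: float, icc: float) -> float:
    """Design effect for equal-sized clusters: 1 + (m - 1) * ICC.

    cluster_size (m) is the number of individuals per cluster (e.g., per clinic);
    icc is the intraclass correlation of the outcome within clusters.
    """
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    if not 0.0 <= icc <= 1.0:
        raise ValueError("icc must lie between 0 and 1")
    return 1.0 + (cluster_size - 1.0) * icc


def effective_sample_size(n_clusters: int, cluster_size: float, icc: float) -> float:
    """Number of independent observations the clustered sample is 'worth'."""
    total_individuals = n_clusters * cluster_size
    return total_individuals / design_effect(cluster_size, icc)


if __name__ == "__main__":
    # Hypothetical study: 12 clinics, 50 consumers each, ICC = 0.10 (all assumed).
    n_clinics, per_clinic, icc = 12, 50, 0.10
    deff = design_effect(per_clinic, icc)
    ess = effective_sample_size(n_clinics, per_clinic, icc)
    print(f"Enrolled individuals: {n_clinics * per_clinic}")
    print(f"Design effect: {deff:.2f}")
    print(f"Effective sample size: {ess:.1f}")
```

Under these assumed values, 600 enrolled consumers carry roughly the statistical information of about 100 independent observations, which suggests why adding organizations, rather than individuals within organizations, tends to matter most for power in multi-level implementation studies.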

Thus the maturation of implementation science requires a number of methodological advances. Most early research on implementation, especially that on the diffusion of innovation, has employed naturalistic case study approaches. Only recently have prospective, experimental designs been introduced and the methodological issues identified here begun to be systematically addressed.

Who Should Conduct Implementation Research? A Conjoining of Perspectives

Implementation research, whether health (Rubenstein and Pugh 2006) or mental health, is necessarily multi-disciplinary and requires a convergence of perspectives. To tackle the challenges of implementation, Bammer (2003) calls for collaboration and integration both within and outside the research sphere. Researchers must work together across boundaries, for no one research tradition alone can address the fundamental issue of public health impact (Stetler et al. 2006). Proctor and Landsverk (2005) urged treatment developers and mental health services researchers to partner for purposes of advancing research on implementation, as did Gonzales et al. (2002), who call for truly collaborative, innovative, and interdisciplinary work to overcome implementation and dissemination obstacles. Implementation research requires a partnership of treatment developers, service system researchers, and quality improvement researchers. Yet their perspectives will not be sufficient. They need to be joined by experts from fields such as economics, business, and management. Collaboration is needed between treatment developers who bring expertise in their programs, mental health services researchers who bring expertise in service settings, and quality improvement researchers who bring conceptual frameworks and methodological expertise for the multilevel strategies required to change systems, organizations, and providers.

Because implementation research necessarily occurs in the “real world” of community-based settings of care, implementation researchers also must partner with community stakeholders. National policy directives from NIMH, CDC, IOM, and AHRQ (Institute of Medicine 2001; National Advisory Mental Health Council 2001; Trickett and Ryerson Espino 2004; US Department of Health and Human Services 2006) urge researchers to work closely with consumers, practitioners, policy makers, payers, and administrators around the implementation of evidence-based practices. The recent NIMH Workgroup Report, “The Road Ahead: Research Partnerships to Transform Services,” asserts that truly collaborative and sustainable partnerships can significantly improve the public health impact of research (US Department of Health and Human Services 2006). Successful collaboration demands “transactional” models in which all stakeholders equally contribute to and gain from the collaboration and where cultural exchange is encouraged. Such collaborations can move beyond traditional, unidirectional models of “diffusion” of research from universities to practice, to a more reciprocal, interactive “fusing” of science and practice (Beutler et al. 1995; Glasgow et al. 2001; Hohmann and Shear 2002). Implementation research is an inherently collaborative form of inquiry in which researchers, practitioners, and consumers must leverage their different perspectives and competencies to produce new knowledge about a complex process.

Knowledge of partnered research is evolving, stimulated in part by network development cores to NIMH advanced centers, by research infrastructure support programs, and by reports such as the NIMH Road Ahead report. Yet the partnership literature remains largely anecdotal, case study, or theoretical, with collaboration and partnership as broadly defined ideals; “there is more theology than conclusion, more dogma than data” (Trickett and Ryerson Espino 2004, p. 62) and there are few clearly articulated models to build upon. Recent notable advances in the mental health field include Sullivan et al.'s (2005) innovative mental health clinical partnership program within the Veterans Healthcare Administration, designed to enhance the research capacity of clinicians and the clinical collaborative skills of researchers. Evaluation approaches, adapted from public health participatory research (Naylor et al. 2002), are emerging to systematically examine each partnership process and the extent to which the equitable participatory goals are achieved. Wells et al. (2004) also advocate for and work to advance Community-Based Participatory Research (CBPR) in mental health services research. McKay (in press) models collaboration between researchers and community stakeholders, highlighting various collaborative opportunities and sequences across the research process. Borkovec (2004) cogently argues for developing Practice Research Networks, providing an infrastructure for practice-based research and more efficient integration of research and practice. Community psychology, prevention science, and public health literatures also provide guidance for researcher-agency partnerships and strategies for collaboratives that involve CBO representatives, community stakeholders, academic researchers, and service providers (Israel et al. 1998; Trickett and Ryerson Espino 2004).
Partnerships between intervention and services researchers, policy makers, administrators, providers, and consumers hold great promise for bridging the oft-cited gap between research and practice. Implementation research requires unique understanding and use of such partnerships.

Training: Building Human Capital for Implementation Research

No single university-based discipline or department is “home” to implementation science. Nor does any current NIMH-funded pre- or post-doctoral research training program (e.g., T32) explicitly focus on preparing new researchers for implementation research. The absence of organized programs of research and training on implementation research underscores the importance of training in this field. The Bridging Science and Services Report (National Institute of Mental Health 1999) encourages the use of NIMH-funded research centers as training sites, for research centers are information-rich environments that demand continual, intensive learning and high levels of productivity from their members (Ott 1989), attract talented investigators with convergent and complementary interests, and thus provide ideal environments for training in emerging fields such as implementation science (Proctor 1996). The developmental status of implementation science underscores the urgency of advancing the human capital, as well as intellectual capital, for this important field.

Conclusion

In a now classic series of articles in Psychiatric Services, a blue-ribbon panel of authors reviewed the considerable evidence on the effectiveness and cost saving of several mental health interventions. In stark contrast to the evidence about treatments, these authors could find “no research specifically on methods for implementing” these treatments (Phillips et al. 2001, p. 775), nor any proven implementation strategies (Drake et al. 2001). Unfortunately, 7 years later, implementation science remains embryonic. Members of an international planning group that recently launched the journal Implementation Science concur that systematic, proactive efforts are required to advance the field of implementation science, to establish innovative training programs, to encourage and support current implementation researchers, and to recruit and prepare a new generation of researchers focused specifically on implementation. Ultimately, implementation science holds promise to reduce the gap between evidence-based practices and their availability in usual care, and thus contribute to sustainable service improvements for persons with mental disorders. We anticipate that the next decade of mental health services research will require, and be advanced by, the scientific advances associated with implementation science.

Acknowledgments

The preparation of this article was supported in part by the Center for Mental Health Services Research, at the George Warren Brown School of Social Work, Washington University in St Louis, through an award from the National Institute of Mental Health (5P30 MH068579).

Contributor Information

Enola K. Proctor, Email: ekp@wustl.edu, George Warren Brown School of Social Work, Washington University, 1 Brookings Drive Campus Box 1196, St Louis, MO 63130, USA.

John Landsverk, George Warren Brown School of Social Work, Washington University, 1 Brookings Drive Campus Box 1196, St Louis, MO 63130, USA; Child and Adolescent Services Research Center, San Diego Children's Hospital Work, San Diego, CA, USA.

Gregory Aarons, Department of Psychiatry, University of California, San Diego, CA, USA.

David Chambers, Dissemination and Implementation Research Program Division of Services and Intervention Research, National Institute of Mental Health, Bethesda, MD, USA.

Charles Glisson, Children's Mental Health Services Research Center, University of Tennessee, Knoxville, TN, USA.

Brian Mittman, Department of Veterans Affairs, VA Center for Implementation Research & Improvement Science, Greater Los Angeles Healthcare System, Los Angeles, CA, USA.

References

- Aday LA, Andersen R. A framework for the study of access to medical care. Health Services Research. 1974;9:208–220.

- Addis ME. Methods for disseminating research products and increasing evidence-based practice: Promises, obstacles, and future directions. Clinical Psychology: Science and Practice. 2002;9(4):367–378.

- Alavi M, Joachimsthaler EA. Revisiting DSS implementation research: A meta-analysis of the literature and suggestions for researchers. MIS Quarterly. 1992;16(1):95–116.

- Backer TE, David SL, Saucy G, editors. Synthesis of behavioral science learnings about technology transfer in reviewing the behavioral science knowledge base on technology transfer. Rockville, MD: US Department of Health and Human Services; 1995.

- Bammer G. Integration and implementation sciences: Will developing a new specialization improve our effectiveness in tackling complex issues? 2003 Retrieved January 16 2008 from http://www.anu.edu.au/ilsn/

- Baydar N, Reid MJ, Webster-Stratton C. The role of mental health factors and program engagement in the effectiveness of a preventive parenting program for Head Start mothers. Child Development. 2003;74:1433–1453. doi: 10.1111/1467-8624.00616.

- Bernfeld GA, Farrington DP, Leschied AW. Offender rehabilitation in practice: Implementing and evaluating effective programs. New York: Wiley; 2001.

- Beutler LE, Williams RE, Wakefield PJ, Entwistle SR. Bridging scientist and practitioner perspectives in clinical psychology. American Psychologist. 1995;50(12):984–994. doi: 10.1037//0003-066x.50.12.984.

- Blase KA, Fixsen DL, Naoom SF, Wallace F. Operationalizing implementation: Strategies and methods. Tampa: University of South Florida, Louis de la Parte Florida Mental Health Institute; 2005.

- Blau P. Exchange and power in social life. New York: Wiley; 1964.

- Boren SA, Balas EA. Evidence-based quality measurement. Journal of Ambulatory Care Management. 1999;22(3):17–23. doi: 10.1097/00004479-199907000-00005.

- Borkovec TD. Research in training clinics and practice research networks: A route to the integration of science and practice. Clinical Psychology: Science and Practice. 2004;11:211–215.

- Brown BS, Flynn PM. The federal role in drug abuse technology transfer: A history and perspective. Journal of Substance Abuse Treatment. 2002;22:245–257. doi: 10.1016/s0740-5472(02)00228-3.

- Brown SA, Inaba RK, Gillin JC, Schuckit MA, Stewart MA, Irwin MR. Alcoholism and affective disorder: Clinical course of depressive symptoms. American Journal of Psychiatry. 1995;152:45–52. doi: 10.1176/ajp.152.1.45.

- Brown SA, Tapert SF, Tate SR, Abrantes AM. The role of alcohol in adolescent relapse and outcome. Journal of Psychoactive Drugs. 2000;32:107–115. doi: 10.1080/02791072.2000.10400216.

- Cain M, Mittman R. Diffusion of Innovation in Health Care. Oakland, CA: Institute for the Future; 2002. Retrieved September 25 2006, from http://www.chcf.org/documents/ihealth/DiffusionofInnovation.pdf.

- Chamberlain P, Price J, Reid J, Landsverk J. Cascading implementation of a foster parent intervention: Partnerships, logistics, transportability, and sustainability. Child Welfare. in press.

- Chambers D, Ringeisen H, Hickman EE. Federal, state, and foundation initiatives around evidence-based practices for child and adolescent mental health. Child and Adolescent Psychiatric Clinics of North America. 2005;14:307–327. doi: 10.1016/j.chc.2004.04.006.

- Clarke GN. Prevention of depression in at-risk samples of adolescents. In: Essau CA, Petermann F, editors. Depressive disorders in children and adolescents: Epidemiology, risk factors, and treatment. Northvale, NJ: Jason Aronson, Inc; 1999. pp. 341–360.

- Committee on Crossing the Quality Chasm: Adaptation to Mental Health, Addictive Disorders. Improving the quality of health care for mental and substance-use conditions. Washington, DC: National Academies Press; 2006.

- Conrad DA, Christianson JB. Penetrating the “Black Box”: Financial incentives for enhancing the quality of physician services. Medical Care Research and Review. 2004;61:37S–68S. doi: 10.1177/1077558704266770.

- Cooper RB, Zmud RW. Information technology implementation research: A technological diffusion approach. Management Science. 1990;36:123–139.

- Corrigan PW, Steiner L, McCracken SG, Blaser B, Barr M. Strategies for disseminating evidence-based practices to staff who treat people with serious mental illness. Psychiatric Services. 2001;52(12):1598–1606. doi: 10.1176/appi.ps.52.12.1598.

- Costello JE, Pescosolido BA, Angold A, Burns B. A family network-based model of access to child mental health services. Research in Community and Mental Health. 1998;9:165–190.

- Donabedian A. The definition of quality and approaches to its assessment. Ann Arbor, MI: Health Administration Press; 1980.

- Donabedian A. The quality of care: How can it be assessed? Journal of the American Medical Association. 1988;260:1743–1748. doi: 10.1001/jama.260.12.1743.

- Drake RE, Essock SM, Shaner A, Carey KB, Minkoff K, Kola L, et al. Implementing dual diagnosis services for clients with severe mental illness. Psychiatric Services. 2001;52(4):469–476. doi: 10.1176/appi.ps.52.4.469.

- Eccles MP, Mittman BS. Welcome to Implementation Science. Implementation Science. 2006;1(1) doi: 10.1186/1748-5908-1-1.

- Ellis P, Robinson P, Ciliska D, Armour T, Raina P, Brouwers M, et al. Evidence Report/Technology Assessment No 79. Rockville, MD: Agency for Healthcare Research and Quality; 2003. Diffusion and dissemination of evidence-based cancer control interventions (summary) pp. 1–5.

- Essock SM, Goldman HH, Van Tosh L, Anthony WA, Appell CR, Bond GR, et al. Evidence-based practices: Setting the context and responding to concerns. Psychiatric Clinics of North America. 2003;26:919–938. doi: 10.1016/s0193-953x(03)00069-8.

- Ferlie EB, Shortell SM. Improving the quality of health care in the United Kingdom and the United States: A framework for change. Milbank Quarterly. 2001;79:281–315. doi: 10.1111/1468-0009.00206.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature (No FMHI Publication #231) Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, National Implementation Research Network; 2005.

- Fraser SW, Greenhalgh T. Complexity science: Coping with complexity: Educating for capability. British Medical Journal. 2001;323(7316):799–803. doi: 10.1136/bmj.323.7316.799.

- Garland AF, Hough RL, McCabe KM, Yeh M, Wood PA, Aarons GA. Prevalence of psychiatric disorders in youth across five sectors of care. Journal of the American Academy of Child & Adolescent Psychiatry. 2001;40:409–418. doi: 10.1097/00004583-200104000-00009.

- Glasgow RE, Green LW, Klesges LM, Abrams DB, Fisher EB, Goldstein MG, et al. External validity: We need to do more. Annals of Behavioral Medicine. 2006;31(2):105–108. doi: 10.1207/s15324796abm3102_1.

- Glasgow RE, Orleans CT, Wagner EH, Curry SJ, Solberg LI. Does the chronic care model serve also as a template for improving prevention? Milbank Quarterly. 2001;79:579–612. doi: 10.1111/1468-0009.00222.

- Glisson C, Schoenwald SK. The ARC organizational and community intervention strategy for implementing evidence-based children's mental health treatments. Mental Health Services Research. 2005;7(4):243–259. doi: 10.1007/s11020-005-7456-1.

- Gonzales JJ, Ringeisen HL, Chambers DA. The tangled and thorny path of science to practice: Tensions in interpreting and applying “evidence”. Clinical Psychology: Science and Practice. 2002;9:204–209.

- Gray B. Collaborating: Finding common ground for multiparty problems. San Francisco: Jossey-Bass; 1989.

- Greenhalgh T, Robert G, MacFarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: Systematic review and recommendations. Milbank Quarterly. 2004;82(4):581–629. doi: 10.1111/j.0887-378X.2004.00325.x.

- Grimshaw J. Improving the scientific basis of health care research dissemination and implementation; Paper presented at NIH Conference on Building the Sciences of Dissemination and Implementation in the Service of Public Health; Washington, DC. 2007.

- Herschell AD, McNeil CB, McNeil DW. Clinical child psychology's progress in disseminating empirically supported treatments. Clinical Psychology: Science and Practice. 2004;11:267–288.

- Hohmann AA, Shear MK. Community-based intervention research: Coping with the “noise” of real life in study design. American Journal of Psychiatry. 2002;159:201–207. doi: 10.1176/appi.ajp.159.2.201.

- Insel TR. Devising prevention and treatment strategies for the nation's diverse populations with mental illness. Psychiatric Services. 2007;58(3):395. doi: 10.1176/appi.ps.58.3.395.

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy Press; 2001.

- Isett KR, Burnam MA, Coleman-Beattie B, Hyde PS, Morrissey JP, Magnabosco J, et al. The state policy context of implementation issues for evidence-based practices in mental health. Psychiatric Services. 2007;58(7):914–921. doi: 10.1176/ps.2007.58.7.914.

- Israel BA, Schulz AJ, Parker EA, Becker AB. Review of community-based research: Assessing partnership approaches to improve public health. Annual Review of Public Health. 1998;19:173–202. doi: 10.1146/annurev.publhealth.19.1.173.

- Johnston DA, Linton LD. Social networks and the implementation of environmental technology. IEEE Transactions on Engineering Management. 2000;47(4):465–477.

- Linder SH, Peters BG. A design perspective on policy implementation: The fallacies of a misplaced prescription. Policy Studies Review. 1987;6(3):459–475.

- Litaker D, Tomolo A, Liberatore V, Stange KC, Aron D. Using complexity theory to build interventions that improve health care delivery in primary care. Journal of General Internal Medicine. 2006;21(2):S30–S34. doi: 10.1111/j.1525-1497.2006.00360.x.

- McGlynn EA, Norquist GS, Wells KB, Sullivan G, Liberman RP. Quality-of-care research in mental health: Responding to the challenge. Inquiry. 1988;25:157–170.

- McKay M. Collaborative child mental health services research: theoretical perspectives and practical guidelines. In: Hoagwood K, editor. Collaborative research to improve child mental health services. in press.

- National Advisory Mental Health Council. Blueprint for Change: Research on Child and Adolescent Mental Health. A Report by the National Advisory Mental Health Council's Workgroup on Child and Adolescent Mental Health Intervention Development and Deployment. Bethesda, MD: National Institutes of Health, National Institute of Mental Health; 2001.

- National Cancer Institute. Dialogue on dissemination: Facilitating dissemination research and implementation of evidence-based cancer prevention, early detection, and treatment practices. 2004 Retrieved September 25 2005, from http://www.dccps.cancer.gov/d4d/dod_summ_nov-dec.pdf.

- National Institute of Mental Health. Bridging Science and Service: A report by the National Advisory Mental Health Council's Clinical Treatment and Services Research Workgroup. Washington, DC: National Institutes of Health; 1999.

- National Institute of Mental Health. Advancing the science of implementation: Improving the fit between mental health intervention development and service systems. 2004 Retrieved September 25 2006, from http://www.nimh.nih.gov/scientificmeetings/scienceofimplementation.pdf.

- Naylor PJ, Wharf-Higgins J, Blair L, Green L, O'Connor B. Evaluating the participatory process in a community-based heart health project. Social Science & Medicine. 2002;55:1173–1187. doi: 10.1016/s0277-9536(01)00247-7.

- Ott EM. Effects of the male-female ratio at work: Policewomen and male nurses. Psychology of Women Quarterly. 1989;13:41–57.

- Pescosolido BA. Illness careers and network ties: A conceptual model of utilization and compliance. Advances in Medical Sociology. 1991;2:161–184.

- Pescosolido BA. Beyond rational choice: The social dynamics of how people seek help. American Journal of Sociology. 1992;97(4):1096–1138.

- Phillips SD, Burns BJ, Edgar ER, Mueser KT, Linkins KW, Rosenheck RA, et al. Moving assertive community treatment into standard practice. Psychiatric Services. 2001;52(6):771–779. doi: 10.1176/appi.ps.52.6.771.

- President's New Freedom Commission on Mental Health. Achieving the promise: Transforming mental health in America. Final report. Rockville, MD: DHHS Publication; 2003.

- Proctor EK. Research and research training in social work: Climate, connections, and competencies. Research on Social Work Practice. 1996;6(3):366–378.

- Proctor EK. Leverage points for the implementation of evidence-based practice. Brief Treatment and Crisis Intervention. 2004;4(3):227–242.

- Proctor EK, Knudsen KJ, Fedoravicius N, Hovmand P, Rosen A, Perron B. Implementation of evidence-based practice in community behavioral health: Agency director perspectives. Administration and Policy in Mental Health. 2007;34(5):479–488. doi: 10.1007/s10488-007-0129-8.

- Proctor EK, Landsverk J. Challenges of translating evidence-based treatments into practice contexts and service sectors; Paper presented at The Mental Health Services Research Conference; Washington, DC. 2005.

- Rogers EM. Diffusion of innovations. New York: Free Press; 1995.

- Rosenberg RN. Translating biomedical research to the bedside: A national crisis and a call to action. Journal of the American Medical Association. 2003;289:1305–1306. doi: 10.1001/jama.289.10.1305.

- Rubenstein LV, Pugh J. Strategies for promoting organizational and practice change by advancing implementation research. Journal of General Internal Medicine. 2006;21:S58–S64. doi: 10.1111/j.1525-1497.2006.00364.x.

- Schoenwald SK, Hoagwood K. Effectiveness, transportability, and dissemination of interventions: What matters when? Psychiatric Services. 2001;52:1190–1197. doi: 10.1176/appi.ps.52.9.1190.

- Shortell SM. Increasing value: A research agenda for addressing the managerial and organizational challenges facing health care delivery in the United States. Medical Care Research and Review. 2004;61(3):12S–30S. doi: 10.1177/1077558704266768.

- Stetler CB, Legro MW, Wallace CM, Bowman C, Guihan M, Hagedorn H, et al. The role of formative evaluation in implementation research and the QUERI experience. Journal of General Internal Medicine. 2006;21:S1–S8. doi: 10.1111/j.1525-1497.2006.00355.x.

- Sullivan G, Duan N, Mukherjee S, Kirchner J, Perry D, Henderson K. The role of services researchers in facilitating intervention research. Psychiatric Services. 2005;56:537–542. doi: 10.1176/appi.ps.56.5.537.

- Sung NS, Crowley WF, Jr, Genel M, Salber P, Sandy L, Sherwood LM, et al. Central challenges facing the national clinical research enterprise. Journal of the American Medical Association. 2003;289(10):1278–1287. doi: 10.1001/jama.289.10.1278.

- Torrey WC, Drake RE, Dixon L, Burns BJ, Flynn L, Rush AJ, et al. Implementing evidence-based practices for persons with severe mental illnesses. Psychiatric Services. 2001;52(1):45–50. doi: 10.1176/appi.ps.52.1.45.

- Trickett EJ, Ryerson Espino SL. Collaboration and social inquiry: Multiple meanings of a construct and its role in creating useful and valid knowledge. American Journal of Community Psychology. 2004;34(1/2):1–69. doi: 10.1023/b:ajcp.0000040146.32749.7d.

- US Department of Health and Human Services. Mental health: A report of the Surgeon General. Washington, DC: Office of the Surgeon General, Department of Health and Human Services; 1999.

- US Department of Health and Human Services. Mental health: Culture, race, and ethnicity—A supplement to Mental health: A report of the Surgeon General. Rockville, MD: US Department of Health and Human Services, Public Health Service Office of the Surgeon General; 2001.

- US Department of Health and Human Services. The road ahead: Research partnerships to transform services. A report by the National Advisory Mental Health Council's Workgroup on Services and Clinical Epidemiology Research. Bethesda, MD: National Institutes of Health, National Institute of Mental Health; 2006.

- Van Meter DS, Van Horn CE. The policy implementation process: A conceptual framework. Administration and Society. 1975;6:445–488.

- Wells K, Miranda J, Bruce ML, Alegria M, Wallerstein N. Bridging community intervention and mental health services research. American Journal of Psychiatry. 2004;161:955–963. doi: 10.1176/appi.ajp.161.6.955.

- Woolston JL. Implementing evidence-based treatments in organizations. Journal of the American Academy of Child & Adolescent Psychiatry. 2005;44(12):1313–1316. doi: 10.1097/01.chi.0000181041.21584.7a.