Abstract

Introduction: Preventing the occurrence of hospital readmissions is needed to improve quality of care and foster population health across the care continuum. Hospitals are being held accountable for improving transitions of care to avert unnecessary readmissions. Advocate Health Care in Chicago and Cerner (ACC) collaborated to develop all-cause, 30-day hospital readmission risk prediction models to identify patients who need interventional resources. Ideally, prediction models should encompass several qualities: they should have high predictive ability; use reliable and clinically relevant data; use rigorous performance metrics to assess the models; be validated in populations where they are applied; and be scalable in heterogeneous populations. However, a systematic review of prediction models for hospital readmission risk determined that most performed poorly (average C-statistic of 0.66) and efforts to improve their performance are needed for widespread usage.

Methods: The ACC team incorporated electronic health record data, utilized a mixed-method approach to evaluate risk factors, and externally validated their prediction models for generalizability. Inclusion and exclusion criteria were applied on the patient cohort and then split for derivation and internal validation. Stepwise logistic regression was performed to develop two predictive models: one for admission and one for discharge. The prediction models were assessed for discrimination ability, calibration, overall performance, and then externally validated.

Results: The ACC Admission and Discharge Models demonstrated modest discrimination ability during derivation, internal and external validation post-recalibration (C-statistic of 0.76 and 0.78, respectively), and reasonable model fit during external validation for utility in heterogeneous populations.

Conclusions: The ACC Admission and Discharge Models embody the design qualities of ideal prediction models. The ACC plans to continue its partnership to further improve and develop valuable clinical models.

Key Words: 30-day All-Cause Hospital Readmission, Readmission Risk Stratification Tool, Predictive Analytics, Prediction Model, Derivation and External Validation of a Prediction Model, Clinical Decision Prediction Model

Introduction

Curbing the frequency and costs associated with hospital readmissions within 30 days of inpatient discharge is needed to improve the quality of health care services [1-3]. Hospitals are held accountable for care delivered through new payment models, with incentives for improving discharge planning and transitions of care to mitigate preventable readmissions [4,5]. Consequently, hospitals must reduce readmissions to avoid financial penalties imposed by the Centers for Medicare & Medicaid Services (CMS) under the Hospital Readmissions Reduction Program (HRRP) [6]. In 2010, Hospital Referral Regions (HRRs) in the Chicago metropolitan area had higher readmission rates for medical and surgical discharges when compared with the national average [7], and were among the top five HRRs in Illinois facing higher penalties [8].

Although penalizing high readmission rates has been debated since the introduction of the policy [9], there has been consensus on the need for coordinated and efficient care for patients beyond the hospital walls to prevent unnecessary readmissions. Augmenting transitions of care during the discharge process and proper coordination between providers across care settings are key drivers needed to reduce preventable readmissions [10-12]. Preventing readmissions must be followed up with post-discharge and community-based care interventions that can improve, as well as sustain, the health of the population to decrease hospital returns. While several interventions have been developed that aim to reduce unnecessary readmissions by improving the transition of care process during and post-discharge [13-17], there is a lack of evidence on what interventions are most effective with readmission reductions on a broad scale [18].

One approach to curtailing readmissions is to identify high risk patients needing effective transition of care interventions using prediction models [19]. Ideally, the design of prediction models should offer clinically meaningful discrimination ability (measured using the C-statistic); use reliable data that can be easily obtained; utilize variables that are clinically related; be validated in the populations in which use is intended; and be deployable in large populations [20]. For a clinical prediction model, a C-statistic of less than 0.6 has no clinical value, 0.6 to 0.7 has limited value, 0.7 to 0.8 has modest value, and greater than 0.8 has discrimination adequate for genuine clinical utility [21]. However, prediction models should not rely exclusively on the C-statistic to evaluate utility of risk factors [22]. They should also consider bootstrapping methods [23] and incorporate additional performance measures to assess prediction models [24]. Research also suggests that prediction models should maintain a balance between including too many variables and model parsimony [25,26].

A systematic review of 26 hospital readmission risk prediction models found that most tools performed poorly with limited clinical value (average C-statistic of 0.66), about half relied on retrospective administrative data, a few used external validation methods, and efforts were needed to improve their performance as usage becomes more widespread [27]. In addition, a few parsimonious prediction models were developed after this review. One was created outside the U.S. and yielded a C-statistic of 0.70 [28]. The other did not perform external validation for geographic scalability and had a C-statistic of 0.71 [29]. A major limitation of most prediction models is that they were developed using administrative claims data. Given the myriad of factors that can contribute to readmission risk, models should also consider including variables obtained from the Electronic Health Record (EHR).

Fostering collaborative relationships and care coordination with providers across care settings is needed to reduce preventable readmissions [18]. Care collaboration and coordination is central to the Health Information Technology for Economic and Clinical Health (HITECH) Act in promoting the adoption and meaningful use of health information [30]. Therefore, health care providers should also consider collaborating with information technology organizations to develop holistic solutions that improve health care delivery and the health of communities.

Advocate Health Care, located in the Chicago metropolitan area, and Cerner partnered to create optimal predictive models that leveraged Advocate Health Care’s population risk and clinical integration expertise with Cerner’s health care technology and data management proficiency. The Advocate Cerner Collaboration (ACC) was charged with developing a robust readmission prevention solution by improving the predictive power of Advocate Health Care’s current manual readmission risk stratification tool (C-statistic of 0.69), and building an automated algorithm embedded in the EHR that stratifies patients at high risk of readmission needing care transition interventions.

The ACC developed their prediction models taking into consideration recommendations documented in the literature to create and assess their models’ performance, and performed an external validation for generalizability using a heterogeneous population. While previous work relied solely on claims data, the ACC prediction models incorporated patient data from the EHR. In addition, the ACC team used a mixed-method approach to evaluate risk factors to include in the prediction models.

Objectives

The objectives of this research project were to: 1) develop all-cause hospital readmission risk prediction models for utility at admission and prior to discharge to identify adult patients likely to return within 30-days; 2) assess the prediction models’ performance using key metrics; and 3) externally validate the prediction models’ generalizability across multiple hospital systems.

Methods

A retrospective cohort study was conducted among adult inpatients discharged between March 1, 2011 and July 31, 2012 from 8 Advocate Health Care hospitals located in the Chicago metropolitan area (Figure 1).

Figure 1. Geographic Location of 8 Advocate Health Care Hospitals

An additional year of data prior to March 1, 2011, was extracted to analyze historical patient information and prior hospital utilization. Inpatient visits through August 31, 2012, were also extracted to account for any readmissions occurring within 30 days of discharge after July 31, 2012. Encounters were excluded from the cohort if they were observation, inpatient admissions for psychiatry, skilled nursing, hospice, rehabilitation, maternal and newborn visits, or if the patient expired during the index admission. Clinical data were extracted from Cerner’s Millennium® EHR software system and Advocate Health Care’s Enterprise Data Warehouse (EDW). Data from both sources were then loaded into Cerner’s PowerInsight® (PIEDW) for analysis.

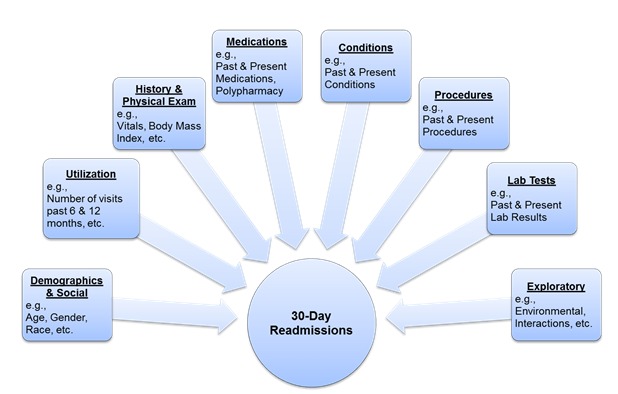

The primary dependent variable for the prediction models was hospital readmission within 30 days of the initial discharge. Independent variables were segmented into 8 primary categories: Demographics and Social characteristics, Hospital Utilization, History & Physical Examination (H&P), Medications, Laboratory Tests, Conditions and Procedures (using International Classification of Diseases, Ninth Revision, Clinical Modification [ICD-9-CM] codes), and an Exploratory Group (Figure 2).
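
As an illustration of how such a dependent variable can be derived from encounter records, the following is a minimal Python sketch rather than the ACC implementation. The field names (`encounter_id`, `patient_id`, `admit_date`, `discharge_date`) are hypothetical, and the sketch ignores the same-hospital restriction and the exclusion criteria described above.

```python
from datetime import date

def flag_30_day_readmissions(encounters, window_days=30):
    """Map each encounter_id to True when the same patient is admitted
    again within `window_days` of that encounter's discharge date.

    `encounters` is a list of dicts with hypothetical keys:
    encounter_id, patient_id, admit_date, discharge_date.
    """
    by_patient = {}
    for enc in encounters:
        by_patient.setdefault(enc["patient_id"], []).append(enc)

    flags = {}
    for visits in by_patient.values():
        # Order each patient's visits so only the next admission needs checking.
        visits.sort(key=lambda e: e["admit_date"])
        for current, following in zip(visits, visits[1:] + [None]):
            if following is None:
                flags[current["encounter_id"]] = False
                continue
            gap = (following["admit_date"] - current["discharge_date"]).days
            flags[current["encounter_id"]] = 0 <= gap <= window_days
    return flags

# Illustrative records only; not study data.
encounters = [
    {"encounter_id": "E1", "patient_id": "P1",
     "admit_date": date(2011, 3, 1), "discharge_date": date(2011, 3, 5)},
    {"encounter_id": "E2", "patient_id": "P1",
     "admit_date": date(2011, 3, 20), "discharge_date": date(2011, 3, 22)},
    {"encounter_id": "E3", "patient_id": "P1",
     "admit_date": date(2011, 6, 1), "discharge_date": date(2011, 6, 3)},
    {"encounter_id": "E4", "patient_id": "P2",
     "admit_date": date(2011, 4, 1), "discharge_date": date(2011, 4, 2)},
]
flags = flag_30_day_readmissions(encounters)
```

In this toy example only the first encounter is followed by an admission within the 30-day window, so only it is flagged as an index admission with a readmission.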

Figure 2. ACC Readmission Risk Prediction Conceptual Model

Risk factors considered for analysis were based on literature reviews and a mixed-method approach using qualitative data collected from clinical input. Qualitative data were collected from site visits at each Advocate Health Care hospital through in-depth interviews and focus groups with clinicians and care managers, respectively. Clinicians and care managers were asked to identify potential risk factors that caused a patient to return to the hospital. Field notes were taken during the site visits. Information gleaned was used to identify emerging themes that helped inform the quantitative analyses.

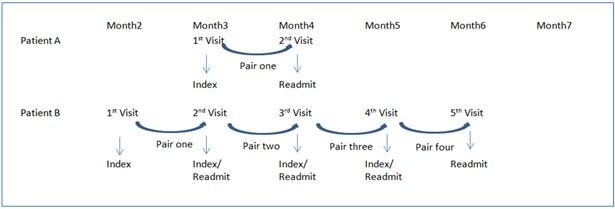

All quantitative statistical analyses were conducted using SAS® version 9.2 (SAS Institute). Descriptive and inferential statistics were performed on the primary variable categories to identify main features of the data and any causal relationships, respectively. The overall readmission rate was computed using the entire cohort. For modeling, one consecutive encounter pair (index admission and readmission encounter) was randomly sampled from each patient to control for bias due to multiple admissions. Index encounters were restricted to a month prior to the study period’s end date to capture any readmissions that occurred within 30 days (Figure 3).
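
The per-patient sampling step can be sketched in a few lines of Python. This is an illustrative sketch, not the SAS procedure used in the study; the tuple layout `(patient_id, index_encounter_id, readmitted)` and the fixed seed are assumptions made for the example.

```python
import random

def sample_one_pair_per_patient(pairs, seed=0):
    """Randomly keep one encounter pair per patient.

    `pairs` is a list of (patient_id, index_encounter_id, readmitted) tuples,
    a hypothetical layout for consecutive index/readmission encounter pairs.
    """
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    by_patient = {}
    for pair in pairs:
        by_patient.setdefault(pair[0], []).append(pair)
    return [rng.choice(candidates) for candidates in by_patient.values()]

# Illustrative records only; not study data.
pairs = [
    ("P1", "E1", True),
    ("P1", "E2", False),
    ("P1", "E3", False),
    ("P2", "E4", False),
    ("P3", "E5", True),
    ("P3", "E6", False),
]
sampled = sample_one_pair_per_patient(pairs)
```

Because each patient contributes exactly one pair, patients with many admissions no longer carry more weight in model fitting than patients with a single admission.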

Figure 3. Multiple Readmission Sampling Methodology

To develop and internally validate the prediction models, the cohort was split into a derivation dataset (75%) and a validation dataset (25%). Model fitting used a bootstrapping method that randomly sampled two-thirds of the data in the derivation dataset. The procedure was repeated 500 times, and the averaged coefficients were applied to the validation dataset. Stepwise logistic regression was performed, and predictors that were statistically significant (p-value ≤ 0.05) were included in the model. Two predictive models were developed using readily available patient data: one at admission and one prior to discharge. The admission prediction model included baseline data available for a patient once admitted to the hospital. The discharge prediction model was more comprehensive, including additional data that became available prior to discharge.
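
The split and the repeated two-thirds resampling can be sketched as follows. This is a minimal sketch under stated assumptions: the resampling is done without replacement (the text does not say which was used), and `fit_intercept_only` is a deliberately simple stand-in (the closed-form estimate of an intercept-only logistic regression) for the stepwise logistic regression fitted in SAS. All function names are hypothetical.

```python
import math
import random

def split_cohort(rows, derivation_fraction=0.75, seed=0):
    """Shuffle the cohort and split it into derivation and validation sets."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * derivation_fraction)
    return shuffled[:cut], shuffled[cut:]

def fit_intercept_only(rows):
    """Stand-in model: the maximum-likelihood intercept of a logistic
    regression with no predictors is the logit of the event rate."""
    rate = sum(y for _, y in rows) / len(rows)
    rate = min(max(rate, 1e-6), 1 - 1e-6)  # guard against log(0)
    return [math.log(rate / (1 - rate))]

def bootstrap_average(rows, fit, rounds=500, fraction=2 / 3, seed=0):
    """Fit the model on repeated random subsamples and average coefficients."""
    rng = random.Random(seed)
    size = int(len(rows) * fraction)
    totals = None
    for _ in range(rounds):
        resample = rng.sample(rows, size)  # without replacement: an assumption
        coefs = fit(resample)
        totals = coefs if totals is None else [t + c for t, c in zip(totals, coefs)]
    return [t / rounds for t in totals]

# Synthetic cohort of (predictor, outcome) rows with a 10% event rate.
rows = [(i, 1 if i % 10 == 0 else 0) for i in range(1000)]
derivation, validation = split_cohort(rows)
averaged = bootstrap_average(derivation, fit_intercept_only, rounds=50)
```

Any fitting routine that returns a list of coefficients can be passed as `fit`; the averaged coefficients would then be applied unchanged to the held-out validation rows.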

The performance of each prediction model was assessed by 3 measures. First, discrimination ability was quantified by sensitivity, specificity, and the area under the receiver operating characteristic (ROC) curve, or C-statistic, which measures how well the model can separate those who do and do not have the outcome. Second, calibration was assessed using the Hosmer-Lemeshow (H&L) goodness-of-fit test, which measures how well the model fits the data, that is, how well predicted probabilities agree with actual observed risk; a p-value > 0.05 indicates a good fit. Third, overall performance was quantified using the Brier Score, which measures how close predictions are to the actual outcome.
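
To make the discrimination and overall-performance measures concrete, the sketch below computes a C-statistic and a Brier Score from predicted probabilities. It is an illustration only. In particular, the reference used for the percent improvement (predicting the overall event rate for every patient) is an assumption, since the text does not define "random prediction" precisely.

```python
def c_statistic(probs, outcomes):
    """Share of (event, non-event) pairs in which the event case received
    the higher predicted probability; ties count as one half."""
    events = [p for p, y in zip(probs, outcomes) if y == 1]
    non_events = [p for p, y in zip(probs, outcomes) if y == 0]
    concordant = 0.0
    for e in events:
        for n in non_events:
            if e > n:
                concordant += 1.0
            elif e == n:
                concordant += 0.5
    return concordant / (len(events) * len(non_events))

def brier_score(probs, outcomes):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(probs)

def brier_improvement(probs, outcomes):
    """Relative improvement over a reference that predicts the overall
    event rate for everyone (assumed meaning of 'random prediction')."""
    rate = sum(outcomes) / len(outcomes)
    reference = rate * (1 - rate)
    return 1 - brier_score(probs, outcomes) / reference

# Illustrative predictions only; not study data.
probs = [0.1, 0.4, 0.4, 0.8]
outcomes = [0, 0, 1, 1]
c = c_statistic(probs, outcomes)
brier = brier_score(probs, outcomes)
improvement = brier_improvement(probs, outcomes)
```

The pairwise loop is quadratic in cohort size and is written for clarity; a rank-based calculation would be preferable for a cohort of the size used in this study.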

External validation of the admission and discharge prediction models was also performed using Cerner’s HealthFacts® data. HealthFacts® is a de-identified patient database that includes over 480 providers across the U.S.; a majority are from the Northeast (44%), have more than 500 beds (27%), and are teaching facilities (63%). HealthFacts® encompasses encounter-level demographic information, conditions, procedures, laboratory tests, and medication data. A sample was selected from HealthFacts® data consistent with the derivation dataset. The fit of both prediction models was assessed first by applying the derivation coefficients unchanged, and then by recalibrating the models, that is, re-estimating the coefficients for the same set of predictors using the HealthFacts® sample. The performance of the models was then compared.
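
The two external validation steps, applying frozen derivation coefficients and then re-estimating them on the external sample, can be sketched with a one-predictor logistic model. This is a simplified illustration and not the study's procedure: the Newton-Raphson fit, the single binary predictor, and the coefficient values are all assumptions chosen to keep the example short.

```python
import math

def predict(coefs, x):
    """Predicted probability from an intercept and one slope (logistic link)."""
    b0, b1 = coefs
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))

def log_loss(coefs, xs, ys):
    """Average negative log-likelihood; lower values mean better fit."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = predict(coefs, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(xs)

def refit(xs, ys, start=(0.0, 0.0), iterations=25):
    """Re-estimate intercept and slope by Newton-Raphson on the external data."""
    b0, b1 = start
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(xs, ys):
            p = predict((b0, b1), x)
            w = p * (1.0 - p)
            g0 += y - p            # gradient with respect to the intercept
            g1 += (y - p) * x      # gradient with respect to the slope
            h00 += w
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        if det == 0.0:
            break
        # Newton step: solve the 2x2 information matrix against the gradient.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1

# Hypothetical derivation coefficients and a toy external sample.
derivation_coefs = (-2.0, 1.0)
external_x = [0, 0, 0, 0, 1, 1, 1, 1]
external_y = [0, 0, 0, 1, 0, 1, 1, 1]

loss_frozen = log_loss(derivation_coefs, external_x, external_y)
recalibrated = refit(external_x, external_y, start=derivation_coefs)
loss_recalibrated = log_loss(recalibrated, external_x, external_y)
```

Because the re-estimated coefficients maximize the likelihood on the external sample, their log loss on that sample cannot exceed that of the frozen coefficients, which is why fit statistics are expected to improve after recalibration.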

Results

A total of 126,479 patients comprising 178,293 encounters met the cohort eligibility criteria, of which 18,652 (10.46%) encounters resulted in readmission to the same Advocate Health Care hospital within 30 days. After sampling, 9,151 (7.25%) encounter pairs were defined as 30-day readmissions. Demographic characteristics of the sample cohort are summarized in Table 1.

Table 1. Demographic Characteristics of the Sample Cohort.

| Demographic Characteristics | 30-Day Readmission (n=9,151; 7.25%) | No Readmission (n=117,328; 92.75%) |
|---|---|---|
| Age | µ = 66.01 | µ = 57.65 |
| Gender | | |
| Female | 5,045 (55.13) | 70,917 (60.44) |
| Male | 4,106 (44.87) | 46,411 (39.56) |
| Race | | |
| Caucasian | 5,737 (62.69) | 71,796 (61.19) |
| African American | 2,357 (25.76) | 26,446 (22.54) |
| Hispanic | 648 (7.08) | 10,867 (9.26) |
| Other | 409 (4.47) | 8,219 (7.01) |
| Language | | |
| English | 6,851 (94.26) | 141,624 (93.29) |
| No English | 417 (5.74) | 10,187 (6.71) |
| Marital Status | | |
| Married | 3,771 (41.21) | 58,159 (49.57) |
| Not Married | 5,380 (58.79) | 59,169 (50.43) |
| Employment Status | | |
| Employed | 991 (10.83) | 23,073 (19.67) |
| Not Employed | 4,930 (53.87) | 46,973 (40.04) |
| Unknown | 3,230 (35.30) | 47,282 (40.30) |
| Insurance Type | | |
| Commercial | 2,828 (30.90) | 56,286 (47.97) |
| Medicare | 5,118 (55.93) | 44,187 (37.66) |
| Medicaid | 778 (8.50) | 8,352 (7.12) |
| Self-pay | 378 (4.13) | 6,751 (5.75) |
| Other | 49 (0.54) | 1,752 (1.49) |

The ACC Admission Model included 49 independent predictors: Demographic, Utilization, Medications, Labs, H&P, and Exploratory variables. The ACC Discharge Model included 58 independent predictors comprising all the aforementioned variables plus Conditions, Procedures, Length of Stay (LOS), and Discharge Disposition. The variables included in the ACC Admission and Discharge Prediction Models are presented in Table 2.

Table 2. ACC Admission and Discharge Prediction Models’ Variables.

| Variables | Admission Model (n=49) | Discharge Model (n=58) |
|---|---|---|
| Demographics | ✓ | ✓ |
| Utilization | ✓ | ✓ |
| Lab Tests | ✓ | ✓ |
| Exploratory | ✓ | ✓ |
| H&P | ✓ | ✓ |
| Medications | ✓ | ✓ |
| Conditions | | ✓ |
| Procedures | | ✓ |
| Length of Stay | | ✓ |
| Discharge Disposition | | ✓ |

Assessment of the ACC Admission Model’s performance yielded C-statistics of 0.76 and 0.75, H&L goodness-of-fit tests of 36.0 (p<0.001) and 23.5 (p=0.0027), and Brier Scores of 0.062 (7.6% improvement from random prediction) and 0.063 (6.6% improvement from random prediction) from the derivation and internal validation datasets, respectively. Assessment of the ACC Discharge Model’s performance yielded C-statistics of 0.78 and 0.77, H&L goodness-of-fit tests of 31.1 (p<0.001) and 19.9 (p=0.01), and Brier Scores of 0.060 and 0.061 (9.1% improvement from random prediction) from the derivation and internal validation datasets, respectively. The average C-statistic across the 500 bootstrap simulations in the derivation dataset was 0.76 for the ACC Admission Model and 0.78 for the ACC Discharge Model, with a small range of deviation between individual runs.

External validation of the ACC Admission and Discharge Models resulted in C-statistics of 0.76 and 0.78, H&L goodness-of-fit tests of 6.1 (p=0.641) and 14.3 (p=0.074), and Brier Scores of 0.061 (8.9% improvement from random prediction) and 0.060 (9.1% improvement from random prediction), respectively, after recalibrating and re-estimating the coefficients using HealthFacts® data. The ACC Admission and Discharge Models’ performance measures are presented in Table 3.

Table 3. ACC Admission and Discharge Prediction Models’ Performance Measures.

| Dataset | Performance Measures | Admission Model | Discharge Model |
|---|---|---|---|
| Derivation (n=94,859) | Discrimination: C-statistic | 0.76 | 0.78 |
| | Calibration: Hosmer-Lemeshow goodness-of-fit test (p-value) | 36.0 (p<0.001) | 31.1 (p<0.001) |
| | Overall Performance: Brier Score (% improvement) | 0.062 (7.6%) | 0.060 (9.1%) |
| | Bootstrapping: 500 simulations average (min. to max.) | 0.76 (0.75 to 0.76) | 0.78 (0.77 to 0.78) |
| Internal Validation (n=31,619) | Discrimination: C-statistic | 0.75 | 0.77 |
| | Calibration: Hosmer-Lemeshow goodness-of-fit test (p-value) | 23.5 (p=0.003) | 19.9 (p=0.01) |
| | Overall Performance: Brier Score (% improvement) | 0.063 (6.6%) | 0.061 (9.1%) |
| External Validation (Without Recalibration) (n=6,357) | Discrimination: C-statistic | 0.69 | 0.71 |
| | Calibration: Hosmer-Lemeshow goodness-of-fit test (p-value) | 216.9 (p<0.001) | 156.3 (p<0.001) |
| | Overall Performance: Brier Score (% improvement) | 0.065 (2.5%) | 0.064 (4.0%) |
| External Validation (With Recalibration) (n=6,357) | Discrimination: C-statistic | 0.76 | 0.78 |
| | Calibration: Hosmer-Lemeshow goodness-of-fit test (p-value) | 6.1 (p=0.641) | 14.3 (p=0.074) |
| | Overall Performance: Brier Score (% improvement) | 0.061 (8.9%) | 0.060 (9.1%) |

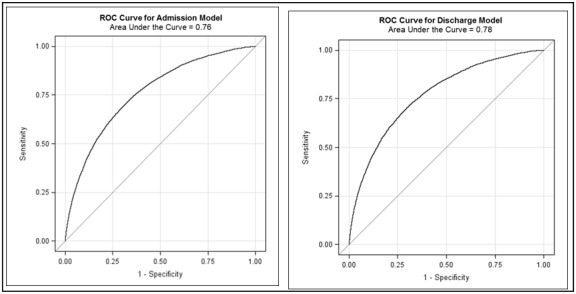

The probability threshold for identifying high-risk patients (11%) was determined by balancing the tradeoff between sensitivity (70%) and specificity (71%) while maximizing the area under the ROC curves for the prediction models (Figure 4).
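
One simple way to balance sensitivity against specificity is to scan candidate thresholds and keep the one at which the two are closest. The sketch below illustrates that idea only; the exact selection rule used in the study is not specified in the text, and the function names and data are hypothetical.

```python
def sensitivity_specificity(probs, outcomes, threshold):
    """Classify as high risk when the probability is at or above threshold."""
    tp = sum(1 for p, y in zip(probs, outcomes) if y == 1 and p >= threshold)
    fn = sum(1 for p, y in zip(probs, outcomes) if y == 1 and p < threshold)
    tn = sum(1 for p, y in zip(probs, outcomes) if y == 0 and p < threshold)
    fp = sum(1 for p, y in zip(probs, outcomes) if y == 0 and p >= threshold)
    return tp / (tp + fn), tn / (tn + fp)

def balanced_threshold(probs, outcomes):
    """Return (threshold, sensitivity, specificity) for the observed
    probability at which |sensitivity - specificity| is smallest."""
    best = None
    for t in sorted(set(probs)):
        sens, spec = sensitivity_specificity(probs, outcomes, t)
        gap = abs(sens - spec)
        if best is None or gap < best[0]:
            best = (gap, t, sens, spec)
    return best[1], best[2], best[3]

# Illustrative predictions only; not study data.
probs = [0.02, 0.05, 0.08, 0.09, 0.10, 0.12, 0.20, 0.30]
outcomes = [0, 0, 0, 1, 0, 0, 1, 1]
threshold, sens, spec = balanced_threshold(probs, outcomes)
```

In this toy example the balanced threshold is 0.10, where two of three events and three of five non-events are classified correctly.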

Figure 4. ROC Curves for ACC Admission & Discharge Model

Discussion

We observed several key findings during the development and validation of our ACC Admission and Discharge Models. Both of our all-cause models performed better than most predictive models reviewed in the literature for identifying patients at risk of readmission [27-29]. Both models yielded a C-statistic between 0.7 and 0.8 during derivation, internal validation, and external validation after recalibration, a modest value for a clinical predictive rule. When comparing C-statistics between the Admission Model (0.76) and the Discharge Model (0.78), the Discharge Model’s discrimination ability improved because Conditions and Procedures, LOS, and Discharge Disposition variables were included. These variables help further explain a patient’s readmission risk: medical conditions and surgical procedures account for immediate health needs, LOS represents severity of illness, and discharge to a post-acute setting that does not meet the patient’s discharge needs could result in a return to the hospital. We also observed the same C-statistic for our ACC Discharge Model on the development sample and on the external validation sample after recalibration, suggesting that it performs well both in the intended population and in a heterogeneous dataset. Our ACC Discharge Model also had a somewhat higher C-statistic during derivation (0.78) than during internal validation (0.77), which is expected because predictive accuracy is typically higher when it is assessed on the same dataset used to develop the model [21].

Our ACC Admission and Discharge Models also demonstrated reasonable model fit during external validation after recalibrating the coefficient estimates. A non-significant H&L p-value indicates the model adequately fits the data. However, caution must be used when interpreting H&L statistics because they are influenced by sample size [31]. Our models did not demonstrate adequate model fit during derivation and internal validation, most likely because of the large sample. Yet during external validation with a smaller sample, the H&L statistics for both the ACC Admission and Discharge Models improved to a non-significant level. Since the H&L statistic is influenced by sample size, the Brier Score should also be taken into account to assess prediction models because it captures both calibration and discrimination features. The closer the Brier Score is to zero, the better the predictive performance [24]. Both our prediction models had low Brier Scores, with the ACC Discharge Model’s score of approximately 0.06 representing a consistent 9.1% improvement over random prediction during derivation, internal validation, and external validation after recalibration.
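
For readers who want to see how an H&L statistic and its p-value are obtained, the following is a minimal sketch. It assumes the conventional ten risk groups and 8 degrees of freedom, which the text does not state, and uses a closed-form chi-square tail probability that is valid only for even degrees of freedom.

```python
import math

def hosmer_lemeshow(probs, outcomes, groups=10):
    """H&L chi-square statistic over equal-size groups of sorted predicted risk."""
    ranked = sorted(zip(probs, outcomes))
    n = len(ranked)
    statistic = 0.0
    for g in range(groups):
        chunk = ranked[g * n // groups:(g + 1) * n // groups]
        if not chunk:
            continue
        expected = sum(p for p, _ in chunk)   # expected number of events
        observed = sum(y for _, y in chunk)   # observed number of events
        mean_p = expected / len(chunk)
        denom = len(chunk) * mean_p * (1 - mean_p)
        if denom > 0:
            statistic += (observed - expected) ** 2 / denom
    return statistic

def chi2_survival_even_df(x, df):
    """Upper-tail chi-square probability; closed form for even df only."""
    if df <= 0 or df % 2 != 0:
        raise ValueError("df must be a positive even integer")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total

# Toy example of a well-calibrated model with two risk groups.
toy_probs = [0.2] * 10 + [0.8] * 10
toy_outcomes = [0] * 8 + [1] * 2 + [0] * 2 + [1] * 8
toy_statistic = hosmer_lemeshow(toy_probs, toy_outcomes, groups=2)

# P-values implied by the externally validated statistics under the 8-df assumption.
p_admission = chi2_survival_even_df(6.1, 8)
p_discharge = chi2_survival_even_df(14.3, 8)
```

Under the 8-df assumption, the rounded statistics of 6.1 and 14.3 give p-values of approximately 0.64 and 0.074, close to the values reported for external validation after recalibration. Because each group's contribution grows with the number of patients in it, the same small miscalibration yields a larger statistic in a larger sample, which is the sample-size sensitivity noted above.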

There was concern that too many independent variables would increase the possibility of building an over-specified model that only performs well on the derivation dataset. Thus, validating a comprehensive model using an external dataset to replicate the derivation results would be challenging. Our findings indicate that model performance diminished slightly during the ACC Admission Model’s external validation when compared with the more comprehensive ACC Discharge Model. When we externally validated our ACC Admission Model, the C-statistic was 0.76 on the development dataset, but reduced to 0.69 when using the initial derivation coefficients on the external dataset, and then increased to 0.76 after recalibrating the coefficients (Table 3). The C-statistic for our ACC Discharge Model decreased from 0.78 on the development dataset to 0.71 using the unchanged derivation coefficients, and then increased back to 0.78 after recalibration using the external validation sample dataset. A decrease in performance from derivation to validation is expected, but our models had no more than 10% shrinkage from the derivation to the validation results [32]. We further tested our ACC Admission Model using only baseline data available for a patient (e.g., demographic and utilization variables). The C-statistic for this more parsimonious admission model was 0.74 on the development dataset, decreased to 0.66 when using the derivation coefficients on the external dataset, and then increased to 0.70 after recalibrating the coefficients. Our findings suggest that a model that includes additional variables is more likely to generalize well than a parsimonious model during external validation post-recalibration.
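
The shrinkage claim can be checked against the C-statistics in Table 3. The short sketch below treats shrinkage as the relative drop in C-statistic from derivation to external validation with unchanged coefficients; this definition is an assumption, since the text does not spell out how shrinkage was computed.

```python
def relative_shrinkage(derivation_c, external_c):
    """Relative drop in C-statistic from derivation to external validation."""
    return (derivation_c - external_c) / derivation_c

# C-statistics from Table 3: derivation vs. external validation without recalibration.
admission_shrinkage = relative_shrinkage(0.76, 0.69)
discharge_shrinkage = relative_shrinkage(0.78, 0.71)
```

Under this definition both models lose roughly 9% of their derivation C-statistic, which is consistent with the statement that shrinkage did not exceed 10%.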

Overall, our Admission and Discharge Models’ performance indicates modest discrimination ability. While other studies relied on retrospective administrative data, our models incorporated data elements from the EHR. We utilized a mixed-method approach to evaluate clinically-related variables. Our models were internally validated in the intended population and externally validated for utility in heterogeneous populations. Our Admission Model offers a practical solution with data available during hospitalization. Our Discharge Model has a higher level of predictability according to the C-statistic and improved performance according to the Brier Score once more data is accessible during discharge.

Creating a highly accurate predictive model is multifaceted and contingent on many factors, including, but not limited to, the quality and accessibility of data, the ability to replicate the findings beyond the derivation dataset, and the balance between a parsimonious and a comprehensive prediction model. To facilitate external validation, we found that a compromise between a parsimonious and a comprehensive model was needed when developing logistic regression prediction models. We also found that a mixed-method approach was valuable, and that additional effort is needed to select risk factors that are based on high-quality data, easily accessible, and generalizable across multiple populations. We also believe that bridging statistical acumen and clinical knowledge is needed to further develop decision support tools of genuine clinical utility, by soliciting support from clinicians when the statistics do not align with clinical intuition.

Limitations

Our findings should be considered in light of several limitations. Additional research on readmission risk tools may have been conducted after the systematic review performed by Kansagara et al. [27]. Additional readmission risk prediction models were developed [33,34], but their authors did not publish performance statistics that would allow comparison with our prediction models.

Our readmission rate was limited to visits occurring at the same hospital. Readmission rates based on same-hospital visits can be unreliable and dilute the true hospital readmission rate [35]. One promising approach is using a master patient index (MPI) to track patients across hospitals. Data are being linked across hospitals and to outside facilities through MPI and claims data. Using our own method to create an MPI match, we performed some preliminary analysis and were able to identify 5% more readmissions across the other Advocate Health Care hospitals, increasing the readmission rate by approximately 1%. We also assessed the utility of claims data to match the other hospitals with Millennium® encounters to gauge a more representative readmission rate. The claims data allowed us to track approximately 8% more readmissions, increasing the readmission rate by approximately 1%. Overall, using both approaches we were able to identify a more representative readmission rate that increased from 10.46% to 12.5%. We are currently working to see how this impacts our models’ performance.

Data captured through EHRs are growing but remain incomplete with respect to data relevant to hospital readmission prediction, and the lack of standard data representations limits the generalizability of predictive models [36]. As a result, we could not include certain data elements in our models because of data quality issues, a large percentage of missing data, and information that is difficult to glean. Therefore, we could not include social determinants identified by clinicians and care managers during qualitative interviews, such as social isolation (i.e., living alone) and living situation (e.g., homelessness), which are known to be salient factors tied to hospital readmissions [37,38]. Initially, we only mined a single source for this information in the EHR. However, new data sources have been identified in the EHR, and the utility of these risk factors is currently being assessed in our prediction models. Additional factors are also being considered in our models, such as functional status [37,39], medication adherence, and availability of transportation for follow-up visits post-discharge [40].

Our prediction models do not distinguish potentially preventable readmissions (PPR) from other readmissions [41,42]. We did perform some preliminary analysis and found that the overall PPR rate for Advocate Health Care in 2012 was about 6% of all admissions. We estimated that around 60% of all readmissions were avoidable. This is higher than the median proportion of avoidable readmissions (27.1%), but falls within the reported range of 5% to 79% [43]. We plan to further assess PPR methodology and test our models’ ability to recognize potentially avoidable readmissions to help intervene where clinical impact is most effective.

Our initial analysis plan proposed to include observation patients (n=51,517) in the entire inpatient cohort. We performed some preliminary analysis and found that the overall readmission rate increased to 10.72%, but the C-statistics for our Admission and Discharge Models decreased to 0.75 and 0.77, respectively. Our models’ discrimination ability probably diminished because improved logic is needed to distinguish situations where observation status changes to inpatient and vice versa. Further assessment of observation patients is needed to better understand their importance in an accountable care environment.

Steps are underway to mitigate limitations and continue to improve the clinical utility of our readmission risk prediction models. Data are being linked across hospitals and to outside facilities through MPI and claims data. Additional data sources in the EHR that encompass social determinants and other risk factors have been identified and are being assessed for use in our models. Also, we are researching potentially preventable readmissions so that the models can focus on cases where clinical impact is most needed.

Conclusions

The ACC Admission and Discharge Models exemplify design qualities of ideal prediction models. Both our models demonstrated modest predictive power for identifying high-risk patients early during hospitalization and at hospital discharge, respectively. Performance assessment of both models during external validation post-recalibration indicates reasonable model fit and suggests that they can be deployed in other population settings. Our Admission Model offers a practical and feasible solution with the limited data available on admission. Our Discharge Model offers improved performance and predictability once more data become available at discharge. The ACC partnership offers an opportunity to leverage proficiency from both organizations to continue developing and improving valuable clinical prediction models, building a framework for future prediction model development that achieves scalable outcomes.

Acknowledgements

Mitali Barman, MS2, Technical Architect, ACC

Sam Bozzette, MD, PhD1, Physician Executive, Cerner Research

Darcy Davis, PhD2, Data Scientist, ACC

Cole Erdmann, MBA1, Project Manager, ACC

Tina Esposito, MBA2, VP, Center for Health Information Services (CHIS)

Zachary Fainman, MD2, Director Medical Care Management

Mary Gagen, MBA2, Manager, Business Analytics, CHIS

Harlen D. Hays, MPH1, Manager, Quantitative Research and Biostatistics

Stephen Himes, JD, PhD, Copy Editor

Qingjiang Hou, MS1, Scientist, Life Science Research

Andrew A. Kramer, PhD1, Senior Research Manager, Critical Care ABU

Jing Li, PhD1, Data Analyst, ACC

Douglas S. McNair MD, PhD1, Engineering Fellow & President, Cerner Math

Bryan Nyary, MBA, Medical Editor

Samir Rishi, BSRT, MHA, LEAN2, Clinical Process Designer, ACC

Lou Ann Schraffenberger, Manager, Clinical Data, CHIS

Rishi Sikka, MD2, VP, Clinical Transformation

Rajbir Singh, MS1, Engineer, ACC

Bharat Sutariya, MD1, VP & CMO, Population Health

Xinyong Tian, PhD2, Data Analyst, ACC

Luke Utting, BS1, Senior Engineer, ACC

Fran Wilk, RN, BSN, MA2, Clinical Process Designer, ACC

Center for Health Information Services team2

Advocate Health Care hospital site visit participants 2
