Abstract

BACKGROUND:

Following the severe acute respiratory syndrome outbreak in 2003, hospitals have been mandated to use infection screening questionnaires to determine which patients have infectious respiratory illness and, therefore, require isolation precautions. Despite widespread use of symptom-based screening tools in Ontario, there are no data supporting the accuracy of these screening tools in hospitalized patients.

OBJECTIVE:

To measure the performance characteristics of infection screening tools used during the H1N1 influenza season.

METHODS:

The present retrospective cohort study was conducted at The Ottawa Hospital (Ottawa, Ontario) between October and December, 2009. Consecutive inpatients admitted from the emergency department were included if they were ≥18 years of age, underwent a screening tool assessment at presentation and had a most responsible diagnosis that was cardiac, respiratory or infectious. The gold-standard outcome was laboratory diagnosis of influenza.

RESULTS:

The prevalence of laboratory-confirmed influenza was 23.5%. The sensitivity and specificity of the febrile respiratory illness screening tool were 74.5% (95% CI 60.5% to 84.8%) and 32.7% (95% CI 25.8% to 40.5%), respectively. The sensitivity and specificity of the influenza-like illness screening tool were 75.6% (95% CI 61.3% to 85.8%) and 46.3% (95% CI 38.2% to 54.7%), respectively.

CONCLUSIONS:

The febrile respiratory illness screening tool missed 26% of active influenza cases, while 67% of noninfluenza patients were unnecessarily placed under respiratory isolation. Results of the present study suggest that infection-control practitioners should re-evaluate their strategy of screening patients at admission for contagious respiratory illness using symptom- and sign-based tests. Future efforts should focus on the derivation and validation of clinical decision rules that combine clinical features with laboratory tests.

Keywords: H1N1, Infection control, Infection screening tools, Influenza, Test characteristics


Contagious respiratory illnesses are important and preventable causes of health care-acquired infections (1). One way to prevent nosocomial transmission is to screen patients for infectious signs and symptoms on admission to hospital. Special precautions, such as isolating high-risk patients and using personal protective equipment during care, can then be applied. This approach was one of several infection-control measures credited with controlling the 2003 severe acute respiratory syndrome (SARS) outbreak in Canada (2–4), and it has become a cornerstone of controlling health care-acquired respiratory infections.

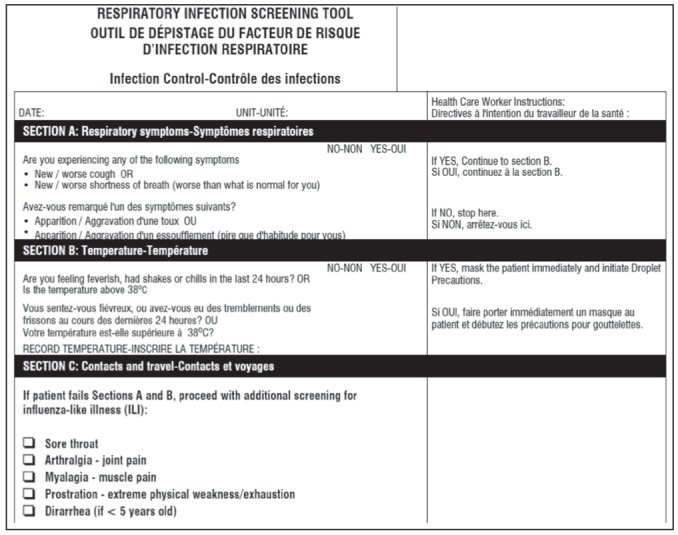

Routine screening of patients presenting to emergency departments (EDs) has been recommended by several groups, including the Ontario Ministry of Health and Long-Term Care, the Ontario Provincial Infectious Diseases Advisory Committee and the Public Health Agency of Canada (5–7). In response to this mandate, many hospital EDs hired dedicated personnel to screen all patients with two symptom-based screening tools during the pandemic H1N1 season. Ontario hospitals used the Febrile Respiratory Illness (FRI) and Influenza-Like Illness (ILI) questionnaires (8) (Appendix 1). The FRI screening tool asked about new shortness of breath, cough and fever, whereas the ILI tool asked more specific questions about influenza-associated symptoms, including sore throat, arthralgias, myalgias, prostration and diarrhea (Appendix 1). Patients with a positive FRI screen were placed under droplet isolation precautions. The FRI and ILI screening tools were used together to guide decisions about respiratory isolation precautions during the H1N1 influenza pandemic, before confirmatory testing was available.

While this type of symptom-based screening approach helped to control the SARS outbreak in 2003 (4), there are no data supporting its application in preventing spread of other illnesses, such as influenza, in the hospitalized patient population.

We conducted the present retrospective cohort study to measure the performance characteristics of the FRI and ILI screening questionnaires as they were applied during the 2009 H1N1 pandemic influenza season. Because the screening tools were used routinely alongside gold-standard laboratory testing, this season provided a unique opportunity to assess the accuracy of the current screening approach during pandemic influenza.

METHODS

Study design and setting

The present retrospective cohort study was performed at The Ottawa Hospital (TOH), a 1000-bed academic hospital in Ottawa, Ontario. TOH provides both secondary and tertiary care for patients in the surrounding region. The study was approved by TOH's institutional Research Ethics Board.

Study population

Consecutive inpatients were included if they were at least 18 years of age, were admitted from the ED between October 2 and December 29, 2009, underwent a screening tool assessment at presentation and had a most responsible diagnosis that was cardiac, respiratory or infectious in nature (Appendix 2). Although all patients presenting to the ED were required to be screened by dedicated personnel, in some cases the screening tool was applied incompletely or incorrectly. These cases represented <10% of all patients screened for inclusion in the study. Patients with incomplete screening tool data were excluded.

Application of infection screening tools

All patients were initially screened using the FRI screening tool. The FRI screen was considered positive if the patient had shortness of breath or cough together with fever or chills (Appendix 1). Patients with a positive FRI screen were placed under droplet isolation precautions and underwent additional screening with the ILI tool. If the ILI screen was negative, droplet precautions were maintained; if it was positive, patients may also have been placed under airborne isolation. Because of evolving ministry policy and resource limitations, airborne isolation was not used consistently throughout the study period, even after a positive ILI screen.
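The screening pathway above can be expressed as a small decision function. This is a minimal sketch assuming each screening item is recorded as a yes/no answer; the `Screen` class, the function names and the returned labels are hypothetical and do not correspond to any hospital system. Treating a missing ILI screen as "droplet only" is also an assumption, consistent with droplet precautions being the default after a positive FRI screen.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Screen:
    """Hypothetical record of one patient's screening answers (yes/no)."""
    shortness_of_breath: bool
    cough: bool
    fever_or_chills: bool
    ili_positive: Optional[bool] = None  # None if no ILI screen was completed


def fri_positive(screen: Screen) -> bool:
    # FRI rule as described: (shortness of breath OR cough) AND (fever OR chills)
    return (screen.shortness_of_breath or screen.cough) and screen.fever_or_chills


def isolation_decision(screen: Screen) -> str:
    """Map screening results to the precautions described in the Methods."""
    if not fri_positive(screen):
        return "no respiratory isolation"
    if screen.ili_positive:
        # Airborne isolation was applied inconsistently, so it is only "possible".
        return "droplet, possibly airborne"
    # Negative (or missing, by assumption) ILI screen: droplet precautions maintained.
    return "droplet"


print(isolation_decision(Screen(shortness_of_breath=False, cough=True,
                                fever_or_chills=True, ili_positive=False)))
```

Keeping the FRI rule in its own function makes it easy to test the boolean logic separately from the isolation policy, which changed during the study period.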

Outcomes

The gold-standard outcome in the present study was laboratory-confirmed influenza by polymerase chain reaction (PCR). Influenza-specific PCR was performed on nasopharyngeal swab samples. PCR is widely considered to be the gold standard for influenza diagnosis (9,10). Multiplex PCR was not used routinely at TOH during this period, and data on other respiratory viruses were not collected. The secondary outcome was a clinical diagnosis of influenza, as recorded as the most responsible diagnosis on the hospital discharge summary.

Data collection

A list of consecutive adult patients admitted to hospital via the ED was obtained from The Ottawa Hospital Data Warehouse, a relational database that integrates information from several of TOH's main operational information systems. Extensive data-quality checks are run routinely as new data are loaded. All eligible patient encounters underwent chart review using an objective abstraction tool to record baseline characteristics, the treating physician's clinical diagnosis, the FRI and ILI results, and microbiological test results. Standard approaches were used to calculate test characteristics and 95% confidence limits (11). The Charlson Index was calculated using a validated method for administrative data (12); codes for 'complications' were excluded and only 'comorbid' diagnoses were used.
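Reference (11) compares several methods for the confidence interval of a single proportion. The sketch below assumes the Wilson score method without continuity correction, one of the methods Newcombe evaluates; the paper does not name the method, but this choice appears to reproduce the intervals in Table 2 to within rounding. The function name is illustrative.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple:
    """Two-sided Wilson score interval for a proportion (no continuity correction)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return centre - half_width, centre + half_width


# FRI specificity, primary analysis: 50 true negatives among 153 patients without influenza
low, high = wilson_interval(50, 153)
print(f"{50 / 153:.1%} (95% CI {low:.1%} to {high:.1%})")  # 32.7% (95% CI 25.8% to 40.5%)
```

Unlike the simple normal-approximation (Wald) interval, the Wilson interval stays within 0 to 1 and behaves better for the small denominators here (47 influenza cases).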

Analysis

In the primary analysis, test performance characteristics were calculated using laboratory-confirmed influenza as the gold standard. The primary analysis group consisted of the subset of the study cohort who underwent laboratory testing (200 of 391 patients). The secondary analysis included all patients in the study cohort (n=391) and used a clinical diagnosis of influenza, based on the hospital discharge summary, as the gold standard. This secondary analysis was intended to assess possible bias introduced by selective laboratory testing for influenza. Agreement between clinical and laboratory diagnoses of influenza was assessed using the kappa statistic (13).
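The kappa statistic compares observed agreement with the agreement expected by chance from the marginal totals. The sketch below computes Cohen's kappa for a square agreement table. The study did not publish its agreement table, so the counts in the example are hypothetical and serve only to show the calculation.

```python
def cohens_kappa(table: list) -> float:
    """Cohen's kappa for a square agreement table.

    table[i][j] counts patients placed in category i by one method
    (e.g. PCR result) and category j by the other (e.g. discharge diagnosis).
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    if n == 0:
        raise ValueError("table is empty")
    observed = sum(table[i][i] for i in range(k)) / n
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical counts for illustration only (not the study's data).
# Rows: PCR positive / negative; columns: clinical diagnosis yes / no.
example = [[20, 5], [10, 15]]
print(round(cohens_kappa(example), 3))  # 0.4
```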

RESULTS

Baseline characteristics are described in Table 1. Forty-seven of 200 (23.5%) patients in the primary analysis group had laboratory-confirmed influenza according to PCR analysis. Fifty-four of 391 (13.8%) patients in the secondary analysis group had a clinical diagnosis of influenza as documented on the hospital discharge summary.

TABLE 1.

Characteristics of the study cohort according to laboratory-confirmed and clinically diagnosed influenza cases

| Characteristic | Laboratory-confirmed influenza: yes (n=47) | Laboratory-confirmed influenza: no (n=153) | Clinical diagnosis of influenza: yes (n=54) | Clinical diagnosis of influenza: no (n=337) |
|---|---|---|---|---|
| Admitting service | | | | |
| Internal medicine | 30 (63.8) | 103 (67.3) | 37 (68.5) | 207 (61.4) |
| Respirology | ≤5 (10.6) | 7 (4.6) | ≤5 (5.6) | 32 (9.5) |
| Family medicine | ≤5 (4.3) | 13 (8.5) | ≤5 (3.7) | 21 (6.2) |
| Oncology | ≤5 (4.3) | ≤5 (3.3) | ≤5 (3.7) | 14 (4.2) |
| Critical care | ≤5 (4.3) | 7 (4.6) | ≤5 (3.7) | 11 (3.3) |
| Hematology | ≤5 (6.4) | ≤5 (3.3) | ≤5 (5.6) | 10 (3.0) |
| Nephrology | ≤5 (2.1) | ≤5 (1.3) | ≤5 (1.9) | 6 (1.8) |
| Obstetrics and gynecology | ≤5 (4.3) | ≤5 (1.3) | ≤5 (5.6) | ≤5 (0.9) |
| Other | n/a | 9 (5.8) | ≤5 (1.7) | 33 (9.7) |
| Sex | | | | |
| Female | 24 (51.1) | 84 (54.9) | 30 (55.6) | 180 (53.4) |
| Male | 23 (48.9) | 69 (45.1) | 24 (44.4) | 157 (46.6) |
| Charlson Index score | | | | |
| 0 | 23 (48.9) | 49 (32.0) | 28 (51.9) | 86 (25.5) |
| 1–2 | 18 (38.3) | 55 (35.9) | 16 (29.6) | 128 (38.0) |
| 3–4 | ≤5 (6.4) | 28 (18.3) | ≤5 (9.3) | 65 (19.3) |
| ≥5 | ≤5 (6.4) | 21 (13.7) | ≤5 (9.3) | 58 (17.2) |
| Admission month | | | | |
| October | 6 (12.8) | 20 (13.1) | 6 (11.1) | 49 (14.5) |
| November | 41 (87.2) | 96 (62.7) | 47 (87.0) | 183 (54.3) |
| December | 0 (0.0) | 37 (24.2) | ≤5 (1.9) | 105 (31.2) |
| Age, years, mean ± SD | 49.13 ± 17.36 | 66.87 ± 18.31 | 49.19 ± 18.69 | 67.93 ± 18.46 |
| Length of stay, days, mean ± SD | 7.94 ± 9.64 | 10.74 ± 13.02 | 7.91 ± 9.62 | 9.74 ± 11.45 |
| Intensive care unit admission | 11 (23.4) | 35 (22.9) | 11 (20.4) | 55 (16.3) |
| Risk-adjusted mortality, mean ± SD | 0.05 ± 0.07 | 0.15 ± 0.13 | 0.06 ± 0.07 | 0.14 ± 0.13 |

Data presented as n (%) unless otherwise indicated. Laboratory-confirmed analysis group, n=200; clinical diagnosis group, n=391. n/a, not applicable.

Compared with patients in whom laboratory tests were negative, patients with laboratory-confirmed influenza were younger, had a lower prevalence of comorbid conditions (according to Charlson Index), had shorter length of hospital stay and had lower baseline probability of in-hospital mortality.

Test characteristics of the FRI and ILI are reported in Table 2. In the primary analysis, the sensitivity and specificity of the FRI screening tool were 74.5% (95% CI 60.5% to 84.8%) and 32.7% (95% CI 25.8% to 40.5%), respectively. The sensitivity and specificity of the ILI screening tool were 75.6% (95% CI 61.3% to 85.8%) and 46.3% (95% CI 38.2% to 54.7%), respectively. In the secondary analysis (n=391), sensitivity and positive predictive values (PPVs) were similar to those in the primary analysis, whereas specificity was higher (61.1% for the FRI and 72.4% for the ILI).

TABLE 2.

Performance test characteristics of the febrile respiratory illness (FRI) and influenza-like illness (ILI) infection screening tools in primary and secondary analysis groups

| Screening tool | True positive, n | False positive, n | True negative, n | False negative, n | Sensitivity, % (95% CI) | Specificity, % (95% CI) | PPV, % | NPV, % | Positive likelihood ratio (95% CI) | Negative likelihood ratio (95% CI) |
|---|---|---|---|---|---|---|---|---|---|---|
| Laboratory-confirmed influenza (n=200) | | | | | | | | | | |
| FRI | 35 | 103 | 50 | 12 | 74.5 (60.5–84.8) | 32.7 (25.8–40.5) | 25.4 | 80.6 | 1.1 (0.92–1.36) | 0.78 (0.45–1.32) |
| ILI* | 34 | 73 | 63 | 11 | 75.6 (61.3–85.8) | 46.3 (38.2–54.7) | 31.8 | 85.1 | 1.4 (1.12–1.77) | 0.53 (0.31–0.91) |
| Clinical diagnosis of influenza (n=391) | | | | | | | | | | |
| FRI | 39 | 131 | 206 | 15 | 72.2 (59.1–82.3) | 61.1 (55.8–66.1) | 22.9 | 93.2 | 1.9 (1.50–2.30) | 0.45 (0.29–0.70) |
| ILI* | 38 | 87 | 229 | 14 | 73.1 (59.8–83.2) | 72.4 (67.3–77.1) | 30.4 | 94.2 | 2.7 (2.08–3.39) | 0.37 (0.24–0.58) |

*Totals in the ILI rows are smaller because not all patients screened with the FRI tool had an ILI screen completed. NPV, negative predictive value; PPV, positive predictive value.
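Each point estimate in Table 2 follows from the four cell counts. The sketch below recomputes them for the FRI in the laboratory-confirmed analysis; the `TwoByTwo` class is an illustrative name, not part of any cited software. It also shows where the "missed 26%" and "67% unnecessarily isolated" figures come from: they are 1 − sensitivity and 1 − specificity.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    tp: int  # screen positive, influenza present
    fp: int  # screen positive, influenza absent
    tn: int  # screen negative, influenza absent
    fn: int  # screen negative, influenza present

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        return self.tn / (self.tn + self.fn)

    def lr_positive(self) -> float:
        return self.sensitivity() / (1 - self.specificity())

    def lr_negative(self) -> float:
        return (1 - self.sensitivity()) / self.specificity()


# FRI, laboratory-confirmed analysis (counts from Table 2)
fri = TwoByTwo(tp=35, fp=103, tn=50, fn=12)
for name, value in [("Sensitivity", fri.sensitivity()), ("Specificity", fri.specificity()),
                    ("PPV", fri.ppv()), ("NPV", fri.npv())]:
    print(f"{name}: {value:.1%}")
print(f"LR+: {fri.lr_positive():.2f}, LR-: {fri.lr_negative():.2f}")
print(f"Influenza cases missed: {1 - fri.sensitivity():.1%}; "
      f"noninfluenza patients isolated: {1 - fri.specificity():.1%}")
```

The likelihood-ratio confidence intervals are not recomputed here because the paper does not state which interval method was used.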

The strength of agreement between the laboratory and clinical diagnoses of influenza was calculated among the 200 patients in the present study who had both a clinical diagnosis and microbiological testing. The kappa statistic was 0.799 (95% CI 0.70 to 0.90), which is considered to be ‘good agreement’ (13).

DISCUSSION

The FRI and ILI screening tools applied to hospitalized patients presenting to the ED during the pandemic H1N1 influenza season did not accurately identify which patients had influenza. The FRI screening tool missed 26% of laboratory-confirmed influenza cases, while 67% of noninfluenza patients were unnecessarily isolated. Misclassification of influenza cases in the ED has harmful consequences. As observed during the 2003 SARS epidemic, infected patients who were not isolated transmitted illness to other patients and health care workers (3,5). The consequences of nosocomial transmission during the SARS epidemic were severe (2): over a six-month period in 2003, the Ontario Ministry of Health and Long-Term Care reported 375 cases and 44 SARS-related deaths in Ontario (2). More than 1000 physicians, representing 5% of all physicians in Ontario, were quarantined during this time (14). At the same time, isolating noninfectious patients is associated with adverse clinical events and negative psychological consequences, including higher patient scores for anxiety and depression (15–17). Studies have also demonstrated that health care providers spend less time with, and perform physical examinations less frequently on, patients under isolation precautions (15,16). Unnecessary isolation is also financially wasteful and can lead to backlogs in the ED.

Studies of symptom-based prediction rules for influenza have found similar results in the outpatient population (18–25). Combining laboratory information with clinical findings may improve screening accuracy; Bewick et al (26) used this approach to derive a more accurate rule. Screening and diagnostic strategies that combine clinical data with rapid influenza diagnostic tests (RIDTs) may prove to be the optimal solution in the future. RIDTs, or 'point-of-care' tests, detect influenza viral proteins and provide results in ≤30 min (27). A variety of these tests are commercially available in Canada, but their sensitivity is poor (28); a recent study by Sutter et al (29) demonstrated their poor sensitivity compared with PCR. If RIDTs become less expensive and more accurate, they could play an important role in screening patients with FRI, informing decisions about isolation precautions and antiviral therapy within a clinically useful time frame and reducing both costs and the consequences of inappropriate isolation.

We also noted that patients with laboratory-confirmed influenza in the present study had a shorter length of hospital stay and lower risk-adjusted mortality (Table 1). This may be explained by the observation that patients who tested negative for influenza by PCR were older on average and had a higher burden of comorbidities (according to the Charlson Index) than patients with laboratory-confirmed influenza. The same pattern was seen in the secondary analysis group. Other confounding factors, such as specific respiratory and cardiac comorbidities and patient functional status, may also have contributed to the higher mortality and longer length of stay in the noninfluenza group.

Our study had several limitations. First, the retrospective methodology made it difficult to control for all residual confounding factors. For example, we did not analyze individual comorbidities, such as chronic respiratory conditions, malignancy, cardiac disease or pulmonary thromboembolic disease, in the cohort. These comorbidities could affect both the screening result (because their symptoms overlap with the screening criteria, making a positive screen more likely) and the likelihood of contracting influenza.

Second, our primary analysis group was a subset of the study cohort because only 200 of 391 patients were swabbed for influenza. Factors that influenced the decision to test patients for influenza, which we could not account for, may have introduced selection bias; this is a limitation of the retrospective design. To address this, we assessed agreement between the laboratory-confirmed and clinical diagnoses of influenza and found ‘good agreement’ according to the kappa statistic. In addition, sensitivities of the FRI tool were similar in the primary and secondary analysis groups, suggesting that any selection bias was small. A prospective study would better address residual confounding and bias, and is the logical next step in assessing the performance of the FRI and ILI screening tools in hospitals. We believed that a retrospective study was warranted first, given the lack of peer-reviewed data on screening tool performance in hospitalized patients.

Third, the present study was performed during a unique influenza season (pandemic H1N1) at a single academic hospital, where the prevalence of influenza was unusually high (23.5% in the laboratory-confirmed influenza group). Clinician awareness of influenza was also heightened, and testing may have been more frequent than in other influenza seasons. We therefore cannot generalize the performance characteristics of the FRI and ILI screening tools to all influenza seasons. Because PPV rises with prevalence, the PPVs of the FRI and ILI screening tools calculated in the present study likely represent upper estimates for other seasons; in low-prevalence seasons, the PPV of these questionnaires is likely to be lower, meaning that a patient with a positive screen would be less likely to have influenza. We are not aware of any data from other influenza seasons in which the performance of the FRI and ILI screening tools was evaluated in hospitalized patients. Future prospective research should examine influenza seasons with variable prevalence to better understand FRI screening tool performance.
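The dependence of PPV on prevalence follows from Bayes' theorem. The sketch below holds the primary-analysis FRI sensitivity (74.5%) and specificity (32.7%) fixed and varies prevalence. Assuming that sensitivity and specificity carry over unchanged to other seasons is a simplification made only for illustration, and the lower prevalence values are hypothetical.

```python
def ppv_at_prevalence(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value from sensitivity, specificity and prevalence (Bayes' theorem)."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# FRI, primary analysis; 23.5% is the observed prevalence, 10% and 5% are hypothetical
for prevalence in (0.235, 0.10, 0.05):
    print(f"prevalence {prevalence:.1%}: PPV {ppv_at_prevalence(0.745, 0.327, prevalence):.1%}")
```

At the observed prevalence this recovers the reported PPV of about 25%, and the PPV falls steadily as prevalence declines.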

Fourth, our institution was not routinely using multiplex PCR during the study period; therefore, infection or coinfection with other respiratory viruses may not have been detected. Based on viral culture results, we identified another respiratory virus in only two patients (2 of 200). However, because we did not systematically test for other pathogens, we did not include these data. Patients infected with other respiratory viruses would have been counted as noninfluenza cases despite having genuine respiratory infections, which may have reduced the apparent specificity and PPV of the FRI and ILI screening tools. Future work using multiplex PCR as the gold-standard viral diagnostic test is required to overcome this limitation.

Finally, some may argue that a sensitivity of 74.5% (95% CI 60.5% to 84.8%) is adequate for this type of infection screening tool. We believe that 75% (three of four) is too low for a contagious illness with substantial morbidity and mortality in a hospitalized population. To illustrate this, we calculated the pretest and post-test probabilities of influenza after a negative screen during the pandemic season. In our cohort of patients with laboratory-confirmed influenza, the prevalence of influenza was 23.5% and the negative likelihood ratio was 0.78; the post-test probability of influenza after a negative screen is therefore 19.3%. We consider a post-test probability >10% too high for cases missed by screening. However, if the prevalence of influenza in a given season is lower (for example, 5% or 10%), the post-test probability of influenza after a negative screen drops below 10%. Thus, while these screening tools may be more effective during periods of low influenza activity, they do not perform adequately during periods of greater influenza burden. We liken this example to that of tuberculosis (TB), another contagious respiratory disease. The tuberculin skin test is falsely negative 30% of the time when used to assess active TB (30), meaning that 30% of active cases would be missed and would represent a substantial transmission risk. Accordingly, guidelines have not advocated use of the tuberculin skin test to detect active TB (30). Transmission of viral illnesses in hospital can also cause significant morbidity and mortality, as seen with SARS; therefore, a sensitivity of 75% is inadequate.
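The post-test probability above comes from converting probability to odds, multiplying by the likelihood ratio and converting back. The sketch below applies the FRI negative likelihood ratio of 0.78 at the study prevalence and at the two lower prevalences mentioned; it assumes the likelihood ratio is the same at every prevalence, which is the usual simplification in this calculation.

```python
def post_test_probability(pretest: float, likelihood_ratio: float) -> float:
    """Update a pretest probability with a likelihood ratio via the odds form of Bayes' theorem."""
    pretest_odds = pretest / (1 - pretest)
    post_odds = pretest_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


# Negative FRI screen (LR- = 0.78) at the study prevalence and two lower prevalences
for prevalence in (0.235, 0.10, 0.05):
    print(f"pretest {prevalence:.1%} -> post-test {post_test_probability(prevalence, 0.78):.1%}")
# pretest 23.5% -> post-test 19.3%
# pretest 10.0% -> post-test 8.0%
# pretest 5.0% -> post-test 3.9%
```

Because the negative likelihood ratio is close to 1, a negative screen barely lowers the probability of influenza; the post-test probability falls below 10% only when the pretest probability was already low.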

Our study suggests that policy makers and infection-control practitioners should be cautious about relying on symptom-based screening for contagious respiratory illness in hospitalized patients. During periods of increased influenza prevalence, use of these screening tools in hospitals may lead to substantial misuse of isolation precautions, increased costs and the potential for nosocomial infection. Further prospective research is required to confirm the accuracy of infection screening tools and to inform current policies.

Acknowledgments

The authors acknowledge and thank the Ontario Thoracic Society and the sponsors of the Cameron C Gray Fellowship Award. Dr Sunita Mulpuru is supported by a 2011–2012 Cameron C Gray Fellowship Award from the Ontario Thoracic Society. SM, VRR and AJF contributed to the study design, data analysis and critical review of the manuscript. SM completed the data collection, chart review and initial draft of the manuscript. VRR and AJF provided content and methodological expertise. NL completed the chart abstraction from The Ottawa Hospital Data Warehouse. All authors gave final approval of the submitted version.

APPENDIX 1.

Sample of the febrile respiratory illness and influenza-like illness screening tools used at The Ottawa Hospital, Ottawa, Ontario

APPENDIX 2.

International Classification of Diseases, 10th Revision diagnostic codes used to identify the study cohort

| Category | ICD-10 codes |
|---|---|
| Respiratory conditions | All J codes |
| Infections and sepsis | A15–A19, A37, A40, A41, A41.5, A41.8, A49 |
| Cardiac conditions | I26, I28, I50, I51.4, R57 |
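The code list above can be applied to discharge diagnoses by prefix matching. The paper does not describe how the codes were queried from the Data Warehouse, so the sketch below is a hypothetical illustration: the dictionary, function names and category labels are assumptions, and dots are stripped so that "A41.5" and "A415" are treated the same. With prefix matching, "A41" already covers A41.5 and A41.8.

```python
from typing import Optional

# Code groups transcribed from Appendix 2 (hypothetical structure).
COHORT_CODE_PREFIXES = {
    "respiratory": ["J"],
    "infection or sepsis": ["A15", "A16", "A17", "A18", "A19", "A37", "A40", "A41", "A49"],
    "cardiac": ["I26", "I28", "I50", "I51.4", "R57"],
}


def _normalise(code: str) -> str:
    # Compare codes without dots or surrounding whitespace, in upper case
    return code.strip().upper().replace(".", "")


def cohort_category(icd10_code: str) -> Optional[str]:
    """Return the Appendix 2 category for a diagnosis code, or None if it is not eligible."""
    code = _normalise(icd10_code)
    for category, prefixes in COHORT_CODE_PREFIXES.items():
        if any(code.startswith(_normalise(prefix)) for prefix in prefixes):
            return category
    return None


for code in ["J18.9", "A41.5", "I51.4", "I21.0"]:
    print(code, "->", cohort_category(code))
```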

REFERENCES

1. Respiratory disease in Canada. Ottawa: Health Canada; 2001. <www.phac-aspc.gc.ca/publicat/rdc-mrc01/index-eng.php> (Accessed August 14, 2012).

2. Ontario Ministry of Health and Long-Term Care. Severe acute respiratory syndrome (SARS) press release. Apr, 2004. <www.health.gov.on.ca/en/public/publications/disease/sars.aspx> (Accessed August 14, 2012).

3. Dwosh HA, Hong HHL, Austgarden D, et al. Identification and containment of an outbreak of SARS in a community hospital. CMAJ. 2003;168:1415–20.

4. Shaw K. The 2003 SARS outbreak and its impact on infection control practices. Public Health. 2006;120:8–14. doi: 10.1016/j.puhe.2005.10.002.

5. Ontario Ministry of Health and Long-Term Care. SARS: Directive to all Ontario acute care facilities under outbreak conditions. Oct, 2003. <www.health.gov.on.ca/english/providers/program/pubhealth/sars/sars_mn.html> (Accessed August 14, 2012).

6. Provincial Infectious Diseases Advisory Committee (PIDAC). Preventing Febrile Respiratory Illnesses, Protecting patients and staff. Ministry of Health and Long-Term Care; Aug, 2006.

7. Public Health Agency of Canada (PHAC). Guidance: Infection Prevention and Control Measures for Healthcare Workers in Acute Care and Long-term Care Settings. Dec, 2010. <www.phac-aspc.gc.ca/nois-sinp/guide/ac-sa-eng.php> (Accessed August 14, 2012).

8. Ontario Ministry of Health and Long-Term Care (MOHLTC). Screening tool for influenza-like illness in the emergency department. Apr, 2009. <www.health.gov.on.ca/english/providers/program/emu/health_notices/ihn_gd_ed_042909.pdf> <www.health.gov.on.ca/english/providers/program/emu/health_notices/ihn_screening_tool_042909.pdf> (Accessed August 14, 2012).

9. Talbot HK, Williams JV, Zhu Y, Poehling KA, Griffin MR, Edwards KM. Failure of routine diagnostic methods to detect influenza in hospitalized older adults. Infect Control Hosp Epidemiol. 2010;31:683–8. doi: 10.1086/653202.

10. Lucas PM, Morgan OW, Gibbons TF, et al. Diagnosis of 2009 pandemic influenza A (pH1N1) and seasonal influenza using rapid influenza antigen tests, San Antonio, Texas, April–June 2009. Clin Infect Dis. 2011;52(Suppl 1):S116–22. doi: 10.1093/cid/ciq027.

11. Newcombe RG. Two-sided confidence intervals for the single proportion: Comparison of seven methods. Stat Med. 1998;17:857–72. doi: 10.1002/(sici)1097-0258(19980430)17:8<857::aid-sim777>3.0.co;2-e.

12. Quan H, Parsons GA, Ghali WA. Validity of information on comorbidity derived from ICD-9-CCM administrative data. Med Care. 2002;40:675–85. doi: 10.1097/00005650-200208000-00007.

13. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–74.

14. Kantarevic J, Kralj B, Weinkauf D. Excess Burden of Infectious Diseases: Evidence from the SARS outbreak in Ontario, Canada. Toronto: Ontario Medical Association; Jan 30, 2005.

15. Abad C, Fearday A, Safdar N. Adverse effects of isolation in hospitalised patients: A systematic review. J Hosp Infect. 2010;76:97–102. doi: 10.1016/j.jhin.2010.04.027.

16. Saint S, Higgins LA, Nallamothu BK, et al. Do physicians examine patients in contact isolation less frequently? Am J Infect Control. 2003;31:354–6. doi: 10.1016/s0196-6553(02)48250-8.

17. Stelfox HT, Bates DW, Redelmeier DA. Safety of patients isolated for infection control. JAMA. 2003;290:1899–905. doi: 10.1001/jama.290.14.1899.

18. Ebell MH, Afonso A. A systematic review of clinical decision rules for the diagnosis of influenza. Ann Fam Med. 2011;9:69–77. doi: 10.1370/afm.1192.

19. Stein J, Louie J, Fanders S, et al. Performance characteristics of clinical diagnosis, a clinical decision rule, and a rapid influenza test in the detection of influenza infection in a community sample of adults. Ann Emerg Med. 2005;46:412–9. doi: 10.1016/j.annemergmed.2005.05.020.

20. Govaert TM, Dinant GJ, Aretz K, Knottnerus JA. The predictive value of influenza symptomatology in elderly people. Fam Pract. 1998;15:16–22. doi: 10.1093/fampra/15.1.16.

21. Boivin G, Hardy I, Tellier G, et al. Predicting influenza infections during epidemics with use of a clinical case definition. Clin Infect Dis. 2000;31:1166–9. doi: 10.1086/317425.

22. Monto AS, Gravenstein S, Elliott M, et al. Clinical signs and symptoms predicting influenza infection. Arch Intern Med. 2000;160:3243–7. doi: 10.1001/archinte.160.21.3243.

23. van den Dool C, Hak E, Wallinga J, et al. Symptoms of influenza virus infection in hospitalized patients. Infect Control Hosp Epidemiol. 2008;29:314–9. doi: 10.1086/529211.

24. Walsh EE, Cox C, Falsey AR. Clinical features of influenza A virus infection in older hospitalized persons. J Am Geriatr Soc. 2002;50:1498–503. doi: 10.1046/j.1532-5415.2002.50404.x.

25. Call SA, Vollenweider MA, Hornung CA, et al. Does this patient have influenza? JAMA. 2005;293:987–97. doi: 10.1001/jama.293.8.987.

26. Bewick T, Myles P, Greenwood S, et al. Clinical and laboratory features distinguishing pandemic H1N1 influenza-related pneumonia from interpandemic community-acquired pneumonia in adults. Thorax. 2011;66:247–52. doi: 10.1136/thx.2010.151522.

27. Centers for Disease Control and Prevention. Interim Guidance for the Detection of Novel Influenza A Virus Using Rapid Influenza Diagnostic Tests. Aug, 2009. <www.cdc.gov/h1n1flu/guidance/rapid_testing.htm> (Accessed December 1, 2012).

28. Hatchette TF, Bastien N, Berry J, et al. The limitations of point of care testing for pandemic influenza: What clinicians and public health professionals need to know. Can J Public Health. 2009;100:204–7. doi: 10.1007/BF03405541.

29. Sutter DE, Worthy SA, Hensley DM, et al. Performance of five FDA-approved rapid antigen tests in the detection of 2009 H1N1 influenza A virus. J Med Virol. 2012;84:1699–702. doi: 10.1002/jmv.23374.

30. Canadian Tuberculosis Standards. 6th edn. Ottawa: Public Health Agency of Canada; 2007.