Abstract

This study used eye-tracking technology to explore how individuals with different levels of health literacy view health-related information. The authors recruited 25 university administrative staff (more likely to have adequate health literacy skills) and 25 adults enrolled in an adult literacy program (more likely to have limited health literacy skills). The authors administered the Newest Vital Sign (NVS) health literacy assessment to each participant. The assessment asks individuals to answer questions about a nutrition label while viewing the label. The authors used computerized eye-tracking technology to measure how long each participant spent fixating on nutrition label information that was relevant to the questions being asked and how long they spent viewing nonrelevant information. Results showed that lower NVS scores were significantly associated with more time spent on information not relevant for answering the NVS items. This finding suggests that health literacy measures should be able to distinguish individuals who have difficulty finding relevant information from those who have difficulty interpreting and using it. In addition, this finding suggests that health education materials should minimize nonrelevant information.

There are many definitions of health literacy, and the ways in which this concept is described and measured continue to evolve (Berkman, Davis, & McCormack, 2010). Berkman and colleagues (2010) suggested that, although numerous options exist, an optimal definition of health literacy should reflect the purpose of the study to be conducted. For this study, we used the definition outlined by the Institute of Medicine, which defined health literacy as an individual's ability to acquire, interpret, and use health information properly (Ad Hoc Committee on Health Literacy, 1999; Nielsen-Bohlman, Panzer, & Kindig, 2004). The purpose of our study was to understand how individuals with different health literacy capacities find and use health information presented in one of the available health literacy assessment instruments.

Limited health literacy is associated with a compromised understanding of health information and a decreased ability to adhere to prescriptions (Ad Hoc Committee on Health Literacy, 1999; National Center for Education Statistics, 2003; Nielsen-Bohlman et al., 2004). Patients with limited health literacy also have poorer overall health (National Center for Education Statistics, 2003) and a higher rate of hospitalizations (Safeer & Keenan, 2005). The National Assessment of Adult Literacy reported that approximately one third of American adults have limited health literacy skills, at the basic or below-basic level (National Center for Education Statistics, 2003). Limited health literacy is more common among older adults, members of ethnic minority groups, and those of low socioeconomic status (Ad Hoc Committee on Health Literacy, 1999; Nielsen-Bohlman et al., 2004).

Although the importance of health literacy is increasingly clear to health researchers and clinicians, it remains unclear why some individuals have limited health literacy while others do not, even when they have similar sociodemographic characteristics and educational attainment. For example, one study showed that among individuals attending a clinic that provides care to a low-income, predominantly Hispanic population, health literacy skills assessed with multiple instruments ranged from very low to very high, regardless of whether testing was conducted in English or Spanish (Weiss et al., 2005). The reason for such variability is unclear.

Additional research is needed to explain this variability, but the measurement tools used to assess health literacy may themselves contribute to it and merit further investigation. Healthy People 2020 highlights the importance of improving assessment of health literacy skills (U.S. Department of Health and Human Services, 2011), and although numerous measures of health literacy exist, they assess it in different ways and contexts. Some health literacy assessment instruments have been designed to evaluate knowledge specific to a particular health domain, such as mental health (Jorm, 2000) or oral health (Lee, Divaris, Baker, Rozier, & Vann Jr., 2012). Others, which are used to determine overall health literacy, include the Rapid Estimate of Adult Literacy in Medicine (REALM; Davis et al., 1993), the Test of Functional Health Literacy in Adults (TOFHLA; Parker, Baker, Williams, & Nurss, 1995), and the Newest Vital Sign (NVS; Weiss et al., 2005). These instruments determine health literacy levels using different combinations of reading, numeracy, and information-reasoning tasks of varying complexity. As a result, current measures differ in their approach, are limited in their ability to measure all aspects of health literacy, and do not provide a full understanding of how patients use the information they receive. In turn, this limits our ability to broaden knowledge of health literacy (Pleasant, McKinney, & Rikard, 2011).

The NVS has been used to assess patient health literacy (Escobedo & Weismuller, 2013; Osborn et al., 2007; Powers, Trinh, & Bosworth, 2010; Shah, West, Bremmeyr, & Savoy-Moore, 2010; VanGeest, Welch, & Weiner, 2010). The NVS relies on the patient's ability to search for and find information on a nutrition label and answer questions about that information. The information, however, is presented in a visual context that also includes content not needed to answer the questions. At present, we do not understand how a patient views the NVS nutrition label, that is, which information they look at to determine answers to the NVS questions and the extent to which they look at pertinent versus nonpertinent information. Understanding which content patients look at when completing the NVS may provide insight into how patients with different literacy skills develop their responses to the questions and suggest how health literacy could be better measured.

The present study explored the use of eye-tracking technology as a way to measure how individuals with different levels of health literacy attend to the information presented in the NVS nutrition label. Eye tracking assesses what people are looking at when they view an object and how long they spend looking at specific components of that object. We hypothesized that individuals with limited health literacy skills might view health-related documents such as the NVS nutrition label in a fundamentally different way (i.e., spend more time looking at and fixating on certain pieces of information) than individuals with adequate health literacy. We used eye-tracking technology in this exploratory study to test whether such differences exist. If they do, they might provide insights into differences in reading, literacy, and health literacy skills, and clues to how we might better design health information for patients and improve tools for assessing health literacy.

Materials and Methods

Sample

We purposefully recruited one group of participants more likely to have adequate health literacy skills and another group more likely to have limited health literacy skills. The first group consisted of 25 administrative staff working at a southwestern university. The second group was 25 students enrolled in an adult education program designed to increase literacy skills and encourage attainment of a general equivalency diploma (GED). All recruited participants spoke English.

Administrative staff members were recruited through an announcement on a campuswide event calendar; interested staff volunteered for the study by e-mailing the research assistants. GED program students were recruited through an announcement at their education center and were asked to contact their teachers if interested in participating.

Because some participants potentially had limited literacy, all participants gave oral consent to take part in the study after having the opportunity to read a consent document or have it read to them. Upon completion of the study, participants received a US $40 gift card to a local grocery retailer as compensation for their time. The university's Institutional Review Board approved all study procedures.

Health Literacy Assessment

Health literacy of participants was measured with the NVS (Weiss et al., 2005). The NVS is a bilingual (English and Spanish) health literacy assessment instrument that is used in the United States and which has also been translated and validated for use in other countries (Fransen, Van Schaik, Twickler, & Essink-Bot, 2011; Ozdemir, Alper, Uncu, & Bilgel, 2010; Rowlands et al., 2013). For this study, only the English version was used.

The NVS consists of six orally administered questions that an individual answers while viewing an ice cream nutrition label. Two of the questions involve finding information (e.g., identifying the presence of an ingredient), and four involve numerical calculations (e.g., computing caloric intake if several servings of the food are consumed) that require finding the necessary information on the label. Thus, the NVS assesses reading, numeracy, and comprehension of this information. Each correct answer receives one point, giving a total score of 0–6. A score of 0–1 reflects a 50% or greater likelihood of limited health literacy, a score of 2–3 indicates the possibility (25%) of limited health literacy, and a score of 4–6 reflects adequate health literacy. Scores on the NVS correlate well with scores on other, longer and more sophisticated health literacy measures (Weiss et al., 2005). Previous investigations have used the NVS as a stand-alone instrument to determine health literacy abilities in adults (Boxell et al., 2012; Gazmararian, Yang, Elon, Graham, & Parker, 2012; Shah et al., 2010; VanGeest et al., 2010).

After participants responded to the six standard NVS questions, we asked one additional question, “Which ingredient is there the most of?”, to provide an additional item that involved finding information without the need for calculations. Answers to this item were not included in the total NVS score.
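The scoring rule described above is simple enough to state as code. The following Python sketch sums one point per correctly answered standard item and maps the total to the three interpretive bands given in the text. The function and constant names are hypothetical (not part of any published NVS software), and the supplementary seventh item is deliberately left out of the total, as in this study.

```python
"""Illustrative sketch of Newest Vital Sign (NVS) scoring as described in the text.

Names are hypothetical; this is not official NVS scoring software.
"""

NVS_ITEM_COUNT = 6  # six standard NVS questions; supplementary items are excluded


def nvs_total(item_correct: list[bool]) -> int:
    """Return the NVS total: one point for each correctly answered standard item."""
    if len(item_correct) != NVS_ITEM_COUNT:
        raise ValueError(f"expected {NVS_ITEM_COUNT} item results, got {len(item_correct)}")
    return sum(item_correct)  # True counts as 1, False as 0


def nvs_band(total: int) -> str:
    """Map a 0-6 NVS total to the interpretive band used in the text."""
    if not 0 <= total <= NVS_ITEM_COUNT:
        raise ValueError("NVS total must be between 0 and 6")
    if total <= 1:
        return "high likelihood of limited health literacy"
    if total <= 3:
        return "possibility of limited health literacy"
    return "adequate health literacy"


if __name__ == "__main__":
    answers = [True, True, False, True, True, False]  # four correct answers
    score = nvs_total(answers)
    print(score, nvs_band(score))  # 4 adequate health literacy
```

Keeping the item count fixed at six makes it an error, rather than a silent score change, to pass in the extra “most of” item by mistake.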

Demographic Information

All participants provided verbal responses to orally administered demographic questions before viewing the NVS. This information included gender, self-identified race/ethnicity, age, and highest level of education attained (Table 1).

Table 1.

Demographic information of sample (N = 49)

| Characteristic | Number | % |
|---|---|---|
| Sex | | |
| Male | 12 | 24.5 |
| Female | 37 | 75.5 |
| Race/ethnicity | | |
| Non-Hispanic White | 22 | 44.9 |
| Hispanic | 14 | 28.6 |
| Non-Hispanic Black | 8 | 16.3 |
| Other* | 5 | 10.2 |
| Age (years) | | |
| 18–24 | 7 | 14.3 |
| 25–34 | 19 | 38.8 |
| 35–44 | 13 | 26.5 |
| 45–54 | 6 | 12.2 |
| 55–64 | 4 | 8.2 |
| 65+ | 0 | 0.0 |
| Education | | |
| Less than high school | 19 | 38.8 |
| Some college | 9 | 18.4 |
| 2-year college degree | 1 | 2.0 |
| 4-year college degree | 13 | 26.5 |
| Graduate degree (M.S., Ph.D., M.D.) | 7 | 14.3 |
| NVS score | | |
| 0–1 (high likelihood of limited literacy) | 9 | 18.4 |
| 2–3 (possibility of limited literacy) | 8 | 16.3 |
| 4–6 (adequate health literacy) | 32 | 65.3 |

*Self-identified as multiple races.

Eye Tracking

The present study used a Tobii T60 eye tracker and Tobii Studio software for data collection. This setup adjusts well to changes in participants' head position and eye level (Chien, 2011).

The eye-tracking procedure involves having an individual sit in a stationary chair in front of a computer monitor, with an infrared eye tracker built into the bottom of the monitor, and observe stimuli presented on the screen. This type of eye tracker does not require the participant to wear eye-tracking glasses or other head apparatus, which yields a less distracting and more natural eye-tracking experience. The participant selects the distance from the screen that they feel is most appropriate for reading small text on the monitor.

The participant's eye movements are then calibrated. This is done by having the individual follow a moving dot around the perimeter of the screen while the eye tracker evaluates this information and learns to identify the target of the participant's gaze. Calibration values that are not accepted by the software indicate an inability of the instrument to track a particular individual's eye movements—all participants in the present study met the calibration requirements.

The eye-tracking session then begins when a visual stimulus (in this case, the NVS nutrition label) is presented on the screen. The eye tracker records the participant's eye movements in real time, noting the gaze path and the length of time spent looking at various locations on the screen. These general data collection procedures have been used in previous eye-tracking research (Pasch, Velazquez, & Champlin, 2013).

We displayed the NVS nutrition label throughout the time that participants were being asked, and were answering, the questions. Each participant was tested individually in a quiet room, accompanied only by a research assistant.

Measures

To determine which components of the NVS nutrition label gained the most attention, we coded the nutrition label using the Area of Interest (AOI) tool in the Tobii Studio software. This tool lets the researcher use the computer cursor to draw a border of any shape around any piece of information or part of the image, so that every part of the image the participant might view can be selected and coded. We coded each informational/text piece of the NVS nutrition label as a separate AOI by drawing a box around it. The blank background space of the label, together with time spent looking off screen, was also coded as an AOI to capture time spent on nonrelevant space that contains no text. In total, we coded 18 AOIs (see Figure 1).

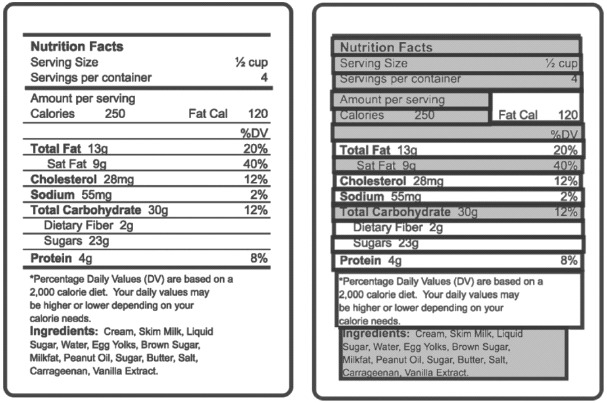

Figure 1.

The image on the left shows the Newest Vital Sign nutrition label as it appears in the health literacy assessment. The image on the right shows how the area of interest (AOI) tool was used in the Tobii Studio software to draw boxes around each piece of information on the NVS label. Each box is a separate AOI.

The software then provides data on the total time spent fixating on each AOI, where a fixation is defined as a period of slow eye movement during which gaze remains within a space of ≤35 pixels on the monitor, inside a single AOI. It also provides the number of fixations that occurred in each AOI. These measures are referred to as fixation duration and fixation count, respectively. These fixation variables reflect the attention and interest given to a visual stimulus and have been used in this way in previous research (Fox, Krugman, Fletcher, & Fischer, 1998; Pasch et al., 2013; Peterson, Thomsen, Lindsay, & John, 2010). Data for these two variables were recorded for each of the 18 AOIs.
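Tobii Studio computes fixations internally with its own fixation filter, which this sketch does not reproduce. Purely to illustrate how fixation duration and fixation count can be derived from raw gaze samples, the code below uses a simple dispersion-style rule: consecutive samples are grouped while they stay within 35 pixels of the group's centroid, and groups shorter than a minimum duration are discarded. The 100 ms minimum, the sample format, and all names are assumptions for illustration only.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class GazeSample:
    t_ms: float  # timestamp in milliseconds
    x: float     # horizontal screen position in pixels
    y: float     # vertical screen position in pixels


@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    x: float  # centroid of the grouped samples
    y: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def _centroid(group: list[GazeSample]) -> tuple[float, float]:
    n = len(group)
    return sum(s.x for s in group) / n, sum(s.y for s in group) / n


def detect_fixations(samples: list[GazeSample],
                     radius_px: float = 35.0,
                     min_duration_ms: float = 100.0) -> list[Fixation]:
    """Group consecutive gaze samples that stay within radius_px of their centroid.

    Hypothetical dispersion-style rule for illustration; NOT Tobii's fixation filter.
    """
    fixations: list[Fixation] = []
    group: list[GazeSample] = []
    # A trailing None acts as a sentinel so the final group is also evaluated.
    for sample in list(samples) + [None]:
        if sample is not None and group:
            cx, cy = _centroid(group)
            if hypot(sample.x - cx, sample.y - cy) <= radius_px:
                group.append(sample)
                continue
        # The current group has ended: keep it only if it lasted long enough.
        if group and group[-1].t_ms - group[0].t_ms >= min_duration_ms:
            cx, cy = _centroid(group)
            fixations.append(Fixation(group[0].t_ms, group[-1].t_ms, cx, cy))
        group = [sample] if sample is not None else []
    return fixations
```

Under this sketch, fixation count for a region is the number of returned fixations whose centroid falls in it, and fixation duration is the sum of their `duration_ms` values.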

The 18 AOIs were then coded as containing “relevant” or “nonrelevant” pieces of information based on whether the AOI element being viewed was needed to answer any of the six questions used in the NVS health literacy assessment (Figure 2). For example, on the NVS nutrition label, the “calories” information is needed to answer the first NVS question, and would therefore be coded as relevant information. In contrast, there is no NVS question asking about dietary fiber and the “dietary fiber” element on the NVS nutrition label would be coded as “nonrelevant.” Coding the pieces of health information on the NVS nutrition label in this way allowed us to examine whether individuals with different levels of literacy skill spent more time looking at information that is relevant vs. not relevant to a given task.
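The relevant/nonrelevant coding amounts to tagging each AOI and then summing fixation measures per tag. The sketch below assumes rectangular AOIs and fixations given as `(x, y, duration_s)` tuples; the AOI names and pixel coordinates are hypothetical, not the boxes actually drawn in Tobii Studio. Fixations that land in no text AOI are counted as background/off-screen, which the study coded as nonrelevant, and a fixation inside overlapping boxes is assigned to the first match (an assumption).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AOI:
    """Rectangular area of interest in screen pixels (hypothetical coordinates)."""
    name: str
    left: float
    top: float
    right: float
    bottom: float
    relevant: bool  # True if needed to answer at least one NVS item

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def summarize_by_relevance(fixations, aois):
    """Total fixation duration (s) and fixation count for relevant vs. nonrelevant content.

    fixations: iterable of (x, y, duration_s) tuples.
    """
    totals = {
        "relevant": {"duration_s": 0.0, "count": 0},
        "nonrelevant": {"duration_s": 0.0, "count": 0},
    }
    for x, y, duration_s in fixations:
        hit = next((aoi for aoi in aois if aoi.contains(x, y)), None)
        # No text AOI hit means background or off-screen, coded as nonrelevant.
        key = "relevant" if hit is not None and hit.relevant else "nonrelevant"
        totals[key]["duration_s"] += duration_s
        totals[key]["count"] += 1
    return totals


if __name__ == "__main__":
    label = [
        AOI("Calories", 0, 0, 200, 20, relevant=True),
        AOI("Dietary Fiber", 0, 30, 200, 50, relevant=False),
    ]
    print(summarize_by_relevance([(50, 10, 0.5), (50, 40, 0.25), (900, 900, 0.25)], label))
```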

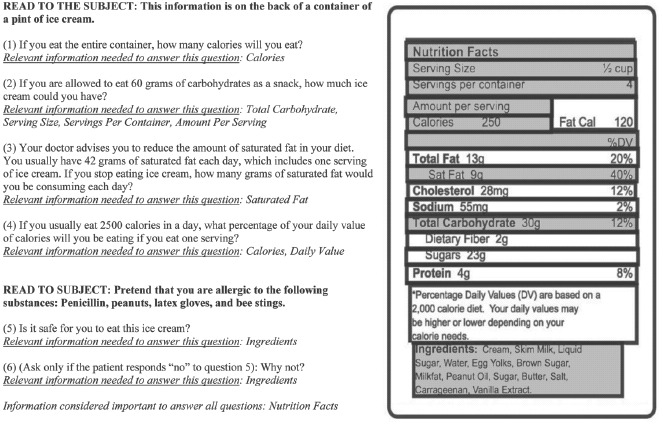

Figure 2.

The Newest Vital Sign (NVS) measure of health literacy: relevant and nonrelevant information. Details on the NVS nutrition label deemed relevant for answering each of the six NVS questions are highlighted in gray; details not relevant for answering NVS items are shown on the label in white.

Given that limited health literacy is more common among ethnic minorities and those with less than a high school education or a GED (Kirsh, Jungeblut, Jenkins, & Kolstad, 1993; Nielsen-Bohlman et al., 2004), we collected data on participants' race/ethnicity and highest level of education attained for inclusion as covariates in our analysis. Race/ethnicity options included non-Hispanic White, Hispanic, non-Hispanic Black, Asian American/Pacific Islander, American Indian/Alaskan Native, and other. Race/ethnicity was coded as White or non-White for analyses. Levels of education included less than high school, some college, 2-year college degree, 4-year college degree, master's degree, doctorate degree, and professional degree. For analyses, master's degree, doctorate degree, and professional degree were all coded as graduate-level education.
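The covariate recoding described above reduces to two small mapping rules. This sketch assumes responses are stored as the option labels listed in the text; the function and constant names are hypothetical, and how the recoded education variable was entered into the regression model is not specified here.

```python
# Hypothetical recoding helpers; option strings follow the labels listed in the text.
GRADUATE_LEVELS = {"master's degree", "doctorate degree", "professional degree"}


def code_race(response: str) -> str:
    """Collapse self-reported race/ethnicity into the two analysis groups."""
    return "White" if response == "non-Hispanic White" else "non-White"


def code_education(response: str) -> str:
    """Merge the three postgraduate options into one graduate-level category."""
    return "graduate-level education" if response in GRADUATE_LEVELS else response


if __name__ == "__main__":
    print(code_race("Hispanic"), code_education("doctorate degree"))
```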

Results

Participant Characteristics

Of the 50 individuals who volunteered to participate in the study, data were available for only 49 because the eye-tracking software crashed during one participant session and data could not be recovered. Of the remaining 49 participants, the majority (65.3%) had an NVS score of four or greater, indicating adequate health literacy, while 16.3% had an NVS score of 2–3, indicating possibility of limited literacy, and 18.4% had an NVS score of 0–1, indicating high likelihood of limited literacy.

Eye-Tracking Results

A paired-samples t test showed significant differences in average fixation duration (p < .05) and average fixation count (p < .05) between relevant and nonrelevant information: on average, participants spent more time (M = 70.0 s) and made more fixations (M = 170.5) on relevant information than on nonrelevant information (M = 58.2 s and M = 145.1, respectively).
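Because each participant contributes both a relevant and a nonrelevant total, this comparison is a within-subject (paired) test. A minimal sketch of the paired-samples t statistic, assuming the two lists are aligned by participant; the function name is hypothetical, and the study's own analyses were run in SPSS. `scipy.stats.ttest_rel` computes the same statistic together with a p value.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(relevant: list[float], nonrelevant: list[float]) -> tuple[float, int]:
    """Paired-samples t statistic and degrees of freedom.

    Position i in both lists must hold the same participant's values.
    """
    if len(relevant) != len(nonrelevant) or len(relevant) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    diffs = [r - n for r, n in zip(relevant, nonrelevant)]
    # Standard error of the mean difference; stdev uses the n - 1 denominator.
    # (Raises ZeroDivisionError if every difference is identical.)
    standard_error = stdev(diffs) / sqrt(len(diffs))
    return mean(diffs) / standard_error, len(diffs) - 1


if __name__ == "__main__":
    t, df = paired_t([5, 6, 7, 8], [4, 4, 6, 6])
    print(round(t, 3), df)
```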

We then performed a bivariate analysis using Pearson correlation coefficients to determine whether participants' NVS scores were associated with fixation duration and fixation count on relevant and nonrelevant information on the nutrition label. Participant NVS score and fixation duration on nonrelevant information showed a statistically significant negative association (r = −.408, p < .005). We next performed a linear regression analysis to determine whether this relationship remained significant after controlling for race and highest level of education attained. A p value of ≤ .05 was considered significant. These analyses were performed using SPSS version 20.0.
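The bivariate correlation can be computed directly from paired per-participant values. This sketch implements the Pearson coefficient and the standard conversion t = r·√(n − 2)/√(1 − r²) used to test r against zero. Function names are hypothetical; the study used SPSS, and Python 3.10+ also provides `statistics.correlation` for the coefficient itself.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with at least three values")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic (df = n - 2) for testing whether r differs from zero."""
    return r * sqrt(n - 2) / sqrt(1 - r ** 2)


if __name__ == "__main__":
    # Reported values: r = -.408 with n = 49 participants.
    print(round(r_to_t(-0.408, 49), 2))
```

Plugging in the reported r = −.408 and n = 49 gives t ≈ −3.06 on 47 degrees of freedom.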

Fixation Duration

Participants spent an average total of 128.2 seconds (SD = 37.7) fixating on the NVS nutrition label, with a range from 73.4 to 264.5 seconds. Total fixation duration on the label was not correlated with NVS score and did not differ significantly across levels of health literacy. An average of 70.0 seconds (SD = 26.9) was spent on relevant NVS information, and an average of 58.2 seconds (SD = 22.3) on nonrelevant information.

Fixation Count

The average total number of fixations on the NVS nutrition label was 315.6 (SD = 96.4), with a range from 176 to 569. Total fixation count was not correlated with NVS score and did not differ significantly across levels of health literacy. An average of 170.5 fixations (SD = 65.1) were on relevant information and 145.1 (SD = 61.0) on nonrelevant information.

Association Between NVS Score and Fixation

NVS health literacy score was negatively associated with fixation duration on nonrelevant information: in the bivariate analysis, each one-point decrease in NVS score corresponded to a 4.21-second increase in time spent looking at nonrelevant information (p < .005). This association remained significant after controlling for race and highest level of education in the multiple regression analysis (B = −4.690, β = −.454, t(45) = −2.267, p < .05). NVS score was not significantly associated with fixation duration or fixation count on relevant information, nor with fixation count on nonrelevant information.
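The unadjusted “seconds per NVS point” figure is the slope of a least-squares line predicting nonrelevant fixation duration from NVS score (negative, because time rises as score falls). The sketch below fits that single-predictor line; the name is hypothetical, and it does not reproduce the adjusted estimate (B = −4.690), which comes from a multiple regression that also includes race and education.

```python
def ols_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Least-squares slope and intercept for predicting y from a single predictor x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length samples with at least two values")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx


if __name__ == "__main__":
    slope, intercept = ols_line([0, 1, 2, 3], [10, 8, 6, 4])
    print(slope, intercept)
```

With the reported unadjusted slope of about −4.21 s per point, a four-point gap in NVS score (for example, 2 versus 6) corresponds to roughly 4 × 4.21 ≈ 16.8 more seconds of predicted fixation on nonrelevant information.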

Discussion

Key Findings

To our knowledge, this exploratory study is the first to use eye-tracking technology to investigate whether people with different health literacy capacities view information in different ways. The most important finding was that NVS score was not related to the amount of time spent looking at relevant information; rather, poorer health literacy skills as measured by the NVS were significantly associated with longer fixation on nonrelevant information.

In addition, although poorer health literacy skills were significantly associated with longer fixation duration on nonrelevant information, they were not significantly associated with fixation count on nonrelevant information. This pattern suggests that participants with lower health literacy scores may look at a single spot, even an irrelevant one, for longer periods than participants with higher scores.

Implications

Our results suggest that health literacy measurement tools could be designed to better discriminate between patients who struggle to find relevant health information and those who have difficulty understanding and applying that information once they have found it. Some individuals who score as having inadequate health literacy on assessment instruments may have greater difficulty distinguishing useful health information from information that is not useful, rather than simply having difficulty understanding it. Our current measures of health literacy fall short of making this distinction.

The difference between having difficulty finding versus understanding information also has implications for the definition of health literacy. As definitions of health literacy continue to evolve (Berkman et al., 2010), these findings suggest that the ability to find health information should be an important component of how health literacy is defined.

The present study also offers an innovative method for exploring how patients view health information. To our knowledge, this study is the first to use a real-time analysis approach (eye tracking) to understand what participants look at when they search health-related visual content. This approach has many potential applications for improving health literacy measurement, such as examining the ability to find and the ability to interpret health information separately. Beyond examining what people look at when viewing the NVS, as this study did, future research should also consider the sequential order in which individuals with different health literacy capacities scan the NVS stimulus.

Perhaps most important, our findings suggest that health information for patients should be designed to help them better distinguish important from unimportant information. One way to do this might be to use bold or colored font to emphasize key health details. Although the most important information in any given source of health information will vary with a variety of individual factors, the content most likely to matter to the greatest number of people can often be determined and emphasized in the presentation, for example, by highlighting key nutrition facts on food packaging in a way that leads consumers to full nutrition information (Schor, Maniscalco, Tuttle, Alligood, & Reinhardt Kapsak, 2010). In addition, health care providers should be trained to direct patients to the most essential health information, tailoring advice beyond what is possible with print materials alone. In doing so, providers can increase the likelihood that patients connect directly with important health information and can save valuable appointment time. There are well-recognized guidelines for developing patient-friendly materials (Doak, Doak, & Root, 1996; National Cancer Institute, 2003; Weiss, 2007), but research using eye-tracking technology has the potential to help us further improve health education materials for patients. There is also evidence that materials designed to meet the needs of populations with lower health literacy are deemed acceptable by audiences with higher health literacy (Mackert, Whitten, & Garcia, 2008), so the practical implications of this work may benefit the effective communication of health information broadly.

Limitations

Our findings should be interpreted in light of several limitations of this preliminary study. First, the study included only a small number of participants, who were recruited using convenience sampling techniques. However, the sample was relatively large compared with many other eye-tracking studies, and despite the limited statistical power associated with a small sample, we were able to demonstrate meaningful and statistically significant differences in how individuals with higher versus lower health literacy view health information.

Second, we classified participants' health literacy skills solely on the basis of their NVS scores. The NVS, like other health literacy measures such as the REALM (Davis et al., 1993) and the TOFHLA (Parker et al., 1995), is not a definitive measure of general or health literacy, as each of these instruments measures a somewhat different aspect of literacy skill and cognitive function (Osborn et al., 2007). Thus, further investigation is needed to better understand the precise relationship of information finding, as measured by eye tracking, to health literacy. In addition, education was measured with answer options that grouped all participants with less than a high school education into one category. A more nuanced measure of educational attainment would be beneficial, as there may be important differences in health literacy among those who have not completed high school, and these may vary by highest grade completed.

Conclusions

Despite study limitations, this research advances understanding of health literacy measurement and of how such instruments assess health literacy. Specifically, participants with limited health literacy skills did not spend significantly different amounts of time viewing relevant information, but they spent more time viewing nonrelevant information. This suggests that when health information for patients is designed, whether it is a nutrition label or some other form of information, layouts that direct the eye toward the most important and relevant content may make it easier and quicker for patients to find what they need to know. More significant, it points to a need to better understand what health literacy instruments actually assess; better understanding of existing instruments is a key step toward designing better health literacy instruments in the future.

References

- Ad Hoc Committee on Health Literacy. Health literacy: Report of the Council on Scientific Affairs. JAMA. 1999;281:552–557.

- Berkman N. D., Davis T. C., McCormack L. Health literacy: What is it? Journal of Health Communication. 2010;15(Suppl. 2):9–19. doi: 10.1080/10810730.2010.499985.

- Boxell E. M., Smith S. G., Morris M., Kummer S., Rowlands G., Waller J., Simon A. E. Increasing awareness of gynecological cancer symptoms and reducing barriers to medical help seeking: Does health literacy play a role? Journal of Health Communication. 2012;17(Suppl. 3):265–279. doi: 10.1080/10810730.2012.712617.

- Chien S. H. No more top-heavy bias: Infants and adults prefer upright faces but not top-heavy geometric or face-like patterns. Journal of Vision. 2011;11(6):1–14. doi: 10.1167/11.6.13.

- Davis T. C., Long S. W., Jackson R. H., Mayeaux E. J., George R. B., Murphy P. W., Crouch M. A. Rapid Estimate of Adult Literacy in Medicine: A shortened screening instrument. Family Medicine. 1993;25:391–395.

- Doak C. C., Doak L. G., Root J. Teaching patients with low literacy skills. 2nd ed. Philadelphia, PA: Lippincott; 1996.

- Escobedo W., Weismuller P. Assessing health literacy in renal failure and kidney transplant patients. Progress in Transplantation. 2013;23(1):47–54. doi: 10.7182/pit2013473.

- Fox R. J., Krugman D. M., Fletcher J. E., Fischer P. M. Adolescents' attention to beer and cigarette print ads and associated product warnings. Journal of Advertising. 1998;27(3):57–68.

- Fransen M. P., Van Schaik T. M., Twickler T. B., Essink-Bot M. L. Applicability of internationally available health literacy measures in the Netherlands. Journal of Health Communication. 2011;16(Suppl. 3):134–149. doi: 10.1080/10810730.2011.604383.

- Gazmararian J. A., Yang B., Elon L., Graham M., Parker R. Successful enrollment in Text4Baby more likely with higher health literacy. Journal of Health Communication. 2012;17(Suppl. 3):303–311. doi: 10.1080/10810730.2012.712618.

- Jorm A. F. Mental health literacy: Public knowledge and beliefs about mental disorders. British Journal of Psychiatry. 2000;177:396–401. doi: 10.1192/bjp.177.5.396.

- Kirsh I., Jungeblut A., Jenkins L., Kolstad A. Adult literacy in America: A first look at the results of the National Adult Literacy Survey. Washington, DC: National Center for Education Statistics, U.S. Department of Education; 1993.

- Lee J. Y., Divaris K., Baker D., Rozier G., Vann W. F., Jr. The relationship of oral health literacy and self-efficacy with oral health status and dental neglect. American Journal of Public Health. 2012;102:923–929. doi: 10.2105/AJPH.2011.300291.

- Mackert M., Whitten P., Garcia A. Evaluating e-health interventions designed for low health literate audiences. Journal of Computer-Mediated Communication. 2008;13:504–515.

- National Cancer Institute. Clear & simple: Developing effective print materials for low-literate readers. 2003. Retrieved from http://www.cancer.gov/cancertopics/cancerlibrary/clear-and-simple/AllPages.

- National Center for Education Statistics. National Assessment of Adult Literacy. 2003. Retrieved from http://nces.ed.gov/naal/kf_demographics.asp.

- Nielsen-Bohlman L., Panzer A., Kindig D., editors. Health literacy: A prescription to end confusion. Washington, DC: National Academy of Sciences; 2004.

- Osborn C. Y., Weiss B. D., Davis T. C., Skripkauskas S., Rodrigue C., Bass P. F., Wolf M. S. Measuring adult literacy in health care: Performance of the Newest Vital Sign. American Journal of Health Behavior. 2007;31(Suppl. 1):S36–S46. doi: 10.5555/ajhb.2007.31.supp.S36.

- Ozdemir H., Alper Z., Uncu Y., Bilgel N. Health literacy among adults: A study from Turkey. Health Education Research. 2010;25:464–477. doi: 10.1093/her/cyp068.

- Parker R. M., Baker D. W., Williams M. V., Nurss J. R. The Test of Functional Health Literacy in Adults: A new instrument for measuring patients' literacy skills. Journal of General Internal Medicine. 1995;10:537–541. doi: 10.1007/BF02640361.

- Pasch K. E., Velazquez C. E., Champlin S. E. A multi-method study to understand how youth perceive and evaluate food and beverage advertisements. In: Williams J. D., Pasch K. E., Collins C., editors. Advances in communication research to reduce childhood obesity. New York, NY: Springer; 2013. pp. 243–266.

- Peterson E. B., Thomsen S., Lindsay G., John K. Adolescents' attention to traditional and graphic tobacco warning labels: An eye-tracking approach. Journal of Drug Education. 2010;40:227–244. doi: 10.2190/DE.40.3.b.

- Pleasant A., McKinney J., Rikard R. V. Health literacy measurement: A proposed research agenda. Journal of Health Communication. 2011;16(Suppl. 3):11–21. doi: 10.1080/10810730.2011.604392.

- Powers B. J., Trinh J. V., Bosworth H. B. Can this patient read and understand written health information? JAMA. 2010;304(1):76–84. doi: 10.1001/jama.2010.896.

- Rowlands G., Khazaezadeh N., Oreng-Ntim E., Seed P., Barr S., Weiss B. D. Development and validation of a measure of health literacy in the UK: The Newest Vital Sign. BMC Public Health. 2013;13:116. doi: 10.1186/1471-2458-13-116.

- Safeer R. S., Keenan J. Health literacy: The gap between physicians and patients. American Family Physician. 2005;72:463–468.

- Schor D., Maniscalco S., Tuttle M. M., Alligood S., Reinhardt Kapsak W. Nutrition facts you can't miss: The evolution of front-of-pack labeling: Providing consumers with tools to help select foods and beverages to encourage more healthful diets. Nutrition Today. 2010;45(1):22–32.

- Shah L. C., West P., Bremmeyr K., Savoy-Moore R. T. Health literacy instrument in family medicine: The “Newest Vital Sign” ease of use and correlates. Journal of the American Board of Family Medicine. 2010;23:195–203. doi: 10.3122/jabfm.2010.02.070278.

- U.S. Department of Health and Human Services. Healthy People 2020: Improving the Health of Americans. 2011. Retrieved from http://www.healthypeople.gov/2020/default.aspx.

- VanGeest J. B., Welch V. L., Weiner S. J. Patients' perceptions of screening for health literacy: Reactions to the Newest Vital Sign. Journal of Health Communication. 2010;15:402–412. doi: 10.1080/10810731003753117.

- Weiss B. D. Health literacy and patient safety: Help patients understand: A manual for clinicians. 2nd ed. Chicago, IL: American Medical Association Foundation and American Medical Association; 2007.

- Weiss B. D., Mays M. Z., Martz W., Castro K. M., DeWalt D. A., Pignone M. P., Hale F. A. Quick assessment of literacy in primary care: The Newest Vital Sign. Annals of Family Medicine. 2005;3:514–522. doi: 10.1370/afm.405.