Abstract

Males have been suggested to have an advantage over females in responding to child facial resemblance, reflecting evolutionary pressure on males to solve the adaptive problem of paternity uncertainty and to identify their biological offspring. However, previous studies have yielded inconsistent results, and whether males have an advantage in perceiving child facial resemblance, as a kin detection mechanism, remains unclear. Here we used the ERP technique to investigate the behavioral and brain mechanisms underlying the processing of self-resembling faces and how this processing interacts with sex and age. The results showed a stable male advantage in processing self-resembling child faces: males showed higher detectability of self-resembling child faces than females. In the ERP results, males showed smaller N2 and larger LPC amplitudes for self-resembling child faces, which may reflect face matching and self-referential processing in kin detection, respectively. Source analysis further showed that the N2 and LPC components originated from the anterior cingulate cortex and the medial frontal gyrus, respectively. Our results support a male advantage in detecting self-resembling children and further indicate that this difference emerges at both early and late processing stages, in different brain regions.

Keywords: Kin recognition, Facial resemblance, Parental uncertainty, Paternity cue

1. Introduction

Previous research has suggested that the detection of genetic relatedness modulates social attribution, mate preference, and social behavior (DeBruine, 2002, 2005; DeBruine, Jones, Little, & Perrett, 2008; Hauber & Sherman, 2001; Neff & Sherman, 2002). Facial resemblance, as a cue for human kin detection, can help us identify kinship relationships (Alexandra Alvergne, Faurie, & Raymond, 2007; Bressan & Grassi, 2004). Inclusive fitness theory (Hamilton, 1964) predicts that facial resemblance will increase prosocial behaviors and attributions, such as investment, trustworthiness, and general attractiveness, as several behavioral studies have demonstrated (A. Alvergne, Faurie, & Raymond, 2009; DeBruine, 2002, 2004a). Brain imaging studies have also shown that trustworthiness ratings of self-resembling faces activate reward-related brain regions, such as the ventral superior frontal gyrus, right ventral inferior frontal gyrus, and left medial frontal gyrus (Platek, Krill, & Wilson, 2009). Accordingly, self-resemblance has been characterized as a kin detection cue associated with more positive social attributions. Several findings have demonstrated that people are fairly accurate in detecting the genetic relatedness of faces in face-matching tasks (Alexandra Alvergne et al., 2007; Bredart & French, 1999; Bressan & Dal Martello, 2002; Bressan & Grassi, 2004; G. Kaminski, Dridi, Graff, & Gentaz, 2009).

Age is an important factor in facial resemblance detection. A consistent finding is the own-age bias, that is, people are better at discriminating faces of their own age group (Anastasi & Rhodes, 2005; Harrison & Hole, 2009; Hills & Lewis, 2011; Melinder, Gredeback, Westerlund, & Nelson, 2010). This influence of age has also been found to interact with sex (Rehnman & Herlitz, 2006). Based on parental investment theory and the asymmetry in parental certainty, males are expected to have evolved greater sensitivity to self-facial resemblance than females as a means of identifying offspring (Bressan, 2002). Paternity uncertainty predicts that males need more cues of genetic relatedness to identify their offspring: unlike females, for whom maternity is inherently certain, males face greater uncertainty about their offspring and are thus thought to have evolved sensitivity to parent-child facial resemblance. Some previous research on self-resembling face processing has confirmed such a male advantage in discriminating self-resembling child faces. For example, males gave higher attractiveness ratings, parental investment, and adoption decisions to self-morphed children (Platek, Burch, Panyavin, Wasserman, & Gallup, 2002; Platek et al., 2003). Another study showed that actual parent-child facial resemblance predicted fathers' but not mothers' investment decisions (A. Alvergne, Faurie, & Raymond, 2010). Furthermore, functional MRI studies of self-resembling face processing found that males show stronger cortical responses to self-resembling child faces than females do (Platek, Keenan, Gallup, & Mohamed, 2004; Platek, Keenan, & Mohamed, 2005; Platek, Raines, et al., 2004). However, other studies manipulating facial resemblance have shown inconsistent results. 
For example, research investigating the link between facial resemblance and social perception (i.e., trust and attractiveness) with morphed self-resembling child faces did not find any sex differences (Bressan, Bertamini, Nalli, & Zanutto, 2009; DeBruine, 2004b, 2005). In research using real family photographs, both males and females reported higher closeness and altruism ratings toward siblings who more closely resembled them (Lewis, 2011; Platek et al., 2003). A preference for self-resemblance was also found in parental investment decisions for both males and females, although this preference was modulated by mate retention behaviors in males only (Welling, Burriss, & Puts, 2011). Moreover, a study using morphed self-resembling faces found that females actually showed a stronger preference for self-resembling child faces than males (Bressan et al., 2009). Thus, the sex difference in self-resembling child face processing remains controversial.

Despite these inconsistencies, a common view is that self-resembling face processing involves not only physical face processing and familiarity discrimination but also self-referent phenotype matching (i.e., kin detection) (Burch & Gallup, 2000; Daly & Wilson, 1982; DeBruine et al., 2008; Platek et al., 2002; Platek et al., 2003; Platek et al., 2005). For example, fMRI studies have shown that facial resemblance detection activates the anterior cingulate cortex (ACC) and medial prefrontal cortex (MPFC) (Platek et al., 2005; Platek, Krill, & Kemp, 2008), two brain regions related to self-referential processing (Bartels & Zeki, 2004). However, behavioral studies cannot readily dissociate these processes, particularly their temporal dynamics. Event-related potentials (ERPs) may provide powerful evidence on this issue, because their high temporal resolution can help differentiate early and late stages of self-resembling face processing. Although, to the best of our knowledge, no ERP study has directly investigated sex differences in self-resembling face processing, studies in other fields have identified distinct ERP components for face perception, familiarity, and self-referential processing (Hu, Wu, & Fu, 2011; Ma & Han, 2009). First, early physical face processing is associated with the N170, which reflects perceptual encoding of faces (Batty & Taylor, 2003; Eimer & Holmes, 2002; Henson et al., 2003; Itier & Taylor, 2004; Jemel et al., 2003; Rossion et al., 2000). Second, familiarity discrimination is associated with the P300 (or late positive component, LPC): familiar stimuli usually evoke a larger P300 than unfamiliar or unrelated stimuli (Meijer, Smulders, Merckelbach, & Wolf, 2007; Miyakoshi, Kanayama, Iidaka, & Ohira, 2010; Ninomiya, Onitsuka, Chen, Sato, & Tashiro, 1998; Rosenfeld, Shue, & Singer, 2007). 
Third, self-referential processing is associated with more positive-going potentials from 220 to 500 ms (i.e., the N2 and P300) (Su et al., 2006, 2010). For instance, an ERP study demonstrated that mothers showed more positive amplitudes for their own children's faces, from a very early component (100–200 ms) through the LPC (Grasso, Moser, Dozier, & Simons, 2009). Similar positive-going potentials were also found for parents' faces (Grasso & Simons, 2011). These findings suggest that brain responses discriminate genetically related people from others at stages ranging from early physical processing to later self-referential and familiarity processing.

The aim of the present study was to use a self-resemblance judgment task to investigate the time course of self-resembling face processing and how it interacts with age and sex. We hypothesized that the male advantage in detecting self-resembling child faces would be reflected not only in behavioral responses but also in ERP components at early and later stages, such as the N2 and LPC.

2. Methods

2.1 Participants

Forty-one right-handed native Chinese speakers (21 males, mean age = 23.52 years; 20 females, mean age = 25.65 years) participated in the study for payment. All subjects signed a written informed consent form approved by the IRB of Beijing Normal University.

2.2 Stimuli

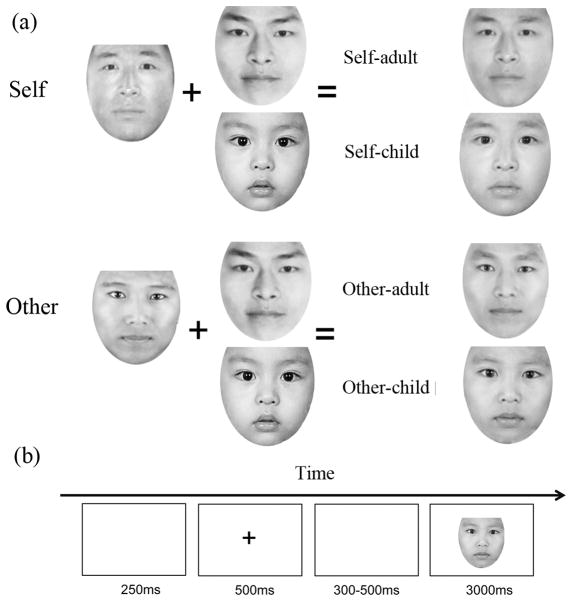

A full-face photograph of each subject was taken before the formal study; subjects were asked to maintain a neutral expression when facing the camera. We created four experimental conditions (self-child, self-adult, other-child, and other-adult) by morphing each subject's face with a neutral-expression adult face (a 23-year-old male or a 23-year-old female face, matched to the subject's gender) and, separately, with a 1.5-year-old child face (DeBruine, 2004b; Platek et al., 2002; Platek et al., 2005; Platek, Raines, et al., 2004) (Figure 1). To rule out an effect of the child face's gender, subjects rated its gender on a 5-point scale (1 = a girl, 2 = maybe a girl, 3 = not sure, 4 = maybe a boy, 5 = a boy); both male (mean rating = 3.17, SD = 1.47) and female (mean rating = 2.69, SD = 1.13) subjects were uncertain of its gender. Therefore, the same other-adult and other-child faces were used for all subjects of a given sex. All faces were converted to black and white in Adobe Photoshop CS, and only the internal facial features were retained. Abrosoft FantaMorph (www.fantamorph.com) was then used to create 50% morphs, as in previous studies (Platek et al., 2003; Platek et al., 2005; Platek & Kemp, 2009; Platek, Raines, et al., 2004). Thirty calibration points were used to place each morph in a standard face space, and all morphs were resized to 300 × 300 dpi. All stimuli were presented on a 17-inch Dell monitor (1024 × 768 pixels, 60 Hz refresh rate); the face images subtended a visual angle of 4.3° × 4.6°, and mean stimulus luminance was 166 cd/m².
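What a "50% morph" means in shape space can be illustrated with a short sketch. It assumes that morphing software such as FantaMorph places each output landmark at a weighted average of the corresponding landmarks of the two source faces; the function name `blend_landmarks` and the toy coordinates are hypothetical, and the pixel warping and cross-dissolve that real morphing software also performs are omitted.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def blend_landmarks(face_a: List[Point], face_b: List[Point], weight: float = 0.5) -> List[Point]:
    """Linearly interpolate corresponding landmarks of two faces.

    weight is the contribution of face_b (0 -> face_a only, 1 -> face_b only);
    weight = 0.5 corresponds to a 50% shape morph.
    """
    if len(face_a) != len(face_b):
        raise ValueError("faces must have the same number of landmarks")
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return [
        ((1 - weight) * xa + weight * xb, (1 - weight) * ya + weight * yb)
        for (xa, ya), (xb, yb) in zip(face_a, face_b)
    ]


# Toy example with 3 landmarks (the study used 30 calibration points).
subject = [(100.0, 120.0), (200.0, 120.0), (150.0, 220.0)]
child = [(110.0, 140.0), (190.0, 140.0), (150.0, 200.0)]
print(blend_landmarks(subject, child))  # [(105.0, 130.0), (195.0, 130.0), (150.0, 210.0)]
```

Linear interpolation is the simplest way to define an intermediate shape; commercial tools may use different warping schemes.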

Figure 1. Example of a subject’s or others’ face morphed with a child and adult face (a) and experimental procedure (b).

The task was to judge whether the presented face resembled the subject him- or herself.

2.3 Procedure

Subjects were seated in a quiet room with their eyes approximately 60 cm from a 14-inch screen. All stimuli were displayed at the center of the screen using E-Prime 2.0. Each trial began with a central fixation presented for 500 ms to engage the participant's attention, followed by a blank screen for a random duration of 300 to 500 ms. The morphed face then appeared for a maximum of 3000 ms and disappeared as soon as the subject pressed a key. The inter-trial interval was 250 ms. Participants made self-resemblance judgments on the four types of faces by pressing a key with the left or right hand (“A” if the face resembled him- or herself, “L” if not); the response keys were counterbalanced. For each subject, each of the four pictures was presented 50 times (50 trials per condition), so the four stimulus types were equiprobable (25% each). The whole task lasted approximately 20 minutes.
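The trial structure described above can be sketched as a trial-list generator. This is a minimal illustration, not the authors' E-Prime script: the fully randomized order, the uniform 300–500 ms jitter, and the odd/even participant-ID rule for counterbalancing the response keys are all assumptions.

```python
import random
from dataclasses import dataclass
from typing import Dict, List

CONDITIONS = ["self-child", "self-adult", "other-child", "other-adult"]
REPS_PER_CONDITION = 50  # 4 x 50 = 200 trials, 25% per condition


@dataclass
class Trial:
    condition: str
    fixation_ms: int   # central fixation
    blank_ms: int      # jittered blank screen, 300-500 ms
    max_face_ms: int   # face stays up until a key press or 3000 ms
    iti_ms: int        # inter-trial interval


def build_trials(seed: int) -> List[Trial]:
    """Return a shuffled list with 50 trials of each condition (assumed full randomization)."""
    rng = random.Random(seed)
    conditions = CONDITIONS * REPS_PER_CONDITION
    rng.shuffle(conditions)
    return [Trial(c, 500, rng.randint(300, 500), 3000, 250) for c in conditions]


def response_keys(participant_id: int) -> Dict[str, str]:
    """Hypothetical counterbalancing rule: swap the key mapping for odd participant IDs."""
    if participant_id % 2 == 0:
        return {"resembles": "A", "does_not_resemble": "L"}
    return {"resembles": "L", "does_not_resemble": "A"}


trials = build_trials(seed=1)
print(len(trials), sum(t.condition == "self-child" for t in trials))  # prints: 200 50
```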

2.4 Electroencephalogram recording and data analysis

EEG was recorded with a 64-channel BrainAmp MR amplifier, with the left mastoid as the online reference. All electrode impedances were kept below 10 kΩ, and the EEG signal was recorded with a bandpass of 0.01–100 Hz and sampled at 500 Hz per channel. Offline, all channels were re-referenced to the average of the left and right mastoids and low-pass filtered at 30 Hz. EEG time-locked to the events of interest was epoched from 200 ms before to 800 ms after stimulus onset. Trials with EOG artifacts were excluded from averaging, and a ±80 μV threshold was used to remove any remaining artifacts.
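The offline re-referencing and amplitude-threshold steps can be sketched in plain Python. Assumptions: the right-mastoid channel is labelled `M2` (a hypothetical name), a baseline correction over the 200 ms pre-stimulus interval is applied before thresholding (the text does not state a baseline), and filtering and EOG rejection are omitted. The key identity is that, with the left mastoid as the online reference, subtracting half of the recorded right-mastoid signal from every channel yields the average-mastoid reference.

```python
from typing import Dict, List

FS_HZ = 500            # sampling rate reported in the text
EPOCH_START_MS = -200  # epochs run from -200 ms to +800 ms
THRESHOLD_UV = 80.0    # +/- 80 microvolt rejection threshold

Epoch = Dict[str, List[float]]  # channel name -> samples in microvolts


def rereference_to_mastoid_average(epoch: Epoch, right_mastoid: str = "M2") -> Epoch:
    """Re-reference data recorded against the left mastoid (M1) to the mastoid average.

    Online, each channel holds V_ch - V_M1 and the right-mastoid channel holds
    V_M2 - V_M1, so V_ch - (V_M1 + V_M2) / 2 equals rec_ch - rec_M2 / 2.
    """
    m2 = epoch[right_mastoid]
    return {ch: [x - r / 2 for x, r in zip(samples, m2)] for ch, samples in epoch.items()}


def baseline_correct(epoch: Epoch) -> Epoch:
    """Subtract each channel's mean over the pre-stimulus interval (assumed baseline)."""
    n_pre = int(-EPOCH_START_MS * FS_HZ / 1000)  # 100 samples at 500 Hz
    out = {}
    for ch, samples in epoch.items():
        base = sum(samples[:n_pre]) / n_pre
        out[ch] = [x - base for x in samples]
    return out


def is_artifact(epoch: Epoch, threshold_uv: float = THRESHOLD_UV) -> bool:
    """True if any sample on any channel exceeds +/- threshold_uv."""
    return any(abs(x) > threshold_uv for samples in epoch.values() for x in samples)


# Usage on a toy two-channel epoch of 500 samples (1000 ms at 500 Hz).
raw = {"Cz": [10.0] * 500, "M2": [4.0] * 500}
clean = baseline_correct(rereference_to_mastoid_average(raw))
keep_trial = not is_artifact(clean)
```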

Electrodes for the statistical analysis of each ERP component were selected on the basis of previous studies and the topographical distribution of the grand-averaged ERPs. Specifically, PO7 and PO8 were analyzed for the N170 (peak amplitude, 140–190 ms); Cz, C3, C4, Fz, F3, F4, FCz, FC3, and FC4 for the N2 (peak amplitude, 200–240 ms); and Cz, C3, C4, Fz, F3, F4, Pz, P3, and P4 for the LPC (mean amplitude, 400–600 ms). A four-way mixed ANOVA on the amplitude and latency of each ERP component was conducted with Sex (male vs. female) as the between-subject variable and Age (adult vs. child), Morph (self vs. other), and Electrode site as within-subject variables. Given the possible male advantage in processing self-resembling child faces, we also performed a three-way (Sex × Morph × Electrode) mixed ANOVA on child faces only.
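The two measurement rules above (peak amplitude within a window for the N170 and N2, mean amplitude over 400–600 ms for the LPC) can be sketched as follows for a single-channel average sampled at 500 Hz with the epoch starting at −200 ms. Taking the most negative point in the window as the peak is an assumption about polarity, and the helper names are hypothetical.

```python
from typing import List, Tuple

FS_HZ = 500
EPOCH_START_MS = -200
SAMPLE_MS = 1000 / FS_HZ  # 2 ms per sample


def ms_to_index(t_ms: float) -> int:
    """Index of the sample at t_ms in an epoch that starts at EPOCH_START_MS."""
    return round((t_ms - EPOCH_START_MS) / SAMPLE_MS)


def segment(wave: List[float], start_ms: float, end_ms: float) -> List[float]:
    """Samples from start_ms to end_ms, both inclusive."""
    return wave[ms_to_index(start_ms): ms_to_index(end_ms) + 1]


def negative_peak(wave: List[float], start_ms: float, end_ms: float) -> Tuple[float, float]:
    """Most negative amplitude in the window and its latency in ms."""
    seg = segment(wave, start_ms, end_ms)
    i = min(range(len(seg)), key=seg.__getitem__)
    return seg[i], start_ms + i * SAMPLE_MS


def mean_amplitude(wave: List[float], start_ms: float, end_ms: float) -> float:
    """Average amplitude over the window."""
    seg = segment(wave, start_ms, end_ms)
    return sum(seg) / len(seg)


# Toy waveform: flat except for a -3 uV dip at 160 ms.
wave = [0.0] * 500
wave[ms_to_index(160)] = -3.0
print(negative_peak(wave, 140, 190))  # (-3.0, 160.0)
```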

2.5 ERP source analysis

ERP source analysis was conducted on the self vs. other difference waves using the dipole modeling software BESA (Brain Electrical Source Analysis, v5.3.7, MEGIS Software GmbH, Munich, Bavaria, Germany) with a four-shell ellipsoidal head model. Principal component analysis (PCA) was used to estimate the number of dipoles needed to explain each difference wave. Once the number of dipoles had been determined, the software automatically fitted the dipoles' locations (in Talairach coordinates) and orientations. To focus on the male advantage in detecting child facial resemblance, we performed source analysis only on the N2 and LPC components that showed significant self vs. other differences in the grand-average waveforms (Figures 3 and 4).
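BESA carries out this PCA internally. As a generic, hedged illustration of how the number of components might be chosen, the numpy sketch below computes the variance explained by each principal component of a channels × samples difference wave and returns the smallest number that reaches a target. The 99% target and the per-channel centering are assumptions, not settings reported in the paper.

```python
import numpy as np


def explained_variance_ratio(diff_wave: np.ndarray) -> np.ndarray:
    """Fraction of variance carried by each principal component.

    diff_wave has shape (n_channels, n_samples); each channel is centered over
    time before the singular value decomposition.
    """
    centered = diff_wave - diff_wave.mean(axis=1, keepdims=True)
    singular_values = np.linalg.svd(centered, compute_uv=False)
    power = singular_values ** 2
    return power / power.sum()


def n_components_for_variance(diff_wave: np.ndarray, target: float = 0.99) -> int:
    """Smallest number of components whose cumulative explained variance >= target."""
    cumulative = np.cumsum(explained_variance_ratio(diff_wave))
    k = int(np.searchsorted(cumulative, target)) + 1
    return min(k, len(cumulative))


# Synthetic 64-channel difference wave built from two sources (two topographies
# times two orthogonal time courses), so two components should suffice.
rng = np.random.default_rng(0)
t = np.arange(200) / 200
data = (np.outer(rng.normal(size=64), np.sin(2 * np.pi * 3 * t))
        + np.outer(rng.normal(size=64), np.cos(2 * np.pi * 5 * t)))
print(n_components_for_variance(data))
```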

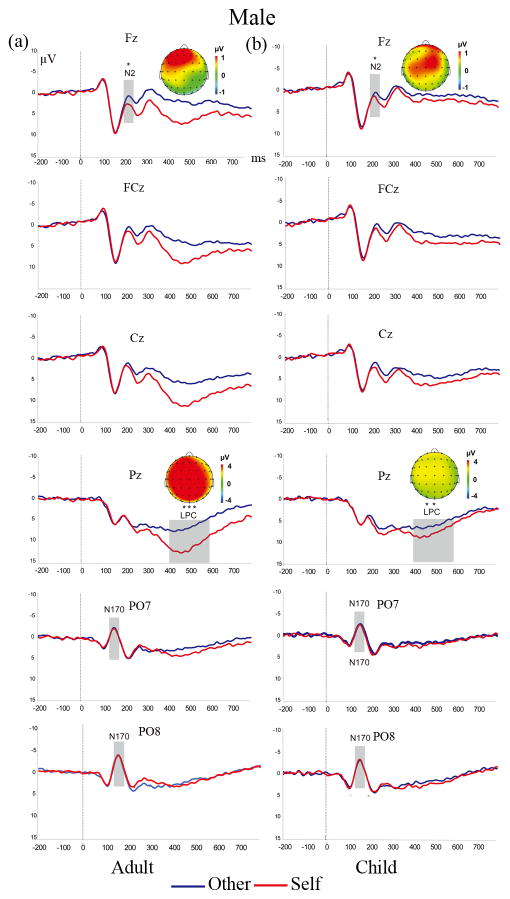

Figure 3. ERP waves and scalp topographies show divergent difference waves (Self-Other) for adult (a) and child (b) faces in males.

ERP components are marked in gray. Self-morphed faces elicited more positive-going N2 and LPC amplitudes than other-morphed faces for both adult- and child-morphed faces. *p < 0.05, **p < 0.01, ***p < 0.001.

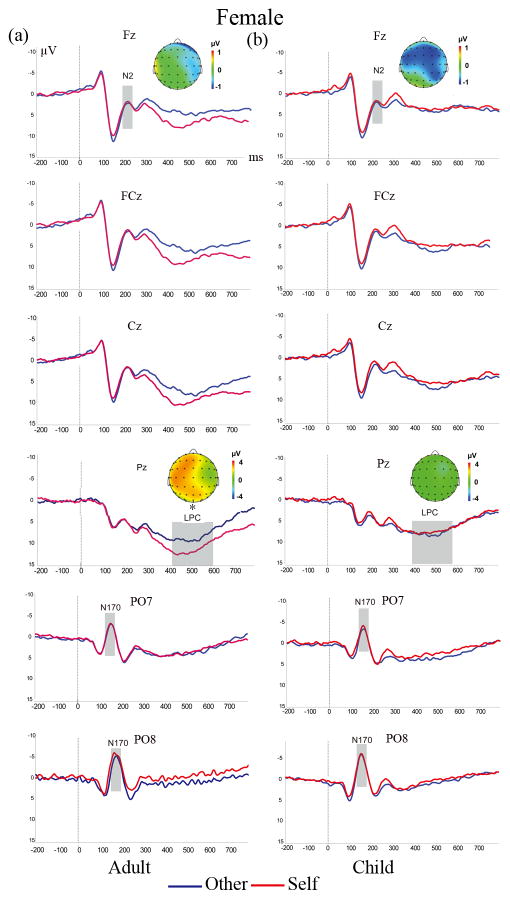

Figure 4. ERP waves and scalp topographies show divergent difference waves (Self-Other) for adult (a) and child (b) faces in females.

ERP components are marked in gray. Self-morphed faces elicited larger LPC amplitudes than other-morphed faces only for adult-morphed faces. *p < 0.05, **p < 0.01, ***p < 0.001.

3. Results

Two participants were excluded from the final analysis due to excessive artifacts. The following results are therefore based on the remaining 39 subjects (19 males and 20 females).

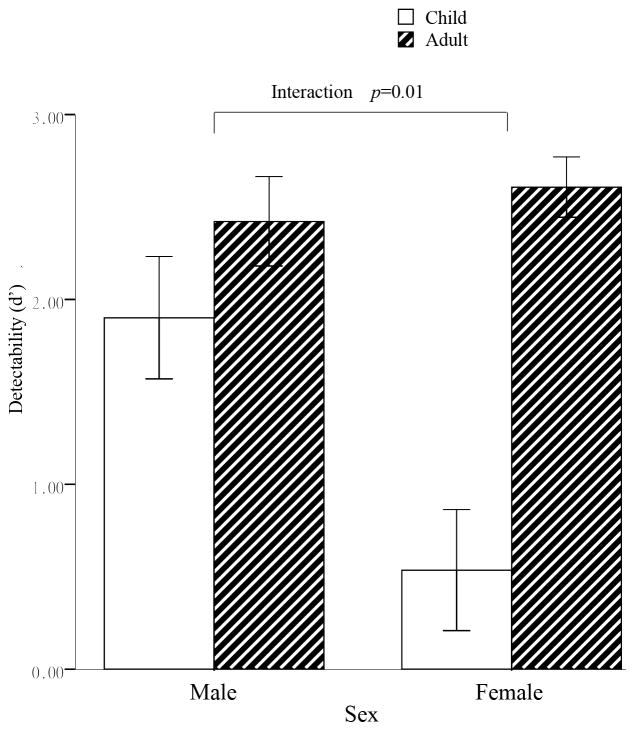

3.1 Behavioral results

Following previous studies (Dal Martello & Maloney, 2006; DeBruine et al., 2009), we calculated signal detection sensitivity (d′) for self-resemblance judgments of adult and child faces separately (Figure 2). The d′ values were submitted to a mixed ANOVA with Age (adult vs. child) as a within-subject factor and Sex (male vs. female) as a between-subject factor. The results showed significant main effects of Age (F(1, 37) = 20.43, p < 0.001, ηp² = 0.356) and Sex (F(1, 37) = 5.13, p < 0.05, ηp² = 0.12): subjects showed higher detectability for adult faces (M = 2.52, SD = 0.89) than for child faces (M = 1.22, SD = 1.59), and males showed higher detectability (M = 2.16, SD = 0.89) than females (M = 1.57, SD = 0.73). Importantly, there was also a significant Age × Sex interaction (F(1, 37) = 7.30, p = 0.01, ηp² = 0.17): males showed significantly greater d′ values for child faces (M = 1.90, SD = 1.44) than females (M = 0.54, SD = 1.46), p < 0.01. In addition, only females showed higher d′ for adult faces (M = 2.61, SD = 0.73) than for child faces (M = 0.54, SD = 1.46), p < 0.001. These results indicate that males have an advantage over females in detecting child facial resemblance.
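For readers unfamiliar with the measure, d′ is the difference between the z-transformed hit rate and false-alarm rate. In this task a hit would presumably be a "resembles me" response to a self-morph and a false alarm a "resembles me" response to an other-morph of the same age. The sketch below assumes a log-linear correction (adding 0.5 to each count and 1 to each total) so that rates of 0 or 1 stay finite; the paper does not state which correction, if any, was used, and the counts in the usage line are hypothetical.

```python
from statistics import NormalDist


def d_prime(hits: int, misses: int, false_alarms: int, correct_rejections: int) -> float:
    """d' = z(hit rate) - z(false-alarm rate), with an assumed log-linear correction."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for one subject: 45/50 hits on self-child trials,
# 10/50 false alarms on other-child trials.
print(round(d_prime(45, 5, 10, 40), 2))
```

Chance performance (equal hit and false-alarm rates) gives d′ = 0, and larger values indicate better discrimination of self-morphs from other-morphs.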

Figure 2. Signal detection analysis results for adult and child faces as a function of sex.

The left panel depicts d′ for males; the right panel depicts d′ for females. Error bars represent one standard error. Males showed significantly higher d′ for child faces than females did.

3.2 ERP results

The ERPs and topographic maps for the four face types in males and females are shown in Figures 3 and 4.

3.2.1 N170

The ANOVA on N170 amplitude revealed no effect of Morph, only a significant main effect of Age (F(1, 37) = 5.62, p < 0.05, ηp² = 0.13): child faces (M = −1.34 μV, SE = 0.46) evoked larger N170 amplitudes than adult faces (M = −1.01 μV, SE = 0.49), p = 0.023. For N170 latency, a significant Age × Electrode interaction (F(1, 37) = 5.42, p < 0.05, ηp² = 0.16) indicated longer latency for child faces (M = 158.71 ms, SE = 1.49) than for adult faces (M = 155.69 ms, SE = 1.75) at PO7, p = 0.003.
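Partial eta squared can be recovered from an F statistic and its degrees of freedom via the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error), which offers a quick consistency check on the reported effect sizes. For example, F(1, 37) = 5.62 gives about 0.13, matching the value reported for the N170 Age effect.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic: F*df1 / (F*df1 + df2)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


print(round(partial_eta_squared(5.62, 1, 37), 2))  # N170 Age effect -> 0.13
```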

3.2.2 N2

A significant Morph × Sex interaction on N2 amplitude (F(1, 37) = 7.01, p < 0.05, ηp² = 0.16) indicated that only males showed a larger N2 amplitude for other faces (M = 3.39 μV, SE = 0.78) than for self faces (M = 2.23 μV, SE = 0.92), p = 0.008. In addition, an Electrode × Morph × Age interaction showed a larger N2 amplitude for self-child faces than for self-adult faces at Fz, F3, and FC3, ps < 0.03.

This male advantage in N2 amplitude was confirmed by a Morph × Sex interaction (F(1, 37) = 7.18, p < 0.05, ηp² = 0.16) in the ANOVA on child faces (see Figure 3b): in males, other-child faces elicited a larger N2 amplitude (M = 2.12 μV, SE = 0.94) than self-child faces (M = 3.22 μV, SE = 0.83), p = 0.018, whereas in females they did not. This interaction was not significant for adult faces (F(1, 37) = 2.53, p = 0.12, ηp² = 0.06). These results confirm that the male advantage in facial resemblance detection is found mainly for child faces.

For N2 latency, an Electrode × Morph × Sex interaction (F(1, 37) = 2.48, p < 0.05, ηp² = 0.06) showed longer N2 latencies for other faces than for self faces, particularly in males (at Fz, FCz, F3, F4, FC4, and C3 for males, but only at C4 for females), ps < 0.05.

Topographic maps of the difference wave (Self minus Other) in the 200–240 ms window showed that, in males, the N2 differences for both adult and child faces were distributed mainly over fronto-central regions (Figure 3); no such differences were found in females (Figure 4).

3.2.3 LPC

Consistent with the male advantage found in the N2, the ANOVA on LPC amplitude also showed a significant Morph × Sex interaction (F(1, 37) = 6.93, p < 0.05, ηp² = 0.16): only males showed a larger LPC amplitude for self faces (M = 7.01 μV, SE = 0.57) than for other faces (M = 4.55 μV, SE = 0.43), p < 0.05. We also found a significant Morph × Age interaction in LPC amplitude (F(1, 37) = 18.72, p < 0.001, ηp² = 0.34): adult faces elicited a significant self vs. other difference in the LPC (p < 0.001), whereas child faces did not (p = 0.064). In the ANOVA on LPCs elicited by child faces only, a significant Morph × Sex interaction was also observed (F(1, 37) = 6.85, p < 0.05, ηp² = 0.16): self-child faces (M = 5.52 μV, SE = 0.58) elicited a larger LPC than other-child faces (M = 4.21 μV, SE = 0.46) in males, whereas this pattern was absent in females, p = 0.616 (see Figure 4b).

Again, this Morph × Sex interaction was not replicated for adult faces (see Figures 3a and 4a). In the ANOVA on LPCs for adult faces only, a main effect of Morph (F(1, 37) = 30.98, p < 0.001, ηp² = 0.46) indicated a larger LPC for self-adult faces than for other-adult faces regardless of sex. No self vs. other effect was found in the analysis of LPC latency.

Topographic maps of the difference wave (Self minus Other) in the 400–600 ms window showed that, in males, the LPC differences for both adult and child faces were widely distributed over frontal and parietal regions (Figure 3); in females, such differences were found for adult faces but not for child faces (Figure 4).

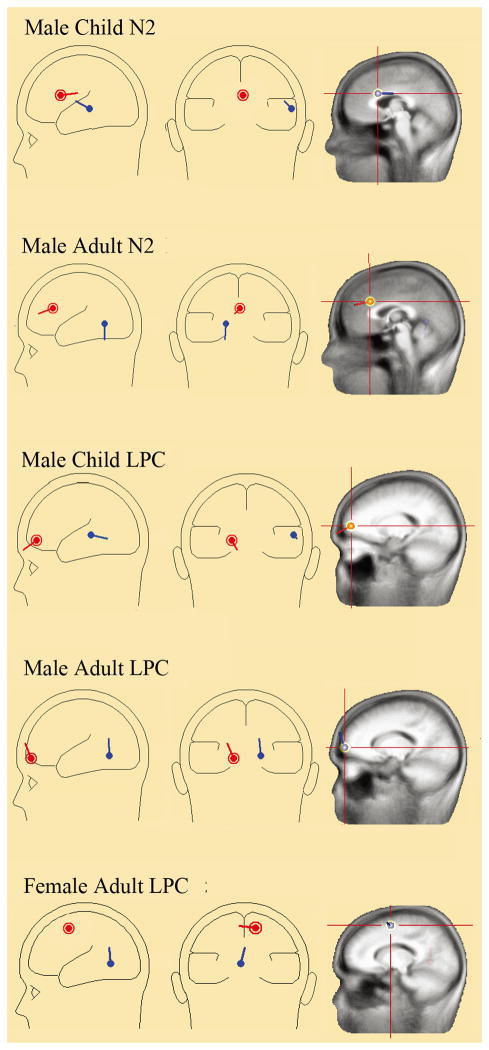

3.3 ERP Source analysis results

For males, PCA decomposition of the self-other N2 difference for adult faces indicated two components that explained 99.1% of the variance in the data (Figure 5). The two dipoles were located approximately in the anterior cingulate cortex (x = 2, y = 25, z = 29, BA32, red dipole) and the lingual gyrus (x = −16, y = −57, z = −1, BA19, blue dipole), with a residual variance (R.V.) of 13.5%. Likewise, the model of the self-other LPC difference for adult faces identified two dipoles (R.V. = 8.61%), located in the left medial frontal gyrus (x = −15, y = 64, z = 4, BA10, red dipole) and the lingual gyrus (x = 18, y = −57, z = −4, BA19, blue dipole).
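Under the commonly used definition (assumed here to match BESA's), the residual variance (R.V.) of a dipole model is the percentage of the difference wave's power over the fitting window that the model leaves unexplained. A minimal sketch, with hypothetical names and toy data:

```python
from typing import List

Matrix = List[List[float]]  # [channel][sample]


def residual_variance(measured: Matrix, modeled: Matrix) -> float:
    """Residual variance in percent: 100 * sum((d - m)^2) / sum(d^2)."""
    if len(measured) != len(modeled):
        raise ValueError("channel count mismatch")
    residual = 0.0
    power = 0.0
    for d_row, m_row in zip(measured, modeled):
        if len(d_row) != len(m_row):
            raise ValueError("sample count mismatch")
        for d, m in zip(d_row, m_row):
            residual += (d - m) ** 2  # squared misfit of the model
            power += d ** 2           # squared amplitude of the data
    return 100.0 * residual / power


data = [[1.0, 2.0], [3.0, 4.0]]
model = [[1.0, 2.0], [3.0, 3.0]]
print(round(residual_variance(data, model), 2))  # 3.33
```

Under this definition, an R.V. of 13.5% means the two-dipole model leaves 13.5% of the difference wave's power unexplained.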

Figure 5. ERP source analysis results for the self vs. others difference waves in males and females.

For males, both adult and child faces elicited N2 and LPC differences from the anterior cingulate cortex and medial frontal gyrus, respectively. For females, only adult faces elicited LPC differences from the medial frontal gyrus.

The dipole model of the self-other N2 difference for child faces also yielded two dipoles (R.V. = 15.03%), located in the anterior cingulate cortex (x = 4, y = 15, z = 26, BA24, red dipole) and the superior temporal gyrus (x = 63, y = −28, z = −4, BA22, blue dipole). Consistent with the adult faces, the dipole model of the self-other LPC difference for child faces also identified two dipoles (R.V. = 23.9%), one in the left medial frontal gyrus (x = −8, y = 56, z = 7, BA10, red dipole) and the other in the right superior temporal gyrus (x = 58, y = −29, z = 6, BA22, blue dipole).

For females, two components explained 98.8% of the variance in the self-other LPC difference wave, and a two-dipole model (R.V. = 13.8%) was fitted to it. The two dipoles were located approximately in the medial frontal gyrus (x = 14, y = 0, z = 55, BA6, red dipole) and the lingual gyrus (x = −4, y = −58, z = 0, BA19, blue dipole).

4. Discussion

To the best of our knowledge, this is the first study to combine behavioral and ERP measures to examine the temporal dynamics of self-resembling face detection and its interaction with sex and age. Our results showed that males have an advantage over females in detecting child facial resemblance, even in an explicit resemblance judgment task. This advantage was found at both early and late processing stages in the brain, reflected in different ERP components and brain regions.

Behaviorally, we found a significant interaction between sex and age in self-resembling face detection. A consistent finding in facial resemblance detection is the own-age bias: people commonly perform better on faces of their own age group (Anastasi & Rhodes, 2005; Harrison & Hole, 2009; Hills & Lewis, 2011; G. Kaminski et al., 2009). In our results, however, only females showed higher detectability (d′) for self-resembling adult faces than for child faces. In contrast, males detected resemblance in child faces as well as in adult faces, contrary to the own-age bias. In addition, males showed significantly higher detectability (d′) for self-resembling child faces than females (p < 0.01). Together, these results directly confirm the male advantage in detecting self-resembling child faces. Although previous studies have shown that people perform better than chance in face-matching tasks (Gwenael Kaminski, Ravary, Graff, & Gentaz, 2010; Oda, Matsumoto-Oda, & Kurashima, 2002), most of these studies used photographs of other people's families (Maloney & Dal Martello, 2006; Nesse, Silverman, & Bortz, 1990). To our knowledge, no previous study has found significant sex differences in self-resembling child face detection using an explicit resemblance judgment task. Our results thus provide evidence for the male advantage in detecting self-resembling child faces and further support parental investment theory.

In the ERPs, males showed more positive-going N2 and LPC components for both self-resembling adult and child faces than for other-morphed faces, consistent with previous studies of self-faces (Purmann, Badde, Luna-Rodriguez, & Wendt, 2011) and self-hands (Su et al., 2010) showing more positive-going potentials from 220 to 500 ms for one's own face and hands. Interestingly, the self vs. other N2 differences were found only in males, not in females, even for adult faces. We therefore propose that the N2 may be a male-specific ERP component related to kin detection. The reduced N2 amplitude for self-resembling faces is also in line with previous studies that observed a decreased N2 for famous (Nessler, Mecklinger, & Penney, 2005), self- (Sui, Liu, & Han, 2009), or beloved faces (Langeslag, Jansma, Franken, & Van Strien, 2007) relative to strangers' faces. The scalp topography indicated that the N2 differences were concentrated over fronto-central sites (Figure 3), and the source analysis further showed that the self vs. other N2 differences originated from the ACC for both adult and child faces. Previous studies have shown that the frontal N2 originating from the ACC is associated with attention regulation to novel stimuli (Daffner et al., 1998; Stam et al., 1993) or conflict monitoring (Donkers & van Boxtel, 2004; Yeung, Botvinick, & Cohen, 2004). We thus propose that the N2 component might reflect ACC conflict monitoring during kin detection (i.e., comparison of the presented face with the self-face). That is, because of paternity uncertainty, males may engage in more conflict monitoring when a face does not resemble them. From this perspective, self-resembling faces should consistently elicit a smaller N2 than other faces because of the smaller conflict between self-resembling faces and the self-face. 
In contrast, other faces should consistently elicit a larger N2 because of the greater conflict between self-genotype matching and inhibition of the “Yes” response (Grasso et al., 2009).

In males, we also observed a larger LPC for both self-resembling adult and child faces than for other faces. As mentioned in the Introduction, a larger LPC has been associated with greater familiarity (Wilckens, Tremel, Wolk, & Wheeler, 2011; Wolk et al., 2006) and deeper self-referential processing (Su et al., 2010). In contrast to the early conflict monitoring reflected in the N2, the LPC differences in our results may indicate the involvement of later familiarity processing and self-referent phenotype matching (Platek et al., 2005). The source analysis localized the LPC differences to the medial frontal gyrus, a region that plays a key role in processing self-referential stimuli (Fossati et al., 2003; Kircher et al., 2001; Platek, Keenan, et al., 2004; Uddin, Kaplan, Molnar-Szakacs, Zaidel, & Iacoboni, 2005; Zhang et al., 2006; Zhu, Zhang, Fan, & Han, 2007). Thus, males showed greater familiarity or more self-referential phenotype matching for both self-resembling adult and child faces than for other faces.

Notably, females also showed a larger LPC for self-resembling adult faces, although this effect was absent for child faces. Thus, females also showed greater familiarity or self-referential processing for self-resembling adult faces but not for child faces. This result indicates that the LPC effect parallels the own-age bias in the behavioral results: both males and females discriminated self vs. other adult faces better than child faces, through greater familiarity and self-referential processing. This finding may also explain why some previous studies using adult faces did not find sex differences in self-resembling face detection (DeBruine, 2002, 2005; DeBruine et al., 2009): both males and females show relatively strong self vs. other differences for adult faces, which may mask a sex effect.

The source analysis results also differed between adult and child faces. For adult faces, the dipole models (N2 and LPC in males, LPC in females) each included a dipole in the lingual gyrus, whereas for child faces this dipole was located in the superior temporal gyrus. It has been suggested that the lingual gyrus is a visual area activated in visual and spatial attention tasks (Mangun, Buonocore, Girelli, & Jha, 1998; Paradis et al., 2000), which suggests that the processing of adult faces engages visual areas at both early and late stages. In contrast, the source analysis of the self vs. other N2 and LPC differences for child faces indicated a common dipole in the superior temporal gyrus (STG). Because previous studies have reported STG activation in the self-other contrast (Uddin et al., 2005) and in self-face processing (Kircher et al., 2001; Platek, Keenan, et al., 2004; Platek et al., 2006), the STG dipole for child faces may indicate more self-related processing in male subjects.

Finally, the N170, a component widely accepted as an index of face processing, showed longer latency and larger amplitude for child faces than for adult faces. The faster and smaller N170 for adult faces may also reflect the own-age bias: own-age faces are processed faster and require less configural processing than child faces (Halit, de Haan, Schyns, & Johnson, 2006; Holmes, Winston, & Eimer, 2005; Wiese, Schweinberger, & Hansen, 2008). Given the absence of self vs. other differences in the N170, kin detection likely begins at around 200 ms (i.e., the N2) rather than during early configural face processing (N170). Additionally, we found no familiarity effect on the N170, consistent with previous studies showing that the N170 is insensitive to familiarity (Bentin & Deouell, 2000; Cauquil, Edmonds, & Taylor, 2000; Eimer, 2000).

Several limitations of the present study should be acknowledged. First, we adapted the methods developed by Platek et al. (2002, 2003, 2005), which may introduce some potential problems (DeBruine, 2004b). For example, we only presented morphs made with adults of the same sex, and the morphed child faces may not accurately represent real children's faces. Future ERP studies using other paradigms, such as those of DeBruine and colleagues (2004b, 2005b, 2008), would therefore be promising for exploring the time course of facial resemblance detection in more detail. Second, the number of trials per condition may be insufficient for further trial-by-trial analyses or for analyzing “yes” and “no” responses separately.

In summary, we confirmed in an explicit self-resemblance judgment task that males have evolved higher sensitivity to self-resembling child faces than females. In addition, the behavioral male advantage was reflected in more positive brain potentials (N2 and LPC) for self-resembling child faces than for other-morphed child faces, originating from the ACC and MPFC, respectively. These ERP effects suggest that the N2 is associated with early conflict monitoring, whereas the LPC mainly reflects later facial familiarity and self-referential processing. In conclusion, our results provide direct evidence that males have evolved higher sensitivity to facial resemblance cues, supporting the parental uncertainty hypothesis.

Acknowledgments

This work was supported by the National Basic Research (973) Program (2011CB711000), the National Natural Science Foundation of China (NSFC) (91132704, 30930031), the National Key Technologies R&D Program (2009BAI77B01), the Global Research Initiative Program, National Institutes of Health, USA (1R01TW007897) to YJL, the NSFC (31170971) to CL, and the Fundamental Research Funds for the Central Universities (2012YBXS01) to Haiyan Wu.

References

- Alvergne A, Faurie C, Raymond M. Differential facial resemblance of young children to their parents: who do children look like more? Evolution and Human Behavior. 2007;28(2):135–144.

- Alvergne A, Faurie C, Raymond M. Father-offspring resemblance predicts paternal investment in humans. Animal Behaviour. 2009;78(1):61–69.

- Alvergne A, Faurie C, Raymond M. Are parents’ perceptions of offspring facial resemblance consistent with actual resemblance? Effects on parental investment. Evolution and Human Behavior. 2010;31(1):7–15.

- Anastasi JS, Rhodes MG. An own-age bias in face recognition for children and older adults. Psychonomic Bulletin & Review. 2005;12(6):1043–1047. doi: 10.3758/bf03206441.

- Bartels A, Zeki S. The neural correlates of maternal and romantic love. Neuroimage. 2004;21(3):1155–1166. doi: 10.1016/j.neuroimage.2003.11.003.

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17(3):613–620. doi: 10.1016/s0926-6410(03)00174-5.

- Bentin S, Deouell LY. Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cognitive Neuropsychology. 2000;17(1–3):35–54. doi: 10.1080/026432900380472.

- Bredart S, French RM. Do babies resemble their fathers more than their mothers? A failure to replicate Christenfeld and Hill (1995). Evolution and Human Behavior. 1999;20(2):129–135.

- Bressan P. Why babies look like their daddies: paternity uncertainty and the evolution of self-deception in evaluating family resemblance. Acta ethologica. 2002;4(2):113–118.

- Bressan P, Bertamini M, Nalli A, Zanutto A. Men do not have a stronger preference than women for self-resemblant child faces. Archives of Sexual Behavior. 2009;38(5):657–664. doi: 10.1007/s10508-008-9350-0.

- Bressan P, Dal Martello MF. Talis pater, talis filius: Perceived resemblance and the belief in genetic relatedness. Psychological Science. 2002;13(3):213–218. doi: 10.1111/1467-9280.00440.

- Bressan P, Grassi M. Parental resemblance in 1-year-olds and the Gaussian curve. Evolution and Human Behavior. 2004;25(3):133–141.

- Burch RL, Gallup GG. Perceptions of paternal resemblance predict family violence. Evolution and Human Behavior. 2000;21(6):429–435. doi: 10.1016/s1090-5138(00)00056-8.

- Cauquil AS, Edmonds GE, Taylor MJ. Is the face-sensitive N170 the only ERP not affected by selective attention? Neuroreport. 2000;11(10):2167–2171. doi: 10.1097/00001756-200007140-00021.

- Daffner KR, Mesulam MM, Scinto LFM, Cohen LG, Kennedy BP, West WC, et al. Regulation of attention to novel stimuli by frontal lobes: an event-related potential study. Neuroreport. 1998;9(5):787–791. doi: 10.1097/00001756-199803300-00004.

- Dal Martello MF, Maloney LT. Where are kin recognition signals in the human face? Journal of Vision. 2006;6(12):1356–1366. doi: 10.1167/6.12.2.

- Daly M, Wilson MI. Whom are newborn babies said to resemble? Ethology and Sociobiology. 1982;3(2):69–78.

- DeBruine LM. Facial resemblance enhances trust. Proceedings of the Royal Society of London Series B-Biological Sciences. 2002;269(1498):1307–1312. doi: 10.1098/rspb.2002.2034.

- DeBruine LM. Facial resemblance increases the attractiveness of same-sex faces more than other-sex faces. Proceedings of the Royal Society of London Series B-Biological Sciences. 2004a;271(1552):2085–2090. doi: 10.1098/rspb.2004.2824.

- DeBruine LM. Resemblance to self increases the appeal of child faces to both men and women. Evolution and Human Behavior. 2004b;25(3):142–154.

- DeBruine LM. Trustworthy but not lust-worthy: context-specific effects of facial resemblance. Proceedings of the Royal Society B-Biological Sciences. 2005;272(1566):919–922. doi: 10.1098/rspb.2004.3003.

- DeBruine LM, Jones BC, Little AC, Perrett DI. Social perception of facial resemblance in humans. Archives of Sexual Behavior. 2008;37(1):64–77. doi: 10.1007/s10508-007-9266-0.

- DeBruine LM, Smith FG, Jones BC, Roberts SC, Petrie M, Spector TD. Kin recognition signals in adult faces. Vision Research. 2009;49(1):38–43. doi: 10.1016/j.visres.2008.09.025.

- Donkers FCL, van Boxtel GJM. The N2 in go/no-go tasks reflects conflict monitoring not response inhibition. Brain and Cognition. 2004;56(2):165–176. doi: 10.1016/j.bandc.2004.04.005.

- Eimer M. The face-specific N170 component reflects late stages in the structural encoding of faces. Neuroreport. 2000;11(10):2319–2324. doi: 10.1097/00001756-200007140-00050.

- Eimer M, Holmes A. An ERP study on the time course of emotional face processing. Neuroreport. 2002;13(4):427–431. doi: 10.1097/00001756-200203250-00013.

- Fossati P, Hevenor SJ, Graham SJ, Grady C, Keightley ML, Craik F, et al. In search of the emotional self: An fMRI study using positive and negative emotional words. American Journal of Psychiatry. 2003;160(11):1938–1945. doi: 10.1176/appi.ajp.160.11.1938.

- Grasso DJ, Moser JS, Dozier M, Simons R. ERP correlates of attention allocation in mothers processing faces of their children. Biological Psychology. 2009;81(2):95–102. doi: 10.1016/j.biopsycho.2009.03.001.

- Grasso DJ, Simons RF. Perceived parental support predicts enhanced late positive event-related brain potentials to parent faces. Biological Psychology. 2011;86(1):26–30. doi: 10.1016/j.biopsycho.2010.10.002.

- Halit H, de Haan M, Schyns PG, Johnson MH. Is high-spatial frequency information used in the early stages of face detection? Brain Research. 2006;1117:154–161. doi: 10.1016/j.brainres.2006.07.059.

- Hamilton WD. Genetical evolution of social behaviour I. Journal of Theoretical Biology. 1964;7(1):1. doi: 10.1016/0022-5193(64)90038-4.

- Harrison V, Hole GJ. Evidence for a contact-based explanation of the own-age bias in face recognition. Psychonomic Bulletin & Review. 2009;16(2):264–269. doi: 10.3758/PBR.16.2.264.

- Hauber ME, Sherman PW. Self-referent phenotype matching: theoretical considerations and empirical evidence. Trends in Neurosciences. 2001;24(10):609–616. doi: 10.1016/s0166-2236(00)01916-0.

- Henson RN, Goshen-Gottstein Y, Ganel T, Otten LJ, Quayle A, Rugg MD. Electrophysiological and haemodynamic correlates of face perception, recognition and priming. Cerebral Cortex. 2003;13(7):793–805. doi: 10.1093/cercor/13.7.793.

- Hills PJ, Lewis MB. The own-age face recognition bias in children and adults. Quarterly Journal of Experimental Psychology. 2011;64(1):17–23. doi: 10.1080/17470218.2010.537926.

- Holmes A, Winston JS, Eimer M. The role of spatial frequency information for ERP components sensitive to faces and emotional facial expression. Cognitive Brain Research. 2005;25(2):508–520. doi: 10.1016/j.cogbrainres.2005.08.003.

- Hu XQ, Wu HY, Fu GY. Temporal course of executive control when lying about self- and other-referential information: An ERP study. Brain Research. 2011;1369:149–157. doi: 10.1016/j.brainres.2010.10.106.

- Itier RJ, Taylor MJ. N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cerebral Cortex. 2004;14(2):132–142. doi: 10.1093/cercor/bhg111.

- Jemel B, Schuller AM, Cheref-Khan Y, Goffaux V, Crommelinck M, Bruyer R. Stepwise emergence of the face-sensitive N170 event-related potential component. Neuroreport. 2003;14(16):2035–2039. doi: 10.1097/00001756-200311140-00006.

- Kaminski G, Dridi S, Graff C, Gentaz E. Human ability to detect kinship in strangers’ faces: effects of the degree of relatedness. Proceedings of the Royal Society B-Biological Sciences. 2009;276(1670):3193–3200. doi: 10.1098/rspb.2009.0677.

- Kaminski G, Ravary F, Graff C, Gentaz E. Firstborns’ disadvantage in kinship detection. Psychological Science. 2010;21(12):1746–1750. doi: 10.1177/0956797610388045.

- Kircher TTJ, Senior C, Phillips ML, Rabe-Hesketh S, Benson PJ, Bullmore ET, et al. Recognizing one’s own face. Cognition. 2001;78(1):B1–B15. doi: 10.1016/s0010-0277(00)00104-9.

- Langeslag SJE, Jansma BA, Franken IHA, Van Strien JW. Event-related potential responses to love-related facial stimuli. Biological Psychology. 2007;76(1–2):109–115. doi: 10.1016/j.biopsycho.2007.06.007.

- Lewis DMG. The sibling uncertainty hypothesis: Facial resemblance as a sibling recognition cue. Personality and Individual Differences. 2011;51(8):969–974.

- Ma YN, Han SH. Self-face advantage is modulated by social threat - Boss effect on self-face recognition. Journal of Experimental Social Psychology. 2009;45(4):1048–1051.

- Maloney LT, Dal Martello MF. Kin recognition and the perceived facial similarity of children. Journal of Vision. 2006;6(10):1047–1056. doi: 10.1167/6.10.4.

- Mangun GR, Buonocore MH, Girelli M, Jha AP. ERP and fMRI measures of visual spatial selective attention. Human Brain Mapping. 1998;6(5–6):383–389. doi: 10.1002/(SICI)1097-0193(1998)6:5/6<383::AID-HBM10>3.0.CO;2-Z.

- Meijer EH, Smulders FTY, Merckelbach HLGJ, Wolf AG. The P300 is sensitive to concealed face recognition. International Journal of Psychophysiology. 2007:1–7. doi: 10.1016/j.ijpsycho.2007.08.001.

- Melinder A, Gredeback G, Westerlund A, Nelson CA. Brain activation during upright and inverted encoding of own- and other-age faces: ERP evidence for an own-age bias. Developmental Science. 2010;13(4):588–598. doi: 10.1111/j.1467-7687.2009.00910.x.

- Miyakoshi M, Kanayama N, Iidaka T, Ohira H. EEG evidence of face-specific visual self-representation. Neuroimage. 2010;50(4):1666–1675. doi: 10.1016/j.neuroimage.2010.01.030.

- Neff BD, Sherman PW. Decision making and recognition mechanisms. Proceedings of the Royal Society of London Series B: Biological Sciences. 2002;269(1499):1435–1441. doi: 10.1098/rspb.2002.2028.

- Nesse RM, Silverman A, Bortz A. Sex-differences in ability to recognize family resemblance. Ethology and Sociobiology. 1990;11(1):11–21.

- Nessler D, Mecklinger A, Penney TB. Perceptual fluency, semantic familiarity and recognition-related familiarity: an electrophysiological exploration. Cognitive Brain Research. 2005;22(2):265–288. doi: 10.1016/j.cogbrainres.2004.03.023.

- Ninomiya H, Onitsuka T, Chen CH, Sato E, Tashiro N. P300 in response to the subject’s own face. Psychiatry and Clinical Neurosciences. 1998;52(5):519–522. doi: 10.1046/j.1440-1819.1998.00445.x.

- Oda R, Matsumoto-Oda A, Kurashima O. Facial resemblance of Japanese children to their parents. Journal of Ethology. 2002;20(2):81–85.

- Paradis AL, Cornilleau-Peres V, Droulez J, Van de Moortele PF, Lobel E, Berthoz A, et al. Visual perception of motion and 3-D structure from motion: an fMRI study. Cerebral Cortex. 2000;10(8):772–783. doi: 10.1093/cercor/10.8.772.

- Platek SM, Burch RL, Panyavin IS, Wasserman BH, Gallup GG. Reactions to children’s faces - Resemblance affects males more than females. Evolution and Human Behavior. 2002;23(3):159–166.

- Platek SM, Critton SR, Burch RL, Frederick DA, Myers TE, Gallup GG. How much paternal resemblance is enough? Sex differences in hypothetical investment decisions but not in the detection of resemblance. Evolution and Human Behavior. 2003;24(2):81–87.

- Platek SM, Keenan JP, Gallup GG, Mohamed FB. Where am I? The neurological correlates of self and other. Cognitive Brain Research. 2004;19(2):114–122. doi: 10.1016/j.cogbrainres.2003.11.014.

- Platek SM, Keenan JP, Mohamed FB. Sex differences in the neural correlates of child facial resemblance: an event-related fMRI study. Neuroimage. 2005;25(4):1336–1344. doi: 10.1016/j.neuroimage.2004.12.037.

- Platek SM, Kemp SM. Is family special to the brain? An event-related fMRI study of familiar, familial, and self-face recognition. Neuropsychologia. 2009;47(3):849–858. doi: 10.1016/j.neuropsychologia.2008.12.027.

- Platek SM, Krill AL, Kemp SM. The neural basis of facial resemblance. Neuroscience Letters. 2008;437(2):76–81. doi: 10.1016/j.neulet.2008.03.040.

- Platek SM, Krill AL, Wilson B. Implicit trustworthiness ratings of self-resembling faces activate brain centers involved in reward. Neuropsychologia. 2009;47(1):289–293. doi: 10.1016/j.neuropsychologia.2008.07.018.

- Platek SM, Loughead JW, Gur RC, Busch S, Ruparel K, Phend N, et al. Neural substrates for functionally discriminating self-face from personally familiar faces. Human Brain Mapping. 2006;27(2):91–98. doi: 10.1002/hbm.20168.

- Platek SM, Raines DM, Gallup GG, Mohamed FB, Thomson JW, Myers TE, et al. Reactions to children’s faces: Males are more affected by resemblance than females are, and so are their brains. Evolution and Human Behavior. 2004;25(6):394–405.

- Purmann S, Badde S, Luna-Rodriguez A, Wendt M. Adaptation to frequent conflict in the Eriksen flanker task: An ERP study. Journal of Psychophysiology. 2011;25(2):50–59.

- Rehnman J, Herlitz A. Higher face recognition ability in girls: Magnified by own-sex own-ethnicity bias. Memory. 2006;14(3):289–296. doi: 10.1080/09658210500233581.

- Rosenfeld JP, Shue E, Singer E. Single versus multiple probe blocks of P300-based concealed information tests for self-referring versus incidentally obtained information. Biological Psychology. 2007;74(3):396–404. doi: 10.1016/j.biopsycho.2006.10.002.

- Rossion B, Gauthier I, Tarr MJ, Despland P, Bruyer R, Linotte S, et al. The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. Neuroreport. 2000;11(1):69–74. doi: 10.1097/00001756-200001170-00014.

- Stam CJ, Visser SL, Decoul AAWO, Desonneville LMJ, Schellens RLLA, Brunia CHM, et al. Disturbed frontal regulation of attention in Parkinson’s disease. Brain. 1993;116:1139–1158. doi: 10.1093/brain/116.5.1139.

- Su YH, Chen AT, Yin HZ, Qiu JA, Lv JY, Wei DT, et al. Spatiotemporal cortical activation underlying self-referencial processing evoked by self-hand. Biological Psychology. 2010;85(2):219–225. doi: 10.1016/j.biopsycho.2010.07.004.

- Sui J, Liu CH, Han SH. Cultural difference in neural mechanisms of self-recognition. Social Neuroscience. 2009;4(5):402–411. doi: 10.1080/17470910802674825.

- Sui J, Zhu Y, Han SH. Self-face recognition in attended and unattended conditions: an event-related brain potential study. Neuroreport. 2006;17(4):423–427. doi: 10.1097/01.wnr.0000203357.65190.61.

- Uddin LQ, Kaplan JT, Molnar-Szakacs I, Zaidel E, Iacoboni M. Self-face recognition activates a frontoparietal “mirror” network in the right hemisphere: an event-related fMRI study. Neuroimage. 2005;25(3):926–935. doi: 10.1016/j.neuroimage.2004.12.018.

- Welling LLM, Burriss RP, Puts DA. Mate retention behavior modulates men’s preferences for self-resemblance in infant faces. Evolution and Human Behavior. 2011;32(2):118–126.

- Wiese H, Schweinberger SR, Hansen K. The age of the beholder: ERP evidence of an own-age bias in face memory. Neuropsychologia. 2008;46(12):2973–2985. doi: 10.1016/j.neuropsychologia.2008.06.007.

- Wilckens KA, Tremel JJ, Wolk DA, Wheeler ME. Effects of task-set adoption on ERP correlates of controlled and automatic recognition memory. Neuroimage. 2011;55(3):1384–1392. doi: 10.1016/j.neuroimage.2010.12.059.

- Wolk DA, Schacter DL, Lygizos M, Sen NM, Holcomb PJ, Daffner KR, et al. ERP correlates of recognition memory: Effects of retention interval and false alarms. Brain Research. 2006;1096:148–162. doi: 10.1016/j.brainres.2006.04.050.

- Yeung N, Botvinick MM, Cohen JD. The neural basis of error detection: Conflict monitoring and the error-related negativity. Psychological Review. 2004;111(4):931–959. doi: 10.1037/0033-295x.111.4.939.

- Zhang L, Zhou TG, Zhang J, Liu ZX, Fan J, Zhu Y. In search of the Chinese self: An fMRI study. Science in China Series C-Life Sciences. 2006;49(1):89–96. doi: 10.1007/s11427-004-5105-x.

- Zhu Y, Zhang L, Fan J, Han SH. Neural basis of cultural influence on self-representation. Neuroimage. 2007;34(3):1310–1316. doi: 10.1016/j.neuroimage.2006.08.047.