Abstract

We present an analysis of the Locally Competitive Algorithm (LCA), which is a Hopfield-style neural network that efficiently solves sparse approximation problems (e.g., approximating a vector from a dictionary using just a few nonzero coefficients). This class of problems plays a significant role in both theories of neural coding and applications in signal processing. However, the LCA lacks analysis of its convergence properties, and previous results on neural networks for nonsmooth optimization do not apply to the specifics of the LCA architecture. We show that the LCA has desirable convergence properties, such as stability and global convergence to the optimum of the objective function when it is unique. Under some mild conditions, the support of the solution is also proven to be reached in finite time. Furthermore, some restrictions on the problem specifics allow us to characterize the convergence rate of the system by showing that the LCA converges exponentially fast with an analytically bounded convergence rate. We support our analysis with several illustrative simulations.

Keywords: Exponential convergence, global stability, locally competitive algorithm, Lyapunov function, nonsmooth objective, sparse approximation

I. Introduction

Sparse approximation has generated substantial interest in a wide range of research communities over the last two decades, including signal processing, machine learning, statistics, and computational neuroscience (see [2], [3] and references therein).1 Specifically, sparse approximation involves solving an optimization problem to represent a signal using just a few atoms from some specified (possibly overcomplete) dictionary. In addition to describing compelling models of neural coding for sensory information [4], [5], this approach has led to state-of-the-art results in many types of inverse problems. One example of a regime that has leveraged this type of signal model is the theory of compressed sensing (CS) [6], [7]. In this domain, highly undersampled signals are recovered by solving a sparse approximation problem, thereby shifting the burden of data acquisition from the front-end sensor to a computationally intensive back end.

Because of the increasing interest in the sparse approximation paradigm, significant effort has been made to design efficient algorithms for solving (or approximately solving) least squares optimization problems that are regularized with a sparsity-inducing penalty (e.g., [8]–[10]). However, all of these algorithms are designed to run in discrete time on digital computers, an approach that is implausible for neural systems and that suffers from several drawbacks in engineering applications. In particular, the computational time required by these algorithms presents a barrier to real-time signal processing applications with high-dimensional signals at significant data rates. Specifically, digital algorithms tend to have storage requirements and convergence times that scale unfavorably with the dimensionality of the signals being approximated. Additionally, the power consumption of digital solutions can be prohibitive for many applications. Given these considerations, a fast and low-power method for solving sparse approximation problems in a parallel architecture would be valuable both for engineering systems and viable models of neural coding.

Analog neural networks have long been proposed for solving optimization problems [11], with an early example being Hopfield's pioneering results [12] using networks to solve linear optimization problems. Analog neural networks offer several potential advantages over comparable digital algorithms, including their ability to be implemented in analog architectures that are highly parallel, fast, and power-efficient. Recent advances in very-large-scale integration (VLSI) reconfigurable analog chips [13] make the design of such systems more feasible and affordable than has often been true in the past. A recent neural network architecture, called a Locally Competitive Algorithm (LCA) [14], has been proposed to solve the types of nonsmooth optimization problems that come up in sparse approximation. These Hopfield-style networks appear to efficiently solve a whole family of sparse approximation problems by incorporating ideal thresholding nonlinearities in the network dynamics. The results in [14] provide encouraging evidence that biological systems and engineering applications can use neural networks to solve these important sparse approximation problems.

However, despite encouraging evidence of its performance, the LCA lacks strong convergence guarantees and estimates of the convergence rate. Furthermore, the specifics of the LCA architecture violate many of the assumptions used in previous analyses, making the extensive literature on neural network convergence inapplicable to this system. For example, in previous work the activation function is often assumed to be linear [15], piecewise linear [16], or nonlinear but increasing and bounded [17]–[20]. In contrast, the LCA activation function is nonlinear and unbounded. Other analyses assume an interconnection matrix that is positive definite [16], [21], [22] or nonsingular [23], [24], while the LCA interconnection matrix may have negative eigenvalues as well as a nontrivial nullspace (because the approximation dictionary is overcomplete). Other analyses consider a nonsmooth objective function with constraints and show convergence only when the constraints are nonzero and convex [25], [26]. Finally, other relevant analyses assume that the objective function is convex [27] or piecewise linear [28], while the work in [29] focuses on the controllability of the network path.

The main contributions of this paper are to present a formal analysis of the LCA network architecture and its convergence properties, despite: 1) an activation function that is nonsmooth and not necessarily bounded or increasing and 2) a potentially singular interconnection matrix. Section III contains our first main result, which states that the fixed points of the LCA network correspond to critical points of the objective function. In the special case where the objective is convex, this set coincides with the global minima of the objective. In addition, we show that the network is globally stable and that the outputs are quasi-convergent, in the sense that they get arbitrarily close to a set of fixed points. Finally, in the case where the objective function has isolated minima, we show that the LCA converges to the solution of the sparse approximation problem for any initial point (i.e., a much stronger condition than just the nonincreasing property of the energy function that was shown in previous work [14]). This section also shows that the LCA is well behaved in that it converges in a finite number of switches (i.e., nodes crossing above or below threshold). Section IV expands on these general results to show that, under additional mild conditions on the problem specifics, the LCA actually converges exponentially fast to the solution. Furthermore, we give an analytic expression for this convergence rate that depends on the properties of the detailed approximation problem. Finally, Section V presents simulation results showing the correspondence of our analytic results with empirical observations of the network behavior.

II. Background

Before presenting our main results, in this section we briefly give a precise statement of the sparse approximation problems of interest, a description of the LCA architecture, and some preliminary observations on the LCA network dynamics that will be useful in the subsequent analysis.

A. Sparse Approximation

As mentioned above, sparse approximation is an optimization program that seeks to find the approximation coefficients of a signal on a prescribed dictionary, using as few nonzero elements as possible. To fix notation, we denote the input signal by $y \in \mathbb{R}^M$, the unit-norm dictionary elements by the columns of the $M \times N$ matrix $\Phi = [\Phi_1, \ldots, \Phi_N]$, and the coefficients by $a \in \mathbb{R}^N$. Generally, $N > M$ (i.e., the approximation dictionary is overcomplete), and the problem of recovering $a$ from $y$ is underdetermined. While similar in spirit to the well-known winner-take-all problem [30], sparse approximation problems are generally formulated as the solution to an optimization program because this approach can often yield strong performance guarantees in specific applications (e.g., recovery in a CS problem). In the most generic form, the objective function is the sum of a quadratic data fidelity term (i.e., mean squared error) and a regularization term that uses a sparsity-inducing cost function C(·)

$$\min_{a} \; V(a) = \frac{1}{2}\|y - \Phi a\|_2^2 + \lambda \sum_{n=1}^{N} C(a_n) \qquad (1)$$

where the parameter λ is a tradeoff between the two terms in the objective function. The “ideal” sparse approximation problem has a cost function that simply counts the number of nonzero elements, resulting in a nonconvex objective function that has many local minima [31].

One of the most widely used programs from this family is known as Basis Pursuit DeNoising (BPDN) [32], which is given by the objective function

$$\min_{a} \; \frac{1}{2}\|y - \Phi a\|_2^2 + \lambda \|a\|_1 \qquad (2)$$

In BPDN, the ℓ1 norm is used as a convex surrogate for the idealized counting norm. This program has gained in popularity as researchers have shown that, in many cases of interest, substituting the ℓ1 norm yields the same solution as using the idealized (and generally intractable) counting norm [33]. However, BPDN illustrates the canonical challenge of sparse approximation problems: despite being convex, the BPDN objective function contains a nonsmooth nonlinearity, which makes it considerably more difficult to minimize than a classic least-squares problem.
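As a small numerical illustration of (1) with the BPDN choice C(x) = |x| from (2), the Python/NumPy sketch below evaluates the objective for two coefficient vectors that reproduce the same signal on a toy overcomplete dictionary. The helper name `bpdn_objective` and the toy dictionary are illustrative assumptions. Since both representations fit y exactly (up to rounding), only the ℓ1 penalty differs, and the one-atom representation attains the lower objective.

```python
import numpy as np

def bpdn_objective(y, Phi, a, lam):
    """V(a) = 0.5 * ||y - Phi a||_2^2 + lam * ||a||_1, i.e. (1) with C(x) = |x|."""
    residual = y - Phi @ a
    return 0.5 * float(residual @ residual) + lam * float(np.sum(np.abs(a)))

# Toy overcomplete dictionary (M = 2, N = 3) with unit-norm columns.
Phi = np.array([[1.0, 0.0, np.sqrt(0.5)],
                [0.0, 1.0, np.sqrt(0.5)]])
y = np.array([1.0, 1.0])

a_two_atoms = np.array([1.0, 1.0, 0.0])           # y = Phi_1 + Phi_2
a_one_atom = np.array([0.0, 0.0, np.sqrt(2.0)])   # y = sqrt(2) * Phi_3

lam = 0.1
v_two = bpdn_objective(y, Phi, a_two_atoms, lam)  # 0.5 * 0 + 0.1 * 2
v_one = bpdn_objective(y, Phi, a_one_atom, lam)   # 0.5 * ~0 + 0.1 * sqrt(2)
print(v_two, v_one)
```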

In the context of computational neuroscience, sparse approximation has been proposed as a neural coding scheme for sensory information. In one interpretation, programs such as BPDN can be viewed as Bayesian inference in a linear generative model with Gaussian noise and a prior with high kurtosis to encourage sparsity (e.g., the Laplacian prior in the case of BPDN) [5]. Given the prevalence of probabilistic inference as a successful description of human perception [34] and the theoretical benefits of sparse representations [3], it has long been conjectured that sensory systems may encode stimuli via sparse approximation. In fact, in classic results, sparse approximation applied to the statistics of natural stimuli in an unsupervised learning experiment has been shown to be sufficient to qualitatively and quantitatively explain the receptive field properties of simple cells in the primary visual cortex [4], [35] as well as the auditory nerve fibers [36]. Only recently have neural networks been proposed that could efficiently solve the optimization problems necessary to implement this type of encoding [14], [35], [37], [38].

B. LCA Structure

Our primary interest will be the LCA [14], which is an analog continuous-time dynamical system that is a type of Hopfield-style network. In particular, each node in the LCA network is characterized by the evolution of a set of internal state variables, un(t) for n = 1, . . . , N, and uses a nonlinear activation function Tλ(·) to produce output variables an(t) for n = 1, . . . , N. The activation function is typically a nonsmooth nonlinear function (such as a thresholding function) to induce sparsity in the outputs. The dynamics of the internal state variables are governed by a set of coupled nonlinear ordinary differential equations (ODEs)

$$\tau \dot{u}(t) = -u(t) - (\Phi^T\Phi - I)\,a(t) + \Phi^T y, \qquad a(t) = T_\lambda(u(t)) \qquad (3)$$

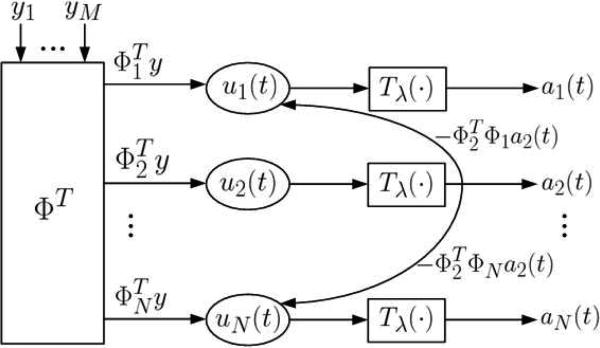

In this network, each node is associated with a single dictionary element Φn, n = 1, . . . , N, and the node outputs will be shown to be the solution to the optimization problem of interest. The architecture of a typical LCA is shown in Fig. 1.

Fig. 1.

Block diagram of the LCA [14], which is a Hopfield-style network architecture for solving sparse approximation problems.

The inputs to the LCA network are feedforward connections computing the vector of driving synaptic inputs $\Phi^T y$, reflecting how well the signal y matches each dictionary element. The network also has recurrent inhibitory or excitatory connections between the nodes, modulated by weights corresponding to the interconnection matrix $G = \Phi^T\Phi - I$ (i.e., a modified Gram matrix for the dictionary). The more overlap there is between a pair of nodes (characterized by the inner product between their dictionary elements), the stronger the potential inhibition between those nodes. While the modulating weight is symmetric between any pair of nodes, the total inhibition is not, because it is also modulated by the activity of each individual node. Moreover, the matrix G potentially has both negative and positive eigenvalues, as well as a nontrivial nullspace. This inhibition structure ensures that nodes that carry the same information will not become active at the same time, thus meeting the goals of sparse approximation. The time constant τ depends on the physical properties of the solver implementing the ODEs. For our purposes, we will often assume τ = 1 without loss of generality.
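The dynamics (3) are straightforward to simulate on a digital computer. Below is a minimal forward-Euler sketch of the LCA with the soft-thresholding activation (the BPDN case discussed next), written in Python with NumPy. The step size `dt`, the number of steps, the random test problem, and the helper names `soft_threshold` and `lca_simulate` are illustrative assumptions; an analog implementation would integrate the same equations in continuous time. Because the dictionary elements have unit norm, the diagonal of G is zero, so no node inhibits itself.

```python
import numpy as np

def soft_threshold(u, lam):
    """Soft-thresholding activation T_lambda(u) = sign(u) * max(|u| - lam, 0)."""
    return np.sign(u) * np.maximum(np.abs(u) - lam, 0.0)

def lca_simulate(y, Phi, lam, tau=1.0, dt=0.05, n_steps=20000):
    """Forward-Euler integration of tau * du/dt = -u - G a + Phi^T y, with a = T_lambda(u)."""
    N = Phi.shape[1]
    b = Phi.T @ y                      # feedforward driving inputs Phi^T y
    G = Phi.T @ Phi - np.eye(N)        # recurrent interconnection weights
    u = np.zeros(N)                    # internal states, u(0) = 0
    for _ in range(n_steps):
        a = soft_threshold(u, lam)     # node outputs
        u = u + (dt / tau) * (-u - G @ a + b)
    return u, soft_threshold(u, lam)

rng = np.random.default_rng(0)
M, N = 30, 60
Phi = rng.standard_normal((M, N))
Phi /= np.linalg.norm(Phi, axis=0)     # unit-norm dictionary elements
a0 = np.zeros(N)
a0[[3, 17, 42]] = [1.0, -0.8, 0.6]     # a 3-sparse "true" coefficient vector
y = Phi @ a0
u, a = lca_simulate(y, Phi, lam=0.05)

# Fixed point of (3): u = Phi^T y - G a, equivalently u - a = Phi^T (y - Phi a).
print(np.max(np.abs(u - a - Phi.T @ (y - Phi @ a))))
```

The printed quantity is the largest violation of the fixed-point condition of (3); it should be near machine precision once the iteration has settled.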

It was shown in [14] that the objective function in (1) is nonincreasing along the LCA trajectory when the cost penalty term C(·) and the activation function Tλ(·) satisfy the following relationship for all n such that $a_n \neq 0$:

$$\lambda \frac{d C(a_n)}{d a_n} = u_n - a_n = u_n - T_\lambda(u_n) \qquad (4)$$

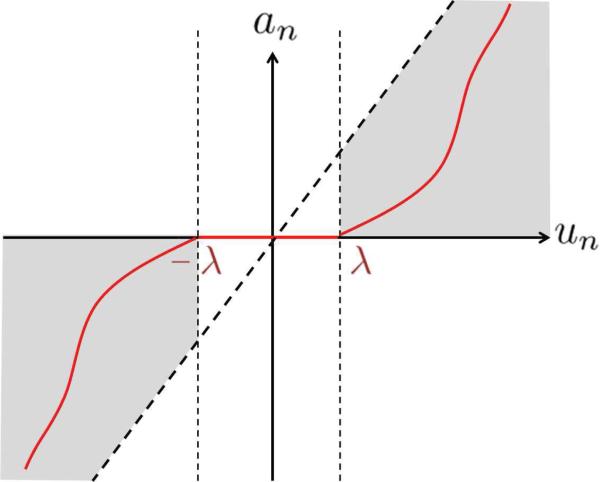

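In the case of BPDN, the cost penalty is simply C(x) = |x| and the activation function obtained from (4) is the soft-thresholding function (Fig. 2) defined by

$$T_\lambda(u) = \begin{cases} 0, & |u| \le \lambda \\ u - \lambda\,\operatorname{sign}(u), & |u| > \lambda. \end{cases}$$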

This function often arises in connection with algorithms that minimize the sum of the absolute values of the coefficients (see [39]). Generalizing from the soft threshold, we focus on “thresholding” activation functions Tλ(·) of the form

$$T_\lambda(u) = \begin{cases} 0, & |u| \le \lambda \\ f(u), & |u| > \lambda \end{cases} \qquad (5)$$

where the function f(·) is real-valued, defined and continuous on the domain $(-\infty, -\lambda] \cup [\lambda, +\infty)$, differentiable on the interior of this domain, and satisfies the conditions

| (6a) |

| (6b) |

| (6c) |

Fig. 2.

Soft-thresholding activation function. When this is used for the thresholding function Tλ(·), the LCA solves the popular BPDN optimization problem used in many sparse approximation applications.

Remark 1: Equation (6a) ensures that Tλ(·) is continuous on all of ℝ and, consequently, that u(t) and a(t) are continuous with respect to time. It also ensures that the cost function C(·) is differentiable everywhere except at the origin. Equation (6b) makes f(·) a bijection onto its range. Finally, (6a) and (6c) ensure, by a simple computation, that C(·) is positive and nondecreasing with the absolute value of the coefficient. Notice that the soft-thresholding function satisfies the three criteria in (6), and that the resulting BPDN objective is convex (convexity is not required, but it guarantees that the system will find a global minimum). In Fig. 3, we plot a more generic stylized activation function that satisfies the conditions (6).
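For the soft threshold, relation (4) and the continuity required at the threshold can be checked numerically. In the minimal Python/NumPy sketch below (the grid of states and λ = 0.5 are arbitrary illustrative choices), u_n − T_λ(u_n) equals λ·sign(a_n), i.e., λ times the derivative of C(x) = |x|, on every active node, and the output is zero at ±λ, so the two regions of (5) meet continuously.

```python
import numpy as np

def soft_threshold(u, lam):
    """Soft threshold: 0 for |u| <= lam, u - lam * sign(u) for |u| > lam."""
    return np.sign(u) * np.maximum(np.abs(u) - lam, 0.0)

lam = 0.5
u = np.linspace(-3.0, 3.0, 13)        # internal states on a grid with spacing 0.5
a = soft_threshold(u, lam)
active = np.abs(u) > lam              # active nodes (strictly above threshold)

# Relation (4) with C(x) = |x|: u_n - a_n = lam * dC/da_n = lam * sign(a_n) on active nodes.
print(np.allclose(u[active] - a[active], lam * np.sign(a[active])))   # True
# Inactive nodes, including the boundary points u = +/- lam, produce zero output.
print(np.all(a[~active] == 0))                                        # True
```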

Fig. 3.

Generic activation function satisfying conditions (6). The area in gray represents where the activation function must lie in order to satisfy conditions (6b) and (6c).

As shown in Fig. 3, the activation function (5) is composed of two operating regions. The first region corresponds to the case where the magnitude of the internal state un is at or below the threshold λ, in which case the output an is zero. We call the nodes in this region inactive nodes. The second region corresponds to the case where the internal state un is above threshold, in which case (6b) guarantees that the output an is strictly increasing with un. We call the nodes that are above threshold active nodes, and we denote by Γ the active set (i.e., the set of indices corresponding to those nodes, Γ = {n ∈ [1, N] : |un(t)| > λ}). Conversely, we denote by Γc the set of indices corresponding to nodes that are below threshold and call it the inactive set. While these two sets do change with time as the network evolves, for the sake of readability we omit the dependence on time in the notation.

Owing to the nonsmooth nature of the objective (1), we require the generalized notion of a subgradient (see [40] for more details). Note that V(a) is differentiable everywhere except at points a that contain zeros, due to the nondifferentiability of C(·) at the origin noted above for the cases of interest in sparse approximation. The subgradient ∂V(·) extends the classic notion of a gradient ∇V(·) to those points of nondifferentiability. The properties in (6) allow us to use the following simple definition:

$$\partial V(a) = \operatorname{co}\left\{ \lim_{k \to \infty} \nabla V(a_k) \;:\; a_k \to a,\; a_k \notin \Omega_V \cup S \right\}$$

where co is the convex hull, ΩV is the set of points where V fails to be differentiable, and $S$ is any set of Lebesgue measure 0 in $\mathbb{R}^N$. In other words, ∂V(a) is the smallest convex set containing the limits of the gradients of V as we approach a from any direction. Note that, when V is differentiable at a, this simply reduces to ∇V(a).

C. LCA Node Dynamics

Because nodes can cross threshold and go from the inactive set to the active set (and vice versa), the LCA can be thought of as a switched system [41] where the ODEs change at each switching time (i.e., when a node crosses above or below threshold). In between two switching times, the active set Γ and inactive set Γc are fixed. Therefore, the dynamics on the two sets can be considered separately to facilitate analysis. In the following, for an index set Γ, $\Phi_\Gamma$ is the matrix composed of the columns of Φ indexed by Γ. Similarly, $u_\Gamma$ and $a_\Gamma$ refer to the elements of the original vectors indexed by Γ. We also denote by $\{t_k\}$ the sequence of times at which the system switches from the set of active nodes Γk–1 to Γk. The notation Tλ(u(t)) refers to the vector [Tλ(u1(t)), . . . , Tλ(uN(t))]T, and we denote by F′(t) the associated Jacobian matrix with respect to the internal state variables u. Because the activation function has the form in (5), the matrix F′(t) is diagonal with diagonal elements equal to zero for indices in the inactive set Γc and equal to f′(un) for indices n in the active set Γ.

Using the chain rule and (5), we can calculate the derivative with respect to time of the outputs on the active set Γ as

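$$\dot{a}_\Gamma(t) = F'_\Gamma(t)\,\dot{u}_\Gamma(t) \qquad (7)$$

where $F'_\Gamma(t)$ is the diagonal block of F′(t) indexed by Γ.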

As a consequence, the ODE (3) can be rewritten for nodes in the active set as follows:

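$$\tau \dot{u}_\Gamma(t) = -u_\Gamma(t) - (\Phi_\Gamma^T\Phi_\Gamma - I)\,a_\Gamma(t) + \Phi_\Gamma^T y \qquad (8)$$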

The active nodes follow this ODE until the next switch occurs, changing the sets of active and inactive nodes. Similarly, we can rewrite the set of differential equations acting on the inactive nodes. Since the output of the activation function for nodes in Γc is zero, the ODE (3) on the inactive set becomes

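$$\tau \dot{u}_{\Gamma^c}(t) = -u_{\Gamma^c}(t) - \Phi_{\Gamma^c}^T\Phi_\Gamma\,a_\Gamma(t) + \Phi_{\Gamma^c}^T y \qquad (9)$$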

Separating the system dynamics into two separate characterizations for the active nodes Γ and inactive nodes Γc yields two sets of differential equations that are partially decoupled, and this will be crucial for our subsequent analysis. This partial decoupling is possible because only the active nodes in the system produce inhibitory signals, coupling their dynamics to the dynamics of each node in the inactive set via the interconnection matrix. However, because inactive nodes do not inhibit active nodes, the dynamics on the active set are independent of the inactive set.
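The split into (8) and (9) can be checked numerically: for a fixed state between two switching times, the right-hand side of (3) restricted to Γ involves only Φ_Γ and the active outputs, while on Γc the inactive nodes are driven only by the active outputs. The Python/NumPy sketch below (random problem, τ = 1, soft-thresholding activation, all illustrative assumptions) compares the full and split forms and builds the diagonal Jacobian F′(t) described above; indices are 0-based, unlike the text.

```python
import numpy as np

def soft_threshold(u, lam):
    """Soft-thresholding activation T_lambda(u)."""
    return np.sign(u) * np.maximum(np.abs(u) - lam, 0.0)

rng = np.random.default_rng(1)
M, N, lam = 8, 16, 0.4
Phi = rng.standard_normal((M, N))
Phi /= np.linalg.norm(Phi, axis=0)            # unit-norm dictionary elements
y = rng.standard_normal(M)
u = rng.standard_normal(N)                    # an arbitrary state between two switching times
a = soft_threshold(u, lam)

gamma = np.flatnonzero(np.abs(u) > lam)       # active set Gamma
gamma_c = np.flatnonzero(np.abs(u) <= lam)    # inactive set Gamma^c
P_act, P_inact = Phi[:, gamma], Phi[:, gamma_c]

# Right-hand side of (3) with tau = 1, using the full interconnection matrix.
rhs = -u - (Phi.T @ Phi - np.eye(N)) @ a + Phi.T @ y

# Active nodes: only Phi_Gamma and the active states/outputs appear.
rhs_act = -u[gamma] - (P_act.T @ P_act - np.eye(gamma.size)) @ a[gamma] + P_act.T @ y
# Inactive nodes: driven by the active outputs through Phi_{Gamma^c}^T Phi_Gamma.
rhs_inact = -u[gamma_c] - P_inact.T @ P_act @ a[gamma] + P_inact.T @ y

# Jacobian F'(t) of the soft threshold with respect to u: diagonal, 1 on Gamma, 0 on Gamma^c.
F_prime = np.diag((np.abs(u) > lam).astype(float))

print(np.allclose(rhs[gamma], rhs_act), np.allclose(rhs[gamma_c], rhs_inact))  # True True
```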

III. Convergence Results

In [14], the authors show that the LCA network trajectory is nonincreasing on the energy surface corresponding to the desired objective function. While this is a necessary behavior for a network that solves an optimization problem, it is not sufficient to actually show that the network converges to a fixed point (or set of points), let alone reaches a minimum of that desired objective function. Both of these are important guarantees to make before relying on this system as a viable model of neural processing or as an implementation in engineering applications.

In Section III-A, we analyze the general convergence properties of the LCA. In particular, in this section the interconnection matrix $\Phi^T\Phi - I$ may have many negative or zero eigenvalues, which complicates the analysis. The main results of this first section show that the fixed points of the LCA correspond to critical points of the objective (1) and the outputs of the network converge from any initial state to the set of fixed points. In the case of isolated critical points of (1), we show that the LCA is globally convergent in the sense that starting from any initial state, the system converges to a fixed point. Elaborating on these results, Section III-B shows that the LCA (even under very general assumptions) is very well behaved, converging in a finite number of switches (i.e., nodes crossing above or below threshold) and therefore recovering the solution support (i.e., the set of nonzero elements) in finite time. Owing to the difficulties described above, our approach relies on classic results from nonsmooth analysis, such as subgradients and a generalized chain rule (see Appendix A).

A. Global Asymptotic Convergence

To begin, we recall some useful definitions of stability and concepts from Lyapunov theory (see [42] for more background). There exist several notions of stability associated with dynamical systems that describe the evolution of the nodes or their outputs both locally and globally. For a neural network described by a differential equation of the form

| (10) |

with outputs z = Tλ(x) defined as in (5), we say that a constant vector $x^* \in \mathbb{R}^N$ is a fixed point of (10) if and only if

| (11) |

On the other hand, the outputs of a system reach a critical point ac of the objective function V(·) when they satisfy the inclusion

$$0 \in \partial V(a_c) \qquad (12)$$

Note that, if V(·) is convex, then the critical points correspond exactly to the global minima of V. We say that (10) is (Lyapunov) stable at x* if, for each ε > 0, there exists R > 0 such that for all x0 with ∥x0 – x*∥ < R, and all the solutions x(·) with initial state x0

$$\|x(t) - x^*\| < \varepsilon, \quad \forall t \ge 0 \qquad (13)$$

This is clearly a local notion of stability, guaranteeing that, once the trajectory is close to a fixed point, it remains nearby. But this type of stability is insufficient to guarantee global convergence, which means that trajectories approach a fixed point as time goes to infinity. The following notion of stability is slightly stronger, guaranteeing that trajectories approach at least a set of fixed points regardless of the initial state.

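Definition 1: We say that the outputs of (10) are globally quasi-convergent if there exists a set $\mathcal{E} \subset \mathbb{R}^N$ such that for all $x_0 \in \mathbb{R}^N$, the outputs z(·) = Tλ(x(·)) with initial state x0 satisfy $\lim_{t \to \infty} \operatorname{dist}(z(t), \mathcal{E}) = 0$.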

Finally, the strongest and most desirable form of convergence which guarantees that the nodes converge to a single fixed point is stated in the next definition.

Definition 2: We say that (10) is globally convergent, or equivalently globally asymptotically stable, if there exists a fixed point x* at which the system is stable, and if for all initial states $x_0 \in \mathbb{R}^N$ the solutions x(·) satisfy $\lim_{t \to \infty} x(t) = x^*$.

Typically, global convergence is established through the use of a so-called Lyapunov function V. The notation V̇ refers to the derivative with respect to time, i.e., dV/dt.

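Definition 3: A function $V: \mathbb{R}^N \to \mathbb{R}$ is a weak Lyapunov function on $\mathbb{R}^N$ if:

1) $V(x) > 0$, $\forall x \neq x^*$;

2) V is continuous on $\mathbb{R}^N$;

3) $\dot V(x) \le 0$, $\forall x \in \mathbb{R}^N$;

4) V is radially unbounded.

Similarly, a function is called a strict Lyapunov function if it meets the above conditions, with the exception of having a strict inequality in condition 3) (i.e., $\dot V(x) < 0$, $\forall x \neq x^*$).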

Remark 2: When it is possible to find a weak Lyapunov function for a given dynamical system, the first theorem of Lyapunov [42] guarantees that any fixed point of the system is stable in the sense of (13). However, to show global convergence of a system, the second theorem of Lyapunov requires a strict Lyapunov function.

One can check that the objective function in (1) is a weak Lyapunov function for (3), thus guaranteeing that the LCA is Lyapunov stable (which improves on the stability result obtained in [14]). However, it is not a strict Lyapunov function. Indeed, (1) only depends on the active nodes, meaning that it could stop decreasing while subthreshold nodes are still evolving. Continued evolution of the inactive nodes could cause a node in Γc to become active, thereby causing the objective function to start decreasing again. As a consequence, condition 3) in Definition 3 is not satisfied with strict inequality, and the standard approach of using the objective function as a Lyapunov function is not sufficient to show global convergence of the LCA. To show global convergence of the system to a fixed point, it is necessary to account for the dynamics on both active and inactive nodes.

Our main convergence theorem guarantees the global quasi-convergence of the LCA toward a set of critical points of the objective function. In the case of isolated critical points, this implies global convergence.

Theorem 1: The LCA system defined in (3), with an activation function of the form (5) satisfying conditions (6):

1) has fixed points that are critical points of the objective function defined in (1);

2) has globally quasi-convergent outputs;

3) is globally convergent, provided that the critical points of (1) are isolated.

Note that part 2 of the above theorem only relates to the output variables, while part 3 is a much stronger condition on the entire dynamical system (including subthreshold states). In the highly relevant case of convex objective functions with unique minima (e.g., BPDN), part 3 of the above theorem applies directly and we have the strongest possible notion of convergence. In fact, for most dictionaries Φ (e.g., random Gaussian), the minimum of the objective function (2) is unique [43]. Recent results on subgradient dynamical systems [26], [44] lead us to believe that part 3 could still hold in the case where the fixed points of the system are not isolated and there exists a subspace of solutions to (1), but this conjecture is beyond the scope of this paper. Section V-A illustrates the convergence behavior of the LCA in simulation. The proof of this theorem is in Appendix A, and relies on generalized notions of a subgradient due to the nonsmooth nature of the objective.

B. Convergence in a Finite Number of Switches

In the theorem below, we strengthen the previous result to establish that, under some mild conditions, the support of the final solution is reached with a finite number of switches. To prove this result, it is sufficient to assume that no node in the solution lies exactly on the threshold λ. This assumption precludes unwanted infinite oscillation behavior on the boundaries, known as Zeno behavior. In other words, we assume that there exists a margin (r > 0) above and below the threshold which contains no node in u*. One would expect this condition to hold with near-certainty for any signal that was not pathologically constructed.

Theorem 2: If (3) converges to a fixed point u* such that there exists r > 0 with

$$\big|\,|u_n^*| - \lambda\,\big| \ge r, \quad n = 1, \ldots, N,$$

then the system converges after a finite number of switches. This implies that the neural network recovers the support of the solution a* in finite time. Section V-A explores the number of switches during the convergence of the LCA in simulation.

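Proof: Let Γ* be the set of active nodes in u*. By contradiction, assume that the sequence of switching times $\{t_k\}$ is infinite. Since the LCA converges to u*, we have

$$\lim_{k \to \infty} u(t_k) = u^*.$$

As a consequence, for r > 0, there exists $K \in \mathbb{N}$ such that $\|u(t_k) - u^*\|_\infty < r$ for all $k \ge K$. To begin, we show that for all k ≥ K, the state variables u(tk) are in the subsystem Γ*. For all k ≥ K, we have two cases.

- Nodes that are above threshold in u* are above threshold in u(tk). Indeed, for all $n \in \Gamma^*$, we have

$$|u_n(t_k)| \ge |u_n^*| - |u_n(t_k) - u_n^*| > (\lambda + r) - r = \lambda.$$

Moreover, nodes are active with the correct sign; otherwise, we would have

$$|u_n(t_k) - u_n^*| = |u_n(t_k)| + |u_n^*| > \lambda + (\lambda + r) > r,$$

which is a contradiction.

- Nodes that are below threshold in u* are below threshold in u(tk). Indeed, for all $n \notin \Gamma^*$, we have

$$|u_n(t_k)| \le |u_n^*| + |u_n(t_k) - u_n^*| < (\lambda - r) + r = \lambda.$$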

As a consequence, for all k ≥ K, Γk = Γ*. However, Γk and Γk+1 must be different to define the switching time tk+1 and we reach a contradiction. This proves that after a finite number of switches K, there cannot be any switching out of subsystem Γ*.
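The finite-switching behavior can be observed in a discretized simulation. The Python/NumPy sketch below assumes forward-Euler integration, a soft-thresholding activation, and a random test problem (all illustrative choices, with hypothetical helper names). It records every step at which the active set changes and reports the final margin min_n | |u_n| − λ |, the empirical counterpart of r in Theorem 2. Switches of the discrete iteration only approximate the switching times t_k of the continuous system.

```python
import numpy as np

def soft_threshold(u, lam):
    """Soft-thresholding activation T_lambda(u)."""
    return np.sign(u) * np.maximum(np.abs(u) - lam, 0.0)

def lca_with_switches(y, Phi, lam, tau=1.0, dt=0.05, n_steps=10000):
    """Euler-integrate (3); return the final state and the steps at which the active set changed."""
    N = Phi.shape[1]
    b = Phi.T @ y
    G = Phi.T @ Phi - np.eye(N)
    u = np.zeros(N)
    active = np.abs(u) > lam                 # initial active set (empty for u(0) = 0)
    switch_steps = []
    for k in range(n_steps):
        u = u + (dt / tau) * (-u - G @ soft_threshold(u, lam) + b)
        new_active = np.abs(u) > lam
        if np.any(new_active != active):     # at least one node crossed the threshold
            switch_steps.append(k)
            active = new_active
    return u, switch_steps

rng = np.random.default_rng(0)
M, N, lam = 30, 60, 0.05
Phi = rng.standard_normal((M, N))
Phi /= np.linalg.norm(Phi, axis=0)
a0 = np.zeros(N)
a0[[3, 17, 42]] = [1.0, -0.8, 0.6]
y = Phi @ a0

u, switch_steps = lca_with_switches(y, Phi, lam)
margin = np.min(np.abs(np.abs(u) - lam))     # empirical counterpart of r in Theorem 2
print(len(switch_steps), "switches; last at step", switch_steps[-1], "; margin", margin)
```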

IV. Exponential Convergence Rate

With Theorem 1 showing that the LCA is globally convergent, the most pressing issue remaining is to determine the convergence rate of the network. Such a bound will be especially important for implementations, which must guarantee solution times. In this section, we show that, under some additional conditions on the problem's specifics [given in (16)], the LCA network converges exponentially fast to a unique fixed point u*. We also give an analytic characterization of the convergence speed.2

To begin this section, we recall the definition of exponential convergence.

Definition 4: The dynamical system in (10) is exponentially convergent to the solution x* if there exists a constant c > 0 such that for any initial point x(0), there exists a constant κ0 > 0 [which may depend on x(0)] for which the trajectory x(t) of the system satisfies

$$\|x(t) - x^*\| \le \kappa_0\, e^{-ct}, \quad \forall t \ge 0.$$

The constant c is referred to as the convergence speed of the system.

In order to state the main theorem of this section, we define the two following quantities. The first constant is denoted by α and provides a bound on the derivative of f(·) in (5)

| (14) |

Note that the constant α is always well defined since the trajectories un(t) are bounded and the function f(·) is continuous. The second constant, denoted by δ, is the smallest positive constant such that, for any active set Γ visited by the algorithm and any vector $x \in \mathbb{R}^N$ supported on $\Gamma \cup \Gamma^*$, where Γ* is the active set of the solution to (1), we have

$$(1 - \delta)\|x\|_2^2 \le \|\Phi x\|_2^2 \le (1 + \delta)\|x\|_2^2 \qquad (15)$$

The constant δ depends on the singular values of the matrix $\Phi_{\Gamma \cup \Gamma^*}$ and on the sequence of active sets visited by the system. It may not be well defined for every matrix Φ or every input y. However, in many interesting cases in CS, the constant δ is close to 0 (i.e., the dictionary elements are almost orthogonal on any sufficiently small active set) [45]. The following theorem shows that this constant directly relates to the convergence speed of the neural network.
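Reading (15) as a restricted-isometry-type bound on the columns of Φ indexed by Γ ∪ Γ*, the constant for a single set is the largest deviation from 1 of the eigenvalues of the corresponding Gram matrix, and the δ of Theorem 3 is the maximum over the visited sets. The Python/NumPy sketch below assumes the visited active sets are known (e.g., recorded from a simulation); the helper names are hypothetical, and the toy two-atom dictionary is chosen so that δ can be checked by hand.

```python
import numpy as np

def delta_for_set(Phi, idx):
    """Smallest delta with (1 - delta)||x||^2 <= ||Phi x||^2 <= (1 + delta)||x||^2 for x supported on idx."""
    sub = Phi[:, idx]
    eigs = np.linalg.eigvalsh(sub.T @ sub)     # squared singular values, ascending
    return max(1.0 - eigs[0], eigs[-1] - 1.0)

def delta_over_trajectory(Phi, visited_active_sets, final_active_set):
    """Maximum over visited sets Gamma of the constant for Gamma union Gamma* (hypothetical helper)."""
    return max(delta_for_set(Phi, sorted(set(g) | set(final_active_set)))
               for g in visited_active_sets)

def convergence_speed(delta, tau):
    """Speed (1 - delta) / (2 tau) from Theorem 3; meaningful only when it is positive."""
    return (1.0 - delta) / (2.0 * tau)

# Two unit-norm atoms with inner product 0.3: Gram eigenvalues are 0.7 and 1.3, so delta = 0.3.
c = 0.3
Phi = np.array([[1.0, c], [0.0, np.sqrt(1.0 - c**2)]])
delta = delta_over_trajectory(Phi, visited_active_sets=[[0], [0, 1]], final_active_set=[1])
print(delta, convergence_speed(delta, tau=0.01))   # approximately 0.3 and 35.0
```

With an orthonormal dictionary the constant is 0 and the speed reduces to 1/(2τ).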

Theorem 3: Under conditions (6) on the activation function in (5), and provided that the constants α and δ, defined in (14) and (15), respectively, satisfy

| (16) |

the LCA system defined in (3) is globally exponentially convergent to a unique equilibrium, with convergence speed (1 – δ)/(2τ).

If condition (16) is satisfied, the expression given for the convergence speed is positive and thus meaningful. It depends on the eigenvalues of the matrices $\Phi_{\Gamma \cup \Gamma^*}^T\Phi_{\Gamma \cup \Gamma^*}$, which vary with the active set Γ. A careful analysis of the sequence of active sets visited by the network is required to obtain a good estimate of δ. Since such a study is application dependent, we do not address this question here. Note that in the important case of the soft-threshold function, α = 1, so condition (16) reduces to δ < 1. The time constant τ of the physical solver implementing the LCA neural network appears in the expression for the speed of convergence: lowering this time constant makes the system converge faster. Analog systems can have smaller time constants than their digital counterparts and scale better with the problem size. Section V-B explores the convergence rate bounds for the LCA in simulation.

To establish the expression of the convergence speed, we use the following Lure-Postnikov-type Lyapunov function:

| (17) |

where we again redefine the output and state variables in terms of the distance from any arbitrary fixed point u* as

$$\tilde u(t) = u(t) - u^*, \qquad \tilde a(t) = a(t) - a^* \qquad (18)$$

and the function $\tilde g(x) = T_\lambda(x + u^*) - T_\lambda(u^*)$ (applied componentwise, so that $\tilde a(t) = \tilde g(\tilde u(t))$), which captures how a perturbation of the internal state affects the output. Because the second term in (17) is nonnegative, this energy function trivially bounds the mean-squared error of the current state values to their steady-state values, i.e., $\frac{1}{2}\|\tilde u(t)\|_2^2 \le E(t)$. The theorem will prove that u* is unique. The properties presented in the following lemma (see Appendix B for proof) are useful for proving the main result of this section.

Lemma 1: Assume that the activation function (5) satisfies the conditions (6). Then, the set of variables and defined in (18) satisfy the following properties:

;

for any (in particular for );

.

Armed with this lemma, we can now give the proof of the main theorem.

Proof of Theorem 3: We begin by noting that since u* and a* are the internal state variables and the outputs at a fixed point of (3); we can rewrite the dynamics in terms of the new variables

$$\tau\,\dot{\tilde{u}}(t) = -\tilde{u}(t) - (\Phi^T\Phi - I)\,\tilde{a}(t). \qquad (19)$$

Using the chain rule, we find the expression for the derivative with respect to time of the energy function (17) as

Using (19), this becomes

Using the properties in Lemma 1 leads to the following expression (where we drop the explicit dependence on t for readability)

Adding and subtracting the term results in

We separate the vectors into their components onto the active set of and its complement as

We can now use the definition of δ in (15)

Now, using property 3) of Lemma 1, the expression becomes

From (16), we know that 1 – δ(2α – 1)² ≥ 0, and so

Finally, using property 4) of Lemma 1 and the fact that δ ≤ 1, we arrive at

Knowing already that E(t) is an upper bound on the quantity of interest, we can integrate both sides of the above inequality (i.e., apply Gronwall's inequality) to get a bound on E(t)

$$\frac{1}{2}\|\tilde{u}(t)\|_2^2 \le E(t) \le E(0)\, e^{-(1-\delta)t/\tau} \qquad (20)$$

proving that the LCA is exponentially convergent to a unique fixed point u*. Taking the square root, this expression also shows that the convergence speed in the exponential is (1 – δ)/(2τ), as stated in the theorem.
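For reference, the square-root step can be written out explicitly. It combines (20) with the inequality $\frac{1}{2}\|\tilde{u}(t)\|_2^2 \le E(t)$ noted after (17); the numerical value of δ in the example that follows is hypothetical.

```latex
\|u(t) - u^*\|_2 \;\le\; \sqrt{2E(t)} \;\le\; \sqrt{2E(0)}\; e^{-(1-\delta)t/(2\tau)}
```

For instance, a hypothetical δ = 0.5 combined with the time constant τ = 0.01 used in Section V would give a rate (1 − 0.5)/(2 · 0.01) = 25 per unit time, i.e., the error bound shrinks by a factor of e every 0.04 time units.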

V. Simulations

In this section, we illustrate the previous theoretical results in simulation. Each plot is based on the following canonical sparse approximation problem. We generate a “true” sparse vector $a_0 \in \mathbb{R}^N$, with N = 512 and s = 5 nonzero entries. We select the locations of the nonzeros uniformly at random, draw their amplitudes from a standard normal distribution, and then normalize the vector to unit norm. We choose a dictionary Φ of dimensions M × N, with M = 256, as the union of the canonical basis and a sinusoidal basis. The vector of measurements is $y = \Phi a_0 + \eta$, where η is Gaussian random noise with standard deviation 0.0062. We use an LCA with a soft-threshold activation function, with the threshold set to λ = 0.025 and u(0) = 0. We simulate the LCA dynamical equations (3) through a discrete approximation in MATLAB with a step size of 0.001 and a solver time constant τ = 0.01.
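The setup above can be sketched in Python/NumPy rather than MATLAB. This is a minimal sketch under several assumptions that are not taken from the text: the "discrete approximation" is taken to be forward Euler, the sinusoidal basis is taken to be the orthonormal DCT-II, and the random seed and number of steps are arbitrary. The names `dct_basis` and `simulate_lca` are hypothetical.

```python
import numpy as np

def soft_threshold(u, lam):
    """Soft-threshold activation T_lambda(u) = sign(u) * max(|u| - lam, 0)."""
    return np.sign(u) * np.maximum(np.abs(u) - lam, 0.0)

def dct_basis(m):
    """Orthonormal DCT-II basis of size m x m (columns are basis vectors).

    Assumption: stands in for the unspecified 'sinusoidal basis'.
    """
    n = np.arange(m)
    k = n.reshape(-1, 1)
    C = np.sqrt(2.0 / m) * np.cos(np.pi * (2 * n + 1) * k / (2 * m))
    C[0, :] /= np.sqrt(2.0)  # rescale the DC row so that C is orthonormal
    return C.T

def simulate_lca(Phi, y, lam, tau=0.01, dt=0.001, n_steps=10000, u0=None):
    """Forward-Euler discretization of tau*du/dt = -u - (Phi^T Phi - I) a + Phi^T y."""
    N = Phi.shape[1]
    G = Phi.T @ Phi - np.eye(N)   # lateral inhibition (Phi^T Phi - I)
    b = Phi.T @ y                 # feedforward drive Phi^T y
    u = np.zeros(N) if u0 is None else np.asarray(u0, dtype=float).copy()
    for _ in range(n_steps):
        a = soft_threshold(u, lam)
        u = u + (dt / tau) * (-u - G @ a + b)
    return u, soft_threshold(u, lam)

# Problem setup mirroring the text (the random seed is an arbitrary choice).
rng = np.random.default_rng(0)
M, N, s, lam = 256, 512, 5, 0.025
Phi = np.hstack([np.eye(M), dct_basis(M)])      # union of canonical and DCT bases
a0 = np.zeros(N)
a0[rng.choice(N, size=s, replace=False)] = rng.standard_normal(s)
a0 /= np.linalg.norm(a0)                        # unit-norm sparse vector
y = Phi @ a0 + 0.0062 * rng.standard_normal(M)  # noisy measurements
u, a = simulate_lca(Phi, y, lam)
print("nonzeros in LCA output:", np.count_nonzero(a))
```

With dt/τ = 0.1 and ‖ΦᵀΦ‖₂ = 2 for a union of two orthonormal bases, the Euler step stays well inside its stability region, and a discrete fixed point coincides with a fixed point of (3), so the output can be checked against the optimality conditions of (1) for the ℓ1 penalty.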

A. Convergence Results

From Theorem 1, the LCA should converge and recover the solution to the sparse approximation problem (1), which has a unique minimizer. Since the signal a0 to recover is sparse, the outputs of the neural network are expected to converge to a solution close to the initial signal a0. Fig. 4 shows the evolution of a few nodes un(t) selected at random. We see that both active and inactive nodes converge relatively quickly. Fig. 5 shows the fixed point reached by the LCA system and compares it to the initial signal a0 and to the solution obtained using a standard digital solver for the same optimization program [8]. The solution reached by the network possesses exactly five nonzero entries, which correspond to the nonzero entries in a0. The recovered amplitudes are very close to the initial amplitudes (they cannot match exactly due to the added measurement noise) and to the ones produced by the reference digital solver. However, while the LCA produces an exactly sparse vector, the digital solver returns many small but nonzero entries that would have to be removed by postprocessing.

Fig. 4.

Plot of the evolution with respect to time of several LCA nodes uk(t). The solid lines correspond to nodes that are active in the final solution, and the dashed lines correspond to nodes that are inactive in the final solution.

Fig. 5.

Output a* of the LCA after convergence. Only nonzero elements are plotted. The fixed point reached by the system is very close to the initial sparse vector used to create the measurement vector (it cannot be exact due to noise). The solution is also very close to a standard digital solver [8] run using the same inputs. Note that the LCA produces many coefficients that are exactly zero (therefore not plotted).

To illustrate the global convergence behavior, we also ran the LCA for 30 randomly generated initial points. We selected two nodes from the final active set and plotted the trajectories in the space defined by those two nodes. Fig. 6 clearly shows that the solution is attractive for any of those initial points.

Fig. 6.

Trajectories of u(t) in the plane defined by two nodes chosen randomly from the active set. Trajectories are shown for 30 random initial states.

To illustrate the number of switches made by the system (see Theorem 2), we generate 1000 sparse vectors a0 and measurements y and simulate the LCA dynamics. Fig. 7 shows a histogram of the number of switches needed for the system to converge. The figure illustrates that the number of switches before convergence of the neural network is finite and of the order of the dictionary size, suggesting that this solver takes an efficient path toward the solution.
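Counting switches in simulation requires a concrete definition. The sketch below assumes that a switch is any change of the active set Γ = {n : |u_n| > λ} between consecutive samples of a discrete-time simulation; this definition is an assumption for illustration, and the function name `count_switches` is hypothetical.

```python
import numpy as np

def count_switches(u_traj, lam):
    """Count changes of the active set along a sampled LCA trajectory.

    u_traj: array of shape (T, N) holding the internal states u(t_k) at successive steps.
    A node n is active when |u_n| > lam. Simultaneous changes of several nodes
    between two consecutive samples count as a single switch of the active set.
    """
    active = np.abs(np.asarray(u_traj, dtype=float)) > lam   # shape (T, N)
    changed = np.any(active[1:] != active[:-1], axis=1)       # did the active set change at step k?
    return int(np.count_nonzero(changed))
```

To build a histogram like Fig. 7, one would record u at every step of a discrete LCA simulation, stack the states into a (T, N) array, and call this function once per trial.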

Fig. 7.

Histogram (in percentage) of the number of switches the LCA requires before convergence over 1000 trials.

B. Convergence Rate Results

To illustrate the convergence rate result in Theorem 3, it is necessary to estimate the convergence speed (1 – δ)/τ that appears in the exponential term in (20). This term bounds the squared error

$$\|u(t) - u^*\|_2^2,$$

which is normalized to have value 1 at t = 0 and plotted on a log scale in Fig. 8. Note that the constant δ defined in (15) depends on the sequence of active sets Γk visited by the system. However, it is very difficult to predict, for a given input signal, which sequence of active sets the algorithm will visit. To estimate this upper bound, we compute the constant δ* using the matrix ΦΓ* composed of the dictionary elements that are active in the final solution. The corresponding upper bound on the decay, $e^{-(1-\delta^*)t/\tau}$, is plotted in Fig. 8. During the transient phase, the number of active nodes is actually larger; we therefore expect 1 – δ to be smaller than this estimate and the nodes to converge more slowly at some point during the transient phase. For this reason, we also keep track of the largest support visited by the network and compute the corresponding constant δmax. This second upper bound, $e^{-(1-\delta_{\max})t/\tau}$, is plotted in the same figure. As expected, the theoretical decay computed with the maximum support visited is a valid upper bound on the observed error. However, this estimate is very pessimistic, and the bound computed with the final active set is a better estimate. This simulation suggests that the theoretical exponential convergence captures the essential system behavior.
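The two rate estimates can be computed as sketched below. This sketch assumes that δ for a support Γ is the spectral norm ‖Φ_ΓᵀΦ_Γ − I‖₂, i.e., the restricted-isometry-style constant for which the eigenvalues of Φ_ΓᵀΦ_Γ lie in [1 − δ, 1 + δ]; the exact form of (15) is not reproduced here, so this is an assumption. The names `delta_for_support` and `decay_bound` are hypothetical.

```python
import numpy as np

def delta_for_support(Phi, support):
    """Spectral-norm deviation from the identity of the Gram matrix of the columns in `support`.

    Assumes a nonempty support (a list or array of column indices).
    """
    Phi_S = Phi[:, np.asarray(support)]
    gram = Phi_S.T @ Phi_S
    return float(np.linalg.norm(gram - np.eye(gram.shape[0]), 2))

def decay_bound(t, delta, tau):
    """Theoretical squared-error decay exp(-(1 - delta) t / tau), normalized to 1 at t = 0."""
    return np.exp(-(1.0 - delta) * np.asarray(t) / tau)
```

Given a final output `a_final` and the largest recorded support `largest_support` (both hypothetical variables), δ* would be `delta_for_support(Phi, np.flatnonzero(a_final))` and δmax would be `delta_for_support(Phi, largest_support)`; each is then passed to `decay_bound` over a time grid and plotted on a log scale next to the normalized error.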

Fig. 8.

Convergence behavior of the normalized squared error $\|u(t) - u^*\|_2^2$. The dashed line shows the theoretical decay in (20) with δ* computed using the final solution support. The dash-dot line shows the theoretical decay with δmax computed on the largest support visited. While both estimates display the exponential behavior predicted by the theory, the estimate of the rate based on δ* is empirically more accurate than the conservative estimate based on δmax.

VI. Conclusion

This paper presented a mathematical analysis of the convergence properties and convergence rate of the LCA, a neural network designed specifically for the challenging sparse approximation problem. Despite a nonsmooth activation function and a possibly singular interconnection matrix that prevent the application of existing analysis approaches, we have shown that the system is globally convergent to the optimal solution. In addition, under some mild assumptions on the solution, we have shown that the trajectories follow a reasonable path and reach the final active set in finite time. Finally, under slightly stronger assumptions on the problem specifics (applicable at least in CS recovery problems), we established that the LCA is exponentially convergent, with a convergence rate that depends on problem-specific parameters.

This collection of results and analysis leads us to conclude that performance guarantees can be made for the LCA system that make it a plausible candidate for implementation in engineering applications and as a model of biological information processing. Indeed, providing such guarantees makes it easier to justify the expense associated with developing analog VLSI implementations, which could eventually result in significant improvements in the speed and power consumption needed for real-time signal processing applications. Our future work will concentrate on finding reasonable estimates of the theoretical convergence speed (especially in well-studied special cases such as CS recovery) and further characterizations of the LCA system dynamics that may open up new applications of this system for time-varying input signals.

Acknowledgments

This work was supported in part by the National Science Foundation under Grant CCF-0905346 and the National Institutes of Health under Grant R01-EY019965.

Biography

Aurèle Balavoine (S'09) received the B.S. degree from Ecole Supérieure d'Electricité, Gif-sur-Yvette, France, in 2008, and the M.S. degree from the Georgia Institute of Technology, Atlanta, in 2009. She is currently pursuing the Ph.D. degree with the School of Electrical and Computer Engineering, Georgia Institute of Technology.

Her current research interests include sparsity-based signal processing, mathematical optimization, dynamical systems, and neural networks.

Justin Romberg (S'98–M'03–SM'12) received the B.S.E.E., M.S., and Ph.D. degrees from Rice University, Houston, TX, in 1997, 1999, and 2004, respectively.

He was a Researcher with Xerox PARC, Palo Alto, CA, in 2000, a Visitor with the Laboratoire Jacques-Louis Lions, Paris, in 2003, and a Fellow with UCLA's Institute for Pure and Applied Mathematics, Los Angeles, CA, in 2004. He was a Post-Doctoral Scholar with the Department of Applied and Computational Mathematics, California Institute of Technology, Pasadena, from 2003 to 2006. He joined the faculty of Electrical and Computer Engineering, Georgia Institute of Technology, Atlanta, in 2006, and is currently an Associate Professor and a member of the Center for Signal and Image Processing.

Dr. Romberg was a recipient of the ONR Young Investigator Award in 2008 and the PECASE Award and a Packard Fellowship in 2009. He was named a Rice University Outstanding Young Engineering Alumnus in 2010. From 2008 to 2011, he was an Associate Editor for the IEEE Transactions on Information Theory.

Christopher J. Rozell (S'00–M'09–SM'12) received the B.S.E. degree in computer engineering and the B.F.A. degree in performing arts technology (music technology) in 2000 from the University of Michigan, Ann Arbor, and the M.S. and Ph.D. degrees in electrical engineering from Rice University, Houston, TX, in 2002 and 2007, respectively.

He was a Texas Instruments Distinguished Graduate Fellow with Rice University, and following graduate school was a Post-Doctoral Research Fellow with the Redwood Center for Theoretical Neurosciences, University of California, Berkeley. He joined the faculty at the Georgia Institute of Technology, Atlanta, in 2008, where he is currently an Assistant Professor with the School of Electrical and Computer Engineering, and a member of the Laboratory for Neuroengineering, and the Center for Signal and Image Processing. His current research interests include constrained sensing systems, sparse representations, statistical signal processing, and computational neuroscience.

Appendix A

Proof of Theorem 1

Building on the earlier definition of a subgradient, the notion of regularity captures when a nondifferentiable function is still well behaved: a function $g: X \to \mathbb{R}$ (where X is a Banach space) is said to be regular [40, Def. 2.3.4] at x ∈ X if:

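- for all υ ∈ X, the usual one-sided directional derivative $g'(x; \upsilon) = \lim_{t \downarrow 0} \frac{g(x + t\upsilon) - g(x)}{t}$ exists;

- for all υ ∈ X, g′(x; υ) = g°(x; υ), where g°(x; υ) is the generalized directional derivative $g^\circ(x; \upsilon) = \limsup_{y \to x,\ t \downarrow 0} \frac{g(y + t\upsilon) - g(y)}{t}$.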

It is easily seen that, since the function C(·) is defined from $\mathbb{R}$ to $\mathbb{R}$ and is differentiable on $\mathbb{R}\setminus\{0\}$, and the function Tλ(·) is continuous on all of $\mathbb{R}$, C(·) admits left and right derivatives and is regular on $\mathbb{R}$. This implies that V(·) is regular on $\mathbb{R}^N$, and by [40, Prop. 2.3.3] we have that

$$\partial V(a(t)) = -\Phi^T\big(y - \Phi a(t)\big) + \lambda\,\partial C(a(t)) \qquad (21)$$

where ∂C(a(t)) = [∂C(a1(t)), . . . , ∂C(aN(t))]T. We also recall the following result [40, Th. 2.3.9 (iii)], which is a generalization of the chain rule for regular functions.

Lemma 2: Suppose that $V: \mathbb{R}^N \to \mathbb{R}$ is regular at a(t) and that $a(\cdot): [0, +\infty) \to \mathbb{R}^N$ is strictly differentiable on [0, +∞). Then V(a(t)) is regular on [0, +∞), and we have

$$\dot{V}(a(t)) = \zeta^T \dot{a}(t), \qquad \forall\, \zeta \in \partial V(a(t)). \qquad (22)$$

Note that, by this lemma, since V(a(t)) is regular, we can choose any element of ∂V(a(t)) to compute the time derivative of V(·) along the trajectories of the neural network. Armed with these tools, we proceed with the proof of Theorem 1.

Proof of Theorem 1: Beginning with part 1 of the theorem, we first show that any fixed point of system (3) is a critical point of the objective in (1). From (11), any fixed point u* of (3) satisfies the relationship u̇(t) = 0. Let Γ* be the active set at the fixed point u*. Equations (8) and (9) yield

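$$u^*_{\Gamma^*} - a^*_{\Gamma^*} = \Phi_{\Gamma^*}^T\big(y - \Phi a^*\big) \qquad (23)$$

$$u^*_{(\Gamma^*)^c} = \Phi_{(\Gamma^*)^c}^T\big(y - \Phi a^*\big) \qquad (24)$$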

According to (12), and using (21), a point a* is a critical point of V(·) if and only if

$$0 \in -\Phi^T\big(y - \Phi a^*\big) + \lambda\,\partial C(a^*) \qquad (25)$$

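For the nodes in the active set n ∈ Γ*, C(an) admits a usual gradient, as defined in (4), and thus (25) yields

$$\Phi_{\Gamma^*}^T\big(y - \Phi a^*\big) = \lambda\,\nabla C\big(a^*_{\Gamma^*}\big) = u^*_{\Gamma^*} - a^*_{\Gamma^*}.$$

This is the same as condition (23). For nodes in the inactive set $(\Gamma^*)^c$, we need to determine the subgradient of C(·) at zero. To do so, note that using (4) and the continuity of the function f(·) at λ [condition (6a)], we have

$$\lim_{a\to 0^+}\lambda\,C'(a) = \lim_{u\to\lambda^+}\big(u - f(u)\big) = \lambda, \qquad \lim_{a\to 0^-}\lambda\,C'(a) = \lim_{u\to-\lambda^-}\big(u - f(u)\big) = -\lambda.$$

As a consequence, we find that

$$\lim_{a\to 0^+} C'(a) = 1, \qquad \lim_{a\to 0^-} C'(a) = -1.$$

From the definition of the subgradient, these limits show that ∂C(0) = [–1, 1]. Using this, the condition in (25) restricted to the set of inactive nodes is equivalent to

$$\big\|\Phi_{(\Gamma^*)^c}^T\big(y - \Phi a^*\big)\big\|_\infty \le \lambda.$$

We immediately see that this condition is the same as (24), since by definition of the inactive nodes $\|u^*_{(\Gamma^*)^c}\|_\infty \le \lambda$. This shows that the fixed points a* coincide with the critical points of the objective function (1).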
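As a worked special case, assuming the soft-threshold activation and the normalization C(0) = 0, relation (4) recovers the familiar ℓ1 penalty, and the set ∂C(0) = [−1, 1] obtained above is the usual subdifferential of the absolute value at zero:

```latex
f(u) = u - \lambda\,\operatorname{sign}(u),\ |u| > \lambda
\;\Longrightarrow\;
\lambda C'(a) = u - a = \lambda\,\operatorname{sign}(a),\ a \neq 0
\;\Longrightarrow\;
C(a) = |a|,
\qquad \partial C(0) = [-1, 1].
```

In this case, (1) is the ℓ1-regularized least-squares problem that the digital solver of [8] also addresses.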

Moving on to establishing the convergence result in part 2 of the theorem, we first note that, from our analysis above, the same reasoning leads to the conclusion that

$$-\dot{u}(t) = -\Phi^T\big(y - \Phi a(t)\big) + \big(u(t) - a(t)\big) \in \partial V(a(t)) \qquad (26)$$

Using the chain rule (22) with ζ = –u̇(t) ∈ ∂V(a(t)) from (26) and using the expression in (7), we get the relationship

$$\dot{V}(a(t)) = -\dot{u}(t)^T\dot{a}(t) = -\sum_{n\in\Gamma(t)} f'\big(u_n(t)\big)\,\dot{u}_n(t)^2. \qquad (27)$$

This expression is valid for all time t ≥ 0. In addition, because the function f(·) satisfies (6b), f′(un(t)) > 0, and thus

$$\dot{V}(a(t)) \le 0, \qquad \forall\, t \ge 0.$$

This means that V(a(t)) is nonincreasing for all t ≥ 0. Since V(a(t)) is continuous, bounded below by zero, and nonincreasing, V(a(t)) converges to a constant value V*, and its time derivative V̇(a(t)) tends to zero as t → ∞. Using (27) and (6b), we conclude that $\dot{u}_n(t) \to 0$ for the active nodes n ∈ Γ(t). As a consequence, we also have $\dot{a}(t) \to 0$, so the outputs converge to the set $E = \{a \in \mathbb{R}^N : \dot{a}(t) = 0\}$, and the LCA outputs are quasi-convergent.

Moving on to part 3 of the theorem, we assume that the critical points of (1) are isolated. We need to show that both active and inactive nodes converge to a single fixed point. From part 2, we know that, since the nodes converge to E, after some time the active nodes will be within a ball of radius R around one element a* ∈ E. Moreover, since the critical points of (1) are isolated, there exists a ball of radius ε > 0 around a* that contains no critical point other than a*.

Since the system is stable, we know that once the trajectory gets close enough (within a ball of radius R) to one element of E, it cannot leave a ball of radius ε around this fixed point [see (13)]. As a consequence, the outputs remain within the ball of radius ε around a*, which contains only the fixed point a*. This proves that the active nodes converge to the point a* in E:

$$\lim_{t\to\infty} a(t) = a^*. \qquad (28)$$

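This implies that the ODE (3) can be written in terms of the distance of the outputs from the solution:

$$\dot{u}(t) = -\big(u(t) - u^*\big) - (\Phi^T\Phi - I)\big(a(t) - a^*\big), \qquad u^* = \Phi^T y - (\Phi^T\Phi - I)\,a^*.$$

We let

$$h(t) = -(\Phi^T\Phi - I)\big(a(t) - a^*\big),$$

which tends to zero by (28), and rewrite the ODE (3) as

$$\dot{u}(t) = -\big(u(t) - u^*\big) + h(t).$$

Solving this ODE for all t ≥ 0 yields

$$u(t) = u^* + e^{-t}\big(u(0) - u^*\big) + \int_0^t e^{-(t-s)}\,h(s)\,ds.$$

While it is difficult to say anything directly about the trajectory of the system, it is helpful to consider a surrogate trajectory that is a straight line in the state space, $u^* + e^{-t}(u(0) - u^*)$. This linear path obviously converges to the fixed point u*, and if we can show that the actual trajectory u(t) asymptotically approaches this idealized path, we will have established that the system converges to u*. To take this approach, we examine the quantity

$$\int_0^t e^{-(t-s)}\,h(s)\,ds = u(t) - u^* - e^{-t}\big(u(0) - u^*\big),$$

which is the deviation from the linear path. Consider the norm of this deviation

$$\left\|\int_0^t e^{-(t-s)}\,h(s)\,ds\right\|_2 \le \int_0^t e^{-(t-s)}\,\|h(s)\|_2\,ds.$$

To show convergence to zero, we split the integral into two parts. Since $\lim_{t\to\infty}\|h(t)\|_2 = 0$, for any ε′ > 0 there exists a time tc ≥ 0 such that $\|h(t)\|_2 \le \epsilon'$ for all t ≥ tc. Moreover, since $\|h(t)\|_2$ is continuous and goes to zero as t goes to infinity, it admits a maximum $\mu = \max_{t\ge 0}\|h(t)\|_2$. This yields, for all t ≥ 2tc,

$$\int_0^t e^{-(t-s)}\|h(s)\|_2\,ds \le \mu\int_0^{t/2} e^{-(t-s)}\,ds + \epsilon'\int_{t/2}^{t} e^{-(t-s)}\,ds \le \mu\,e^{-t/2} + \epsilon'.$$

Since the first term converges to 0 and ε′ can be chosen arbitrarily small, this shows that the trajectory u(t) converges to the trajectory $u^* + e^{-t}(u(0) - u^*)$ as t goes to infinity, and thus we can conclude that $\lim_{t\to\infty} u(t) = u^*$.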

Because we have shown separately that both the active and inactive nodes converge for any initial state, this concludes our proof that the system is globally convergent.

Appendix B

Proof of Lemma 1

Proof: Each of the four properties will be treated separately.

- We can separate this proof into four cases. If and , we have . If and , according to the mean value theorem, since the function f(·) is continuous on , differentiable on and , there exist such that

If and , according to the mean value theorem, there exists such that

Finally, if and , according to the mean value theorem, there exists such that . - Using properties 1) and 2), we have

- Since the soft-threshold function is nondecreasing, the function gn is also nondecreasing. Moreover, gn(0) = 0 and . As a consequence, we can bound the integral by

As a consequence

Footnotes

Preliminary versions of portions of this paper were presented in [1].

We reintroduce the time constant τ in this discussion of the LCA, since it appears in the expression for the convergence speed.

REFERENCES

1. Balavoine A, Rozell CJ, Romberg J. Global convergence of the locally competitive algorithm. Proc. IEEE Digital Signal Process. Workshop. 2011 Jan:431–436.

2. Elad M, Figueiredo MAT, Ma Y. On the role of sparse and redundant representations in image processing. Proc. IEEE. 2010 Jun;98(6):972–982.

3. Olshausen BA, Field DJ. Sparse coding of sensory inputs. Current Opinion Neurobiol. 2004 Aug;14(4):481–487. doi: 10.1016/j.conb.2004.07.007.

4. Olshausen BA, Field DJ. Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature. 1996 Jun;381(6583):607–609. doi: 10.1038/381607a0.

5. Olshausen BA, Field DJ. Sparse coding with an overcomplete basis set: A strategy employed by V1? Vis. Res. 1997 Dec;37(23):3311–3325. doi: 10.1016/s0042-6989(97)00169-7.

6. Candes EJ, Romberg J, Tao T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory. 2006 Feb;52(2):489–509.

7. Donoho DL. Compressed sensing. IEEE Trans. Inf. Theory. 2006 Apr;52(4):1289–1306.

8. Kim S-J, Koh K, Lustig M, Boyd S, Gorinevsky D. An interior-point method for large-scale L1-regularized least squares. IEEE J. Sel. Topics Signal Process. 2007 Dec;1(4):606–617.

9. Figueiredo MAT, Nowak RD, Wright SJ. Gradient projection for sparse reconstruction: Application to compressed sensing and other inverse problems. IEEE J. Sel. Topics Signal Process. 2007 Dec;1(4):586–598.

10. Daubechies I, Defrise M, De Mol C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 2004 Aug;57(11):1413–1457.

11. Cichocki A, Unbehauen R. Neural Networks for Optimization and Signal Processing. Wiley; New York: 1993.

12. Hopfield JJ. Neural networks and physical systems with emergent collective computational abilities. Proc. Nat. Acad. Sci. 1982 Apr;79(8):2554–2558. doi: 10.1073/pnas.79.8.2554.

13. Twigg C, Hasler P. Configurable analog signal processing. Digital Signal Process. 2009 Dec;19(6):904–922.

14. Rozell CJ, Johnson DH, Baraniuk RG, Olshausen BA. Sparse coding via thresholding and local competition in neural circuits. Neural Comput. 2008 Oct;20(10):2526–2563. doi: 10.1162/neco.2008.03-07-486.

15. Hespanha JP. Uniform stability of switched linear systems: Extensions of LaSalle's invariance principle. IEEE Trans. Autom. Control. 2004 Apr;49(4):470–482.

16. Liu S, Wang J. A simplified dual neural network for quadratic programming with its KWTA application. IEEE Trans. Neural Netw. 2006 Nov;17(6):1500–1510. doi: 10.1109/TNN.2006.881046.

17. Yang H, Dillon TS. Exponential stability and oscillation of Hopfield graded response neural network. IEEE Trans. Neural Netw. 1994 Sep;5(5):719–729. doi: 10.1109/72.317724.

18. Liang X-B, Wang J. A recurrent neural network for nonlinear optimization with a continuously differentiable objective function and bound constraints. IEEE Trans. Neural Netw. 2000 Nov;11(6):1251–1262. doi: 10.1109/72.883412.

19. Forti M, Tesi A. Absolute stability of analytic neural networks: An approach based on finite trajectory length. IEEE Trans. Circuits Syst. I, Reg. Papers. 2004 Dec;51(12):2460–2469.

20. Lu W, Wang J. Convergence analysis of a class of nonsmooth gradient systems. IEEE Trans. Circuits Syst. I, Reg. Papers. 2008 Dec;55(11):3514–3527.

21. Xia Y, Feng G, Wang J. A recurrent neural network with exponential convergence for solving convex quadratic program and related linear piecewise equations. Neural Netw. 2004 Sep;17(7):1003–1015. doi: 10.1016/j.neunet.2004.05.006.

22. Ferreira LV, Kaszkurewicz E, Bhaya A. Support vector classifiers via gradient systems with discontinuous righthand sides. Neural Netw. 2006 Dec;19(10):1612–1623. doi: 10.1016/j.neunet.2006.07.004.

23. Forti M, Tesi A. New conditions for global stability of neural networks with application to linear and quadratic programming problems. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 1995 Jul;42(7):354–366.

24. Li L, Huang L. Dynamical behaviors of a class of recurrent neural networks with discontinuous neuron activations. Appl. Math. Model. 2009 Dec;33(12):4326–4336.

25. Forti M, Nistri P, Quincampoix M. Generalized neural network for nonsmooth nonlinear programming problems. IEEE Trans. Circuits Syst. I, Reg. Papers. 2004 Sep;51(9):1741–1754.

26. Forti M, Nistri P, Quincampoix M. Convergence of neural networks for programming problems via a nonsmooth Łojasiewicz inequality. IEEE Trans. Neural Netw. 2006 Nov;17(6):1471–1486. doi: 10.1109/TNN.2006.879775.

27. Xue X, Bian W. Subgradient-based neural networks for nonsmooth convex optimization problems. IEEE Trans. Circuits Syst. I, Reg. Papers. 2008 Sep;55(8):2378–2391.

28. Liu QS, Wang J. Finite-time convergent recurrent neural network with a hard-limiting activation function for constrained optimization with piecewise-linear objective functions. IEEE Trans. Neural Netw. 2011 Apr;22(4):601–613. doi: 10.1109/TNN.2011.2104979.

29. Lin H, Antsaklis PJ. Stability and stabilizability of switched linear systems: A survey of recent results. IEEE Trans. Autom. Control. 2009 Feb;54(2):308–322.

30. Maass W. On the computational power of winner-take-all. Neural Comput. 2000;12(11):2519–2535. doi: 10.1162/089976600300014827.

31. Natarajan BK. Sparse approximate solutions to linear systems. SIAM J. Comput. 1995;24(2):227–234.

32. Chen SS, Donoho DL, Saunders MA. Atomic decomposition by basis pursuit. SIAM Rev. 2001 Mar;43(1):129–159.

33. Donoho DL, Tanner J. Sparse nonnegative solutions of under-determined linear equations by linear programming. Proc. Nat. Acad. Sci. United States Amer. 2005;102(27):9446–9451. doi: 10.1073/pnas.0502269102.

34. Knill DC, Pouget A. The Bayesian brain: The role of uncertainty in neural coding and computation. Trends Neurosci. 2004;27(12):712–719. doi: 10.1016/j.tins.2004.10.007.

35. Rehn M, Sommer FT. A network that uses few active neurones to code visual input predicts the diverse shapes of cortical receptive fields. J. Comput. Neurosci. 2007 Apr;22(2):135–146. doi: 10.1007/s10827-006-0003-9.

36. Smith EC, Lewicki MS. Efficient auditory coding. Nature. 2006 Feb;439(7079):978–982. doi: 10.1038/nature04485.

37. Fischer S, Redondo R, Perrinet L, Cristóbal G. Sparse approximation of images inspired from the functional architecture of the primary visual areas. EURASIP J. Adv. Signal Process. 2007;2007(1):1–17.

38. Perrinet L. Feature detection using spikes: The greedy approach. J. Physiol. (Paris). 2004 Jul;98(4–6):530–539. doi: 10.1016/j.jphysparis.2005.09.012.

39. Bioucas-Dias JM, Figueiredo MAT. A new TwIST: Two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans. Image Process. 2007 Dec;16(12):2992–3004. doi: 10.1109/tip.2007.909319.

40. Clarke FH. Optimization and Nonsmooth Analysis. SIAM; Philadelphia, PA: Jan. 1987.

41. DeCarlo RA, Branicky MS, Pettersson S, Lennartson B. Perspectives and results on the stability and stabilizability of hybrid systems. Proc. IEEE. 2000 Jul;88(7):1069–1082.

42. Bacciotti A, Rosier L. Liapunov Functions and Stability in Control Theory. Springer-Verlag; New York: 2005.

43. Fuchs J-J. On sparse representations in arbitrary redundant bases. IEEE Trans. Inf. Theory. 2004 Jun;50(6):1341–1344.

44. Bolte J, Daniilidis A, Lewis A. The Łojasiewicz inequality for nonsmooth subanalytic functions with applications to subgradient dynamical systems. SIAM J. Optim. 2007;17(4):1205–1223.

45. Candes EJ, Romberg JK, Tao T. Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 2006 Aug;59(8):1207–1223.