Abstract

This randomized control trial examined the learning of preservice teachers taking an initial Early Literacy course in an early childhood education program and of the kindergarten or first grade students they tutored in their field experience. Preservice teachers were randomly assigned to one of two tutoring programs: Book Buddies and Tutor Assisted Intensive Learning Strategies (TAILS), which provided identical meaning-focused instruction (shared book reading), but differed in the presentation of code-focused skills. TAILS used explicit, scripted lessons, and Book Buddies required that code-focused instruction take place during shared book reading. Our research goal was to understand which tutoring program would be most effective in improving knowledge about reading, lead to broad and deep application of knowledge and preparedness of the novice preservice teachers, and yield the most successful student reading outcomes. Findings indicate that all preservice teachers demonstrated similar gains in knowledge, but preservice teachers in the TAILS program demonstrated broader and deeper application of knowledge and higher self-ratings of preparedness to teach reading. Students in both conditions made similar comprehension gains, but students tutored with TAILS showed significantly stronger decoding gains.

Keywords: Teacher training, Preservice teachers, Randomized control trial, Early literacy instruction, Early childhood, Response to intervention, RTI

There is widespread concern about the substantial number of children who are unable to read on grade level. Early childhood and elementary teachers are seen as the frontline of defense in efforts to prevent future reading difficulties under the reading initiatives of The No Child Left Behind Act of 2001 (NCLB). Furthermore, under the Individuals with Disabilities Education Act (IDEA) (2004), a significant portion of special education funds may be used for general education initiatives to strengthen early reading instruction and to provide early intervening services. Indeed, many states are taking the option offered by IDEA 2004 to use a Response to Instruction (RTI) approach, which requires that all students at risk for reading difficulties receive evidence-based beginning reading instruction, and if they do not respond adequately, that they also receive small-group, individualized interventions before determining whether a child has a reading disability. Although there is a well-established body of research about the type of instruction it takes to prevent reading difficulties (Adams, 1990; National Reading Panel, 2000; Snow, Burns & Griffin, 1998; Snow, Griffin, & Burns 2005), converging evidence suggests a gap—some may call it a divide—between the research and teachers’ knowledge and beginning reading instructional practices (Bos, Mather, Dickson, Podhajski, & Chard, 2001; Brady & Moats, 1997; Fitzgerald, 2001; Hoffman & Roller, 2001; Mather, Bos, & Babur, 2001; Moats, 2009; Moats & Foorman, 2003; Moats & Lyon, 1996; Podhajski, Mather, Nathan, & Sammons, 2009; Spear-Swerling, 2009). Thus, the important question remains: how can future teachers be prepared to provide adequate Tier 1 instruction to prevent reading difficulties and implement small-group individualized interventions?

Answering this question is arguably one of the most pressing needs for the field of education. When surveyed, members of the Reading Hall of Fame faulted inadequate preservice education as the “most persistent problem” teachers face, specifically noting a lack of realistic field experiences (Bauman, Ro, Duffy-Hester, & Hoffman, 2000). Researchers agree that quality field experiences play a critical role in learning to teach reading (Hoffman et al., 2005; Olson & Gillis, 1983). Recent reviews of the literature concerning preparation for the teaching of reading generally support the efficacy of field experiences in helping preservice teachers (PSTs) connect theory and practice (Anders, Hoffman, & Duffy, 2000; National Reading Panel (NRP), 2000; Pang & Kamil, 2003).

However, the American Educational Research Association Panel on Research and Teacher Education (Cochran-Smith & Zeichner, 2005) cautioned that there is very little empirical evidence pertaining to effective methods of teacher preparation, including field experiences. Currently, few claims can be made as to what are effective field experiences because the extant literature inadequately describes the components of these experiences (Anders et al., 2000). Accordingly, research is needed that measures learning gains of both preservice teachers and students as a result of contrasting field experiences. The National Commission and Sites of Excellence in Reading Teacher Education has begun a research program to track graduates of high-quality teacher preparation programs. One of the criteria for selection of high-quality programs is a “strong emphasis on reading instruction and in-depth field experiences” (Maloch, Fline, & Flint, 2003, p. 348). Findings from the initial interviews suggest graduates described themselves as: (a) responsive to their students’ needs, (b) well-prepared and confident about their teaching, (c) knowledgeable about the reading process and assessments, (d) reflective about the need to adapt instruction for individual student needs, and (e) connected to communities of learners (Maloch et al., 2003).

The present study represents a small-scale first step in addressing the paucity of empirical research by examining the causal link between an initial field experience and preservice teacher (hereafter PST) pedagogical knowledge about the structure of language, application of such knowledge, self-rated preparedness to teach reading, and children’s reading growth. To our knowledge, this examination is one of the first to randomly assign PSTs to differing field experiences within an initial Early Literacy Instruction methods course.

Perspectives or theoretical framework of the early literacy course and fieldwork

We emphasize that this initial Early Literacy course and field experience is only a first step in professional development within our program and, in a broader sense, within a career toward developing knowledge-of-practice (Cochran-Smith & Lytle, 1999). In this perspective, PSTs learn, apply, test, dialog, and reflect about evidence-based practices through closely-linked coursework and fieldwork. Our study also draws on situational learning theory, which emphasizes authentic environments that help students to apply theory and knowledge in everyday teaching practice (Brown, Collins, & Duguid, 1989, p. 37).

Pedagogical knowledge about effective instruction

There is broad consensus that, in order to become good readers, children require effective instruction (Pressley et al., 2001; National Reading Panel, 2000; Snow, 2002; Snow, Burns, & Griffin, 1998; Wharton-McDonald, Pressley, & Hampston, 1998). Furthermore, researchers also generally agree that prevention is more effective than remediation, which has led to widespread interest not only in early reading instruction, but also in improving teacher preparation to create a cadre of professional, accomplished teachers of reading. Thus, this initial literacy course focused on the pedagogical knowledge of what children need to be taught in order to be good readers. Three federally-sponsored reviews of the literature, Preventing Reading Difficulties (Snow, Burns, & Griffin, 1998), Reading for Understanding (Snow, 2002), and the NRP report (2000), have documented the effectiveness of explicit and systematic literacy instruction in five critical components: phonological awareness, phonics, fluency, vocabulary, and comprehension.

However, a fairly recent study titled What education schools aren’t teaching about reading and what elementary teachers aren’t learning, conducted by the National Council on Teacher Quality (Walsh, Glaser, & Dunne-Wilcox, 2006) examined 223 randomly selected syllabi from required courses taught at 72 schools of education. The authors expressed alarm that only about 15% of the schools provided future elementary teachers coursework that was aligned with the science of reading, and that only four of the 227 texts used were consistent with the research base. Joshi et al. (2009) also reviewed several textbooks that are widely used in reading education courses and reported that many did not cover all the components and that relatively less information was provided on applying code-focused instructional principles.

It is noteworthy that the syllabus for the literacy course in which PSTs in this study were enrolled was cited by Walsh et al. (2006) as having an appropriate text and as being well-aligned with the science of reading. The literacy course also taught preservice teachers that the ability to read generally develops in a predictable progression for most individuals (Chall, 1983; Ehri, 1998, 2002; Spear-Swerling & Sternberg, 1996). In the pre-reading stage, teachers should help students develop the fundamental language skills that are necessary for learning to read, such as phonological awareness, and help them acquire beginning levels of print awareness (Whitehurst & Lonigan, 1998). For students in the learning to read stage, teachers should focus on building students’ skills to read words, and in the reading to learn stage, teachers should expand their students’ reading vocabulary and comprehension skills, so that students begin using their ability to read as a learning tool. Teachers need to develop language to build vocabulary and general knowledge throughout each phase. Finally, the literacy course emphasized the importance of teacher knowledge about the structure of language (Moats, 1994, 2009). Teachers must master knowledge of phonology, orthography, and morphology in order to teach children who are struggling to learn phonological awareness, phonics, and how to crack the code through carefully-sequenced activities that follow thoughtfully-designed objectives (Foorman & Moats, 2004). Such knowledge is critical for understanding student errors and scaffolding development (Brady & Moats, 1997). Converging findings from several studies suggest that teachers’ knowledge of the structure of language positively impacts students’ reading development (McCutchen et al., 2002; Moats & Foorman, 2003; Podhajski, Mather, Nathan, & Sammons, 2009).

Application of pedagogical knowledge

A handful of researchers have shown that special education PSTs have improved their knowledge about language structure after participating in course and fieldwork (Al Otaiba & Lake, 2007; Mayhew & Welch, 2001; Spear-Swerling, 2009; Spear-Swerling & Brucker, 2004). Two of the three studies found a relationship between PSTs’ knowledge and student progress; one did not (Spear-Swerling, 2009). In the present study, we emphasized this relationship in the Early Literacy course using Gough and Tunmer’s (1986) Simple View of reading to provide preservice teachers with a useful and practical theoretical framework for understanding the importance of pedagogical knowledge in improving student progress. Namely, the five components of reading, which PSTs called the Fab Five (NRP, 2000), may be categorized into two broad types of skill and knowledge that are required for proficient reading: (1) accurate and fluent word identification, or code-focused skills, and (2) comprehension of oral and written language, or meaning-focused skills. In particular, these novice preservice teachers needed to know that, if their students could not accurately identify or decode most of the words in a passage of text, it would be very difficult to comprehend the meaning of the passage. Likewise, if students could read text accurately, but did not know the meaning of many of the words or could not comprehend the concepts expressed, then reading comprehension would suffer. To be consistent, this simple view was also used to shape how preservice teachers applied their knowledge in their field experience, because teachers “need guidance about how to combine and prioritize various instructional approaches in the classroom and in particular about how to teach comprehension while attending to the often poor word-reading skills their students bring” (Sweet & Snow, 2002, pp. 47–48).

Contrasting tutorials used in the field experience

There is some controversy regarding the degree to which instructional materials (such as teacher editions of core reading programs) can contribute supportive guidance about effective instruction through scripted lessons (Al Otaiba, Kosanovich-Grek, Torgesen, Hassler, & Wahl, 2005) or whether scripted lessons hamper teachers’ ability to combine code-focused and meaning-focused instruction in authentic text and to individualize instruction (Allington & Nowak, 2004). The effectiveness of these two viewpoints was directly tested within our study.

TAILS

The first intervention—Tutor-Assisted Intensive Learning Strategies or TAILS (Al Otaiba, 2003)—provides scripted and structured instructional routines that potentially could support PSTs’ transfer of knowledge learned in coursework to instruction in all five components of reading (i.e., research-to-practice). TAILS is an evidence-based tutorial program designed for use by volunteers, paraeducators, or tutors in kindergarten through second grade settings. In the initial efficacy trial (Al Otaiba, Schatschneider, & Silverman, 2005), participating children were 73 kindergartners selected for their very low initial letter naming scores on the Dynamic Indicators of Basic Early Literacy Skills (DIBELS) (Good & Kaminski, 2002). Students attended four high-poverty Title I schools, and they were randomly assigned to condition within classrooms. Students tutored via TAILS 4 days per week demonstrated significantly more growth on the Basic Skills Cluster of the Woodcock Reading Mastery Test Revised (Woodcock & Johnson, 1997) than students in two comparison conditions (these were a two-days a week TAILS condition and a small group book reading condition). Large and educationally-important effect sizes (calculated as Cohen’s d) favored TAILS over comparison conditions on word identification (.79), passage comprehension (.90), and basic reading skills (.83).

TAILS, which is based on principles of direct instruction (e.g., Carnine, Silbert, & Kame’enui, 1998), has a clear scope and sequence, follows a model-lead-test format, and includes cumulative review and practice. Each TAILS session lasts approximately 30 min and includes activities designed to address the five important components of instruction supported by scientifically-based reading research: phonemic awareness, phonics, fluency, vocabulary, and comprehension (National Reading Panel, 2000; Snow et al., 1998).

First, tutors conduct code-focused instruction, beginning with a 5-min word building (Elkonin box) activity designed to teach phonological awareness and to link sound awareness to spelling (Adams, Foorman, Lundberg, & Beeler, 1997; Blachman, Ball, Black, & Tangel, 1998). Second, tutors conduct a 10-min code-focused activity that is borrowed from Peer-Assisted Learning Strategies (Fuchs, Fuchs, Mathes, & Simmons, 1997; Fuchs et al., 2001, 2002; Mathes, Fuchs, Fuchs, Henley, & Sanders, 1994; Mathes, Howard, Allen, & Fuchs, 1998). For kindergartners, the focus is partially on phonological awareness; activities include identifying pictures of things that share an initial sound (e.g., socks and sun) or that rhyme, and blending and segmenting. Both kindergarten and first grade students are taught to pronounce letter sounds, to decode phonetically-regular words, to read high-frequency sight words, and to read stories composed of now-familiar sight words and decodable words. Next, tutors use a brief speed game to practice fluency. The remaining 15 min of TAILS is meaning-focused (i.e., addressing vocabulary and comprehension), and tutors use a particular type of shared book reading that we call Dialogic/Character Reading, which was adapted (Al Otaiba, 2004; Lake, 2001) from research-validated dialogic reading practices (Lonigan, Anthony, Bloomfield, Dyer, & Samwel, 1999; Lonigan & Whitehurst, 1998; Valdez-Menchaca & Whitehurst, 1992). Prior to book reading, tutors select key vocabulary words and then give child-friendly definitions to students (Beck, McKeown, & Kucan, 2002). The tutors select books that were coded by Lake for specific themes related to character development or the moral in a story (e.g., for Goldilocks and the Three Bears, a theme might be “respect of others’ property”). Each book jacket included a list of comprehension questions and prompts ranging from literal completion questions (e.g., Who is in the story?) to more inferential and decontextualized character questions (e.g., What would you do if someone used your things without asking? or How do you respect other people’s things at home?).

Book buddies

The second tutorial condition, which we termed Book Buddies, also lasted 30 min. PSTs were provided the identical meaning-focused Dialogic/Character books and scripts from TAILS described above, but none of the code-focused instructional activities. Thus, PSTs in Book Buddies were instructed to provide code-focused instruction (phonological awareness, phonics, and fluency) in the context of the book reading. The rationale for not providing PSTs in the Book Buddies condition with stand-alone, scripted, code-focused instructional activities is based on research in effective first grade classrooms, which suggests reading teachers should teach beginning literacy skills in reaction to specific problems students encounter in text and emphasizes the need for teachers to be flexible enough to coordinate code-focused and meaning-focused strategies (Pressley et al., 2001; Wharton-McDonald et al., 1998). These authors argue that curriculum does not teach; teachers do.

Thus, the primary research aim of this small-scale, randomized control trial was to examine the relative effects of participating in one of two tutoring programs on PSTs’ knowledge, application of knowledge, and perceptions of preparedness to teach reading. The secondary aim was to examine the relative effects of the two tutoring programs on children’s code-focused and meaning-focused skills. We hypothesized that, because the course was well constructed, PSTs in both conditions would have similar knowledge, but that PSTs in the TAILS condition would be better able to apply that knowledge, particularly with regard to the code-focused instruction that was scaffolded by materials. Similarly, we expected both groups of children to demonstrate similar growth on meaning-focused skills, but expected children in the TAILS condition to exhibit greater growth on code-focused skills.

Methods

Research design and participants

In this randomized control study, preservice teachers taking their initial literacy instructional methods course were randomly assigned to one of two contrasting field experiences (tutorial conditions). Random assignment resulted in equivalence of the groups at the beginning of the study, and their membership in a single class within a single cohort ensured they received the same instructional history between the pre- and post-tests. In other words, preservice teachers shared the same instructor, instruction, text, and assignments, and differed only by tutorial condition.

Participants were a single cohort of 28 undergraduate students enrolled in their second semester of an Early Childhood Education Program (certification to teach age 3 to grade 3) at a large research university in the southeastern United States. During this semester, these students took courses in Early Literacy Instruction, Early Childhood Curriculum and Methods, and Early Childhood Observation and Participation (this latter course was a two-day-a-week practicum in one of the local elementary schools in grades kindergarten, first, or multi-age kindergarten/first grade). Participation in this study was voluntary and all 28 students consented to participate in the study. As is typical, 27 of these 28 students were female students. Two were Hispanic-American, four were African-American, and 21 were Caucasian.

Preservice teachers (PSTs) were assigned practicum sites and subsequently met with their assigned classroom teachers (i.e., the kindergarten or first grade classroom teacher supervising the practicum) to ask which student the teacher would identify as a struggling reader and nominate for tutoring. Once the mentor classroom teacher and PST agreed on a child, a permission form was sent to the child’s parent or guardian. Once the form was signed and returned, the tutoring began. Thus, there were 28 total student tutees (15 kindergarteners and 11 first graders), each selected from a unique classroom within the seven elementary schools where the PSTs were placed. Across these schools, the percentage of students receiving free and reduced-price lunch ranged from 8 to 76%.

Measures

PST measures

Given our interest in examining whether the treatment conditions would impact PSTs’ knowledge about teaching reading, we carefully reviewed the teacher preparation and reading literature to identify assessments used in prior work. We also developed a lesson log that would allow us to examine whether PSTs were accurately connecting lesson objectives with instructional activities and strategies when applying their knowledge.

Knowledge about teaching reading

To evaluate PSTs’ pre- to post-treatment growth in knowledge about the structure of language, we used the Teacher Knowledge Assessment: Structure of Language (Mather et al., 2001). This is a 22-item multiple-choice measure designed to assess knowledge of language structure at the level of the word and the individual phoneme (e.g., How many speech sounds are there in the word grass? [a] two, [b] three, [c] four, [d] five). In addition, questions also address methods of reading instruction. Test–retest reliability in the Mather et al. study was .83.

Self-reported preparedness to teach reading survey

To evaluate how well prepared participants feel to teach and assess reading, we used a 13-item Preparedness to Teach Reading Survey adapted from our prior work and described in Al Otaiba and Lake (2007). This is a low-inference self-assessment. Following the prompt “Please indicate your degree of agreement with each of the following items,” participants respond to each statement on a scale of one to five (1 = strongly disagree to 5 = strongly agree). Some items were related to assessment and assessment to inform instruction (e.g., I feel well prepared to: analyze students’ error patterns in reading; adapt instruction of struggling readers to meet individual needs; assess students to identify strengths and weaknesses in literacy development). Other items specifically related to teaching the five components of reading (e.g., I feel well prepared to: help children develop phonological awareness; teach comprehension strategies; teach the relationship between letters and sounds). Total raw scores and percentiles are reported. In prior work, we have found this questionnaire to be sensitive to growth in knowledge and to be correlated to observations of teaching behaviors (Al Otaiba & Lake, 2007; Al Otaiba, Torgesen, & Lane, 2003).

Bi-weekly lesson logs

To evaluate the depth and breadth of application of knowledge, we asked PSTs to submit lesson logs (with an abbreviated form shown in Appendix A), as part of the requirements of the Early Literacy coursework. Specifically, they were asked to report the dates and times of tutoring sessions for the previous 2 weeks, describe the objectives and activities for each of the five components of reading, and tell which character education themed book had been read. An in-depth description of the moral/character thematic aspect of the study is provided in Lake and Al Otaiba (2009).

Child measures

To better understand whether students tutored by PSTs using TAILS or Book Buddies would achieve greater code- or meaning-focused growth, researchers individually administered two types of assessments at pre- and post-treatment.

Dynamic indicators of basic early literacy skills (DIBELS, Kaminski & Good, 1996)

First, to assess aspects of code-focused fluency growth, we selected two criterion-referenced subtests that assessed the important beginning reading skills of phonological awareness and nonsense word decoding fluency. These tasks were selected because they are reliable and valid indicators of foundational reading skills that relate to national and our state-level benchmarks, which indicate grade-level performance for both kindergarten and first grade (Good, Simmons, & Kame’enui, 2001). The Phoneme Segmentation Fluency (PSF) task requires the child to segment the phonemes in an orally-presented word containing three or four phonemes. Scoring also allows for partial credit. For example, a response to sat that reflected the onset and rime rather than three phonemes would earn a score of 2. Alternate-form reliability is .90 and the variable of interest is the number of correct segments produced in 1 min. The Nonsense Word Fluency (NWF) task requires the child to read vowel-consonant and consonant-vowel-consonant, single-syllable pseudo-words, all of which have the short vowel sound. After a practice trial, the examiner instructs the child to read the make-believe words as quickly and accurately as possible. If the child does not respond within 3 s, the examiner prompts by asking the child to read the next word. The stimuli are presented in 12 rows of five words each. Scoring guidelines give credit for correctly producing individual phonemes or for producing the pseudoword as a blended unit. Thus, if the nonsense word is “vab,” 3 points are awarded if the child says /v/ /a/ /b/ or /vab/. Alternate-form reliability is .83.
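The NWF scoring rule described above can be illustrated with a minimal sketch. The function name, the representation of a response as a list of phonemes, and the position-by-position comparison are assumptions made for illustration only; this is not the official DIBELS scoring procedure.

```python
def score_nwf_item(target_phonemes, response_phonemes):
    """Count target phonemes that the child produced correctly.

    Assumption: a blended response (/vab/) and a sound-by-sound response
    (/v/ /a/ /b/) are both transcribed as the same phoneme list, so they
    earn the same credit. Phonemes are compared position by position.
    """
    return sum(
        1 for target, response in zip(target_phonemes, response_phonemes)
        if target == response
    )


# Hypothetical item: the pseudoword "vab"
vab = ["v", "a", "b"]
full_credit = score_nwf_item(vab, ["v", "a", "b"])     # all three phonemes correct
partial_credit = score_nwf_item(vab, ["v", "a", "p"])  # final phoneme incorrect
```

Under these assumptions, a fully correct reading of "vab" earns 3 points whether it is blended or segmented, consistent with the example in the text.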

The early reading diagnostic assessment second edition (ERDA-2; The Psychological Corporation, 2003)

The second measure, the ERDA, assesses reading skill development in students from kindergarten to third grade. We administered the following subtests: phonological awareness, phonics, fluency, vocabulary, and story retell/listening comprehension. These subtests are suitable for both kindergarten and first grade. For example, for story retell/listening comprehension, the examiner reads a story aloud to the student, and the student must answer comprehension questions about the story. Rubrics are provided for evaluating the quality of the student’s response to each question. Raw scores can be converted to percentile ranges, but not to standard scores. The publisher-reported reliability for the subtests is high (.95).

Procedures

Preservice teacher (PST) training: TAILS and book buddies

Researchers separately trained both groups to administer their respective tutoring program during two three-hour sessions. The field experience component of class involved tutoring once a week for 8 weeks. Both tutoring programs were conducted for 30 minutes and included identical meaning-focused instruction. Tutors were given a range of books including folk tales, multi-cultural fiction, and informational texts, as well as alphabet and rhyming books.

Coding bi-weekly lesson logs

Sets of bi-weekly lesson logs for all 14 PSTs in the TAILS group were used as data in this study. However, only sets of bi-weekly lesson logs for 11 of the 14 PSTs in the Book Buddies group were used. Two sets of lesson logs were handwritten and unreadable, and one set of lesson logs was lost during the copying process. A total of 187 lesson logs were included in this study; 82 were from the Book Buddies group and 105 were from the TAILS group.

During an initial meeting between the first and third authors, a three-step coding system was developed to provide a low-inference score of depth-of-knowledge applied within the lesson logs. First, when coding lesson logs, we identified whether or not there was an objective for each of the five components of reading (phonological awareness, phonics, fluency, vocabulary, and comprehension). Next, we evaluated whether the stated objectives matched the listed activities. A mismatch was defined as having an objective that did not match the activity. For example, a mismatch was counted when a PST listed a phonics objective but described a vocabulary activity. Last, we evaluated the depth of application for each objective. Depth of application was scored from 0 to 3, where 0 indicated no objective was included, 1 indicated there was an objective, 2 indicated there was an objective and matching activity, and 3 indicated there was an objective, activity, and appropriate strategy. Appendix B shows exemplars of codes 1–3 across the components.
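The 0 to 3 depth-of-application rubric can be expressed as a short decision rule. The sketch below is illustrative only: the function name and boolean inputs are hypothetical, and the handling of a log that lists a strategy without a matching activity (scored here as 1) is an assumption, since the rubric as described does not address that case.

```python
def depth_of_application(has_objective, has_matching_activity, has_strategy):
    """Return the 0-3 depth score for one reading component in one lesson log."""
    if not has_objective:
        return 0  # no objective included
    if not has_matching_activity:
        return 1  # objective only (assumed even if a strategy is listed)
    if not has_strategy:
        return 2  # objective and matching activity
    return 3      # objective, matching activity, and appropriate strategy


# Hypothetical coding of a single lesson log across the five components:
# (has_objective, has_matching_activity, has_strategy)
example_log = {
    "phonological awareness": (True, True, True),
    "phonics": (True, True, False),
    "fluency": (True, False, False),
    "vocabulary": (False, False, False),
    "comprehension": (True, True, True),
}
scores = {component: depth_of_application(*flags)
          for component, flags in example_log.items()}
```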

The third author was the primary coder of the lesson logs; she trained the fourth author to code a subset of the lesson logs to establish reliability. They independently coded two lesson logs, one from each group, and resolved any initial differences through discussion. Once the coding procedures were established, the fourth author coded one lesson log for each PST for reliability purposes (this was 13% of all submitted lesson logs). We calculated both percent agreement and Cohen’s kappa as indicators of inter-rater reliability, or agreement between coders, for inclusion of objectives, mismatches of objectives, and depth of application of knowledge. Percent agreement ranged from .77 to 1 with an average of .96 (SD = .07). While Cohen’s kappa is more robust than percent agreement because it takes into account agreement occurring by chance, it cannot be used if each rater does not use each category, thus creating an unbalanced or non-square agreement table. Cohen’s kappa ranged from .6 to 1 with an average of .90 (SD = .15).
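For readers unfamiliar with the two agreement indices, the following is a minimal sketch of how percent agreement and Cohen's kappa can be computed from two coders' ratings. The function names and the example ratings are hypothetical and are not the study's data. As a further assumption, a category used by only one coder is treated as having a marginal frequency of zero for the other coder, which sidesteps the non-square table issue noted above but may differ from the behavior of the statistical software used in the study.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Proportion of items on which the two raters gave the same code."""
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    # Chance agreement: product of the two raters' marginal proportions,
    # summed over categories (a missing category contributes zero).
    p_expected = sum((counts_a[c] / n) * (counts_b[c] / n) for c in categories)
    if p_expected == 1.0:
        return float("nan")  # undefined when both raters use a single category
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical depth-of-application codes (0-3) from two coders
coder_1 = [3, 2, 2, 1, 0, 3, 2, 2]
coder_2 = [3, 2, 1, 1, 0, 3, 2, 3]
agreement = percent_agreement(coder_1, coder_2)
kappa = cohens_kappa(coder_1, coder_2)
```

Because kappa subtracts the agreement expected by chance, it is lower than percent agreement for the same ratings whenever the coders disagree on at least one item.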

Results

We used a mixed-methods approach to address our primary research aim, which was to examine the relative effects of one of two tutoring programs on PST knowledge, perceptions of preparedness to teach reading, and application of knowledge within lesson logs. Specifically we used ANOVAs to compare the pre- and post-treatment growth in knowledge and preparedness. To examine and compare the depth of knowledge application, we analyzed lesson logs using ANOVAs, and we also examined differences using qualitative comparison methods (LeCompte & Preissle, 1993; Miles & Huberman, 1994). To address our secondary aim, we used ANOVAs to examine the relative effects of the two tutoring programs on children’s code- and meaning-focused skills.

PST knowledge, preparedness, and application of knowledge

Knowledge and preparedness

As seen in Table 1, at pretest, these PSTs’ Knowledge of the Structure of Language and Preparedness scores were very similar across both conditions. A two-factor repeated measures ANOVA with time (pre and post) and treatment (TAILS vs. Book Buddies) revealed that all PSTs, regardless of condition, demonstrated significant gains in Knowledge. However, a second two-factor repeated measures ANOVA with time (pre and post) and treatment (TAILS vs. Book Buddies) revealed a significant interaction, with a large effect size (Cohen’s d), indicating that TAILS PSTs scored significantly higher on Preparedness.

Table 1.

Preservice teachers’ knowledge and preparedness results

| Measure | Time | Book Buddies (n = 14) M | Book Buddies (n = 14) SD | TAILS (n = 14) M | TAILS (n = 14) SD | F (df) | p | Cohen’s d |
|---|---|---|---|---|---|---|---|---|
| Knowledge of structure of language test (a) | Pre | 12.2 | 2.39 | 12.7 | 3.29 | | | |
| | Post | 14.6 | 2.27 | 15.6 | 2.21 | .223 (1.19) | 0.64 | 0.17 |
| Preparedness to teach reading (b) | Pre | 41.88 | 12.15 | 38.2 | 9.93 | | | |
| | Post | 57.38 | 9.35 | 64.5 | 4.8 | 4.37 (1.17) | 0.05 | 1.2 |

Bos et al. (1999),

Depth and breadth of knowledge application within the lesson logs

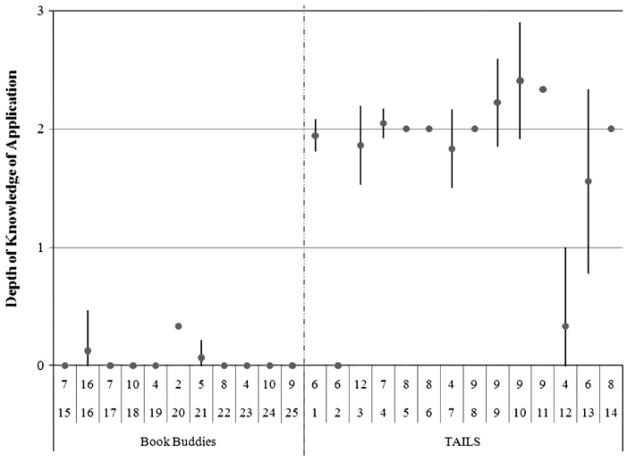

Next, we focused on the depth and breadth of application of knowledge within the lesson logs. First, we conducted a visual analysis to determine how much within-PST variation occurred across the five reading components. This visual analysis of variation served a second purpose, namely, to learn whether PSTs increased their depth of application across time or whether their initial pattern of application remained constant. Specifically, we explored whether they progressed in depth from having only objectives (earning 1 point) to more detailed lesson logs that would also begin to include activities and strategies (earning 1 additional point for an activity or 2 points for an activity and a strategy). Examples of entries from lesson logs scored with this coding strategy are shown in Appendix B. Indeed, as shown in Figs. 1 and 2 (which show the mean application score with standard deviation bars), there was relatively little variation in depth.

Fig. 1.

Mean code-focused depth of application of knowledge score with standard deviation lines for each preservice teacher

Fig. 2.

Mean meaning-focused depth of application of knowledge score with standard deviation lines for each preservice teacher

Given the consistent depth of application across lesson logs in both code- and meaning-focused skills, we next reduced the data for further analyses. For each PST and each component, we calculated the mean percentage of lesson logs that included an objective and the mean percentage of mismatches between objectives and activities. We also calculated a mean depth of application of knowledge score, which was of most interest. For example, a PST’s mean score of .82 for phonological awareness objectives indicates that 82% of her lesson logs included a phonological awareness objective. A score of .04 for phonological awareness mismatch indicates that 4% of phonological awareness objectives had a mismatch between objective and activity. A score of 2.3 for depth of implementation on phonological awareness indicates that the mean implementation score was 2.3; thus, on average, her lesson logs included a phonological awareness objective in combination with an activity, a strategy, or both.
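The data reduction described above can be illustrated with a minimal sketch. The record layout, the field names, and the five example lesson logs are hypothetical and are not taken from the study data; the mismatch rate here is computed over all lesson logs, which is an assumption about the denominator. Only the 0 to 3 depth rubric follows the coding strategy described in the text.

```python
def depth_score(has_objective, has_activity, has_strategy):
    """Depth rubric: 0 = no objective, 1 = objective only,
    2 = objective with an activity or a strategy, 3 = objective with both."""
    if not has_objective:
        return 0
    return 1 + int(has_activity) + int(has_strategy)


def summarize_component(logs):
    """Reduce one PST's lesson logs for one reading component to three means.

    `logs` is a list of dicts with boolean keys 'objective', 'activity',
    'strategy', and 'mismatch' (hypothetical field names).
    """
    n = len(logs)
    pct_objective = sum(int(log["objective"]) for log in logs) / n
    # Assumption: mismatches are averaged over all logs, not only logs with an objective.
    pct_mismatch = sum(int(log["mismatch"]) for log in logs) / n
    mean_depth = sum(
        depth_score(log["objective"], log["activity"], log["strategy"]) for log in logs
    ) / n
    return {"pct_objective": pct_objective, "pct_mismatch": pct_mismatch, "mean_depth": mean_depth}


# Hypothetical phonological awareness entries for a single PST (illustration only).
example_logs = [
    {"objective": True, "activity": True, "strategy": True, "mismatch": False},     # depth 3
    {"objective": True, "activity": True, "strategy": False, "mismatch": False},    # depth 2
    {"objective": True, "activity": False, "strategy": False, "mismatch": False},   # depth 1
    {"objective": False, "activity": False, "strategy": False, "mismatch": False},  # depth 0
    {"objective": True, "activity": True, "strategy": True, "mismatch": True},      # depth 3
]
summary = summarize_component(example_logs)
print(summary)
```

For these made-up logs, four of five entries include an objective, one of five is flagged as a mismatch, and the depth scores average 9/5 = 1.8.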

A series of ANOVAs revealed that TAILS PSTs had a significantly larger percentage of lesson logs with code-focused objectives (phonological awareness, phonics, and fluency), with F-statistics ranging from 36.526 to 65.726 and all p-values < .001. The effect sizes were very large (Cohen’s d = 2.46–3.39). However, there were no significant differences between conditions regarding percentage of lesson logs with meaning-focused objectives (vocabulary or comprehension), although the data tended toward a higher percentage among TAILS PSTs. Table 2 displays the descriptive statistics, ANOVA results, and effect sizes for percentage of lesson plans with objectives by component and condition.

Table 2.

Percentage of lesson plans with objectives by component and group

| Component | Book Buddies (n = 11) M | Book Buddies (n = 11) SD | TAILS (n = 14) M | TAILS (n = 14) SD | F (df) | p | Cohen’s d |
|---|---|---|---|---|---|---|---|
| Phonological awareness | 0.04 | 0.12 | 0.86 | 0.32 | 65.73 (1, 23) | < .001 | 3.39 |
| Phonics | 0.01 | 0.04 | 0.84 | 0.36 | 57.56 (1, 23) | < .001 | 3.24 |
| Fluency | 0.09 | 0.30 | 0.84 | 0.31 | 36.53 (1, 23) | < .001 | 2.46 |
| Vocabulary | 0.82 | 0.30 | 0.85 | 0.23 | 0.10 (1, 23) | 0.751 | 0.11 |
| Comprehension | 0.90 | 0.22 | 0.94 | 0.15 | 0.24 (1, 23) | 0.629 | 0.21 |
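As a check on how the effect sizes in Table 2 relate to the group means and standard deviations, the following sketch recomputes Cohen’s d from the printed summary statistics. The article does not state which pooling formula was used; the sketch assumes the unweighted root mean square of the two group SDs, and the function and variable names are illustrative only.

```python
import math


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Standardized mean difference (group b minus group a).

    Assumption: the pooled SD is the root mean square of the two group SDs,
    without weighting by sample size.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_b - mean_a) / pooled_sd


# (Book Buddies M, Book Buddies SD, TAILS M, TAILS SD) as printed in Table 2.
table2 = {
    "Phonological awareness": (0.04, 0.12, 0.86, 0.32),
    "Phonics": (0.01, 0.04, 0.84, 0.36),
    "Fluency": (0.09, 0.30, 0.84, 0.31),
    "Vocabulary": (0.82, 0.30, 0.85, 0.23),
    "Comprehension": (0.90, 0.22, 0.94, 0.15),
}

effect_sizes = {name: round(cohens_d(*row), 2) for name, row in table2.items()}
for name, d in effect_sizes.items():
    print(f"{name}: d = {d}")
```

Under this assumption, the rounded values agree with the Cohen’s d column of Table 2 (3.39, 3.24, 2.46, 0.11, and 0.21). A sample-size-weighted pooled SD would give somewhat different values because the groups are unequal in size (n = 11 and n = 14).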

In both the TAILS and Book Buddies conditions, we observed several instances of mismatches, defined as objectives that did not match an activity. Approximately 2.94% had mismatches in phonological awareness; 14.27% had mismatches in phonics; 4% had mismatches in fluency; 1.74% had mismatches in vocabulary; and 17.28% had mismatches in comprehension. There was only one significant difference in the percentage of mismatches between the TAILS and Book Buddies conditions: Book Buddies lesson logs had significantly more mismatches in phonics (F = 5.186; p = .032). An example of a mismatch follows: The Book Buddies PST had no phonics objective but stated “After I read to her I had her go through the book and read me some of her sight words.” (AA on 2/21).

Next, we investigated differences between groups on the depth of application of knowledge on each component of reading through another series of ANOVAs. Table 3 displays these descriptive statistics, ANOVA results, and effect sizes for the depth of application of knowledge by group for each of the components of reading. Specifically, lesson logs of the TAILS PSTs showed significantly stronger depth of application than Book Buddies in phonological awareness, phonics, and fluency with consistently very large effect sizes of 3.49, 3.07, and 3.16, respectively.

Table 3.

Depth of application of knowledge by component and group

| Component | Book Buddies (n = 11) M | Book Buddies (n = 11) SD | TAILS (n = 14) M | TAILS (n = 14) SD | F (df) | p | Cohen’s d |
|---|---|---|---|---|---|---|---|
| Phonological awareness | 0.02 | 0.06 | 1.83 | 0.73 | 66.47 (1, 23) | < .001 | 3.49 |
| Phonics | 0.03 | 0.11 | 1.74 | 0.78 | 51.18 (1, 23) | < .001 | 3.07 |
| Fluency | 0.09 | 0.30 | 1.69 | 0.65 | 57.07 (1, 23) | < .001 | 3.16 |
| Vocabulary | 1.87 | 0.75 | 1.97 | 0.69 | 0.12 (1, 23) | 0.736 | 0.14 |
| Comprehension | 2.14 | 0.84 | 1.91 | 0.57 | 0.66 (1, 23) | 0.424 | −0.32 |

0 = no Objective, 1 = Objective, 2 = Objective with activity or strategy, 3 = Objective with activity and strategy

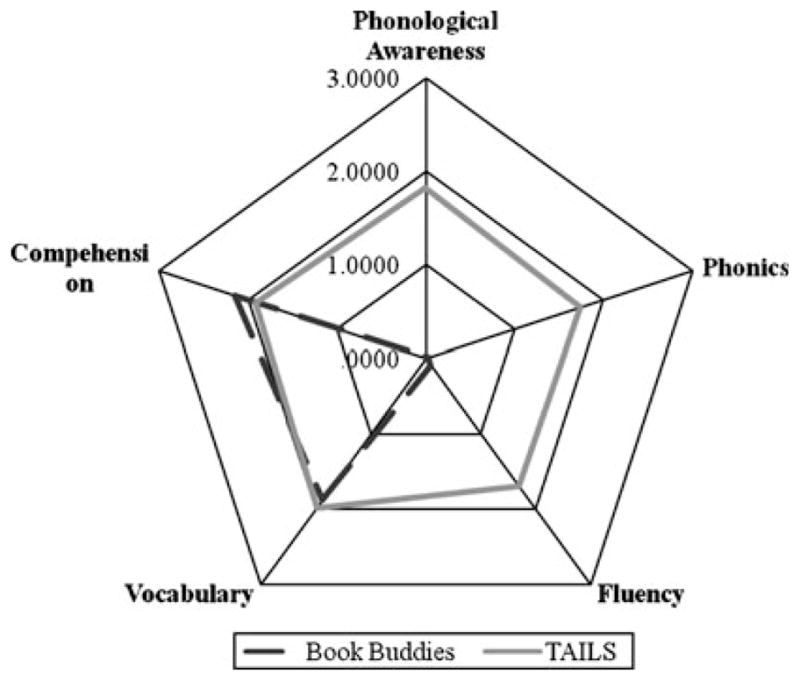

Another way to explore application of knowledge is to consider the multidimensional nature of reading instruction by investigating both the breadth and depth of application. Figure 3 shows the depth and breadth of application of knowledge by group. Each spoke on the radar in Fig. 3 represents one of the components of reading, with the center representing a depth of application score of 0 and the outside representing a depth of application score of 3. As can be seen, data from the TAILS group create a pentagonal shape, whereas data from the Book Buddies group create a triangular shape. The shape represents the breadth of application, showing that the TAILS group applied knowledge on all five components, whereas the Book Buddies group primarily applied knowledge to the meaning-focused skills of comprehension and vocabulary. Furthermore, Fig. 3 shows that the Book Buddies group more deeply applied comprehension skills, but that the TAILS group more deeply applied all other skills.

Fig. 3.

The dashed line and the thick solid gray line represent the depth of application of knowledge scores on each of the five components of reading. The Book Buddies group showed little to no application of knowledge on phonological awareness, phonics, or fluency tasks, whereas the TAILS group showed both breadth and depth of application of knowledge on all five components. There were statistically significant differences between the two groups on phonological awareness, phonics, and fluency depth of application of knowledge, but not on comprehension or vocabulary depth of application of knowledge.

Finally, we explored patterns of correlations within the TAILS group and within the Book Buddies group, as shown in Table 4. Within the TAILS group, the depth of application scores for phonological awareness, phonics, and fluency were all highly and significantly related (r ranged from .93 to .96). Comprehension was also significantly correlated with phonological awareness (r = .56) and with phonics (r = .66). However, within the Book Buddies group, code-focused skills were not at all correlated. The only significant positive correlation was between vocabulary and comprehension (r = .82); there were significant negative correlations between fluency and vocabulary (r = −.83) and between phonological awareness and comprehension (r = −.68).

Table 4.

Correlations among depth of application of knowledge scores

| Component | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1. Phonological awareness | – | −.10 | −.10 | −.39 | −.68* |
| 2. Phonics | .96** | – | −.10 | .25 | .29 |
| 3. Fluency | .93** | .95** | – | −.83** | −.45 |
| 4. Vocabulary | .28 | .34 | .30 | – | .82** |
| 5. Comprehension | .56* | .60* | .49 | .49 | – |

Correlations on the bottom left are among TAILS PSTs, correlations on the upper right are among Book Buddies PSTs

Significance markers: * significant at p < .05; ** significant at p < .01
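The significance markers in Table 4 can be related to the correlation coefficients with a short worked check. This is only a sketch: it assumes the correlations are based on the lesson-log sample sizes reported in Tables 2 and 3 (n = 14 for TAILS, n = 11 for Book Buddies), it uses the standard conversion of r to a t statistic with n − 2 degrees of freedom, and the critical values are two-tailed values from a standard t table.

```python
import math

# Two-tailed critical t values, keyed by degrees of freedom: (alpha = .05, alpha = .01).
T_CRITICAL = {12: (2.179, 3.055), 9: (2.262, 3.250)}


def r_to_t(r, n):
    """Convert a Pearson correlation to a t statistic with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


def significance_marker(r, n):
    """Return '**' (p < .01), '*' (p < .05), or '' for a two-tailed test."""
    t = abs(r_to_t(r, n))
    crit_05, crit_01 = T_CRITICAL[n - 2]
    if t >= crit_01:
        return "**"
    if t >= crit_05:
        return "*"
    return ""


N_TAILS, N_BOOK_BUDDIES = 14, 11  # assumed sample sizes (from Tables 2 and 3)

checks = {
    "TAILS PA-comprehension (.56)": significance_marker(0.56, N_TAILS),
    "TAILS fluency-comprehension (.49)": significance_marker(0.49, N_TAILS),
    "TAILS PA-phonics (.96)": significance_marker(0.96, N_TAILS),
    "Book Buddies vocabulary-comprehension (.82)": significance_marker(0.82, N_BOOK_BUDDIES),
    "Book Buddies PA-comprehension (-.68)": significance_marker(-0.68, N_BOOK_BUDDIES),
    "Book Buddies PA-vocabulary (-.39)": significance_marker(-0.39, N_BOOK_BUDDIES),
}
for label, marker in checks.items():
    print(f"{label}: {marker or 'n.s.'}")
```

With these assumed sample sizes, the markers produced for the coefficients checked here agree with those printed in Table 4; for instance, r = .56 with n = 14 gives t of roughly 2.34, which exceeds 2.179 but not 3.055.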

Secondary analysis of student growth

The ultimate measure of teacher quality is student achievement. An additional ANOVA conducted to evaluate tutees’ gains revealed that TAILS students showed significantly more growth than Book Buddies students on the DIBELS nonsense word fluency measure, F (1, 20) = 4.32, p = .05, ES = 0.50. As anticipated, the pattern of findings was coherent with the treatment: there were no significant differences favoring TAILS students on measures of comprehension. However, we did not find significantly different growth as measured by any of the ERDA subtests. Table 5 shows the descriptive statistics, results of the ANOVAs, and effect sizes related to student growth.

Table 5.

Student pre-post test growth on DIBELS and ERDA

| Measure | Time | Book Buddies (n = 14) M | Book Buddies (n = 14) SD | TAILS (n = 14) M | TAILS (n = 14) SD | F (1, 20) | p | Cohen’s d |
|---|---|---|---|---|---|---|---|---|
| NWF^a | Pre | 24.91 | 16.25 | 14.27 | 16.74 | | | |
| | Post | 31.55 | 17.27 | 29.55 | 19.03 | 4.32 | 0.05 | 0.5 |
| PSF^a | Pre | 28.18 | 15.19 | 16.45 | 18.26 | | | |
| | Post | 40.55 | 13.39 | 31.18 | 20.35 | 0.367 | 0.55 | 0.2 |
| PA^b | Pre | 12 | 5.25 | 7.55 | 5.21 | | | |
| | Post | 15.82 | 7.17 | 13.34 | 6.26 | 1.15 | 0.3 | 0.33 |
| Phonics^b | Pre | 22.09 | 5.8 | 21.45 | 5.11 | | | |
| | Post | 22.68 | 5.22 | 24.36 | 3.97 | 3.269 | 0.09 | 0.46 |
| Fluency^b | Pre | 5.32 | 6.43 | 7.14 | 8.16 | | | |
| | Post | 9.32 | 7.61 | 11.68 | 10.42 | 0.14 | 0.71 | 0.07 |
| Vocabulary^b | Pre | 8.45 | 1.63 | 6.55 | 2.07 | | | |
| | Post | 8.82 | 3.16 | 8.86 | 2.15 | 3.75 | 0.07 | 0.76 |
| Comprehension^b | Pre | 10.5 | 3.94 | 11.31 | 4.24 | | | |
| | Post | 14.32 | 3.91 | 15.23 | 4.77 | 0.003 | 0.95 | 0.02 |

NWF nonsense word fluency, PSF phoneme segmenting fluency, PA phonological awareness

^a DIBELS subtest; ^b ERDA subtest
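To make the growth pattern in Table 5 easier to see, the sketch below computes the difference between posttest and pretest means for each measure and group. It uses only the means printed in the table; treating this difference as the mean gain assumes the same students contributed at both time points, and the calculation says nothing about statistical significance. The dictionary layout and names are illustrative.

```python
# (pretest mean, posttest mean) for each group, as printed in Table 5.
table5_means = {
    "NWF": {"Book Buddies": (24.91, 31.55), "TAILS": (14.27, 29.55)},
    "PSF": {"Book Buddies": (28.18, 40.55), "TAILS": (16.45, 31.18)},
    "PA": {"Book Buddies": (12.00, 15.82), "TAILS": (7.55, 13.34)},
    "Phonics": {"Book Buddies": (22.09, 22.68), "TAILS": (21.45, 24.36)},
    "Fluency": {"Book Buddies": (5.32, 9.32), "TAILS": (7.14, 11.68)},
    "Vocabulary": {"Book Buddies": (8.45, 8.82), "TAILS": (6.55, 8.86)},
    "Comprehension": {"Book Buddies": (10.50, 14.32), "TAILS": (11.31, 15.23)},
}

# Difference in means (post minus pre), rounded to two decimals.
gains = {
    measure: {group: round(post - pre, 2) for group, (pre, post) in groups.items()}
    for measure, groups in table5_means.items()
}

for measure, by_group in gains.items():
    print(f"{measure}: Book Buddies +{by_group['Book Buddies']}, TAILS +{by_group['TAILS']}")
```

On nonsense word fluency, for example, the difference in means is 6.64 points for Book Buddies students and 15.28 points for TAILS students, whereas the two groups differ very little on comprehension (3.82 versus 3.92).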

Discussion

Results from this small-scale, empirical investigation of effective reading teacher preparation practices suggest that, early in their preservice teacher careers, PSTs may benefit from supported, structured tutorials and may acquire knowledge about language and reading instruction through coursework and field experiences. The PSTs’ improved level of knowledge about the structure of language was similar to gains (from a pretest mean of about 12 correct to a posttest mean of about 15 correct) found on the same 22-item test (Al Otaiba & Lake, 2007; Bos et al., 2001). The magnitude of gains was similar to the magnitude of gains on slightly different tests of the same construct (McCutchen et al., 2002; Spear-Swerling, 2009; Spear-Swerling & Brucker, 2004). This increased knowledge may not be surprising given that the Early Literacy course we described had a syllabus and a text that were consistent with the NRP (2000) evidence base and the instructor, the final author of the study, was knowledgeable as well. Notably, none of the PSTs scored 100% correct on this test, indicating they still required additional teacher training related to the difficult construct of knowledge about the structure of language.

However, our findings strongly indicate that the scripted and structured code-focused activities within TAILS played an important role in helping PSTs apply their knowledge across code- and meaning-focused instruction, which consequently helped PSTs feel more prepared to teach reading. In contrast, the average depth of knowledge score for code-focused skills for PSTs in the Book Buddies group was close to 0, ranging narrowly from .02 to .09, suggesting that this group rarely taught code-focused skills. A rare example of such an objective, noted in Appendix B, is “J. and I worked on phonological awareness” (DM on the lesson log dated 2/14).

Conversely, the average depth of knowledge score for code-focused skills for PSTs in the TAILS group was higher and ranged from 1.69 to 1.83, suggesting that PSTs in this group typically had objectives and that most did activities or used strategies. As shown in Appendix B (i.e., the phonics component of Susan’s lesson log of 2/16), this PST was also beginning to realize that she needed to adapt her instruction and that she needed to think about what she could do to help her student respond better. In this instance, she modified an Elkonin sound box activity to incorporate sounding out words. Further, this entry illustrates how Susan encouraged the student to focus.

With regard to meaning-focused instruction, the depth of application scores were similar between TAILS and Book Buddies PSTs. Yet, TAILS PSTs’ entries were generally longer and showed qualitatively more detailed information. Hence, TAILS participants had more scores of 3 for depth among their lesson logs.

At the end of this study, TAILS PSTs demonstrated greater feelings of confidence about their preparedness to teach reading than did Book Buddies PSTs. This confidence is also manifested in the examples seen in Appendix B, in which the TAILS PSTs seemed better able to explain why they implemented a particular strategy. We caution, however, that TAILS was not entirely preservice teacher-proof. It is apparent from Appendix B and from the scores in Table 1 that not all TAILS PSTs excelled in applying their knowledge while tutoring a struggling reader. Thus, our findings are similar to Maloch et al.’s (2003) results, which indicate that graduates of teacher preparation programs who learned evidence-based practices described themselves as: (a) responsive to their students’ needs, (b) well-prepared and confident about their teaching, (c) knowledgeable about the reading process and assessments, and (d) reflective about the need to adapt instruction for individual student needs.

Our secondary aim was to examine gains of children tutored by the contrasting tutorials. Overall, tutored children improved their scores on most reading measures, and the pattern of effect sizes favored students tutored by TAILS. The positive effect of tutoring by preservice teachers converges with prior research (e.g., Allor & McCathren, 2004), including one additional study using TAILS (Al Otaiba, 2005). In that TAILS study, the comparison group was tutored with a direct instruction program by Title 1 tutors. In the present study, as in the prior TAILS study, we were unable to include a no-treatment control group of children because of a district policy that is prevalent in many schools: the school district would not approve a no-treatment condition because the students being tutored were struggling readers. Without a no-treatment control group, we cannot know whether these gains are the result of tutoring or instruction. Furthermore, classroom teachers nominated one struggling reader per classroom, and because schools allow only one preservice teacher per classroom, it was not possible to directly compare TAILS and Book Buddies within a single classroom. Future research that includes a no-treatment control group and uses standardized test scores is needed to learn whether tutoring is better than no tutoring and whether tutoring helps struggling readers catch up to national norms. Given the relatively small sample size and rather large variability in test scores, it was noteworthy that the children tutored by TAILS PSTs, who applied more code-focused instruction, demonstrated significantly greater growth in phonetic decoding skills.

As with any research, there are a number of limitations to this study. First, data were lost for one and were unreadable for two of the Book Buddies tutors. Second, fidelity of implementation was not systematically documented, except through the lesson logs. The lack of funding and resources limited our ability to observe all PSTs during tutoring, which would have strengthened our findings. Further, we did not want to contaminate the study and so did not provide PSTs with constructive feedback about their lesson logs, which could have improved their implementation. Third, the course is an initial course, so possibly Book Buddies could be more effective as a field experience if implemented later within the program when PSTs have more experience and knowledge. This is important to note because there were only 8 weeks of tutoring within the field experience, although that did not impact TAILS PSTs, who started applying their knowledge and did so fairly consistently throughout (as seen in Figs. 1, 2). Finally, although we did find some important differences in PST and child outcomes, we acknowledge that the relatively small sample size may limit our power to find other statistically significant and educationally important differences. Future empirical research with larger samples involving several cohorts or with larger samples using quasi-experimental designs that do not involve randomization of preservice teachers is needed (e.g., the quasi-experimental, multi-cohort study conducted by Spear-Swerling [2009] that documented positive learning outcomes for special education PSTs and their second grade tutees). Although we were able to randomly assign tutors within a single course, we learned that doing so was problematic because the Book Buddies PSTs appeared less prepared to teach at the end of the study. To address this problem, at the end of the study, we provided all the PSTs with their own copies of TAILS and committed to supporting TAILS implementation through a second literacy course.

These important limitations notwithstanding, this study addresses the critical shortage of studies using experimental or quasi-experimental designs that investigate preservice reading education by examining both teacher and student change (Pang & Kamil, 2003). It contributes to the exploration of how early childhood and elementary teachers are taught to apply current literacy theory and evidence-based practices. Without evidence of effective practices in our teacher preparation programs, “we will continually be faced with beginning teachers who are under-prepared to deliver high-quality reading instruction” (International Reading Association, 2005).

Contributor Information

Stephanie Al Otaiba, Email: salotaiba@fcrr.org, School of Teacher Education, Florida State University, Tallahassee, FL, USA. Florida Center for Reading Research, Tallahassee, FL, USA.

Vickie E. Lake, School of Teacher Education, Florida State University, Tallahassee, FL, USA

Luana Greulich, School of Teacher Education, Florida State University, Tallahassee, FL, USA. Florida Center for Reading Research, Tallahassee, FL, USA.

Jessica S. Folsom, School of Teacher Education, Florida State University, Tallahassee, FL, USA. Florida Center for Reading Research, Tallahassee, FL, USA

Lisa Guidry, School of Teacher Education, Florida State University, Tallahassee, FL, USA. Florida Center for Reading Research, Tallahassee, FL, USA.

References

- Adams MJ. Beginning to read: Thinking and learning about print. Cambridge, MA: MIT Press; 1990.

- Adams MJ, Foorman B, Lundberg I, Beeler C. Phonemic awareness in young children: A classroom curriculum. Baltimore, MD: Brookes Publishing Co; 1997.

- Al Otaiba S. Tutor assisted intensive learning strategies for second grade (TAILS-2). Unpublished manuscript. Tallahassee, FL: Florida Center for Reading Research; 2003.

- Al Otaiba S. Weaving moral elements and research-based reading practices in inclusive classrooms using shared book reading techniques. Early Child Development and Care. 2004;174:575–589.

- Al Otaiba S. How effective is code-based tutoring in English for English language learners and their preservice teacher/tutors? Remedial and Special Education. 2005;26(4):245–254.

- Al Otaiba S, Kosanovich-Grek ML, Torgesen JK, Hassler L, Wahl M. Reviewing core kindergarten and first grade reading programs in light of no child left behind: An exploratory study. Reading and Writing Quarterly. 2005a;21:377–400.

- Al Otaiba S, Lake VE. Preparing special educators to teach reading and use curriculum-based assessments. Reading and Writing: An Interdisciplinary Journal. 2007;20:591–617.

- Al Otaiba S, Schatschneider C, Silverman E. Tutor-assisted intensive learning strategies in kindergarten: How much is enough? Exceptionality. 2005b;13:195–208.

- Al Otaiba S, Torgesen J, Lane H. The role of a structured tutoring experience in the development of preservice teachers’ preparedness to teach reading. Poster presented at the Annual Meeting of the Society for the Scientific Study of Reading; Toronto, Canada; 2003 Jun.

- Allington R, Nowak R. “Proven programs” and other unscientific ideas. In: Lapp D, Block C, et al., editors. Teaching all children to read in your classroom. New York: Guilford; 2004. pp. 93–102.

- Allor J, McCathren R. The efficacy of an early literacy tutoring program implemented by college students. Learning Disabilities Research & Practice. 2004;19(2):116–129.

- Anders PL, Hoffman JV, Duffy GG. Teaching teachers to teach reading: Paradigm shifts, persistent problems, and challenges. In: Kamil M, Mosenthal P, Pearson PD, Barr R, editors. Handbook of reading research. Vol. 3. Mahwah, NJ: Lawrence Erlbaum Associates; 2000. pp. 719–744.

- Baumann JF, Ro JM, Duffy-Hester AM, Hoffman JV. Then and now: Perspectives on the status of elementary reading instruction by prominent reading educators. Reading Research and Instruction. 2000;39:236–264.

- Beck IL, McKeown MG, Kucan L. Bringing words to life: Robust vocabulary instruction. New York: The Guilford Press; 2002.

- Blachman B, Ball E, Black S, Tangel D. Road to the code. Baltimore, MD: Paul Brookes Publishing; 1998.

- Bos C, Mather N, Dickson S, Podhajski B, Chard D. Perceptions and knowledge of preservice and inservice educators about early reading instruction. Annals of Dyslexia. 2001;51:97–120.

- Brady S, Moats LC. Informed instruction for reading success: Foundations for teacher preparation. Position paper of the International Dyslexia Association. Baltimore, MD: International Dyslexia Association; 1997.

- Brown JS, Collins A, Duguid P. Situated cognition and the culture of learning. Educational Researcher. 1989;18:32–42.

- Carnine D, Silbert J, Kame’enui EJ. Direct instruction reading. 3rd ed. Columbus, OH: Merrill; 1998.

- Chall JS. Learning to read: The great debate. Updated ed. New York: McGraw-Hill; 1983.

- Cochran-Smith M, Lytle S. Relationships of knowledge and practice: Teacher learning in communities. In: Iran-Nejad A, Pearson PD, editors. Review of research in education. Washington, DC: AERA; 1999. pp. 249–305.

- Cochran-Smith M, Zeichner KM, editors. Studying teacher education: The report of the AERA panel on research and teacher education. Mahwah, NJ: LEA Publishers; 2005.

- The Psychological Corporation. Early reading diagnostic assessment. 2nd ed. San Antonio, TX: The Psychological Corporation, a Harcourt Assessment Company; 2003.

- Ehri LC. Research on learning to read and spell: A personal-historical perspective. Scientific Studies of Reading. 1998;2:97–114.

- Ehri LC. Phases of acquisition in learning to read words and implications for teaching. In: Stainthorp R, Tomlinson P, editors. Learning and teaching reading. Leicester, UK: The British Psychological Society; 2002. pp. 7–28.

- Fitzgerald J. Can minimally trained college student volunteers help young at-risk children to read better? Reading Research Quarterly. 2001;36(1):28–47.

- Foorman BR, Moats LC. Conditions for sustaining research-based practices in early reading instruction. Remedial and Special Education: Special Issue on Sustainability. 2004;25:51–60.

- Fuchs D, Fuchs LS, Mathes PG, Simmons DC. Peer-assisted learning strategies: Making classrooms more responsive to diversity. American Educational Research Journal. 1997;34:174–206.

- Fuchs D, Fuchs LS, Thompson A, Al Otaiba S, Yen L, Yang NJ, et al. Is reading important in reading-readiness programs? A randomized field trial with teachers as program implementers. Journal of Educational Psychology. 2001;93:251–267.

- Fuchs D, Fuchs LS, Thompson A, Al Otaiba S, Yen L, Yang NJ, et al. Exploring the importance of reading programs for kindergartners with disabilities in mainstream classrooms. Exceptional Children. 2002;68:295–311.

- Good RH, Kaminski RA. DIBELS Oral reading fluency passages for first through third grades (Report No 10). 2002. Retrieved from University of Oregon website: https://dibels.uoregon.edu/techreports/DORF_Readability.pdf.

- Good RH, Simmons DC, Kame’enui EJ. The importance and decision-making utility of a continuum of fluency-based indicators of foundational reading skills for third-grade high-stakes outcomes. Scientific Studies of Reading. 2001;5:257–288.

- Gough PB, Tunmer WE. Decoding, reading, and reading disability. RASE: Remedial & Special Education. 1986;7:6–10.

- Hoffman JV, Roller C. The IRA excellence in reading teacher preparation commission’s report: Current practices in reading teacher education at the undergraduate level in the United States. In: Roller C, editor. Learning to teach reading: Setting the research agenda. Newark, DE: International Reading Association; 2001. pp. 32–79.

- Hoffman JV, Roller C, Maloch B, Sailors M, Duffy G, Beretvas SN. Teachers’ preparation to teach reading and their experiences and practices in the first three years of teaching. The Elementary School Journal. 2005;105:267–287.

- Individuals with Disabilities Education Act, 20 U.S.C. 1400–1487 (1997, 2004).

- International Reading Association. Prepared to make a difference: An executive summary of the national commission on excellence in elementary teacher preparation for reading instruction. 2005. Retrieved from International Reading Association website: http://www.reading.org/downloads/resources/1061teacher_ed_com_summary.pdf.

- Joshi RM, Binks E, Hougen MC, Dean EO, Graham L, Smith DL. The role of teacher education programs in preparing teachers for implementing evidence-based reading practices. In: Rosenfield S, Berninger V, editors. Implementing evidence-based academic interventions in school settings. New York: Oxford University Press; 2009. pp. 605–625.

- Kaminski RA, Good RH. Toward a technology for assessing basic early literacy skills. School Psychology Review. 1996;25:215–227.

- Lake VE. Linking literacy and moral education in the primary classroom. The Reading Teacher. 2001;25:125–129.

- Lake VE, Al Otaiba S. Developing character education and literacy instruction pedagogy through service learning: An integrated model of teacher preparation. 2009. (in press)

- LeCompte MD, Preissle J. Ethnography and qualitative design in educational research. San Diego, CA: Academic Press; 1993.

- Lonigan CJ, Anthony JL, Bloomfield BG, Dyer SM, Samwel CS. Effects of two shared-reading interventions on emergent literacy skills of at-risk preschoolers. Journal of Early Intervention. 1999;22:306–322.

- Lonigan CJ, Whitehurst GJ. Relative efficacy of parent and teacher involvement in a shared-reading intervention for preschool children from low-income backgrounds. Early Childhood Research Quarterly. 1998;13:263–290.

- Maloch B, Fine J, Flint AS. “I just feel like I’m ready”: Exploring the influence of quality teacher preparation on beginning teachers. The Reading Teacher. 2003;56:348–350.

- Mather N, Bos C, Babur N. Perceptions and knowledge of preservice and inservice teachers about early literacy instruction. Journal of Learning Disabilities. 2001;34:472–482. doi: 10.1177/002221940103400508.

- Mathes PG, Fuchs D, Fuchs LS, Henley AM, Sanders A. Increasing strategic reading practice with Peabody Classwide Peer Tutoring. Learning Disabilities Research & Practice. 1994;9:44–48.

- Mathes PG, Howard JK, Allen SH, Fuchs D. Peer-assisted learning strategies for first-grade readers: Responding to the needs of diverse learners. Reading Research Quarterly. 1998;33:62–94.

- Mayhew J, Welch M. A call to service: Service learning as a pedagogy in special education programs. Teacher Education and Special Education. 2001;24:208–219.

- McCutchen D, Abbott RD, Green LB, Beretvas SN, Cox S, Potter NS, et al. Beginning literacy: Links among teacher knowledge, teacher practice, and student learning. Journal of Learning Disabilities. 2002;35:69–86. doi: 10.1177/002221940203500106.

- Miles MB, Huberman AM. Qualitative data analysis: An expanded sourcebook. 2nd ed. Thousand Oaks, CA: Sage Publications, Inc; 1994.

- Moats LC. The missing foundation in teacher education: Knowledge of the structure of spoken and written language. Annals of Dyslexia. 1994;44:81–102. doi: 10.1007/BF02648156.

- Moats LC. Still wanted: Teachers with knowledge of language. Journal of Learning Disabilities. 2009;42:387–391. doi: 10.1177/0022219409338735.

- Moats LC, Foorman BR. Measuring teachers’ content knowledge of language and reading. Annals of Dyslexia. 2003;53:23–45.

- Moats LC, Lyon GR. Wanted: Teachers with knowledge of language. Topics in Language Disorders. 1996;16:73–86.

- National Reading Panel. Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction. Washington, DC: National Institute of Child Health and Human Development; 2000.

- No Child Left Behind Act of 2001. Pub. L. No. 107-110, (H. R. 1).

- Olson MW, Gillis M. Teaching reading study skills and course content to preservice teachers. Reading Research and Instruction. 1983;23:124–133.

- Pang ES, Kamil ML. Reviews of the reading research literature: Updates and extensions of the National Reading Panel reviews. Paper presented at the meeting of the American Educational Research Association; Chicago, IL; 2003 Apr.

- Podhajski B, Mather N, Nathan J, Sammons J. Professional development in scientifically based reading instruction: Teacher knowledge and reading outcomes. Journal of Learning Disabilities. 2009;42:403–417. doi: 10.1177/0022219409338737.

- Pressley M, Wharton-McDonald R, Allington R, Block CC, Morrow L, Tracey D, et al. A study of effective first grade literacy instruction. Scientific Studies of Reading. 2001;5:35–58.

- Snow CE. Reading for understanding: Toward an R&D program in reading comprehension. Arlington, VA: RAND; 2002.

- Snow CE, Burns MS, Griffin P, editors. Preventing reading difficulties in young children. Washington, DC: National Academy Press; 1998.

- Snow CE, Griffin P, Burns MS, editors. Knowledge to support the teaching of reading: Preparing teachers for a changing world. San Francisco, CA: Jossey-Bass; 2005.

- Spear-Swerling L. A literacy tutoring experience for prospective special educators and struggling second graders. Journal of Learning Disabilities. 2009;42:431–443. doi: 10.1177/0022219409338738.

- Spear-Swerling L, Brucker PO. Preparing novice teachers to develop basic reading and spelling skills in children. Annals of Dyslexia. 2004;54:332–359. doi: 10.1007/s11881-004-0016-x.

- Spear-Swerling L, Sternberg RJ. Off track: When poor readers become “learning disabled”. Boulder, CO: Westview Press; 1996.

- Sweet A, Snow CE. Reconceptualizing reading comprehension. In: Block C, Gambrell L, Pressley M, editors. Improving comprehension instruction, rethinking research, theory, and classroom practice. San Francisco, CA: Jossey-Bass; 2002. pp. 17–53.

- Valdez-Menchaca MC, Whitehurst GJ. Accelerating language development through picture book reading: A systematic extension to Mexican day care. Developmental Psychology. 1992;28:1106–1114.

- Walsh K, Glaser D, Dunne-Wilcox D. What elementary teachers don’t know about reading and what teacher preparation programs aren’t teaching. Washington, DC: National Council for Teacher Quality; 2006.

- Wharton-McDonald R, Pressley M, Hampston JM. Literacy instruction in nine first-grade classrooms: Teacher characteristics and student achievement. The Elementary School Journal. 1998;99:101–128.

- Whitehurst GJ, Lonigan CJ. Child development and emergent literacy. Child Development. 1998;69:848–872.

- Woodcock RW, Johnson MB. The Woodcock-Johnson tests of cognitive ability–Revised. Itasca, IL: Riverside; 1997.
