Abstract

The physical effort required to seek out and extract a resource is an important consideration for a foraging animal. A second consideration is the variability or risk associated with resource delivery. An intriguing observation from ethological studies is that animals shift their preference from stable to variable food sources under conditions of increased physical effort or falling energetic reserves. Although theoretical models for this effect exist, no exploration into its biological basis has been pursued. Recent advances in understanding the neural basis of effort- and risk-guided decision making suggest that opportunities exist for determining how effort influences risk preference. In this review, we describe the intersection between the neural systems involved in effort- and risk-guided decision making and outline two mechanisms by which effort-induced changes in dopamine release may increase the preference for variable rewards.

Keywords: effort, risk, foraging, decision making, nucleus accumbens, dopamine, anterior cingulate cortex

Introduction

Foraging animals must often consider the variability or risk associated with resource delivery. For example, the preference for variable as opposed to stable sources of food can change dramatically based on a number of factors, such as an animal's current energetic state. In the case of food delivery, animals can express a preference for variable or “risky” food sources when the energetic demands of foraging are high (Caraco, 1981; Caraco et al., 1990). Juncos, for instance, prefer seed-bins with variable over fixed reinforcement schedules when ambient temperatures fall and metabolic demands rise (Caraco et al., 1990; but see Brito E Abreu and Kacelnik, 1999). Similarly, rats consuming high-calorie foods exhibit a reduced preference for variable schedules of reinforcement relative to groups of rats consuming low-calorie foods (Craft et al., 2011). Energetic expenditure due to physical effort can also enhance risk preference. For example, a study by Kirshenbaum et al. (2003) investigated the interaction between effort and risk in rats where effort was presented in the form of running in wheels with low (50 g) or high (120 g) spinning resistances. Risk was measured as the preference for variable or fixed schedules of reinforcement. The authors divided rats into high-effort and low-effort groups and observed that the high-effort group expressed a preference for variable reinforcement relative to the low-effort group. One explanation for this effect is the Daily Energy Budget (DEB) or Z-score rule (Stephens, 1981; Houston, 1991). According to this theory, when animals approach a critical energetic state (e.g., starvation), they choose to gamble by investing their remaining resources on a variable option that may yield a life-saving gain. 
This rule also states that organisms with adequate and stable energetic reserves will not make the gamble, as they are better served by low-risk options that guarantee survival (for a thorough and critical review, see Kacelnik and Bateson, 1996).
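The logic of the Z-score rule can be illustrated with a minimal sketch. It assumes that energy intake from each option is normally distributed and that the animal picks the option with the lowest probability of falling short of its daily requirement. The option names, means, standard deviations, and requirement values below are hypothetical and are not taken from the cited studies.

```python
from statistics import NormalDist

def shortfall_probability(mean, sd, requirement):
    """Probability that intake falls below the energetic requirement."""
    if sd == 0:
        # A fixed option either always meets the requirement or never does.
        return 1.0 if mean < requirement else 0.0
    return NormalDist(mean, sd).cdf(requirement)

def preferred_option(options, requirement):
    """Name of the option that minimizes the probability of a shortfall."""
    return min(
        options,
        key=lambda name: shortfall_probability(*options[name], requirement),
    )

# Hypothetical options with equal mean intake: (mean, standard deviation).
options = {"fixed": (10.0, 0.0), "variable": (10.0, 4.0)}

positive_budget = preferred_option(options, requirement=8.0)   # mean exceeds need
negative_budget = preferred_option(options, requirement=12.0)  # mean below need
print(positive_budget, negative_budget)
```

Under these assumptions the fixed option is chosen when mean intake exceeds the requirement, and the variable option is chosen when it does not, which is the qualitative switch the rule predicts.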

To our knowledge, no investigation into the neural basis for the influence of energetic state or physical effort on risk preference has been performed. Consequently, almost nothing is known regarding the neural basis for interactions between effort and risk. Opportunities do exist to explore this question given recent advances in the study of the neural basis of effort- and risk-guided decision making (reviewed in Walton et al., 2006; Platt and Huettel, 2008; Salamone et al., 2012). These studies have identified a range of structures and neuromodulators involved in perceiving and setting the preference to exert effort or to pursue risky options. Surprisingly, the divergent networks involved in effort and risk intersect in a restricted set of cortical and subcortical structures, the most prominent being the anterior cingulate cortex (ACC), the basolateral amygdala (BLA), the nucleus accumbens (NAC), and the mesolimbic dopaminergic system. Given the considerable evidence for the role of these systems in setting the preference for both effort and risk, we believe that interactions between these structures underlie the capacity of physical effort to increase risk preference. To argue this point, we first review evidence that the neural processes involved in effort-guided (sections Experimental Approaches for Studying Effort-Guided Behaviors, Cortical and Sub-Cortical Circuits Involved in Effort-Guided Behaviors, and Dopamine and Effort-Guided Behavior) and risk-guided (section Neural Systems Involved in Setting The Preference for Risk) decision making intersect in these systems. We then conclude with a discussion of a proposed neural mechanism by which effort increases the preference for risk, where risk preference is defined as a preference for variable over certain rewards.

Experimental approaches for studying effort-guided behaviors

An underlying assumption of most investigations of effort-guided decision making is that effort is a cost to be minimized. This is a reasonable assumption given that many species balance energetic losses from physical effort against food intake in order to maximize net energetic gain (Stephens et al., 2007). Bautista et al. (2001) explored this issue in an experiment in which starlings chose between walking (low effort) in search of low-yield rewards and flying (high effort) to reach larger rewards. Analysis of choice behavior in response to manipulations of reward magnitude and flight distance indicated that starlings optimized net energetic gain by shifting their preference between walking and flying. Similarly, food-restricted rats alter their preference for high-effort/high-reward and low-effort/low-reward options in ways that indicate that they treat effort as a cost (reviewed in Walton et al., 2006; Salamone et al., 2012). In most neurobiological experiments, effort is manipulated using either lever-press behaviors coupled with variable or fixed-ratio schedules of reinforcement (Floresco et al., 2008; Walton et al., 2009) or barrier-climbing tasks where animals are required to select between paths on a maze that lead to combinations of effort (the presence of a 30–40 cm barrier) and reward (e.g., Salamone, 1994; Bardgett et al., 2009; Cowen et al., 2012). Importantly, results from a number of studies suggest that the aversion to effort reported in these experiments is not due to an aversion to temporal delays produced by the time required to lever-press or jump over barriers (Rudebeck et al., 2006; Floresco et al., 2008; Walton et al., 2009). What follows is a review of the involvement of neural systems in effort-guided behaviors, with a focus on those systems that are also implicated in processing risk.
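The idea that effort is a cost weighed against reward can be sketched with a simple net-rate calculation. This is an illustration only: it assumes that the currency being maximized is net energy gained per unit time, and the energy, cost, and time values are hypothetical (arbitrary units) rather than measurements from the starling experiment.

```python
def net_gain_rate(reward_energy, effort_cost, time_taken):
    """Net energy gained per unit time for one foraging mode."""
    return (reward_energy - effort_cost) / time_taken

def best_mode(modes):
    """Name of the foraging mode with the highest net rate of gain."""
    return max(modes, key=lambda name: net_gain_rate(**modes[name]))

# Hypothetical values: flying costs more energy but reaches a larger reward.
short_flight = {
    "walk": {"reward_energy": 4.0, "effort_cost": 1.0, "time_taken": 10.0},
    "fly": {"reward_energy": 12.0, "effort_cost": 6.0, "time_taken": 10.0},
}
# Same options, but a longer flight raises the cost of the high-effort mode.
long_flight = {
    "walk": {"reward_energy": 4.0, "effort_cost": 1.0, "time_taken": 10.0},
    "fly": {"reward_energy": 12.0, "effort_cost": 10.0, "time_taken": 10.0},
}

choice_short = best_mode(short_flight)
choice_long = best_mode(long_flight)
print(choice_short, choice_long)
```

With these numbers the preferred mode switches from flying to walking once the cost of flight rises, which mirrors the kind of preference shift described above.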

Cortical and sub-cortical circuits involved in effort-guided behaviors

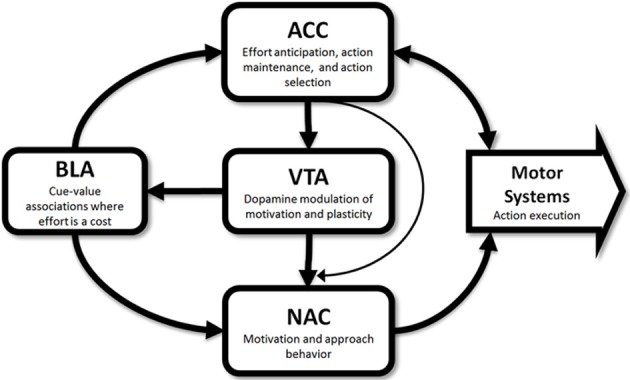

Results from studies that have selectively targeted cortical and subcortical structures, either through lesions or through pharmacological manipulations, suggest the existence of a network involved in effort-guided decision making, with the principal nodes of this network being localized in the ACC, BLA, and NAC. For example, lesions or inactivation of the ACC result in animals avoiding previously preferred high-effort/high-reward options on the barrier-climbing task (Walton et al., 2003; Rudebeck et al., 2006; Floresco and Ghods-Sharifi, 2007). Notably, the placement of a second barrier on the formerly low-effort arm results in lesioned animals returning to the high-reward/high-effort option (Walton et al., 2002), suggesting that the observed shift in preference is not a consequence of an inability to climb the barrier or an incapacity to recall reward values. This effect does not appear to extend to prefrontal regions ventral to the ACC, as lesions of the prelimbic (Walton et al., 2003) and orbitofrontal cortex (Rudebeck et al., 2006) do not result in effort-avoidance. Similar patterns of effort-avoidance have been observed following disruption of subcortical regions such as the NAC and BLA. For example, effort avoidance has been reported in rats performing lever-press tasks following muscimol/baclofen inactivation of the NAC core (Ghods-Sharifi and Floresco, 2010) and on barrier-climbing tasks following the infusion of dopaminergic antagonists into the NAC (Salamone et al., 1994) or 6-OHDA dopamine depletion of the NAC (Mai et al., 2012). Similarly, muscimol/baclofen inactivation of the BLA on a lever-press task (Ghods-Sharifi et al., 2009) and excitotoxic lesions of the BLA in a barrier-climbing task (Ostrander et al., 2011) also produce effort-aversion in rats. Taken together, these data suggest the presence of a distributed cortical-subcortical network for effort processing.
A schematic of this network along with possible modes of interaction is presented in Figure 1.

Figure 1.

Schematic of potential functions of neural systems within the effort network. Results from studies in rodents and primates suggest the involvement of the anterior cingulate cortex (ACC), basolateral amygdala (BLA), nucleus accumbens (NAC), and dopamine neurons in the ventral tegmental area (VTA) in effort-guided decision making. Captions in each box indicate a proposed role for each structure in effort-guided behavior, and arrows indicate possible routes of information flow. These routes are based largely on the results of disconnection studies and anatomical studies performed in rodents.

Although experiments such as those described above have identified individual structures involved in effort-related processing, little is known regarding the specific roles these structures play in effort-guided decision making or how interactions between structures influence behavior. Important steps in this direction have been made through the investigation of the behavioral consequences of the functional disconnection of structures within this “effort network.” For example, the functional disconnection of the BLA and ACC through the targeted inactivation of the BLA in one hemisphere and the ACC in the contralateral hemisphere (disconnecting within-hemisphere communication) results in a degree of effort-avoidance that is similar to the degree observed following bilateral ACC or BLA lesions (Floresco and Ghods-Sharifi, 2007). In this study, lesions were in the most caudal region of the BLA, a region that does not receive significant input from the ACC (Sesack et al., 1989). This suggests that information could move from the BLA to the ACC during effort-guided decision making. Evidence for directional flow of information from the BLA to the ACC, along with an established role of the BLA in ascribing positive and negative affective values to stimuli and outcomes (Baxter and Murray, 2002), indicates that information about the effort-discounted value of rewards could be transferred from the BLA to the ACC during decision making (Floresco and Ghods-Sharifi, 2007). The ACC, given its role in integrating sensory and motor information in the service of action-selection (Shima and Tanji, 1998; Kennerley et al., 2009), may utilize information about outcome value from regions such as the BLA to bias action selection toward high-value options (Walton et al., 2006). This interpretation, however, must be tempered as it is possible that the ACC drives activity in the posterior BLA through indirect routes (e.g., through the anterior BLA).
Future experiments involving simultaneous recording from the ACC and BLA during effort-guided behavior could further elucidate the question of the direction of information flow.

Interactions between the ACC and the NAC also appear to influence effort-guided behaviors. For example, the excitotoxic disconnection of the ACC and NAC core also results in effort-avoidance on a barrier-climbing task (Hauber and Sommer, 2009). There are multiple routes through which an ACC-NAC disconnection could disrupt inter-region communication. For example, projections from the ACC to NAC exist (Zahm and Brog, 1992; Voorn et al., 2004; Haber et al., 2006), although these are relatively weak (Gabbott et al., 2005). A second route is through the ventral tegmental area (VTA): the ACC projects to the VTA (Oades and Halliday, 1987), ACC stimulation is capable of activating VTA neurons (Gariano and Groves, 1988), and the VTA, in turn, projects to the NAC (Oades and Halliday, 1987). In contrast to the clear direct and indirect projections from the ACC to the NAC, there are few projections from the NAC to the ACC (Hoover and Vertes, 2007), suggesting that information flows from the ACC to the NAC (Hauber and Sommer, 2009). The specific involvement of the NAC in motivated approach behavior (Berridge and Robinson, 2003; Salamone et al., 2007) suggests that the NAC receives ACC output related to the chosen action and utilizes this information to trigger or sustain an approach response.

In agreement with the proposed role of the ACC in action selection (Shima and Tanji, 1998), the preceding results suggest that the ACC integrates information about outcome values from regions such as the BLA in order to guide decision making. Support for a role of the ACC in action selection, however, is weakened by recent physiological observations in rodents that indicate that neural responses in the ACC to actions and to anticipated effort actually occur after action selection (Cowen et al., 2012). These observations are in agreement with an alternative view of ACC involvement in sustaining goal-directed responses across delays (Cowen et al., 2012). For example, Narayanan and Laubach (2006) demonstrated that ACC activity is required for sustaining a lever-press response in a time-estimation task. This conclusion is further supported by observations from primate studies that implicate the ACC in maintaining actions across trials (Kennerley et al., 2006; Hayden et al., 2011).

It must be cautioned that while these observations highlight similarities between primates and rodents, such comparisons are complicated by notable anatomical and functional differences between species (Preuss, 1995; Uylings, 2003; but see Vogt and Paxinos, 2012). For example, there is considerable evidence that the primate ACC can be divided into motor, cognitive, and affective components along the dorso-ventral/posterior-anterior axis (Barbas and De Olmos, 1990; Luppino et al., 1991; Bates and Goldman-Rakic, 1993; Barbas and Blatt, 1995; Picard and Strick, 1996), with most studies of effort-driven responses in primates targeting dorsal/motor regions (e.g., Kennerley et al., 2009). Although anatomical divisions along the anterior-posterior axis of the rodent ACC do appear to correspond generally to the divisions reported in primates (Vogt and Paxinos, 2012), few studies of behavioral/functional differences along this anterior-posterior axis in the rodent ACC have been performed. Interestingly, differences in motor sensitivity along the dorso-ventral axis of the rodent medial prefrontal cortex have been observed, with individual neurons in dorsal regions, including the ACC, exhibiting the greatest sensitivity to movement (Cowen and McNaughton, 2007).

Dopamine and effort-guided behavior

Dopamine is traditionally viewed as a key modulator of reward-driven learning; however, the observation that dopamine modulates effort-guided behaviors suggests that this view requires elaboration (Wise, 2004; Salamone et al., 2012). For example, physical exercise in the form of swimming and running on a wheel or a treadmill has been repeatedly associated with increases in dopamine concentration (reviewed in Meeusen and De Meirleir, 1995). Such increases have been observed throughout the striatum (Freed and Yamamoto, 1985; Hattori et al., 1994; Meeusen et al., 1997), and a particularly strong relationship between dopamine concentration and running speed has been reported in the NAC (Freed and Yamamoto, 1985). In addition, rats receiving systemic administration of D1 (Bardgett et al., 2009) and D2 antagonists (Denk et al., 2005; Floresco et al., 2008; Bardgett et al., 2009; Salamone et al., 2012) shift their preference away from high-effort/high-reward options to low-effort/low-reward alternatives. Conversely, the application of the dopaminergic agonist D-amphetamine biases responses toward high-reward/high-effort options (Bardgett et al., 2009; but see Floresco et al., 2008).

The influence of dopamine on effort depends on dopamine's effect on specific structures within the effort-network. For instance, targeted 6-OHDA depletion of dopaminergic terminals or blockade of dopamine receptors within the NAC (Salamone, 1994; Cousins et al., 1996; Ishiwari et al., 2004) and the ACC (Schweimer and Hauber, 2006; but see Walton and Croxson, 2005) results in effort-avoidance during cost-benefit decision making. Furthermore, results from a microdialysis study of striatal dopamine during a random-ratio reinforcement task indicate that slow fluctuations in dopamine concentration (over minutes) reflect an integration of factors such as motivational state (e.g., hunger) and the effort required to obtain rewards (Ostlund et al., 2011). Taken together, these data indicate that dopamine concentration within the ACC and the NAC influences the willingness of animals to exert physical effort in exchange for valued rewards. This function relates to recent theoretical proposals in which the level of tonic dopamine release modulates response vigor and response speed (Niv et al., 2005) as well as the estimation of long-term reinforcement rates (Niv et al., 2007; Ostlund et al., 2011).

Although there is clear evidence for a role of dopamine release in the NAC in effort-guided behaviors, virtually nothing is known regarding how this release is regulated. For example, the principal source of dopaminergic innervation of the NAC is the VTA, and it is unknown what systems drive VTA activity during effort-guided behaviors. The ACC could be involved given its capacity to trigger burst-firing in dopaminergic neurons (Gariano and Groves, 1988), and the previously described role of the ACC in sustaining motor actions (Narayanan et al., 2006; Cowen et al., 2012) would suggest, somewhat indirectly, ACC involvement in sustaining a dopaminergic response. An important path for future research is the exploration of interactions between systems within the effort network such as the ACC and BLA and the VTA.

Dopamine can modulate the activity of its targets across many time scales (Schultz, 2007), and the time scale most relevant for effort-guided behaviors has yet to be determined. For example, dopamine neurons can express tonic and phasic modes of firing activity (Grace, 1991), and these modes may play distinct roles in modulating effort-guided behaviors. Tonic activity is associated with slow release (over seconds) of dopamine, while phasic release is associated with brief (~300 ms) bursts of firing activity that produce large but temporary increases in dopamine concentration (Grace, 1991). There is some debate, however, regarding whether changes in extracellular dopamine concentration result from tonic or phasic release (Floresco et al., 2003; Owesson-White et al., 2012). Functionally, tonic patterns of firing activity of dopamine neurons have been associated with estimating the uncertainty of reward delivery in primates (Fiorillo et al., 2003), while phasic release is implicated in stimulus-driven orienting behaviors (Dommett et al., 2005) and associative plasticity (Hollerman and Schultz, 1998). Phasic and tonic patterns of release may also cooperate to regulate inter-region communication (Goto and Grace, 2005).

Little is known regarding the relative contribution of phasic and tonic release to effort-guided behaviors; however, three recent studies using fast scan cyclic voltammetry were unable to identify a clear effect of effort on phasic responses within the NAC (Day et al., 2010; Gan et al., 2010; Wanat et al., 2010). For example, two of these studies were unable to identify a significant phasic response to effort-predictive cues in an instrumental task, although they did identify clear phasic responses to reward-predictive cues (Gan et al., 2010; Wanat et al., 2010). Furthermore, Day et al. (2010) reported an unanticipated reduction of phasic NAC dopamine release following increased effort. It is also unclear how short-duration phasic responses could exert the sustained effect on motivational state required to overcome costs such as physical effort (Niv et al., 2007). One way to accommodate this concern would be if phasic responses modulated the learning of associations between effort, stimuli, and outcomes during early stages of training, as such learning could have lasting effects on behavior. Future work in this area may yield important results given considerable evidence for a role of phasic dopamine in learning (Schultz et al., 1997; Waelti et al., 2001). Indeed, such investigations may explain the difficult-to-resolve issue of how effort, under the right conditions, can enhance the reinforcing value of effort-associated cues, actions, and primary rewards (Eisenberger, 1992; Zentall, 2010; Johnson and Gallagher, 2011).

The variable effects of dopamine on effort-guided behaviors and the different timescales at which dopamine influences neural activity present significant challenges to researchers hoping to create a unifying theory of function. These challenges are compounded by experimental results that suggest that the involvement of dopamine and the NAC in effort-guided behaviors depends on the specific form of physical effort being employed. For example, 6-OHDA depletion of NAC dopamine significantly impairs effort-guided behavior when effort is assessed using fixed-ratio schedules; however, the same dopaminergic manipulation has no effect when effort is in the form of a weighted lever (Ishiwari et al., 2004). One interpretation of these different results is that NAC dopamine is required for sustaining effort over time. This interpretation, however, does not fit well with results from T-maze barrier climbing tasks in which the time required for expending effort within a trial is quite brief. Instead, dopaminergic manipulations may be most effective when sequences of effortful motor acts must be maintained. For example, the degree of motor control required to press a weighted lever a single time is probably similar to the control required for a single press of an unweighted lever. In contrast, the control required to execute a single lever-press is probably considerably less than what is required to sustain a repeating sequence of presses or, in the case of barrier-climbing, to execute a complex jumping procedure. This idea may relate to a formulation of decision-related costs proposed by Shenhav et al. (2013) in which cost is the degree to which control mechanisms must be engaged during a behavior. The authors go further to suggest that the dorsal ACC allocates control to a given mental or physical operation based on the estimated value of the outcome. The value signal used in this allocation process is suggested to arrive from regions such as the amygdala, insula, and dopaminergic system (Shenhav et al., 2013).

Neural systems involved in setting the preference for risk

Studies in humans, non-human primates, and rodents have identified diverse cortical and subcortical structures involved in setting the preference for, or aversion to, risk. In human studies, the Iowa Gambling Task has become an established task for investigating the neural basis for risk-guided decision making (Bechara et al., 1994). In this task, subjects choose between four decks of cards, with the decks having different levels of probabilistic gains and losses and different long-term returns. Risk-preference is measured as a preference for suboptimal high-risk/high-reward decks over optimal low-risk alternatives. A preference for high-risk options has been observed in patients with damage to the ventromedial prefrontal cortex and amygdala (Bechara et al., 1994, 1999). Furthermore, BOLD activation of the medial frontal gyrus, lateral orbitofrontal cortex, and insula increases when subjects choose suboptimal high-risk options (Lawrence et al., 2009).

A criticism of the use of the Iowa Gambling Task for assessing risk-preference is that the task places multiple cognitive demands upon subjects that are unrelated to risk. This is problematic as a preference for risky alternatives or neural responses related to risk preference could, in this task, result from a reduced capacity to shift attention, to perform behavioral reversals, to process losses, or to store items in working memory (Maia and McClelland, 2004; Dunn et al., 2006). To address this issue, some fMRI studies in humans and most studies in non-human primates and rodents use paradigms that more directly assess the preference for, or aversion to, variability in reward delivery. These studies have implicated a large number of structures in risk-guided decision making, such as the lateral frontal cortex (Tobler et al., 2009), medial prefrontal cortex (St. Onge and Floresco, 2010), insula (Preuschoff et al., 2008; Rudorf et al., 2012), posterior parietal cortex (Huettel et al., 2006), ACC (Christopoulos et al., 2009; Kennerley et al., 2009), posterior cingulate cortex (McCoy and Platt, 2005), VTA (Fiorillo et al., 2003), putamen (Preuschoff et al., 2008), and ventral striatum (Cardinal and Howes, 2005; Preuschoff et al., 2006; Stopper et al., 2013).

Connecting results from human studies to results from studies using non-human primates and rodents is a significant challenge given the wide range of structures identified within and across species. Even so, there are points of intersection. Interestingly, many of these points of intersection include elements of the effort network, such as the ACC, NAC, BLA, and dopamine (Amiez et al., 2006; Behrens et al., 2007; Pais-Vieira et al., 2007; Platt and Huettel, 2008; Preuschoff et al., 2008; Choi and Kim, 2010; Fitzgerald et al., 2010; Rivalan et al., 2011; Schultz et al., 2011; Zeeb and Winstanley, 2011). The following sections review the involvement of these systems in risk-guided behaviors.

Risk and the anterior cingulate cortex

Multiple lines of evidence suggest a role of the primate ACC in evaluating risk and in altering risk preference. In non-human primates, the spiking activity of subsets of ACC neurons changes monotonically with the probability that a cue predicts reward (Kennerley et al., 2009). In humans, BOLD activity in the ACC increases prior to decisions involving risk (Labudda et al., 2008; Weber and Huettel, 2008; Lawrence et al., 2009; Smith et al., 2009). Furthermore, the combination of BOLD activation of the ACC and striatum predicts risk-preference on subsequent trials (Christopoulos et al., 2009), suggesting that ACC/striatal activation sets a baseline preference for risk. The ACC may specialize in monitoring a form of risk known as volatility or “uncertain uncertainty,” a form of uncertainty in which distributions and means of outcome delivery are unknown (Rushworth and Behrens, 2008). BOLD activity in the ACC scales with the amount of information an outcome provides about the underlying outcome distribution (Behrens et al., 2007). For a foraging animal, adapting to volatility is important as it determines how much weight to place on the history of reward delivery, with high volatility indicating that more attention should be paid to recent history. Results from lesion studies of the primate ACC suggest that the ACC plays an important role in tracking reward history (Kennerley et al., 2006), and so ACC responses to volatility would support this function.
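The link between volatility and the weighting of recent history can be illustrated with a simple delta-rule learner. This is a sketch under stated assumptions rather than the model used in the cited studies: the delta rule, the learning rates, the starting estimate, and the outcome sequence are all chosen here for illustration. A higher learning rate stands in for the greater weight on recent outcomes that a volatile environment calls for.

```python
def delta_rule_estimate(outcomes, learning_rate, initial=0.5):
    """Running estimate of reward probability after a sequence of outcomes."""
    estimate = initial
    for outcome in outcomes:
        # Move the estimate toward each outcome by a fraction of the error.
        estimate += learning_rate * (outcome - estimate)
    return estimate

# Hypothetical history: 20 rewarded trials, then the source stops paying out.
outcomes = [1] * 20 + [0] * 5

slow_estimate = delta_rule_estimate(outcomes, learning_rate=0.05)
fast_estimate = delta_rule_estimate(outcomes, learning_rate=0.5)
print(round(slow_estimate, 3), round(fast_estimate, 3))
```

After the change in payout, the learner with the high learning rate has largely abandoned its earlier estimate, whereas the learner with the low learning rate still reflects the older history.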

When compared to results from primates, results from studies in rodents have not produced consistent evidence for a role of the ACC in risk-guided behaviors. Although inactivation (St. Onge and Floresco, 2010), lesions (Rivalan et al., 2011), or dopaminergic blockade (St. Onge et al., 2011) of the medial prefrontal cortex do alter risk-preference, studies that have specifically targeted the rodent ACC have not reported alterations in either of two tests of risk preference (St. Onge and Floresco, 2010; Rivalan et al., 2011).

Risk, dopamine, and the nucleus accumbens

Dopamine plays a central albeit complex role in modulating the preference for risk. For example, systemic administration of dopamine D1 and D2 antagonists in rats results in the avoidance of high-risk/high-reward options in a 2-lever risk-discounting task (St. Onge and Floresco, 2009) while amphetamine or D1 or D2 agonists increased the preference for high-risk/high-reward options in the same task (St. Onge and Floresco, 2009). Conversely, a study that combined the risk of reward with the risk of punishment (foot shock) reported that systemic administration of D2 agonists and amphetamine reduced risk taking for suboptimal large-reward/punishment options (Simon et al., 2011). Similarly, another study reported that the systemic application of D2 antagonists resulted in the avoidance of high-risk/reward options on a rodent version of the Iowa Gambling Task that also incorporated punishment in the form of time-outs (Zeeb et al., 2009). The specific effects of systemic D2 antagonism can be difficult to interpret given that D2-like receptors are located on presynaptic dopaminergic afferents and on the postsynaptic terminals of their cortical and striatal targets (Palij et al., 1990; Timmerman et al., 1990; Santiago and Westerink, 1991). Taken together, these results do indicate that dopamine plays a clear but complex role in risk-guided behaviors, and the specific role may depend on the task, the presence or absence of punishment, and the receptor type that is targeted.

Results from single-unit studies of dopaminergic neurons in the primate VTA also suggest a role of dopamine in evaluating risk. For example, the activity of dopaminergic neurons follows the probability of receiving a future reward, with the activity of these neurons peaking when the capacity to predict a reward is lowest (Fiorillo et al., 2003; Tobler et al., 2005). Similarly, imaging studies of the midbrain in humans show increased BOLD activation under conditions of outcome uncertainty (Aron et al., 2004). The effects of dopaminergic manipulations in humans also support a role for dopamine in shaping risk preference. For example, patients undergoing dopamine agonist therapy for the treatment of Parkinson's disease and restless leg syndrome exhibit a four-fold increase in gambling-related problems (Dodd et al., 2005; Dang et al., 2011), while low doses of amphetamine increase self-reports of the desire to gamble in problem gamblers (Zack and Poulos, 2004). Interestingly, the application of antagonists that targeted only D2 receptors increased the perceived reward of a slot-machine gambling episode (Zack and Poulos, 2007). Finally, changes in striatal and cortical dopamine receptor populations and dopamine transporters (DAT) over an organism's development correspond with age-associated shifts in risk preference (Wahlstrom et al., 2010).

As in effort-guided decision making, the influence of dopamine on risk preference may be through dopamine's regulation of the NAC. For example, inactivation or lesions of the NAC in rodents reduce the preference for large, uncertain rewards (Cardinal and Howes, 2005; Stopper et al., 2013). Furthermore, infusion of D1 antagonists into the NAC of rats decreases the preference for larger, uncertain rewards on a probability discounting task. In contrast, infusion of D1 or D2/D3 agonists into the NAC increases risk preference when reward probability is high (Norbury et al., 2013; Stopper et al., 2013; however, see Mai and Hauber, 2012). Infusion of D1 agonists into the NAC may also “optimize” decision making in treated animals, as one study reported that treated rats were more likely to choose the large and uncertain reward when reward probability was high, but were more likely to avoid this option when probabilities were low (Stopper et al., 2013). Further, background dopamine levels appear to peak on trials during which reward delivery is uncertain (St. Onge et al., 2012a). Similarly, one study reported that BOLD responses in the human NAC were largest under conditions of maximal uncertainty (Preuschoff et al., 2006). In another study, NAC BOLD activation increased before subjects made risky choices in a task involving decisions between high (stocks) or low (bonds) risk investments (Kuhnen and Knutson, 2005). These patterns of activation mirror patterns observed in the firing activities of dopaminergic neurons of the VTA of non-human primates (Fiorillo et al., 2003; Tobler et al., 2005).

Taken together, these results suggest that increased NAC dopamine enhances the tolerance of risk while reductions in NAC dopamine reduce risk preference. A similar pattern was observed for effort, with enhanced NAC dopamine resulting in an increased willingness to work for rewards and reduced dopamine resulting in effort avoidance. The following section presents possible mechanisms by which these similar patterns contribute to the influence of effort on risk preference.

Risk and the basolateral amygdala

The BLA is also associated with the assessment of risk, although fewer studies have targeted the BLA relative to the regions discussed previously. For example, results from a study by Ghods-Sharifi et al. (2009) suggest that inactivation of the BLA leads to risk-averse behavior. In this study, animals chose between a lever that delivered a small, certain reward and a lever that delivered a large reward with decreasing reinforcement probabilities that shifted from certain (reward on 100% of trials) to risky (reward on 12.5% of trials). Rats with bilateral inactivation of the BLA preferred the low-risk lever when compared with saline controls. In the same study, the authors investigated effort discounting using a ratio schedule of reinforcement and reported that BLA inactivation also resulted in effort aversion (Ghods-Sharifi et al., 2009). Taken together, these results suggest a general role for the BLA in overcoming costs associated with both effort and risk. However, observations from a recent disconnection study performed by the same group suggest that the role of the BLA and its interaction with other structures is nuanced. In this study, the authors disconnected the BLA from the mPFC and the BLA from the NAC in a similar risk-discounting task (St. Onge et al., 2012b). The authors observed that BLA-mPFC disconnection resulted in increased risk preference while BLA-NAC disconnection resulted in risk aversion. The study further determined that it was the mPFC to BLA and not the BLA to mPFC connection that was involved in enhancing risk preference. This finding suggests that the mPFC input to the BLA could serve to blunt risk-seeking so that animals can optimize foraging behavior over time.
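The logic of the probability discounting task described above can be illustrated with a short expected-value calculation. The sketch below is only illustrative: the reward magnitudes (1 vs. 4 units) and the intermediate probabilities (50% and 25%) are assumptions chosen for the example, and only the 100% and 12.5% endpoints come from the text.

```python
# Illustrative expected-value comparison for a probability discounting task.
# ASSUMPTIONS (not taken from the text): reward magnitudes of 1 and 4 units,
# and the intermediate probabilities 0.5 and 0.25.

SMALL_CERTAIN = 1.0                       # hypothetical small, certain reward
LARGE_RISKY = 4.0                         # hypothetical large, uncertain reward
PROBABILITIES = [1.0, 0.5, 0.25, 0.125]   # endpoints (100%, 12.5%) from the text


def expected_value(magnitude, probability):
    """Long-run average payoff of an option per choice."""
    return magnitude * probability


def better_option(probability):
    """Return which lever yields the higher expected value at this probability."""
    ev_risky = expected_value(LARGE_RISKY, probability)
    ev_safe = expected_value(SMALL_CERTAIN, 1.0)
    if ev_risky > ev_safe:
        return "risky"
    if ev_risky < ev_safe:
        return "safe"
    return "equal"


# One row per probability block: (probability, EV of risky lever, better lever)
table = [(p, expected_value(LARGE_RISKY, p), better_option(p)) for p in PROBABILITIES]

for probability, ev_risky, choice in table:
    print(f"p = {probability:.3f}  EV(risky) = {ev_risky:.2f}  better: {choice}")
```

Under these assumed values, the risky lever is advantageous early in the session and disadvantageous at the lowest probability, so a shift toward the small, certain lever after BLA inactivation is most informative in the blocks where the risky lever still has the higher expected value.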

How does effort alter risk preference?

Increased metabolic demands and expenditure of physical effort can enhance the preference for variable rewards (Caraco, 1980, 1981; Caraco et al., 1990; Kirshenbaum et al., 2003). It has been proposed that a shift in preference for variable rewards is an adaptive behavior that biases animals to gamble on potentially large and life-sustaining gains (Stephens, 1981). The neural mechanisms underlying the capacity of effort to alter risk preference are unknown. Broadly, there are at least two routes by which effort could influence risk. First, the neural systems involved in effort- and risk-guided behaviors overlap notably (e.g., the NAC, ACC, and BLA), and neurons within some of these structures respond to both effort and risk (e.g., Kennerley et al., 2009). Activity in these structures that results from anticipated or experienced effort could therefore alter, and consequently bias, the processes within these structures that evaluate risk. Alternatively, it is possible that neural structures that are not typically associated with effort or risk could become engaged only when environmental contingencies require the integration of both of these considerations (Burke et al., 2013). For example, Burke et al. (2013) observed significant BOLD activation of the frontal pole only when combinations of effort and risk were being considered. The authors of this study did not investigate the neural basis for the capacity of effort to alter risk preference, and, to our knowledge, no direct investigation of this question has been performed. It is therefore hoped that the following and admittedly speculative proposals will motivate future experiments to fill this gap in understanding.
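The adaptive argument attributed above to Stephens (1981) can be made concrete with a minimal numerical sketch of a shortfall-minimizing (z-score) rule. The sketch assumes that energy intake from each option is normally distributed and that both options share the same mean; the function names and all numerical values are hypothetical and chosen only for illustration.

```python
import math

# Minimal sketch of a shortfall-minimizing (z-score) rule.
# ASSUMPTIONS: intake is normally distributed, both options have the same
# mean, and all numbers below are hypothetical illustration values.


def shortfall_probability(mean_intake, sd_intake, requirement):
    """Probability that intake falls below the energetic requirement."""
    z = (requirement - mean_intake) / sd_intake
    # Standard normal cumulative distribution function evaluated at z
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def preferred_option(mean_intake, requirement, sd_stable, sd_variable):
    """Choose the option with the lower probability of an energetic shortfall."""
    p_stable = shortfall_probability(mean_intake, sd_stable, requirement)
    p_variable = shortfall_probability(mean_intake, sd_variable, requirement)
    return "variable" if p_variable < p_stable else "stable"


REQUIREMENT = 10.0  # hypothetical daily energetic requirement

# Positive energy budget: expected intake exceeds the requirement
print(preferred_option(12.0, REQUIREMENT, sd_stable=1.0, sd_variable=4.0))

# Negative energy budget (e.g., after costly effort): expected intake falls short
print(preferred_option(8.0, REQUIREMENT, sd_stable=1.0, sd_variable=4.0))
```

Under these assumptions, the stable option minimizes the chance of a shortfall when expected intake exceeds the requirement, whereas the variable option does so when expected intake falls below it. Increased effort could, in principle, move an animal from the first regime to the second by raising the requirement.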

Effort-triggered increases in NAC dopamine concentration enhance risk preference

Dopamine is an important modulator of both effort- and risk-guided behaviors. Here we propose that the release of dopamine in the NAC in response to physical effort enhances risk preference. This idea is supported by the following observations. First, dopamine concentrations within the NAC increase with physical exercise (Freed and Yamamoto, 1985). Furthermore, increases in dopamine concentration are associated with increased risk preference in humans (Zack and Poulos, 2004; Dodd et al., 2005; Dang et al., 2011) and rodents (St. Onge and Floresco, 2009; Stopper et al., 2013). Finally, stimulation of dopamine D1 receptors within the NAC enhances risk preference (Stopper et al., 2013; however, see Mai and Hauber, 2012). Consequently, we propose that effort-induced increases in NAC dopamine concentration drive risk preference.

What remains to be specified is the mechanism by which NAC dopamine concentration is regulated during effort-guided behaviors. In this regard, the ACC may play a central role given abundant evidence for ACC involvement in effort-guided behaviors (reviewed in sections Experimental Approaches for Studying Effort-Guided Behaviors, Cortical and Sub-Cortical Circuits Involved in Effort-Guided Behaviors, and Dopamine and Effort-Guided Behavior; see Figure 1). Furthermore, activation of the ACC is capable of driving the responses of dopaminergic neurons in the VTA (Gariano and Groves, 1988), the principal source of NAC dopamine. Activity within the dorsomedial prefrontal cortex, including the ACC, is also capable of sustaining motor activity during delay intervals (Narayanan and Laubach, 2006; Narayanan et al., 2006), suggesting that ACC neurons could sustain dopaminergic responses and enhance dopaminergic tone in the NAC during effortful behaviors. The increase in NAC dopaminergic tone could, as a secondary effect, alter risk-guided behaviors.

The capacity of the ACC to sustain responses over delays may be particularly important for maintaining effortful behaviors, as such behaviors often require maintaining motor responses over seconds and minutes. Consequently, understanding the timescale of dopamine release in the NAC during effortful behavior may be critical for understanding its role in modulating risk preference. As discussed in section Dopamine and Effort-Guided Behavior, dopamine release can occur on multiple timescales, and the timescale most relevant for effort- and risk-guided behaviors has yet to be determined, although evidence is building that phasic patterns of dopamine release in the NAC are not critical for effort-guided behaviors (Gan et al., 2010; Wanat et al., 2010). Instead, gradual shifts in dopamine release may modulate effort-guided decision making, facilitate the maintenance of effortful responses, and sustain a preference for risk. Increases in tonic firing of VTA neurons correlate with anticipated risk (Fiorillo et al., 2003), suggesting that effort-induced increases in dopamine may alter risk preference.

Interestingly, a recent study has identified within-trial increases in striatal dopamine concentration that ramp up as rats approach goal locations on a T-maze (Howe et al., 2013). These increases appear to be independent of phasic dopamine release and occur at a faster time scale than typically reported for changes in background dopamine concentration. The ACC could potentially drive this ramping response given the capacity of medial prefrontal neurons to sustain firing activity in afferent targets (Narayanan and Laubach, 2006; Narayanan et al., 2006). As suggested by Howe et al. (2013), the ramping response may be involved in setting the ongoing level of motivation. Determining the level of motivation could require the integration of factors such as effort, risk, and expected reward.

Effort-triggered increases in dopamine enhance learning under conditions of uncertainty

The preceding account suggests that effort-induced changes in NAC dopamine enhance risk preference and provide the motivational signal required for animals to sustain effortful behaviors. An alternative mechanism by which effort could influence risk is through dopamine's impact on stimulus-reward learning. Dopamine has an established role in facilitating such learning (Schultz et al., 1997; Day et al., 2007; Flagel et al., 2011; Steinberg et al., 2013), and both tonic and phasic patterns of dopamine release appear to enhance plasticity at hippocampal-NAC synapses (Goto and Grace, 2005). It is therefore possible that increases in NAC dopamine from both effort (Freed and Yamamoto, 1985) and risk (Fiorillo et al., 2003; St. Onge et al., 2012a) could produce an additive effect on plasticity, and, as a result, an additive effect on stimulus-reward learning under conditions that combine risk and effort. Anatomical connectivity between the VTA and the amygdala (Oades and Halliday, 1987) and the capacity of dopamine to gate plasticity within the amygdala (Bissière et al., 2003) suggest that enhancements in plasticity could extend to other limbic structures.

An interesting feature of this idea is that it may account for the paradoxical capacity of effort to enhance the reinforcing properties of cues and primary rewards. Pigeons, for example, prefer colored lights, spatial locations, and primary rewards associated with high effort (Clement et al., 2000; Friedrich and Zentall, 2004; Singer et al., 2007). Similar observations have been reported in starlings (Kacelnik and Marsh, 2002), mice (Johnson and Gallagher, 2011), and humans (Zentall, 2010). Indeed, mice can express a preference for low- over high-calorie foods when low-calorie foods are paired with high effort (Johnson and Gallagher, 2011). If effort-triggered dopamine release enhances stimulus-reward learning in, for example, the amygdala (Bissière et al., 2003), or striatum (Day et al., 2007; Cerovic et al., 2013), then such learning could result in a preference for rewards and reward-predictive cues learned under conditions of effort.

Conclusion

The recent trend of using ethologically motivated experimental design to connect field observations with observations from neuroscience (Glimcher, 2002; Bateson, 2003; Stephens, 2008; McNamara and Houston, 2009) has begun to produce interesting results (e.g., Pearson et al., 2009; Hayden et al., 2011; Kolling et al., 2012). One important insight from foraging research is that animals are particularly attentive to the variability of reward delivery, even when average amounts of rewards are held constant (Stephens and Krebs, 1987; Kacelnik and Bateson, 1996). A second interesting observation is that the preference for such variability can be modulated by energetic state (Caraco, 1981) and physical effort (Kirshenbaum et al., 2003). Although there is compelling behavioral evidence for interactions between effort and risk, neuroscientific investigations of these interactions have not been performed. Given the surprising degree of overlap between networks involved in effort- and risk-guided behaviors, there is good reason to believe that the neural systems involved in evaluating, processing, and setting the preference for effort and risk interact. Consequently, it is hoped that this review will stimulate interest in the risk-effort connection and encourage new experiments that investigate the nuanced role that physical effort plays in foraging and decision making.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank Sara Burke, Nathan Insel, David Euston, Drew Maurer, David Redish, Rachel Samson, Robin Smith, and David Stephens for their insights and helpful contributions.

References

- Amiez C., Joseph J. P., Procyk E. (2006). Reward encoding in the monkey anterior cingulate cortex. Cereb. Cortex 16, 1040–1055. doi: 10.1093/cercor/bhj046

- Aron A. R., Shohamy D., Clark J., Myers C., Gluck M. A., Poldrack R. A. (2004). Human midbrain sensitivity to cognitive feedback and uncertainty during classification learning. J. Neurophysiol. 92, 1144–1152. doi: 10.1152/jn.01209.2003

- Barbas H., Blatt G. J. (1995). Topographically specific hippocampal projections target functionally distinct prefrontal areas in the rhesus monkey. Hippocampus 5, 511–533. doi: 10.1002/hipo.450050604

- Barbas H., De Olmos J. (1990). Projections from the amygdala to basoventral and mediodorsal prefrontal regions in the rhesus monkey. J. Comp. Neurol. 300, 549–571. doi: 10.1002/cne.903000409

- Bardgett M. E., Depenbrock M., Downs N., Points M., Green L. (2009). Dopamine modulates effort-based decision making in rats. Behav. Neurosci. 123, 242–251. doi: 10.1037/a0014625

- Bates J. F., Goldman-Rakic P. S. (1993). Prefrontal connections of medial motor areas in the rhesus monkey. J. Comp. Neurol. 336, 211–228. doi: 10.1002/cne.903360205

- Bateson M. (2003). Interval timing and optimal foraging, in Functional and Neural Mechanisms of Interval Timing, ed Meck W. H. (Boca Raton, FL: CRC Press), 113–142

- Bautista L. M., Tinbergen J., Kacelnik A. (2001). To walk or to fly? How birds choose among foraging modes. Proc. Natl. Acad. Sci. U.S.A. 98, 1089–1094. doi: 10.1073/pnas.98.3.1089

- Baxter M. G., Murray E. A. (2002). The amygdala and reward. Nat. Rev. Neurosci. 3, 563–573. doi: 10.1038/nrn875

- Bechara A., Damasio A. R., Damasio H., Anderson S. W. (1994). Insensitivity to future consequences following damage to human prefrontal cortex. Cognition 50, 7–15. doi: 10.1016/0010-0277(94)90018-3

- Bechara A., Damasio H., Damasio A. R., Lee G. P. (1999). Different contributions of the human amygdala and ventromedial prefrontal cortex to decision-making. J. Neurosci. 19, 5473–5481

- Behrens T. E. J., Woolrich M. W., Walton M. E., Rushworth M. F. S. (2007). Learning the value of information in an uncertain world. Nat. Neurosci. 10, 1214–1221. doi: 10.1038/nn1954

- Berridge K. C., Robinson T. E. (2003). Parsing reward. Trends Neurosci. 26, 507–513. doi: 10.1016/S0166-2236(03)00233-9

- Bissière S., Humeau Y., Lüthi A. (2003). Dopamine gates LTP induction in lateral amygdala by suppressing feedforward inhibition. Nat. Neurosci. 6, 587–592. doi: 10.1038/nn1058

- Brito E Abreu F., Kacelnik A. (1999). Energy budgets and risk-sensitive foraging in starlings. Behav. Ecol. 10, 338–345. doi: 10.1093/beheco/10.3.338

- Burke C. J., Brünger C., Kahnt T., Park S. Q., Tobler P. N. (2013). Neural integration of risk and effort costs by the frontal pole: only upon request. J. Neurosci. 33, 1706–1713. doi: 10.1523/JNEUROSCI.3662-12.2013

- Caraco T. (1980). On foraging time allocation in a stochastic environment. Ecology 61, 119–128. doi: 10.2307/1937162

- Caraco T. (1981). Energy budgets, risk and foraging preferences in dark-eyed Juncos (Junco hyemalis). Behav. Ecol. Sociobiol. 8, 213–217. doi: 10.1007/BF00299833

- Caraco T., Blanckenhorn W. U., Gregory G. M., Newman J. A., Recer G. M., Zwicker S. M. (1990). Risk-sensitivity: ambient temperature affects foraging choice. Anim. Behav. 39, 338–345. doi: 10.1016/S0003-3472(05)80879-6

- Cardinal R. N., Howes N. J. (2005). Effects of lesions of the nucleus accumbens core on choice between small certain rewards and large uncertain rewards in rats. BMC Neurosci. 6:37. doi: 10.1186/1471-2202-6-37

- Cerovic M., d'Isa R., Tonini R., Brambilla R. (2013). Molecular and cellular mechanisms of dopamine-mediated behavioral plasticity in the striatum. Neurobiol. Learn. Mem. 105, 63–80. doi: 10.1016/j.nlm.2013.06.013

- Choi J.-S., Kim J. J. (2010). Amygdala regulates risk of predation in rats foraging in a dynamic fear environment. Proc. Natl. Acad. Sci. U.S.A. 107, 21773–21777. doi: 10.1073/pnas.1010079108

- Christopoulos G. I., Tobler P. N., Bossaerts P., Dolan R. J., Schultz W. (2009). Neural correlates of value, risk, and risk aversion contributing to decision making under risk. J. Neurosci. 29, 12574–12583. doi: 10.1523/JNEUROSCI.2614-09.2009

- Clement T. S., Feltus J. R., Kaiser D. H., Zentall T. R. (2000). “Work ethic” in pigeons: reward value is directly related to the effort or time required to obtain the reward. Psychon. Bull. Rev. 7, 100–106. doi: 10.3758/BF03210727

- Cousins M. S., Atherton A., Turner L., Salamone J. D. (1996). Nucleus accumbens dopamine depletions alter relative response allocation in a T-maze cost/benefit task. Behav. Brain Res. 74, 189–197. doi: 10.1016/0166-4328(95)00151-4

- Cowen S. L., Davis G. A., Nitz D. A. (2012). Anterior cingulate neurons in the rat map anticipated effort and reward to their associated action sequences. J. Neurophysiol. 107, 2393–2407. doi: 10.1152/jn.01012.2011

- Cowen S. L., McNaughton B. L. (2007). Selective delay activity in the medial prefrontal cortex of the rat: contribution of sensorimotor information and contingency. J. Neurophysiol. 98, 303–316. doi: 10.1152/jn.00150.2007

- Craft B. B., Church A. C., Rohrbach C. M., Bennett J. M. (2011). The effects of reward quality on risk-sensitivity in Rattus norvegicus. Behav. Process. 88, 44–46. doi: 10.1016/j.beproc.2011.07.002

- Dang D., Cunnington D., Swieca J. (2011). The emergence of devastating impulse control disorders during dopamine agonist therapy of the restless legs syndrome. Clin. Neuropharmacol. 34, 66–70. doi: 10.1097/WNF.0b013e31820d6699

- Day J. J., Jones J. L., Wightman R. M., Carelli R. M. (2010). Phasic nucleus accumbens dopamine release encodes effort- and delay-related costs. Biol. Psychiatry 68, 306–309. doi: 10.1016/j.biopsych.2010.03.026

- Day J. J., Roitman M. F., Wightman R. M., Carelli R. M. (2007). Associative learning mediates dynamic shifts in dopamine signaling in the nucleus accumbens. Nat. Neurosci. 10, 1020–1028. doi: 10.1038/nn1923

- Denk F., Walton M. E., Jennings K. A., Sharp T., Rushworth M. F. S., Bannerman D. M. (2005). Differential involvement of serotonin and dopamine systems in cost-benefit decisions about delay or effort. Psychopharmacology 179, 587–596. doi: 10.1007/s00213-004-2059-4

- Dodd M. L., Klos K. J., Bower J. H., Geda Y. E., Josephs K. A., Ahlskog J. E. (2005). Pathological gambling caused by drugs used to treat Parkinson disease. Arch. Neurol. 62, 1377–1381. doi: 10.1001/archneur.62.9.noc50009

- Dommett E., Coizet V., Blaha C. D., Martindale J., Lefebvre V., Walton N., et al. (2005). How visual stimuli activate dopaminergic neurons at short latency. Science 307, 1476–1479. doi: 10.1126/science.1107026

- Dunn B. D., Dalgleish T., Lawrence A. D. (2006). The somatic marker hypothesis: a critical evaluation. Neurosci. Biobehav. Rev. 30, 239–271. doi: 10.1016/j.neubiorev.2005.07.001

- Eisenberger R. (1992). Learned industriousness. Psychol. Rev. 99, 248–267. doi: 10.1037/0033-295X.99.2.248

- Fiorillo C. D., Tobler P. N., Schultz W. (2003). Discrete coding of reward probability and uncertainty by dopamine neurons. Science 299, 1898–1902. doi: 10.1126/science.1077349

- Fitzgerald T. H. B., Seymour B., Bach D. R., Dolan R. J. (2010). Differentiable neural substrates for learned and described value and risk. Curr. Biol. 20, 1823–1829. doi: 10.1016/j.cub.2010.08.048

- Flagel S. B., Clark J. J., Robinson T. E., Mayo L., Czuj A., Willuhn I., et al. (2011). A selective role for dopamine in stimulus-reward learning. Nature 469, 53–57. doi: 10.1038/nature09588

- Floresco S. B., Ghods-Sharifi S. (2007). Amygdala-prefrontal cortical circuitry regulates effort-based decision making. Cereb. Cortex 17, 251–260. doi: 10.1093/cercor/bhj143

- Floresco S. B., Tse M. T. L., Ghods-Sharifi S. (2008). Dopaminergic and glutamatergic regulation of effort- and delay-based decision making. Neuropsychopharmacology 33, 1966–1979. doi: 10.1038/sj.npp.1301565

- Floresco S. B., West A. R., Ash B., Moore H., Grace A. A. (2003). Afferent modulation of dopamine neuron firing differentially regulates tonic and phasic dopamine transmission. Nat. Neurosci. 6, 968–973. doi: 10.1038/nn1103

- Freed C. R., Yamamoto B. K. (1985). Regional brain dopamine metabolism: a marker for the speed, direction, and posture of moving animals. Science 229, 62–65. doi: 10.1126/science.4012312

- Friedrich A. M., Zentall T. R. (2004). Pigeons shift their preference toward locations of food that take more effort to obtain. Behav. Process. 67, 405–415. doi: 10.1016/j.beproc.2004.07.001

- Gabbott P. L. A., Warner T. A., Jays P. R. L., Salway P., Busby S. J. (2005). Prefrontal cortex in the rat: projections to subcortical autonomic, motor, and limbic centers. J. Comp. Neurol. 492, 145–177. doi: 10.1002/cne.20738

- Gan J. O., Walton M. E., Phillips P. E. M. (2010). Dissociable cost and benefit encoding of future rewards by mesolimbic dopamine. Nat. Neurosci. 13, 25–27. doi: 10.1038/nn.2460

- Gariano R. F., Groves P. M. (1988). Burst firing induced in midbrain dopamine neurons by stimulation of the medial prefrontal and anterior cingulate cortices. Brain Res. 462, 194–198. doi: 10.1016/0006-8993(88)90606-3

- Ghods-Sharifi S., Floresco S. B. (2010). Differential effects on effort discounting induced by inactivations of the nucleus accumbens core or shell. Behav. Neurosci. 124, 179–191. doi: 10.1037/a0018932

- Ghods-Sharifi S., St. Onge J. R., Floresco S. B. (2009). Fundamental contribution by the basolateral amygdala to different forms of decision making. J. Neurosci. 29, 5251–5259. doi: 10.1523/JNEUROSCI.0315-09.2009

- Glimcher P. (2002). Decisions, decisions, decisions: choosing a biological science of choice. Neuron 36, 323–332. doi: 10.1016/S0896-6273(02)00962-5

- Goto Y., Grace A. A. (2005). Dopamine-dependent interactions between limbic and prefrontal cortical plasticity in the nucleus accumbens: disruption by cocaine sensitization. Neuron 47, 255–266. doi: 10.1016/j.neuron.2005.06.017

- Grace A. A. (1991). Phasic versus tonic dopamine release and the modulation of dopamine system responsivity: a hypothesis for the etiology of schizophrenia. Neuroscience 41, 1–24. doi: 10.1016/0306-4522(91)90196-U

- Haber S. N., Kim K.-S., Mailly P., Calzavara R. (2006). Reward-related cortical inputs define a large striatal region in primates that interface with associative cortical connections, providing a substrate for incentive-based learning. J. Neurosci. 26, 8368–8376. doi: 10.1523/JNEUROSCI.0271-06.2006

- Hattori S., Naoi M., Nishino H. (1994). Striatal dopamine turnover during treadmill running in the rat: relation to the speed of running. Brain Res. Bull. 35, 41–49. doi: 10.1016/0361-9230(94)90214-3

- Hauber W., Sommer S. (2009). Prefrontostriatal circuitry regulates effort-related decision making. Cereb. Cortex 19, 2240–2247. doi: 10.1093/cercor/bhn241

- Hayden B. Y., Pearson J. M., Platt M. L. (2011). Neuronal basis of sequential foraging decisions in a patchy environment. Nat. Neurosci. 14, 933–939. doi: 10.1038/nn.2856

- Hollerman J. R., Schultz W. (1998). Dopamine neurons report an error in the temporal prediction of reward during learning. Nat. Neurosci. 1, 304–309. doi: 10.1038/1124

- Hoover W. B., Vertes R. P. (2007). Anatomical analysis of afferent projections to the medial prefrontal cortex in the rat. Brain Struct. Funct. 212, 149–179. doi: 10.1007/s00429-007-0150-4

- Houston A. (1991). Risk-sensitive foraging theory and operant psychology. J. Exp. Anal. Behav. 56, 585–589. doi: 10.1901/jeab.1991.56-585

- Howe M. W., Tierney P. L., Sandberg S. G., Phillips P. E. M., Graybiel A. M. (2013). Prolonged dopamine signalling in striatum signals proximity and value of distant rewards. Nature 500, 575–579. doi: 10.1038/nature12475

- Huettel S. A., Stowe C. J., Gordon E. M., Warner B. T., Platt M. L. (2006). Neural signatures of economic preferences for risk and ambiguity. Neuron 49, 765–775. doi: 10.1016/j.neuron.2006.01.024

- Ishiwari K., Weber S. M., Mingote S., Correa M., Salamone J. D. (2004). Accumbens dopamine and the regulation of effort in food-seeking behavior: modulation of work output by different ratio or force requirements. Behav. Brain Res. 151, 83–91. doi: 10.1016/j.bbr.2003.08.007

- Johnson A. W., Gallagher M. (2011). Greater effort boosts the affective taste properties of food. Proc. Biol. Sci. 278, 1450–1456. doi: 10.1098/rspb.2010.1581

- Kacelnik A., Bateson M. (1996). Risky theories—the effects of variance on foraging decisions. Am. Zool. 36, 402–434. doi: 10.1093/icb/36.4.402

- Kacelnik A., Marsh B. (2002). Cost can increase preference in starlings. Anim. Behav. 63, 245–250. doi: 10.1006/anbe.2001.1900

- Kennerley S. W., Dahmubed A. F., Lara A. H., Wallis J. D. (2009). Neurons in the frontal lobe encode the value of multiple decision variables. J. Cogn. Neurosci. 21, 1162–1178. doi: 10.1162/jocn.2009.21100

- Kennerley S. W., Walton M. E., Behrens T. E. J., Buckley M. J., Rushworth M. F. S. (2006). Optimal decision making and the anterior cingulate cortex. Nat. Neurosci. 9, 940–947. doi: 10.1038/nn1724

- Kirshenbaum A. P., Szalda-Petree A. D., Haddad N. F. (2003). Increased effort requirements and risk sensitivity: a comparison of delay and magnitude manipulations. Behav. Process. 61, 109–121. doi: 10.1016/S0376-6357(02)00165-1

- Kolling N., Behrens T. E. J., Mars R. B., Rushworth M. F. S. (2012). Neural mechanisms of foraging. Science 336, 95–98. doi: 10.1126/science.1216930

- Kuhnen C. M., Knutson B. (2005). The neural basis of financial risk taking. Neuron 47, 763–770. doi: 10.1016/j.neuron.2005.08.008

- Labudda K., Woermann F. G., Mertens M., Pohlmann-Eden B., Markowitsch H. J., Brand M. (2008). Neural correlates of decision making with explicit information about probabilities and incentives in elderly healthy subjects. Exp. Brain Res. 187, 641–650. doi: 10.1007/s00221-008-1332-x

- Lawrence N. S., Jollant F., O'Daly O., Zelaya F., Phillips M. L. (2009). Distinct roles of prefrontal cortical subregions in the Iowa Gambling Task. Cereb. Cortex 19, 1134–1143. doi: 10.1093/cercor/bhn154

- Luppino G., Matelli M., Camarda R. M., Gallese V., Rizzolatti G. (1991). Multiple representations of body movements in mesial area 6 and the adjacent cingulate cortex: an intracortical microstimulation study in the macaque monkey. J. Comp. Neurol. 311, 463–482. doi: 10.1002/cne.903110403

- Mai B., Hauber W. (2012). Intact risk-based decision making in rats with prefrontal or accumbens dopamine depletion. Cogn. Affect. Behav. Neurosci. 12, 719–729. doi: 10.3758/s13415-012-0115-9

- Mai B., Sommer S., Hauber W. (2012). Motivational states influence effort-based decision making in rats: the role of dopamine in the nucleus accumbens. Cogn. Affect. Behav. Neurosci. 12, 74–84. doi: 10.3758/s13415-011-0068-4

- Maia T. V., McClelland J. L. (2004). A reexamination of the evidence for the somatic marker hypothesis: what participants really know in the Iowa gambling task. Proc. Natl. Acad. Sci. U.S.A. 101, 16075–16080. doi: 10.1073/pnas.0406666101

- McCoy A. N., Platt M. L. (2005). Risk-sensitive neurons in macaque posterior cingulate cortex. Nat. Neurosci. 8, 1220–1227. doi: 10.1038/nn1523

- McNamara J. M., Houston A. I. (2009). Integrating function and mechanism. Trends Ecol. Evol. 24, 670–675. doi: 10.1016/j.tree.2009.05.011

- Meeusen R., De Meirleir K. (1995). Exercise and brain neurotransmission. Sports Med. 20, 160–188. doi: 10.2165/00007256-199520030-00004

- Meeusen R., Smolders I., Sarre S., de Meirleir K., Keizer H., Serneels M., et al. (1997). Endurance training effects on neurotransmitter release in rat striatum: an in vivo microdialysis study. Acta Physiol. Scand. 159, 335–341. doi: 10.1046/j.1365-201X.1997.00118.x

- Narayanan N. S., Horst N. K., Laubach M. (2006). Reversible inactivations of rat medial prefrontal cortex impair the ability to wait for a stimulus. Neuroscience 139, 865–876. doi: 10.1016/j.neuroscience.2005.11.072

- Narayanan N. S., Laubach M. (2006). Top-down control of motor cortex ensembles by dorsomedial prefrontal cortex. Neuron 52, 921–931. doi: 10.1016/j.neuron.2006.10.021

- Niv Y., Daw N. D., Dayan P. (2005). How fast to work: response vigor, motivation and tonic dopamine, in Proceedings of the Neural Information Processing Systems (NIPS), eds Weiss Y., Scholkopf B., Platt J. (MIT Press), 1019–1026

- Niv Y., Daw N. D., Joel D., Dayan P. (2007). Tonic dopamine: opportunity costs and the control of response vigor. Psychopharmacology 191, 507–520. doi: 10.1007/s00213-006-0502-4

- Norbury A., Manohar S., Rogers R. D., Husain M. (2013). Dopamine modulates risk-taking as a function of baseline sensation-seeking trait. J. Neurosci. 33, 12982–12986. doi: 10.1523/JNEUROSCI.5587-12.2013

- Oades R. D., Halliday G. M. (1987). Ventral tegmental (A10) system: neurobiology. 1. Anatomy and connectivity. Brain Res. 434, 117–165

- Ostlund S. B., Wassum K. M., Murphy N. P., Balleine B. W., Maidment N. T. (2011). Extracellular dopamine levels in striatal subregions track shifts in motivation and response cost during instrumental conditioning. J. Neurosci. 31, 200–207. doi: 10.1523/JNEUROSCI.4759-10.2011

- Ostrander S., Cazares V. A., Kim C., Cheung S., Gonzalez I., Izquierdo A. (2011). Orbitofrontal cortex and basolateral amygdala lesions result in suboptimal and dissociable reward choices on cue-guided effort in rats. Behav. Neurosci. 125, 350–359. doi: 10.1037/a0023574

- Owesson-White C. A., Roitman M. F., Sombers L. A., Belle A. M., Keithley R. B., Peele J. L., et al. (2012). Sources contributing to the average extracellular concentration of dopamine in the nucleus accumbens. J. Neurochem. 121, 252–262 10.1111/j.1471-4159.2012.07677.x [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pais-Vieira M., Lima D., Galhardo V. (2007). Orbitofrontal cortex lesions disrupt risk assessment in a novel serial decision-making task for rats. Neuroscience 145, 225–231 10.1016/j.neuroscience.2006.11.058 [DOI] [PubMed] [Google Scholar]

- Palij P., Bull D. R., Sheehan M. J., Millar J., Stamford J., Kruk Z. L., et al. (1990). Presynaptic regulation of dopamine release in corpus striatum monitored in vitro in real time by fast cyclic voltammetry. Brain Res. 509, 172–174 10.1016/0006-8993(90)90329-A [DOI] [PubMed] [Google Scholar]

- Pearson J. M., Hayden B. Y., Raghavachari S., Platt M. L. (2009). Neurons in posterior cingulate cortex signal exploratory decisions in a dynamic multioption choice task. Curr. Biol. 19, 1532–1537 10.1016/j.cub.2009.07.048 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Picard N., Strick P. L. (1996). Motor areas of the medial wall: a review of their location and functional activation. Cereb. Cortex 6, 342–353 10.1093/cercor/6.3.342 [DOI] [PubMed] [Google Scholar]

- Platt M. L., Huettel S. A. (2008). Risky business: the neuroeconomics of decision making under uncertainty. Nat. Neurosci. 11, 398–403 10.1038/nn2062

- Preuschoff K., Bossaerts P., Quartz S. R. (2006). Neural differentiation of expected reward and risk in human subcortical structures. Neuron 51, 381–390 10.1016/j.neuron.2006.06.024

- Preuschoff K., Quartz S. R., Bossaerts P. (2008). Human insula activation reflects risk prediction errors as well as risk. J. Neurosci. 28, 2745–2752 10.1523/JNEUROSCI.4286-07.2008

- Preuss T. (1995). Do rats have prefrontal cortex? The Rose-Woolsey-Akert program reconsidered. J. Cogn. Neurosci. 7, 1–24 10.1162/jocn.1995.7.1.1

- Rivalan M., Coutureau E., Fitoussi A., Dellu-Hagedorn F. (2011). Inter-individual decision-making differences in the effects of cingulate, orbitofrontal, and prelimbic cortex lesions in a rat gambling task. Front. Behav. Neurosci. 5:22 10.3389/fnbeh.2011.00022

- Rudebeck P. H., Walton M. E., Smyth A. N., Bannerman D. M., Rushworth M. F. S. (2006). Separate neural pathways process different decision costs. Nat. Neurosci. 9, 1161–1168 10.1038/nn1756

- Rudorf S., Preuschoff K., Weber B. (2012). Neural correlates of anticipation risk reflect risk preferences. J. Neurosci. 32, 16683–16692 10.1523/JNEUROSCI.4235-11.2012

- Rushworth M. F. S., Behrens T. E. J. (2008). Choice, uncertainty and value in prefrontal and cingulate cortex. Nat. Neurosci. 11, 389–397 10.1038/nn2066

- Salamone J. (1994). The involvement of nucleus accumbens dopamine in appetitive and aversive motivation. Behav. Brain Res. 61, 117–133 10.1016/0166-4328(94)90153-8

- Salamone J., Correa M., Farrar A., Mingote S. (2007). Effort-related functions of nucleus accumbens dopamine and associated forebrain circuits. Psychopharmacology 191, 461–482 10.1007/s00213-006-0668-9

- Salamone J. D., Correa M., Nunes E. J., Randall P. A., Pardo M. (2012). The behavioral pharmacology of effort-related choice behavior: dopamine, adenosine and beyond. J. Exp. Anal. Behav. 97, 125–146 10.1901/jeab.2012.97-125

- Salamone J. D., Cousins M. S., Bucher S. (1994). Anhedonia or anergia? Effects of haloperidol and nucleus accumbens dopamine depletion on instrumental response selection in a T-maze cost/benefit procedure. Behav. Brain Res. 65, 221–229 10.1016/j.jneumeth.2007.03.007

- Santiago M., Westerink B. H. (1991). Characterization and pharmacological responsiveness of dopamine release recorded by microdialysis in the substantia nigra of conscious rats. J. Neurochem. 57, 738–747 10.1111/j.1471-4159.1991.tb08214.x

- Schultz W. (2007). Multiple dopamine functions at different time courses. Annu. Rev. Neurosci. 30, 259–288 10.1146/annurev.neuro.28.061604.135722

- Schultz W., Dayan P., Montague P. R. (1997). A neural substrate of prediction and reward. Science 275, 1593–1599 10.1126/science.275.5306.1593

- Schultz W., O'Neill M., Tobler P. N., Kobayashi S. (2011). Neuronal signals for reward risk in frontal cortex. Ann. N.Y. Acad. Sci. 1239, 109–117 10.1111/j.1749-6632.2011.06256.x

- Schweimer J., Hauber W. (2006). Dopamine D1 receptors in the anterior cingulate cortex regulate effort-based decision making. Learn. Mem. 13, 777–782 10.1101/lm.409306

- Sesack S. R., Deutch A. Y., Roth R. H., Bunney B. S. (1989). Topographical organization of the efferent projections of the medial prefrontal cortex in the rat: an anterograde tract-tracing study with Phaseolus vulgaris leucoagglutinin. J. Comp. Neurol. 290, 213–242 10.1002/cne.902900205

- Shenhav A., Botvinick M. M., Cohen J. D. (2013). The expected value of control: an integrative theory of anterior cingulate cortex function. Neuron 79, 217–240 10.1016/j.neuron.2013.07.007

- Shima K., Tanji J. (1998). Role for cingulate motor area cells in voluntary movement selection based on reward. Science 282, 1335–1338 10.1126/science.282.5392.1335

- Simon N. W., Montgomery K. S., Beas B. S., Mitchell M. R., LaSarge C. L., Mendez I. A., et al. (2011). Dopaminergic modulation of risky decision-making. J. Neurosci. 31, 17460–17470 10.1523/JNEUROSCI.3772-11.2011

- Singer R. A., Berry L. M., Zentall T. R. (2007). Preference for a stimulus that follows a relatively aversive event: contrast or delay reduction? J. Exp. Anal. Behav. 87, 275–285 10.1901/jeab.2007.39-06

- Smith B. W., Mitchell D. G. V., Hardin M. G., Jazbec S., Fridberg D., Blair R. J. R., et al. (2009). Neural substrates of reward magnitude, probability, and risk during a wheel of fortune decision-making task. Neuroimage 44, 600–609 10.1016/j.neuroimage.2008.08.016

- Steinberg E. E., Keiflin R., Boivin J. R., Witten I. B., Deisseroth K., Janak P. H. (2013). A causal link between prediction errors, dopamine neurons and learning. Nat. Neurosci. 16, 966–973 10.1038/nn.3413

- Stephens D. (1981). The logic of risk-sensitive foraging preferences. Anim. Behav. 29, 628–629 10.1016/s0003-3472(81)80128-5

- Stephens D., Brown J., Ydenberg R. (2007). Foraging: Behavior and Ecology. Chicago, IL: University of Chicago Press

- Stephens D. W. (2008). Decision ecology: foraging and the ecology of animal decision making. Cogn. Affect. Behav. Neurosci. 8, 475–484 10.3758/CABN.8.4.475

- Stephens D. W., Krebs J. R. (1987). Foraging Theory. Princeton, NJ: Princeton University Press

- St. Onge J. R., Abhari H., Floresco S. B. (2011). Dissociable contributions by prefrontal D1 and D2 receptors to risk-based decision making. J. Neurosci. 31, 8625–8633 10.1523/JNEUROSCI.1020-11.2011

- St. Onge J. R., Ahn S., Phillips A. G., Floresco S. B. (2012a). Dynamic fluctuations in dopamine efflux in the prefrontal cortex and nucleus accumbens during risk-based decision making. J. Neurosci. 32, 16880–16891 10.1523/JNEUROSCI.3807-12.2012

- St. Onge J. R., Stopper C. M., Zahm D. S., Floresco S. B. (2012b). Separate prefrontal-subcortical circuits mediate different components of risk-based decision making. J. Neurosci. 32, 2886–2899 10.1523/JNEUROSCI.5625-11.2012

- St. Onge J. R., Floresco S. B. (2009). Dopaminergic modulation of risk-based decision making. Neuropsychopharmacology 34, 681–697 10.1038/npp.2008.121

- St. Onge J. R., Floresco S. B. (2010). Prefrontal cortical contribution to risk-based decision making. Cereb. Cortex 20, 1816–1828 10.1093/cercor/bhp250

- Stopper C. M., Khayambashi S., Floresco S. B. (2013). Receptor-specific modulation of risk-based decision making by nucleus accumbens dopamine. Neuropsychopharmacology 38, 715–728 10.1038/npp.2012.240

- Timmerman W., De Vries J. B., Westerink B. H. (1990). Effects of D-2 agonists on the release of dopamine: localization of the mechanism of action. Naunyn Schmiedebergs Arch. Pharmacol. 342, 650–654 10.1007/BF00175707

- Tobler P. N., Christopoulos G. I., O'Doherty J. P., Dolan R. J., Schultz W. (2009). Risk-dependent reward value signal in human prefrontal cortex. Proc. Natl. Acad. Sci. U.S.A. 106, 7185–7190 10.1073/pnas.0809599106

- Tobler P. N., Fiorillo C. D., Schultz W. (2005). Adaptive coding of reward value by dopamine neurons. Science 307, 1642–1645 10.1126/science.1105370

- Uylings H. (2003). Do rats have a prefrontal cortex? Behav. Brain Res. 146, 3–17 10.1016/j.bbr.2003.09.028

- Vogt B. A., Paxinos G. (2012). Cytoarchitecture of mouse and rat cingulate cortex with human homologies. Brain Struct. Funct. [Epub ahead of print]. 10.1007/s00429-012-0493-3

- Voorn P., Vanderschuren L. J. M. J., Groenewegen H. J., Robbins T. W., Pennartz C. M. A. (2004). Putting a spin on the dorsal-ventral divide of the striatum. Trends Neurosci. 27, 468–474 10.1016/j.tins.2004.06.006

- Waelti P., Dickinson A., Schultz W. (2001). Dopamine responses comply with basic assumptions of formal learning theory. Nature 412, 43–48 10.1038/35083500

- Wahlstrom D., Collins P., White T., Luciana M. (2010). Developmental changes in dopamine neurotransmission in adolescence: behavioral implications and issues in assessment. Brain Cogn. 72, 146–159 10.1016/j.bandc.2009.10.013

- Walton M., Croxson P. (2005). The mesocortical dopamine projection to anterior cingulate cortex plays no role in guiding effort-related decisions. Behav. Neurosci. 119, 323–328 10.1037/0735-7044.119.1.323

- Walton M. E., Bannerman D. M., Alterescu K., Rushworth M. F. S. (2003). Functional specialization within medial frontal cortex of the anterior cingulate for evaluating effort-related decisions. J. Neurosci. 23, 6475–6479

- Walton M. E., Bannerman D. M., Rushworth M. F. S. (2002). The role of rat medial frontal cortex in effort-based decision making. J. Neurosci. 22, 10996–11003

- Walton M. E., Groves J., Jennings K. A., Croxson P. L., Sharp T., Rushworth M. F. S., et al. (2009). Comparing the role of the anterior cingulate cortex and 6-hydroxydopamine nucleus accumbens lesions on operant effort-based decision making. Eur. J. Neurosci. 29, 1678–1691 10.1111/j.1460-9568.2009.06726.x

- Walton M. E., Kennerley S. W., Bannerman D. M., Phillips P. E. M., Rushworth M. F. S. (2006). Weighing up the benefits of work: behavioral and neural analyses of effort-related decision making. Neural Netw. 19, 1302–1314 10.1016/j.neunet.2006.03.005

- Wanat M. J., Kuhnen C. M., Phillips P. E. M. (2010). Delays conferred by escalating costs modulate dopamine release to rewards but not their predictors. J. Neurosci. 30, 12020–12027 10.1523/JNEUROSCI.2691-10.2010

- Weber B. J., Huettel S. A. (2008). The neural substrates of probabilistic and intertemporal decision making. Brain Res. 1234, 104–115 10.1016/j.brainres.2008.07.105

- Wise R. (2004). Dopamine, learning and motivation. Nat. Rev. Neurosci. 5, 483–494 10.1038/nrn1406

- Zack M., Poulos C. X. (2004). Amphetamine primes motivation to gamble and gambling-related semantic networks in problem gamblers. Neuropsychopharmacology 29, 195–207 10.1038/sj.npp.1300333

- Zack M., Poulos C. X. (2007). A D2 antagonist enhances the rewarding and priming effects of a gambling episode in pathological gamblers. Neuropsychopharmacology 32, 1678–1686 10.1038/sj.npp.1301295

- Zahm D., Brog J. (1992). On the significance of subterritories in the “accumbens” part of the rat ventral striatum. Neuroscience 50, 751–767 10.1016/0306-4522(92)90202-D

- Zeeb F. D., Robbins T. W., Winstanley C. A. (2009). Serotonergic and dopaminergic modulation of gambling behavior as assessed using a novel rat gambling task. Neuropsychopharmacology 34, 2329–2343 10.1038/npp.2009.62