Abstract

Previous studies have proposed that attention is not necessary for detecting simple features but is necessary for binding them to spatial locations. The present study tested this hypothesis, using the N2pc component of the event-related potential waveform as a measure of the allocation of attention. A simple feature detection condition, in which observers reported whether a target color was present or not, was compared with feature-location binding conditions, in which observers reported the location of the target color. A larger N2pc component was observed in the binding conditions than in the detection condition, indicating that more attentional resources are needed to bind a feature to a location than to detect the feature independently of its location. This finding supports theories of attention in which attention plays a special role in binding features.

This study examines the role of attention in binding surface feature information to spatial locations in visual perception. Treisman’s feature integration theory proposes that spatially focused attention is necessary to localize and bind the features of an object to the locus of focused attention, but that simple, salient features can be detected without focusing attention onto their locations (Treisman, 1988; Treisman & Sato, 1990; Treisman & Gelade, 1980). Many studies have tested this proposal by comparing the attentional requirements of conjunction discrimination with the attentional requirements of feature detection (Cohen & Ivry, 1989; Cohen & Ivry, 1991; Luck, Girelli, McDermott, & Ford, 1997b; Luck & Hillyard, 1994a, 1994b, 1995; Prinzmetal, Presti, & Posner, 1986; Treisman & Sato, 1990; Treisman & Schmidt, 1982). However, there have been no truly direct tests of the hypothesis that perceiving the spatial location of a feature—which requires binding feature and location information—is more attention-demanding than the perception of feature presence.

The most relevant studies tested the hypothesis that single-feature targets can be detected even when they cannot be localized, whereas conjunction targets cannot be detected unless they are localized. Treisman and Gelade (1980) found exactly this pattern of results. However, Johnston and Pashler (1990) argued that these results could have arisen from a guessing strategy and minor errors in location reporting. They designed an experiment to eliminate these possibilities, and they found that observers were unable to correctly report the presence of a feature target unless they were able to at least coarsely localize it. Thus, they concluded that feature detection is accompanied by at least coarse feature localization.

Other studies have indirectly assessed the role of attention in localizing features by comparing performance for feature-defined and conjunction-defined targets in a variety of tasks. In some sense, features must be localized to be correctly bound together, and several studies have found evidence for a greater role of attention for conjunction targets than for feature targets (e.g., Luck & Ford, 1998; Luck et al., 1997b; Prinzmetal et al., 1986; Treisman & Sato, 1990; Treisman & Gelade, 1980). However, it is possible that the binding of multiple surface features such as color and location is achieved on the basis of implicit location information rather than an explicit perception of the feature locations. Indeed, patients with parietal lesions and disrupted attention and spatial abilities have been shown to exhibit implicit localization of features (Robertson, Treisman, Friedman-Hill, & Grabowecky, 1997). The present study was designed to provide a direct test of the attentional requirements of the explicit binding of surface features to their locations in space.

To assess the attentional requirements of feature detection and feature localization, we recorded event-related potentials (ERPs) and focused on the N2pc (N2-posterior-contralateral) component. This ERP component reflects the focusing of attention onto the location of a target item in a visual search array (Eimer, 1996; Luck, Chelazzi, Hillyard, & Desimone, 1997a; Luck & Hillyard, 1994a, 1994b; Woodman & Luck, 1999, 2003). It is generated primarily in ventral stream visual areas, including area V4 and the lateral occipital complex (Hopf et al., 2006a; Hopf et al., 2006b; Hopf et al., 2000). The N2pc appears to be an ERP analog of single-unit attention effects that have been observed in monkeys (Chelazzi, Duncan, Miller, & Desimone, 1998; Chelazzi, Miller, Duncan, & Desimone, 1993, 2001; Luck et al., 1997b), and N2pc-like activity has been observed in ERP recordings from monkeys (Woodman, Kang, Rossi, & Schall, 2007).

Previous research indicates that the amplitude of the N2pc component elicited by a given visual search target reflects the attentional requirements of performing the task for that target. For example, a large N2pc component is elicited both by targets and by nontargets that closely resemble the targets, but little or no N2pc activity is elicited by objects that can be rejected on the basis of salient feature information (Luck & Hillyard, 1994a, 1994b, 1995). In addition, conjunction targets elicit larger N2pc components than simple feature targets (Luck et al., 1997b). Thus, N2pc amplitude is a sensitive measure of the extent to which attention is allocated to a target item in a visual search task.

The goal of the present study was to use N2pc amplitude to determine whether discriminating feature-location bindings (i.e., localizing features) is more attention-demanding than detecting features independent of their locations. Previous N2pc and single-unit studies have provided suggestive evidence supporting this hypothesis. Specifically, when observers were required to make an eye movement toward a target, a larger N2pc was elicited than when they were required to simply press a button upon detecting the presence of the target (Luck et al., 1997b). The same pattern of results was obtained in single-unit recordings from monkeys: neural activity in inferotemporal cortex exhibited larger attentional modulations when the monkeys made eye movement responses to a target than when they made lever-release responses (Chelazzi & Desimone, 1994; Chelazzi et al., 1993). Making an eye movement to a target requires localization of the target, so this pattern of results suggests that the requirement to localize a target increases the attentional demands of the task. However, this result is merely suggestive, because target localization is not the only factor that differs between eye movement tasks and manual response tasks.

To address this issue more directly, we measured the N2pc component while observers performed visual search tasks that required detection, coarse localization, or fine localization of a target defined by a simple feature. Our primary interest was to determine whether N2pc amplitude would be greater in the localization conditions than in the detection condition, consistent with the proposed role of attention in feature localization. We compared the coarse and fine localization conditions to rule out the possibility that N2pc amplitude reflects the general level of task difficulty rather than a specific role of attention in binding features to locations. That is, N2pc amplitude might be larger for the coarse localization condition than for the detection condition simply because the coarse localization task is more difficult. If N2pc amplitude is simply related to the overall difficulty of the task, then it should be greater for the fine localization task than for the coarse localization task as well as being greater for the coarse localization task than for the detection task. If, however, N2pc amplitude is equivalent for the coarse and fine localization conditions but is greater for these conditions than for the detection condition, this will indicate that the N2pc effect is due to the specific need to bind feature and location information in the localization conditions rather than a general difference in task difficulty.

Method

Task Overview

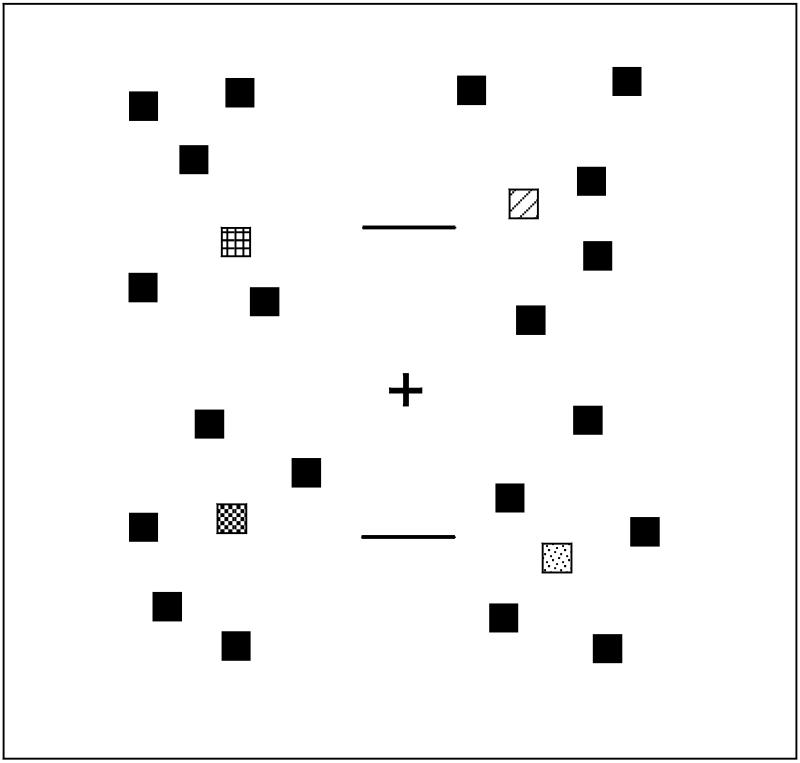

Because ERPs are highly sensitive to the physical properties of the eliciting stimulus, all three conditions of the experiment used the same stimulus arrays, and only the instructions varied. The stimuli are illustrated in Figure 1. In the detection condition, observers were instructed to report the presence or absence of a square drawn in a particular color, irrespective of its location. In the coarse localization condition, observers were instructed to indicate whether this colored square was above or below the horizontal meridian; it was always well above or well below, so this task required very little spatial resolution. In the fine localization condition, observers were instructed to indicate whether this colored square was above or below a nearby reference line; it was only slightly above or slightly below, so this condition required relatively high spatial resolution.

Figure 1.

Example stimulus array. Squares filled with patterns represent the colored items, and black squares represent the black distractor items. The stimuli were presented on a gray background.

Subjects

The subjects in this experiment were 20 neurologically normal students at the University of Iowa, between 18 and 26 years old. They were paid for their participation. All reported having normal or corrected-to-normal visual acuity and normal color vision. Fourteen of the participants were female, and 16 were right-handed.

Stimuli

The stimuli were presented on a CRT at a viewing distance of 70 cm (see Figure 1). Stimulus chromaticity was measured with a Tektronix J17 LumaColor chromaticity meter using the 1931 CIE (Commission Internationale de l’Éclairage) coordinate system. A black fixation cross (0.70° × 0.70°) and two black reference lines were continuously visible on a gray background (17.6 cd/m²). The reference lines were centered 3.6° above and below the fixation point and subtended 0.16° vertically and 1.64° horizontally.

The search arrays consisted of 20 black squares and four colored squares, each subtending 0.46° × 0.46°. Five black squares and one colored square were presented within each of the four quadrants of the display. The squares were randomly placed within a 6.54° × 5.23° region in each quadrant, and this region was centered 3.89° × 3.23° from the fixation point. The objects within a region were separated from each other by at least 0.65° (center-to-center) and were at least 2° from the fixation point. The colored square was always centered 0.28° above or 0.28° below the nearest reference line, and was placed at one of three distances from the vertical meridian (2.3°, 2.8° or 3.3°, selected at random).
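The angular sizes above can be converted to physical sizes on the screen with the standard visual-angle relation. The following is a minimal sketch that assumes only the 70 cm viewing distance stated in this section; the function names are hypothetical and are not part of the original stimulus software.

```python
import math

VIEWING_DISTANCE_CM = 70.0  # viewing distance stated in the Stimuli section


def extent_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Physical extent of an object centered on the line of sight
    that subtends angle_deg degrees of visual angle."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def offset_from_fixation_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Distance on a flat screen between fixation and a point
    angle_deg degrees away from fixation."""
    return distance_cm * math.tan(math.radians(angle_deg))


square_cm = extent_cm(0.46)                     # side length of one square
reference_line_cm = offset_from_fixation_cm(3.6)  # reference line above/below fixation

print(f"square side: {square_cm:.3f} cm")
print(f"reference line offset: {reference_line_cm:.3f} cm")
```

Under these assumptions each square is a little under 0.6 cm on a side, and each reference line lies about 4.4 cm from fixation.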

Five colors were used for the colored squares: red (x=.647, y=.325), blue (x=.290, y=.133), green (x=.328, y=.555), violet (x=.296, y=.139), and yellow (x=.320, y=.545). One of these colors was designated the target color for a given block of trials, and one of the four colored squares was the target color on target-present trials. The colors of the nontarget colored squares were selected at random (without replacement) from the four nontarget colors.

Procedure

Each search array was presented for 2000 ms, and successive arrays were separated by an intertrial interval that varied randomly between 800 and 1200 ms (rectangular distribution). The target color was present on 50% of trials in all three conditions. When the target was present, it was equally likely to appear in the upper or lower field, and it was also equally (and independently) likely to appear slightly above or slightly below the nearby reference line.

In the detection condition, subjects were instructed to press one of two gamepad buttons on each trial to indicate whether the target was present or absent. In the coarse localization condition, subjects were instructed to press one of two buttons to indicate whether the target was in the upper or lower visual field and to press a third button if the target was absent. In the fine localization condition, subjects were instructed to press one of two buttons to indicate whether the target was above or below the nearest reference line and to press a third button if the target was absent. Subjects used their dominant hand for button pressing and were instructed to use the index finger to indicate target presence or relative location and the middle finger to indicate target absence. Speed and accuracy were equally emphasized.

The experiment consisted of 15 blocks of 64 trials. The target color changed after every three blocks, and all five colors eventually served as target for each subject; the order of colors varied randomly across subjects. The three task conditions (detection, coarse localization, and fine localization) were tested in each set of three blocks in an order that varied randomly. At the beginning of each trial block, subjects were informed which one of the five colors would be the target for that block and whether they needed to detect it, localize it relative to the horizontal meridian, or localize it relative to the reference lines. Subjects were given 32 practice trials before the first time they experienced a particular task (i.e., before each of the first three blocks).
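The block structure described above can be sketched as follows. This is an illustrative reconstruction, not the original experiment-control code: the names are hypothetical, and it assumes that exactly half of the 64 trials in each block were target-present (the text states 50% but does not say whether this was fixed per block).

```python
import random

COLORS = ["red", "blue", "green", "violet", "yellow"]
TASKS = ["detection", "coarse localization", "fine localization"]
TRIALS_PER_BLOCK = 64
P_TARGET_PRESENT = 0.5  # assumption: exactly half of each block is target-present


def make_schedule(rng: random.Random) -> list:
    """Return 15 (target color, task) blocks: the color changes every
    three blocks, and the three tasks appear in random order per color."""
    color_order = COLORS[:]
    rng.shuffle(color_order)
    schedule = []
    for color in color_order:
        task_order = TASKS[:]
        rng.shuffle(task_order)
        for task in task_order:
            schedule.append((color, task))
    return schedule


schedule = make_schedule(random.Random(0))
blocks_per_task = sum(1 for _, task in schedule if task == "detection")
target_present_per_task = int(blocks_per_task * TRIALS_PER_BLOCK * P_TARGET_PRESENT)

print(len(schedule), blocks_per_task, target_present_per_task)
```

With five blocks per task and 32 target-present trials per block, each task condition yields 160 target-present trials before artifact rejection.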

Recording and Analysis

The electroencephalogram (EEG) was recorded using tin electrodes mounted in an elastic cap. Recordings were obtained from ten standard scalp sites of the International 10/20 system (F3, F4, C3, C4, P3, P4, O1, O2, T5, and T6), two nonstandard sites (OL, halfway between O1 and T5, and OR, halfway between O2 and T6), and the left mastoid. All of these sites were referenced to an electrode on the right mastoid. The averaged ERP waveforms were algebraically re-referenced offline to the average of the activity at left and right mastoids (see Luck, 2005). The horizontal electrooculogram (HEOG) was recorded from electrodes placed lateral to the left and right eyes for monitoring horizontal eye movements. To monitor eye blinks, an electrode was placed below the left eye and referenced to the right mastoid. Electrode impedances were reduced to 5 kΩ or less. The EEG and EOG were amplified by an SA Instrumentation amplifier with a bandpass of 0.01–80 Hz and digitized at a rate of 250 Hz.
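The offline re-referencing step follows from simple algebra. If a scalp site is recorded as (site − right mastoid) and the left mastoid is recorded as (left mastoid − right mastoid), then the site relative to the average of the two mastoids is the recorded site voltage minus half of the recorded left mastoid voltage. The sketch below illustrates this identity with made-up numbers; the function name is hypothetical and this is not the authors' analysis code.

```python
def rereference_to_average_mastoids(site_vs_rm, left_mastoid_vs_rm):
    """Re-reference a waveform recorded against the right mastoid (RM)
    to the average of the left and right mastoids.

    site - (LM + RM) / 2  ==  (site - RM) - (LM - RM) / 2
    """
    return [s - 0.5 * lm for s, lm in zip(site_vs_rm, left_mastoid_vs_rm)]


# Made-up absolute potentials (arbitrary units) at two time points.
site_abs = [5.0, 6.0]
lm_abs = [1.0, 2.0]
rm_abs = [3.0, 0.0]

# What the amplifier would record with a right mastoid reference.
site_vs_rm = [s - r for s, r in zip(site_abs, rm_abs)]
lm_vs_rm = [l - r for l, r in zip(lm_abs, rm_abs)]

rereferenced = rereference_to_average_mastoids(site_vs_rm, lm_vs_rm)

# Direct computation from the absolute potentials, for comparison.
direct = [s - (l + r) / 2.0 for s, l, r in zip(site_abs, lm_abs, rm_abs)]
print(rereferenced, direct)
```

Because the operation is linear, it gives the same result whether it is applied to single trials or, as in the present study, to the averaged waveforms.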

Trials with blinks or eye movements were automatically excluded from all behavioral and ERP analyses. Following our standard procedures, any subject with a rejection rate of 25% or higher was replaced; seven subjects were replaced for this reason. An average of 13.3% of trials per subject were rejected due to ocular artifacts in the remaining 20 subjects.

Averaged ERP waveforms were computed from target-present trials and collapsed across target color conditions; after collapsing, there were 160 target-present trials in each condition. Target-absent waveforms were not relevant for the hypotheses being tested and will not be discussed further. N2pc amplitude was measured from the target-present waveforms as the mean voltage between 200 and 300 ms poststimulus relative to a 200-ms prestimulus baseline interval; measurements were obtained at the medial occipital, lateral occipital, and posterior temporal electrode sites (O1/2, OL/R, and T5/6). To isolate the N2pc component from other overlapping ERP components, we computed difference scores in which the voltage for trials with an ipsilateral target (with respect to the current electrode site) was subtracted from the voltage for trials with a contralateral target, averaged across the left and right hemisphere electrode sites; this difference represents the amplitude of the N2pc component. These difference scores were analyzed in a two-way within-subjects analysis of variance (ANOVA). The ANOVA factors were task condition (detection vs. coarse localization vs. fine localization) and within-hemisphere electrode position (T5/6, OL/R, O1/2). All p-values reported here were corrected for nonsphericity using the Greenhouse-Geisser epsilon correction (Jennings & Wood, 1976).
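The N2pc measurement described above can be sketched in a few lines. The code is an illustration under stated assumptions rather than the authors' analysis pipeline: the function and variable names are hypothetical, the waveforms are synthetic, time windows are treated as half-open intervals (start included, end excluded), and one sample is assumed every 4 ms to match the 250 Hz digitization rate.

```python
SAMPLE_MS = 4  # 250 Hz sampling gives one sample every 4 ms
times_ms = list(range(-200, 600, SAMPLE_MS))


def mean_in_window(wave, times, start_ms, end_ms):
    """Mean voltage over samples with start_ms <= t < end_ms."""
    vals = [v for v, t in zip(wave, times) if start_ms <= t < end_ms]
    return sum(vals) / len(vals)


def baseline_correct(wave, times, baseline_ms=200):
    """Subtract the mean of the prestimulus interval from every sample."""
    base = mean_in_window(wave, times, -baseline_ms, 0)
    return [v - base for v in wave]


def n2pc_amplitude(left_site, right_site, times):
    """Contralateral-minus-ipsilateral mean amplitude, 200-300 ms.

    left_site / right_site map 'left_target' and 'right_target' to the
    averaged waveform at a left- or right-hemisphere electrode.
    """
    contra = [(a + b) / 2.0 for a, b in
              zip(left_site["right_target"], right_site["left_target"])]
    ipsi = [(a + b) / 2.0 for a, b in
            zip(left_site["left_target"], right_site["right_target"])]
    contra = baseline_correct(contra, times)
    ipsi = baseline_correct(ipsi, times)
    return (mean_in_window(contra, times, 200, 300)
            - mean_in_window(ipsi, times, 200, 300))


def make_wave(effect_uv):
    """Synthetic waveform: constant 1.0, plus effect_uv from 200 to 300 ms."""
    return [1.0 + (effect_uv if 200 <= t < 300 else 0.0) for t in times_ms]


# Synthetic example: contralateral negativity of -0.4 (left site) and -0.6 (right site).
left_site = {"left_target": make_wave(0.0), "right_target": make_wave(-0.4)}
right_site = {"left_target": make_wave(-0.6), "right_target": make_wave(0.0)}

n2pc = n2pc_amplitude(left_site, right_site, times_ms)
print(round(n2pc, 3))
```

A negative value indicates a more negative voltage contralateral to the target, which is the signature of the N2pc; one such score per subject, condition, and electrode pair would then enter the ANOVA.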

Results

Behavioral Results

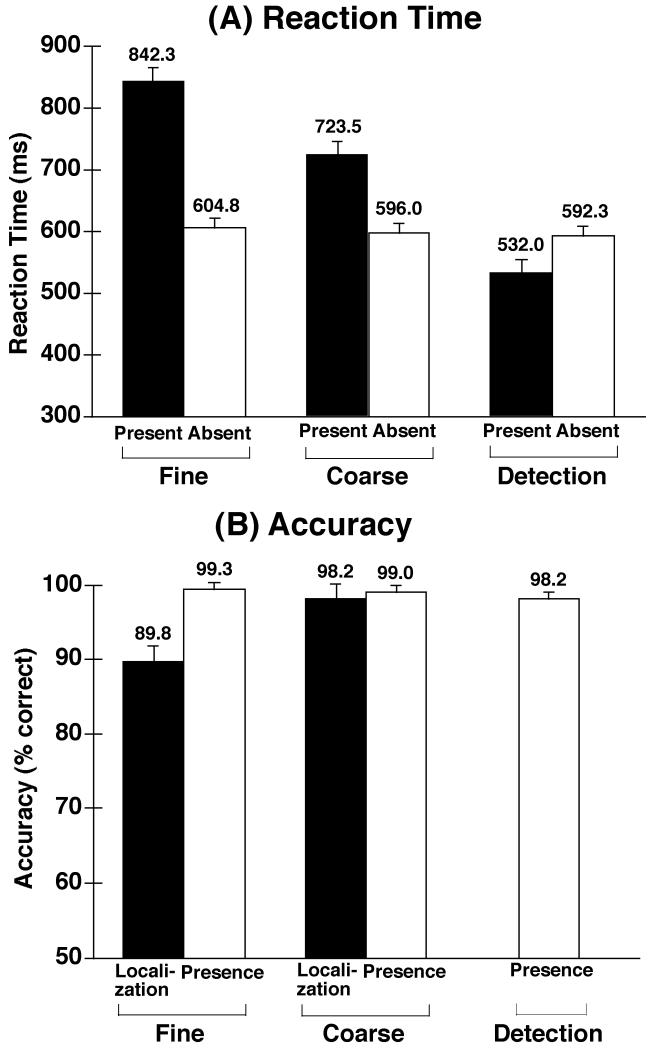

Mean reaction time (RT) from correct trials is shown in Figure 2A. Reaction times were computed separately for target-present and target-absent trials in each condition (collapsing across target locations in the localization conditions). RT for target-absent trials was largely constant across conditions, whereas RT for target-present trials was fastest for the detection condition, slowest for the fine localization condition, and intermediate for the coarse localization condition. In a two-way ANOVA with factors of condition and target presence, this pattern led to a significant interaction, F(2, 38) = 194.31, p < .001. In addition, the differences among conditions led to a significant main effect of condition, F(2, 38) = 110.57, p < .001, and the slower responses on target-present trials relative to target-absent trials led to a significant main effect of target presence, F(1, 19) = 81.72, p < .001. Planned pairwise comparisons of the three task conditions, conducted separately for target-present and target-absent trials, revealed significant differences between each pair of the conditions on target-present trials, ps < .001, but no significant differences on target-absent trials. The difference in target-present RTs between the coarse and fine localization conditions presumably reflects the greater difficulty of the fine discrimination task. Some of the difference between the detection and coarse localization conditions, however, almost certainly reflects the greater number of response alternatives in the coarse localization condition (RT typically increases as a function of the logarithm of the number of response alternatives; see Hick, 1952).

Figure 2.

Reaction time (A) and accuracy (B) for the fine localization, coarse localization, and detection conditions. Reaction times were calculated separately for target-absent and target-present trials; for target-present trials in the localization conditions, reaction times were averaged over the two different spatial locations. Accuracy was calculated in two different ways. First, bars labeled “Presence” show the accuracy of determining whether a target was present (irrespective of whether it was correctly localized in the localization conditions). Second, bars labeled “Localization” show the accuracy of the localization response on target-present trials in the localization conditions. Error bars represent within-subjects 95% confidence intervals (Loftus & Masson, 1994).

Accuracy, which is shown in Figure 2B, was computed in two ways. First, for all three conditions, we computed the percentage of trials for which subjects correctly indicated whether a target was present or absent (irrespective of whether the correct location was indicated; labeled Presence in Figure 2B). Second, for the two localization conditions, we computed the percentage of target-present trials for which the correct localization judgment was made (labeled Localization in Figure 2B). Accuracy for determining whether a target was present or absent was observed to be near ceiling for all three conditions; this observation was supported by a one-way ANOVA in which the effect of condition was not significant, F(2, 38) = 2.42, p > .10. In contrast, target localization on target-present trials was significantly less accurate in the fine localization condition than in the coarse localization condition, F(1, 19) = 36.01, p < .001.

Together, the RT and accuracy data indicate that the fine localization condition was, as intended, substantially more difficult than the coarse localization condition. In addition, the coarse localization and detection tasks yielded similar accuracy levels, but responses were slower in the coarse localization task (presumably due, in part, to the greater number of response alternatives).

Electrophysiological Results

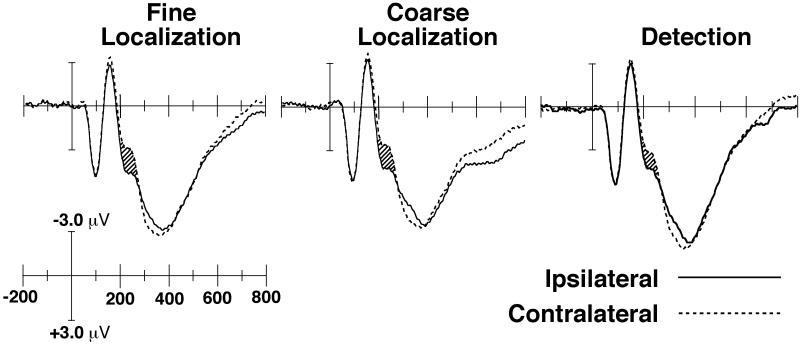

Figure 3 shows the grand-average ERPs recorded from a pair of lateral occipital electrode sites (OL and OR) in the detection, fine localization, and coarse localization conditions. These ERP waveforms compare activity elicited by targets that were ipsilateral versus contralateral to the electrode site, collapsed across the left and right hemispheres. That is, the contralateral waveform is the average of the left hemisphere waveform for right visual field targets and the right hemisphere waveform for left visual field targets, and the ipsilateral waveform is the average of the left hemisphere waveform for left visual field targets and the right hemisphere waveform for right visual field targets. The N2pc component was measured as the degree of difference in amplitude between the contralateral and ipsilateral waveforms between 200 and 300 ms poststimulus.

Figure 3.

Event-related potential waveforms for correctly classified target-present trials in the three conditions, recorded from lateral occipital electrode sites (OL/OR) and averaged across subjects. The N2pc component is indicated by the hatched regions. Negative is plotted upward by convention, and time zero represents search array onset. For purposes of visual clarity, the waveforms were digitally filtered before plotting by convolving the original waveforms with a Gaussian impulse response function (standard deviation = 6 ms, 50% amplitude low-pass cutoff at approximately 35 Hz).

Consistent with previous studies, the N2pc component was largest at the lateral occipital sites (OL and OR), somewhat smaller at the posterior temporal sites (T5 and T6), and even smaller at the medial occipital sites (O1 and O2). This pattern led to a main effect of within-hemisphere electrode location in the omnibus ANOVA, F(2, 38) = 3.74, p < .05.

As can be seen in Figure 3, a clear N2pc component was present in all three conditions. The time course of the N2pc was similar across conditions, which is to be expected given that the stimuli and therefore the ease of finding the target should have been equivalent in all three conditions. N2pc amplitude was approximately equal in the two localization conditions (−0.74 μV for coarse localization and −0.70 μV for fine localization) but was smaller in the detection condition (−0.42 μV). The difference in N2pc amplitude across the three conditions led to a main effect of condition, F(2, 38) = 4.01, p < .05. In follow-up ANOVAs, a significant effect of condition was obtained when the detection and coarse localization conditions were compared, F(1, 19) = 8.06, p < .05, but not when the coarse and fine localization conditions were compared, F < 1. These analyses support the claim that the N2pc was larger in the localization conditions than in the detection condition but did not differ between the two localization conditions.

Discussion

N2pc amplitude is a sensitive index of the degree to which perceptual-level attention is allocated to a target (Luck et al., 1997b; Luck & Hillyard, 1994a, 1994b). Consequently, the present results indicate that more attention was allocated to targets in the localization tasks than in the detection task, but attention was allocated approximately equally to targets in the coarse and fine localization tasks. That is, the observers had a greater need to orient attention to the target color when they needed to report its location than when they merely needed to report its presence. This difference between the detection condition and the localization conditions supports a key proposal in feature integration theory (Treisman, 1988; Treisman & Sato, 1990; Treisman & Gelade, 1980) and is consistent with other theories in which attention plays a key role in localizing features (Cohen & Ivry, 1989; Cohen & Ivry, 1991; Luck et al., 1997b; Luck & Hillyard, 1994a, 1994b, 1995; Prinzmetal et al., 1986).

Could these results be explained by differences in overall task difficulty rather than differences in the specific computations required for detection and localization? Not easily. Accuracy was at ceiling for the coarse localization and detection conditions, but N2pc amplitude differed. In contrast, accuracy was substantially impaired in the fine localization condition compared to the coarse localization condition, and yet there was no difference in N2pc amplitude between these conditions. RT was longer for the coarse localization condition than for the detection condition, but it was also longer for the fine localization condition than for the coarse localization condition. Thus, the difference in difficulty between the coarse and fine localization conditions appeared to be greater than the difference in difficulty between the detection and coarse localization conditions, and yet the N2pc differed only between the detection and coarse localization conditions. Moreover, previous studies have shown that the N2pc component is sometimes larger for easier targets than for more difficult targets (Luck & Hillyard, 1994a). Thus, although the coarse localization task presumably required additional processing relative to the detection task, the difference in N2pc amplitude between these conditions cannot easily be explained by overall differences in difficulty per se. Instead, the difference in N2pc amplitude reflects a specific difference in the computational requirements of the tasks, namely the increased need to use spatially focused attention when localizing a target.

We observed no difference in N2pc amplitude between the coarse and fine localization conditions. We cannot, of course, conclude from this null effect that coarse and fine localization have equivalent attentional demands. It is possible that, given a sufficiently large sample size, we would have observed significantly greater N2pc activity in the fine localization condition. However, we have conducted two additional experiments comparing coarse and fine localization tasks, and no tendency was observed toward larger N2pc amplitudes in the fine localization conditions in either experiment. Thus, we are fairly confident that if there are any differences in N2pc amplitude between fine and coarse localization tasks, these differences are negligible. It is also possible that a significant effect would have been observed in the present experiment if we had employed a more extreme manipulation of the localization accuracy necessary for the coarse and fine location tasks. We cannot rule out this possibility, but it is reasonably safe to conclude that the fairly large differences in localization requirements tested in the present experiment—which led to more than a 10% difference in accuracy and more than a 100-ms difference in reaction time—do not elicit measurably different N2pc amplitudes.

In the decades since Treisman and Gelade (1980) first proposed that attention plays a special role in localizing and binding features, many studies have been published that either support or conflict with this proposal. Some studies conclude that attention is required both for detecting individual features and for binding multiple features together (e.g., Joseph, Chun, & Nakayama, 1997; Kim & Cave, 1995; Theeuwes, Van der Burg, & Belopolsky, in press), and others conclude that even bindings can be detected without attention (e.g., Eckstein, 1998; Mordkoff & Halterman, in press; Palmer, Verghese, & Pavel, 2000). How do the present findings fit within this large and contradictory set of studies? One possibility is that the discrepancies are a result of treating attention as a unitary mechanism that operates at a single locus, when in fact considerable evidence indicates that attention operates within different cognitive subsystems under different conditions (see Luck & Vecera, 2002; Vogel, Woodman, & Luck, 2005). Feature integration theory was intended to apply to the intermediate to high levels of visual perception, and the present findings support the idea that attention plays a special role in localizing and binding features within these levels. However, attention may not play any special role in feature binding at lower levels of perception (e.g., feature extraction) or at higher levels of cognition (e.g., working memory encoding and response selection). Because behavioral responses reflect contributions from both perceptual and postperceptual attention mechanisms, behavioral studies are usually unable to determine the system in which attention is operating. Consequently, it is likely that many of the apparent discrepancies about the role of attention in localizing and binding features are a result of the use of different experimental paradigms that emphasize different mechanisms of attention.

As an example, consider the attentional blink study of Joseph et al. (1997), in which the second target was a masked visual search array. The size of the attentional blink effect was just as great for feature targets as for conjunction targets, leading to the conclusion that attention plays the same role for features and conjunctions. It has been well documented that the attentional blink reflects the operation of attention in working memory encoding rather than in perception (see, e.g., Giesbrecht & Di Lollo, 1998; Luck, Vogel, & Shapiro, 1996; Shapiro, Driver, Ward, & Sorensen, 1997; Vogel, Luck, & Shapiro, 1998), and it is also known that single-feature and multiple-feature objects require equivalent working memory capacity (Allen, Baddeley, & Hitch, 2006; Luck & Vogel, 1997; Vogel, Woodman, & Luck, 2001). Thus, the finding that the attentional blink is equivalent for features and conjunctions tells us nothing about the role of attention in feature binding during perception.

A significant advantage of neurophysiological measures of attention is that they can more easily isolate the operation of attention within a specific cognitive subsystem, making it possible to determine whether a given attention effect is perceptual or postperceptual and even to subdivide perceptual processes (see reviews by Hillyard, Vogel, & Luck, 1998; Luck, 1995). In the present study, the N2pc results indicate that greater attentional resources are required to discriminate color-location bindings than to detect individual colors, but this claim must be limited to the attentional mechanism indexed by the N2pc component. As reviewed in the Introduction, we know that this mechanism operates after feature extraction but before object recognition is complete. It appears to be due to a spatial signal from the parietal lobe interacting with intermediate and high levels of extrastriate visual cortex (Hopf et al., 2000). As has been proposed previously, this linking of spatial and feature information in the dorsal and ventral streams may be the most basic form of binding (Treisman, 1996).

Acknowledgments

This research was made possible by grants MH63001 and MH65034 from the National Institute of Mental Health.

References

- Allen RJ, Baddeley AD, Hitch GJ. Is the binding of visual features in working memory resource-demanding? Journal of Experimental Psychology: General. 2006;135:298–313. doi: 10.1037/0096-3445.135.2.298. [DOI] [PubMed] [Google Scholar]

- Chelazzi L, Desimone R. Responses of V4 neurons during visual search. Society for Neuroscience Abstracts. 1994;20:1054. [Google Scholar]

- Chelazzi L, Duncan J, Miller EK, Desimone R. Responses of neurons in inferior temporal cortex during memory-guided visual search. Journal of Neurophysiology. 1998;80:2918–2940. doi: 10.1152/jn.1998.80.6.2918. [DOI] [PubMed] [Google Scholar]

- Chelazzi L, Miller EK, Duncan J, Desimone R. A neural basis for visual search in inferior temporal cortex. Nature. 1993;363:345–347. doi: 10.1038/363345a0. [DOI] [PubMed] [Google Scholar]

- Chelazzi L, Miller EK, Duncan J, Desimone R. Responses of neurons in macaque area V4 during memory-guided visual search. Cerebral Cortex. 2001;11:761–772. doi: 10.1093/cercor/11.8.761. [DOI] [PubMed] [Google Scholar]

- Cohen A, Ivry R. Illusory conjunctions inside and outside the focus of attention. Journal of Experimental Psychology: Human Perception and Performance. 1989;15:650–663. doi: 10.1037//0096-1523.15.4.650. [DOI] [PubMed] [Google Scholar]

- Cohen A, Ivry RB. Density effects in conjunction search: Evidence for a coarse location mechanism of feature integration. Journal of Experimental Psychology: Human Perception and Performance. 1991;17:891–901. doi: 10.1037//0096-1523.17.4.891.

- Eckstein MP. The lower visual search efficiency for conjunctions is due to noise and not serial attentional processing. Psychological Science. 1998;9(2):111–118.

- Eimer M. The N2pc component as an indicator of attentional selectivity. Electroencephalography and Clinical Neurophysiology. 1996;99:225–234. doi: 10.1016/0013-4694(96)95711-9.

- Giesbrecht BL, Di Lollo V. Beyond the attentional blink: Visual masking by object substitution. Journal of Experimental Psychology: Human Perception and Performance. 1998;24:1454–1466. doi: 10.1037//0096-1523.24.5.1454.

- Hick WE. On the rate of gain of information. Quarterly Journal of Experimental Psychology A. 1952;4:11–26.

- Hillyard SA, Vogel EK, Luck SJ. Sensory gain control (amplification) as a mechanism of selective attention: Electrophysiological and neuroimaging evidence. Philosophical Transactions of the Royal Society: Biological Sciences. 1998;353:1257–1270. doi: 10.1098/rstb.1998.0281.

- Hopf J-M, Boehler CN, Luck SJ, Tsotsos JK, Heinze HJ, Schoenfeld MA. Direct neurophysiological evidence for spatial suppression surrounding the focus of attention in vision. Proceedings of the National Academy of Sciences. 2006a;103:1053–1058. doi: 10.1073/pnas.0507746103.

- Hopf J-M, Luck SJ, Boelmans K, Schoenfeld MA, Boehler N, Rieger J, et al. The neural site of attention matches the spatial scale of perception. Journal of Neuroscience. 2006b;26:3532–3540. doi: 10.1523/JNEUROSCI.4510-05.2006.

- Hopf J-M, Luck SJ, Girelli M, Hagner T, Mangun GR, Scheich H, et al. Neural sources of focused attention in visual search. Cerebral Cortex. 2000;10:1233–1241. doi: 10.1093/cercor/10.12.1233.

- Jennings JR, Wood CC. The ε-adjustment procedure for repeated-measures analyses of variance. Psychophysiology. 1976;13:277–278. doi: 10.1111/j.1469-8986.1976.tb00116.x.

- Johnston JC, Pashler H. Close binding of identity and location in visual feature perception. Journal of Experimental Psychology: Human Perception and Performance. 1990;16:843–856. doi: 10.1037//0096-1523.16.4.843.

- Joseph JS, Chun MM, Nakayama K. Attentional requirements in a ‘preattentive’ feature search task. Nature. 1997;387:805–808. doi: 10.1038/42940.

- Kim M-S, Cave KR. Spatial attention in visual search for features and feature conjunctions. Psychological Science. 1995;6:376–380.

- Loftus GR, Masson MEJ. Using confidence intervals in within-subject designs. Psychonomic Bulletin & Review. 1994;1(4):476–490. doi: 10.3758/BF03210951.

- Luck SJ. Multiple mechanisms of visual-spatial attention: Recent evidence from human electrophysiology. Behavioural Brain Research. 1995;71:113–123. doi: 10.1016/0166-4328(95)00041-0.

- Luck SJ. An Introduction to the Event-Related Potential Technique. MIT Press; Cambridge, MA: 2005.

- Luck SJ, Chelazzi L, Hillyard SA, Desimone R. Neural mechanisms of spatial selective attention in areas V1, V2, and V4 of macaque visual cortex. Journal of Neurophysiology. 1997a;77:24–42. doi: 10.1152/jn.1997.77.1.24.

- Luck SJ, Ford MA. On the role of selective attention in visual perception. Proceedings of the National Academy of Sciences, U.S.A. 1998;95:825–830. doi: 10.1073/pnas.95.3.825.

- Luck SJ, Girelli M, McDermott MT, Ford MA. Bridging the gap between monkey neurophysiology and human perception: An ambiguity resolution theory of visual selective attention. Cognitive Psychology. 1997b;33:64–87. doi: 10.1006/cogp.1997.0660.

- Luck SJ, Hillyard SA. Electrophysiological correlates of feature analysis during visual search. Psychophysiology. 1994a;31:291–308. doi: 10.1111/j.1469-8986.1994.tb02218.x.

- Luck SJ, Hillyard SA. Spatial filtering during visual search: Evidence from human electrophysiology. Journal of Experimental Psychology: Human Perception and Performance. 1994b;20:1000–1014. doi: 10.1037//0096-1523.20.5.1000.

- Luck SJ, Hillyard SA. The role of attention in feature detection and conjunction discrimination: An electrophysiological analysis. International Journal of Neuroscience. 1995;80:281–297. doi: 10.3109/00207459508986105.

- Luck SJ, Vecera SP. Attention. In: Yantis S, editor. Stevens’ Handbook of Experimental Psychology: Vol. 1: Sensation and Perception. 3rd ed. Wiley; New York: 2002.

- Luck SJ, Vogel EK. The capacity of visual working memory for features and conjunctions. Nature. 1997;390:279–281. doi: 10.1038/36846.

- Luck SJ, Vogel EK, Shapiro KL. Word meanings can be accessed but not reported during the attentional blink. Nature. 1996;383:616–618. doi: 10.1038/383616a0.

- Mordkoff JT, Halterman R. Feature integration without visual attention: Evidence from the correlated flankers task. Psychonomic Bulletin & Review. in press. doi: 10.3758/pbr.15.2.385.

- Palmer J, Verghese P, Pavel M. The psychophysics of visual search. Vision Research. 2000;40:1227–1268. doi: 10.1016/s0042-6989(99)00244-8.

- Prinzmetal W, Presti DE, Posner MI. Does attention affect visual feature integration? Journal of Experimental Psychology: Human Perception and Performance. 1986;12:361–369. doi: 10.1037//0096-1523.12.3.361.

- Robertson L, Treisman A, Friedman-Hill S, Grabowecky M. The interaction of spatial and object pathways: Evidence from Balint’s syndrome. Journal of Cognitive Neuroscience. 1997;9(3):295–317. doi: 10.1162/jocn.1997.9.3.295.

- Shapiro K, Driver J, Ward R, Sorensen RE. Priming from the attentional blink: A failure to extract visual tokens but not visual types. Psychological Science. 1997;8:95–100.

- Theeuwes J, Van der Burg I, Belopolsky A. Detecting the presence of a singleton involves focal attention. Psychonomic Bulletin & Review. in press. doi: 10.3758/pbr.15.3.555.

- Treisman A. Features and objects: The fourteenth Bartlett memorial lecture. Quarterly Journal of Experimental Psychology. 1988;40:201–237. doi: 10.1080/02724988843000104.

- Treisman A, Sato S. Conjunction search revisited. Journal of Experimental Psychology: Human Perception and Performance. 1990;16:459–478. doi: 10.1037//0096-1523.16.3.459.

- Treisman A, Schmidt H. Illusory conjunctions in the perception of objects. Cognitive Psychology. 1982;14:107–141. doi: 10.1016/0010-0285(82)90006-8.

- Treisman AM, Gelade G. A feature-integration theory of attention. Cognitive Psychology. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- Vogel EK, Luck SJ, Shapiro KL. Electrophysiological evidence for a postperceptual locus of suppression during the attentional blink. Journal of Experimental Psychology: Human Perception and Performance. 1998;24:1656–1674. doi: 10.1037//0096-1523.24.6.1656.

- Vogel EK, Woodman GF, Luck SJ. Storage of features, conjunctions, and objects in visual working memory. Journal of Experimental Psychology: Human Perception and Performance. 2001;27:92–114. doi: 10.1037//0096-1523.27.1.92.

- Vogel EK, Woodman GF, Luck SJ. Pushing around the locus of selection: Evidence for the flexible-selection hypothesis. Journal of Cognitive Neuroscience. 2005;17:1907–1922. doi: 10.1162/089892905775008599.

- Woodman GF, Kang M-S, Rossi AF, Schall JD. Nonhuman primate event-related potentials indexing covert shifts of attention. Proceedings of the National Academy of Sciences. 2007;104:15111–15116. doi: 10.1073/pnas.0703477104.

- Woodman GF, Luck SJ. Electrophysiological measurement of rapid shifts of attention during visual search. Nature. 1999;400:867–869. doi: 10.1038/23698.

- Woodman GF, Luck SJ. Serial deployment of attention during visual search. Journal of Experimental Psychology: Human Perception and Performance. 2003;29:121–138. doi: 10.1037//0096-1523.29.1.121.