Abstract

Animals choose actions based on imperfect, ambiguous data. “Noise” inherent in neural processing adds further variability to this already-noisy input signal. Mathematical analysis has suggested that the optimal apparatus (in terms of the speed/accuracy trade-off) for reaching decisions about such noisy inputs is perfect accumulation of the inputs by a temporal integrator. Thus, most highly cited models of neural circuitry underlying decision-making have been instantiations of a perfect integrator. Here, in accordance with a growing mathematical and empirical literature, we describe circumstances in which perfect integration is rendered suboptimal. In particular we highlight the impact of three biological constraints: (1) significant noise arising within the decision-making circuitry itself; (2) bounding of integration by maximal neural firing rates; and (3) time limitations on making a decision. Under conditions (1) and (2), an attractor system with stable attractor states can easily best an integrator when accuracy is more important than speed. Moreover, under conditions in which such stable attractor networks do not best the perfect integrator, a system with unstable initial states can do so if readout of the system’s final state is imperfect. Ubiquitously, an attractor system with a nonselective time-dependent input current is both more accurate and more robust to imprecise tuning of parameters than an integrator with such input. Given that neural responses that switch stochastically between discrete states can “masquerade” as integration in single-neuron and trial-averaged data, our results suggest that such networks should be considered as plausible alternatives to the integrator model.

Keywords: Decision, Urgency-gating, Ramping, Transitions, Hidden Markov model, State sequence

Introduction

The making of timely choices based on ambiguous, impoverished stimulus information is a basic part of survival and success for all living things. The acquisition, processing and filtering of such information by sensory transduction organs and multiple central nervous system relays adds noise to an already noisy fragment of data. Thus, our ability to produce appropriate behavioral responses in choice situations is even more impressive than it might naïvely seem.

The dynamics of such decision-making processes has been studied primarily during perceptual decision-making via two-alternative forced choice tasks (Shadlen and Newsome 1996; Ratcliff and Rouder 1998; Platt and Glimcher 1999; Glimcher 2001; Gold and Shadlen 2001; Shadlen and Newsome 2001; Usher and McClelland 2001; Roitman and Shadlen 2002; Romo et al. 2002; Glimcher 2003; Romo et al. 2004; Smith and Ratcliff 2004; Huk and Shadlen 2005; Luna et al. 2005; Gold and Shadlen 2007; Ratcliff 2008; Stanford et al. 2010; Yoshida and Katz 2011). In such tasks, a subject makes one of two distinctive responses depending on which stimulus is present (or, alternatively, depending on which is the dominant stimulus in a mixture); performance is evaluated in terms of overall accuracy for a given response speed (or range of speeds).

These tasks have been the source of a wealth of models, each based either on simulations of neural activity (Wang 2002; Wong and Wang 2006; Wong et al. 2007; Beck et al. 2008) or on the mathematical analysis of diffusion in an effective potential (Ratcliff 1978; Zhang et al. 2009; Zhou et al. 2009; Zhang and Bogacz 2010), which can be derived from models of neural activity (Usher and McClelland 2001; Smith and Ratcliff 2004; Bogacz et al. 2006; Sakai et al. 2006; Roxin and Ledberg 2008; Eckhoff et al. 2011). Regardless of their bases, decision-making models are typically judged according to two distinct criteria. First, how good—or, as it is often stated, how close to optimal—is the model at producing correct responses in a timely manner, given limited evidence and noise in the system? Second, how well does the model reproduce key behavioral (Feng et al. 2009) and electrophysiological (Wang 2001; Ditterich 2006) results beyond the inevitable increase in accuracy with either increased stimulus presentation time or with increased difference between stimulus representations, which arises naturally in all models?

Signal detection theory tells us that perfect integration of the difference in evidence for two alternatives is the optimal method for choosing between these alternatives (Wald 1947; Wald and Wolfowitz 1948). A corollary of this result to which many neuroscientists subscribe is that perfect integration is therefore also the optimal framework in which to study the neural basis of decision-making (Gold and Shadlen 2007). That is, integrators, typically implemented as drift diffusion models with fixed thresholds (Ratcliff 1978), are thought both to provide the optimal models of decision-making and to best reproduce the basic behavioral (Ratcliff and McKoon 2008) and neural data, the latter of which suggest accumulation of information across time, at least when spiking is averaged across trials (Roitman and Shadlen 2002; Ratcliff et al. 2003). Moreover, biologically realistic circuits of neurons can approximate such integrators (Wang 2002), further supporting the conclusion that they are the appropriate model type to explain decision-making in the nervous system.

There remain reasons to question this conclusion, however. First, the proof of optimality implicitly assumes either unbounded integration or unlimited time for a response. It is less clear whether an integrator is still favored once biologically plausible constraints (Bertsekas 2005; Frazier and Yu 2007; Cisek et al. 2009; Nigoyi and Wong-Lin 2010; Zhang and Bogacz 2010; Eckhoff et al. 2011; Standage et al. 2011) enforce a timely readout of activity in the decision-making circuit. Even with perfect readout of the final state of the system, the perfect integrator may not be optimal if firing rates and response times are limited (Zhang and Bogacz 2010). Finally, any neural implementation of a perfect integrator requires both the precise tuning of connection strengths and low within-circuit noise (Seung 1996; Usher and McClelland 2001; Wang 2002; Miller et al. 2003; Eckhoff et al. 2009), such that more naturalistic conditions might again favor robust approximations to an integrator based on multiple discrete attractors (Koulakov et al. 2002; Goldman et al. 2003), for which performance can be enhanced by additional noise (Deco et al. 2009; Miller and Katz 2010; Deco et al. 2013).

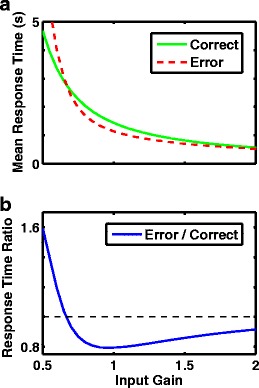

Furthermore, some of the extant behavioral data actually favor non-integrator models over perfect integrators. While most models reproduce the positive skewness of response times (a longer tail in the distribution for slow response times) (Ratcliff and Rouder 1998; Usher and McClelland 2001; Wong and Wang 2006), if bias and starting conditions are fixed, only nonlinear models reproduce the oft-observed phenomenon of slower responses on error trials than correct trials (Ditterich 2006; Wong and Wang 2006; Broderick et al. 2009). Perfect integrators must implement two separate mechanisms to produce slow and fast error responses: the former are produced by trial-to-trial variability in stimulus strength, while the latter are produced by trial-to-trial variability in the initial state of the system (Ratcliff and Rouder 1998).

Relatedly, the fit between the extant electrophysiological data and the predictions of an integrator model may not be as strong as once thought. Specifically, it has recently become clear that apparent ramps in neural activity can in some circumstances be artifactual results of across-trial averaging. Hidden-Markov model analyses of multi-unit neural activity (Jones et al. 2007), for instance, suggest that in some systems neural activity jumps between discrete states, with timing that varies from trial to trial (Seidemann et al. 1996; Deco and Rolls 2006; Deco and Marti 2007; Okamoto et al. 2007; Eckhoff et al. 2009; Eckhoff et al. 2011; Ponce-Alvarez et al. 2012; but see Bollimunta et al. 2012). In such situations, the standard procedure of averaging across trials aligned to stimulus onset obscures the inherent structure of the system, forcing the emergence of an apparent ramp (Marti et al. 2008; Miller and Katz 2011). Without multi-neuronal data, such discrete jumps in activity are particularly difficult to recognize (but see Okamoto et al. 2007) or disprove, and thus it is as yet unclear whether the activity of classically defined decision-making neural ensembles has such structure.

Here we investigate conditions under which an attractor-based neural circuit, whose dynamics is most naturally described as jumps between discrete states (Deco et al. 2009; Miller and Katz 2010), could produce more accurate decisions than a perfectly linear integrating circuit. We have previously demonstrated that a multi-state attractor network built to reproduce such “jumpy” single-trial responses can out-perform (in terms of percent correct identification of appropriate taste-related behavior) the same network set to perform integration (Miller and Katz 2010). Here we present a more complete, rigorous comparison of perfect integrator and discrete attractor-based models.

We begin with the introduction of a simple time limit (a feature of many, if not most, sensorimotor decisions); we chose to begin with this constraint in order to put the perfect integrator in the best position—a model is less likely to reach the decision threshold in a finite time (we refer to the model as being “undecided” in such trials) when that system’s initial state is a stable attractor than when it is smoothly integrating. We then assess a range of methods for resolving such “undecided” trials, including the minimally accurate method (“guessing”) whereby 50 % of the trials that do not reach threshold are treated as correct, and a perfect mathematical readout whereby the sign of the decision variable determines the response, irrespective of the threshold. In between these extremes, we assess several biologically realistic mechanisms for reducing or eliminating undecided trials, each of which (see Methods for detailed descriptions) consists of either abruptly (“forcing a response”) or smoothly (an “urgency-gating signal”) pushing the system in the direction of the sign of the decision variable.

After describing a large number of simulations, the vast majority of which demonstrate limitations of the integrator model, we proceed to (1) briefly explain how these results are consistent with previous work and (2) examine more closely the extant behavioral and electrophysiological data in relation to the models.

The sort of urgency-gating signal used in our simulations, which behavioral (Cisek et al. 2009) and electrophysiological data (Ditterich 2006; Churchland et al. 2008; Broderick et al. 2009) suggest arises during decision-making, increases the likelihood of a response as time passes, as a reflection of the increasing cost of time spent accumulating information without acting upon a decision (Drugowitsch et al. 2012). We incorporate three methods for producing such an urgency-gating signal. Specifically, we compare the implementation used by Cisek et al. (2009) to explain the relative lack of weighting of early evidence compared to late evidence, namely a temporally ramping multiplicative factor (i.e., gain modulation) applied to the inputs to the decision-making system (see also Ditterich 2006; Eckhoff et al. 2009; Nigoyi and Wong-Lin 2010; Eckhoff et al. 2011; Standage et al. 2011), with both a ramping additive input current to the system and ramping decreases in the decision threshold (Bertsekas 2005; Frazier and Yu 2007). Each such signal is stimulus-nonspecific and could be produced by a slow decay or rise in the concentration of a neuromodulator such as norepinephrine (Shea-Brown et al. 2008), and so is distinct from an integrator or accumulator.

Our formalism is a greatly simplified description of the dynamics of true neural circuitry, but it is complex enough that we cannot produce a single formula to fit to quantities such as a reaction time distribution. Other models do produce such analytic formulae, which gives them the benefit that parameters, such as the level of noise or the threshold for response, can be adjusted with relative ease to produce best fits to experimental data (Ratcliff et al. 2003; Eckhoff et al. 2008; Roxin and Ledberg 2008; Feng et al. 2009). Nevertheless, the use of now-standard optimization routines with repeated calculations of probability distributions renders trivial the fitting of parameters to data for more complicated models such as ours.

Within this formalism, we are able to compare multiple models of decision-making under a range of conditions, adjusting a single nonlinearity parameter to contrast perfect integrators (i.e., models with a nonlinearity of zero in our formalism), such as the drift diffusion model, with nonlinear, attractor-based models. Both types of model reproduce an impressive range of behavioral and electrophysiological results. We analyze the decision-making accuracy of each model and assess a number of mechanisms that could improve accuracy, with attention to their biological plausibility. Moreover, we measure the robustness of the results to imperfect tuning of parameters of the sort that almost certainly arises when the model is implemented within the brain’s neural circuitry. These analyses demonstrate that under many realistic conditions, nonlinear circuits that are not perfect integrators produce more accurate and more robust decision-making than the perfect integrator, a conclusion that, while suggested before, has not previously been tested in such a parametric manner.

Materials and methods

Our results are based on the temporal dynamics of the probability distribution of neural firing rates in a bounded system with fixed thresholds (Kiani et al. 2008). The probability distribution evolves following a deterministic term in the dynamics, which adds a constant drift term toward threshold in the perfect integrator, or produces a small shift in the distribution in the model with barriers (see Fig. 1). All models include a diffusion term, $D$, representing the variance in firing rates due to noise; such noise is essential for decisions to be made in models with a barrier. We also produce trajectories of firing rate as a function of time, to generate individual trials that can be compared with experiment. However, our calculations of quantities such as decision-making accuracy and the distribution of response times do not depend on sampling trials, and are exact to within the precision of our numerical calculations (to within $10^{-4}$ for any probability value).

Fig. 1.

Effective potential for an integrator or point-attractor system. a Perfect integrator with b = 0. b Triple-attractor (sextic potential) with b = 9. a, b Blue: effective potential with no input. Brown: effective potential with an input bias of 20

Analysis of decision-making circuitry via a firing-rate model

Groups of spiking neurons with recurrent self-excitation and recurrent cross-inhibition can implement winner-takes-all decision-making (Wang 2002). Two such groups are needed to generate a binary decision based on two inputs, whereby each group receives one input. During a trial in which the “correct” choice is made, the group with greater input becomes active, suppressing the group with weaker input. One can analyze a firing-rate model of this situation (Usher and McClelland 2001; Bogacz et al. 2006; Wong and Wang 2006; Wong et al. 2007; Standage et al. 2011) and find that, given certain conditions, such as fast responses of inhibitory cells (Wong and Wang 2006; Zhou et al. 2009), the system can be reduced to a two-variable model, described by the mean firing rate of each of the two groups of excitatory cells.

In this paper, we consider forms of this two-variable model that, while simple, contain the key features of neural responses: neural firing rates that rise monotonically with excitatory input and decrease with inhibitory input, but in which total firing rate is bounded between zero and a maximal level, $r_M$. Under even more specific conditions, for instance when neural responses are linear and synaptic transmission is included (Usher and McClelland 2001; Bogacz et al. 2006a), or when excitation and inhibition are balanced, the two-variable model can be reduced to a single-variable (1D) model in terms of the difference in firing rates, $r_D = r_1 - r_2$, between the two pools (Appendix A). Such a single-variable model can be described by an effective potential (Appendix B, Fig. 1), wherein the stationary states of the system (minima or maxima of the potential), the tendency for the rate-difference to drift in one direction or another (the slope of the potential), and the difficulty for the system to change from one state to another (the height of barriers to be crossed) can be easily visualized. Making the model a perfect integrator requires the further constraint that the effective potential is flat (that is, showing no tendency to drift to any preferred rate-difference) in the absence of input.

The majority of our calculations are for single-variable systems, for which a single parameter determines the flatness of the effective potential and thus the proximity of the system to a perfect integrator. A potential with a positive quadratic term has a stable state—typically the initial state of the system—and is a leaky integrator rather than a perfect integrator. Following deviation from the stable state, the system drifts back with a time constant inversely proportional to the quadratic nonlinearity. A potential with a negative quadratic term, meanwhile, is unstable, such that once the system becomes shifted away from the potential’s maximum, it has a tendency to move further and further away.
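As a worked illustration of these two cases, assume (for this sketch only) that in the absence of noise and input the rate difference follows $dr_D/dt = -dU/dr_D$, and keep only a quadratic term with a hypothetical coefficient $a$:

$$
U(r_D) = \frac{a}{2}\, r_D^{2}
\quad\Longrightarrow\quad
\frac{dr_D}{dt} = -a\, r_D
\quad\Longrightarrow\quad
r_D(t) = r_D(0)\, e^{-a t}.
$$

For $a > 0$ a perturbation decays back to $r_D = 0$ with time constant $\tau = 1/a$ (a leaky integrator), for $a < 0$ it grows exponentially away from the maximum of the potential, and for $a = 0$ the potential is flat and the system neither leaks nor amplifies, which is the perfect-integrator case.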

The addition of a uniform applied current to all cells in the system, such as would arise from nonselective input from other cortical circuits, can switch a stable quadratic potential into an unstable one ($I_S$ term in Eqs. (20–22)) but does not affect the potential of a linear integrator. If such a current ramps up over time, during the period in which a decision must be made, the effective potential of a non-linear system can gain an extra, negative quadratic component, the magnitude of which increases with said current. Just such a ramping negative quadratic component, which makes the symmetric spontaneous state less stable during the decision-making period, implements one version of the urgency-gating signal (Cisek et al. 2009; Standage et al. 2011) in our 1D model (via $G_U(t)$ in Eq. (1)). We add the same term to all models, including the perfect integrator, to allow the integrator to also gain any possible advantages of such a gradual destabilization of “undecided” trials.

A more realistic and sophisticated analysis of coupled groups of neurons (Wang 2002; Wong and Wang 2006) indicates that, as total current is increased without bias, the system changes from having a single attractor state with both groups firing at low rates, to a tristable system with the original attractor plus two extra attractors in each of which just one of the groups fires at a high rate, and then to a bistable system in which the original attractor is lost. Further increases of input can produce another tristable system in which a high-activity state for both cell-groups is introduced, which eventually becomes, at the highest input currents, the only stable state. Thus, in our analysis of the neural dynamics underlying decision-making, we designed our simplified system such that it could possess as many as three stable attractor states.

To simulate such a system (i.e., one with up to three stable attractors), we assume a sextic (i.e., 6th-order) potential for the difference in firing rates. The potential is symmetric about the origin in the absence of biased input (and thus contains three terms with even powers, see Eqs. (2, 23)). We vary a nonlinearity (barrier) parameter, $b$, which scales all nonlinear terms equally, such that the location of all stable states is maintained while the barrier heights change (see Eqs. (2, 23)). In a perfect integrator, $b = 0$. If we set $b < 0$ (as in Fig. 3a) then the initial state becomes unstable.

Fig. 3.

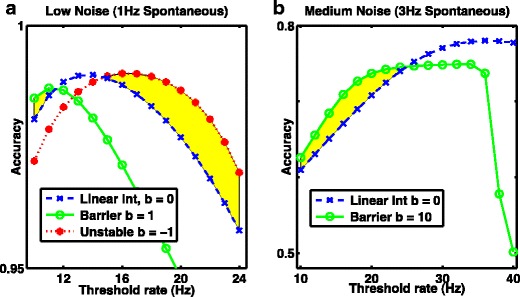

System with greatest accuracy depends on threshold. A system with a positive potential barrier (a stable point attractor) is more accurate when the threshold rate is low, while an inverted potential producing an unstable fixed point is more accurate when the threshold is high. The perfect integrator (linear, no potential barrier) is optimal at intermediate thresholds. a With low noise, $D = 100\ \mathrm{Hz^2\,s^{-1}}$, a low threshold (<20 Hz) is optimal, while b with moderate noise, $D = 900\ \mathrm{Hz^2\,s^{-1}}$, a higher threshold (>20 Hz) is optimal. a, b Blue dashed curve with crosses: linear integrator, b = 0. Green solid curve with open circles: nonlinear attractor model with barrier, a) b = 1, b) b = 10. a Dotted red curve with asterisks: unstable nonlinear model, b = −1. a, b Regions where nonlinear models are more accurate than the linear integrator are shaded yellow. Results are from 1D system with sextic potential and fixed thresholds with no other readout mechanism, so “undecided trials” are present

Stochastic fluctuations, or noise, are included in the model through a diffusion coefficient, $D$, which is proportional to the variance in firing rate of the spontaneous state, and is the rate of increase of variance with time in the perfect linear integrator. $D$ contains two main components: (1) internal noise, $D_I$, within the recurrent circuit that implements any decision-making model and that arises from the high coefficient of variation of spike trains in vivo and the probabilistic nature of vesicle release in synaptic transmission; and (2) signal noise, $D_S$, in the inputs to the circuit, arising from these same neural processing properties as well as any signal transduction noise in sensory processing. Assuming independence of these two noise sources, the total noise variance is the sum of contributing terms, $D = D_I + D_S$, or equivalently, $D_I = f_I D$ and $D_S = (1 - f_I) D$, where $f_I$ is the fraction of total noise arising from sources internal to the circuit. Thus, when we measure a decision-making circuit’s optimal performance by scaling its inputs with a gain factor, $g$, the signal noise variance scales as $g^2$, while the internal noise remains unchanged, leading to a total noise variance of $D(g) = f_I D + g^2 (1 - f_I) D = D\,(g^2 + f_I - f_I g^2)$. This dependence, combined with $i_d = g\, i_d^{(0)}$, produces the curves in Figs. 4 and 6 as a function of input gain, $g$, with different fractions of internal noise, $f_I$.
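The gain dependence described above can be sketched in a few lines. This is only an illustration of the stated relation between gain, internal-noise fraction and total noise; the function and argument names are hypothetical and do not come from the original analysis code.

```python
def scaled_noise(D, f_internal, gain):
    """Total diffusion coefficient after scaling the inputs by `gain`.

    D          -- total noise variance rate at unit gain (Hz^2 per s)
    f_internal -- fraction of that noise arising inside the circuit
    gain       -- multiplicative factor applied to the inputs
    """
    internal = f_internal * D                       # unaffected by input gain
    signal = gain ** 2 * (1.0 - f_internal) * D     # variance scales as gain squared
    return internal + signal


def scaled_bias(bias_at_unit_gain, gain):
    """Stimulus bias after scaling the inputs by `gain`."""
    return gain * bias_at_unit_gain


# Example: moderate noise, half of it internal, inputs scaled down by half.
print(scaled_noise(900.0, 0.5, 0.5))   # 450 (internal) + 112.5 (signal) = 562.5
print(scaled_bias(20.0, 0.5))          # 10.0
```

Reducing the gain lowers both the bias and the signal noise, but the internal noise sets a floor on the total, which is why the benefit of gain reduction depends on the internal-noise fraction.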

Fig. 4.

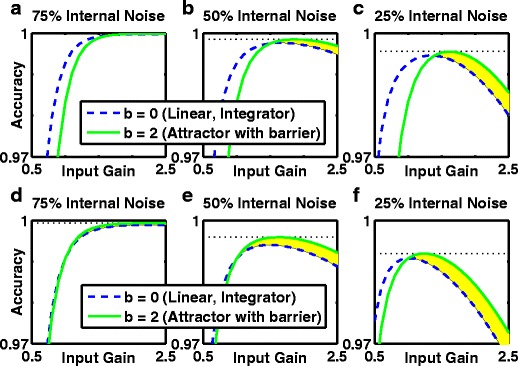

Fixed gain modulation, by scaling up or down the inputs, boosts accuracy. If the decision-making threshold is fixed, then response accuracy can be improved by scaling the input signal and input noise through gain modulation. a–c With moderate total noise ($D = 900\ \mathrm{Hz^2\,s^{-1}}$, equivalent to 3 Hz spontaneous activity) in the control system at a gain of unity, accuracy can be increased by reducing the gain (decreasing signal and noise) which slows response times. Highest accuracy is achieved with a point attractor system (green curve) when 50 % or 25 % of the total noise arises from the internal decision-making circuitry. Optimal gain is below unity (to reduce signal and noise) unless more than 50 % of the noise is internal. d–f With low total noise ($D = 100\ \mathrm{Hz^2\,s^{-1}}$, equivalent to 1 Hz spontaneous activity) in the control system at a gain of unity, accuracy can be increased by increasing the gain, which speeds up responses. Highest accuracy is achieved with a stable attractor system (green, solid curve) if 50 % of the total noise arises from the internal decision-making circuitry in d), but not 25 % in e–f). a, d 50 % of noise is internal, 50 % from stimulus. b–c, e–f 25 % of noise is internal, 75 % from stimulus. a–b, d–e “Undecided” trials are treated as guesses, ½ correct. c, f Outcome of “undecided” trials determined by final sign of the decision variable (maximum accuracy). a–f Green solid curve: accuracy for the nonlinear point-attractor model with a barrier, a–c) b = 5, d–f) b = 1. a–f Blue dashed curve: accuracy for the perfect integrator from the linear model with no barrier, b = 0. Shaded yellow: parameter region where the particular barrier model is more accurate. Black dashed horizontal line: optimal accuracy of attractor-based model

Fig. 6.

The nonlinear attractor-based model with a ramping (urgency) signal produces optimal accuracy even in low-noise systems. a–c Ramping signal, $G_U(t) = 2\ \mathrm{s^{-1}}$, acts to destabilize the symmetric, spontaneous state. d–f Ramping signal causes a dynamic threshold reduction (from 20 Hz at t = 0, linearly to zero by stimulus offset at t = 2 s) with $G_U(t) = 0$. a, d 75 % of the noise is internal, 25 % of noise scales with the stimulus as a function of gain. b, e 50 % of noise is internal, 50 % is input-dependent. c, f 25 % of noise is internal, 75 % is input-dependent. Linear integrator, b = 0. The threshold reduction used in d–f) eliminates undecided trials and “guessing” but the results are qualitatively identical to the use of the urgency signal. a–f Both methods for reducing the numbers of undecided trials boost performance more for the barrier model than the linear integrator (compare b–c and e–f with Fig. 4d–f). Green solid curve: accuracy for the nonlinear point-attractor model with a barrier, t = 2. Blue dashed curve: accuracy for the perfect integrator from the linear model with no barrier, t = 0. Shaded yellow: parameter region where the particular barrier model is more accurate. Black dashed horizontal line: optimal accuracy of attractor-based model

We define the threshold for decision-making (an absorbing boundary at which the trial ends, with no further processing of inputs) to be at a rate-difference slightly larger than the unstable fixed points of the system. Any input bias produces a linear term in the potential (“tilting” it, Fig. 1). If sufficiently large (compared to the nonlinearity in the system), the bias alone can destabilize the initial (spontaneous) state and that of the non-preferred decision state. In the absence of stochastic noise, such a system’s response would perfectly follow the difference in inputs. By systematically varying the barrier height through the parameter $b$, we investigate the dynamics of jumps between stable states in comparison to gradual integration when noise is present.

Finally, we note that scaling relationships exist between variables, such that results in the perfect integrator for given values of noise variance, $D$, threshold, $\theta$, and stimulus bias, $I_D$, are identical to those with respective values $D' = k^2 D$, $\theta' = k\theta$ and $I'_D = k I_D$. To produce identical results in the attractor model, a scaling of the potential (see below) is also required such that $U'(r_D) = k^2\, U(r_D/k)$, where $U(r_D)$ is the potential describing the original attractor. This first scaling relationship allows a comparison of results with either different thresholds or different stimulus biases. A similar scaling relationship allows a comparison of results with different input durations, as results for the perfect integrator with noise variance, $D$, stimulus bias, $I_D$, and stimulus duration, $t_{\mathrm{off}}$, are identical to those with $D' = k' D$, $I'_D = k' I_D$ and $t'_{\mathrm{off}} = t_{\mathrm{off}}/k'$. The attractor model’s results are then identical if the effective potential is also scaled, from its original $U(r_D)$ to $U'(r_D) = k'\, U(r_D)$.

Thus our results with a single value of stimulus strength and duration are generalizable to other conditions.
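Both scaling relationships can be checked with a short derivation. The sketch below assumes a generic Langevin form for the rate difference, $dr_D/dt = -dU/dr_D + I_D + \sqrt{D}\,\eta(t)$ with unit-strength white noise $\eta(t)$; this form is an assumption made for illustration and omits the urgency and forcing terms.

$$
\begin{aligned}
\text{Rate scaling } (\tilde r = k\, r_D):\quad
\frac{d\tilde r}{dt}
&= -k \left.\frac{dU}{dr_D}\right|_{r_D = \tilde r / k} + k I_D + k\sqrt{D}\,\eta(t) \\
&= -\frac{d}{d\tilde r}\Big[k^{2}\, U(\tilde r / k)\Big] + (k I_D) + \sqrt{k^{2} D}\;\eta(t), \\[6pt]
\text{Time scaling } (\tilde t = t / k'):\quad
\frac{dr_D}{d\tilde t}
&= -k'\,\frac{dU}{dr_D} + k' I_D + k'\sqrt{D}\,\eta(t) \\
&= -\frac{d}{dr_D}\Big[k'\, U(r_D)\Big] + (k' I_D) + \sqrt{k' D}\;\tilde\eta(\tilde t).
\end{aligned}
$$

The first line reproduces $U' = k^2 U(r_D/k)$, $I'_D = k I_D$ and $D' = k^2 D$, with the threshold mapped to $\theta' = k\theta$ because every rate is multiplied by $k$. The last step of the time scaling uses $\eta(t) = \tilde\eta(\tilde t)/\sqrt{k'}$, which follows from $\delta(k' \tilde t) = \delta(\tilde t)/k'$ for the white-noise correlation, and gives $D' = k' D$ with the threshold unchanged.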

Trajectories

In order to plot trajectories for the one-dimensional system, we simulate individual instances of the stochastic equation for the variation of rate differences as a function of time (using the forward Euler-Maruyama method). In this case the dynamics follow:

| 1 |

where $\eta(t)$ is a white-noise term with zero mean and unit standard deviation. $G_U(t)$ and $F(t)$ are, respectively, the urgency-gating signal and the forcing term (see below), each used in a subset of simulations.
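A minimal sketch of such a single-trial simulation is given here. It is not the exact model equation: it assumes a Langevin form in which the drift is the negative slope of the potential plus the bias, with the urgency term entering as $G_U(t)\,r_D$ (the slope of a destabilizing quadratic term). The sextic potential, its fixed-point locations (`r_unstable`, `r_stable`) and all function names are hypothetical placeholders, chosen only so that $b$ rescales the barrier without moving the fixed points.

```python
import math
import random


def sextic_slope(r, b, r_unstable=18.0, r_stable=40.0):
    """Slope dU/dr of a hypothetical symmetric sextic potential.

    Fixed points sit at 0, +/-r_unstable and +/-r_stable for any b, so b only
    rescales the barrier height. The numerical values are placeholders.
    """
    x2 = (r / r_stable) ** 2
    xu2 = (r_unstable / r_stable) ** 2
    return b * r * (x2 - xu2) * (x2 - 1.0) / xu2


def simulate_trial(b, bias, D, theta=20.0, t_off=2.0, dt=1e-3,
                   urgency_rate=0.0, rng=None):
    """Forward Euler-Maruyama integration of one trial of the rate difference.

    Returns (choice, response_time, path): choice is +1 or -1 when a threshold
    is crossed and 0 for an "undecided" trial (response_time is then None).
    """
    rng = rng or random.Random()
    r, path = 0.0, [0.0]
    for step in range(int(round(t_off / dt))):
        t = step * dt
        g_u = urgency_rate * t                      # assumed linear urgency ramp
        drift = -sextic_slope(r, b) + g_u * r + bias
        # Noise increment has variance D * dt, so variance grows at rate D.
        r += drift * dt + math.sqrt(D * dt) * rng.gauss(0.0, 1.0)
        path.append(r)
        if abs(r) >= theta:                         # absorbing boundary ends the trial
            return (1 if r > 0 else -1), t + dt, path
    return 0, None, path


# One seeded trial of the barrier model and one of the perfect integrator (b = 0).
rng = random.Random(0)
attractor_trial = simulate_trial(b=9.0, bias=20.0, D=900.0, rng=rng)
integrator_trial = simulate_trial(b=0.0, bias=20.0, D=900.0, rng=rng)
```

Setting `D=0.0` with `b=0.0` recovers deterministic integration of the bias, which is a convenient sanity check on the time step.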

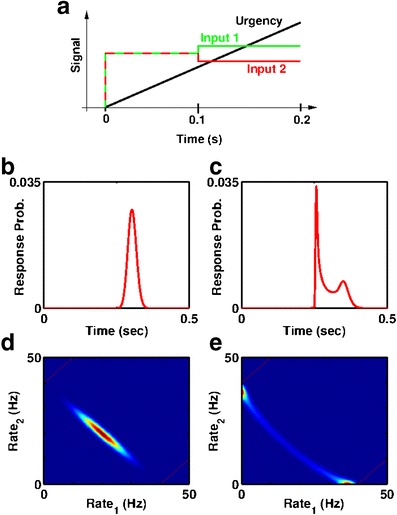

Mechanisms of urgency-gating signals

We included an urgency-gating signal in a subset of simulations, on the basis of evidence suggesting stimulus-nonspecific ramping activity during behavioral tasks. Such a signal could provide a ramping additive current to both cell-groups, or could provide a ramping multiplicative gain modulation to all inputs. In the single-variable model, we assess and compare three different implementations of an additive ramping current, as well as one implementation of multiplicative gain modulation, totaling four different versions of urgency-gating signal.

First, we added a destabilizing quadratic term in the potential, $G_U(t)$ in Eq. (1), which increased linearly from zero upon stimulus onset, to mimic an additive current. The motivation for including such an effect of an additive current is found in Eqs. (20–22), which show that in a nonlinear system an additive current destabilizes the spontaneous symmetric state with $r_D = 0$. We also incorporate such a term in the perfect integrator model of decision-making, as a fair comparison, but doing so renders the model no longer a perfect integrator.

Second, we implemented urgency-gating using the same term, $G_U(t)$ in Eq. (1), to mimic an additive current, but with the trial-averaged linear ramp of the gating signal produced by step functions on individual trials. Thus, the time at which $G_U(t)$ stepped from zero to its maximum was drawn from a uniform probability distribution within the interval of the stimulus duration. Such simulations (data not shown) produced similar, but slightly more accurate, responses than those with the linearly ramping input.

Our third method of implementing an additive urgency-gating signal is to decrease the threshold from its standard value at stimulus onset to zero by the end of the stimulus. This third method is instantiated if decisions are reached when the rate of an individual pool in the 2D model reaches a fixed value (rather than the rate-difference between the two pools reaching a fixed value). In such a case, the increase in individual firing rates caused by an additive increase in current leads to a reduction in the threshold for the difference in firing rate (intuitively, the symmetric point with $r_D = 0$ is closer to threshold if the two cell-groups are firing more, so that each is closer to its individual threshold). With such an implementation, the perfect integrator remains a perfect integrator, albeit with dynamic thresholds.

The first and third methods are implementations of two separate consequences of linearly ramping additive current to the full two-variable system. Thus, when we implement an additive urgency-gating signal in the two-variable system, we do not use these separate methods, but simply add the term $G_U(t)$ directly to the separate inputs for each neural group.

Finally, we included a subset of simulations with an urgency-gating signal consisting of a multiplicative increase in the inputs. Assuming a conductance amplification, we multiplied the signal by $[1 + G_U(t)]$ and the noise variance term, $D$, by $[1 + G_U(t)]^2$, where $G_U(t)$ was a linearly ramping function. Note that, when making a decision based on the difference in two inputs, a multiplicative gain increases the effective stimulus (as the difference in inputs scales with the gain) whereas an additive urgency-gating signal has no impact on the effective stimulus (the difference in inputs is unchanged).
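The four urgency-gating variants described in this section can be sketched as small helper functions. The names and the parameter values in the demonstration (`g_max`, `theta0`, the 2 s duration) are hypothetical and serve only to illustrate the time courses; the last lines check numerically that step functions with uniformly distributed step times average to a linear ramp.

```python
import random


def ramp(t, t_off, g_max):
    """Linearly ramping urgency signal, zero at stimulus onset."""
    return g_max * min(max(t / t_off, 0.0), 1.0)


def step_urgency(t, step_time, g_max):
    """Single-trial step version; step_time is drawn per trial from U(0, t_off)."""
    return g_max if t >= step_time else 0.0


def collapsing_threshold(t, t_off, theta0):
    """Threshold falling linearly from theta0 at onset to zero at t_off."""
    return theta0 * (1.0 - min(max(t / t_off, 0.0), 1.0))


def multiplicative_gain(bias, D, g_u):
    """Scale the signal by (1 + g_u) and the noise variance by (1 + g_u)**2."""
    gain = 1.0 + g_u
    return bias * gain, D * gain ** 2


# Trial-averaged step functions approximate the linear ramp.
rng = random.Random(1)
t_off, g_max, t_probe, n_trials = 2.0, 2.0, 0.5, 20000
mean_step = sum(
    step_urgency(t_probe, rng.uniform(0.0, t_off), g_max) for _ in range(n_trials)
) / n_trials
print(mean_step, ramp(t_probe, t_off, g_max))  # the two values should be close
```

Note that only `multiplicative_gain` changes the effective stimulus; the additive variants leave the difference in inputs untouched and act through the potential or the threshold.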

Forcing a choice

In simulations with weak input bias and low noise, the perfect integrator with a fixed threshold may fail to perform optimally simply because it fails to reach the threshold, even though the weight of integrated evidence is biased toward the correct choice. However, the attractor model always produces more such “undecided” trials, because it is a “leaky integrator” whose neural firing rates inevitably drift back towards zero (and zero rate difference) in the absence of noise. Thus, compared to chance guessing, any method for producing readout of trials that do not reach threshold has more potential to enhance the accuracy of an attractor-based model than that of the perfect integrator. Models with an urgency-gating signal are less prone to such “undecided” trials, though they can still arise, except if the urgency signal is incorporated by threshold reduction, in which case all trials reach the threshold.

One commonly used manner of “forcing” an “undecided” trial is simply to take the sign of the value of the rate-difference as the indicator of choice (sometimes this is termed the “Interrogation Paradigm” (Bogacz et al. 2006)). While such perfect readout is biologically unlikely, we present the results of such perfect readout in a subset of figures.

We also implemented a forced choice in a more biophysical manner, similar to that with which we implement the additive urgency-gating signal, but with one difference: rather than adding a linearly ramping modest unbiased input to the system (G U(t) in Eqs. (1–2)), to force a choice, we instead added a large, constant unbiased input for the 100 ms prior to the necessary response time (F(t) in Eqs. (1–2)). That is,

| 2 |

where F(t) is a step function equal to I F for t off − 0.1 < t < t off and equal to 0 otherwise.
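The step function F(t) described above can be written directly. In this minimal sketch the name `forcing_current` and the `window` argument are illustrative; the 100 ms window and the open interval follow the definition in the text.

```python
def forcing_current(t, t_off, i_f, window=0.1):
    """Step-function forcing input F(t).

    Returns i_f for t_off - window < t < t_off and 0 otherwise.
    """
    return i_f if (t_off - window) < t < t_off else 0.0


# Illustrative values: t_off = 2 s, I_F = 200
print(forcing_current(1.00, t_off=2.0, i_f=200.0))  # before the forcing window
print(forcing_current(1.95, t_off=2.0, i_f=200.0))  # inside the forcing window
```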

We should point out that our use of F(t) in Eq. (2) allows us to force a choice by causing the final difference in firing rates to move away from zero for all systems, including the perfect integrator. Our analyses show that such forcing is easily achievable only in a nonlinear system for which the dynamics of rate-differences depend on the total input current. This effect is visible in Eq. (22), as the negative quadratic term in the effective potential that is proportional to the sum of stimulus currents. Such a term appears in the dynamic equations for the rate difference (Eqs. (16) and (20–21)) and is proportional to the nonlinearity in the firing rate curve for both quadratic and cubic versions. In a linear system, adding additional input equally to two groups has no impact on their difference of firing rates. It is only in the nonlinear attractor formalism above that an equal increase in applied current to all cells in a decision-making network leads to an increase in the difference in firing rates, ultimately forcing a choice. Machens and Brody (Machens et al. 2005) used essentially the same mechanism in a task with three epochs to produce a forced choice following integration of evidence. Even though the rate equations do not justify our inclusion of such a term for the perfect integrator, we do include the term in a model-independent manner so as to avoid any favoring of attractor models.

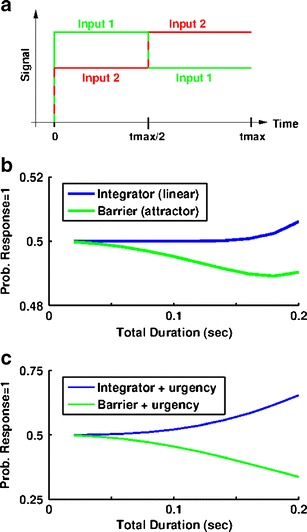

Compelled response inputs

Most models of tasks with a constant stimulus can reproduce the key results of improved accuracy with increased signal strength or decision-making time, and a unimodal, positively skewed distribution of response times. However, models’ predictions of the effects of temporal variation of the stimulus during decision-making can be more varied. For example, while perfect integrators weight all evidence equally, attractor models give most weight to evidence immediately before the decision; unstable models, meanwhile, give most weight to early information. Thus, examinations of two tasks with non-constant stimuli allowed us to more thoroughly evaluate and compare linear and nonlinear models.

In the first task, stimulus reversal, the sign of the stimulus in the single-variable model is switched at the midpoint of the total duration. Such a switch corresponds to a reversal of direction in a moving-random-dot perceptual experiment (Rüter et al. 2012).

The second task follows a pair of recent studies (Salinas et al. 2010; Shankar et al. 2011), which examined decision-making in monkeys trained to initiate a response (in a two-alternative forced choice task) in advance of the presentation of any information that might indicate which response is correct. In order to simulate such a task, we use the two-variable model with the mean current and any urgency-gating signal, G U(t), applied equally to the two cell-groups from the start of the simulation, but apply the difference in current only from the midpoint, t off/2, using a total duration of t off = 500 ms.
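The two time-varying stimulus protocols reduce to simple functions of time. The sketch below is a schematic reading of the descriptions above: the function names are hypothetical, and the bias is assumed to switch instantaneously at the midpoint.

```python
def reversal_bias(t, i_d, t_off):
    """Stimulus reversal: the sign of the bias flips at the midpoint t_off / 2."""
    return i_d if t < t_off / 2.0 else -i_d


def compelled_bias(t, i_d, t_off=0.5):
    """Compelled response: no bias until the midpoint, then the bias is applied.

    The mean current and any urgency signal are assumed to be supplied
    separately from t = 0 and are not modeled here.
    """
    return 0.0 if t < t_off / 2.0 else i_d


# Illustrative values: bias 0.8, compelled-response duration 0.5 s
for t in (0.1, 0.3):
    print(t, reversal_bias(t, 0.8, 0.5), compelled_bias(t, 0.8))
```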

Solving the time evolution of the probability density function

In order to assess the decision-making accuracy of different systems over ranges of parameters, our principal method is to solve the time-evolution of the probability density function, P(r D, t), over a fixed stimulus duration of 2 s in a system with absorbing boundaries marking the decision threshold. The probability density function indicates the likelihood that the rate-difference, r D, takes a particular value at a given time, t. A single calculation of the probability density function can take the place of a large number of simulated trials (and in fact describes the exact probability of any possible outcome for a trial), so its calculation is a much more efficient method for describing the system than brute force simulation.

The dynamics of the probability density function follow the Fokker-Planck equation, which includes two terms (Eq. (3)). The first, diffusive term contains the effects of noise and spreads out the probability density function as time progresses, adding variability. The second, deterministic term causes the system to follow any input bias or move toward an attractor state according to the deterministic rate equations (e.g. Eq. (9)). Thus the Fokker-Planck equation can be written as:

| 3 |

where the effective potential, U(r D,t), is stimulus-dependent and can in general possess three attractor states (Fig. 1). For our standard parameters, with a choice-threshold set at ±20Hz, we fix the location of attractor states at 0 and ±30Hz with the unstable fixed points at ±17Hz. Our results do not qualitatively depend on these values, so long as the threshold for making a response is not much greater than the highest stable steady states of the system (i.e. a rate-difference of ±30Hz with these parameters). Indeed, quadratic and quartic potentials (with a single steady state at r D = 0) produce almost identical results to the sextic potential (data not shown). We vary the stability of attractors (i.e. the height of barriers between stable states) through a single parameter, b. This parameter is zero for a perfect integrator, greater than zero for a model with a stable initial state, and less than zero for a model with an unstable initial state.

We assume the probability density function to be initiated as a δ-function at zero rate-difference (P(r D, 0) = δ(r D)) denoting a hard reset, so that the firing rates of the two groups are equal at the beginning of any trial. For each time step of the calculation, we accumulate the probability distribution crossing either threshold as the probability that a response has been reached in that time interval. We then set the probability distribution to zero outside the threshold (that is we assume absorbing boundaries and a fixed threshold as suggested by electrophysiological data (Kiani et al. 2008)).

The total fraction of responses categorized as “correct” corresponds to the sum over all time steps of the accumulated probability distribution reaching the positive threshold. The total fraction of responses deemed incorrect is a similar sum of the distribution reaching the negative threshold. We assess percent correct/incorrect and response latencies in a range of networks with different parameters, as shown in Table 1. D in this table is the level of noise variance, which determines the likelihood of errors and the spread of response times; its values were chosen to produce spontaneous firing rates, in the absence of inputs, in the range of 1 Hz to 5 Hz, as is typical of cortical excitatory neurons. The input bias, I D(t), is the strength of the signal to be integrated. The range of the urgency-gating signal, G U(t), determines how easily the system can switch from a strongly stable to a strongly unstable spontaneous state over the course of stimulus presentation. The time to stimulus-onset, t on, determines the amount of variability in the system at the time of stimulus onset. In the majority of protocols, the stimulus remained for a fixed duration, up to a time, t off, at which time we either forced a response (see above), or assigned “undecided” trials (the fraction that had not reached threshold) equally as “correct” or “error” trials, or assigned all trials with a final value of r D > 0 as correct and those with r D < 0 as incorrect (perfect mathematical readout).
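The bookkeeping described above (a δ-function initial condition, absorbing thresholds, and accumulation of the probability crossing each threshold) can be sketched with an explicit finite-difference scheme. This is not the authors' implementation: the conservative upwind scheme, the coarse grid, the convention that the variance of r D grows as D·t, and the constant drift of 20 Hz/s used in the example are all assumptions made for illustration.

```python
def solve_fokker_planck(drift, D, theta, t_off, dr=1.0, dt=2e-4):
    """Evolve P(r_D, t) between absorbing thresholds at +/- theta.

    drift : function r -> deterministic dr_D/dt (Hz/s), i.e. minus the slope
            of an effective potential
    D     : noise variance per unit time (assumed convention: Var ~ D * t)
    Stability of this explicit scheme needs dt * (|drift|/dr + D/dr**2) <= 1.
    Returns (correct, error, undecided, undecided_positive).
    """
    n = int(round(2 * theta / dr))            # number of cells between thresholds
    r = [-theta + i * dr for i in range(n + 1)]
    p = [0.0] * (n + 1)
    p[n // 2] = 1.0 / dr                      # discrete delta function at r_D = 0
    correct = error = 0.0
    for _ in range(int(round(t_off / dt))):
        # Probability flux through each interface between nodes i and i + 1
        flux = []
        for i in range(n):
            v = drift(0.5 * (r[i] + r[i + 1]))
            upwind = p[i] if v > 0 else p[i + 1]
            flux.append(v * upwind - 0.5 * D * (p[i + 1] - p[i]) / dr)
        correct += flux[n - 1] * dt           # mass absorbed at +theta
        error += -flux[0] * dt                # mass absorbed at -theta
        for i in range(1, n):                 # boundary nodes stay at zero
            p[i] -= dt / dr * (flux[i] - flux[i - 1])
    undecided = sum(p[1:n]) * dr
    undecided_positive = sum(p[i] for i in range(1, n) if r[i] > 0) * dr
    return correct, error, undecided, undecided_positive


# Perfect integrator with an illustrative constant drift of 20 Hz/s
c, e, u, u_pos = solve_fokker_planck(lambda r: 20.0, D=900.0, theta=20.0, t_off=2.0)
print("correct:", c, "error:", e, "undecided:", u)
print("minimum accuracy (undecided split by chance):", c + u / 2)
print("accuracy with readout of the sign of r_D:    ", c + u_pos)
```

Passing a state-dependent `drift`, for example `lambda r: 20.0 - k * r` with a hypothetical leak `k`, turns the same routine into a simple model with a stable initial state. Because the scheme is written in flux form, the absorbed and remaining probability always sum to one.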

Table 1.

Parameters for Fokker-Planck equations for 1-D effective potential

| Parameter | Symbol | Standard Value | Range | Units |
|---|---|---|---|---|
| Diffusion constant (noise variance) | D | 900 | 100; 900; 2500 | Hz² s⁻¹ |
| Bias term | i D | 20 | 20 | – |
| Stimulus duration | t off | 2 | 2–∞ | s |
| Choice threshold | θ | 20 | 10–100 | Hz |
| Time constant | τ | 0.010 | 0.010 | s |
| Quadratic term | α | 0–60 | 0–60 | – |
| Quartic factor | β | 4/900 | 4/900; 1/900 | – |
| Sextic factor | γ | β/1200 | β/1200; β/4800 | – |
| Urgency gating maximum | G U max | 0 | 0–20 | – |
| Forcing term | I F | 0 | 0; 200 | – |
| Input gain | g | 1 | 0–3 | – |
| Internal fraction of noise | f I | N/A | 0.25; 0.5; 0.75 | – |

Probability density function for two variables (2D)

To ensure that our results for the single-variable system with an effective potential apply to the complete system with two variables (Table 2), we went on to simulate the evolution of the probability density function using the two rate variables, r 1 and r 2 (rather than just their difference, r D = r 1 − r 2). Equations (6) and (17) describe the deterministic terms. Adding a diffusion term (D′ = D/2) leads to:

| 4 |

Table 2.

Parameters for 2-D Fokker-Planck equations

| Parameter | Symbol | Standard Value | Range | Units |
|---|---|---|---|---|
| Diffusion constant (noise variance) | D′ = D/2 | 450 | 50; 450; 1250 | Hz² s⁻¹ |
| Choice threshold | θ | 20 | 20 | Hz |
| Time constant | τ | 0.010 | 0.010 | s |
| Maximum rate | r M | 100 | 100 | Hz |
| Maximum current | I M | 200 | 200 | – |
| Sum of stimuli | I S | 40 | 40–80 | – |
| Bias of stimuli | I D | 0.8 | 0.8 | – |
| Stimulus duration | t off | 2 | 0.1–∞ | s |
| Nonlinearity | b | 0–0.5 | 0–0.5 | – |

We simulated the system on a discrete mesh with Δr = 0.2Hz, and confirmed key results using a finer mesh (Δr = 0.05Hz). Similarly we confirmed all results to be accurate to better than 1 part in 1000 with a decrease in Δt by a factor of 10 and to match the equivalent one-dimensional simulations of a quartic potential (Eq. (22)).

Simulation details

We simulated all dynamical equations in Matlab (Mathworks, Natick, MA) using the forward Euler-Maruyama method. Time steps, Δt, were chosen such that results did not change by more than 1 part in 10,000 when using a 10-fold smaller time step. This led to Δt = 2 × 10⁻⁶ s for all cases with D = 2500 Hz² s⁻¹ and Δt = 5 × 10⁻⁵ s for D = 100 Hz² s⁻¹ in the absence of a forcing current, and Δt = 1 × 10⁻⁵ s in all other cases. For Fokker-Planck simulations the “spatial” grid used Δr D = 0.2 Hz, though key results were verified (unchanged to 1 part in 1000) using a finer mesh with Δr D = 0.05 Hz. Code is available by visiting the website: http://people.brandeis.edu/~pmiller/Decision_code.
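For readers unfamiliar with the forward Euler-Maruyama method, a single-trial sketch for the one-variable system follows. It is written in Python rather than Matlab and is not the published code: the function name, the constant illustrative drift, the convention that noise variance grows as D·Δt per step, and the coarse time step (much larger than the values quoted above) are all assumptions chosen to keep the example quick to run.

```python
import math
import random


def simulate_trial(drift, D, theta, t_off, dt=1e-3, rng=random):
    """One Euler-Maruyama trial of dr = drift(r) dt + sqrt(D) dW.

    Returns (choice, time): choice is +1 or -1 when the threshold at
    +theta or -theta is crossed, or 0 if undecided at t_off.
    """
    r = 0.0                                   # hard reset: zero rate-difference
    sigma = math.sqrt(D * dt)                 # noise standard deviation per step
    for k in range(int(round(t_off / dt))):
        r += drift(r) * dt + sigma * rng.gauss(0.0, 1.0)
        if r >= theta:
            return 1, (k + 1) * dt
        if r <= -theta:
            return -1, (k + 1) * dt
    return 0, t_off


rng = random.Random(0)                        # fixed seed for reproducibility
results = [simulate_trial(lambda r: 20.0, D=900.0, theta=20.0, t_off=2.0, rng=rng)
           for _ in range(200)]
choices = [c for c, _ in results]
print("correct:", choices.count(1),
      "error:", choices.count(-1),
      "undecided:", choices.count(0))
```

Many such trials are needed to estimate what a single probability-density calculation provides directly, which is the efficiency argument made for the Fokker-Planck approach.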

Results

Optimality of single-variable models

Single-variable models are those in which the difference in firing rates is the only variable of importance, i.e., those in which the dynamics of the system and response times depend only on the rate-difference between two neural groups. For such models an effective potential can be produced whose slope describes the direction of deterministic change in rate-difference. A static model is one whose parameters do not change over time; equivalently, after stimulus onset the effective potential is static. This represents a neural circuit with fixed connections responding to a constant input, and thus does not contain any urgency-gating signal. Below, we examine optimality in this sort of model, describing first the basic result and then the results of various parameter manipulations.

Decision-making accuracy with attractors and barriers to diffusion

In our standard simulations, starting with a “moderate” noise level of D = 900 Hz² s⁻¹, we found that increasing the nonlinearity factor from zero (i.e., turning the network from an ideal integrator into a network that hops from attractor to attractor) improved accuracy (Fig. 2a, solid green trace). The nonlinearity factor produces a barrier to noise-driven diffusion, reducing the spread of the probability distribution. This had two opposing effects. First, noise-driven errors, namely incorrect responses, became rarer with increasing barrier height (Fig. 2a, dashed red trace). At the same time, the number of “undecided” trials, for which threshold is not reached in the allotted 2 s of decision time, increased with barrier height (Fig. 2a, dot-dashed magenta trace); beyond an optimal barrier height, the mean response time exceeded the allowed decision time. However, for a range of barrier heights, the dominant effect was the reduction in error probability, so the greatest accuracy was achieved with a non-zero barrier.

Fig. 2.

Probability of incorrect response decreases with increasing height of effective potential barrier. a Moderate noise, D = 900Hz 2 s −1, equivalent to 3Hz spontaneous activity. b Low noise, D = 100Hz 2 s −1, equivalent to 1Hz spontaneous activity. a, b Dotted blue = correct responses; Dashed red = incorrect responses; Dot-dashed magenta = no response (undecided); Solid green = minimum accuracy = correct + (undecided)/2; Solid black = maximum accuracy = probability of final rate greater than zero. Results are from 1D system with sextic potential and fixed thresholds

In the lower-noise system (D = 100 Hz 2 s −1) introduction of a barrier via a positive nonlinearity did not improve accuracy (Fig. 2b, solid green trace), because even without a barrier to diffusion of firing rates, the threshold was often not reached within the 2 s of integration time. Thus, the number of undecided trials (Fig. 2b, dot-dashed magenta trace), rather than the number of incorrect responses (Fig. 2b, dashed red trace), became the rate-limiting factor on accuracy in this case.

The negative impact of undecided trials in models with a barrier to diffusion is greatly ameliorated if the response can be determined by a perfect readout of the final sign of the decision variable (Fig. 2b, solid black trace). Since such perfect readout is unlikely in a real biological system, in later sections we will assess how biologically plausible mechanisms can improve accuracy of otherwise “undecided” trials towards that of a perfect readout.

To summarize, Fig. 2 shows that given a specific fixed input, a specific fixed threshold and a specific time limit for responses, an integrator can be, but need not be, the most accurate model, even though adding that time limit causes more “undecided” trials in the nonlinear model. Adding a barrier in Fig. 2a improves accuracy because it slows responses; the integrator responds in much less time than the 2 s available, but more of the quickest responses are errors.

Adjustment of response times via changing the location of a fixed threshold

In a later section we will go more deeply into an assessment of optimality when taking response time into account, but in the following two subsections we examine methods whereby the system could be improved simply by optimizing static parameters. We assess two methods that have reasonable claims to biological plausibility, namely adjustment of the decision threshold (as in (Lo and Wang 2006; Simen et al. 2006; Simen et al. 2009; Bogacz et al. 2010)—this subsection) and modulation of input gain (as in (Brown et al. 2005; Shea-Brown et al. 2008; Eckhoff et al. 2009)—see next subsection).

We assessed the system’s accuracy as a function of the static decision-making threshold (i.e. the firing rate needed to produce a response) under both low noise conditions (in which the perfect integrator produced many “undecided” responses) and high noise conditions (in which the perfect integrator reached threshold too quickly compared to the time available for stimulus integration). In the system with low noise, we found that the accuracy of the perfect integrator was greatest at a threshold below the standard level used in Fig. 2 (20 Hz), while the optimal accuracy of a system with moderate noise was achieved at a threshold above the standard level. In both low and moderate noise, parametric variation of the threshold demonstrated that the optimal accuracy of the perfect integrator was better than the optimal accuracy of any nonlinear system with a static barrier produced by a positive nonlinearity (Fig. 3a, dashed blue versus solid green traces). Rendering the initial state unstable via a negative nonlinearity, however, reliably resulted in improved accuracy, so that the highest absolute accuracy was achieved by a non-integrator (Fig. 3a, dotted red trace). Yellow regions in Fig. 3 represent the improvement in accuracy realized by inclusion of nonlinearity.

Optimal performance via adjusting the level of a fixed input gain is constrained by internal circuit noise

The early stages of sensory processing modulate the amplitude and gain of any external signal, quite possibly moving them toward the optimal range for later processing. Indeed, it has been suggested that one function of norepinephrine is to produce precisely such gain modulation of the inputs to and within the decision-making circuitry (Brown et al. 2005; Eckhoff et al. 2009). Thus it is reasonable to ask what effect scaling of the inputs—and of any associated noise—has on accuracy, and whether optimization of this static parameter favors integrators, stable attractors, or unstable fixed points.

Of course, the “associated noise” is actually a sum of two sources of noise: input and internal (i.e. within the decision-making circuit). The former of these most likely scales with input gain (Gold and Shadlen 2000, 2003), while the latter does not. We therefore examined how performance was affected by parametric adjustments of the proportion of the total noise contributed by each source. Specifically, for a given proportion of internal noise at a gain of unity, whose value we held constant, we scaled both the input signal and the standard deviation of the input noise by a factor, the input gain, that we parametrically varied. Such an effect would arise by a scaling of the conductance of afferent synapses.
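The gain manipulation can be made concrete with a short calculation. The sketch below assumes that the input and internal noise sources are independent, so that their variances add, and that the internal fraction f I is defined at a gain of unity; the function name is hypothetical.

```python
def scaled_signal_and_noise(i_d, D, f_internal, gain):
    """Signal and total noise variance after scaling the input by `gain`.

    i_d        : input bias at unit gain
    D          : total noise variance at unit gain
    f_internal : fraction of D that is internal at unit gain
    The input-noise standard deviation scales with gain, so its variance
    scales with gain**2; internal noise is unaffected.
    """
    d_internal = f_internal * D
    d_input = (1.0 - f_internal) * D * gain ** 2
    return gain * i_d, d_internal + d_input


# Illustrative values: bias 20, D = 900 Hz^2/s, half of the noise internal
for g in (0.0, 0.5, 1.0, 2.0):
    signal, noise_var = scaled_signal_and_noise(20.0, 900.0, 0.5, g)
    print(g, signal, noise_var)
```

At unit gain the total variance is D by construction, and as the gain approaches zero only the internal noise remains.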

We found, in the system which possessed “medium” noise at a gain of unity, that the optimal accuracy of the model with a stable attractor and barrier (Fig. 4, solid green traces) is higher than that of the integrator (Fig. 4, dashed blue traces), except at a very low fraction (≤ 10 %) of internal noise (Fig. 4a–c). This medium noise system benefits from a reduction of input gain, because in our standard protocol (with a gain of unity) decisions were made more quickly—and thus with more errors—than is optimal. Reductions in input gain (which reduce the signal) can improve decision-making accuracy when they reduce the noise in the system sufficiently.

Since “undecided” trials are rare in the system with medium noise, implementing a perfect readout of the final state of the system (Fig. 4c, the maximum accuracy condition) has little impact on these results, except to further enhance the advantage of the attractor model (which has more undecided trials) over the perfect integrator.

Reduction of noise helps the attractor model less, because noise actually drives the stochastic transitions constituting a decision. Thus in Fig. 4b at the lowest input gains, the barrier model is more accurate if more noise is internal, so not scaled away (compare Fig. 4b green trace near zero gain with the same trace in Fig. 4a). Such a seemingly paradoxical result of enhanced accuracy with decreased signal to noise ratio, seen also in our spiking-neuron simulations of the task (Miller and Katz 2010), is actually a reliable phenomenon, akin to stochastic resonance, often observed in models with a stable initial state (Gammaitoni and Hänggi 1998; Gluckman et al. 1998; McDonnell and Abbott 2009; Miller and Katz 2010).

For the low noise system, optimal accuracy for the integrator (Fig. 4d–f, dashed blue traces) is below that of the attractor model with a barrier (Fig. 4d–f, solid green traces) if internal noise is > 50 % of the total, though differences between the two are minuscule. In the standard system (i.e. with unit gain) with low noise, accuracy is limited by the number of undecided trials, and thus improves as gains increase from unity. The improvement is larger if the increase in signal does not come with a concomitant increase in noise, which is the case when the dominant contribution to the noise is internal (compare Fig. 4d and e). If the “undecided” trials are settled based on the sign of the decision variable (i.e. the maximum accuracy condition, Fig. 4f) then accuracy increases considerably in all systems, but more so for the attractor model, such that its accuracy matches that of the perfect integrator even if only 25 % of the noise is internal. Since performance in the attractor system (under standard conditions) was more limited by undecided trials than was performance of the perfect integrator, in all cases the attractor system benefits more from any increase above unit gain (Fig. 4d–f, solid green traces), so the already-observed advantage over the perfect integrator only increases (Fig. 4d).

In summary, if a noise-free circuit were possible, the integrator could produce optimal accuracy, but if even a relatively small fraction (≥ 15 % of D = 900Hz 2 s −1) of the total noise in the decision-making circuitry is internal rather than arising from inputs, non-integrators achieve greater accuracy than the perfect integrator.

Decision-making accuracy in models with an urgency-gating signal

An urgency-gating signal is a method for increasing the likelihood of a response as time passes, and for settling otherwise undecided trials. It has been suggested to explain behavioral data in some tasks (Cisek et al. 2009), and has been further suggested to allow greater accuracy in decision-making tasks than a true perfect integrator (Standage et al. 2011). The urgency-gating signal can be instantiated as a ramping multiplicative gain in the inputs, or a lowering of threshold with time, or a reduction of the stability of the initial, undecided state with time. We investigate and provide results for all three alternatives (and justify the third one, since it is novel).

In nonlinear models with an initial point attractor and barrier the initial, undecided state will be destabilized by a ramping input current (Eqs. (21–22)), increasing the likelihood of response. Moreover, such a ramping input current can multiplicatively increase the effect of any input bias (Eqs. (21–22)), thus providing a particularly simple biological implementation of urgency-gating. Such an unbiased ramping input current has no effect on rate-differences in a linear, perfect integrator, but to ensure a fair comparison, we added equivalent urgency signals to every single-variable model (see Methods). To summarize, we implement the urgency signal, equally for all types of barrier, either as a linearly increasing term, which destabilizes the initial spontaneous state (Fig. 5, dot-dashed green curve), or as a linearly decreasing threshold, which reaches zero by the end of the stimulus (Fig. 5, dashed red traces).

Fig. 5.

Addition of a ramping (urgency) signal boosts optimal accuracy and favors increased barrier height. a For the low noise system (D = 100 Hz² s⁻¹) the urgency-gating signal boosts performance for both the linear integrator (b = 0) and point attractor models (b > 0), though more so for larger barriers, such that optimal accuracy arises in a model with a barrier (black asterisk, b = 1). An urgency signal that destabilizes the initial state (dot-dashed green trace, G u(t) = 1.5 t) can produce greater accuracy than one that causes a reduction in thresholds (dashed red trace). b For the moderate noise system (D = 900 Hz² s⁻¹), a destabilizing urgency-gating signal (dot-dashed green trace, G u(t) = 5 t) reduces accuracy for the linear integrator (b = 0) but boosts accuracy for models with a large barrier, increasing optimal accuracy and increasing the optimal barrier height (black asterisk, b = 18). An urgency signal which acts to reduce thresholds (dashed red trace) is less accurate, but again its optimal accuracy is for an attractor model with a barrier. Both forms of urgency signal cause threshold to be reached in over 99.9 % of trials for linear integrators and for nonlinear barrier systems, so “undecided trials” are eliminated. a–b Blue solid curve: no urgency signal. Red dashed curve: urgency signal by linearly ramping reduction of thresholds to zero. Green dot-dashed curve: urgency signal by slowly increasing the destabilization of the initial state

We find that optimal accuracy (Fig. 5, black asterisks on dot-dashed green traces) is achieved when nonlinearity provides a diffusive barrier such that the urgency-gating signal does not render the initial attractor state unstable until near the end of the stimulus duration. In all simulation conditions, an appropriate combination of barrier height and urgency-gating signal could be found that produced better accuracy than a perfect integrator (Fig. 5, y-intercepts), even one with linearly decreasing thresholds (Fig. 5, dashed red). Similarly, when we parametrically varied the level of the static threshold (as in Fig. 3), we consistently found that some model with a positive nonlinearity producing an initial barrier, combined with an urgency-gating signal, produced the greatest accuracy (data not shown).

In fact, a nonlinear, temporally varying system could perform better than the perfect integrator even when thresholds were specifically chosen to be optimal for the integrator and unchanged thereafter. In a system with high noise (D = 2500 Hz² s⁻¹, corresponding to 5 Hz spontaneous activity), for instance, the optimal threshold for the perfect integrator was found at a rate higher than that of the high-rate attractor states of the sextic potential. Such a threshold prevented decisions from being made (thus producing chance performance) given moderate nonlinearity. However, an alternative potential with attractor states at r D = ±80 Hz enabled a nonlinear system to reach the high threshold of 60 Hz and perform better than the perfect integrator.

Our main result, that optimal accuracy arises from a combination of an attractor model with an urgency-gating signal, was maintained when we parametrically varied the fixed input gain (Fig. 6, solid green curves). In Fig. 6 we present the results for the low-noise system (the only case where the perfect integrator was more accurate than the attractor model in our standard conditions), and find that with the inclusion of an urgency-gating signal an attractor model (solid green curve) produces optimal accuracy, regardless of whether the signal is implemented by destabilizing the initial state (Fig. 6a–c) or by linearly decreasing thresholds to zero (Fig. 6d–f), and regardless of whether the system is dominated by internal noise (Fig. 6a,d) or by input noise (Fig. 6c,f).

Decision-making accuracy when responses are forced

The long-standing analytic proof (Wald 1947; Wald and Wolfowitz 1948) of perfect integration as the optimal process for making a two-alternative forced choice for any fixed time interval (and conversely the process requiring the minimum time to reach a given accuracy) assumes either an unlimited duration with fixed thresholds, or an absence of threshold combined with a readout that perfectly responds to any difference in total accumulation of inputs.

A biological readout mechanism, however, is unlikely to reproduce such perfect analytic results. More likely is something resembling the mechanisms that we instantiate above, or the one below, wherein we force a response in such trials by rendering the undecided state of low firing rate difference unstable—by “forcing” a response to threshold. Such instability would arise in practice via a strong global input current in any nonlinear model.

A forcing term applied in the final 100 ms of the trial (instantiated as a large, negative quadratic addition to the effective potential, see Methods) led to qualitatively the same result as an urgency-gating signal, in that it improved accuracy of nonlinear models more than perfect integrators. The fraction of undecided trials fell to below 10⁻⁸ in all cases, a change favoring attractor models, which otherwise generated more undecided trials. Even in the low noise case, if 50 % of the noise is internal then at an optimal level of gain, an attractor model with a barrier (b = 1) is more accurate than the perfect integrator.

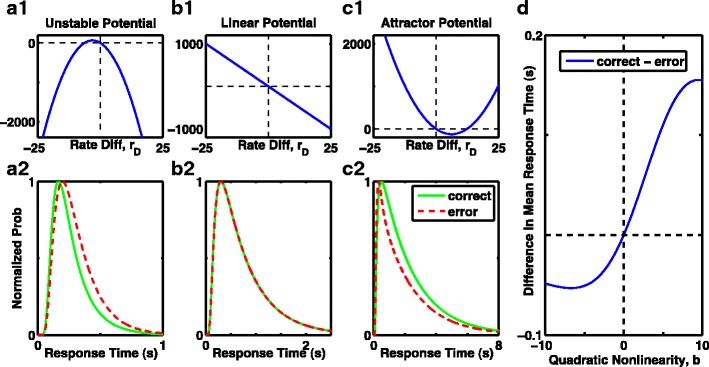

It might appear surprising that such a forcing term would produce better than chance responses from undecided trials in a barrier model. After all, while the system remains in its initial stable state, it has not integrated any of the prior input. However, the boost in accuracy provided by the forcing term can be understood from the shape of the effective potential prior to addition of the forcing term (Fig. 7a1–c1). The biasing current causes the stable “undecided” state to be offset from zero on the side of correct responses. Thus, when a response is forced, the otherwise undecided responses will more likely become correct responses than errors. This shift in the stable fixed point, which marks the peak of the probability distribution for “undecided” trials, also explains why perfect readout improves performance for the barrier model beyond chance guessing in Figs. 2 and 4c, f. Since the barrier model typically has more undecided responses without a forcing term, the benefit of a forcing term can favor the barrier model more than a perfect integrator.

Fig. 7.

Expectation of faster or slower error responses depends on curvature of the nonlinear 1D model. a1), b1), c1) Effective potentials include a bias current, which produces correct responses at positive rate-difference r D. a1) Fixed point of the unstable potential (where dr D/dt = 0) is shifted left of the origin, so it is crossed on error but not correct trials, leading to slower error responses in a2. b1) Linear potential has constant gradient, leading to equal shapes of correct and error response distributions in b2. c1) Stable fixed point of the potential with a barrier is shifted to the right, so it is crossed on correct but not error trials, leading to slower correct responses compared to errors in c2. a2), b2), c2) The corresponding response time distributions (scaled to a peak of 1 for easy comparison of the shapes; errors are so much rarer than correct responses that the shapes of the original distributions cannot be visibly compared). Green solid curves: scaled distribution of correct response times. Red dashed curves: scaled distribution of error response times. a1), a2) b = −1. b1), b2) b = 0. c1), c2) b = 1. D Summary of the difference in mean response times as a function of quadratic curvature of the effective potential, or the stability of the initial fixed point (negative curvature is unstable, positive curvature is stable, zero curvature is marginally stable and equivalent to an integrator). Systems with an unstable fixed point produce slower errors, while systems with a stable fixed point produce faster errors. Simulations contained no fixed time limit, so a threshold was always reached

Explaining the above: the role of response time distributions for non-integrators and integrators

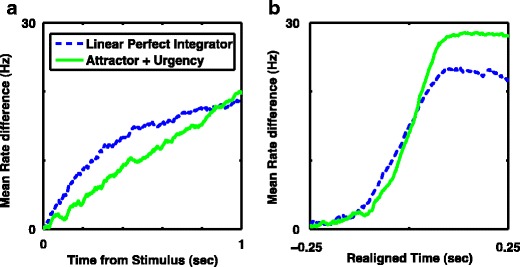

Given that the perfect integrator is often described as providing the optimal trade-off between mean reaction time and accuracy, it is quite reasonable to ask why we observe higher accuracy with a nonlinear system combined with an urgency-gating signal in all circumstances. The answer to this question can be found in examinations of simulations with a range of input currents and no time limit for decision-making. For both the perfect integrator and the nonlinear system, accuracy increased and reaction time decreased with increased input bias (input bias being the difference in input currents, representing coherence in motion tasks). At high input bias the nonlinear model with urgency-gating is more accurate than the linear integrator, although its reaction time is slower; this situation reverses at low input bias, when the slower input-induced dynamics allow more time for the urgency-gating signal to effect a response. Figure 8a summarizes these data, showing that there is no input bias for which the nonlinear system produces both higher accuracy (Fig. 8a, dashed blue trace) and faster mean response times (Fig. 8a, green trace) than the perfect integrator. Rather, as expected, for every nonlinear system we assessed, we found a small range of inputs for which the perfect integrator produced more correct responses with a faster reaction time, just as predicted by standard theory.

Fig. 8.

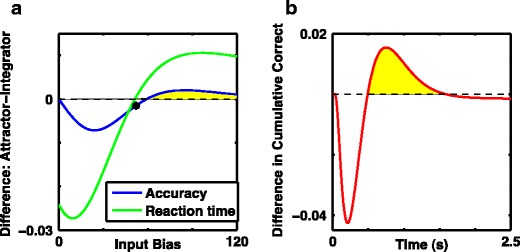

Mean response time or accuracy, but not both, can be improved with a nonlinear system and urgency-gating signal. a Difference in accuracy (blue) and difference in mean response time in units of seconds (green) between the nonlinear system with urgency-gating signal (NL + Urgency, which has discrete attractor states after stimulus onset at low input bias) and the perfect integrator, as a function of input bias. b Difference in cumulative distribution of correct responses over the course of the stimulus presentation with a fixed input bias of 58 (black asterisk in a), where mean response times are approximately equal, but performance is slightly higher with the perfect integrator. Note the middle epoch (shaded yellow) denoting the range of times for which the nonlinear system has produced more correct responses. Thus the nonlinear system with urgency-gating signal can produce better performance for fixed stimulus durations within this time interval. a–b All curves are with moderate noise, D = 900 Hz² s⁻¹, and response threshold, θ = 20. The perfect integrator is linear with b = 0 and G_U(t) = 0, while the system with discrete attractors is nonlinear with a barrier given by b = 5 and has an added urgency-gating signal of G_U(t) = 7.5t. In order to obtain the full response time distribution, stimulus duration was not limited, so threshold was always reached

Any apparent contradiction with our prior results is resolved in Fig. 8b, which shows the cumulative fraction of correct responses for two systems with the same mean response times. Since the shapes of the response time distributions differ between nonlinear and integrator systems, there is a range of response times for which the nonlinear system with urgency gating produces more correct responses than the perfect integrator, even if eventually, once all responses are produced and counted, the perfect integrator is more accurate. This effect arises because the response time distribution of the perfect integrator has greater skew: for the same mean response time the integrator has more very slow responses, whereas the urgency signal in the nonlinear system ensures that responses are timely. Thus, it is the limited stimulus duration with fixed response time that allowed us to recognize nonlinear systems with an urgency signal as having consistently greater accuracy than the perfect integrator (Fig. 8b).
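The deadline argument can be illustrated with a minimal sketch. It assumes each trial is summarized only by a response time and a correctness flag; the function name `correct_by_deadline` and the toy numbers are hypothetical and are not taken from the simulations. The cumulative fraction of correct responses at a deadline counts trials that both reached threshold by that time and were correct, so slower trials count as undecided.

```python
import numpy as np

def correct_by_deadline(rt, correct, deadlines):
    """Fraction of all trials answered correctly by each deadline."""
    rt = np.asarray(rt, dtype=float)
    correct = np.asarray(correct, dtype=bool)
    deadlines = np.asarray(deadlines, dtype=float)
    # answered[i, j] is True if trial j responded by deadline i
    answered = rt[None, :] <= deadlines[:, None]
    return (answered & correct[None, :]).mean(axis=1)

# Hypothetical toy data: both systems have the same mean response time (0.7 s).
# System A is always correct but has a long tail of slow responses.
rt_a = [0.1, 0.2, 0.3, 1.4, 1.5]
ok_a = [True, True, True, True, True]
# System B responds in a narrow time window but makes one error.
rt_b = [0.5, 0.6, 0.7, 0.8, 0.9]
ok_b = [True, True, True, True, False]

deadlines = [1.0, 2.0]  # seconds
frac_a = correct_by_deadline(rt_a, ok_a, deadlines)
frac_b = correct_by_deadline(rt_b, ok_b, deadlines)
print("deadline  A      B")
for d, fa, fb in zip(deadlines, frac_a, frac_b):
    print(f"{d:6.1f}  {fa:.2f}  {fb:.2f}")
```

In this toy case the less skewed system B is ahead at the short deadline, while system A is ahead once all responses are counted, which is the pattern described for Fig. 8b.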

Rate of accumulation of reward in the absence of a time limit

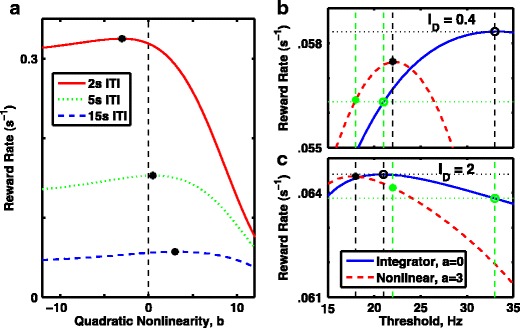

By limiting stimulus duration and response time, one necessarily affects the number of rewards that can be delivered (and achieved) within a session of a given length. The number of rewards obtained per unit time is commonly known as the “reward rate.” Optimization of performance in decision-making tasks is the same as maximization of reward rate. Manipulation of the maximum achievable reward rate also has an impact on the optimal model, which we explore below.

In decision-making tasks with no fixed response time, the maximum achievable reward rate can be manipulated by varying the inter-trial interval (ITI), because the reward rate is equal to the probability of being correct (the accuracy) divided by the total time per trial (mean response time plus ITI) (Swensson 1972; Balci et al. 2011). The ITI determines where one should operate in the speed-accuracy tradeoff. When the ITI is long compared to the decision-making time, it is more important to take the time to be accurate, as each error is relatively costly; if the ITI is short, meanwhile, it can be worth making more errors, as the increase in the rate of trials initiated in a given period may compensate for the errors. Thus, when measuring reward rate in the absence of any constraints on stimulus duration or response time, an unstable, fast but error-prone system is optimal when the ITI is short (Fig. 9a, solid red trace), whereas the more accurate but slower attractor system is optimal when the ITI is long (Fig. 9a, dashed blue trace). The perfect integrator, meanwhile, is optimal at just a single, specific value of intermediate ITI (in this case approximately 5 s—Fig. 9a, dot-dashed green trace).
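The reward-rate definition above can be written out as a short sketch. The accuracies and mean response times below are hypothetical values chosen only to illustrate how the ITI shifts the preferred operating point; they are not results from the simulations.

```python
def reward_rate(accuracy, mean_rt, iti):
    """Rewards per second: P(correct) / (mean response time + ITI)."""
    return accuracy / (mean_rt + iti)

# Hypothetical operating points on a speed-accuracy tradeoff
fast = {"accuracy": 0.80, "mean_rt": 0.5}   # fast but error-prone
slow = {"accuracy": 0.95, "mean_rt": 1.5}   # slow but accurate

for iti in (2.0, 15.0):
    rr_fast = reward_rate(iti=iti, **fast)
    rr_slow = reward_rate(iti=iti, **slow)
    better = "fast" if rr_fast > rr_slow else "slow"
    print(f"ITI = {iti:4.1f} s: fast {rr_fast:.4f}, slow {rr_slow:.4f} -> {better}")
```

With these illustrative numbers the fast system earns more reward per second at the 2 s ITI and the slow system earns more at the 15 s ITI, because a long ITI dilutes the time saved by responding quickly.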

Fig. 9.

Optimal performance, measured as mean reward rate, depends on the inter-trial interval, the stimulus bias and threshold rate. a In the absence of a time limit for decisions, so threshold is always reached, reward rate is maximized with an unstable system (negative quadratic curvature), which forces faster, less-accurate responses, if the inter-trial interval (ITI) is short (e.g. 2 s, red curve), but is maximized with a stable point attractor (positive curvature, indicating a barrier) if slower responses are less penalized due to a long inter-trial interval (e.g. 15 s, blue curve). A perfect integrator, exemplified by the linear system (curvature = 0, vertical dashed line), is optimal at a single, specific inter-trial interval, slightly less than 5 s (green line). All curves are with D = 400 Hz² s⁻¹, with stimulus bias, i_D = 20, and with threshold, θ = 20 Hz. Red solid curve: ITI = 2 s. Green dot-dashed curve: ITI = 5 s. Blue dashed curve: ITI = 15 s. The absence of a time limit ensures an absence of “undecided” trials. b, c Reward rate with an ITI of 15 s for the perfect integrator (blue solid curve) and a barrier model (red dashed curve) as a function of static threshold. b Standard stimulus bias, i_D = 20. c Large stimulus bias, i_D = 50, leads to a lower value for the optimal threshold rate. b, c Blue solid curve, linear integrator with a = 0; red dashed curve, nonlinear model with a = 3; black open circle, performance of linear integrator when threshold is optimal; black asterisk, performance of nonlinear model when threshold is optimal; green open circle, performance of linear integrator when threshold is optimal for the alternative stimulus; green asterisk, performance of nonlinear model when threshold is optimal for the other stimulus

For all systems studied in this situation with no fixed time limit, the optimal reward rate was achieved without an urgency signal. The primary consequence of an urgency-gating signal was to increase the barrier height corresponding to the maximum reward rate (i.e., it shifted the peaks of the curves in Fig. 9a to the right). However, the overall maximum reward rate was slightly reduced in these cases (data not shown). This result can be understood if the urgency-gating signal is characterized as a way to ensure a response in a given time window, so the signal is of value when stimulus duration or the time window for response is fixed.

The results of Fig. 9a were all produced with a fixed static threshold. Given our results in Figs. 3 and 8, as well as longstanding theoretical proofs (Wald 1947; Wald and Wolfowitz 1948), one might expect that for any particular fixed level of input an optimal threshold can be chosen for the integrator so that its rate of reward accumulation is greater than that of any other system. Indeed, this is the case: we show two examples in Fig. 9b–c, in which, with a 15 s ITI, the perfect linear integrator can be made to outperform the barrier model. Figure 9b demonstrates results for the same signal used in Fig. 9a, where the barrier model accumulates reward more rapidly at a threshold of 20 Hz, because the integrator's optimal threshold is much higher, at 35 Hz. However, it is worth pointing out that with stronger input bias (just as with reduced ITI) the optimal threshold is lower for all systems. In fact the optimal threshold shifts more with changes in input for the linear perfect integrator than it does for the barrier model (compare Fig. 9b with Fig. 9c). Thus we find that even though the perfect integrator performs better when both systems are optimized for a given input and ITI, under many changes in the task (such as a new input strength) one can easily find that the barrier model performs better than the perfect integrator at the previously optimized thresholds (green asterisks are higher than green circles in both Fig. 9b and c). Thus, in a laboratory setting with over-trained animals, where the inputs and task design have been learned over weeks or months, the integrator model will likely be optimal; in a natural environment, however, in which the typical strength and frequency of an input may not be known a priori, the barrier model will likely be optimal.
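The dependence of the integrator's optimal threshold on input strength and ITI can be sketched with the standard closed-form expressions for a pure drift-diffusion process with an unbiased starting point and symmetric absorbing bounds at ±θ. This is an illustrative sketch, not the simulation code behind Fig. 9: the function names are hypothetical, and treating the noise variance per unit time `sigma2` as equal to the noise parameter D, and the drift as equal to the stimulus bias, are assumptions made here for illustration only.

```python
import numpy as np

def ddm_reward_rate(theta, drift, sigma2, iti):
    """Reward rate of a drift-diffusion process with bounds at +/- theta.

    Uses the standard results for an unbiased start:
      accuracy = 1 / (1 + exp(-2 * drift * theta / sigma2))
      mean RT  = (theta / drift) * tanh(drift * theta / sigma2)
    """
    x = drift * theta / sigma2
    accuracy = 1.0 / (1.0 + np.exp(-2.0 * x))
    mean_rt = (theta / drift) * np.tanh(x)
    return accuracy / (mean_rt + iti)

def optimal_threshold(drift, sigma2, iti, thetas=None):
    """Grid search for the threshold that maximizes reward rate."""
    if thetas is None:
        thetas = np.linspace(1.0, 80.0, 791)  # 0.1 steps (arbitrary grid)
    rates = ddm_reward_rate(thetas, drift, sigma2, iti)
    return float(thetas[np.argmax(rates)])

# Assumed mapping for illustration: sigma2 = 400, drift = 20 or 50
theta_weak = optimal_threshold(drift=20.0, sigma2=400.0, iti=15.0)
theta_strong = optimal_threshold(drift=50.0, sigma2=400.0, iti=15.0)
theta_short_iti = optimal_threshold(drift=20.0, sigma2=400.0, iti=2.0)
print(theta_weak, theta_strong, theta_short_iti)
```

Under these assumptions the grid search returns a lower optimal threshold for the stronger input and for the shorter ITI, in line with the qualitative statement above; the exact values depend on the assumed mapping between the model parameters and the diffusion parameters.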

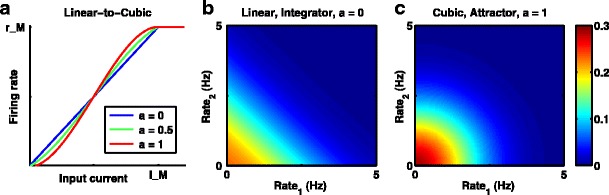

Extending our results to two-variable models

All of the above results made use of a model with a one-dimensional (1D) effective potential. To extend and validate these results, we also simulated the full two-variable system, using a firing-rate curve that could range from linear (α = 0) to cubic (α = 1) (Fig. 10a). In so doing, we were able to map from a perfect integrator to an attractor-based system with a fixed point at the origin. At the broadest level, the results of these latter simulations are simple to describe: the two-variable system recapitulates the results of the 1D system (i.e., demonstrating the frequent sub-optimality of the perfect integrator).

Fig. 10.

Models for 2-variable decision-making, from linear perfect integrator to cubic nonlinear attractor. a The parameter α dictates the degree of nonlinearity, by adding a cubic term to the piece-wise linear portion of the firing-rate curve. b, c The steady state probability distribution of the pair of firing rates in the absence of input for the linear perfect integrator in b and the cubic nonlinear model in c. Spontaneous rates are constrained in the 2D system (unlike the perfect integrator in 1D) because of the threshold nonlinearity at zero rate (firing rates cannot be negative, so noise-driven drift is constrained). b For the linear system (perfect integrator), for a given sum of the two rates, the probability density is independent of the difference in firing rates, whereas c the nonlinear system constrains the difference in firing rates to produce elliptical contours of constant probability. Results are with D′ = 450 Hz² s⁻¹, equivalent to D = 900 Hz² s⁻¹ in the 1D system. Steady state distributions are shown, so no time limit is included

Advantages of the two-variable model

The full two-variable model, while more computationally intensive, provided at least three advantages over the single-variable model. First, use of the 2-variable system allowed us to directly relate the level of noise in the system to the range of spontaneous firing rates prior to stimulus presentation, demonstrating that the low-noise and medium-noise simulations used here correspond to approximate levels of 1 Hz or 3 Hz spontaneous activity, respectively (see Appendix D). Second, it allowed us to test the dependence of our main conclusions on whether fixed thresholds were separate for each group (i.e., if either r_1 or r_2 reaches a threshold, a situation akin to competing accumulator models of decision-making or race models) or based on the difference in firing rates (i.e., if r_D = r_1 − r_2 reaches a threshold, as occurs in the 1D system and the drift diffusion model). Third, and arguably of greatest importance, it allowed us to relax constraints needed in the 1-dimensional system to ensure that the difference in firing rates did not depend on the sum of rates (see Requirements for an Effective Potential in Appendix B), in particular the requirement ε = (W_S − W_X) = 0 that led to Eqs. (2) and (20), an essential condition for a single-variable nonlinear model, and thus to study the robustness of linear and nonlinear systems to mistuning of parameters (Fig. 11).
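The two threshold rules compared in the 2-variable system can be made explicit with a small sketch. The function name `first_crossing`, the rule labels and the toy trajectories are hypothetical; only the two criteria themselves (either individual rate reaching θ, or the rate difference reaching θ in magnitude) follow the description above.

```python
import numpy as np

def first_crossing(r1, r2, theta, rule):
    """Index of the first time step at which a decision threshold is met.

    rule="individual": either r1 or r2 reaches theta (race-like readout).
    rule="difference": |r1 - r2| reaches theta (drift-diffusion-like readout).
    Returns None if the threshold is never reached ("undecided" trial).
    """
    r1 = np.asarray(r1, dtype=float)
    r2 = np.asarray(r2, dtype=float)
    if rule == "individual":
        crossed = (r1 >= theta) | (r2 >= theta)
    elif rule == "difference":
        crossed = np.abs(r1 - r2) >= theta
    else:
        raise ValueError(f"unknown rule: {rule!r}")
    idx = np.flatnonzero(crossed)
    return int(idx[0]) if idx.size else None

# Hypothetical firing-rate trajectories (Hz) sampled at successive time steps
r1 = [5, 10, 18, 22, 30]
r2 = [5, 8, 6, 4, 2]
t_individual = first_crossing(r1, r2, theta=20.0, rule="individual")
t_difference = first_crossing(r1, r2, theta=20.0, rule="difference")
print(t_individual, t_difference)
```

In this toy trajectory the individual-rate rule triggers one step earlier than the difference rule, since r_1 passes 20 Hz before r_1 − r_2 does; the same numerical threshold therefore corresponds to different effective criteria under the two readouts.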

Fig. 11.

Robustness to mistuning increases with nonlinearity and an urgency-gating signal in 2D system. a Linear system (α = 0, blue dashed curve) is a perfect integrator with balanced cross-inhibition (W_X = 0.5), where it outperforms the nonlinear system (green solid curve). With increased cross-inhibition (reducing stability) the nonlinear system (which enhances stability of the spontaneous state) is more accurate than the linear system. Note that in both cases, accuracy of the optimal system (peaks of curves) exceeds that of the perfect integrator (linear, W_X = 0.5). b Urgency-gating is included as a multiplicative gain term (max = 1, leading to an eventual doubling of stimulus and 4-fold increase in). The ramping multiplicative gain improves performance of the linear system but the nonlinear system with an initial barrier has the higher optimal accuracy. c Urgency-gating (max = 0.1) is included by an additional unbiased ramping current, with decision-threshold set at a fixed rate-difference (|r_1 − r_2| = 20 Hz). Performance of the linear system deteriorates rapidly with imperfect tuning, and it is unaffected by an urgency-gating signal. The nonlinear system with attractors is more robust to a decrease in cross-inhibition, where its performance is significantly boosted by the urgency-gating signal (compare with a). d Urgency-gating included exactly as in c) but thresholds are at fixed individual population rates (r_1 = 20 Hz or r_2 = 20 Hz). a–d Dashed blue curve with asterisks: linear system with α = 0. Solid green curve with open circles: nonlinear attractor-based system with α = 0.1. Yellow shaded area indicates the range of parameters where the nonlinear system outperforms the linear system (which produces a perfect integrator at W_X = 0.5). Low-noise, D′ = 50 Hz² s⁻¹ (equivalent to D = 100 Hz² s⁻¹ in the 1D system and 1 Hz spontaneous activity) with a fixed duration of 2 s. Panels a–c) include the effect of “undecided” trials, while the urgency signals in panel d) ensure threshold is reached on over 99.9 % of trials

Robustness to parameter mistuning