Abstract

Perceptual learning is the improved performance that follows practice in a perceptual task. In this issue of Neuron, Yotsumoto et al. use fMRI to show that stimuli presented at the location used in training initially evoke greater activation in primary visual cortex than stimuli presented elsewhere, but this difference disappears once learning asymptotes.

Practicing a perceptual task can result in better detection and discrimination of relevant stimuli, a phenomenon known as perceptual learning. For instance, picking corn in a field can be challenging, as the cobs, sheathed in their green husks, blend in with the leafy plant and are difficult to identify (Figure 1A). With practice, however, the task becomes easier, and, after a few hours in a corn field, spotting the cobs becomes almost automatic. Deciphering fetal ultrasound images is also experience dependent: a trained professional can easily point out important details to novice parents, who can see little meaning in the image (Figure 1B).

Figure 1. Seeing Some Things Requires Learning.

(A) Corn cobs can be initially hard to spot on the plant. (B) You may need a professional to tell you it’s a boy.

Perceptual learning is considered to be a manifestation of neural plasticity in the adult brain, enabling adaptive responses to environmental changes. Such learning has been demonstrated psychophysically for many stimuli in different sensory modalities and is often highly specific—in visual tasks, improvements in stimulus detection or discrimination are usually limited to the particular location, orientation, or eye used in training (Seitz and Watanabe, 2005). This specificity suggests that perceptual learning occurs in early cortical regions, where receptive fields are selective for these attributes.

Previous studies attempting to characterize the changes in neural activity that accompany perceptual learning have usually focused on the final outcome—how neural activity had changed by the end of training. The dynamic changes in neural activity during a prolonged, gradual learning process have not been elucidated. In this issue of Neuron, a neuroimaging study by Yotsumoto, Watanabe, and Sasaki (Yotsumoto et al., 2008) addresses this topic by observing the changes in neural activity that occur over multiple scanning sessions, interspersed throughout the training period of a visual discrimination task.

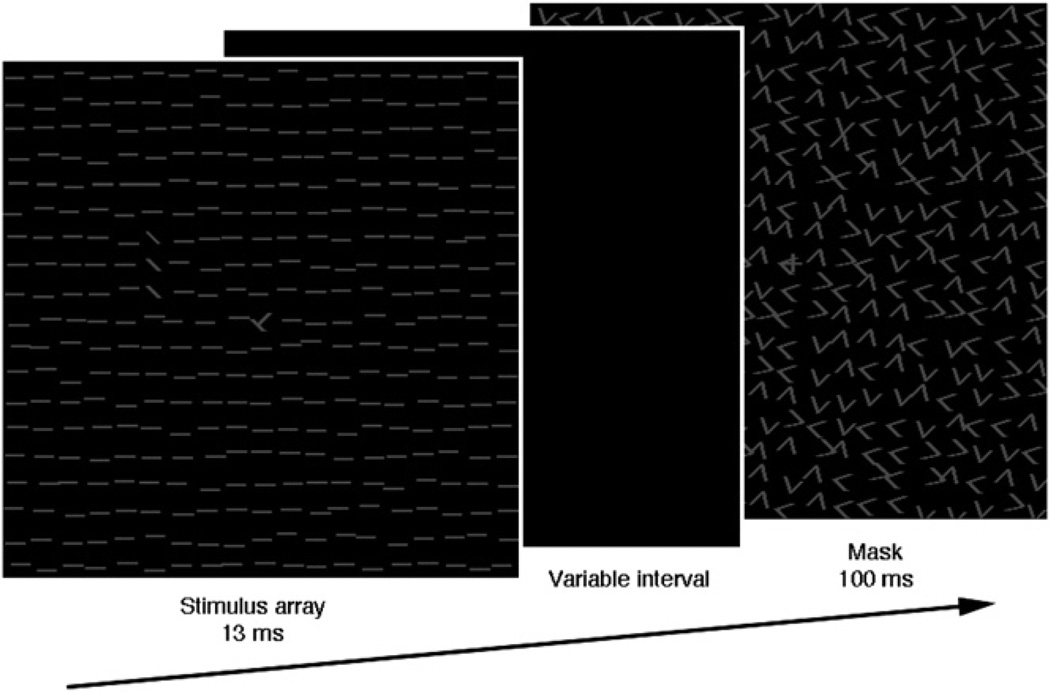

Yotsumoto et al. used a texture discrimination task (Figure 2) to investigate the development of both performance and brain activity over an extended period. Participants first performed the task in the scanner without receiving any training and then again (usually the next day) after a training session outside the scanner. They then received five additional training sessions, one every couple of days, followed by a third scanning session. Two weeks later, without additional training, they went through a fourth and final scanning session.

Figure 2. Schematic Depiction of the Texture Discrimination Task.

On each trial, a stimulus array was shown briefly, followed by a variable interval and a mask. To ensure that participants were fixating centrally, they were asked to report whether a “T” or “L” was presented at the center of the display. They then had to report whether the three obliquely oriented lines were arranged vertically or horizontally. This task has been used in many perceptual learning studies (Karni and Sagi, 1991).

In all scanning sessions, a constant 100 ms stimulus-mask interval was used, so brain activity could be compared for physically identical stimuli. During the training sessions, the interval between the visual stimulus and the mask was adjusted adaptively to find each participant's threshold interval, the interval at which performance was about 80% correct. Shorter intervals make the masked stimulus harder to perceive. This threshold interval decreased from one training session to the next, indicating that perceptual learning had occurred: participants reached the same level of performance with a shorter stimulus-mask interval.
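The source says only that the interval was "adaptively adjusted" until performance was about 80% correct. It does not name the rule. One common rule with that target is the 3-down/1-up staircase, which converges on about 79% correct because 0.5^(1/3) ≈ 0.794.

The sketch below assumes that rule. It uses a simulated observer whose psychometric function is invented for the example, including its threshold and slope. The names `p_correct`, `simulated_observer` and `staircase_3down_1up` are hypothetical, and this is not the authors' procedure or code.

```python
import math
import random

def p_correct(soa_ms, threshold_ms=120.0, slope=0.05):
    """Hypothetical psychometric function for a two-alternative task:
    50% (chance) at very short stimulus-mask intervals, approaching 100%
    at long ones; threshold_ms is the interval giving 75% correct."""
    return 0.5 + 0.5 / (1.0 + math.exp(-slope * (soa_ms - threshold_ms)))

def simulated_observer(threshold_ms, rng):
    """Return a function that answers one trial correctly with probability p_correct."""
    return lambda soa_ms: rng.random() < p_correct(soa_ms, threshold_ms)

def staircase_3down_1up(respond, start_ms=300.0, step_ms=20.0,
                        min_ms=20.0, max_ms=500.0,
                        n_reversals=12, max_trials=2000):
    """Assumed adaptive rule: shorten the stimulus-mask interval (harder task)
    after three consecutive correct responses, lengthen it after any error.
    The threshold estimate is the mean interval at the last reversals."""
    soa = start_ms
    run = 0               # consecutive correct responses at the current interval
    last_direction = 0    # -1: last step shortened the interval, +1: lengthened it
    reversals = []
    for _ in range(max_trials):
        if len(reversals) >= n_reversals:
            break
        if respond(soa):
            run += 1
            if run < 3:
                continue  # need three correct in a row before making it harder
            direction = -1
        else:
            direction = +1
        run = 0
        if last_direction != 0 and direction != last_direction:
            reversals.append(soa)
        last_direction = direction
        soa = min(max_ms, max(min_ms, soa + direction * step_ms))
    if not reversals:
        return soa        # never reversed (observer always right or always wrong)
    tail = reversals[-8:]
    return sum(tail) / len(tail)

# Usage: a hypothetical observer before training (75%-correct point at 160 ms)
# and after training (80 ms). Learning appears as a shorter threshold interval.
rng = random.Random(0)
before = [staircase_3down_1up(simulated_observer(160.0, rng)) for _ in range(20)]
after = [staircase_3down_1up(simulated_observer(80.0, rng)) for _ in range(20)]
print(f"mean threshold before: {sum(before) / len(before):.0f} ms")
print(f"mean threshold after:  {sum(after) / len(after):.0f} ms")
```

Averaging several simulated runs smooths out trial-to-trial noise in this toy. Real threshold procedures differ in step sizes, stopping rules and how reversals are averaged.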

Like the training sessions, the scanning sessions comprised trials where the target array for the texture discrimination task was presented at the trained location (upper-left quadrant). Scanning sessions also included trials in which the array was presented in an untrained location (bottom-right quadrant). This made it possible to compare both behavioral performance and brain activity for trained versus untrained locations.

Behavioral performance at the trained location improved over the first three scanning sessions and then remained roughly the same in the last session. Performance at the untrained location showed no such improvement in any of the scanning sessions.

To investigate neural activity associated with perceptual learning, Yotsumoto et al. examined the blood-oxygen-level-dependent (BOLD) response in retinotopic regions of visual cortex. For each scanning session, they compared the activation evoked in corresponding retinotopic regions by stimulus arrays presented at the trained and untrained locations. The activity observed in primary visual cortex (V1) showed an interesting pattern. In the first (pre-training) scanning session, activity evoked at the two locations was the same. In the following two scanning sessions, activity was greater for the trained than for the untrained location. In the last scanning session, however, after significant perceptual learning had taken place, activation in the trained and untrained regions no longer differed. Importantly, the BOLD activation was normalized to each scanning session's global mean, which may differ across sessions. It therefore cannot be said that activity at the trained location increased and then declined over time. Rather, the difference between the trained and untrained locations first increased and then disappeared by the last session.
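The source does not give the exact normalization formula. The toy example below assumes that each region's signal is expressed as a percentage of the session's global mean; the function names and numbers are hypothetical. It illustrates why normalized values cannot be compared directly across sessions.

In the example, the raw trained-location signal is identical in both sessions. Its normalized value still drops, simply because the global mean rose. The within-session contrast between trained and untrained locations is the quantity that is actually compared.

```python
def percent_of_global_mean(roi_signal, global_mean):
    """Assumed normalization: region signal as a percentage of the session's global mean."""
    return 100.0 * roi_signal / global_mean

def session_summary(session):
    """Return (normalized trained-location signal, trained minus untrained difference)."""
    trained = percent_of_global_mean(session["trained"], session["global"])
    untrained = percent_of_global_mean(session["untrained"], session["global"])
    return round(trained, 1), round(trained - untrained, 1)

# Hypothetical raw values (arbitrary units). The raw trained-location signal is the
# same in both sessions; only the untrained-location signal and the global mean change.
sessions = {
    "session_1": {"trained": 105.0, "untrained": 100.0, "global": 100.0},
    "session_2": {"trained": 105.0, "untrained": 105.0, "global": 125.0},
}

for name, session in sessions.items():
    print(name, session_summary(session))
```

Here the normalized trained-location value falls from 105.0 to 84.0 even though the raw signal is unchanged. This is the kind of apparent "decline" that normalization to a session-specific global mean makes uninterpretable on its own.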

This pattern of results was limited to V1. No comparable trained-versus-untrained pattern emerged in the BOLD signal of other retinotopic regions, nor in frontal and parietal regions involved in attention and memory. This is consistent with previous evidence that perceptual learning occurs at an early neural locus, where neurons have feature-specific receptive fields.

To ensure that the disappearance of activation differences in V1 in the last session was the result of learning (rather than of receiving no more training between the last two scanning sessions), Yotsumoto et al. carried out a second experiment with a new group of participants. This experiment was similar to the first, except that participants had eight more training sessions in the 2 weeks between the third and fourth scanning sessions. The results were qualitatively similar to those of the first experiment. Performance again improved and then reached a plateau. The difference in V1 activation between trained and untrained locations again increased initially and was absent in the last scanning session.

Despite the overall similarity between the two experiments' results, there is an intriguing difference between their second scanning sessions, each of which took place after a single training session. At this point the procedures in the two experiments were identical, so the results should have been similar. In experiment 1, this session showed the largest V1 activation difference but only a modest improvement in performance; significant learning occurred only by the next session. In experiment 2, however, this session showed both the largest improvement in behavioral performance and the largest V1 activation difference between trained and untrained locations, so neural activity corresponded to behavior.

Yotsumoto et al. explain this discrepancy by pointing out that, on average, participants in the second experiment had reached a threshold stimulus-mask interval below 100 ms by the second scanning session. Participants in the first experiment still required longer intervals to perform well. Because a 100 ms interval was used in the scanning sessions, it is not surprising that performance in this session differed between the experiments. This explanation, though post hoc, raises two interesting points. First, the observed V1 activity may reflect the ongoing neural changes that underlie perceptual learning, rather than simply the behavior associated with such learning. Second, it may be possible (or even necessary) to take individual performance differences into account when investigating the neural underpinnings of perceptual learning.

In a previous neuroimaging study using the same texture discrimination task, Schwartz et al. (2002) presented stimuli monocularly and compared performance and BOLD activation at the same retinotopic location between the trained and untrained eye. That study comprised a single scanning session 1 day after intensive training and found greater activity in V1 for the trained than for the untrained eye. The results of Yotsumoto et al. are consistent with this finding (albeit for different locations rather than eyes) and go beyond it by showing that this difference in activation subsides with continued training.

Other neuroimaging studies of perceptual learning present a seemingly inconsistent picture. Using an orientation discrimination task, Schiltz et al. (1999) found that training led to diminished activation in early visual cortex in response to trained (compared to untrained) orientations. Furmanski et al. (2004) capitalized on the fact that horizontally oriented stimuli evoke greater BOLD activity in V1 than oblique ones and showed that learning abolished this difference. Like Yotsumoto et al. and Schwartz et al. (2002), neither study found any differential effects in higher cortical regions. In contrast, Sigman et al. (2005) report that training in a visual search task led to greater activation in retinotopic cortex when a trained, compared to an untrained, target was presented. The same training diminished activation in higher cortical regions, including the lateral occipital area and regions in frontal and parietal cortex associated with attention. This was interpreted as reflecting a decline in the need for attention as the trained target became easier to detect.

These studies all plausibly assume that activation associated with an untrained stimulus can serve as an index of pre-training neural activity, and they infer learning-related changes from comparisons between trained and untrained stimuli. Using tasks in which rapid learning occurs, two studies have investigated perceptual learning in the course of a single scanning session, allowing direct observation of activity changes as learning progressed. Vaina et al. (1998) used a motion discrimination task. They report an increase in the spatial extent and intensity of activation in the motion-sensitive area MT, alongside decreases in other regions associated with attention and motor planning. Using a contrast discrimination task, Mukai et al. (2007) report results for participants who reached a criterion learning level, only about half of them, which again indicates the importance of individual differences. In these participants, activation in early visual areas as well as higher-level regions associated with attention decreased as performance improved.

So, does perceptual learning lead to an increase or decrease in neural activity at early sensory sites? And are higher, attention-related cortical regions involved in such learning or not? In light of the conflicting evidence, it is tempting to conclude that the answers may depend on the specific task and experimental protocol used. However, the results of Yotsumoto et al. raise the possibility that previous studies may have tapped into different stages of the learning process. This may account for some of the variability of results in primary visual cortex. It does not clear up the confusion surrounding higher regions, as Yotsumoto et al. found no differential effects outside V1 at any point. This may indicate that, for some perceptual tasks, learning occurs exclusively in the relevant primary sensory cortex, whereas others require active involvement of higher regions. The question of what determines whether learning a perceptual task will involve such regions relates to an ongoing debate regarding the degree to which attention is involved in perceptual learning (Seitz and Watanabe, 2005).

Yotsumoto et al. suggest that the pattern of results they report implies a two-stage neural mechanism underlying perceptual learning, with the first stage accounting for initially greater BOLD activation in response to trained versus untrained stimuli, and the second for a subsequent reduction in this difference once learning is consolidated. How could such mechanisms be implemented at the cellular level?

One process that could result in BOLD increases is suggested by single-unit perceptual learning studies of tactile and auditory discrimination (e.g., Recanzone et al., 1993). These studies have reported an increase in the area of (and number of neurons in) primary sensory cortex devoted to representing the trained stimulus. However, this has not been found in vision, either in single-unit (Crist et al., 2001; Schoups et al., 2001) or neuroimaging studies (cf. Vaina et al., 1998). This suggests that different mechanisms may underlie learning in different modalities. There is evidence, though, that visual learning can increase contextual effects (Crist et al., 2001). These are local interactions, mediated by horizontal and interlaminar connections, between target-responsive neurons and neurons responding to stimuli outside the target's receptive field. Strengthening such connections could enhance segregation of targets (e.g., the diagonal lines of the texture discrimination task) from a homogeneous background (e.g., horizontal lines) and may also lead to an increase in the BOLD signal (Schwartz et al., 2002).

A decrease in the BOLD signal would require a different cellular mechanism to account for the neuronal downscaling proposed by Yotsumoto et al. One possibility is suggested by the finding (e.g., Schoups et al., 2001) that improved orientation discrimination was correlated with a narrowing of the tuning curves of the orientation-selective V1 neurons encoding fine distinctions around the trained orientation. With sharper tuning, fewer neurons would respond strongly to the trained stimulus, which could lead to a decreased BOLD signal.
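The toy population model below makes concrete the proposed link between sharper tuning and a weaker pooled signal. It is not taken from Schoups et al. or Yotsumoto et al., and every modeling choice in it is an assumption:

- Gaussian orientation tuning with a single shared width.
- One neuron per degree of preferred orientation.
- A neuron "contributes" if its response exceeds half its peak.
- Summed activity serves as a crude stand-in for what the BOLD signal pools.

All function names and parameter values are hypothetical.

```python
import math

def orientation_distance(a_deg, b_deg):
    """Smallest difference between two orientations (orientation has a 180-degree period)."""
    d = abs(a_deg - b_deg) % 180.0
    return min(d, 180.0 - d)

def population_response(stimulus_deg, tuning_width_deg, n_neurons=180, peak_rate=1.0):
    """Responses of a hypothetical bank of Gaussian-tuned neurons whose preferred
    orientations tile 0-180 degrees evenly."""
    responses = []
    for i in range(n_neurons):
        preferred = i * 180.0 / n_neurons
        d = orientation_distance(stimulus_deg, preferred)
        responses.append(peak_rate * math.exp(-d ** 2 / (2 * tuning_width_deg ** 2)))
    return responses

def summarize(responses, active_threshold=0.5):
    """Number of neurons above threshold, and summed activity (an assumed,
    crude proxy for the pooled signal the BOLD response reflects)."""
    n_active = sum(r > active_threshold for r in responses)
    return n_active, sum(responses)

# Usage: broader tuning before training, narrower after (widths are assumptions).
for width in (20.0, 10.0):
    n_active, total = summarize(population_response(45.0, width))
    print(f"width {width:>4} deg: {n_active} neurons above half-max, "
          f"summed activity {total:.1f}")
```

In this model, halving the tuning width roughly halves both the number of strongly responding neurons and the summed activity. Whether real tuning changes and the resulting BOLD response follow this simple relationship is exactly what remains open.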

Significantly, Yotsumoto et al. are the first to report a change in the direction of differential effects in V1 as learning progresses. An account of their findings at the cellular level would have to explain this shift, either by incorporating more than one of the mechanisms discussed above or by an as yet unknown mechanism.

REFERENCES

- Crist ER, Li W, Gilbert CD. Nat. Neurosci. 2001;4:519–525. doi: 10.1038/87470.

- Furmanski CS, Schluppek D, Engel SA. Curr. Biol. 2004;14:573–578. doi: 10.1016/j.cub.2004.03.032.

- Karni A, Sagi D. Proc. Natl. Acad. Sci. USA. 1991;88:4966–4970. doi: 10.1073/pnas.88.11.4966.

- Mukai I, Kim D, Fukunaga M, Japee S, Marrett S, Ungerleider L. J. Neurosci. 2007;27:11401–11411. doi: 10.1523/JNEUROSCI.3002-07.2007.

- Recanzone GH, Schreiner CE, Merzenich MM. J. Neurosci. 1993;13:87–103. doi: 10.1523/JNEUROSCI.13-01-00087.1993.

- Schiltz C, Bodart JM, Dubois S, Dejardin S, Michel C, Roucoux A, Crommelinck M, Orban GA. Neuroimage. 1999;9:46–62. doi: 10.1006/nimg.1998.0394.

- Schoups A, Vogels R, Qian N, Orban G. Nature. 2001;412:549–553. doi: 10.1038/35087601.

- Schwartz S, Maquet P, Frith CD. Proc. Natl. Acad. Sci. USA. 2002;99:17137–17142. doi: 10.1073/pnas.242414599.

- Seitz A, Watanabe T. Trends Cogn. Sci. 2005;9:329–334. doi: 10.1016/j.tics.2005.05.010.

- Sigman M, Pan H, Yang Y, Stern E, Silbersweig D, Gilbert CD. Neuron. 2005;46:823–835. doi: 10.1016/j.neuron.2005.05.014.

- Vaina L, Belliveau JW, Des Roziers EB, Zeffiro TA. Proc. Natl. Acad. Sci. USA. 1998;95:12657–12662. doi: 10.1073/pnas.95.21.12657.

- Yotsumoto Y, Watanabe T, Sasaki Y. Neuron. 2008;57:827–833. doi: 10.1016/j.neuron.2008.02.034. (this issue)